Deep Neural Networks with Koopman Operators for Modeling and Control of Autonomous Vehicles

Abstract

Autonomous driving technologies have received notable attention in the past decades. In autonomous driving systems, identifying a precise dynamical model for motion control is nontrivial due to the strong nonlinearity and uncertainty in vehicle dynamics. Recent efforts have resorted to machine learning techniques for building vehicle dynamical models, but the generalization ability and interpretability of existing methods still need to be improved. In this paper, we propose a data-driven vehicle modeling approach based on deep neural networks with an interpretable Koopman operator. The main advantage of using the Koopman operator is that it represents the nonlinear dynamics in a linear lifted feature space. In the proposed approach, a deep learning-based extended dynamic mode decomposition algorithm is presented to learn a finite-dimensional approximation of the Koopman operator. Furthermore, a data-driven model predictive controller with the learned Koopman model is designed for path tracking control of autonomous vehicles. Simulation results in a high-fidelity CarSim environment show that our approach exhibits high modeling precision over a wide operating range and outperforms previously developed methods in terms of modeling performance. Path tracking tests of the autonomous vehicle are also performed in the CarSim environment, and the results show the effectiveness of the proposed approach.

Index Terms:

Vehicle dynamics, Koopman operator, deep learning, extended dynamic mode decomposition (EDMD), data-driven modeling, model predictive control (MPC).

I Introduction

Autonomous vehicles and driving technologies have received notable attention in the past several decades. Autonomous vehicles promise to free humans from tedious long-distance driving and have huge potential to reduce traffic congestion and accidents. A classic autonomous driving system usually consists of the key modules of perception, localization, decision-making, trajectory planning, and control. In trajectory planning and control, information about the vehicle dynamics is usually required for realizing agile and safe maneuvers, especially in complex and unstructured road scenarios.

In order to realize high-performance trajectory tracking of autonomous vehicles, many control methods, such as Linear Quadratic Regulator (LQR) [1] and Model Predictive Control (MPC) [2], rely on vehicle model information with different levels and structures. However, obtaining a precise model of vehicle dynamics is difficult for the following reasons: i) the vehicle dynamics in the longitudinal and lateral directions are highly coupled; ii) strong nonlinearity and model uncertainty become dominant factors, especially when the vehicle reaches the limit of tire-road friction [3, 4, 5]. Among the existing approaches, classic physics-based modeling methods rely on Newton’s second law of motion, where multiple physical parameters must be determined, see [6, 7]. Indeed, some model parameters, such as the cornering stiffness coefficient, are not measurable and are difficult to estimate [8, 9, 10].

As an alternative to analytic modeling of vehicle dynamics, machine learning techniques have been used in recent years for building data-driven models of vehicles, see [11, 12]. However, deep neural networks commonly lack interpretability, which has recently been noted as a challenge for applications with safety requirements and remains an active research topic. As a result, the models established by deep neural networks might have unknown sensitive modes and be fragile to model uncertainties. Also, due to the nonlinear activation functions, the obtained dynamics model is not easy to use for designing a well-posed controller such as MPC or LQR. Recently, the Koopman operator, being an invariant linear operator (possibly of infinite dimension), has been regarded as a powerful tool for capturing the intrinsic characteristics of a nonlinear system via linear evolution in the lifted observable space. To obtain a practical model description, dimensionality reduction methods such as Dynamic Mode Decomposition (DMD) in [13, 14] and Extended Dynamic Mode Decomposition (EDMD) in [15] are usually used to approximate the Koopman operator with a finite-dimensional matrix. In [16], a data-driven identification method using the Koopman operator was proposed for vehicle dynamics, but the basis functions were either manually designed or determined from knowledge of the nonlinear dynamics, which is a nontrivial task because the dynamics in the longitudinal and lateral directions are strongly coupled and highly nonlinear. It is therefore necessary to study learning-based construction methods for the basis functions of the Koopman operator. Furthermore, the data-driven vehicle models studied in previous works have not been verified in a practical controller design process.

To solve the above problems, this paper proposes a novel data-driven vehicle modeling approach based on deep neural networks with an interpretable Koopman operator. In the proposed approach, a deep learning-based extended dynamic mode decomposition (Deep EDMD) algorithm is presented to learn a finite basis function set of the Koopman operator. Furthermore, a data-driven model predictive controller is designed for path tracking control of autonomous vehicles by making use of the dynamic model learned by Deep EDMD. In the proposed algorithm, deep neural networks serve as the encoder and decoder in the framework of EDMD for learning the Koopman operator. The simulation studies in a high-fidelity CarSim environment are performed for performance validation. The results show that the proposed Deep EDMD method outperforms EDMD, deep neural network (DNN), and extreme learning machine-based EDMD (ELM-EDMD, designed using the ELM described in [17]) algorithms in terms of modeling performance. Also, the model predictive controller designed with Deep EDMD can realize satisfactory trajectory tracking performance and meet real-time implementation requirements.

The contributions of the paper are two-fold:

1) An EDMD-based deep learning approach (Deep EDMD) is proposed for identifying an integrated vehicle dynamical model in both longitudinal and lateral directions. Different from other machine learning-based approaches, deep neural networks play the role of learning feature representations for EDMD in the framework of the Koopman operator. Also, the deep learning network is utilized for automatically learning suitable observable basis functions, in contrast to [16], where they are manually selected or based on prior model information. The resulting model is a global linear model in the lifted space with a nonlinear mapping from the original state space.

2) A novel model predictive controller (called DE-MPC) is designed with the model learned by Deep EDMD for time-varying reference tracking of autonomous vehicles. In DE-MPC, the linear part of the model is used as the predictor in the prediction horizon of the MPC, while the nonlinear mapping function is used for resetting the initial lifted state at each time instant with the real state.

The rest of this paper is structured as follows. In Section II, the most related works are reviewed, while the dynamics of four-wheel vehicles are presented in Section III. Section IV presents the main idea of the Deep EDMD method for data-driven model learning, and Section V gives a linear MPC with Deep EDMD for reference tracking. The simulation results are reported in Section VI, while some conclusions are drawn in Section VII.

II Related Works

In the past decades, neural networks have been widely studied for approximating dynamical systems due to their powerful representation capability. Earlier works in [18, 19] resorted to recurrent neural networks (RNNs) for the approximation of dynamics. It was verified that any continuous curve can be represented by the output of RNNs. In [20], an RNN-based modeling approach was presented to model the dynamical steering behavior of an autonomous vehicle. The obtained nonlinear model was used to design a nonlinear MPC for realizing steering control. In addition to RNNs, many contributions have resorted to artificial neural networks (ANNs) with multiple hidden layers for the approximation of vehicle dynamics. Among them, [11] used fully-connected layers to model the vehicle dynamics in the lateral direction. The physics model was considered for determining the inputs of the networks to guarantee the reliability of the modeling results. It is noteworthy that most of the models with neural networks are nonlinear, and the identification process may suffer from vanishing gradients. In recent years, the reliability of identification with neural networks has received notable attention. In [21], different levels of convolution-like neural network structures were proposed for modeling the longitudinal velocity of vehicles. The construction of the neural networks integrated physics principles to improve the robustness and interpretability of the modeling. Note that most of the above approaches assume decoupled dynamics and approximate the dynamics in only the longitudinal or the lateral direction. In fact, couplings between both directions can be dealt with naturally when multi-variable control techniques, such as MPC and LQR, are used.

As an alternative, the Koopman operator has recently been recognized as a powerful tool for representing nonlinear dynamics. The main idea of the Koopman operator was initially introduced by Koopman [22], with the goal of describing the nonlinear dynamics using a linear model with the state space constructed by observable functions. It has been proven that the linear model with the Koopman operator can ideally capture all the characteristics of the nonlinear system as long as the state space adopted is invariant. That is to say, the structure with the Koopman operator is interpretable. A sufficient condition for the convergence of the Koopman operator is that an infinite number of observable functions are provided.

For practical reasons, dimensionality reduction methods, such as DMD, have been used to obtain a linear model of finite order, see [13, 23] and the references therein. The order of the resultant model corresponds to the number of observable functions used. In general, the observable functions are directly measured with multiple sensors. To avoid redundant sensors, efforts have been devoted to extending the idea of DMD for constructing observable functions, see [24, 25, 15]. In EDMD, the observable functions are designed using basis functions, possibly of multiple types, such as Gaussian functions, polynomial functions, and so forth. The convergence has been proven in [26] under the assumption that the state space constructed by basis functions is invariant or of infinite dimension.

From a practical viewpoint, the approximation performance might be sensitive to the basis functions, hence their selection requires specialist experience. Motivated by the above problems, recent works have resorted to deep neural networks to generate observable functions automatically. In [27], an autoencoder is utilized to learn dictionary functions and Koopman modes for unforced dynamics. The finite-horizon approximation of the Koopman operator was computed via solving a least-squares problem. In [28], a deep variational Koopman (DVK) model was proposed with a Long Short-Term Memory (LSTM) network for learning the distribution of the lifted functions. A deep learning network was used in [29] to learn Koopman modes in the framework of DMD. From the application aspect, a deep DMD method has been proposed for the modal analysis of fluid flows in [30]. In [31] and [32], the Koopman operator has been extended to the modeling of power systems. In [33], a highway traffic prediction model was built based on the Koopman operator. Other applications can be found in representing neurodynamics in [34], molecular dynamics in [35, 36], and model reduction in [37]. In the area of robot modeling and control, a DMD-based modeling and control approach was proposed for soft robotics in [38], and the Koopman operator with EDMD was applied to approximate vehicle dynamics in [16]. Different from the above works, we propose a deep neural network to learn the basis functions of the Koopman operator in the framework of EDMD, with the goal of data-driven modeling of vehicles in both longitudinal and lateral directions. The deep neural networks serve as the encoder and decoder, whose weights are automatically updated in the learning process, avoiding the manual selection of observable functions as in [16].

III The Basic Model Description of Autonomous Vehicles

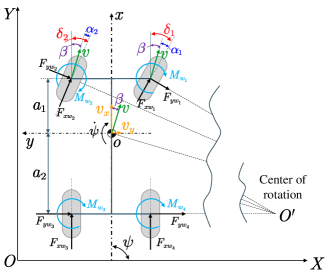

In this paper, we consider the type of autonomous vehicles consisting of four wheels with front-wheel-steering functionality. A sketch of the vehicle dynamics is depicted in Figure 1.

As shown in Figure 1, according to [3, 39], the longitudinal and lateral forces can be represented as

| (1) |

where , denote the longitudinal and lateral forces of tire , denotes the wheel angle of tire , and . The term denotes the longitudinal and lateral air-resistance forces applied to the vehicle body. The parameter , , and denote the air mass density, the frontal area to the airflow, and the relative air speed respectively, and where and are drag coefficients defined as configurable functions with respect to the yaw angle. and can be calculated by the Pacejka model in [4, 39], which is an empirical formula given as

| (2) |

where , and where is the tire load while is a function with respect to the longitudinal slip and sideslip angle . Besides, and are functions with respect to the steering wheel angle and the engine (stands for the throttle if and for the brake otherwise). That is to say, is a function with respect to and , i.e. . and are shape factors and , where is the cornering stiffness. According to the Newton-Euler equation, we can obtain equations of motion as follows:

| (3a) | |||

| (3d) | |||

| (3g) | |||

where and are the longitudinal and lateral velocities respectively, is the mass of vehicle, is the yaw moment of inertia, is the yaw angle, is the slip angle, , and are the distances between the front and rear axles to the center of gravity, and .

Combining (1)-(3g), it is possible to write the vehicle dynamics with interactions in the longitudinal and lateral directions, that is

| (4) |

where the state , , where the steering wheel angle establishes a relation with , i.e., . The choice of dynamics constructed as (4) avoids measuring , , and with expensive sensors, but it is challenging from the modeling viewpoint due to the strong nonlinearity hidden in . For this reason, we propose a deep neural network method based on the Koopman operator to capture the global dynamics of (4) with a linear model in a lifted abstract state space.
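For intuition about the tire model referenced in (2), the following sketch evaluates the widely used general form of the Pacejka Magic Formula, F = D sin(C arctan(Bs - E(Bs - arctan(Bs)))), where s is a slip quantity. It is not claimed to be the exact parametrization of (2); the function name `magic_formula` and all coefficient values are hypothetical and chosen only for illustration.

```python
import math

def magic_formula(slip, B, C, D, E):
    """General Pacejka 'Magic Formula' for a tire force.

    slip : longitudinal slip ratio or sideslip angle (rad)
    B    : stiffness factor
    C, E : shape factors
    D    : peak value of the force
    """
    bx = B * slip
    return D * math.sin(C * math.atan(bx - E * (bx - math.atan(bx))))

# Hypothetical coefficients, for illustration only (not taken from the paper).
B, C, D, E = 10.0, 1.9, 4000.0, 0.97

for slip in (0.0, 0.02, 0.05, 0.10, 0.20):
    print(f"slip={slip:5.2f}  force={magic_formula(slip, B, C, D, E):9.1f}")
```

In this general form the slope of the curve at zero slip equals B·C·D, which is why the stiffness factor is usually tied to the cornering stiffness; the saturation at larger slip is the source of the strong nonlinearity near the friction limit.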

IV The Deep EDMD Approach for Vehicle Dynamics Modeling

In this section, we first give the formulation with the Koopman operator and its numerical approximation with EDMD for approximating vehicle dynamics. Then, we present the main idea of the Deep EDMD algorithm for modeling the vehicle dynamics, where a deep neural network is utilized to construct an observable subspace of the Koopman operator automatically.

IV-A The Koopman operator using EDMD

The Koopman operator was initially proposed for capturing the intrinsic characteristics of unforced nonlinear dynamics via a linear dynamical evolution. With a slight change, the Koopman operator can also be used for representing systems with control forces. A rigorous definition of the Koopman operator can be found in [13, 23]. Here, we instead formulate our modeling problem to fit the Koopman operator framework. Let

| (5) |

be the discrete-time version of (4) with a specified sampling interval, where is the index in discrete-time. Let , where , and , is a shift operator. One can define the Koopman operator on (5) with the extended complete state space being described as

| (6) |

where denotes the Koopman operator, is the composition of with , is the observable in the lifted space. (6) can be seen as representing dynamics (4) via the Koopman operator with a lifted (infinite) observable space via a linear evolution. As the state is of infinite horizon, for practical reasons we adopt in the rest of this paper for approximating the Koopman operator with a finite horizon. In the classic Koopman operator, the observables are eigenfunctions associated with eigenvalues , which reflects the intrinsic characteristics of the nonlinear dynamics. Let be approximated with Koopman eigenfunctions and modes corresponding to the eigenvalues . One can predict the nonlinear system at any time in the lifted observable space as follows [27]:

| (7) |
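To make the idea of linear evolution in a lifted space concrete, the sketch below uses a standard textbook toy system (not the vehicle model) for which three observables span an invariant subspace, so the lifted dynamics are exactly linear. The system, the constants `LAM` and `MU`, and the function names are illustrative assumptions.

```python
# Toy discrete-time nonlinear system:
#   x1+ = LAM * x1
#   x2+ = MU * x2 + (LAM**2 - MU) * x1**2
# The observables (x1, x2, x1**2) evolve linearly under the matrix K below.
LAM, MU = 0.9, 0.5

def step_nonlinear(x1, x2):
    return LAM * x1, MU * x2 + (LAM ** 2 - MU) * x1 ** 2

def lift(x1, x2):
    return [x1, x2, x1 ** 2]

K = [
    [LAM, 0.0, 0.0],
    [0.0, MU, LAM ** 2 - MU],
    [0.0, 0.0, LAM ** 2],
]

def step_lifted(z):
    return [sum(K[i][j] * z[j] for j in range(3)) for i in range(3)]

x = (1.0, -0.5)
z = lift(*x)
for _ in range(20):
    x = step_nonlinear(*x)   # propagate the nonlinear system
    z = step_lifted(z)       # propagate the linear lifted model

# Largest mismatch between lifting the true state and the linear prediction.
max_gap = max(abs(a - b) for a, b in zip(lift(*x), z))
print(max_gap)
```

For general systems such as the vehicle dynamics no finite set of hand-picked observables is known to be invariant, which is what motivates approximating the operator from data.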

The main idea of EDMD for approximating the Koopman operator is to represent the observable functions with basis functions and compute a finite-horizon version of the Koopman operator using the least-squares method. In this case, the observable functions can be designed with multiple types of weighted basis functions, e.g. radial basis functions (RBF) with different kernel centers and widths:

| (8) |

where , , , are basis functions, and where , and is the weighting matrix. With (8), (6) becomes

| (9) |

where is a residual term. To optimize , is considered as the cost to be minimized. For forced dynamics, i.e. the vehicle dynamics (4), we define where and corresponding to the former lines of and be the former columns of the last m lines of . Therefore, the lifted vehicle dynamics based on the Koopman operator can be written as

| (10) |

where . The analytical solution of , can be computed via minimizing the residual term

| (11) |

where . Its analytical solution is

| (12) |

where , . Similarly, we can obtain the matrix by solving:

| (13) |

leading to

| (14) |

where , .
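The least-squares steps above can be sketched numerically as follows. This is a minimal illustration assuming lifted snapshots stored column-wise and a lifted model of the form z_{k+1} ≈ A z_k + B u_k with x_k ≈ C z_k; the function name `edmd_with_control`, the matrix sizes, and the synthetic data are hypothetical. The pseudo-inverse is used in place of an explicit normal-equation solution, to which it is equivalent when the regressor matrix has full row rank.

```python
import numpy as np

def edmd_with_control(Z, Z_next, U, X):
    """Fit z_{k+1} ~ A z_k + B u_k and x_k ~ C z_k by least squares.

    Z, Z_next : (K, M) lifted snapshots at steps k and k+1
    U         : (m, M) control inputs at step k
    X         : (n, M) original states at step k
    """
    K = Z.shape[0]
    W = np.vstack([Z, U])              # stacked regressors [z; u]
    AB = Z_next @ np.linalg.pinv(W)    # minimizes ||Z_next - [A B] W||_F
    A, B = AB[:, :K], AB[:, K:]
    C = X @ np.linalg.pinv(Z)          # minimizes ||X - C Z||_F
    return A, B, C

# Synthetic check: data generated by a known lifted linear model.
rng = np.random.default_rng(0)
K, m, n, M = 4, 2, 3, 200              # hypothetical dimensions
A_true = 0.5 * rng.standard_normal((K, K))
B_true = rng.standard_normal((K, m))
C_true = rng.standard_normal((n, K))
Z = rng.standard_normal((K, M))
U = rng.standard_normal((m, M))

A, B, C = edmd_with_control(Z, A_true @ Z + B_true @ U, U, C_true @ Z)
print(np.abs(A - A_true).max(), np.abs(B - B_true).max(), np.abs(C - C_true).max())
```

With real data the lifted snapshots would come from evaluating the basis functions on measured states, and the fit would only be approximate.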

IV-B The Deep EDMD Algorithm

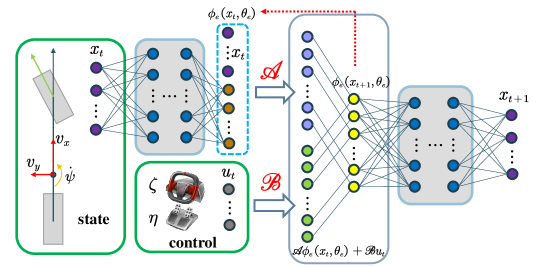

Different from EDMD, in this subsection we propose a Deep EDMD algorithm for modeling the vehicle dynamics, in which a deep neural network is utilized to construct an observable subspace of the Koopman operator automatically. Also, the merit of Deep EDMD with respect to classic multi-layer perceptrons is that the deep learning network is integrated into the EDMD method to learn a finite-dimensional estimate of the Koopman operator. To proceed, one can write the approximated dynamics resulting from Deep EDMD in the following form:

| (15) |

where , , is the encoder parameterized with , is the decoder parameterized with . With a slight abuse of notations, in the rest of the paper we use to stand for unless otherwise specified.

As shown in Fig. 2, the Deep EDMD algorithm relies on an autoencoder structure. The encoder, i.e., , consisting of fully-connected layers, is in charge of mapping the original state to a lifted observable space. The weights and are connected to the last layer of the encoder without activation functions. In principle, and can be trained synchronously with the encoder. In case the encoder experiences unanticipated errors or vanishing gradients, and can instead be updated in the last training step, so that in the worst case Deep EDMD degrades into EDMD. Similar to the encoder, the decoder consists of fully-connected layers and is devoted to recovering the original state from the lifted observable space. To be specific, at any hidden layer , the output can be described as

| (16) |

where in turn stands for the subscripts for encoder or decoder, and are the weight and bias of the hidden layer , where denotes the activation functions of the hidden layer of the encoder and decoder, , where is the number of layers of the encoder and decoder. In the encoder, , , is designed using rectified linear units (ReLU), see [40], while no activation function is used in the last layer. As for the decoder, , uses ReLU as the activation, while the last layer adopts a sigmoid activation function. In this way, the lifted state and the predicted state can be computed with the last layer of the parameterized networks, i.e.,

| (17) |

| (18) |

Concerning (16), one can promptly get the whole expressions of the encoder from (17) as well as the decoder from (18).
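The layer structure described above can be sketched as a plain forward pass: ReLU on the hidden layers, a linear last layer for the encoder, and a sigmoid last layer for the decoder. The layer widths, the state and lifted dimensions, and the initialization used here are hypothetical placeholders, not the values used in the paper.

```python
import numpy as np

def relu(v):
    return np.maximum(v, 0.0)

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def init_mlp(sizes, rng):
    """One (weight, bias) pair per layer; small random weights, zero biases."""
    return [(0.1 * rng.standard_normal((n_out, n_in)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(params, x, last_activation=None):
    """ReLU on every hidden layer; the last layer is linear unless told otherwise."""
    h = x
    for idx, (W, b) in enumerate(params):
        h = W @ h + b
        if idx < len(params) - 1:
            h = relu(h)
        elif last_activation is not None:
            h = last_activation(h)
    return h

rng = np.random.default_rng(0)
n_state, n_lift = 3, 8                        # hypothetical dimensions
encoder = init_mlp([n_state, 16, 16, n_lift], rng)
decoder = init_mlp([n_lift, 16, 16, n_state], rng)

x = np.array([0.4, 0.5, 0.6])                 # a normalized state sample
z = forward(encoder, x)                       # lifted state, linear last layer
x_hat = forward(decoder, z, sigmoid)          # reconstruction, values in (0, 1)
print(z.shape, x_hat)
```

Because the encoder's last layer has no activation, the lifted state enters the linear model without being clipped; the sigmoid on the decoder output presumes states scaled to the unit interval.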

As the objective is to approximate the vehicle dynamics over a long time window, the multi-step prediction error, instead of the one-step one, is to be minimized. Hence the state and control sequences with time information are used to formulate the optimization problem. Different from EDMD with its one-step prediction approximation, the resulting problem is difficult to solve analytically, but it can be trained in a data-driven manner. To introduce the multi-step prediction loss function, we first write the state prediction in time steps:

| (19) |

where is the -step ahead state starting from , i.e.,

| (20) | ||||

Specifically, we minimize the sum of prediction errors along the time steps, which leads to the loss function being defined as

| (21) |

Another way of assessing the modeling capability of Deep EDMD is to evaluate the prediction error in the lifted observable space, i.e., the error between the state evolution in the lifted space and the lifted state trajectory mapped from the real dynamics should be minimized. To this end, we adopt the loss function in the lifted linear space as

| (22) |

In order to minimize the reconstruction error, the following loss function about the decoder is to be minimized:

| (23) |

Also, to guarantee the robustness of the proposed algorithm, a loss function in the infinity norm is adopted, i.e.,

| (24) | ||||

With the above loss functions introduced, the resulting learning algorithm aims to solve the following optimization problem:

| (25) |

where is the overall optimization function defined as

| (26) |

where , , are the weighting scalars, , in turn is a regularization term used for avoiding over-fitting.
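The loss terms described above can be sketched as follows for one training sequence: a multi-step prediction error in the original space, a prediction error in the lifted space, a reconstruction error, a worst-case (infinity-norm) error, and an L2 regularization term. The exact normalizations, the precise form of the infinity-norm term in (24), and the weighting values are not reproduced here; the dictionary keys, function names, and the identity encoder used in the check are illustrative assumptions.

```python
import numpy as np

def multi_step_losses(encode, decode, A, B, xs, us):
    """xs: (p+1, n) states x_k..x_{k+p}; us: (p, m) inputs u_k..u_{k+p-1}."""
    p = len(us)
    z = encode(xs[0])
    pred = lifted = worst = 0.0
    for i in range(p):
        z = A @ z + B @ us[i]                  # linear evolution in the lifted space
        x_hat = decode(z)
        pred += np.sum((xs[i + 1] - x_hat) ** 2)
        lifted += np.sum((encode(xs[i + 1]) - z) ** 2)
        worst = max(worst, float(np.max(np.abs(xs[i + 1] - x_hat))))
    recon = np.mean([np.sum((x - decode(encode(x))) ** 2) for x in xs])
    return {"pred": pred / p, "lifted": lifted / p,
            "recon": float(recon), "inf": worst}

def total_loss(losses, weights, params, l2=1e-4):
    """Weighted sum of the loss terms plus an L2 penalty on the parameters."""
    reg = sum(float(np.sum(w ** 2)) for w in params)
    return sum(weights[k] * losses[k] for k in losses) + l2 * reg

# Check on data produced by a known linear model with identity encoder/decoder.
A = np.array([[0.9, 0.1], [0.0, 0.8]])
B = np.array([[0.0], [0.5]])
us = np.ones((5, 1))
xs = [np.array([1.0, -1.0])]
for u in us:
    xs.append(A @ xs[-1] + B @ u)
xs = np.array(xs)

identity = lambda v: v
exact = multi_step_losses(identity, identity, A, B, xs, us)
wrong = multi_step_losses(identity, identity, 0.9 * A, B, xs, us)
print(exact, wrong["pred"])
```

In a training loop the same quantities would be computed with the neural encoder and decoder inside an automatic-differentiation framework, so that the network weights and the linear model can be updated jointly.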

Assuming that the optimization problem (25) is solved, the resulting approximated dynamics for (4) can be given as

| (27) |

The training is performed in a batch-mode manner.

V MPC with Deep EDMD for trajectory tracking of autonomous vehicles

In this section, a novel model predictive controller based on Deep EDMD, i.e., DE-MPC, is proposed to show how the learned dynamical model can be utilized for controlling the nonlinear system with good performance. To this end, the learned model (27) is firstly used to define an augmented version with the input treated as the extended state, i.e.,

| (28) |

where ,

. And the state initialization at any time instant is given as

| (29) |
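One common way to treat the input as an extended state is the incremental-input form sketched below, with extended state xi = [z; u_prev] and the control increment du = u - u_prev as the new input. This is an assumption about the structure of (28), shown only to illustrate the idea; the matrix names and the random test model are hypothetical.

```python
import numpy as np

def augment(A, B, C):
    """Incremental-input form with xi = [z; u_prev] and input du = u - u_prev."""
    K, m = B.shape
    A_aug = np.block([[A, B], [np.zeros((m, K)), np.eye(m)]])
    B_aug = np.vstack([B, np.eye(m)])
    C_aug = np.hstack([C, np.zeros((C.shape[0], m))])
    return A_aug, B_aug, C_aug

# Hypothetical lifted model, used only to check that both forms agree.
rng = np.random.default_rng(1)
K, m, n = 4, 2, 3
A = 0.3 * rng.standard_normal((K, K))
B = rng.standard_normal((K, m))
C = rng.standard_normal((n, K))
A_aug, B_aug, C_aug = augment(A, B, C)

z = rng.standard_normal(K)
u_prev = np.zeros(m)
xi = np.concatenate([z, u_prev])
gap = 0.0
for _ in range(10):
    u = rng.standard_normal(m)
    z = A @ z + B @ u                          # original lifted model
    xi = A_aug @ xi + B_aug @ (u - u_prev)     # augmented model driven by increments
    u_prev = u
    gap = max(gap, float(np.max(np.abs(C @ z - C_aug @ xi))))
print(gap)
```

Penalizing and constraining the increment rather than the input itself is what allows the controller to limit the rate of change of steering, throttle, and brake commands.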

We are now ready to state a model predictive controller with (28) and (29). The objective of DE-MPC is to track the time-varying reference , which can be computed with the lifted observable function using the reference trajectory. To obtain satisfactory tracking performance, we also include the original state as part of the abstract state in the lifted space. Therefore, at any time instant , the finite horizon optimization problem can be stated as

| (30c) | |||

subject to: 1) the model constraint (28) with initialization (29); 2) the constraints on the control and its increment:

| (30d) | |||

| (30e) | |||

where is the prediction horizon, is the control horizon, , are positive-definite matrices for penalizing the tracking errors and the control increment; while is the penalty matrix of the slack variable and is a vector with all the entries being . Note that we use and the control increment is assumed for all . In the following we will reformulate (30c) in a more straightforward manner. First, let , one can compute

| (31) |

where

, for , and , . Therefore, we can reformulate optimization problem (30c) as

| (32) | ||||

where , , and , and is defined as the sequence . The matrices and .

Let be the optimal solution to (32) at time , the control applied to the system is

| (33) |

Then the optimization problem is solved again in the time according to the moving horizon strategy. To clearly illustrate the DE-MPC algorithm, the implementation steps are summarized in Algorithm 2.
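The condensed formulation and the moving horizon strategy can be sketched as below for a linear model in incremental-input form. This is a simplified illustration, not the controller of Algorithm 2: the input and increment constraints and the slack variable are omitted, so the problem has a closed-form solution instead of requiring a QP solver, and a hypothetical scalar plant stands in for the lifted vehicle model. In DE-MPC the lifted state would additionally be reset from the measured state through the encoder at every step.

```python
import numpy as np

def prediction_matrices(A, B, C, Np, Nc):
    """Stack y_{k+i} = C A^i xi_k + sum_j C A^(i-1-j) B du_{k+j} for i = 1..Np,
    with du_{k+j} = 0 for j >= Nc."""
    q, m = C.shape[0], B.shape[1]
    F = np.vstack([C @ np.linalg.matrix_power(A, i) for i in range(1, Np + 1)])
    G = np.zeros((Np * q, Nc * m))
    for i in range(1, Np + 1):
        for j in range(min(i, Nc)):
            G[(i - 1) * q:i * q, j * m:(j + 1) * m] = (
                C @ np.linalg.matrix_power(A, i - 1 - j) @ B)
    return F, G

def first_move(F, G, xi, y_ref, q_w, r_w, m):
    """Unconstrained minimizer of q_w*||y_ref - F xi - G dU||^2 + r_w*||dU||^2;
    only the first control increment is returned."""
    H = q_w * G.T @ G + r_w * np.eye(G.shape[1])
    dU = np.linalg.solve(H, q_w * G.T @ (y_ref - F @ xi))
    return dU[:m]

def closed_loop(Np, Nc, steps=60, ref=1.0):
    # Hypothetical scalar plant z+ = 0.8 z + 0.5 u, written with xi = [z, u_prev].
    A = np.array([[0.8, 0.5], [0.0, 1.0]])
    B = np.array([[0.5], [1.0]])
    C = np.array([[1.0, 0.0]])
    F, G = prediction_matrices(A, B, C, Np, Nc)
    xi = np.zeros(2)
    for _ in range(steps):
        du = first_move(F, G, xi, ref * np.ones(Np), 1.0, 0.1, 1)
        xi = A @ xi + B @ du       # apply the first increment, then re-solve
    return float((C @ xi)[0])

print(closed_loop(Np=10, Nc=3))
```

Since the prediction is linear in the increments, the constrained version remains a convex quadratic program, which is what keeps the online computation cheap even for long horizons.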

VI Simulation and Performance Evaluation

In this section, the proposed Deep EDMD method was validated in a high-fidelity CarSim simulation environment. The comparisons with EDMD, ELM-EDMD, and DNN were considered to show the effectiveness of our approach.

VI-A Data Collection

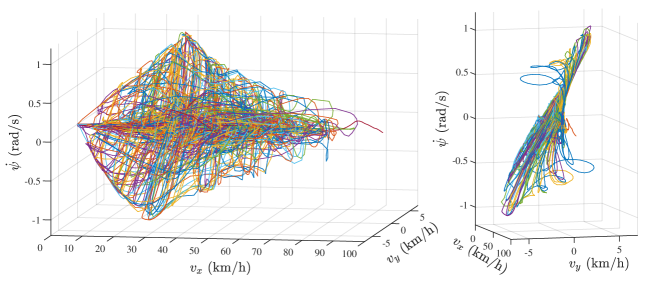

We validated our approach in a high-fidelity CarSim environment, where the dynamics were modeled with real vehicle data sets. Specifically, we chose a C-Class sedan in the CarSim environment as the original car model. The input-output data sets of the adopted vehicle were collected by driving it with a Logitech G29 Driving Force steering wheel and pedals in CarSim 2019 combined with Simulink/MATLAB 2017b. The collected data sets consisted of 40 episodes, each containing 10000 to 40000 time steps of data. In the data collection, the sampling period was chosen as . The initial state was set to zero for each episode, i.e., . Besides, the engine throttle was initialized as , while the steering wheel angle was initialized randomly by the initial angle of the physical steering wheel. In the data collection process, we allowed only one of the throttle and the brake to be active at a time, and the values of the throttle and brake are in the range of and . Besides, the value of the steering wheel angle was limited to the range of . Fig. 3 shows the distribution of the collected data sets, where each path represents a whole episode.

| Hyperparameter | Value |
| --- | --- |
| Learning rate | |
| Batch size | 64 |
| | 10 |
| | 41 |
| | 1.0 |
| | 1.0 |
| | 0.3 |
| | 1.0 |

VI-B Data preprocessing and training

The collected data sets were normalized according to the following rule

| (34) |

We collected about frames of snapshot data over 40 episodes and randomly chose 2 episodes as the testing set and 2 episodes for validation; the remaining 36 episodes were used as the training set. In the training process, every two adjacent state samples were concatenated as new state data. Also, to enrich the diversity of the training data, we randomly generated the sampling starting point in the range of so that data sequences could be sampled from a different starting position before each training epoch.
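The episode split and the random starting point can be sketched as follows. The 40/36/2/2 split follows the text; the window length, the maximum offset, and the function names are hypothetical, since the corresponding values are not available here.

```python
import random

def split_episodes(n_episodes=40, n_test=2, n_val=2, seed=0):
    """Shuffle episode indices and return (train, val, test) index lists."""
    ids = list(range(n_episodes))
    random.Random(seed).shuffle(ids)
    return ids[n_test + n_val:], ids[n_test:n_test + n_val], ids[:n_test]

def sample_windows(episode, window, max_offset, rng):
    """Cut one episode into non-overlapping windows, starting at a random offset."""
    start = rng.randint(0, max_offset)     # inclusive on both ends
    return [episode[i:i + window]
            for i in range(start, len(episode) - window + 1, window)]

train, val, test = split_episodes()

rng = random.Random(1)
episode = list(range(100))                 # stand-in for one episode of samples
windows = sample_windows(episode, window=11, max_offset=10, rng=rng)
print(len(train), len(val), len(test), len(windows))
```

Calling `sample_windows` again before each epoch shifts the window boundaries, so the network sees differently aligned sequences from the same episodes.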

In Deep EDMD, the encoder had five layers with the structure chosen as . The structure of the decoder was set as . The simulations were performed with being set as . All the parameters used in the simulation are listed in the hyperparameter table above. All the weights were initialized with a uniform distribution limiting each weight to the range for , where is the number of the network layer [41].

For comparison, an EDMD and an ELM-EDMD were designed to show the effectiveness of the proposed approach. In the EDMD method, we used the kernel functions described in [16] to construct the observable functions. We adopted thin plate spline radial basis functions as the basis functions and the kernel centers were chosen randomly with a normal distribution

| (35) |

where are the centers of the basis functions , , and are the standard deviation and mean of the data sets. The ELM-EDMD method adopted the same structure as the EDMD approach, except for the kernel functions being replaced by the extreme learning machines for learning the observable functions.
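The EDMD baseline's lifting can be sketched as below, using the standard thin plate spline radial basis function φ(r) = r² log r with centers drawn from a normal distribution matching the per-channel mean and standard deviation of the data. The number of centers, the synthetic data, and the choice to stack the original state on top of the features are illustrative assumptions.

```python
import numpy as np

def thin_plate_spline_features(X, centers):
    """phi(r) = r^2 * log(r), with r the distance from a sample to a center.

    X: (n, M) samples as columns; centers: (n, K). Returns a (K, M) array.
    """
    diff = X[:, None, :] - centers[:, :, None]     # (n, K, M)
    r = np.linalg.norm(diff, axis=0)               # (K, M)
    safe_r = np.where(r > 0.0, r, 1.0)             # avoids log(0); phi(0) = 0
    return r ** 2 * np.log(safe_r)

def sample_centers(X, n_centers, rng):
    """Draw centers from a normal distribution fitted per state channel."""
    mean = X.mean(axis=1, keepdims=True)
    std = X.std(axis=1, keepdims=True)
    return mean + std * rng.standard_normal((X.shape[0], n_centers))

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(3, 50))           # synthetic normalized states
centers = sample_centers(X, n_centers=6, rng=rng)

# Lifted snapshots: original state stacked with the RBF features (an assumption).
Z = np.vstack([X, thin_plate_spline_features(X, centers)])
print(Z.shape)
```

The resulting snapshot matrix is what a least-squares EDMD fit would consume; the quality of the model then depends directly on how well the randomly placed centers cover the operating range.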

Also, a DNN method was used for comparison, to show the strength of our approach in terms of modeling and robustness. The structure of the DNN method was chosen as . Two scenarios are considered in the comparison. In the first scenario, all the parameters were regarded as optimization variables to be learned, while in the second scenario, an additional hidden layer was added in the Deep EDMD method and the DNN method respectively, whose weights and biases were updated randomly with a normal distribution during the whole training process. The loss function adopted in DNN was given as follows:

| (36) | ||||

where denotes the predicted next state, is the trainable weights and biases of DNN. The first term denotes the multi-step prediction loss, the second term is the infinity norm penalizing the largest prediction error, and the last term is the regularization term used for avoiding over-fitting. The scalars , and are the weights of each term, and their values are shown in the hyperparameter table. The EDMD method was trained in the MATLAB 2017b environment with an Intel i9-9900K@3.6GHz, while Deep EDMD, ELM-EDMD, and DNN were trained using the Python API of the TensorFlow [42] framework with an Adam optimizer [43] on an NVIDIA GeForce RTX 2080 Ti GPU.

VI-C Performance evaluation

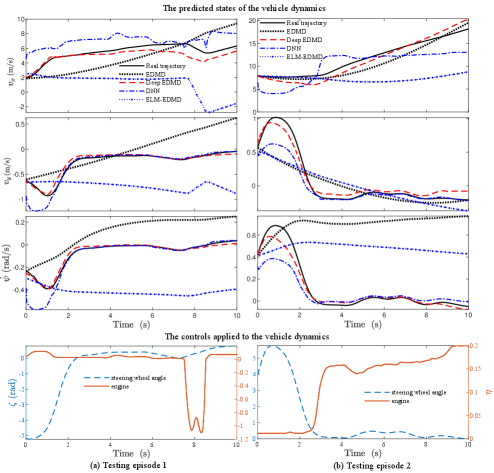

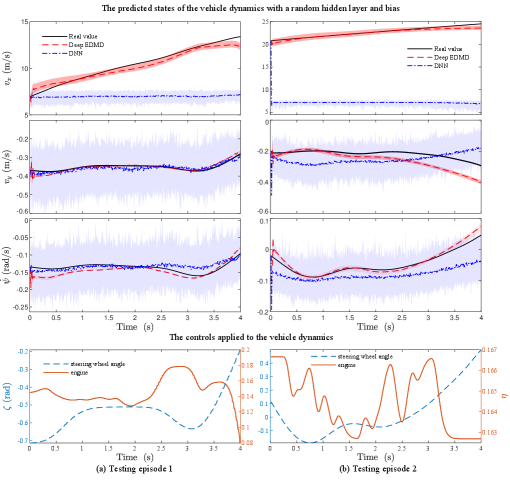

Validation of the learned model with Deep EDMD: For numerical comparison, the trained models with all the algorithms were validated with the same training data sets. The resulting state predictions of all the algorithms under the same control profile are illustrated in Fig. 4. It can be seen that the resulting Deep EDMD models can capture the changes of the velocities in the longitudinal and lateral directions, which means the models are effective over a wide operating range. Also, the root mean square errors (RMSEs) of each algorithm corresponding to the trajectories in Fig. 4 are listed in the table of prediction RMSEs below, which shows that the RMSEs of the proposed Deep EDMD for , , and are the smallest among all the approaches. In addition, in the two testing cases, the results obtained with Deep EDMD using , are better than those with EDMD and ELM-EDMD. Specifically, the state trajectories obtained with EDMD and ELM-EDMD diverge after a short time period. It is worth noting that DNN exhibits comparable performance to Deep EDMD in the prediction of lateral velocity and yaw rate, but shows larger prediction errors in the prediction of longitudinal velocity.
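For reference, the per-state RMSE used in such comparisons can be computed as in the sketch below. The two short trajectories are made-up numbers for illustration, not results from the experiments, and the function name is hypothetical.

```python
import math

def rmse_per_channel(truth, pred):
    """Root mean square error of each state channel over a trajectory.

    truth, pred: equally long sequences of state tuples.
    """
    n, dims = len(truth), len(truth[0])
    return [math.sqrt(sum((t[d] - p[d]) ** 2 for t, p in zip(truth, pred)) / n)
            for d in range(dims)]

# Made-up trajectories: (longitudinal velocity, lateral velocity, yaw rate).
truth = [(10.0, 0.0, 0.00), (10.5, 0.1, 0.02), (11.0, 0.2, 0.04)]
pred = [(10.2, 0.0, 0.01), (10.4, 0.1, 0.02), (11.3, 0.1, 0.03)]
print(rmse_per_channel(truth, pred))
```

Reporting one value per channel, as in the tables, keeps the large-magnitude longitudinal velocity from masking errors in the lateral velocity and yaw rate.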

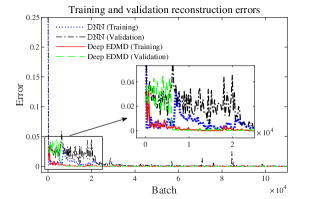

To fully compare our method and DNN, the training and validation errors collected in the training process are shown in Fig. 5. It can be seen that, after 30000 batches of training, the training and validation reconstruction losses of Deep EDMD with converge to and respectively, and to and after 100000 batches. As for DNN, the training and validation losses converge to and after 30000 batches, and to and after 100000. DNN thus converges more slowly than our approach.

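RMSEs of the state predictions in the two test episodes.

| State | Episode 1: EDMD | Episode 1: Deep EDMD | Episode 1: DNN | Episode 1: ELM-EDMD | Episode 2: EDMD | Episode 2: Deep EDMD | Episode 2: DNN | Episode 2: ELM-EDMD |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Longitudinal velocity | 1.99 | 0.75 | 1.78 | 4.88 | 1.42 | 1.15 | 2.80 | 5.61 |
| Lateral velocity | 0.36 | 0.04 | 0.14 | 0.55 | 0.25 | 0.08 | 0.16 | 0.27 |
| Yaw rate | 0.20 | 0.02 | 0.08 | 0.37 | 0.66 | 0.05 | 0.12 | 0.44 |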

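RMSEs of the state predictions with the additional random hidden layer.

| State | Episode 1: Deep EDMD | Episode 1: DNN | Episode 2: Deep EDMD | Episode 2: DNN |
| --- | --- | --- | --- | --- |
| Longitudinal velocity | 0.4 | 3.86 | 0.35 | 15.56 |
| Lateral velocity | 0.01 | 0.01 | 0.05 | 0.06 |
| Yaw rate | 0.01 | 0.01 | 0.01 | 0.04 |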

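RMSEs of reference tracking with DE-MPC under the two horizon choices.

| State | First horizon choice | Second horizon choice |
| --- | --- | --- |
| Longitudinal velocity | 1.38 | 0.93 |
| Lateral velocity | 0.20 | 0.18 |
| Yaw rate | 0.08 | 0.05 |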

In order to show the robustness of our approach, the models of Deep EDMD and DNN obtained with an additional random layer and bias are used to generate state predictions over 100 repeated runs with different training data sets. The results of the state predictions are shown in Fig. 6, and the prediction RMSEs of Deep EDMD and DNN are collected in the table of RMSEs for the random-layer case. The results show that the average prediction errors and error variations with Deep EDMD are much smaller than those with DNN. This is due to the Koopman operator with the EDMD framework adopted in the proposed approach. Indeed, in the worst case, Deep EDMD with the random layer can be regarded as an EDMD framework, hence the robustness of the results can be guaranteed.

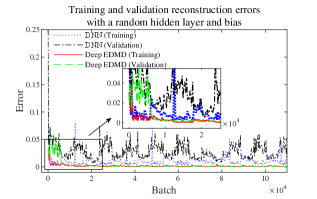

We also collect the training and validation reconstruction errors obtained with Deep EDMD and DNN. The results are displayed in Fig. 7, which shows that the random hidden layer has a large negative effect on the DNN-based training process: the associated training and validation errors fluctuate around 0.01 and 0.027, respectively. As for Deep EDMD with , the training and validation reconstruction errors converge to and eventually, which are much smaller than those with DNN.

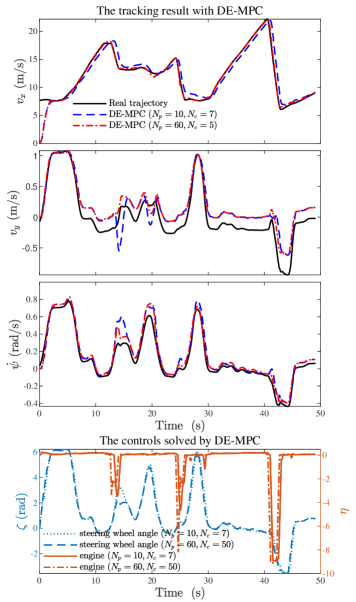

Trajectory tracking with DE-MPC: To further validate the potential of the proposed Deep EDMD approach, we have designed the DE-MPC controller for tracking a time-varying reference in the CarSim/Simulink simulation environment. In the simulation test, the steering wheel angle, the throttle, and the brake pressure are limited to the intervals , , and , respectively. Also, the control increments are limited to the ranges , , and , respectively. The state of the vehicle has been initialized as and , , . The reference signals for the output have been selected from the collected testing data set. The sampling interval in the simulation has been chosen as 10 ms.

To fully show the effectiveness of the learned model, the simulation tests have been performed under two different prediction horizon choices, i.e., , and , . The simulation results for the control tests are shown in Fig. 8 and the RMSEs of reference tracking under these two cases are listed in Table LABEL:tab:RMSE_comtrol_cases. It can be seen that satisfactory tracking performance is achieved in both cases. In the case and , a slight overshoot occurs when tracking the reference of and is recovered within a number of steps, while the controller performs better in the case , in terms of both tracking accuracy and smoothness. This is probably due to the larger prediction and control horizons being used. Despite the larger prediction horizon, the online computation remains efficient since the model constraint is linear. The average computational times for and are and , respectively. As a consequence, the real-time implementation requirement can be satisfied given the sampling interval of 10 ms.
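The efficiency argument above rests on the fact that, with a linear lifted model, the predicted outputs are affine in the control sequence. The following sketch illustrates this for a generic model z_{k+1} = A z_k + B u_k, y_k = C z_k with a quadratic tracking cost. It is a simplified illustration, not the DE-MPC controller itself: the matrices are hypothetical toy values, the prediction and control horizons are taken equal, and the input and increment limits are omitted (enforcing them turns the problem into a quadratic program).

```python
import numpy as np


def prediction_matrices(A, B, C, horizon):
    """Return Phi, Gamma such that [y_1; ...; y_N] = Phi z_0 + Gamma [u_0; ...; u_{N-1}]."""
    n_z, n_u, n_y = A.shape[0], B.shape[1], C.shape[0]
    Phi = np.zeros((horizon * n_y, n_z))
    Gamma = np.zeros((horizon * n_y, horizon * n_u))
    A_pow = np.eye(n_z)  # equals A^i at the start of iteration i
    for i in range(horizon):
        CAB = C @ A_pow @ B  # block C A^i B, shared by every block with row - col = i
        for j in range(horizon - i):
            r, c = (i + j) * n_y, j * n_u
            Gamma[r:r + n_y, c:c + n_u] = CAB
        A_pow = A @ A_pow  # now A^(i+1)
        Phi[i * n_y:(i + 1) * n_y, :] = C @ A_pow
    return Phi, Gamma


def mpc_first_move(A, B, C, z0, reference, rho=1e-3):
    """Minimize ||Y - R||^2 + rho ||U||^2 without constraints; return (u_0, U)."""
    horizon = reference.shape[0]
    n_u = B.shape[1]
    Phi, Gamma = prediction_matrices(A, B, C, horizon)
    residual = reference.reshape(-1) - Phi @ z0  # tracking error of the free response
    H = Gamma.T @ Gamma + rho * np.eye(horizon * n_u)
    U = np.linalg.solve(H, Gamma.T @ residual).reshape(horizon, n_u)
    return U[0], U


# Hypothetical toy model (a discretized double integrator), not a learned vehicle model.
A = np.array([[1.0, 0.1],
              [0.0, 1.0]])
B = np.array([[0.0],
              [0.1]])
C = np.array([[1.0, 0.0]])
z0 = np.zeros(2)
reference = np.ones((20, 1))  # track a unit output over 20 steps

u0, U = mpc_first_move(A, B, C, z0, reference)
print(u0)
```

In a receding-horizon loop, only `u0` is applied and the problem is solved again at the next sampling instant from the newly lifted state.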

VII Conclusions

In this paper, we propose a novel data-driven vehicle modeling and control approach based on deep neural networks with an interpretable Koopman operator. In the proposed approach, a deep learning-based extended dynamic mode decomposition (Deep EDMD) algorithm is presented to learn a finite set of basis functions of the Koopman operator. Based on the dynamic model learned by Deep EDMD, a novel model predictive controller called DE-MPC is presented for path tracking control of autonomous vehicles. In the proposed algorithm, deep neural networks serve as the encoder and decoder in the framework of EDMD for learning the Koopman operator. The differences between our approach and the classic DNN for modeling vehicle dynamics can be summarized as follows: i) in our approach, the deep networks are designed as the encoder and decoder to learn the Koopman eigenfunctions and Koopman modes, which is different from classic machine learning-based modeling methods; ii) the resulting model is a global predictor with a linear dynamic evolution and a nonlinear static mapping function, hence it is well suited to real-time implementation with optimization-based methods such as MPC.

Simulation studies with data sets obtained from a high-fidelity CarSim environment have been performed, including comparisons with EDMD, ELM-EDMD, and DNN. The results show the effectiveness of our approach and its advantage in modeling accuracy over EDMD, ELM-EDMD, and DNN. Also, our approach is more robust than DNN in the scenario where a random hidden layer and bias are added in the training process. We also tested the performance of DE-MPC for trajectory tracking in the CarSim environment. The satisfactory tracking performance further verifies the effectiveness of the proposed Deep EDMD and DE-MPC methods. Future research will utilize the learned model for trajectory tracking on a real-world experimental platform of autonomous vehicles.

References

- [1] J. Chen, W. Zhan, and M. Tomizuka, “Autonomous driving motion planning with constrained iterative lqr,” IEEE Transactions on Intelligent Vehicles, vol. 4, no. 2, pp. 244–254, 2019.

- [2] D. Moser, R. Schmied, H. Waschl, and L. del Re, “Flexible spacing adaptive cruise control using stochastic model predictive control,” IEEE Transactions on Control Systems Technology, vol. 26, no. 1, pp. 114–127, 2017.

- [3] R. Rajamani, Vehicle dynamics and control. Springer Science & Business Media, 2011.

- [4] H. Pacejka, Tire and vehicle dynamics. Elsevier, 2005.

- [5] R. B. Dieter Schramm, Manfred Hiller, Vehicle Dynamics. Springer Berlin Heidelberg, 2014.

- [6] B. A. H. Vicente, S. S. James, and S. R. Anderson, “Linear system identification versus physical modeling of lateral-longitudinal vehicle dynamics,” IEEE Transactions on Control Systems Technology, pp. 1–8, 2020.

- [7] K. Berntorp and S. Di Cairano, “Tire-stiffness and vehicle-state estimation based on noise-adaptive particle filtering,” IEEE Transactions on Control Systems Technology, vol. 27, no. 3, pp. 1100–1114, 2019.

- [8] C. Sierra, E. Tseng, A. Jain, and H. Peng, “Cornering stiffness estimation based on vehicle lateral dynamics,” Vehicle System Dynamics, vol. 44, no. sup1, pp. 24–38, 2006.

- [9] B. A. H. Vicente, S. S. James, and S. R. Anderson, “Linear system identification versus physical modeling of lateral-longitudinal vehicle dynamics,” IEEE Transactions on Control Systems Technology, 2020.

- [10] J. Na, Y. Huang, X. Wu, G. Gao, G. Herrmann, and J. Z. Jiang, “Active adaptive estimation and control for vehicle suspensions with prescribed performance,” IEEE Transactions on Control Systems Technology, vol. 26, no. 6, pp. 2063–2077, 2017.

- [11] N. A. Spielberg, M. Brown, N. R. Kapania, J. C. Kegelman, and J. C. Gerdes, “Neural network vehicle models for high-performance automated driving,” Science Robotics, vol. 4, no. 28, p. eaaw1975, 2019.

- [12] N. Deo and M. M. Trivedi, “Convolutional social pooling for vehicle trajectory prediction,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2018, pp. 1468–1476.

- [13] P. J. Schmid, “Dynamic mode decomposition of numerical and experimental data,” Journal of fluid mechanics, vol. 656, pp. 5–28, 2010.

- [14] I. G. Kevrekidis, C. W. Rowley, and M. O. Williams, “A kernel-based method for data-driven koopman spectral analysis,” Journal of Computational Dynamics, vol. 2, no. 2, pp. 247–265, 2016.

- [15] M. O. Williams, I. G. Kevrekidis, and C. W. Rowley, “A data–driven approximation of the koopman operator: Extending dynamic mode decomposition,” Journal of Nonlinear Science, vol. 25, no. 6, pp. 1307–1346, 2015.

- [16] V. Cibulka, T. Haniš, and M. Hromčík, “Data-driven identification of vehicle dynamics using koopman operator,” in 2019 22nd International Conference on Process Control (PC19). IEEE, 2019, pp. 167–172.

- [17] G.-B. Huang, Q.-Y. Zhu, and C.-K. Siew, “Extreme learning machine: theory and applications,” Neurocomputing, vol. 70, no. 1-3, pp. 489–501, 2006.

- [18] K.-i. Funahashi and Y. Nakamura, “Approximation of dynamical systems by continuous time recurrent neural networks,” Neural networks, vol. 6, no. 6, pp. 801–806, 1993.

- [19] X. Li and W. Yu, “Dynamic system identification via recurrent multilayer perceptrons,” Information sciences, vol. 147, no. 1-4, pp. 45–63, 2002.

- [20] G. Garimella, J. Funke, C. Wang, and M. Kobilarov, “Neural network modeling for steering control of an autonomous vehicle,” in 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2017, pp. 2609–2615.

- [21] M. Da Lio, D. Bortoluzzi, and G. P. Rosati Papini, “Modelling longitudinal vehicle dynamics with neural networks,” Vehicle System Dynamics, pp. 1–19, 2019.

- [22] B. O. Koopman, “Hamiltonian systems and transformation in hilbert space,” Proceedings of the national academy of sciences of the united states of america, vol. 17, no. 5, p. 315, 1931.

- [23] J. H. Tu, C. W. Rowley, D. M. Luchtenburg, S. L. Brunton, and J. N. Kutz, “On dynamic mode decomposition: Theory and applications,” arXiv preprint arXiv:1312.0041, 2013.

- [24] M. Korda and I. Mezić, “Linear predictors for nonlinear dynamical systems: Koopman operator meets model predictive control,” Automatica, vol. 93, pp. 149–160, 2018.

- [25] Q. Li, F. Dietrich, E. M. Bollt, and I. G. Kevrekidis, “Extended dynamic mode decomposition with dictionary learning: A data-driven adaptive spectral decomposition of the koopman operator,” Chaos: An Interdisciplinary Journal of Nonlinear Science, vol. 27, no. 10, p. 103111, 2017.

- [26] M. Korda and I. Mezić, “On convergence of extended dynamic mode decomposition to the koopman operator,” Journal of Nonlinear Science, vol. 28, no. 2, pp. 687–710, 2018.

- [27] S. E. Otto and C. W. Rowley, “Linearly recurrent autoencoder networks for learning dynamics,” SIAM Journal on Applied Dynamical Systems, vol. 18, no. 1, pp. 558–593, 2019.

- [28] J. Morton, F. D. Witherden, and M. J. Kochenderfer, “Deep variational koopman models: Inferring koopman observations for uncertainty-aware dynamics modeling and control,” in IJCAI, 2019.

- [29] B. Lusch, J. N. Kutz, and S. L. Brunton, “Deep learning for universal linear embeddings of nonlinear dynamics,” Nature communications, vol. 9, no. 1, pp. 1–10, 2018.

- [30] J. Morton, A. Jameson, M. J. Kochenderfer, and F. Witherden, “Deep dynamical modeling and control of unsteady fluid flows,” in Advances in Neural Information Processing Systems, 2018, pp. 9258–9268.

- [31] Y. Susuki, I. Mezic, F. Raak, and T. Hikihara, “Applied koopman operator theory for power systems technology,” Nonlinear Theory and Its Applications, IEICE, vol. 7, no. 4, pp. 430–459, 2016.

- [32] Y. Susuki and I. Mezić, “Nonlinear koopman modes and power system stability assessment without models,” IEEE Transactions on Power Systems, vol. 29, no. 2, pp. 899–907, 2013.

- [33] A. Avila and I. Mezić, “Data-driven analysis and forecasting of highway traffic dynamics,” Nature communications, vol. 11, no. 1, pp. 1–16, 2020.

- [34] B. W. Brunton, L. A. Johnson, J. G. Ojemann, and J. N. Kutz, “Extracting spatial–temporal coherent patterns in large-scale neural recordings using dynamic mode decomposition,” Journal of neuroscience methods, vol. 258, pp. 1–15, 2016.

- [35] H. Wu, F. Nüske, F. Paul, S. Klus, P. Koltai, and F. Noé, “Variational koopman models: slow collective variables and molecular kinetics from short off-equilibrium simulations,” The Journal of chemical physics, vol. 146, no. 15, p. 154104, 2017.

- [36] A. Mardt, L. Pasquali, H. Wu, and F. Noé, “Vampnets for deep learning of molecular kinetics,” Nature communications, vol. 9, no. 1, pp. 1–11, 2018.

- [37] S. Peitz and S. Klus, “Koopman operator-based model reduction for switched-system control of pdes,” Automatica, vol. 106, pp. 184–191, 2019.

- [38] D. Bruder, B. Gillespie, C. D. Remy, and R. Vasudevan, “Modeling and control of soft robots using the koopman operator and model predictive control,” arXiv preprint arXiv:1902.02827, 2019.

- [39] R. N. Jazar, Vehicle dynamics: theory and application. Springer, 2017.

- [40] V. Nair and G. E. Hinton, “Rectified linear units improve restricted boltzmann machines,” in Proceedings of the 27th international conference on machine learning (ICML-10), 2010, pp. 807–814.

- [41] I. Goodfellow, Y. Bengio, and A. Courville, Deep learning. MIT press, 2016.

- [42] M. Abadi, A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. S. Corrado, A. Davis, J. Dean, M. Devin et al., “Tensorflow: Large-scale machine learning on heterogeneous distributed systems,” arXiv preprint arXiv:1603.04467, 2016.

- [43] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.