This work was partially supported by JST CREST Grant Number JPMJCR2012, Japan and JSPS KAKENHI Grant Number JP21J10780, Japan.

Corresponding author: Junya Ikemoto (e-mail: ikemoto@hopf.sys.es.osaka-u.ac.jp).

Deep reinforcement learning under signal temporal logic constraints using Lagrangian relaxation

Abstract

Deep reinforcement learning (DRL) has attracted much attention as an approach to solve optimal control problems without mathematical models of systems. On the other hand, in general, constraints may be imposed on optimal control problems. In this study, we consider the optimal control problems with constraints to complete temporal control tasks. We describe the constraints using signal temporal logic (STL), which is useful for time sensitive control tasks since it can specify continuous signals within bounded time intervals. To deal with the STL constraints, we introduce an extended constrained Markov decision process (CMDP), which is called a -CMDP. We formulate the STL-constrained optimal control problem as the -CMDP and propose a two-phase constrained DRL algorithm using the Lagrangian relaxation method. Through simulations, we also demonstrate the learning performance of the proposed algorithm.

Index Terms:

Constrained Reinforcement Learning, Deep Reinforcement Learning, Lagrangian Relaxation, Signal Temporal Logic.=-15pt

I Introduction

Reinforcement learning (RL) is a machine learning method for sequential decision making problems [1]. In RL, a learner, which is called an agent, interacts with an environment and learns a desired policy automatically. Recently, RL with deep neural networks (DNNs) [2], which is called Deep RL (DRL), has attracted much attention for solving complicated decision making problems such as playing video games [3]. DRL has been studied in various fields and many practical applications of DRL have been proposed [4, 5, 6]. On the other hand, when we apply RL or DRL to a problem in the real world, we must specify a state space of an environment for the problem beforehand. The states of the environment need to include sufficient information in order to determine a desired action at each time. Additionally, we must design a reward function for the task. If we do not design it to evaluate behaviors precisely, the learned policy may not be appropriate for the task.

Recently, controller design methods for temporal control tasks such as periodic, sequential, or reactive tasks have been studied in the control system community [7]. In these studies, linear temporal logic (LTL) has often been used. LTL is one of temporal logics that have developed as formal methods in the computer science community [8]. LTL can express a temporal control task in a logical form.

LTL has also been applied to RL for temporal control tasks [9]. By using RL, we can obtain a policy to complete a temporal control task described by an LTL formula without a mathematical model of a system. The given LTL formula is transformed into an -automaton that is a finite-state machine and accepts all traces satisfying the LTL formula. The transformed automaton can express states that include sufficient information to complete the temporal control task. We regard a system’s state and an automaton’s state as an environment’s state for RL.The reward function for the temporal control task is designed based on the acceptance condition of the transformed automaton. Additionally, DRL algorithms for satisfying LTL formulae have been proposed in order to solve problems with continuous state-action spaces [10, 11].

In real world problems, it is often necessary to describe temporal control tasks with time bounds. Unfortunately, LTL cannot express the time bounds. Then, metric interval temporal logic (MITL) and signal temporal logic (STL) are useful [12]. MITL is an extension of LTL and has time-constrained temporal operators. Furthermore, STL is an extension of MITL. Although LTL and MITL have predicates over Boolean signals, STL has inequality formed predicates over real-valued signals, which is useful to specify dynamical system’s trajectories within bounded time intervals. Additionally, STL has a quantitative semantics called robustness that evaluates how well a system’s trajectory satisfies the given STL formula [13]. In the control system community, controller design methods to complete tasks described by STL formulae have been proposed [14, 15], where the control problems are formulated as constrained optimization problems using models of systems. Model-free RL-based controller design methods have also been proposed [16, 17, 18, 19]. In [16], Aksaray et al. proposed a Q-learning algorithm for satisfying a given STL formula. The satisfaction of the given STL formula is based on a finite trajectory of the system. Thus, as an environment’s state for a temporal control task, we use the extended state consisting of the current system’s state and the previous system’s states instead of using an automaton such as [9]. Additionally, we design a reward function using the robustness for the given formula. In [17], Venkataraman et al. proposed a tractable learning method using a flag state instead of the previous system’s state sequence to reduce the dimensionality of the environment’s state space. However, these methods cannot be directly applied to problems with a continuous state-action space because they are based on a classical tabular Q-learning algorithm. For problems with continuous spaces, in [18], Balakrishnan et al. introduced a partial signal and applied a DRL algorithm to design a controller that partially satisfies a given STL specification and, in [19], we proposed a DRL-based design of a network controller to complete an STL control task with network delays.

On the other hand, for some control problems, we aim to design a policy that optimizes a given control performance index under a constraint described by an STL formula. For example, in practical applications, we should operate a system in order to satisfy a given STL formula with minimum fuel costs. In this study, we tackle to obtain the optimal policy for a given control performance index among the policies satisfying a given STL formula without a mathematical model of a system.

I-A Contribution:

The main contribution is to propose a DRL algorithm to obtain an optimal policy for a given control performance index such as fuel costs under a constraint described by an STL formula. Our proposed algorithm has the following three advantages.

- 1.

-

2.

We obtain a policy that not only satisfies a given STL formula but also is optimal with respect to a given control performance index. We consider the optimal control problem constrained by a given STL formula and formulate the problem as a constrained Markov decision process (CMDP) [22]. In the CMDP problem, we introduce two reward functions: one is the reward function for the given control performance index and the other is the reward function for the given STL constraint. To solve the CMDP problem, we apply a constrained DRL (CDRL) algorithm with the Lagrangian relaxation [23]. In this algorithm, we relax the CMDP problem into an unconstrained problem using a Lagrange multiplier to utilize standard DRL algorithms for problems with continuous spaces.

-

3.

We introduce a two-phase learning algorithm in order to make it easy to learn a policy satisfying the given STL formula. In a CMDP problem, it is important to satisfy the given constraint. The agent needs many experiences satisfying the given STL formula in order to learn how to satisfy the formula. However, it is difficult to collect the experiences considering both the control performance index and the STL constraint in the early learning stage since the agent may prioritize to optimize its policy with respect to the control performance index. Thus, in the first phase, the agent learns its policy without the control performance index in order to obtain experiences satisfying the STL constraint easily, which is called pre-training. After obtaining many experiences satisfying the STL formula, in the second phase, the agent learns its optimal policy for the control performance index under the STL constraint, which is called fine-tuning.

Through simulations, we demonstrate the learning performance of the proposed algorithm.

I-B Related works:

I-B1 Classical RL for satisfying STL formulae

Aksaray et al. proposed a method to design policies satisfying STL formulae based on the Q-learning algorithm [16]. However, in the method, the dimensionality of an environment’s state tends to be large. Thus, Venkataraman et al. proposed a tractable learning method to reduce the dimensionality [17]. Furthermore, Kalagarla et al. proposed an STL-constrained RL algorithm using a CMDP formulation and an online learning method [24]. However, since these are tabular-based approaches, we cannot directly apply them to problems with continuous spaces.

I-B2 DRL for satisfying STL formulae

DRL algorithms for satisfying STL formulae have been proposed [18, 19]. However, these studies focused on satisfying a given STL formula as the main objective. On the other hand, in this study, we regard the given STL formula as a constraint of a control problem and tackle the STL-constrained optimal control problem using a CDRL algorithm with the Lagrangian relaxation.

I-B3 Learning with demonstrations for satisfying STL formulae

Learning methods with demonstrations have been proposed [25, 26]. They designed a reward function using demonstrations, which was an imitation learning method. On the other hand, in this study, we do not use demonstrations to design a reward function for satisfying STL formulae. Alternatively, we design a reward function for satisfying STL formulae using robustness and the log-sum-exp approximation [16].

I-C Structure:

The remainder of this paper is organized as follows. In Section II, we review STL and the Q-learning algorithm to learn a policy satisfying STL formulae briefly. In Section III, we formulate an optimal control problem under a constraint described by an STL formula as a CMDP problem. In Section IV, we propose a CDRL algorithm with the Lagrangian relaxation to solve the CMDP problem. We relax the CMDP problem to an unconstrained problem using a Lagrange multiplier to utilize the DRL algorithm for unconstrained problems with continuous spaces. In Section V, by numerical simulations, we demonstrate the usefulness of the proposed method. In Section VI, we conclude the paper and discuss future works.

I-D Notation:

is the set of the nonnegative integers. is the set of the real numbers. is the set of nonnegative real numbers. is the -dimensional Euclidean space. For a set , and are the maximum and minimum value in if they exist, respectively.

II Preliminaries

II-A Signal temporal logic

We consider the following discrete-time stochastic dynamical system.

| (1) |

where , , and are the system’s state, the agent’s control action, and the system noise at . , , and are the system’s state space, the control action space, and the system noise space, respectively. The system noise is an independent and identically distributed random variable with a probability density . is a regular matrix that is a weighting factor of the system noise. is a function that describes the system dynamics. Then, we have the transition probability density . The initial state is sampled from a probability density . For a finite system trajectory whose length is , denotes the partial trajectory for the time interval , where .

STL is a specification formalism that allows us to express real-time properties of real-valued trajectories of systems [12]. We consider the following syntax of STL.

where , , and are nonnegative constants for the time bounds, and are the STL formulae, is a predicate in the form of , is a function of the system’s state, and is a constant. The Boolean operators , , and are negation, conjunction, and disjunction, respectively. The temporal operators and refer to Globally (always) and Finally (eventually), respectively, where denotes the time bound of the temporal operator. , or , are called STL sub-formulae. comprises multiple STL sub-formulae .

The Boolean semantics of STL is recursively defined as follows:

The quantitative semantics of STL, which is called robustness, is recursively defined as follows:

which quantifies how well the trajectory satisfies the given STL formulae [13].

The horizon length of an STL formula is recursively defined as follows:

is the required length of the state sequence to verify the satisfaction of the STL formula .

II-B Q-learning for Satisfying STL Formulae

In this section, we review the Q-learning algorithm to learn a policy satisfying a given STL formula [16]. Although we often regard the current state of the dynamical system (1) as the environment’s state for RL, the current system’s state is not enough to determine an action for satisfying a given STL formula. Thus, Aksaray et al. defined the following extended state using previous system’s states.

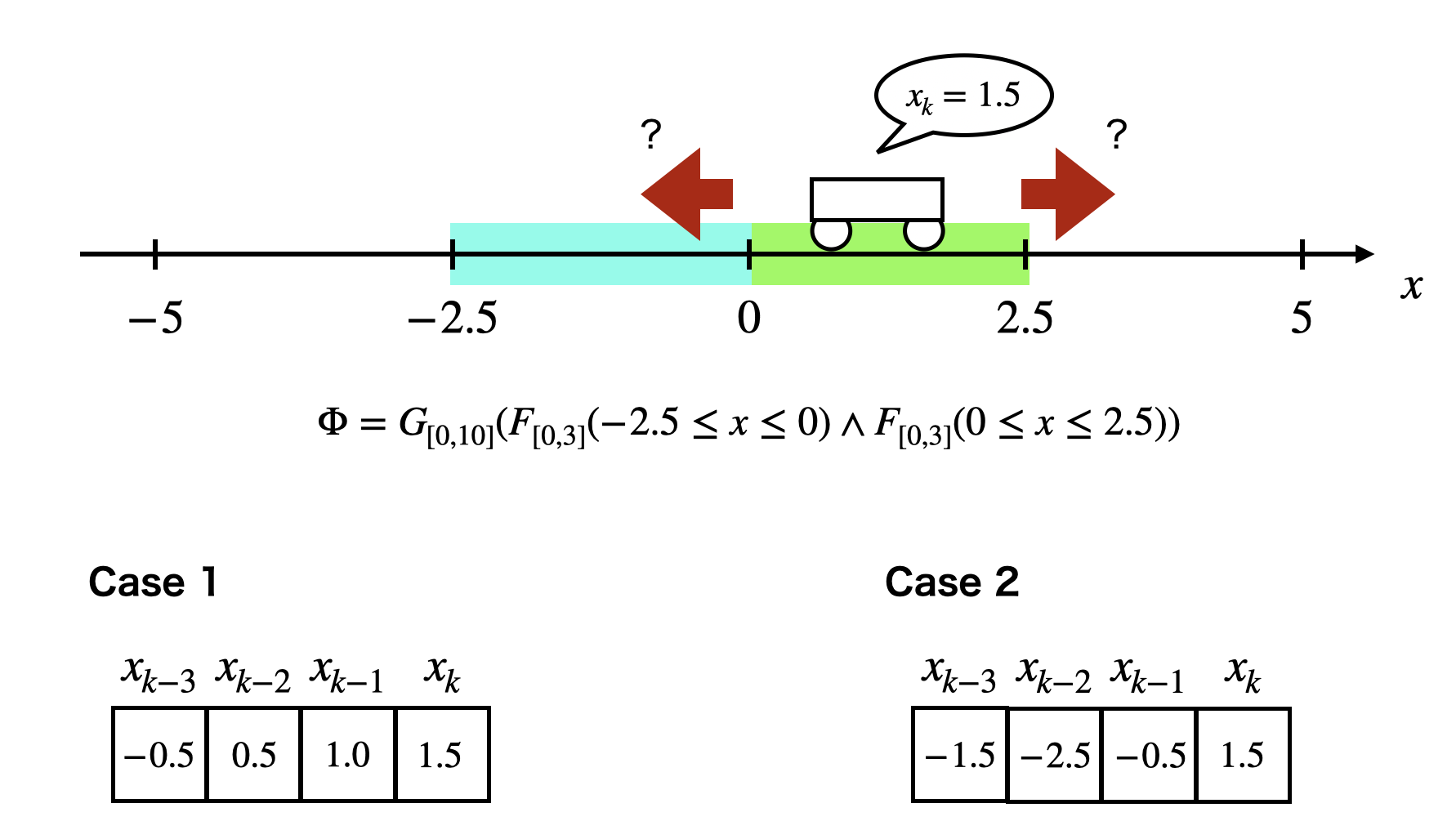

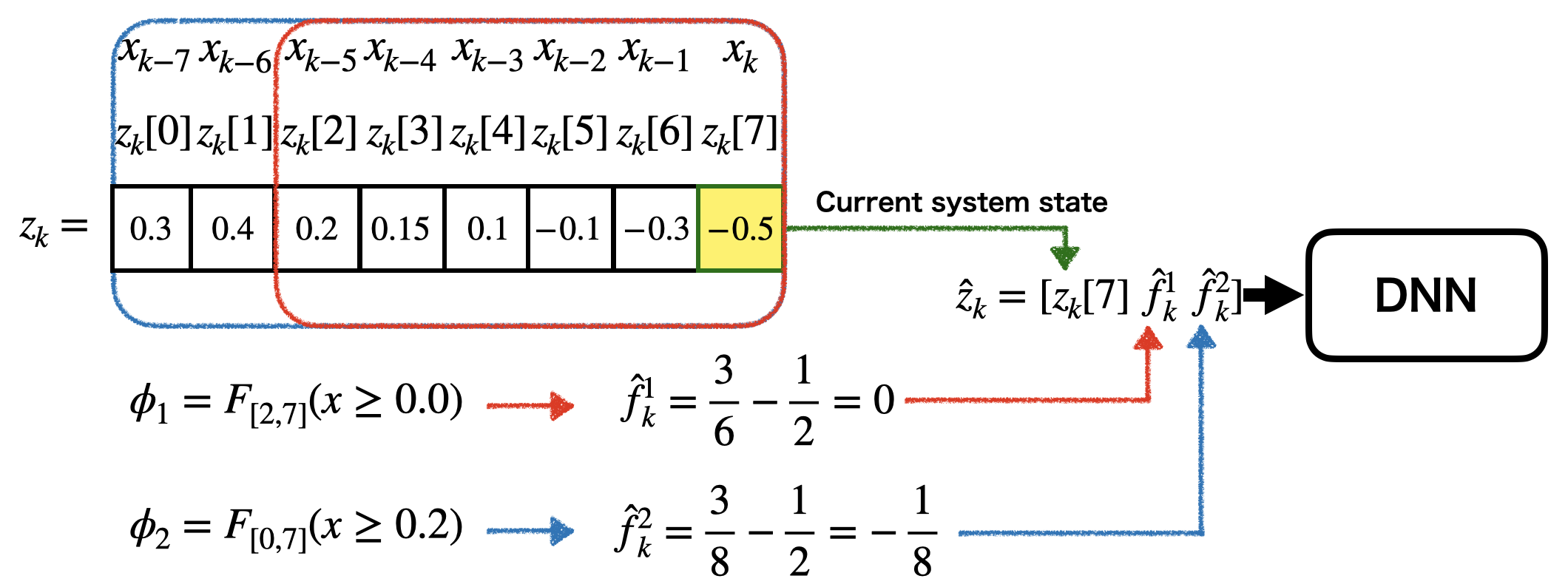

where for the given STL formula (or ) and is an extended state space.We show a simple example in Fig. 1. We operate a one-dimensional dynamical system to satisfy the STL formula

At any time in the time interval , the system should enter both the blue region and the green region before 3 time steps are elapsed, where there is no constraint for the order of the visits. Let the current system’s state be . Note that the desired action for the STL formula is different depending on the past state sequence. For example, in the case where , we should operate the system to the blue region right away. On the other hand, in the case where , we do not need to move it. Thus, we regard not only the current system’s state but also previous system’s states as an environment’s state for RL.

Additionally, Aksaray et al. designed the reward function using robustness and the log-sum-exp approximation. The robustness of a trajectory with respect to the given STL formula is as follows:

| (2) | |||||

We consider the following problem.

| (3) |

where is the system’s trajectory controlled by the policy and the function is an indicator defined by

| (4) |

Since and ,

| (5) | |||||

Then, we use the following log-sum-exp approximation.

| (6) | |||||

| (7) |

where is an approximation parameter. We can approximate or with arbitrary accuracy by selecting a large . Then, (5) can be approximated as follows:

Since the function is a strictly monotonic function and is a constant, we have

Thus, we use the following reward function to satisfy the given STL formula .

To design a controller satisfying an STL formula using the Q-learning algorithm, Aksaray et al. proposed a -MDP as follows:

Definition 1 (-MDP): We consider an STL formula (or ), where and comprises multiple STL sub-formulae . Subsequently, we set , that is, . A -MDP is defined by a tuple , where

-

•

is an extended state space that is an environment’s state space for RL. The extended state is a vector of multiple system’s states .

-

•

is an agent’s control action space.

-

•

is a probability density for the initial extended state with , where is generated from .

-

•

is a transition probability density for the extended state. When the system’s state is updated by , the extended state is updated by as follows:

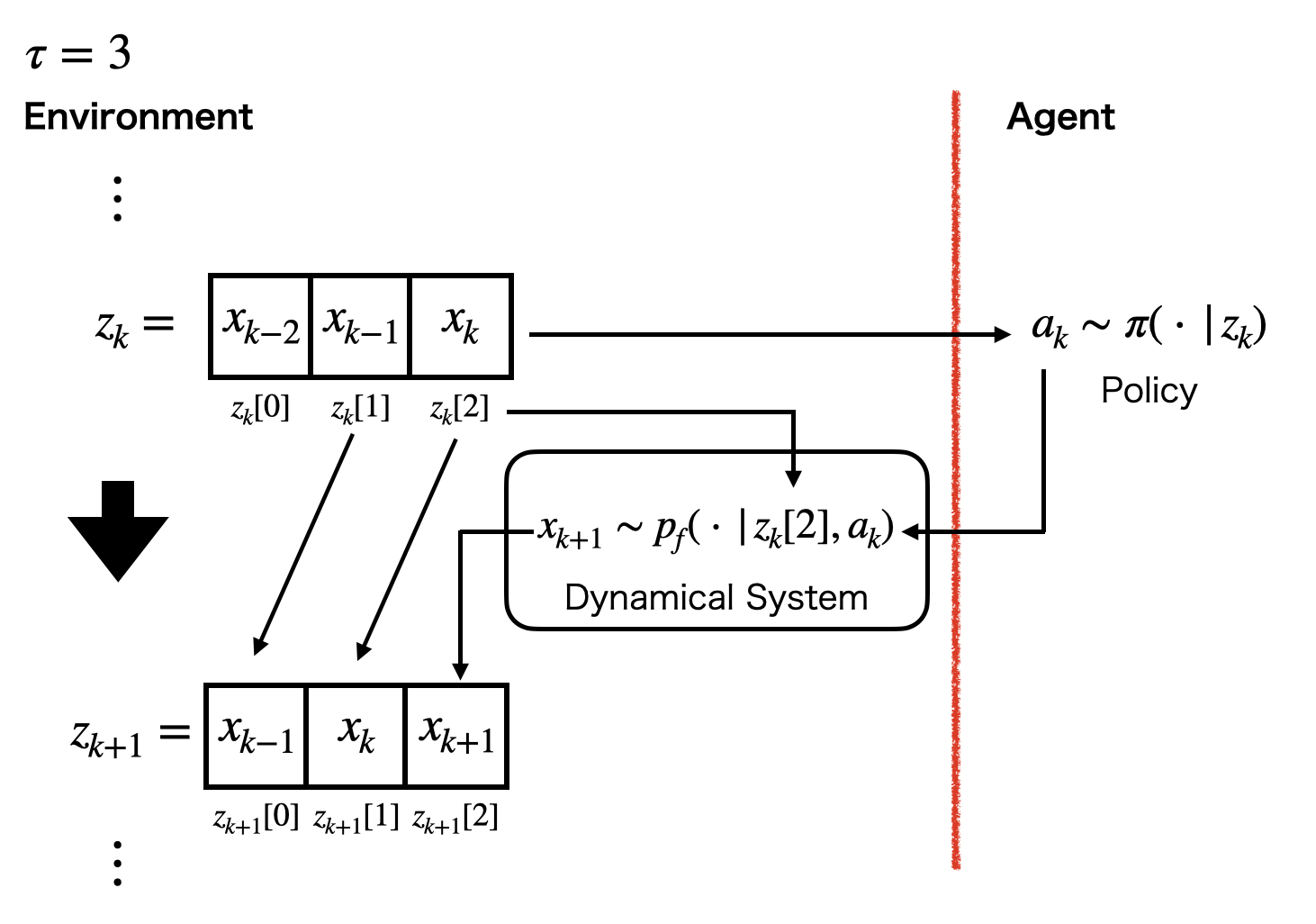

Fig. 2 shows an example of the transition. We consider the sequence that consists of system’s states as the extended state at time . In the transition, the head system’s state is removed from the sequence and other system’s states are shifted to the left. After that, the next system’s state updated by is inputted to the tail of the sequence. The next extended state depends on the current extended state and the agent’s action .

-

•

is the STL-reward function defined by (LABEL:tau_reward).

III Problem formulation

We consider the following optimal policy design problem constrained by a given STL formula , where the system model (1) is unknown.

| (9) |

where is a discount factor and is a reward function for a given control performance index. is the expectation value with respect to the distributions , , and . We introduce the following -CMDP that is an extension of a -MDP [16] to deal with the problem (9).

Definition 2 (-CMDP): We consider an STL formula (or ) as a constraint, where and comprises multiple STL sub-formulae . Subsequently, we set , that is, . A -CMDP is defined by a tuple , where

-

•

is an extended state space that is an environment’s state space for RL. The extended state is a vector of multiple system’s states .

-

•

is an agent’s control action space.

-

•

is a probability density for the initial extended state with , where is generated from .

-

•

is a transition probability density for the extended state. When the system’s state is updated by , the extended state is updated by as follows:

-

•

is the STL-reward function defined by (LABEL:tau_reward) for satisfying the given STL formula .

-

•

is a reward function as follows:

where is a reward function for a given control performance index.

We design an optimal policy with respect to under satisfying the STL formula using a model-free CDRL algorithm [23]. Then, we define the following functions.

where is a discount factor close to . is the expectation value with respect to the distributions , , and . We reformulate the problem (9) as follows:

| (10) | |||

| (11) |

where is a lower threshold. In this study, is a hyper-parameter for adjusting the satisfiability of the given STL formula. The larger is, the more conservatively the agent learns a policy to satisfy the STL formula. We call the constrained problem with (10) and (11) a -CMDP problem. In the next section, we propose a CDRL algorithm with the Lagrangian relaxation to solve the -CMDP problem.

IV Deep reinforcement learning under a signal temporal logic constraint

We propose a CDRL algorithm with the Lagrangian relaxation to obtain an optimal policy for the -CMDP problem. Our proposed algorithm is based on the DDPG algorithm [20] or the SAC algorithm [21], which are DRL algorithms derived from the Q-learning algorithm for problems with continuous state-action spaces. In both algorithms, we parameterize an agent’s policy using a DNN, which is called an actor DNN. The agent updates the parameter vector of the actor DNN based on . However, in this problem, the agent cannot directly use since the mathematical model of the system is unknown. Thus, we approximate using another DNN, which is called a critic DNN. Additionally, we use the following two techniques proposed in [3].

-

•

Experience replay,

-

•

Target network.

In the experience replay, the agent does not update the parameter vectors of DNNs immediately when obtaining an experience. Alternatively, the agent stores the obtained experience to the replay buffer . The agent selects some experiences from the replay buffer randomly and updates the parameter vector of DNNs using the selected experiences. The experience replay can reduce correlation among experience data. In the target network technique, we prepare separate DNNs for the critic DNN and the actor DNN, which are called a target critic DNN and a target actor DNN, respectively, and output target values for updates of the critic DNN. The parameter vectors of the target DNNs are updated by tracking the parameter vectors of the actor DNN and the critic DNN slowly. If we do not use the target network technique for updates of the critic DNN, we need to compute the target value using the current critic DNN, which is called bootstrapping. If we update the critic DNN substantially, the target value computed by the updated critic DNN may change largely, which leads to oscillations of the learning performance. It is known that the target network technique can improve the learning stability.

Remark: The standard DRL algorithm based on Q-learning is the DQN algorithm [3]. However, the DQN algorithm cannot handle continuous action spaces due to its DNN architecture.

On the other hand, we cannot directly apply the DDPG algorithm and the SAC algorithm to the -CMDP problem since these are algorithms for unconstrained problems. Thus, we consider the following Lagrangian relaxation [27].

| (12) |

where is a Lagrangian function given by

| (13) |

and is a Lagrange multiplier. We can relax the constrained problem into the unconstrained problem.

IV-A DDPG-Lagrangian

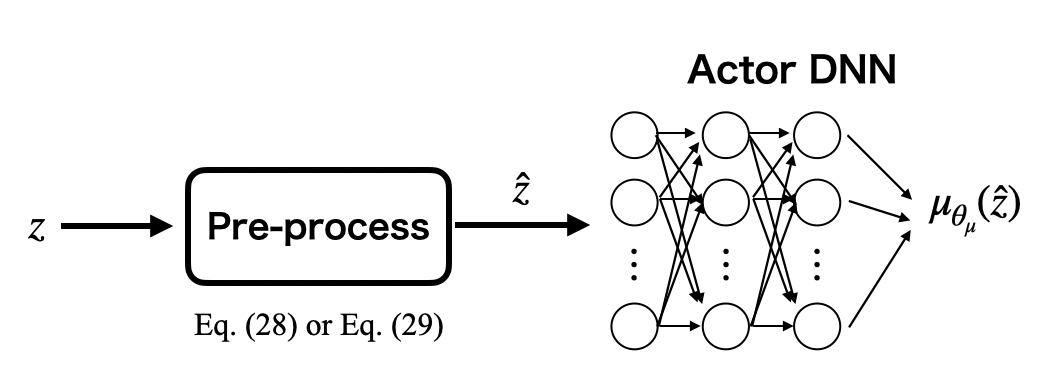

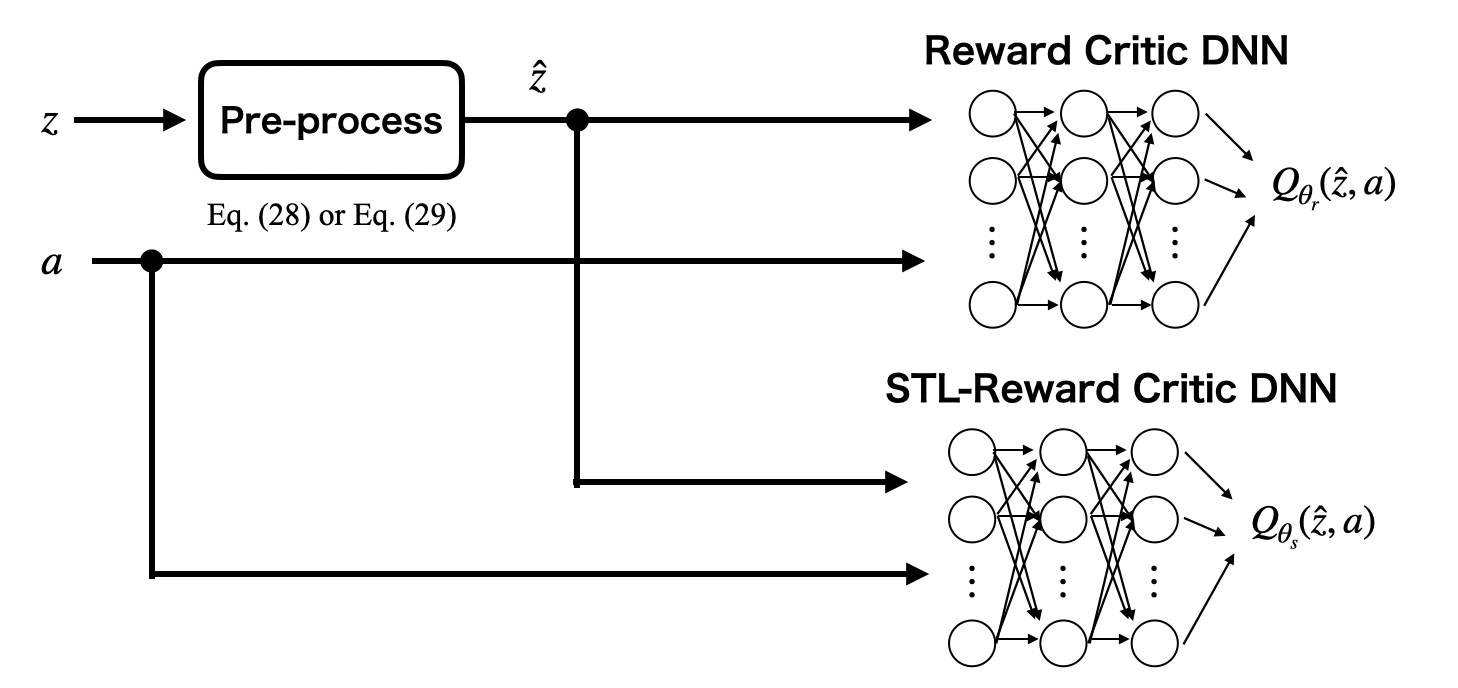

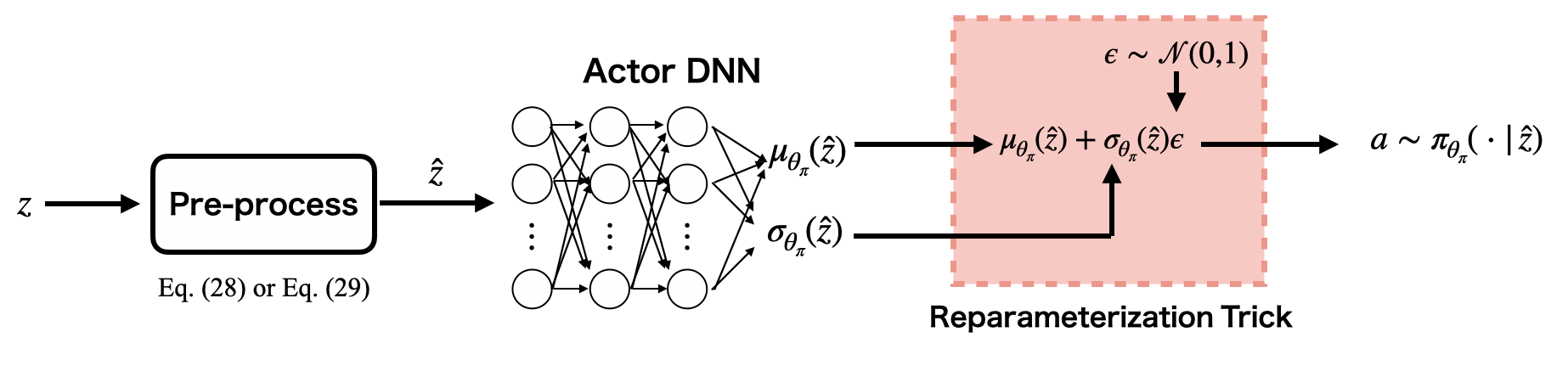

We parameterize a deterministic policy using a DNN as shown in Fig. 3, which is an actor DNN. Its parameter vector is denoted by . In the DDPG-Lagrangian algorithm, the parameter vector is updated by maximizing (13). However, and are unknown. Thus, as shown in Fig. 4, and are approximated by two separate critic DNNs, which are called a reward critic DNN and an STL-reward critic DNN, respectively. The parameter vectors of the reward critic DNN and the STL-reward critic DNN are denoted by and , respectively. and are updated by decreasing the following critic loss functions.

| (14) | |||||

| (15) |

where and are the outputs of the reward critic DNN and the STL-reward critic DNN, respectively. The target values and are given by

and are the outputs of the target reward critic DNN and the target STL-reward critic DNN, respectively, and is the output of target actor DNN. , , and are parameter vectors of the target reward critic DNN, the target STL-reward critic DNN, and the target actor DNN, respectively. Their parameter vectors are slowly updated by the following soft update.

| (16) | |||||

where is a sufficiently small positive constant. The agent stores experiences to the replay buffer and selects some experiences from randomly for updates of and . is the expected value under the random sampling of the experiences from . In the standard DDPG algorithm [20], the parameter vector of the actor DNN is updated by decreasing

where is the expected value with respect to sampled from randomly. However, in the DDPG-Lagrangian algorithm, we consider (13) as an objective instead of . Thus, the parameter vector of the actor DNN is updated by decreasing the following actor loss function.

| (17) |

The Lagrange multiplier is updated by decreasing the following loss function.

| (18) |

where is the expected value with respect to .

Remark: is a nonnegative parameter adjusting the relative importance of the STL-reward critic DNN against the reward critic DNN in updating the actor DNN. Intuitively, if the agent’s policy does not satisfy (11), then we increase the parameter , which increases the relative importance of the STL-critic DNN. On the other hand, if the agent’s policy satisfies (11), then we decrease the parameter , which decreases the relative importance of the STL-critic DNN.

IV-B SAC-Lagrangian

SAC is a maximum entropy DRL algorithm that obtains a policy to maximize both the expected sum of rewards and the expected entropy of the policy. It is known that a maximum entropy algorithm improves explorations by acquiring diverse behaviors and has the robustness for the estimation error [21]. In the SAC algorithm, we design a stochastic policy . We use the following objective with an entropy term instead of .

| (19) | |||||

where is an entropy of the stochastic policy and is an entropy temperature. The entropy temperature determines the relative importance of the entropy term against the sum of rewards.

We use the Lagrangian relaxation for the SAC algorithm such as [28, 29]. Then, a Lagrangian function with the entropy term is given by

| (20) |

We model the stochastic policy using a Gaussian with the mean and the standard deviation outputted by a DNN with a reparameterization trick [30] as shown in Fig. 5, which is an actor DNN. The parameter vector is denoted by . Additionally, we need to estimate and to update the parameter vector like the DDPG-Lagrangian algorithm. Thus, and are also approximated by two separate critic DNNs as shown in Fig. 4. Note that, in the SAC-Lagrangian algorithm, the reward critic DNN estimates not only but also the entropy term. The parameter vectors are also updated using the experience replay and the target network technique. and are updated by decreasing the following critic loss functions.

| (21) | |||

| (22) |

where , , and and are the outputs of the reward critic DNN and the STL-reward critic DNN, respectively. The target values are computed by

where and are outputs of the target reward critic DNN and the target STL-reward critic DNN, respectively, and is the expected value with respect to . Their parameter vectors , are slowly updated like (16). In the standard SAC algorithm, the parameter vector of the actor DNN is updated by decreasing

where is the expected value with respect to the experiences sampled from and the stochastic policy . However, in the SAC-Lagrangian algorithm, we consider (20) as the objective instead of (19). Thus, the parameter vector of the actor DNN is updated by decreasing the following actor loss function.

| (23) | |||||

The Lagrange multiplier is updated by decreasing the following loss function.

| (24) |

where is the expected value with respect to and . The entropy temperature is updated by decreasing the following loss function.

| (25) |

where is a lower bound which is a hyper-parameter. In [21], the parameter is selected based on the dimensionality of the action space. Additionally, in the SAC algorithm, to mitigate the positive bias in updates of , the double Q-learning technique [31] is adopted, where we prepare two critic DNNs and two target critic DNNs. Thus, in the SAC-Lagrangian, we also adopt the technique.

IV-C Pre-training and fine-tuning

In this study, it is important to satisfy the given STL constraint. In order to learn a policy satisfying a given STL formula, the agent needs many experiences satisfying the formula. However, it is difficult to collect the experiences considering both the control performance index and the STL constraint in the early learning stage since the agent may prioritize to optimize its policy with respect to the control performance index. Thus, we propose a two-phase learning algorithm. In the first phase, which is called pre-train, the agent focuses on learning a policy satisfying a given STL formula to store experiences receiving high STL rewards to a replay buffer , that is, the agent learns its policy considering only STL-rewards.

Pre-training for DDPG-Lagrangian

The parameter vector of the actor DNN is updated by decreasing

| (26) |

Pre-training for SAC-Lagrangian

The parameter vector of the actor DNN is updated by decreasing

| (27) |

instead of (23). On the other hand, is updated by (22), where is computed by

In the second phase, which is called fine-tune, the agent learns the optimal policy constrained by the given STL formula. In the DDPG-Lagrangian algorithm, the actor DNN is updated by (17). In the SAC-Lagrangian algorithm, the actor DNN is updated by (23).

Remark: The two-phase learning may become unstable temporally because it discontinuously changes the objective functions. In such a case, we may start the second phase with changing the objective functions from those used in the first phase smoothly and slowly.

IV-D Pre-process

If is a large value, it is difficult for the agent to learn its policy due to the large dimensionality of the extended state space. Then, pre-process is useful in order to reduce the dimensionality, which is related to [17]. In the previous study, a flag state for each sub-formula is defined as a discrete state. The flag discrete state space is combined with the system’s discrete state space. On the other hand, in this study, it is assumed that the system state space is continuous. If we use the discrete flag states, the pre-processed state space is a hybrid state space that has discrete values and continuous values. Thus, we consider the flag state as a continuous value and input it to DNNs as shown in Fig. 6.

We introduce a flag value for each STL sub-formula , where it is assumed that .

Definition 3 (Pre-process): For an extended state , a flag value of an STL sub-formula is defined as follows:

(i) For ,

| (28) |

(ii) For ,

| (29) |

Note that and the flag value represents the normalized time lying in . Intuitively, for , the flag value indicates the time duration in which is always satisfied, whereas, for , the flag value indicates the instant when is satisfied. The flag values calculated by (28) or (29) are transformed into as follows:

| (30) |

The transformed flag values are used as inputs to DNNs to prevent positive biases of the flag values and inputting to DNNs. We compute the flag value for each STL sub-formula and construct a flag state , which is called pre-processing. We use the pre-processed state as an input to DNNs instead of the extended state .

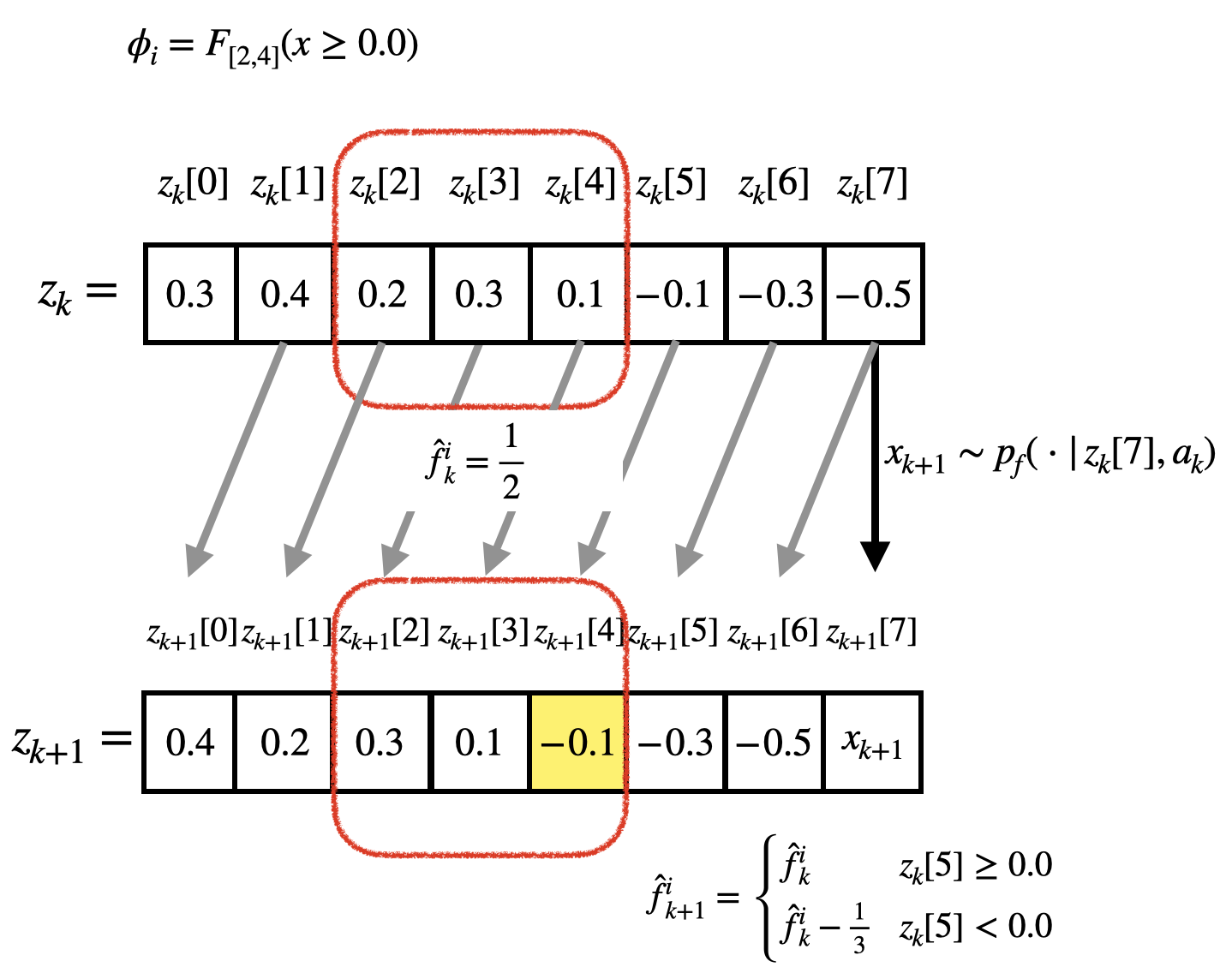

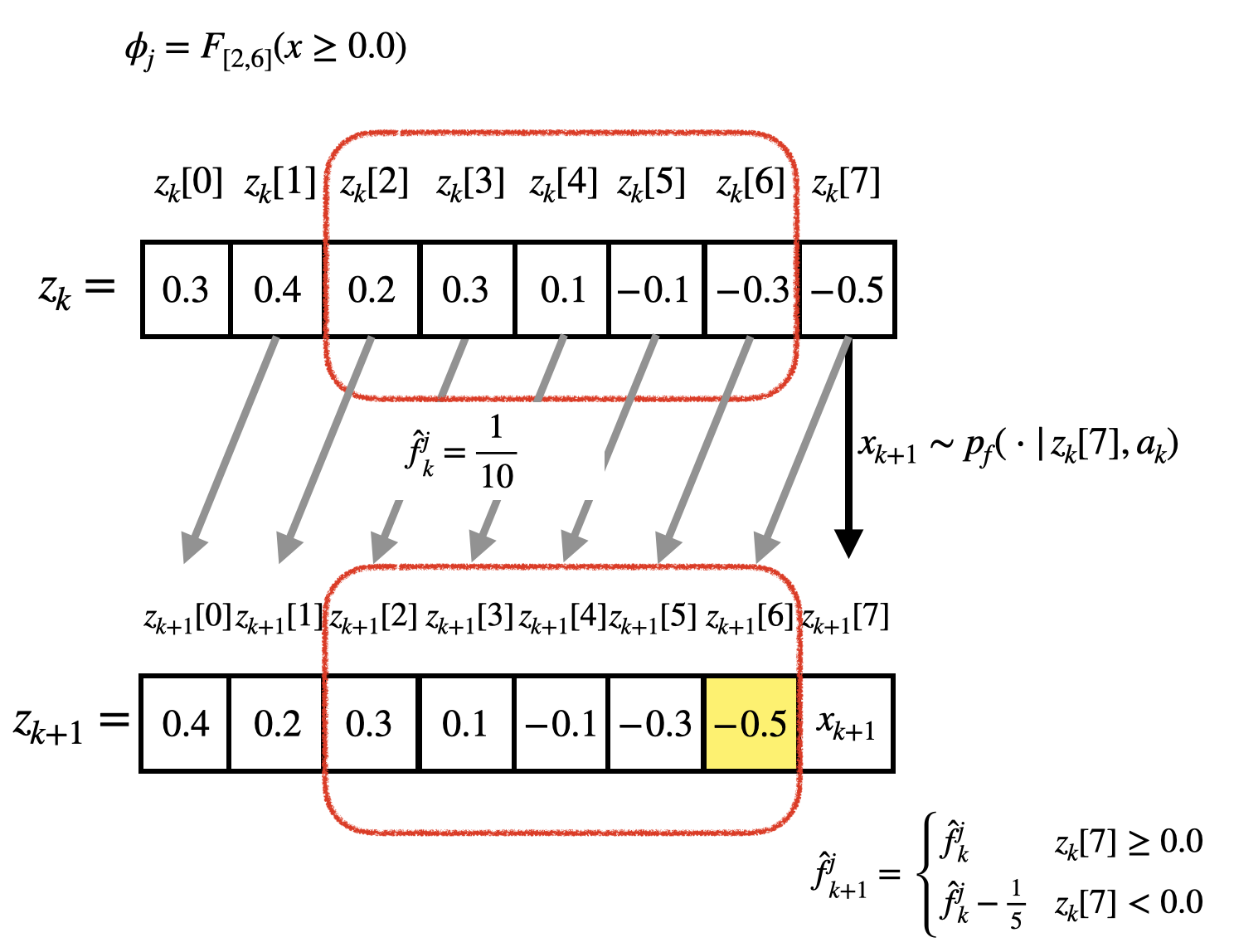

Remark: It is important to ensure the Markov property of the pre-processed state for the agent to learn its policy. If , then the pre-processed state satisfies the Markov property. We consider the current pre-processed state and the next pre-processed state . is generated by , where is the current action. Therefore, depends on and the current action . For each transformed flag value , it is updated by

-

1.

(31) -

2.

(32)

where . The transformed flag values are updated by the next system’s state. Therefore, the next transformed flag values depends on , , and the current action . Thus, the Markov property of the pre-processed state holds.

On the other hand, in the case where , we must include to the pre-processed state in order to ensure the Markov property, where and . For example, as shown in Fig. 7, there may be some transformed flag values that are updated with information other than and the current action. Note that, in the case as shown in Fig. 8, the transformed flag value is updated by , that is, the agent with DNNs can learn its policy using when . As the difference increases, we need to include more past system states in the pre-processed state.

For simplicity, in this study, we focus on the case where . Then, the pre-processing is most effective in terms of reducing the dimensionality of the extended state space.

IV-E Algorithm

Our proposed algorithm to design an optimal policy under the given STL constraint is presented in Algorithm 1. In line 1, we select a DRL algorithm such as the DDPG algorithm and the SAC algorithm. From line 2 to 4, we initialize the parameter vectors of the DNNs, the entropy temperature (if the algorithm is the SAC-Lagrangian algorithm), and the Lagrange multiplier. In line 5, we initialize a replay buffer . In line 6, we set the number of the repetition of pre-training . In line 7, we initialize a counter for updates. In line 9, the agent receives an initial state . From line 10 to 11, the agent sets the initial extended state and computes the pre-processed state . One learning step is done between line 13 and 25. In line 13, the agent determines an action based on the pre-processed state for an exploration. In line 14, the state of the system changes by the determined action and the agent receives the next state , the reward , and the STL-reward . From line 15 to 16, the agent sets the next extended state using and and computes the next pre-processed state . In line 17, the agent stores the experience in the replay buffer . In line 18, the agent samples experiences from the replay buffer randomly. If the learning counter is , the agent pre-trains the parameter vectors in Algorithm 3. Then, the parameter vectors of the reward critic DNN and the STL-reward critic DNN are updated by (14) and (15) (or (21) and (22)), respectively. The parameter vector of the actor DNN (or ) is updated by (26) (or (27)). In the SAC-based algorithm, the entropy temperature is updated by (25). On the other hand, if the learning counter is , the agent fine-tunes the parameter vectors in Algorithm 4. Then, the parameter vector of the actor DNN (or ) is updated by (17) (or (23)) and the other parameter vectors are updated same as the case . The Lagrange multiplier is updated by (18) (or (24)). In line 24, the agent updates the parameter vectors of the target DNNs by (16). In line 25, the learning counter is updated. The agent repeats the process between lines 13 and 25 in a learning episode.

V Example

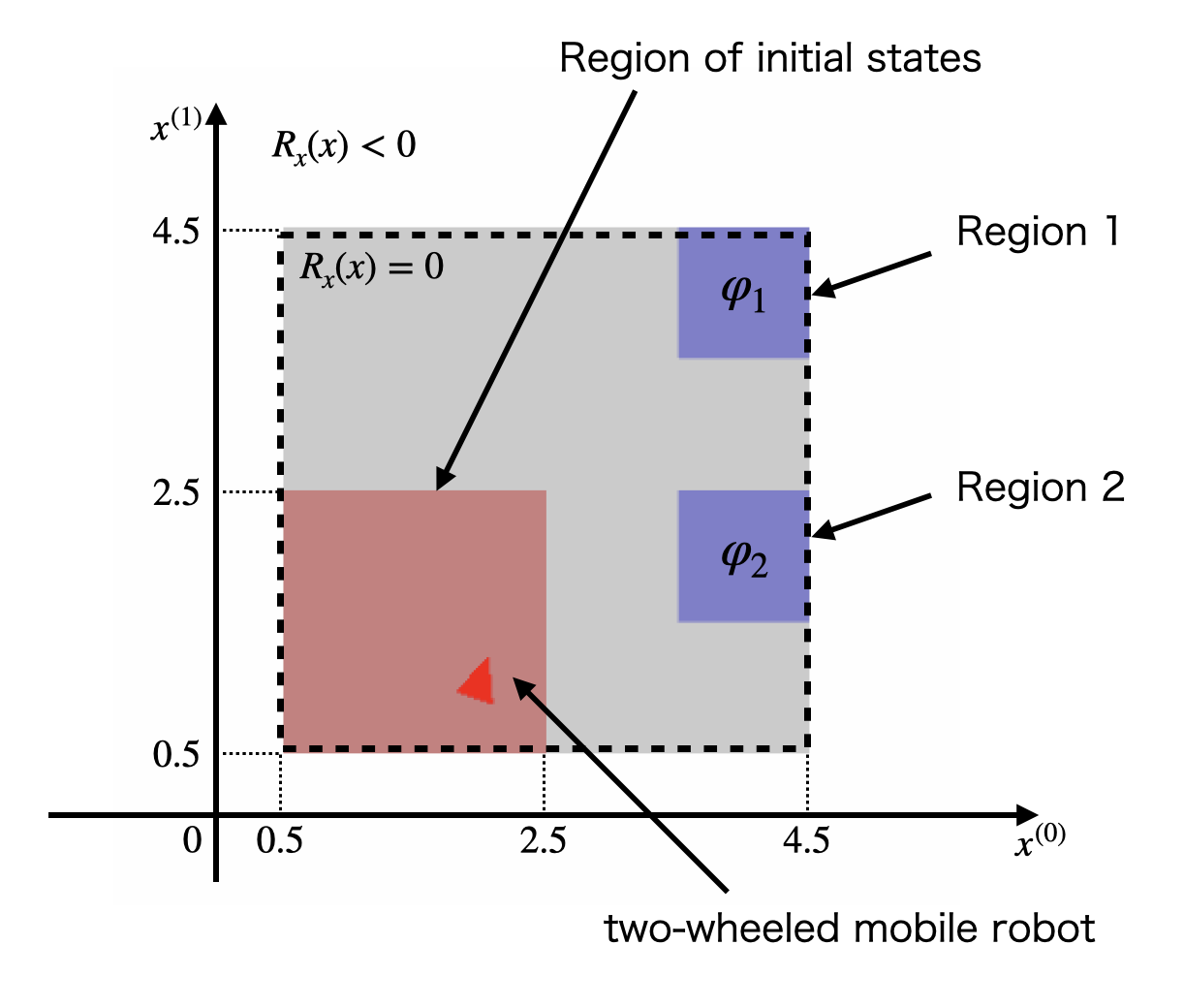

We consider STL-constrained optimal control problems for a two-wheeled mobile robot shown in Fig. 9, where its working area is . Let be the steering angle with . A discrete-time model of the robot is described by

| (33) |

where , , and . is sampled from a standard normal distribution . We assume that and , where is the unit matrix. The initial state of the system is sampled randomly in . The region 1 is and the region 2 is . We consider the following two constraints.

Constraint 1 (Recurrence): At any time in the time interval , the robot visits both the regions 1 and 2 before time steps are elapsed, where there is no constraint for the order of the visits.

Constraint 2 (Stabilization): The robot visits the region 1 or 2 in the time interval and stays there for time steps.

These constraints are described by the following STL formulae.

Formula 1:

| (34) |

Formula 2:

| (35) |

where

We consider the following reward function

| (36) |

where

| (37) | |||||

| (38) |

(37) is the term for keeping the working area. As the agent moves away from the working area, the agent receives a larger negative reward. (38) is the term for fuel costs.

V-A Evaluation

We apply the SAC-Lagrangian algorithm to design a policy constrained by an STL formula. In all simulations, the DNNs had two hidden layers, all of which have 256 units, and all layers are fully connected. The activation functions for the hidden layers and the outputs of the actor DNN are the rectified linear unit functions and hyperbolic tangent functions, respectively. We normalize and as and , respectively. The size of the replay buffer is , and the size of the mini-batch is . We use Adam [32] as the optimizers for all main DNNs, the entropy temperature, and the Lagrange multiplier. The learning rate of the optimizer for the Lagrange multiplier is and the learning rates of the other optimizers are . The soft update rate of the target network is . The discount factor is . The target for updating the entropy temperature is . The STL-reward parameter is . The agent learns its control policy for steps. The initial parameters of both the entropy temperature and the Lagrange multiplier are . For performance evaluation, we introduce the following three indices:

-

•

a reward learning curve shows the mean of the sum of rewards for 100 trajectories,

-

•

an STL-reward learning curve shows the mean of the sum of STL-rewards for 100 trajectories, and

-

•

a success rate shows the number of trajectories satisfying the given STL constraint for 100 trajectories.

We prepare initial states sampled from and generate trajectories using the learned policy for each evaluation. We show the results for (Case 1) and (Case 2). We do not use pre-training in Case 1. All simulations were done on a computer with AMD Ryzen 9 3950X 16-core processor, NVIDIA (R) GeForce RTX 2070 super, and 32GB of memory and were conducted using the Python software.

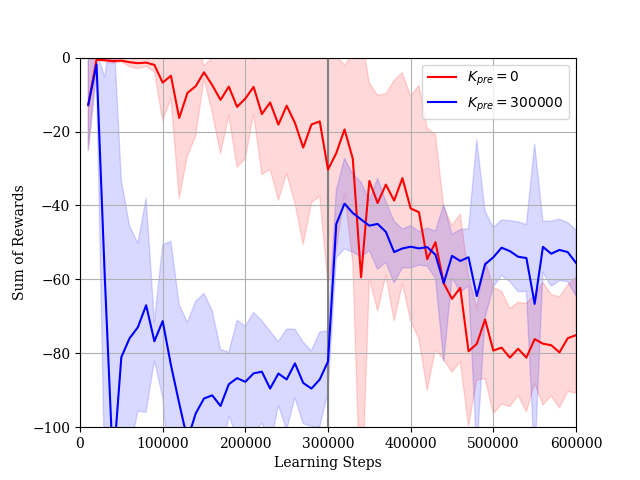

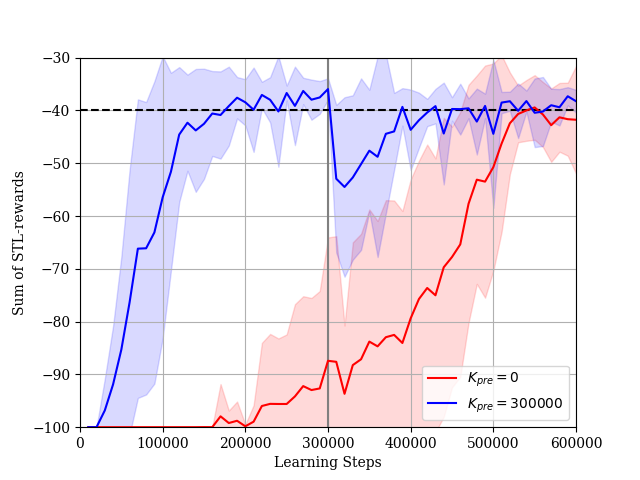

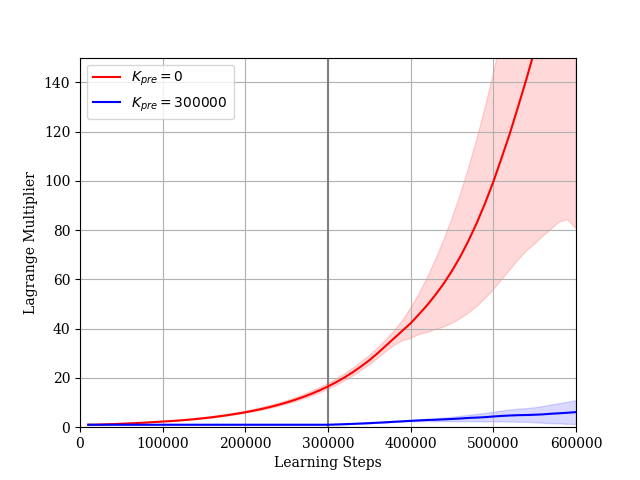

V-A1 Formula 1

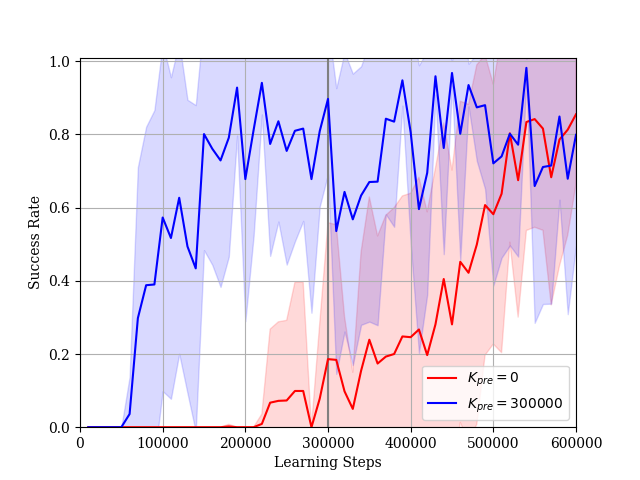

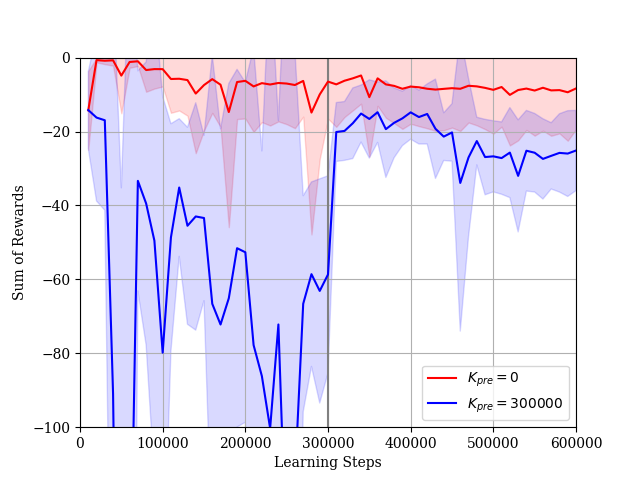

We consider the case where the constraint is given by (34). In this simulation, we set and . The dimension of the extended state is . The reward learning curves and the STL-rewards learning curves are shown in Figs. 10 and 11, respectively. In Case 1, it takes a lot of steps to learn a policy such that the sum of STL-rewards is near the threshold . The reward learning curve decreases gradually while the STL-reward curve increases. This is an effect of lacking in experience satisfying the STL formula . If the agent cannot satisfy the STL constraint during its explorations, the Lagrange multiplier becomes large as shown in Fig. 12. Then, the STL term of the actor loss becomes larger than the other terms. As a result, the agent updates the parameter vector considering only the STL rewards. On the other hand, in Case 2, the agent can obtain enough experiences satisfying the STL formula in pre-training steps. The agent learns the policy such that the sum of the STL-rewards is near the threshold relatively quickly and fine-tunes the policy under the STL constraint after pre-training. According to the results in the both cases, our proposed method is useful to learn the optimal policy under the STL constraint. Additionally, as the sum of STL-rewards obtained by the learned policy is increasing, the success rate for the given STL formula is also increasing as shown in Fig. 13.

V-A2 Formula 2

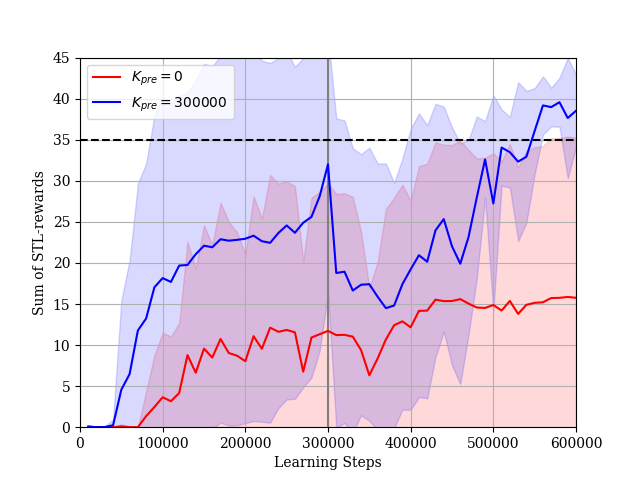

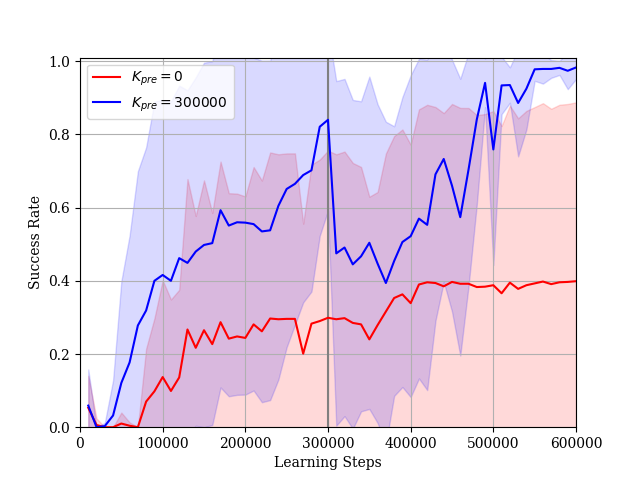

We consider the case where the constraint is given by (35). In this simulation, we set and . The dimension of the extended state is . We use the reward function in stead of (LABEL:tau_reward) to prevent the sum of STL-rewards diverging to infinity. The reward learning curves and the STL-rewards learning curves are shown in Figs. 14 and 15, respectively. In Case 1, although the reward learning curve maintains more than , the STL-reward learning curve maintains much less than the threshold . On the other hand, in Case 2, the agent learns a policy such that the sum of STL-rewards is near the threshold and fine-tunes the policy under the STL constraint after pre-training. Our proposed method is useful for not only the formula but also the formula . Additionally, as the sum of STL-rewards obtained by the learned policy is increasing, the success rate for the given STL formula is also increasing as shown in Fig. 16.

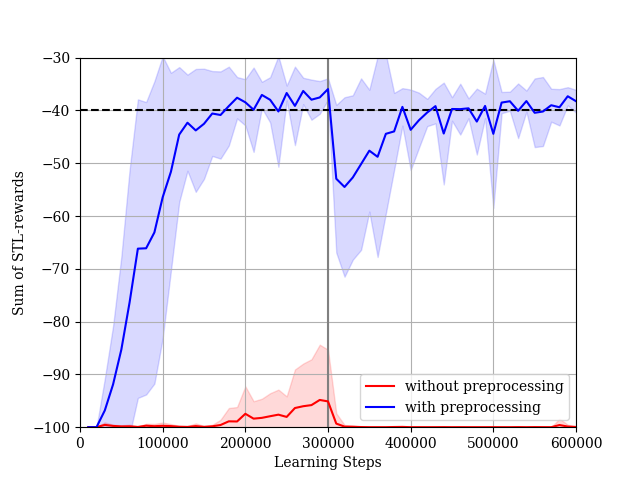

V-B Ablation studies for pre-processing

In this section, we show the ablation studies for pre-processing introduced in Section IV.D. We conduct the experiment for using the SAC-Lagrangian algorithm. In the case without pre-processing, the dimensionality of the input to DNNs is and, in the case with pre-processing, the dimensionality of the input to DNNs is . The STL-reward learning curves for each case are shown in Fig. 17. The agent without pre-processing cannot improve the performance of its policy for STL-rewards. The result concludes that pre-processing is useful for a problem constrained by an STL formula with a large .

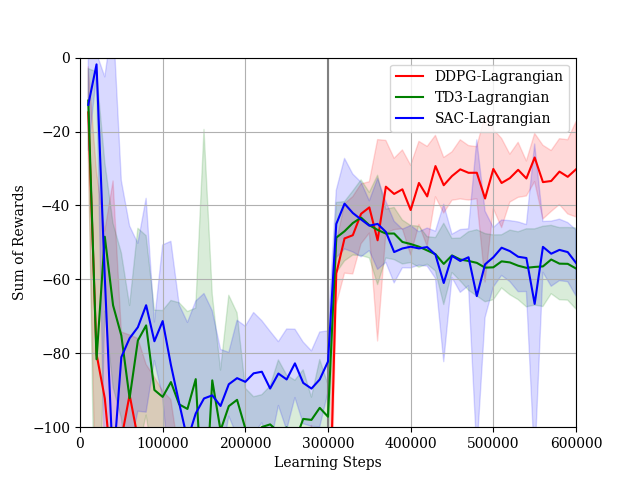

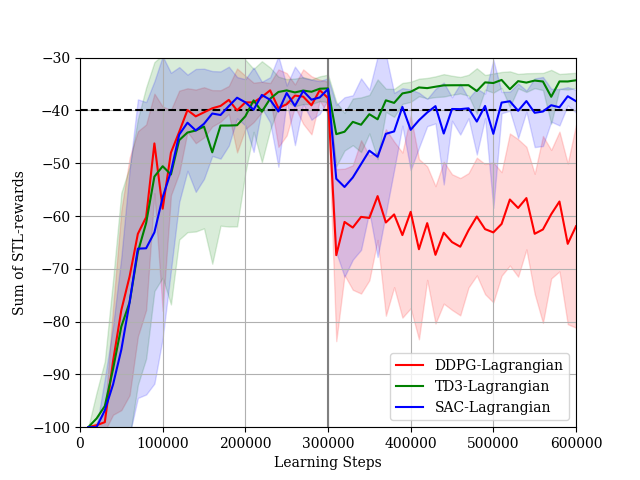

V-C Comparison with another DRL algorithm

In this section, we compare the SAC based algorithm with other algorithms: DDPG [20] and TD3 [31]. TD3 is an extended DDPG algorithm with the clipped double Q-learning technique to mitigate the positive bias for the critic estimation. For the DDPG-Lagrangian algorithm and the TD3-Lagrangian algorithm, we need to set a stochastic process generating exploration noises. We use the following Ornstein-Uhlenbeck process.

where is a noise generated by a standard normal distribution . We set the parameters . For the TD3-Lagrangian algorithm, the target policy smoothing and the delayed policy updates are same as the original paper [31]. The target policy smoothing is implemented by adding noises sampled from the normal distribution to the actions chosen by the target actor DNN, clipped to , the agent updates the actor DNN and the target DNNs every learning steps. Other experimental settings such as hyper parameters, optimizers, and DNN architectures, are same as the SAC-Lagrangian algorithm.

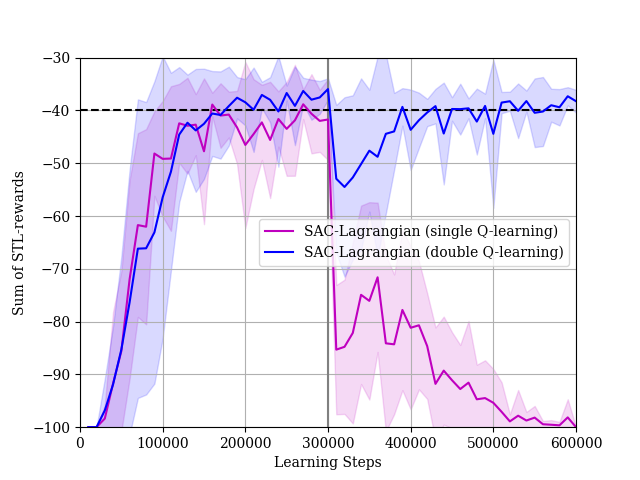

We conduct experiments for . We show the reward learning curves and the STL-reward learning curves in Figs. 18 and 19, respectively. Although all algorithms can improve the policy with respect to rewards after fine-tuning, the DDPG algorithm cannot improve the policy with respect to the STL-rewards. The STL-reward curve of the DDPG-Lagrangian algorithm is much less than the threshold. On the other hand, the TD3-Lagrangian algorithm and the SAC-Lagrangian algorithm can learn the policy such that the STL-rewards are more than threshold. These results show the importance of the double Q-learning technique to mitigate positive biases for critic estimations in the fine-tuning phase. Actually, the technique is used in both the TD3-Lagrangian algorithm and the SAC-Lagrangian algorithm. Then, we show the result in the case where we do not use the double Q-learning technique in the SAC-Lagrangian in Fig. 20. Although the agent can learn a policy such that the STL-rewards are near the threshold in the pre-train phase, the performance of the agent’s policy with respect to the STL-rewards is degraded in the fine-tune phase.

VI Conclusion

We considered a model-free optimal control problem constrained by a given STL formula. We modeled the problem as a -CMDP that is an extension of a -MDP. To solve the -CMDP problem with continuous state-action spaces, we proposed a CDRL algorithm with the Lagrangian relaxation. In the algorithm, we relaxed the constrained problem into an unconstrained problem to utilize a standard DRL algorithm for unconstrained problems. Additionally, we proposed a practical two-phase learning algorithm to make it easy to obtain experiences satisfying the given STL formula. Through numerical simulations, we demonstrated the performance of the proposed algorithm. First, we showed that the agent with our proposed two-phase algorithm can learn its policy for the -CMDP problem. Next, we conducted ablation studies for pre-processing to reduce the dimensionality of the extended state and showed the usefulness. Finally, we compared three CDRL algorithms and showed the usefulness of the double Q-learning technique in the fine-tune phase.

On the other hand, the syntax in this study is restrictive compared with the general STL syntax. Relaxing the syntax restriction is a future work. Furthermore, we may not directly apply our proposed methods to high dimensional decision making problems because it is difficult to obtain experiences satisfying a given STL formula for the problems. Solving the issue is also an interesting direction for a future work.

References

- [1] R. S. Sutton and A. G. Barto, Reinforcement Learning: An Introduction, 2nd ed. Cambridge, MA, USA: MIT Press, 2018.

- [2] H. Dong, Z. Ding, and S. Zhang Eds., Deep Reinforcement Learning Fundamentals, Research and Applications, Singapore: Springer, 2020.

- [3] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, S. Petersen, C. Beattie, A. Sadik, I. Antonoglou, H. King, D. Kumaran, D. Wierstra, S. Legg, and D. Hassabis, “Human-level control through deep reinforcement learning,” Nature, vol. 518, pp. 529–533, Feb. 2015.

- [4] S. Gu, E. Holly, T. Lillicrap, and S. Levine, “Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates,” in Proc. 2017 IEEE Int. Conf. on Robotics and Automation (ICRA), May 2017, pp. 3389–3396.

- [5] N. C. Luong, D. T. Hoang, S. Gong, D. Niyato, P. Wang, Y.-C. Liang, and D. I. Kim, “Applications of deep reinforcement learning in communications and networking: A survey,” IEEE Communications Surveys & Tutorials, vol. 21, no. 4, pp. 3133–3174, May 2019.

- [6] T. T. Nguyen, N. D. Nguyen, and S. Nahavandi, “Deep reinforcement learning for multiagent systems: A review of challenges, solutions, and applications,” IEEE Transactions on Cybernetics, vol. 50, no. 9, pp. 3826–3839, Sept. 2020.

- [7] C. Belta, B. Yordanov, and E. A. Gol, Formal Methods for DiscreteTime Dynamical Systems, Cham, Switzerland: Springer, 2017.

- [8] C. Baier and J.-P. Katoen, Principles of Model Checking, Cambridge, MA, USA: MIT Press, 2008.

- [9] M. Hasanbeig, A. Abate, and D. Kroening, “Logically-Constrained Reinforcement Learning,” 2018, arXiv:1801.08099.

- [10] L. Z. Yuan, M. Hasanbeig, A. Abate, and D. Kroening, “Modular deep reinforcement learning with temporal logic specifications,” 2019, arXiv:1909.11591.

- [11] M. Cai, M. Hasanbeig, S. Xiao, A. Abate, and Z. Kan, “Modular deep reinforcement learning for continuous motion planning with temporal logic,” IEEE Robotics and Automation Letters, vol. 6, no. 4, pp. 7973-7980, Aug. 2021.

- [12] O. Maler and D. Nickovic, “Monitoring temporal properties of continuous signals,” Formal Techniques, Modeling and Analysis of Timed and Fault-Tolerant Systems, pp. 152–166, Jan. 2004.

- [13] G. E. Fainekos and G. J. Pappas, “Robustness of temporal logic specifications for continuous-time signals,” Theoretical Computer Science, vol. 410, no. 42, pp. 4262–4291, Sept. 2009.

- [14] V. Raman, A. Donzé, M. Maasoumy, R. M. Murray, A. Sangiovanni-Vincentelli, and S. A. Seshia, “Model predictive control with signal temporal logic specifications,” in Proc. IEEE 53rd Conf. on Decision and Control (CDC), Dec. 2014, pp. 81–87.

- [15] L. Lindemann and D. V. Dimarogonas, “Control barrier functions for signal temporal logic tasks,” IEEE Control Systems Letters, vol. 3, no. 1, pp. 96–101, Jan. 2019.

- [16] D. Aksaray, A. Jones, Z. Kong, M. Schwager, and C. Belta, “Q-learning for robust satisfaction of signal temporal logic specifications,” in Proc. IEEE 55th Conf. on Decision and Control (CDC), Dec. 2016, pp. 6565–6570.

- [17] H. Venkataraman, D. Aksaray, and P. Seiler, “Tractable reinforcement learning of signal temporal logic objectives,” 2020, arXiv:2001.09467.

- [18] A. Balakrishnan and J. V. Deshmukh, “Structured reward shaping using signal temporal logic specifications,” in Proc. 2019 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), Nov. 2019, pp. 3481–3486.

- [19] J. Ikemoto and T. Ushio, “Deep reinforcement learning based networked control with network delays for signal temporal logic specifications,” in Proc. IEEE 27th Int. Conf. on Emerging Technologies and Factory Automation (ETFA), Sept. 2021, doi: 10.1109/ETFA52439.2022.9921505.

- [20] T. P. Lillicrap, J. J. Hunt, A. Pritzel, N. Heess, T. Erez, Y. Tassa, D. Silver, and D. Wierstra, “Continuous control with deep reinforcement learning,” 2015, arXiv:1509.02971.

- [21] T. Haarnoja, A. Zhou, K. Hartikainen, G. Tucker, S. Ha, J. Tan, V. Kumar, H. Zhu, A. Gupta, P. Abbeel, and S. Levine, “Soft actor-critic algorithms and applications,” 2018, arXiv:1812.05905.

- [22] E. Altman, Constrained Markov Decision Processes, New York, USA: Routledge, 1999.

- [23] Y. Liu, A. Halev, and X. Liu, “Policy learning with constraints in model-free reinforcement learning: A survey,” in Proc. Int. Joint Conf. on Artificial Intelligence Organization (IJCAI), Aug. 2021, pp. 4508–4515.

- [24] K. C. Kalagarla, R. Jain, and P. Nuzzo, “Model-free reinforcement learning for optimal control of Markov decision processes under signal temporal logic specifications,” in Proc. IEEE 60th Conf. on Decision and Control (CDC), Dec. 2021, pp. 2252–2257.

- [25] A.G. Puranic, J.V. Deshmukh, and S. Nikolaidis, “Learning from demonstrations using signal temporal logic,” 2021, arXiv:2102.07730.

- [26] A.G. Puranic, J.V. Deshmukh, and S. Nikolaidis, “Learning from demonstrations using signal temporal logic in stochastic and continuous domains,” IEEE Robotics and Automation Letters, vol. 6, no. 4, pp. 6250-6257, Oct. 2021.

- [27] D. P. Bertsekas, Constrained Optimization and Lagrange Multiplier Methods, New York, NY, USA: Academic Press, 2014.

- [28] S. Ha, P. Xu, Z. Tan, S. Levine, and J. Tan, “Learning to walk in the real world with minimal human effort,” 2020, arXiv:2002.08550.

- [29] W. Wang, N. Yu, Y. Gao, and J. Shi, “Safe off-policy deep reinforcement learning algorithm for Volt-VAR control in power distribution systems,” IEEE Transactions on Smart Grid, vol. 11, no. 4, pp. 3008–3018, Jul. 2020.

- [30] D. P. Kingma and M. Welling, “Auto-encoding variational Bayes,” 2013, arXiv:1312.6114.

- [31] S. Fujimoto, H. van Hoof, and D. Meger, “Addressing function approximation error in actor-critic methods,” in Proc. Int. Conf. on machine learning (ICML), Jul. 2018, pp. 1587–1596.

- [32] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” 2014, arXiv:1412.6980.