Deflated Dynamics Value Iteration

Abstract

The Value Iteration (VI) algorithm is an iterative procedure to compute the value function of a Markov decision process, and is the basis of many reinforcement learning (RL) algorithms as well. As the error convergence rate of VI as a function of iteration $k$ is $O(\gamma^k)$, it is slow when the discount factor $\gamma$ is close to $1$. To accelerate the computation of the value function, we propose Deflated Dynamics Value Iteration (DDVI). DDVI uses matrix splitting and matrix deflation techniques to effectively remove (deflate) the top $s$ dominant eigen-structure of the transition matrix $P^\pi$. We prove that this leads to a $\tilde{O}(\gamma^k |\lambda_{s+1}|^k)$ convergence rate, where $\lambda_{s+1}$ is the $(s+1)$-th largest eigenvalue of the dynamics matrix. We then extend DDVI to the RL setting and present the Deflated Dynamics Temporal Difference (DDTD) algorithm. We empirically show the effectiveness of the proposed algorithms.

Jongmin Lee1, Amin Rakhsha2, Ernest K. Ryu3, Amir-massoud Farahmand2

1 Seoul National University, South Korea

2 University of Toronto & Vector Institute, Canada

3 University of California, Los Angeles, USA

1. Introduction

Computing the value function for a policy or the optimal value function is an integral step of many planning and reinforcement learning (RL) algorithms. Value Iteration (VI) is a fundamental dynamic programming algorithm for computing the value functions, and its approximate and sample-based variants, such as Temporal Difference Learning [Sutton, 1988], Fitted Value Iteration [Ernst et al., 2005, Munos and Szepesvári, 2008], and Deep Q-Network [Mnih et al., 2015], are the workhorses of modern RL algorithms [Bertsekas and Tsitsiklis, 1996, Sutton and Barto, 2018, Szepesvári, 2010, Meyn, 2022].

The VI algorithm, however, can be slow for problems with a long effective planning horizon, when the agent has to look far into the future in order to make good decisions. Within the discounted Markov Decision Processes formalism, the discount factor $\gamma$ determines the effective planning horizon, with $\gamma$ closer to $1$ corresponding to a longer planning horizon. The error convergence rate of the conventional VI as a function of the iteration $k$ is $O(\gamma^k)$, which is slow when $\gamma$ is close to $1$.

Recently, there has been a growing body of research that explores the application of acceleration techniques from other areas of applied math to planning and RL: Geist and Scherrer [2018], Sun et al. [2021], Ermis and Yang [2020], Park et al. [2022], Ermis et al. [2021], and Shi et al. [2019] apply Anderson acceleration of fixed-point iterations; Lee and Ryu [2023] apply Anchor acceleration of minimax optimization; Vieillard et al. [2020], Goyal and Grand-Clément [2022], Grand-Clément [2021], Bowen et al. [2021], and Akian et al. [2022] apply Nesterov acceleration of convex optimization; and Farahmand and Ghavamzadeh [2021] apply ideas inspired by PID controllers in control theory.

More detail is provided in Appendix 7.1.

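We introduce a novel approach to accelerate VI based on modification of the eigenvalues of the transition dynamics, which are closely related to the convergence of VI. To see this connection, consider the policy evaluation problem where the goal is to find the value function $V^\pi$ for a given policy $\pi$. VI starts from an arbitrary $V^0$ and iteratively sets $V^{k+1} \leftarrow r^\pi + \gamma P^\pi V^k$, where $r^\pi$ and $P^\pi$ are the reward vector and the transition matrix of policy $\pi$, respectively. If $V^0 = 0$, the error vector at iteration $k$ can be shown to be $V^\pi - V^k = \sum_{i=k}^{\infty} (\gamma P^\pi)^i r^\pi$. Let us take a closer look at this difference.

For simplicity, assume that $P^\pi$ is a diagonalizable matrix, so we can write $P^\pi = U D U^{-1}$ with $U$ consisting of the (right) eigenvectors of $P^\pi$ and $D$ being the diagonal matrix $D = \mathrm{diag}(\lambda_1, \dots, \lambda_n)$ with $1 = \lambda_1 \ge |\lambda_2| \ge \cdots \ge |\lambda_n|$. Since $(P^\pi)^i = U D^i U^{-1}$, after some manipulations, we see that

$$V^\pi - V^k = U \, \mathrm{diag}\!\left( \frac{(\gamma\lambda_1)^k}{1 - \gamma\lambda_1}, \dots, \frac{(\gamma\lambda_n)^k}{1 - \gamma\lambda_n} \right) U^{-1} r^\pi.$$

The diagonal terms are of the form $(\gamma\lambda_i)^k / (1 - \gamma\lambda_i)$, so they all converge to zero. The dominant term is $\gamma^k / (1 - \gamma)$, which corresponds to the largest eigenvalue. As the largest eigenvalue of the stochastic matrix $P^\pi$ is $\lambda_1 = 1$, this leads to the dominant behaviour of $O(\gamma^k)$, which we also get from the norm-based contraction mapping analysis. The second dominant term behaves as $O(\gamma^k |\lambda_2|^k)$, and so on.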
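The spectral view of the VI error can be checked numerically. The sketch below is an illustration under stated assumptions: the 4-state transition matrix `P`, the reward vector `r`, the discount factor, and the start $V^0 = 0$ are hypothetical choices, not quantities from the paper. It compares the directly computed error $V^\pi - V^k$ with the expression built from the eigen-decomposition, whose $i$-th coefficient is $(\gamma\lambda_i)^k / (1 - \gamma\lambda_i)$.

```python
import numpy as np

# Hypothetical birth-death chain (rows sum to 1); its eigenvalues are real.
P = np.array([[0.50, 0.50, 0.00, 0.00],
              [0.25, 0.50, 0.25, 0.00],
              [0.00, 0.25, 0.50, 0.25],
              [0.00, 0.00, 0.50, 0.50]])
r = np.array([1.0, 0.0, 0.0, 0.0])
gamma, k = 0.95, 20

# Exact value function and k steps of VI from V^0 = 0.
V_pi = np.linalg.solve(np.eye(4) - gamma * P, r)
V_k = np.zeros(4)
for _ in range(k):
    V_k = r + gamma * P @ V_k
error_direct = V_pi - V_k

# Same error from the eigen-decomposition P = U diag(lam) U^{-1}.
lam, U = np.linalg.eig(P)
lam, U = lam.real, U.real
coeffs = (gamma * lam) ** k / (1.0 - gamma * lam)
error_from_spectrum = U @ (coeffs * np.linalg.solve(U, r))

# The largest coefficient belongs to the eigenvalue 1.
dominant = np.max(np.abs(coeffs))
```

The largest coefficient equals $\gamma^k / (1 - \gamma)$, which is the term that deflation is meant to remove.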

If we could somehow remove from $P^\pi$ the subspace corresponding to the top $s$ eigen-structure with eigenvalues $\lambda_1, \dots, \lambda_s$, the dominant behaviour of the new procedure would be $O(\gamma^k |\lambda_{s+1}|^k)$, which can be much faster than the $O(\gamma^k)$ of the conventional VI. Although this may seem too good to be feasible, this is exactly what the proposed Deflated Dynamics Value Iteration (DDVI) algorithm achieves. Even if $P^\pi$ is not a diagonalizable matrix, DDVI works. DDVI is based on two main ideas.

The first idea is the deflation technique, well-studied in linear algebra (see Section 4.2 of Saad [2011]), that allows us to remove large eigenvalues of $P^\pi$. This is done by subtracting a matrix $E$ from $P^\pi$. This gives us a “deflated dynamics” $P^\pi - E$ that does not have eigenvalues $\lambda_1, \dots, \lambda_s$. The second idea is based on matrix splitting (see Section 11.2 of Golub and Van Loan [2013]), which allows us to use the modified dynamics $P^\pi - E$ to define a VI-like iterative procedure and still converge to the same solution $V^\pi$ to which the conventional VI converges.

Deflation has been applied to various algorithms such as the conjugate gradient algorithm [Saad et al., 2000], principal component analysis [Mackey, 2008], the generalized minimal residual method [Morgan, 1995], and nonlinear fixed point iteration [Shroff and Keller, 1993] for improvement of convergence. While some prior work in accelerated planning can be considered as special cases of deflation [Bertsekas, 1995, White, 1963], to the best of our knowledge, this is the first application of the deflation technique that eliminates multiple eigenvalues in the context of RL. On the other hand, multiple planning algorithms have been introduced based on the general idea of matrix splitting [Hastings, 1968, Kushner and Kleinman, 1968, 1971, Reetz, 1973, Porteus, 1975, Rakhsha et al., 2022], though with a different splitting than this work.

After a review of relevant background in Section 2, we present DDVI and prove its convergence rate for the Policy Evaluation (PE) problem in Section 3. Next, in Section 4, we discuss the practical computation of the deflation matrix. In Section 5, we explain how DDVI can be extended to its sample-based variant and introduce the Deflated Dynamics Temporal Difference (DDTD) algorithm. Finally, in Section 6, we empirically evaluate the proposed methods and show their practical feasibility.

2. Background

We first briefly review basic definitions and concepts of Markov Decision Processes (MDP) and Reinforcement Learning (RL) [Bertsekas and Tsitsiklis, 1996, Sutton and Barto, 2018, Szepesvári, 2010, Meyn, 2022]. We then describe the Power Iteration and QR Iterations, which can be used to compute the eigenvalues of a matrix.

For a generic set $\mathcal{X}$, we denote $\mathcal{M}(\mathcal{X})$ as the space of probability distributions over set $\mathcal{X}$. We use $\mathcal{F}(\mathcal{X})$ to denote the space of bounded measurable real-valued functions over $\mathcal{X}$. For a matrix $A$, we use spec(A) to denote its spectrum (the set of eigenvalues), $\rho(A)$ to denote its spectral radius (the maximum of the absolute value of eigenvalues), and $\|A\|$ to denote its $\ell_\infty$ norm.

Markov Decision Process.

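The discounted MDP is defined by the tuple $(\mathcal{X}, \mathcal{A}, \mathcal{P}, \mathcal{R}, \gamma)$, where $\mathcal{X}$ is the state space, $\mathcal{A}$ is the action space, $\mathcal{P}\colon \mathcal{X} \times \mathcal{A} \to \mathcal{M}(\mathcal{X})$ is the transition probability kernel, $\mathcal{R}\colon \mathcal{X} \times \mathcal{A} \to \mathcal{M}(\mathbb{R})$ is the reward kernel, and $\gamma \in [0, 1)$ is the discount factor. In this work, we assume that the MDP has a finite number of states. We use $r\colon \mathcal{X} \times \mathcal{A} \to \mathbb{R}$ to denote the expected reward at a given state-action pair. Denote $\pi\colon \mathcal{X} \to \mathcal{M}(\mathcal{A})$ for a policy. We define the reward function for a policy $\pi$ as $r^\pi(x) = \mathbb{E}_{a \sim \pi(\cdot \mid x)}[r(x, a)]$. The transition kernel of following policy $\pi$ is denoted by $\mathcal{P}^\pi\colon \mathcal{X} \to \mathcal{M}(\mathcal{X})$ and is defined as

$$\mathcal{P}^\pi(x' \mid x) = \mathbb{E}_{a \sim \pi(\cdot \mid x)}\left[\mathcal{P}(x' \mid x, a)\right].$$

The value function for a policy $\pi$ is $V^\pi(x) = \mathbb{E}_\pi\left[\sum_{t=0}^{\infty} \gamma^t r(x_t, a_t) \mid x_0 = x\right]$, where $\mathbb{E}_\pi$ denotes the expected value over all trajectories $(x_0, a_0, x_1, a_1, \dots)$ induced by $\mathcal{P}$ and $\pi$. We say that $V^\star$ is the optimal value function if $V^\star = \sup_\pi V^\pi$. We say $\pi^\star$ is an optimal policy if $\pi^\star = \arg\max_\pi V^\pi$.

The Bellman operator $T^\pi$ for policy $\pi$ is defined as the mapping that takes $V \in \mathcal{F}(\mathcal{X})$ and returns a new function such that its value at state $x$ is $(T^\pi V)(x) = \mathbb{E}_{a \sim \pi(\cdot \mid x),\, x' \sim \mathcal{P}(\cdot \mid x, a)}\left[r(x, a) + \gamma V(x')\right]$. The Bellman optimality operator $T^\star$ is defined as $(T^\star V)(x) = \sup_{a \in \mathcal{A}} \left\{ r(x, a) + \gamma \mathbb{E}_{x' \sim \mathcal{P}(\cdot \mid x, a)}[V(x')] \right\}$. The value functions $V^\pi$ and $V^\star$ are the fixed points of the Bellman operators, that is, $V^\pi = T^\pi V^\pi$ and $V^\star = T^\star V^\star$.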

Value Iteration.

The Value Iteration algorithm is one of the main methods in dynamic programming and planning for computing the value function $V^\pi$ of a policy, the Policy Evaluation (PE) problem, or the optimal value function $V^\star$, for the Control problem. It is iteratively defined as

$$V^{k+1} \leftarrow \begin{cases} T^\pi V^k & \text{(Policy Evaluation)}, \\ T^\star V^k & \text{(Control)}, \end{cases}$$

where $V^0$ is the initial function. For discounted MDPs where $\gamma < 1$, the Bellman operators are contractions, so by the Banach fixed-point theorem [Banach, 1922, Hunter and Nachtergaele, 2001, Hillen, 2023], the VI converges to the unique fixed points, which are $V^\pi$ or $V^\star$, with the convergence rate of $O(\gamma^k)$.
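As a concrete illustration of the PE form of this iteration, the following minimal NumPy sketch runs VI on a small chain and compares the result with the exact solution of the linear Bellman equation. The 3-state transition matrix, reward vector, and discount factor are hypothetical values chosen for illustration, not taken from the experiments.

```python
import numpy as np

def value_iteration_pe(P, r, gamma, num_iters):
    """Policy evaluation by VI: V <- r + gamma * P @ V, starting from V = 0."""
    V = np.zeros_like(r, dtype=float)
    for _ in range(num_iters):
        V = r + gamma * P @ V
    return V

# Hypothetical 3-state chain under a fixed policy (rows sum to 1).
P = np.array([[0.9, 0.1, 0.0],
              [0.1, 0.8, 0.1],
              [0.0, 0.1, 0.9]])
r = np.array([1.0, 0.0, 0.0])
gamma = 0.9

# Exact solution of V = r + gamma * P V, used only to measure the error.
V_exact = np.linalg.solve(np.eye(3) - gamma * P, r)

err_50 = np.max(np.abs(value_iteration_pe(P, r, gamma, 50) - V_exact))
err_100 = np.max(np.abs(value_iteration_pe(P, r, gamma, 100) - V_exact))
```

Because the Bellman operator is a $\gamma$-contraction in the sup norm, the error after $k$ steps is at most $\gamma^k$ times the initial error, which is what makes VI slow when $\gamma$ is close to $1$.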

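Let us now recall some concepts and methods from (numerical) linear algebra; see Golub and Van Loan [2013] for more detail. For any $A \in \mathbb{R}^{n \times n}$ (not necessarily symmetric nor diagonalizable) and for any matrix norm $\|\cdot\|$, Gelfand’s formula states that $\rho(A) = \lim_{k \to \infty} \|A^k\|^{1/k}$. Hence, $\|A^k\| = O((\rho(A) + \epsilon)^k)$ for any $\epsilon > 0$, which we denote by $\|A^k\| = \tilde{O}(\rho(A)^k)$. Furthermore, if $A$ is diagonalizable, then $\|A^k\| = O(\rho(A)^k)$.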
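A small numerical check can make the role of the $\epsilon$ in Gelfand's formula concrete. The sketch below uses a hypothetical $2 \times 2$ Jordan block (a non-diagonalizable matrix chosen for illustration): the $k$-th root of the norm of the $k$-th power approaches the spectral radius, but the ratio of that norm to $\rho^k$ keeps growing with $k$.

```python
import numpy as np

# Hypothetical non-diagonalizable matrix (Jordan block) with spectral radius 0.5.
A = np.array([[0.5, 1.0],
              [0.0, 0.5]])
rho = np.max(np.abs(np.linalg.eigvals(A)))

k = 200
norm_k = np.linalg.norm(np.linalg.matrix_power(A, k), ord=np.inf)

# Gelfand: ||A^k||^(1/k) -> rho(A) as k grows.
gelfand_estimate = norm_k ** (1.0 / k)

# For this Jordan block ||A^k||_inf = (1 + 2k) * rho^k, so ||A^k|| is not O(rho^k).
growth_factor = norm_k / rho ** k
```

The polynomial factor $1 + 2k$ is absorbed by any $\epsilon > 0$, which is why the weaker $\tilde{O}$ statement is the right one for matrices that are not diagonalizable.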

Power Iteration.

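Powers of $A$ can be used to compute eigenvalues of $A$. The Power Iteration starts with an initial vector $b^0 \in \mathbb{R}^n$ and for $k = 0, 1, \dots$ computes

$$b^{k+1} = \frac{A b^k}{\|A b^k\|_2}.$$

If $\lambda_1$ is the eigenvalue such that $|\lambda_1| = \rho(A)$ and the other eigenvalues $\lambda_2, \dots, \lambda_n$ have strictly smaller magnitude, then $(b^k)^\top A b^k \to \lambda_1$ for almost all starting points $b^0$ (Section 7.3.1 of Golub and Van Loan [2013]).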
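A minimal sketch of the Power Iteration follows. The test matrix is a hypothetical symmetric stochastic matrix with eigenvalues $1$, $0.9$, and $0.7$, and the random initial vector and iteration count are illustrative choices; the eigenvalue estimate is the Rayleigh quotient of the normalized iterate.

```python
import numpy as np

def power_iteration(A, num_iters=500, seed=0):
    """Return (eigenvalue estimate, unit vector) for the dominant eigenpair of A."""
    rng = np.random.default_rng(seed)
    b = rng.standard_normal(A.shape[0])
    b /= np.linalg.norm(b)
    for _ in range(num_iters):
        z = A @ b
        b = z / np.linalg.norm(z)  # renormalize to avoid overflow or underflow
    return b @ A @ b, b

# Hypothetical symmetric stochastic matrix with eigenvalues 1, 0.9, 0.7.
A = np.array([[0.9, 0.1, 0.0],
              [0.1, 0.8, 0.1],
              [0.0, 0.1, 0.9]])
lam_1, b = power_iteration(A)
```

The convergence speed is governed by the ratio $|\lambda_2| / |\lambda_1|$, here $0.9$, so a few hundred iterations are ample for this example.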

QR iteration.

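The QR Iteration (or Orthogonal Iteration) algorithm [Golub and Van Loan, 2013, Sections 7.3 and 8.2] is a generalization of the Power Iteration that finds $s$ eigenvalues. For any $A \in \mathbb{R}^{n \times n}$, let $U^0 \in \mathbb{R}^{n \times s}$ have orthonormal columns and perform

$$Z^{k+1} = A U^k, \qquad U^{k+1} R^{k+1} = Z^{k+1} \quad \text{(QR factorization)}$$

for $k = 0, 1, \dots$. If $|\lambda_s| > |\lambda_{s+1}|$, the columns of $U^k$ converge to an orthonormal basis for the dominant $s$-dimensional invariant subspace associated with the eigenvalues $\lambda_1, \dots, \lambda_s$ and diagonal entries of $R^k$ converge to $\lambda_1, \dots, \lambda_s$ for almost all starting $U^0$ [Golub and Van Loan, 2013, Theorem 7.3.1].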
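The Orthogonal Iteration can be sketched in a few lines of NumPy. The matrix, seed, and iteration count below are hypothetical illustrative choices. One deliberate deviation from the description above: eigenvalue estimates are read from the diagonal of $U^\top A U$ rather than from the triangular factor, because `numpy.linalg.qr` does not fix the sign of the diagonal of that factor.

```python
import numpy as np

def orthogonal_iteration(A, s, num_iters=500, seed=0):
    """Return an orthonormal basis U (n x s) of the dominant invariant subspace of A."""
    rng = np.random.default_rng(seed)
    U, _ = np.linalg.qr(rng.standard_normal((A.shape[0], s)))
    for _ in range(num_iters):
        Z = A @ U
        U, _ = np.linalg.qr(Z)  # re-orthonormalize the block of vectors
    return U

# Hypothetical symmetric stochastic matrix with eigenvalues 1, 0.9, 0.7.
A = np.array([[0.9, 0.1, 0.0],
              [0.1, 0.8, 0.1],
              [0.0, 0.1, 0.9]])
U = orthogonal_iteration(A, s=2)

# Sign-invariant eigenvalue estimates for the top two eigenvalues.
top_eigs = np.diag(U.T @ A @ U)
```

The columns of `U` play the role of the top Schur vectors used later for Schur deflation.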

3. Deflated Dynamics Value Iteration

We present Deflated Dynamics Value Iteration (DDVI), after introducing its two key ingredients, matrix deflation and matrix splitting.

3.1. Matrix Deflation

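Let $A \in \mathbb{R}^{n \times n}$ be an $n \times n$ matrix with eigenvalues $\lambda_1, \dots, \lambda_n$, sorted in decreasing order of magnitude with ties broken arbitrarily. Let $u^\top \in \mathbb{C}^{1 \times n}$ denote the conjugate transpose of $u \in \mathbb{C}^n$. We describe three ways of deflating this matrix.

Fact 1 (Hotelling’s deflation, [Meirovitch, 1980, Section 5.6]).

Assume $s \le n$ linearly independent eigenvectors corresponding to $\{\lambda_i\}_{i=1}^{s}$ exist. Write $\{u_i\}_{i=1}^{s}$ and $\{v_i\}_{i=1}^{s}$ to denote the top $s$ right and left eigenvectors scaled to satisfy $u_i^\top v_i = 1$ and $u_i^\top v_j = 0$ for all $1 \le i \ne j \le s$. If $E_s = \sum_{i=1}^{s} \lambda_i u_i v_i^\top$, then $\rho(A - E_s) = |\lambda_{s+1}|$.

Hotelling deflation makes $\rho(A - E_s)$ small by eliminating the top $s$ eigenvalues of $A$, but requires both right and left eigenvectors of $A$. Wielandt’s deflation, in contrast, requires only right eigenvectors.

Fact 2 (Wielandt’s deflation, [Soto and Rojo, 2006, Theorem 5]).

Assume $s \le n$ linearly independent eigenvectors corresponding to $\{\lambda_i\}_{i=1}^{s}$ exist. Write $\{u_i\}_{i=1}^{s}$ to denote the top $s$ linearly independent right eigenvectors. Assume that vectors $\{v_i\}_{i=1}^{s}$, which are not necessarily the left eigenvectors, satisfy $v_i^\top u_i = 1$ and $v_i^\top u_j = 0$ for all $1 \le i \ne j \le s$. If $E_s = \sum_{i=1}^{s} \lambda_i u_i v_i^\top$, then $\rho(A - E_s) = |\lambda_{s+1}|$.

Wielandt’s deflation, however, still requires right eigenvectors, which are sometimes numerically unstable to compute. The Schur deflation, in contrast, only requires Schur vectors, which are stable to compute [Golub and Van Loan, 2013, Section 7.3]. For any $A \in \mathbb{R}^{n \times n}$, the Schur decomposition has the form $A = U R U^\top$, where $R$ is an upper triangular matrix with $R_{ii} = \lambda_i$ for $i = 1, \dots, n$, and $U$ is a unitary matrix. We write $u_i$ to denote the $i$-th column of $U$ and call it the $i$-th Schur vector for $i = 1, \dots, n$. Specifically, the QR iteration computes the top $s$ eigenvalues and Schur vectors.

Fact 3 (Schur deflation, [Saad, 2011, Proposition 4.2]).

Let $s \le n$. Write $\{u_i\}_{i=1}^{s}$ to denote the top $s$ Schur vectors. If $E_s = \sum_{i=1}^{s} \lambda_i u_i u_i^\top$, then $\rho(A - E_s) = |\lambda_{s+1}|$.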
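The three deflations can be compared numerically. The sketch below is an illustration under assumptions: the 4-state birth-death chain is a hypothetical example whose eigenvalues are $1$, $0.75$, $0.25$, and $0$; left eigenvectors are taken as rows of the inverse eigenvector matrix; Schur vectors are obtained by orthonormalizing the leading eigenvectors, which is valid here because the eigenvalues are real and distinct; and the Wielandt example uses only the all-ones right eigenvector with a uniform weight vector.

```python
import numpy as np

def spectral_radius(M):
    return np.max(np.abs(np.linalg.eigvals(M)))

# Hypothetical birth-death chain with eigenvalues 1, 0.75, 0.25, 0.
P = np.array([[0.50, 0.50, 0.00, 0.00],
              [0.25, 0.50, 0.25, 0.00],
              [0.00, 0.25, 0.50, 0.25],
              [0.00, 0.00, 0.50, 0.50]])
n, s = P.shape[0], 2

lam, U = np.linalg.eig(P)
order = np.argsort(-np.abs(lam))            # sort by decreasing magnitude
lam, U = lam[order].real, U[:, order].real

# Hotelling: rows of U^{-1} are left eigenvectors with v_i^T u_j = delta_ij.
V_left = np.linalg.inv(U)
E_hotelling = sum(lam[i] * np.outer(U[:, i], V_left[i]) for i in range(s))

# Schur: orthonormalize the leading eigenvectors to get the top s Schur vectors.
Q, _ = np.linalg.qr(U[:, :s])
E_schur = sum(lam[i] * np.outer(Q[:, i], Q[:, i]) for i in range(s))

# Wielandt (rank 1): u = all-ones vector, v any vector with v^T u = 1.
v = np.full(n, 1.0 / n)
E_wielandt = np.outer(np.ones(n), v)        # lambda_1 = 1

rho_hotelling = spectral_radius(P - E_hotelling)
rho_schur = spectral_radius(P - E_schur)
rho_wielandt = spectral_radius(P - E_wielandt)
```

With rank $2$ the spectral radius drops to $|\lambda_3| = 0.25$, and the rank-$1$ Wielandt deflation leaves $|\lambda_2| = 0.75$.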

3.2. Matrix Splitting: SOR

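Consider the splitting of a matrix $A$ in the form of $A = B + C + D$. Let $\alpha \ne 0$. Then, $z$ solves the linear system $A z = b$ if and only if

$$(D + \alpha B) z = \alpha b - (\alpha C + (\alpha - 1) D) z,$$

which, in turn, holds if and only if

$$z = (D + \alpha B)^{-1} \left( \alpha b - (\alpha C + (\alpha - 1) D) z \right),$$

provided that $D + \alpha B$ is invertible. Successive over-relaxation (SOR) attempts to find a solution through the fixed-point iteration

$$z^{k+1} = (D + \alpha B)^{-1} \left( \alpha b - (\alpha C + (\alpha - 1) D) z^k \right).$$

Note that classical SOR uses a lower triangular $B$, upper triangular $C$, and diagonal $D$. Here, we generalize the standard derivation of SOR to any splitting $A = B + C + D$.

Policy evaluation is the problem of finding a unique $V^\pi$ that satisfies the Bellman equation $T^\pi V^\pi = V^\pi$, or in an expanded form, $(I - \gamma P^\pi) V^\pi = r^\pi$. Notice that this is equivalent to

$$(I - \alpha\gamma E) V^\pi = \alpha r^\pi + \left( (1 - \alpha) I + \alpha\gamma (P^\pi - E) \right) V^\pi$$

for any $E \in \mathbb{R}^{n \times n}$, which corresponds to the splitting $B = -\gamma E$, $C = -\gamma (P^\pi - E)$, $D = I$ of $A = I - \gamma P^\pi$ with $b = r^\pi$. The SOR iteration for PE is

$$V^{k+1} = (I - \alpha\gamma E)^{-1} \left( \alpha r^\pi + \left( (1 - \alpha) I + \alpha\gamma (P^\pi - E) \right) V^k \right). \tag{1}$$

As an example, notice that for $\alpha = 1$ and $E = 0$, we recover the original VI. The convergence behaviour of this procedure depends on the choice of $\alpha$ and $E$. Soon we propose particular choices that can lead to acceleration.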
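The generalized SOR fixed-point iteration can be sketched directly from a three-way splitting $A = B + C + D$ with relaxation parameter $\alpha$: each step solves $(D + \alpha B) z^{k+1} = \alpha b - (\alpha C + (\alpha - 1) D) z^k$. The example below is a hypothetical one, using the classical lower/upper/diagonal splitting of a small symmetric positive definite system with $\alpha = 1.1$; it is not one of the splittings used for DDVI.

```python
import numpy as np

def sor_solve(B, C, D, b, alpha, num_iters=200):
    """Fixed-point iteration z <- (D + alpha B)^{-1} (alpha b - (alpha C + (alpha - 1) D) z)."""
    z = np.zeros_like(b, dtype=float)
    M = D + alpha * B
    N = alpha * C + (alpha - 1.0) * D
    for _ in range(num_iters):
        z = np.linalg.solve(M, alpha * b - N @ z)
    return z

# Hypothetical symmetric positive definite system A z = b.
A = np.array([[4.0, 1.0, 0.0],
              [1.0, 4.0, 1.0],
              [0.0, 1.0, 4.0]])
b = np.array([1.0, 2.0, 3.0])

# Classical SOR splitting: strictly lower part, strictly upper part, diagonal.
B = np.tril(A, k=-1)
C = np.triu(A, k=1)
D = np.diag(np.diag(A))

z = sor_solve(B, C, D, b, alpha=1.1)
```

Any fixed point of the iteration solves the original system regardless of the splitting; the splitting and $\alpha$ only affect whether and how fast the iteration converges.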

3.3. Deflated Dynamics Value Iteration

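We are now ready to introduce DDVI. Let $E_s$ be a rank-$s$ deflation matrix of $P^\pi$ satisfying the conditions of Facts 1, 2, or 3. Using (1), we can express the SOR iteration for PE with deflation matrix $E_s$ as

$$W^{k+1} = (1 - \alpha) V^k + \alpha \left( r^\pi + \gamma (P^\pi - E_s) V^k \right), \qquad V^{k+1} = (I - \alpha\gamma E_s)^{-1} W^{k+1}. \tag{2}$$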

We call this method Deflated Dynamics Value Iteration (DDVI). Theorem 3.1 and Corollary 3.2 describe the rate of convergence of DDVI for PE.
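A minimal sketch of DDVI for policy evaluation follows, written as the SOR iteration with a deflation matrix: $W = (1 - \alpha) V + \alpha (r^\pi + \gamma (P^\pi - E) V)$ followed by $V = (I - \alpha\gamma E)^{-1} W$. Everything concrete here is an assumption for illustration: the 4-state chain, the reward, $\gamma = 0.99$, the start $V^0 = 0$, and the way the deflation matrices are built (exact eigenvectors from `numpy.linalg.eig` rather than the iterative schemes of Section 4).

```python
import numpy as np

def ddvi_pe(P, r, gamma, E, alpha=1.0, num_iters=30):
    """Deflated VI for policy evaluation with deflation matrix E, from V^0 = 0."""
    n = P.shape[0]
    V = np.zeros(n)
    M = np.eye(n) - alpha * gamma * E
    for _ in range(num_iters):
        W = (1.0 - alpha) * V + alpha * (r + gamma * (P - E) @ V)
        V = np.linalg.solve(M, W)
    return V

# Hypothetical birth-death chain with eigenvalues 1, 0.75, 0.25, 0.
P = np.array([[0.50, 0.50, 0.00, 0.00],
              [0.25, 0.50, 0.25, 0.00],
              [0.00, 0.25, 0.50, 0.25],
              [0.00, 0.00, 0.50, 0.50]])
r = np.array([1.0, 0.0, 0.0, 0.0])
gamma, n = 0.99, 4
V_pi = np.linalg.solve(np.eye(n) - gamma * P, r)

# E = 0 recovers plain VI; rank 1 uses the all-ones eigenvector (Wielandt);
# rank 2 uses the top two right/left eigenvector pairs (Hotelling).
E0 = np.zeros((n, n))
E1 = np.outer(np.ones(n), np.full(n, 1.0 / n))
lam, U = np.linalg.eig(P)
order = np.argsort(-np.abs(lam))
lam, U = lam[order].real, U[:, order].real
V_left = np.linalg.inv(U)
E2 = sum(lam[i] * np.outer(U[:, i], V_left[i]) for i in range(2))

errors = {name: np.max(np.abs(ddvi_pe(P, r, gamma, E) - V_pi))
          for name, E in [("vi", E0), ("rank1", E1), ("rank2", E2)]}
```

After the same 30 iterations, the error shrinks as more eigenvalues are deflated, in line with the per-iteration factors $\gamma$, $\gamma |\lambda_2|$, and $\gamma |\lambda_3|$ for this example.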

Theorem 3.1.

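Let $\pi$ be a policy and let $\lambda_1, \dots, \lambda_n$ be the eigenvalues of $P^\pi$ sorted in decreasing order of magnitude with ties broken arbitrarily. Let $s \le n$. Let $E_s$ be a rank-$s$ deflation matrix of $P^\pi$ satisfying the conditions of Facts 1, 2, or 3. For $0 < \alpha \le 1$, DDVI (2) exhibits the rate

$$\|V^k - V^\pi\| = \tilde{O}(|\lambda|^k)$$

as $k \to \infty$, where

$$\lambda = \max_{1 \le i \le s,\; s+1 \le j \le n} \left\{ \left| \frac{1 - \alpha}{1 - \alpha\gamma\lambda_i} \right|, \; \left| 1 - \alpha + \alpha\gamma\lambda_j \right| \right\}.$$

(The precise meaning of the $\tilde{O}$ notation is $\|V^k - V^\pi\| = O((|\lambda| + \epsilon)^k)$ as $k \to \infty$ for any $\epsilon > 0$.)

When $\alpha = 1$, we can simplify DDVI (2) as follows.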

Corollary 3.2.

Let us compare this convergence rate with the original VI’s. The rate for VI is $O(\gamma^k)$, which is slow when $\gamma \approx 1$. By choosing $E_s$ to deflate the top $s$ eigenvalues of $P^\pi$, DDVI has the rate of $\tilde{O}(\gamma^k |\lambda_{s+1}|^k)$, which is exponentially faster whenever $|\lambda_{s+1}|$ is smaller than $1$. The exact behaviour depends on the spectrum of the Markov chain (whether eigenvalues are close to $1$ or far from it) and the number of eigenvalues we decide to deflate by $E_s$.

In Section 4, we discuss a practical implementation of DDVI based on the power iteration and orthogonal iteration and implement it in the experiments of Section 6. We find that smaller values of $\alpha$ lead to more stable iterations when using approximate deflation matrices. We also show how we can use DDVI in the RL setting in Section 5.

This version of DDVI (2) applies to the policy evaluation (PE) setup. The challenge in extending DDVI to the Control setup is that the transition matrix $P^{\pi_k}$ changes throughout the iterations, and so should the deflation matrix $E_s$. However, when $s = 1$, the deflation matrix can be kept constant, and we utilize this fact in Section 4.1.

4. Computing Deflation Matrix

The application of DDVI requires practical means of computing the deflation matrix $E_s$. In this section, we provide three approaches as examples.

4.1. Rank-$1$ DDVI for PE and Control

Recall that for any stochastic matrix, the vector $\mathbf{1} = [1, \dots, 1]^\top$ is a right eigenvector corresponding to eigenvalue $1$. This allows us to easily obtain a rank-$1$ deflation matrix for $P^\pi$ for any policy $\pi$.

Let $E_1 = \mathbf{1} v^\top$ with $v \in \mathbb{R}^n$ be a vector with non-negative entries satisfying $v^\top \mathbf{1} = 1$. This rank-$1$ Wielandt’s deflation matrix can be used for DDVI (PE) as in Section 2, but we can also use it for the Control version of DDVI.

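We define the rank-$1$ DDVI for Control as

$$W^{k+1} = \max_{\pi} \left\{ r^\pi + \gamma (P^\pi - \mathbf{1} v^\top) V^k \right\} = \max_{\pi} \left\{ r^\pi + \gamma P^\pi V^k \right\} - \gamma (v^\top V^k) \mathbf{1}, \qquad V^{k+1} = (I - \gamma \mathbf{1} v^\top)^{-1} W^{k+1}. \tag{3}$$

Here, we benefitted from the fact that $E_1 = \mathbf{1} v^\top$ is not a function of $\pi$ in order to take it out of the $\max_\pi$. Compared with (2) of DDVI (PE), we set $\alpha = 1$ for simplicity. We have the following guarantee for rank-$1$ DDVI for Control.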
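The rank-$1$ scheme for Control can be sketched as follows, under stated assumptions: the deflation matrix is $\mathbf{1} v^\top$ with a uniform probability vector $v$, the relaxation parameter is $1$, and the inverse is applied in closed form as $(I - \gamma \mathbf{1} v^\top)^{-1} = I + \frac{\gamma}{1 - \gamma} \mathbf{1} v^\top$ (Sherman-Morrison, using $v^\top \mathbf{1} = 1$). The 3-state, 2-action MDP and all function names are hypothetical.

```python
import numpy as np

def greedy_backup(P, r, gamma, V):
    """Bellman optimality backup: max_a { r[x, a] + gamma * sum_y P[a, x, y] V[y] }."""
    return (r + gamma * np.einsum("axy,y->xa", P, V)).max(axis=1)

def value_iteration(P, r, gamma, num_iters):
    V = np.zeros(r.shape[0])
    for _ in range(num_iters):
        V = greedy_backup(P, r, gamma, V)
    return V

def rank1_ddvi_control(P, r, gamma, v, num_iters):
    V = np.zeros(r.shape[0])
    c = gamma / (1.0 - gamma)
    for _ in range(num_iters):
        # The deflation term does not depend on the action, so it sits outside the max.
        W = greedy_backup(P, r, gamma, V) - gamma * (v @ V)
        V = W + c * (v @ W)  # applies (I + c * 1 v^T) to W
    return V

# Hypothetical MDP: P[a] is the transition matrix of action a, r[x, a] the reward.
P = np.array([[[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]],
              [[0.2, 0.4, 0.4], [0.4, 0.2, 0.4], [0.4, 0.4, 0.2]]])
r = np.array([[1.0, 1.5], [2.0, 1.0], [1.0, 1.2]])
gamma = 0.9
v = np.full(3, 1.0 / 3.0)

V_star = value_iteration(P, r, gamma, 2000)   # reference solution
V_vi = value_iteration(P, r, gamma, 50)
V_ddvi = rank1_ddvi_control(P, r, gamma, v, 50)
err_vi = np.max(np.abs(V_vi - V_star))
err_ddvi = np.max(np.abs(V_ddvi - V_star))
shift = V_ddvi - V_vi
```

In this sketch the two iterates differ only by a constant vector at every iteration, so the greedy policies coincide even though the deflated iterate is much closer to the optimal value function.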

Theorem 4.1.

Discussion.

Although rank-1 DDVI for Control does accelerate the convergence , a subtle point to note is that the greedy policy obtained from is not affected by the rank-1 deflation. Briefly speaking, this is because adding a uniform constant to through has no effect on the . Indeed, the maximizer in (3) is the same as , produced by the (non-deflated) value iteration, when . Having the term in the update adds the same constant to all states, so it does not change the maximizer of the next policy.

4.2. DDVI with Automatic Power Iteration

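Assume that the top $s+1$ eigenvalues of $P^\pi$ have distinct magnitude, i.e., $1 = \lambda_1 > |\lambda_2| > \cdots > |\lambda_{s+1}|$. Let $E_s = \sum_{i=1}^{s} \lambda_i u_i v_i^\top$ be a rank-$s$ deflation matrix of $P^\pi$ as in Fact 2.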

Consider the DDVI algorithm (2) with as in Corollary 3.2. If , since (verified in Appendix 8), we have . Therefore, is the iterates of a power iteration for the matrix starting from initial vector . As discussed in Fact 2, the top eigenvalue of is . For large , we expect , where is the top eigenvector of . With , we can recover the -th right eigenvector of through the formula ([Bru et al., 2012, Proposition 5]) .

Leveraging this observation, DDVI with Automatic Power Iteration (AutoPI) computes an approximate rank-$s$ deflation matrix $E_s$ while performing DDVI: Start with a rank-$1$ deflation matrix, using the first right eigenvector $u_1 = \mathbf{1}$, and carry out DDVI iterations. If a certain error criterion is satisfied, use the DDVI iterates, through the recovery formula above, to approximate the second right eigenvector $u_2$. Then, update the deflation matrix to be rank $2$, and gradually increase the deflation rank. Algorithm 3 in Appendix 9 has the details.

Rank-$s$ DDVI with the QR Iteration.

We can also use the QR Iteration to compute the top $s$ Schur vectors, and then construct the rank-$s$ Schur deflation matrix and perform DDVI. We can also perform the QR Iteration in an automated manner, similar to AutoPI. This results in the AutoQR algorithm. The details are in Appendix 9.

5. Deflated Dynamics Temporal Difference Learning

Many practical RL algorithms such as Temporal Difference (TD) Learning [Sutton, 1988, Tsitsiklis and V. Roy, 1997], Q-Learning [Watkins, 1989], Fitted Value Iteration [Gordon, 1995], and DQN [Mnih et al., 2015] can be viewed as sample-based variants of VI. Consequently, the slow convergence in the case of $\gamma \approx 1$ has also been observed for these algorithms by Szepesvári [1997], Even-Dar and Mansour [2003], Wainwright [2019] for TD Learning and by Munos and Szepesvári [2008], Farahmand et al. [2010], Chen and Jiang [2019], Fan et al. [2020] for Fitted VI and DQN. Here we introduce Deflated Dynamics Temporal Difference Learning (DDTD) as a sample-based variant of DDVI. To start, recall that the tabular temporal difference (TD) learning performs the updates

$$V^{k+1}(X_k) = V^k(X_k) + \eta_k \left[ r^\pi(X_k) + \gamma V^k(X_k') - V^k(X_k) \right],$$

where $\eta_k$ is the stepsize, $(X_k, X_k')$ are random samples from the environment such that $X_k'$ is the subsequent state following $X_k$, and $r^\pi(X_k)$ is the expected $1$-step reward obtained by following policy $\pi$ from state $X_k$. TD is a sample-based variant of VI for PE. Two key ingredients of TD learning are the random coordinate updates and the temporal difference error $r^\pi(X_k) + \gamma V^k(X_k') - V^k(X_k)$, whose conditional expectation is equal to $(T^\pi V^k - V^k)(X_k)$. Applying the same ingredients to DDVI, we obtain DDTD. The improved convergence rate for DDVI compared to VI suggests that DDTD will also exhibit improved convergence rates.
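For reference, the tabular TD update can be sketched as below. This is plain TD learning, not DDTD. The sampling scheme (the state drawn uniformly at random at each step), the constant step size, the 3-state chain, and the small discount factor are assumptions made to keep the example short and quick to converge.

```python
import numpy as np

def td0(P, r, gamma, step_size, num_steps, seed=0):
    """Tabular TD(0): update one coordinate of V per sampled transition."""
    rng = np.random.default_rng(seed)
    n = len(r)
    V = np.zeros(n)
    for _ in range(num_steps):
        x = rng.integers(n)                 # sampled state (assumed uniform)
        x_next = rng.choice(n, p=P[x])      # next state drawn from row x of P
        td_error = r[x] + gamma * V[x_next] - V[x]
        V[x] += step_size * td_error        # only the visited coordinate changes
    return V

# Hypothetical 3-state chain and expected one-step rewards under a fixed policy.
P = np.array([[0.9, 0.1, 0.0],
              [0.1, 0.8, 0.1],
              [0.0, 0.1, 0.9]])
r = np.array([1.0, 0.0, -1.0])
gamma = 0.5

V_pi = np.linalg.solve(np.eye(3) - gamma * P, r)
V_td = td0(P, r, gamma, step_size=0.05, num_steps=20000)
td_error_norm = np.max(np.abs(V_td - V_pi))
```

With a constant step size the iterate fluctuates around the true value function rather than converging exactly, which is why convergence results for TD-type methods are stated for diminishing step sizes.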

Specifically, we define DDTD as

| (4) |

where is a rank- deflation matrix of and are i.i.d. random variables such that and . The -update notation means for all .

DDTD is the sample-based variant of DDVI performing asynchronous updates. The following result provides almost sure convergence of DDTD.

Theorem 5.1.

Let when . For , DDTD (4) converges to almost surely.

The following result describes the asymptotic convergence rate of DDTD.

Theorem 5.2.

Let be the eigenvalues of sorted in decreasing order of magnitude with ties broken arbitrarily. Let for where . For , DDTD (4) exhibits the rate .

We note that in Theorem 5.2, as the rank of DDTD increases, also increases, and this implies that higher rank DDTD has a larger range of convergent step size compared to the plain TD learning.

5.1. Implementation of DDTD

To implement DDTD practically, we take a model-based approach for obtaining . At each iteration, the agent uses the new samples to update an approximate model of the true dynamics. This updated approximate model is used to compute . We update every -th iterations since the change in and consequently the resulting at each iteration is small. Whenever a new is computed, we set to be . This step ensures that smoothly converges to by updating all coordinates of at once based on new . Also, we use the random sample reward in place of the expected reward . We formally state the algorithm as Algorithm 1.

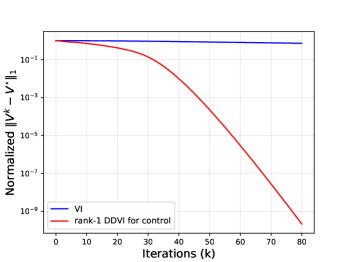

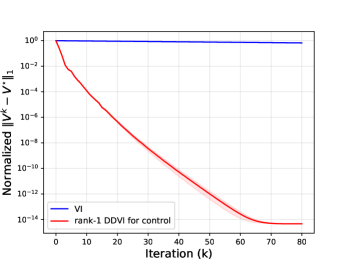

6. Experiments

For our experiments, we use the following environments: Maze with states and actions, Cliffwalk with states with 4 actions, Chain Walk with 50 states with 2 actions, and random Garnet MDPs [Bhatnagar et al., 2009] with 200 states. The discount factor is set to for DDTD experiments and in other experiments. Appendix 10 provides full definitions of the environments and policies we used. All experiments were carried out on local CPUs. For comparisons, we use the normalized error of defined as .

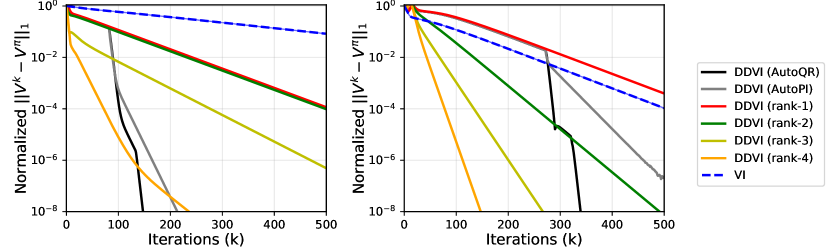

DDVI with AutoPI, AutoQR, different fixed ranks.

In Figure 1, we compare rank-$s$ DDVI for multiple values of $s$ and DDVI with AutoPI and AutoQR against the VI in Chain Walk and Maze. QR iteration (Section 2) is used to calculate $E_s$ with Schur vectors. In almost all cases, DDVI exhibits a significantly faster convergence rate compared to VI. Aligned with the theory, we observe that higher ranks achieve better convergence rates. Also, as DDVI with AutoPI and AutoQR progress and update $E_s$, their convergence rate improves.

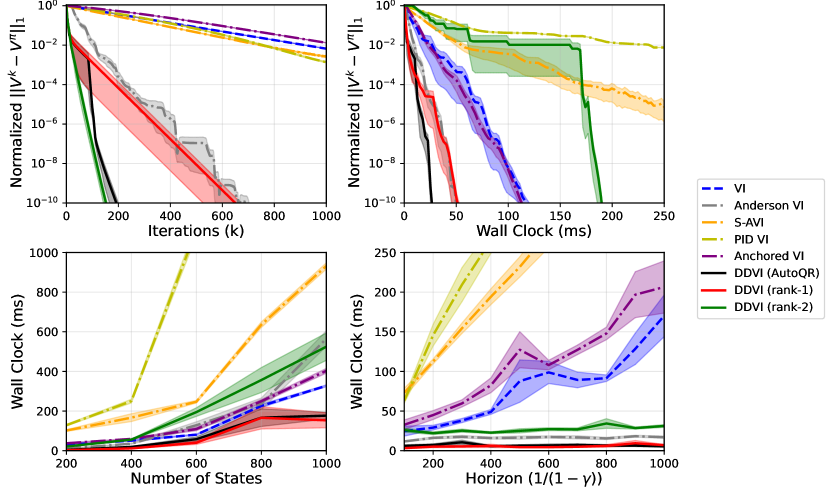

DDVI and other baselines.

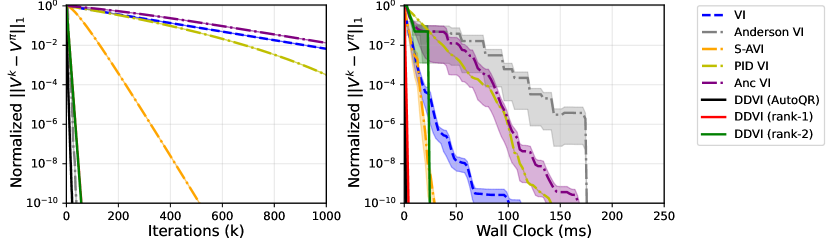

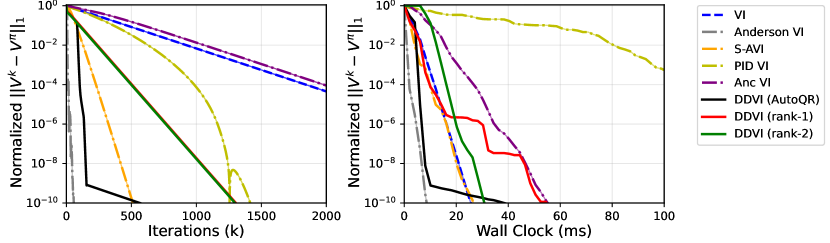

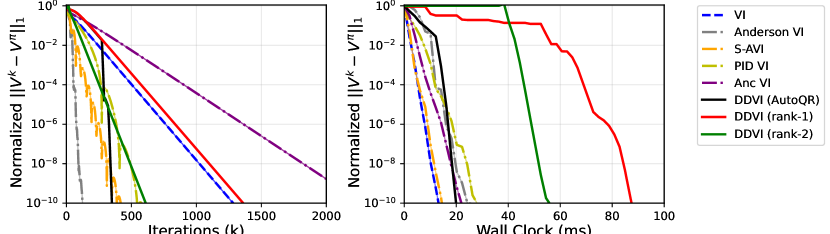

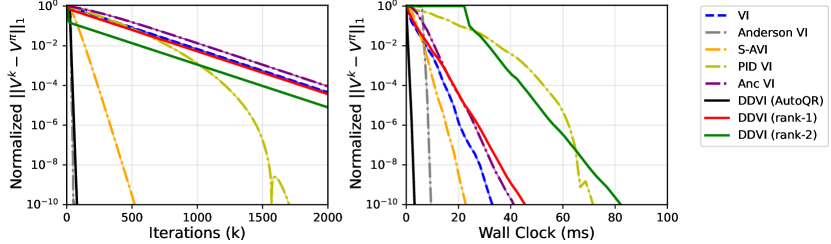

We perform an extensive comparison of DDVI against the prior accelerated VI methods: Safe Accelerated VI (S-AVI)[Goyal and Grand-Clément, 2022], Anderson VI [Geist and Scherrer, 2018], PID VI [Farahmand and Ghavamzadeh, 2021], and Anchored VI [Lee and Ryu, 2023]. In this experiment, we use the Implicitly Restarted Arnoldi Method [Lehoucq et al., 1998] from SciPy package to calculate the eigenvalues and eigenvectors for DDVI. Figure 2 (top-left) shows the convergence behaviour of the algorithms by iteration count in 20 randomly generated Garnet environments, where our algorithms outperform all the baselines. This comparison might not be fair as the amount of computation needed for each iteration of algorithms is not the same. Therefore, in Figure 2 (top-right), we compare the algorithms by wall clock time. It can be seen that rank- DDVI initially has to spend time to calculate before it starts to update the value function, but after the slow start, it has the fastest rate. The fast rate can compensate for the initial time if a high accuracy is needed. DDVI with AutoQR and rank- DDVI show a fast convergence from the beginning.

We further investigate how the algorithms scale with the size of MDP and the discount factor. Figure 2 (bottom-left) shows the runtime to reach a normalized error of against the number of states. DDVI with AutoQR and rank- DDVI show the best scaling. Note that even rank- calculates a non-trivial also scales competitively. Figure 2 (bottom-right) show the scaling behaviour with horizon of the MDP, which is . We measure the runtime to reach a normalized error of for the horizon ranging from to which corresponds to ranging from to . Remarkably, DDVI algorithms have a low constant runtime even with long horizon tasks with . This is aligned with our theoretical result, as the rate remains small as approaches .

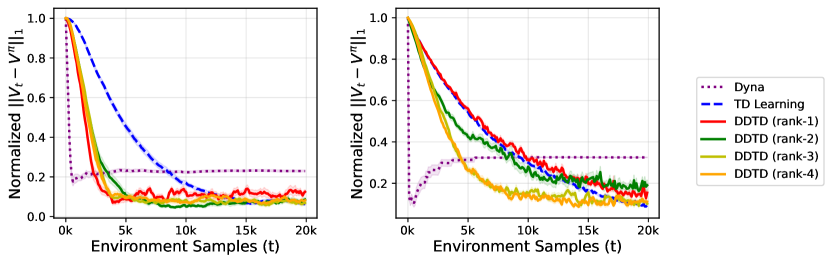

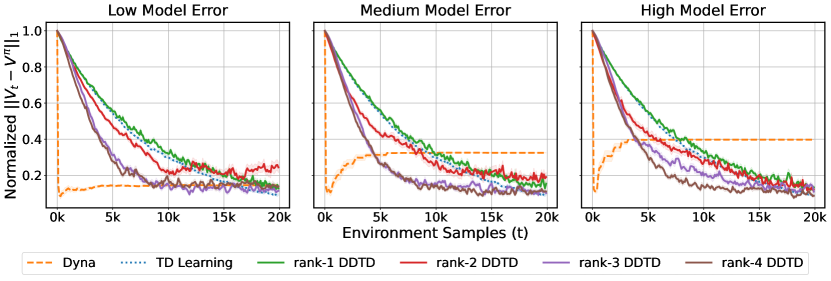

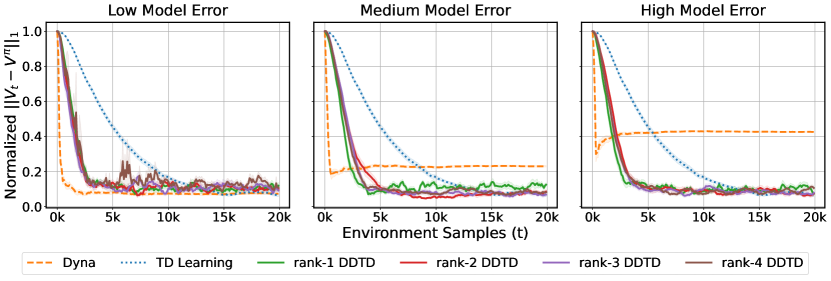

Rank-$s$ DDTD with QR iteration.

We compare DDTD with TD Learning and Dyna. For the sake of making the setting more realistic, we consider the case where the approximate model cannot exactly learn the true dynamics. Figure 3 shows that DDTD with a large enough rank can outperform TD. Note that unlike Dyna, DDTD does not suffer from model error, which shows that DDTD only uses the model for acceleration and is not a pure model-based algorithm.

7. Conclusion

In this work, we propose a framework for accelerating VI through matrix deflation. We theoretically analyze our proposed methods DDVI and DDTD, and present experimental results showing speedups in various setups.

The positive experimental results demonstrate matrix deflation to be a promising technique that may be applicable to a broader range of RL algorithms. One direction of future work is to extend the theoretical analysis of DDVI for Control using a general rank-$s$ deflation matrix. Theoretically analyzing other RL methods combined with matrix deflation is another interesting direction.

Acknowledgements

AMF acknowledges the funding from the Natural Sciences and Engineering Research Council of Canada (NSERC) through the Discovery Grant program (2021-03701) and the Canada CIFAR AI Chairs program.

References

- Akian et al. [2022] M. Akian, S. Gaubert, Z. Qu, and O. Saadi. Multiply accelerated value iteration for non-symmetric affine fixed point problems and application to Markov decision processes. SIAM Journal on Matrix Analysis and Applications, 43(1):199–232, 2022.

- Andre et al. [1997] D. Andre, N. Friedman, and R. Parr. Generalized prioritized sweeping. Neural Information Processing Systems, 1997.

- Azar et al. [2011] M. Azar, R. Munos, M. Ghavamzadeh, and H. Kappen. Speedy Q-learning. Neural Information Processing Systems, 2011.

- Bacon [2018] P. Bacon. Temporal Representation Learning. PhD thesis, McGill University, 2018.

- Bacon and Precup [2016] P. Bacon and D. Precup. A matrix splitting perspective on planning with options. arXiv:1612.00916, 2016.

- Banach [1922] S. Banach. Sur les opérations dans les ensembles abstraits et leur application aux équations intégrales. Fundamenta Mathematicae, 3(1):133–181, 1922.

- Bertsekas [1995] D. P. Bertsekas. Generic rank-one corrections for value iteration in markovian decision problems. Operations research letters, 17(3):111–119, 1995.

- Bertsekas and Tsitsiklis [1996] D. P. Bertsekas and J. N. Tsitsiklis. Neuro-Dynamic Programming. Athena Scientific, 1996.

- Bhandari et al. [2018] J. Bhandari, D. Russo, and R. Singal. A finite time analysis of temporal difference learning with linear function approximation. 2018.

- Bhatnagar et al. [2009] S. Bhatnagar, R. S. Sutton, M. Ghavamzadeh, and M. Lee. Natural actor–critic algorithms. Automatica, 45(11):2471–2482, 2009.

- Borkar [1998] Vivek S Borkar. Asynchronous stochastic approximations. SIAM Journal on Control and Optimization, 36(3):840–851, 1998.

- Borkar and Meyn [2000] Vivek S Borkar and Sean P Meyn. The ode method for convergence of stochastic approximation and reinforcement learning. SIAM Journal on Control and Optimization, 38(2):447–469, 2000.

- Bowen et al. [2021] W. Bowen, X. Huaqing, Z. Lin, L. Yingbin, and Z. Wei. Finite-time theory for momentum Q-learning. Conference on Uncertainty in Artificial Intelligence, 2021.

- Bru et al. [2012] R. Bru, R. Canto, R. L. Soto, and A. M. Urbano. A Brauer’s theorem and related results. Central European Journal of Mathematics, 10:312–321, 2012.

- Chen and Jiang [2019] J. Chen and N. Jiang. Information-theoretic considerations in batch reinforcement learning. International Conference on Machine Learning, 2019.

- Chen et al. [2020] S. Chen, A. Devraj, A. Busic, and S. Meyn. Explicit mean-square error bounds for monte-carlo and linear stochastic approximation. International Conference on Artificial Intelligence and Statistics, 2020.

- Chen et al. [2023] Z. Chen, S. T Maguluri, S. Shakkottai, and K. Shanmugam. A lyapunov theory for finite-sample guarantees of markovian stochastic approximation. Operations Research, 2023.

- Dai et al. [2011] P. Dai, D. S. Weld, J. Goldsmith, et al. Topological value iteration algorithms. Journal of Artificial Intelligence Research, 42:181–209, 2011.

- Dalal et al. [2018] G. Dalal, B. Szörényi, G. Thoppe, and S. Mannor. Finite sample analyses for td (0) with function approximation. Association for the Advancement of Artificial Intelligence, 2018.

- Devraj and Meyn [2021] A. M. Devraj and S. P. Meyn. Q-learning with uniformly bounded variance. IEEE Transactions on Automatic Control, 67(11):5948–5963, 2021.

- Ermis and Yang [2020] M. Ermis and I. Yang. A3DQN: Adaptive Anderson acceleration for deep Q-networks. IEEE Symposium Series on Computational Intelligence, 2020.

- Ermis et al. [2021] M. Ermis, M. Park, and I. Yang. On Anderson acceleration for partially observable Markov decision processes. IEEE Conference on Decision and Control, 2021.

- Ernst et al. [2005] D. Ernst, P. Geurts, and L. Wehenkel. Tree-based batch mode reinforcement learning. Journal of Machine Learning Research, 2005.

- Even-Dar and Mansour [2003] E. Even-Dar and Y. Mansour. Learning rates for Q-learning. Journal of Machine Learning Research, 2003.

- Fan et al. [2020] J. Fan, Z. Wang, Y. Xie, and Z. Yang. A theoretical analysis of deep Q-learning. Learning for dynamics and control, 2020.

- Farahmand and Ghavamzadeh [2021] A. Farahmand and M. Ghavamzadeh. PID accelerated value iteration algorithm. International Conference on Machine Learning, 2021.

- Farahmand et al. [2010] A. Farahmand, R. Munos, and C. Szepesvári. Error propagation for approximate policy and value iteration. Neural Information Processing Systems, 2010.

- Geist and Scherrer [2018] M. Geist and B. Scherrer. Anderson acceleration for reinforcement learning. European Workshop on Reinforcement Learning, 2018.

- Golub and Van Loan [2013] G. H. Golub and C. F. Van Loan. Matrix Computations. The Johns Hopkins University Press, 4th edition, 2013.

- Gordon [1995] G. Gordon. Stable function approximation in dynamic programming. International Conference on Machine Learning, 1995.

- Goyal and Grand-Clément [2022] V. Goyal and J. Grand-Clément. A first-order approach to accelerated value iteration. Operations Research, 71(2):517–535, 2022.

- Grand-Clément [2021] J. Grand-Clément. From convex optimization to MDPs: A review of first-order, second-order and quasi-newton methods for MDPs. arXiv:2104.10677, 2021.

- Gupta et al. [2015] A. Gupta, R. Jain, and P. W Glynn. An empirical algorithm for relative value iteration for average-cost MDPs. IEEE Conference on Decision and Control, 2015.

- Hastings [1968] N. A. J. Hastings. Some notes on dynamic programming and replacement. Journal of the Operational Research Society, 19:453–464, 1968.

- Hillen [2023] T. Hillen. Elements of Applied Functional Analysis. AMS Open Notes, 2023.

- Hunter and Nachtergaele [2001] J. K. Hunter and B. Nachtergaele. Applied analysis. World Scientific Publishing Company, 2001.

- Jaakkola et al. [1993] T. Jaakkola, M. Jordan, and S. Singh. Convergence of stochastic iterative dynamic programming algorithms. Advances in neural information processing systems, 1993.

- Kushner and Kleinman [1968] H. Kushner and A. Kleinman. Numerical methods for the solution of the degenerate nonlinear elliptic equations arising in optimal stochastic control theory. IEEE Transactions on Automatic Control, 1968.

- Kushner and Kleinman [1971] H. Kushner and A. Kleinman. Accelerated procedures for the solution of discrete Markov control problems. IEEE Transactions on Automatic Control, 1971.

- Lagoudakis and Parr [2003] M. G. Lagoudakis and R. Parr. Least-squares policy iteration. Journal of Machine Learning Research, 2003.

- Lakshminarayanan and Szepesvari [2018] C. Lakshminarayanan and C. Szepesvari. Linear stochastic approximation: How far does constant step-size and iterate averaging go? International Conference on Artificial Intelligence and Statistics, 2018.

- Lee and Ryu [2023] J. Lee and E. K. Ryu. Accelerating value iteration with anchoring. Neural Information Processing Systems, 2023.

- Lehoucq et al. [1998] R.B. Lehoucq, D.C. Sorensen, and C. Yang. ARPACK Users’ Guide: Solution of Large-scale Eigenvalue Problems with Implicitly Restarted Arnoldi Methods. Society for Industrial and Applied Mathematics, 1998.

- Mackey [2008] L. Mackey. Deflation methods for sparse PCA. In Neural Information Processing Systems, 2008.

- McMahan and Gordon [2005] H. B. McMahan and G. J. Gordon. Fast exact planning in Markov decision processes. International Conference on Automated Planning and Scheduling, 2005.

- Meirovitch [1980] L. Meirovitch. Computational methods in structural dynamics. Springer Science & Business Media, 1980.

- Meyn [2022] S. Meyn. Control Systems and Reinforcement Learning. Cambridge University Press, 2022.

- Mnih et al. [2015] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, et al. Human-level control through deep reinforcement learning. Nature, 518(7540):529–533, 2015.

- Moore and Atkeson [1993] A. W. Moore and C. G. Atkeson. Prioritized sweeping: Reinforcement learning with less data and less time. Machine Learning, 1993.

- Morgan [1995] R. B. Morgan. A restarted GMRES method augmented with eigenvectors. SIAM Journal on Matrix Analysis and Applications, 16(4):1154–1171, 1995.

- Munos and Szepesvári [2008] R. Munos and C. Szepesvári. Finite-time bounds for fitted value iteration. Journal of Machine Learning Research, 2008.

- Park et al. [2022] M. Park, J. Shin, and I. Yang. Anderson acceleration for partially observable Markov decision processes: A maximum entropy approach. arXiv:2211.14998, 2022.

- Peng and Williams [1993] J. Peng and R. J. Williams. Efficient learning and planning within the Dyna framework. Adaptive Behavior, 1(4):437–454, 1993.

- Porteus [1975] E. L. Porteus. Bounds and transformations for discounted finite Markov decision chains. Operations Research, 23(4):761–784, 1975.

- Rakhsha et al. [2022] A. Rakhsha, A. Wang, M. Ghavamzadeh, and A. Farahmand. Operator splitting value iteration. Neural Information Processing Systems, 2022.

- Reetz [1973] D. Reetz. Solution of a Markovian decision problem by successive overrelaxation. Zeitschrift für Operations Research, 17:29–32, 1973.

- Saad [2011] Y. Saad. Numerical methods for large eigenvalue problems: revised edition. SIAM, 2011.

- Saad et al. [2000] Y. Saad, M. Yeung, J. Erhel, and F. Guyomarc’h. A deflated version of the conjugate gradient algorithm. SIAM Journal on Scientific Computing, 21(5):1909–1926, 2000.

- Sharma et al. [2020] H. Sharma, M. Jafarnia-Jahromi, and R. Jain. Approximate relative value learning for average-reward continuous state MDPs. Uncertainty in Artificial Intelligence, 2020.

- Shi et al. [2019] W. Shi, S. Song, H. Wu, Y. Hsu, C. Wu, and G. Huang. Regularized Anderson acceleration for off-policy deep reinforcement learning. Neural Information Processing Systems, 2019.

- Shroff and Keller [1993] G. M. Shroff and H. B. Keller. Stabilization of unstable procedures: the recursive projection method. SIAM Journal on Numerical Analysis, 30(4):1099–1120, 1993.

- Sidford et al. [2023] A. Sidford, M. Wang, X. Wu, and Y. Ye. Variance reduced value iteration and faster algorithms for solving Markov decision processes. Naval Research Logistics, 70(5):423–442, 2023.

- Soto and Rojo [2006] R. L. Soto and O. Rojo. Applications of a Brauer theorem in the nonnegative inverse eigenvalue problem. Linear Algebra and its Applications, 416(2-3):844–856, 2006.

- Sun et al. [2021] K. Sun, Y. Wang, Y. Liu, B. Pan, S. Jui, B. Jiang, L. Kong, et al. Damped Anderson mixing for deep reinforcement learning: Acceleration, convergence, and stabilization. Neural Information Processing Systems, 2021.

- Sutton [1988] R. S. Sutton. Learning to predict by the methods of temporal differences. Machine Learning, 1988.

- Sutton and Barto [2018] R. S. Sutton and A. G. Barto. Reinforcement Learning: An Introduction. The MIT Press, second edition, 2018.

- Szepesvári [1997] C. Szepesvári. The asymptotic convergence-rate of Q-learning. Neural Information Processing Systems, 1997.

- Szepesvári [2010] C. Szepesvári. Algorithms for Reinforcement Learning. Morgan Claypool Publishers, 2010.

- Tsitsiklis and V. Roy [1997] J. N. Tsitsiklis and B. Van Roy. An analysis of temporal difference learning with function approximation. IEEE Transactions on Automatic Control, 1997.

- Vieillard et al. [2020] N. Vieillard, B. Scherrer, O. Pietquin, and M. Geist. Momentum in reinforcement learning. International Conference on Artificial Intelligence and Statistics, 2020.

- Wainwright [2019] M. J. Wainwright. Stochastic approximation with cone-contractive operators: Sharp ℓ∞-bounds for Q-learning. arXiv:1905.06265, 2019.

- Watkins [1989] C. J. C. H. Watkins. Learning from Delayed Rewards. PhD thesis, King’s College, University of Cambridge, 1989.

- White [1963] D. J. White. Dynamic programming, Markov chains, and the method of successive approximations. J. Math. Anal. Appl, 6(3):373–376, 1963.

- Wingate et al. [2005] D. Wingate, K. D. Seppi, and S. Mahadevan. Prioritization methods for accelerating MDP solvers. Journal of Machine Learning Research, 2005.

Appendix

7.1. Prior works

Acceleration in RL.

Prioritized sweeping and its several variants [Moore and Atkeson, 1993, Peng and Williams, 1993, McMahan and Gordon, 2005, Wingate et al., 2005, Andre et al., 1997, Dai et al., 2011] specify the order of asynchronous value function updates, which may lead to accelerated convergence to the true value function. Speedy Q-learning [Azar et al., 2011] changes the update rule of Q-learning and employs aggressive learning rates to accelerate convergence. Sidford et al. [2023] accelerate the computation of by sampling it with variance reduction techniques. Recently, a growing body of research has applied acceleration techniques from other areas of applied mathematics to planning and RL: Geist and Scherrer [2018], Sun et al. [2021], Ermis and Yang [2020], Park et al. [2022], Ermis et al. [2021], and Shi et al. [2019] apply Anderson acceleration of fixed-point iterations; Lee and Ryu [2023] apply anchor acceleration of minimax optimization; Vieillard et al. [2020], Goyal and Grand-Clément [2022], Grand-Clément [2021], Bowen et al. [2021], and Akian et al. [2022] apply Nesterov acceleration of convex optimization; and Farahmand and Ghavamzadeh [2021] apply ideas inspired by PID controllers in control theory.

Matrix deflation.

Deflation techniques were first developed for eigenvalue computation [Meirovitch, 1980, Saad, 2011, Golub and Van Loan, 2013]. Matrix deflation, which eliminates the top eigenvalues of a given matrix while leaving the rest of the eigenvalues untouched, has been applied to improve the convergence of various algorithms, such as the conjugate gradient algorithm [Saad et al., 2000], principal component analysis [Mackey, 2008], the generalized minimal residual method [Morgan, 1995], and nonlinear fixed-point iteration [Shroff and Keller, 1993].
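As a small illustration of what deflation does to a transition matrix, the following sketch applies a rank-1 Wielandt-type deflation to a random row-stochastic matrix and compares the eigenvalue moduli before and after. The function name `wielandt_deflate`, the uniform weight vector `v`, and the random 5-state matrix are assumptions made for this example only, not objects defined in the paper.

```python
import numpy as np

def wielandt_deflate(P, v):
    """Return P - 1 v^T for a row-stochastic P and a weight vector v with sum(v) = 1."""
    ones = np.ones(P.shape[0])
    if not np.isclose(v @ ones, 1.0):
        raise ValueError("v must sum to 1 so that the eigenvalue 1 is moved to 0")
    return P - np.outer(ones, v)

# Hypothetical 5-state chain with random transition probabilities.
rng = np.random.default_rng(0)
P = rng.random((5, 5))
P /= P.sum(axis=1, keepdims=True)  # normalize rows so that P is stochastic

v = np.full(5, 0.2)                # any vector summing to 1 works here
P_deflated = wielandt_deflate(P, v)

# Eigenvalue moduli in decreasing order, before and after deflation.
moduli = np.sort(np.abs(np.linalg.eigvals(P)))[::-1]
moduli_deflated = np.sort(np.abs(np.linalg.eigvals(P_deflated)))[::-1]
print(moduli)
print(moduli_deflated)
```

By Brauer's theorem, the eigenvalue 1 of `P` is replaced by 0 and the remaining eigenvalues are unchanged, so the largest modulus of `P_deflated` is the second-largest modulus of `P`.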

Some prior work in RL has explored subtracting constants from the iterates of VI, resulting in methods that resemble our rank-1 DDVI. For discounted MDPs, Devraj and Meyn [2021] propose relative Q-learning, which can roughly be seen as the Q-learning version of rank-1 DDVI for Control, and Bertsekas [1995] proposes an extrapolation method for VI, which can be seen as rank-1 DDVI with a Schur deflation matrix for PE. White [1963] introduced relative value iteration in the context of undiscounted MDPs with average reward, and several variants have been proposed [Gupta et al., 2015, Sharma et al., 2020].
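The effect of subtracting a rank-1 term can be sketched for policy evaluation as follows. The sketch assumes the splitting form (I - γE) V_new = r + γ (P - E) V with E = 1 vᵀ and a unit step size, and it uses the Sherman-Morrison formula to apply the inverse. The random 20-state chain, the uniform `v`, and all function names are hypothetical choices for illustration.

```python
import numpy as np

def vi_step(V, P, r, gamma):
    """Standard policy-evaluation VI step: V <- r + gamma P V."""
    return r + gamma * (P @ V)

def deflated_step(V, P, r, gamma, v):
    """Solve (I - gamma E) V_new = r + gamma (P - E) V with E = 1 v^T, sum(v) = 1."""
    # (P - 1 v^T) V = P V - (v^T V) 1; the scalar v @ V broadcasts over states.
    rhs = r + gamma * (P @ V - v @ V)
    # Sherman-Morrison: (I - gamma 1 v^T)^{-1} = I + gamma / (1 - gamma) 1 v^T.
    return rhs + gamma / (1.0 - gamma) * (v @ rhs)

rng = np.random.default_rng(0)
n, gamma = 20, 0.99
P = rng.random((n, n))
P /= P.sum(axis=1, keepdims=True)
r = rng.random(n)
v = np.full(n, 1.0 / n)
V_true = np.linalg.solve(np.eye(n) - gamma * P, r)

V_vi = np.zeros(n)
V_defl = np.zeros(n)
for _ in range(200):
    V_vi = vi_step(V_vi, P, r, gamma)
    V_defl = deflated_step(V_defl, P, r, gamma, v)

err_vi = np.max(np.abs(V_vi - V_true))
err_defl = np.max(np.abs(V_defl - V_true))
print(err_vi, err_defl)
```

Both iterations share the fixed point of V = r + γ P V. In this example the deflated iteration contracts at a rate governed by γ times the second-largest eigenvalue modulus of `P` instead of γ, which is why its error is far smaller after the same number of steps.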

Matrix splitting of value iteration.

Matrix splitting has been studied in the RL literature to obtain an acceleration. Hastings [1968] and Kushner and Kleinman [1968] first suggested Gauss-Seidel iteration for computing the value function. Kushner and Kleinman [1971] and Reetz [1973] applied Successive Over-Relaxation to VI and generalized Jacobi and Gauss-Seidel iteration. Porteus [1975] proposed several transformations of MDPs that can be seen as Gauss-Seidel variants of VI. Rakhsha et al. [2022] used matrix splitting with the approximate dynamics of the environment and extended it to nonlinear operator splitting. Bacon and Precup [2016] and Bacon [2018] analyzed planning with options through the matrix splitting perspective.
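To make the splitting viewpoint concrete, the sketch below contrasts a Jacobi-style sweep (standard VI for policy evaluation) with a Gauss-Seidel sweep that reuses already-updated entries. The random 10-state chain, the nonnegative rewards, and the function names are assumptions for this illustration.

```python
import numpy as np

def jacobi_sweep(V, P, r, gamma):
    """Standard VI sweep: every state is updated from the old iterate."""
    return r + gamma * (P @ V)

def gauss_seidel_sweep(V, P, r, gamma):
    """In-place sweep: state i already sees the new values of states 0..i-1."""
    V = V.copy()
    for i in range(len(V)):
        V[i] = r[i] + gamma * (P[i] @ V)
    return V

rng = np.random.default_rng(1)
n, gamma = 10, 0.95
P = rng.random((n, n))
P /= P.sum(axis=1, keepdims=True)
r = rng.random(n)                      # nonnegative rewards
V_true = np.linalg.solve(np.eye(n) - gamma * P, r)

V_j = np.zeros(n)
V_gs = np.zeros(n)
for _ in range(30):
    V_j = jacobi_sweep(V_j, P, r, gamma)
    V_gs = gauss_seidel_sweep(V_gs, P, r, gamma)

err_jacobi = np.max(np.abs(V_j - V_true))
err_gs = np.max(np.abs(V_gs - V_true))
print(err_jacobi, err_gs)
```

With nonnegative rewards and a zero initial iterate, both sequences increase monotonically toward the value function, and the Gauss-Seidel iterate dominates the Jacobi iterate componentwise, so its error is never larger after the same number of sweeps.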

Convergence analysis of TD learning.

Jaakkola et al. [1993] first proved the convergence of TD learning using the stochastic approximation (SA) technique. Borkar and Meyn [2000] and Borkar [1998] suggested an ODE-based framework to establish asymptotic convergence of SA, including TD learning with asynchronous updates. Lakshminarayanan and Szepesvari [2018] study linear SA under i.i.d. noise with respect to mean square error, and Chen et al. [2020] study the asymptotic convergence rate of linear SA under Markovian noise. Finite-time analysis of TD learning was first provided by Dalal et al. [2018] and extended to the Markovian noise setting by Bhandari et al. [2018]. Leveraging Lyapunov theory, Chen et al. [2023] establish a finite-time analysis of Markovian SA with an explicit bound.

8. Proof of Theoretical Results

8.1. Proof of Theorem 3.1

By definition of DDVI (2) and fixed point , we have

where is a rank- deflation matrix of satisfying the conditions of Facts 1, 2, or 3. This implies that

for some constant .

First, suppose satisfies Facts 1 or 2. Let and . Define for some function . Then are matrices and . By Jordan decomposition, where is Jordan matrix with for . Let be submatrix of satisfying for . Then, by condition of Theorem 3.1, and -th column of is for . By simple calculation, we get and .

Since

we get

Then,

where is -th unit vector and , and is an upper triangular matrix with diagonal entries .

Now suppose satisfies Fact 3. Similarly, let . Then, . By Schur decomposition, where is an upper triangular matrix with for , and is a unitary matrix. By simple calculation, we have where is submatrix of such that for and .

Since

we get

Then,

where is an upper triangular matrix with diagonal entries , satisfying . By simple calculation, we know that is an upper triangular matrix with diagonal entries .

Therefore, by spectral analysis, we conclude that

as , where

8.2. Proof of Corollary 3.2

8.3. Proof of Theorem 4.1

By definition of DDVI for Control (3),

where . Let . Note that

Thus,

where and is optimal policy. This implies that

and

Then, there exist such that

for . Define for all and . Then satisfies

Thus, we get

and this implies that

Then, we have

since is stochastic matrix with .

Suppose there exists a unique optimal policy . Then, if (non-deflated) VI generates for , there exists such that for . Since DDVI for Control generates the same greedy policies as VI for Control, DDVI for Control also generates the greedy policy for iterations. Therefore, we get

for and some constant .

8.4. Proof of Theorem 5.1

Consider the following stochastic approximation algorithm

| (5) |

for , where , , is uniformly Lipschitz, and are i.i.d. random variables. Let , , , and . Then the following proposition holds.

Proposition 8.1.

[Borkar and Meyn, 2000, Theorem 2.2 and 2.5] If (i) , (ii) is a martingale difference sequence , (iii) for some constant , (iv) has the origin as an asymptotically stable equilibrium, and (v) has a unique globally asymptotically stable equilibrium , then of (5) converges to almost surely, and furthermore, of

| (6) |

for where is a random variable taking values in , satisfying for some constant , also converges to almost surely.

In (6), and are -th coordinate of and , respectively. (6) can be interpreted as asynchronous version of (5), and we note that Proposition 8.1 is a simplified version with stronger conditions of Theorem 2.2 and 2.5 of Borkar and Meyn [2000].
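The relation between a synchronous stochastic approximation recursion and its asynchronous, coordinate-wise counterpart can be sketched on a toy linear problem. Everything below is an assumption chosen for illustration and is not the DDTD recursion: the drift h(x) = b - A x with a positive definite matrix `A`, unit-variance Gaussian noise, step sizes 1/(k+1), and per-coordinate visit counters for the asynchronous step sizes.

```python
import numpy as np

A = np.array([[3.0, 1.0], [1.0, 2.0]])   # positive definite, so -A is Hurwitz
b = np.array([1.0, -1.0])
x_star = np.linalg.solve(A, b)           # unique equilibrium of dx/dt = h(x)

def h(x):
    """Mean-field drift of the toy recursion."""
    return b - A @ x

def run_sa(num_steps, asynchronous, seed=0):
    rng = np.random.default_rng(seed)
    x = np.zeros(2)
    visits = np.zeros(2, dtype=int)      # local clocks for the asynchronous case
    for k in range(num_steps):
        noisy = h(x) + rng.standard_normal(2)   # drift plus zero-mean noise
        if asynchronous:
            i = rng.integers(2)                  # only one coordinate is updated
            x[i] += noisy[i] / (visits[i] + 1)
            visits[i] += 1
        else:
            x += noisy / (k + 1)
    return x

x_sync = run_sa(20000, asynchronous=False)
x_async = run_sa(20000, asynchronous=True)
print(x_star, x_sync, x_async)
```

In this toy setting both variants settle near the same equilibrium `x_star`, which is the qualitative behavior that the proposition above guarantees under its conditions.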

Recall that DDTD (4) with is

| (7) |

We will first show that and this directly implies that . To apply Proposition 8.1, consider

where , such that and are i.i.d random variables, and . Then, , , and .

We now check the conditions. First, since implies , is uniformly Lipschitz.

for all . This implies that is a martingale difference sequence. Also, for constant .

By definition, . In Section 8.1, we showed that . Hence, . Since for all , the real parts of all eigenvalues are negative. This implies that , has the origin as an asymptotically stable equilibrium and has a unique globally asymptotically stable equilibrium .

8.5. Proof of Theorem 5.2

Consider the following linear stochastic approximation algorithm

| (8) |

for , where , are random variables, , , and , for and . Then the following proposition holds.

Proposition 8.2.

[Chen et al., 2020, Theorem 2.5 and 2.7] If (i) for , (ii) is ergodic (aperiodic and irreducible) Markov process with unique invariant measure , (iii) and , (iv) is Hurwitz, (v) for all spec(), then where .

We note that Proposition 8.2 is also a simplified version with stronger conditions of Theorem 2.5 and 2.7 of Chen et al. [2020].

Since are i.i.d. random variables, is a Markov process. Let and be the transition matrix of . Then . Since for any distribution , is the unique invariant measure. Hence, is irreducible and aperiodic.

and . Then, .

8.6. Non-asymptotic convergence analysis of DDTD

Consider the following stochastic approximation algorithm

| (9) |

for , where , , is a Markov chain with unique stationary distribution and transition matrix . Let and . Define the mixing time as where stands for the total variation distance. Let be the fixed point of . Then the following proposition holds.

Proposition 8.3.

[Chen et al., 2023, Theorem 1] Suppose (i) is an aperiodic and irreducible Markov chain, (ii) is a -contraction with respect to some , (iii) is -uniformly Lipschitz with respect to and for any , (iv) is a martingale difference sequence, and (v) for some . Then, if ,

for all , and if ,

for all , where is nonincreasing sequence satisfying for all , , , , , for chosen norm such that is -smooth function with respect to , , , and such that is chosen satisfying .

DDTD (4) with is equivalent to

where , and . As we showed in Section 8.5, is an irreducible and aperiodic Markov chain with an invariant measure . Furthermore, the mixing time of is .

Let be the eigenvalues of sorted in decreasing order of magnitude with ties broken arbitrarily. Let . Since the spectral radius is the infimum over all induced matrix norms, for any , there exists such that where .

, and implies that .

. and has fixed point since and share same fixed point.

Thus, by Proposition 8.3 and , we have the following non-asymptotic convergence results for DDTD.

Theorem 8.4 (DDTD with constant stepsize).

Let be the eigenvalues of sorted in decreasing order of magnitude with ties broken arbitrarily. Let . For any , there exist and such that for and , DDTD exhibits the rate

for all where .

Theorem 8.5 (DDTD with diminishing stepsize).

Let be the eigenvalues of sorted in decreasing order of magnitude with ties broken arbitrarily. For any , there exist and such that for and , DDTD exhibits the rate

for all where .

9. DDVI with QR Iteration, AutoPI and AutoQR

9.1. DDVI with QR Iteration

Recall that the QR iteration approximates top Schur vectors. Algorithm 2 uses the QR Iteration to construct the rank- Schur deflation matrix and performs DDVI. Compared to the standard VI, rank- DDVI requires additional computation for the QR iteration.
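A minimal sketch of how a QR (orthogonal) iteration could supply approximate top Schur vectors and a corresponding deflation matrix is given below. It assumes a deflation matrix of the form Σ λ_i u_i u_iᵀ built from approximate Schur vectors u_i and the diagonal of Uᵀ P U, and it uses a small birth-death chain with real, distinct eigenvalues so that the iteration converges. The chain, the function names, and the choice of two deflated directions are hypothetical; this is not a transcription of Algorithm 2.

```python
import numpy as np

def birth_death_chain(n, p_right=0.3, p_left=0.2):
    """Non-symmetric tridiagonal chain; the leftover mass stays in place."""
    P = np.zeros((n, n))
    for i in range(n):
        if i + 1 < n:
            P[i, i + 1] = p_right
        if i - 1 >= 0:
            P[i, i - 1] = p_left
        P[i, i] = 1.0 - P[i].sum()
    return P

def qr_iteration(P, s, num_iters=600, seed=0):
    """Orthogonal iteration: returns an n x s orthonormal basis U."""
    rng = np.random.default_rng(seed)
    U, _ = np.linalg.qr(rng.standard_normal((P.shape[0], s)))
    for _ in range(num_iters):
        U, _ = np.linalg.qr(P @ U)
    return U

def schur_deflation_matrix(P, U):
    """E = sum_i lam_i u_i u_i^T with lam_i = (U^T P U)_ii."""
    lam = np.diag(U.T @ P @ U)
    return (U * lam) @ U.T

n, s = 8, 2
P = birth_death_chain(n)
U = qr_iteration(P, s)
E = schur_deflation_matrix(P, U)

moduli = np.sort(np.abs(np.linalg.eigvals(P)))[::-1]
rho_deflated = np.max(np.abs(np.linalg.eigvals(P - E)))
print(moduli[: s + 1], rho_deflated)
```

In this example the spectral radius of `P - E` matches the (s+1)-th largest eigenvalue modulus of `P`. The extra cost relative to standard VI is the `num_iters` products `P @ U` and the accompanying thin QR factorizations.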

9.2. DDVI with AutoPI and AutoQR

We first introduce DDVI with AutoPI for . If , we have

by (2), and

is the iterates of a power iteration with respect to . In Section 8.1, we show that

For , we expect that the spectral radius of matrix is and and have the same top eigenvector. By the same argument as in Section 4.2, we can recover the -th eigenvector of by [Bru et al., 2012, Proposition 5]. Leveraging this observation, we formalize this approach in Algorithm 3.
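The observation that successive differences of the iterates behave like a power iteration can be checked numerically. The sketch below runs a rank-1 deflated policy-evaluation iteration (assuming the splitting form with E = 1 vᵀ and a unit step size) on a lazy random walk on a path, whose eigenvalues are real and known, and estimates the dominant eigenvalue of the iteration matrix from two consecutive differences. The chain, the reward ramp, and all names are hypothetical; the additional step that maps the recovered direction back to an eigenvector of the transition matrix is not shown.

```python
import numpy as np

def lazy_path_chain(n):
    """Symmetric lazy walk on a path; eigenvalues are (1 + cos(k pi / n)) / 2."""
    P = 0.5 * np.eye(n)
    for i in range(n):
        P[i, max(i - 1, 0)] += 0.25
        P[i, min(i + 1, n - 1)] += 0.25
    return P

def deflated_step(V, P, r, gamma, v):
    """Solve (I - gamma E) V_new = r + gamma (P - E) V with E = 1 v^T, sum(v) = 1."""
    rhs = r + gamma * (P @ V - v @ V)
    return rhs + gamma / (1.0 - gamma) * (v @ rhs)

n, gamma = 6, 0.9
P = lazy_path_chain(n)
r = np.arange(n, dtype=float)
v = np.full(n, 1.0 / n)

V_prev = np.zeros(n)
V = deflated_step(V_prev, P, r, gamma, v)
for _ in range(80):
    V_next = deflated_step(V, P, r, gamma, v)
    d_old, d_new = V - V_prev, V_next - V   # consecutive differences
    V_prev, V = V, V_next

# Ratio of consecutive differences estimates the dominant eigenvalue.
rate_estimate = (d_new @ d_old) / (d_old @ d_old)
direction = d_new / np.linalg.norm(d_new)   # approximate dominant eigenvector

lam2 = np.sort(np.linalg.eigvalsh(P))[-2]   # second-largest eigenvalue of P
print(rate_estimate, gamma * lam2)
```

Here the estimated rate agrees with γ times the second-largest eigenvalue of `P`, which is the quantity a power iteration on the deflated iteration matrix would converge to.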

Now, we introduce DDVI with AutoQR for .

Assume the top eigenvalues of are distinct, i.e., . Let be a rank- deflation matrix of as in Fact 3. If , we again have

and

is the iterates of a power iteration with respect to . In Section 8.1, we show that

For , we expect that the spectral radius of matrix is and and have the same top eigenvector. By the same argument as in Section 4.2, for large , we expect where is the top eigenvector of . Thus, by orthonormalizing against , we can obtain the top -th Schur vector of [Saad, 2011, Section 4.2.4]. Leveraging this observation, we propose DDVI with Automatic QR Iteration (AutoQR) and formalize this in Algorithm 4.

10. Environments

We introduce the four environments and policies used in the experiments.

Cliffwalk

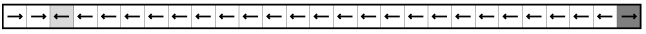

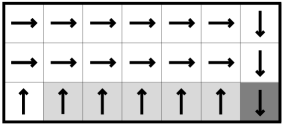

The Cliffwalk is a grid world. The top-left corner is the initial state, the top-right corner is the goal state with reward of , and the other states in the first row are terminal states with reward of . There is a penalty of in all other states. The agent has four actions: UP(), RIGHT(), DOWN(), and LEFT(). Each action has chance to successfully move, but with probability of one of the other three directions is randomly chosen and the agent moves in that direction. If the agent attempts to get out of the boundary, it will stay in place. The optimal and non-optimal policies of Cliffwalk used in the experiments are as follows:

Optimal policy:

Non optimal policy:

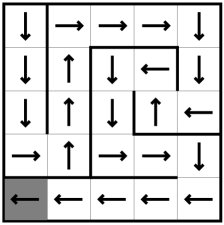
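The slip dynamics described for Cliffwalk can be sketched as a next-state distribution on a grid. The grid size (3 by 7) and the success probability (0.9) used below are placeholder values chosen for illustration, since the exact values are not reproduced here, and terminal states and rewards are not modeled.

```python
ACTIONS = {"UP": (-1, 0), "RIGHT": (0, 1), "DOWN": (1, 0), "LEFT": (0, -1)}

def move(state, action, n_rows, n_cols):
    """Deterministic move; the agent stays in place if it would leave the grid."""
    d_row, d_col = ACTIONS[action]
    row, col = state[0] + d_row, state[1] + d_col
    if 0 <= row < n_rows and 0 <= col < n_cols:
        return (row, col)
    return state

def next_state_distribution(state, action, n_rows, n_cols, p_success):
    """Intended move with p_success; otherwise one of the other three directions."""
    p_other = (1.0 - p_success) / (len(ACTIONS) - 1)
    dist = {}
    for candidate in ACTIONS:
        prob = p_success if candidate == action else p_other
        nxt = move(state, candidate, n_rows, n_cols)
        dist[nxt] = dist.get(nxt, 0.0) + prob
    return dist

# Hypothetical example: pushing UP from the top-left corner of a 3 x 7 grid.
example = next_state_distribution((0, 0), "UP", n_rows=3, n_cols=7, p_success=0.9)
print(example)
```

In the example, both UP and LEFT would leave the grid, so their probability mass accumulates on the current cell, while RIGHT and DOWN each receive one third of the slip probability.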

Maze

The Maze is a grid world with walls. The top-left corner is the initial state, and the bottom-left corner is the goal state with reward of . Similar to the Cliffwalk, there is a penalty of in all other states, and the agent has four actions: UP(), RIGHT(), DOWN(), and LEFT(). Each action has chance to successfully move, but with probability of one of the other three directions is randomly chosen and the agent moves in that direction. If the agent attempts to get out of the boundary or hits a wall, it will stay in place. The optimal and non-optimal policies of Maze used in the experiments are as follows:

Optimal policy:

Non optimal policy:

Chain Walk

We use the Chain Walk environment, as described by Farahmand and Ghavamzadeh [2021], which is similar to the formulation by Lagoudakis and Parr [2003]. Chain Walk is parametrized by the tuple . It is a circular chain, where the states and are connected. The reward is state-dependent. State is the goal state with reward , state gives reward , and all other states give reward . The agent has two actions: RIGHT() and LEFT(). Each action has chance to successfully move, but with probability of the agent will stay in place, and with probability of the agent will move in the opposite direction. The optimal and non-optimal policies of Chain Walk used in the experiments are as follows:

Optimal policy:

Non optimal policy:

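The Chain Walk dynamics can be sketched as one transition matrix per action on a circular chain. The number of states (50) and the success, stay, and opposite-move probabilities (0.7, 0.1, 0.2) below are placeholder values for illustration only, and rewards are omitted.

```python
import numpy as np

def chain_walk_transitions(n_states, p_success, p_stay, p_opposite):
    """Return P with shape (2, n_states, n_states); action 0 = LEFT, 1 = RIGHT."""
    if not np.isclose(p_success + p_stay + p_opposite, 1.0):
        raise ValueError("the three outcome probabilities must sum to 1")
    P = np.zeros((2, n_states, n_states))
    for action, step in enumerate((-1, +1)):
        for s in range(n_states):
            P[action, s, (s + step) % n_states] += p_success   # intended move
            P[action, s, s] += p_stay                          # no move
            P[action, s, (s - step) % n_states] += p_opposite  # reversed move
    return P

# Hypothetical parameter values.
P = chain_walk_transitions(n_states=50, p_success=0.7, p_stay=0.1, p_opposite=0.2)
print(P.shape, P[1, 49, 0], P[0, 0, 49])
```

The modulo arithmetic implements the circular connection between the first and last states, so moving RIGHT from the last state leads to the first state and vice versa.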

Garnet

We use the Garnet environment as described by Farahmand and Ghavamzadeh [2021], Rakhsha et al. [2022], which is based on Bhatnagar et al. [2009]. Garnet is parameterized by the tuple . and are the number of states and actions, and is the branching factor of the environment, which is the number of possible next states for each state-action pair. We randomly select states without replacement and then, transition distribution is generated randomly. We select states without replacement, and for each selected , we assign state-dependent reward a uniformly sampled value in .
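A Garnet instance of the kind described above can be generated as in the following sketch. Two details are assumptions because the description leaves them open: the transition probabilities over the selected next states are drawn from a flat Dirichlet distribution, and the rewards are drawn uniformly from [0, 1]. The function name `make_garnet` is hypothetical, and the sizes match the Garnet setting used in the control experiments (100 states, 8 actions, branching factor 6, 10 non-zero rewards).

```python
import numpy as np

def make_garnet(n_states, n_actions, branching, n_rewards, seed=0):
    """Random MDP: each (state, action) pair reaches exactly `branching` next states."""
    rng = np.random.default_rng(seed)
    P = np.zeros((n_actions, n_states, n_states))
    for a in range(n_actions):
        for s in range(n_states):
            # Next states are chosen without replacement.
            next_states = rng.choice(n_states, size=branching, replace=False)
            # Assumed: flat Dirichlet weights over the chosen next states.
            P[a, s, next_states] = rng.dirichlet(np.ones(branching))
    r = np.zeros(n_states)
    rewarded = rng.choice(n_states, size=n_rewards, replace=False)
    r[rewarded] = rng.uniform(0.0, 1.0, size=n_rewards)   # assumed reward range
    return P, r

P, r = make_garnet(n_states=100, n_actions=8, branching=6, n_rewards=10)
print(P.shape, np.count_nonzero(r))
```

Averaging results over many seeds, as done in the experiments, amounts to calling the generator with different values of `seed`.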

11. Rank- DDVI for Control Experiments

In this experiment, we consider Chain Walk and Garnet. We use Garnet with 100 states, 8 actions, a branching factor of 6, and 10 non-zero rewards throughout the state space. We use normalized errors , , and , where is the number of states. For Garnet, we plot average values on the 100 Garnet instances and denote one standard error with the shaded area. Figure 7(a) and 7(b) show that rank- DDVI for Control does indeed provide an acceleration.

12. Additional DDVI Experiments and Details

In all experiments, we set DDVI’s . In experiments for Figure 1, the QR iteration is run for 600 iterations. For PID VI, we set and . In Anderson VI, we have . In Figure 2, we use 20 Garnet MDPs with branching factor , and .

We perform further comparison of convergence in Figures 8, 9, 10, 11. Figure 8 is run with Garnet environment with states and branching factor that matches the setting in [Goyal and Grand-Clément, 2022].

13. DDTD Experiments

A key part of our experimental setup is the model . To show the robustness of DDTD to model error and also its advantage over Dyna, we consider the scenario in which has some non-diminishing error. We achieve this with the same technique as [Rakhsha et al., 2022]. Assume that at each iteration , the empirical distribution of the next state from is , which is updated with every sample. For some hyperparameter , we set the approximate dynamics as

| (10) |

where for is the uniform distribution over . The hyperparameter controls the amount of error introduced in . If , we have which becomes arbitrarily accurate with more samples. With larger , will be smoothed towards the uniform distribution more, which leads to a larger model error. In Dyna, we keep the empirical average of past rewards for each in and perform planning with at each iteration to calculate the value function, that is . In Figure 3, we have shown the result for . In Figure 12 and Figure 13 we show the results for in both Maze and Chain Walk environments.
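The smoothing of the empirical model toward a uniform distribution can be sketched as a convex combination controlled by the hyperparameter. The sketch assumes that the uniform distribution ranges over all states and that unvisited state-action pairs default to the uniform distribution; the support set used in the experiments may differ, and the names `smoothed_model` and `theta` are hypothetical.

```python
import numpy as np

def smoothed_model(counts, theta):
    """Mix the empirical next-state distribution with a uniform distribution.

    counts[..., x'] holds how often next state x' was observed for each
    state-action pair; theta in [0, 1] controls the injected model error.
    """
    n_states = counts.shape[-1]
    totals = counts.sum(axis=-1, keepdims=True)
    uniform = np.full_like(counts, 1.0 / n_states, dtype=float)
    # Empirical distribution; unvisited pairs fall back to uniform (assumption).
    p_mle = np.where(totals > 0, counts / np.maximum(totals, 1), uniform)
    return (1.0 - theta) * p_mle + theta * uniform

counts = np.array([[3.0, 1.0, 0.0],     # a pair visited four times
                   [0.0, 0.0, 0.0]])    # a pair never visited
P_exact = smoothed_model(counts, theta=0.0)
P_smooth = smoothed_model(counts, theta=0.5)
print(P_exact)
print(P_smooth)
```

With `theta=0.0` the model reduces to the empirical distribution, which becomes more accurate with more samples, while larger values keep a persistent bias toward the uniform distribution regardless of the sample size.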

As we see in Figure 12 and Figure 13, DDTD converges faster than conventional TD. In Maze, this is only achieved with higher-rank versions of DDTD, but in Chain Walk, even rank-1 DDTD significantly accelerates learning. Also note that, unlike Dyna, DDTD converges to the true value function despite the model error. We observe that the impact of model error on DDTD is very mild. In some cases, such as rank-3 DDTD in Maze, between and , we observe slightly slower convergence with higher model error. The hyperparameters of TD Learning and DDTD are given in Tables 1 and 2.

| | rank-1 DDTD | rank-2 DDTD | rank-3 DDTD | rank-4 DDTD | TD |
|---|---|---|---|---|---|
| (learning rate) | 0.3 | | | | |
| - | | | | | |
| | 10 | 10 | 10 | 10 | - |

| | rank-1 DDTD | rank-2 DDTD | rank-3 DDTD | rank-4 DDTD | TD |
|---|---|---|---|---|---|
| learning rate () | 1 | 1 | 1 | 1 | 1 |
| - | | | | | |
| | 10 | 10 | 10 | 10 | - |