Detection and Mitigation of

Byzantine Attacks in Distributed Training

Abstract

A plethora of modern machine learning tasks require the utilization of large-scale distributed clusters as a critical component of the training pipeline. However, abnormal Byzantine behavior of the worker nodes can derail the training and compromise the quality of the inference. Such behavior can be attributed to unintentional system malfunctions or orchestrated attacks; as a result, some nodes may return arbitrary results to the parameter server (PS) that coordinates the training. Recent work considers a wide range of attack models and has explored robust aggregation and/or computational redundancy to correct the distorted gradients.

In this work, we consider attack models ranging from strong ones: omniscient adversaries with full knowledge of the defense protocol that can change from iteration to iteration to weak ones: randomly chosen adversaries with limited collusion abilities which only change every few iterations at a time. Our algorithms rely on redundant task assignments coupled with detection of adversarial behavior. We also show the convergence of our method to the optimal point under common assumptions and settings considered in literature. For strong attacks, we demonstrate a reduction in the fraction of distorted gradients ranging from 16%-99% as compared to the prior state-of-the-art. Our top-1 classification accuracy results on the CIFAR-10 data set demonstrate 25% advantage in accuracy (averaged over strong and weak scenarios) under the most sophisticated attacks compared to state-of-the-art methods.

Index Terms:

Byzantine resilience, distributed training, gradient descent, deep learning, optimization, security.I Introduction and Background

Increasingly complex machine learning models with large data set sizes are nowadays routinely trained on distributed clusters. A typical setup consists of a single central machine (parameter server or PS) and multiple worker machines. The PS owns the data set, assigns gradient tasks to workers, and coordinates the protocol. The workers then compute gradients of the loss function with respect to the model parameters. These computations are returned to the PS, which aggregates them, updates the model, and maintains the global copy of it. The new copy is communicated back to the workers. Multiple iterations of this process are performed until convergence has been achieved. PyTorch [1], TensorFlow [2], MXNet [3], CNTK [4] and other frameworks support this architecture.

These setups offer significant speedup benefits and enable training challenging, large-scale models. Nevertheless, they are vulnerable to misbehavior by the worker nodes, i.e., when a subset of them returns erroneous computations to the PS, either inadvertently or on purpose. This “Byzantine” behavior can be attributed to a wide range of reasons. The principal causes of inadvertent errors are hardware and software malfunctions (e.g., [5]). Reference [6] exposes the vulnerability of neural networks to such failures and identifies weight parameters that could maximize accuracy degradation. The gradients may also be distorted in an adversarial manner. As ML problems demand more resources, many jobs are often outsourced to external commodity servers (cloud) whose security cannot be guaranteed. Thus, an adversary may be able to gain control of some devices and fool the model. The distorted gradients can derail the optimization and lead to low test accuracy or slow convergence.

Achieving robustness in the presence of Byzantine node behavior and devising training algorithms that can efficiently aggregate the gradients has inspired several works [7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18]. The first idea is to filter the corrupted computations from the training without attempting to identify the Byzantine workers. Specifically, many existing papers use majority voting and median-based defenses [7, 8, 9, 10, 11, 12, 13] for this purpose. In addition, several works also operate by replicating the gradient tasks [14, 15, 16, 17, 18] allowing for consistency checks across the cluster. The second idea for mitigating Byzantine behavior involves detecting the corrupted devices and subsequently ignoring their calculations [19, 20, 21], in some instances paired with redundancy [17]. In this work, we propose a technique that combines the usage of redundant tasks, filtering, and detection of Byzantine workers. Our work is applicable to a broad range of assumptions on the Byzantine behavior.

There is much variability in the adversarial assumptions that prior work considers. For instance, prior work differs in the maximum number of adversaries considered, their ability to collude, their possession of knowledge involving the data assignment and the protocol, and whether the adversarial machines are chosen at random or systematically. We will initially examine our methods under strong adversarial models similar to those in prior work [22, 14, 23, 11, 10, 24, 25]. We will then extend our algorithms to tackle weaker failures that are not necessarily adversarial but rather common in commodity machines [5, 6, 26]. We expand on related work in the upcoming Section II.

II Related Work and Summary of Contributions

II-A Related Work

All work in this area (including ours) assumes a reliable parameter server that possesses the global data set and can assign specific subsets of it to workers. Robust aggregation methods have also been proposed for federated learning [27, 28]; however, as we make no assumption of privacy, our work, as well as the methods we compare with do not apply to federated learning.

One category of defenses splits the data set into batches and assigns one to each worker with the ultimate goal of suitably aggregating the results from the workers. Early work in the area [12] established that no linear aggregation method (such as averaging) can be robust even to a single adversarial worker. This has inspired alternative methods collectively known as robust aggregation. Majority voting, geometric median, and squared-distance-based techniques fall into this category [8, 9, 10, 11, 12, 13].

One of the most popular robust aggregation techniques is known as mean-around-median or trimmed mean [11, 10]. It handles each dimension of the gradient separately and returns the average of a subset of the values that are closest to the median. Auror [25] is a variant of trimmed mean which partitions the values of each dimension into two clusters using k-means and discards the smaller cluster if the distance between the two exceeds a threshold; the values of the larger cluster are then averaged. signSGD in [26] transmits only the sign of the gradient vectors from the workers to the PS and exploits majority voting to decide the overall update; this practice reduces the communication time and denies any individual worker too much effect on the update.

Krum in [12] chooses a single honest worker for the next model update, discarding the data from the rest of them. The chosen gradient is the one closest to its nearest neighbors. In later work [24], the authors recognized that Krum may converge to an ineffectual model in the landscape of non-convex high dimensional problems, such as in neural networks. They showed that a large adversarial change to a single parameter with a minor impact on the norm can make the model ineffective. In the same work, they present an alternative defense called Bulyan to oppose such attacks. The algorithm works in two stages. In the first part, a selection set of potentially benign values is iteratively constructed. In the second part, a variant of trimmed mean is applied to the selection set. Nevertheless, if machines are used, Bulyan is designed to defend only up to fraction of corrupted workers.

Another category of defenses is based on redundancy and seeks resilience to Byzantines by replicating the gradient computations such that each of them is processed by more than one machine [15, 16, 17, 18]. Even though this approach requires more computation load, it comes with stronger guarantees of correcting the erroneous gradients. Existing redundancy-based techniques are sometimes combined with robust aggregation [16]. The main drawback of recent work in this category is that the training can be easily disrupted by a powerful, omniscient adversary that has full control of a subset of the nodes and can mount judicious attacks [14].

Redundancy-based method DRACO in [17] uses a simple Fractional Repetition Code (FRC) (that operates by grouping workers) and the cyclic repetition code introduced in [29, 30] to ensure robustness; majority voting and Fourier decoders try to alleviate the adversarial effects. Their work ensures exact recovery (as if the system had no adversaries) with Byzantine nodes, when each task is replicated times; the bound is information-theoretic minimum, and DRACO is not applicable if it is violated. Nonetheless, this requirement is very restrictive for the typical assumption that up to half of the workers can be Byzantine.

DETOX in [16] extends DRACO and uses a simple grouping strategy to assign the gradients. It performs multiple stages of aggregation to gradually filter the adversarial values. The first stage involves majority voting, while the following stages perform robust aggregation. Unlike DRACO, the authors do not seek exact recovery; hence the minimum requirement in is small. However, the theoretical resilience guarantees that DETOX provides depend heavily on a “random choice” of the adversarial workers. In fact, we have crafted simple attacks [14] to make this aggregator fail under a more careful choice of adversaries. Furthermore, their theoretical results hold when the fraction of Byzantines is less than .

A third category focuses on ranking and/or detection [19, 17, 20]; the objective is to rank workers using a reputation score to identify suspicious machines and exclude them or give them lower weight in the model update. This is achieved by means of computing reputation scores for each machine or by using ideas from coding theory to assign tasks to workers (encoding) and to detect the adversaries (decoding). Zeno in [20] ranks each worker using a score that depends on the estimated loss and the magnitude of the update. Zeno requires strict assumptions on the smoothness of the loss function and the gradient estimates’ variance to tolerate an adversarial majority in the cluster. Similarly, ByGARS [19] computes reputation scores for the nodes based on an auxiliary data set; these scores are used to weigh the contribution of each gradient to the model update.

II-B Contributions

In this paper, we propose novel techniques which combine redundancy, detection, and robust aggregation for Byzantine resilience under a range of attack models and assumptions on the dataset/loss function.

Our first scheme Aspis is a subset-based assignment method for allocating tasks to workers in strong adversarial settings: up to omniscient, colluding adversaries that can change at each iteration. We also consider weaker attacks: adversaries chosen randomly with limited collusion abilities, changing only after a few iterations at a time. It is conceivable that Aspis should continue to perform well with weaker attacks. However, as discussed later (Section V-B), Aspis requires large batch sizes (for the mini-batch SGD). It is well-recognized that large batch sizes often cause performance degradation in training [31]. Accordingly, for this class of attacks, we present a different algorithm called Aspis+ that can work with much smaller batch sizes. Both Aspis and Aspis+ use combinatorial ideas to assign the tasks to the worker nodes. Our work builds on our initial work in [22] and makes the following contributions.

-

•

We demonstrate a worst-case upper bound (under any possible attack) on the fraction of corrupted gradients when Aspis is used. Even in this adverse scenario, our method enjoys a reduction in the fraction of corrupted gradients of more than 90% compared with DETOX [16]. A weaker variation of this attack is where the adversaries do not collude and act randomly. In this case, we demonstrate that the Aspis protocol allows for detecting all the adversaries. In both scenarios, we provide theoretical guarantees on the fraction of corrupted gradients.

-

•

In the setting where the dataset is distributed i.i.d. and the loss function is strongly convex and other technical conditions hold, we demonstrate a proof of convergence for Aspis. We demonstrate numerical results on the linear regression problem in this part; these show the advantage of Aspis over competing methods such as DETOX.

-

•

For weaker attacks (discussed above), our experimental results indicate that Aspis+ detects all adversaries within approximately 5 iterations.

-

•

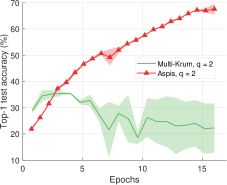

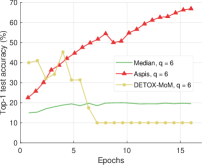

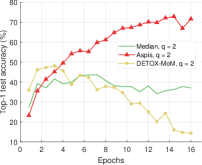

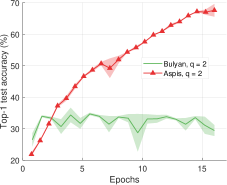

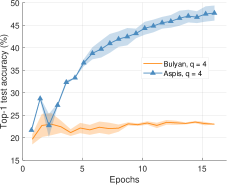

We present top-1 classification accuracy experiments on the CIFAR-10 [32] data set for various gradient distortion attacks coupled with choice/behavior patterns of the adversarial nodes. Under the most sophisticated distortion methods [23], the performance gap between Aspis/Aspis+ and other state-of-the-art methods is substantial, e.g., for Aspis it is 43% in the strong scenario (cf. Figure 7(a)), and for Aspis+ 19% in the weak scenario (cf. Figure 13).

III Distributed Training Formulation

Assume a loss function for the sample of the dataset where is the set of parameters of the model.111The paper’s heavily-used notation is summarized in Appendix Table II. The objective of distributed training is to minimize the empirical loss function with respect to , where

Here denotes the number of samples.

We use either gradient descent (GD) or mini-batch Stochastic Gradient Descent (SGD) to solve this optimization. In both methods, initially is randomly set to ( is the model state at the end of iteration ). When using GD, the update equation is

| (1) |

Under mini-batch SGD a random batch of samples is chosen to perform the update in the iteration. Thus,

| (2) |

In both methods is the learning rate at the iteration. The workers denoted , compute gradients on subsets of the batch. The training is synchronous, i.e., the PS waits for all workers to return before performing an update. It stores the data set and the model and coordinates the protocol. It can be observed that GD can be considered an instance of mini-batch SGD where the batch at each iteration is the entire dataset. Our discussion below is in the context of mini-batch SGD but can easily be applied to the GD case by using this observation.

We consider settings in this work that depend on the underlying assumptions on the dataset and the loss function. Setting-I does not make any assumption on the dataset or the loss function. In Setting-II at the top-level (technical details appear in Section VI) we assume that the data samples are distributed i.i.d. and the loss function is strongly-convex. The results that we provide depend on the underlying setting.

Task assignment: Each batch is split into disjoint subsets , which are then assigned to the workers according to our placement policy. In what follows we refer to these as “files” to avoid confusion with other subsets that we need to refer to. Computational redundancy is introduced by assigning a given file to workers. As the load on all the workers is equal it follows that each worker is responsible for files ( is the computation load). We let be the set of files assigned to worker and be the group of workers assigned to file ; our placement scheme is such that uniquely identifies the file ; thus, we will sometimes refer to the file by its worker assignment, . We will also occasionally use the term group (of the assigned workers) to refer to a file. We discuss the actual placement algorithms used in this work in the upcoming subsection III-A.

Training: Each worker is given the task of computing the sum of the gradients on all its assigned files. For example, if file is assigned to , then it calculates and returns them to the PS. In every iteration, the PS will run our detection algorithm once it receives the results from all the users in an effort to identify the adversaries and will act according to the detection outcome.

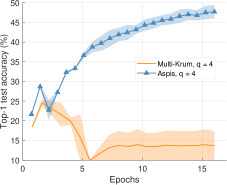

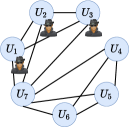

Figure 1 depicts this process. There are machines and distinct files (represented by colored circles) replicated times.222Some arrows and ellipses have been omitted from Figure 1; however, all files will be going through detection. Each worker is assigned to files and computes the sum of gradients (or a distorted value) on each of them. The “d” ellipses refer to PS’s detection operations immediately after receiving all the gradients.

Metrics: We consider various metrics in our work. For Setting-I we consider (i) the fraction of distorted files, and (ii) the top-1 test accuracy of the final trained model. For the distortion fraction, let us denote the number of distorted files upon detection and aggregation by and its maximum value (under a worst-case attack) by . The distortion fraction is . The top-1 test accuracy is determined via numerical experiments. In Setting-II, in addition we consider proofs and rates of convergence of the proposed algorithms. We provide theoretical results and supporting experimental results on these.

| Scheme | Byzantine choice/orchestration | Gradient distortion |

| Draco [17] | optimal | reversed gradient, constant |

| DETOX [16] | random | ALIE, reversed gradient, constant |

| ByzShield [14] | optimal | ALIE, reversed gradient, constant |

| Bulyan [24] | N/A | -norm attack targeted on Bulyan |

| Multi-Krum [12] | N/A | random high-variance Gaussian vector |

| Aspis | ATT-1, ATT-2 | ALIE, FoE, reversed gradient |

| Aspis+ | ATT-3 | ALIE, constant |

III-A Task Assignment

Let be the set of workers. Our scheme has (i.e., fewer workers than files). Our assignment of files to worker nodes is specified by a bipartite graph where the left vertices correspond to the workers, and the right vertices correspond to the files. An edge in between worker and a file indicates that the is responsible for processing file .

III-A1 Aspis

For the Aspis scheme we construct as follows. The left vertex set is and the right vertex set corresponds to -sized subsets of (there are of them). An edge between and (where ) exists if . The worker set is in one-to-one correspondence with and the files are in one-to-one correspondence with the -sized subsets.

Example 1.

Consider workers and . Based on our protocol, the files of each batch are associated one-to-one with 3-subsets of , e.g., the subset corresponds to file and will be processed by , , and .

Remark 1.

Our task assignment ensures that every pair of workers processes files. Moreover, the number of adversaries is . Thus, upon receiving the gradients from the workers, the PS can examine them for consistency and flag certain nodes as adversarial if their computed gradients differ from or more of the other nodes. We use this intuition to detect and mitigate the adversarial effects and compute the fraction of corrupted files.

III-A2 Aspis+

For Aspis+, we use combinatorial designs [33] to assign the gradient tasks to workers. Formally, a design is a pair (, ) consisting of a set of elements (points), , and a family (i.e., multiset) of nonempty subsets of called blocks, where each block has the same cardinality . Similar to Aspis, the workers and files are in one-to-one correspondence with the points and the blocks, respectively. Hence, for our purposes, the parameter of the design is the redundancy. A design is one where any subset of points appear together in exactly blocks. The case of has been studied extensively in the literature and is referred to as a balanced incomplete block design (BIBD). A bipartite graph representing the incidence between the points and the blocks can be obtained naturally by letting the points correspond to the left vertices, and the blocks correspond to the right vertices. An edge exists between a point and a block if the point is contained in the block.

Example 2.

A design, also known as the Fano plane, consists of the points and the block multiset contains the blocks , , , , , and with each block being of size . In the bipartite graph representation, we would have an edge, e.g., between point and blocks , and .

In Aspis+ we construct by the bipartite graph representing an appropriate design.

Another change compared to the Aspis placement scheme is that the points of the design will be randomly permuted at each iteration, i.e., for permutation , the PS will map . For instance, let us circularly permute the points of the Fano plane in Example 2 as and . Then, the file assignment at the next iteration will be based on the block collection . Permuting the assignment causes each Byzantine to disagree with more workers and to be detected in fewer iterations; details will be discussed in Section V-C. Owing to this permutation, we use a time subscript for the files assigned to for the iteration; this is denoted by .

IV Adversarial Attack Models and Gradient Distortion Methods

We now discuss the different Byzantine models that we consider in this work. For all the models, we assume that at most workers can be adversarial. For each assigned file a worker will return the value to the PS. Then,

| (3) |

where is the sum of the loss gradients on all samples in file , i.e.,

and is any arbitrary vector in . Within this setup, we examine adversarial scenarios that differ based on the behavior of the workers. Table I provides a high-level summary of the Byzantine models considered in this work as well as in related papers. As we will discuss in Section VIII-B, for those schemes that do not involve redundancy and merely split the work equally among the workers, all possible choices of the Byzantine set are equivalent, and no orchestration333We will use the term orchestration to refer to the method adversaries use to collude and attack collectively as a group. of them will change the defense’s output; hence, those cases are denoted by “N/A” in the table.

IV-A Attack 1

We first consider a weak attack, denoted ATT-1, where the Byzantine nodes operate independently (i.e., do not collude) and attempt to distort the gradient on any file they participate in. For instance, a node may try to return arbitrary gradients on all its assigned files. For this attack, the identity of the workers may be arbitrary at each iteration as long as there are at most of them.

IV-B Attack 2

Our second scenario, named ATT-2, is the strongest one we consider. We assume that the adversaries have full knowledge of the task assignment at each iteration and the detection strategies employed by the PS. The adversaries can collude in the “best” possible way to corrupt as many gradients as possible. Moreover, the set of adversaries can also change from iteration to iteration as long as there are at most of them.

IV-C Attack 3

This attack is similar to ATT-1 and will be called ATT-3. On the one hand, it is weaker in the sense that the set of Byzantines (denoted ) does not change in every iteration. Instead, we will assume that there is a “Byzantine window” of iterations in which the set remains fixed. Also, the set will be a randomly chosen set of workers from , i.e., it will not be chosen systematically. A new set will be chosen at random at all iterations , where (mod ). Conversely, it is stronger than ATT-1 since we allow for limited collusion amongst the adversarial nodes. In particular, the Byzantines simulated by ATT-3 will distort only the files for which a Byzantine majority exists.

IV-D Gradient Distortion Methods

For each of the attacks considered above, the adversaries can distort the gradient in specific ways. Several such techniques have been considered in the literature and our numerical experiments use these methods for comparing different methods. For instance, ALIE [23] involves communication among the Byzantines in which they jointly estimate the mean and standard deviation of the batch’s gradient for each dimension and subsequently use them to construct a distorted gradient that attempts to distort the median of the results. Another powerful attack is Fall of Empires (FoE) [34] which performs “inner product manipulation” to make the inner product between the true gradient and the robust estimator to be negative even when their distance is upper bounded by a small value. Reversed gradient distortion returns for , to the PS instead of the true gradient . The constant attack involves the Byzantine workers sending a constant gradient with all elements equal to a fixed value. To our best knowledge, the ALIE algorithm is the most sophisticated attack in literature for deep learning techniques.

V Defense Strategies in Aspis and Aspis+

In our work we use the Aspis task assignment and detection strategy for attacks ATT-1 and ATT-2. For ATT-3, we will use Aspis+. Recall that the methods differ in their corresponding task assignments. Nevertheless, the central idea in both detection methods is for the PS to apply a set of consistency checks on the obtained gradients from the different workers at each iteration to identify the adversaries.

Let the current set of adversaries be with ; also, let be the honest worker set. The set is unknown, but our goal is to provide an estimate of it. Ideally, the two sets should be identical. In general, depending on the adversarial behavior, we will be able to provide a set such that . For each file, there is a group of workers which have processed it, and there are pairs of workers in each group. Each such pair may or may not agree on the gradient value for the file. For iteration , let us encode the agreement of workers on common file during the current iteration by

| (4) |

Across all files, the total number of agreements between a pair of workers during the iteration is denoted by

| (5) |

Since the placement is known, the PS can always perform the above computation. Next, we form an undirected graph whose vertices correspond to all workers . An edge exists in only if the computed gradients (at iteration ) of and match in “all” their common assignments.

V-A Aspis Detection Rule

In what follows, we suppress the iteration index since the Aspis algorithm is the same for each iteration. For the Aspis task assignment (cf. Section III-A1), any two workers, and , have common files.

Let us index the adversaries in and the honest workers in . We say that two workers and disagree if there is no edge between them in . The non-existence of an edge between and only means that they disagree in at least one of the files that they jointly participate in. For corrupting the gradients, each adversary has to disagree on the computations with a subset of the honest workers. An adversary may also disagree with other adversaries.

A clique in an undirected graph is defined as a subset of vertices with an edge between any pair of them. A maximal clique is one that cannot be enlarged by adding additional vertices to it. A maximum clique is one such that there is no clique with more vertices in the given graph. We note that the set of honest workers will pair-wise agree on all common tasks. Thus, forms a clique (of size ) within . The clique containing the honest workers may not be maximal. However, it will have a size of at least . Let the maximum clique on be . Any worker with will not belong to a maximum clique and can right away be eliminated as a “detected” adversary.

number of files , maximum iterations , file assignments , robust estimator function .

The essential idea of our detection is to run a clique-finding algorithm on (summarized in Algorithm 1). The detection may be successful or unsuccessful depending on which attack is used; we discuss this in more detail shortly.

We note that clique-finding is well-known to be an NP-complete problem [35]. Nevertheless, there are fast, practical algorithms with excellent performance on graphs even up to hundreds of nodes [36, 37]. Specifically, the authors of [37] have shown that their proposed algorithm, which enumerates all maximal cliques, has similar complexity as other methods [38, 39], which are used to find a single maximum clique. We utilize this algorithm. Our extensive experimental evidence suggests that clique-finding is not a computation bottleneck for the size and structure of the graphs that Aspis uses. We have experimented with clique-finding on a graph of workers and for different values of ; in all cases, enumerating all maximal cliques took no more than 15 milliseconds. These experiments and the asymptotic complexity of the entire protocol are addressed in Supplement Section XI-A.

During aggregation (see Algorithm 2), the PS will perform a majority vote across the computations of each file (implementation details in Supplement Section XI-B). Recall that workers have processed each file. For each such file , the PS decides a majority value

| (6) |

Assume that is odd and let . Under the rule in Eq. (6), the gradient on a file is distorted only if at least of the computations are performed by Byzantines. Following the majority vote, we will further filter the gradients using a robust estimator (see Algorithm 2, line 25). This robust estimator is either the coordinate-wise median or the geometric median; a similar setup was considered in [14, 16]. For example, in Figure 1, all returned values for the red file will be evaluated by a majority vote function on the PS, which decides a single output value; a similar voting is done for the other 3 files. After the voting process, Aspis applies the robust estimator on the “winning” gradients , .

V-A1 Defense Strategy Against ATT-1

Under ATT-1, it is clear that a Byzantine node will disagree with at least honest nodes (as, by assumption in Section IV-A, it will disagree with all of them), and thus, the degree of the node in will be at most , and it will not be part of the maximum clique. Thus, each of the adversaries will be detected, and their returned gradients will not be considered further. The algorithm declares the (unique) maximum clique as honest and proceeds to aggregation. In particular, assume that workers have been identified as honest. For each of the files, if at least one honest worker processed it, the PS will pick one of the “honest” gradient values. The chosen gradients are then averaged for the update (cf. Eq. (2)). For instance, in Figure 1, assume that , , and have been identified as faulty. During aggregation, the PS will ignore the red file as all 3 copies have been compromised. For the orange file, it will pick either the gradient computed by or as both of them are “honest.” The only files that can be distorted in this case are those that consist exclusively of adversarial nodes.

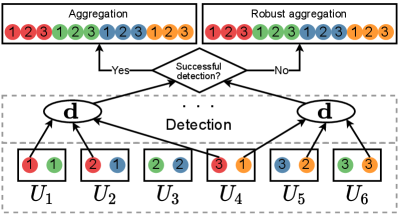

Figure 2(a) (corresponding to Example 1) shows an example where in a cluster of size , the adversaries are and the remaining workers are honest with . In this case, the unique maximum clique is , and detection is successful. Under this attack, the distorted files are those whose all copies have been compromised, i.e., .

V-A2 Defense Strategy Against ATT-2 (Robust Aggregation)

Let denote the set of disagreement workers for adversary , where can contain members from and from . If the attack ATT-2 is used on Aspis, upon the formation of we know that a worker will be flagged as adversarial if . Therefore to avoid detection, a necessary condition is that .

We now upper bound the number of files that can be corrupted under any possible strategy employed by the adversaries. Note that according to Algorithm 2, we resort to robust aggregation in case of more than one maximum clique in . In this scenario, a gradient can only be corrupted if a majority of the assigned workers computing it are adversarial and agree on a wrong value. The proof of the following theorem appears in Appendix Section X-A.

Theorem 1.

Consider a training cluster of workers with adversaries using algorithm in Section III-A1 to assign the files to workers, and Algorithm 1 for adversary detection. Under any adversarial strategy, the maximum number of files that can be corrupted is

| (7) |

Furthermore, this upper bound can be achieved if all adversaries fix a set of honest workers with which they will consistently disagree on the gradient (by distorting it).

Remark 3.

We emphasize that the maximum fraction of corrupted gradients is much lesser as compared to the baseline and with respect to other schemes as well (details in Sec. VII). For instance and , at most 0.022 fraction of the gradients are corrupted in Aspis as against 0.2 for the baseline scheme.

In Appendix Section X-A we show that under ATT-2 there is bound to be more than one maximum clique in the detection graph. Thus, the PS cannot unambiguously decide which one is the honest one; detection fails and we fall back to the robust aggregation technique.

V-B Motivation for Aspis+

Our motivation for proposing Aspis+ originates in the limitations of the subset assignment of Aspis. It is evident from the experimental results in Section VIII-B that Aspis is more suitable to worst-case attacks where the adversaries collude and distort the maximum number of tasks in an undetected fashion; in this case, the accuracy gap between Aspis and prior methods is maximal. Aspis does not perform as well under weaker attacks such as the reversed gradient attack (cf. Figures 8(a), 8(b), 8(c) even though it achieves a much smaller distortion fraction , as discussed in Section VII. This can be attributed to the fact that the number of tasks is and even for the considered cluster of , , it would require splitting the batch into 455 files; hence, the batch size must be a multiple of 455. There is significant evidence that large batch sizes can hurt generalization and make the model converge slowly [31, 40, 41]. Some workarounds have been proposed to solve this problem. For instance, the work of [41] uses layer-wise adaptive rate scaling to update each layer using a different learning rate. The authors of [42] perform implicit regularization using warmup and cosine annealing to tune the learning rate as well as gradient clipping. However, these methods require training for a significantly larger number of epochs. For the above reasons, we have extended our work and proposed Aspis+ to handle weaker Byzantine failures (cf. ATT-3) while requiring a much smaller batch size.

V-C Aspis+ Detection Rule

The principal intuition of the Aspis+ detection approach (used for ATT-3) is to iteratively keep refining the graph in which the edges encode the agreements of workers during consecutive and non-overlapping windows of iterations. At the beginning of each such window, the PS will reset to be a complete graph, i.e., as if all workers pairwise agree with other. Then, it will gradually remove edges from as disagreements between the workers are observed; hence, the graph will be updated at each of the iterations of the window, and the PS will assume that the Byzantine set does not change within a detection window. In practice, as we do not know the “Byzantine window,” we will not assume an alignment between the two kinds of windows, and we will set for our experiments. The detection method will be the same for all detection windows; thus, we will analyze the process in one window of steps.

For a detection window, let us encode the agreement of workers on common file during the current iteration of the window as

| (8) |

Across all files, the total number of agreements between a pair of workers during the iteration is denoted by

| (9) |

Assume that the current iteration of the window is indexed with . The PS will collect all agreements for each pair of workers up until the current iteration as

| (10) |

Since the placement is known, the PS can always perform the above computation. Next, it will examine the agreements and update as necessary.

Based on the task placement (cf. Section III-A2), an edge exists in only if the computed gradients of and match in all their common groups in all iterations up to the current one indexed with , i.e., a pair needs to have for an edge to be in . If this is not the case, the edge will be removed from . After all such edges are examined, detection is done using degree counting. Given that there are Byzantines in the cluster, after examining all pairs of workers and determining the form of , a worker will be flagged as Byzantine if . Based on Eq. (10), it is not hard to see that such workers can be eliminated and their gradients will not be considered again until the last iteration of the current window. The only exception to this is if the Byzantine set changes before the end of the current detection window. This is possible due to a potential misalignment between the “Byzantine window” and the detection window (recall that is assumed to avoid trivialities). In this case, more than workers may be detected as Byzantines; the PS will, by convention, choose to be the most recently detected Byzantines. Algorithm 3 discusses the detection protocol. Following detection, the PS will act as follows. If at least one Byzantine has been detected, it will ignore the votes of detected Byzantines, and for each group, if there is at least one “honest” vote, it will use this as the output of the majority voting group; also, if a group consists merely of detected Byzantines, it will completely ignore the group. The remaining groups will go through robust aggregation (as in Section V-A). In our experiments in Section VIII-C, all Byzantines are detected successfully in at most 5 iterations. Example 3 showcases the utility of permutations in our detection algorithm using workers.

Example 3.

We will use the assignment of Example 2 with workers assigned to tasks according to a Fano plane and let us denote the assignment of workers to groups (blocks of the design) during the iteration by , initially equal to . For the windows, assume that and . Also, let and the Byzantine set be . Based on ATT-3, workers are in majority within a group in which they disagree with worker . After the first permutation, a possible assignment is . Then, are in the same group as the honest with which they disagree; hence, , and none of them affords to disagree with more honest workers to remain undetected. However, if the next permutation assigns the workers as then the adversaries will cast a different vote than as well. Both of them will be detected after only three iterations.

Remark 4.

Using a , i.e., a design with (a typical value for the redundancy) to assign the files on a cluster with Byzantines, the maximum number of files one can distort is [33], where is the total number of files; this is when each possible pair of Byzantines, among the possible ones appear together in a distinct block and distorts the corresponding file. In Aspis+, the focus is on weak attacks and determining the worst-case choice of adversaries that maximize the number of distorted files is beyond the scope of our work.

VI Convergence Results and Experiments under Setting-II

In this section, we operate under Setting-II (cf. Section III). By leveraging the work of Chen et al. [13] we demonstrate that our training algorithm converges to the optimal point.

We assume that the data samples are distributed i.i.d. from some unknown distribution . We are interested in finding that minimizes over the ; here the expectation is over the distribution and the ’s are distributed i.i.d as well. In general, since the distribution is unknown, cannot be computed and we instead minimize the empirical loss function given by . We need the following additional assumptions.

In the discussion below, we say that a random vector is sub-exponential with sub-exponential norm if for every unit-vector , is a sub-exponential random variable with sub-exponential norm at most , i.e., [43, Sec 2.7]. To keep notation simple, we reuse the letter to denote different numerical constants in each use. This practice is common when working with classes of distributions such as sub-exponential.

- •

-

•

The function is strongly convex, and differentiable with respect to with -Lipschitz gradient. This means that for all and we have

-

•

The random vectors for are sub-exponential with sub-exponential norm . This assumption ensures that concentrates around its mean .

-

•

Let . For , the random vectors are sub-exponential with sub-exponential norm .

-

•

For any there exists (dependent on and ) that is non-increasing in such that is -smooth with high probability, i.e,

Here is the feasible parameter set.

For Aspis, Theorem 1 guarantees an upper bound on the fraction of corrupted gradients regardless of what attack is used. In particular, treating the majority logic and clique finding as a pre-processing step, we arrive at a set of files, at most (cf. Theorem 1) of which are “arbitrarily” corrupted. At this point, the PS applies the robust estimator - “geometric median” and uses it to perform the update step. We can leverage Theorem 5 of [13] to obtain the following result where is the length of the parameter vector and for the quantity .

Theorem 2.

(adapted from [13]) Suppose that are all constants and . Assume that for positive such that and . Fix any and any such that and . There exist universal constants such that if

then with probability at least , for all , the iterates of our algorithm with satisfy

| (11) |

An instance of a problem that satisfies the assumptions presented above is the linear regression problem. Formally, the data set consists of vectors , where . We construct the data matrix of size using these vectors as its rows. The labels corresponding to the data points are computed as follows: , where denotes the parameter set. For this problem, our loss function is the least-squares loss, i.e., we have for where denotes the row of .

VI-A Numerical Experiments

We use the GD algorithm (1) with the initial randomly chosen parameter vector . We partition the data matrix row-wise into submatrices , and correspondingly the label vector into sub-vectors , where is the number of files of the distributed algorithm. A file consists of a pair . For each of its assigned files , worker either computes the honest partial gradient or a distorted value and returns it to the PS. Using the formulation of Section IV, the gradient in Eq. (3) for linear regression is the product .

Metrics: For each scheme and value of we run multiple Carlo simulations, and calculated the average least-square loss that each algorithm converges to across the Monte Carlo simulations. For each simulation we declare convergence if the final empirical loss is less than 0.1 We record the fraction of experiments that converged and the rate of convergence. In computing the average loss, the experiments that did not converged are not taken into account (for more details, please see Supplement Section XI-C).

VI-A1 Experiment Setup

In our experiments, we set , while our cluster consists of workers. All replication-based schemes use . For Aspis+, we considered a design [33].

The geometric median is available as a Python library [44]. Initially, we tuned the learning rate for each scheme and each distortion method to decide the one to use for the Monte Carlo simulations; all learning rates , have been tested. Also, we fix the random seeds of our experiments to be the same across all schemes; this guarantees that the data matrix as well as the original model estimate will be the same across all methods. At the beginning of the algorithm, all elements of and are generated randomly according to a distribution. For all runs, we chose to terminate the algorithm when the norm of gradient is less than or the algorithm has reached a maximum number of 2000 iterations. Our code is available online 444https://github.com/kkonstantinidis/Aspis.

VI-A2 Results

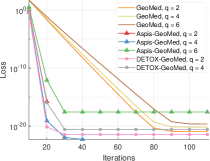

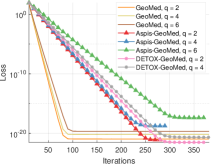

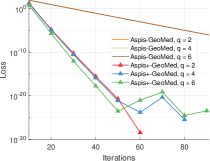

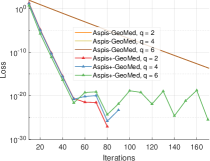

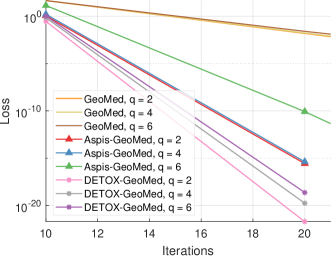

The first set of experiments are for the strong attack ATT-2. The baseline scheme where geometric median is applied on all gradients returned by the workers is referred to as GeoMed and it has no redundancy. DETOX, Aspis, and Aspis+ use geometric median as part of the robust aggregation. Under reversed gradient (see Figure 4(a)), it is clear that all schemes perform well and achieve similar loss for Byzantines. Nevertheless, baseline geometric median needed at least 100 iterations to converge while the redundancy-based schemes have a faster convergence rate. However, the situation is very different for where Aspis converges within 30 iterations. In contrast, the DETOX loss diverged in all 100 simulations, while the convergence rate of the baseline scheme is much slower.

For the constant attack (see Fig. 4(b)) however, the relative performance of the baseline scheme and Aspis is reversed, i.e., the baseline scheme has a faster convergence rate as compared with Aspis. Moreover, Aspis and DETOX have roughly similar convergence rates for . As before for , none of the DETOX simulations converged.

Our last set of results are with the ALIE attack and the results are reported in Figure 6. As the baseline geometric median simulations converged much slower than other schemes, they could not fit properly into the figure; it achieved final loss approximately equal to , , and for , and respectively. On the other hand, Aspis converged to in 30 iterations for . For this attack all schemes converged to very low loss values in all simulation runs. Nevertheless as is evident from Figure 6, both Aspis and DETOX converge to loss values less that within 15 iterations.

Another experiment we performed compares our two proposed methods, Aspis and Aspis+. For both schemes, we generate a new random Byzantine set every iterations (introduced as ATT-3 Section IV-C) while the detection window for Aspis+ is of length . For a comparable attack we use ATT-1 on Aspis (cf. Section IV-A), i.e., all adversaries distort all their assigned files. We compare the two schemes under reversed gradient attack in Figure 5(a) and under constant attack in Figure 5(b). Both methods achieve low final loss in the order of or lower; Aspis converged to lower losses of the order of in approximately 280 iterations in all cases. Nevertheless, Aspis+ achieves a faster convergence rate which aligns with the fact that it’s mostly suitable for weaker adversaries.

VII Distortion Fraction Evaluation

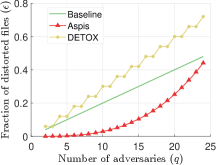

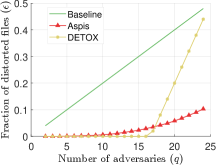

The main motivation of our distortion fraction analysis is that our deep learning experiments (cf. Section VIII-B) and prior work [14] show that is a surrogate of the model’s convergence with respect to accuracy. This comparison involves our work and state-of-the-art schemes under the best- and worst-case choice of the adversaries in terms of the achievable value of . We also compare our work with baseline approaches that do not involve redundancy or majority voting and aggregation is applied directly to the gradients returned by the workers (, and ).

For Aspis, we used the proposed attack ATT-2 from Section IV-B and the corresponding computation of of Theorem 1. DETOX in [16] employs a redundant assignment followed by majority voting and offers robustness guarantees which crucially rely on a “random choice” of the Byzantines. Our prior work [14] (ByzShield) has demonstrated the importance of a careful task assignment and observed that redundancy by itself is not sufficient to allow for Byzantine resilience. That work proposed an optimal choice of the Byzantines that maximizes , which we used in our current experiments. In short, DETOX splits the workers into groups. All workers within a group process the same subset of the batch, specifically containing samples. This phase is followed by majority voting on a group-by-group basis. Reference [14] suggests choosing the Byzantines so that at least workers in each group are adversarial in order to distort the corresponding gradients. In this case, and . We also compare with the distortion fraction incurred by ByzShield [14] under a worst-case scenario. For this scheme, there is no known optimal attack, and we performed an exhaustive combinatorial search to find the adversaries that maximize among all possible options; we follow the same process here to simulate ByzShield’s distortion fraction computation while utilizing the scheme of that work based on mutually orthogonal Latin squares. The reader can refer to Figure 3(a) and Appendix Tables III, IV, and V for our results. Aspis achieves major reductions in ; for instance, is reduced by up to 99% compared to both and in Figure 3(a).

Next, we consider the weak attack, ATT-1. For our scheme, we will make an arbitrary choice of adversaries which carry out the method introduced in Section V-A1, i.e., they will distort all files, and a successful detection is possible. As discussed in Section V-A1, the fraction of corrupted gradients is . For DETOX, a simple benign attack is used. To that end, let the files be . Initialize and choose the Byzantines as follows: for , among the remaining workers in add a worker from the group to the adversarial set . Then,

The results of this scenario are in Figure 3(b).

VIII Large-Scale Deep Learning Experiments

All these experiments are performed under Setting-I, i.e., no assumptions are made about the dataset or the loss function. Accordingly, the evaluation here is in terms of the distortion fraction (see Section VII) and numerical experiments (described below). For the experiments, we used the mini-batch SGD (see (2)) and the robust estimator (see Algorithm 2) is the coordinate-wise median.

VIII-A Experiment Setup

We have evaluated the performance of our methods and competing techniques in classification tasks on Amazon EC2 clusters. The project is written in PyTorch [1] and uses the MPICH library for communication between the different nodes. We worked with the CIFAR-10 data set [32] using the ResNet-18 [45] model. We used clusters of , , workers, redundancy , and simulated values of during training. Detailed information about the implementation can be found in Appendix Section X-B.

Competing methods: We compare Aspis against the baseline implementations of median-of-means [46], Bulyan [24], and Multi-Krum [12]. If is the number of adversarial computations, then Bulyan requires at least total number of computations while the same number for Multi-Krum is . These constraints make these methods inapplicable for larger values of for which our methods are robust. The second class of comparisons is with methods that use redundancy, specifically DETOX [16]. For the baseline scheme we compare with median-based techniques since they originate from robust statistics and are the basis for many aggregators. Multi-Krum combines the intuitions of majority-based and squared-distance-based methods. Draco [17] is a closely related method that uses redundancy. However we do not compare with it since it is very limited in the number of Byzantines that it is resilient to.

Note that for a baseline scheme, all choices of are equivalent in terms of the value of . In our comparisons between Aspis and DETOX we will consider two attack scenarios concerning the choice of the adversaries. For the optimal attack on DETOX, we will use the method proposed in [14] and compare with the attack introduced in Section V-A2. For the weak one, we will choose the adversaries such that they incur the minimum value of in DETOX for given and compare its performance with the scenario of Section V-A1. All schemes compared with Aspis+ consider random sets of Byzantines, and for Aspis+, we will use the attack ATT-3.

VIII-B Aspis Experimental Results

VIII-B1 Comparison under Optimal Attacks

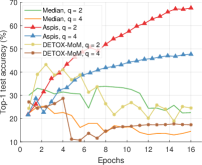

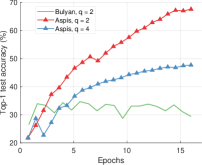

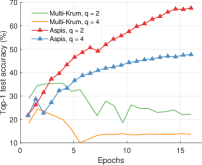

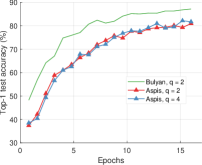

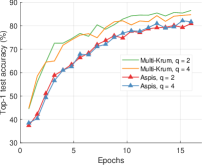

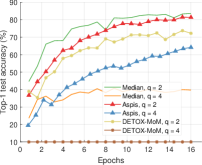

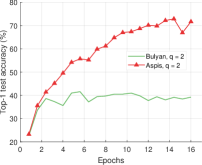

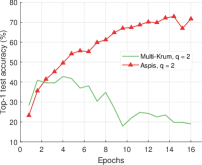

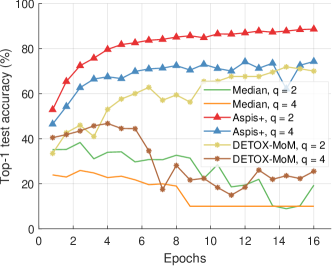

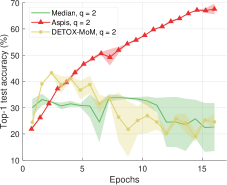

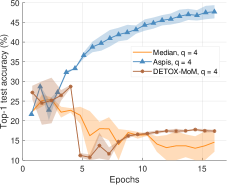

We compare the different defense algorithms under optimal attack scenarios using ATT-2 for Aspis. Figure 7(a) compares our scheme Aspis with the baseline implementation of coordinate-wise median ( for , respectively) and DETOX with median-of-means ( for , respectively) under the ALIE attack. Aspis converges faster and achieves at least a 35% average accuracy boost (at the end of the training) for both values of ( for , respectively).555Please refer to Appendix Tables III and IV for the values of the distortion fraction each scheme incurs. In Figures 7(b) and 7(c), we observe similar trends in our experiments with Bulyan and Multi-Krum, where Aspis significantly outperforms these techniques. For the current setup, Bulyan is not applicable for since . Also, neither Bulyan nor Multi-Krum can be paired with DETOX for since the inequalities and , where , cannot be satisfied; for the specific case of Bulyan even would not be supported by DETOX. Please refer to Section VIII-A and Section VII for more details on these requirements. Also, note that the accuracy of most competing methods fluctuates more than in the results presented in the corresponding papers [16] and [23]. This is expected as we consider stronger attacks than those papers, i.e., optimal deterministic attacks on DETOX and, in general, up to 27% adversarial workers in the cluster. Also, we have done multiple experiments with different random seeds to demonstrate the stability and superiority of our accuracy results compared to other methods (against median-based defenses in Appendix Figure 15, Bulyan in Figure 16 and Multi-Krum in Supplement Figure 17); we point the reader to Appendix Section X-B3 for this analysis. This analysis is clearly missing from most prior work, including that of ALIE [23] and their presented results are only a snapshot of a single experiment. The results for the reversed gradient attack are shown in Figures 8(a), 8(b), and 8(c). Given that this is a weaker attack [14, 16] all schemes, including the baseline methods, are expected to perform well; indeed, in most cases, the model converges to approximately 80% accuracy. However, DETOX fails to converge to high accuracy for as in the case of ALIE; one explanation is that for . Under the Fall of Empires (FoE) distortion (cf. Figure 11) our method still enjoys an accuracy advantage over the baseline and DETOX schemes which becomes more important as the number of Byzantines in the cluster increases.

We have also performed experiments on larger clusters ( workers) as well. The results for the ALIE distortion with the ATT-2 attack can be found in Figure 12. They exhibit similar behavior as in the case of .

VIII-B2 Comparison under Weak Attacks

For baseline schemes, the discussion of weak versus optimal choice of the adversaries is not very relevant as any choice of the Byzantines can overall distort exactly out of the gradients. Hence, for weak scenarios, we chose to compare mostly with DETOX while using ATT-1 on Aspis. The accuracy is reported in Figures 11 and 11, according to which Aspis shows an improvement under attacks on the more challenging end of the spectrum (ALIE). According to Appendix Table IIIIII(b), Aspis enjoys a fraction while and for .

VIII-C Aspis+ Experimental Results

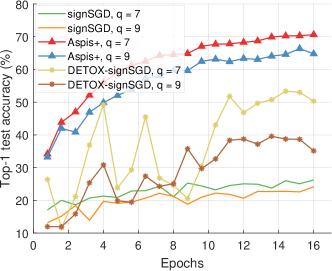

For Aspis+, we considered the attack ATT-3 discussed in Section IV-C. We tested clusters of with and workers among which are Byzantine. In the former case, a design [33] with blocks (files) was used for the placement, while in the latter case, we used a design [33] with blocks (files). A new random Byzantine set is generated every iterations while the detection window is of length .

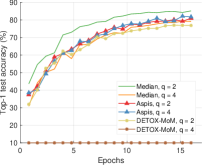

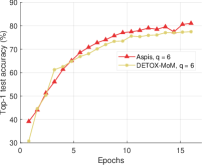

The results for are in Figure 13. We tested against the ALIE distortion, and all compared methods use median-based defenses to filter the gradients. Aspis+ demonstrates an advantage of at least 15% compared with other algorithms (cf. ). For , we tried a weaker distortion than ALIE, i.e., the constant attack paired with signSGD-based defenses [26]. In signSGD, the PS will output the majority of the gradients’ signs for each dimension. Following the advice of [16], we pair this defense with the stronger constant attack as sign flips (e.g., reversed gradient) are unlikely to affect the gradient’s distribution. Aspis+ with median still enjoys an accuracy improvement of at least 20% for and a larger one for . The results are in Figure 14; in this figure, the DETOX accuracy is an average of two experiments using two different random seeds.

IX Conclusions and Future Work

In this work, we have presented Aspis and Aspis+, two Byzantine-resilient distributed schemes that use redundancy and robust aggregation in novel ways to detect failures of the workers. Our theoretical analysis and numerical experiments clearly indicate their superior performance compared to state-of-the-art. Our experiments show that these methods require increased computation and communication time as compared to prior work, e.g., note that each worker has to transmit gradients instead of in related work [16, 17] (see Appendix Section X-B4 for details). We emphasize, however, that our schemes converge to high accuracy in our experiments, while other methods remain at much lower accuracy values regardless of how long the algorithm runs for.

Our experiments involve clusters of up to workers. As we scale Aspis to more workers, the total number of files and the computation load of each worker will also scale; this increases the memory needed to store the gradients during aggregation. For complex neural networks, the memory to store the model and the intermediate gradient computations is by far the most memory-consuming aspect of the algorithm. For these reasons, Aspis is mostly suitable for training large data sets using fairly compact models that do not require too much memory. Aspis+, on the other hand, is a good fit for clusters that suffer from non-adversarial failures that can lead to inaccurate gradients. Finally, utilizing GPUs and communication-related algorithmic improvements are worth exploring to reduce the time overhead.

X Appendix

| Symbol | Meaning |

| number of workers | |

| number of adversaries | |

| redundancy (number of workers each file is assigned to) | |

| batch size | |

| samples of batch of iteration | |

| number of files (alternatively called groups or tasks) | |

| worker | |

| computation load (number of files per worker) | |

| set of files of worker | |

| set of workers assigned to file | |

| true gradient of file with respect to | |

| returned gradient of for file with respect to | |

| majority gradient for file | |

| worker set | |

| graph used to encode the task assignments to workers | |

| graph indicating the agreements of pairs of workers in all of their common gradient tasks in iteration | |

| set of adversaries | |

| maximum clique in | |

| number of distorted gradients after detection and aggregation | |

| maximum number of distorted gradients after detection and aggregation (worst-case) | |

| disagreement set (of workers) for adversary | |

| , i.e., minimum number of distorted copies needed to corrupt majority vote for a file | |

| , i.e., fraction of distorted gradients after detection and aggregation | |

| subset of files where the set of active adversaries is of size ; for linear regression this is the data matrix corresponding to the file | |

| data matrix of linear regression | |

| number of points of linear regression | |

| dimensionality of linear regression model |

| \collectcell0.004\endcollectcell | \collectcell0.133\endcollectcell | \collectcell0.2\endcollectcell | \collectcell0.04\endcollectcell | |

| \collectcell0.022\endcollectcell | \collectcell0.2\endcollectcell | \collectcell0.2\endcollectcell | \collectcell0.12\endcollectcell | |

| \collectcell0.062\endcollectcell | \collectcell0.267\endcollectcell | \collectcell0.4\endcollectcell | \collectcell0.2\endcollectcell | |

| \collectcell0.132\endcollectcell | \collectcell0.333\endcollectcell | \collectcell0.4\endcollectcell | \collectcell0.32\endcollectcell | |

| \collectcell0.242\endcollectcell | \collectcell0.4\endcollectcell | \collectcell0.6\endcollectcell | \collectcell0.48\endcollectcell | |

| \collectcell0.4\endcollectcell | \collectcell0.467\endcollectcell | \collectcell0.6\endcollectcell | \collectcell0.56\endcollectcell |

| \collectcell0.002\endcollectcell | \collectcell0.133\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.002\endcollectcell | \collectcell0.2\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.009\endcollectcell | \collectcell0.267\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.022\endcollectcell | \collectcell0.333\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.044\endcollectcell | \collectcell0.4\endcollectcell | \collectcell0.2\endcollectcell | |

| \collectcell0.077\endcollectcell | \collectcell0.467\endcollectcell | \collectcell0.4\endcollectcell |

| \collectcell0.002\endcollectcell | \collectcell0.095\endcollectcell | \collectcell0.143\endcollectcell | \collectcell0.02\endcollectcell | |

| \collectcell0.008\endcollectcell | \collectcell0.143\endcollectcell | \collectcell0.143\endcollectcell | \collectcell0.06\endcollectcell | |

| \collectcell0.021\endcollectcell | \collectcell0.19\endcollectcell | \collectcell0.286\endcollectcell | \collectcell0.1\endcollectcell | |

| \collectcell0.045\endcollectcell | \collectcell0.238\endcollectcell | \collectcell0.286\endcollectcell | \collectcell0.16\endcollectcell | |

| \collectcell0.083\endcollectcell | \collectcell0.286\endcollectcell | \collectcell0.429\endcollectcell | \collectcell0.24\endcollectcell | |

| \collectcell0.137\endcollectcell | \collectcell0.333\endcollectcell | \collectcell0.429\endcollectcell | \collectcell0.33\endcollectcell | |

| \collectcell0.211\endcollectcell | \collectcell0.381\endcollectcell | \collectcell0.571\endcollectcell | \collectcell0.43\endcollectcell | |

| \collectcell0.307\endcollectcell | \collectcell0.429\endcollectcell | \collectcell0.571\endcollectcell | \collectcell0.51\endcollectcell | |

| \collectcell0.429\endcollectcell | \collectcell0.476\endcollectcell | \collectcell0.714\endcollectcell | \collectcell0.59\endcollectcell |

| \collectcell0.001\endcollectcell | \collectcell0.095\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.001\endcollectcell | \collectcell0.143\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.003\endcollectcell | \collectcell0.19\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.008\endcollectcell | \collectcell0.238\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.015\endcollectcell | \collectcell0.286\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.026\endcollectcell | \collectcell0.333\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.042\endcollectcell | \collectcell0.381\endcollectcell | \collectcell0.143\endcollectcell | |

| \collectcell0.063\endcollectcell | \collectcell0.429\endcollectcell | \collectcell0.286\endcollectcell | |

| \collectcell0.09\endcollectcell | \collectcell0.476\endcollectcell | \collectcell0.429\endcollectcell |

| \collectcell0.001\endcollectcell | \collectcell0.083\endcollectcell | \collectcell0.125\endcollectcell | \collectcell0.031\endcollectcell | |

| \collectcell0.005\endcollectcell | \collectcell0.125\endcollectcell | \collectcell0.125\endcollectcell | \collectcell0.063\endcollectcell | |

| \collectcell0.014\endcollectcell | \collectcell0.167\endcollectcell | \collectcell0.25\endcollectcell | \collectcell0.125\endcollectcell | |

| \collectcell0.03\endcollectcell | \collectcell0.208\endcollectcell | \collectcell0.25\endcollectcell | \collectcell0.188\endcollectcell | |

| \collectcell0.054\endcollectcell | \collectcell0.25\endcollectcell | \collectcell0.375\endcollectcell | \collectcell0.281\endcollectcell | |

| \collectcell0.09\endcollectcell | \collectcell0.292\endcollectcell | \collectcell0.375\endcollectcell | \collectcell0.375\endcollectcell | |

| \collectcell0.138\endcollectcell | \collectcell0.333\endcollectcell | \collectcell0.5\endcollectcell | \collectcell0.5\endcollectcell | |

| \collectcell0.202\endcollectcell | \collectcell0.375\endcollectcell | \collectcell0.5\endcollectcell | \collectcell0.5\endcollectcell | |

| \collectcell0.282\endcollectcell | \collectcell0.417\endcollectcell | \collectcell0.625\endcollectcell | \collectcell0.531\endcollectcell | |

| \collectcell0.38\endcollectcell | \collectcell0.458\endcollectcell | \collectcell0.625\endcollectcell | \collectcell0.625\endcollectcell |

| \collectcell0\endcollectcell | \collectcell0.083\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0\endcollectcell | \collectcell0.125\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.002\endcollectcell | \collectcell0.167\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.005\endcollectcell | \collectcell0.208\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.01\endcollectcell | \collectcell0.25\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.017\endcollectcell | \collectcell0.292\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.028\endcollectcell | \collectcell0.333\endcollectcell | \collectcell0\endcollectcell | |

| \collectcell0.042\endcollectcell | \collectcell0.375\endcollectcell | \collectcell0.125\endcollectcell | |

| \collectcell0.059\endcollectcell | \collectcell0.417\endcollectcell | \collectcell0.25\endcollectcell | |

| \collectcell0.082\endcollectcell | \collectcell0.458\endcollectcell | \collectcell0.375\endcollectcell |

X-A Proof of Theorem 1

For a given file , let with be the set of “active adversaries” in it, i.e., consists of Byzantines that collude to create a majority that distorts the gradient on it. In this case, the remaining workers in belong to , where we note that . Let denote the subset of files where the set of active adversaries is of size ; note that depends on the disagreement sets . Formally,

| (12) | |||||

Then, for a given choice of disagreement sets, the number of files that can be corrupted is given by . We obtain an upper bound on the maximum number of corrupted files by maximizing this quantity with respect to the choice of , i.e.,

| (13) |

where the maximization is over the choice of the disagreement sets . With given in (12), assuming , the number of distorted files is upper bounded by

| (14) |

For that, recall that and that an adversarial majority of at least distorted computations for a file is needed to corrupt that particular file. Note that consists of those files where the active adversaries are of size ; these can be chosen in ways. The remaining workers in the file belong to where . Thus, the remaining workers can be chosen in at most ways. It follows that

| (15) |

Therefore,

| (16) | |||||

| (17) | |||||

| (18) | |||||

| (19) |

Eq. (17) follows from the convention that when or . Eq. (19) follows from Eq. (18) using the following observations

-

•

in which the first equality is straightforward to show by taking all possible cases: , and .

-

•

By symmetry, .

The upper bound in Eq. (16) is met with equality when all adversaries choose the same disagreement set, which is a -sized subset of the honest workers, i.e., for . In this case, it can be seen that the sets are disjoint so that (14) is met with equality. Moreover, (15) is also an equality. This finally implies that (16) is also an equality, i.e., this choice of disagreement sets saturates the upper bound.

It can also be seen that in this case, the adversarial strategy yields a graph with multiple maximum cliques. To see this, we note that the adversaries in agree with all the computed gradients in . Thus, they form a clique of of size in . Furthermore, the honest workers in form another clique , which is also of size . Thus, the detection algorithm cannot select one over the other and the adversaries will evade detection; and the fallback robust aggregation strategy will apply.

X-B Experiment Setup Details

X-B1 Cluster Setup

We used clusters of , , and workers arranged in various setups within Amazon EC2. Initially, we used a PS of type i3.16xlarge and several workers of type c5.4xlarge to set up a distributed cluster; for the experiments, we adapted GPUs, g3s.xlarge instances were used. However, purely distributed implementations require training data to be transmitted from the PS to every single machine, based on our current implementation; an alternative approach one can follow is to set up shared storage space accessible by all machines to store the training data. Also, some instances were automatically terminated by AWS per the AWS spot instance policy limitations;666https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/spot-interruptions.html this incurred some delays in resuming the experiments that were stopped. In order to facilitate our evaluation and avoid these issues we decided to simulate the PS and the workers for the rest of the experiments on a single instance either of type x1.16xlarge or i3.16xlarge. We emphasize that the choice of the EC2 setup does not affect any of the numerical results in this paper since in all cases, we used a single virtual machine image with the same dependencies. Handling of the GPU floating-point precision errors have been discussed in Supplement Section XI-B.

X-B2 Data Set Preprocessing and Hyperparameter Tuning

The CIFAR-10 images have been normalized using standard mean and standard deviation values for the data set. The value used for momentum (for gradient descent) was set to , and we trained for epochs in all experiments. The number of epochs is precisely the invariant we maintain across all experiments, i.e., all schemes process the training data the same number of times. The batch size and the learning rate are chosen independently for each method; the number of iterations is adjusted accordingly to account for the number of epochs. For Section VIII-B, we followed the advice of the authors of DETOX and chose and for the DETOX and baseline schemes. For Aspis, we used (32 samples per file) and (3 samples per file) for the ALIE experiments and (3 samples per file) for the remaining experiments except for the FoE optimal attack (cf. Figure 11) for which performed better. In Section VIII-C, we used and for DETOX as well as for baseline schemes while for Aspis+ we used for the ALIE experiments and for the constant attack experiments. In Supplement Table VI, a learning rate schedule is denoted by ; this notation signifies the fact that we start with a rate equal to , and every iterations, we set the rate equal to , where is the index of the current iteration and is set to be the number of iterations occurring between two consecutive checkpoints in which we store the model (points in the accuracy figures). We also index the schemes in order of appearance in the corresponding figure’s legend. Experiments that appear in multiple figures are not repeated in Supplement Table VI (we ran those training processes once). In order to pick the optimal hyperparameters for each scheme, we performed an extensive grid search involving different combinations of . In particular, the values of we tested are 0.3, 0.1, 0.03, 0.01, 0.003, 0.001, and 0.0003, and for we tried 1, 0.975, 0.95, 0.7 and 0.5. For each method, we ran 3 epochs for each such combination and chose the one which was giving the lowest value of average cross-entropy loss (principal criterion) and the highest value of top-1 accuracy (secondary criterion).

X-B3 Error Bars

In order to examine whether the choice of the random seed affects the accuracy of the trained model we have performed the experiments of Section VIII-B for the ALIE distortion for two different seeds for the values for every scheme; we used and as random seeds. These tests have been performed for the case of workers. In Figure 15(a), for a given method, we report the minimum accuracy, the maximum accuracy, and their average for each evaluation point. We repeat the same process in Figures 16(a) and Supplement Figure 17(a) when comparing with Bulyan and Multi-Krum, respectively. The corresponding experiments for are shown in Figures 15(b), 16(b), and Supplement Figure 17(b).

Given the fact that these experiments take a significant amount of time and that they are computationally expensive, we chose to perform this consistency check for a subset of our experiments. Nevertheless, these results indicate that prior schemes [16, 8, 24] are sensitive to the choice of the random seed and demonstrate an unstable behavior in terms of convergence. In all of these cases, the achieved value of accuracy at the end of the 16 epochs of training is small compared to Aspis. On the other hand, the accuracy results for Aspis are almost identical for both choices of the random seed.

X-B4 Computation and Communication Overhead

Our schemes provide robustness under powerful attacks and sophisticated distortion methods at the expense of increased computation and communication time. Note that each worker has to perform forward/backward propagation computations and transmit gradients per iteration. In related baseline [24, 12] and redundancy-based methods [16, 17], each worker is responsible for a single such computation. Experimentally, we have observed that Aspis needs up to overall training time compared to other schemes to complete the same number of training epochs. We emphasize that the training time incurred by each scheme depends on a wide range of parameters, including the utilized defense, the batch size, and the number of iterations, and can vary significantly. Our implementation supports GPUs, and we used NVIDIA CUDA [47] for some experiments to alleviate a significant part of the overhead; however, a detailed time cost analysis is not an objective of our current work. Communication-related algorithmic improvements are also worth exploring. Finally, our implementation natively supports resuming from a checkpoint (trained model) and hence, when new data becomes available, we can only use that data to perform more training epochs.

X-B5 Software

Our implementation of the Aspis and Aspis+ algorithms used for the experiments builds on ByzShield’s [14] PyTorch skeleton and has been provided along with dependency information and instructions 777https://github.com/kkonstantinidis/Aspis. The implementation of ByzShield is available at [48] and uses the standard Github license. We utilized the NetworkX package [49] for the clique-finding; its license is 3-clause BSD. The CIFAR-10 data set [32] comes with the MIT license; we have cited its technical report, as required.

References

- [1] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Kopf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, and S. Chintala, “PyTorch: An imperative style, high-performance deep learning library,” in Advances in Neural Information Processing Systems, December 2019, pp. 8024–8035.

- [2] M. Abadi, P. Barham, J. Chen, Z. Chen, A. Davis, J. Dean, M. Devin, S. Ghemawat, G. Irving, M. Isard, M. Kudlur, J. Levenberg, R. Monga, S. Moore, D. G. Murray, B. Steiner, P. Tucker, V. Vasudevan, P. Warden, M. Wicke, Y. Yu, and X. Zheng, “TensorFlow: A system for large-scale machine learning,” in 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), November 2016, pp. 265–283.

- [3] T. Chen, M. Li, Y. Li, M. Lin, N. Wang, M. Wang, T. Xiao, B. Xu, C. Zhang, and Z. Zhang, “MXNet: A flexible and efficient machine learning library for heterogeneous distributed systems,” December 2015. [Online]. Available: https://arxiv.org/abs/1512.01274

- [4] F. Seide and A. Agarwal, “CNTK: Microsoft’s open-source deep-learning toolkit,” in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, August 2016, p. 2135.

- [5] Y. Kim, R. Daly, J. Kim, C. Fallin, J. H. Lee, D. Lee, C. Wilkerson, K. Lai, and O. Mutlu, “Flipping bits in memory without accessing them: An experimental study of DRAM disturbance errors,” in Proceeding of the 41st Annual International Symposium on Computer Architecuture, June 2014, pp. 361––372.

- [6] A. S. Rakin, Z. He, and D. Fan, “Bit-flip attack: Crushing neural network with progressive bit search,” in 2019 IEEE/CVF International Conference on Computer Vision (ICCV), October 2019, pp. 1211–1220.

- [7] N. Gupta and N. H. Vaidya, “Byzantine fault-tolerant parallelized stochastic gradient descent for linear regression,” in 2019 57th Annual Allerton Conference on Communication, Control, and Computing (Allerton), September 2019, pp. 415–420.

- [8] G. Damaskinos, E. M. El Mhamdi, R. Guerraoui, A. H. A. Guirguis, and S. L. A. Rouault, “Aggregathor: Byzantine machine learning via robust gradient aggregation,” in Conference on Systems and Machine Learning (SysML) 2019, March 2019, p. 19.

- [9] D. Yin, Y. Chen, K. Ramchandran, and P. Bartlett, “Defending against saddle point attack in Byzantine-robust distributed learning,” in Proceedings of the 36th International Conference on Machine Learning, June 2019, pp. 7074–7084.

- [10] ——, “Byzantine-robust distributed learning: Towards optimal statistical rates,” in Proceedings of the 35th International Conference on Machine Learning, July 2018, pp. 5650–5659.

- [11] C. Xie, O. Koyejo, and I. Gupta, “Generalized Byzantine-tolerant SGD,” March 2018. [Online]. Available: https://arxiv.org/abs/1802.10116

- [12] P. Blanchard, E. M. El Mhamdi, R. Guerraoui, and J. Stainer, “Machine learning with adversaries: Byzantine tolerant gradient descent,” in Advances in Neural Information Processing Systems, December 2017, pp. 119–129.

- [13] Y. Chen, L. Su, and J. Xu, “Distributed statistical machine learning in adversarial settings: Byzantine gradient descent,” Proc. ACM Meas. Anal. Comput. Syst., vol. 1, no. 2, December 2017.

- [14] K. Konstantinidis and A. Ramamoorthy, “ByzShield: An efficient and robust system for distributed training,” in Machine Learning and Systems 3 (MLSys 2021), April 2021, pp. 812–828.

- [15] Q. Yu, S. Li, N. Raviv, S. M. M. Kalan, M. Soltanolkotabi, and S. Avestimehr, “Lagrange coded computing: Optimal design for resiliency, security and privacy,” April 2019. [Online]. Available: https://arxiv.org/abs/1806.00939