Deterministic Team Problems with Signaling Incentive

Abstract

This paper considers linear quadratic team decision problems where the players in the team affect each other’s information structure through their decisions. Whereas the stochastic version of the problem is well known to be complex with nonlinear optimal solutions that are hard to find, the deterministic counterpart is shown to be tractable. We show that under a mild assumption, where the weighting matrix on the controller is chosen large enough, linear decisions are optimal and can be found efficiently by solving a semi-definite program.

Index Terms:

Team Decision Theory, Game Theory, Convex Optimization.

Notation

| The set of symmetric matrices. | |

| The set of symmetric positive semidefinite matrices. | |

| The set of symmetric positive definite matrices. | |

| The set of functions with , , , . | |

| Denotes the pseudo-inverse of the square matrix . | |

| Denotes the matrix with minimal number of columns spanning the nullspace of . | |

| The th block row of the matrix . | |

| The block element of in position. | |

| . | |

| . | |

| is the trace of the matrix . | |

| The set of Gaussian variables with mean and covariance . | |

I Introduction

The team problem is an optimization problem, where a number of decision makers (or players) make up a team, optimizing a common cost function with respect to some uncertainty representing nature. Each member of the team has limited information about the global state of nature. Furthermore, the team members could have different pieces of information, which makes the problem different from the one considered in classical optimization, where there is only one decision function that has access to the entire information available about the state of nature.

Team problems turned out to possess certain properties that are considerably different from those of standard optimization, even for specific problem structures such as the optimization of a quadratic cost in the state of nature and the decisions of the team members. In stochastic linear quadratic decision theory, it was believed for a while that certainty equivalence holds between estimation and optimal decision with complete information, even for team problems. The certainty-equivalence principle can be briefly explained as follows. First, assume that every team member has access to the information about the entire state of nature, and find the corresponding optimal decision for each member. Then, each member makes an estimate of the state of nature, which is in turn combined with the optimal decision obtained under the full information assumption. It turns out that this strategy does not yield an optimal solution (see [9]).

A general solution to static stochastic quadratic team problems was presented by Radner [9]. Radner’s result gave hope that some related problems of a dynamic nature could be solved using similar arguments. But in 1968, Witsenhausen [11] showed in his well-known paper that finding the optimal decision can be complex if the decision makers affect each other’s information. Witsenhausen considered a dynamic decision problem over two time steps to illustrate that difficulty. The dynamic problem can actually be written as a static team problem:

| minimize | |||

| subject to |

where and are Gaussian with zero mean and variance and , respectively. Here, we have two decision makers, one corresponding to , and the other to . Witsenhausen showed that the optimal decisions and are not linear because of the signaling/coding incentive of . Decision maker measures , and hence, its measurement is affected by . Decision maker tries to encode information about in its decision, which makes the optimal strategy complex.
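To make the signaling incentive concrete, the following Python sketch compares the best linear strategy with a simple two-point signaling strategy on the standard form of Witsenhausen's counterexample. The notation is an assumption made for this illustration and need not match the symbols used above: the initial state `x0` is Gaussian with standard deviation `sigma`, the first decision is `u1`, the intermediate state is `x1 = x0 + u1`, the second decision maker observes `y = x1 + v` with unit-variance Gaussian noise `v`, and the cost is `k^2 u1^2 + (x1 - u2)^2`. The values `k = 0.2` and `sigma = 5` are the ones customarily used for this comparison; the function names are hypothetical.

```python
import math
import random


def linear_cost(lam, k, sigma):
    """Exact expected cost when x1 = lam * x0 and u2 is the linear
    least-squares estimate of x1 from y = x1 + v (v has unit variance)."""
    s = (lam * sigma) ** 2  # variance of x1
    return k ** 2 * (lam - 1.0) ** 2 * sigma ** 2 + s / (s + 1.0)


def best_linear_cost(k, sigma, grid=2001):
    """Grid search over the gain lam in [0, 2]; outside this interval the
    first-stage cost alone is at least as large as at the endpoints."""
    return min(linear_cost(2.0 * i / (grid - 1), k, sigma) for i in range(grid))


def signaling_cost(k, sigma, samples=200_000, seed=0):
    """Monte Carlo estimate of the cost of a two-point signaling strategy:
    the first player moves the state to +sigma or -sigma (the sign of x0),
    and the second player uses the conditional mean sigma * tanh(sigma * y)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        x0 = rng.gauss(0.0, sigma)
        x1 = sigma if x0 >= 0.0 else -sigma
        u1 = x1 - x0
        y = x1 + rng.gauss(0.0, 1.0)
        u2 = sigma * math.tanh(sigma * y)
        total += k ** 2 * u1 ** 2 + (x1 - u2) ** 2
    return total / samples


if __name__ == "__main__":
    k, sigma = 0.2, 5.0
    print("best linear cost:", best_linear_cost(k, sigma))
    print("signaling cost  :", signaling_cost(k, sigma))
```

With these parameters the linear cost comes out close to 0.96, whereas the signaling strategy gives roughly 0.40 (a Monte Carlo estimate, so the trailing digits depend on the seed). The nonlinear strategy wins because the first player spends some control effort to make the state easy for the second player to decode, which is exactly the coding incentive described above.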

The problem above is actually an information theoretic problem. To see this, consider the slightly modified problem

| minimize | |||

| subject to |

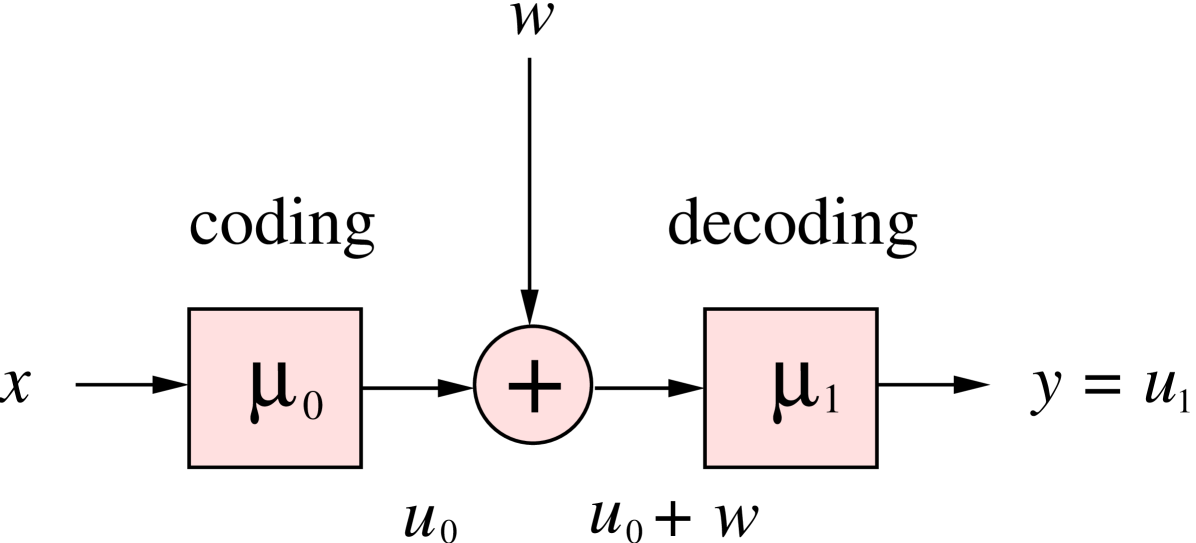

The modification made is that we removed from the objective function, and instead added a constraint to make sure that it has a limited variance (of course, we could set an arbitrary power limitation on the variance). The modified problem is exactly the Gaussian channel coding/decoding problem (see Figure 1). The optimal solution to Witsenhausen’s counterexample is still unknown. Even if we restrict the optimization problem to the set of linear decisions, there is still no known polynomial-time algorithm to find optimal solutions. Another interesting counterexample was recently given in [7].

In this paper, we consider the problem of distributed decision making with information constraints under linear quadratic settings. For instance, information constraints appear naturally when making decisions over networks. These problems can be formulated as team problems. Early results considered static team theory in stochastic settings [8], [9], [5]. In [2], the team problem with two team members was solved. The solution cannot be easily extended to more than two players since it uses the fact that the two members have common information, a property that does not necessarily hold for more than two players. The authors of [2] use the result to consider the two-player problem with one-step delayed measurement sharing with the neighbors, which is a special case of the partially nested information structure, where there is no signaling incentive. Also, a nonlinear team problem with two team members was considered in [1], where one of the team members is assumed to have full information whereas the other member has access only to partial information about the state of the world. Related team problems with exponential cost criterion were considered in [6]. Optimizing team problems with respect to affine decisions in a minimax quadratic cost was shown to be equivalent to stochastic team problems with exponential cost, see [3]. The connection is not clear when the optimization is carried out over nonlinear decision functions. In [4], a general solution was given for an arbitrary number of team members, where linear decisions were shown to be optimal and can be found by solving a linear matrix inequality. In the deterministic version of Witsenhausen’s counterexample, that is, minimizing the quadratic cost with respect to the worst case scenario of the state (instead of the assumption that is Gaussian), the linear decisions were shown to be optimal in [10].

We will show that for static linear quadratic minimax team problems, where the players in the team affect each other’s information structure through their decisions, linear decisions are optimal in general, and can be found by solving a linear matrix inequality.

II Main Results

The deterministic problem considered is a quadratic game between a team of players and nature. Each player has limited information that could be different from the other players in the team. This game is formulated as a minimax problem, where the team is the minimizer and nature is the maximizer. We show that if there is a solution to the static minimax team problem, then linear decisions are optimal, and we show how to find a linear optimal solution by solving a linear matrix inequality.

III Deterministic Team Problems with Signaling Incentive

Consider the following team decision problem

| (1) | ||||

| subject to | ||||

where and , for ,

is a quadratic cost given by

, , and

The players ,…, make up a team, which plays against nature represented by the vector , using . This problem is more complicated than the static team decision problem studied in [4], since it has the same flavour as that of the Witsenhausen counterexample that was presented in the introduction. We see that the measurement of decision maker could be affected by the other decision makers through the terms , .

Note that we have the equality which is equivalent to . Using this substitution of variable, the team problem (1) is equivalent to

| (2) |

Assumption 1

Theorem 1

Let be the value of the game (1) and suppose that Assumption 1 holds. Then the following statements hold:

(i) There exist linear decisions , , where the value is achieved.

(ii) If , then for any , a linear decision with that achieves is obtained by solving the linear matrix inequality

find subject to

Proof:

(i) Note that

Now introduce , , such that

and

| (3) | ||||

Then,

and Hence, we have that

Then, for any , there exists a decision function such that

for all . Under Assumption 1, we have that

for any . Thus, we can apply Theorem 1 in [4], which implies that there must exist linear decisions that can achieve any . By compactness, there must exist linear decisions that achieve .

(ii) Let for . Then

and the proof is complete. ∎

IV Linear Quadratic Control with Arbitrary Information Constraints

Consider the dynamic team decision problem

| (4) | ||||

| subject to | ||||

Now write and as

It is easy to see that the optimal control problem above is equivalent to a static team problem of the form (1). Thus, linear controllers are optimal under Assumption 1.
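The stacking step behind this equivalence can be sketched in Python for a scalar system. The dynamics `x[k+1] = a*x[k] + b*u[k] + w[k]` are an assumption made for this illustration (the exact form of (4) is not reproduced here), and the names `lift_scalar_dynamics`, `simulate`, and `apply_lifted` are hypothetical. The sketch builds the maps `F`, `G`, `H` such that the stacked trajectory satisfies `x = F*x0 + G u + H w`, and checks the result against a step-by-step simulation.

```python
def lift_scalar_dynamics(a, b, horizon):
    """Return (F, G, H) such that the stacked states x[1..N] satisfy
    x = F*x0 + G u + H w for x[k+1] = a*x[k] + b*u[k] + w[k],
    where u and w stack the indices 0..N-1."""
    F = [a ** (k + 1) for k in range(horizon)]
    # Row k describes x[k+1]; entry j is the effect of w[j] (zero if j > k).
    H = [[a ** (k - j) if j <= k else 0.0 for j in range(horizon)]
         for k in range(horizon)]
    G = [[b * h for h in row] for row in H]
    return F, G, H


def simulate(a, b, x0, u, w):
    """Step-by-step simulation of the same recursion, returning x[1..N]."""
    xs, x = [], x0
    for uk, wk in zip(u, w):
        x = a * x + b * uk + wk
        xs.append(x)
    return xs


def apply_lifted(F, G, H, x0, u, w):
    """Evaluate the stacked (static) description of the trajectory."""
    n = len(F)
    return [F[k] * x0 + sum(G[k][j] * u[j] + H[k][j] * w[j] for j in range(n))
            for k in range(n)]


if __name__ == "__main__":
    a, b, x0 = 0.5, 2.0, 1.0
    u = [1.0, -1.0, 0.5]
    w = [0.1, 0.2, -0.3]
    F, G, H = lift_scalar_dynamics(a, b, len(u))
    print(simulate(a, b, x0, u, w))
    print(apply_lifted(F, G, H, x0, u, w))
```

The lower-triangular structure of `G` and `H` encodes causality. Once the trajectory is written in this stacked form, the time index no longer plays a special role: each `u[k]` is simply one team member whose decision enters the quantities observed by later members.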

Example 1

Consider the deterministic version of the Witsenhausen counterexample presented in the introduction:

| s. t. | |||

Substitute , and in the inequality

Then, we get the equivalent problem

| s. t. |

Completing the squares gives the following equivalent inequality

For , we can search over , and we can use Theorem 1 to deduce that linear decisions are optimal, and can be computed by iteratively solving a linear matrix inequality, where the iterations are done with respect to . We find that
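The iteration over the scalar level can be organized as a bisection, sketched below in Python. Everything here is an illustrative assumption: the level is called `gamma`, feasibility is assumed to be monotone in `gamma` (if a level is achievable, so is every larger one), and `toy_oracle` is a hypothetical stand-in for the linear matrix inequality solver, checking only whether `gamma*I - M` is positive semidefinite for a fixed 2-by-2 symmetric matrix `M`. In an actual computation the oracle would be replaced by a call to a semidefinite programming solver.

```python
def bisect_level(is_feasible, lo, hi, tol=1e-6):
    """Return (up to tol) the smallest gamma in [lo, hi] for which
    is_feasible(gamma) is True, assuming feasibility is monotone in gamma."""
    if not is_feasible(hi):
        raise ValueError("upper bound is infeasible")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if is_feasible(mid):
            hi = mid   # mid is achievable, so tighten the upper bound
        else:
            lo = mid   # mid is not achievable, so raise the lower bound
    return hi


def toy_oracle(gamma, m=((2.0, 1.0), (1.0, 2.0))):
    """Stand-in feasibility test: is gamma*I - M positive semidefinite?
    For a 2x2 symmetric matrix this holds iff both diagonal entries and
    the determinant are nonnegative."""
    a = gamma - m[0][0]
    b = -m[0][1]
    c = gamma - m[1][1]
    return a >= 0.0 and c >= 0.0 and a * c - b * b >= 0.0


if __name__ == "__main__":
    print(bisect_level(toy_oracle, 0.0, 10.0))
```

For the toy matrix used here the smallest feasible level is its largest eigenvalue, 3, which gives a simple way to check the bisection logic before plugging in a real solver.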

For , we iterate with respect to , and we find optimal linear decisions given by

Example 2

Consider the deterministic counterpart of the multi-stage finite-horizon stochastic control problem that was considered in [7]:

subject to the dynamics

It is easy to check that and for (compare with Assumption 1). Thus, linear decisions are optimal. This is in contrast to the stochastic version, where linear decisions were not optimal for .

V Conclusions

We have considered the static team problem in deterministic linear quadratic settings where the team members may affect each other’s information. We have shown that decisions that are linear in the observations are optimal and can be found by solving a linear matrix inequality.

For future work, it would be interesting to consider the case where the measurements are given by , for an arbitrary matrix .

VI Acknowledgements

The author is grateful to Professor Anders Rantzer, Professor Bo Bernhardsson, and the reviewers for valuable comments and suggestions.

This work is supported by the Swedish Research Council.

References

- [1] P. Bernhard and N. Hovakimyan. Nonlinear robust control and minimax team problems. International Journal of Robust and Nonlinear Control, 9(9):239–257, 1999.

- [2] G. Didinsky and T. Basar. Minimax decentralized controllers for discrete-time linear systems. In 41st Conference on Decision and Control, pages 481–486, 1992.

- [3] C. Fan, J. L. Speyer, and C. R. Jaensch. Centralized and decentralized solutions of the linear-exponential-Gaussian problem. IEEE Trans. on Automatic Control, 39(10):1986–2003, 1994.

- [4] A. Gattami, B. Bernhardsson, and A. Rantzer. Robust team decision theory. IEEE Trans. on Automatic Control, 57(3):794–798, March 2012.

- [5] Y.-C. Ho and K.-C. Chu. Team decision theory and information structures in optimal control problems-Part I. IEEE Trans. on Automatic Control, 17(1), 1972.

- [6] J. Krainak, J. L. Speyer, and S. I. Marcus. Static team problems-Part I. IEEE Trans. on Automatic Control, 27(4):839–848, 1982.

- [7] G. M. Lipsa and N. C. Martins. Finite horizon optimal memoryless control of a delay in Gaussian noise: A simple counterexample. In IEEE Conference on Decision and Control, pages 1628–1635, December 2008.

- [8] J. Marschak. Elements for a theory of teams. Management Sci., 1:127–137, 1955.

- [9] R. Radner. Team decision problems. Ann. Math. Statist., 33(3):857–881, 1962.

- [10] M. Rotkowitz. Linear controllers are uniformly optimal for the Witsenhausen counterexample. In IEEE Conference on Decision and Control, pages 553–558, 2006.

- [11] H. S. Witsenhausen. A counterexample in stochastic optimum control. SIAM Journal on Control, 6(1):138–147, 1968.