Differentially Private ADMM Algorithms for Machine Learning

Abstract

In this paper, we study efficient differentially private alternating direction methods of multipliers (ADMM) via gradient perturbation for many machine learning problems. For smooth convex loss functions with (non)-smooth regularization, we propose the first differentially private ADMM (DP-ADMM) algorithm with a performance guarantee of $(\epsilon,\delta)$-differential privacy ($(\epsilon,\delta)$-DP). For the theoretical analysis, we use the Gaussian mechanism and the conversion relationship between Rényi Differential Privacy (RDP) and DP to perform a comprehensive privacy analysis of our algorithm. We then establish a new criterion to prove the convergence of the proposed algorithms, including DP-ADMM, and give the utility analysis of DP-ADMM. Moreover, we propose an accelerated DP-ADMM (DP-AccADMM) with Nesterov's acceleration technique. Finally, we conduct numerical experiments on many real-world datasets to show the privacy-utility tradeoff of the two proposed algorithms; the comparative analysis shows that DP-AccADMM converges faster and has better utility than DP-ADMM when the privacy budget is larger than a threshold.

Index Terms:

Differentially private, alternating direction method of multipliers (ADMM), gradient perturbation, momentum acceleration, Gaussian mechanism.

I Introduction

In the era of ‘Big Data’, much of people’s lives is recorded on the internet as various types of data, and personal privacy may be leaked when these data are released or shared. Data are a valuable resource for research institutions and decision-making departments and can greatly improve social services, but we must also protect the privacy of the users who provide them, since such data often contain personally identifiable information and other confidential information. Research institutions generally use these datasets to train machine learning models. When the models are made publicly available, attackers may exploit them to obtain individuals' private information. Thus, it is necessary to incorporate privacy-preserving techniques into the training process.

In recent years, privacy-preserving technologies have become very popular. Differential privacy (DP) [1], which provides rigorous guarantees for individual privacy by adding randomized noise, has been extensively studied in the literature [2, 3], and it has become the most widely recognized privacy-preserving technology in both academia and industry. Differential privacy has been applied in many fields, such as data mining, machine learning and deep learning. For instance, differentially private recommender systems have been extensively studied [4][5][6][7] to address privacy leakage during collaborative filtering. Besides, personalized online advertising [8], health data [9], face recognition [10][11], network trace analysis [12] and search logs [13] have all been studied with differential privacy for privacy preservation. Real-world applications have also been widely deployed by technology companies and government agencies, such as Google [14], Apple [15], Microsoft [16], and the US Census Bureau [17]. Thus, differential privacy plays an important role in privacy preservation today.

Empirical risk minimization (ERM) is a commonly used supervised learning problem. Let $D=\{\xi_1,\xi_2,\ldots,\xi_n\}$ be a dataset with $n$ samples, where $\xi_i$ denotes the $i$-th sample. Many machine learning problems such as ERM are formulated as the following minimization problem:

$$\min_{x\in\mathbb{R}^d}\; f(x)+g(x),\qquad f(x):=\frac{1}{n}\sum_{i=1}^{n}f_i(x), \tag{1}$$

where each $f_i(\cdot)$ is a smooth convex loss function, and $g(\cdot)$ is a simple convex (non)-smooth regularizer such as the $\ell_1$-norm or $\ell_2$-norm regularizer. Many differential privacy algorithms have been proposed to deal with ERM problems, such as DP-SGD [18], DP-SVRG [19], and DP-SRGD [20]. In contrast, this paper mainly considers the generalized ERM problem with more complex regularizers (e.g., $g(x)=\lambda_1\|Ax\|_1$ with a given matrix $A$ and a regularization parameter $\lambda_1>0$), such as graph-guided fused Lasso [21], generalized Lasso [22] and graph-guided support vector machine (SVM) [23].

The alternating direction method of multipliers (ADMM) is an efficient and popular optimization method for solving the generalized ERM problem. Although there are many works on differentially private ADMMs, most of them focus on distributed ADMMs, such as [24, 25, 26]. Distributed ADMMs are mostly suited to federated learning rather than centralized problems, and only a few works address centralized problems, using either centralized stochastic ADMMs [27] or objective-perturbed centralized deterministic ADMMs such as [28].

However, the gradients of stochastic ADMMs such as [23] contain their own noise due to random sampling [29], which makes it difficult to estimate the effect of the noise added into stochastic algorithms. Generally, stochastic differentially private algorithms need dedicated methods to estimate their privacy loss [18, 30], and rough estimates may lead to poor utility. As for where the noise is added, gradient perturbation is a better choice than output/objective perturbation for first-order algorithms [31]. Firstly, gradient perturbation only releases a noisy gradient at each iteration, so the utility of the algorithm is not severely affected. Secondly, objective perturbation often requires strong assumptions on the objective function, while gradient perturbation only needs to bound the sensitivity of the gradients. Moreover, for DP-ERM problems, gradient perturbation often achieves better empirical utility than output/objective perturbation. Thus, it is meaningful to study differentially private ADMM algorithms under gradient perturbation. To date, there is no gradient-perturbed differentially private work on centralized and deterministic ADMM algorithms. Therefore, in this paper, we focus on centralized and deterministic ADMMs and propose efficient gradient-perturbed differentially private ADMM algorithms for solving the generalized ERM problem (i.e., Problem (2) in Section II).

ADMM algorithms can deal with more complicated ERM problems, especially ERM problems with equality constraints, e.g., graph-guided logistic regression and graph-guided SVM problems. In this paper, we propose two efficient deterministic differentially private ADMM algorithms that satisfy $(\epsilon,\delta)$-differential privacy (DP), namely the differentially private ADMM (DP-ADMM) algorithm and the differentially private accelerated ADMM (DP-AccADMM) algorithm. Our algorithms can be applied to many real-world applications, such as finance, medical treatment, the Internet and transportation. Because differential privacy gives a quantitative bound on privacy leakage, attackers cannot obtain individual private information beyond this bound, even with the strongest background knowledge. That is, under our privacy guarantees, our algorithms satisfy $(\epsilon,\delta)$-DP.

In the first proposed algorithm (i.e., DP-ADMM), we replace $f(x)$ by its first-order approximation to obtain the gradient term $\nabla f(x_k)$, and then add Gaussian noise to it as gradient perturbation. We also give a privacy guarantee analysis that determines the noise variance added to the algorithm, which ensures adequate privacy protection. In particular, we use the relationship between Rényi differential privacy (RDP) and $(\epsilon,\delta)$-DP to obtain the privacy guarantee of our algorithm. Utility is an important indicator for an algorithm that seeks to preserve data privacy while maintaining an acceptable level of accuracy. Therefore, we provide a utility bound for our algorithm, which indicates how well the model can be trained. Unlike distributed differentially private ADMMs [25, 26], we define a new convergence criterion to analyze the convergence of our algorithm and derive our utility bound.

However, common ADMMs converge very slowly when approaching the optimal solution, and so does DP-ADMM. A common remedy is to introduce an acceleration technique into ADMMs. Among momentum acceleration methods [33], the Nesterov accelerated method [32, 34] is well known and has a faster convergence rate than traditional momentum acceleration methods [35]. In particular, Goldstein et al. [36] proved that their ADMM with Nesterov acceleration has a convergence rate of $O(1/k^2)$, while traditional non-accelerated ADMMs only have a convergence rate of $O(1/k)$, where $k$ is the number of iterations.

Therefore, in the second proposed algorithm (i.e., DP-AccADMM), we use the Nesterov acceleration technique to accelerate DP-ADMM, since the convergence of non-accelerated ADMMs, including DP-ADMM, slows down gradually as the number of iterations increases, especially when the dataset is huge. We then conduct experiments to compare the performance of DP-ADMM and DP-AccADMM. Our comparative analysis shows that DP-AccADMM converges much faster than DP-ADMM and retains good performance on test data once the privacy budget grows beyond a threshold.

Our contributions of this paper are summarized as follows:

- Based on deterministic ADMMs, we propose two efficient differentially private ADMM algorithms for solving (non)-smooth convex optimization problems with privacy protection. In the proposed algorithms, each subproblem is solved exactly in closed form by using a first-order approximation.

- We use the relationship between RDP and $(\epsilon,\delta)$-DP to obtain the privacy guarantee of our DP-ADMM algorithm. Moreover, we design a new convergence criterion to complete the convergence analysis of DP-ADMM, and we derive a non-trivial utility bound and gradient complexity for the algorithm. To the best of our knowledge, we are the first to give a theoretical analysis of gradient-perturbed differentially private ADMM algorithms in the centralized and deterministic setting.

- We empirically show the effectiveness of the proposed algorithms through extensive evaluations on graph-guided fused Lasso models, comparing them with their non-private counterparts. The results show that DP-AccADMM converges much faster than DP-ADMM. In particular, the test performance of DP-AccADMM keeps improving as the privacy budget increases and eventually exceeds that of DP-ADMM.

The remainder of this paper is organized as follows. Section II presents the problem setting and discusses recent research advances in differential privacy methods. Section III introduces the preliminaries of differential privacy. In Section IV, we propose two new efficient differentially private ADMM algorithms and analyze their privacy guarantees. Experimental results in Section V show the effectiveness of our algorithms. In Section VI, we conclude this paper and discuss future work.

II Related Work

In this section, we present the formulation considered in this paper, and review some differential privacy methods.

II-A Problem Setting

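For solving the generalized ERM problem (1) with a smooth convex function $f(x)$ and a complex sparsity-inducing regularizer $g(x)=\lambda_1\|Ax\|_1$, we introduce an auxiliary variable $y$ and an equality constraint $y=Ax$, and reformulate Problem (1) as follows:

$$\min_{x\in\mathbb{R}^{d},\,y\in\mathbb{R}^{p}}\; f(x)+h(y),\qquad \text{s.t. } Ax+By=c, \tag{2}$$

where $A\in\mathbb{R}^{m\times d}$, $B\in\mathbb{R}^{m\times p}$, and $c\in\mathbb{R}^{m}$ (Problem (1) corresponds to $B=-I$, $c=0$, and $h(y)=\lambda_1\|y\|_1$). Therefore, this paper aims to propose efficient differentially private ADMM algorithms for solving the more general equality-constrained minimization problem (2).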

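Let $\beta>0$ be a penalty parameter, and $\lambda$ be a dual variable. Then the augmented Lagrangian function of Problem (2) is:

$$\mathcal{L}_\beta(x,y,\lambda)=f(x)+h(y)+\langle\lambda,\,Ax+By-c\rangle+\frac{\beta}{2}\|Ax+By-c\|^2.$$

In an alternating (sequential) fashion, at iteration $k$, ADMM performs the following update rules:

$$y_{k+1}=\arg\min_{y}\;\mathcal{L}_\beta(x_k,y,\lambda_k), \tag{3}$$

$$x_{k+1}=\arg\min_{x}\;\mathcal{L}_\beta(x,y_{k+1},\lambda_k), \tag{4}$$

$$\lambda_{k+1}=\lambda_k+\beta\,(Ax_{k+1}+By_{k+1}-c). \tag{5}$$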

This is the classic update form of ADMM [37]. The $x$-update (4) usually has high computational complexity, especially when the dataset is very large, because it requires minimizing the full loss $f(x)$ over all $n$ samples.
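To make updates (3)-(5) concrete, the following is a minimal sketch on an illustrative instance that is our own choice, not one from the paper: $f(x)=\frac{1}{2n}\|Mx-b\|^2$ (least squares, so that the exact $x$-update has a closed form), $h(y)=\lambda_1\|y\|_1$, $B=-I$ and $c=0$. The function names are hypothetical. Note that the exact $x$-update needs a linear solve whose matrix involves all $n$ samples, which is the cost the paragraph above refers to.

```python
import numpy as np


def soft_threshold(v, tau):
    """Elementwise proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def admm_l1_sketch(M, b, A, lam1, beta=1.0, iters=500):
    """Classic ADMM (3)-(5) for min_x (1/2n)||Mx - b||^2 + lam1 * ||Ax||_1.

    Assumed instance of Problem (2): h(y) = lam1 * ||y||_1, B = -I, c = 0,
    i.e. the constraint is Ax - y = 0.
    """
    n, d = M.shape
    m = A.shape[0]
    x, y, lam = np.zeros(d), np.zeros(m), np.zeros(m)
    # Exact x-subproblem (4): a d x d system built from all n samples.
    H = M.T @ M / n + beta * A.T @ A
    Mtb = M.T @ b / n
    for _ in range(iters):
        # (3): argmin_y lam1*||y||_1 + <lam, Ax - y> + (beta/2)||Ax - y||^2
        y = soft_threshold(A @ x + lam / beta, lam1 / beta)
        # (4): set the gradient of L_beta with respect to x to zero
        x = np.linalg.solve(H, Mtb - A.T @ lam + beta * A.T @ y)
        # (5): dual ascent on the constraint residual Ax - y
        lam = lam + beta * (A @ x - y)
    return x, y
```

With $A=I$ this reduces to an ADMM solver for the Lasso; at convergence $x$ and $y$ coincide and $x$ satisfies the Lasso optimality conditions.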

II-B Related Work

As for differential privacy, there are three main types of perturbation used to solve empirical risk minimization problems under differential privacy [2, 3]. Output perturbation perturbs the model parameters. For instance, [38] showed that introducing output perturbation can make $k$-anonymous algorithms satisfy differential privacy, and [39] analyzed the sensitivity of the optimal solution between neighboring databases. Objective perturbation perturbs the objective function being trained. [40] proposed an algorithm that introduces objective perturbation to solve ERM problems and proved that it satisfies $\epsilon$-DP. Gradient perturbation perturbs the gradients used to update the parameters in first-order optimization methods. For instance, [19] proposed DP-SVRG by introducing gradient perturbation into the SVRG operator of [29], and used the moments accountant to establish both its privacy guarantee and utility guarantee.

In recent years, there have been some works on ADMM algorithms that satisfy differential privacy, but most of them concern distributed ADMM algorithms. For instance, [25] analyzed the relationship between the privacy guarantee and the utility guarantee of their differentially private distributed ADMM algorithms. Only a few works have focused on differentially private centralized ADMM algorithms. For example, [41] proposed two stochastic ADMM algorithms that satisfy Rényi differential privacy (RDP) and provided their privacy guarantees. However, there is no work on differentially private centralized and deterministic ADMM algorithms. Therefore, in this paper, we focus on differentially private centralized deterministic ADMM algorithms and propose two efficient algorithms, DP-ADMM and DP-AccADMM, with privacy guarantees.

III Preliminaries

Notations. Throughout this paper, $\|\cdot\|$ is the standard Euclidean norm, $\|\cdot\|_1$ denotes the $\ell_1$-norm, i.e., $\|x\|_1=\sum_i|x_i|$, and $\|A\|_2$ is the spectral norm of a matrix $A$ (i.e., its largest singular value). We denote by $\nabla f(x)$ the gradient of $f(x)$ if it is differentiable, or any subgradient of $f(\cdot)$ at $x$ if $f(\cdot)$ is only Lipschitz continuous. $D$ is a dataset of $n$ samples.

III-A Differential Privacy

[1] introduced the formal notion of differential privacy as follows.

Definition 1 (Differential privacy).

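A randomized mechanism $\mathcal{M}$ is $(\epsilon,\delta)$-differentially private ($(\epsilon,\delta)$-DP) if for all neighboring datasets $D, D'$ differing by one element and for all events $S$ in the output space of $\mathcal{M}$, we have

$$\Pr[\mathcal{M}(D)\in S]\le e^{\epsilon}\,\Pr[\mathcal{M}(D')\in S]+\delta.$$

And when $\delta=0$, $\mathcal{M}$ is $\epsilon$-differentially private ($\epsilon$-DP).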

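Definition 2 ($\ell_2$-sensitivity [2]).

For a function $q:\mathcal{D}\to\mathbb{R}^d$, the $\ell_2$-sensitivity of $q$ is defined as follows:

$$\Delta_2(q)=\max_{D,D'}\|q(D)-q(D')\|_2, \tag{6}$$

where $D, D'$ are a pair of neighboring datasets, which differ in a single entry.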
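As an illustration of Definition 2 for the quantity that gradient perturbation protects, consider the averaged gradient $q(D)=\nabla f(x)=\frac{1}{n}\sum_{j=1}^{n}\nabla f_j(x)$. The short derivation below assumes (our illustrative assumption, with a hypothetical constant $C$) that every per-sample gradient satisfies $\|\nabla f_j(x)\|\le C$, and that $D'$ is obtained from $D$ by replacing a single sample $\xi_i$ with $\xi_i'$, whose loss we write $f_i'$. All terms with $j\neq i$ cancel because the two datasets share those samples:

$$
\Delta_2(\nabla f)=\max_{D,D'}\Big\|\frac{1}{n}\sum_{j=1}^{n}\nabla f_j(x)-\frac{1}{n}\sum_{j=1}^{n}\nabla f'_j(x)\Big\|
=\frac{1}{n}\max_{\xi_i,\xi_i'}\big\|\nabla f_i(x)-\nabla f'_i(x)\big\|
\le\frac{\|\nabla f_i(x)\|+\|\nabla f'_i(x)\|}{n}\le\frac{2C}{n}.
$$

The sensitivity therefore shrinks as $1/n$, which is why the required noise level decreases for larger datasets.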

Sensitivity is a key quantity that determines the amount of noise added to the algorithm. That is, to achieve $(\epsilon,\delta)$-DP for a function $q$, we usually use the following Gaussian mechanism, where the added noise is sampled from a Gaussian distribution whose standard deviation is proportional to the $\ell_2$-sensitivity of $q$.

Definition 3 (Gaussian mechanism [2]).

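Given a function $q:\mathcal{D}\to\mathbb{R}^d$, the Gaussian mechanism is defined as follows:

$$\mathcal{M}(D)=q(D)+u,$$

where $u$ is drawn from the Gaussian distribution $\mathcal{N}(0,\sigma^2 I_d)$ with $\sigma\ge\sqrt{2\ln(1.25/\delta)}\,\Delta_2(q)/\epsilon$ (for $\epsilon\in(0,1)$, this mechanism satisfies $(\epsilon,\delta)$-DP). Here $\Delta_2(q)$ is the $\ell_2$-sensitivity of the function $q$, i.e., $\Delta_2(q)=\max_{D,D'}\|q(D)-q(D')\|_2$.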
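A minimal Python sketch of the Gaussian mechanism, using the classical calibration $\sigma=\Delta_2(q)\sqrt{2\ln(1.25/\delta)}/\epsilon$ from Dwork and Roth [2], may help fix ideas. This is an illustration rather than code from the paper: the function names are hypothetical, the classical bound is valid only for $\epsilon\in(0,1)$, and the proposed algorithms calibrate their noise through RDP accounting instead.

```python
import math

import numpy as np


def classical_gaussian_sigma(l2_sensitivity, epsilon, delta):
    """Noise scale of the classical Gaussian mechanism (valid for 0 < epsilon < 1)."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("the classical bound assumes 0 < epsilon < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return l2_sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def gaussian_mechanism(value, l2_sensitivity, epsilon, delta, rng):
    """Release q(D) + u with u ~ N(0, sigma^2 I), sigma from the classical bound."""
    sigma = classical_gaussian_sigma(l2_sensitivity, epsilon, delta)
    value = np.asarray(value, dtype=float)
    return value + rng.normal(0.0, sigma, size=value.shape)
```

For example, `gaussian_mechanism(q_value, 1.0, 0.5, 1e-5, np.random.default_rng(0))` perturbs a query answer with sensitivity 1 under $\epsilon=0.5$ and $\delta=10^{-5}$.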

III-B Rényi Differential Privacy

Although the definition of $(\epsilon,\delta)$-DP is widely used in objective and output perturbation methods, the notion of Rényi differential privacy (RDP) [42] is more suitable for gradient perturbation methods, including the proposed algorithms.

Definition 4 (Rényi divergence [42]).

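For two probability distributions $P$ and $Q$ defined on $\mathcal{R}$, the Rényi divergence of order $\alpha>1$ is defined as follows:

$$D_\alpha(P\,\|\,Q)=\frac{1}{\alpha-1}\log\mathbb{E}_{x\sim Q}\left[\left(\frac{P(x)}{Q(x)}\right)^{\alpha}\right].$$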

Definition 5 (Rényi Differential Privacy (RDP) [42]).

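A randomized mechanism $\mathcal{M}$ is $(\alpha,\epsilon)$-Rényi differentially private ($(\alpha,\epsilon)$-RDP) if for all neighboring datasets $D, D'$, we have

$$D_\alpha\big(\mathcal{M}(D)\,\|\,\mathcal{M}(D')\big)\le\epsilon.$$

That is, the Rényi divergence of order $\alpha$ between the output distributions of $\mathcal{M}$ on any two neighboring datasets is at most $\epsilon$.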

Definition 6 (Rényi Gaussian Mechanism [42]).

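Given a function $q$, the Gaussian mechanism $\mathcal{M}(D)=q(D)+u$ with $u\sim\mathcal{N}(0,\sigma^2 I_d)$ satisfies $(\alpha,\epsilon)$-RDP, where $\epsilon=\alpha\Delta_2^2(q)/(2\sigma^2)$.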
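As a sanity check of Definition 6 under our own illustrative setup (not from the paper), the sketch below numerically evaluates the Rényi divergence of Definition 4 between two one-dimensional Gaussians $\mathcal{N}(\mu_p,\sigma^2)$ and $\mathcal{N}(\mu_q,\sigma^2)$, whose means differ by the sensitivity $\Delta_2=|\mu_p-\mu_q|$, and compares it with the closed form $\alpha\Delta_2^2/(2\sigma^2)$. The grid width and resolution are arbitrary choices that are adequate for moderate $\alpha$ and $\sigma$.

```python
import numpy as np


def renyi_divergence_numeric(mu_p, mu_q, sigma, alpha, half_width=60.0, num=400001):
    """Riemann-sum estimate of D_alpha(N(mu_p, sigma^2) || N(mu_q, sigma^2))."""
    x = np.linspace(-half_width, half_width, num)
    dx = x[1] - x[0]
    log_norm = np.log(sigma * np.sqrt(2.0 * np.pi))
    log_p = -0.5 * ((x - mu_p) / sigma) ** 2 - log_norm
    log_q = -0.5 * ((x - mu_q) / sigma) ** 2 - log_norm
    # E_Q[(P/Q)^alpha] = integral of p^alpha * q^(1 - alpha), computed in log space
    log_integrand = alpha * log_p + (1.0 - alpha) * log_q
    peak = log_integrand.max()
    log_integral = peak + np.log(np.exp(log_integrand - peak).sum() * dx)
    return log_integral / (alpha - 1.0)


def gaussian_rdp_epsilon(l2_sensitivity, sigma, alpha):
    """Closed-form RDP level of the Gaussian mechanism: alpha * Delta^2 / (2 sigma^2)."""
    return alpha * l2_sensitivity ** 2 / (2.0 * sigma ** 2)
```

For $\mu_p=1$, $\mu_q=0$, $\sigma=2$ and $\alpha=3$, both functions give $3/8=0.375$ up to numerical error.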

III-C Nesterov’s Accelerated Method

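In [32], Nesterov presented a first-order minimization scheme with a global convergence rate of $O(1/k^2)$ for solving Problem (1). This convergence rate is provably optimal for the class of Lipschitz differentiable functionals. As shown in Algorithm 1, for an objective $F$ with step size $\tau$, the Nesterov method accelerates gradient descent by using an overrelaxation step as follows:

$$x_k=y_k-\tau\nabla F(y_k),\qquad y_{k+1}=x_k+\frac{\alpha_k-1}{\alpha_{k+1}}\,(x_k-x_{k-1}),$$

where $\alpha_{k+1}=\big(1+\sqrt{1+4\alpha_k^2}\big)/2$ with the initial value $\alpha_1=1$.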
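The overrelaxation scheme above can be sketched in Python for a smooth objective, next to plain gradient descent for comparison. This is a minimal illustration, assuming a step size $\tau=1/L$ for an $L$-Lipschitz gradient; the function names are hypothetical.

```python
import numpy as np


def gradient_descent(grad, x0, step, iters):
    """Plain gradient descent x_{k+1} = x_k - step * grad(x_k)."""
    x = x0.copy()
    for _ in range(iters):
        x = x - step * grad(x)
    return x


def nesterov(grad, x0, step, iters):
    """Nesterov's method: x_k = y_k - step*grad(y_k), then overrelax to y_{k+1}."""
    x_prev, y, alpha = x0.copy(), x0.copy(), 1.0  # alpha_1 = 1
    for _ in range(iters):
        x = y - step * grad(y)
        alpha_next = (1.0 + np.sqrt(1.0 + 4.0 * alpha ** 2)) / 2.0
        # overrelaxation (momentum) step with weight (alpha_k - 1) / alpha_{k+1}
        y = x + (alpha - 1.0) / alpha_next * (x - x_prev)
        x_prev, alpha = x, alpha_next
    return x_prev
```

On an ill-conditioned quadratic such as $F(x)=\frac{1}{2}(x_1^2+10^{-3}x_2^2)$ with step size 1, the Nesterov iterate after a few hundred iterations reaches a noticeably smaller objective value than gradient descent, in line with the $O(1/k^2)$ versus $O(1/k)$ rates.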

In 2014, Goldstein et al. [36] proposed an accelerated ADMM algorithm (AccADMM) by introducing Nesterov acceleration into ADMM, and proved that it has a global convergence rate of $O(1/k^2)$ for strongly convex problems.

IV Differentially Private ADMMs

In this section, we propose two new deterministic differentially private ADMM algorithms for many machine learning problems, such as $\ell_1$-norm regularized problems and graph-guided fused Lasso. The proposed algorithms protect privacy by gradient perturbation. In particular, the second algorithm (DP-AccADMM) introduces Nesterov acceleration into the first algorithm (DP-ADMM). We also provide privacy guarantees for the proposed algorithms.

IV-A Differentially Private ADMM

The algorithmic steps of our deterministic differentially private ADMM (DP-ADMM) algorithm for solving the equality-constrained minimization problem (2) are presented in Algorithm 2.

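Analogous to the general ADMM algorithm, our DP-ADMM algorithm updates the variables in an alternating fashion. However, when updating $x$, we replace $f(x)$ by its first-order approximation at $x_k$ with Gaussian noise, i.e., $f(x_k)+\langle\nabla f(x_k)+u_k,\,x-x_k\rangle$, where $u_k\sim\mathcal{N}(0,\sigma^2 I_d)$ is the added Gaussian noise and $\sigma^2$ is the noise variance determined by the privacy guarantee via the Gaussian mechanism. We then add a squared-norm term to obtain the following proximal update rule of $x$:

$$x_{k+1}=\arg\min_{x}\Big\{\langle\nabla f(x_k)+u_k,\,x\rangle+\langle\lambda_k,\,Ax+By_{k+1}-c\rangle+\frac{\beta}{2}\|Ax+By_{k+1}-c\|^2+\frac{1}{2\eta}\|x-x_k\|_G^2\Big\}, \tag{7}$$

where $\eta$ is a step size or learning rate, , to ensure that , and $\|x-x_k\|_G^2=(x-x_k)^\top G\,(x-x_k)$ with a given positive semi-definite matrix $G$ as in [23, 43]. This squared-norm term keeps adjacent iterates (i.e., $x_{k+1}$ and $x_k$) close to each other and prevents the noise from affecting the iterates too much.

By using the linearized proximal point method [23], we obtain the closed-form solution of $x_{k+1}$ in Eq. (7) as follows:

$$x_{k+1}=\Big(\beta A^\top A+\frac{G}{\eta}\Big)^{-1}\Big[\frac{G}{\eta}x_k-\nabla f(x_k)-u_k-A^\top\lambda_k-\beta A^\top(By_{k+1}-c)\Big].$$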

As shown in Algorithm 2, the update rules of $y$ in Eq. (3) and $\lambda$ in Eq. (5) remain unchanged in DP-ADMM. Here, $h(y)$ is the regularization term used in many machine learning problems, e.g., the sparsity-inducing term $\lambda_1\|y\|_1$. For instance, if it is the $\ell_1$-norm regularizer, the closed-form solution can easily be obtained by the soft-thresholding operator [44], and if it is the (squared) $\ell_2$-norm regularizer, the closed-form solution can be obtained by setting the derivative to zero.
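One iteration of DP-ADMM, as described above, can be sketched as follows. This is a hypothetical illustration rather than the authors' implementation: it assumes the logistic loss, $h(y)=\lambda_1\|y\|_1$, $B=-I$, $c=0$, $G=I$ in Eq. (7), and a noise level `sigma` that is supplied by a privacy accountant rather than computed here.

```python
import numpy as np


def soft_threshold(v, tau):
    """Elementwise proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def logistic_grad(x, features, labels):
    """Gradient of f(x) = (1/n) * sum_i log(1 + exp(-b_i * a_i^T x))."""
    margins = labels * (features @ x)
    # derivative of log(1 + exp(-z)) is -1 / (1 + exp(z)); clip to avoid overflow
    coeff = -labels / (1.0 + np.exp(np.clip(margins, -50.0, 50.0)))
    return features.T @ coeff / features.shape[0]


def dp_admm_sketch(features, labels, A, lam1, beta, eta, sigma, iters, seed=0):
    """Hypothetical DP-ADMM sketch for min f(x) + lam1*||y||_1 s.t. Ax - y = 0.

    Assumptions: B = -I, c = 0, G = I in Eq. (7), logistic loss, and a fixed
    noise standard deviation sigma chosen elsewhere by a privacy accountant.
    """
    rng = np.random.default_rng(seed)
    d, m = features.shape[1], A.shape[0]
    x, y, lam = np.zeros(d), np.zeros(m), np.zeros(m)
    # Matrix of the linearized x-subproblem, beta*A^T A + G/eta with G = I.
    H = beta * A.T @ A + np.eye(d) / eta
    for _ in range(iters):
        # Eq. (3): y-update in closed form via soft-thresholding.
        y = soft_threshold(A @ x + lam / beta, lam1 / beta)
        # Gradient perturbation: full gradient plus u_k ~ N(0, sigma^2 I_d).
        noisy_grad = logistic_grad(x, features, labels) + sigma * rng.standard_normal(d)
        # Eq. (7): closed-form solution of the linearized proximal x-update.
        x = np.linalg.solve(H, x / eta - noisy_grad - A.T @ lam + beta * A.T @ y)
        # Eq. (5): dual update on the constraint residual Ax - y.
        lam = lam + beta * (A @ x - y)
    return x, y
```

$G=I$ is chosen here only for readability. In the linearized ADMM literature, a common alternative is $G=\gamma I-\eta\beta A^\top A$ (positive semi-definite when $\gamma\ge\eta\beta\|A\|_2^2$), which makes the system matrix a multiple of the identity and removes the linear solve.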

IV-B Privacy Guarantee Analysis

In this subsection, we theoretically analyze both privacy guarantee and utility guarantee of DP-ADMM. To facilitate our discussion, we first make the following basic assumptions.

Assumption 1.

For a convex and Lipschitz-smooth function , there exists a constant such that for any .

Assumption 2.

The matrix has full row rank. It makes sure that the matrix has a pseudo-inverse.

Through the RDP Gaussian mechanism, we obtain the relationship between the RDP parameters $(\alpha,\epsilon)$ and the variance $\sigma^2$ of the added noise. Then, by the conversion from RDP to $(\epsilon,\delta)$-DP, we obtain the relationship between $\epsilon$ and $\delta$. Combining the two gives the required Gaussian noise variance.
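The accounting chain just described can be reproduced numerically with a short sketch. It is illustrative only and need not match the constants of Eq. (8): it assumes a per-iteration sensitivity $\Delta_2=2C/n$ (with a hypothetical per-sample gradient bound $C$), applies the RDP Gaussian mechanism at each of $T$ iterations, composes additively, and converts with $\epsilon_{\mathrm{DP}}=\epsilon_{\mathrm{RDP}}+\log(1/\delta)/(\alpha-1)$ (Lemmas 3, 2 and 1 in Appendix A). Writing $\rho=T\Delta_2^2/(2\sigma^2)$ and $L=\log(1/\delta)$, the converted level $\rho\alpha+L/(\alpha-1)$ is minimized at $\alpha=1+\sqrt{L/\rho}$, which yields the closed form used below.

```python
import math


def rdp_to_dp_epsilon(rdp_eps, alpha, delta):
    """Conversion: (alpha, eps)-RDP implies (eps + log(1/delta)/(alpha - 1), delta)-DP."""
    return rdp_eps + math.log(1.0 / delta) / (alpha - 1.0)


def dp_epsilon_of_gaussian_iterations(sigma, sensitivity, iters, delta, alpha):
    """Compose `iters` Gaussian steps in RDP, then convert to (eps, delta)-DP."""
    per_step = alpha * sensitivity ** 2 / (2.0 * sigma ** 2)
    return rdp_to_dp_epsilon(iters * per_step, alpha, delta)


def noise_sigma_for_budget(epsilon, delta, sensitivity, iters):
    """Smallest sigma certified by this RDP route, and the order alpha achieving it.

    Minimizing rho*alpha + L/(alpha - 1) over alpha gives rho + 2*sqrt(rho*L);
    setting that equal to epsilon gives sqrt(rho) = sqrt(L + epsilon) - sqrt(L).
    """
    log_inv_delta = math.log(1.0 / delta)
    rho = (math.sqrt(log_inv_delta + epsilon) - math.sqrt(log_inv_delta)) ** 2
    sigma = math.sqrt(iters * sensitivity ** 2 / (2.0 * rho))
    best_alpha = 1.0 + math.sqrt(log_inv_delta / rho)
    return sigma, best_alpha
```

For example, with hypothetical values $n=10{,}000$ and $C=1$ (so $\Delta_2=2\times10^{-4}$), $T=100$, $\epsilon=1$ and $\delta=10^{-5}$, this gives $\sigma\approx0.0098$ at order $\alpha\approx24.5$; any other order certifies a larger $\epsilon$ for the same $\sigma$.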

Theorem 1 (Privacy guarantee).

DP-ADMM satisfies $(\epsilon,\delta)$-differential privacy for some constants $\epsilon$ and $\delta$ if

| (8) |

where .

The detailed proof of Theorem 1 is given in Appendix A.

Let () be an optimal solution of Problem (2). Different from distributed differential privacy ADMMs [25, 26], by constructing the following convergence criterion ,

where and , we can complete the convergence analysis of DP-ADMM. Then by introducing the noise variance term and finding the number of iterations required to reach the stopping criterion, we can get the utility bound and gradient complexity of DP-ADMM. The utility bound is an important indicator to measure the performance of differential privacy algorithms including DP-ADMM. It usually reflects the minimum value that the algorithm can converge to. The lower the utility bound is, the smaller the loss can decrease and the better the trained model is.

Gradient complexity is the number of gradient computations required by the algorithm to reach the utility bound. It is usually proportional to the running time of the algorithm and is related to its convergence rate: the smaller the gradient complexity, the fewer gradients need to be computed.

Theorem 2 (Utility guarantee).

In DP-ADMM, let be defined in Eq. (8), , and . Then, if the number of iterations , we can get the utility bound of our DP-ADMM algorithm as follows:

| (9) |

Moreover, the gradient complexity of our DP-ADMM algorithm is .

The detailed proof of Theorem 2 can be found in Appendix B. Theorems 1 and 2 give the privacy and utility guarantees of DP-ADMM: Theorem 1 specifies the noise variance required for the algorithm to satisfy differential privacy, and Theorem 2 bounds the loss value that the algorithm can reach. Compared with the theoretical analysis of traditional ADMMs, the convergence rate of DP-ADMM does not change. This means that differential privacy protection does not affect the convergence rate of the optimization algorithm, i.e., how fast it converges, but only the value it converges to.

IV-C Differentially Private Accelerated ADMM

Non-accelerated ADMMs, including DP-ADMM, can solve many machine learning problems with equality constraints, but, as in the non-private case, they do not converge fast enough, and convergence becomes very slow near the exact solution. Therefore, we propose an accelerated version of our DP-ADMM algorithm, called DP-AccADMM.

Inspired by the accelerated ADMM algorithm [36] based on the Nesterov method, we introduce the Nesterov acceleration technique into our DP-ADMM algorithm. The detailed steps of our differentially private accelerated ADMM (DP-AccADMM) algorithm are presented in Algorithm 3. In particular, we add a weight coefficient during the iterations to linearly combine two adjacent iterates, so that the current iterate is updated using the information of the previous iterate. That is, the current descent direction combines the current optimal direction with the previous descent direction, which moves the current iterate a further distance along the previous descent direction. Note that the weight coefficient for momentum acceleration changes as the iterations proceed. In our DP-AccADMM algorithm, we set the weight coefficient at the $k$-th iteration as follows:

$$\alpha_{k+1}=\frac{1+\sqrt{1+4\alpha_k^2}}{2},$$

where $\alpha_1=1$. The momentum-accelerated update rule of $x$ is defined as:

$$\hat{x}_{k+1}=x_{k+1}+\frac{\alpha_k-1}{\alpha_{k+1}}\,(x_{k+1}-x_k). \tag{10}$$

Such an accelerated scheme is consistent with the acceleration method proposed by Nesterov [34] and the accelerated ADMM algorithm proposed by Goldstein et al. [36]. In addition, the dual variable $\lambda$ follows the same accelerated scheme as $x$, as shown in Step 7 of Algorithm 3.
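The weight sequence and the extrapolation step of DP-AccADMM can be sketched as two small helpers. This is a minimal illustration under our assumptions: the weight $(\alpha_k-1)/\alpha_{k+1}$ with $\alpha_1=1$ follows the Nesterov/Goldstein scheme, the same helper is applied to both $x$ and $\lambda$ as the text states, and the function names are hypothetical.

```python
import math

import numpy as np


def momentum_weights(num_iters):
    """Weights (alpha_k - 1) / alpha_{k+1}, alpha_{k+1} = (1 + sqrt(1 + 4 alpha_k^2)) / 2."""
    alpha = 1.0  # alpha_1 = 1
    weights = []
    for _ in range(num_iters):
        alpha_next = (1.0 + math.sqrt(1.0 + 4.0 * alpha ** 2)) / 2.0
        weights.append((alpha - 1.0) / alpha_next)
        alpha = alpha_next
    return weights


def extrapolate(curr, prev, weight):
    """Momentum step hat_z = z_{k+1} + w * (z_{k+1} - z_k), used for x and lambda alike."""
    return curr + weight * (curr - prev)
```

The first weight is 0, so the first iteration coincides with DP-ADMM; the weights then increase monotonically toward 1, so later iterates move further along the previous direction.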

Although we do not give a theoretical analysis of DP-AccADMM, we conduct many experiments to compare DP-AccADMM with DP-ADMM under the same noise level given by Theorem 1. We report the experimental results and discuss them in the next section.

V Experimental Results

In this section, we evaluate the performance of our DP-ADMM and DP-AccADMM algorithms on four publicly available datasets, as shown in Table I. We use the two algorithms to solve the convex graph-guided fused Lasso problem and compare them with their non-private counterparts, ADMM and AccADMM. We first show the convergence speed of all the methods in terms of CPU time (seconds) during training, and then show the training and test performance as the privacy parameter $\epsilon$ changes.

V-A Graph-Guided Fused Lasso

To evaluate the performance of the proposed algorithms, we consider the following $\ell_1$-norm regularized graph-guided fused Lasso problem:

$$\min_{x}\;\frac{1}{n}\sum_{i=1}^{n}f_i(x)+\lambda_1\|Ax\|_1, \tag{11}$$

where $f_i(x)$ is the logistic loss function on the feature-label pair $(a_i,b_i)$, i.e., $f_i(x)=\log\big(1+\exp(-b_i a_i^\top x)\big)$, and $\lambda_1$ is the regularization parameter. As in [43, 45, 46], we set $A=[\mathcal{G};I]$, where $\mathcal{G}$ is the sparsity pattern of the graph obtained by sparse inverse covariance selection [47]. We use four publicly available datasets in our experiments, as listed in Table I.
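The objective (11) can be evaluated with a few lines of NumPy. This sketch is illustrative and assumes a specific encoding of the graph part of $A=[\mathcal{G};I]$ that the text does not spell out: one row per edge $(i,j)$ with $+1$ in column $i$ and $-1$ in column $j$, so that $(\mathcal{G}x)_e=x_i-x_j$ penalizes differences between connected features. The edge list would come from sparse inverse covariance selection, which is not reproduced here, and the function names are hypothetical.

```python
import numpy as np


def graph_difference_matrix(edges, dim):
    """One row per edge (i, j): +1 at column i and -1 at column j (assumed encoding)."""
    G = np.zeros((len(edges), dim))
    for row, (i, j) in enumerate(edges):
        G[row, i] = 1.0
        G[row, j] = -1.0
    return G


def fused_lasso_objective(x, features, labels, edges, lam1):
    """(1/n) sum_i log(1 + exp(-b_i a_i^T x)) + lam1 * ||A x||_1 with A = [G; I]."""
    dim = x.shape[0]
    A = np.vstack([graph_difference_matrix(edges, dim), np.eye(dim)])
    margins = labels * (features @ x)
    # logaddexp(0, -m) = log(1 + exp(-m)), computed without overflow
    loss = np.mean(np.logaddexp(0.0, -margins))
    return loss + lam1 * np.abs(A @ x).sum()
```

At $x=0$ the objective equals $\log 2$ for any data, which is a convenient reference point when plotting objective gaps.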

| Data sets | training | test | features | $\lambda_1$ |

|---|---|---|---|---|

| a9a | 32,561 | 16,281 | 123 | 1e-5 |

| a8a | 22,696 | 9,865 | 123 | 1e-5 |

| ijcnn1 | 49,990 | 91,701 | 22 | 1e-5 |

| bio_train | 72,876 | 72,875 | 74 | 1e-5 |

V-B Parameter Setting

We fix for all the experiments, and we choose and , where is an intermediate constant in the solution process and is the penalty parameter of the ADMM algorithms. But in our theoretical analysis, will not influence the utility bound of our differentially private algorithms. Thus, we fix as a constant. Moreover, we set and set as a constant on each dataset but differs among these datasets.

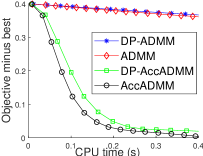

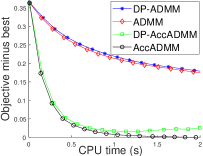

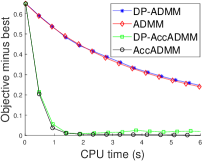

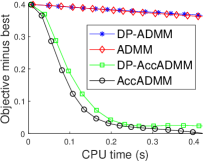

V-C Objective Value Decreasing with CPU Time

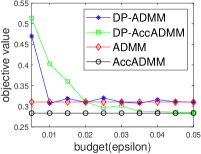

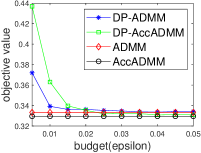

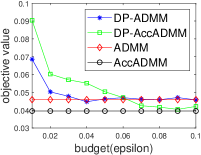

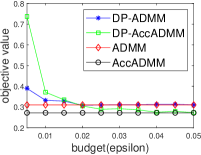

Fig. 1 plots the objective gap (i.e., the objective value minus the minimum value) of the differentially private ADMM algorithms as the number of iterations increases. The accelerated ADMM algorithm can achieve a convergence rate of $O(1/k^2)$, which is much faster than that of the traditional ADMM algorithm. In our experiments, the convergence speed of DP-AccADMM is almost the same as that of its non-private counterpart, AccADMM. In addition, there are two gaps between the two pairs of algorithms (DP-ADMM vs. ADMM and DP-AccADMM vs. AccADMM); these gaps are the empirical manifestation of the utility bound in our utility analysis. The gap between DP-AccADMM and AccADMM is larger than that between DP-ADMM and ADMM, which suggests that Nesterov acceleration may increase the utility bound of the algorithm and thus reduce its utility. Therefore, we conduct another experiment to show the test accuracy of all these algorithms.

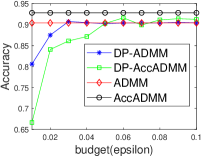

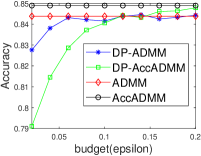

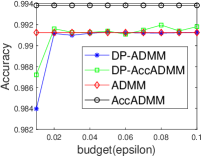

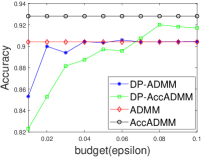

V-D Performance with Different Privacy Budgets

Fig. 2 plots the objective value and test accuracy of the differentially private ADMM algorithms with different privacy budgets for solving the $\ell_1$-norm regularized graph-guided fused Lasso problem. AccADMM converges much faster than ADMM in terms of objective value. DP-AccADMM performs worse than DP-ADMM when the privacy budget is small (e.g., ). But as the budget increases (e.g., on bio_train), DP-AccADMM outperforms DP-ADMM in terms of both objective value and test accuracy. As the privacy budget increases, the objective value of DP-AccADMM gradually approaches that of AccADMM, and the objective value of DP-ADMM approaches that of ADMM. Thus, Nesterov acceleration usually leads to worse utility when $\epsilon$ is small; however, as $\epsilon$ becomes larger, DP-AccADMM improves and outperforms DP-ADMM.

The test accuracy results show that DP-AccADMM also performs worse than DP-ADMM when the privacy budget is small. As $\epsilon$ increases, the test accuracy of DP-AccADMM gradually improves, approaching or even exceeding that of DP-ADMM. Moreover, the test accuracy of DP-AccADMM gradually approaches that of AccADMM, and the test accuracy of DP-ADMM gradually approaches that of ADMM. Thus, Nesterov acceleration usually leads to worse test accuracy when the privacy budget is small (e.g., ). However, as $\epsilon$ increases (e.g., on a8a and ijcnn1), the test accuracy of DP-AccADMM gradually increases, surpasses that of DP-ADMM, and approaches that of AccADMM.

VI Conclusions and Further Work

In this paper, we proposed two efficient differentially private ADMM algorithms with $(\epsilon,\delta)$-DP guarantees. The first algorithm, DP-ADMM, uses gradient perturbation to achieve $(\epsilon,\delta)$-DP, and we provided its privacy and utility analysis through the Gaussian mechanism and convergence analysis. The second algorithm, DP-AccADMM, uses the Nesterov method to accelerate DP-ADMM. The experimental results showed that DP-AccADMM converges much faster; in particular, it achieves higher classification accuracy than DP-ADMM once the privacy budget reaches a certain threshold.

Similar to DP-SVRG [19], our deterministic differentially private ADMM algorithms can be extended to the stochastic setting for solving large-scale optimization problems as in [48]. We leave the theoretical analysis of DP-AccADMM to future work. Moreover, noise-variance decay schemes as in [49] could be employed to reduce the negative effects of gradient perturbation and to provide stronger privacy guarantees and better utility [50]. Another interesting direction is to extend our differentially private algorithms and theoretical results from the two-block version to the multi-block ADMM case [51].

Appendix A: Proof of Theorem 1

Before giving the proof of Theorem 1, we present the following lemmas. We first give the following relationship between RDP and $(\epsilon,\delta)$-DP [42]:

Lemma 1 (From RDP to $(\epsilon,\delta)$-DP).

If a randomized mechanism $\mathcal{M}$ satisfies $(\alpha,\epsilon)$-RDP, then for any $\delta\in(0,1)$, $\mathcal{M}$ satisfies $\big(\epsilon+\frac{\log(1/\delta)}{\alpha-1},\,\delta\big)$-DP.

This lemma shows the conversion from RDP to $(\epsilon,\delta)$-DP. RDP also has the following composition property [42]:

Lemma 2.

For $i=1,\ldots,k$, if randomized mechanisms $\mathcal{M}_i$ satisfy $(\alpha,\epsilon_i)$-RDP, then their composition $(\mathcal{M}_1,\ldots,\mathcal{M}_k)$ satisfies $\big(\alpha,\sum_{i=1}^{k}\epsilon_i\big)$-RDP. Moreover, the input of the $i$-th mechanism can depend on the outputs of the first $(i-1)$ mechanisms.

This lemma shows how the RDP parameters accumulate when a sequence of randomized mechanisms is composed over the iterations of the algorithm.

Lemma 3 ([42]).

Given a function $q$, the Gaussian mechanism $\mathcal{M}(D)=q(D)+u$ with $u\sim\mathcal{N}(0,\sigma^2 I_d)$ satisfies $(\alpha,\epsilon)$-RDP, where $\epsilon=\alpha\Delta_2^2(q)/(2\sigma^2)$.

This lemma shows the relationship between the RDP parameters and the noise variance $\sigma^2$.

Proof of Theorem 1:

Proof.

-RDP -DP -DP, where

| (12) |

Let , , and , then

| (13) |

We can obtain

-RDP.

According to Lemma 2, we have

-RDP, -RDP.

According to Definition 2 (i.e., the -sensitivity of the function ), let , then we have . And according to Lemma 3, we have

-RDP.

Therefore, we can obtain

| (14) |

That is, when the noise variance satisfies Eq. (14), the proposed DP-ADMM algorithm satisfies $(\epsilon,\delta)$-DP. This completes the proof. ∎

Appendix B: Proof of Theorem 2

Before giving the proof of Theorem 2, we first present the following lemmas.

Lemma 4 ([43]).

.

This lemma presents the relationship between the optimal solution of the dual problem and the optimal solution of the original problem. Let us simply express with and the gradient term, which is convenient for our subsequent proof.

| (15) |

Setting the derivative with respect to $x$ at $x_{k+1}$ to zero, we have

| (16) |

Let

Then , and . Thus, we get a simple representation of .

Lemma 5 ([43]).

If , then we have

Lemma 6 ([43]).

Let , we have

Lemma 7 ([43]).

Proof of Theorem 2:

Proof.

Below let us bound . Let

where , then we have

Therefore,

| (17) |

Then, we have

and

Since , then , and . Taking expectations on both sides of the above inequalities, we have

Since

then

According to Lemma 7, we have

And we have

Thus, according to Lemma 8, we have

Summing up the above inequality for all , we obtain

The convexity of can be obtained from the convexity of both and . Let . Then

Since , , and , then we have

and

Combining the above results, we have

Acknowledgments

We thank all the reviewers for their valuable comments. This work was supported by the National Natural Science Foundation of China (Nos. 61876220, 61876221, 61976164, 61836009 and U1701267), the Project supported by the Foundation for Innovative Research Groups of the National Natural Science Foundation of China (No. 61621005), the Program for Cheung Kong Scholars and Innovative Research Team in University (No. IRT_15R53), the Fund for Foreign Scholars in University Research and Teaching Programs (the 111 Project) (No. B07048), the Science Foundation of Xidian University (Nos. 10251180018 and 10251180019), the National Science Basic Research Plan in Shaanxi Province of China (Nos. 2019JQ-657 and 2020JM-194), and the Key Special Project of China High Resolution Earth Observation System-Young Scholar Innovation Fund.

References

- [1] C. Dwork, F. McSherry, K. Nissim, and A. Smith, “Calibrating noise to sensitivity in private data analysis,” in Proc. 3rd Theory of Cryptography Conf., 2006, pp. 265–284.

- [2] C. Dwork and A. Roth, “The algorithmic foundations of differential privacy,” Found. Trends Theor. Comput. Sci., vol. 9, no. 3-4, pp. 211–407, 2014.

- [3] M. Gong, Y. Xie, K. Pan, K. Feng, and A. K. Qin, “A survey on differentially private machine learning,” IEEE Comput. Intell. Mag., vol. 15, no. 2, pp. 49–64, 2020.

- [4] F. McSherry and I. Mironov, “Differentially private recommender systems: Building privacy into the Netflix Prize contenders,” in Proc. 15th ACM SIGKDD Int. Conf. Knowledge Discovery and Data Mining, 2009, pp. 627–636.

- [5] A. Machanavajjhala, A. Korolova, and A. D. Sarma, “Personalized social recommendations: Accurate or private?” in Proc. 37th Int. Conf. Very Large Data Bases, 2011, pp. 440–450.

- [6] T. Zhu, G. Li, Y. Ren, W. Zhou, and P. Xiong, “Differential privacy for neighborhood-based collaborative filtering,” in Proc. IEEE/ACM Int. Conf. Advances in Social Networks Analysis and Mining, 2013, pp. 752–759.

- [7] T. Zhu, G. Li, W. Zhou, and P. Xiong, “Privacy preserving for tagging recommender systems,” in Proc. IEEE/WIC/ACM Int. Conf. Web Intelligence and Intelligent Agent Technology, 2013, pp. 81–88.

- [8] Y. Lindell and E. Omri, “A practical application of differential privacy to personalized online advertising,” IACR Cryptology ePrint Archive, vol. 2011, p. 152, 2011.

- [9] F. K. Dankar and K. El Emam, “The application of differential privacy to health data,” in Proc. Joint EDBT/ICDT Workshops, 2012, pp. 158–166.

- [10] M. A. P. Chamikara, P. Bertok, I. Khalil, D. Liu, and S. Camtepe, “Privacy preserving face recognition utilizing differential privacy,” Computers & Security, vol. 97, 2020.

- [11] A. Othman and A. Ross, “Privacy of facial soft biometrics: Suppressing gender but retaining identity,” in Proc. European Conf. Computer Vision, 2014, pp. 682–696.

- [12] F. McSherry and R. Mahajan, “Differentially-private network trace analysis,” ACM SIGCOMM Computer Communication Review, vol. 40, no. 4, pp. 123–134, 2010.

- [13] M. Gotz, A. Machanavajjhala, G. Wang, X. Xiao, and J. Gehrke, “Publishing search logs—a comparative study of privacy guarantees,” IEEE Trans. Knowl. Data Eng., vol. 24, no. 3, pp. 520–532, 2011.

- [14] Ú. Erlingsson, V. Pihur, and A. Korolova, “RAPPOR: Randomized aggregatable privacy-preserving ordinal response,” in Proc. ACM SIGSAC Conf. Computer and Communications Security, 2014, pp. 1054–1067.

- [15] Differential Privacy Team, Apple, “Learning with privacy at scale,” Technical report, Apple, 2017.

- [16] B. Ding, J. Kulkarni, and S. Yekhanin, “Collecting telemetry data privately,” in Proc. Adv. Neural Inf. Process. Syst., 2017, pp. 3571–3580.

- [17] J. M. Abowd, “The U.S. Census Bureau adopts differential privacy,” in Proc. 24th ACM SIGKDD Int. Conf. Knowledge Discovery & Data Mining, 2018, p. 2867.

- [18] M. Abadi, A. Chu, I. Goodfellow, H. B. McMahan, I. Mironov, K. Talwar, and L. Zhang, “Deep learning with differential privacy,” in Proc. ACM SIGSAC Conf. Computer and Communications Security, 2016, pp. 308–318.

- [19] D. Wang, M. Ye, and J. Xu, “Differentially private empirical risk minimization revisited: Faster and more general,” in Proc. Adv. Neural Inf. Process. Syst., 2018, pp. 2722–2731.

- [20] L. Wang, B. Jayaraman, D. Evans, and Q. Gu, “Efficient privacy-preserving nonconvex optimization,” arXiv: 1910.13659, 2019.

- [21] S. Kim, K. A. Sohn, and E. P. Xing, “A multivariate regression approach to association analysis of a quantitative trait network,” Bioinformatics, vol. 25, pp. 204–212, 2009.

- [22] R. J. Tibshirani and J. Taylor, “The solution path of the generalized lasso,” Annals of Statistics, vol. 39, no. 3, pp. 1335–1371, 2011.

- [23] H. Ouyang, N. He, L. Tran, and A. Gray, “Stochastic alternating direction method of multipliers,” in Proc. Int. Conf. Machine Learning, 2013, pp. 80–88.

- [24] Z. Huang and Y. Gong, “Differentially private ADMM for convex distributed learning: Improved accuracy via multi-step approximation,” arXiv: 2005.07890v1, 2020.

- [25] Z. Huang, R. Hu, Y. Guo, E. Chan-Tin, and Y. Gong, “DP-ADMM: ADMM-based distributed learning with differential privacy,” IEEE Trans. Inf. Forensics Secur., vol. 15, pp. 1002–1012, 2019.

- [26] X. Wang, H. Ishii, L. Du, P. Cheng, and J. Chen, “Privacy-preserving distributed machine learning via local randomization and ADMM perturbation,” IEEE Trans. Signal Process., vol. 68, pp. 4226–4241, 2020.

- [27] C. Chen and J. Lee, “Rényi differentially private ADMM for non-smooth regularized optimization,” in Proc. 10th ACM Conf. Data and Application Security and Privacy, 2020, pp. 319–328.

- [28] P. Wang and H. Zhang, “Differential privacy for sparse classification learning,” Neurocomputing, vol. 375, no. 29, pp. 91–101, 2020.

- [29] R. Johnson and T. Zhang, “Accelerating stochastic gradient descent using predictive variance reduction,” in Proc. Adv. Neural Inf. Process. Syst., 2013, pp. 315–323.

- [30] C. Dwork, G. N. Rothblum, and S. Vadhan, “Boosting and differential privacy,” in Proc. IEEE 51st Annual Symp. Foundations of Computer Science, 2010, pp. 51–60.

- [31] D. Yu, H. Zhang, W. Chen, T.-Y. Liu, and J. Yin, “Gradient perturbation is underrated for differentially private convex optimization,” in Proc. 29th Int. Joint Conf. Artificial Intelligence, 2020, pp. 3117–3123.

- [32] Y. Nesterov, “A method of solving a convex programming problem with convergence rate $O(1/k^2)$,” Soviet Math. Doklady, vol. 27, pp. 372–376, 1983.

- [33] N. Qian, “On the momentum term in gradient descent learning algorithms,” Neural Networks, vol. 12, no. 1, pp. 145–151, 1999.

- [34] Y. Nesterov, Introductory Lectures on Convex Optimization: A Basic Course. Boston: Springer US, 2014.

- [35] S. Ruder, “An overview of gradient descent optimization algorithms,” arXiv: 1609.04747, 2016.

- [36] T. Goldstein, B. O’Donoghue, S. Setzer, and R. Baraniuk, “Fast alternating direction optimization methods,” SIAM J. Imaging Sciences, vol. 7, no. 3, pp. 1588–1623, 2014.

- [37] S. Boyd, N. Parikh, E. Chu, B. Peleato, J. Eckstein et al., “Distributed optimization and statistical learning via the alternating direction method of multipliers,” Found. Trends Mach. Learn., vol. 3, no. 1, pp. 1–122, 2011.

- [38] N. Li, T. Li, and S. Venkatasubramanian, “t-closeness: Privacy beyond k-anonymity and l-diversity,” in Proc. IEEE Int. Conf. Data Engineering, 2007, pp. 24–24.

- [39] K. Chaudhuri, C. Monteleoni, and A. D. Sarwate, “Differentially private empirical risk minimization,” Journal of Machine Learning Research, vol. 12, pp. 1069–1109, 2011.

- [40] K. Fukuchi, Q. K. Tran, and J. Sakuma, “Differentially private empirical risk minimization with input perturbation,” in Proc. Int. Conf. Discovery Science, 2017, pp. 82–90.

- [41] C. Chen, J. Lee, and D. Kifer, “Rényi differentially private ERM for smooth objectives,” in Proc. 22nd Int. Conf. Artificial Intelligence and Statistics, 2019, pp. 2037–2046.

- [42] I. Mironov, “Rényi differential privacy,” in Proc. IEEE 30th Computer Security Foundations Symposium, 2017, pp. 263–275.

- [43] S. Zheng and J. T. Kwok, “Fast-and-light stochastic ADMM,” in Proc. Int. Joint Conf. Artificial Intelligence, 2016, pp. 2407–2413.

- [44] D. L. Donoho, “De-noising by soft-thresholding,” IEEE Trans. Inform. Theory, vol. 41, no. 3, pp. 613–627, 1995.

- [45] H. Ouyang, N. He, and A. Gray, “Stochastic ADMM for nonsmooth optimization,” arXiv: 1211.0632v2, 2012.

- [46] S. Azadi and S. Sra, “Towards an optimal stochastic alternating direction method of multipliers,” in Proc. Int. Conf. Machine Learning, 2014, pp. 620–628.

- [47] L. El Ghaoui, O. Banerjee, and A. D’Aspremont, “Model selection through sparse maximum likelihood estimation for multivariate Gaussian or binary data,” Journal of Machine Learning Research, pp. 485–516, 2008.

- [48] Y. Liu, F. Shang, H. Liu, L. Kong, L. Jiao, and Z. Lin, “Accelerated variance reduction stochastic ADMM for large-scale machine learning,” IEEE Trans. Pattern Anal. Mach. Intell., 2020.

- [49] J. Ding, X. Zhang, M. Chen, K. Xue, C. Zhang, and M. Pan, “Differentially private robust ADMM for distributed machine learning,” in Proc. 2019 IEEE Int. Conf. Big Data, 2019, pp. 1302–1311.

- [50] A. Bellet, R. Guerraoui, M. Taziki, and M. Tommasi, “Personalized and private peer-to-peer machine learning,” in Proc. 21st Int. Conf. Artificial Intelligence and Statistics, 2018, pp. 473–481.

- [51] C. Chen, B. He, Y. Ye, and X. Yuan, “The direct extension of ADMM for multi-block convex minimization problems is not necessarily convergent,” Math. Program., vol. 155, pp. 57–79, 2016.