
Differentially Private Federated Learning: Servers Trustworthiness, Estimation, and Statistical Inference

Abstract

Differentially private federated learning is crucial for maintaining privacy in distributed environments. This paper investigates the challenges of high-dimensional estimation and inference under the constraints of differential privacy. First, we study scenarios involving an untrusted central server and demonstrate the inherent difficulty of accurate estimation in high-dimensional problems. Our findings indicate that the tight minimax rate depends on the dimensionality of the data even under sparsity assumptions. Second, we consider a scenario with a trusted central server and introduce a novel federated estimation algorithm tailored for linear regression models. This algorithm effectively handles slight variations among the models distributed across different machines. We also propose methods for statistical inference, including coordinate-wise confidence intervals for individual parameters and strategies for simultaneous inference. Extensive simulation experiments support our theoretical advances and underscore the efficacy and reliability of our approaches.

1 Introduction

1.1 Overview

Federated learning is an efficient approach for training machine learning models on distributed networks, such as smartphones and wearable devices, without moving data to a central server (konevcny2016federated, ; kairouz2021advances, ; li2020federated, ). Since its proposal in (mcmahan2017communication, ), federated learning has gained significant attention in both the practical and theoretical machine learning communities. One of its key attractions is that it provides a certain level of data privacy by keeping raw data on local machines. However, without specific design choices, it offers no formal privacy guarantees. To make this protection rigorous, researchers have adopted differential privacy (abadi2016deep, ; dwork2018privacy, ; dwork2006calibrating, ; dwork2014algorithmic, ; dwork2017exposed, ) to quantify the privacy level in federated learning. A series of papers have studied federated learning with differential privacy using various algorithms and methods (hu2020personalized, ; truex2020ldp, ; wei2020federated, ). Despite these efforts, a significant gap remains between practical usage and statistical guarantees. This gap is widest in the high-dimensional setting with sparsity assumptions, where results on optimal rates of convergence and on statistical inference are largely missing.

In this paper, we study estimation and inference problems in the federated learning setting under differential privacy, with a particular focus on the high-dimensional regime. In federated learning, several local machines hold data sets from different sources, and a central server coordinates the local machines to train learning models collaboratively. We present our key results in two major settings for privacy and federated learning.

In the first setting, we consider an untrusted central server (lowy2021private, ; wei2020federated, ; hu2020personalized, ), where each machine sends only privatized information to the central server. Smartphones are one example: users may not fully trust the server and do not want their personal information uploaded directly to the remote central server.

In the second setting, we consider a trusted central server, to which each machine sends raw information without privatizing it (mcmahan2022federated, ; geyer2017differentially, ; mcmahan2017learning, ). Hospitals are one example. They may not share patient data with one another, in order to protect patient privacy. However, they can all report their data to a central server, such as a non-profit organization or an institute, to pool information and publish statistics on certain diseases.

In the first part of our paper, we demonstrate that when the central server is untrusted, the optimal rate of convergence for mean estimation is O(), where is the number of local machines, each containing data points, is the parameter of interest, is the sparsity level, and is the privacy parameter. High-dimensional settings commonly assume that the dimension is comparable to or even larger than the sample size. Under that assumption, this optimality result shows that the untrusted central server setting is incompatible with high-dimensional statistics. As a result, accurate estimation in the high-dimensional regime can only be expected under the trusted central server setting.

In the second part of the paper, we consider the case of a trusted central server. We design algorithms that allow for accurate estimation and attain a near-optimal rate of convergence up to logarithmic factors. We also present statistical inference results, including the construction of coordinate-wise confidence intervals with privacy guarantees and a procedure for conducting simultaneous inference privately. These results support hypothesis testing and the simultaneous construction of confidence intervals for a given subset of coordinates of a vector in high-dimensional settings. We emphasize that our algorithms for estimation and inference are suited for practical purposes, given their capacity to (1) leverage data from multiple devices to improve machine learning models and (2) draw accurate conclusions about a population from a sample while preserving individual privacy. For instance, in healthcare, we could combine patient data from multiple hospitals to develop more accurate models for disease diagnosis and treatment while ensuring that patient privacy is protected. We summarize our major contributions as follows:

- For the untrusted central server setting, we prove that federated learning is not suited for high-dimensional mean estimation problems by establishing the optimal rate of convergence under the untrusted central server constraints. This motivates us to consider a trusted central server setting when applying federated learning to such problems.

- For the trusted central server setting, we design novel algorithms that achieve private estimation with federated learning. We first consider estimation in the homogeneous federated learning setting and then extend it to the more complicated heterogeneous federated learning setting. We also provide sharp rates of convergence for our algorithms in both settings.

- In addition, we consider statistical inference problems in both the homogeneous and heterogeneous federated learning settings. We provide algorithms for coordinate-wise and simultaneous confidence intervals, two common inference problems in high-dimensional statistics. Our proposed methods for high-dimensional differentially private inference are novel; such methods have not been developed even for the single-source, non-federated setting. Our theoretical results show that the proposed confidence intervals are asymptotically valid, and simulations support these results.

1.2 Related Work

In the literature, several works have designed private algorithms for federated and distributed learning based on variants of stochastic gradient descent. (agarwal2018cpsgd, ) proposed CP-SGD, a communication-efficient algorithm for learning models with local differential privacy (LDP). (erlingsson2020encode, ) proposed a distributed LDP gradient descent algorithm that applies LDP to gradients within the ESA framework (bittau2017prochlo, ). (girgis2021shuffled, ) extended LDP approaches to federated learning. They proposed a distributed, communication-efficient LDP stochastic gradient descent algorithm based on the shuffled model and analyzed an upper bound on its convergence rate. However, these works do not consider the trade-off between statistical accuracy and privacy cost.

In distributed settings, the trade-off between statistical accuracy and information constraints has been discussed in various papers. Two common types of information constraints are communication constraints and privacy constraints. Under communication constraints, the number of bits exchanged during communication is limited; we refer to (zhang2013information, ; braverman2016communication, ; han2018geometric, ; barnes2020lower, ; garg2014communication, ) for further discussion.

A series of works discusses the trade-off between accuracy and privacy in high-dimensional, non-federated learning problems. These include top-k selection (steinke2017tight, ), sparse mean estimation (cai2019cost, ), linear regression (cai2019cost, ; talwar2015nearly, ), generalized linear models (cai2020cost, ), and latent variable models (zhang2021high, ). However, privacy constraints in distributed settings remain largely unexplored, and most existing works focus on the local differential privacy (LDP) constraint. In (barnes2020fisher, ), mean estimation under loss is discussed for Gaussian and sparse Bernoulli distributions. (duchi2019lower, ) derived lower bounds under LDP constraints in the blackboard communication model for mean estimation of product Bernoulli distributions and sparse Gaussian distributions. (acharya2020general, ) proposed a more general approach that combines communication constraints and privacy constraints.

Compared with these works, we focus on the problem where there are data points on each machine. We consider -DP instead of LDP; it is a weaker constraint that covers broader settings. We further note a difference from the blackboard communication model (braverman2016communication, ; garg2014communication, ). The federated learning setting assumes the existence of a central server, and each local machine may communicate only with the central server. This restriction further enhances privacy.

When we were finalizing this paper (an initial draft was published as a Ph.D. dissertation in 2023 (zhethesis, )), we became aware of an independent and concurrent work, (li2024federated, ). That work also considers differentially private federated transfer learning under the high-dimensional sparse linear regression model. Specifically, its authors proposed a notion of federated differential privacy that allows multiple rounds of -differentially private transmissions between local machines and the central server. They also provided algorithms that filter out irrelevant sources and exploit information from relevant sources to improve the estimation of the target parameters.

We differentiate our research from their paper as follows.

(1) They consider differentially private federated learning under the untrusted server setting, whereas we deal with both trusted and untrusted server settings. We also establish a tight minimax lower bound that highlights a fundamental difficulty of pure -differentially private estimation under untrusted central servers in federated learning. We therefore resort to trusted server settings for estimation and inference problems.

(2) Their investigation centers on differentially private estimation within a federated transfer learning framework, specifically parameter estimation for a target distribution using similar source data. Our work instead focuses on private estimation and inference for parameters that are either common across all participating machines or vary across different machines.

We also cite papers that inspired the design of our proposed algorithms and methods. (javanmard2014confidence, ) introduces a de-biasing procedure for statistical inference problems. (li2020transfer, ) considers the transfer learning problem in high-dimensional settings, which makes it possible to combine information from other sources to benefit estimation. This idea can be adopted in heterogeneous federated learning problems. For simultaneous inference problems, we refer to (zhang2017simultaneous, ; yu2022distributed, ), which discuss how to conduct simultaneous inference for high-dimensional problems.

Notation. We introduce several notations used throughout the paper. Let represent a vector. Given a set of indices , refers to the components of corresponding to the indices in . The norm of , for , is given by , whereas represents the number of non-zero elements in , also called its sparsity level.

We use to indicate the number of machines, for the number of samples per machine, for the dimensionality of vectors, and for their sparsity level. The total number of samples across all machines is denoted by . Additionally, we define the truncation function , which projects a vector onto the ball of radius centered at the origin.

For a matrix , we denote its largest and smallest -restricted eigenvalues by and , respectively.

For sequences and , implies as grows, signifies that is upper bounded by a constant multiple of , and indicates that is lower bounded by a constant multiple of , where constants are independent of . The notation denotes that is both upper and lower bounded by constant multiples of .

In this work, we often use symbols to represent universal constants. Their specific values may vary depending on the context, but they are independent of other tunable parameters.

2 Preliminaries

2.1 Differential Privacy

We start with the basic concepts and properties of differential privacy (dwork2006calibrating, ). The intuition behind differential privacy is that a randomized algorithm produces similar outputs even when an individual's information in the dataset is changed or removed, thereby preserving the privacy of individual data. The formal definition of differential privacy is given below.

Definition 2.1 (Differential Privacy (dwork2006calibrating, ))

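Let $\mathcal{X}$ be the sample space for an individual datum. A randomized algorithm $M$ is $(\varepsilon, \delta)$-differentially private if and only if, for every pair of adjacent data sets $X, X' \in \mathcal{X}^n$ and for any measurable set $S$, the inequality below holds:

$$\mathbb{P}\big(M(X) \in S\big) \le e^{\varepsilon}\, \mathbb{P}\big(M(X') \in S\big) + \delta,$$

where we say that two data sets $X$ and $X'$ are adjacent if and only if they differ by one individual datum.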

In the above definition, the two parameters $(\varepsilon, \delta)$ control the privacy level. The smaller $\varepsilon$ and $\delta$ are, the closer the output distributions under adjacent $X$ and $X'$ become. An adversary then finds it harder to distinguish whether the original dataset is $X$ or $X'$, so the privacy constraint becomes more stringent. Furthermore, when $\delta = 0$, we write $\varepsilon$-differentially private as an abbreviation of $(\varepsilon, 0)$-differentially private.

In the rest of this section, we introduce several useful properties of differential privacy and show how to create a differentially private algorithm from a non-private counterpart. One common strategy is noise injection, where the scale of the noise is determined by the sensitivity of the algorithm:

Definition 2.2

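For any algorithm $f$, the $\ell_p$-sensitivity of $f$ is defined as the largest change in its output, measured in the $\ell_p$ norm, over all pairs of adjacent data sets $X$ and $X'$:

$$\Delta_p(f) = \sup_{X, X' \text{ adjacent}} \big\| f(X) - f(X') \big\|_p.$$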

We then introduce two mechanisms. For algorithms with finite $\ell_1$-sensitivity, we add Laplace noise to achieve differential privacy, while for algorithms with finite $\ell_2$-sensitivity, we inject Gaussian noise.

Proposition 2.3 (The Laplace Mechanism (dwork2006calibrating, ; dwork2014algorithmic, ))

Let $f$ be a deterministic algorithm with $\Delta_1(f) \le \Delta$. For $w \in \mathbb{R}^d$ with coordinates drawn i.i.d. from $\mathrm{Laplace}(\Delta/\varepsilon)$, $f(X) + w$ is $\varepsilon$-differentially private.
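The following minimal Python sketch illustrates the Laplace mechanism. It releases a vector query with i.i.d. Laplace noise whose scale equals the ℓ1-sensitivity divided by ε. It also checks numerically that, for a counting query with sensitivity 1 and adjacent true answers 10 and 11, the two output densities differ by at most a factor of e^ε. The function names and the counting-query example are illustrative assumptions, not part of the paper.

```python
import math
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """One Laplace(0, scale) draw: if E1, E2 ~ Exp(1), then scale * (E1 - E2) ~ Laplace(0, scale)."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def laplace_mechanism(value, l1_sensitivity: float, epsilon: float, rng: random.Random):
    """Release value + w, where w has i.i.d. Laplace(l1_sensitivity / epsilon) coordinates."""
    scale = l1_sensitivity / epsilon
    return [v + laplace_noise(scale, rng) for v in value]


def laplace_density(x: float, scale: float) -> float:
    """Density of Laplace(0, scale) at x."""
    return math.exp(-abs(x) / scale) / (2.0 * scale)


# Adjacent counting-query answers 10 and 11 (sensitivity 1): at every output t,
# the density ratio is exp((|t - 11| - |t - 10|) * epsilon) <= exp(epsilon).
epsilon = 0.5
for t in [-5.0, 0.0, 10.5, 20.0]:
    ratio = laplace_density(t - 10.0, 1.0 / epsilon) / laplace_density(t - 11.0, 1.0 / epsilon)
    assert ratio <= math.exp(epsilon) + 1e-12
```

The difference-of-exponentials sampler uses only `random.expovariate`, which keeps the sketch free of third-party dependencies.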

Proposition 2.4 (The Gaussian Mechanism (dwork2006calibrating, ; dwork2014algorithmic, ))

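Let $f$ be a deterministic algorithm with $\Delta_2(f) \le \Delta$. For $w \in \mathbb{R}^d$ with coordinates drawn i.i.d. from $N\big(0,\, 2\Delta^2 \log(1.25/\delta)/\varepsilon^2\big)$ and $0 < \varepsilon < 1$, $f(X) + w$ is $(\varepsilon, \delta)$-differentially private.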
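The Gaussian mechanism is typically paired with a truncation step that bounds the sensitivity. The sketch below privatizes a sample mean under two assumptions: each data point is clipped to an ℓ2 ball of radius R, and adjacency means replacing one of the n points. Clipping keeps the ℓ2-sensitivity of the mean at most 2R/n. The noise scale follows the classical calibration σ = Δ·√(2 log(1.25/δ))/ε, which holds for 0 < ε < 1. The helper names are hypothetical.

```python
import math
import random


def clip_l2(x, radius):
    """Project x onto the l2 ball of the given radius (a truncation step)."""
    norm = math.sqrt(sum(v * v for v in x))
    if norm <= radius:
        return list(x)
    return [v * radius / norm for v in x]


def gaussian_sigma(l2_sensitivity, epsilon, delta):
    """Classical Gaussian-mechanism noise scale (the guarantee assumes 0 < epsilon < 1)."""
    return l2_sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def private_mean(rows, radius, epsilon, delta, rng):
    """(epsilon, delta)-DP mean of n clipped d-dimensional rows."""
    n, d = len(rows), len(rows[0])
    clipped = [clip_l2(r, radius) for r in rows]
    mean = [sum(r[j] for r in clipped) / n for j in range(d)]
    # Replacing one row moves the clipped mean by at most 2 * radius / n in l2 norm.
    sigma = gaussian_sigma(2.0 * radius / n, epsilon, delta)
    return [m + rng.gauss(0.0, sigma) for m in mean]
```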

Post-processing and composition are two key properties of differential privacy. Together they let us build complicated differentially private algorithms by combining simpler ones, and they are pivotal in the design of our algorithms in later sections.

Proposition 2.5 (Post-processing Property (dwork2006calibrating, ))

Let $M$ be an $(\varepsilon, \delta)$-differentially private algorithm and $g$ be an arbitrary function that takes $M(X)$ as input. Then $g(M(X))$ is also $(\varepsilon, \delta)$-differentially private.

Proposition 2.6 (Composition property (dwork2006calibrating, ))

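For $i = 1, \dots, k$, let $M_i$ be an $(\varepsilon_i, \delta_i)$-differentially private algorithm. Then $(M_1, \dots, M_k)$ is an $\big(\sum_{i=1}^k \varepsilon_i, \sum_{i=1}^k \delta_i\big)$-differentially private algorithm.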
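Iterative procedures such as Algorithms 2 and 3 release a privatized vector in every round, so the composition property determines the total budget. The minimal accounting sketch below assumes the total budget is split evenly across T rounds. This is one possible choice, not necessarily the paper's calibration.

```python
import math


def basic_composition(budgets):
    """Total (epsilon, delta) cost of running mechanisms with budgets (epsilon_i, delta_i)."""
    return (math.fsum(e for e, _ in budgets), math.fsum(d for _, d in budgets))


def per_round_budget(total_epsilon, total_delta, rounds):
    """Evenly split a total (epsilon, delta) budget across `rounds` sequential releases."""
    return total_epsilon / rounds, total_delta / rounds


# Example: an iterative algorithm that releases one privatized vector per iteration.
T = 10
eps_t, delta_t = per_round_budget(1.0, 1e-5, T)
total_eps, total_delta = basic_composition([(eps_t, delta_t)] * T)
```

Basic composition is the simplest bound. Tighter accounting, for example advanced composition, would allow more noise reduction per round but is not needed to follow the algorithms here.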

We also use the NoisyHT algorithm (Algorithm 1), introduced by (dwork2018differentially, ), whose name stands for noisy hard thresholding. The algorithm aims to achieve sparsity of the output and privacy at the same time.

In the last step, the projection operator sets all coordinates outside the selected index set to zero while preserving the selected coordinates. The algorithm can be viewed as a private top-k selection algorithm, which serves as a building block for our proposed algorithms in later sections.

In particular, when the sparsity level is set to 1, the algorithm privately outputs the maximum element after a single iteration. We refer to this special case as the Private Max algorithm, which is used in Algorithm 7 for simultaneous inference.
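The following Python sketch shows the peeling structure of NoisyHT as we understand it from (dwork2018differentially, ; cai2019cost, ). It runs s rounds of report-noisy-max with fresh Laplace noise, then adds fresh Laplace noise to the kept coordinates and zeros out the rest. Two details are assumptions that should be checked against Algorithm 1. The first is the noise-scale constant 2√(3s·log(1/δ))/ε. The second is the per-coordinate sensitivity bound `lam`. Calling the function with s = 1 gives the Private Max special case.

```python
import math
import random


def laplace(scale, rng):
    """One Laplace(0, scale) draw as a scaled difference of two Exp(1) variables."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def noisy_hard_threshold(v, s, epsilon, delta, lam, rng):
    """Privately keep s coordinates of v (peeling), then add noise to the kept values.

    lam is an assumed bound on how much one datum can change any single coordinate of v.
    Returns the privatized s-sparse vector and the selected indices.
    """
    d = len(v)
    scale = lam * 2.0 * math.sqrt(3.0 * s * math.log(1.0 / delta)) / epsilon
    selected = []
    remaining = set(range(d))
    for _ in range(s):
        # Report-noisy-max over the not-yet-selected coordinates (fresh noise each round).
        j_star = max(remaining, key=lambda j: abs(v[j]) + laplace(scale, rng))
        selected.append(j_star)
        remaining.remove(j_star)
    out = [0.0] * d  # coordinates outside the selected set are set to zero
    for j in selected:
        out[j] = v[j] + laplace(scale, rng)
    return out, selected


# Private Max: the special case s = 1 returns a single privately selected index.
rng = random.Random(0)
_, (j_max,) = noisy_hard_threshold([0.5, -3.0, 1.0], 1, 1.0, 1e-5, 0.01, rng)
```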

2.2 Federated Learning

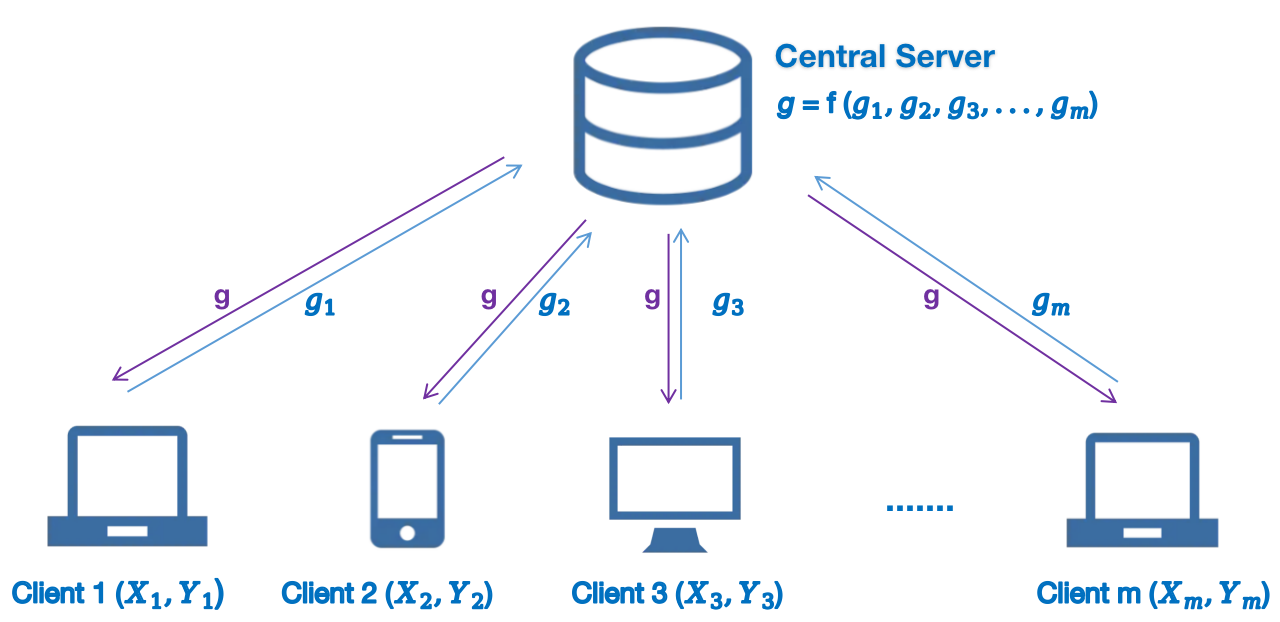

Federated learning, introduced in (mcmahan2017communication, ), trains a machine learning model across multiple devices without exchanging data samples. A central server coordinates the process, and each local machine sends model updates that are aggregated centrally. Figure 1 illustrates the basic concept of federated learning.

One characteristic of federated learning is that models are trained locally, and only parameters and updates are transferred to the central server and shared with each node. Communication between local machines and the server is bidirectional: machines send updates to the central server and, in return, receive aggregated information after processing. Communication among local machines is prohibited to prevent privacy leakage. Intuitively, federated learning therefore provides a certain level of privacy.

Although these settings lack rigorous definitions, federated learning commonly considers two main central server settings: the untrusted central server setting and the trusted central server setting (lowy2021private, ; wei2020federated, ; mcmahan2022federated, ; geyer2017differentially, ). In the first setting, the central server is untrusted, so every piece of information sent from a machine to the central server must be differentially private. In the second setting, we assume that a trusted central server exists. Raw information can then be sent safely from the machines to the central server without additional privacy measures. However, to prevent information leakage among local machines, the information sent back from the server must also be differentially private.
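The two server settings differ in where noise enters the pipeline. The schematic sketch below assumes plain averaging as the aggregation rule. The caller supplies `privatize`, a hypothetical placeholder for any mechanism, such as the Gaussian mechanism.

```python
from typing import Callable, List

Vector = List[float]


def untrusted_round(local_vectors: List[Vector], privatize: Callable[[Vector], Vector]) -> Vector:
    """Untrusted server: every message is privatized on its machine before leaving it."""
    messages = [privatize(v) for v in local_vectors]  # one noise draw per machine
    m = len(messages)
    return [sum(col) / m for col in zip(*messages)]  # the server only averages noisy messages


def trusted_round(local_vectors: List[Vector], privatize: Callable[[Vector], Vector]) -> Vector:
    """Trusted server: raw messages are pooled; only the broadcast back is privatized."""
    m = len(local_vectors)
    aggregate = [sum(col) / m for col in zip(*local_vectors)]  # the server sees raw vectors
    return privatize(aggregate)  # a single noise draw before broadcasting
```

The sketch shows only the message flow, not the noise calibration. In the trusted case the aggregate pools everyone's data, so a single datum changes it less. The noise needed there is therefore typically smaller than the combined per-machine noise of the untrusted case.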

Another key aspect of federated learning is that the datasets on each local machine are commonly not independent and identically distributed (i.i.d.). This allows federated learning to train on heterogeneous datasets, aligning with practical scenarios where the datasets on different machines are typically diverse and their sizes may also vary. We will demonstrate that federated learning can efficiently estimate the local model when models on different local machines differ but share some similarities, a concept we refer to as heterogeneous federated learning in Section 5.

2.3 Problem Formulation

In this paper, we assume that there exist a central server and local machines. We denote the data on these machines by , respectively, with . On any machine , there are data points . For simplicity, we assume that every machine holds the same number of data points; the results can be easily generalized to cases where the sample sizes differ across machines.

We consider both untrusted and trusted central server settings. In the untrusted setting, we require that the information sent from local machines to the server be private. In this scenario, we show that in the high-dimensional setting, even with sparsity assumptions, no procedure can achieve a small estimation error. In the trusted setting, we consider the high-dimensional linear regression problem with -sparse . We first study the case where all machines share the same , referred to as homogeneous federated learning. We then study a more general case, referred to as heterogeneous federated learning, where the models on different machines are not identical but share certain similarities. We show that our algorithm adapts to such similarity: the more similar the models, the faster the rate of convergence.

3 An Impossibility Result in the Untrusted Central Server Setting

In this section, we study the untrusted server setting, where the local machines must send privatized information to the central server to ensure privacy. We prove an impossibility result for high-dimensional settings, where the data dimension is comparable to or greater than the sample size. In such settings, accurate estimation is infeasible even for a simple sparse mean estimation problem.

As mentioned in Section 2.3, we consider a federated learning setting with machines, where each machine handles data points . Let . We assume that each data point follows a Gaussian distribution , where is a sparse -dimensional vector with sparsity . The goal is to estimate in the federated learning setting when the central server is untrusted. In this section, we provide an optimal rate of convergence for this problem and show that the untrusted central server setting is not suited for high-dimensional problems.

We begin by deriving the minimax lower bound, which characterizes the fundamental difficulty of this estimation problem. In the untrusted server setting, we additionally assume that each piece of information sent from a local machine to the central server satisfies -differential privacy. To achieve this, we introduce the privacy channel , a function that privatizes the information transmitted from the local machines. Given the input and the privacy channel , the resulting output represents all the information, from multiple rounds, transmitted to the central server. Precisely, the differential privacy guarantee must hold for any two adjacent datasets and , differing by only one data point on any local machine, and for any output representing the information sent from the local machine to the central server.

We consider any mechanism in the federated learning setting with local machines and one central server, operating on the dataset . The mechanism serves as a procedure to estimate . Each local machine collaborates exclusively with the central server, without direct interaction with other machines. On each machine , the mechanism uses the privacy channel and data sample to generate , which is then transmitted to the central server. The central server receives the information from all machines. After multiple rounds of collaboration between the local machines and the central server, we obtain the sparse and private estimator . We denote the class of all mechanisms that satisfy the above constraints by . In this setting, Theorem 1 gives a lower bound on the estimation error of the mean.

Theorem 1

Suppose is generated as above. Let be a -sparse -dimensional mean of Gaussian distribution satisfying . We consider the estimation of the mean vector under the untrusted central server federated learning setting with local machines and data points in each machine. Then, there exists a constant such that

The lower bound contains two terms. The first term, of order , is the minimax risk of mean estimation using only the samples on a single local machine. The second term, of order , accounts for the error of federated learning across multiple machines under privacy constraints. Theorem 1 therefore shows that no mechanism can outperform the better of two strategies. The first strategy estimates the mean using only the local machine. The second adopts the federated learning approach and combines information from different machines. However, the latter approach must incur at least a rate of , which grows linearly in the dimension . This result shows that privacy constraints significantly limit the efficiency of federated learning in high-dimensional settings. On the other hand, as the number of machines increases, federated learning can attain better performance, which highlights its merit.

We also show that the lower bound in Theorem 1 is tight by providing a matching upper bound.

Theorem 2

Suppose that conditions in Theorem 1 hold. Then, there exists an -differentially private algorithm for the estimation of as

where is some constant.

The proof constructs an algorithm that takes signs, which transforms the Gaussian mean estimation problem into a Bernoulli mean estimation problem. The construction is motivated by Algorithm 2 in (acharya2020general, ), where the authors discuss the -bit protocol for estimating product Bernoulli distributions. More details of the algorithm are deferred to Section A.2. Together, Theorems 1 and 2 give the optimal rate of convergence for sparse mean estimation in the differentially private federated learning setting. As a result, when the central server is untrusted, no procedure can achieve accurate estimation in the high-dimensional regime. This highlights the necessity of the trusted server setting for statistical estimation and inference in high-dimensional federated learning. In the following sections, we develop estimation and inference procedures under the trusted server setting.
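The sketch below conveys the intuition behind the sign-based construction through a deliberately simplified one-bit protocol. It is not the algorithm of Section A.2, and it does not exploit sparsity. It rests on the following assumptions (ours):

- the data are unit-variance Gaussian;
- each machine reports on one coordinate, assigned round-robin;
- each machine's bit is privatized with ε-randomized response, which is ε-DP for a single bit whatever data produced it.

Write m for the number of machines and n for the points per machine (our notation). Each coordinate is then informed by only about m/d machines. For small ε, the variance of each coordinate estimate behaves roughly like d/(m·n·ε²) up to constants. This illustrates how a dependence on d can arise when each privatized message is limited. The sketch does not claim to attain the rate in Theorem 2.

```python
import math
import random
from statistics import NormalDist


def randomized_response(bit, epsilon, rng):
    """Report the true bit with probability e^eps / (1 + e^eps); epsilon-DP for one bit."""
    keep = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return bit if rng.random() < keep else 1 - bit


def one_bit_federated_mean(machines, d, epsilon, rng):
    """machines: list of local datasets, each a list of n points in R^d (n equal across machines)."""
    if len(machines) < d:
        raise ValueError("need at least one machine per coordinate")
    flip = 1.0 / (1.0 + math.exp(epsilon))
    n = len(machines[0])
    reports = [[] for _ in range(d)]
    for i, data in enumerate(machines):
        j = i % d  # hypothetical assignment: machine i reports on coordinate i mod d only
        local_mean = sum(x[j] for x in data) / len(data)
        reports[j].append(randomized_response(1 if local_mean > 0 else 0, epsilon, rng))
    estimate = []
    for bits in reports:
        raw = sum(bits) / len(bits)
        p_hat = (raw - flip) / (1.0 - 2.0 * flip)  # undo the randomized-response bias
        p_hat = min(max(p_hat, 1e-6), 1.0 - 1e-6)  # keep the inverse CDF finite
        # With unit-variance Gaussian data, P(local mean > 0) = Phi(sqrt(n) * mu_j).
        estimate.append(NormalDist().inv_cdf(p_hat) / math.sqrt(n))
    return estimate
```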

4 Homogeneous Federated Learning Setting

4.1 Algorithms for Estimation Problems

In this section, we consider the setting of a trusted central server, where local machines fully trust the central server and send unprivatized information to it without implementing privacy measures. However, when the central server sends information back to the local machines, it must ensure that this information is privatized to avoid any privacy leakage across local machines.

In this subsection, we first focus on the statistical estimation problems in this setting and then develop inference results in the next subsection. More specifically, our primary focus is on the linear regression problem in a high-dimensional setting, where the ground truth, denoted as , is a sparse -dimensional vector. We initially study the simpler case in this section, where the underlying generative models for each local machine are identical, which we refer to as the homogeneous federated learning setting. A more complicated heterogeneous setting will be discussed in the following section. Specifically, we consider the following high-dimensional linear regression model:

where we assume that is the error term whose coordinates are independent and follow a sub-Gaussian distribution with variance proxy , denoted by . The design matrix is a random matrix whose rows follow a sub-Gaussian distribution with covariance matrix .

We first introduce the parameter estimation algorithm under differentially private federated settings with a trusted central server.

In Step 1 of Algorithm 2, the information computed on each local machine is transmitted to the central server. The second step involves calculations performed at the central server. Prior to sending the information back to the local machines, it undergoes privacy preservation through the application of the NoisyHT algorithm, as introduced in Algorithm 1. Subsequently, the local machine updates its estimation based on the information received from the central server.

We compare Algorithm 2 with Algorithm 4.2 in (cai2019cost, ), which addresses the private estimation in linear regression under non-federated learning settings. Unlike the latter, our algorithm does not transmit all data points to the central server. Instead, we calculate the gradient updates locally on each machine and send only these local gradients to the server. This design enhances privacy protection, as the original data remains visible only on the local machine and is not exposed externally. Furthermore, this approach of gradient updates also reduces communication costs by transmitting only a -dimensional vector from each local machine for the gradient update. Previous research has also considered non-private distributed methods for linear regression problems, such as (lee2017communication, ; zhang2012communication, ). Our algorithm, however, ensures differential privacy. In practice, the sparsity level can be determined using a private version of cross-validation, while other parameters may be pre-chosen based on our theoretical analysis.
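The following simplified Python sketch illustrates the communication pattern described for Algorithm 2. The following assumptions are ours and are not taken from the algorithm box:

- responses are truncated to [−R, R];
- the server takes a gradient step of size η on the averaged least-squares gradient;
- the privacy budget is split evenly over T iterations;
- `lam` is a caller-supplied per-coordinate sensitivity bound.

The value of `lam` must come from a sensitivity analysis such as the paper's. An arbitrary value, for example a tiny one chosen only to exercise the code, provides no privacy guarantee.

```python
import math
import random


def laplace(scale, rng):
    """One Laplace(0, scale) draw as a scaled difference of two Exp(1) variables."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def noisy_ht(v, s, eps, delta, lam, rng):
    """Peeling-style noisy hard thresholding (assumed constants; see the NoisyHT description)."""
    scale = lam * 2.0 * math.sqrt(3.0 * s * math.log(1.0 / delta)) / eps
    remaining, out = set(range(len(v))), [0.0] * len(v)
    for _ in range(s):
        j = max(remaining, key=lambda k: abs(v[k]) + laplace(scale, rng))
        remaining.remove(j)
        out[j] = v[j] + laplace(scale, rng)  # fresh noise on each kept coordinate
    return out


def local_gradient(X, y, theta, R):
    """Least-squares gradient on one machine, with responses truncated to [-R, R]."""
    n = len(X)
    grad = [0.0] * len(theta)
    for row, yi in zip(X, y):
        resid = sum(a * b for a, b in zip(row, theta)) - max(-R, min(R, yi))
        for j, a in enumerate(row):
            grad[j] += a * resid / n
    return grad


def federated_private_iht(machines, s, eps, delta, eta, T, R, lam, rng):
    """machines: list of (X, y) pairs that stay on the local machines."""
    d = len(machines[0][0][0])
    theta = [0.0] * d
    for _ in range(T):
        # Step 1: each machine sends its raw local gradient to the trusted server.
        grads = [local_gradient(X, y, theta, R) for X, y in machines]
        # Step 2: the server averages, takes a gradient step, and privatizes with NoisyHT.
        g = [sum(col) / len(grads) for col in zip(*grads)]
        step = [t - eta * gj for t, gj in zip(theta, g)]
        theta = noisy_ht(step, s, eps / T, delta / T, lam, rng)
        # Step 3: the privatized s-sparse theta is broadcast back to every machine.
    return theta
```

Only a d-dimensional gradient leaves each machine per iteration, which matches the communication saving described above.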

4.2 Algorithms for Inference Problems

In this subsection, we focus on statistical inference problems in the homogeneous federated learning setting, such as constructing coordinate-wise confidence intervals for parameters and performing simultaneous inference. To begin, we develop a method for constructing coordinate-wise confidence intervals, for example, for the -th index of , . However, it is important to note that the output of Algorithm 2 is biased due to hard thresholding. To overcome this bias, we employ a de-biasing procedure, a common technique in high-dimensional statistics, as demonstrated in previous studies (javanmard2014confidence, ). This procedure involves approximating the -th column of the precision matrix to construct confidence intervals for each . Subsequently, we focus on obtaining an estimate of the precision matrix in a private manner.

Algorithm 3 has a structure similar to that of Algorithm 2, since both iterate communication between the central server and the local machines. In each iteration, the local machines first transmit information to the server. The central server then performs calculations and applies the NoisyHT algorithm (Algorithm 1) to privatize the result. Finally, each local machine updates the gradient and proceeds to the next iteration. The primary distinction between the two algorithms lies in how the gradient is computed on each machine.

Denote the output of Algorithm 2 as and the output of Algorithm 3 as . Then the de-biased differentially private estimator of is given by

| (4.1) |

where , and is the injected random noise to ensure privacy, following a Gaussian distribution , where with some constants defined later.

The debiased estimator in (4.1) enables us to construct differentially private confidence intervals. Although the variance of the error term in the linear regression model is usually unknown, we can estimate it from the data in a private manner. The estimate is based on the residuals between the response and the fitted value . Algorithm 4 summarizes the method for estimating in the private federated learning setting.
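The following minimal sketch computes a private variance estimate in the spirit of Algorithm 4. It rests on three assumptions:

- the estimate θ̂ has already been released privately, so it can be treated as fixed;
- squared residuals are truncated at R²;
- the pooled average receives Laplace noise calibrated to its sensitivity R²/N when one datum is replaced.

The truncation level and the choice of Laplace noise are ours; Algorithm 4 may differ. In the federated version, each machine would send its local sum of truncated squared residuals and its count to the trusted server.

```python
import random


def private_noise_variance(machines, theta_hat, R, epsilon, rng):
    """epsilon-DP estimate of sigma^2 from truncated squared residuals (theta_hat treated as fixed)."""
    total, N = 0.0, 0
    for X, y in machines:  # each machine could send only (local sum, local count)
        for row, yi in zip(X, y):
            r = yi - sum(a * b for a, b in zip(row, theta_hat))
            total += min(r * r, R * R)  # each term lies in [0, R^2]
            N += 1
    sensitivity = R * R / N  # replacing one datum moves the average by at most R^2 / N
    noise = (sensitivity / epsilon) * (rng.expovariate(1.0) - rng.expovariate(1.0))
    return max(total / N + noise, 0.0)  # clamping at zero is post-processing
```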

When examining the convergence rates of and in Theorem 3, we observe the crucial roles of the largest and smallest restricted eigenvalues of . Since these eigenvalues directly influence the construction of confidence intervals and cannot be directly obtained from the data, their private estimation becomes essential. Below, we outline an algorithm to estimate the largest restricted eigenvalue, . To estimate the smallest restricted eigenvalue, , the same algorithm can be used by modifying Step 4 from “argmax” to “argmin”.
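Algorithm 5 estimates these quantities privately. On small problems, a brute-force computation can serve as a non-private reference for checking such an estimator. The sketch assumes the usual definition of the s-restricted eigenvalues: the largest and smallest values of vᵀΣv over unit vectors with at most s non-zero entries. By eigenvalue interlacing, it suffices to scan all size-s principal submatrices. The cost grows like (d choose s), so this is only a checking tool, and the helper names are hypothetical.

```python
import math
from itertools import combinations


def symmetric_eigenvalues(A, sweeps=50):
    """Eigenvalues of a small symmetric matrix via cyclic Jacobi rotations."""
    n = len(A)
    a = [list(row) for row in A]
    for _ in range(sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < 1e-20:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [a[i][i] for i in range(n)]


def restricted_eigenvalues(Sigma, s):
    """(largest, smallest) s-restricted eigenvalues by scanning all size-s supports."""
    largest, smallest = -math.inf, math.inf
    for support in combinations(range(len(Sigma)), s):
        sub = [[Sigma[i][j] for j in support] for i in support]
        eig = symmetric_eigenvalues(sub)
        largest, smallest = max(largest, max(eig)), min(smallest, min(eig))
    return largest, smallest
```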

Based on Algorithms 4 and 5, Algorithm 6 constructs coordinate-wise confidence intervals.
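The following sketch illustrates the coordinate-wise construction. It assumes the standard de-biased form θ̂_j + N⁻¹ Σᵢ ŵ_jᵀxᵢ(yᵢ − xᵢᵀθ̂) from (javanmard2014confidence, ), with plug-in standard error σ̂·√(ŵ_jᵀΣ̂ŵ_j / N). The optional `privacy_sd` argument is hypothetical: it adds the variance of the Gaussian noise injected in (4.1). Algorithm 6 may account for that noise, and for the privately estimated eigenvalues, differently. For brevity the sketch computes on pooled data. In the federated version, each machine would contribute its local sums.

```python
import math
from statistics import NormalDist


def debiased_coordinate(theta_hat, w_j, X, y, j):
    """One-step de-biasing of coordinate j (the non-private part of the correction)."""
    N = len(X)
    correction = 0.0
    for row, yi in zip(X, y):
        resid = yi - sum(a * b for a, b in zip(row, theta_hat))
        correction += sum(a * b for a, b in zip(w_j, row)) * resid
    return theta_hat[j] + correction / N


def coordinate_ci(center, sigma_hat, w_j, X, alpha, privacy_sd=0.0):
    """Two-sided (1 - alpha) interval using the plug-in variance sigma^2 * w_j^T Sigma_hat w_j / N."""
    N = len(X)
    quad = sum(sum(a * b for a, b in zip(w_j, row)) ** 2 for row in X) / N  # w_j^T Sigma_hat w_j
    se = math.sqrt(sigma_hat ** 2 * quad / N + privacy_sd ** 2)
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return center - z * se, center + z * se
```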

So far, we have focused on constructing confidence intervals for individual coordinates of the parameter vector . However, in high-dimensional settings, we are often interested in group inference problems, where hypotheses involve multiple coordinates simultaneously. Specifically, we consider testing the null hypothesis

against the alternative hypothesis,

for at least one , where is a subset of all coordinates and we allow to be of the same order as . Additionally, we construct simultaneous confidence intervals for all coordinates in . Such problems are common in high-dimensional data analysis, with applications such as multi-factor analysis of variance (hothorn2008simultaneous, ) and additive modeling (wiesenfarth2012direct, ). Previous works have discussed similar problems in the non-private setting, including (chernozhukov2013gaussian, ; zhang2017simultaneous, ; yu2022distributed, ).

To address the problem, simultaneous inference can be conducted using a test statistic

Major challenges of simultaneous inference in a private federated learning setting include: (1) minimizing the communication cost from local machines to the server while retaining all data on the local machines, and (2) ensuring the privacy of the procedure, which necessitates a tailored privacy-preserving mechanism at each step of the algorithm.

In our framework, we propose an algorithm based on the bootstrap method. As mentioned previously, to build confidence intervals we are interested in the statistic given by the maximum coordinate of over . Decomposing this statistic yields a term ; to approximate the distribution of this term, we bootstrap the residuals .

We outline the algorithm as follows: we first estimate and using Algorithms 2 and 3, respectively. Stacking for all then gives an estimator of the precision matrix . The details are provided in Algorithm 7.
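The following sketch illustrates the bootstrap idea using the Gaussian multiplier bootstrap of (chernozhukov2013gaussian, ), which may differ from the residual bootstrap used in Algorithm 7. It assumes that the per-sample score vectors for the coordinates in the group have already been computed (for a debiased estimator these typically combine a row of the precision-matrix estimate, the design row, and the residual), and it omits the privacy noise. The names `scores`, `n_boot`, and the function name are hypothetical.

```python
import math
import random


def multiplier_bootstrap_quantile(scores, alpha=0.05, n_boot=500, rng=random):
    """(1 - alpha)-quantile of max_j |n^{-1/2} * sum_i e_i * scores[i][j]| over
    draws of i.i.d. N(0, 1) multipliers e_i (Gaussian multiplier bootstrap).

    `scores` is an n x d list of per-sample score vectors for the d coordinates
    in the group; privacy noise is deliberately omitted in this sketch."""
    n, d = len(scores), len(scores[0])
    draws = []
    for _ in range(n_boot):
        e = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # Maximum over the group of the absolute multiplier-weighted sums.
        stat = max(abs(sum(e[i] * scores[i][j] for i in range(n))) for j in range(d))
        draws.append(stat / math.sqrt(n))
    draws.sort()
    # Empirical quantile: smallest draw with at least (1 - alpha) of draws at or below it.
    k = min(n_boot - 1, max(0, math.ceil((1.0 - alpha) * n_boot) - 1))
    return draws[k]
```

With a single standard-normal score column, the returned value should be close to the 0.975-quantile of the standard normal (about 1.96), and it grows as more coordinates enter the maximum.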

On line 4 of Algorithm 7, we employ the Private Max algorithm, which, as mentioned earlier, is a variant of the NoisyHT algorithm (Algorithm 1) obtained by directly picking , to privately obtain the maximum element of a vector. Note that the Private Max algorithm is applied only to a subset of . With the algorithm in place, we define as:

This quantity is used as the statistic for the inference problems below.
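A minimal sketch of a private maximum in the spirit of a single peeling step (noisy hard thresholding that selects one coordinate) is shown below. The Laplace noise, the parameter `scale`, and the function names are assumptions. Calibrating `scale` to the sensitivity of the input and to the privacy budget is left to the caller, and the exact noise choice of the Private Max algorithm is not reproduced here.

```python
import random


def laplace(scale, rng=random):
    """Draw from Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_max(values, scale, rng=random):
    """One peeling step (s = 1): select the index maximising value + Laplace noise,
    then release the selected value with fresh Laplace noise."""
    noisy = [v + laplace(scale, rng) for v in values]
    j = max(range(len(values)), key=noisy.__getitem__)
    return j, values[j] + laplace(scale, rng)
```

To release the largest absolute coordinate, as in a max-type statistic, the caller would pass the absolute values of the vector.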

As previously mentioned, we can easily construct a simultaneous confidence interval for each by:

where is obtained from our algorithm with a prespecified . Hypothesis testing is analogous: we first calculate the test statistic and obtain from our algorithm with a prespecified , and then reject if the statistic lies in the rejection region.
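Given a bootstrap quantile, the intervals and the test could look like the sketch below. The 1/sqrt(n) scaling, the argument names, and the use of one common half-width for all coordinates in the group are assumptions made for illustration, since the exact display is not reproduced here.

```python
import math


def simultaneous_intervals(estimates, c_alpha, n):
    """Intervals estimate_j -/+ c_alpha / sqrt(n) for every coordinate j in the group,
    where c_alpha estimates the (1 - alpha)-quantile of the max statistic."""
    half = c_alpha / math.sqrt(n)
    return [(b - half, b + half) for b in estimates]


def reject_group_null(estimates, null_values, c_alpha, n):
    """Reject H0 (every coordinate equals its null value) when
    sqrt(n) * max_j |estimate_j - null_j| exceeds c_alpha."""
    stat = math.sqrt(n) * max(abs(b - b0) for b, b0 in zip(estimates, null_values))
    return stat > c_alpha
```

By construction, the test rejects exactly when some hypothesized value falls outside its interval, which is the usual duality between simultaneous intervals and a max-type test.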

4.3 Theoretical Results

In this subsection, we provide theoretical guarantees for the algorithms and methods discussed in the previous subsections. Before proceeding, we outline key assumptions on the design matrix , the precision matrix , and the true parameter of the linear regression model, which are essential for our subsequent analyses.

- (P1) Parameter sparsity: The true parameter vector satisfies for some constant and .

- (P2) Precision matrix sparsity: For each column of the precision matrix , , it satisfies that for some constant and .

- (D1) Design matrix: For each row of the design matrix , denote by , is sub-Gaussian with sub-Gaussian norm .

- (D2) Bounded eigenvalues of the covariance matrix: For the covariance matrix , there exists a constant such that .

Assumptions (P1) and (P2) bound the norm and norm of the parameters and , respectively; assumption (D1) guarantees that each row of follows a sub-Gaussian distribution; and assumption (D2) requires that the covariance matrix have bounded eigenvalues. These assumptions are commonly used in the theoretical analysis of differentially private algorithms and debiased estimators (cai2020cost, ; cai2019cost, ; javanmard2014confidence, ).

Under assumptions (P1)-(D2), we analyze the algorithms presented above. We begin with the estimation problem and provide rates of convergence for and .

Theorem 3

Let be i.i.d. samples from the high-dimensional linear model. Suppose that assumptions (P1), (P2), (D1), and (D2) are satisfied. Additionally, we choose the parameters as follows: let , , , , , , , and , where are the largest and smallest s-restricted eigenvalues of .

Then there exists some absolute constant such that, if , , and for a sufficiently large constant , then, for the outputs of Algorithms 2 and 3,

(4.2)

and

(4.3)

hold with probability .

The upper bound for Algorithm 3 in (4.3) can be interpreted as follows: the first term represents the statistical error, while the second term accounts for the privacy cost. Furthermore, the result is comparable to Theorem 4.4 in (cai2019cost, ), which addresses private linear regression in a non-federated setting. This comparison suggests that the federated approach does not worsen the convergence rate, even though the data remain on the local machines. The advantages of federated learning are explored further in the heterogeneous federated learning setting, discussed in the next section.

The remainder of this subsection presents the theoretical results for the inference problem. We begin with the construction of coordinate-wise confidence intervals. As mentioned before, the noise variance is usually unknown, so we estimate it privately using Algorithm 4. Lemma 4.1 states the statistical guarantee of this procedure.

Lemma 4.1

Next, we consider a simplified version of the confidence interval, where the privacy cost is dominated by the statistical error. In this scenario, we assume that the privacy level is relatively low and the privacy constraints are loose, meaning that the privacy parameters and are relatively large, allowing for nearly cost-free estimation. We present our result in the following theorem.

Theorem 4

Suppose that the conditions in Theorem 3 hold. Assume that and . Also assume that the privacy cost is dominated by statistical error, i.e., there exists a constant such that . Then, given the de-biased estimator defined in (4.1), the confidence interval is asymptotically valid:

where

Also, the confidence interval is -differentially private.

Theorem 4 assumes that the privacy cost is dominated by the statistical error. However, when the privacy constraint is more stringent with small privacy parameters and , the privacy cost may be larger than the statistical error. In this scenario, we generalize Theorem 4 to analyze Algorithm 6. We note that the largest and smallest restricted eigenvalues of also need to be estimated by Algorithm 5. Lemma 4.2 quantifies the estimation error of the largest restricted eigenvalue of .

Lemma 4.2

If and for some constant , then the output from Algorithm 5 is -differentially private. Moreover, holds where is the largest restricted eigenvalue of .

We then present a theoretical result for the confidence interval in a more general case in Theorem 5.

Theorem 5

Compared to the non-private counterpart in (javanmard2014confidence, ), our confidence interval has a similar form but with additional noise injected to ensure privacy. When the noise level is low, the confidence interval closely approximates its non-private counterpart, so privacy is obtained at almost no additional cost. Conversely, when the privacy requirement is stringent, the confidence interval must be longer to attain the same confidence level.

Finally, for the simultaneous inference problem, we show that the -quantile of the statistic in (4.2) is close to the -quantile of computed by the bootstrap in Algorithm 7, for each . The next theorem states the statistical properties of Algorithm 7.

Theorem 6

Assume the conditions in Theorem 4 hold. Additionally, we assume that and the privacy cost is dominated by the statistical error, i.e., there exists a constant such that . We also assume that there exists a constant such that , and that , where is the number of bootstrap iterations. The noise level is chosen as . Then, computed in Algorithm 7 satisfies

Theorem 6 has a useful application: we can obtain a good estimator of the -quantile of using the bootstrap method and then use it to construct confidence intervals or perform hypothesis tests. Numerical results in Section 6 further support these claims.

5 Heterogeneous Federated Learning Setting

5.1 Methods and Algorithms

In this section, we consider a more general setting where the parameters of interest on each machine are not identical, but they share some similarities. Specifically, we consider the scenario where, on each machine , we assume a linear regression model:

where represents the true parameter on machine . We assume that each is a vector whose coordinates follow a sub-Gaussian distribution: subG, i.i.d. We also assume that each row of follows a sub-Gaussian distribution i.i.d. with mean zero and covariance matrix . We further quantify the similarity of each by assuming that there exists a subset with satisfying for any .

A naive approach would be to estimate each locally, as in the non-private setting. However, in the context of federated learning, we can obtain a sharper rate of convergence by exploiting the similarities of the models across machines. To achieve this, we decompose into the sum of two vectors, , where captures the signals common to all , and captures the signals unique to each machine.

We employ a two-stage procedure to estimate each : in the first stage, we estimate using Algorithm 2 with a sparsity level of indicating the number of shared signals. In the second stage, we estimate on the individual machine. Our final estimation of is given by . The procedure is summarized in Algorithm 8.
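To convey the two-stage structure, the sketch below applies it to a simplified Gaussian sequence model in which machine k observes a noisy copy of its parameter directly, rather than through a design matrix. Plain hard thresholding stands in for Algorithm 2 and for the local estimator, and no privacy noise is added. The model, the function names, and the sparsity arguments `s0` and `s_local` are assumptions for illustration only.

```python
def hard_threshold(v, s):
    """Keep the s entries of v with the largest magnitude and zero out the rest."""
    keep = set(sorted(range(len(v)), key=lambda j: abs(v[j]), reverse=True)[:s])
    return [v[j] if j in keep else 0.0 for j in range(len(v))]


def two_stage(ys, s0, s_local):
    """Simplified, non-private analogue of the two-stage idea: ys[k] is a noisy
    observation of theta_k = theta_0 + delta_k on machine k."""
    m, p = len(ys), len(ys[0])
    # Stage 1: pool across machines and keep the s0 strongest shared signals.
    pooled = [sum(y[j] for y in ys) / m for j in range(p)]
    theta0_hat = hard_threshold(pooled, s0)
    out = []
    for y in ys:
        # Stage 2: fit the machine-specific part to the local residual signal.
        delta_hat = hard_threshold([y[j] - theta0_hat[j] for j in range(p)], s_local)
        out.append([a + b for a, b in zip(theta0_hat, delta_hat)])
    return out
```

Pooling averages away the machine-specific entries in the first stage (each contributes only 1/m of its size), which is why the shared support can be recovered before the local corrections are fitted.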

Similar to the previous section, we next address inference problems. Our algorithms consist of two parts: the construction of coordinate-wise confidence intervals and simultaneous inference. We begin by describing the algorithm for coordinate-wise confidence intervals in Algorithm 9.

In Algorithm 9, is the -differentially private estimator of the -th row of the precision matrix, i.e., the inverse of the covariance matrix . We define the variable in step 3 by

(5.1)

We then provide Algorithm 10 for the simultaneous inference problem. As in the previous section, we can perform simultaneous inference for each to construct simultaneous confidence intervals and carry out hypothesis tests.

5.2 Theoretical Results

In this subsection, we provide a theoretical analysis of the algorithms in the heterogeneous federated learning setting. We begin with the estimation problem, whose result resembles Theorem 3.

Intuitively, when are similar but not identical, federated learning can be used to estimate their common elements and the remaining parameters can be estimated individually on each machine. This results in a sharper rate of convergence as the estimation of the common component can exploit the information from more data points. We summarize the result in Theorem 7.

Theorem 7

In the case where , i.e., the models differ substantially across machines, the third and fourth terms on the right-hand side of (5.2) dominate the estimation error, and the estimation accuracy of via federated learning approaches that achievable with a single machine (). At a high level, this is because information from other machines does not help estimation when the models are highly dissimilar across machines. In contrast, with a large , federated learning can leverage the similarity of models to improve estimation accuracy; the rate in (5.2) then approaches the rate in (4.2) for the homogeneous federated learning setting when .

We next present our results for the inference problems. To start, we verify that the output of Algorithm 9 is an asymptotically valid confidence interval for .

Theorem 8

Finally, we provide a statistical guarantee for Algorithm 10. Similar to the previous section, we define as:

Theorem 9

Assume that the conditions in Theorem 4 hold. We additionally assume that and the privacy cost is dominated by the statistical error, i.e., there exists a constant such that and . We also assume that there exists a constant such that . The noise level is chosen as . Then,

Theorem 9 states that the -quantile of is asymptotically close to , which validates the simultaneous confidence intervals based on obtained by the bootstrap method. This result allows us to perform simultaneous inference, such as constructing confidence intervals and testing hypotheses, based on .

6 Simulations

In this section, we conduct simulations to investigate the performance of the algorithms proposed in the preceding sections. Specifically, we explore the more complex heterogeneous federated learning setting, where the models on different machines differ but share common structure. Our simulations are divided into three main parts.

In Section 6.1, we present the simulation results for the coordinate-wise estimation problem within a private federated setting, discussing the differences between the estimated and the true across various scenarios. We also examine the coverage of our proposed confidence intervals. Section 6.2 extends the settings to simultaneous inference.

We generate simulation datasets as follows. First, we sample the data , for , where each follows a Gaussian distribution with mean zero and covariance matrix . We set such that for each , . On each machine, the true parameter is a -sparse unit vector with , where is the number of non-zero shared signals. For each , we set the first shared elements to and additionally select machine-specific entries from the remaining indices to be . We then compute , where each follows a Gaussian distribution with .
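The following sketch generates data for one machine along the lines described above. Because the covariance matrix and the non-zero value are not reproduced here, it assumes an identity covariance, equal non-zero entries of size 1/sqrt(s) (which makes the parameter a unit vector), and Gaussian noise with standard deviation `sigma`; the function and argument names are hypothetical.

```python
import math
import random


def make_machine_data(n, p, s, s0, sigma=1.0, rng=random):
    """Hypothetical generator for one machine: identity covariance (assumption),
    an s-sparse unit-norm beta whose first s0 entries are shared and whose other
    s - s0 non-zeros are drawn at random from the remaining indices."""
    beta = [0.0] * p
    support = list(range(s0)) + rng.sample(range(s0, p), s - s0)
    for j in support:
        beta[j] = 1.0 / math.sqrt(s)  # equal magnitudes give ||beta||_2 = 1
    X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
    y = [sum(xj * bj for xj, bj in zip(row, beta)) + rng.gauss(0.0, sigma) for row in X]
    return X, y, beta
```

Calling the function once per machine with the same `s0` yields parameters that agree on their first `s0` entries and differ in their machine-specific ones.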

6.1 Estimation and Confidence Interval

In this subsection, we investigate the estimation accuracy and confidence interval coverage of our algorithm for coordinate-wise inference. Namely, we consider the following scenarios:

- Fix the number of machines , , , , and . Set the number of samples on each machine to be , respectively.

- Fix the number of samples on each machine , , , , and . Set the number of machines to be , respectively.

- Fix the number of machines , the number of samples on each machine , , , . Set , , , respectively.

- Fix the number of machines , the number of samples on each machine , , , , . Set , respectively.

- Fix the number of machines , the number of samples on each machine , , , and . Set , respectively.

- Fix the number of machines , the number of samples on each machine , , , and . Set , respectively.

For each setting, we report the average estimation error over 50 replications. In each setting, we also calculate the confidence interval with for each index of using our proposed algorithm. To evaluate the quality of the confidence intervals, we define as the coverage of the confidence interval:

We also define the coverage for non-zero and zero entries of by and , respectively, where is the set of non-zero indices in .
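The three coverage measures can be computed from one replication as in the sketch below, which averages the indicator that each true coordinate lies in its interval over all indices, over the support, and over its complement. Averaging the returned values over replications then gives quantities of the kind reported in the table. The function name and input format are assumptions.

```python
def coverage(intervals, beta):
    """Return (overall, non-zero, zero) empirical coverage for one replication.

    intervals[j] = (lower, upper) for coordinate j; beta is the true parameter."""
    hit = [lo <= b <= hi for (lo, hi), b in zip(intervals, beta)]
    support = [j for j, b in enumerate(beta) if b != 0]
    off_support = [j for j, b in enumerate(beta) if b == 0]

    def frac(idx):
        # Fraction of the given coordinates whose interval covers the truth.
        return sum(hit[j] for j in idx) / len(idx) if idx else float("nan")

    return frac(range(len(beta))), frac(support), frac(off_support)
```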

We report the estimation error, the coverage of the true parameter, and the length of the confidence interval for each configuration listed above in Table 2:

Simulation Results

| Setting | Estimation Error (Sd) | Coverage (all) | Coverage (non-zero) | Coverage (zero) | Length |
|---|---|---|---|---|---|
| (3000,15,800,15,8,0.8) | 0.0213 (0.0028) | 0.940 | 0.929 | 0.940 | 0.0532 |
| (4000,15,800,15,8,0.8) | 0.0170 (0.0032) | 0.945 | 0.960 | 0.944 | 0.0437 |
| (5000,15,800,15,8,0.8) | 0.0141 (0.0021) | 0.940 | 0.945 | 0.940 | 0.0378 |
| (4000,10,800,15,8,0.8) | 0.0218 (0.0047) | 0.945 | 0.945 | 0.945 | 0.0437 |
| (4000,20,800,15,8,0.8) | 0.0126 (0.0025) | 0.944 | 0.941 | 0.944 | 0.0437 |
| (4000,15,600,15,8,0.8) | 0.0162 (0.0031) | 0.946 | 0.933 | 0.946 | 0.0436 |
| (4000,15,1000,15,8,0.8) | 0.0191 (0.0027) | 0.940 | 0.933 | 0.940 | 0.0439 |
| (4000,15,800,15,4,0.8) | 0.0188 (0.0032) | 0.952 | 0.945 | 0.953 | 0.0420 |
| (4000,15,800,15,12,0.8) | 0.0137 (0.0016) | 0.944 | 0.937 | 0.944 | 0.0462 |
| (4000,15,800,10,8,0.8) | 0.0105 (0.0017) | 0.946 | 0.947 | 0.946 | 0.0389 |
| (4000,15,800,20,8,0.8) | 0.0243 (0.0036) | 0.941 | 0.932 | 0.941 | 0.0497 |
| (4000,15,800,15,8,0.5) | 0.0240 (0.0038) | 0.940 | 0.949 | 0.940 | 0.0550 |
| (4000,15,800,15,8,0.3) | 0.0943 (0.0281) | 0.928 | 0.941 | 0.928 | 0.0792 |

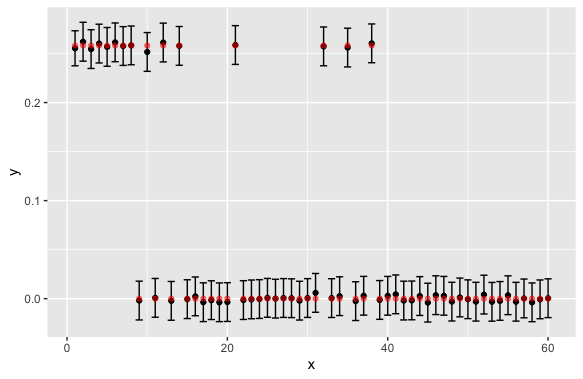

From Table 2, we observe results consistent with our theory. For estimation, the error decreases as the privacy parameter grows, i.e., as less privacy is required. More data points on each machine, more machines, and a smaller sparsity level also lead to better estimation accuracy. For confidence intervals, the coverage for , , and is close to the nominal level and stable across settings. To further illustrate this, we pick the setting of and plot the confidence intervals against the true values over 50 replications in Figure 3, for 60 randomly selected coordinates out of .

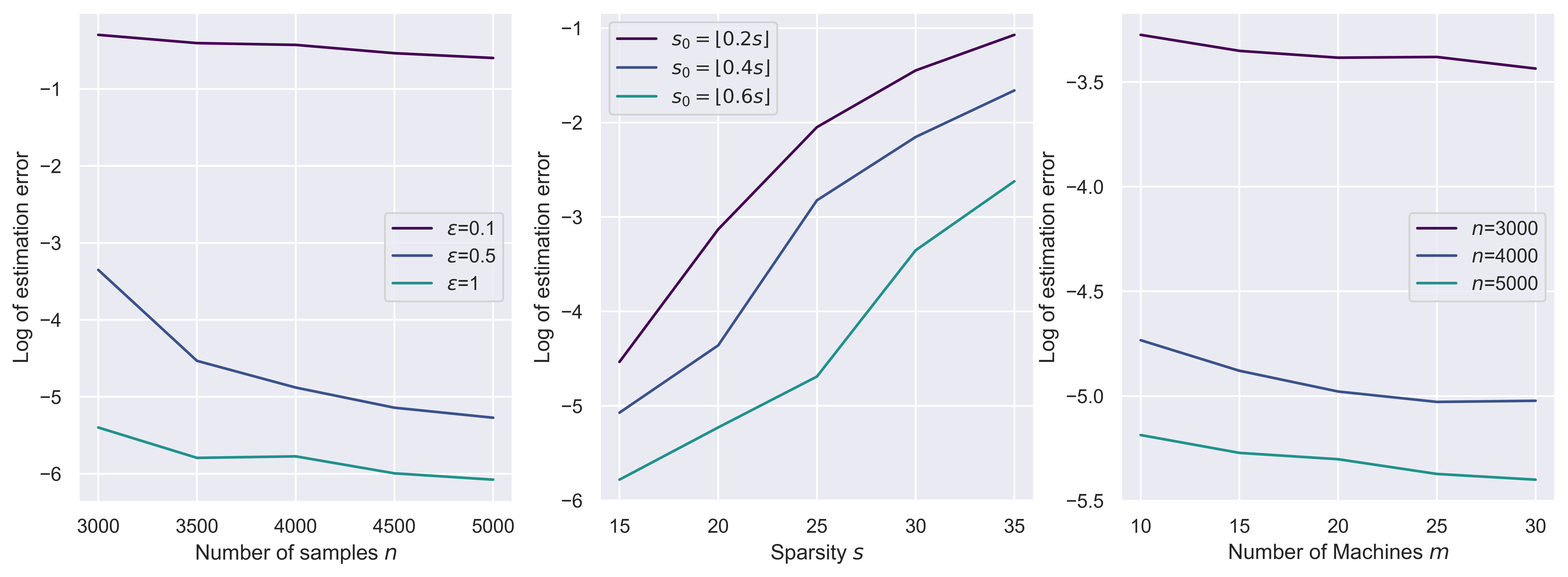

We also summarize our results in Figure 4, where we plot the estimation error against the number of samples, the sparsity, and the number of machines. For the left figure, we fixed , , , ; for the middle figure, we fixed , , , ; and for the right figure, we fixed , , , . The error is averaged over replications.

From the left figure in Figure 4, we observe that the error decreases as increases, and that a larger privacy parameter yields a smaller estimation error. From the middle figure, we observe that the estimation error increases with the sparsity level; it also increases when the sparsity of the shared signal becomes large. In the right figure, the error decreases consistently as the number of machines increases. All these observations support Theorem 7.

6.2 Simultaneous Inference

In this subsection, we investigate our proposed algorithms for simultaneous inference problems. We aim to build simultaneous confidence intervals when , under three settings: , , and . For each setting, we run 50 replications and report the coverage and length of the confidence intervals. The results are shown in Table 5.

Simulation Results for Simultaneous Inference

| Setting | Coverage, setting 1 | Coverage, setting 2 | Coverage, setting 3 | Length, setting 1 | Length, setting 2 | Length, setting 3 |
|---|---|---|---|---|---|---|
| (3000,15,800,15,8,0.8) | 0.981 | 0.883 | 0.983 | 0.091 | 0.066 | 0.091 |
| (4000,15,800,15,8,0.8) | 0.985 | 0.910 | 0.987 | 0.079 | 0.057 | 0.079 |
| (5000,15,800,15,8,0.8) | 0.987 | 0.875 | 0.990 | 0.071 | 0.051 | 0.071 |
| (4000,10,800,15,8,0.8) | 0.989 | 0.894 | 0.991 | 0.079 | 0.057 | 0.079 |
| (4000,20,800,15,8,0.8) | 0.983 | 0.898 | 0.986 | 0.079 | 0.057 | 0.079 |
| (4000,15,600,15,8,0.8) | 0.993 | 0.878 | 0.995 | 0.077 | 0.057 | 0.077 |
| (4000,15,1000,15,8,0.8) | 0.994 | 0.878 | 0.997 | 0.080 | 0.057 | 0.080 |
| (4000,15,800,15,4,0.8) | 0.983 | 0.772 | 0.993 | 0.079 | 0.057 | 0.079 |
| (4000,15,800,15,12,0.8) | 0.975 | 0.957 | 0.974 | 0.079 | 0.058 | 0.079 |
| (4000,15,800,10,8,0.8) | 0.986 | 0.976 | 0.985 | 0.079 | 0.055 | 0.079 |
| (4000,15,800,20,8,0.8) | 0.974 | 0.850 | 0.982 | 0.078 | 0.059 | 0.078 |
| (4000,15,800,15,8,0.5) | 0.940 | 0.882 | 0.940 | 0.103 | 0.083 | 0.102 |
| (4000,15,800,15,8,0.3) | 0.953 | 0.789 | 0.975 | 0.127 | 0.097 | 0.126 |

From the simulation results, we observe that our proposed simultaneous confidence intervals mostly exhibit over-coverage for , and under-coverage for . This pattern has also been observed in previous works on simultaneous inference (van2014asymptotically, ; zhang2017simultaneous, ). It may therefore be attributable to the inherent nature of simultaneous inference rather than to our algorithm.

7 Discussions and Future Work

In this paper, we study high-dimensional estimation and inference problems in the context of federated learning. In scenarios involving an untrusted central server, our findings reveal that accurate estimation is infeasible, as the rate of convergence deteriorates in proportion to the dimension . Conversely, in the trusted central server setting, we developed algorithms that achieve an optimal rate of convergence. We also addressed inference, developing methods for both coordinate-wise confidence intervals and simultaneous inference.

There are several directions for further research. Currently, our models presume that each machine operates under a linear regression framework. Our algorithms could potentially be extended to accommodate more complex models, such as generalized linear models, classification models, or broader machine learning models. Another interesting extension would be to refine our understanding of model similarity across machines. Although Section 5 currently bases model similarity on norms, reflecting non-sparse patterns, future studies could explore norm-based similarities, particularly focusing on and norms, to enhance our approach to heterogeneous federated learning settings.

References

- [1] Martin Abadi, Andy Chu, Ian Goodfellow, H Brendan McMahan, Ilya Mironov, Kunal Talwar, and Li Zhang. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC conference on computer and communications security, pages 308–318, 2016.

- [2] Jayadev Acharya, Clément L Canonne, and Himanshu Tyagi. General lower bounds for interactive high-dimensional estimation under information constraints. arXiv preprint arXiv:2010.06562, 2020.

- [3] Naman Agarwal, Ananda Theertha Suresh, Felix Yu, Sanjiv Kumar, and H Brendan Mcmahan. cpsgd: Communication-efficient and differentially-private distributed sgd. arXiv preprint arXiv:1805.10559, 2018.

- [4] Leighton Pate Barnes, Wei-Ning Chen, and Ayfer Özgür. Fisher information under local differential privacy. IEEE Journal on Selected Areas in Information Theory, 1(3):645–659, 2020.

- [5] Leighton Pate Barnes, Yanjun Han, and Ayfer Ozgur. Lower bounds for learning distributions under communication constraints via fisher information. Journal of Machine Learning Research, 21(236):1–30, 2020.

- [6] Andrea Bittau, Úlfar Erlingsson, Petros Maniatis, Ilya Mironov, Ananth Raghunathan, David Lie, Mitch Rudominer, Ushasree Kode, Julien Tinnes, and Bernhard Seefeld. Prochlo: Strong privacy for analytics in the crowd. In Proceedings of the 26th symposium on operating systems principles, pages 441–459, 2017.

- [7] Mark Braverman, Ankit Garg, Tengyu Ma, Huy L Nguyen, and David P Woodruff. Communication lower bounds for statistical estimation problems via a distributed data processing inequality. In Proceedings of the forty-eighth annual ACM symposium on Theory of Computing, pages 1011–1020, 2016.

- [8] T Tony Cai, Yichen Wang, and Linjun Zhang. The cost of privacy: Optimal rates of convergence for parameter estimation with differential privacy. arXiv preprint arXiv:1902.04495, 2019.

- [9] T Tony Cai, Yichen Wang, and Linjun Zhang. The cost of privacy in generalized linear models: Algorithms and minimax lower bounds. arXiv preprint arXiv:2011.03900, 2020.

- [10] Victor Chernozhukov, Denis Chetverikov, and Kengo Kato. Gaussian approximations and multiplier bootstrap for maxima of sums of high-dimensional random vectors. The Annals of Statistics, 41(6):2786–2819, 2013.

- [11] John Duchi and Ryan Rogers. Lower bounds for locally private estimation via communication complexity. In Conference on Learning Theory, pages 1161–1191. PMLR, 2019.

- [12] Cynthia Dwork and Vitaly Feldman. Privacy-preserving prediction. In Conference On Learning Theory, pages 1693–1702. PMLR, 2018.

- [13] Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam Smith. Calibrating noise to sensitivity in private data analysis. In Theory of cryptography conference, pages 265–284. Springer, 2006.

- [14] Cynthia Dwork, Aaron Roth, et al. The algorithmic foundations of differential privacy. Foundations and Trends in Theoretical Computer Science, 9(3-4):211–407, 2014.

- [15] Cynthia Dwork, Adam Smith, Thomas Steinke, and Jonathan Ullman. Exposed! a survey of attacks on private data. Annual Review of Statistics and Its Application, 4:61–84, 2017.

- [16] Cynthia Dwork, Weijie J Su, and Li Zhang. Differentially private false discovery rate control. arXiv preprint arXiv:1807.04209, 2018.

- [17] Úlfar Erlingsson, Vitaly Feldman, Ilya Mironov, Ananth Raghunathan, Shuang Song, Kunal Talwar, and Abhradeep Thakurta. Encode, shuffle, analyze privacy revisited: Formalizations and empirical evaluation. arXiv preprint arXiv:2001.03618, 2020.

- [18] Ankit Garg, Tengyu Ma, and Huy Nguyen. On communication cost of distributed statistical estimation and dimensionality. Advances in Neural Information Processing Systems, 27:2726–2734, 2014.

- [19] Robin C Geyer, Tassilo Klein, and Moin Nabi. Differentially private federated learning: A client level perspective. arXiv preprint arXiv:1712.07557, 2017.

- [20] Antonious Girgis, Deepesh Data, Suhas Diggavi, Peter Kairouz, and Ananda Theertha Suresh. Shuffled model of differential privacy in federated learning. In International Conference on Artificial Intelligence and Statistics, pages 2521–2529. PMLR, 2021.

- [21] Yanjun Han, Ayfer Özgür, and Tsachy Weissman. Geometric lower bounds for distributed parameter estimation under communication constraints. In Conference On Learning Theory, pages 3163–3188. PMLR, 2018.

- [22] Torsten Hothorn, Frank Bretz, and Peter Westfall. Simultaneous inference in general parametric models. Biometrical Journal: Journal of Mathematical Methods in Biosciences, 50(3):346–363, 2008.

- [23] Rui Hu, Yuanxiong Guo, Hongning Li, Qingqi Pei, and Yanmin Gong. Personalized federated learning with differential privacy. IEEE Internet of Things Journal, 7(10):9530–9539, 2020.

- [24] Adel Javanmard and Andrea Montanari. Confidence intervals and hypothesis testing for high-dimensional regression. The Journal of Machine Learning Research, 15(1):2869–2909, 2014.

- [25] Peter Kairouz, H Brendan McMahan, Brendan Avent, Aurélien Bellet, Mehdi Bennis, Arjun Nitin Bhagoji, Kallista Bonawitz, Zachary Charles, Graham Cormode, Rachel Cummings, et al. Advances and open problems in federated learning. Foundations and Trends® in Machine Learning, 14(1–2):1–210, 2021.

- [26] Jakub Konečný, H Brendan McMahan, Felix X Yu, Peter Richtárik, Ananda Theertha Suresh, and Dave Bacon. Federated learning: Strategies for improving communication efficiency. arXiv preprint arXiv:1610.05492, 2016.

- [27] Jason D Lee, Qiang Liu, Yuekai Sun, and Jonathan E Taylor. Communication-efficient sparse regression. The Journal of Machine Learning Research, 18(1):115–144, 2017.

- [28] Mengchu Li, Ye Tian, Yang Feng, and Yi Yu. Federated transfer learning with differential privacy. arXiv preprint arXiv:2403.11343, 2024.

- [29] Sai Li, T Tony Cai, and Hongzhe Li. Transfer learning for high-dimensional linear regression: Prediction, estimation, and minimax optimality. arXiv preprint arXiv:2006.10593, 2020.

- [30] Tian Li, Anit Kumar Sahu, Ameet Talwalkar, and Virginia Smith. Federated learning: Challenges, methods, and future directions. IEEE signal processing magazine, 37(3):50–60, 2020.

- [31] Andrew Lowy and Meisam Razaviyayn. Private federated learning without a trusted server: Optimal algorithms for convex losses. arXiv preprint arXiv:2106.09779, 2021.

- [32] Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. Communication-efficient learning of deep networks from decentralized data. In Artificial intelligence and statistics, pages 1273–1282. PMLR, 2017.

- [33] Brendan McMahan and Abhradeep Thakurta. Federated learning with formal differential privacy guarantees. Google AI Blog, 2022.

- [34] H Brendan McMahan, Daniel Ramage, Kunal Talwar, and Li Zhang. Learning differentially private recurrent language models. arXiv preprint arXiv:1710.06963, 2017.

- [35] Thomas Steinke and Jonathan Ullman. Tight lower bounds for differentially private selection. In 2017 IEEE 58th Annual Symposium on Foundations of Computer Science (FOCS), pages 552–563. IEEE, 2017.

- [36] Kunal Talwar, Abhradeep Thakurta, and Li Zhang. Nearly-optimal private lasso. In Proceedings of the 28th International Conference on Neural Information Processing Systems-Volume 2, pages 3025–3033, 2015.

- [37] Stacey Truex, Ling Liu, Ka-Ho Chow, Mehmet Emre Gursoy, and Wenqi Wei. Ldp-fed: Federated learning with local differential privacy. In Proceedings of the Third ACM International Workshop on Edge Systems, Analytics and Networking, pages 61–66, 2020.

- [38] Sara van de Geer, Peter Bühlmann, Ya’acov Ritov, and Ruben Dezeure. On asymptotically optimal confidence regions and tests for high-dimensional models. The Annals of Statistics, 42(3):1166–1202, 2014.

- [39] Kang Wei, Jun Li, Ming Ding, Chuan Ma, Howard H Yang, Farhad Farokhi, Shi Jin, Tony QS Quek, and H Vincent Poor. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Transactions on Information Forensics and Security, 15:3454–3469, 2020.

- [40] Manuel Wiesenfarth, Tatyana Krivobokova, Stephan Klasen, and Stefan Sperlich. Direct simultaneous inference in additive models and its application to model undernutrition. Journal of the American Statistical Association, 107(500):1286–1296, 2012.

- [41] Yang Yu, Shih-Kang Chao, and Guang Cheng. Distributed bootstrap for simultaneous inference under high dimensionality. Journal of Machine Learning Research, 23(195):1–77, 2022.

- [42] Xianyang Zhang and Guang Cheng. Simultaneous inference for high-dimensional linear models. Journal of the American Statistical Association, 112(518):757–768, 2017.

- [43] Yuchen Zhang, John C Duchi, Michael I Jordan, and Martin J Wainwright. Information-theoretic lower bounds for distributed statistical estimation with communication constraints. In NIPS, pages 2328–2336, 2013.

- [44] Yuchen Zhang, Martin J Wainwright, and John C Duchi. Communication-efficient algorithms for statistical optimization. Advances in neural information processing systems, 25, 2012.

- [45] Zhe Zhang. Differential privacy in statistical learning. ProQuest Dissertations and Theses, page 156, 2023.

- [46] Zhe Zhang and Linjun Zhang. High-dimensional differentially-private em algorithm: Methods and near-optimal statistical guarantees. arXiv preprint arXiv:2104.00245, 2021.

Appendix A Proof of main results

A.1 Proof of Theorem 1

We now prove the lower bound for estimation. The main idea of the proof is as follows. We first consider the general case in which each data point on each machine follows a general distribution , impose some conditions on this distribution, and prove that the lower bound for mean estimation holds under these conditions. Finally, we show that these conditions hold when the data points follow a normal distribution, which completes the proof.

To start this proof, we first introduce the perturbation space , where is a pre-chosen constant, and associate each parameter with , referring to the corresponding distribution as . We measure the distance between two parameters and by the Hamming distance between and ; this approach is compatible with Assouad’s method, as shown later in the proof. A smaller Hamming distance between and indicates that and are closer. Also, for each , we further denote as the vector obtained by flipping the sign of the -th coordinate of . We then state the following conditions:
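The combinatorial objects used with Assouad's method are simple to write down. The sketch below enumerates a small hypercube {-1, +1}^d, computes Hamming distances, and flips a single coordinate. The map from a sign vector z to a parameter, here a scaled copy gamma * z, is an assumption for illustration and may differ from the parameterization used in this proof; under this map the squared ℓ2 distance equals 4·gamma² times the Hamming distance.

```python
import itertools


def hamming(z, w):
    """Number of coordinates where the sign vectors z and w disagree."""
    return sum(a != b for a, b in zip(z, w))


def flip(z, j):
    """Return a copy of the sign vector z with the sign of coordinate j flipped."""
    return z[:j] + (-z[j],) + z[j + 1:]


def sq_dist(z, w, gamma):
    """Squared l2 distance between gamma * z and gamma * w (hypothetical parameter map)."""
    return sum((gamma * a - gamma * b) ** 2 for a, b in zip(z, w))


cube = list(itertools.product((-1, 1), repeat=3))  # the hypercube {-1, +1}^3
```

Each single-coordinate flip moves to a neighbour at Hamming distance one, which is the pairing that Assouad-type arguments sum over coordinate by coordinate.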

Condition 1

For every and , it holds that . Further, there exist and measurable functions such that , which is a constant and:

Condition 2

For all and , .

Condition 3

There exists some such that, for all , the random vector is -sub-Gaussian for with independent coordinates.

The above conditions characterize the distribution ; we verify later in the proof that the Gaussian distribution satisfies them. We now state our first claim.

Corollary 1

For each coordinate of the , for any , fix . Let be the inputs on the local servers, i.i.d. with common distribution . Let be the information sent from all the local servers to the central machine, generated through the channel . Then, if Condition 1 is satisfied, there exists a constant such that

where , .

The proof of the above corollary is given in Appendix B.1. The corollary characterizes the difference between the distributions of and , i.e., between the distributions of the information about each coordinate of ; this can be viewed as the information that the transmitted messages carry about the parameters.

In the previous corollary, we assumed a general channel . In the following corollary, we specialize to the case where the channel is a -differentially private constrained channel, which further simplifies the upper bound in Corollary 1.

Corollary 2

If is a privacy-constrained channel, then for any family of distributions satisfying Conditions 1 and 2, and with the same notation as in Corollary 1, we have:

The proof of the above corollary can be found in Appendix B.2. Corollary 2 gives an upper bound on ; the next corollary provides an Assouad-type lower bound. We first introduce another condition:

Condition 4

Fix . Let be the loss between the true parameter and the estimate. Then, for every , the following inequalities hold:

where denotes the Hamming distance, defined as , and for each coordinate .

The above condition connects the loss with the perturbation space. Under this condition, we can further obtain a lower bound on :

Corollary 3

Let and assume that , satisfy Condition 4. Let be a random variable on with distribution . Suppose that constitutes an -estimator of the true parameter under the loss and . Then the following inequality holds:

where , .
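For reference, the textbook form of Assouad's lemma, of which Corollary 3 is an adaptation, is shown below. It is stated under a separation condition analogous to Condition 4; the paper's constants may differ, and in this proof P_v would play the role of the law of the transcript received by the central server when the data are generated under θ_v.

```latex
% Standard Assouad lemma (textbook form). Assume that for every \theta and every
% v \in \{-1,+1\}^d there is a sign map \hat v(\theta) with
%   \ell(\theta, \theta_v) \ge 2\delta \sum_{j=1}^{d} \mathbf{1}\{[\hat v(\theta)]_j \ne v_j\}.
% Then every estimator \hat\theta satisfies
\max_{v \in \{-1,+1\}^d} \mathbb{E}_{P_v}\bigl[\ell(\hat\theta, \theta_v)\bigr]
  \;\ge\; \delta \sum_{j=1}^{d} \Bigl(1 - \bigl\| P_{+j} - P_{-j} \bigr\|_{\mathrm{TV}}\Bigr),
\qquad
P_{\pm j} = \frac{1}{2^{d-1}} \sum_{v:\, v_j = \pm 1} P_v .
```

The total-variation terms are where an upper bound such as Corollary 2 enters: the less a private channel reveals about each coordinate, the closer each term 1 − ‖P₊ⱼ − P₋ⱼ‖_TV stays to 1.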

The proof of the above corollary can be found in Appendix B.3. In the remainder of the proof, we verify that the Gaussian distribution satisfies all the above conditions, so that Corollaries 2 and 3 apply. Combining these two corollaries, we then derive the lower bound for mean estimation in the high-dimensional federated learning setting.

For the parameters, we fix , , . For the probability , where , we fix . Let denote the probability density function of the standard Gaussian distribution . Suppose first that, for some , there exists an -estimator of the true parameter under the loss. If , the proof is complete. Otherwise, we fix a parameter , which is possible by the choice of the sparsity level . We design the parameter (the mean of the Gaussian distribution) and by the formula , where . We can then verify that , where . From the definition of the Gaussian density, for , we have:

Therefore, for and , we have

where and . Using the Gaussian moment-generating function, we can verify that, for ,

so that Conditions 1 and 2 are satisfied. Note that the proof of Corollary 1 requires , where is a constant. Since , the definition of gives , which verifies the requirement of Corollary 1. Moreover, by the choice of and , it is easy to verify that Condition 4 also holds with:

Thus, all the conditions above have been verified, and we can complete the proof of the lower bound. Combining Corollaries 2 and 3, we obtain:

where is a constant. Moreover, holds since ; hence there exists a constant such that

From the choice of , we have , which yields the claimed lower bound and finishes the proof.
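To illustrate the Gaussian moment-generating-function step used above, consider one coordinate whose mean flips from +δ to −δ. With identity covariance, all other coordinates cancel in the likelihood ratio. For X ~ N(a, 1), the ratio is p_b(x)/p_a(x) = exp((b − a)x − (b² − a²)/2), and the MGF E[e^{tX}] = e^{at + t²/2} gives E_a[(p_b/p_a)²] = exp((a − b)²). This is a representative quantity of the kind Conditions 1 and 2 control; because the displayed forms of those conditions are not reproduced here, we do not claim it is exactly the quantity they require. The value δ = 0.25 is hypothetical, and the sketch checks the closed form by Monte Carlo.

```python
import math
import random

def log_ratio(x, a, b):
    """log p_b(x) - log p_a(x) for the unit-variance Gaussians N(b, 1) and N(a, 1)."""
    return (b - a) * x - (b * b - a * a) / 2.0

def second_moment_exact(a, b):
    """E_a[(p_b / p_a)^2] from the Gaussian MGF: exp((a - b)^2)."""
    return math.exp((a - b) ** 2)

def second_moment_mc(a, b, n, seed=0):
    """Monte Carlo estimate of E_a[(p_b / p_a)^2] with X ~ N(a, 1)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(a, 1.0)
        total += math.exp(2.0 * log_ratio(x, a, b))
    return total / n

delta = 0.25            # hypothetical perturbation size
a, b = delta, -delta    # coordinate j before and after the sign flip
print(second_moment_exact(a, b), second_moment_mc(a, b, 200_000))
```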

A.2 Proof of Theorem 2

In the proof of Theorem 2, we design a mechanism that produces an estimate of the parameter and then derive an upper bound on . The mechanism works as follows. We first compute the mean of the data points on each machine. Then, motivated by Algorithm 2 of [2] (the l-bit protocol for estimating a product-of-Bernoulli family), we convert the Gaussian mean into a Bernoulli mean by taking the sign of the local Gaussian mean.

Next, we use an -locally differentially private mechanism to estimate the mean of the product-of-Bernoulli family in the federated learning setting. Finally, we convert the estimated Bernoulli mean back into an estimate of the Gaussian mean.

First, each data point on a machine follows the distribution , so the mean on the -th machine follows . We convert it into a Bernoulli variable , where when and when . The mean of , denoted by , is:

for each coordinate of . Denote the estimate of by , and suppose the resulting estimate of is given by ; then we have the following relationship:

(A.1)

where is a constant. The last inequality follows from the Lipschitz continuity of the error function (Erf). The upper bound for the Bernoulli mean estimation then follows directly from Theorem 3 in [2]:

where is the privacy parameter and is the number of machines. Combining (A.1) with the preceding bound, we obtain the upper bound for Gaussian mean estimation:

which finishes the proof.
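The sign-and-invert mechanism in this proof can be sketched as a small simulation. Two substitutions are assumptions, not the paper's protocol: each machine privatizes its d sign bits with binary randomized response at budget ε/d per coordinate (ε-LDP per machine by basic composition) instead of the l-bit protocol of [2], and the server clips p̂ away from 0 and 1 before inverting, because Φ⁻¹ is Lipschitz only on such a range. The server uses E[Z_k] = 2p_k − 1 with p_k = Φ(√n μ_k/σ), the probability that the local mean N(μ_k, σ²/n) is positive, and returns μ̂_k = (σ/√n)Φ⁻¹(p̂_k). Function names are hypothetical.

```python
import math
import random
from statistics import NormalDist

PHI = NormalDist()  # standard normal; provides cdf and inv_cdf

def randomized_response(bit, eps, rng):
    """Binary randomized response on {-1, +1}: keep the bit w.p. e^eps / (1 + e^eps)."""
    p_keep = math.exp(eps) / (1.0 + math.exp(eps))
    return bit if rng.random() < p_keep else -bit

def sign_and_invert(mu, sigma, n, m, eps, seed=0, clip=1e-3):
    """Simulate m machines, each with n samples from N(mu, sigma^2 I); return mu_hat."""
    rng = random.Random(seed)
    d = len(mu)
    eps_coord = eps / d  # split the per-machine budget evenly over coordinates
    debias = (math.exp(eps_coord) + 1.0) / (math.exp(eps_coord) - 1.0)  # 1 / (2 p_keep - 1)
    totals = [0.0] * d
    for _ in range(m):
        for k in range(d):
            local_mean = rng.gauss(mu[k], sigma / math.sqrt(n))  # exact law of the local mean
            bit = 1 if local_mean > 0 else -1                    # Gaussian -> Bernoulli via sign
            totals[k] += randomized_response(bit, eps_coord, rng)
    mu_hat = []
    for k in range(d):
        z_hat = debias * totals[k] / m                           # unbiased for 2 p_k - 1
        p_hat = min(max((1.0 + z_hat) / 2.0, clip), 1.0 - clip)  # keep inv_cdf well conditioned
        mu_hat.append(sigma / math.sqrt(n) * PHI.inv_cdf(p_hat))
    return mu_hat
```

In this sketch the debiasing factor (e^{ε/d} + 1)/(e^{ε/d} − 1) behaves like 2d/ε for small ε/d, which gives one concrete view of how the dimension enters the error under an untrusted server.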

A.3 Proof of Theorem 3

It is not difficult to see that the convergence rate is the same as in the non-federated setting. We denote by the sample loss function and by its population-level counterpart. In the estimation of , and is the sample version. In the estimation of , . We start with the estimation of ; the estimation of is analogous. In this proof, we use to denote the total number of samples . Then the following holds:

Lemma A.1

Under the assumptions of Theorem 5, it holds that:

(A.2)

Proof: By direct calculation, we obtain:

The last inequality follows from the choice of such that . We also have . This establishes the right-hand side of the inequality; the left-hand side follows by a similar argument.

Lemma A.2

Under the assumptions of Theorem 5, there exists an absolute constant such that

(A.3)

where is a constant number such that

Note that are injected by the NoisyHT algorithm. The proof of the above lemma follows from Lemma 8.3 in [8]. We now iterate (A.3) over . Denoting , we obtain

(A.4)

The second inequality follows from the upper inequality in (A.2) and the bounds on and . We can also bound from below using the lower inequality in (A.2):

(A.5)

Now (A.4) and (A.5) imply that, with ,

(A.6)

(A.7)

Thus,

In the above inequality, is a constant. We next bound . By the tail bound for Laplace random variables, with high probability, where . Hence, with high probability,

Similarly, we obtain the same result for the estimation of , which finishes the proof.
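The NoisyHT iteration referenced in Lemma A.2 combines a gradient step with noisy hard thresholding. Below is a simplified sketch in the spirit of the peeling procedure of [8]: s rounds of Laplace-noised top-1 selection, followed by Laplace noise on each selected value. The Laplace scale is left as a free parameter; the response clipping and the calibration of the scale to (ε, δ) follow [8] and are omitted, so this sketch is not private as written. Laplace noise is generated as the difference of two i.i.d. exponentials, and its tail P(|W| > t) = e^{−t/b} is the fact behind the high-probability bound above. Function names are hypothetical.

```python
import random

def laplace(scale, rng):
    """Laplace(0, scale) sample: difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def peel(v, s, scale, rng):
    """Pick s coordinates one at a time by Laplace-noised |v_j|, then release them noisily."""
    remaining = set(range(len(v)))
    out = [0.0] * len(v)
    for _ in range(s):
        j = max(remaining, key=lambda k: abs(v[k]) + laplace(scale, rng))
        remaining.remove(j)
        out[j] = v[j] + laplace(scale, rng)  # noisy value for the selected coordinate
    return out

def noisy_ht_least_squares(X, y, s, eta, iters, scale, seed=0):
    """Gradient descent on the squared loss, hard-thresholded to s coordinates with noise."""
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    beta = [0.0] * d
    for _ in range(iters):
        resid = [sum(X[i][k] * beta[k] for k in range(d)) - y[i] for i in range(n)]
        grad = [sum(X[i][k] * resid[i] for i in range(n)) / n for k in range(d)]
        beta = peel([beta[k] - eta * grad[k] for k in range(d)], s, scale, rng)
    return beta
```

The contraction in (A.3) shows up here as the factor by which each gradient step shrinks the error on the selected support, while the injected noise sets the floor that the final bound accounts for.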

A.4 Proof of Theorem 4

The proof consists of three parts. First, we show that our algorithm provides an -differentially private confidence interval. Second, we show that is a consistent (and unbiased) estimator of the true . Third, we show that the confidence interval is asymptotically valid. Before the first part, we analyze :

By the assumptions of the theorem, for each row of , is sub-Gaussian with . Then, by the properties of sub-Gaussian random variables, with probability . Then, for each element of , we have:

Thus,

Hence, with probability , we have . By a union bound, with probability , . By the choice of in the theorem, we have with high probability.
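The union-bound step above follows a generic pattern: if each of d entries is sub-Gaussian with variance proxy σ², so that P(|x_j| > t) ≤ 2exp(−t²/(2σ²)), then max_j |x_j| ≤ σ√(2 log(2d/ξ)) with probability at least 1 − ξ. The sketch computes that threshold and checks it by simulation with Gaussian entries, a special case of sub-Gaussian. The specific quantities bounded in the proof are not reproduced here, so σ, d and ξ are illustrative.

```python
import math
import random

def union_bound_threshold(sigma, d, xi):
    """t solving 2 * d * exp(-t^2 / (2 sigma^2)) = xi, so P(max_j |x_j| > t) <= xi."""
    return sigma * math.sqrt(2.0 * math.log(2.0 * d / xi))

def empirical_exceedance(sigma, d, t, trials, seed=0):
    """Fraction of trials in which max_j |x_j| > t for d i.i.d. N(0, sigma^2) entries."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        if max(abs(rng.gauss(0.0, sigma)) for _ in range(d)) > t:
            hits += 1
    return hits / trials
```

The threshold grows only like √(log d), which is why the union bound costs a logarithmic factor rather than a polynomial one in high dimensions.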

Next, we verify that the confidence interval is -differentially private. From [8], the output is -DP. Similarly, the output is also -DP. Thus, for two adjacent data sets and that differ in one data point, and , we have:

Thus,

Denote . Thus, if follows , then is -DP. For the term , we obtain:

Thus, for two adjacent data sets and that differ in one data point, and , we have:

By Hölder's inequality and the Cauchy–Schwarz inequality, we have , and thus:

Denote . Let follow a Gaussian distribution . Then is -differentially private.
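The noise calibration in this privacy argument is an instance of the Gaussian mechanism. As a reference point, and not necessarily the constant used in the proof, the classical calibration of Dwork and Roth adds N(0, σ²) noise with σ = Δ₂√(2 ln(1.25/δ))/ε, which yields (ε, δ)-DP for ε ∈ (0, 1) when Δ₂ is the ℓ₂-sensitivity; the sensitivity bound obtained above via Hölder and Cauchy–Schwarz would play the role of Δ₂. Because the interval combines two private outputs, the sketch also includes basic composition, under which budgets add. Function names are hypothetical.

```python
import math
import random

def gaussian_sigma(l2_sensitivity, eps, delta):
    """Classical Gaussian-mechanism scale (Dwork & Roth, Thm A.1); valid for 0 < eps < 1."""
    if not 0.0 < eps < 1.0:
        raise ValueError("the classical calibration requires 0 < eps < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return l2_sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / eps

def gaussian_release(value, l2_sensitivity, eps, delta, rng):
    """Release value + N(0, sigma^2) noise with sigma from gaussian_sigma."""
    return value + rng.gauss(0.0, gaussian_sigma(l2_sensitivity, eps, delta))

def basic_composition(budgets):
    """Basic composition: (eps_i, delta_i)-DP releases compose to (sum eps_i, sum delta_i)-DP."""
    return sum(e for e, _ in budgets), sum(d for _, d in budgets)
```

For example, releasing two statistics at (ε/2, δ/2) each keeps the combined output within an (ε, δ) budget.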

We now turn to the second part of the proof. First, with probability , we have for each , so we can decompose as follows:

Thus, we have:

(A.8)

We analyze the three terms in (A.8) one by one. The first term can be further decomposed as:

(A.9)

For the first term in (A.9), we further decompose it from :

(A.10)

In the last inequality, denotes the largest s-restricted eigenvalue of the covariance matrix . By Theorem 3, there exists a constant such that:

Similarly, for the output , we have:

Substituting the two bounds above into (A.10), we obtain

We now turn to the second term of (A.9). We first introduce the following lemma:

Lemma A.3

(Lemma 6.2 in [24]) For the vector , denote . Then, with probability , we have:

Thus, for the second term of (A.9), we have:

(A.11)

Combining (A.9), (A.10), and (A.11), we obtain that the first term of (A.8) is . We next analyze the third term of (A.8). By the definition of , we have by assumption. We also note that . By the concentration of the Gaussian distribution, we also have .

Finally, we analyze the term . By definition, is sub-Gaussian random noise. Then, by the central limit theorem, we conclude that:

Thus, . Moreover, by Lemma 4.1, under our assumptions, . Hence, with high probability, .

Therefore, is an asymptotically valid confidence interval for , which finishes the proof of the theorem.

A.5 Proof of Theorem 5

The proof is similar to that of Theorem 4; the difference is that we must consider the case where the privacy cost is not dominated by the statistical error. We follow the proof of Theorem 4 up to (A.8). The analysis of the second and third terms of (A.8) remains the same. For the first term of (A.8), we proceed in the same manner and decompose it as:

(A.12)

For the first term in (A.12), we further decompose it from :

(A.13)

For the second term of (A.12), by Lemma A.3, we have:

(A.14)

When the privacy cost is not dominated by the statistical error and also , the bound (A.14) is of smaller order than (A.13). Combining (A.13) and (A.14), there exists a constant such that:

Substituting this result into (A.8), we obtain:

(A.15)

Note that the constant in the first term on the right-hand side can be set to 1, since it comes from the tail bound of a Laplace random variable. From (A.15), we can also apply the central limit theorem to show that the second term is asymptotically Gaussian; on the right-hand side, the second and third terms asymptotically follow a distribution . Also, by the concentration of the Gaussian distribution, with high probability . Thus, the privacy conditions are satisfied. Therefore, we have:

Then, by our assumptions and Lemma 4.1, we have . Finally, the confidence interval is given by:

which finishes our proof.
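The intervals in Theorems 4 and 5 combine a statistical fluctuation, asymptotically Gaussian by the central limit theorem, with independent Gaussian privacy noise, so the two variances add. The sketch below assumes a hypothetical form θ̂ ± z_{1−α/2}√(σ²_stat/N + σ²_priv), with names chosen for illustration rather than taken from the displayed interval, and checks its coverage by simulation when both error sources are exactly Gaussian.

```python
import math
import random
from statistics import NormalDist

def private_ci(theta_hat, stat_var, n, priv_sd, alpha=0.05):
    """theta_hat +/- z_{1 - alpha/2} * sqrt(stat_var / n + priv_sd^2)."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    half_width = z * math.sqrt(stat_var / n + priv_sd ** 2)
    return theta_hat - half_width, theta_hat + half_width

def coverage(theta, stat_var, n, priv_sd, trials, alpha=0.05, seed=0):
    """Fraction of simulated intervals that contain the true theta."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        theta_hat = (theta
                     + rng.gauss(0.0, math.sqrt(stat_var / n))  # statistical error
                     + rng.gauss(0.0, priv_sd))                 # injected privacy noise
        lo, hi = private_ci(theta_hat, stat_var, n, priv_sd, alpha)
        hits += lo <= theta <= hi
    return hits / trials
```

Dropping the privacy variance from the half-width would make the interval too short whenever the privacy noise is not negligible, which is the regime Theorem 5 addresses.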

A.6 Proof of Theorem 6

We first show that our algorithm is private. The main step is the choice of the noise level . Decomposing, we find:

Suppose that, in an adjacent data set, the differing data points in are denoted by and . We then calculate:

Thus, privacy is guaranteed. We now turn to the proof of consistency. Throughout the proof, let , be the whole data set, where and . . We define another multiplier bootstrap statistic:

where are standard Gaussian variables. We also define:

The proof consists of three major steps. In the first step, we bound . This is straightforward: we apply Theorem 3.1 from [10], after verifying the conditions of Corollary 2.1 from [10]. Note that for any , . It is also not difficult to verify that is sub-exponential: by Assumption D1, is sub-Gaussian, and by the linear model, is also sub-Gaussian. Hence, the condition is verified. Thus, applying Theorem 3.1 under the condition that there exists a constant such that , we have:

(A.16)

where denotes the maximum entrywise deviation between the two matrices and , written as , where and are defined as:

and

Then, by Corollary 3.1 in [10] and Lemma E.2 in [41], we can verify that . With a proper choice of , e.g., letting for some constant , we have . Next, we relate with . Similarly, by Theorem 3.2 in [10] and (A.16), we have:

From the definitions of and , we have:

Then, for any in , by Hölder's inequality and the Cauchy–Schwarz inequality, we have:

On the one hand, from the previous proof, we obtain that when the privacy cost is dominated by the statistical error, uniformly for . On the other hand, since has a bounded maximum eigenvalue, standard linear-regression arguments together with Bernstein's inequality show that is . Combining these two results, there exist constants such that:

uniformly for all ; we can then choose properly such that . Finally, we need to relate with . Our main goal is to prove that and are close to each other for any . We first relate with . By the design of the private max algorithm and Lemma 3.4 in [8], suppose is the element chosen from and is the element chosen from without noise injection, and let denote the noise injected when privately selecting the largest value. Then, for any :

By Lemma A.1 in [8], there exists a constant such that . Choosing , e.g., , the conditions imply that ; moreover, since the scale of the injected noise is small, it is easy to verify that . The remaining discussion concerns and . Denoting by the symmetric difference, we have:

(A.17)
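The multiplier bootstrap behind this simultaneous-inference argument can be sketched without the privacy layer. Given per-observation score vectors ξ_i in R^d, the max statistic is T = max_j |N^{−1/2} Σ_i ξ_ij|, and its bootstrap analogue reweights each ξ_i by an independent standard Gaussian multiplier e_i, in the style of [10]. The private max selection and the injected noise used in the proof are omitted, and the function names are hypothetical.

```python
import math
import random

def max_stat(scores):
    """T = max_j |N^{-1/2} * sum_i scores[i][j]| over the d coordinates."""
    n, d = len(scores), len(scores[0])
    return max(abs(sum(row[j] for row in scores)) / math.sqrt(n) for j in range(d))

def bootstrap_quantile(scores, alpha, n_boot, seed=0):
    """(1 - alpha)-quantile of W = max_j |N^{-1/2} sum_i e_i scores[i][j]|, e_i ~ N(0, 1)."""
    rng = random.Random(seed)
    n = len(scores)
    draws = []
    for _ in range(n_boot):
        e = [rng.gauss(0.0, 1.0) for _ in range(n)]  # one Gaussian multiplier per observation
        weighted = [[e[i] * x for x in scores[i]] for i in range(n)]
        draws.append(max_stat(weighted))
    draws.sort()
    k = max(0, math.ceil((1.0 - alpha) * n_boot) - 1)  # empirical (1 - alpha)-quantile index
    return draws[k]
```

A simultaneous test rejects when max_stat(scores) exceeds bootstrap_quantile(scores, alpha, n_boot); the results from [10] cited above are what justify treating the bootstrap quantile as a valid critical value for T.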