Differentially Private Mechanisms for Count Queries

This is a working paper. Comments to improve this work are welcome.

Abstract

In this paper, we consider the problem of responding to a count query (or any other integer-valued query) evaluated on a dataset containing sensitive attributes. To protect the privacy of individuals in the dataset, a standard practice is to add continuous noise to the true count. We design a differentially private mechanism that adds integer-valued noise, so that the released output remains an integer. As a trade-off between utility and privacy, we derive the privacy parameters $\epsilon$ and $\delta$ in terms of the probability of releasing an erroneous count, under the assumption that the true count is no smaller than half the support size of the noise. We then numerically demonstrate that our mechanism provides stronger privacy guarantees than the recently proposed discrete Gaussian mechanism.

I Introduction

Conducting nationwide censuses is one of the most important tasks of national or federal agencies around the world. For example, the US Census Bureau conducts a census every ten years, and the 2020 Census is currently under way [1]. In Australia, the census is conducted by the Australian Bureau of Statistics (ABS) every five years, and the next round is due in 2021 [2]. Responding to count queries about census outcomes is one of the core tasks of such agencies. Accurate counts matter for distributing national funds, determining voting districts, and conducting scientific and economic studies of the population. However, releasing them can seriously compromise individual privacy.

To protect the privacy of individuals in census records, the true census results are heavily guarded. Instead, noisy versions may be released to the public in a one-off general-purpose publication or privately disclosed to a data analyst in response to specific queries. There is a clear tension between releasing an accurate and reliable version of the census outcome and protecting the privacy of individuals in the population. This tension is particularly acute for underrepresented groups in small towns and counties. Their livelihoods and future funding often depend on their being accurately counted in published census results. At the same time, because these groups are very small, they are at the greatest risk of re-identification even if perturbed versions of their data are published. For a discussion of this issue in the 2010 and 2020 US Census rounds, we refer the reader to a recent New York Times article [3].

Motivated by these applications, in this paper we formulate and study optimal trade-offs between utility and privacy when a data analyst sends a data curator a query about the number of individuals in a large dataset who share a common sensitive attribute. Assuming $q$ is the true count, the data curator releases a random variable $Y = q + Z$, where $Z$ is an integer-valued noise variable with a suitably chosen probability distribution.

It is not surprising that the celebrated differential privacy (DP) framework [4] has been applied to integer-valued mechanisms for count queries. In fact, DP is a strong candidate for publishing private 2020 US Census outcomes [5]. Other countries, such as Australia and New Zealand, are closely watching this space and have already taken steps toward evaluating the performance of their existing mechanisms “from the lens of differential privacy” [6].

A standard approach to preserving $\epsilon$-DP is to perturb the count query by adding Laplace noise whose scale is proportional to a certain property of the query (namely, its sensitivity). Adding continuous noise to an integer-valued query, however, makes the output less interpretable. The geometric distribution, the discrete counterpart of the Laplace distribution, was shown in [7] to be “optimal” for integer-valued queries with unit sensitivity. Optimality was defined in terms of minimizing the expected cost (chosen from a family of utility functions satisfying mild regularity conditions) under a Bayesian framework. In particular, their result implies that adding (truncated or folded) geometric noise to the above counting query preserves DP while minimizing the probability of error, $\Pr(Y \neq q)$. This setting was extended to the minimax setting in [8], and to queries with general sensitivity under the worst-case setting in [9]. The implementation of the geometric mechanism on finite-precision machines was investigated in [10].
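The optimality result of [7] concerns two-sided geometric noise. As a minimal Python sketch, assuming the standard form $\Pr(Z = z) = \frac{1-a}{1+a}a^{|z|}$ with $a = e^{-\epsilon}$ (the function names are hypothetical, not from [7]), the following checks numerically that this pmf sums to one and that shifting the true count by one changes the probability of any output by a factor of at most $e^{\epsilon}$, which is the $\epsilon$-DP condition for a count with unit sensitivity.

```python
import math


def two_sided_geometric_pmf(z: int, eps: float) -> float:
    """P(Z = z) for two-sided geometric noise, proportional to exp(-eps * |z|)."""
    a = math.exp(-eps)
    return (1 - a) / (1 + a) * a ** abs(z)


def max_privacy_loss(eps: float, window: int = 50) -> float:
    """Largest |log P(z) / P(z - 1)| over z in [-window, window].

    For an output y = q + z, P(z) is its probability under true count q and
    P(z - 1) its probability under the neighboring count q + 1.
    """
    return max(
        abs(math.log(two_sided_geometric_pmf(z, eps) / two_sided_geometric_pmf(z - 1, eps)))
        for z in range(-window, window + 1)
    )


eps = 0.5
total = sum(two_sided_geometric_pmf(z, eps) for z in range(-200, 201))
print(f"mass on [-200, 200]: {total:.12f}")
print(f"max privacy loss:    {max_privacy_loss(eps):.12f}  (eps = {eps})")
```

Because every output has positive probability under both neighboring counts, no $\delta$ is needed here. Noise with bounded support cannot have this property, which is why the mechanism developed in this paper is analyzed under $(\epsilon,\delta)$-DP.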

More recently, the problem of minimizing a norm of the error of the query output, subject to $\epsilon$-DP, was revisited in [11]. It was argued that, despite minimizing the probability of error, the geometric mechanism suffers from a number of limitations. Most importantly, the error depends on the true count $q$, leading to uneven accuracy across queries. In the very high-privacy regime, when $\epsilon$ is very small, this unevenness results in the mechanism overwhelmingly reporting one of the two extreme values $0$ or $n$ (a zero count or the maximum possible count $n$ in the database). To address this issue, the authors of [11] proposed an explicit fair mechanism (EM). However, the noise in EM introduces bias, that is, $\mathbb{E}[Y] \neq q$. Moreover, neither EM nor the truncated geometric mechanism restricts the support size of the noise. As such, the output can take any value between the two extremes $0$ and $n$ with non-zero probability.

In a different and quite recent line of work, a discrete version of the Gaussian density was considered for designing privacy-preserving mechanisms for count queries. In particular, it was shown in [12] that adding “discrete” Gaussian noise provides privacy and utility guarantees similar to those of the well-understood Gaussian mechanism. Discrete Gaussian noise is supported on $\mathbb{Z}$, and thus the output of such a mechanism can be any integer, regardless of the domain of the query. This might lead to undesirable outcomes in some practical scenarios. For example, according to the New York Times article [3], the population of a county in the 2010 US Census was heavily over-reported (the true count was around 90, but it was reported to be around 920). While post-processing (truncating or folding the probability mass function) can be used to limit the range of discrete Gaussian mechanisms, the question remains whether a finite noise support can be explicitly incorporated into the mechanism design process.

In this paper, we adopt a different approach from [11, 12]. We begin by enforcing three basic constraints on the noise distribution: zero bias, a fixed hard support, and a fixed error probability irrespective of the true count $q$. By varying the probability of error, we can trade off accuracy against privacy. The hard support of $Z$ ensures that, for any given $q$, the output $Y$ takes values in a pre-specified range with probability one; in particular, $Y$ is required to be non-negative. We first design a noise distribution satisfying these constraints and then use it to construct a differentially private mechanism (under a mild condition on the dataset). The constraint on the noise support has two implications. First, the values of the added noise must depend on the true count (to ensure the non-negativity of the output). Second, the mechanism provides a slightly relaxed privacy guarantee: it is $\epsilon$-DP with probability $1-\delta$ for some parameter $\delta$. This framework is known as approximate DP and is usually denoted by $(\epsilon,\delta)$-DP [13, 14]. While the proposed noise has a distribution similar to the discrete Gaussian (with a slightly heavier tail), our numerical findings indicate that our mechanism achieves tighter privacy parameters $\epsilon$ and $\delta$ than the discrete Gaussian mechanism with the same variance.

We can summarize our observations about the interplay of privacy and utility parameters as follows. As expected, a weaker privacy requirement, i.e., a higher $\epsilon$, results in a lower $\delta$ when the noise support size and the probability of releasing an erroneous value are fixed. By moderately increasing the noise support size while keeping $\epsilon$ and the error probability fixed, we can also significantly reduce $\delta$. For example, by increasing the noise support from to , we obtain a significantly smaller $\delta$. It turns out that when $\epsilon$ and the noise support size are fixed, the error probability can be reduced (improving data reliability) up to a certain threshold without significantly affecting $\delta$. However, reducing the error probability below this threshold can severely degrade privacy by increasing $\delta$ beyond typically acceptable levels.

Notation: For an integer $n \geq 1$, we use $[n]$ to denote the set $\{1, 2, \dots, n\}$. For integers $a \leq b$, we use $[a:b]$ to denote the set $\{a, a+1, \dots, b\}$.

II Problem Formulation

Suppose a dataset of size at most $n$ is given. The data curator receives a query on the number of entries in the dataset that possess a certain sensitive attribute. Let $q$ be the actual (positive) count of such entries. To protect the privacy of individuals in the dataset, the data curator releases a noisy version of $q$ as

$$Y = q + Z, \qquad (1)$$

where $Z$ is an integer-valued noise variable. We assume that the noise distribution satisfies the following intuitive properties:

- P1. Fix $m \in \mathbb{N}$. The noise variable $Z$ takes values in $\{-m_q, \dots, m\}$, where $m_q = \min\{q, m\}$; in particular, $\Pr(Z = z) = 0$ if $z < -q$. This property ensures that the mechanism's output is always non-negative. A smaller $m$ implies a smaller noise support set, which in turn implies higher accuracy in reporting the query. (Note that, for a given $q$, the support of the output is limited to $\{q - m_q, \dots, q + m\}$. For $q > n - m$, we allow the output to exceed the maximum possible real count $n$ by at most $m$. If $m \ll n$, this overshoot is negligible.)

- P2. Fix $p \in (0, 1)$. The probability of releasing the correct value is set to $\Pr(Y = q) = \Pr(Z = 0) = p$, irrespective of the true count $q$. Equivalently, the probability of releasing an erroneous value is $\Pr(Y \neq q) = 1 - p$. A larger $p$ means more reliability in releasing the correct value. Conversely, a smaller $p$ means better privacy, in the sense of concealing the true count of the sensitive attribute in the dataset.

- P3. In addition, the noise must have zero bias, i.e., $\mathbb{E}[Z] = 0$, which is a statistically desirable property. (Note that if $q = 0$, zero bias cannot be maintained jointly with $p < 1$ and P1, since then $Z \geq 0$ and $\Pr(Z > 0) = 1 - p > 0$. Hence, we limit ourselves to queries with $q \geq 1$.)

The following proposition provides a simple family of noise distributions that satisfy these properties.

Proposition 1.

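Let the true count for a query be $q \geq 1$. For any $i \in \{1, \dots, \min\{q, m\}\}$ and $j \in \{1, \dots, m\}$, the following distribution satisfies P1-P3:

$$P_Z^{(i,j)}(z) = \begin{cases} p, & z = 0,\\ (1-p)\,\frac{j}{i+j}, & z = -i,\\ (1-p)\,\frac{i}{i+j}, & z = j,\\ 0, & \text{otherwise}. \end{cases} \qquad (2)$$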
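The family in Proposition 1 can be checked mechanically. The Python sketch below assumes that (2) is the three-point distribution with mass $p$ at $0$, $(1-p)\frac{j}{i+j}$ at $-i$, and $(1-p)\frac{i}{i+j}$ at $j$, which is the unique zero-mean distribution on $\{-i, 0, j\}$ with mass $p$ at zero; the helper names are hypothetical. Exact rational arithmetic via Python's `fractions.Fraction` makes the zero-mean check exact rather than approximate.

```python
from fractions import Fraction


def three_point_noise(i: int, j: int, p: Fraction) -> dict:
    """Zero-mean pmf on {-i, 0, j} with P(Z = 0) = p (assumed form of Eq. (2))."""
    return {-i: (1 - p) * Fraction(j, i + j), 0: p, j: (1 - p) * Fraction(i, i + j)}


def satisfies_p1_p3(pmf: dict, q: int, m: int, p: Fraction) -> bool:
    """P1: support in [-min(q, m), m]; P2: P(Z = 0) = p; P3: E[Z] = 0; plus total mass 1."""
    support_ok = all(-min(q, m) <= z <= m for z, w in pmf.items() if w > 0)
    return (
        support_ok
        and sum(pmf.values()) == 1
        and pmf.get(0, Fraction(0)) == p
        and sum(z * w for z, w in pmf.items()) == 0
    )


p, q, m = Fraction(1, 2), 3, 2
for i in range(1, min(q, m) + 1):
    for j in range(1, m + 1):
        assert satisfies_p1_p3(three_point_noise(i, j, p), q, m, p)

print(three_point_noise(1, 2, p))  # {-1: Fraction(1, 3), 0: Fraction(1, 2), 2: Fraction(1, 6)}
```

With $q = 1$ and $m = 2$, the pair $(i, j) = (2, 1)$ fails the check because it places mass at $-2 < -q$, as P1 forbids.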

In light of this proposition, any convex combination of the distributions $P_Z^{(i,j)}$, i.e.,

$$P_{Z|q} = \sum_{i=1}^{\min\{q,m\}} \sum_{j=1}^{m} \alpha^{(q)}_{i,j}\, P_Z^{(i,j)}, \qquad (3)$$

with non-negative coefficients satisfying $\sum_{i,j} \alpha^{(q)}_{i,j} = 1$, also satisfies P1-P3. We can thus use this convex combination to obtain a general data-dependent noise distribution satisfying P1-P3. The following example makes this construction clear.

Example 1.

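Let , and . Note that for $q = 1$, two distributions are possible for $Z$, obtained by choosing $(i, j) = (1, 1)$ and $(i, j) = (1, 2)$, as described below:

$$P_Z^{(1,1)}(z) = \begin{cases} p, & z = 0,\\ \frac{1-p}{2}, & z = \pm 1,\\ 0, & \text{otherwise}, \end{cases} \qquad P_Z^{(1,2)}(z) = \begin{cases} p, & z = 0,\\ \frac{2(1-p)}{3}, & z = -1,\\ \frac{1-p}{3}, & z = 2,\\ 0, & \text{otherwise}. \end{cases} \qquad (4)$$

For $q \geq 2$, four distributions are similarly possible by combining $i \in \{1, 2\}$ with $j \in \{1, 2\}$. Overall, the general convex structure in (3) produces the noise distributions $P_{Z|q}$ given by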

| (5) |

Each column of this matrix is a valid noise distribution for a given $q$, subject to $\sum_{i,j} \alpha^{(q)}_{i,j} = 1$. Note that row indices range from $-2$ to $2$. Also, note that $P_{Z|q=1}(-2) = 0$. This is because for $q = 1$, we have $\min\{q, m\} = 1$, dictating $\{-1, 0, 1, 2\}$ as the support set of $Z$, instead of $\{-2, \dots, 2\}$. Moreover, for or . By adding such noise to the true count $q$, the distribution of $Y$, given $q$, becomes

| (6) |

where the rows range from to . The value of $P_{Y|q}(y)$ for all other values of $y$ and $q$ not shown above is zero.
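The construction in Example 1 can be reproduced programmatically. The following is a minimal Python sketch under stated assumptions: it uses the three-point form of (2) with mass $p$ at $0$, $(1-p)\frac{j}{i+j}$ at $-i$, and $(1-p)\frac{i}{i+j}$ at $j$; the hypothetical choice $n = 4$; and hypothetical coefficients $\alpha^{(q)}_{i,j}$ that are uniform over the admissible pairs $(i, j)$. It builds the columns $\Pr(Y = y \mid q)$ and checks that each column is a pmf on non-negative outputs with $\Pr(Y = q \mid q) = p$ and $\mathbb{E}[Y \mid q] = q$.

```python
from fractions import Fraction


def three_point_noise(i, j, p):
    """Zero-mean pmf on {-i, 0, j} with P(Z = 0) = p (assumed form of Eq. (2))."""
    return {-i: (1 - p) * Fraction(j, i + j), 0: p, j: (1 - p) * Fraction(i, i + j)}


def uniform_weights(q, m):
    """Hypothetical alpha_{i,j}^{(q)}: uniform over pairs with i <= min(q, m), j <= m."""
    pairs = [(i, j) for i in range(1, min(q, m) + 1) for j in range(1, m + 1)]
    return {pair: Fraction(1, len(pairs)) for pair in pairs}


def mixture_noise(p, weights):
    """Convex combination (3) of three-point pmfs with coefficients `weights`."""
    pmf = {}
    for (i, j), alpha in weights.items():
        for z, w in three_point_noise(i, j, p).items():
            pmf[z] = pmf.get(z, Fraction(0)) + alpha * w
    return pmf


def output_matrix(n, m, p, weights_fn):
    """Column q (1 <= q <= n) holds P(Y = y | q) for Y = q + Z."""
    return {
        q: {q + z: w for z, w in mixture_noise(p, weights_fn(q, m)).items()}
        for q in range(1, n + 1)
    }


n, m, p = 4, 2, Fraction(1, 2)  # hypothetical parameters
cols = output_matrix(n, m, p, uniform_weights)
for q, col in cols.items():
    assert sum(col.values()) == 1                    # each column is a pmf
    assert min(col) >= 0                             # the output is never negative
    assert col[q] == p                               # P2: true count released w.p. p
    assert sum(y * w for y, w in col.items()) == q   # P3: unbiased
print(sorted(cols[1].items()))
```

Only the coefficients $\alpha^{(q)}_{i,j}$ change from column to column, which is what makes this noise data-dependent.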

II-A Incorporating $(\epsilon,\delta)$-DP Conditions

Consider the mechanism $Y = q + Z$, with $Z$ being data-dependent noise with the distribution given in (3). Our objective is to determine the smallest $\epsilon$ and $\delta$ such that this mechanism is $(\epsilon,\delta)$-differentially private (DP) [13].

Definition 1.

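Given $\epsilon \geq 0$ and $\delta \in [0, 1]$, a randomized algorithm $\mathcal{M}$ is said to be $(\epsilon,\delta)$-differentially private (DP) if $\Pr(\mathcal{M}(D) \in S) \leq e^{\epsilon}\Pr(\mathcal{M}(D') \in S) + \delta$ for all $S \subseteq \mathrm{Range}(\mathcal{M})$ and all neighboring datasets $D$ and $D'$ differing in one element.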

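Below, we specialize this standard definition to our count query setup. Given an integer-valued query about a dataset $D$, mechanism $\mathcal{M}$ randomizes the true query response, say $q(D)$, via a data-dependent additive noise process, i.e., $\mathcal{M}(D) = q(D) + Z$. Since the query counts individuals, we have $|q(D) - q(D')| \leq 1$ for all pairs of neighboring datasets $D$ and $D'$. Without loss of generality, in the following we assume that $|q(D) - q(D')| = 1$ for neighboring $D$ and $D'$ in the computation of DP parameters (if $q(D) = q(D')$, the two output distributions coincide, because the noise depends on the data only through the count). Assuming $q(D) = q$, it follows that we need to verify the DP requirement for datasets $D'$ for which $q(D') = q - 1$ or $q(D') = q + 1$.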
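For two neighboring counts and a fixed $\epsilon$, the smallest $\delta$ satisfying Definition 1 over all events $S$ is the hockey-stick divergence $\sum_y \max\{0, \Pr(Y = y \mid q) - e^{\epsilon}\Pr(Y = y \mid q')\}$, taken in both directions; this is a standard identity, not a result of this paper. The Python sketch below computes it for output pmfs stored as dictionaries. The function names and the toy noise (mass $1/2$ at $0$ and $1/4$ at $\pm 1$) are illustrative assumptions.

```python
import math


def hockey_stick(P, Q, eps):
    """sup_S P(S) - e^eps Q(S), which equals sum_y max(0, P(y) - e^eps Q(y))."""
    support = set(P) | set(Q)
    return sum(max(0.0, P.get(y, 0.0) - math.exp(eps) * Q.get(y, 0.0)) for y in support)


def delta_for_neighbors(out_q, out_q1, eps):
    """Smallest delta for the neighboring counts q and q + 1, checking both directions."""
    return max(hockey_stick(out_q, out_q1, eps), hockey_stick(out_q1, out_q, eps))


def shift(noise, q):
    """Output pmf of Y = q + Z for a noise pmf that does not depend on q."""
    return {q + z: w for z, w in noise.items()}


noise = {-1: 0.25, 0: 0.5, 1: 0.25}  # toy noise, for illustration only
q = 5
for eps in (0.0, math.log(2), 2.0):
    delta = delta_for_neighbors(shift(noise, q), shift(noise, q + 1), eps)
    print(f"eps={eps:.3f}  delta={delta:.4f}")
```

The value stops shrinking once $\epsilon$ is large enough: the output $y = q - 1$ is impossible under $q + 1$, so its mass $1/4$ must always be covered by $\delta$. A bounded noise support therefore forces approximate rather than pure DP.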

Remark 1.

Our approach to computing the DP parameters of mechanism (16) is as follows. We first consider Definition 1 for the singleton events $S = \{y\}$, i.e., all subsets $S$ with $|S| = 1$, and determine the corresponding parameters. Clearly, these parameters differ from the DP parameters. We then convert them into a valid pair of DP parameters. In the following, we use $\epsilon$ and $\delta$ to denote the parameters of mechanism (16) obtained by applying Definition 1 to singleton events only.

To formulate our goal, we fix $\epsilon$ and consider the following linear program

| (7) | ||||

| (8) |

Given the mechanism $Y = q + Z$ and Proposition 1, we can explicitly write the optimization of $\delta$ in terms of the mixture coefficients in (3) as follows.

Proposition 2.

For a given $\epsilon$, the optimal $\delta$ is the optimal value of the following linear program

| (9) | ||||

| s.t. | ||||

| (10) | ||||

| (11) | ||||

| (12) | ||||

| (13) |

where was defined in (2) and .

Clearly, the above optimization problem is a linear program. If computational complexity permits, an explicit expression for $\delta$ can be obtained through Fourier-Motzkin elimination of all mixture coefficients. However, we show in the next section that such an expression can be derived directly under a mild assumption on $q$.

III Explicit Solutions for

In this section, we show that if the true count satisfies $q \geq m$, then $\delta$ can be readily derived.

Proposition 3.

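If the noise support parameter $m$ satisfies $m \leq q$, then the following data-independent noise distribution satisfies P1-P3:

$$P_Z(z) = \begin{cases} p, & z = 0,\\ \frac{1-p}{2}\,\alpha_{|z|}, & 1 \leq |z| \leq m,\\ 0, & \text{otherwise}, \end{cases} \qquad (14)$$

for all $\alpha_1, \dots, \alpha_m \geq 0$ such that $\sum_{k=1}^{m} \alpha_k = 1$. In this case,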

| (15) |

Notice that the assumption $m \leq q$ enables us to design a noise distribution that satisfies P1-P3 while being independent of the query (i.e., of $q$). The simple mechanism given in Proposition 3 can be represented by the matrix

| (16) |

where for exposition we have assumed . Each column of this matrix represents for .
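Proposition 3 removes the dependence on $q$. The Python sketch below assumes that (14) is the symmetric distribution $\Pr(Z = 0) = p$ and $\Pr(Z = \pm k) = \frac{1-p}{2}\alpha_k$ for $k = 1, \dots, m$, with $\alpha_k \geq 0$ and $\sum_k \alpha_k = 1$ (our reading, consistent with the symmetry noted for Fig. 1); the coefficients used are hypothetical. Each column of the resulting matrix is the same pmf shifted by $q$, and the checks confirm P1-P3 for every count $q \geq m$.

```python
from fractions import Fraction


def symmetric_noise(p, alphas):
    """Assumed form of (14): P(Z=0) = p and P(Z=k) = P(Z=-k) = (1-p) * alpha_k / 2."""
    assert sum(alphas) == 1 and all(a >= 0 for a in alphas)
    pmf = {0: p}
    for k, a in enumerate(alphas, start=1):
        pmf[k] = pmf[-k] = (1 - p) * a / 2
    return pmf


def mechanism_matrix(pmf, counts):
    """Columns of matrix (16): P(Y = y | q) = P_Z(y - q), one column per count q."""
    return {q: {q + z: w for z, w in pmf.items()} for q in counts}


p = Fraction(3, 5)
alphas = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]  # hypothetical alpha_1..alpha_3
m = len(alphas)
pmf = symmetric_noise(p, alphas)
matrix = mechanism_matrix(pmf, counts=range(m, m + 4))  # Proposition 3 needs q >= m

for q, col in matrix.items():
    assert min(col) >= 0 and max(col) <= q + m   # P1
    assert col[q] == p                            # P2
    assert sum(col.values()) == 1 and sum(y * w for y, w in col.items()) == q  # P3
print(pmf[1], pmf[2], pmf[3])  # 1/10 1/15 1/30
```

Restricting the counts to $q \geq m$ is what lets the smallest output $q - m$ stay non-negative without adapting the noise to $q$.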

III-A Lower Bounds on $\delta$

To compute $\delta$ for mechanism (16), we first derive a lower bound on $\delta$ (in this subsection) and then show that it is achievable (in the next subsection). For notational brevity, define . The main tool for deriving the lower bound is the following proposition.

Proposition 4.

For the noise distribution given by Proposition 3, $\delta$ satisfies the following inequalities:

| (17) | ||||

| (18) | ||||

| (19) |

This proposition follows simply by comparing consecutive columns of the matrix (16). Letting and , the system of inequalities given in Proposition 4 can be expressed as

| (20) | ||||

| (21) | ||||

| (22) |
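The comparison of consecutive columns behind Proposition 4 is easy to carry out numerically. The Python sketch below assumes the symmetric form of (14) with $\Pr(Z = \pm k) = \frac{1-p}{2}\alpha_k$ and computes the singleton-event quantity of Remark 1, $\max_y \max\{\Pr(Y=y \mid q) - e^{\epsilon}\Pr(Y=y \mid q+1),\ \Pr(Y=y \mid q+1) - e^{\epsilon}\Pr(Y=y \mid q)\}$, for a given coefficient vector. It then minimizes this quantity by brute force over a simplex grid of coefficients. The grid search is only a hypothetical stand-in for the linear program (9)-(13) and the closed form of Section III-B; it approximates the optimum from above and is practical only for small $m$.

```python
import itertools
import math


def symmetric_noise(p, alphas):
    """Assumed form of (14): P(Z=0) = p and P(Z=k) = P(Z=-k) = (1-p) * alpha_k / 2."""
    pmf = {0: p}
    for k, a in enumerate(alphas, start=1):
        pmf[k] = pmf[-k] = (1 - p) * a / 2
    return pmf


def singleton_delta(pmf, eps):
    """Worst singleton-event gap between adjacent columns q and q+1 of matrix (16).

    With d = y - q, column q has P_Z(d) and column q+1 has P_Z(d - 1).
    """
    worst = 0.0
    for d in range(min(pmf), max(pmf) + 2):
        a, b = pmf.get(d, 0.0), pmf.get(d - 1, 0.0)
        worst = max(worst, a - math.exp(eps) * b, b - math.exp(eps) * a)
    return worst


def grid_search(p, m, eps, steps=60):
    """Brute-force minimization of singleton_delta over alphas on a simplex grid."""
    best_delta, best_alphas = math.inf, None
    for cuts in itertools.combinations_with_replacement(range(steps + 1), m - 1):
        edges = (0, *cuts, steps)
        alphas = [(edges[k + 1] - edges[k]) / steps for k in range(m)]
        delta = singleton_delta(symmetric_noise(p, alphas), eps)
        if delta < best_delta:
            best_delta, best_alphas = delta, alphas
    return best_delta, best_alphas


delta, alphas = grid_search(p=0.6, m=3, eps=1.0)
print(f"delta ~ {delta:.4f} with alphas ~ {[round(a, 3) for a in alphas]}")
```

Because each gap $a - e^{\epsilon}b$ is non-increasing in $\epsilon$, the minimized value is non-increasing in $\epsilon$ as well, in line with the observation in Section I that a higher $\epsilon$ yields a lower $\delta$.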

We now present a series of lower bounds on $\delta$, which we term Type-I lower bounds.

Lemma 1.

For the noise distribution given by Proposition 3, we have

| (23) |

Proof.

First note that, since , we have from (22),

| (24) |

Since , a second lower bound is obtained from (21) for by eliminating both and as follows

| (25) | ||||

| (26) |

Generalizing this for , we obtain a general class of lower bounds as follows

| (27) | |||

| (28) | |||

| (29) |

Iterating this process, we obtain

| (30) | |||

| (31) |

which upon rearranging implies . ∎

In general, none of these lower bounds dominates the others. We will shortly elaborate on the relationship between for . Before doing so, we first obtain another lower bound on $\delta$, termed a Type-II lower bound.

Lemma 2.

For the noise distribution given by Proposition 3, we have

| (32) |

Proof.

Theorem 1.

To show that this lower bound is in fact tight, we need to carefully investigate the behavior of the Type-I lower bounds. To do so, we define the crossover values as

| (34) |

For any , the relative order of two consecutive Type-I lower bounds and is uniquely determined by how $\epsilon$ compares with the corresponding crossover value, as prescribed in the following lemma. This, together with the strictly decreasing behavior of the sequence of crossover values (proved in Lemma 4), ensures that we can find the maximum among all Type-I lower bounds analytically.

Lemma 3.

We have for if and only if is such that .

Proof.

First consider and note that

| (35) |

For , it follows that if and only if

After a straightforward algebraic manipulation, we can show that it is equivalent to

Last, we have if and only if

which is in turn equivalent to

Hence, we conclude if and only if

∎

Lemma 4.

The sequence is strictly decreasing.

Proof.

This can be easily verified by writing the difference between and and checking that

| (36) |

∎

For notational simplicity, define and . Thus, is still monotonically decreasing. Combining Lemma 3 with Lemma 4, we obtain the following result.

Theorem 2.

Proof.

To prove the only-if direction, note that due to strict monotonicity of , implies . Therefore, according to Lemma 3, implies . Similarly, implies . Therefore, implies . In summary, implies . The converse can be argued similarly. ∎

Together with Theorem 1, this theorem implies that if , then

Next, we design a mechanism for which this lower bound is achieved.

III-B Achievability of Lower Bounds

In this section, we show that the lower bound on is in fact achievable, i.e., there exists with and such that the mechanism (16) satisfies the constraints of the linear program (9) with .

Definition 2 (Optimal ’s).

Now we are ready for the main result of this section.

Theorem 3.

Proof.

First note that once we prove that is achievable, Theorem 1 implies that . Thus, we only need to prove the achievability of . Note further that satisfy the inequalities in Proposition 4, with replaced by , with equality. Therefore, we only need to show that are valid in the sense that they are non-negative and sum to 1.

Assume in general and in particular, we have , for some (recall that ). It follows from Theorem 2 that , where is a Type-I lower bound given in (23). First, we note that according to (39)

| (40) |

Now consider in (39). Applying (39) iteratively, we obtain

| (41) | ||||

| (42) | ||||

| (43) |

where in the last two steps we have used the fact that and the definition of in (34). Note that the definition of in (39) together with implies that for . (Note that from (32) we conclude . Therefore, .) In particular, we have for . Note that

| (44) |

where we use the fact that for .

Finally, if then from Theorem 2, we conclude , which is the Type-II lower bound on . In this case, are given in (37) and (38). A similar argument as above shows that for each and also .

∎

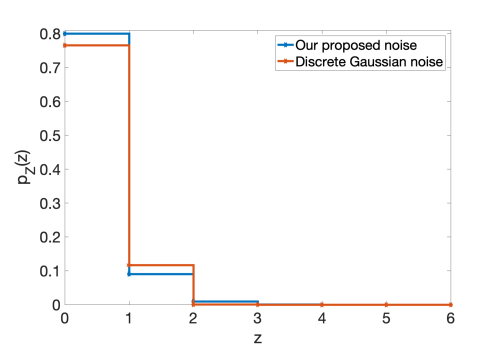

This theorem demonstrates that , defined in Definition 2, yields the “optimal” mechanism when substituted into (16), or equivalently, the optimal noise distribution when substituted into (14). Fig. 1 shows such an optimal noise distribution for (), (), and for (noting the symmetry for ). Note that the minimum true count is assumed to be , which seems a reasonable assumption in large datasets with hundreds of participants. Using (34), we find that . Therefore, according to Theorem 2. It follows from (39) that only , , and are non-zero. Consequently, the noise distribution is in fact supported on , instead of on the set originally required in P1, i.e., .

Using Equation (1) in [12], we also plot in Fig. 1 the discrete Gaussian distribution, denoted by , for . We set its variance equal to that of our noise distribution, i.e., . It is worth noting that the discrete Gaussian distribution is not compactly supported; however, the probability that it assigns to points outside is negligible, and these points are not shown in Fig. 1. While the two distributions may look somewhat similar, they have important differences that substantially affect their privacy performance. Notice, for instance, that and , and hence can be as large as about 282. In contrast, in our proposed distribution, and , and hence . Consequently, in the discrete Gaussian mechanism the smallest such that for is , while for our mechanism is sufficient. This indicates that the optimally chosen coefficients in our setting improve on the privacy guarantee of the discrete Gaussian mechanism. In the next section, we provide a more detailed comparison between these two mechanisms.
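Equation (1) in [12] defines the discrete Gaussian $\mathcal{N}_{\mathbb{Z}}(0,\sigma^2)$ by $\Pr(X = x) \propto e^{-x^2/(2\sigma^2)}$ for $x \in \mathbb{Z}$. The Python sketch below evaluates it on a finite window (an approximation of ours: mass beyond the window is discarded and the rest renormalized) and finds by bisection the parameter $\sigma^2$ whose discrete Gaussian has a prescribed variance, relying on that variance being increasing in $\sigma^2$. The target variance uses hypothetical values $p = 0.6$ and $\alpha = (1/2, 1/3, 1/6)$ in the assumed symmetric form $\Pr(Z = \pm k) = \frac{1-p}{2}\alpha_k$, for which $\mathrm{Var}(Z) = (1-p)\sum_k k^2\alpha_k$. The sketch matches the actual variance rather than the parameter $\sigma^2$; the two are close unless $\sigma^2$ is small.

```python
import math


def discrete_gaussian_pmf(sigma2, radius):
    """N_Z(0, sigma2) restricted to [-radius, radius] and renormalized."""
    weights = {x: math.exp(-x * x / (2 * sigma2)) for x in range(-radius, radius + 1)}
    total = sum(weights.values())
    return {x: w / total for x, w in weights.items()}


def variance(pmf):
    """Variance of a pmf given as a dict from integer values to probabilities."""
    mean = sum(x * w for x, w in pmf.items())
    return sum((x - mean) ** 2 * w for x, w in pmf.items())


def match_variance(target, iters=100):
    """Bisection on sigma2 so that the discrete Gaussian has variance `target`."""
    lo, hi = 1e-9, 2 * target + 1
    radius = int(20 * math.sqrt(hi)) + 20
    for _ in range(iters):
        mid = (lo + hi) / 2
        if variance(discrete_gaussian_pmf(mid, radius)) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


p, alphas = 0.6, (1 / 2, 1 / 3, 1 / 6)  # hypothetical parameters
target = (1 - p) * sum(k * k * a for k, a in enumerate(alphas, start=1))
sigma2 = match_variance(target)
dg = discrete_gaussian_pmf(sigma2, radius=60)
outside = sum(w for x, w in dg.items() if abs(x) > len(alphas))
print(f"target variance {target:.4f}, sigma^2 {sigma2:.4f}, mass outside [-3, 3] {outside:.2e}")
```

The last quantity is the probability mass that the discrete Gaussian places outside the support of the bounded-support noise with the same variance.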

Remark 2.

As mentioned in Remark 1, we restricted Definition 1 to singleton events. As a result, the parameters $\epsilon$ and $\delta$ computed in this section do not correspond to the DP parameters. To relate them to the DP parameters, recall that the mechanism (16) is -DP if for any event

for all possible query responses . Since , our analysis in this section implies that for any

where denotes the set of with and a closed-form expression for was given in Theorem 3. The same argument shows that . This observation indicates that the mechanism (16) is -DP when is chosen according to Definition 2.
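In our reading, Remark 2 bounds the event-level $\delta$ by the singleton-event value multiplied by the number of outputs on which $\Pr(Y = y \mid q) > e^{\epsilon}\Pr(Y = y \mid q')$. The Python sketch below checks this numerically for noise of the assumed symmetric form $\Pr(Z = \pm k) = \frac{1-p}{2}\alpha_k$ with hypothetical $p$ and $\alpha$. It compares the exact value (the sum of the positive gaps) with the singleton value (the largest gap) times the number of positive gaps, for each direction of the neighboring pair.

```python
import math


def symmetric_noise(p, alphas):
    """Assumed form of (14): P(Z=0) = p and P(Z=k) = P(Z=-k) = (1-p) * alpha_k / 2."""
    pmf = {0: p}
    for k, a in enumerate(alphas, start=1):
        pmf[k] = pmf[-k] = (1 - p) * a / 2
    return pmf


def positive_gaps(P, Q, eps):
    """Values P(y) - e^eps Q(y) over the outputs y where this difference is positive."""
    gaps = (P.get(y, 0.0) - math.exp(eps) * Q.get(y, 0.0) for y in set(P) | set(Q))
    return [g for g in gaps if g > 0]


def compare_deltas(pmf, eps, q=10):
    """Exact event-level delta vs. (number of positive gaps) x (largest gap)."""
    out_q = {q + z: w for z, w in pmf.items()}
    out_q1 = {q + 1 + z: w for z, w in pmf.items()}
    exact, bound = 0.0, 0.0
    for P, Q in ((out_q, out_q1), (out_q1, out_q)):
        gaps = positive_gaps(P, Q, eps)
        exact = max(exact, sum(gaps))
        bound = max(bound, len(gaps) * max(gaps, default=0.0))
    return exact, bound


pmf = symmetric_noise(0.6, (0.5, 0.3, 0.2))  # hypothetical p and alphas
for eps in (0.5, 1.0, 2.0):
    exact, bound = compare_deltas(pmf, eps)
    print(f"eps={eps}: exact delta {exact:.4f} <= bound {bound:.4f}")
    assert exact <= bound + 1e-12
```

The bound is loose when many outputs contribute small gaps, which is why the singleton parameters are converted rather than used directly.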

IV Numerical Results

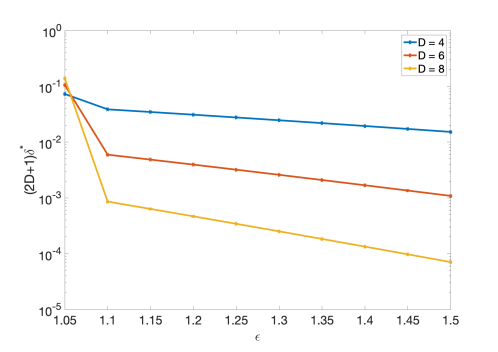

We begin this section by numerically computing the DP parameters $\epsilon$ and $\delta$ of our mechanism according to Theorem 3. (We mostly consider practical parameter ranges resulting in . We thus write instead of .) In Fig. 2, we assume and plot the in terms of for different values of . It is clear that is no greater than for and . It is worth noting that while we reveal the true count with probability , from Definition 2, the mechanism's output lies in an acceptable range of with a much higher probability for .

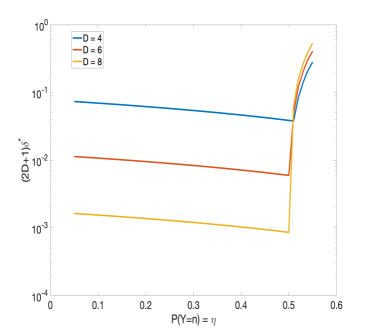

In Fig. 3, we illustrate the relationship between the DP parameter and for different values of and . Quite predictably, for given and , there exists a threshold for above which grows rapidly to one, indicating a trade-off between utility (reliability) and privacy. To strike a good balance between reliability and privacy, one may choose the largest value of just before the ‘knee’ occurs. For instance, assuming , our mechanism provides -DP and -DP guarantees while achieving and , respectively. We remark that and were numerically chosen such that the knee occurs slightly after and , respectively.

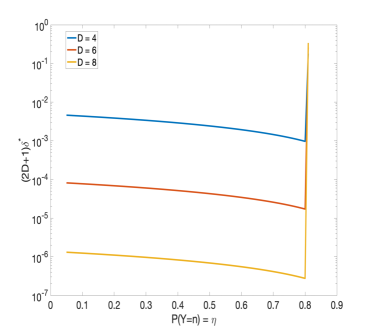

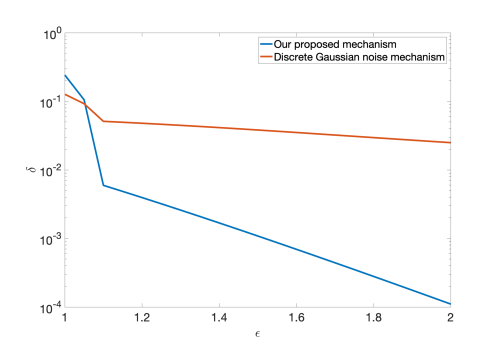

Next, we compare our mechanism with the recently proposed discrete Gaussian mechanism [12] in terms of utility-privacy performance. There are different ways to conduct such a comparison. To make the comparison fair, we take the following steps:

- 1.

- 2. We generate a discrete Gaussian probability mass function (pmf) according to Equation (1) in [12] with variance . This ensures that the discrete Gaussian mechanism has the same utility as our mechanism. We refer to the resulting distribution as .

- 3. Let $\epsilon$ and $\delta_{\mathrm{DG}}$ be the DP parameters of the discrete Gaussian mechanism. It is proved in [12, Theorem 7] that, for a query with unit sensitivity,

$$\delta_{\mathrm{DG}} = \Pr\left[X > \epsilon\sigma^2 - \tfrac{1}{2}\right] - e^{\epsilon}\,\Pr\left[X > \epsilon\sigma^2 + \tfrac{1}{2}\right], \qquad (45)$$

where $X$ is the discrete Gaussian noise added to the true count and $\sigma^2$ is its variance parameter. By replacing $\sigma^2$ by , we use this expression to compare the discrete Gaussian mechanism with our mechanism in terms of privacy parameters.
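Expression (45) can be cross-checked against a direct computation. The Python sketch below evaluates the closed form $\Pr[X > \epsilon\sigma^2 - \tfrac{1}{2}] - e^{\epsilon}\Pr[X > \epsilon\sigma^2 + \tfrac{1}{2}]$, which is the form we take for [12, Theorem 7] with unit sensitivity, and compares it with the hockey-stick sum $\sum_y \max\{0, \Pr[X = y] - e^{\epsilon}\Pr[X = y - 1]\}$ between neighboring counts. Here $X \sim \mathcal{N}_{\mathbb{Z}}(0,\sigma^2)$ is truncated to a wide window, an approximation of ours; the two values agree up to the negligible mass outside the window. The $\sigma^2$ and $\epsilon$ values are illustrative.

```python
import math


def discrete_gaussian_pmf(sigma2, radius):
    """N_Z(0, sigma2) restricted to [-radius, radius] and renormalized."""
    weights = {x: math.exp(-x * x / (2 * sigma2)) for x in range(-radius, radius + 1)}
    total = sum(weights.values())
    return {x: w / total for x, w in weights.items()}


def delta_closed_form(sigma2, eps, radius=60):
    """P[X > eps*sigma2 - 1/2] - e^eps P[X > eps*sigma2 + 1/2] (unit sensitivity)."""
    pmf = discrete_gaussian_pmf(sigma2, radius)

    def tail(c):
        return sum(w for x, w in pmf.items() if x > c)

    t = eps * sigma2
    return tail(t - 0.5) - math.exp(eps) * tail(t + 0.5)


def delta_direct(sigma2, eps, radius=60):
    """Hockey-stick divergence between X and X + 1 (neighboring counts q and q + 1)."""
    pmf = discrete_gaussian_pmf(sigma2, radius)
    return sum(
        max(0.0, pmf.get(y, 0.0) - math.exp(eps) * pmf.get(y - 1, 0.0))
        for y in range(-radius, radius + 2)
    )


for sigma2 in (1.0, 2.0, 4.0):
    for eps in (0.5, 1.0):
        a, b = delta_closed_form(sigma2, eps), delta_direct(sigma2, eps)
        print(f"sigma2={sigma2}, eps={eps}: closed form {a:.6f}, direct {b:.6f}")
```

By symmetry of the discrete Gaussian, the reverse direction (from $q + 1$ to $q$) gives the same value, so a single hockey-stick sum suffices here.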

As depicted in Fig. 4, the $(\epsilon,\delta)$-DP performance of our proposed scheme is superior to that of the discrete Gaussian mechanism with the same variance for a wide range of privacy parameters (both mechanisms perform rather poorly for , resulting in quite high $\delta$). Many other experiments were conducted, and they all showed trends similar to those in Fig. 4.

V Conclusion

In this work, we explicitly constructed a privacy-preserving mechanism for responding to integer-valued queries on a dataset containing sensitive attributes. This mechanism is noise-additive but, unlike many other noise-additive private mechanisms, it adds integer-valued noise to the true count. The noise distribution is parameterized by the probability $p$ of releasing the true count, which specifies the allowable probability of error $1 - p$ and thereby balances the privacy-utility trade-off. We proved that this mechanism is $(\epsilon,\delta)$-differentially private, where both $\epsilon$ and $\delta$ depend on the noise support size and $p$. This mechanism is contrasted with the recently proposed discrete Gaussian mechanism [12], which adds discretized Gaussian noise (with infinite support) to the true count. Our numerical findings indicate that the resulting $\epsilon$ and $\delta$ are tighter than those obtained by the discrete Gaussian mechanism over a wide range of practically relevant privacy parameters.

We conclude this work with a note on future directions. In this work, we assumed a single query to a dataset. However, in many practical scenarios a dataset receives several queries, each of which might depend on the previous ones. If each query is answered by our private mechanism, it is essential to determine how privacy degrades as the number of queries increases. We believe that the new technique proposed in [15] might be helpful for this purpose.

References

- [1] US Bureau of the Census, 2020 Census. [Online]. Available: https://www.census.gov

- [2] Australian Bureau of Statistics, 2021 Census. [Online]. Available: https://www.abs.gov.au

- [3] “Changes to the Census Could Make Small Towns Disappear,” The New York Times, Feb. 2020. [Online]. Available: https://www.nytimes.com/interactive/2020/02/06/opinion/census-algorithm-privacy.html

- [4] C. Dwork, “Differential Privacy,” in Automata, Languages and Programming, 2006.

- [5] US Bureau of the Census, Disclosure Avoidance and the 2020 Census. [Online]. Available: https://www.census.gov/about/policies/privacy/statistical_safeguards/disclosure-avoidance-2020-census.html

- [6] J. Bailie and C.-H. Chien, “ABS Perturbation Methodology Through the Lens of Differential Privacy,” 2019. [Online]. Available: http://www.unece.org/fileadmin/DAM/stats/documents/ece/ces/ge.46/2019/mtg1/SDC2019_S2_ABS_Bailie_D.pdf

- [7] A. Ghosh, T. Roughgarden, and M. Sundararajan, “Universally utility-maximizing privacy mechanisms,” in STOC, 2009. [Online]. Available: http://doi.acm.org/10.1145/1536414.1536464

- [8] M. Gupte and M. Sundararajan, “Universally optimal privacy mechanisms for minimax agents,” in Proceedings of the Twenty-Ninth ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems, ser. PODS ’10. New York, NY, USA: Association for Computing Machinery, 2010, pp. 135–146. [Online]. Available: https://doi.org/10.1145/1807085.1807105

- [9] Q. Geng and P. Viswanath, “The optimal noise-adding mechanism in differential privacy,” IEEE Transactions on Information Theory, vol. 62, no. 2, pp. 925–951, 2016.

- [10] V. Balcer and S. Vadhan. (2019) Differential privacy on finite computers. [Online]. Available: https://arxiv.org/abs/1709.05396

- [11] G. Cormode, T. Kulkarni, and D. Srivastava. (2017) Constrained Differential Privacy for Count Data. [Online]. Available: https://arxiv.org/abs/1710.00608

- [12] C. Canonne, G. Kamath, and T. Steinke. (2020) The Discrete Gaussian for Differential Privacy. [Online]. Available: https://arxiv.org/abs/2004.00010v2

- [13] C. Dwork and A. Roth, The Algorithmic Foundations of Differential Privacy. Foundations and Trends in Theoretical Computer Science. Now, 2014.

- [14] C. Dwork, F. McSherry, K. Nissim, and A. Smith, “Calibrating noise to sensitivity in private data analysis,” in Proceedings of the Third Conference on Theory of Cryptography, ser. TCC’06. Berlin, Heidelberg: Springer-Verlag, 2006, pp. 265–284.

- [15] S. Asoodeh, J. Liao, F. P. Calmon, O. Kosut, and L. Sankar, “A better bound gives a hundred rounds: Enhanced privacy guarantees via f-divergences,” 2020. [Online]. Available: https://arxiv.org/abs/2001.05990