DiffMD: A Geometric Diffusion Model for Molecular Dynamics Simulations

Abstract

Molecular dynamics (MD) has long been the de facto choice for simulating complex atomistic systems from first principles. Recently deep learning models become a popular way to accelerate MD. Notwithstanding, existing models depend on intermediate variables such as the potential energy or force fields to update atomic positions, which requires additional computations to perform back-propagation. To waive this requirement, we propose a novel model called DiffMD by directly estimating the gradient of the log density of molecular conformations. DiffMD relies on a score-based denoising diffusion generative model that perturbs the molecular structure with a conditional noise depending on atomic accelerations and treats conformations at previous timeframes as the prior distribution for sampling. Another challenge of modeling such a conformation generation process is that a molecule is kinetic instead of static, which no prior works have strictly studied. To solve this challenge, we propose an equivariant geometric Transformer as the score function in the diffusion process to calculate corresponding gradients. It incorporates the directions and velocities of atomic motions via 3D spherical Fourier-Bessel representations. With multiple architectural improvements, we outperform state-of-the-art baselines on MD17 and isomers of datasets. This work contributes to accelerating material and drug discovery.

Introductions

Molecular dynamics (MD), an in silico tool that simulates complex atomic systems based on first principles, has exerted dramatic impacts in scientific research. Instead of yielding an average structure by experimental approaches including X-ray crystallography and cryo-EM, MD simulations can capture the sequential behavior of molecules in full atomic details at the very fine temporal resolution, and thus allow researchers to quantify how much various regions of the molecule move at equilibrium and what types of structural fluctuations they undergo. In the areas of molecular biology and drug discovery, the most basic and intuitive application of MD is to assess the mobility or flexibility of various regions of a molecule. MD substantially accelerates the studies to observe the biomolecular processes in action, particularly important functional processes such as ligand binding (Shan et al. 2011), ligand- or voltage-induced conformational change (Dror et al. 2011), protein folding (Lindorff-Larsen et al. 2011), or membrane transport (Suomivuori et al. 2017).

Nevertheless, the computational cost of MD generally scales cubically with respect to the number of electronic degrees of freedom. Besides, important biomolecular processes like conformational change often take place on timescales longer than those accessible by classical all-atom MD simulations. Although a wide variety of enhanced sampling techniques have been proposed to capture longer-timescale events (Schwantes, McGibbon, and Pande 2014), none of them is a panacea for timescale limitations and might additionally cause decreased accuracy. Thus, it is an urgent demand to fundamentally boost the efficiency of MD while keeping accuracy.

Recently, deep learning-based MD (DLMD) models provide a new paradigm to meet the pressing demand. The accuracy of those models stems from not only the distinctive ability of neural networks to approximate high-dimensional functions but the proper treatment of physical requirements like symmetry constraints and the concurrent learning scheme that generates a compact training dataset (Jia et al. 2020). Despite their success, current DLMD models primarily suffer from the following three issues. First, most DLMD models still rely on intermediate variables (e.g., the potential energy) and multiple stages to generate subsequent biomolecular conformations. This substantially raises the computational expenditure and hinders the inference efficiency, since the inverse Hessian scales as cubically with the number of atom coordinates (Cranmer et al. 2020). Second, existing DLMD models regard the DL module as a black-box to predict atomic attributes and never inosculate the neural architecture with the theory of thermodynamics. Last but not least, the majority of prevailing geometric methods (Gilmer et al. 2017; Schütt et al. 2018; Klicpera, Groß, and Günnemann 2020) are designed for immobile molecules and not suitable for dynamic systems where the directions and velocities of atomic motions count.

This paper proposes DiffMD that aims to address the above-mentioned issues. First, DiffMD is a one-stage procedure and forecasts the simulation trajectories without any dependency on the potential energy or forces. For the second issue, inspired by the consistency of diffusion processes in nonequilibrium thermodynamics and probabilistic generative models (Sohl-Dickstein et al. 2015; Song and Ermon 2019), DiffMD adopts a score-based denoising diffusion generative model (Song et al. 2020b) with the exploration of various stochastic differential equations (SDEs). It sequentially corrupts training data by slowly increasing noise and then learns to reverse this corruption. This generative process highly accords with the enhanced sampling mechanism in MD (Miao, Feher, and McCammon 2015), where a boost potential is added conditionally to smooth biomolecular potential energy surface and decrease energy barriers. Besides, to make geometric models aware of atom mobility, we propose an equivariant geometric Transformer (EGT) as the score function for our DiffMD. It refines the self-attention mechanism (Vaswani et al. 2017) with 3D spherical Fourier-Bessel representations to incorporate both the intersection and dihedral angles between each pair of atoms and their associated velocities.

We conduct comprehensive experiments on multiple standard MD simulation datasets including MD17 and isomers. Numerical results demonstrate that DiffMD constantly outperforms state-of-the-art DLMD models by a large margin. The significantly superior performance illustrates the high capability of our DiffMD to accurately produce MD trajectories for microscopic systems.

Preliminaries

Background

We consider an MD trajectory of a molecule with timeframes. denotes the conformation at time and is assumed to have atoms. There and denote the 3D coordinates and -dimension roto-translational invariant features (e.g. atom types) associated with each atom, respectively. corresponds to the atomic velocities. We denote a vector norm by , its direction by , and the relative position by .

Molecular Dynamics

MD with classical potentials.

The fundamental idea behind MD simulations is to study the time-dependent behavior of a microscopic system. It generates the atomic trajectories for a specific interatomic potential with certain initial conditions and boundary conditions. This is obtained by solving the first-order differential equation of the Newton’s second law:

| (1) |

where is the net force acting on the -th atom of the system at a given point in the -th timeframe, is the corresponding acceleration, and is the mass. is the potential energy function. The classic force field (FF) defines the potential energy function in Appendix. Then numerical methods are utilized to advance the trajectory over small time increments with the assistance of some integrator (see more introductions to MD in Appendix).

Enhanced sampling in MD.

Enhanced sampling methods have been developed to accelerate MD and retrieve useful thermodynamic and kinetic data (Rocchia, Masetti, and Cavalli 2012). These methods exploit the fact that the free energy is a state function; thus, differences in free energy are independent of the path between states (De Vivo et al. 2016). Several techniques such as free-energy perturbation, umbrella sampling, tempering, and metadynamics are invented to reduce the energy barrier and smooth the potential energy surface (Luo et al. 2020; Liao 2020).

Score-based Generative Model

Score-based generative models (Song et al. 2020b) refer to the score matching with Langevin dynamics (Song and Ermon 2019) and the denoising diffusion probabilistic modeling (Sohl-Dickstein et al. 2015) .They have shown effectiveness in the generation of images (Ho, Jain, and Abbeel 2020) and molecular conformations (Shi et al. 2021).

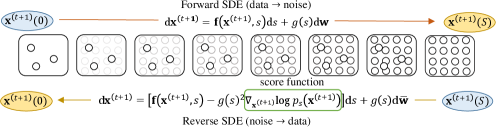

Diffusion process.

Assume a diffusion process indexed by a continuous time variable , such that , for which we have a dataset of i.i.d. samples, and , for which we have a tractable form to generate samples efficiently. Let be the probability density of , and be the transition kernel from to , where . Then the diffusion process is modeled as the solution to an It SDE (Song et al. 2020b):

| (2) |

where is a standard Wiener process, is a vector-valued function called the drift coefficient of , and is a scalar function known as the diffusion coefficient of .

Reverse process.

By starting from samples of and reversing the diffusion process, we can obtain samples . The reverse-time SDE can be acquired based on the result from Anderson (1982) that the reverse of a diffusion process is also a diffusion process as:

| (3) |

where is a standard Wiener process when time flows backwards from to 0, and is an infinitesimal negative timeframe. The score of a distribution can be estimated by training a score-based model on samples with score matching (Song and Ermon 2019). To estimate , one can train a time-dependent score-based model via a continuous generalization to the denoising score matching objective (Song et al. 2020b):

| (4) |

Here is a positive weighting function, is uniformly sampled over , and . With sufficient data and model capacity, score matching ensures that the optimal solution to Eq. 4, denoted by , equals for almost all and . We can typically choose (Song et al. 2020b).

DiffMD

Model Overview

Most prior DLMD studies such as Zhang et al. (2018) rely on the potential energy as the intermediate variable to acquire atomic forces and update positions, which demands an additional backpropagation calculation and significantly increases the computational costs. Some recent work starts to abandon the two-stage manner and choose the atom-level force as the prediction target of deep networks (Park et al. 2021). However, they all rely on the integrator from external computational tools to renew the positions in accordance with pre-calculated energy or forces. None embraces a straightforward paradigm to immediately forecast the 3D coordinates in a microscopic system concurrently based on previously available timeframes, i.e., . To bridge this gap, we seek to generate trajectories without any transitional integrator.

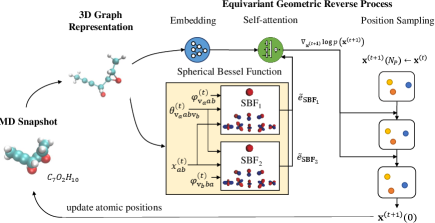

Several MD simulation frameworks assume the Markov property on biomolecular conformational dynamics (Chodera and Noé 2014; Malmstrom et al. 2014) for ease of representation, i.e., . We also hold this assumption and aim to estimate the gradient field of the log density of atomic positions at each timeframe, i.e. . In this setting, we design a score network based on the Transformer architecture to learn the scores of the position distribution, i.e., . During the inference period, we regard the conformation of the previous frame as the prior distribution, from which is sampled. Note that , we formulate it as a node regression problem. The whole procedure of DiffMD is depicted in Fig. 1.

Score-based Generative Models for MD

The motivation for our extending the denoising diffusion models to MD simulations is their resemblance to the enhanced sampling mechanism. Inspired by non-equilibrium statistical physics, these models first systematically and slowly destroy structures in distribution through an iterative forward diffusion process and then reverse it, similar to the behavior of perturbing the free energy in the system and striving to minimize the overall energy.

Perturbing data conditionally with SDEs.

Our goal is to construct a diffusion process indexed by a continuous time variable , such that and . There, and are the data distribution and the prior distribution of atomic positions respectively, as Equation 2.

How to incorporate noise remains critical to the success of the generation, which ensures the resulting distribution does not collapse to a low dimensional manifold (Song and Ermon 2019). Conventionally, is an unstructured prior distribution, such as a Gaussian distribution with fixed mean and variance (Song et al. 2020b), which is uninformative for . This construction of improves the sample variety for image generation (Brock, Donahue, and Simonyan 2018) but may not work well for MD. One reason is corrupting molecular conformations unconditionally would trigger severe turbulence to the microscopic system; besides, it ignores the fact that molecular conformations of neighboring frames and are close to each other and their divergence is dependent on the status of the former one. Therefore, it is necessary to formulate with the prior knowledge of .

To be explicit, the noise does not constantly grow along with , but depends on prior states. This strategy aligns with the Gaussian accelerated MD (GaMD) mechanism (details are in Appendix) and serve as a more practical way to inject turbulence into . Driven by the foregoing analysis, we introduce a conditional noise in compliance with the accelerations at prior frames and choose the following SDE:

| (5) |

where the noise term is dynamically adjusted as:

| (6) |

here is the harmonic acceleration constant and represents the acceleration threshold. Once the system has a slow variation trend of motion (i.e., the systematical energy is low), it will be supplied with a large level of noise and verse vice. Thus, the conditional noise is inversely proportional to and inherits the merits of enhanced sampling.

Generating samples through reversing the SDE.

Following the reverse-time SDE (Anderson 1982), samples of the next timeframe can be attained by reversing the diffusion process as:

| (7) |

Once the score of each marginal distribution, , is known for all , we can simulate the reverse diffusion process to sample from . The workflow is summarized in Fig. 2.

Estimating scores for the SDE.

Intuitively, the optimal parameters of the conditional score network can be trained directly by minimizing the following formula:

| (8) |

Here and . stands for the disturbed conformation with the noised geometric position . Notably, other score matching objectives, such as sliced score matching (Song et al. 2020a) and finite-difference score matching (Pang et al. 2020) can also be applied here rather than denoising score matching in Eq. 8.

Equivariant Geometric Score Network

Equivariance is a ubiquitous symmetry, which complies with the fact that physical laws hold regardless of the coordinate system. It has shown efficacy to integrate such inductive bias into model parameterization for modeling 3D geometry (Köhler, Klein, and Noé 2020; Klicpera, Becker, and Günnemann 2021). Hence, we consider building the score network equivariant to rotation and translation transformations.

Existing equivariant models for molecular representations are static rather than kinetic. In contrast, along MD trajectories each atom has a velocity and a corresponding orientation. To be specific, for some pair of atoms , they formulate two intersecting planes (see Fig. 3) with respective velocities . We denote the angles between velocities and the connecting line of two atoms by and . We denote the dihedral angle between two half-phases as . These three angles contain pivotal geometric information for predicting pairwise interactions as well as their future positions. It is necessary to incorporate them into our geometric modeling. Unfortunately, the directions and velocities of atomic motion, uniquely owned by dynamic systems, are seldom concerned by prior models.

To this end, we draw inspiration from Equivariant Graph Neural Networks (EGNN) (Satorras, Hoogeboom, and Welling 2021), GemNet (Klicpera, Becker, and Günnemann 2021), and Molformer (Wu et al. 2021), and propose an equivariant geometric Transformer (EGT) as . Our EGT is roto-translation equivariant and leverages directional information. The -th equivariant geometric layer (EGL) takes the set of atomic coordinates , velocities , and features as input, and outputs a transformation on , , and . Concisely, .

We first calculate the spherical Fourier-Bessel bases to integrate all available geometric information:

| (10) |

| (11) |

where , , and control the degree, root, and order of the radial basis functions, respectively. is the interaction cutoff. is the spherical Bessel functions. is the -th root of the -degree Bessel functions. is the real spherical harmonics with degree and order . Remarkably, 3D spherical Fourier-Bessel representations including and enjoy the roto-translation invariant property due to their exploitation of the relative distance as well as the invariant angles. Then those directional vectors are fed into EGL as:

| (12) | ||||

| (13) | ||||

| (14) | ||||

| (15) | ||||

| (16) | ||||

| (17) |

Here denotes concatenation and is the number of total layers in EGT. , and are linear transformations. and are velocity and feature operations, which are commonly approximately by multi-layer perceptrons (MLPs). , and are respectively the query, key, and value vectors with the same dimension . The weight matrix and are learnable, transferring dimensions of the concatenated vectors back to . is the attention that the token pays to the token . denotes the Softmax function. Finally, at the last layer immediately draw the gradient field of locations, i.e., .

Note that EGL breaks down the coordinate update into two stages. First we compute the velocity , and then leverage it to update the position . The initial velocity is scaled by that maps the feature embedding to a scalar value. After that, the velocity of each atom is updated as a vector field in a radial direction. In other words, is renewed by the weighted sum of all relative differences . The weights of this sum are provided as the attention score . Meanwhile, those attention scores are used to gain the new feature .

Analysis on E() equivariance.

We analyze the equivariance properties of our model for E(3) symmetries. That is, our model should be translation equivariant on for any translation vector and it should also be rotation and reflection equivariant on for any orthogonal matrix and any translation matrix . More formally, our model satisfies (see proof in Appendix):

| (18) |

Trajectory Sampling

After training a time-dependent score-based model , we can exploit it to construct the reverse-time SDE and then simulate it with numerical methods to generate molecular conformations from . As analyzed before, and are heavily correlated and their divergence is minor. Based on this relationship, instead of using some Gaussian distributions (Song et al. 2020b), we leverage as a replacement to approximate the unknown prior distribution . That is, we regard as the perturbed version of and seize it as the starting point of our trajectory sampling process.

Numerical solvers provide approximate computation for SDEs. Many general-purpose numerical methods, such as Euler-Maruyama and stochastic Runge-Kutta methods, apply to the reverse-time SDE for sample generation. In addition to SDE solvers, we can also employ score-based Markov Chain Monte Carlo (MCMC) approaches such as Langevin MCMC or Hamiltonian Monte Carlo to sample from directly, and correct the solution of a numerical SDE solver. Readers are recommended to refer to Song et al. (2020b) for more details. We provide the pseudo-code of the whole sampling process in Algorithm 1.

Experiments

To verify the effectiveness of our DiffMD, we construct the following two tasks and empirically evaluate it:

Short-term-to-long-term (S2L) Trajectory Generation.

In this task setting, models are first trained on some short-term trajectories and are required to produce long-term trajectories of the same molecule given the starting conformation as . This extrapolation over time aims to examine the model’s capacity of generalization from the temporal view.

One-to-others (O2O) Trajectory Generation.

In the O2O task, models are trained on the entire trajectories of some molecules and examined on other molecules from scratch. This evaluates model’s eligibility to generalize to conformations of different molecules, namely, the discrepancy with respect to different molecular types.

Experiment Setup

Evaluation metric.

We adopt the accumulative root-mean-square-error (ARMSE) of all snapshots at a given -step time period as the evaluation metric. ARMSE evaluates the generated conformations as: , which is roto-translational invariant.

Baselines.

We compare DiffMD with several state-of-the-art methods for the MD trajectory prediction. Specifically, Tensor Field Network (TFN) (Thomas et al. 2018) adopts filters built from spherical harmonics to achieve equivariance. Radial Field (RF) is a GNN drawn from Equivariant Flows (Köhler, Klein, and Noé 2019). SE(3)-Transformer (Fuchs et al. 2020) is a equivariant variant of the self-attention module for 3D point-clouds. EGNN (Satorras, Hoogeboom, and Welling 2021) learns GNNs equivariant to rotations, translations, reflections and permutations. GMN (Huang et al. 2022) resorts to generalized coordinates to impose geometrical constraints on graphs. SCFNN (Gao and Remsing 2022) is a self-consistent field NN for learning the long-range response of molecular systems. The full experimental details are elaborated in Appendix.

Short-term-to-long-term Trajectory Generation

Data.

MD17 (Chmiela et al. 2017) 111Both MD17 and datasets are available at http://quantum-machine.org/datasets/ contains trajectories of eight thermalized molecules, and all are calculated at a temperature of 500K and a resolution of 0.5 femtosecond (ft). We use the first 20K frame pairs as the training set and split the next 20K frame pairs equally into validation and test sets. Unfortunately, MD17 does not include velocities of particles, for which we use as a substitution, similarly to GMN.

Results.

Table 1 documents the performance of baselines and our DiffMD in S2L, where the best performance is marked bold and the second best is underlined for clear comparison. Note that floating overflow is encountered by RF (denoted as NA). For all eight organic molecules, DiffMD achieves the lowest ARMSEs. Moreover, different organic molecules perform in different manners during MD. Particularly, benzene moves most actively than other molecules, which leads to the highest prediction errors.

| Methods | Aspirin | Benzene | Ethanol | Malonaldehyde | Naphthalene | Salicylic | Toluene | Uracil |

|---|---|---|---|---|---|---|---|---|

| TFN | NA | NA | NA | NA | NA | NA | NA | NA |

| RF | 3.707 | 19.724 | 5.963 | 18.532 | 13.791 | 2.071 | 4.052 | 2.382 |

| SE(3)-Tr. | 0.813 | 2.415 | 0.678 | 1.183 | 1.834 | 1.230 | 1.312 | 0.691 |

| EGNN | 0.868 | 2.518 | 0.719 | 0.889 | 0.484 | 0.632 | 1.034 | 0.464 |

| GMN | 0.814 | 2.528 | 0.751 | 0.880 | 0.832 | 0.895 | 1.018 | 0.494 |

| SCFNN | 1.151 | 2.832 | 1.084 | 1.096 | 0.923 | 0.918 | 1.229 | 0.857 |

| DiffMD | 0.648 | 2.365 | 0.637 | 0.784 | 0.298 | 0.471 | 0.820 | 0.393 |

| Relative Impro. | 20.2% | 2.1% | 5.9% | 10.9% | 38.4% | 25.4% | 19.5% | 15.4% |

One-to-others Trajectory Generation

Data.

(Brockherde et al. 2017) is a dataset that consists of the trajectories of 113 randomly selected isomers, which are calculated at a temperature of 100K and resolution of 1 fs using density functional theory with the PBE exchange-correlation potential. We select the top-5 isomers that have the largest ARMSEs out of 113 samples, using as the prediction for all the subsequent timeframes, as the validation targets and take the rest as the training set. Same as the MD17 case, we compute the distance vector between neighboring frames as the velocities.

| Methods | ISO_1004 | ISO_2134 | ISO_2126 | ISO_3001 | ISO_1007 |

|---|---|---|---|---|---|

| TFN | 7.390 | 10.990 | 10.412 | 4.697 | 10.677 |

| RF | 4.772 | 4.364 | 21.576 | 9.077 | 11.049 |

| SE(3)-Tr. | 5.253 | 6.186 | 4.334 | 5.304 | 7.514 |

| EGNN | 1.142 | 0.578 | 0.928 | 1.017 | 1.035 |

| GMN | 1.205 | 0.363 | 0.998 | 1.053 | 1.154 |

| SCFNN | 1.781 | 1.693 | 1.785 | 2.842 | 2.264 |

| DiffMD | 1.127 | 0.278 | 0.919 | 0.837 | 0.878 |

| Relative Impro. | 1.2% | 23.4% | 9.7% | 9.8% | 15.1% |

Results.

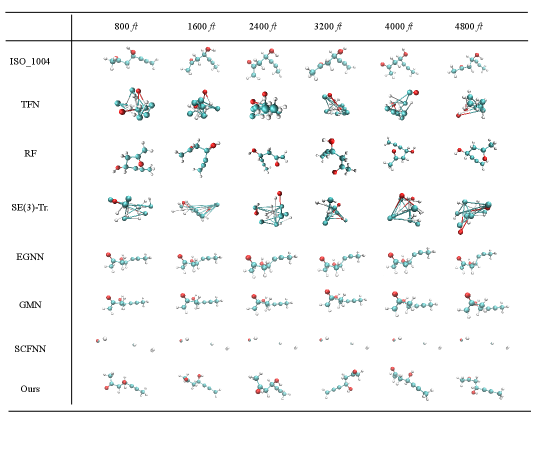

Table 2 reports ARMSE of baselines and our DiffMD on the five isomers from . DiffMD exceeds all baselines with a large margin for all target molecules. We plot snapshots at different timeframes in Appendix.

A closer inspection of the generated trajectories shows that several baselines have worse generation quality because their conformations are not geometrically and biologically constrained. On the contrary, generated conformations by models like EGNN and GMN are geometrically legal, but their variations are minute. Interestingly, we discover that conformations generated by SCFNN remain unchanged after a few timeframes, which indicates the network is stuck in a fixed point.

Related Work

Molecular Dynamics with Deep Learning

Recently, various DL models have become easy-to-use tools for fascinating MD with ab initio accuracy. Behler-Parrinello network (Behler and Parrinello 2007) is one of the first models to learn potential surfaces from MD data. After that, Deep-Potential net (Han et al. 2017) is further developed by extending to more advanced functions involving two neighbors. While DTNN (Schütt et al. 2017) and SchNet (Schütt et al. 2018) achieve highly competitive prediction performance across the chemical compound space and the configuration space in order to simulate MD (Noé et al. 2020). However, they still follow the routine of multi-stage simulations and rely on forces or energy as the prediction target. Huang et al. (2022) proposes an end-to-end GMN to characterize constrained systems of interacting objects, where molecules are defined as a set of rigidly connected particles with sticks and hinges. Also, their experiments fail to be realistic and the constraint strongly violates the nature of MD, since no distance between any pair of atoms are fixed.

Conformation Generation.

Researchers are increasingly interested in conformation generation. Some works start from 2D molecular graphs to gain their 3D structures via bi-level programming (Xu et al. 2021b) and continuous flows (Xu et al. 2021a). Some others concentrate on the inverse design to create the conformations of drug-like molecules or crystals with desired properties (Noh et al. 2019). Recently, Gao and Remsing (2022) propose an SCFNN that perturbs positions of the Wannier function centers induced by external electric fields. Latterly, diffusion models become a favored choice in conformation generation (Shi et al. 2021). Xu et al. (2022) introduce a GeoDiff by progressively injecting and eliminating small noises. However, its perturbations evolve over discrete times. A better approach would be to express dynamics as a set of differential equations since time is actually continuous. Furthermore, these studies leverage diffusion models in recovering conformations from molecular graphs instead of generating sequential conformations. We fill in the gap by applying them to yield MD trajectories.

Conclusion

We propose DiffMD, a novel principle to sequentially generate molecular conformations in MD simulations. DiffMD marries denoising diffusion models with an equivariant geometric Transformer, which enables self-attention to leverage directional information in addition to the interatomic distances. Extensive experiments over multiple tasks demonstrate that DiffMD is superior to existing state-of-the-art models. This research may shed light on the acceleration of new drugs and material discovery.

References

- Allen and Tildesley (2012) Allen, M. P.; and Tildesley, D. J. 2012. Computer simulation in chemical physics, volume 397. Springer Science & Business Media.

- Anderson (1982) Anderson, B. D. 1982. Reverse-time diffusion equation models. Stochastic Processes and their Applications, 12(3): 313–326.

- Behler and Parrinello (2007) Behler, J.; and Parrinello, M. 2007. Generalized neural-network representation of high-dimensional potential-energy surfaces. Physical review letters, 98(14): 146401.

- Brock, Donahue, and Simonyan (2018) Brock, A.; Donahue, J.; and Simonyan, K. 2018. Large scale GAN training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096.

- Brockherde et al. (2017) Brockherde, F.; Vogt, L.; Li, L.; Tuckerman, M. E.; Burke, K.; and Müller, K.-R. 2017. Bypassing the Kohn-Sham equations with machine learning. Nature communications, 8(1): 1–10.

- Case et al. (2005) Case, D. A.; Cheatham III, T. E.; Darden, T.; Gohlke, H.; Luo, R.; Merz Jr, K. M.; Onufriev, A.; Simmerling, C.; Wang, B.; and Woods, R. J. 2005. The Amber biomolecular simulation programs. Journal of computational chemistry, 26(16): 1668–1688.

- Chmiela et al. (2017) Chmiela, S.; Tkatchenko, A.; Sauceda, H. E.; Poltavsky, I.; Schütt, K. T.; and Müller, K.-R. 2017. Machine learning of accurate energy-conserving molecular force fields. Science advances, 3(5): e1603015.

- Chodera and Noé (2014) Chodera, J. D.; and Noé, F. 2014. Markov state models of biomolecular conformational dynamics. Current opinion in structural biology, 25: 135–144.

- Cranmer et al. (2020) Cranmer, M.; Greydanus, S.; Hoyer, S.; Battaglia, P.; Spergel, D.; and Ho, S. 2020. Lagrangian neural networks. arXiv preprint arXiv:2003.04630.

- De Vivo et al. (2016) De Vivo, M.; Masetti, M.; Bottegoni, G.; and Cavalli, A. 2016. Role of molecular dynamics and related methods in drug discovery. Journal of medicinal chemistry, 59(9): 4035–4061.

- Dror et al. (2011) Dror, R. O.; Arlow, D. H.; Maragakis, P.; Mildorf, T. J.; Pan, A. C.; Xu, H.; Borhani, D. W.; and Shaw, D. E. 2011. Activation mechanism of the 2-adrenergic receptor. Proceedings of the National Academy of Sciences, 108(46): 18684–18689.

- Frenkel and Smit (2001) Frenkel, D.; and Smit, B. 2001. Understanding molecular simulation: from algorithms to applications, volume 1. Elsevier.

- Fuchs et al. (2020) Fuchs, F.; Worrall, D.; Fischer, V.; and Welling, M. 2020. Se (3)-transformers: 3d roto-translation equivariant attention networks. Advances in Neural Information Processing Systems, 33: 1970–1981.

- Gao and Remsing (2022) Gao, A.; and Remsing, R. C. 2022. Self-consistent determination of long-range electrostatics in neural network potentials. Nature Communications, 13(1): 1–11.

- Gilmer et al. (2017) Gilmer, J.; Schoenholz, S. S.; Riley, P. F.; Vinyals, O.; and Dahl, G. E. 2017. Neural message passing for quantum chemistry. In International conference on machine learning, 1263–1272. PMLR.

- Han et al. (2017) Han, J.; Zhang, L.; Car, R.; et al. 2017. Deep potential: A general representation of a many-body potential energy surface. arXiv preprint arXiv:1707.01478.

- Ho, Jain, and Abbeel (2020) Ho, J.; Jain, A.; and Abbeel, P. 2020. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33: 6840–6851.

- Huang et al. (2022) Huang, W.; Han, J.; Rong, Y.; Xu, T.; Sun, F.; and Huang, J. 2022. Equivariant Graph Mechanics Networks with Constraints. In International Conference on Learning Representations.

- Jia et al. (2020) Jia, W.; Wang, H.; Chen, M.; Lu, D.; Lin, L.; Car, R.; Weinan, E.; and Zhang, L. 2020. Pushing the limit of molecular dynamics with ab initio accuracy to 100 million atoms with machine learning. In SC20: International conference for high performance computing, networking, storage and analysis, 1–14. IEEE.

- Kingma and Ba (2014) Kingma, D. P.; and Ba, J. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Klicpera, Becker, and Günnemann (2021) Klicpera, J.; Becker, F.; and Günnemann, S. 2021. Gemnet: Universal directional graph neural networks for molecules. Advances in Neural Information Processing Systems, 34.

- Klicpera, Groß, and Günnemann (2020) Klicpera, J.; Groß, J.; and Günnemann, S. 2020. Directional message passing for molecular graphs. arXiv preprint arXiv:2003.03123.

- Köhler, Klein, and Noé (2019) Köhler, J.; Klein, L.; and Noé, F. 2019. Equivariant flows: sampling configurations for multi-body systems with symmetric energies. arXiv preprint arXiv:1910.00753.

- Köhler, Klein, and Noé (2020) Köhler, J.; Klein, L.; and Noé, F. 2020. Equivariant flows: exact likelihood generative learning for symmetric densities. In International Conference on Machine Learning, 5361–5370. PMLR.

- Lambert (1991) Lambert, J. D. 1991. Numerical methods for ordinary differential systems: the initial value problem. John Wiley & Sons, Inc.

- Liao (2020) Liao, Q. 2020. Enhanced sampling and free energy calculations for protein simulations. In Progress in molecular biology and translational science, volume 170, 177–213. Elsevier.

- Lindorff-Larsen et al. (2011) Lindorff-Larsen, K.; Piana, S.; Dror, R. O.; and Shaw, D. E. 2011. How fast-folding proteins fold. Science, 334(6055): 517–520.

- Luo et al. (2020) Luo, R.; Zhang, Q.; Yang, Y.; and Wang, J. 2020. Replica-exchange Nos’e-Hoover dynamics for Bayesian learning on large datasets. Advances in Neural Information Processing Systems, 33: 17874–17885.

- Malmstrom et al. (2014) Malmstrom, R. D.; Lee, C. T.; Van Wart, A. T.; and Amaro, R. E. 2014. Application of molecular-dynamics based markov state models to functional proteins. Journal of chemical theory and computation, 10(7): 2648–2657.

- Martys and Mountain (1999) Martys, N. S.; and Mountain, R. D. 1999. Velocity Verlet algorithm for dissipative-particle-dynamics-based models of suspensions. Physical Review E, 59(3): 3733.

- Miao, Bhattarai, and Wang (2020) Miao, Y.; Bhattarai, A.; and Wang, J. 2020. Ligand Gaussian accelerated molecular dynamics (LiGaMD): Characterization of ligand binding thermodynamics and kinetics. Journal of chemical theory and computation, 16(9): 5526–5547.

- Miao, Feher, and McCammon (2015) Miao, Y.; Feher, V. A.; and McCammon, J. A. 2015. Gaussian accelerated molecular dynamics: unconstrained enhanced sampling and free energy calculation. Journal of chemical theory and computation, 11(8): 3584–3595.

- Miao et al. (2015) Miao, Y.; Feixas, F.; Eun, C.; and McCammon, J. A. 2015. Accelerated molecular dynamics simulations of protein folding. Journal of computational chemistry, 36(20): 1536–1549.

- Miao and McCammon (2016) Miao, Y.; and McCammon, J. A. 2016. Graded activation and free energy landscapes of a muscarinic G-protein–coupled receptor. Proceedings of the National Academy of Sciences, 113(43): 12162–12167.

- Miao and McCammon (2017) Miao, Y.; and McCammon, J. A. 2017. Gaussian accelerated molecular dynamics: Theory, implementation, and applications. In Annual reports in computational chemistry, volume 13, 231–278. Elsevier.

- Noé et al. (2020) Noé, F.; Tkatchenko, A.; Müller, K.-R.; and Clementi, C. 2020. Machine learning for molecular simulation. Annual review of physical chemistry, 71: 361–390.

- Noh et al. (2019) Noh, J.; Kim, J.; Stein, H. S.; Sanchez-Lengeling, B.; Gregoire, J. M.; Aspuru-Guzik, A.; and Jung, Y. 2019. Inverse design of solid-state materials via a continuous representation. Matter, 1(5): 1370–1384.

- Pang et al. (2020) Pang, T.; Xu, K.; Li, C.; Song, Y.; Ermon, S.; and Zhu, J. 2020. Efficient learning of generative models via finite-difference score matching. Advances in Neural Information Processing Systems, 33: 19175–19188.

- Pang et al. (2017) Pang, Y. T.; Miao, Y.; Wang, Y.; and McCammon, J. A. 2017. Gaussian accelerated molecular dynamics in NAMD. Journal of chemical theory and computation, 13(1): 9–19.

- Park et al. (2021) Park, C. W.; Kornbluth, M.; Vandermause, J.; Wolverton, C.; Kozinsky, B.; and Mailoa, J. P. 2021. Accurate and scalable graph neural network force field and molecular dynamics with direct force architecture. npj Computational Materials, 7(1): 1–9.

- Paszke et al. (2019) Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. 2019. Pytorch: An imperative style, high-performance deep learning library. Advances in neural information processing systems, 32.

- Rocchia, Masetti, and Cavalli (2012) Rocchia, W.; Masetti, M.; and Cavalli, A. 2012. Enhanced sampling methods in drug design. Physico-Chemical and Computational Approaches to Drug Discovery. The Royal Society of Chemistry, 273–301.

- Särkkä and Solin (2019) Särkkä, S.; and Solin, A. 2019. Applied stochastic differential equations, volume 10. Cambridge University Press.

- Satorras, Hoogeboom, and Welling (2021) Satorras, V. G.; Hoogeboom, E.; and Welling, M. 2021. E (n) equivariant graph neural networks. In International Conference on Machine Learning, 9323–9332. PMLR.

- Schlick (2010) Schlick, T. 2010. Molecular modeling and simulation: an interdisciplinary guide, volume 2. Springer.

- Schütt et al. (2017) Schütt, K. T.; Arbabzadah, F.; Chmiela, S.; Müller, K. R.; and Tkatchenko, A. 2017. Quantum-chemical insights from deep tensor neural networks. Nature communications, 8(1): 1–8.

- Schütt et al. (2018) Schütt, K. T.; Sauceda, H. E.; Kindermans, P.-J.; Tkatchenko, A.; and Müller, K.-R. 2018. Schnet–a deep learning architecture for molecules and materials. The Journal of Chemical Physics, 148(24): 241722.

- Schwantes, McGibbon, and Pande (2014) Schwantes, C. R.; McGibbon, R. T.; and Pande, V. S. 2014. Perspective: Markov models for long-timescale biomolecular dynamics. The Journal of chemical physics, 141(9): 09B201_1.

- Shan et al. (2011) Shan, Y.; Kim, E. T.; Eastwood, M. P.; Dror, R. O.; Seeliger, M. A.; and Shaw, D. E. 2011. How does a drug molecule find its target binding site? Journal of the American Chemical Society, 133(24): 9181–9183.

- Shi et al. (2021) Shi, C.; Luo, S.; Xu, M.; and Tang, J. 2021. Learning gradient fields for molecular conformation generation. In International Conference on Machine Learning, 9558–9568. PMLR.

- Sohl-Dickstein et al. (2015) Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; and Ganguli, S. 2015. Deep unsupervised learning using nonequilibrium thermodynamics. In International Conference on Machine Learning, 2256–2265. PMLR.

- Song and Ermon (2019) Song, Y.; and Ermon, S. 2019. Generative modeling by estimating gradients of the data distribution. Advances in Neural Information Processing Systems, 32.

- Song et al. (2020a) Song, Y.; Garg, S.; Shi, J.; and Ermon, S. 2020a. Sliced score matching: A scalable approach to density and score estimation. In Uncertainty in Artificial Intelligence, 574–584. PMLR.

- Song et al. (2020b) Song, Y.; Sohl-Dickstein, J.; Kingma, D. P.; Kumar, A.; Ermon, S.; and Poole, B. 2020b. Score-based generative modeling through stochastic differential equations. arXiv preprint arXiv:2011.13456.

- Suomivuori et al. (2017) Suomivuori, C.-M.; Gamiz-Hernandez, A. P.; Sundholm, D.; and Kaila, V. R. 2017. Energetics and dynamics of a light-driven sodium-pumping rhodopsin. Proceedings of the National Academy of Sciences, 114(27): 7043–7048.

- Thomas et al. (2018) Thomas, N.; Smidt, T.; Kearnes, S.; Yang, L.; Li, L.; Kohlhoff, K.; and Riley, P. 2018. Tensor field networks: Rotation-and translation-equivariant neural networks for 3d point clouds. arXiv preprint arXiv:1802.08219.

- Tuckerman and Martyna (2000) Tuckerman, M. E.; and Martyna, G. J. 2000. Understanding modern molecular dynamics: Techniques and applications.

- Vaswani et al. (2017) Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A. N.; Kaiser, Ł.; and Polosukhin, I. 2017. Attention is all you need. Advances in neural information processing systems, 30.

- Wang et al. (2021) Wang, J.; Arantes, P. R.; Bhattarai, A.; Hsu, R. V.; Pawnikar, S.; Huang, Y.-m. M.; Palermo, G.; and Miao, Y. 2021. Gaussian accelerated molecular dynamics: Principles and applications. Wiley Interdisciplinary Reviews: Computational Molecular Science, 11(5): e1521.

- Wu et al. (2021) Wu, F.; Zhang, Q.; Radev, D.; Cui, J.; Zhang, W.; Xing, H.; Zhang, N.; and Chen, H. 2021. 3D-Transformer: Molecular Representation with Transformer in 3D Space. arXiv preprint arXiv:2110.01191.

- Xu et al. (2021a) Xu, M.; Luo, S.; Bengio, Y.; Peng, J.; and Tang, J. 2021a. Learning neural generative dynamics for molecular conformation generation. arXiv preprint arXiv:2102.10240.

- Xu et al. (2021b) Xu, M.; Wang, W.; Luo, S.; Shi, C.; Bengio, Y.; Gomez-Bombarelli, R.; and Tang, J. 2021b. An end-to-end framework for molecular conformation generation via bilevel programming. In International Conference on Machine Learning, 11537–11547. PMLR.

- Xu et al. (2022) Xu, M.; Yu, L.; Song, Y.; Shi, C.; Ermon, S.; and Tang, J. 2022. GeoDiff: a Geometric Diffusion Model for Molecular Conformation Generation. arXiv preprint arXiv:2203.02923.

- Zhang et al. (2018) Zhang, L.; Han, J.; Wang, H.; Car, R.; and Weinan, E. 2018. Deep potential molecular dynamics: a scalable model with the accuracy of quantum mechanics. Physical review letters, 120(14): 143001.

Appendix A Molecular Dynamics Simulations

Force Field for MD.

The following equation defines the classic force field for MD simulations as:

| (19) |

where , , and denote the set of all bonds, angles, and torsions. , , and correspond to the bond lengths, angles, and dihedral angles, respectively. and are reference values. and are force constants. is a parameter describing the multiplicity for the -th term of the series. is the corresponding phase angle. is the energy barrier. The torsion terms are defined by a cosine series of terms for each dihedral angle . is the distance between considered atoms. defines the depth of the energy well. is the minimum distance that equals the sum of the van der Waals radii of the two interaction atoms. and are the partial changes of a pair of atoms. stands for the permittivity of free space, and is the relative permittivity, which takes a value of one in vacuum.

The first three terms in Eq. 19 represent intramolecular interactions of the atoms. They depict variations in potential energy as a function of bond stretching, bending, and torsions between atoms directly involved in bonding relationships. They are represented as the summations over bond lengths (), angles (), and dihedral angles (), respectively. The last two terms stand for van der Waals interactions, modeled using the Lennard-Jones potential, and eletrostatic interactions, modeled using Coulomb’s law. These atomic forces are denoted as non-bonded items because they are caused by interactions between atoms that are not bonded.

Integrator.

Then numerical methods are utilized to advance the trajectory over small time increments with the assistance of some integrator. An integrator advances the trajectory over small time increments as:

| (20) |

where is usually taken around picoseconds (ps) to ps. Various strategies have been invented to iteratively update atomic positions including the central difference (Verlet, leap-frog, velocity Verlet, Beeman algorithm) (Frenkel and Smit 2001; Allen and Tildesley 2012), the predictor-corrector (Lambert 1991), and the symplectic integrators (Tuckerman and Martyna 2000; Schlick 2010).

MD trajectory iterations.

With sampled forces, we can now advance to update the velocities of each atom and compute their coordinates of the next timeframe. As an example of an integrator, the velocity-Verlet (Martys and Mountain 1999) in Eq. 21, is a simple and widely used algorithm in MD software such as AMBER (Case et al. 2005). The coordinates and velocities are updated simultaneously as:

| (21) | ||||

| (22) |

Gaussian accelerated MD

GaMD (Miao and McCammon 2017; Pang et al. 2017) is a robust computational method for simultaneous unconstrained enhanced sampling and free energy calculations of biomolecules, which greatly accelerates MD by orders of magnitude (Wang et al. 2021). It works by adding a harmonic boost potential to reduce energy barriers. When the system potential is lower than a threshold energy , a boost potential is injected as:

| (23) |

where is the harmonic force constant. The modified system potential is given by . Otherwise, when the system potential is above the threshold energy, the boost potential is set to zero.

Remarkably, the boost potential exhibits a Gaussian distribution, allowing for accurate reweighting of the simulations using cumulant expansion to the second order and recovery of the original free energy landscapes even for large biomolecules (Miao and McCammon 2016; Pang et al. 2017).

Moreover, three enhanced sampling principles are imposed to the boost potential in GaMD. Among them, for the sake of ensuring accurate reweighting (Miao et al. 2015), the standard deviation of ought to be adequately small, i.e., narrow distribution:

| (24) |

where and are the average and standard deviation of the system potential energies, is the standard deviation of with as a user-specific upper limit (e.g. 10T) for accurate reweighting (Miao, Bhattarai, and Wang 2020). This also indicates that our choice of ought to be small enough with some upper bound.

Appendix B Equivariance Proof for EGT

In this part, we provide a strict proof that EGT achieves E() equivariance on . More formally, for any orthogonal matrix and any translation matrix , the model should satisfy:

| (25) |

As assumed in the preliminary, is invariant to E() transformation. In other words, we do not encode any information about the absolute position or orientation of into . Moreover, the spherical representations and will be invariant too. This is because the distance between two particles and the three angles are invariant to translation as

| (26) |

and they are invariant to rotations as

| (27) |

As for angles, the intersection angles take the form of

| (28) | ||||

| (29) |

Similarly,

| (30) | ||||

| (31) |

while , , and are invariant as well. Thus, intersection angles are invariant. Suppose and are two normal vectors to the two planes, and the dihedral angle between these planes is defined as . The invariance of the dihedral angle can be proven in the same way as the intersection angles. Finally, it leads to the result that all query, key, value vectors, attention scores, and feature embeddings become invariant.

Now we aim to show:

| (32) |

The right hand of this equation can be written as:

| (33) | ||||

| (34) |

Obviously, it is easy to show that , and we omit this derivation.

Appendix C Experiment

Implementation Details

In this subsection, we introduce details of hyper-parameters used in our experiments.

Training details.

All models are implemented in Pytorch (Paszke et al. 2019), and are optimized with an Adam (Kingma and Ba 2014) optimizer with the initial learning rate of 5e-4 and weight decay of 1e-10 on a single A100 GPU. A ReduceLROnPlateau scheduler is utilized to adjust the learning rate according to the training ARMSE with a lower bound of 1e-7 and a patience of 5 epochs. The random seed is 1. The hidden dimension is 64. All baseline models are evaluated with 4 layers. For RF, TFN, EGNN, GMN, SCFNN, and DiffMD, the batch size is 200. The batch size is reduced to 100 for SE(3)-Transformer due to the memory explosion error. We train models for 200 epochs and test their performance each 5 epochs. Similar to (Shi et al. 2021; Huang et al. 2022), for graph-based baselines, we augment the original molecular graphs with 2-hop neighbors, and concatenate the hop index with atom number of the connected atoms as well as the edge type indicator as the edge feature. For GMN, we use the configurations in the paper that bonds without commonly-connected atoms are selected as sticks, and the rest of atoms as isolated particles. Notably, unlike Huang et al. (2022), we keep all atoms including the hydrogen atoms for a more accurate description of the microscopic system.

DiffMD architecture.

The score function, EGT, has 6 EGLs, and each layer has 8 attention heads. We adopt ReLU as the activation function and a dropout rate of 0.1. The input embedding size is 128 and the hidden size for the feed-forward network (FFN) is 2048. The numbers of the degree, root, and order of the radial basis are all 2. The interaction cutoff is 1.6 Å. We use the 2-norm of velocity concatenated with the atom number as the node feature, which is invariant to geometric transformations. As for the generative process, we exploit the ODE sampler instead of the predictor-corrector sampler because the former is much faster than the latter. The tolerances of the absolute error and the relative error are both 1e-5. We tune several key hyper-parameters via grid search based on the validation dataset (see Table 3).

| Name | Description | Range |

|---|---|---|

| The standard derivation when the acceleration is above the threshold. | [1e-3, 1e-2, 1e-1, 1] | |

| The acceleration threshold. | [1e-2, 1e-1, 1, 2, 5, 10] | |

| The harmonic acceleration constant. | [1e-2, 1e-1, 1, 10] | |

| The smallest time step for numerical stability in ODE sampler. | [1e-1, 0.2, 0.4, 0.8, 0.9, 0.99] |

Additional Results

Generated MD Trajectory Samples

We present visualizations of samples in the generated trajectories from different approaches in Fig 4. For each method, we sample every 800 fts. The first row is the ground truth trajectory. It can be seen that even if EGNN, GMN, DiffMD achieve low ARMSEs, their variance between different frames is tiny. Particularly, SCFNN is caught by a fixed point and its prediction keeps unchanged. That is, its output is the same as its input. On the other hand, other models including TFN, RF, and SE(3)-Transformer adjust the conformations dramatically, and the generated conformations are mostly illegal. In other words, they produce molecular structures that break the underlying law of biological geometry.