22institutetext: Key Lab of Intelligent Information Processing of Chinese Academy of Sciences (CAS), Institute of Computing Technology, CAS, Beijing, 100190, China

DiffULD: Diffusive Universal Lesion Detection

Abstract

Universal Lesion Detection (ULD) in computed tomography (CT) plays an essential role in computer-aided diagnosis. Promising ULD results have been reported by anchor-based detection designs, but they have inherent drawbacks due to the use of anchors: i) Insufficient training target and ii) Difficulties in anchor design. Diffusion probability models (DPM) have demonstrated outstanding capabilities in many vision tasks. Many DPM-based approaches achieve great success in natural image object detection without using anchors. But they are still ineffective for ULD due to the insufficient training targets. In this paper, we propose a novel ULD method, DiffULD, which utilizes DPM for lesion detection. To tackle the negative effect triggered by insufficient targets, we introduce a novel center-aligned bounding box padding strategy that provides additional high-quality training targets yet avoids significant performance deterioration. DiffULD is inherently advanced in locating lesions with diverse sizes and shapes since it can predict with arbitrary boxes. Experiments on the benchmark dataset DeepLesion[1] show the superiority of DiffULD when compared to state-of-the-art ULD approaches.

Keywords:

Universal Lesion Detection Diffusion Model.1 Introduction

Universal Lesion Detection (ULD) in computed tomography (CT)[2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18] plays an important role in computer-aided diagnosis (CAD)[19, 20]. The design of detection-only instead of identifying the lesion types in ULD[21, 22, 23, 24, 25, 26, 27, 28] prominently decreases the difficulty of this task for a specific organ (e.g., lung, liver), but it is still challenging for lesions vary in shapes and sizes among whole human body.

Previous arts in ULD are mainly motivated by the anchor-based detection framework, e.g., Faster-RCNN [29]. These studies focus on adapting the detection backbone to universally locate lesions in CT scans. For instance, Li et al. [8] propose the so-called MVP-Net, a multi-view FPN with a position-aware attention mechanism to assist ULD training. Yang et al. [30, 10, 16] propose a series of 3D feature fusion operators to incorporate context information from several adjacent CT slices for better performance. Li et al. [31] introduce a plug-and-play transformer block to form hybrid backbones which can better model long-distance feature dependency.

While achieving success, these anchor-based methods have inherent drawbacks: (i) Insufficient training target.

In stage-1, anchor-based methods identify the positive (lesion) anchors and label them as the region of interest (RoI) based on the IoU between anchors and ground-truth (GT) bounding boxes (BBoxes). An anchor is considered positive if its IoU with any GT BBox is greater than the IoU threshold and negative otherwise[29]. The positive anchors are sufficient in natural images as they usually have many targets per image [12]. However, the number of lesions per CT scan is limited, most CT slices only contain one or two lesions (i.e., detection targets in ULD) per CT slice [17]. Still applying the IoU-based anchor matching mechanism with such limited targets can lead to severe data imbalance and further hinders network convergence. Simply lowering the positive IoU threshold in the anchor-selecting mechanism can alleviate the shortage of positive anchors to some degree, but it leads to a higher false positive (FP) rate by labeling more low-IoU anchors as positive. (ii) Difficulties in anchor design. In anchor-based methods, the size, ratio and number of anchors are pre-defined hyper-parameters that significantly influence the detection performance[32]. Thus a proper design of anchor hyper-parameters is of great importance. However, tuning anchor hyper-parameters is a challenging task in ULD because of the variety of lesions (target) with diverse diameters (from 0.21 to 342.5mm). Even with a careful design, the fixed rectangle anchor boxes can be a kind of obstruction in capturing heterogeneous lesions, which have irregular shapes and vary in size.

To get rid of the drawbacks caused by the anchor mechanism, researchers resort to anchor-free detection, e.g., FCOS[33] and DETR[34]. But these methods experience difficulties in achieving state-of-the-art results in ULD, as they lack the initialization of position prior provided by anchors.

Recently, the diffusion probabilistic model (DPM) [35, 36, 37, 38, 39] has demonstrated its outstanding capabilities in various vision tasks. Chen et. al. follow the key idea of DPM and propose a noise-to-box pipeline, DiffusionDet[40], for natural image object detection. They achieved success on natural images with sufficient training targets, but still experience difficulties in dealing with tasks with insufficient training targets like ULD. This is because the DPM’s denoising is a dense distribution-to-distribution forecasting procedure that heavily relied on a large number of high-quality training targets to learn targets’ distribution accurately.

To address this issue, we hereby introduce a novel center-aligned BBox padding strategy in DPM detection to form a diffusion-based detector for Universal Lesion Detection, termed DiffULD.

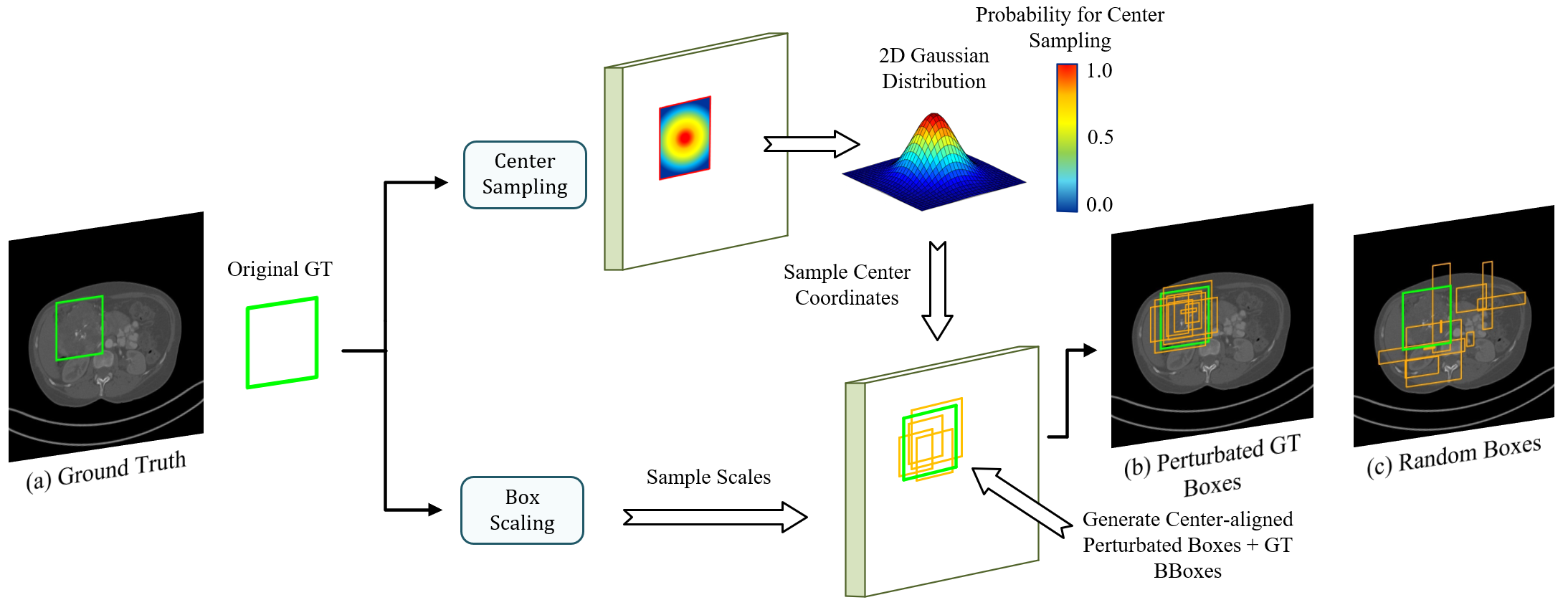

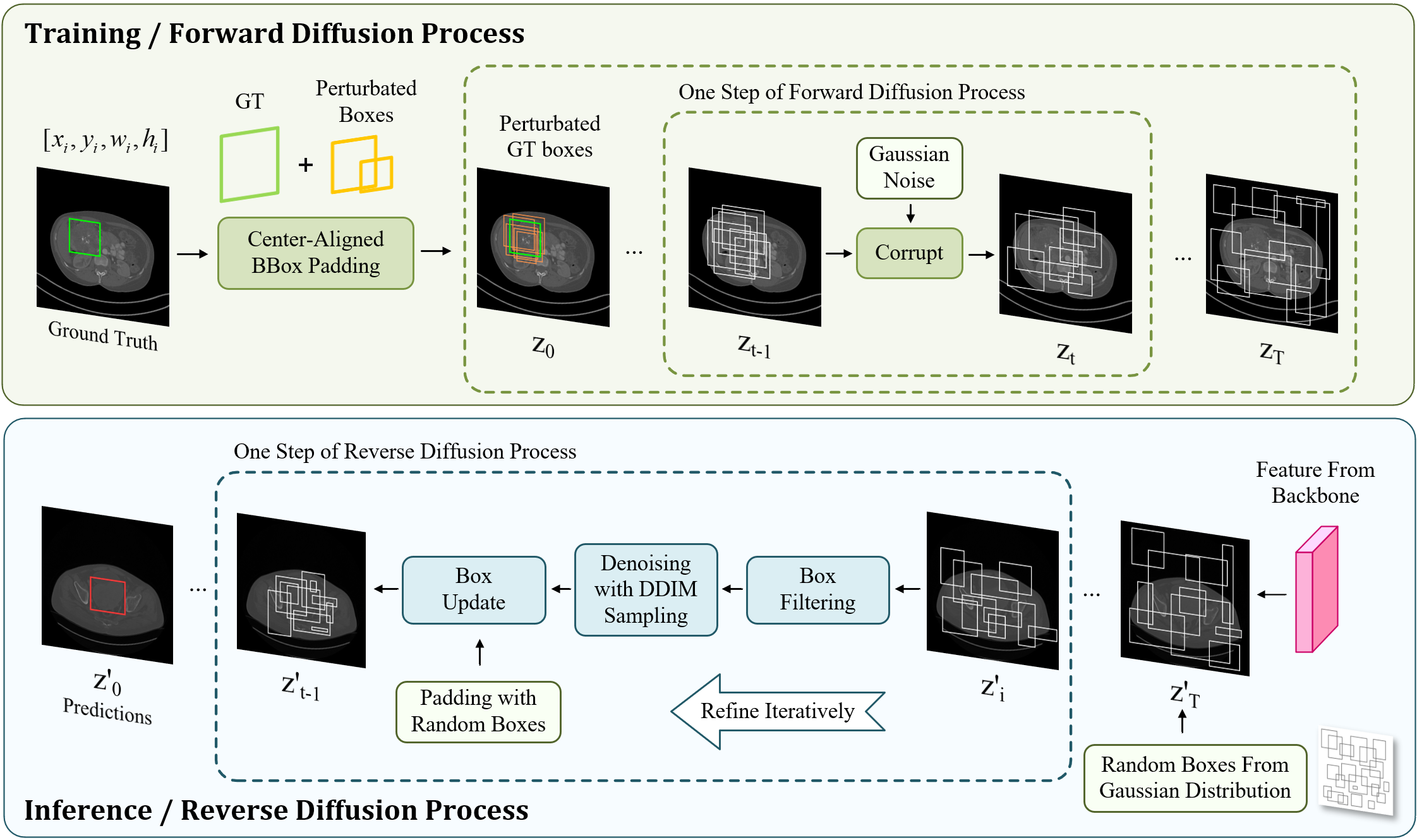

As shown in Fig. 1, DiffULD also formulates lesion detection as a denoising diffusion process from noisy boxes to prediction boxes similar to [40], but we further introduce the center-aligned BBox padding before DiffULD’s forward diffusion process to generate perturbated GT. Specifically, we add random perturbations to both scales and center coordinates of the original GT BBox, resulting in perturbated boxes whose centers remain aligned with the corresponding original GT BBox. Next, original GT boxes paired with these perturbated boxes are called perturbated GT boxes for simplicity. Finally, we feed the perturbated GT boxes to the model as the training objective during training. Compared with other training target padding methods (e.g., padding with random boxes), our strategy can provide additional targets of higher quality, i.e., center aligned with the original GT BBox. This approach effectively expands the insufficient training targets on CT scans, enhancing DPM’s detection performance and avoiding deterioration triggered by adding random targets.

The following DPM training procedure contains two diffusion processes. i) In the forward training process, DiffULD corrupts the perturbated GT with Gaussian noise gradually to generate noisy boxes step by step. Then the model is trained to remove the noise and reconstruct the original perturbated GT boxes. ii) In the reverse inference process, the trained DiffULD can refine a set of randomly generated boxes iteratively to obtain the final detect predictions.

Our method gets rid of the drawbacks of pre-defined anchors and the deterioration of training DPM with insufficient GT targets. Besides, DiffULD is inherently advanced in locating targets with diverse sizes since it can predict with arbitrary boxes, which is an advantageous feature for detecting lesions of irregular shapes and various sizes. To validate the effectiveness of our method, we conduct experiments against seven state-of-the-art ULD methods on the public dataset DeepLesion[1]. The results demonstrate that our method achieves competitive performance compared to state-of-the-art ULD approaches.

2 Method

In this section, we first formulate our overall detection process for DiffULD and then specify the training manner, inference process and backbone design.

2.1 Diffusion-based detector for lesion detection

Universal Lesion Detection can be formulated as locating lesions in input CT scan with a set of boxes predictions . For a particular box , it can be denoted as , where and are the coordinates of the top-left and bottom-right corners, respectively.

We design our model based on a diffusion model mentioned in [40]. As shown in Fig. 2, our method consists of two stages, a forward diffusion (or training) process and a reverse refinement (or inference) process. In the forward process, We denote GT bounding boxes as and generate corrupted training samples for latter DiffULD training by adding Gaussian noise iteratively, which can be defined as:

| (1) |

where represents the noise variance schedule and . Subsequently, a neural network conditioned on the corresponding CT scan is trained to predict from a noisy box by reversing the noising process step by step. During inference, for an input CT scan with a set of random boxes, the model is able to refine the random boxes to get a lesion detection prediction box , iteratively.

2.2 Training

In this section, we specify the training process with our novelty introduced ‘Center-aligned BBox padding’.

Center-aligned BBox padding. As shown in Fig. 1, we utilize Center-aligned BBox padding to generate perturbated boxes. Then, the perturbated boxes are paired with original GT BBoxes, forming perturbated GT boxes which are used to generate corrupted training samples for the latter DiffULD training by adding Gaussian noise iteratively.

For clarity, we denote as center coordinates of original GT BBox, where , are the width and height of .

We consider the generation in two parts: box scaling and center sampling. (i) Box scaling: We set a hyper-parameter for scaling. For , The width and height of the corresponding perturbated boxes are randomly sampled in uniform distributions on and . (ii) Center sampling: We sample the center coordinates of perturbated boxes from a 2D Gaussian distribution whose probability density function can be denoted as:

| (2) |

where is a size-adaptive parameter, which can be calculated according to the ’s width and height:

| (3) |

Besides, for each input CT scan , we collect all GT BBoxes in and add random perturbations to them and generate multiple perturbated boxes for each of them. Thus the number of perturbated boxes in an image varies with its number of GT BBoxes. For better training, we fix the number of perturbated boxes as for all training images.

As shown in Fig 1., the perturbated boxes cluster together and their centers are still aligned with the corresponding original GT BBox. Subsequently, perturbated GT boxes are sent for corruption as the training objective.

Box corruption. As shown in Fig. 1, we corrupt the parameters of with Gaussian noises. The noise scale is controlled by (in Eq. 1), which adopts the decreasing cosine scheduler in the different time step .

Loss function. As the model generates the same number of () predictions for the input image, termed as a prediction set, the loss function should be set-wised [34]. Specifically, each GT is matched with the prediction by the least matching cost, and the overall training loss[34] can be represented as:

| (4) |

where and are the pairwise box loss. We adopt and .

2.3 Inference

At the inference stage, with a set of random boxes sampled from Gaussian distribution, the model does refinement step by step to obtain the final predictions . For better performance, two key components are used:

Box filtering. In each refinement step, the model receives a set of box proposals from the last step. As the prediction starts from arbitrary boxes and the lack of GT (lesion), most of these proposals are very far from lesions. Keeping refining them in the following steps will hinder network training. Toward efficient detection, we send the proposals to the detection head and remove the boxes whose confidential scores are lower than a particular threshold . The remaining high-quality proposals are sent for followed DDIM sampling.

Box update with DDIM sampling. DDIM[41] is utilized to further refine the received box proposals by denoising. Next, these refined boxes are sent to the next step and start a new round of refinement. After multiple steps, final predictions are obtained.

However, we observe that if we just filter out boxes with low scores during iterative refinement, the model runs out of usable box proposals rapidly, which also leads to a deterioration in performance. Therefore, after the box updating, we add new boxes sampled from a Gaussian Distribution to the set of remaining boxes with. The number of box proposals per image is padded to the fixed number before they are sent to the next refinement step.

2.4 Backbone Design

Multi-window input. Most prior arts in ULD use a single and fixed window (e.g., a wide window of [1024, 4096]) to render the input CT scan, which suppresses organ-specific information and makes it hard for the network to focus on the various lesions. Therefore, taking cues from [8], we introduce 3 organ-specific HU windows to highlight multiple organs of interest. Their window widths and window levels are: for chest organs, for soft tissues and for abdomenal organs. Multi-window features are extracted with a ConvNeXt-T shared network.

3D context feature fusion. We modify the original A3D[16] DenseNet backbone for context fusion. We remove the first Conv3D Block and use the truncated network as our 3D context fusion module, which fuses the multi-view features from the last module. Multi-window features are fused with this module and sent to the detector subsequently for lesion detection.

3 Experiments

3.1 Settings

Our experiments are conducted on the standard ULD dataset DeepLesion[1]. The dataset contains 32,735 lesions on 32,120 axial slices from 10,594 CT studies of 4,427 unique patients. We use the official data split of DeepLesion which consists of 70%, 15%, 15% for training, validation, and test, respectively. Besides, we also evaluate the performance of 3 methods based on a revised test set from .

Training details. DiffULD is trained on CT scans of size 512 × 512 with a batch size of 4 on 4 NVIDIA RTX Titan GPUs with 24GB memory. For hyper-parameters, the threshold for box padding is set to . for box scaling is set to 0.4. for box filtering is set to 0.5. We use the Adam optimizer with an initial learning rate of and the weight decay as . The default training schedule is 120K iterations, with the learning rate divided by 10 at 60K and 100K iterations. Data augmentation strategies contain random horizontal flipping, rotation, and random brightness adjustment.

Evaluation metrics. The lesion detection is classified as true positive (TP) when the IoU between the predicted and the GT bounding box is larger than 0.5. Average sensitivities computed at 0.5, 1, 2, and 4 false-positives (FP) per image are reported as the evaluation metrics on the test set for a fair comparison.

Methods Slices @0.5 @1 @2 @4 Avg.[0.5,1,2,4] 3DCE[7] 27 62.48 73.37 80.70 85.65 75.55 RetinaNet[2] 3 72.18 80.07 86.40 90.77 82.36 MVP-Net[8] 9 73.83 81.82 87.60 91.30 83.64 MULAN[9] 27 76.10 82.50 87.50 90.90 84.33 AlignShift[10] 3 73.00 81.17 87.05 91.78 83.25 A3D[16] 3 74.10 81.81 87.87 92.13 83.98 DKA-ULD[42] 3 77.38 84.06 89.28 92.04 85.79 DiffULD (Ours) 3 77.84 (0.46) 84.57 (0.51) 89.41 (0.13) 92.31 (0.40) 86.03 (0.58) SATr[31] 7 81.02 86.64 90.69 93.30 87.91 DiffULD (Ours) 7 80.43 87.16 (0.52) 91.20 (0.51) 93.21 88.00 (0.08) FCOS[33] 3 56.12 67.31 73.75 81.44 69.66 CenterNet++[43] 3 67.34 75.95 82.73 87.72 78.43 DN-DETR[44] 3 69.27 77.90 83.97 88.59 81.02

Methods Slices @0.5 @1 @2 @4 Avg.[0.5,1,2,4] A3D[16] 7 88.73 91.23 93.89 95.91 92.44 SATr[31] 7 91.04 93.75 95.58 96.73 94.28 DiffULD (Ours) 7 91.65 (0.61) 94.33 (0.58) 95.97 (0.39) 96.86 (0.13) 94.70 (0.42)

3.2 Lesion detection performance

We evaluate the effectiveness of DiffULD against anchor-based ULD approaches such as 3DCE[7], MVP-Net[8], A3D[16] and SATr[31] on DeepLesion. Several anchor-free natural image detection methods such as FCOS[33] and DN-DETR[44] are also introduced for comparison. In addition, we conduct an extensive experiment to explore DiffULD’s potential on an augmented test set of completely annotated DeepLesion volumes, introduced by Lesion-Harvester[45].

Table 1. demonstrates that our proposed DiffULD achieves favorable performances when compared to recent state-of-the-art anchor-based ULD approaches such as SATr on both 3 slices and 7 slices. It outperforms prior well-established methods such as A3D and MULAN by a non-trivial margin. This validates that with our padding strategy, the concise DPM can be utilized in general medical object detection tasks such as ULD and attain impressive performance.

3.3 Ablation study

Baseline Duplicate Gaussian Uniform Center-Aligned FPPI = 0.5 FPPI = 1 ✓ 76.71 83.49 ✓ ✓ 77.01 83.61 ✓ ✓ 77.68 83.98 ✓ ✓ 77.22 83.75 ✓ ✓ 77.84 (1.13) 84.57 (1.08)

We provide an ablation study about our proposed approach: center-aligned BBox padding. As shown in Table 3., we compared it with various other padding strategies, including: (i) duplicating original GT boxes; (ii) padding random boxes sampled from a uniform distribution; (iii) padding random boxes sampled from a Gaussian distribution; (iv) padding with center-aligned strategy.

Our baseline method is training the diffusion model directly with no box padding, using our proposed backbone in 2.4. The performance is increased by 0.30% by simply duplicating the original GT boxes. Padding random boxes following uniform and Gaussian distributions brings 0.51% and 0.91% improvement respectively. Our center-aligned padding strategy accounts for 1.13% improvement from the baseline. We attribute this performance boost to center-aligned padding’s ability to provide high-quality additional training targets. It effectively expands the insufficient training targets on CT scans and enhances DPM’s detection performance while avoiding deterioration triggered by adding random targets. This property is favorable for utilizing DPMs on a limited amount of GT like ULD.

4 Conclusion

In this paper, we propose a novel ULD method termed DiffULD by introducing the diffusion probability model (DPM) to Universal Lesion Detection. We present a novel center-aligned BBox padding strategy to tackle the performance deterioration caused by directly utilizing DPM on CT scans with sparse lesion bounding boxes. Compared with other training target padding methods (e.g., padding with random boxes), our strategy can provide additional training targets of higher quality and boost detection performance while avoiding significant deterioration. DiffULD is inherently advanced in locating lesions with diverse sizes and shapes since it can predict with arbitrary boxes, making it a promising method for ULD. Experiments on both standard and augmented DeepLesion datasets show that our proposed method can achieve competitive performance compared to state-of-the-art ULD approaches.

References

- [1] Ke Yan et al. Deeplesion: automated mining of large-scale lesion annotations and universal lesion detection with deep learning. Journal of medical imaging, 5(3):036501, 2018.

- [2] Martin Zlocha et al. Improving retinanet for ct lesion detection with dense masks from weak recist labels. In MICCAI, pages 402–410. Springer, 2019.

- [3] Qingyi Tao et al. Improving deep lesion detection using 3d contextual and spatial attention. In MICCAI, pages 185–193. Springer, 2019.

- [4] Ning Zhang et al. 3d anchor-free lesion detector on computed tomography scans. In 2019 First International Conference on Transdisciplinary AI (TransAI), pages 48–51. IEEE, 2019.

- [5] Ning Zhang et al. 3d aggregated faster r-cnn for general lesion detection. arXiv preprint arXiv:2001.11071, 2020.

- [6] You-Bao Tang et al. Uldor: a universal lesion detector for ct scans with pseudo masks and hard negative example mining. In 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), pages 833–836. IEEE, 2019.

- [7] Ke Yan et al. 3d context enhanced region-based convolutional neural network for end-to-end lesion. In MICCAI, pages 511–519. Springer, 2018.

- [8] Zihao Li et al. Mvp-net: multi-view fpn with position-aware attention for deep universal lesion detection. In MICCAI, pages 13–21. Springer, 2019.

- [9] Ke Yan et al. Mulan: multitask universal lesion analysis network for joint lesion detection, tagging, and segmentation. In MICCAI, pages 194–202. Springer, 2019.

- [10] Jiancheng Yang et al. Alignshift: bridging the gap of imaging thickness in 3d anisotropic volumes. In MICCAI, pages 562–572. Springer, 2020.

- [11] Jinzheng Cai et al. Deep volumetric universal lesion detection using light-weight pseudo 3d convolution and surface point regression. In MICCAI, pages 3–13. Springer, 2020.

- [12] Han Li et al. Bounding maps for universal lesion detection. In MICCAI, pages 417–428. Springer, 2020.

- [13] Shu Zhang et al. Revisiting 3d context modeling with supervised pre-training for universal lesion detection in ct slices. In MICCAI, pages 542–551. Springer, 2020.

- [14] Ke Yan et al. Learning from multiple datasets with heterogeneous and partial labels for universal lesion detection in ct. IEEE Transactions on Medical Imaging, 40(10):2759–2770, 2020.

- [15] Youbao Tang et al. Weakly-supervised universal lesion segmentation with regional level set loss. In MICCAI, pages 515–525. Springer, 2021.

- [16] Jiancheng Yang et al. Asymmetric 3d context fusion for universal lesion detection. In MICCAI, pages 571–580. Springer, 2021.

- [17] Han Li et al. Conditional training with bounding map for universal lesion detection. In MICCAI, pages 141–152. Springer, 2021.

- [18] Fei Lyu et al. A segmentation-assisted model for universal lesion detection with partial labels. In MICCAI, pages 117–127. Springer, 2021.

- [19] S. Kevin Zhou et al. Handbook of medical image computing and computer assisted intervention. Academic Press, 2019.

- [20] S. Kevin Zhou et al. A review of deep learning in medical imaging: Imaging traits, technology trends, case studies with progress highlights, and future promises. Proceedings of the IEEE, 109(5):820–838, 2021.

- [21] Xin Yu et al. Deep attentive panoptic model for prostate cancer detection using biparametric mri scans. In MICCAI, pages 594–604. Springer, 2020.

- [22] Yinhao Ren et al. Retina-match: ipsilateral mammography lesion matching in a single shot detection pipeline. In MICCAI, pages 345–354. Springer, 2021.

- [23] Michael Baumgartner et al. nndetection: a self-configuring method for medical object detection. In MICCAI, pages 530–539. Springer, 2021.

- [24] Atefeh Shahroudnejad et al. Tun-det: a novel network for thyroid ultrasound nodule detection. In MICCAI, pages 656–667. Springer, 2021.

- [25] Luyang Luo et al. Oxnet: deep omni-supervised thoracic disease detection from chest x-rays. In MICCAI, pages 537–548. Springer, 2021.

- [26] Jiancong Chen et al. Ellipsenet: anchor-free ellipse detection for automatic cardiac biometrics in fetal echocardiography. In MICCAI, pages 218–227. Springer, 2021.

- [27] Canfeng Lin et al. Automated malaria cells detection from blood smears under severe class imbalance via importance-aware balanced group softmax. In MICCAI, pages 455–465. Springer, 2021.

- [28] Zipei Zhao et al. Positive-unlabeled learning for cell detection in histopathology images with incomplete annotations. In MICCAI, pages 509–518. Springer, 2021.

- [29] Shaoqing Ren et al. Faster r-cnn: Towards real-time object detection with region proposal networks. In NeurIPS, 2015.

- [30] Yang Jiancheng et al. Reinventing 2d convolutions for 3d images. In IEEE JBHI, pages 30009–2018, 2021.

- [31] Han Li et al. Satr: Slice attention with transformer for universal lesion detection. In MICCAI, pages 163–174. Springer, 2022.

- [32] Manu Sheoran et al. Dkma-uld: Domain knowledge augmented multi-head attention based robust universal lesion detection. In BMVC, 2021.

- [33] Zhi Tian et al. Fcos: Fully convolutional one-stage object detection. In IEEE ICCV, pages 9627–9636, 2019.

- [34] Nicolas Carion et al. End-to-end object detection with transformers. In ECCV, pages 213–229. Springer, 2020.

- [35] Jonathan Ho et al. Denoising diffusion probabilistic models. In NeurIPS, 2020.

- [36] Chitwan Saharia et al. Photorealistic text-to-image diffusion models with deep language understanding. In NeurIPS, 2022.

- [37] Ting Chen et al. A generalist framework for panoptic segmentation of images and videos. arXiv preprint arXiv:2210.06366, 2022.

- [38] Karl Holmquist et al. Diffpose: Multi-hypothesis human pose estimation using diffusion models. arXiv preprint arXiv:2211.16487, 2022.

- [39] Boah Kim et al. Diffusemorph: Unsupervised deformable image registration using diffusion model. In ECCV, pages 347–364. Springer, 2022.

- [40] Shoufa Chen et al. Diffusiondet: Diffusion model for object detection. arXiv preprint arXiv:2211.09788, 2022.

- [41] Jiaming Song et al. Denoising diffusion implicit models. arXiv:2010.02502, October 2020.

- [42] Manu Sheoran et al. An efficient anchor-free universal lesion detection in ct-scans. In 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), pages 1–4. IEEE, 2022.

- [43] Kaiwen Duan et al. Centernet++ for object detection. arXiv preprint arXiv:2204.08394, 2022.

- [44] Feng Li et al. Dn-detr: Accelerate detr training by introducing query denoising. In IEEE CVPR, pages 13619–13627, 2022.

- [45] Jinzheng Cai et al. Lesion-harvester: Iteratively mining unlabeled lesions and hard-negative examples at scale. IEEE Transactions on Medical Imaging, 40(1):59–70, 2020.