Diffusion Approximations for Expert Opinions in a Financial Market with Gaussian Drift

Abstract

This paper investigates a financial market where returns depend on an unobservable Gaussian drift process. While the observation of returns yields information about the underlying drift, we also incorporate discrete-time expert opinions as an external source of information.

For estimating the hidden drift it is crucial to consider the conditional distribution of the drift given the available observations, the so-called filter. For an investor observing both the return process and the discrete-time expert opinions, we investigate in detail the asymptotic behavior of the filter as the frequency of the arrival of expert opinions tends to infinity. In our setting, a higher frequency of expert opinions comes at the cost of accuracy, meaning that as the frequency of expert opinions increases, the variance of expert opinions becomes larger. We consider a model where information dates are deterministic and equidistant and another model where the information dates arrive randomly as the jump times of a Poisson process. In both cases we derive limit theorems stating that the information obtained from observing the discrete-time expert opinions is asymptotically the same as that from observing a certain diffusion process which can be interpreted as a continuous-time expert.

We use our limit theorems to derive so-called diffusion approximations of the filter for high-frequency discrete-time expert opinions. These diffusion approximations are extremely helpful for deriving simplified approximate solutions of utility maximization problems.

Keywords: Diffusion approximations, Kalman filter, Ornstein–Uhlenbeck process, Expert opinions, Portfolio optimization, Partial information

2010 Mathematics Subject Classification: Primary 91G10; Secondary 93E11, 93E20, 60F25.

1 Introduction

Optimal trading strategies in dynamic portfolio optimization problems depend crucially on the drift of the underlying asset price processes. However, drift parameters are notoriously difficult to estimate from historical asset price data. Drift processes tend to fluctuate randomly over time and even if they were constant, long time series would be needed to estimate this parameter with a satisfactory degree of precision. Typically, drift effects are overshadowed by volatility. For these reasons, practitioners also incorporate external sources of information such as news, company reports, ratings or their own intuitive views when determining optimal portfolio strategies. These outside sources of information are called expert opinions. In the context of the classical one-period Markowitz model this leads to the well-known Black–Litterman approach, where return predictions are improved by means of views formulated by securities analysts, see Black and Litterman [3].

In this paper we consider a financial market where returns depend on an underlying drift process which is unobservable due to additional noise coming from a Brownian motion. The general setting has already been studied in Gabih et al. [11] for a market with only one risky asset and in Sass et al. [23] for markets with an arbitrary number of stocks. The ability to choose good trading strategies depends on how well the unobserved drift can be estimated. For estimating the hidden drift we consider the conditional distribution of the drift given the available observations, the so-called filter. The best estimate for the hidden drift process in a mean-square sense is the conditional mean of the drift given the available information. A measure for the goodness of this estimator is its conditional covariance matrix. In our setting, the filter is completely characterized by conditional mean and conditional covariance matrix since we deal with Gaussian distributions.

For investors who observe only the return process, the filter is the classical Kalman filter, see for example Liptser and Shiryaev [19]. An additional source of information is provided by expert opinions which we model as unbiased drift estimates arriving at discrete points in time. Investors who, in addition to observing the return process, have access to these expert opinions update their current drift estimates at each arrival time. These updates decrease the conditional covariance, hence they yield better estimates. This can be seen as a continuous-time version of the above mentioned static Black–Litterman approach.

We investigate in detail an investor who observes both the return process and the discrete-time expert opinions and study the asymptotic behavior of the filter when the frequency of the arrival of expert opinions tends to infinity. Sass et al. [23] and Gabih et al. [12] already addressed expert opinions which are independent of the arrival frequency and which have some minimal level of accuracy characterized by bounded covariances. In that setting, the conditional covariance of the drift estimate goes to zero as the arrival frequency goes to infinity. This implies that the conditional mean converges to the true drift process, i.e. in the limit investors have full information about the drift. Here, we study a different situation in which a higher frequency of expert opinions is only available at the cost of accuracy of the single expert opinions. In other words, as the frequency of expert opinions increases, the variance of expert opinions becomes larger. On the one hand, this assumption ensures that it is not possible for investors to gain arbitrarily much information in a fixed time interval. On the other hand, it enables us to derive a certain asymptotic behavior that yields a reasonable approximation of the filter for the investor who observes a certain, fixed number of discrete-time expert opinions. We consider two different situations, one with deterministic equidistant information dates and one with information dates that arrive randomly as the jump times of a Poisson process. For properly scaled variance of expert opinions that grows linearly with the arrival frequency we prove -convergence of the conditional mean and conditional covariance matrices as the frequency of information dates goes to infinity. Our limit theorems imply that the information obtained from observing the discrete-time expert opinions is asymptotically the same as that from observing a certain diffusion process having the same drift as the return process. That process can be interpreted as a continuous-time expert who permanently delivers noisy information about the drift.

Our limit theorems allow us to derive approximations of the filter for high-frequency discrete-time expert opinions which we call diffusion approximations. These are useful since the limiting filter is easy to compute whereas the updates for the discrete-time expert opinions lead to a computationally involved filter. This is extremely helpful for deriving simplified approximate solutions of utility maximization problems. We apply our diffusion approximations to a portfolio optimization problem with logarithmic utility. Numerical simulations show that the approximation is very accurate even for a small number of expert opinions. Our rigorous -convergence results of the filters however also allow to derive convergence of the value function in the more complicated problem with power utility, see Remark 5.5.

The idea of a continuous-time expert is in line with Davis and Lleo [7] who study an approach called “Black–Litterman in Continuous Time” (BLCT). Our results show how the BLCT model can be obtained as a limit of BLCT models with discrete-time experts. First papers addressing BLCT are Frey et al. [9, 10] who consider an HMM for the drift and expert opinions arriving at the jump times of a Poisson process.

Convergence of the discrete-time Kalman filter to the continuous-time equivalent has been addressed in the literature, e.g. by Salgado et al. [22] or Aalto [1] for the case of deterministic information dates. Our results for that case however do not follow directly from these convergence results. The reason is that in our case a suitable continuous-time expert has to be constructed first. The discrete-time expert opinions are then not simply a discretization of the continuous-time expert. We assume that they are noisy observations of the true drift process where the noise term is correlated with the Brownian motion in the diffusion that forms the continuous-time expert. Contrary to [1, 22] we also obtain convergence results for the case where the discrete expert opinions arrive at random time points rather than on an equidistant time grid.

Coquet et al. [6] consider weak convergence of filtrations which allows to prove convergence of conditional expectations in a quite general setting. However, their results do not directly apply to our situation since the approximating sequence of filtrations in our case is not included in the limit filtration.

In the literature, diffusion approximations also appear in other contexts. They are well-known in operations research and actuarial mathematics. The basic idea is to replace a complicated stochastic process by an appropriate diffusion process which is analytically more tractable than the original process. The approach is comparable with the normal approximation of sums of random variables by the Central Limit Theorem. When looking at these sums as stochastic processes or random walks the well-known Donsker Theorem leads to an approximation by a Brownian motion.

For an introduction to diffusion approximations based on the theory of weak convergence and applications to queueing systems in heavy traffic we refer to the survey article by Glynn [13]. In risk theory the application of diffusion approximations for computing ruin probabilities goes back to Iglehart [15]. We also refer to Grandell [14, Sec. 1.2], Schmidli [24, Sec. 5.10 and 6.5] and Asmussen and Albrecher [2, Sec. V.5] as well as the references therein. Starting point is the classical Cramér–Lundberg model where the cumulated claim sizes and finally the surplus of an insurance company are modeled by a compound Poisson process. For a high intensity of the claim arrivals and small claim sizes the latter can be approximated by a Brownian motion with drift. This results from the corresponding weak convergence of the properly scaled compound Poisson processes to a Brownian motion as the intensity tends to infinity. However, these classical results for compound Poisson processes cannot be applied directly to our problem. Here, the jumps of the filter processes do not constitute a sequence of i.i.d. random variables as in the compound Poisson case. Due to the Bayesian updating of the filter at the information dates the jump size distribution depends on the value of the filter at that time. This requires special techniques for proving limit theorems from which the diffusion approximations can be derived. To the best of our knowledge these techniques constitute a new contribution to the literature.

The paper is organized as follows. In Section 2 we introduce the model for our financial market including expert opinions and define different information regimes for investors with different sources of information. For each of those information regimes, we state the dynamics of the corresponding conditional mean and conditional covariance matrix. Section 3 investigates the situation where the discrete-time expert opinions arrive at deterministic equidistant time points. For an investor observing returns and discrete-time expert opinions we show convergence of the corresponding conditional mean and conditional covariance matrix to those of an investor observing the returns and the continuous-time expert. In Section 4 we prove analogous results for the situation where the time points at which expert opinions arrive are not deterministic time points but jump times of a standard Poisson process, i.e. with exponentially distributed waiting times between information dates. For the conditional mean we can then use a representation involving a Poisson random measure. When letting the intensity of the Poisson process go to infinity, we prove convergence to the same limiting filter as in the case with deterministic information dates. Section 5 provides an application of the convergence results to a utility maximization problem. For investors who maximize expected logarithmic utility of terminal wealth the optimal trading strategy depends on the conditional mean of the drift and the corresponding optimal terminal wealth is a functional of the conditional covariance matrices. That is why the convergence results from Sections 3 and 4 carry over to convergence of the corresponding value functions. Section 6 provides simulations and numerical calculations to illustrate our theoretical results. In Appendix A we collect some auxiliary results needed for the proofs of our main theorems. Appendix B gives the proofs of Theorems 3.2 and 3.3 and Appendix C those of Theorems 4.6 and 4.7.

Notation:

Throughout this paper, we use the notation for the identity matrix in . For a symmetric and positive-semidefinite matrix we call a symmetric and positive-semidefinite matrix the square root of if . The square root is unique and will be denoted by . Unless stated otherwise, whenever is a matrix, denotes the spectral norm of .

2 Market Model and Filtering

2.1 Financial Market Model

We consider a financial market with one risk-free and multiple risky assets. The basic model is the same as in Sass et al. [23]. In the following, we denote by a finite investment horizon and fix a filtered probability space where the filtration satisfies the usual conditions. All processes are assumed to be -adapted. The market consists of one risk-free bond with constant deterministic interest rate , and risky assets such that the -dimensional return process follows the stochastic differential equation

Here is an -dimensional Brownian motion with and we assume that has full rank. The drift is an Ornstein–Uhlenbeck process and follows the dynamics

where and , and is a -dimensional Brownian motion independent of . We assume that is a symmetric and positive-definite matrix to ensure that expectation and covariance of the drift process stay bounded and the drift process becomes asymptotically stationary. This is reasonable from an economic point of view. The initial drift is multivariate normally distributed, , for some and some which is symmetric and positive semidefinite. We assume that is independent of and . We denote and .

Investors in this market know the model parameters and are able to observe the return process . They neither observe the underlying drift process nor the Brownian motion . However, information about can be drawn from observing . Additionally, we include expert opinions in our model. These expert opinions arrive at discrete time points and give an unbiased estimate of the state of the drift at that time point. Let be an increasing sequence with values in , where we allow for index sets or for some . The , , are the time points at which expert opinions arrive. For the sake of convenience we also write although there is not necessarily an expert opinion arriving at time zero.

The expert view at time is modelled as an -valued random vector

where the matrix is symmetric and positive definite and is multivariate -distributed. We assume that the sequence of is independent and also that it is independent of both and the Brownian motions and . Note that, given , the expert opinion is multivariate -distributed. That means that the expert view at time gives an unbiased estimate of the state of the drift at that time. The matrix reflects the reliability of the expert.

Note that the time points do not need to be deterministic. However, we impose the additional assumption that the sequence is independent of the and also of the Brownian motions in the market and of . This essentially says that the timing of information dates carries no additional information about the drift . Nevertheless, information on the sequence may be important for optimal portfolio decisions. In the next sections we consider on the one hand the situation with deterministic information dates and on the other hand a case where information dates are the jump times of a Poisson process.

It is possible to allow relative expert views in the sense that an expert may give an estimate for the difference in drift of two stocks instead of absolute views. See Schöttle et al. [25] for how to switch between these two models for expert opinions by means of a pick matrix.

Our main results in Sections 3 and 4 address the question how to obtain rigorous convergence results when the number of information dates increases. We will show that, for certain sequences of expert opinions, the information drawn from these expert opinions, expressed by the filter, is for a large number of expert opinions essentially the same as the information one gets from observing yet another diffusion process. This diffusion process can then be interpreted as an expert who gives a continuous-time estimation about the state of the drift. Let this estimate be given by the diffusion process

| (2.1) |

where is an -dimensional Brownian motion with that is independent of all other Brownian motions in the model and of the information dates . The matrix has full rank equal to .

2.2 Filtering for Different Information Regimes

For an investor in the financial market defined above, the ability to choose good trading strategies is based heavily on which information is available about the unknown drift process . To be able to assess the value of information coming from observing expert opinions, we consider various types of investors with different sources of information. This follows the approach in Gabih et al. [11] and in Sass et al. [23]. The information available to an investor can be described by the investor filtration where serves as a placeholder for the various information regimes. We work with filtrations that are augmented by , the set of null sets under measure . We consider the cases

When speaking of the -investor we mean the investor with investor filtration , . Note that the -investor observes only the return process , the -investor combines the information from observing the return process and the discrete-time expert opinions , and the -investor observes the return process and the continuous-time expert . The -investor has full information about the drift in the sense that she can observe the drift process directly. This case is included as a benchmark.

As already mentioned, the investors in our financial market make trading decisions based on available information about the drift process . Only the -investor can observe the drift, the other investors have to estimate it. The conditional distribution of the drift under partial information is called the filter. In the mean-square sense, an optimal estimator for the drift at time given the available information is then the conditional mean . How close this estimator is to the true state of the drift can be assessed by looking at the corresponding conditional covariance matrix

Note that since we deal with Gaussian distributions here, the filter is also Gaussian and completely characterized by conditional mean and conditional covariance matrix. In the next sections we investigate the behavior of the filter for a -investor with access to an increasing number of expert opinions. For this purpose, we state in the following the dynamics of the filters for the various investors defined above. For the -investor, we are in the setting of the well-known Kalman filter.

Lemma 2.1.

The filter of the -investor is Gaussian. The conditional mean follows the dynamics

where is the solution of the ordinary Riccati differential equation

The initial values are and .

This lemma follows directly from the Kalman filter theory, see for example Theorem 10.3 of Liptser and Shiryaev [19]. Note that follows an ordinary differential equation, called Riccati equation, and is hence deterministic.

Next, we consider the -investor who observes the diffusion processes and .

Lemma 2.2.

The filter of the -investor is Gaussian. The conditional mean follows the dynamics

where is the solution of the ordinary Riccati differential equation

| (2.2) |

with and .

Proof.

First, note that the matrix is symmetric and positive definite, and hence nonsingular. The distribution of the filter as well as the dynamics of and then follow immediately from the Kalman filter theory, see again Theorem 10.3 in Liptser and Shiryaev [19]. ∎

Note that, just like in the case for the -investor, the conditional covariance matrix is deterministic.

Let us now come to the -investor. Recall that this investor observes the return process continuously in time and at (possibly random) information dates the expert opinions . We state the dynamics of and in the following lemma.

Lemma 2.3.

Given a sequence of information dates , the filter of the -investor is Gaussian. The dynamics of the conditional mean and conditional covariance matrix are given as follows:

-

(i)

Between the information dates and , , it holds

for , where follows the ordinary Riccati differential equation

for . The initial values are and , respectively, with and .

-

(ii)

The update formulas at information dates , , are

and

where .

Proof.

For deterministic time points , the above lemma is Lemma 2.3 of Sass et al. [23] where a detailed proof is given. For the more general case where the need not be deterministic, recall that we have made the assumption that the sequence is independent of the other random variables in the market. In particular, and the drift process are independent. Because of that, the dynamics of the conditional mean and conditional covariance matrix are the same as for deterministic information dates and we get the same update formulas, the only difference being that the update times might now be non-deterministic.

The Gaussian distribution of the filter between information dates follows as in the previous lemmas from the Kalman filter theory. The updates at information dates can be seen as a degenerate discrete-time Kalman filter. Hence, the distribution of the filter at information dates remains Gaussian after the Bayesian update. ∎

Note that the dynamics of and between information dates are the same as for the -investor, see Lemma 2.1. The values at an information date are obtained from a Bayesian update. If we have non-deterministic information dates then in contrast to both the -investor and the -investor, the conditional covariance matrices of the -investor are non-deterministic since updates take place at random times.

In the proofs of our main results we repeatedly need to find upper bounds for various expressions that involve the conditional covariance matrices or . A key tool is boundedness of these matrices. Here, it is useful to consider a partial ordering of symmetric matrices. For symmetric matrices we write if is positive semidefinite. Note that in particular implies that .

Lemma 2.4.

For any sequence we have and for all . In particular, there exists a constant such that

for all .

Proof.

Let be any sequence of expert opinions and the conditional covariance matrices of the corresponding filter. Every update decreases the covariance in the sense that , see Proposition 2.2 in Sass et al. [23]. Also, if and are solutions of the same Riccati differential equation, where the initial values fulfill , then for all , see for example Theorem 10 in Kuc̆era [18]. Inductively, we can deduce that in our setting for all . Also, one can show that for all in analogy to the proof of Proposition 3.1 in Sass et al. [23]. The key idea for the proof is to use the fact that for all .

By Theorem 4.1 in Sass et al. [23] there exists a positive-semidefinite matrix such that

Hence, is bounded by some constant , and the claim follows. ∎

3 Diffusion Approximation of Filters for Deterministic Information Dates

In this section we investigate the asymptotic behavior of the filters for a -investor when the frequency of expert opinion arrivals goes to infinity. We consider first the case for deterministic and equidistant information dates. Therefore, let and . Now assume that for every , where is the sequence of deterministic time points . So there are expert opinions that arrive equidistantly in the time interval , the distance between two information dates being .

In the following, we deduce convergence results for both the conditional means and the conditional covariance matrices of the -investor when sending to infinity. Note that convergence of discrete-time filters is addressed in earlier papers, e.g. by Salgado et al. [22] or Aalto [1]. There, the authors show convergence of the discrete-time Kalman filter to the continuous-time equivalent. In Aalto [1] the discrete-time filter is based on discrete-time observations of the continuous-time observation process whereas in Salgado et al. [22] the authors approximate both the continuous-time signal and observation by discrete-time processes. Neither of these assumptions match our model for the discrete-time expert opinions which is why we need to prove convergence in the following.

We use an additional superscript to emphasize dependence on the number of expert opinions, writing for example for the conditional covariance matrix of the filter corresponding to these expert opinions. In Sass et al. [23] a convergence result is proven for the case where the expert opinions are of the form

| (3.1) |

with expert’s covariances that are bounded for all and , see Theorem 3.1 in Sass et al. [23]. There it is shown that under the assumption of bounded expert’s covariances it holds

for any . Since is a measure for the goodness of the estimator , this means that the conditional mean of the -investor becomes an arbitrarily good estimator for the true state of the drift . One can easily deduce that

for any . Hence, the -investor essentially approximates the fully informed -investor.

This result heavily relies on the assumption that the expert covariances are all bounded, meaning that there is some minimal level of reliability of the experts. Here, we study a different situation where more frequent expert opinions are only available at the cost of accuracy. In other words, we assume that, as goes to zero, the variance of expert opinions increases. This is done for the purpose of approximating and for large and large . In the following we assume for the sake of simplicity that is not time-dependent. We then show that for properly scaled which grows linearly in , the information obtained from observing the discrete-time expert opinions is asymptotically the same as that from observing another diffusion process. This will be the diffusion already defined in (2.1).

Assumption 3.1.

Recall that the matrix is exactly the volatility of the diffusion process with the dynamics

and that has full rank. With as defined above the discrete-time expert opinions and the continuous-time expert are obviously correlated. In fact, it holds

Further, one can easily show by using Donsker’s Theorem that the piecewise constant process , defined by

for all , converges in distribution to as goes to infinity. For our main convergence results that are given in the following, we however require stronger notions of convergence.

The following theorem now states uniform convergence of to on for going to infinity.

Theorem 3.2.

The proof of Theorem 3.2 is given in Appendix B. It makes use of a discrete version of Gronwall’s Lemma for error accumulation, see Lemma A.1 in Appendix A.

Using the uniform convergence of the conditional covariance matrices to we can also deduce convergence of the corresponding conditional mean to in an -sense.

Theorem 3.3.

The proof of Theorem 3.3 can also be found in Appendix B. Theorems 3.2 and 3.3 state that in the setting of Assumption 3.1 the filter of a -investor observing equidistant expert opinions on converges to the filter of the -investor. Recalling that the -investor observes the diffusion processes and , this implies that the information obtained from observing the discrete-time expert opinions is for large arbitrarily close to the information that comes with observing the continuous-time diffusion-type expert . This diffusion approximation of the discrete expert opinions is useful since the associated filter equations for and are much simpler than those for and which contain updates at information dates. Computing on in the multivariate case requires the numerical solution of a Riccati differential equation on each subinterval . For high numbers of expert opinions this leads to very small time steps and high computing times. For computing the -investor’s filter one has to find the solution to only one Riccati differential equation on for which we can use more efficient numerical solvers. We will see in Section 5 that the convergence results carry over to convergence of the value function in a portfolio optimization problem.

Remark 3.4.

Note that for the convergence of the conditional covariance matrices to in Theorem 3.2 we do not need the assumption that is given as in (3.2). This is because the conditional covariance matrices do not depend on the actual form of the expert opinions, see Lemma 2.3. Hence, it would be sufficient to assume that the experts’ covariance matrices are given by . The assumption on the form of is only needed in Theorem 3.3 where the conditional mean is considered.

4 Diffusion Approximation of Filters for Random Information Dates

In this section we consider the situation where the experts’ opinions do not arrive at deterministic time points but at random information dates , where the waiting times between information dates are independent and exponentially distributed with rate . Recall that we have set for ease of notation. The information dates can therefore be seen as the jump times of a standard Poisson process with intensity . In this situation, the total number of expert opinions arriving in is no longer deterministic. However, as the intensity increases, expert opinions will arrive more and more frequently. So the question we address in this section is, in analogy to sending to infinity in the last section, what happens when goes to infinity. We use a superscript to emphasize the dependence on the intensity. The expert opinions are of the form

| (4.1) |

For constant variances , i.e. when there is some constant level of the expert’s reliability which does not depend on the arrival intensity , one can derive a similar result for the convergence to full information as in the case of deterministic information dates. This result implies that for large the -investor approximates the fully informed investor. More precisely, it holds

for all , see Gabih et al. [12]. In contrast to the above case we now again assume that, as the frequency of expert opinions increases, the variance of the expert opinions also increases. As in Section 3 it will turn out that letting grow linearly in is the proper scaling for deriving diffusion limits.

Assumption 4.1.

Let be a standard Poisson process with intensity that is independent of the Brownian motions in the model. Define the information dates as the jump times of that process and set . Furthermore, let the experts’ covariance matrices be given as for . Further, we assume that in (4.1) the -distributed random variables are linked with the Brownian motion from (2.1) via

so that

| (4.2) |

is the expert opinion at information date . Note that for defining the , the Brownian motion has to be extended to a Brownian motion on .

Given a realization of the drift process at the random information date , the only randomness in the expert opinion comes from the Brownian motion between the deterministic times and . Recall that is the Brownian motion that drives the diffusion which we interpret as our continuous expert. Hence there is a direct connection between the discrete expert opinions and the continuous expert.

In the following, we will omit the superscript at the time points for better readability, keeping the dependence on the intensity in mind.

Remark 4.2.

At first glance, it seems more intuitive to construct the expert opinions as

rather than in (4.2). However, we later want to prove convergence of to , which requires to look at the difference of a weighted sum of and . It turns out that when replacing with , this leads to an integral where the integrand is defined piecewisely as

However, the term in brackets does not have a finite variance. This carries over to the weighted sum mentioned above. This is mainly due to the fact that for , the expectation of does not exist. When considering instead, the difference that appears has finite variance since the additional randomness from the information dates is missing. Intuitively, the problem with the is that the expert opinions of this form put different weight on the paths of the Brownian motion in different intervals. This is in contrast to the continuous expert whose information comes from observing the diffusion , driven by the Brownian motion , continuously in time. Therefore, in terms of information about the Brownian motion , the modelled as in (4.2) are closer to the continuous expert than the .

The aim of this section is to determine the behavior of the conditional covariance matrix and of the conditional mean under Assumption 4.1 when goes to infinity, i.e. when expert opinions arrive more and more frequently, becoming at the same time less and less reliable. Here, it is useful to express the dynamics of and in a way that comprises both the behavior between information dates and the jumps at times . For this purpose, we work with a representation using a Poisson random measure as introduced in Cont and Tankov [5, Sec. 2.6].

Definition 4.3.

Let be a probability space and a measure on a measurable space . A Poisson random measure with intensity measure is a function such that

-

1.

For each , is a measure on .

-

2.

For every , is a Poisson random variable with parameter .

-

3.

For disjoint , the random variables are independent.

For a Poisson random measure , the compensated measure is defined by with .

The following proposition states the results we will need in the following. For a proof, see Cont and Tankov [5, Sec. 2.6.3].

Proposition 4.4.

Let . Let be the jump times of a Poisson process with intensity and let , , be a sequence of independent multivariate standard Gaussian random variables on . For any and let

denote the number of jump times in where takes a value in . Then defines a Poisson random measure and it holds:

-

(i)

The corresponding intensity measure satisfies

for , where is the multivariate standard normal density on .

-

(ii)

For Borel-measurable functions defined on it holds

Now we can use the Poisson random measure for reformulating the dynamics of .

Proposition 4.5.

In the following, we give convergence results in analogy to those in Theorems 3.2 and 3.3 stating that the conditional covariance matrix and the conditional mean of the -investor converge to the conditional covariance matrix and conditional mean of the -investor as goes to infinity. In the setting with deterministic equidistant information dates in Section 3 the conditional covariance matrices were deterministic. For the conditional means we proved -convergence. Due to the joint Gaussian distribution of the conditional means it was enough to prove -convergence and use a result from Rosiński and Suchanecki [21] to generalize to -convergence. In the setting of this section with random information dates, the conditional covariance matrices of the -investor are random and the joint distribution of the conditional means is no longer Gaussian. Therefore, the generalization mentioned above does not apply here. Hence, we directly prove -convergence in the following. The next theorem states -convergence of to on as goes to infinity.

Theorem 4.6.

The proof of Theorem 4.6 is given in Appendix C. It is based on applying Gronwall’s Lemma in integral form which we recall in Lemma A.5. We also prove -convergence of the conditional means.

Theorem 4.7.

Theorems 4.6 and 4.7 show that under Assumption 4.1, the filter of the -investor converges to the filter of the -investor. These are the analogous results to those in Section 3 where we have assumed deterministic and equidistant information dates. Here, we see that the convergence result also holds for non-deterministic information dates being defined as the jump times of a standard Poisson process, i.e. where the time between information dates is exponentially distributed with a parameter . When sending to infinity, the frequency of expert opinions goes to infinity.

Again, as for the case with deterministic information dates, the assumption that is given as in (4.2) is only needed for the proof of Theorem 4.7. For the proof of Theorem 4.6 it is sufficient to assume that the experts’ covariance matrices are of the form .

Remark 4.8.

Note that when comparing the convergence results from Theorems 3.2 and 4.6 for the conditional covariance matrices in the case , there is a difference in the speed of convergence that we have shown. For deterministic equidistant information dates, the speed of convergence of to zero is of the order . For random information dates, however, we only get a speed of for the convergence of

to zero. This can be explained by the additional randomness coming from the Poisson process that determines the information dates in this situation.

The above theorems provide a useful diffusion approximation since the filter of the -investor is easier to compute than the filter of the -investor for which there are updates at each information date. Further, the conditional covariance is deterministic and can be computed offline in advance while is a stochastic process that has to be updated when a new expert opinion arrives. For high-frequency expert opinions one may simplify the computation of by replacing the exact conditional covariance by its diffusion approximation . Given the discrete-time expert’s covariance matrix and the arrival intensity the volatility is chosen such that .

Even more important are the benefits from the simpler filter equations if we consider utility maximization problems for financial markets with partial information and discrete-time expert opinions. See the next section for an application to logarithmic utility and Remark 5.5 as well as Kondakji [17, Ch. 7,8] for the more involved power utility case where closed-form expressions for the optimal strategies are available for the -investor but not for the -investor.

5 Application to Utility Maximization

As an application of the convergence results from the last two sections we now consider a portfolio optimization problem in our financial market. For the sake of convenience, we assume here that the interest rate of the risk-free asset is equal to zero. However, the results below can easily be extended to a market model with .

An investor’s trading in the market can be described by a self-financing trading strategy with values in . Here, , , is the proportion of wealth that is invested in asset at time . The corresponding wealth process is then governed by the stochastic differential equation

with initial capital . An investor’s trading strategy has to be adapted to her investor filtration. To ensure strictly positive wealth, we also impose some integrability constraint on the trading strategies. Then we denote by

the class of admissible trading strategies for the -investor. The optimization problem we address is a utility maximization problem where investors want to maximize expected logarithmic utility of terminal wealth. Hence,

| (5.1) |

is the value function of our optimization problem. This utility maximization problem under partial information has been solved in Brendle [4] for the case of power utility. Karatzas and Zhao [16] address also the case with logarithmic utility. In Sass et al. [23], the optimization problem has been solved for an -investor with logarithmic utility in the context of the different information regimes addressed in this paper. We recall the result in the proposition below.

Proposition 5.1.

The optimal strategy for the optimization problem (5.1) is with , and the optimal value is

Proof.

The form of the optimal strategy and the first representation of the value function are already given in Proposition 5.1, respectively Theorem 5.1 of Sass et al. [23]. For the second representation of the value function, note that

Therefore, by taking expectation on both sides,

which we can plug into the first representation. ∎

Due to the representation of the optimal strategy via the conditional means it follows directly from Theorems 3.3 and 4.7 that the optimal strategy of the -investor converges in the -sense to the optimal strategy of the -investor as , respectively , goes to infinity.

Further, note that the value function of the -investor is an integral functional of the expectation of . The convergence results of Theorems 3.2 and 4.6 therefore carry over to convergence results for the respective value functions. First, we address the situation with deterministic information dates from Section 3 where we have shown uniform convergence of to .

Corollary 5.2.

Proof.

The analogous result also holds in the setting of Section 4 where information dates are the jump times of a Poisson process. Recall that in Theorem 4.6 we have shown convergence of to .

Corollary 5.3.

Proof.

Corollary 5.2 and Corollary 5.3 show that both under Assumption 3.1 and Assumption 4.1, the value function of the -investor converges to the value function of the -investor when the frequency of information dates goes to infinity.

The following proposition shows that not only does the value function of the -investor converge to the value function of the -investor, also the absolute difference of the utility attained by , respectively , goes to zero when increasing the number or the frequency of discrete-time expert opinions. This implies that the utility of the -investor observing the discrete-time expert opinions also pathwise becomes arbitrarily close to the utility of the -investor when the number of discrete-time expert opinions becomes large. For this result, we need the strong -convergence of the conditional expectations, convergence in distribution would not be enough here.

Proof.

Consider the setting of Assumption 3.1. Note that

where we have used the representation of the optimal strategies from Proposition 5.1. When applying the absolute value and the expectation we obtain

| (5.3) | ||||

For the first summand in (5.3) we have, due to the Cauchy–Schwarz inequality,

The right-hand side of this expression goes to zero when goes to infinity by Theorem 3.3 and by boundedness of , see Lemma 2.4. The second summand in (5.3) goes to zero by an analogous argumentation. For the third summand in (5.3), note that

In the second step we have used the Itô isometry. Again, the right-hand side of the above inequality goes to zero as goes to infinity by Theorem 3.3. The proof for the convergence under Assumption 4.1 is completely analogous. ∎

Note that the convergence of the value functions can also be deduced directly from the previous proposition. However, the proofs that we give in Corollaries 5.2, respectively 5.3 using the convergence of the conditional covariance matrices are more direct and thus yield a sharper bound for the order of convergence than what we would get from the previous proposition.

Remark 5.5.

For simplicity, we have restricted ourselves in this section to the case with logarithmic utility, where -convergence of the conditional covariance matrices and the conditional means is sufficient for proving convergence of the value functions and optimal strategies. Portfolio problems that consider maximization of expected power utility instead of logarithmic utility are typically much more demanding. We have seen that for logarithmic utility the value function is given in terms of an integral functional of the expected conditional variance of the filter. The resulting optimal portfolio strategy is myopic and depends on the current drift estimate only.

For power utility, the value functions can be expressed as the expectation of the exponential of a quite involved integral functional of the conditional mean. Hence it depends on the complete filter distribution and not only on its second-order moments. Further, the optimal strategies are no longer myopic and do not depend only on the current drift estimate but contain correction terms depending on the distribution of the future drift estimates. Therefore, for power utility, one needs the -convergence for for proving convergence of the value functions, -convergence would not be enough.

For the portfolio problem of the -investor in the power utility case closed-form expressions as above for the optimal strategies in terms of the filter are no longer available. One can apply the dynamic programming approach to the associated stochastic optimal control problem. For the -investor this leads to dynamic programming equations (DPEs) for the value function in form of a partial integro-differential equation (PIDE), see Kondakji [17, Ch. 7]. Solutions of those DPEs can usually only be determined numerically. The optimal strategy can be given in terms of that value function and the filter processes and . Meanwhile, for the -investor the above approach leads to DPEs which can be solved explicitly such that the value function can be given in terms of solutions to some Riccati equations. Again, the optimal strategies can be computed in terms of the value function and the filter processes and .

Diffusion approximations for the filter and the value function thus allow us to find approximate solutions for the -investor which can be given in closed form and with less numerical effort. This is extremely helpful for financial markets with multiple assets since the numerical solution of the resulting problem suffers from the curse of dimensionality and becomes intractable. While for a model with a single asset the PIDE has two spatial variables, for two assets there are already five and for three assets nine variables. For details we refer to our forthcoming papers on that topic.

6 Numerical Example

In this section we illustrate our convergence results from the previous sections by a numerical example. We consider a financial market with investment horizon one year. For simplicity, we assume that there is only one risky asset in the market, i.e. . Let the parameters of our model be defined as in Table 6.1.

| investment horizon | 1 | ||

|---|---|---|---|

| interest rate | 0 | ||

| mean reversion speed of drift process | 3 | ||

| volatility of drift process | 1 | ||

| mean reversion level of drift process | 0.05 | ||

| initial mean of drift process | 0.05 | ||

| initial variance of drift process | 0.2 | ||

| volatility of returns | 0.25 | ||

| volatility of continuous expert | 0.2 |

First, we illustrate our results from Section 3 in the setting with deterministic equidistant information dates , , where . Recall that the variance of the discrete-time expert in that case is

and that expert opinions are defined as in (3.2) by

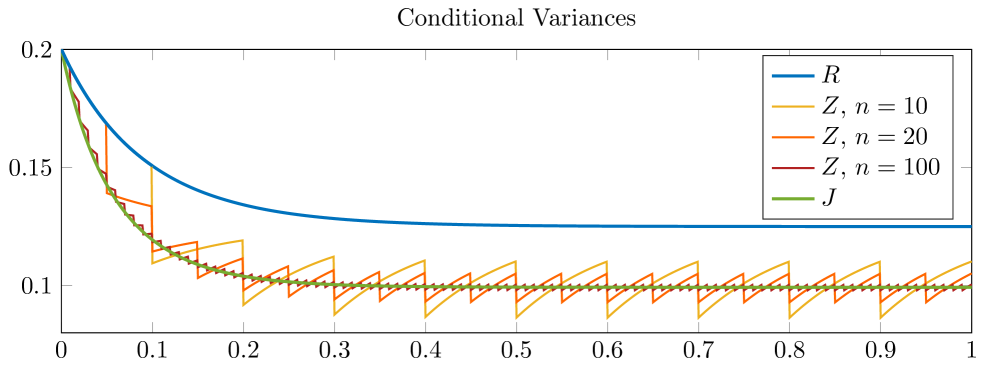

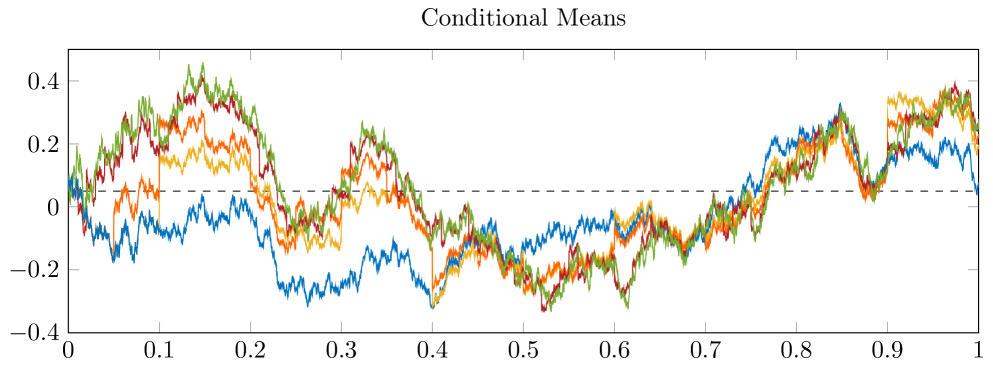

for . In Figure 6.1 we plot the filters of the -, - and -investor against time. For the -investor we consider the cases . In the upper plot one sees the conditional variances and as well as plotted against time. The lower plot shows a realization of the conditional means , and for the same parameters.

Recall that and as well as for any are deterministic. In the upper plot of Figure 6.1 one sees that for any fixed , the value of as well as the value of for any is less or equal than the value of . This is due to Lemma 2.4. For the -investors one sees that the updates at information dates lead to a decrease in the conditional variance. As the number increases, the conditional variances approach for any . This is due to what has been shown in Theorem 3.2.

Note that for going to infinity, and approach a finite value. Convergence has been proven in Proposition 4.6 of Gabih et al. [11] for markets with stock and generalized in Theorem 4.1 of Sass et al. [23] for markets with an arbitrary number of stocks. For we observe a periodic behavior with asymptotic upper and lower bounds in the limit. This has been studied in detail in Sass et al. [23, Sec. 4.2].

In the lower subplot we show a realization of the various conditional means. For the updating steps at information dates are visible. In general, we observe that when increasing the value of , the distance between the paths of and becomes smaller, as shown in Theorem 3.3.

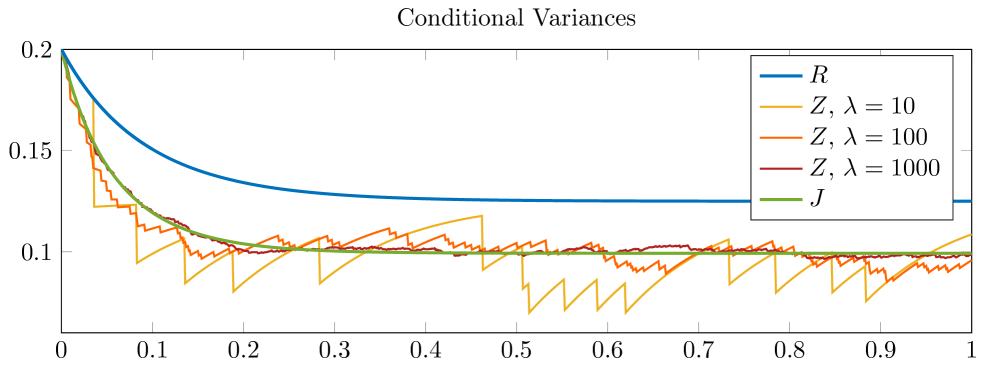

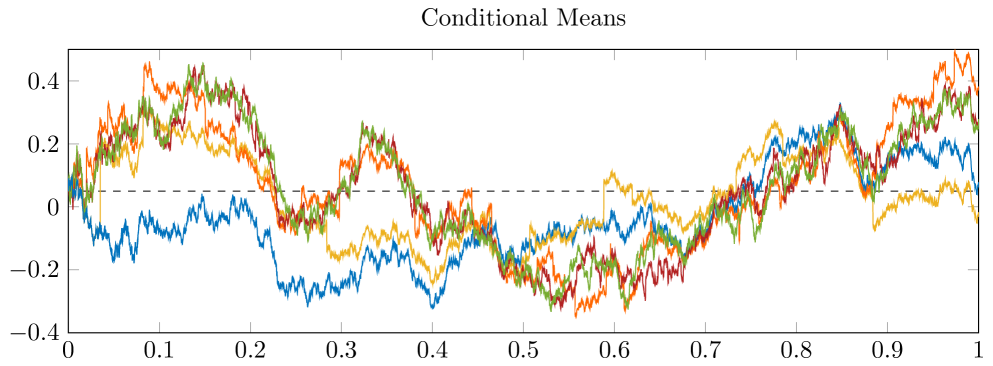

The analogous simulation can be done for the setting of Section 4 with random information dates defined as the jump times of a Poisson process. We again suppose that the model parameters are as given in Table 6.1. Recall that under Assumption 4.1 the expert’s variance is of the form with expert opinions given as in (4.2) via

Figure 6.2 shows, in addition to the filters of the - and -investor, the filters of the -investor for different intensities . Note that the conditional variances of the filter in the case of the -investor behave qualitatively like in the situation with deterministic information dates. The time at which the expert opinions arrive is now random, however. The waiting times between two information dates are exponentially distributed with parameter . As a consequence, the updates for the -investor do not take place as regularly as in Figure 6.1.

The upper plot of Figure 6.2 shows realizations for . In general, by increasing the value of the parameter , one can increase the frequency of information dates, causing convergence of to for any , as shown in Theorem 4.6. In the lower subplot, we see the corresponding realizations of , in addition to and as before. Again, the updates in the conditional mean of the -investor are visible. What is also striking is that, when we consider the -investor with intensity , there are times where the distance between two sequent information dates is rather big. During those times, the conditional mean of the -investor comes closer to the conditional mean of the -investor who does not observe any expert opinion. When the intensity is increased, however, the conditional mean of the -investor approaches the conditional mean of the -investor. For , the conditional means and already behave quite similarly. Note, however, that for fixed information dates is rather close to for already. Convergence of to has been shown in Theorem 4.7. The difference in the speed of convergence when comparing the situation with equidistant information dates to the situation with random information dates is also discussed there.

In the remaining part of this section we want to illustrate the convergence results in the portfolio optimization problem that was introduced in Section 5. Recall that the value function of the -investor has the form

| (6.1) |

i.e. it is an integral functional of the conditional covariance matrices . This leads to convergence of and to when , respectively , goes to infinity, see Corollaries 5.2 and 5.3. In Table 2(a) we list the value functions of the -investor and of the -investor as well as the value function of the -investor in the setting with equidistant information dates for different values of . We assume that investors have initial capital and that the model parameters are again those from Table 6.1. We see that the value functions are increasing in and approach the value for large values of .

Calculating the value function of the -investor in the situation with non-deterministic information dates is a little more involved. This is because the conditional covariance matrices are then also non-deterministic. The value function, see again (6.1), depends on the expectation of for . This value cannot be calculated easily. To determine the value function numerically for the parameters in Table 6.1, we therefore perform for each value of a Monte Carlo simulation with iterations. In each iteration, we generate a sequence of information dates as jump times of a Poisson process with intensity and calculate the corresponding conditional variances . By taking an average of all simulations this leads to a good approximation of . The diffusion approximation is available in closed form, its computation does not require numerical methods. Table 2(b) shows the resulting estimations for and in brackets the corresponding 95% confidence intervals.

The values lie between and , they are increasing in the intensity and for large values of they approach the value . This is in line with Corollary 5.3. We also observe that when setting the intensity equal to the deterministic number . Recall that an intensity means that there are on average information dates in the time interval . The randomness coming from the Poisson process however leads to a lower value function, compared to . This difference is negligible for large intensities.

| 0.3410 | ||

| 0.5245 | ||

| 0.5511 | ||

| 0.5531 | ||

| 0.5533 | ||

| 0.5533 |

| 0.3410 | ||

| 0.5221 (0.5211, 0.5230) | ||

| 0.5499 (0.5496, 0.5502) | ||

| 0.5530 (0.5529, 0.5531) | ||

| 0.5533 (0.5533, 0.5533) | ||

| 0.5533 |

Appendix A Auxiliary Results

In this appendix we give the proof of Proposition 4.5 and collect some auxiliary results that are used in the proofs of our main results.

Proof of Proposition 4.5.

From Lemma 2.2 one directly obtains

and the representation of follows immediately. From Lemma 2.3 recall that between information dates the matrix differential equation for reads

Now we can use Proposition 4.4 to include the updates of at information dates and write

| (A.1) |

for . Note that the integrand is matrix-valued and the integral is defined componentwise. By (A.1) we can write

| (A.2) | ||||

We see that

Therefore, the last integral in (A.2) can be written as

where the second equality follows from Proposition 4.4 and the last equality is due to being a probability density. Plugging back in into (A.2) yields

and the representation of is also proven. ∎

The following lemma can be interpreted as a discrete version of Gronwall’s Lemma for error accumulation. A statement similar to Lemma A.1 can be found in Demailly [8, Sec. 8.2.4].

Lemma A.1.

Let , be real-valued sequences with , , and , real numbers such that

Then for all it holds

where

Proof.

The proof can be done by induction. For the claim is obvious. For the induction step we observe that for all and hence

Due to the induction hypothesis we therefore have

which completes the proof. ∎

The next lemmas are used in the proof of Theorem 3.3. Firstly, the following lemma is a Cauchy–Schwarz inequality for multidimensional integrals.

Lemma A.2.

Let be an -valued stochastic process. Then

Proof.

Firstly, pulling the norm into the integral increases the expression on the left-hand side, so

Now we can apply the usual Cauchy–Schwarz inequality to the one-dimensional integral and get

The last step is due to Fubini. ∎

A key tool for estimations involving stochastic integrals is the Itô isometry. The following lemma uses the isometry to obtain an estimation for multivariate integrals.

Lemma A.3.

Let be an -dimensional Brownian motion. Let be an -valued stochastic process that is independent of , and a stopping time that is bounded by and also independent of . Then

where denotes the Frobenius norm and only depends on the dimension of the integrand .

Proof.

Note that for fixed, deterministic , the integral is a random variable with values in . The -th entry is

Hence,

When applying the expectation, we get due to independence

| (A.3) | ||||

Note that we can consider the filtration where . Since and are independent, is a Brownian motion with respect to . Also, is obviously adapted with respect to . Hence, we can apply the usual Itô isometry and obtain that the right-hand side of (A.3) equals

Now when taking into account the stopping time , we can write

Since is independent of we can deduce from the previous part of the proof that

Equivalence of norms implies the existence of the constant with the property that

which concludes the proof. ∎

Another estimate that is useful in the convergence proofs is given in the following lemma.

Lemma A.4.

Let and let be a symmetric and positive-definite matrix in with for all . Then there exists a constant such that

Proof.

For abbreviation let , . Then we can write

and therefore

Hence, we obtain

where . ∎

The next lemma states Gronwall’s Lemma in integral form which we use in the proofs of Theorems 4.6 and 4.7. A proof can be found for example in Pachpatte [20, Sec. 1.3].

Lemma A.5 (Gronwall).

Let be an interval and let and be continuous functions with

for all . Then

for all .

In Section 4 we work with a Poisson random measure. An important property of the compensated Poisson measure that we use for the proof of Theorem 4.6 is given in the following lemma, see Proposition 2.16 in Cont and Tankov [5].

Lemma A.6.

For an integrable real-valued function , the process with

is a martingale with and

| (A.4) |

Appendix B Proofs for Deterministic Information Dates

B.1 Proof of Theorem 3.2: Convergence of Covariance Matrices

Throughout the proof, we omit the superscript at information dates for the sake of better readability, keeping the dependence on in mind. The proof is based on finding a recursive formula for the distance between and where we make use of an Euler approximation of .

Euler scheme approximation of .

Recall the dynamics of from Lemma 2.2. To shorten notation, let with

denote the right-hand side of the differential equation (2.2). Then (2.2) reads as

The first step is to approximate by an Euler scheme. Therefore, define by setting

| (B.1) |

for all . From a Taylor expansion we get that

where is a matrix-valued function involving the second derivative of . Since and its derivatives are bounded on , see Lemma 2.4, the matrices are bounded, hence the local truncation error is proportional to . In other words, there exists some such that

| (B.2) |

for all .

Estimation of the error in .

Let , and let with , with . Then

Hence,

This implies that there exists a constant such that for all , with and it holds

| (B.3) |

Dynamics of .

Next, we take a look at the dynamics of , i.e. of the covariance matrix corresponding to the investor who observes the stock returns and the opinions of the discrete expert. We know that at information dates , , we have the update formula

Observe that

which can be written as the Neumann series

It follows that

| (B.4) |

where , since is bounded. Between information dates, the matrix follows the dynamics

for .

One time step for .

In the following, we construct a formula that connects with . Firstly, by making a Taylor expansion we see that

where . Now, when inserting the representation of from (B.4) and rearranging terms we can conclude that

| (B.5) |

where is a matrix with for .

Recursive formula for estimation error.

For , define and . Our aim is to find a recursive formula that yields an upper bound for these estimation errors. Let . Then we have by (B.5) that

Thus, by definition of and as given in (B.1),

Now, the estimations from (B.2), (B.3) and (B.5) yield

By a discrete version of Gronwall’s Lemma, see Lemma A.1 in the appendix, this implies

Therefore, for all we have

| (B.6) |

Difference of and for arbitrary .

We now show that there exists some such that for all . Let with . We can write

and hence

By (B.6), the second summand is bounded by . We now take a look at the other two summands. By definition of we can write the third summand as

where the second inequality is due to (B.2). Since and are continuous, the function is bounded by some on . Hence,

For the first summand we observe that, like in (B.5), we get the representation

for some matrix with . Then the right-hand side is bounded by . Also, we have

Putting these results together we obtain that there exists a constant such that

for all . ∎

B.2 Proof of Theorem 3.3: Convergence of Conditional Means

We first prove the claim for . We omit the superscript at information dates for the sake of better readability. The idea of the proof is to find a recursion for

and to apply the discrete version of Gronwall’s Lemma from Lemma A.1 to derive an appropriate upper bound.

For the proof we introduce the notation

for . Then Lemma A.4 in particular implies that

for some constant .

Recursive formulas for and .

The representation of via the stochastic differential equation in Lemma 2.2 yields the recursion

| (B.7) | ||||

where

and , the innovation process corresponding to the investor filtration , is an -dimensional -Brownian motion. Similarly, we get for the conditional mean the recursion

| (B.8) |

where

and , the innovation process corresponding to investor filtration , is an -dimensional -Brownian motion. Furthermore, the update formula for yields

| (B.9) | ||||

When looking at the difference between and it is convenient to work with representations that use the same Brownian motions.

Relation between the innovation processes.

Splitting the difference of and into summands.

Combining (B.10) with the above representation of yields after a slight rearrangement of terms

where

Application of the discrete Gronwall Lemma.

The idea is now to apply the discrete Gronwall Lemma from Lemma A.1 to the estimation

| (B.12) | ||||

In the inequality we have used that , and the fact that can be written as a sum of stochastic integrals over Brownian motions between and . Since is independent of these stochastic integrals, the term vanishes.

Finding upper estimates for the single summands.

We now show how to find upper estimates for the single summands in the decomposition above. First of all,

by properties of the spectral norm and positive definiteness of . By using the multidimensional Itô isometry from Lemma A.3 we deduce

Note that by Theorem 3.2.

Now for the term we use the Cauchy–Schwarz inequality from Lemma A.2 to see that

We then apply the mean value theorem for estimating the integral to see that

The jump size of at an information date is bounded, hence all in all we obtain

for constants , .

For the term we use again the multidimensional Itô isometry from Lemma A.3 and get

For the integral above we first use a mean value theorem argument and then Lemma A.4 for the estimation of to obtain

Putting these estimations together yields the existence of a constant such that

By writing the next summand as one integral, we can again apply the Cauchy–Schwarz inequality from Lemma A.2 and get

When using again the mean value theorem and the same argumentation as before we see that the integral is bounded by

In conclusion, we have a constant with

In a similar way, can be treated. By first writing as a single integral and applying the Cauchy–Schwarz inequality from Lemma A.2 as well as the mean value theorem we get

The expectation above is bounded by

By the same reasons as in the calculations above we obtain all in all that there exist constants and such that

We have now found upper bounds for all quadratic terms in (B.12). Only the mixed terms and remain to be considered. Firstly, we again rewrite as one integral

We see that

By using the mean value theorem and sublinearity of the spectral norm we obtain

The last inequality is due to boundedness of together with the fact that is bounded by a constant times , see Lemma A.4.

The mixed term can be handled in a similar way. It holds that

and hence by another application of the mean value theorem

The absolute value of the expectation is split into two summands as

From the same argumentations as above we deduce that there exist constants and with

Conclusion with discrete Gronwall Lemma.

Now we plug all these upper bounds into (B.12) and obtain that there exist constants such that

Setting in the discrete version of Gronwall’s Lemma, see Lemma A.1, we can conclude that

which proves the claim for . To find an upper bound that is valid for arbitrary time with , we observe that

The first summand is bounded by a constant times which can be seen from the representation in Lemma 2.2. From (B.9) we can deduce the same for the third summand. Hence, all in all there exists a constant such that

which proves the claim of the theorem for .

For proving the claim in the case note that the joint distribution of the conditional means is Gaussian. A classical result, see for example Rosiński and Suchanecki [21, Lem. 2.1], hence yields that there is a constant with

for all . This concludes the proof in the case .∎

Appendix C Proofs for Random Information Dates

C.1 Proof of Theorem 4.6: Convergence of Covariance Matrices

We first consider . Using the representations from Proposition 4.5 we see

Denote the integral with respect to the compensated measure by and the second one by . Now for it holds

| (C.1) |

Estimate for the martingale term .

Every component of the matrix-valued process is a martingale since we integrate a bounded integrand with respect to the compensated measure . In the following, for finding an upper bound for the term involving in (C.1) we first use Doob’s inequality for martingales to get rid of the supremum. In a second step we can calculate the second moment of the integral because we know the corresponding intensity measure of the Poisson random measure. In detail, we proceed as follows. By equivalence of norms there is a constant such that

| (C.2) | ||||

The last inequality follows from Doob’s inequality for martingales. Next, we can apply Lemma A.6 to the definition of and get

using that the integrand does not depend on and is a density. Plugging back into (C.2), we get, again by equivalence of norms,

| (C.3) |

Since the norm of the matrices is bounded by , see Lemma 2.4, we obtain

| (C.4) | ||||

When reinserting this upper bound into (C.3), we can conclude that

| (C.5) |

Estimate for the finite variation term .

Using the short-hand notation for the integrand of we get

| (C.6) |

by the Cauchy–Schwarz inequality in Lemma A.2. We now address the integrand of . Since

we obtain

| (C.7) | ||||

We analyze the second summand in more detail. For that purpose, we decompose

| (C.8) | ||||

and find upper bounds for the three summands. For the first summand we find

| (C.9) | ||||

For the second summand note that is equal to zero since a jump at time occurs with probability zero. For the third summand we observe

| (C.10) | ||||

We now use these upper bounds in (C.8) and obtain

| (C.11) | ||||

Hence we can write

| (C.12) | ||||

Conclusion with Gronwall’s Lemma.

We have found upper bounds for both summands from (C.1). Plugging in yields constants , such that

for all . By Gronwall’s Lemma in integral form, see Lemma A.5, it follows

| (C.13) |

for , which proves the claim for . For we use Lyapunov’s inequality to get

For it holds

due to boundedness of the conditional covariance matrices, see Lemma 2.4.∎

C.2 Proof of Theorem 4.7: Convergence of Conditional Means

Throughout the proof, we omit the superscript at time points and at the Poisson process for better readability.

We first prove the claim for . The proof uses again Gronwall’s Lemma, see Lemma A.5. Define for . The filtering equations from Lemma 2.3 yield

| (C.14) |

where defines the innovations process , an -dimensional -Brownian motion, and where

Note that the matrices are bounded since

The conditional mean can be written as

| (C.15) | ||||

Note that

This yields the representation , where

| (C.16) | ||||

| (C.17) | ||||

| (C.18) | ||||

| (C.19) | ||||

| (C.20) |

Hence we have

and it suffices to find upper bounds for the single summands on the right-hand side.

Estimation of stochastic integrals.

As a preliminary step, we deduce upper bounds for the -th moments of certain stochastic integrals w.r.t. . Let where is a matrix-valued integrand measurable with respect to . Then, conditional on is Gaussian with . By Rosiński and Suchanecki [21, Lem. 2.1] there is a constant such that

The multivariate version of Itô’s isometry from Lemma A.3 yields

By putting these inequalities together we get

| (C.21) | ||||

Estimate for .

By using Hölder’s inequality we have

| (C.22) | ||||

Estimate for .

Estimate for .

For the summand we can argue similarly as for and get

| (C.24) | ||||

Estimate for .

The estimation of is more involved. We can write

where for . Note that the two stochastic integrals do not align. We distinguish different cases by means of the random variable . This leads to the representation of as , where

| (C.25) | ||||

| (C.26) | ||||

| (C.27) |

For the first term due to (C.21) it holds

| (C.28) | ||||

Let and . Then

Hence, we can deduce that there exists a constant with

by means of Lemma A.4. Since is differentiable in with bounded derivative we deduce that . Using the moment generating function of we can show for a constant and all . Using also Theorem 4.6 and plugging back into (C.28) this implies

| (C.29) |

for all , where is a constant. Next, we consider , where (C.21) yields

| (C.30) | ||||

For the last inequality note that using the moment generating function of it can be shown for any that for all and a constant . For the estimation works similarly. By using (C.21) we obtain

| (C.31) | ||||

Combining (C.29), (C.30) and (C.31), for and all it holds

| (C.32) |

Estimate for .

By the same approach as for we find such that for all it holds

| (C.33) |

Conclusion with Gronwall’s Lemma.

References

- [1] A. Aalto, Convergence of discrete-time Kalman filter estimate to continuous time estimate, International Journal of Control 89 (2016), no. 4, pp. 668–679.

- [2] S. Asmussen & H. Albrecher, Ruin Probabilities, vol. 14 of Advanced Series on Statistical Science & Applied Probability, World Scientific (2010).

- [3] F. Black & R. Litterman, Global portfolio optimization, Financial Analysts Journal 48 (1992), no. 5, pp. 28–43.

- [4] S. Brendle, Portfolio selection under incomplete information, Stochastic Processes and their Applications 116 (2006), no. 5, pp. 701–723.

- [5] R. Cont & P. Tankov, Financial Modelling with Jump Processes, Chapman and Hall/CRC (2004).

- [6] F. Coquet, J. Mémin & L. Słominski, On weak convergence of filtrations, Séminaire de probabilités de Strasbourg 35 (2001), pp. 306–328.

- [7] M. H. A. Davis & S. Lleo, Black–Litterman in continuous time: the case for filtering, Quantitative Finance Letters 1 (2013), no. 1, pp. 30–35.

- [8] J.-P. Demailly, Gewöhnliche Differentialgleichungen: Theoretische und Numerische Aspekte, Vieweg+Teubner Verlag (1994).

- [9] R. Frey, A. Gabih & R. Wunderlich, Portfolio optimization under partial information with expert opinions, International Journal of Theoretical and Applied Finance 15 (2012), no. 1. 18 pages.

- [10] R. Frey, A. Gabih & R. Wunderlich, Portfolio optimization under partial information with expert opinions: a dynamic programming approach, Communications on Stochastic Analysis 8 (2014), no. 1, pp. 49–79.

- [11] A. Gabih, H. Kondakji, J. Sass & R. Wunderlich, Expert opinions and logarithmic utility maximization in a market with Gaussian drift, Communications on Stochastic Analysis 8 (2014), no. 1, pp. 27–47.

- [12] A. Gabih, H. Kondakji & R. Wunderlich, Asymptotic filter behavior for high-frequency expert opinions in a market with Gaussian drift (2019). arXiv:1812.03453 [q-fin.MF].

- [13] P. W. Glynn, Diffusion approximations, in Stochastic Models, vol. 2 of Handbooks in Operations Research and Management Science, chap. 4, Elsevier (1990), pp. 145–198.

- [14] J. Grandell, Aspects of Risk Theory, Springer-Verlag New York (1991).

- [15] D. L. Iglehart, Diffusion approximations in collective risk theory, Journal of Applied Probability 6 (1969), no. 2, pp. 285–292.

- [16] I. Karatzas & X. Zhao, Bayesian Adaptive Portfolio Optimization, chap. 17, Handbooks in Mathematical Finance: Option Pricing, Interest Rates and Risk Management, Cambridge University Press (2001), pp. 632–669.

- [17] H. Kondakji, Optimale Portfolios für partiell informierte Investoren in einem Finanzmarkt mit Gaußscher Drift und Expertenmeinungen, Ph.D. thesis, Brandenburg University of Technology Cottbus-Senftenberg (2019). Available at https://opus4.kobv.de/opus4-btu/frontdoor/deliver/index/docId/4736/file/Kondakji_Hakam.pdf.

- [18] V. Kuc̆era, A review of the matrix Riccati equation, Kybernetika 9 (1973), no. 1, pp. 42–61.

- [19] R. S. Liptser & A. N. Shiryaev, Statistics of Random Processes I: General Theory, vol. 5 of Applications of Mathematics, Springer-Verlag New York (1974).

- [20] B. G. Pachpatte, Inequalities for Differential and Integral Equations, vol. 197 of Mathematics in Science and Engineering, Academic Press (1997).

- [21] J. Rosiński & Z. Suchanecki, On the space of vector-valued functions integrable with respect to the white noise, Colloquium Mathematicae 43 (1980), no. 1, pp. 183–201.

- [22] M. Salgado, R. Middleton & G. C. Goodwin, Connection between continuous and discrete Riccati equations with applications to Kalman filtering, IEE Proceedings D – Control Theory and Applications 135 (1988), no. 1, pp. 28–34.

- [23] J. Sass, D. Westphal & R. Wunderlich, Expert opinions and logarithmic utility maximization for multivariate stock returns with Gaussian drift, International Journal of Theoretical and Applied Finance 20 (2017), no. 4. 41 pages.

- [24] H. Schmidli, Risk Theory, Springer International Publishing (2017).

- [25] K. Schöttle, R. Werner & R. Zagst, Comparison and robustification of Bayes and Black–Litterman models, Mathematical Methods of Operations Research 71 (2010), no. 3, pp. 453–475.

- [26] S.-D. Wang, T.-S. Kuo & C.-F. Hsu, Trace bounds on the solution of the algebraic matrix Riccati and Lyapunov equation, IEEE Transactions on Automatic Control 31 (1986), no. 7, pp. 654–656.