Diffusion Modeling with Domain-conditioned Prior Guidance for Accelerated MRI and qMRI Reconstruction

Abstract

This study introduces a novel approach for image reconstruction based on a diffusion model conditioned on the native data domain. Our method is applied to multi-coil MRI and quantitative MRI reconstruction, leveraging the domain-conditioned diffusion model within the frequency and parameter domains. The prior MRI physics are used as embeddings in the diffusion model, enforcing data consistency to guide the training and sampling process, characterizing MRI k-space encoding in MRI reconstruction, and leveraging MR signal modeling for qMRI reconstruction. Furthermore, a gradient descent optimization is incorporated into the diffusion steps, enhancing feature learning and improving denoising. The proposed method demonstrates a significant promise, particularly for reconstructing images at high acceleration factors. Notably, it maintains great reconstruction accuracy and efficiency for static and quantitative MRI reconstruction across diverse anatomical structures. Beyond its immediate applications, this method provides potential generalization capability, making it adaptable to inverse problems across various domains.

Diffusion Model, DDPM, MRI, Quantitative MRI, Reconstruction

1 Introduction

Magnetic resonance imaging (MRI) stands as an indispensable, non-invasive imaging tool, pivotal in both medical diagnosis and clinical research. Though MRI delivers unparalleled diagnostic value, its imaging time is lengthy compared to other imaging modalities, limiting its patient throughput. This limitation has galvanized innovations to accelerate the MRI process, all with the common goal of drastically reducing scan time without compromising image quality[1, 2]. Recently, deep learning has shown great potential in addressing this issue. Numerous techniques have been introduced that enhance the performance of optimization algorithms using finely tuned sophisticated neural networks, achieving excellent results[3]. A substantial portion of these state of the art methods utilize conditional models, adeptly converting undersampled data inputs into outputs accurately mirroring the fully-sampled data acquisitions [4, 5, 6, 7, 8, 9, 10, 11].

Quantitative magnetic resonance imaging (qMRI) provides quantitative measures of the physical parameters of tissues, providing additional information regarding its microstructural environment. This is typically accomplished by modeling the acquired MR signal and extracting the parameter of interest. To sufficiently characterize the signal model requires multiple acquisitions, making it both time-consuming and costly, even with well-established acceleration methods. For example, quantifying the spin-lattice relaxation time () using the variable flip angle (vFA) model[12, 13] requires acquisitions at multiple flip angles, leading to impractical scan times for clinical settings. Recent advances in deep learning have enabled innovative solutions to accelerate qMRI. Methods such as MANTIS[14, 15], RELAX[16], MoDL-QSM[17], and DOPAMINE[18] have utilized supervised or self-supervised learning to enable rapid MR parameter mapping using undersampled k-space data.

The desire for more robust and efficient techniques in both MRI and qMRI has led to the development of innovative approaches, among which diffusion models[19, 20, 21, 22, 23] have recently shown to be promising. A notable advancement in this area is the emergence of Denoising Diffusion Probabilistic Models (DDPMs)[24, 25, 26]. These models represent a new class of generative models that have achieved high sample quality without the need of adversarial training. DDPMs have quickly gained interest in MRI reconstruction due to its robustness, especially under distribution shifts. A few studies have explored the concept of DDPM-based MRI reconstructions[23, 21]. In these methods, DDPMs are trained to generate noisy MRI images, and reconstruction is achieved by iteratively learning to denoise at each diffusion step. This can be implemented through either unconditional or conditional DDPMs. However, to the best of our knowledge, no studies have investigated diffusion models for qMRI.

While diffusion methods have shown promising results, they are not without challenges. Some limitations include the omission of physical constraints during training[27], lack of compromise solution and optimal efficiency due to the sampling being initiated from a random noise image [28], and reliance on fully-sampled images for training[29]. In particular, DDPMs for MRI reconstruction generally starts in the image domain, where the unknown data distribution from training images go through repeated Gaussian diffusion processes which can be reversed by learned transition kernels. Applying the diffusion process in the image domain overlooks the underlying MRI physical model (i.e. k-space encoding) which is embedded in the measured k-space data. It can underperform at large distribution shifts, due to changes in scan parameters or difference in anatomy between training and testing. Since the raw MRI measurement is acquired in the frequency domain (i.e. k-space), it can be beneficial to directly apply the diffusion process in the frequency domain rather than image domain. Likewise, since qMRI focuses on the quantification of tissue parameters such as and proton density, it can also be advantageous to define the diffusion model conditioned on the parameter domain for qMRI.

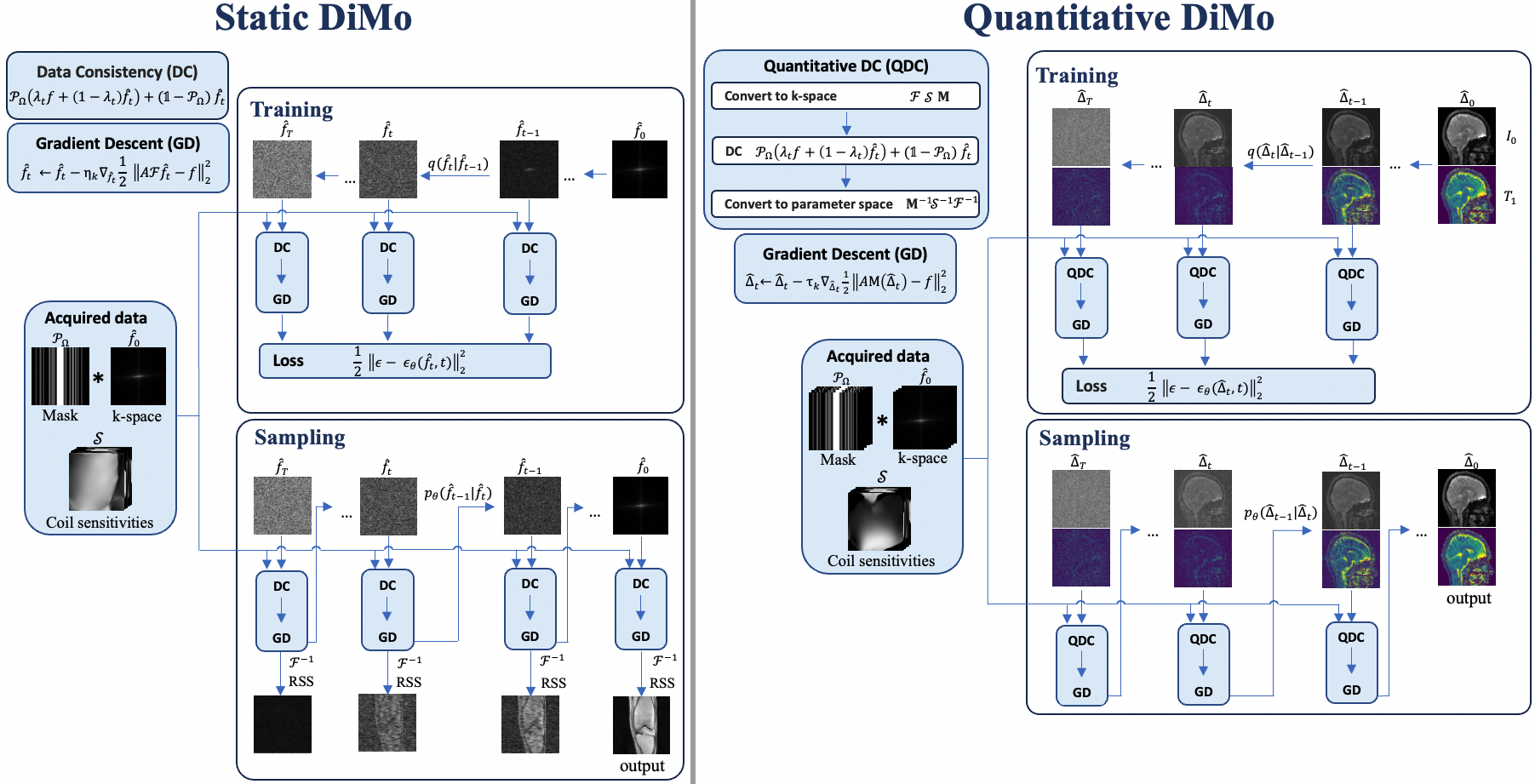

In this paper, we propose a novel and unified method that applies domain-conditioned diffusion models to accelerated static MRI and qMRI reconstruction, which we denote as Static Diffusion Modeling (DiMo) and Quantitative DiMo, respectively. The conditional diffusion process is defined in k-space for Static DiMo and in parameter space for Quantitative DiMo.

Three points that distinguish our method from previous works are: (1) The forward (diffusion) process and reverse (sampling) process are defined on the native data domain rather than image domain. This model is applied to multicoil static MRI and quantitative MRI reconstruction, which reflects domain-specific adaptation. (2) Prior physics knowledge is embedded in the diffusion process as a data consistency component for characterizing MRI k-space encoding and MR signal modeling for MRI and qMRI reconstruction. Gradient descent is also integrated in the diffusion steps to augment feature learning and promote denoising. (3) The proposed method preserves high reconstruction accuracy and efficiency under large undersampling rates for both static MRI and quantitative MRI reconstruction of different anatomies.

The rest of the paper is organized as follows: Section II gives the methods for Static DiMo and Quantitative DiMo. Section III describes experiment settings. Section IV presents experiment results. Section V discusses the limitation of the method and concludes the paper.

2 Methods

2.1 DDPM

DDPMs are generative models which are highly effective in learning complex data distributions. The forward diffusion process adds noise to the input data, gradually increasing the noise level until the data is transformed into pure Gaussian noise. This process systematically perturbs the structure of the data distribution. The reverse diffusion process, also known as the denoising process, is then applied to recover the original structure of the data from the perturbed data distribution.

Forward Process DDPM[24] presents the forward diffusion mechanism as a Markov Chain, wherein Gaussian noise is incrementally introduced across several steps to yield a collection of perturbed samples. Consider the uncorrupted data distribution that is characterized by density , which undergoes incremental transformations through the addition of Gaussian noise at various stages, resulting in a spectrum of modified samples. If we draw a data sample from , the forward diffusion process modifies this data point through integration of Gaussian perturbations at each time step which can be mathematically represented as

| (1a) | |||

| (1b) | |||

Here, is the total number of diffusion steps, while the noise scaling sequence defines the magnitude of variance introduced at each step. For isotropic Gaussian noise , at each time step,

| (2) |

With a large number of forward iterations, converges to an isotropic Gaussian sample, so we have . Let and , then given the initial sampled data and sampling step , we can obtain

| (3) |

Reverse Process The reverse process aims to retrieve by stripping away the noise from . Specifically, starting with a noise vector , we iteratively sample from the learnable transition kernel until through a learnable Markov chain in the reverse time direction.

| (4a) | |||

| (4b) | |||

The mean is parametrized by deep neural networks and . The objective during training is to minimize the discrepancy between the true data distribution and the estimated distribution, which can be achieved by minimizing the variational bound on log likelihood:

| (5) |

Ho et al.[24] proposed using deep neural networks to estimate added noise and perform parameterization on :

| (6) |

Accordingly, the loss function simplifies to

| (7) |

2.2 Static DiMo: Accelerated MRI reconstruction

Accelerated MRI considers the following measurement model

| (8) |

where is the MR image of interest consisting of n pixels, is its corresponding undersampled k-space measurement, and is measurement noise. The parameterized forward measurement matrix is defined as

| (9) |

where are the coil sensitivity maps for different coils, is the 2D discrete Fourier transform, and is the binary undersampling mask corresponding to sampled data points using undersampling pattern . The classical approach to solve for using undersampled k-space is to solve the following optimization problem:

| (10) |

The training of Static DiMo starts with the input of fully sampled k-space data, which we denote as . We can write , where is the inverse discrete Fourier transform. The task of Static DiMo is to minimize , thus we can rewrite equation (10) as:

| (11) |

The minimizer can be solved by applying the gradient decent algorithm, which is straightforward to compute:

| (12) |

Typical diffusion models aim to estimate . Since Static DiMo is defined in the frequency domain and , the problem can be interpreted as generating samples of which is conditioned on undersampling mask , scanned measurement and coil sensitivity maps .

According to DDPM[24], the diffusion process can be represented with a noise schedule as:

| (13a) | |||

| (13b) | |||

Let . We can track the forward process conditioned on the initial:

| (14a) | ||||

| (14b) | ||||

| (14c) | ||||

| (14d) | ||||

We choose so that , then we obtain

| (15) |

which approaches an isotropic Gaussian distribution.

Sample , the diffusion model has the following form:

| (16) |

The sampling of is the reverse diffusion process.

| (17a) | |||

| (17b) | |||

The training of is to optimize the variational bound of log likelihood in (5), and this can break down into optimizing random terms of with stochastic gradient descent. Now we analyse using (14b) and (17b), we can write

| (18a) | ||||

| (18b) | ||||

where is constant that independent from . From (3) we get , then apply the forward posterior formula (14d), we have

| (19) | ||||

We can derive that should be parametrized to predict , so we can put

| (20) |

where . Then the simplified loss function is

| (21) |

In equation (21), we can interpret it as being a function that is dependent on the components of . Then we can write our simplified loss function as

| (22a) | ||||

| (22b) | ||||

Now we can derive the function so that it can provide a prior guidance for the diffusion during training and sampling. The diffusion process starts with , we have by (3). An intuitive way of designing the function is to incorporate a data consistency (DC) layer which perturbs the k-space data with a linear combination of partially scanned data . Then we can further fine-tune the DC layer using gradient descent (GD) as described in (12). The proposed algorithm synergizes DDPM, MRI data consistency enforcement and gradient descent optimization, which is listed in Alg. 1:

In step 2, is a scheduling factor to guide the data consistency dynamics, which follows the exponential function to schedule the perturbation dynamics:

| (23) |

Prior knowledge of scanned signal decreases exponentially as grows. In doing this, the schedule parameter can balance network training and stabilize parameter learning. denotes the matrix with values of one and is the learnable step size for gradient descent.

Sampling leverages the Langevin dynamics with as learned gradient of data density which provide the local information of the data distribution. Here we compute where . Alg. 2 shows the sampling process which is the reverse of the diffusion process.

2.3 Quantitative DiMo: Accelerated qMRI reconstruction.

To extend from static MRI reconstruction to qMRI reconstruction, we first define the MR parameter maps as where symbolizes each MR parameter and is the total number of MR parameters to be estimated. Given the MR signal model , which is a function of , the MR parameters can be estimated by minimizing the following:

| (24) |

Quantitative DiMo is defined in the parameter domain, therefore we focus on estimating with an additional condition, the MR signal model . Since the diffusion processe and reverse process are highly similar to Static DiMo, we omit the derivation of Quantitative DiMo.

In contrast to the algorithm design of Static DiMo, a major difference is the function . Following the same framework as Static DiMo, Quantitative DiMo starts with equation (3), which is then input to the quantitative DC (QDC) layer, followed by fine-tuning with gradient descent. The Alg. 3 displays the training process of Quantitative DiMo and Alg. 4 shows the sampling process:

QDC layer is presented in steps 2-4 in the above algorithm. The addition of the QDC step is to perturb quantitative parameters and guide the parameter space denoising and generation process. Steps 5-7 further optimize the perturbed through iterating steps of gradient descent. Alg. 4 displays the sampling process which is the reverse of the diffusion process.

To demonstrate the feasibility of Quantitative DiMo for reconstructing accelerated qMRI, we used mapping using the vFA model [13] as an example. For MR images are acquired at multiple flip angles , where , the MR signal model reads as:

| (25) |

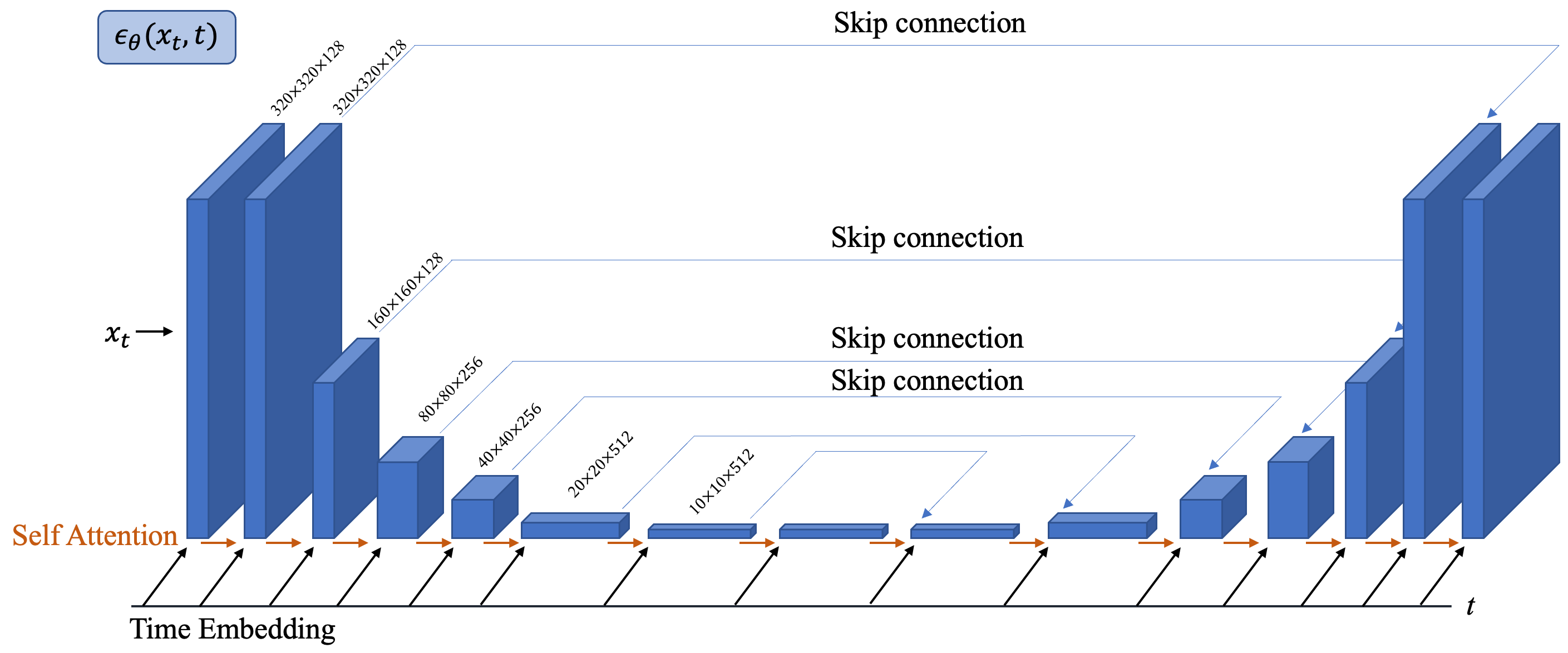

where denotes the spin-lattice relaxation time map and is proton density map. In this model, the MR parameters estimated are encapsulated in the set . Other imaging parameters such as flip angles and repetition time TR are known. Fig. 1 shows the framework of Static DiMo and Quantitative DiMo and Fig. 2 illustrates the U-Net architecture for .

3 Experiments

3.1 Experiments for Static DiMo

We acquired knee data from 25 subjects, using 20 for training and 5 for testing. These fully-sampled data were obtained from a 3T GE Premier scanner equipped with an 18-element knee coil array. The data was acquired using two-dimensional fast spin-echo (FSE) sequences along the coronal plane, with both proton density-weighting (PD-weighting) and -weighting. All images were resized resulting in in-plane matrix size of 320 320 with 3mm slice thickness. Number of slices varied from 30 to 38 in each dataset, amounting to 875 total slices for both training and testing. The experiments were conducted retrospectively using three different acceleration factors: AFs = 4, 5 and 6 using 1D variable density Cartesian undersampling masks[30] where the 20 central k-space lines were fully sampled. We evaluated the performance of the proposed method against several state-of-the-art methods which include Variational Network (VN)[6], ISTA-NET[31], and pMRI-Net[32] over two contrasts: PD- and -weighting. Results were compared in terms of peak signal to noise ratio (PSNR), structure similarity (SSIM) and normalized mean squared error (NMSE). The equations for assessing PSNR, SSIM, and NMSE between reconstruction and the ground truth are:

| (26) |

| (27) |

| (28) |

where represent the local mean of pixel intensities with standard deviations for images , respectively. denotes the covariance between and , and are two constant variables introduced to stabilize the division. We chose the total number of sampling steps as using a linear schedule from to with training batch size of 4. The total number of epochs used was 7000. The initial parameter for Alg.1 was chosen to be .

3.2 Experiments for Quantitative DiMo

Quantitative DiMo experiments were carried out using in-vivo brain data of healthy volunteers, obtained from a Siemens 3T Prisma scanner equipped with a 20-channel head coil. The five subject fully sampled vFA brain data was acquired along the sagittal plane using a spoiled gradient echo sequence with imaging parameters TE/TR = ms, FA = , in-plane matrix size = with 3mm slice thickness and a total number of 48 slices per subject. Leave-One-Out cross-validation was used for all five subjects to train and test our method.

Two undersampling schemes were retrospectively used: (1) 1D variable density Cartesian undersampling[30] with acceleration factor AF= , where the 16 central k-space lines were fully sampled and (2) 2D Poisson disk undersampling at AF= with the central 51x51 k-space portion fully sampled. The undersampling patterns were varied for each flip angle, like in previous studies[14, 16]. We compared Quantitative DiMo with two advanced non-deep learning qMRI reconstruction techniques Locally Low Rank (LLR)[33], Model-TGV[34], and a self-supervised deep learning method RELAX[16]. For LLR and Model-TGV, settings recommended in their original research papers were used along with available code. RELAX and Quantitative DiMo were trained through cross-validation. For the diffusion hyperparameters, we maintained the total number of sampling steps at using linear scheduling from to . The training batch size used was 8 with 5500 total epochs. The initial parameter for gradient descent in Alg. 3 was set to

All in-vivo studies were carried out under a protocol approved by our institution’s institutional review board. Another experiment condition was as follows: The coil sensitivity maps were estimated from ESPIRiT[35]. Separate maps were acquired[36] to correct for bias in estimating . All the programming in this study was implemented using Python language and PyTorch package, and experiments were conducted on one NVIDIA A100 80GB GPU and an Intel Xeon 6338 CPU at Centos Linux system.

4 Results

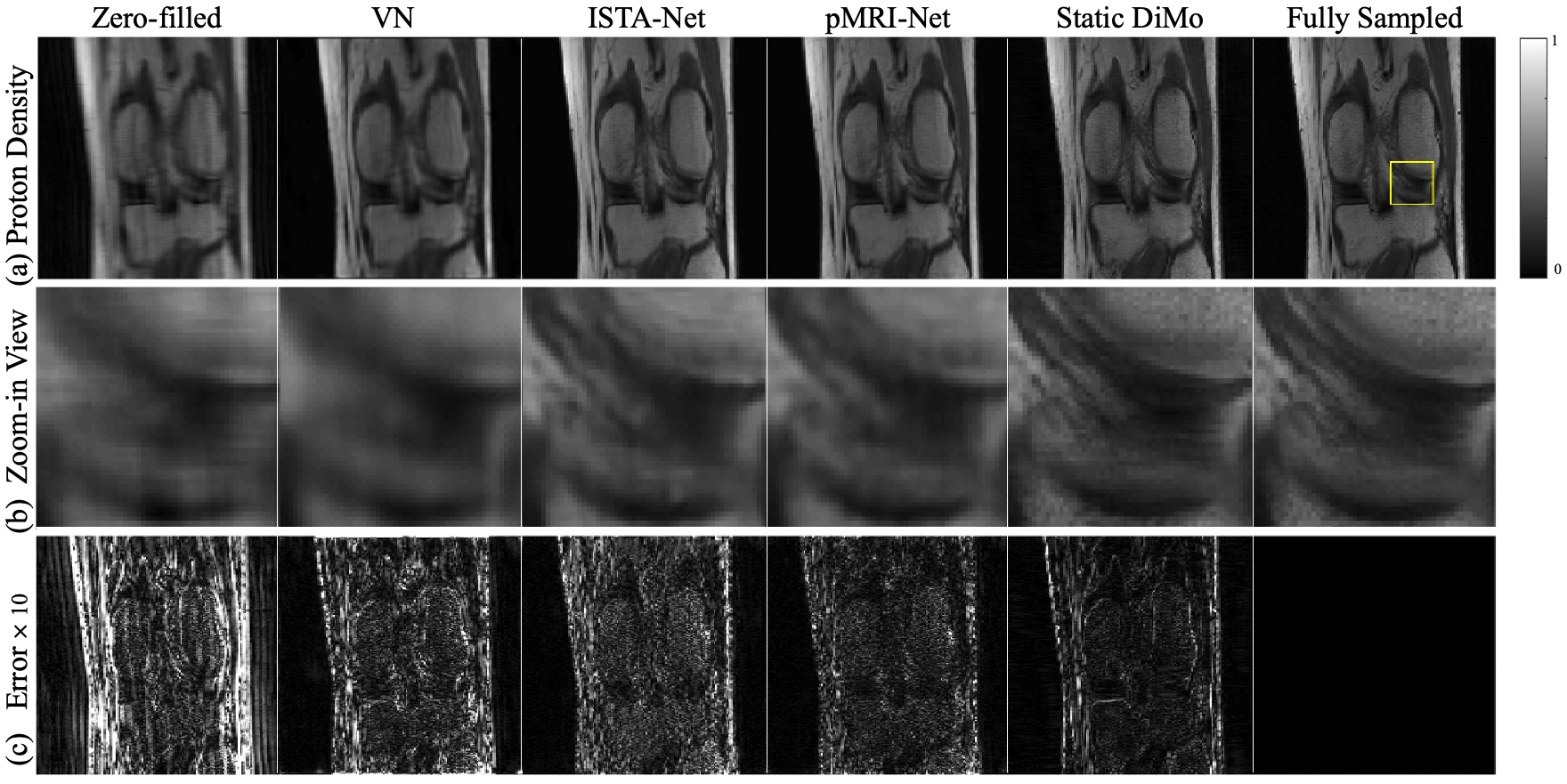

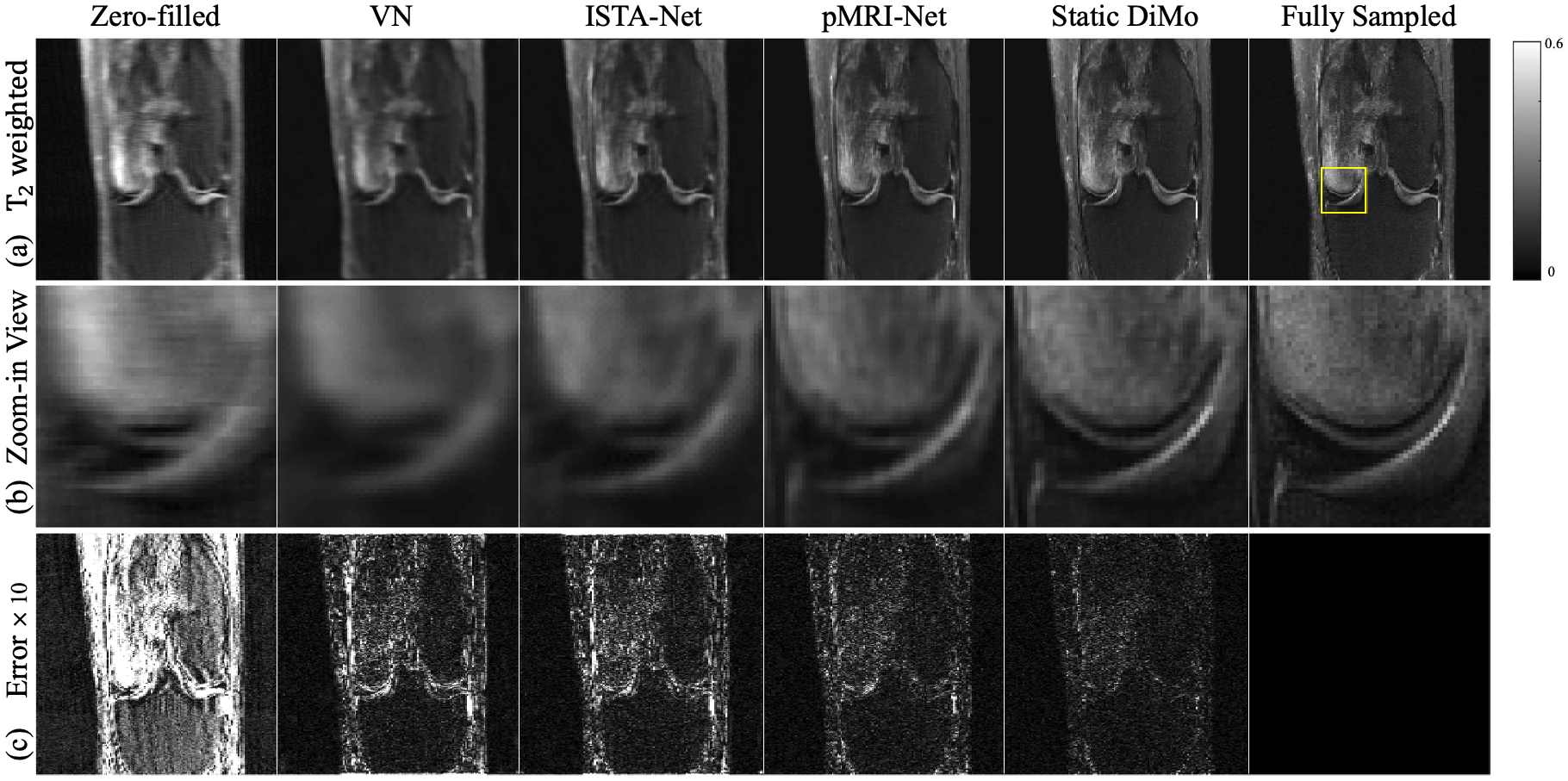

4.1 Results of Static DiMo

An example of reconstruction results obtained from different methods at AF = are shown for PD- (Fig. 3) and T2-weighted (Fig. 4) images along the coronal plane are displayed. Zero-filled results show significant blurring and artifacts due to undersampling. Although VN, ISTA-Net, and pMRI-Net are able to remove these artifacts, compared with the fully sampled reference images, blurring characteristic persists with VN showing the most, while ISTA-Net and pMRI-Net are comparable. Static DiMo’s reconstruction not only removes the undersampling artifacts, but also significantly suppresses blurring showing clarity and sharpness, thus outperforming both ISTA-Net and pMRI-Net. Furthermore, Static DiMo shows superior denoising capability, rooted in its denoising diffusion modeling. This not only proficiently removes noise and artifacts, but also retains the integrity of high-frequency image detail. This is further illustrated in the zoomed-in images of Fig. 3(b) and Fig. 4(b). Static DiMo clearly distinguishes tissue boundaries across various tissue types, including the cartilage, meniscus, bone and muscle. In addition, the fidelity of tissue texture and sharpness are preserved. The pixel-wise error maps shown in Fig. 3(c) and Fig. 4(c) also demonstrate that Static DiMo produces the least reconstruction errors with respect to the fully sampled reference. For both contrasts, Static DiMo consistently outperforms the other methods. Overall, in agreement with the qualitative observation in Fig. 3 and Fig. 4, reconstruction results are summarized in Table 1 for all testing subjects using quantitative metrics, showing the superior performance of Static DiMo over other methods in reconstruction fidelity, structure and texture preservation and noise suppression.

| PD-weighting1 | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| AF | 4x | 5x | 6x | ||||||

| Methods | PSNR | SSIM | NMSE | PSNR | SSIM | NMSE | PSNR | SSIM | NMSE |

| VN [6] | 30.5932 0.9312 | 0.9016 0.0390 | 0.1374 0.0203 | 29.3312 1.1914 | 0.8868 0.0482 | 0.1467 0.0269 | 27.5036 1.2453 | 0.8503 0.0594 | 0.1788 0.0301 |

| ISTA-Net[31] | 32.3933 1.1124 | 0.9640 0.0260 | 0.1030 0.0121 | 31.1878 1.2343 | 0.9555 0.0369 | 0.1150 0.0152 | 29.1795 1.2951 | 0.9603 0.0415 | 0.1281 0.0197 |

| pMRI-Net[32] | 33.5534 1.2351 | 0.9695 0.0144 | 0.0937 0.0058 | 32.7408 1.4180 | 0.9667 0.0198 | 0.1003 0.0086 | 30.8576 1.5202 | 0.9560 0.0235 | 0.1178 0.0128 |

| Static DiMo | 35.3733 1.2142 | 0.9704 0.0137 | 0.0738 0.0044 | 34.0121 1.3185 | 0.9691 0.0169 | 0.0839 0.0081 | 32.7645 1.4719 | 0.9597 0.0119 | 0.1084 0.0109 |

| -weighting1 | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| Methods | PSNR | SSIM | NMSE | PSNR | SSIM | NMSE | PSNR | SSIM | NMSE |

| VN [6] | 32.0078 0.5800 | 0.9129 0.0119 | 0.1464 0.0048 | 30.8641 0.6303 | 0.8975 0.0128 | 0.1743 0.0082 | 29.2356 0.7866 | 0.8708 0.0245 | 0.1934 0.0102 |

| ISTA-Net[31] | 34.1552 0.7873 | 0.9628 0.0056 | 0.1142 0.0042 | 32.7243 1.0213 | 0.9472 0.0093 | 0.1382 0.0065 | 30.0678 1.1038 | 0.9155 0.0109 | 0.1864 0.0080 |

| pMRI-Net[32] | 36.3567 1.1123 | 0.9767 0.0045 | 0.0867 0.0032 | 35.5938 1.1256 | 0.9728 0.0078 | 0.0942 0.0045 | 34.1399 1.1321 | 0.9646 0.0087 | 0.1108 0.0059 |

| Static DiMo | 37.6438 1.0343 | 0.9817 0.0039 | 0.0776 0.0025 | 36.2274 1.1227 | 0.9812 0.0071 | 0.0793 0.0049 | 35.1207 1.1348 | 0.9708 0.0079 | 0.0983 0.0056 |

1 Data are presented as mean std.

4.2 Results of Quantitative DiMo

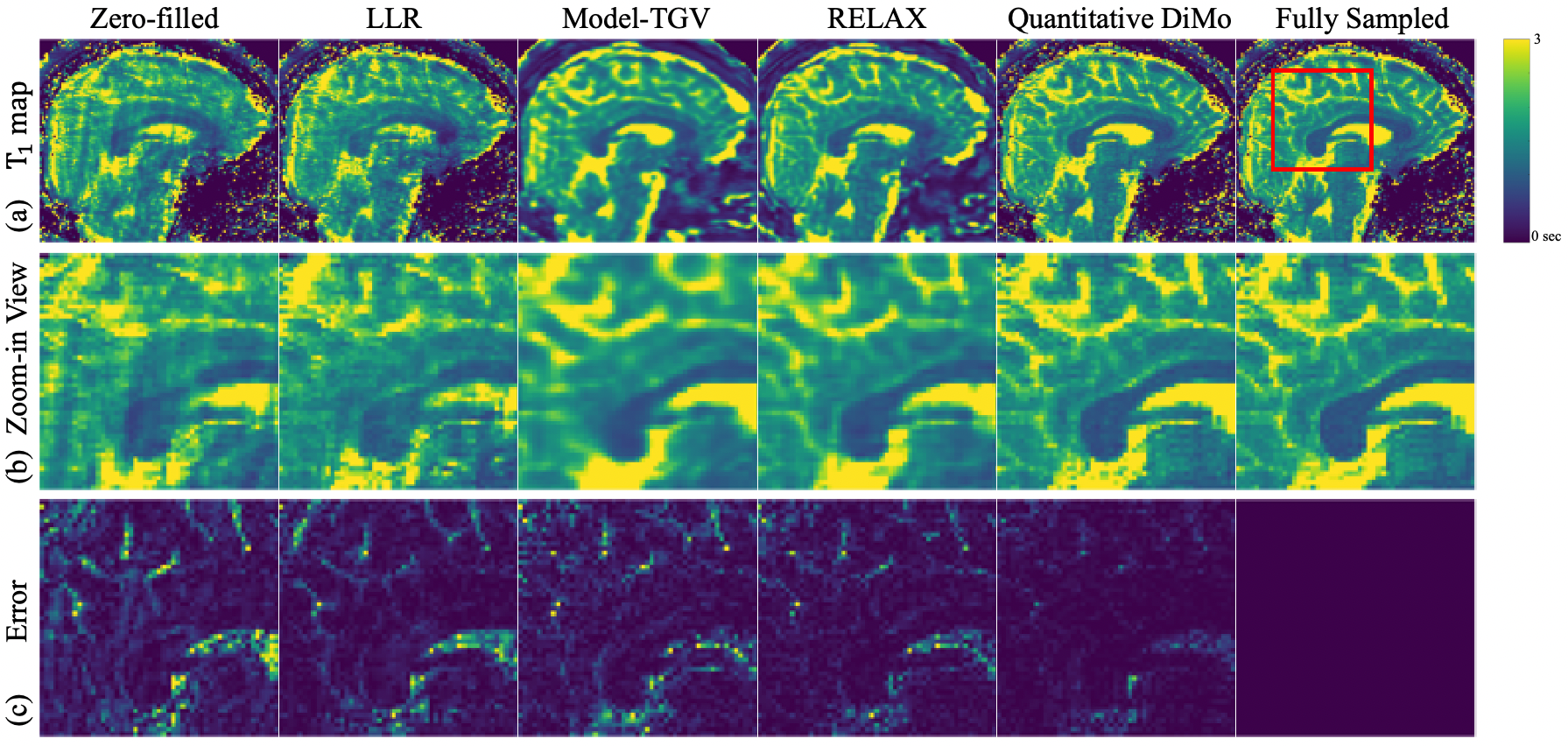

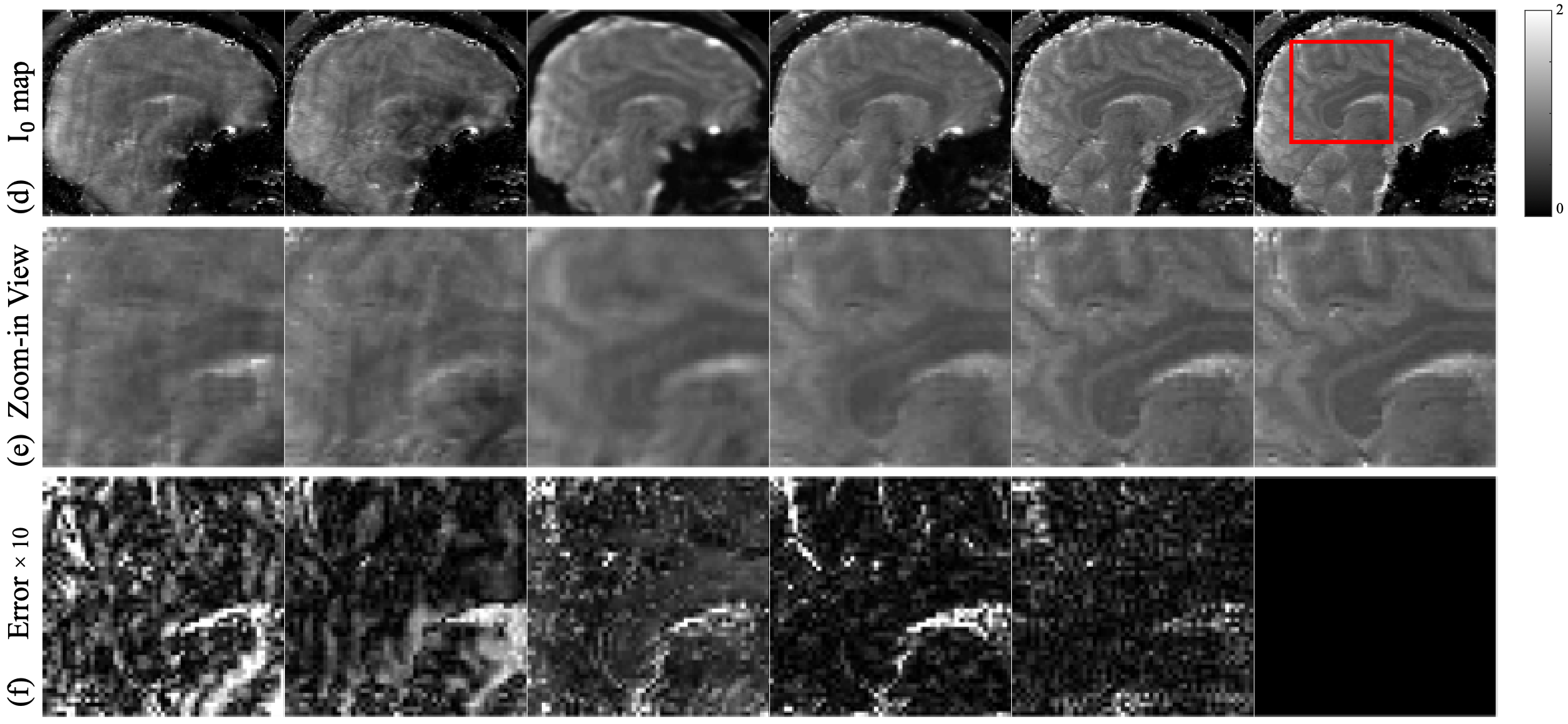

and maps estimated from 4 1D variable density Cartesian undersampling using various methods are shown in Fig. 5 The zero-filled map derived from pixel-wise fitting the undersampled images (Fig. 5(a)) exhibits noise and ripple artifacts due to aliasing. While LLR manages to mitigate these artifacts to an extent, the noisy signature persists. In its attempt to neutralize the noise, Model-TGV yields a more refined tissue appearance with enhanced contrast. However, the resulting maps are overly smooth, appearing blurry. Conversely, RELAX effectively suppresses both noise and artifacts, delivering sharper maps, albeit with a slightly blocky texture. This blockiness is speculated to arise from challenges in achieving convergence in the end-to-end network using limited data. Meanwhile, Quantitative DiMo generates clear and sharp maps. Its proficiency in removing noise translates to its superior map quality, both in terms of appearance and contrast. This is further witnessed in the zoom-ins (Fig. 5 (b)) which show that Quantitative DiMo clearly distinguishes the boundary between white matter and grey matter, appearing nearly identical to the fully sampled reference map. This is quantitatively confirmed in the error maps (Fig. 5(c)) where the zero-filled incurs the largest error, followed by LLR, Model-TGV, and RELAX.

Mean values obtained from representative white matter and grey matter regions are presented in Table 2 for all subjects. Overall, LLR shows least agreement with respect to the fully sampled reference method, followed by Model-GTV. On the other hand, both RELAX and Quantitative DiMo show good agreement. However, as shown by the Wilcoxon signed rank test results, Quantitative DiMo shows better correlation with the reference than RELAX.

| Region-of-interest | LLR | Model-TGV | RELAX | Quantitative DiMo | Fully Sampled |

|---|---|---|---|---|---|

| White matter region | |||||

| Corpus Callosum | |||||

| Frontal white matter | |||||

| Grey matter region | |||||

| Putamen | |||||

| Thalamus |

1 Data are presented as mean std. vs. fully sampled using Wilcoxon signed rank test.

The maps in Fig 5(d-f) exhibit a similar signature in reconstruction quality as the maps, where LLR and Model-TGV show aliasing artifacts with the most blur, further confirmed by the zoom-ins, reflecting the undersampling pattern of many high frequency data points being undersampled with low frequency data points fully sampled. The deep learning method RELAX shows its ability to denoise through artifacts removal and detail preservation. However, it still lacks sharpness resulting in smoothened edges. Quantitative DiMo produces the sharpest maps preserving detail, which again is confirmed by its error maps, showing least error.

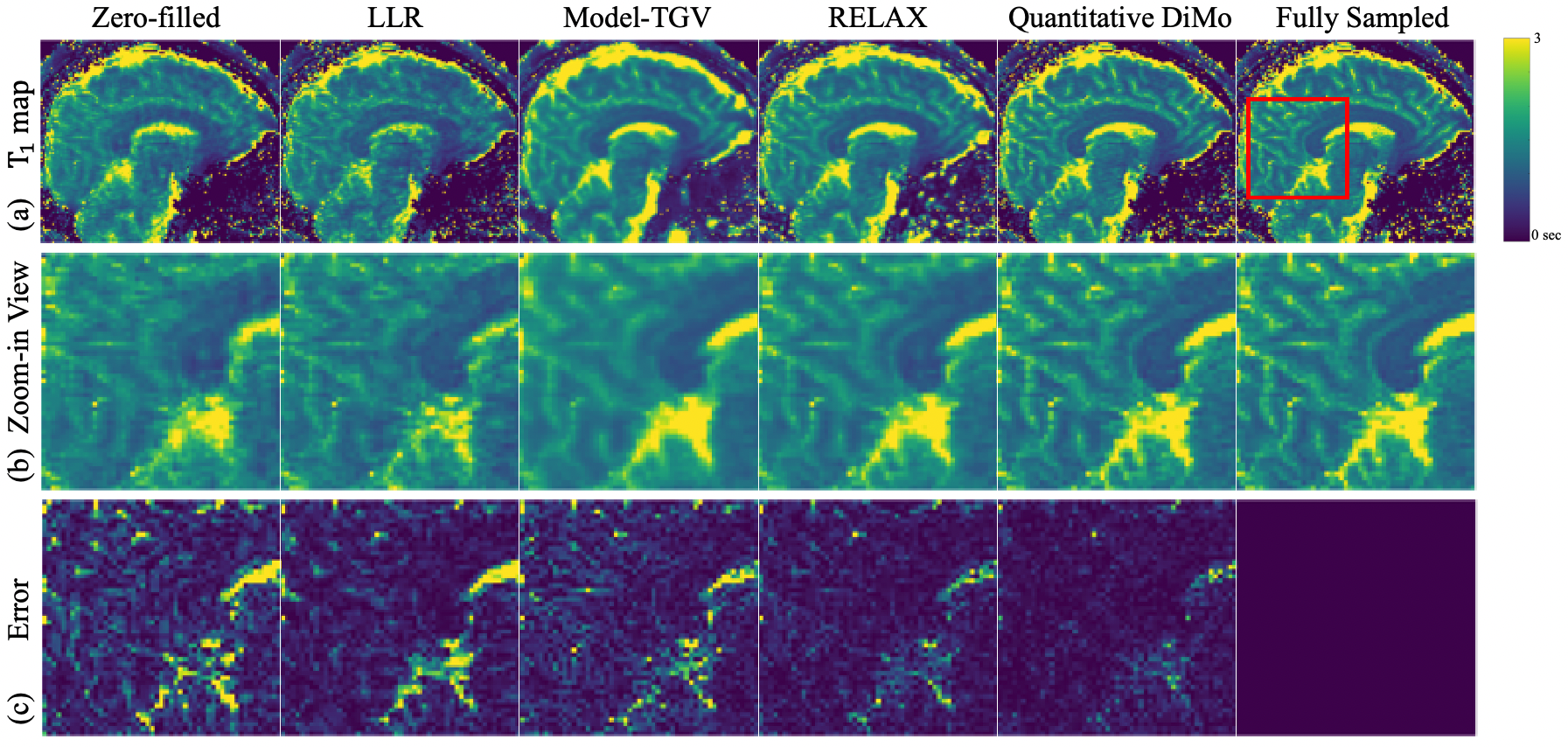

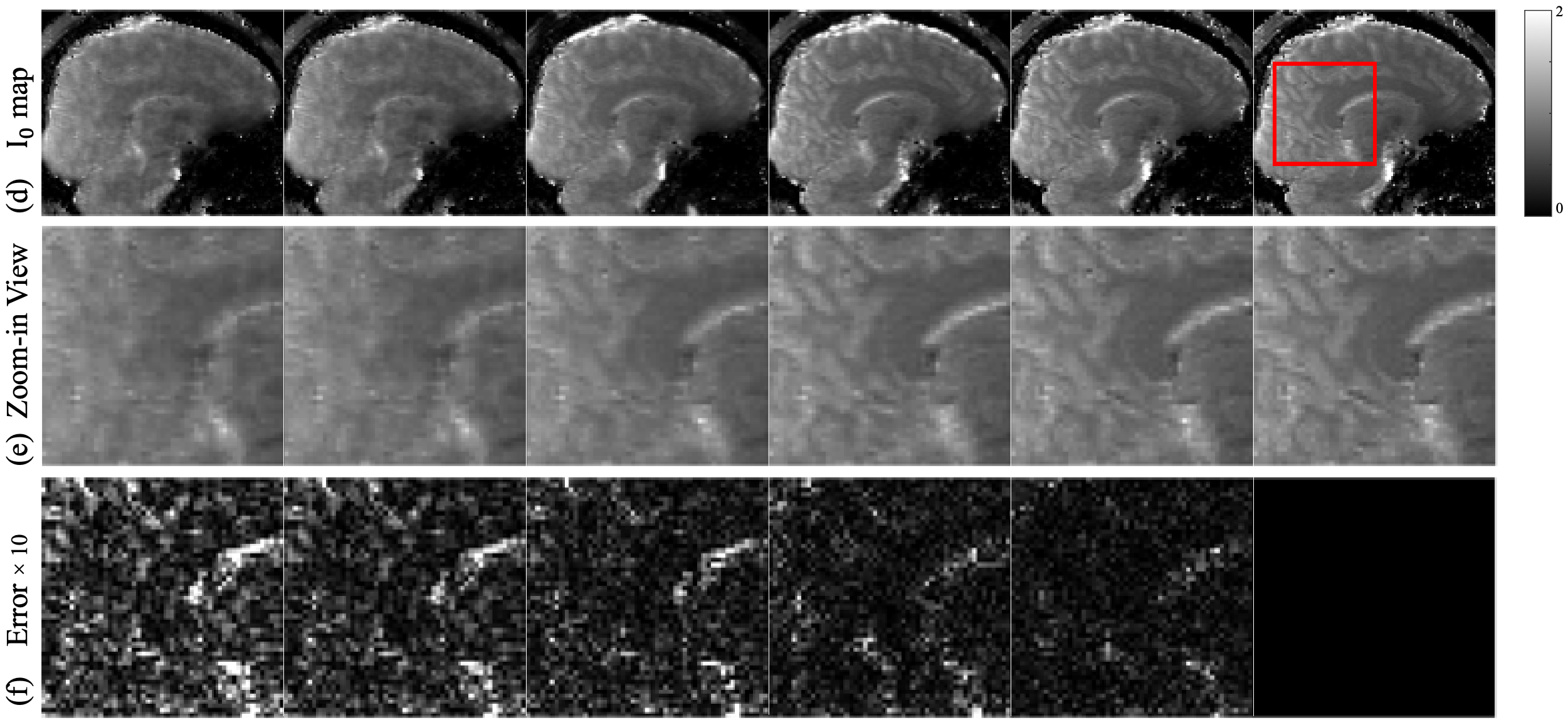

The and maps estimated from 4 2D Poisson disk undersampling are shown in Fig. 6. Examining Fig. 6(a) and Fig. 6(d), it is apparent that the main differences compared to the 4x 1D undersampling case are the different artifact patterns, which in turn stems from the different undersampling patterns. As seen in the zoom-in views of the (Fig. 6(b)) and (Fig. 6(e)) maps, compared to Fig. 5, LLR provides sharper maps details but still retain artifacts and noise, whereas Model-TGV preserves detail but exhibits blurred edges due to its general averaging of noise. RELAX captures sharp structures but is not able to attain details in some fine regions. Quantitative DiMo produces artifact free and maps with superior performance compared to other methods. This is again confirmed by the error maps (Fig. 6(c) and Fig. 6(f)), with Quantitative DiMo showing the least error. This is likely achieved through integrating the unrolling gradient descent algorithm and diffusion denoising network, prioritizing noise suppression without compromising the fidelity and clarity of the underlying tissue structure.

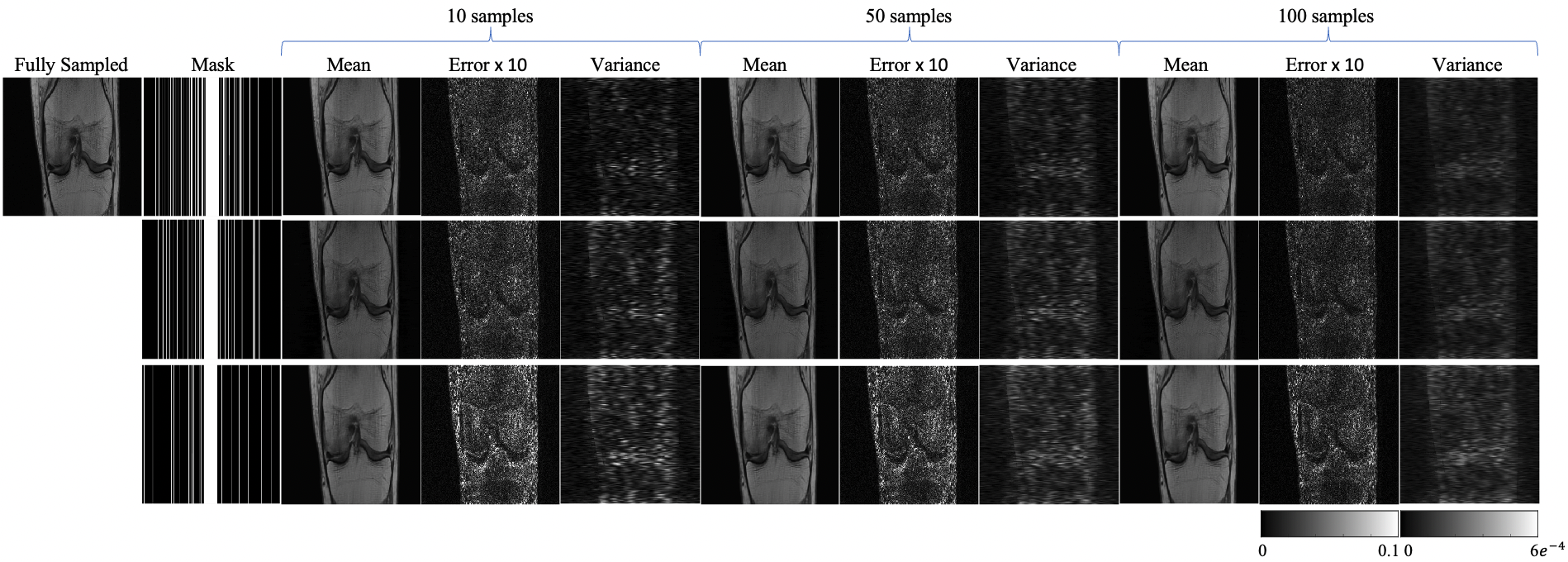

4.3 Ablation Study

An ablation study investigating model uncertainty was conducted by sampling the well-trained Static DiMo on PD data 100 times. From these 100 samples, we derived mean images, error maps, and variance maps. We then compared three distinct acceleration factors (4, 5, 6) using 1D Cartesian masks, shown in Fig.7. When compared with fully sampled images, the error and variance maps predominantly capture the edge and boundary details. Notably, higher acceleration factors increases the uncertainty with amplified errors, especially around the tissue interfaces. Incrementally increasing our sampling data counts, starting from 10, increasing to 50, and finally reaching 100, we witnessed a progression in the variance maps. Namely, they began displaying smoother transitions, with the high uncertainty edge regions gradually diminishing. This suggests that augmenting the number of sampling instances can be instrumental in attenuating noise within the mean images.

5 Discussion and Conclusion

While diffusion models, including ours, show impressive abilities on performing the image reconstruction task, there are still some limitations. For instance, diffusion process is computationally intensive, especially those involving complex structures or high-dimensional data, can be computationally expensive, requiring significant time and resources. Also, Diffusion models can be sensitive to the choice of parameters. A slight change in parameters can result in substantially different outcomes, making it crucial to fine-tune them for specific applications. For complex systems or datasets, it might be challenging to derive analytical solutions using diffusion models, necessitating the use of numerical methods that can introduce their own set of issues. Future research is warranted to explore to address these limitations.

To sum up, this paper proposed a diffusion model that is conditioned on native data domain for reconstructing MRI and quantitative MRI. The reconstruction shows promising results comparing to other deep learning methods, which reflects the robustness and efficiency of proposed Static DiMo and Quantitative DiMo.

References

- [1] K. P. Pruessmann, M. Weiger, M. B. Scheidegger, and P. Boesiger, “Sense: Sensitivity encoding for fast mri,” Magnetic Resonance in Medicine, vol. 42, no. 5, pp. 952–962, 1999.

- [2] M. A. Griswold, P. M. Jakob, R. M. Heidemann, M. Nittka, V. Jellus, J. Wang, B. Kiefer, and A. Haase, “Generalized autocalibrating partially parallel acquisitions (grappa),” Magnetic Resonance in Medicine, vol. 47, no. 6, pp. 1202–1210, 2002.

- [3] D. Liang, J. Cheng, Z. Ke, and L. Ying, “Deep magnetic resonance image reconstruction: Inverse problems meet neural networks,” IEEE Signal Processing Magazine, vol. 37, no. 1, pp. 141–151, 2020.

- [4] Y. Yang, J. Sun, H. Li, and Z. Xu, “Deep admm-net for compressive sensing mri,” in Advances in Neural Information Processing Systems, vol. 29. Curran Associates, Inc., 2016.

- [5] J. Schlemper, J. Caballero, J. V. Hajnal, A. Price, and D. Rueckert, “A deep cascade of convolutional neural networks for mr image reconstruction,” in Information Processing in Medical Imaging: 25th International Conference, IPMI 2017, Boone, NC, USA, June 25-30, 2017, Proceedings 25. Springer, 2017, pp. 647–658.

- [6] K. Hammernik, T. Klatzer, E. Kobler, M. P. Recht, D. K. Sodickson, T. Pock, and F. Knoll, “Learning a variational network for reconstruction of accelerated mri data,” Magnetic resonance in medicine, vol. 79, no. 6, pp. 3055–3071, 2018.

- [7] M. Mardani, E. Gong, J. Y. Cheng, S. S. Vasanawala, G. Zaharchuk, L. Xing, and J. M. Pauly, “Deep generative adversarial neural networks for compressive sensing mri,” IEEE transactions on medical imaging, vol. 38, no. 1, pp. 167–179, 2018.

- [8] B. Zhu, J. Z. Liu, S. F. Cauley, B. R. Rosen, and M. S. Rosen, “Image reconstruction by domain-transform manifold learning,” Nature, vol. 555, no. 7697, pp. 487–492, 2018.

- [9] R. Liu, Y. Zhang, S. Cheng, Z. Luo, and X. Fan, “A deep framework assembling principled modules for cs-mri: Unrolling perspective, convergence behaviors, and practical modeling,” IEEE Transactions on Medical Imaging, vol. 39, no. 12, pp. 4150–4163, 2020.

- [10] S. U. Dar, M. Yurt, M. Shahdloo, M. E. Ildız, B. Tınaz, and T. Cukur, “Prior-guided image reconstruction for accelerated multi-contrast mri via generative adversarial networks,” IEEE Journal of Selected Topics in Signal Processing, vol. 14, no. 6, pp. 1072–1087, 2020.

- [11] S. Wang, Z. Ke, H. Cheng, S. Jia, L. Ying, H. Zheng, and D. Liang, “Dimension: dynamic mr imaging with both k-space and spatial prior knowledge obtained via multi-supervised network training,” NMR in Biomedicine, vol. 35, no. 4, p. e4131, 2022.

- [12] E. K. Fram, R. J. Herfkens, G. Johnson, G. H. Glover, J. P. Karis, A. Shimakawa, T. G. Perkins, and N. J. Pelc, “Rapid calculation of t1 using variable flip angle gradient refocused imaging,” Magnetic Resonance Imaging, vol. 5, no. 3, pp. 201–208, 1987.

- [13] H. Z. Wang, S. J. Riederer, and J. N. Lee, “Optimizing the precision in t1 relaxation estimation using limited flip angles,” Magnetic resonance in medicine, vol. 5, no. 5, pp. 399–416, 1987.

- [14] F. Liu, L. Feng, and R. Kijowski, “Mantis: model-augmented neural network with incoherent k-space sampling for efficient mr parameter mapping,” Magnetic resonance in medicine, vol. 82, no. 1, pp. 174–188, 2019.

- [15] F. Liu, R. Kijowski, L. Feng, and G. El Fakhri, “High-performance rapid mr parameter mapping using model-based deep adversarial learning,” Magnetic resonance imaging, vol. 74, pp. 152–160, 2020.

- [16] F. Liu, R. Kijowski, G. El Fakhri, and L. Feng, “Magnetic resonance parameter mapping using model-guided self-supervised deep learning,” Magnetic resonance in medicine, vol. 85, no. 6, pp. 3211–3226, 2021.

- [17] R. Feng, J. Zhao, H. Wang, B. Yang, J. Feng, Y. Shi, M. Zhang, C. Liu, Y. Zhang, J. Zhuang et al., “Modl-qsm: Model-based deep learning for quantitative susceptibility mapping,” NeuroImage, vol. 240, p. 118376, 2021.

- [18] Y. Jun, H. Shin, T. Eo, T. Kim, and D. Hwang, “Deep model-based magnetic resonance parameter mapping network (dopamine) for fast t1 mapping using variable flip angle method,” Medical Image Analysis, vol. 70, p. 102017, 2021.

- [19] H. Chung, B. Sim, and J. C. Ye, “Come-closer-diffuse-faster: Accelerating conditional diffusion models for inverse problems through stochastic contraction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 12 413–12 422.

- [20] Y. Xie and Q. Li, “Measurement-conditioned denoising diffusion probabilistic model for under-sampled medical image reconstruction,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2022, pp. 655–664.

- [21] H. Chung and J. C. Ye, “Score-based diffusion models for accelerated mri,” Medical image analysis, vol. 80, p. 102479, 2022.

- [22] J. Song, A. Vahdat, M. Mardani, and J. Kautz, “Pseudoinverse-guided diffusion models for inverse problems,” in International Conference on Learning Representations, 2022.

- [23] A. Güngör, S. U. Dar, Ş. Öztürk, Y. Korkmaz, H. A. Bedel, G. Elmas, M. Ozbey, and T. Çukur, “Adaptive diffusion priors for accelerated mri reconstruction,” Medical Image Analysis, p. 102872, 2023.

- [24] J. Ho, A. Jain, and P. Abbeel, “Denoising diffusion probabilistic models,” Advances in neural information processing systems, vol. 33, pp. 6840–6851, 2020.

- [25] A. Q. Nichol and P. Dhariwal, “Improved denoising diffusion probabilistic models,” in International Conference on Machine Learning. PMLR, 2021, pp. 8162–8171.

- [26] J. Song, C. Meng, and S. Ermon, “Denoising diffusion implicit models,” in International Conference on Learning Representations, 2020.

- [27] M. Özbey, O. Dalmaz, S. U. Dar, H. A. Bedel, Ş. Özturk, A. Güngör, and T. Çukur, “Unsupervised medical image translation with adversarial diffusion models,” IEEE Transactions on Medical Imaging, 2023.

- [28] G. Luo, M. Blumenthal, M. Heide, and M. Uecker, “Bayesian mri reconstruction with joint uncertainty estimation using diffusion models,” Magnetic Resonance in Medicine, vol. 90, no. 1, pp. 295–311, 2023.

- [29] C. Peng, P. Guo, S. K. Zhou, V. M. Patel, and R. Chellappa, “Towards performant and reliable undersampled mr reconstruction via diffusion model sampling,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2022, pp. 623–633.

- [30] M. Lustig, D. Donoho, and J. M. Pauly, “Sparse mri: The application of compressed sensing for rapid mr imaging,” Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, vol. 58, no. 6, pp. 1182–1195, 2007.

- [31] J. Zhang and B. Ghanem, “Ista-net: Interpretable optimization-inspired deep network for image compressive sensing,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 1828–1837.

- [32] W. Bian, Y. Chen, and X. Ye, “An optimal control framework for joint-channel parallel mri reconstruction without coil sensitivities,” Magnetic Resonance Imaging, 2022.

- [33] T. Zhang, J. M. Pauly, and I. R. Levesque, “Accelerating parameter mapping with a locally low rank constraint,” Magnetic resonance in medicine, vol. 73, no. 2, pp. 655–661, 2015.

- [34] O. Maier, J. Schoormans, M. Schloegl, G. J. Strijkers, A. Lesch, T. Benkert, T. Block, B. F. Coolen, K. Bredies, and R. Stollberger, “Rapid t1 quantification from high resolution 3d data with model-based reconstruction,” Magnetic resonance in medicine, vol. 81, no. 3, pp. 2072–2089, 2019.

- [35] M. Uecker, P. Lai, M. J. Murphy, P. Virtue, M. Elad, J. M. Pauly, S. S. Vasanawala, and M. Lustig, “Espirit—an eigenvalue approach to autocalibrating parallel mri: where sense meets grappa,” Magnetic resonance in medicine, vol. 71, no. 3, pp. 990–1001, 2014.

- [36] L. I. Sacolick, F. Wiesinger, I. Hancu, and M. W. Vogel, “B1 mapping by bloch-siegert shift,” Magnetic resonance in medicine, vol. 63, no. 5, pp. 1315–1322, 2010.