DISCD: Distributed Lossy Semantic Communication for Logical Deduction of Hypothesis

Abstract

In this paper, we address hypothesis testing in a distributed network of nodes, where each node has only partial information about the State of the World (SotW) and is tasked with determining which hypothesis, among a given set, is most supported by the data available within the node. However, due to each node’s limited perspective of the SotW, individual nodes cannot reliably determine the most supported hypothesis independently. To overcome this limitation, nodes must exchange information via an intermediate server. Our objective is to introduce a novel distributed lossy semantic communication framework designed to minimize each node’s uncertainty about the SotW while operating under limited communication budget. In each communication round, nodes determine the most content-informative message to send to the server. The server aggregates incoming messages from all nodes, updates its view of the SotW, and transmits back the most semantically informative message. We demonstrate that transmitting semantically most informative messages enables convergence toward the true distribution over the state space, improving deductive reasoning performance under communication constraints. For experimental evaluation, we construct a dataset designed for logical deduction of hypotheses and compare our approach against random message selection. Results validate the effectiveness of our semantic communication framework, showing significant improvements in nodes’ understanding of the SotW for hypothesis testing, with reduced communication overhead.

Index Terms:

Logic, semantic, lossy, communication, deductionI Introduction

Low latency, minimal power consumption, and efficient bandwidth are critical in today’s hyper-connected systems. Autonomous technologies like self-driving cars and drones depend on real-time data exchange to ensure safety, while smart cities require reliable communication to optimize services. In healthcare, semantic communication enhances data accuracy for remote monitoring, improving patient outcomes. In industry 4.0, it ensures efficient, real-time information flow between machines and sensors, driving automation and advancing manufacturing intelligence. Semantic systems will support fast and explainable decision-making in next-generation applications such as automated truth verification, data connectivity and retrieval, financial modeling, risk analysis, privacy-enhancing technologies, and optimized agriculture [1, 2, 3]. In these applications, distributed systems may need to exchange data for deduction of a hypothesis to improve their generalization ability, decision-making performance, or statistical analysis. However, due to privacy concerns and communication limitations, transmitting their entire dataset may not be feasible. In such a case, semantic communication may play an essential role.

Rudolf Carnap’s foundational work introduced a model-theoretic approach to semantic communication compatible with First-Order Logic (FOL) to represent states. His framework provided a way to quantify semantic information using inductive probability distributions. Later, Hintikka expanded on Carnap’s work by addressing infinite universes and generalization issues [4]. Early post-5G semantic communication methods like D-JSCC and autoencoders employed neural network-based solutions [5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18]. Traditional model-theoretic approaches like Carnap’s and Floridi’s [19] offer interpretability but suffer from computational inefficiency and lack probabilistic foundations. Newer approaches include information expansion and knowledge collusion [20], knowledge-graph integration enhanced with probability graphs [21], memory supported deep learning based approaches [22] and topological approaches [23]. These methods, while effective, are not particularly tailored to FOL reasoning tasks [24, 25, 26, 27, 28] such as deduction of a hypotheses.

In this paper, we propose a novel lossy semantic communication framework for a distributed set of nodes, each with a deductive task and partial, possibly overlapping views of the world aiming to identify, among a set of hypotheses, the one best supported by the SotW. Nodes communicate with a central server to enhance their understanding of the SotW, transmitting the most informative messages about their local environments under bandwidth limitations and privacy concerns. The server aggregates data and returns the most informative messages to help nodes refine their local probability distributions over the state space.

Our semantic encoding algorithm selects messages based on the semantic informativeness of a message to a specific observer within communication constraints. Messages that have higher degree of confirmation (e.g., inductive logical probability) are deemed more informative as they leave less uncertainty about the SotW. In addition, this new paradigm is able to effectively manage large state spaces, maximizing each node’s understanding of the SotW while enabling more robust deduction performance despite nodes being unaware of each other’s or the central node’s full perspective and limited communication resources.

Finally, we showcase that as the number of communication rounds increases, nodes distribution over the state space converge towards the true distribution, minimizing Bayes risk over hypothesis set. Empirical validation through experiments using a FOL deduction dataset shows that our framework significantly improves nodes’ understanding of the world and deduction performance with reduced overhead.

II Background on FOL, Inductive Probabilities, and Semantic Information

II-A Representing the State of the World via First-Order Logic

We begin by illustrating how the state of the world can be represented using First-Order Logic (FOL). Consider a finite world characterized by a finite set of entities and a finite set of predicates . The set of all possible states of is denoted by , where . Each state provides a complete description of the world using the logical language , which includes the predicates , entities , quantifiers , and logical connectives .

Definition 1 (State Description).

A state description in is defined as:

| (1) |

where indicates the presence () or negation () of the predicate for entities and , is the index set of predicates.

In this setup, each state is a conjunction () of a specific enumeration of all possible predicates (or their negations), , applied to all ordered pairs of entities, . Any FOL sentence in this finite world can thus be expressed as a disjunction of these state descriptions.

Theorem 1 (FOL Representation in Finite World [29]).

Any FOL sentence in can be represented as:

| (2) |

where is the logical entailment operator and is the index set of finite states.

When extending to an infinite world where entities becomes a countably infinite set, a more intricate approach is needed. We introduce the concept of Q-sentences to handle this complexity.

Definition 2 (Q-sentence).

A Q-sentence in is defined as:

| (3) |

where indicate the presence or negation of predicates. This formulation encompasses all combinations, resulting in distinct Q-sentences, i.e., .

Building upon Q-sentences, we define attributive constituents to capture the relationships of individual entities.

Definition 3 (Attributive Constituent).

An attributive constituent in is:

| (4) |

where , is the index set over Q-sentences, and , and . The expression encapsulates all relationships entity has within the world, expressing what kind of individual is.

To represent the entire infinite world, we define constituents as combinations of attributive constituents.

Definition 4 (Constituent).

A constituent of width in is a conjunction of attributive constituents:

| (5) |

which represents the relational structure of entities in the world . Here, the width is the number of attributive constituents present in the conjunction forming .

Any FOL sentence in can then be expressed as a disjunction of such constituents.

Theorem 2 (FOL Representation in Infinite World [30]).

Any FOL sentence in can be represented as:

| (6) |

where is the index set of constituents.

As an illustration, consider the statement ”Every person owns a book.”, or more explicitly, ”For every x, if x is a person, then there exists a y such that y is a book and x owns y.”, formally expressed as:

| (7) |

To represent as a disjunction of constituents, we identify all constituents where holds. In each such constituent, for every entity satisfying , the attributive constituent must include:

Each constituent is then constructed as:

| (8) |

ensuring that all entities are accounted for. Therefore, can be expressed as:

| (9) |

State descriptions and constituents act like basis vectors in a vector space (as they partition the space and hence any two are mutually exclusive), allowing us to define a probability measure over them. The inductive logical probability of any sentence in the FOL language is then the sum of the probabilities of its constituents as per axioms of probability. In the next section, we will discuss how to define this probability measure using the frameworks proposed by Carnap and Hintikka.

II-B Inductive Logical Distribution on States

In FOL-based world , we introduce a probability measure over the fundamental elements—either state descriptions or constituents , collectively referred to as ”world states” . Let where is the state space. To define this measure, we make use of inductive logical probabilities, which serve as inductive Bayesian posteriors, built upon empirical observations and a prior distribution.

Definition 5 (Inductive Logical Probability).

For any state and corresponding evidence (observations) in , the inductive logical probability (a.k.a., degree of confirmation) that evidence supports sentence is defined as:

| (10) |

where is the number of observations, is the number of observations confirming state , is the weight assigned to state , is the prior coefficient, dependent on . The parameter , ranging from 0 to , balances the weight of prior against empirical data.

Then, the inductive logical probability measure over is defined as where . In the following section, we make use of inductive logical probabilities to define a semantic information metric to effectively quantify semantic informativeness regarding states of the world.

II-C Measuring Semantic Uncertainty about the SotW

To quantify the remaining uncertainty after new observations, Carnap proposed the concept of ”cont-information”. This metric assesses how observations influence an observer’s understanding of the SotW by evaluating the extent to which they decrease observer’s uncertainty.

Definition 6.

The cont-information in a world quantifies the informativeness of an observation to a specific observer by measuring the remaining uncertainty about the SotW. Given a state and evidence (observations) in , cont-information is defined as:

| (11) |

In simple terms measures the uncertainty remaining about state after observing e. Next, the cont-information measure is used to devise a communication framework. This measure captures the dynamic and cumulative nature of evidence accumulation in the communication and, as a result, the refinement in an observer’s understanding of the world state.

III Distributed Semantic Communication Framework

III-A Hypothesis Deduction Problem

We start by formalizing a hypothesis deduction problem at each user node in the wireless network. Let be a finite or infinite set of self-consistent statements representing possible hypotheses about the world at node . There exists a hypothesis which is most consistent, among all other hypothesis, with the actual SotW. The statements can be represented by a non-empty disjunction of state descriptions or constituents, as per Theorem 1 and 2. At each node , the task is to deduce the hypothesis (the most consistent hypothesis with the SotW). However, as each node has incomplete information, this deduction cannot be concluded reliably. The aim of the communication is, then, to receive most cont-informative information regarding world state so as more accurately determine which hypothesis is supported the most by world (entity) population.

III-B System Model

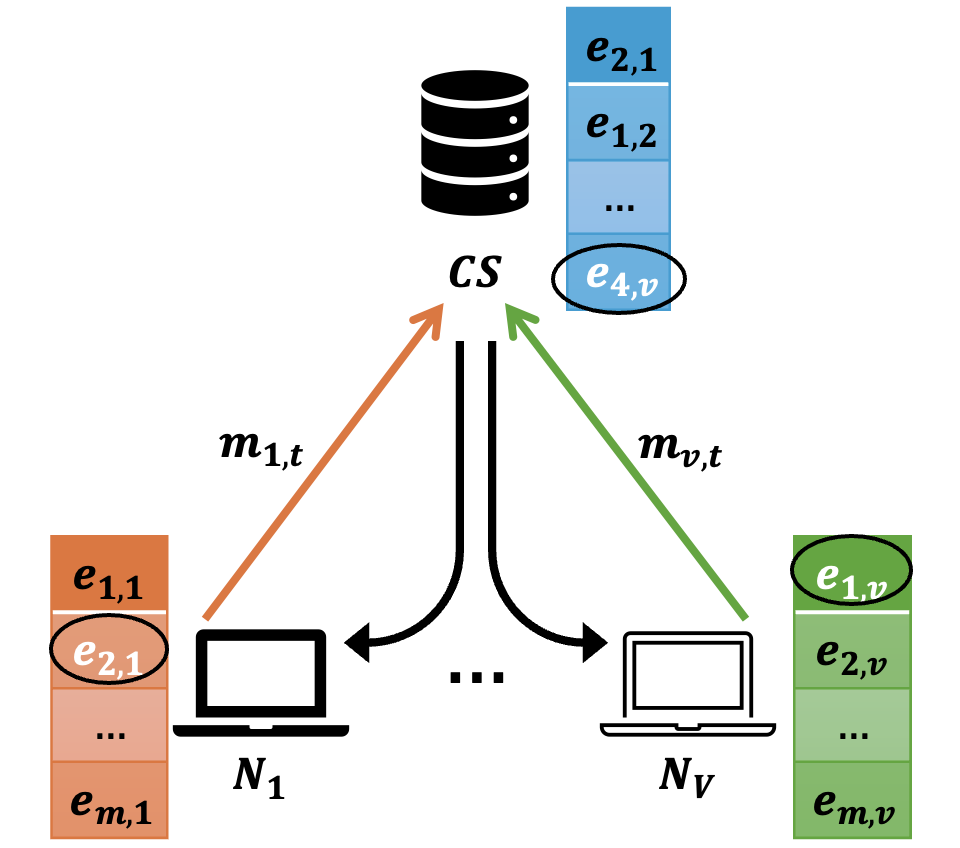

As shown in Fig.1, we consider a distributed network comprising a set of edge nodes and a central server . Each edge node possesses evidence obtained through observations of the world , which can be finite () or infinite (). Each node maintains a local inductive probability distribution over the state space , reflecting its view of the State of the World (SotW) at round . Each node aims to solve a hypothesis deduction problem , involving determining the hypothesis most supported by evidence from a set of possible hypotheses. Since the nodes have incomplete observations about SotW, they wish to collaborate with each other through a central server to share some information with each other to improve each node’s local distribution over states. However, due to communication constraints, every node can transmit only a limited amount of information to the server in each communication round. The central server aggregates messages from all nodes to update its own perception of the SotW, denoted by . The server then selects a message and broadcasts it back to the nodes to aid them in refining their local distributions. In the next section, we turn our attention to, for each round t of communication: (i) how a node chooses its message for transmission to the server, (ii) how the server updates its perceived SotW upon receiving all incoming messages from the nodes, (iii) how the server selects which message to broadcast to all nodes, (iv) how a node updates it’s perceived SotW upon receiving the message from the server, and (v) how the hypothesis deduction problem is solved.

III-C Communication Protocol

The communication occurs in iterative rounds, each consisting of two phases: an uplink phase and a downlink phase.

Uplink Phase

In round , each node selects a message (which is in the form of a FOL sentence) to transmit to the server . The message is chosen to maximize the semantic content informativeness with respect to the node’s observations , while considering previously received messages from the server and previously transmitted messages to the server . The optimization objective for node becomes:

| (12) |

where is the number of FOL sentences message contains.

Downlink Phase

Upon receiving messages from all nodes, the server updates its distribution on state space based on the aggregated information:

where Update function involves Bayesian updating (c.f., eqn. 10 and 18) to incorporate new evidence and adjust probabilities over .

The server then selects a message to broadcast, containing information not previously sent out:

| (13) |

while considering previously broadcasted messages from server . Upon receiving , node updates its distribution:

This iterative process allows nodes to progressively refine their understanding of the SotW. Once the communication concludes, nodes perform deduction.

Hypothesis Deduction

After rounds of communication, each node choose the hypothesis best supported by evidence as:

| (14) |

In the next section, we turn our attention to the theoretical properties of this communication scheme.

III-D Convergence Analysis Under Finite Evidence

In this section, we examine the convergence behavior of the communication framework under finite evidence to determine the relationship between empirical and true distribution over state space . To preserve generality, we assume an infinite universe and conduct the analysis over constituents.

Let describe a finite sample of individual observations, containing distinct attributive constituents accumulated at node after rounds of communication. A constituent of width is compatible with only if , where .

To compute the posterior distribution

| (15) |

one needs to specify the prior and the likelihood . A natural method to assign the prior is to assume it is proportional to , reflecting the assumption that there are individuals compatible with a particular constituent . This approach captures the intuition that constituents with larger widths (i.e., involving more attributive constituents) are more probable if we believe there are more individuals exemplifying them. The explicit equation for the prior is:

| (16) |

where is the gamma function, and is a parameter reflecting our prior belief about the number of individuals compatible with .

The likelihood is computed based on the number of observed samples confirming each attributive constituent in the evidence . It is given by:

| (17) |

where is a prior coefficient, possibly dependent on . This likelihood reflects how well constituent explains the observed evidence .

Using the prior and likelihood, the posterior probability of constituent given is:

| (18) |

where the sum in the denominator runs over all constituents compatible with the language and is the index set of constituents.

Using the prior in equation (16) and the likelihood in equation (17), we can analyze the convergence of the posterior probability as the number of observations increases. Specifically, these equations are used to derive a Probably Approximately Correct (PAC) bound that determines the minimum number of samples required to consider the minimal constituent to be approximately correct with high probability. (Despite omitted from this manuscript due to space concerns, it had been proven that asymptotically, the constituent with smallest width, a.k.a. the minimal constituent, receives probability 1 while all other constituents receive probability 0. See [31] for further discussion and proof.)

Theorem 3 (PAC-Bound for Constituents [31]).

Given evidence of size , let be such that for some . Then:

| (19) |

where .

This PAC bound provides a theoretical guarantee on the convergence of the posterior distribution to true distribution on the state space as more evidence is accumulated. The detailed derivation of this bound relies on the prior and likelihood expressions, as also discussed in the next section. Now, we turn our attention to showing that DISCD is superior compared to random transmissions.

III-E Effectiveness of Proposed Framework

In this section, we compare two communication strategies under sentence level communication constraints, from the perspective of a node , as detailed by the next theorem.

Theorem 4 (Effectiveness of DISCD Framework).

Given a fixed communication budget allowing the transmission of FOL sentences over the entire course of communication (e.g., in rounds) by the central server, consider the following two strategies:

1. DISCD Message Selection: Let constitute the sentences with the highest degree of confirmation that were selected and broadcast by central server during communication.

2. Random Message Selection: Let constitute sentences selected uniformly at random and broadcast by central server during communication.

Assume the minimal constituent corresponds to the true state of the world. We establish the following theorem.

-

(a)

The posterior probability of the minimal constituent satisfies

(20) -

(b)

The evidence yields a more accurate posterior distribution over constituents and yields a tighter PAC bound (as given by Theorem 3) compared to that of , e.g., .

Proof.

The posterior distributions and are given by (15) and (18). The likelihood depends on how well constituent explains the evidence , and similarly for . affects the likelihoods such that is higher because aligns closely with the specific observations in . For incorrect constituents , is lower due to poorer alignment with the detailed evidence as per (6). Therefore, the numerator increases, while the denominators adjust based on the altered likelihoods, leading to:

| (21) |

Similarly, the posterior probabilities for incorrect constituents decrease under , resulting in a more accurate posterior distribution. From the convergence analysis and Theorem 3, we can observe that the PAC bound depends on , the number of observations, and the likelihoods. As evidence increases with , it yields increasingly more discriminative likelihoods (i.e., higher probability concentration around more accurate constituents and the true constituent), tightening the PAC bound. Specifically, with higher and more informative likelihoods, the sum in the PAC bound inequality decreases:

| (22) |

With , the ratio becomes smaller due to the more discriminative likelihoods, leading to a smaller (tighter error bound) compared to .

∎

Elaborating on Theorem 4, it is observed that evidence affects the posterior in two ways. It provides more specific information and as the evidence is more informative (larger , larger ) and the likelihood function will often be higher for constituents that align closely with the evidence and lower for others. Also, may render some constituents impossible (assigning them a likelihood of zero) because they are inconsistent with the evidence.

Under , the likelihoods are less discriminative because random evidence provides less specific information. The differences in likelihoods between the true constituent and incorrect ones are smaller. As the likelihoods under are more discriminative, the posterior distribution is more peaked around the true constituent , assigning higher probability to and lower probabilities to others.

In other words, improves discrimination. By providing more specific observations, evidence with high degree of confirmation enhances the differences in likelihoods between the true constituent and incorrect constituents. The increased likelihood and decreased likelihoods result in a higher posterior probability for . The posterior distribution over constituents becomes more concentrated around , assigning lower probabilities to incorrect constituents and leading to a more accurate posterior. The improved likelihood ratios lead to a tighter PAC bound, reflecting a better estimation of . In the next section, we comment on the impact of improved posterior estimation on hypothesis deduction task success.

III-F Analysis of Semantic Uncertainty and Task Success

Albeit discussions in this section are for a single node , they can be trivially extended to multi node case. Each node aims to minimize its risk (expected loss) over :

where quantifies the discrepancy between and and can be selected as any appropriate distance metric.

As nodes exchange information and receive informative messages, the distributions converge towards (true distribution), reducing expected loss and enhancing their ability to identify , as and .

Therefore, as evidence accumulates and as per Theorems 3 & 4, leads to tighter converge towards the true (minimal) constituent , it better minimizes the Bayes risk compared to random selection of messages (e.g., ), yielding larger hypothesis deduction success rate. In the next section, we experimentally verify these theoretical observations.

IV Experiment Results

The effectiveness of DISCD communication algorithm compared to random selection was tested by applying it to a custom deduction dataset. The relevant code and dataset are accessible on GitHub [32]. The custom deduction dataset describes a story where each node has only a portion of it, i.e., possess incomplete information about the story. The story is divided across all nodes, each node receiving equal number of sentences. In order to reflect possible overlaps in the worldviews of different nodes, 30% of each node’s data is shared with at least one other whereas the remaining 70% is unique to that node. The task in each node is to determine, among 8 distinct hypothesis, the one most supported by the evidence (story) for the entities that are present in the node. However, as the information is incomplete, for some entities, nodes require information from others to be able to deduce the correct hypothesis.

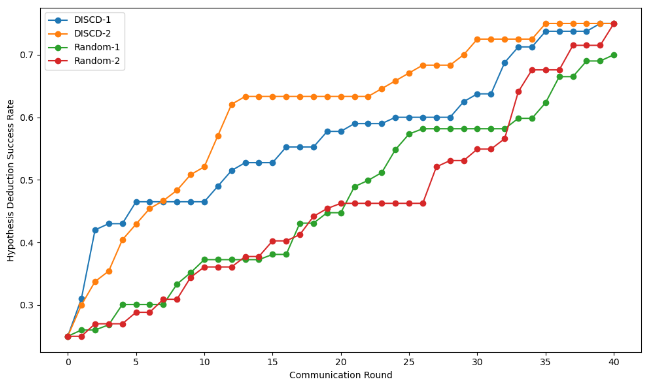

During communication, each node identifies the most informative subset of sentences from a total of sentences by solving the optimization problem in (12). Minimizing (12) requires calculating inductive logical probabilities, which, in turn necessitates counting the number of states in a FOL expression satisfies. As the state space is exponentially large, we utilize a state-of-the-art algorithmic boolean model counting tool sharpSAT-td to determine the number of states. To shed more light on how the this optimization works, consider the following example. Let node has sentences in its dataset. Assuming , in round 0, the node calculates the number of states in each sentence satisfies, and determines their inductive logical probability. Based on those probabilities, optimizes (12). After transmitting, say , and receiving another sentence from the server, call it , it continues with the round 1, where it calculates the probabilities for for . Then, another is selected, transmitted, and so on. For more details on how this optimization algorithm works, see [33, 34]. In our experiments, we tested subsets with sizes . Once the communication concludes, the nodes perform their respective hypothesis deduction tasks, which is same for all nodes in our experiments. We measure the success rate with the ratio of the population (entities) where the correct hypothesis can be deduced. The results are presented in Fig. 2.

DISCD clearly outperforms random selection in hypothesis deduction performance, for both message sizes (). In other terms, DISCD consistently selects sentences that significantly improve hypothesis deduction accuracy compared to random transmissions. Indeed, it only takes 5 rounds of communication for DISCD-1 to achieve 46.5% success rate whereas it takes 22 rounds of communication for Random-1 to achieve the same performance. Similarly, it takes 13 rounds to achieve 62.1% success rate for DISCD-2 whereas the same rate of success is achieved for Random-2 only after 34 rounds. After 40 rounds of communication, both DISCD-1 and DISCD-2 achieve 75.0% performance whereas random-1 can only achieve 70.0%. This demonstrates that DISCD can achieve similar performance in less number of rounds, saving from communication.

An important clarification shall be made regarding why the performance stalls from time to time. As each hypothesis has multiple premises which may require multiple pieces of essential information to be transmitted, it can take a number of rounds of communication until the performance improves. However, DISCD, thanks to rapid selection of important semantic information regarding SotW, improves accuracy rapidly before stalling, whereas in random selection, the stalling is distributed, and it takes longer to achieve the same level of performance.

Another clarification regards the distinction between DISCD-1 and DISCD-2. In DISCD-2, two FOL sentences are transmitted from nodes to server per round and server broadcasts two sentences back to nodes. This implies what sentences are selected and in which order can be different between the two cases and hence accounts for the different rates of increase in hypothesis deduction performance.

As the communication machinery, i.e., “compression and channel coding” is common between random method and our method, we did not consider them in comparative results. However, based on the number of sentences transmitted, we studied the average communication costs per node for both schemes for different levels of accuracies in deduction. The results are presented at Table I.

| Success Rate | DISCD-1 | Random-1 | DISCD-2 | Random-2 |

| 40% | 70.9 bits | 402.3 bits | 94.6 bits | 354.9 bits |

| 45% | 141.9 bits | 496.9 bits | 141.9 bits | 449.6 bits |

| 50% | 307.6 bits | 544.3 bits | 212.9 bits | 638.9 bits |

| 55% | 402.3 bits | 591.6 bits | 260.3 bits | 757.3 bits |

| 60% | 473.3 bits | 828.3 bits | 283.9 bits | 780.9 bits |

| 65% | 591.6 bits | 851.9 bits | 567.9 bits | 804.6 bits |

| 70% | 780.9 bits | 946.6 bits | 686.3 bits | 875.6 bits |

| 75% | 946.6 bits | N/A | 828.3 bits | 946.6 bits |

Based on Table I, DISCD-1 method, on average, saves approximately 270.49 bits of communication per node compared to random selection Random-1. Similarly, DISCD-2 method, on average, saves approximately 316.56 bits of communication compared to random selection Random-2.

In conclusion, the experiments demonstrate that the proposed semantic communication algorithm DISCD effectively selects and transmits the most informative sentences, achieving substantial success for deductive hypothesis selection task with fewer bits compared to random selection faster. Furthermore, these results validates the theoretical findings regarding convergence, effectiveness and task success. DISCD framework better informs the nodes regarding SotW, leading to better task success.

References

- [1] Deniz Gündüz, Zhijin Qin, Inaki Estella Aguerri, Harpreet S. Dhillon, Zhaohui Yang, Aylin Yener, Kai Kit Wong, and Chan-Byoung Chae. Beyond transmitting bits: Context, semantics, and task-oriented communications. IEEE Journal on Selected Areas in Communications, 41(1):5–41, 2023.

- [2] Jihong Park, Jinho D. Choi, Seong-Lyun Kim, and Mehdi Bennis. Enabling the wireless metaverse via semantic multiverse communication. 2023 20th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), pages 85–90, 2022.

- [3] Omar Hashash, Christina Chaccour, Walid Saad, Kei Sakaguchi, and Tao Yu. Towards a decentralized metaverse: Synchronized orchestration of digital twins and sub-metaverses. ICC 2023 - IEEE International Conference on Communications, pages 1905–1910, 2022.

- [4] Jaakko Hintikka. A two-dimensional continuum of inductive methods*. Studies in logic and the foundations of mathematics, 43:113–132, 1966.

- [5] Yashas Malur Saidutta, Afshin Abdi, and Faramarz Fekri. Joint source-channel coding over additive noise analog channels using mixture of variational autoencoders. IEEE Journal on Selected Areas in Communications, 39(7):2000–2013, 2021.

- [6] Zhenzi Weng, Zhijin Qin, and Geoffrey Ye Li. Semantic communications for speech recognition. In 2021 IEEE Global Communications Conference (GLOBECOM), pages 1–6, 2021.

- [7] Chao Zhang, Hang Zou, Samson Lasaulce, Walid Saad, Marios Kountouris, and Mehdi Bennis. Goal-oriented communications for the iot and application to data compression. IEEE Internet of Things Magazine, 5(4):58–63, 2022.

- [8] Başak Güler, Aylin Yener, and Ananthram Swami. The semantic communication game. IEEE Transactions on Cognitive Communications and Networking, 4(4):787–802, 2018.

- [9] Zhijin Qin, Xiaoming Tao, Jianhua Lu, and Geoffrey Y. Li. Semantic communications: Principles and challenges. ArXiv, abs/2201.01389, 2021.

- [10] Inaki Estella Aguerri and Abdellatif Zaidi. Distributed variational representation learning. IEEE transactions on pattern analysis and machine intelligence, 43(1):120–138, 2019.

- [11] Abdellatif Zaidi and Inaki Estella Aguerri. Distributed deep variational information bottleneck. In 2020 IEEE 21st International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), pages 1–5. IEEE, 2020.

- [12] An Xu, Zhouyuan Huo, and Heng Huang. On the acceleration of deep learning model parallelism with staleness. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2088–2097, 2020.

- [13] Vipul Gupta, Dhruv Choudhary, Ping Tak Peter Tang, Xiaohan Wei, Xing Wang, Yuzhen Huang, Arun Kejariwal, Kannan Ramchandran, and Michael W Mahoney. Training recommender systems at scale: Communication-efficient model and data parallelism. arXiv preprint arXiv:2010.08899, 2020.

- [14] Mounssif Krouka, Anis Elgabli, Chaouki ben Issaid, and Mehdi Bennis. Communication-efficient split learning based on analog communication and over the air aggregation. In 2021 IEEE Global Communications Conference (GLOBECOM), pages 1–6. IEEE, 2021.

- [15] Huiqiang Xie, Zhijin Qin, Geoffrey Y. Li, and Biing-Hwang Juang. Deep learning enabled semantic communication systems. IEEE Transactions on Signal Processing, 69:2663–2675, 2020.

- [16] Yulin Shao, Qingqing Cao, and Deniz Gündüz. A theory of semantic communication. ArXiv, abs/2212.01485, 2022.

- [17] Gangtao Xin, Pingyi Fan, and Khaled B. Letaief. Semantic communication: A survey of its theoretical development. Entropy, 26(2), 2024.

- [18] Shengteng Jiang, Yueling Liu, Yichi Zhang, Peng Luo, Kuo Cao, Jun Xiong, Haitao Zhao, and Jibo Wei. Reliable semantic communication system enabled by knowledge graph. Entropy, 24(6), 2022.

- [19] Luciano Floridi. Outline of a theory of strongly semantic information. SSRN Electronic Journal, 01 2004.

- [20] Gangtao Xin and Pingyi Fan. Exk-sc: A semantic communication model based on information framework expansion and knowledge collision. Entropy, 24(12), 2022.

- [21] Zhouxiang Zhao, Zhaohui Yang, Mingzhe Chen, Zhaoyang Zhang, and H. Vincent Poor. A joint communication and computation design for probabilistic semantic communications. Entropy, 26(5), 2024.

- [22] Huiqiang Xie, Zhijin Qin, and Geoffrey Y. Li. Semantic communication with memory. IEEE Journal on Selected Areas in Communications, 41:2658–2669, 2023.

- [23] Qiyang Zhao, Mehdi Bennis, Mérouane Debbah, and Daniella Harth da Costa. Semantic-native communication: A simplicial complex perspective. 2022 IEEE Globecom Workshops (GC Wkshps), pages 1513–1518, 2022.

- [24] Siheng Xiong, Yuan Yang, Faramarz Fekri, and James Clayton Kerce. Tilp: Differentiable learning of temporal logical rules on knowledge graphs. In The Eleventh International Conference on Learning Representations.

- [25] Siheng Xiong, Yuan Yang, Ali Payani, James C Kerce, and Faramarz Fekri. Teilp: Time prediction over knowledge graphs via logical reasoning. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 38, pages 16112–16119, 2024.

- [26] Yuan Yang, Siheng Xiong, Ali Payani, James C Kerce, and Faramarz Fekri. Temporal inductive logic reasoning over hypergraphs. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, pages 3613–3621, 2024.

- [27] Yuan Yang, Siheng Xiong, Ali Payani, Ehsan Shareghi, and Faramarz Fekri. Harnessing the power of large language models for natural language to first-order logic translation. In Lun-Wei Ku, Andre Martins, and Vivek Srikumar, editors, Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 6942–6959, Bangkok, Thailand, August 2024. Association for Computational Linguistics.

- [28] Siheng Xiong, Ali Payani, Ramana Kompella, and Faramarz Fekri. Large language models can learn temporal reasoning. In Lun-Wei Ku, Andre Martins, and Vivek Srikumar, editors, Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 10452–10470, Bangkok, Thailand, August 2024. Association for Computational Linguistics.

- [29] Rudolf Carnap and Yehoshua Bar-Hillel. An outline of a theory of semantic information. 1952.

- [30] Jaakko Hintikka. Distributive normal forms in first-order logic. In J.N. Crossley and M.A.E. Dummett, editors, Formal Systems and Recursive Functions, volume 40 of Studies in Logic and the Foundations of Mathematics, pages 48–91. Elsevier, 1965.

- [31] Jaakko Hintikka and Risto Hilpinen. Knowledge, acceptance, and inductive logic. In Jaakko Hintikka and Patrick Suppes, editors, Aspects of Inductive Logic, volume 43 of Studies in Logic and the Foundations of Mathematics, pages 1–20. Elsevier, 1966.

- [32] https://github.com/ahmetfsaz/DISCD. Accessed: 2024-11-28.

- [33] Ahmet Faruk Saz, Siheng Xiong, and Faramarz Fekri. Lossy semantic communication for the logical deduction of the state of the world. arXiv preprint arXiv:2410.01676, 2024.

- [34] Ahmet Faruk Saz, Siheng Xiong, Yashas Malur Saidutta, and Faramarz Fekri. Model-theoretic logic for mathematical theory of semantic information and communication. arXiv preprint arXiv:2401.17556, 2024.