11email: {chir.michael47,tsopze.norbert}@gmail.com 22institutetext: Univ. Lille, Research Center, IMT Lille Douai, 59500 Douai, France 22email: jerry.lonlac@imt-lille-douai.fr 33institutetext: University Clermont Auvergne, LIMOS CNRS UMR 6158, F-63178 Aubière 33email: engelbert.mephu_nguifo@uca.fr

44institutetext: Sorbonne University, IRD, UMMISCO, F-93143, Bondy, France,

55institutetext: Department of Computer Engineering - ENSET - University of Douala, Cameroon

Discovering Frequent Gradual Itemsets with Imprecise Data

Abstract

The gradual patterns that model the complex co-variations of attributes of the form ”The more/less X, The more/less Y” play a crucial role in many real world applications where the amount of numerical data to manage is important, this is the biological data, or medical data. Recently, these types of patterns have caught the attention of the data mining community, where several methods have been defined to automatically extract and manage these patterns from different data models. However, these methods are often faced the problem of managing the quantity of mined patterns, and in many practical applications, the calculation of all these patterns can prove to be intractable for the user-defined frequency threshold and the lack of focus leads to generating huge collections of patterns. Moreover another problem with the traditional approaches is that the concept of gradualness is defined just as an increase or a decrease. Indeed, a gradualness is considered as soon as the values of the attribute on both objects are different. As a result, numerous quantities of patterns extracted by traditional algorithms can be presented to the user although their gradualness is only a noise effect in the data. To address this issue, this paper suggests to introduce the gradualness thresholds from which to consider an increase or a decrease. In contrast to literature approaches, the proposed approach takes into account the distribution of attribute values, as well as the user’s preferences on the gradualness threshold. The proposed algorithm makes it possible to extract gradual patterns on certain databases where state-of-the art gradual patterns mining algorithms fail due to too large search space. Moreover, results from an experimental evaluation on real databases show that the proposed algorithm is scalable, efficient, and can eliminate numerous patterns that do not verify specific gradualness requirements to show a small set of patterns to the user.

Keywords:

Itemset mining, gradual itemset, mining under constraint, noisy data1 Introduction

Human reasoning is most often based on inaccurate or incomplete data; indeed, it is easy for a human being to determine if a person is short or tall without knowing its exact size; which is not the case for a computer because it processes the exact data. Transmit the faculties of human reasoning to a computer was initiated by [1] whose purpose was to have imprecise data processed on a computer. Work based on fuzzy logic [1] and classical logic [2] has been carried out for the extraction of knowledge in categorical databases but has many difficulties in numerical databases.

Most works about correlations extraction [3, 4] on the processing of numerical databases proceed by discretizing numerical attributes, thus returning to the case of categorical data processing. But as numerical databases become important, finer dig methods are needed to extract more expressive knowledge representing the frequent variability between numerical values. To this end, the gradual patterns that model the complex co-variations of attributes of the form ”the more/less X, the more/less Y” were proposed.

Thanks to the knowledge that they provide in many applications [5, 6], gradual patterns have gained popularity, and several effective algorithms have been proposed to extract these patterns from numerical databases. These algorithms, which differ for the most part from the gradual semantics [5, 6, 7, 8], very often come up against a very large number of extracted patterns, making their exploitation difficult by the user. Thus, some reported works propose to use constraints to prune the search space during mining process and focus on the interesting patterns. This is the case of the approach proposed by [5] which takes into account the temporal constraint between objects during the mining process. Such an approach is adapted to the context with a temporal order among objects and makes it possible to generate only patterns that are useful and relevant to the user [5]. Several other approaches like [9] proposed to extract closed gradual patterns which are a compact representation of the entire gradual patterns. Although the proposed algorithms allow to significantly reduce the number of generated patterns, this patterns number remains very high in some applications. Indeed, the distribution of the data is not always uniform on all the attributes, as the gradualness is considered only in terms of increase and decrease of the attribute values, the number of extracted patterns can be more reduced and more significant for interpretation, if we integrate in the mining process a threshold of increase or decrease from which a gradualness should be considered. For example, in Medicine, the normal body temperature is around 37°C and professionals consider as fever sign the temperature greater than 38°C. This means that they can not make any decision based on temperature variation between 37°C and 38°C.

To deal with this problem, we propose a new algorithm called GRAPGT (GRAdual Patterns with Gradualness Threshold) to automatically extract gradual patterns, by integrating a variation threshold from which to consider an attribute gradualness (increase/decrease). This allows to take into account the user preferences on each attribute of the database during the mining process.

Embedded in the gradual semantic proposed by [5] or ParaMiner [10] or in GRITE [6], this approach makes it possible to reduce the number of generated patterns.

The main contributions of this paper is fourfolds:

-

•

We propose how to take into account user preferences in the mining process (in terms of variation threshold) on the variations of attribute values.

-

•

We propose to reduce the number of generated patterns through the introduction of a variation threshold from which to consider a gradualness (increase/decrease). The proposed approach let the user more precisely specify requirements about the gradualness threshold of patterns to be discovered.

-

•

We study the impact of the gradualness threshold on the gradual itemsets mimings process and on the patterns to be discovered.

-

•

We conduct many experiments on three real world datasets and one synthetic dataset in order to validate this proposition and to compare its results to that of the original algorithms in terms of scalability, runtime, and memory consumption.

The rest of this article is organized as follows: first of all, we present some preliminary notions on the gradual patterns mining in Section 2. In Section 4, we then describe our approach to extract gradual patterns with constraints on the variations of attribute values. Before concluding, we present and discuss experimental results in Section 5.

2 Gradual itemsets

In this section, we provide some useful definitions and formally describe the problem of mining frequent gradual itemsets (patterns) by defining the support of such itemsets in data. We also present some of the state-of-the-art approaches that automatically extract such patterns.

2.1 Preliminary definitions

The problem of mining gradual itemsets consists in mining attribute co-variations in a numerical data set of the form ”The more/less X, . . . , the more/less Y’”. We assume herein that we are given a database containing a set of objects that defines a relation on an attribute set with numerical values. Let denote the value of attribute over object for all .

To illustrate the notion of gradual itemsets, we consider the database given by Table 1. This database containing a set of objects defining a relation on an attribute set with numerical values. It describes the blood sugar level measured in a patient with diabete for days (). The columns represent this rate in grams per liter (g/l) taken on an empty stomach (), after lunch (), after dinner () and at bedtime () while the lines represent the days of observation.

| Tid | ||||

| 1.18 | 2.36 | 2.36 | 1.58 | |

| 1.32 | 3.01 | 2.58 | 2.45 | |

| 1.25 | 2.56 | 2.31 | 2.25 | |

| 1.30 | 3.15 | 2.80 | 2.36 | |

| 1.04 | 2.75 | 2.30 | 2.35 | |

| 1.48 | 3.56 | 2.75 | 2.53 | |

| 1.65 | 3.70 | 2.60 | 2.40 | |

| 1.28 | 4.08 | 3.09 | 2.90 |

Each attribute will hereafter be considered twice: once to indicate its increase, and another to indicate its decrease, using the and operators. This leads to new kinds of items, called gradual items.

Definition 1 (Gradual item)

Let be a data set defined on a numerical attribute set . A gradual item is defined under the form , where is an attribute of and .

A gradual item express a variation of an attribute associated to one of the values - increase or decrease-.

If we consider the numerical database of Table 1, (respectively ) is a gradual item meaning that the values of attribute are increasing (respectively decreasing). These two gradualness respectively increasing and decreasing are respected by the sequence of objects and .

A gradual pattern expresses monotonous variations of values of several attributes and is defined as follows:

Definition 2 (Gradual pattern)

A gradual pattern is a non-empty set of gradual items. A -itemset is an itemset containing gradual items.

In our example (see Table 6), is a gradual itemset means ”the more the blood sugar level taken on an empty stomach, the more the blood sugar level taken after lunch”. This gradual itemset is satisfied by the sequence of objects in Table 6.

The support (frequency) of a gradual itemset amounts to the extent to which a gradual pattern is present in a given database. Several support definitions have been proposed in the literature (e.g., [11, 12, 13, 7, 6, 5]), showing that gradual patterns can follow different semantics. We briefly describe in the next section these ways of defining the support of gradual itemsets in the data. In order to define these supports, we introduce the following definitions:

Definition 3 (Gradual Pattern Extension)

Let be a gradual itemset and a sequence of objects. is an extension of if , , holds.

From Table 1, the sequence of objects is an extension of gradual itemset . For a gradual itemset there might be several extensions. is also verified by the sequence . In general, a gradual itemset is relevant to explain the gradualness occurring in these extensions. The computation of the support value of a gradual pattern in a given database amounts to the extend to which the gradual pattern is present in . This is assessed by considering the most representative extension, i.e., the extension having the largest size.

Definition 4 (Gradual Itemset Support)

Let be a numerical database and be a gradual itemset of ( is its rows number). Let be the set of all the longest sequence of objects respecting Then, .

Considering Table 1, the longest sequence of objects that respects the gradual itemset is of size . Then, , meaning that five among the eight input objects can be ordered consecutively according to . A gradual itemset is frequent if its support is greater than or equal to a minimum threshold set by the user.

Definition 5 (Complementary Gradual Itemset)

Let be a gradual itemset, and be a function such that ” and ”. Then is the complementary (symmetric) gradual itemset of .

Any gradual pattern admits a complementary gradual pattern where the items are the same and the variations are all reversed. The complementary gradual pattern of is .

Definition 6 (Frequent Gradual Patterns Mining Problem)

Let be a numerical database and a minimum support threshold. The problem of mining gradual patterns is to find the complete set of frequent gradual patterns of with respect to i.e. finding the set .

2.2 Closed Gradual Patterns

The notion of the closure of the itemset is very important in the pattern mining task as it allows to obtain concise representation of patterns without loss of information. This notion has been widely studied in classical itemset mining framework [14, 15, 16, 17]. It was introduced for the first time when extracting gradual patterns in [9], where the authors propose a pair of functions defining a closure operator [18, 19] for the gradual patterns. Given a list of sequences of objects from a database, returns the gradual itemset respecting all objects sequence in , while the function returns the set of maximal sequences of objects which respects the variations of all gradual items in . A gradual itemset is said to be closed if we have .

3 Related works

The problem of mining gradual itemsets consists of discovering frequent simultaneous attribute co-variations in numerical databases. Several works have been devoted to the gradual itemset mining problem and many algorithms using different frameworks and formalisations have been developed to automatically extract these itemsets from numerical database. These itemsets have been extensively used for fuzzy command (e.g., “the closer the wall, the harder the break”). In this context, we are only interested in the knowledge revealed by such itemsets, and the focus is not on how to mine these gradual itemsets from huge databases and moreover these itemsets are not automatically extracted , they are provided by an expert, which is not always realistic in practice. In recent years, gradual itemsets has received much attention from the data mining community and several methods have been defined for automatically extracting these itemsets from numerical data.

Most of the proposed methods for extracting gradual itemsets generally differ in their application on the basis of the type of data (temporal data, temporal data sequences, data stream, multi-relational Data, graph data, etc.) from which the pattern extraction is performed.

In the follow, we provide an overview of the methods used by some of the most efficient gradual itemset extraction algorithms according to the data model considered and underline their advantages and limitations.

3.1 On the gradual itemsets extraction from numerical data

3.1.1 Regression-based approach:

[11] proposes the first interpretation of gradual dependency as a co-variation constraint and models gradual itemsets using statistical linear regression. So, linear regression is performed between the attribute pairs and the validity of the graduality is relied on the normalised mean squared error , together with the slope of the regression line. The gradual tendencies are revealed for attribute pairs that are strongly correlated with a strong slope of the regression line.

3.1.2 Approach based on the discovery of association rules:

[13] was the first using data mining methods through an adaptation of the Apriori algorithm to extract the gradual itemsets. The authors evaluate the support of a gradual itemset by considering the proportion of objects couples that verify the constraints expressed by all the gradual items in the itemset. In that work, the reported gradual tendencies are based on correlation between attributes. The authors consider correlation in terms of the rankings induced by the attributes and not in terms of their values. Due to memory complexity, the algorithm reported in [13] is limited to the extraction of gradual itemsets with maximum length equals to .

3.1.3 Conflict sets based approach:

In the same way [7] proposes a gradual pattern extraction approach based on a heuristic for computing an approximate support value of a gradual pattern. For a given gradual itemset, this approach removes the objects (conflicts set) which prevent the maximum number of objects in the database to be ordered according to the gradual itemset. In this approach, the author constructs the candidates by ordering the transactions (objects) according to the values of the attributes. For the generation of a candidate gradual pattern of size for example, the author will order the transactions in a direction of graduality (increasing or decreasing) of the first item then, will associate the second item and check if the order of the transactions is respected according to the values of the item. In the case where the order is not respected, the author proceeds by a deletion of the transactions (set of conflict) preventing this order. The support of a gradual pattern is considered here as the cardinality of the longest ordered list of transactions respecting the gradual itemset. The major limitation of this approach is that it is not complete. Indeed, the use of a heuristic implies that in some cases the frequency of a gradual pattern may be undervalued. This phenomenon is explained by the fact that the author makes choices each time a set of conflicts is encountered. However, whatever the policy adopted for the choice to be made, it may have a loss of gradual reasons, this being explained by the fact that when generating a gradual itemset of size , it is impossible to predict the best choice that will have an impact on the generation of gradual itemsets of size . One solution is to keep all possible choices.

3.1.4 Precedence graph based approach:

To deal with the disadvantage revealed in approach [7], [6] proposes a more complete approach named GRITE (GRadual ITemset Extraction) to automatically extract gradual itemsets from large databases. The authors consider the same definition of the support of a gradual pattern proposed in [7] and proposes a new method based on precedence graphs. In this method, the data are represented by a graph whose nodes are defined as the objects of the transactional database and the links stand for the precedence relation derived from the attributes taken into account. The author adopts a binary representation of the graph by a matrix. The support of the gradual pattern considered is defined as the length of the longest path in the graph. This approach makes it possible to efficiently generate itemsets of size from gradual itemsets of size .

In [20], the authors propose an algorithm that combines the principles of several existing approaches and benefits from efficient computational properties to extract frequent gradual itemsets. In fact, they consider the formulation proposed in [13], and propose an algorithm that take into account the binary structure used in [6]. To evaluate the support of a gradual itemset, the authors use the Kendall tau ranking correlation coefficient that computes the number of object pairs which are consistent or inconsistent in the database, to be in agreement with the considered gradual itemset.

3.2 On the reduction of the number of gradual itemsets extracted

Most of these methods come up against the problem of managing the very high quantity of extracted patterns. In practice, the number of frequent gradual itemsets can be large, making their interpretation by the expert almost impossible. One solution to reduce the number of extracted itemsets is to use the constraints in the process mining to focus on interest patterns.

From a set of specific gradual itemsets, as the closed gradual patterns [9], it is possible to regenerate the set of all gradual itemsets. Moreover, with the closed itemsets redundant information is avoided. Following this idea, [21] proposes an algorithm named GLCM, based on an extension of LCM [22] algorithm principle. GLCM permits to efficiently compute gradual itemsets over large real-world databases with a time complexity linear in the number of closed frequent gradual itemsets and a memory complexity constant w.r.t. the number of closed frequent gradual itemsets. The GLCM algorithm exploits the binary structure proposed in [6] to compute the support and the closure of gradual itemsets. Indeed, the proposed approach in [9] allows to reduce the number of extracted patterns as a post-processing step which is not efficient. This approach is just a post-processing of [6]. It does not allow to benefit from the runtime and memory reduction and thus does not provide any added value for running the algorithms. The authors of [21] cope with this by proposing an algorithm that reduces the number of patterns during the mining process.

In [10], ParaMiner, a generic and parallel algorithm for closed pattern mining, is proposed. It is based on the principle of pattern enumeration in strongly accessible set systems and its efficiency is due to a dataset reduction technique called EL-reduction, combined with a technique for performing dataset reduction in a parallel execution on a multi-core architecture.

3.3 On the gradual itemsets extraction from the complex data

Most of these algorithms use data mining techniques to extract gradual patterns. However, they are not relevant for extracting gradual patterns in certain application domains where numerical data present particular forms (e.g., temporal, stream, relational, or noisy data). So, some recent works have instead focused on extracting variants of gradual patterns on the numerical data supplied with specific constraints for expressing another kind of knowledge.

3.3.1 Extraction gradual itemsets from stream data:

In [23], an approach based on B-Trees and OWA (Ordered Weighted Aggregation) operator [24, 25] is proposed to mine data streams for gradual patterns. [26] proposes the relational gradual pattern concept, which enables to examine the correlations between attributes from a graduality point of view in multi-relational data.

3.3.2 Extraction gradual itemsets from noisy data:

Fuzzy gradual patterns are revisited in [27] for noisy data where it is often hardly possible to compare attribute values, either because the values are taken from noisy data, or because it is difficult to consider that a small difference between two values is meaningful. An example of a fuzzy gradual pattern could be expressed as “the closer the age of an employee to 46, the higher his/her income”.

3.3.3 Extraction gradual itemsets from temporal data:

Recently, in [5, 28], the authors proposed an approach to extract gradual patterns in temporal data with an application on paleoecological databases to grasp functional groupings of coevolution of paleoecological indicators that model the evolution of the biodiversity over time. [29] introduces a generic method for extracting and analyzing gradual patterns in spatial data at several levels of granularity. The authors apply their method on the Health data to measure potentially avoidable hospitalization related with both societal and financial issues in public policies. More recently, in [30] propose fuzzy temporal gradual patterns to integrate the fact that a temporal lag may exist between changes in some attributes and their impact on others. These fuzzy temporal gradual patterns allow to detect the cases of relevant correlations between the attributes of a database whose changes in the value one attribute causes a ripple effect on other attributes with respect to time. [31] is interested to extract gradual itemset from property graphs where the attributes of the gradual itemsets are information from the graph, and are retrieved from the graph nodes or relationships.

4 Mining gradual itemsets with gradual threshold constraints

In order to avoid the inconsistent gradual patterns and consider the user defined gradualness, we have modified the gradual patterns mining process by introducing the notion of gradual threshold.

The gradualness or gradual threshold is very related to the domain knowledge. For this observation, we define the gradualness as the Definition 7.

Definition 7 (gradual threshold)

Let be a numerical database and an attribute of , a gradual threshold is a user defined value such that a variation of between two tuples and of considered iff

Based on the data distribution, we propose to calculate as follows:

-

1.

Let and be a standard deviation of the values of the attribute . defined from the distribution of the values of is called the gradualness threshold of . It is calculated by the formula 1 where and are two real numbers .

(1) -

2.

Let and be the coefficient of variation (relative standard deviation) of the values of the attribute . is calculated from the distribution of the values of is called the gradualness threshold of and is defined as follow ( and are two real numbers):

(2) -

3.

Let and the component of sorted in increase order, can also calculated as the standard deviation of the different gaps between two consecutive values of . So is calculated using the equation 3 where .

(3)

When , gradualness is considered in terms of increasing and decreasing attribute values, which brings back to the case of state of the art approaches [5, 10, 6].

Example 1

From the Table 1, we can compute the gradual threshold for each numerical attribute. Here, we have consider and .

Definition 8 (Inconsistent gradual pattern)

Let be a gradual pattern and a sequence of objects that satisfy G. is consistence if and only if , , . Where is the gradual threshold of item .

As we stated in the introduction section, in certain domain of application such as medicine, this kind of gradual pattern does not provide information to experts, it is considered noisy or incoherent and therefore can negatively influence experts in decision making. It is important to remember that in this area, the requirements for quality of precision are very high because a bad (resp. Good) decision in most cases can kill (resp. Save) a life. In this paper, we are interested in extracting consistent or interesting gradual patterns.

Definition 9 (Consistent gradual pattern)

Let be a gradual pattern and a sequence of objects that satisfy G. is consistence if and only if , , . Where is the gradual threshold of item .

Consider Table 1, and assume that the data respects the time constraint. Using the Table 2, we can extract the consistent gradual patterns defined in the Table 7

| Using (1) | ; ; ; |

| Using (2) | Nothing |

| Using (3) | ; |

4.1 Algorithm

The algorithm 1 presents the different steps of the proposed method. This section explains these steps.

The steps of the algorithm 1 are described as following:

4.1.1 Initialize the gradual threshold : SetThreshold

It corresponds to the step 1 of Algorithm 1. Depending on the domain knowledge, the expert can set it using his knowledge. In this work, one of the formulas (1), (2) and (3) are applied on to the data. For these formulas, we set the values of the two parameters and equal to 1 and 0 respectively. These values ( and ) are considered as their default values.

4.1.2 Transformation the numerical database to categorical database : Num2Cat

For the algorithms GRITE [6] and T-GPatterns presented in [5], which first transform the numerical database in categorical one, this threshold is applied during the transformation as following:

-

1.

Case of T-GPatterns approach presented in [5]

The database ( and ) resulting from the application of the function on the numerical database , is calculated as following :

-

(a)

-

(b)

-

(c)

else

This function allows to generate more symbols ”o” than the function Num2Cat of the approach [5](see Table 4.a and 4.b) because graduality is considered if and only if the difference between values of attributes exceed the threshold. Table 4 presents how the numerical database of Table 1 is transformed without gradualness threshold application, by application of the formulas (1), formula (3) and formula (2) respectively.

-

(a)

-

2.

Case of GRITE algorithm [6].

The step ”binary matrices generation” of GRITE is modified by the threshold introduction. Let and be two tuples and an attribute. The binary matrices are calculated as following:-

(a)

The matrix of : iff and 0 otherwise.

-

(b)

The matrix of : iff else 0.

Like in the first case, the resulting matrices will be less dense than the matrix obtained with the GRITE algorithm. Table 5 shows how the binary matrix is obtained from the numerical database of Table 1 respectively without the gradualness threshold on the gradual item , with the gradualness threshold on the gradual item and the gradual item . As the computation of the support of a gradual itemset consists in computing the length of the longest path in the graph represented by such binary matrices. We can see from the binary matrices of Table 5 (c) and 5 (d) that introducing gradualness threshold allows to cup the paths in the graph represented by the binary matrices of Table 5 (a) and 5 (b).

It comes out from Table 5 (c) ( with ) that no path reaches to node and no path begins at node . This is not the case of the Table 5 (a) ( without threshold). Thus, by introducing gradualness threshold, we considerably reduce the search space during the mining process. The search space is even more reduced between the Table 5 (e) () and Table 5 (f) () with the gradualness threshold ). More interesting, the Table 5 (f) can even be reduced by removing object as it is isolated; its line and its column do not have any relation with other objects, it is meaningless in a gradual context. This allows to gain memory, and run-time, as deleted objects are not considered during future joins. On Table 5 (e), is deleted: all bits from the column and line are set to . Table 5 (g) represents the final matrix. From Table 5 (g), it is easy to see that the support of the gradual itemset () is equal to as no path begins at nodes , , and no path reaches the nodes , , , there is no path containing more than two objects. From this observation, we can even further reduce Table 5 (g) by removing the lines , , and the columns , , to obtain Table 5 (h) with only four lines and three columns.

-

(a)

| TID | ||||

| + | + | + | + | |

| - | - | - | - | |

| + | + | + | + | |

| - | - | - | - | |

| + | + | + | + | |

| + | + | - | - | |

| - | + | + | + |

| TID | ||||

| o | + | o | + | |

| o | o | o | o | |

| o | o | + | o | |

| - | o | - | o | |

| + | + | + | o | |

| o | o | o | o | |

| - | o | + | + |

| TID | ||||

| o | + | + | + | |

| o | - | - | - | |

| o | + | + | o | |

| - | - | - | o | |

| + | + | + | + | |

| + | o | - | o | |

| - | + | + | + |

| TID | ||||

| + | + | + | + | |

| - | - | - | o | |

| o | + | + | o | |

| - | - | - | o | |

| + | + | + | o | |

| + | + | - | o | |

| - | + | + | + |

| 0 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 1 | 0 | 1 | 0 | 1 | 1 | 0 |

| 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | |

| 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 0 | 1 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 |

| 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | |

| 0 | 0 | 0 | 1 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 1 | 1 | 0 | |

| 0 | 0 | 0 | 0 | 1 | 1 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 1 | 0 | |

| 0 | 1 | 0 | |

| 1 | 1 | 0 | |

| 1 | 1 | 1 |

4.1.3 Gradual itemsets mining : MiningAlgo

For T-GPatterns [5], the remainded step is the procedure . In our proposal, this step is done exactly as proposed by the authors. Introducing the threshold constraints in GRITE or Paraminer algorithm consists of changing the processing step where the binary matrix associated to each attribute is calculated. All other steps (initialize, AND operator and DeleteAloneTuples) are not changed.

4.2 GRAPGT algorithm Proprieties

Correctness. As the GRAPGT algorithm is based on the existing algorithms which is prove to be correct, the threshold introduction step added permits to make more sparse the the transformed matrix, input of the frequent itemsets mining algorithm (Apriori-like). As these algorithms are prove to be correct, GRAPGT is correct too.

Completeness. As the GRAPGT algorithm is based on the prove complet algorithm, the introduction threshold step does not change the this property for the obtained algorithm. The main consequence is the reduction of the gradual frequent itemsets due to the threshold.

Complexity. If the threshold of the different attributes are set by the user, the theoretical complexity remains that of the chose algorithm (GRITE, T-GPatterns,…). But if these thresholds are calculated by the formulas (1), (2), or (3) , the time complexity is impacted by where is the row number and the columns number. But as these algorithms are Apriori-based, this theoretical complexity remains exponential (), the same obtained when .

4.3 Impact of the threshold

After application of the gradualness threshold on the data, some aspects of the expected results are impacted.

4.3.1 On the resulted gradual itemsets

Proposition 1

Let be a numerical database, a set of frequent gradual itemsets obtained using the algorithm T-GPatterns or GRITE, and a set of gradual itemsets obtained by our approach it is true that

Proof

The main effect of the gradualness threshold to introduce more ”o” character in the intermediate transformed matrix. Let (resp. ) be a intermediate binary obtained by T-GPatterns without gradualness threshold (resp. with gradualness threshold), if then because is calculated with . With this observation and due to the fact that is more dense than , all frequent extracted from is also frequent in . This implies that .

4.3.2 Relationship between closed frequent gradual itemsets

Proposition 2

Let be a numerical database and an attribute of such that there exists a row and and ; (resp. ) is the intermediate binary matrix by the transformation of without (resp. with) gradualness threshold application.

If is a closed gradual itemset in such that then is closed gradual itemset in

Proof

From the operation of the closure defined in [9], gradual pattern and its extension forms a maximal rectangle in (rectangle of 1 value). So the ’o’ character put at the column in the matrix (due to the threshold application) consist in deleting a column in the maximal rectangle ; the remained rectangle is maximal, then is closed.

This proposition is also true if is replaced by .

4.3.3 On the support of extracted gradual itemsets

It is clear that after applying the graduality threshold, the support of some gradual itemsets decreases. So during exploration, this fact should be taken into account.

Property 1

Let be a numerical database and an attribute of . Let a minimum support threshold and a gradualness threshold set by a user. For the algorithm T-GPatterns, the following holds:

if then as well as all of its supersets are not frequent in .

Proof

Assume , . Suppose that is a frequent gradual item, according to the gradual itemset definition proposed in [5], this means that there is a list of sequence of consecutive objects such that , for and . So . So, . which contradicts the initial hypothesis.

Property 1 allows to perform dataset reduction before mining process. Hence the whole dataset is not required to compute candidate patterns for a given minimum support and gradualness threshold. The search space containing and all its supersets can be discarded.

5 Experiments

We present in this section an experimental study of the execution time and number of gradual patterns extracted using GRAPGT. We also evaluate the performance in terms of memory usage. It should be recalled here that the issue of the management of the quantity of mined patterns is a great challenge as in many practical applications, the number of patterns mined can prove to be intractable for user-defined frequency threshold. All the experiments are conduced on a computer with 8 GB of RAM, of processor Intel(R) Core(TM) i5-8250U. We compare first of all our R implementation of GRAPGT with the original R implementation of T-Gpatterns [5], and secondly the C++ implementation of GRAPGT with the original c++ implementation of ParaMiner [10].

5.1 Source code

The source code of our proposed algorithm GRAPGT (respectively GRAPGT-ParaMiner) can be obtained from https://github.com/chirmike/GRAPGT (respectively https://github.com/Chirmeni/GRAPGT-Paraminer).

5.2 Data Description

Table 6 presents the characteristics of the datasets used in the experiments for evaluating the performance of our proposed algorithm

| Dataset | #objects | #attributes | Domain | Origin |

| Paleo | 111 | 87 | Paleo-ecology | [5] |

| Cancer | 410 | 30 | Medical | [32] |

| Air quality | 9358 | 13 | ecology | [32] |

| paraMiner-data | 109 | 4413 | Synthetic | [10] |

The datasets described in Table 6 are numerical databases: the first numerical database used is a temporal database (database with a temporal order among objects (or rows)) of paleoecological indicators [5] from Lake Aydat located in the southern part of the Chane des Puys volcanic chain (Massif Central in France); this database contains objects corresponding to different dates identified on the lake record considered, and attributes corresponding to different indicators of paleoecological anthropization (pollen grains) (cf. [5] for more details).

The second numerical database is a temporal database obtained from UCI Machine Learning repository [32] describing the hourly averaged responses from an array of metal oxide chemical sensors embedded in an Air Quality Chemical Multisensor Device; this database contain instances corresponding to the hourly averaged responses and attributes corresponding to the ground truth hourly averaged concentrations for CO, Non Metanic Hydrocarbons, Benzene, Total Nitrogen Oxides (NOx) and Nitrogen Dioxide (NO2) and any more.

The third database is a non temporal Cancer database also taken from UCI Machine Learning repository describing characteristics of the cell nuclei computed from a digitized image of a fine needle aspirate (FNA) of a breast mass; this database used for experiments in Paraminer [10] containing objects described by attributes. The fourth numerical database is a synthetic non-temporal database from Paraminer’s database containing objects and attributes.

5.3 Results

The results of the experiment study on the four databases demonstrate the importance of incorporating the gradual threshold into the data mining process and the significant benefits it provides. This taking into account of gradual threshold in the mining process not only allows users to provide consistent gradual patterns (see Definition 8) extracts imprecise numerical databases, but also to reduce on average by the quantity of frequent gradual patterns to be analyzed, by the extraction time of these frequent gradual pattern and finally, by the size of the memory consumed. Furthermore, the gradual threshold removes noisy / inconsistent gradual patterns.

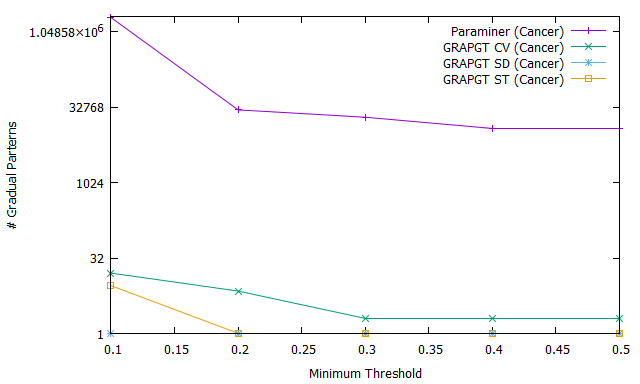

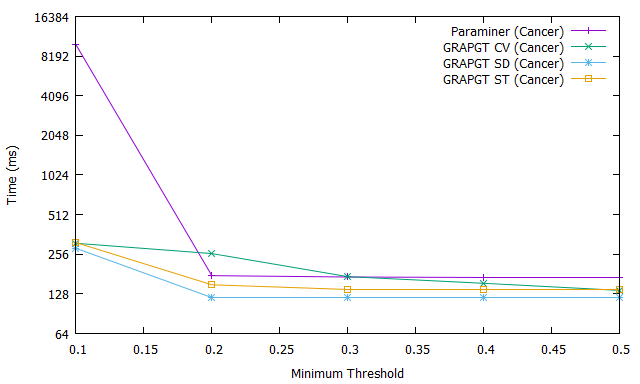

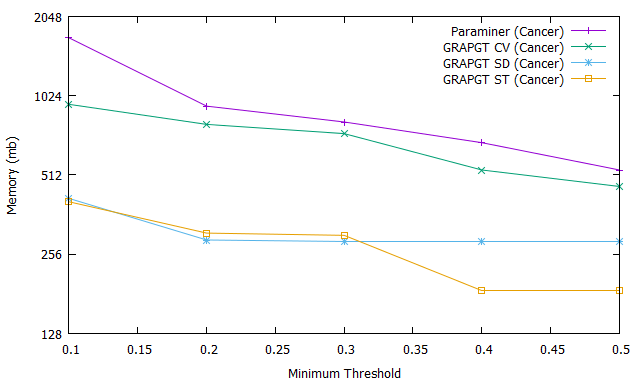

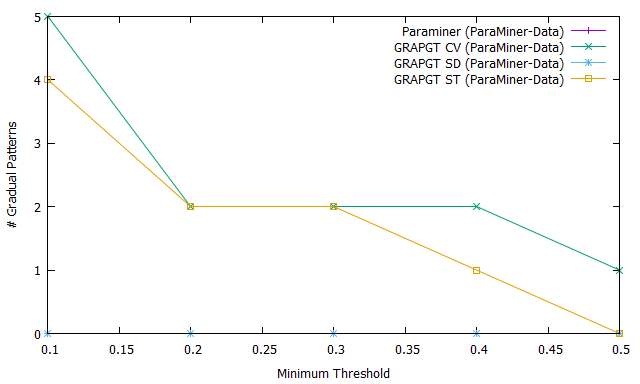

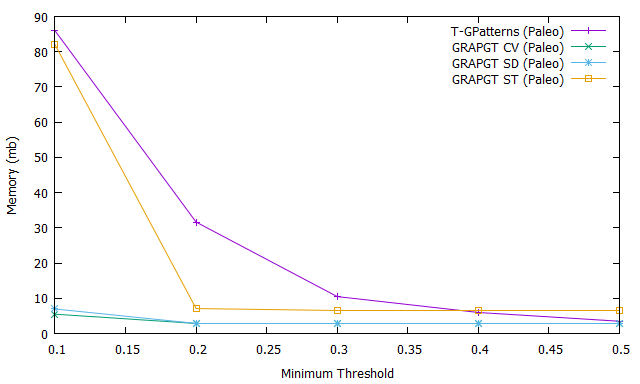

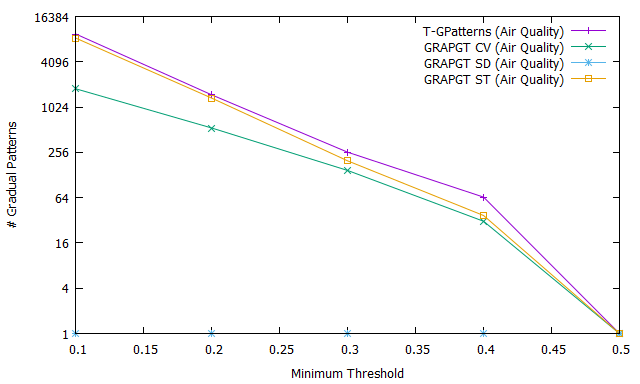

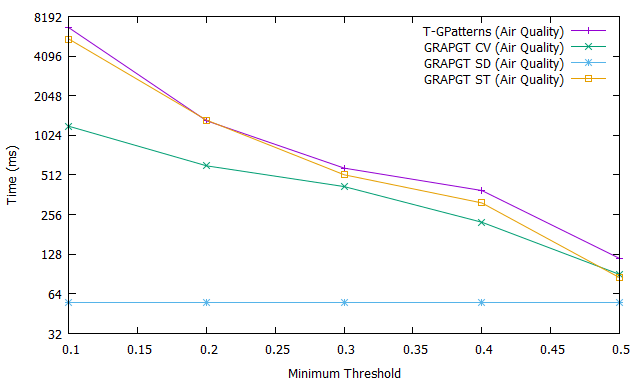

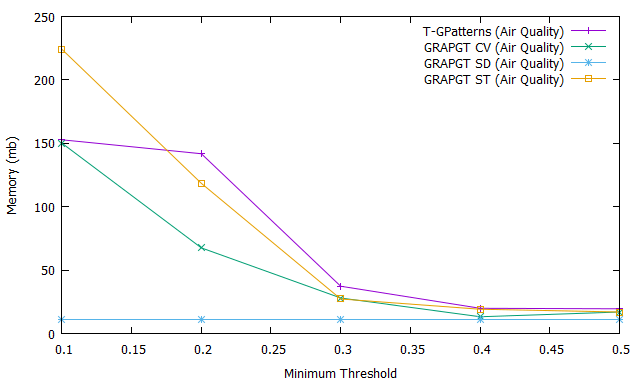

We present these results in two steps : first of all, we present results obtained on the non-temporal databases (Fig. 1 and 2), and secondly, we present results obtained on temporal databases (Fig. 3 and 4). We varied the support threshold in the interval with a step of . Throughout our experiments, we have set to and to .

5.3.1 Comparative experiments : non-temporal databases.

The first experiment compares the execution time, the memory usage and the number of frequent gradual patterns for GRAPGT and ParaMiner on non temporal databases Cancer and ParaMiner-Data (cf Table 6).

Fig. 1.a shows the number of frequent gradual patterns for ParaMiner algorithm (purple curve), GRAPGT CV algorithm (green curve), GRAPGT SD algorithm (blue curve), and GRAPGT ST algorithm (orange curve) on the cancer database when varying the support, with a reduced number of attributes. Figure 1.b shows the runtime of all these algorithms on the same cancer database when varying support, while Figure 1.c, shows the memory usage.

It should be like to remind you that GRAPGT CV, SD and ST are modified versions of the ParaMiner algorithm to which we have integrated different gradual thresholds (coefficient of variation, standard deviation and standard deviation of the deviations). The number of frequents gradual patterns results show that GRAPGT considerably reduces this number than ParaMiner when database have a small attributes : for a value of support threshold equal to it extracts frequent gradual patterns when the gradual threshold is defined by equation or formule (2), while ParaMiner extracts . This is a considerable advantage that should not be overlooked for the end user (the expert), because it is easier for an expert to analyze frequent gradual patterns at the expense of ; in addition, frequent gradual patterns extracted with our approach have an additional information : they are gradual patterns with a strong graduality power. The execution time results show that GRAPGT is faster that Paraminer for handling this database with small attributes when the support threshold increases : for a value of support threshold equal to it answers in ms when coefficient of variation considered like gradual threshold, while Paraminer needs hours and minutes. GRAPGT remains better in memory used compared to ParaMiner. Most the support is great, less execution time, number and memory are great.

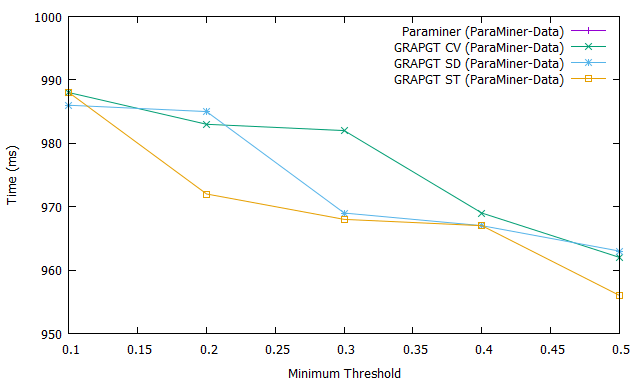

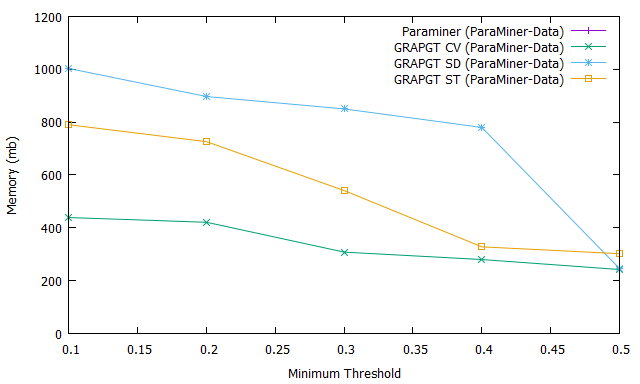

Fig. 2 shows the number of frequent gradual patterns extracted (Fig. 2.a), the execution time (Fig. 2.b) and the memory usage (Fig. 2.c) for previous four algorithms when varying the support on numerical database ParaMiner-Data. We can see that figures Fig. 2.a, 2.b and 2.c each have curves instead of . This observation is due to the fact that the ParaMiner algorithm without gradual threshold does not run until the end (it crashes memory) on the ParaMiner-Data database containing transactions and attributes. This database is more dense than the previous one, as the complexity lies in the number of attributes which determines the number of frequent gradual patterns (problem of combinatorial explosion).

However, when we introduce the graduality threshold, we manage to execute and extract a reduced number of frequent gradual patterns (e.g. when the coefficient of variation is considered as gradual threshold for a support equal to ). This result shows that our GRAPGT approach is scalable compared to ParaMiner because it gives a result where Paraminer fails. It goes without saying that the introduction of the graduality threshold considerably reduces the execution time, the memory usage and the number of frequent gradual patterns on databases with a small or large number of attributes ; in addition, these results show that it is easier for the end user or the expert to analyze the frequent gradual patterns extracted from approaches taking the gradual threshold into account than the approaches not taking this threshold into account.

5.3.2 Comparative experiments : temporal databases.

The next experiment compares the execution time, the memory usage and the number of frequent gradual patterns for GRAPGT and T-GPatterns on temporal databases Paleo and Air quality (cf Table 6). It is good to remember that the choice to compare our approach with the approaches of extraction of frequent gradual patterns on numerical temporal databases is due to the fact that the latter aims at the same objective as us : extract useful and relevant informations from a numerical database that respect certain constraints of the application domain. Taking these constraints into account has a positive impact for users. It makes it possible to reduce the number of frequent gradual patterns facilitating their analysis by the users.

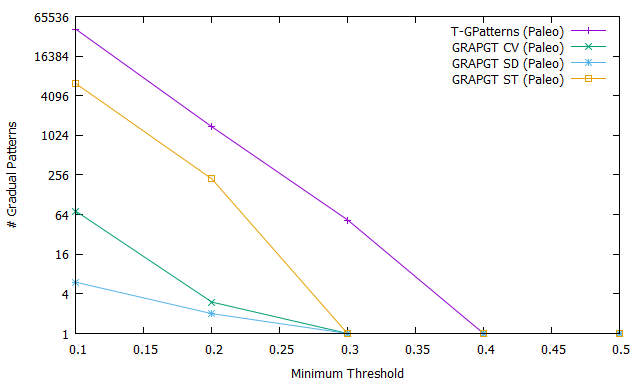

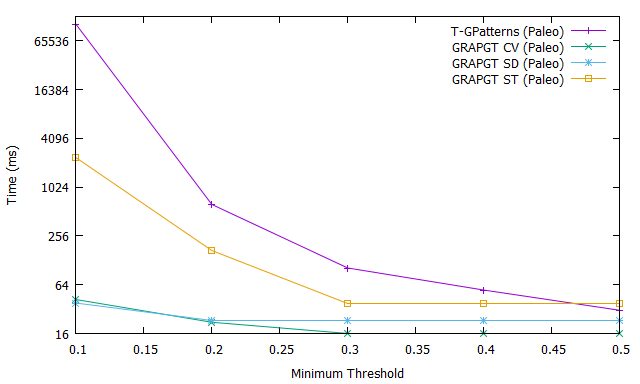

Thus, Fig 3.a shows the number of frequent gradual patterns for T-GPatterns algorithm (purple curve), GRAPGT CV algorithm (green curve), GRAPGT SD algorithm (blue curve), and GRAPGT ST algorithm (orange curve) on the paleoecology database when varying the support. In this Figure, when minimum support is equal to , the number of frequent gradual patterns extract by T-GPatterns without take into account gradual threshold is equal to ; this number is considerably reduce when the gradual threshold is taken into account : it is equal to when the gradual threshold is define by equation 1 (GRAPGT CV), when the gradual is defined by equation 2 (GRAPGT SD) and when the gradual threshold is defined by equation 3 (GRAPGT ST). Similarly, when the support increases, the number of frequent gradual patterns extracted from T-GPatterns remains greater than the number extracted from GRAPGT CV, GRAPGT SD and GRAPGT ST. In this context, these results show that it is easier for an user or an expert to analyze the frequent gradual patterns extracted from approaches taking the graduality threshold into account than the approaches not taking this threshold into account.

Fig. 3.b shows the evolution of the extraction time of the frequent gradual patterns of each algorithm as a function of the variation of the support. Here the end users will also save time if they integrate the gradual threshold into the mining process; this is easily seen in this Fig. 3.b. Fig. 3.c shows the memory usage for these four algorithms when varying support. It is easy to see that our GRAPGT algorithm consumes less memory than the T-GPatterns algorithm.

Fig 4 shows the evolution of the number (Fig. 4.a), the execution time (Fig. 4.b) and the memory usage (Fig. 4.c) of extraction frequent gradual patterns as a function of the variation of the support threshold after application of the T-GPatterns [5] algorithm (purple curve), GRAPGT CV algorithm (green curve), GRAPGT SD algorithm (blue curve), and GRAPGT ST algorithm (orange curve) on the air quality database. Unlike the paleoecological database, this database has a large number of attributes ().

The objective here is to show the impact of the gradual threshold on databases with a large number of attributes. We can easily see that the curves of the graph are monotonically decreasing which means that the number of frequent gradual patterns decreases when the support increases. In addition, the introduction of the gradual threshold considerably reduces the extraction time and number of frequent gradual patterns.

5.3.3 Consistent gradual patterns :

In this section, we present a list of the consistent (cf. Definition 8) gradual patterns that we were able to extract in the different databases that we presented above. We recall that a gradual pattern is said to be consistent if it has a great power of variation (increase or decrease).

6 Discussion

6.1 Memory space

The memory space used to keep the threshold vectors seems to augment the memory needs of the algorithm. This does not change the space complexity of the algorithm. But in fact during the experiment, we observe in some cases existing algorithms (ParaMiner) does not execute due to the memory space insufficiency while with the threshold introduction, our method runs and out some itemsets.

6.2 Execution time and complexity

The imprecision processing by the threshold introduction augments one step in the algorithm. Theoretically, the overall algorithm complexity does not change. The imprecision processing could also augments the execution time; but it is not the case. The introduced threshold permits to reduce the density of the intermediate (binary) matrix, which permit to reduce the execution time of the remained steps. This explains why for all datasets used in the execution time of the GRAPT algorithm is always lower than other algorithms.

6.3 Extracted gradual itemsets

The GRAPGT proposed due to the threshold introduction provides less gradual itemsets than the classical method. It then eliminates some gradual itemsets whose interpretation could also be useful for an expert. But the fact that the number of resulted gradual itemsets is lower, facilitates the expert interpretation work. So instead of providing a million of itemsets, a few are presented to the expert and he can easily manage it.

7 Conclusion and Perspectives

This paper proposes an approach to automatically extract gradual patterns by taking into account the user preferences about each attribute of the database during the mining process. An algorithm named GRAPGT (GRAdual Patterns with Gradualness Threshold) based on the integration of the constraints of variation threshold from which to consider a gradualness (increase/decrease) into traditional gradual patterns mining algorithms was proposed for extracting these patterns to avoid drawbacks of traditional gradual patterns mining algorithms on some data like noisy data; the graduality between two objects is no longer considered simply in terms of increase or decrease, but it is considered if and only if, the difference in attribute value between two objects is greater than a certain quantity (gradual threshold). Experimental results obtained on several real world databases have shown that the introduction of the gradual threshold in the gradual pattern mining process not only significantly reduces the amount of frequent gradual patterns to be analyzed, but also saves considerable time and memory. The proposed algorithm can returns a small set of gradual patterns to the user while filtering many patterns that are not meeting specific gradualness requirements. Moreover, it also allows the generation of gradual patterns on certain large databases where some algorithms in the literature fail for the reason of the search space very huge as show the experimental results (ParaMiner run on the ParaMiner-Data database fail). However, it would be interesting to study the impact of the gradualness threshold on the quality of frequent gradual patterns, and also on the choice of support threshold. This last point is a matter of work in progress.

References

- [1] L. A. Zadeh, G. J. Klir, and B. Yuan, Fuzzy sets, fuzzy logic, and fuzzy systems: selected papers. World Scientific, 1996, vol. 6.

- [2] E. Duneja and A. Sachan, “A survey on frequent itemset mining with association rules,” IJCA, vol. 46, no. 23, pp. 18–24, 2012.

- [3] J. Vizhi and T. Bhuvaneswari, “Data quality measurement on categorical data using genetic algorithm,” arXiv preprint arXiv:1202.3215, 2012.

- [4] S. Jagtap, B. Kodge, G. Shinde, and P. Devshette, “Role of association rule mining in numerical data analysis,” World Academy of Science, Engineering and Technology, International Journal of Computer, Electrical, Automation, Control and Information Engineering, vol. 6, no. 1, pp. 122–125, 2012.

- [5] J. Lonlac, Y. Miras, A. Beauger, V. Mazenod, J.-L. Peiry, and E. Mephu Nguifo, “An approach for extracting frequent (closed) gradual patterns under temporal constraint,” in FUZZ-IEEE, 2018, pp. 1–8.

- [6] L. Di-Jorio, A. Laurent, and M. Teisseire, “Mining frequent gradual itemsets from large databases,” in IDA, Lyon, France, August 31 - September 2, 2009, pp. 297–308.

- [7] L. Di-Jorio, A. Laurent, and M. Teisseire, “Fast extraction of gradual association rules: a heuristic based method,” in CSTST, Cergy-Pontoise, France, October 28-31, 2008, pp. 205–210.

- [8] A. Laurent, B. Negrevergne, N. Sicard, and A. Termier, “Pgp-mc: Towards a multicore parallel approach for mining gradual patterns,” in DASFAA. Springer, 2010, pp. 78–84.

- [9] S. Ayouni, A. Laurent, S. B. Yahia, and P. Poncelet, “Mining closed gradual patterns,” in ICAISC, Zakopane, Poland, June 13-17, Part I, 2010, pp. 267–274.

- [10] B. Négrevergne, A. Termier, M. Rousset, and J. Méhaut, “Para miner: a generic pattern mining algorithm for multi-core architectures,” Data Min. Knowl. Discov., vol. 28, no. 3, pp. 593–633, 2014.

- [11] E. Hüllermeier, “Association rules for expressing gradual dependencies,” in PKDD, Helsinki, Finland, August 19-23, Proceedings, 2002, pp. 200–211.

- [12] T. Calders, B. Goethals, and S. Jaroszewicz, “Mining rank-correlated sets of numerical attributes,” in KDD, 2006, pp. 96–105.

- [13] F. Berzal, J. C. Cubero, D. Sánchez, M. A. V. Miranda, and J. Serrano, “An alternative approach to discover gradual dependencies,” International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, vol. 15, no. 5, pp. 559–570, 2007.

- [14] E. Mephu Nguifo and P. Njiwoua, “Using lattice-based framework as a tool for feature extraction,” in Machine Learning: 10th European Conference on Machine Learning, Chemnitz, Germany, April 21-23, 1998, pp. 304–309.

- [15] N. Pasquier, Y. Bastide, R. Taouil, and L. Lakhal, “Discovering frequent closed itemsets for association rules,” in ICDT, Jerusalem, Israel, January 10-12, 1999, pp. 398–416.

- [16] S. Ben-Yahia, T. Hamrouni, and E. Mephu Nguifo, “Frequent closed itemset based algorithms: a thorough structural and analytical survey,” SIGKDD Explorations, vol. 8, no. 1, pp. 93–104, 2006.

- [17] S. Ben-Yahia, G. Gasmi, and E. Mephu Nguifo, “A new generic basis of ”factual” and ”implicative” association rules,” Intelligent Data Analysis, vol. 13, no. 4, pp. 633–656, 2009.

- [18] B. Ganter and R. Wille, Formal Concept Analysis - Mathematical Foundations. Springer, 1999.

- [19] E. Mephu Nguifo, “Galois lattice: A framework for concept learning-design, evaluation and refinement,” in Sixth International Conference on Tools with Artificial Intelligence, ICTAI ’94, New Orleans, Louisiana, USA, November 6-9, 1994, pp. 461–467.

- [20] A. Laurent, M. Lesot, and M. Rifqi, “GRAANK: exploiting rank correlations for extracting gradual itemsets,” in FQAS, Roskilde, Denmark, October 26-28, 2009, pp. 382–393.

- [21] T. D. T. Do, A. Termier, A. Laurent, B. Négrevergne, B. O. Tehrani, and S. Amer-Yahia, “PGLCM: efficient parallel mining of closed frequent gradual itemsets,” Knowl. Inf. Syst., vol. 43, no. 3, pp. 497–527, 2015.

- [22] T. Uno, M. Kiyomi, and H. Arimura, “LCM ver. 2: Efficient mining algorithms for frequent/closed/maximal itemsets,” in FIMI, Proceedings of the IEEE ICDM Workshop on Frequent Itemset Mining Implementations, Brighton, UK, November 1, 2004.

- [23] J. Nin, A. Laurent, and P. Poncelet, “Speed up gradual rule mining from stream data! A b-tree and owa-based approach,” J. Intell. Inf. Syst., vol. 35, no. 3, pp. 447–463, 2010.

- [24] R. R. Yager, “Families of owa operators,” Fuzzy Sets and Systems, vol. 59, no. 1, pp. 125–148, 1993.

- [25] ——, “On ordered weighted averaging aggregation operators in multicriteria decisionmaking,” IEEE Trans. Systems, Man, and Cybernetics, vol. 18, no. 1, pp. 183–190, 1988.

- [26] N. Phan, D. Ienco, D. Malerba, P. Poncelet, and M. Teisseire, “Mining multi-relational gradual patterns,” in SDM, 2015, pp. 846–854.

- [27] S. Ayouni, S. B. Yahia, A. Laurent, and P. Poncelet, “Fuzzy gradual patterns: What fuzzy modality for what result?” in SoCPaR, 2010, pp. 224–230.

- [28] J. Lonlac and E. Mephu Nguifo, “A novel algorithm for searching frequent gradual patterns from an ordered data set,” vol. 24, no. 5, 2020. to appear.

- [29] T. Ngo, V. Georgescu, A. Laurent, T. Libourel, and G. Mercier, “Mining spatial gradual patterns: Application to measurement of potentially avoidable hospitalizations,” in SOFSEM, 2018, pp. 596–608.

- [30] D. Owuor, A. Laurent, and J. Orero, “Mining fuzzy-temporal gradual patterns,” in FUZZ-IEEE, 2019, pp. 1–6.

- [31] F. Shah, A. Castelltort, and A. Laurent, “Extracting fuzzy gradual patterns from property graphs,” in IEEE International Conference on Fuzzy Systems, FUZZ-IEEE, New Orleans, LA, USA, June 23-26. IEEE, 2019, pp. 1–6.

- [32] K. Bache and M. Lichman, “UCI machine learning repository,” 2013. [Online]. Available: http://archive.ics.uci.edu/ml