Discriminative feature encoding for

intrinsic image decomposition

Abstract

Intrinsic image decomposition is an important and long-standing computer vision problem. Given an input image, recovering the physical scene properties is ill-posed. Several physically motivated priors have been used to restrict the solution space of the optimization problem for intrinsic image decomposition. This work takes advantage of deep learning, and shows that it can solve this challenging computer vision problem with high efficiency. The focus lies in the feature encoding phase to extract discriminative features for different intrinsic layers from an input image. To achieve this goal, we explore the distinctive characteristics of different intrinsic components in the high dimensional feature embedding space. We define feature distribution divergence to efficiently separate the feature vectors of different intrinsic components. The feature distributions are also constrained to fit the real ones through a feature distribution consistency. In addition, a data refinement approach is provided to remove data inconsistency from the Sintel dataset, making it more suitable for intrinsic image decomposition. Our method is also extended to intrinsic video decomposition based on pixel-wise correspondences between adjacent frames. Experimental results indicate that our proposed network structure can outperform the existing state-of-the-art.

Index Terms:

intrinsic image decomposition; deep learning; feature distribution; data refinement.I Introduction

In terms of intrinsic image decomposition, the albedo image indicates the surface material’s reflectivity which is unchanging under different illumination conditions, while the shading image accounts for illumination effects due to object geometry and camera viewpoint [1]. It is an ill-posed problem to reconstruct these two intrinsic images from a single color image , which has the formation model:

| (1) |

To solve this challenging inverse image formation problem, many researchers have tried applying physically motivated priors as constraints to disambiguate the decomposition [2, 3, 4, 5, 6, 7, 8, 9]. These methods usually represent the priors in the form of energy terms and solve the decomposition problem through graph-based inference algorithms. With the surge of ground-truth intrinsic decomposition data [10, 11, 12], data-driven deep learning methods [1, 13, 14, 15, 16, 17] have achieved promising decomposition results and have drawn more and more research interest. However, fully-supervised methods require high-quality and densely-labelled decompositions, which are expensive to acquire. To overcome this problem, methods training across different datasets [17], training on synthetic datasets [15, 17], adding additional constraints [16] and reusing physically motivated priors [1] have been proposed.

When developing their specific deep learning techniques, previous methods usually extract features via a shared encoder, and then use different decoders to disentangle information for specific intrinsic layers. Observing the different distributions between albedo and shading in the gradient domain [2], it is natural to assume that features representing different intrinsic layers can be separated in the embedding space. With the features separated during the encoding phase, decoders can be relieved from distilling clues for specific targets and focus on the reconstruction procedure. This idea motivates the research in this paper.

We propose a novel two-stream encoder-decoder network for intrinsic image decomposition. In particular, our feature distribution divergence (FDD) constraint is designed to encourage the two encoders to extract distinctive features for different intrinsic layers. Our feature distribution consistency (FDC) constraint is used to encourage the features of a reconstructed intrinsic layer to have a similar distribution pattern to ground-truth decompositions. Moreover, we provide an approach to deal with the illumination inconsistency between the ground truth shading and input images in the MPI Sintel dataset, making it more suitable for intrinsic image decomposition. We also provide an intrinsic decomposition method for video data based on pixel-wise correspondences between adjacent frames. This work is an extension of our previous published workshop paper [18], giving more detailed method descriptions, novel technical contributions, and more comprehensive experiments.

The major contributions of this work are:

A novel two-stream encoder-decoder network for intrinsic image decomposition, in which discriminative feature encoding is achieved via feature distribution divergence and feature distribution consistency constraints.

A data refinement algorithm for the MPI Sintel dataset, producing a more physically consistent dataset that better suits the intrinsic decomposition task.

Experimental results on various datasets to demonstrate the effectiveness of our proposed method, including experimental extension to decomposition of video data.

II Related work

II-A Approaches

Intrinsic image decomposition is a long standing computer vision problem. However, it is a seriously ill-posed problem to recover an albedo layer and a shading layer from a single color image [15]. In recent decades, considerable effort has been devoted to this challenging problem. These approaches can be coarsely classified into optimization-based methods using physically motivated priors, and deep learning based, data-driven methods [15, 19]. There are also approaches using multiple images as input [20, 21, 22, 23, 24, 25], treating the reflectance as a constant factor with changing illumination. These methods require the images to be captured by a static camera with varying illumination. A generative adversarial network (GAN) based domain transfer framework has also applied to image layer separation tasks [26]. Additional cues including depth maps [27, 28, 29, 30] and near-infrared images [31] are also taken into account in some work. Here, we focus on key works recovering intrinsic images from a single input.

II-B Physically motivated priors based methods

To solve this ill-posed intrinsic decomposition problem, researchers have derived several physically-inspired priors to constrain the solution space [15]. Land et al. [2] proposed the retinex algorithm, exploring the different properties of intrinsic components in the gradient domain: large derivatives are perceived as changes in reflectance properties, while smoother variations are seen as changes in illumination. Based on this assumption, many priors for intrinsic image decomposition have been explored. Derived from a piece-wise constant property, reflectance sparsity [3, 4] and low-rank reflectances [8] have been used as constraints. Other constrains include the distribution difference in the gradient domain [32, 33, 34], non-local textures [5, 6], shape and illumination [7], and user strokes [8, 9]. These hand-crafted priors are not likely to be valid across complex datasets [19]. Bi et al. [32] presented an approach using the norm for piece-wise image flattening, and proposed an algorithm for complex scene-level intrinsic image decomposition. Li et al. [33] presented a method to automatically extract two layers from an image based on differences in their distributions in the gradient domain. Sheng et al. [34] proposed an approach based on illumination decomposition, in which a shading image is decomposed into drift and step shading channels based on different distribution properties in gradient domain. Based on an analysis of the logarithmic transformation, Fu et al. [35] introduced a weighted variational model to refine the regularization terms for intrinsic image decomposition. Later, Fu et al. [36] presented an algorithm incorporating a reflectance sparseness regularizer based on the norm and a shading smoothness regularizer based on total variation. Non-local texture constraints [5, 6] are used to find pixels with the most similar reflectance within an image. Krebs et al. [37] developed a method for intrinsic image decomposition from a single RGB or multispectral image, taking the mathematical properties of the mean and standard deviation along the spectral axis into consideration. Although these methods restrict the solution space to a feasible region, such specifically designed priors cannot hold under complex conditions. The results are largely influenced by the parameter settings, which need expert knowledge. Our method differs in that it is data driven, and parameters are automatically learned from the dataset.

II-C Deep learning methods

Thanks to the public availability of intrinsic image datasets such as the MIT intrinsic dataset [10], the MPI Sintel dataset [11] and Intrinsic Images in the Wild (IIW) [12], application of deep learning to intrinsic decomposition has surged [13, 38, 39, 40, 41]. Direct intrinsics [14] provided the first entirely deep learning model that directly outputs albedo and shading layers given a color image. Results from this method are blurred due to down-sampling during encoding and deconvolution during decoding.

Facing the fact that high-quality and densely-labelled intrinsic images are expensive to acquire, many methods have been developed to train models with additional constraints [16, 42], reusing physically motivated priors [1, 43, 44, 45, 46], expanding the dataset with synthetic images [15, 47, 17] or training across datasets [17].

Fan et al. [16] provided a network structure using a domain filter between edges in the guidance map to encourage piece-wise constant reflectance. [42] used region masks to guide the separation of different intrinsic components. By utilizing additional constraints, the solution space for the problem is further restricted. Seo et al. [43] proposed an image-decomposition network, which makes use of all the three premises regarding consistency from retinex theory. In this work, pseudo images (generated color-transferred multi-exposure images) are used for training. Baslamisli et al. [1] presented a two-stage framework to firstly split the image gradients into albedo and shading components, which are then fed into decoders to predict pixel-wise intrinsic values. In their later work [44], the gradient descriptors for albedo and shading are derived from a physics-based reflection model and used to compute the shading map directly from RGB image gradients. [45] and [46] derived fine-grained shading components from a physics-based image formation model, in which the shading component is further decomposed into direct and indirect components, and shape-dependent/independent ones. These works proposed novel methods by revisiting physically motivated priors. Shi et al. [15] trained a model to learn albedo, shading and specular images on a large-scale object-level synthetic dataset by rendering ShapeNet [48]. Sial et al. [47] trained an intrinsic decomposition model on a synthetic ShapeNet-based scene dataset which has more realistic lighting effects. Li et al. [17] presented an end-to-end learning approach that learns better intrinsic image decomposition by leveraging datasets with different types of labels.

The majority of these methods extract features via a shared encoder, and then use different decoders to disentangle information for specific intrinsic layers. In contrast to these works, we try to exploit the difference between intrinsic components in feature space through a novel two-stream framework. With the features separated in the encoding phase, decoders can be relieved from distilling clues for specific targets and focus on the reconstruction procedure.

II-D Intrinsic video

Kong et al. [49] defined intrinsic video estimation as the problem of extracting temporally coherent albedo and shading from video alone. Ye et al. [50] proposed a probabilistic approach to propagate the reflectance from the initial intrinsic decomposition of the first frame. In order to achieve temporal consistency, these methods rely on optical flow to provide correspondences across time. Meka et al. [51] presented the first approach to tackle the hard intrinsic video decomposition problem at real-time frame rates. This method applies global consistency constraints in space and time based on random sampling. Lei et al. [52] presented a novel and general approach for blind video temporal consistency. This method is only trained on a pair of original and processed videos directly instead of a large dataset. In this paper, we simply extend our intrinsic image decomposition method to video based on optical flow, preserving temporal consistency during the decomposition process.

III Method

III-A Network structure

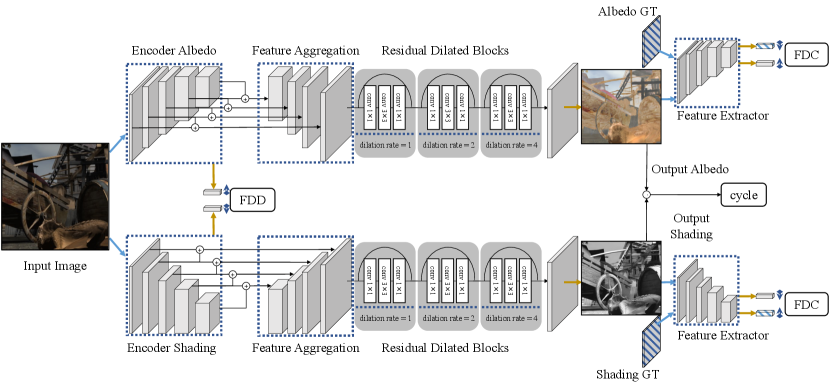

Our network architecture is visualized in Figure 1. The framework consists of two streams of encoder-decoder sub-networks. One performs albedo image reconstruction, and the other, shading image reconstruction. Taking the albedo sub-network for example, the input image is passed through a convolutional encoder to extract multi-level features, which are then aggregated by sequences of upsampling, concatenation, and convolution. In the decoding phase, the fused multi-scale features are fed into a sequence of three residual dilated blocks to reconstruct the albedo intrinsic image. The shading sub-network has the same structure as the albedo sub-network. In practice, we adopt VGG-19 [53] pretrained on ImageNet [54] as the initial encoder.

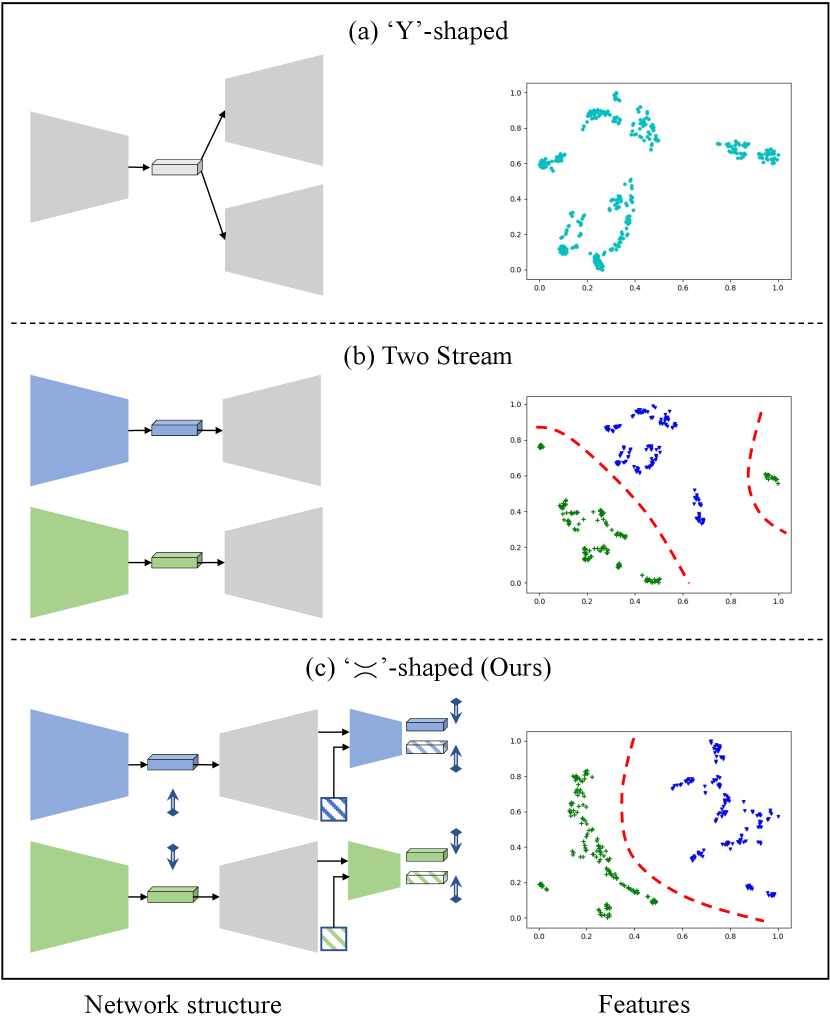

Previous works usually use a shared encoder to extract features containing both albedo and shading information. Different decoders are then applied to distill clues from the comprehensive features for specific intrinsic image reconstruction. The ‘Y’-shaped framework can be formulated as:

| (2) | ||||

where and denote the feature encoder and decoder respectively. and represent their corresponding trainable parameters. In this equation, we intend to represent as , in which the subscript of the function symbol means that the trainable parameters are different with respect to specific intrinsic components, while the network frameworks are the same.

Unlike such methods, our network design has two encoders for albedo and shading images respectively. In this paper, we denote this structure as an ‘’-shaped framework:

| (3) | ||||

Using this framework, the encoders (, ) are able to extract features more pertinent to their reconstruction targets (albedo, shading). In Figure 2, we visualize the feature distributions of different network structures, which explains our idea pictorially.

In each row of Figure 2, a feature embedding visualization using t-SNE is provided on the right. Each data point represents a feature extracted by the encoder, which is then fed into the corresponding intrinsic image reconstruction decoder. We use the same color coding for the embedding’s data points and the extracted feature vectors from the simplified network structure. The proposed ‘’-shaped framework (given in detail in Figure 1) results in a better feature embedding. For instance, in the embedding space, the features for different intrinsic components are better separated.

The rest of this section introduces the core idea and detailed design of the discriminative feature encoding. Then, important constraints for our intrinsic decomposition network are explained.

III-B Discriminative feature encoding

III-B1 Basis

Our work is inspired by Land et al. [2]: the retinex approach assumes that albedo and shading layers possess different properties in the gradient domain. By utilizing such discriminative properties, the intrinsic decomposition results can be improved. In this work, we study and exploit the discriminative properties in a more general convolutional feature space. We next describe the proposed discriminative feature encoding in detail.

III-B2 Feature distribution divergence

As Figure 1 shows, the encoding phase consists of multiple (convolution, relu, maxpooling) blocks, through which the input signal is encoded into several different abstraction levels. The multi-scale features are denoted , in which represents the output feature of the block. We define the feature distance function as , where denotes the feature channel number and the input signal has spatial size :

| (4) | ||||

Feature distance measurement is based on the cosine distance between two vectors and norm. In Eq. (4), and represent features from the albedo encoder and the shading encoder respectively. is the inner product in Euclidean space, ;, and represents a spatial location in a feature map. is a distance rescaling function in the form of a modified sigmoid function to ensure . We use

The feature distribution divergence is now formulated as:

| (5) |

where is the weight for the feature distance for abstraction level . Empirically, we use five different levels of abstraction in our experiments (). We set and , .

III-B3 Feature distribution consistency

The feature distribution divergence aims to increase the distance between the feature vectors embedded by different encoders. However, this is not sufficient for discriminative feature encoding. The core idea of Fisher’s linear discriminant is to maximize the distance between classes and minimize the distance within classes simultaneously. As an analogue of that, along with the feature distribution divergence described above, we use the feature perceptual loss [55] between the predicted and ground truth intrinsic images to constrain the encoding process, encouraging the embedded features to fit the real distribution.

We use the same distance measurement as for the feature distribution divergence. denotes the feature distance in the abstraction level.

The feature distribution consistency is formulated as:

| (6) | |||

where is a weight. Note that represents the feature similarity between and . Minimizing Eq. (6) encourages the predicted and ground truth intrinsic images to have similar perceptual features. In practice, the encoders are reused to extract features from the predicted and target results in our framework, so the embedded feature distribution can be optimized directly during training. Empirically, we set and , .

III-C Basic supervision constraints

III-C1 Use of losses

Besides the above constraints for discriminative feature encoding, several basic supervision losses are adopted to train the intrinsic image decomposition network.

As described in Eq. (3), given an image , the albedo image and the shading image are predicted through trained and . With densely-labelled intrinsic images and as ground truth data, we constrain the pixel-wise predictions using the reconstruction loss and the gradient loss .

III-C2 Reconstruction loss

We use the loss combined with the SSIM (structural similarity index [56]) loss as the reconstruction loss:

| (7) | ||||

where measures the structural similarity of images and . The SSIM loss is , indicating structural dissimilarity. Empirically, we set and . is computed pixel-wise.

The cycle loss is used to encourage the product of the predicted and to be similar to that of the input image .

III-C3 Gradient loss

We also use image gradients as supervision to help preserve details in the intrinsic images:

| (8) | |||

where are the image gradients in the and directions.

For datasets with ground truth decomposition like the MIT intrinsic dataset and MPI Sintel, the total loss is constructed as:

| (9) |

Empirically, we set .

III-D Adjustment for sparsely-labelled data

III-D1 Sparse labelling

As well as the densely-labelled datasets mentioned above, recently, the sparsely-labelled dataset IIW has become available, with a larger number of real-world images. To apply our core idea to this situation, we must slightly adjust the training framework, as we first explain, and then give the loss functions measuring the intrinsic image reconstruction quality.

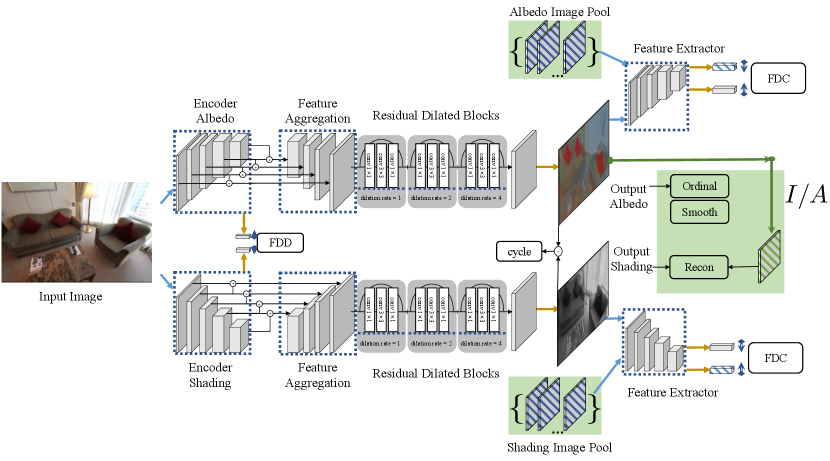

III-D2 Framework adjustment

In order to train on the sparsely-labelled IIW dataset, there are a few barriers to overcome. Figure 3 shows the adjustments made to our framework.

One problem is the lack of dense supervision during the albedo reconstruction procedure. The reconstruction loss in Eq. (7) and the gradient loss in Eq. (8) both need pixel-wise dense supervision. However, the IIW dataset only has sparse annotations of reflectance comparisons at selected points of the images. Therefore, we have to apply alternative constraints utilizing sparse labelling. Specifically, we use the ordinal loss to measure the difference between the output albedo image and the annotated input. Furthermore, smoothness constraints are applied to model the smoothness prior of the albedo component.

A further problem is the lack of shading ground truth, which is necessary in our two-stream training framework. The core idea of the feature distribution divergence is to extract distinctive features corresponding to different target intrinsic layers from the same input image. However, there is no annotation for the shading layers in this dataset. To circumvent the lack of ground truth data, we directly synthesize the target shading image from the input and the reconstructed albedo , using Eq. (1). Therefore, the synthesized shading image can be used as dense supervision.

Last but not least, the lack of reference intrinsic images causes problems for computing the feature distribution consistency. The FDC is designed to constrain the intrinsic image to have a similar distribution to the corresponding real reference. In detail, this constraint is achieved by minimizing the feature perceptual loss between the reconstructed and reference images. However, dense ground truth images are not provided in the IIW dataset, making ground truth features unavailable. To solve this problem, we maintain an image pool for each training stream, in which batches of reconstructed intrinsic images are gathered as the guidance for feature perceptual loss.

III-D3 Training constraints

As noted, we thus modify the constraints to suit the sparse annotations in the IIW dataset. We now describe the ordinal loss and the smoothness constraints used.

We first consider the ordinal loss. Since dense ground truth labels are not available in [12], it introduced the weighted human disagreement rate (WHDR) as an error metric. Following [17], we use an ordinal loss based on WHDR as the sparse supervision term.

The ordinal loss is obtained by accumulating all the annotated pairs in the albedo image:

| (10) |

where represents the error for a pair of annotated pixels in the predicted albedo image . A detailed definition is provided in Appendix A.

We next consider smoothness priors: we adopt the same ones as [17]. The smoothness constraints comprise the albedo smoothness constraint and the shading smoothness constraint . The albedo component is constrained using a multi-scale smoothness term, through which the albedo layer reconstruction is encouraged to be piecewise constant. Shading smoothness is constrained using a densely-connected term. Detailed definitions are provided in Appendix A.

III-E Adjustment for video data

The MPI Sintel dataset is composed of short films, so it naturally has temporal consistency. We also investigated a suitable framework for intrinsic decomposition of such video data.

For video intrinsic decomposition, adjacent pairs of frames are input into our two-stream networks to get the corresponding intrinsic layers. In addition to using the single image decomposition framework, optical flow is computed from the sequential input images, and used to provide temporal consistency guidance for the output intrinsic layers.

Optical flow is typically used to construct temporal correspondences between two adjacent frames, assuming that the pixel intensities of an object do not change between consecutive frames, and that neighbouring pixels have similar motion. In this work, the optical flow field of pairs of consecutive input images is obtained directly from the MPI Sintel dataset. Besides the single image intrinsic decomposition loss in Eq. (9), the optical flow is used to enhance the temporal consistency of the output intrinsic layers:

| (12) | ||||

where is the pixel in the albedo image of frame . We denote the optical flow map between the consecutive frames as . The mask records which pixels have valid optical flow value. if pixel is occluded, and is otherwise. We use and to balance the importance of the temporal consistency terms between albedo and shading layers.

IV Intrinsic data refinement

IV-A Basic approach

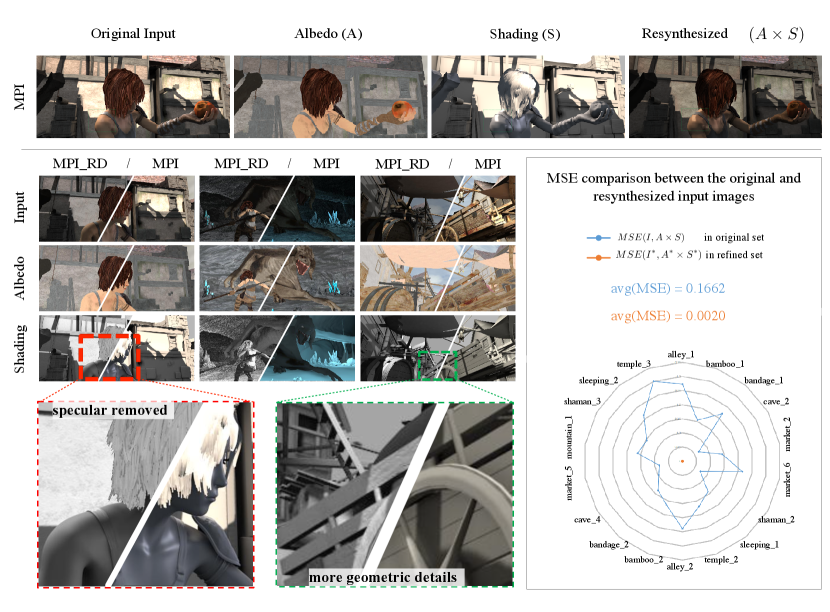

The MPI Sintel dataset [11] is a publicly-available densely-labelled dataset containing complex indoor and outdoor scenes. It was originally designed for optical flow evaluation. For research into intrinsic image decomposition, ground truth shading images have been rendered with a constant gray albedo considering illumination effects. However, due to the creation process, the original input frames can not be reconstructed from the ground truth albedo and shading layers through Eq. (1).

As the first row of Figure 5 shows later, the specular component of the shading image cannot be observed in the original image, which means it does not share the same illumination condition. Although the simplified image formation model Eq. (1) need not be strictly respected, it is physically incorrect to extract a shading layer depicting different illumination effects from the original image. To overcome this inconsistency, previous works [14] directly resynthesize original images from the ground truth albedo and shading via Eq. (1). However, this approach does not deal with the specular component of the shading layer, which is considered not to be modeled well by Eq. (1) [15].

In this paper, we propose an approach to refine the dataset in order to shift it into a domain more representative of real images. The refined MPI Sintel dataset (MPI_RD) is subject to the image formation model in Eq. (1), and the shading layers contain no color information (gray shading). In addition, the shading layers in the MPI_RD maintain consistency with the original images. This can be shown in two ways. For one thing, the specular component is removed from the shading layer. For another thing, shape details observed in the original images are preserved in the shading layer. We describe our data refinement algorithm in 1. In summary, we shift the distribution of the albedo layer to a higher mean value, and then reconstruct the shading layer from the original image and the shifted albedo (steps 1 to 5). Next, invalid pixels in the reconstructed shading layer are computed using local linear embedding (LLE) [57] with the input as the guiding image adopted to construct the embedding weights (steps 6 to 7). Finally, the input image is resynthesized from the processed albedo and shading images (step 8).

IV-B Improvements

Although the data refinement algorithm 1 successfully suppresses inconsistencies in the intrinsic components in the MPI dataset, problems still remain in terms of temporal consistency. For example, we can observe intensity jittering in consecutive albedo images, and areas lacking detail in shading images. We now discuss possible causes for these defects, as determined by investigating the dataset, and consequently provide methods to alleviate them.

Jittering effects can be observed in frames with notable statistical changes (mean color changes caused by large dark object occlusion). Areas lacking detail are usually observed in shading images whose source image has low contrast areas. Note that in the single-image refinement procedure, the validity mask is computed based on the source image and the original albedo and shading images. Invalid pixels (pixel values ) are directly truncated, and therefore not used when computing statistics of the albedo image. Such invalid areas in the shading image are then reconstructed using the LLE algorithm. In fact, the invalid areas lacking detail often overlap with the LLE reconstructed areas, resulting in low quality reconstruction. In conclusion, jittering effects are mainly due to large dark region changes between frames, while lack of detail is mainly caused by invalid region reconstruction artifacts.

This analysis results in a simple method to deal with those problems. To avoid intensity jittering between consecutive frames, we use optical flow to construct the correspondences between frames, and expand the pixel set for statistical computation. This increases robustness of the image statistics. To overcome lack of detail in shading images, the region reconstruction method is optimized to take temporal correspondences into consideration.

IV-C Temporal consistency measurement

To measure temporal consistency in the sequential data, we use the same video temporal consistency metric (TCM) as [58]:

| (13) |

where and represent the frame in the output video () and the input video () respectively. is the warping function using the optical flow. The TCM of the frame is calculated using the warping error between frames. The 2-norm of a matrix is the sum of squares of its elements. Through this equation, the processed video () is encouraged to be temporally consistent according with variations in the input video.

In order to visualize the video processing effects, we record pixelwise temporal consistency value in a TCM map, computed as:

| (14) |

where the TCM score is computed per pixel.

V Results and discussion

V-A Datasets

V-A1 MPI Sintel dataset and our refined version

Sintel is an open source 3D animated short film, which has been published in many formats for various research purposes. For intrinsic image decomposition, the clean pass images and the corresponding albedo and shading layers have been published as the MPI Sintel dataset, containing 18 sequences with a total of 890 frames. As discussed in IV, there is severe illumination inconsistency between the input frames and the shading layers in this dataset. Therefore, we provide the refined MPI Sintel dataset as a more suitable dataset for intrinsic image decomposition.

Figure 4(bottom) compares our refined MPI dataset (MPI_RD) to the original MPI dataset (MPI). In the shading layer of the first column, we can see that in our refined shading image, the specularity on the shoulder of the girl is removed, making the shading illumination consistent with the original input image. In the second and third columns, the shading layers from the MPI_RD dataset contain more geometric details than those from the MPI dataset. For instance, the wooden cart’s coarse surface is depicted in the refined shading in the third column, while the original shading from the MPI dataset has a smooth surface. These examples demonstrate that our refined MPI_RD improves consistency between the intrinsic decomposition and the input image. In Figure 4(bottom, right), the mean squared error (MSE) between the input image and the resynthesized image is computed. The MSE for the MPI_RD dataset is significantly smaller than for the MPI dataset, showing that the intrinsic decomposition model Eq. (1) is well respected in the refined dataset.

| MSE | LMSE | DSSIM | ||||||||

| Methods | albedo | shading | avg | albedo | shading | avg | albedo | shading | avg | |

| image split | retinex [10] | 0.0606 | 0.0727 | 0.0667 | 0.0366 | 0.0419 | 0.0393 | 0.2270 | 0.2400 | 0.2335 |

| Barron et al. [7] | 0.0420 | 0.0436 | 0.0428 | 0.0298 | 0.0264 | 0.0281 | 0.2100 | 0.2060 | 0.2080 | |

| Chen et al. [28] | 0.0307 | 0.0277 | 0.0292 | 0.0185 | 0.0190 | 0.0188 | 0.1960 | 0.1650 | 0.1805 | |

| MSCR [14] | 0.0100 | 0.0092 | 0.0096 | 0.0083 | 0.0085 | 0.0084 | 0.2014 | 0.1505 | 0.1760 | |

| Revisiting [16] | 0.0069 | 0.0059 | 0.0064 | 0.0044 | 0.0042 | 0.0043 | 0.1194 | 0.0822 | 0.1008 | |

| Ours | 0.0047 | 0.0046 | 0.0047 | 0.0037 | 0.0038 | 0.0038 | 0.0950 | 0.0774 | 0.0862 | |

| scene split | MSCR [14] | 0.0190 | 0.0213 | 0.0201 | 0.0129 | 0.0141 | 0.0135 | 0.2056 | 0.1596 | 0.1826 |

| Revisiting [16] | 0.0189 | 0.0171 | 0.0180 | 0.0122 | 0.0117 | 0.0119 | 0.1645 | 0.1450 | 0.1547 | |

| Ours | 0.0173 | 0.0195 | 0.0184 | 0.0118 | 0.0147 | 0.0133 | 0.1587 | 0.1405 | 0.1496 | |

For data augmentation, we randomly resize the input image by a scale factor in , and randomly crop a patch from the resized image per iteration. We also use horizontal flipping in the training phase. When comparing methods, following [16], we evaluate our results on both a scene split and an image split. For a scene split, half of the scenes are used for training and the other half for testing. For an image split, all 890 images are randomly separated into two sets. Evaluation on a scene split is considered more challenging as it requires more generalization capacity.

For video data, the refined version MPI_VRD (video refined data) alleviate image illumination jitter and local region inconsistencies. Quantitatively, taking the shading images of video sleeping_2 for example, temporal consistency refinement increases TCM from 0.74 to 0.78.

V-A2 IIW dataset

Intrinsic Images in the Wild (IIW) [12] is a large scale, public dataset of real-world scenes intended for intrinsic image decomposition. It contains 5,230 real images of mostly indoor scenes, combined with a total of 872,161 crowd-sourced annotations of reflectance comparisons between pairs of points sparsely selected throughout the images (on average 100 judgements per image). Following many prior works [13, 41, 39, 16], we split the IIW dataset by placing the first of every five consecutive images sorted by image ID into the test set, and the others into the training set. WHDR from [12] is employed to measure the quality of the reconstructed albedo images.

For the IIW dataset, our proposed network structure cannot be directly used due to the lack of dense labelling of albedo and shading layers. Actually, only sparse and relative reflectance annotations are provided. In order to take advantage of the proposed feature distribution divergence and feature distribution consistency, we modify the network. In detail, the predicted dense albedo is collected into an image pool to describe the distribution of albedo. The reconstructed shading using the original image and predicted albedo is used as dense supervision for shading prediction, and is also collected in an image pool to describe the shading distribution. We set the weights in Eq. (6) to .

V-B Comparison to state-of-the-art methods

V-B1 Using MPI Sintel and the refined dataset

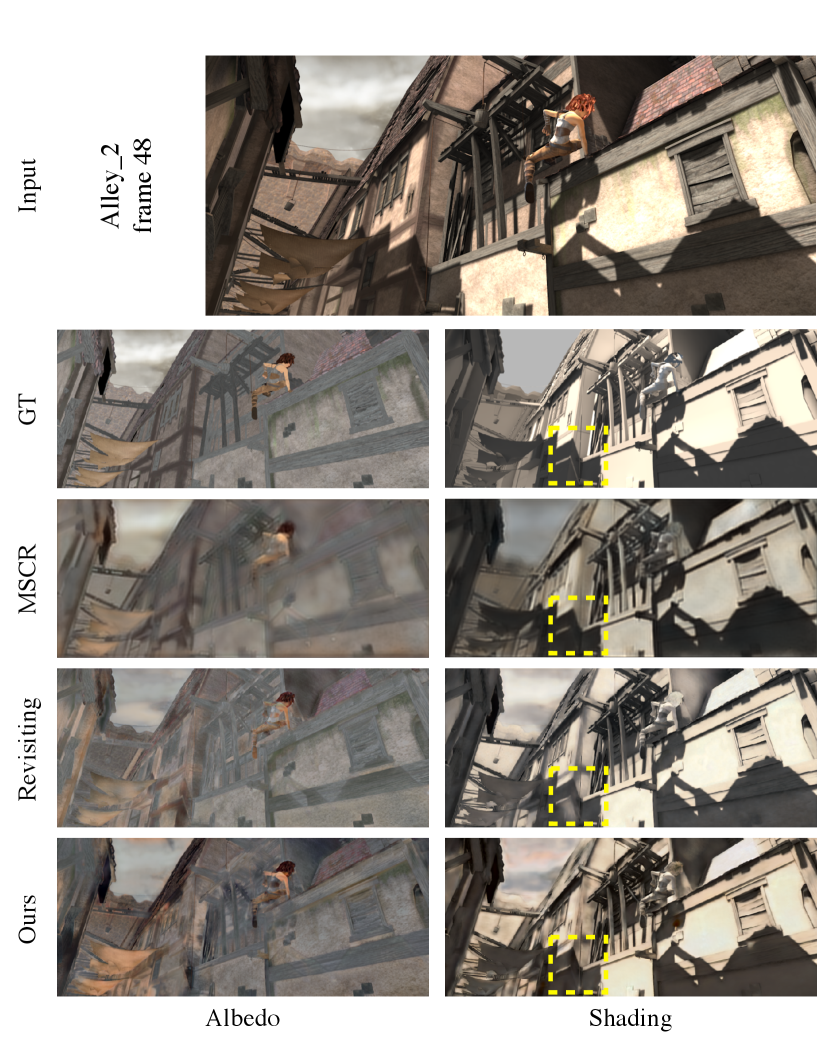

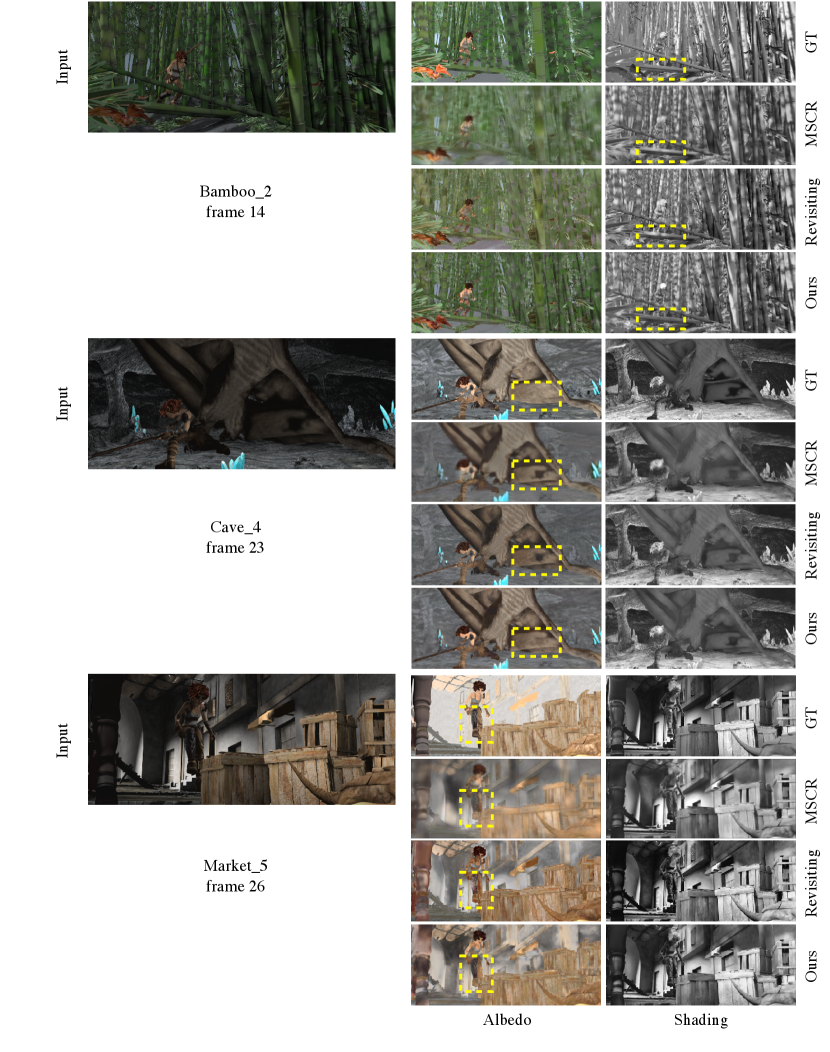

As Table I shows, our method achieves the best results on the MPI Sintel dataset using the image split. On the more challenging scene split, our method is competitive with the state of the art, and achieves the best results in 5 out of the 9 cases in the table. We show a group of qualitative results evaluated on the scene split in Figure 5. While the MSCR [14] results are relatively blurred due to the large kernel convolutions and down-sampling, our method provides sharper results comparable to Revisiting [16]. Moreover, our shading layer depicts better shadow area than [16].

As explained in IV, the MPI Sintel dataset has issues of data consistency between the original input images and the corresponding shading images. Because of the proposed feature distribution divergence, feature distribution consistency and the use of the cycle loss, our method is sensitive to such data inconsistency. Therefore, we compare our method to the state-of-the-art methods on the more challenging scene split of the refined MPI Sintel dataset. As Table II shows, our method achieves the best result, demonstrating the effectiveness of our method and data refinement process.

| MSE | LMSE | DSSIM | ||||||||

| Methods | albedo | shading | avg | albedo | shading | avg | albedo | shading | avg | |

| MSCR [14] | 0.0222 | 0.0175 | 0.0199 | 0.0151 | 0.0122 | 0.0136 | 0.1803 | 0.1619 | 0.1711 | |

| Revisiting [16] | 0.0196 | 0.0137 | 0.0167 | 0.0146 | 0.0094 | 0.0120 | 0.1651 | 0.1082 | 0.1366 | |

| ablation studies | Plain w/o ssim or grad | 0.0189 | 0.0147 | 0.0168 | 0.0137 | 0.0104 | 0.0120 | 0.1560 | 0.1140 | 0.1350 |

| Plain w/o ssim | 0.0169 | 0.0149 | 0.0159 | 0.0117 | 0.0103 | 0.0110 | 0.1530 | 0.1100 | 0.1320 | |

| Plain w/o grad | 0.0188 | 0.0142 | 0.0165 | 0.0123 | 0.0095 | 0.0109 | 0.1520 | 0.1090 | 0.1300 | |

| Plain | 0.0172 | 0.0147 | 0.0159 | 0.0116 | 0.0097 | 0.0106 | 0.1528 | 0.1085 | 0.1307 | |

| Ours w/o FDD | 0.0166 | 0.0134 | 0.0150 | 0.0112 | 0.0090 | 0.0101 | 0.1474 | 0.1048 | 0.1261 | |

| Ours w/o FDC | 0.0170 | 0.0130 | 0.0150 | 0.0113 | 0.0089 | 0.0101 | 0.1530 | 0.1070 | 0.1300 | |

| Ours | 0.0157 | 0.0126 | 0.0142 | 0.0105 | 0.0087 | 0.0096 | 0.1419 | 0.1015 | 0.1217 | |

| MSE | LMSE | |||

| Method | albedo | shading | avg | total |

| Barron et al. [7] | 0.0064 | 0.0098 | 0.0081 | 0.0125 |

| SRIE [35] | 0.0136 | 0.0128 | 0.0132 | 0.0192 |

| Relative Smoothness [33] | 0.0492 | 0.0342 | 0.0417 | 0.0216 |

| Zhou et al. [39] | 0.0252 | 0.0229 | 0.0240 | 0.0319 |

| Shi et al. [15] | 0.0216 | 0.0135 | 0.0175 | 0.0271 |

| MSCR [14] | 0.0207 | 0.0124 | 0.0165 | 0.0239 |

| Revisiting [16] | 0.0134 | 0.0089 | 0.0111 | 0.0203 |

| Ours (scratch) | 0.0134 | 0.0099 | 0.0117 | 0.0186 |

| Ours (MPI) | 0.0126 | 0.0106 | 0.0116 | 0.0175 |

| Ours (RD) | 0.0120 | 0.0095 | 0.0108 | 0.0170 |

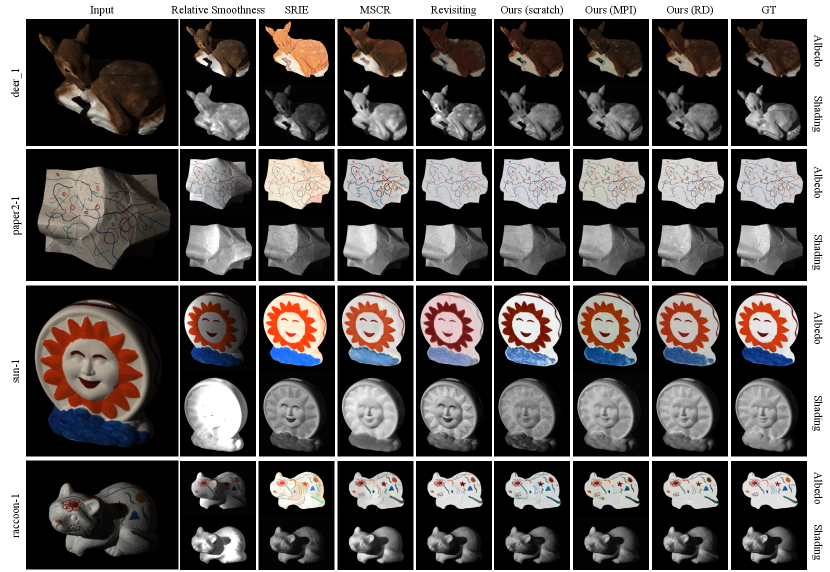

To further validate the effectiveness of the proposed method, we also conducted an ablation study on the training loses as well as the network architecture. Results are given in the bottom part of Table II. ‘Plain’ represents the baseline two-stream network structure shown in Figure 2(b). In the ablation study, the gradient loss and SSIM loss are progressively added to train the plain network. The experimental results show that using the gradient loss and SSIM loss simultaneously achieves better intrinsic image decomposition results. The proposed network architecture is denoted by ‘Ours’, as defined in Figure 2(c). It can be observed that using only the feature distribution divergence (w/o FDC) or the feature distribution consistency (w/o FDD) does not improve results much, while using both of them results in considerable improvement.

Figure 6 displays a side-by-side comparison with two other methods using the refined dataset MPI_RD. As can be seen, our method better separates shading from albedo information. For example, our method outputs consistent shadow around the girl’s neck.

V-B2 Using the MIT intrinsic dataset

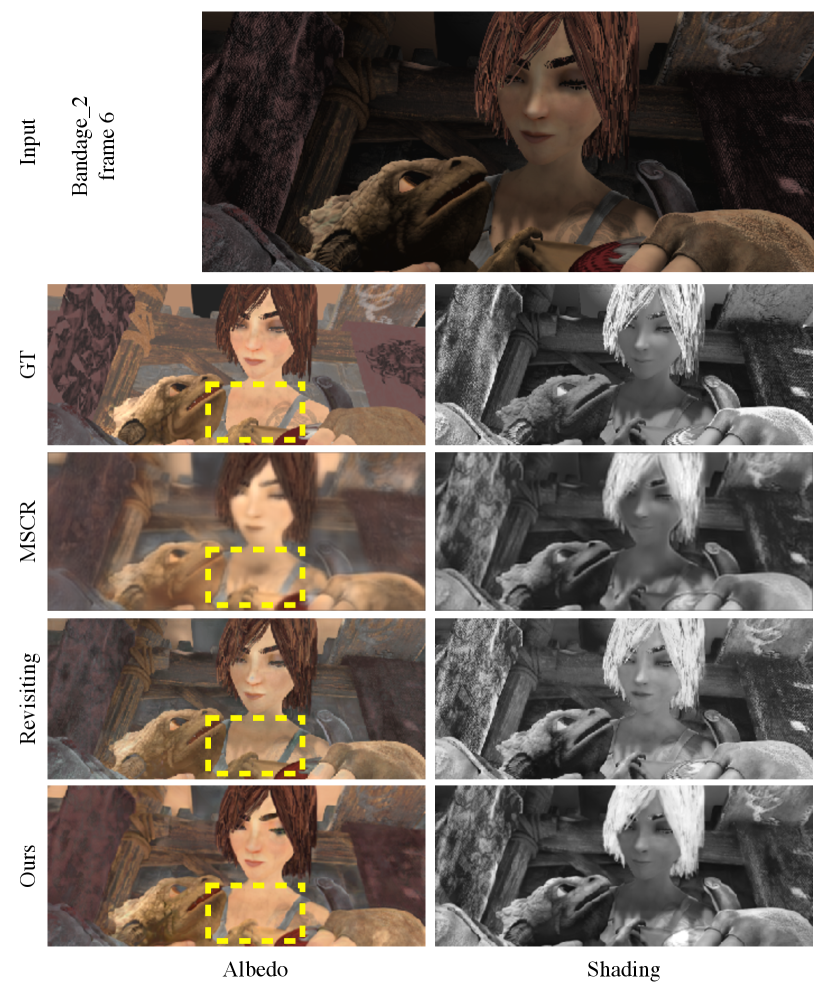

We further experimented on the MIT intrinsic dataset [10], which consists of object-level real images. In this experiment, classical methods [7, 35, 33] as well as learning based methods [16, 15, 14, 39] were compared to our proposed method. We also conduct an ablation study on the training strategies of our proposed network, including training from scratch (Ours scratch), pre-training on the original MPI Sintel dataset (Ours MPI), and pre-training on the refined MPI Sintel dataset (Ours RD). The experimental results are reported in Table III, and representative instances are selected for visual comparison in Figure 7.

As in [16], we used the 220 images in the dataset. In comparisons to previous methods, the split from [7] was used. Our refined MPI Sintel dataset has grayscale shading images, so we first pre-train the model on MPI_RD and then fine-tuned it on the MIT training set.

Numerical results are shown in Table III. Our method (Ours RD) achieves the best results in most cases in the table. Moreover, Ours RD performs better than Ours MPI in terms of LMSE, and both pre-training methods perform better than training from scratch, demonstrating that pre-training on the refined MPI Sintel dataset helps intrinsic image decomposition on the MIT dataset.

Qualitative results are illustrated in Figure 7. We can observe that our method (Ours RD) predicts sharp and accurate intrinsic layers. Classical methods may produce meaningful layer separation results, but good results depend on parameter tuning, which requires expert knowledge. Compared to (Ours scratch) and (Ours MPI), (Ours RD) achieves better region consistency with the ground truth.

V-B3 Using the IIW dataset

| Method | WHDR (mean) |

|---|---|

| Baseline (const shading) | 51.37 |

| Baseline (const reflectance) | 36.54 |

| Shen et al. 2011 [4] | 36.90 |

| Retinex (color) [10] | 26.89 |

| Retinex (gray) [10] | 26.84 |

| Garces et al. 2012 [59] | 25.46 |

| Zhao et al. 2012 [6] | 23.20 |

| flattening [32] | 20.94 |

| Bell et al. 2014 [12] | 20.64 |

| Zhou et al. 2015 [39] | 19.95 |

| Nestmeyer et al. 2017 (CNN) [41] | 19.49 |

| Zoran et al. 2015* [40] | 17.85 |

| Nestmeyer et al. 2017 [41] | 17.69 |

| Bi et al. 2015 [32] | 17.67 |

| CGIntrinsic [17] | 14.80 |

| Revisiting [16] | 14.45 |

| Ours | 13.60 |

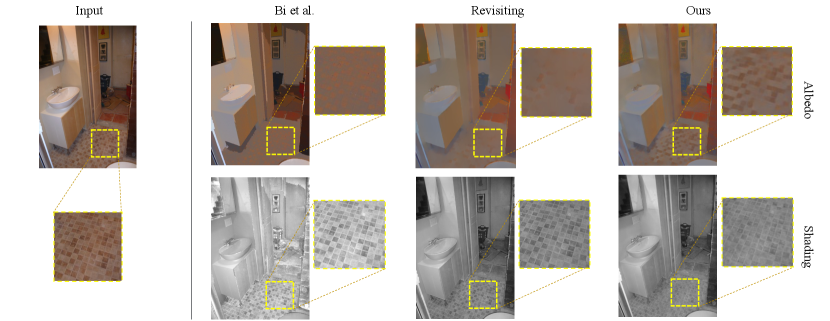

In Table IV, we report results obtained using the test set of the IIW dataset. Our proposed method achieves the best performance with a mean WHDR value of , a considerable improvement over the second best [16] with a mean WHDR value of . To better show the quality of our results, we give a qualitative comparison to state-of-the-art methods in Figure 8. Detailed intrinsic decomposition results are shown in the close-up windows. It can be observed that our method successfully preserves the texture of the floor tiles in the albedo layer, while the other approaches treat such texture as shading.

To further investigate the influence of the smoothness priors applied in the sparsely-labelled case, a comparison was conducted. Figure 9 shows the effects of changing the weights of the smoothness terms in Eq. (11): the albedo smoothness weight and shading smoothness weight were varied from 0 to 4 respectively. Results show that using the shading smoothness term increases the reconstruction loss, while using the albedo smoothness term decreases the reconstruction loss. Using both simultaneously provides the best result. In Figure 9(c, d, e), three representative parameter settings are used to show the resulting intrinsic decomposition. The albedo image in Figure 9(e) contains more precise contours and consistent region colors.

V-B4 Intrinsic decomposition of video data

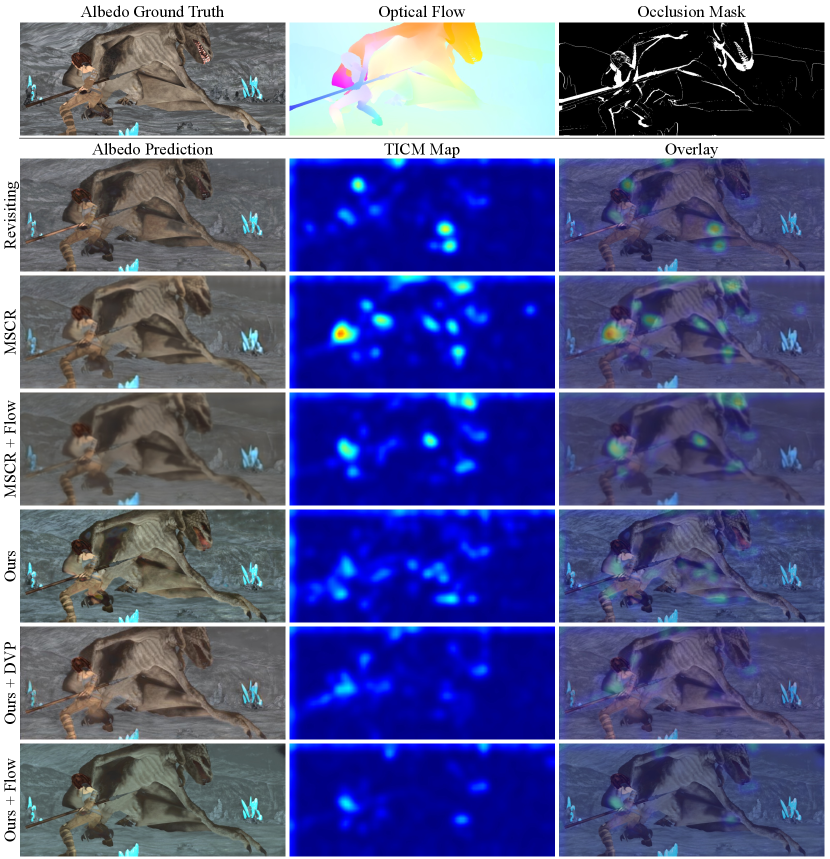

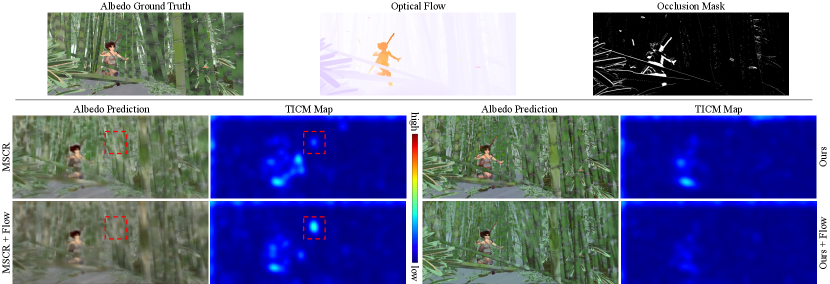

In this experiment, we evaluated the proposed intrinsic decomposition method on video data with respect to reconstruction quality of the intrinsic images, and to temporal consistency, through comparisons to alternative methods.

Framewise methods used for comparison include MSCR [14] and Revisiting [16]. The blind video temporal consistency method [52] is an unsupervised video smoothing method. In the experiment (Ours+DVP), it is applied as post-processing to increase the temporal consistency of the results produced by our framewise method (Ours). (Ours+Flow) is the proposed intrinsic decomposition method for video data. The temporal consistency constraint is applied by taking optical flow as input to construct correspondences between adjacent frames. We also extend the temporal consistency constraint to MSCR in the same way as for (Ours+Flow) in (MSCR+Flow). All methods were trained and tested on the MPI_VRD dataset.

The intrinsic decomposition accuracy scores of framewise methods and flow methods are shown in Table V, while the temporal consistency scores for 8 test short videos are shown in Table VI. Using adjacent frame temporal consistency as a constraint, (Ours+Flow) and (MSCR+Flow) achieve much better temporal consistency metric scores than their framewise counterparts, while the intrinsic decomposition accuracy scores remain almost unchanged. This demonstrates the effectiveness of our proposed extension method for video data. As a result, (Ours+Flow) achieves the best average accuracy and temporal consistency for intrinsic decomposition of video data.

We also visualize temporal consistency of a specific frame clipped from a video, using a temporal inconsistency metric (TICM) map to highlight temporally inconsistent areas. First, the TCM map is computed using Eq. (14). Then, the TCM map is smoothed by a Gaussian kernel of size 65. Finally, the Jet colormap is inverted to highlight inconsistent areas with warm colors (the colder the color, the better the consistency). A qualitative comparison of different intrinsic decomposition methods on video data is shown in Figure 10. It can be observed that, using the temporal consistency constraint, both (MSCR+Flow) and (Ours+Flow) achieve better temporal consistency compared to their framewise counterparts (MSCR and Ours).

In Figure 11, temporal consistency loss is added to framewise methods (MSCR) and (Ours). In the (Ours+Flow) result, temporally inconsistent areas are suppressed compared to (Ours). However, in the (MSCR+Flow) result, temporal inconsistency is unexpectedly amplified in the highlighted area in the red box. In addition, the (MSCR+Flow) result is more blurred than the one without temporal consistency loss. In MSCR, multiple scales of feature maps are merged in the encoding phase. While features from deeper layers contain high-level knowledge, they may lack texture details. The temporal consistency constraint may encourage MSCR to pay more attention to high-level features, resulting in more blurred outputs. The case of (MSCR+Flow) indicates that while temporal consistency loss could be easily extended to other deep neural networks, the outcome will largely depend on the characteristics of specific methods.

| MSE | LMSE | DSSIM | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Methods | albedo | shading | avg | albedo | shading | avg | albedo | shading | avg | |

| framewise | MSCR [14] | 0.0223 | 0.0181 | 0.0202 | 0.0154 | 0.0127 | 0.0140 | 0.1880 | 0.1609 | 0.1745 |

| Revisiting [16] | 0.0199 | 0.0137 | 0.0168 | 0.0148 | 0.0094 | 0.0121 | 0.1655 | 0.1095 | 0.1375 | |

| Ours | 0.0173 | 0.0163 | 0.0168 | 0.0120 | 0.0115 | 0.0117 | 0.1472 | 0.1123 | 0.1298 | |

| flow | MSCR+Flow | 0.0227 | 0.0184 | 0.0206 | 0.0150 | 0.0123 | 0.0136 | 0.1950 | 0.1727 | 0.1839 |

| Ours+DVP [52] | 0.0169 | 0.0165 | 0.0167 | 0.0117 | 0.0114 | 0.0116 | 0.1549 | 0.1204 | 0.1377 | |

| Ours+Flow | 0.0173 | 0.0152 | 0.0163 | 0.0117 | 0.0102 | 0.0109 | 0.1423 | 0.1054 | 0.1238 | |

| Methods | A | B | C | D | E | F | G | H | avg | |

|---|---|---|---|---|---|---|---|---|---|---|

| framewise | MSCR [14] | 48.18 | 62.30 | 38.70 | 31.80 | 5.49 | 52.36 | 86.50 | 31.47 | 44.60 |

| Revisiting [16] | 8.32 | 35.48 | 18.06 | 25.44 | 5.03 | 68.92 | 19.37 | 39.41 | 27.50 | |

| Ours | 0.12 | 27.61 | 6.55 | 4.57 | 2.04 | 88.99 | 12.80 | 40.05 | 22.84 | |

| flow | MSCR+Flow | 68.69 | 56.42 | 50.50 | 56.76 | 7.87 | 48.27 | 60.34 | 31.78 | 47.58 |

| Ours+DVP [52] | 78.93 | 47.52 | 28.72 | 10.19 | 22.71 | 51.89 | 72.33 | 54.06 | 45.79 | |

| Ours+Flow | 84.22 | 70.91 | 78.33 | 60.32 | 6.42 | 51.64 | 54.05 | 45.12 | 56.37 |

VI Conclusions

In this paper, we have presented a novel two-stream encoder-decoder network for intrinsic image decomposition. Our method is able to exploit discriminative properties of the features for different intrinsic images. Specifically, our feature distribution divergence is designed to increase the distance between features corresponding to different intrinsic images, and our feature perceptual loss is applied to constrain the feature distribution. These two modules work together to encode discriminative features for intrinsic image decomposition. We have also provided an algorithm to refine the MPI Sintel dataset to make it more suitable for intrinsic image decomposition. Visual results for MPI_RD and the more challenging IIW dataset demonstrate that our proposed method can achieve results with better albedo and shading separation than existing methods. Its extension to video data is able to decompose video into intrinsic image sequences with temporal consistency.

The limitations of our method are of two kinds. Firstly, the mathematical model of intrinsic decomposition applied in this work is relatively preliminary. We cannot recover scene properties such as 3D geometry, light source positions, global illumination, etc. It is worthwhile challenge to exploit feature discrimination properties in more complex and powerful mathematical models. Secondly, the temporal consistency constraint used to extend our method to intrinsic video decomposition does not generalize well. As Figure 11 shows, directly using the temporal consistency loss in MSCR can result in unwanted blurring. In future, it is of interest to derive more general temporal consistency constraints for intrinsic video decomposition.

Acknowledgements. Portions of this work were presented at the International Conference on Computer Vision Workshops in 2019 [18]. This work was supported by the National Natural Science Foundation of China (NSFC) (Grants 61972012, 61732016).

Declarations

Conflict of interest The authors declare that they have no conflict of interest.

Appendix A Constraints for sparsely-labelled data

A-A Ordinal loss

For each pair of annotated pixels in the predicted albedo image , we have the error function:

| (15) |

where denotes the relative reflectance (albedo) judgement from IIW. Values of are set to 1, 0, or depending on whether the relative brightness of pixel is greater, the same, or lower than that of pixel .

A-B Smoothness priors

The albedo component is constrained using a multi-scale smoothness term:

| (16) | ||||

in which indicates the 8-connected neighborhood of the pixel at position at scale . is the weight corresponding to the similarity between the pair of albedo pixels , which is formulated as . The greater the similarity of the two pixels, the smaller the weight, making the pairwise term loss less significant. is the feature vector defined as , where is spatial position, is image intensity, and and are the first two elements of chromaticity. is the covariance matrix defining the distance between two feature vectors. This albedo smoothness term encourages the reconstructed albedo layer to be piecewise constant.

The shading smoothness is formulated using a densely-connected term:

| (17) |

where is a bi-stochastic weight matrix derived from where

A detailed derivation can be found in [23, 60]. This weight is used to measure the positional difference of a pair of pixels in an image, with greater weight for nearby pixels.

Appendix B Further results

Further qualitative comparisons are shown in Figure 12, using the refined MPI Sintel dataset and Figure 13, using the IIW dataset.

References

- [1] Baslamisli AS, Le HA, Gevers T. CNN based learning using reflection and retinex models for intrinsic image decomposition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, 6674–6683.

- [2] Land EH, McCann JJ. Lightness and retinex theory. Josa, 1971, 61(1): 1–11.

- [3] Rother C, Kiefel M, Zhang L, Schölkopf B, Gehler PV. Recovering intrinsic images with a global sparsity prior on reflectance. In Advances in neural information processing systems, 2011, 765–773.

- [4] Shen L, Yeo C. Intrinsic images decomposition using a local and global sparse representation of reflectance. In CVPR 2011, 2011, 697–704.

- [5] Shen L, Tan P, Lin S. Intrinsic image decomposition with non-local texture cues. In 2008 IEEE Conference on Computer Vision and Pattern Recognition, 2008, 1–7.

- [6] Zhao Q, Tan P, Dai Q, Shen L, Wu E, Lin S. A closed-form solution to retinex with nonlocal texture constraints. IEEE transactions on pattern analysis and machine intelligence, 2012, 34(7): 1437–1444.

- [7] Barron JT, Malik J. Shape, illumination, and reflectance from shading. IEEE transactions on pattern analysis and machine intelligence, 2014, 37(8): 1670–1687.

- [8] Bousseau A, Paris S, Durand F. User-Assisted Intrinsic Images. In ACM SIGGRAPH Asia 2009 Papers, SIGGRAPH Asia ’09, 2009, 130–140.

- [9] Shen J, Yang X, Li X, Jia Y. Intrinsic image decomposition using optimization and user scribbles. IEEE transactions on cybernetics, 2013, 43(2): 425–436.

- [10] Grosse R, Johnson MK, Adelson EH, Freeman WT. Ground truth dataset and baseline evaluations for intrinsic image algorithms. In 2009 IEEE 12th International Conference on Computer Vision, 2009, 2335–2342.

- [11] Butler DJ, Wulff J, Stanley GB, Black MJ. A naturalistic open source movie for optical flow evaluation. In A Fitzgibbon et al (Eds), editor, European Conf. on Computer Vision (ECCV), Part IV, LNCS 7577, 2012, 611–625.

- [12] Bell S, Bala K, Snavely N. Intrinsic images in the wild. ACM Transactions on Graphics (TOG), 2014, 33(4): 159.

- [13] Narihira T, Maire M, Yu SX. Learning lightness from human judgement on relative reflectance. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015, 2965–2973.

- [14] Narihira T, Maire M, Yu SX. Direct Intrinsics: Learning Albedo-Shading Decomposition by Convolutional Regression. In International Conference on Computer Vision (ICCV), 2015, 2992–2992.

- [15] Shi J, Dong Y, Su H, Yu SX. Learning non-lambertian object intrinsics across shapenet categories. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, 1685–1694.

- [16] Fan Q, Yang J, Hua G, Chen B, Wipf D. Revisiting deep intrinsic image decompositions. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, 8944–8952.

- [17] Li Z, Snavely N. Cgintrinsics: Better intrinsic image decomposition through physically-based rendering. In Proceedings of the European Conference on Computer Vision (ECCV), 2018, 371–387.

- [18] Wang Z, Lu F. Single image intrinsic decomposition with discriminative feature encoding. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, 2019, 0–0.

- [19] Bonneel N, Kovacs B, Paris S, Bala K. Intrinsic Decompositions for Image Editing. Computer Graphics Forum (Eurographics State of the Art Reports 2017), 2017, 36(2).

- [20] Weiss Y. Deriving intrinsic images from image sequences. In Proceedings Eighth IEEE International Conference on Computer Vision. ICCV 2001, volume 2, 2001, 68–75.

- [21] Matsushita Y, Lin S, Kang SB, Shum HY. Estimating intrinsic images from image sequences with biased illumination. In European Conference on Computer Vision, 2004, 274–286.

- [22] Laffont PY, Bazin JC. Intrinsic decomposition of image sequences from local temporal variations. In Proceedings of the IEEE International Conference on Computer Vision, 2015, 433–441.

- [23] Li Z, Snavely N. Learning intrinsic image decomposition from watching the world. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, 9039–9048.

- [24] Lettry L, Vanhoey K, Van Gool L. Unsupervised Deep Single-Image Intrinsic Decomposition using Illumination-Varying Image Sequences. Computer Graphics Forum, 2018, 37(7): 409–419.

- [25] Gong W, Xu W, Wu L, Xie X, Cheng Z. Intrinsic image sequence decomposition using low-rank sparse model. IEEE Access, 2018, 7: 4024–4030.

- [26] Liu Y, Lu F. Separate in Latent Space: Unsupervised Single Image Layer Separation. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(07): 11661–11668.

- [27] Barron JT, Malik J. Intrinsic scene properties from a single rgb-d image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2013, 17–24.

- [28] Chen Q, Koltun V. A simple model for intrinsic image decomposition with depth cues. In Proceedings of the IEEE International Conference on Computer Vision, 2013, 241–248.

- [29] Lee KJ, Zhao Q, Tong X, Gong M, Izadi S, Lee SU, Tan P, Lin S. Estimation of intrinsic image sequences from image+ depth video. In European Conference on Computer Vision, 2012, 327–340.

- [30] Kim S, Park K, Sohn K, Lin S. Unified depth prediction and intrinsic image decomposition from a single image via joint convolutional neural fields. In European conference on computer vision, 2016, 143–159.

- [31] Cheng Z, Zheng Y, You S, Sato I. Non-local intrinsic decomposition with near-infrared priors. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, 2521–2530.

- [32] Bi S, Han X, Yu Y. An L1 Image Transform for Edge-Preserving Smoothing and Scene-Level Intrinsic Decomposition. ACM Trans. Graph., 2015, 34(4).

- [33] Li Y, Brown MS. Single image layer separation using relative smoothness. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2014, 2752–2759.

- [34] Sheng B, Li P, Jin Y, Tan P, Lee TY. Intrinsic image decomposition with step and drift shading separation. IEEE Transactions on Visualization and Computer Graphics, 2018, 26(2): 1332–1346.

- [35] Fu X, Zeng D, Huang Y, Zhang XP, Ding X. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, 2782–2790.

- [36] Fu G, Zhang Q, Xiao C. Towards high-quality intrinsic images in the wild. In 2019 IEEE International Conference on Multimedia and Expo (ICME), 2019, 175–180.

- [37] Krebs A, Benezeth Y, Marzani F. Intrinsic image decomposition as two independent deconvolution problems. Signal Processing: Image Communication, 2020, 86: 115872.

- [38] Tang Y, Salakhutdinov R, Hinton G. Deep Lambertian Networks. In Proceedings of the 29th International Coference on International Conference on Machine Learning, ICML’12, 2012, 1419–1426.

- [39] Zhou T, Krahenbuhl P, Efros AA. Learning data-driven reflectance priors for intrinsic image decomposition. In Proceedings of the IEEE International Conference on Computer Vision, 2015, 3469–3477.

- [40] Zoran D, Isola P, Krishnan D, Freeman WT. Learning ordinal relationships for mid-level vision. In Proceedings of the IEEE International Conference on Computer Vision, 2015, 388–396.

- [41] Nestmeyer T, Gehler PV. Reflectance adaptive filtering improves intrinsic image estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, 6789–6798.

- [42] Fu G, Zhang Q, Zhu L, Li P, Xiao C. A Multi-Task Network for Joint Specular Highlight Detection and Removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, 7752–7761.

- [43] Seo K, Kinoshita Y, Kiya H. Deep Retinex Network for Estimating Illumination Colors with Self-Supervised Learning. In 2021 IEEE 3rd Global Conference on Life Sciences and Technologies (LifeTech), 2021, 1–5.

- [44] Baslamisli AS, Liu Y, Karaoglu S, Gevers T. Physics-based shading reconstruction for intrinsic image decomposition. Computer Vision and Image Understanding, 2021, 205: 103183.

- [45] Baslamisli AS, Das P, Le HA, Karaoglu S, Gevers T. ShadingNet: image intrinsics by fine-grained shading decomposition. International Journal of Computer Vision, 2021: 1–29.

- [46] Zhu Y, Tang J, Li S, Shi B. DeRenderNet: Intrinsic Image Decomposition of Urban Scenes with Shape-(In)dependent Shading Rendering. In 2021 IEEE International Conference on Computational Photography (ICCP), 2021, 1–11.

- [47] Sial HA, Baldrich R, Vanrell M. Deep intrinsic decomposition trained on surreal scenes yet with realistic light effects. JOSA A, 2020, 37(1): 1–15.

- [48] Chang AX, Funkhouser T, Guibas L, Hanrahan P, Huang Q, Li Z, Savarese S, Savva M, Song S, Su H, et al.. Shapenet: An information-rich 3d model repository. arXiv preprint arXiv:1512.03012, 2015.

- [49] Kong N, Gehler PV, Black MJ. Intrinsic video. In European Conference on Computer Vision, 2014, 360–375.

- [50] Ye G, Garces E, Liu Y, Dai Q, Gutierrez D. Intrinsic video and applications. ACM Transactions on Graphics (ToG), 2014, 33(4): 1–11.

- [51] Meka A, Zollhöfer M, Richardt C, Theobalt C. Live intrinsic video. ACM Transactions on Graphics (TOG), 2016, 35(4): 1–14.

- [52] Lei C, Xing Y, Chen Q. Blind video temporal consistency via deep video prior. Advances in Neural Information Processing Systems, 2020, 33.

- [53] Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. CoRR, 2014, abs/1409.1556.

- [54] Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, 2009, 248–255.

- [55] Johnson J, Alahi A, Fei-Fei L. Perceptual losses for real-time style transfer and super-resolution. In European conference on computer vision, 2016, 694–711.

- [56] Wang Z, Bovik AC, Sheikh HR, Simoncelli EP, et al.. Image quality assessment: from error visibility to structural similarity. IEEE transactions on image processing, 2004, 13(4): 600–612.

- [57] Roweis ST, Saul LK. Nonlinear dimensionality reduction by locally linear embedding. science, 2000, 290(5500): 2323–2326.

- [58] Yao CH, Chang CY, Chien SY. Occlusion-aware video temporal consistency. In Proceedings of the 25th ACM international conference on Multimedia, 2017, 777–785.

- [59] Garces E, Muñoz A, Lopez-Moreno J, Gutiérrez D. Intrinsic Images by Clustering. Computer Graphics Forum, 2012, 31(4): 1415–1424.

- [60] Barron JT, Adams A, Shih Y, Hernández C. Fast bilateral-space stereo for synthetic defocus. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015, 4466–4474.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/cd450125-7812-4abb-9816-039de62f6ae5/Zongji_Wang.jpg) |

Zongji Wang received his B.S. degree in the School of Mathematics and Systems Science at Beihang University in 2014, and his Ph.D. degree in the School of Computer Science and Engineering at Beihang University in 2021. He is currently an Assistant Professor in the Key Laboratory of Network Information Systems Technology (NIST), Aerospace Information Research Institute, Chinese Academy of Sciences. His research interests include computer vision and computer graphics. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/cd450125-7812-4abb-9816-039de62f6ae5/Yunfei_Liu.jpeg) |

Yunfei Liu is currently pursuing a Ph.D. degree in the State Key Laboratory of Virtual Reality Technology and Systems, School of Computer Science and Engineering, Beihang University. His research interests include computer vision, computational photography, and image processing. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/cd450125-7812-4abb-9816-039de62f6ae5/Feng_Lu.jpg) |

Feng Lu received his B.S. and M.S. degrees in automation from Tsinghua University, in 2007 and 2010, respectively, and his Ph.D. degree in information science and technology from the University of Tokyo, in 2013. He is currently a Professor with the State Key Laboratory of Virtual Reality Technology and Systems, School of Computer Science and Engineering, Beihang University. His research interests include computer vision, human-computer interaction and augmented intelligence. |