Distangling Biological Noise in Cellular Images with a focus on Explainability

Abstract

The cost of some drugs and medical treatments has risen in recent years that many patients are having to go without. A classification project could make researchers more efficient.

One of the more surprising reasons behind the cost is how long it takes to bring new treatments to market. Despite improvements in technology and science, research and development continues to lag. In fact, finding new treatment takes, on average, more than 10 years and costs hundreds of millions of dollars. In turn, greatly decreasing the cost of treatments can make ensure these treatments get to patients faster. This work aims at solving a part of this problem by creating a cellular image classification model which can decipher the genetic perturbations in cell (occurring naturally or artificially). Another interesting question addressed is what makes the deep-learning model decide in a particular fashion, which can further help in demystifying the mechanism of action of certain perturbations and paves a way towards the explainability of the deep-learning model.

We show the results of Grad-CAM visualizations and make a case for the significance of certain features over others. Further we discuss how these significant features are pivotal in extracting useful diagnostic information from the deep-learning model.

1 Introduction

It has been a human endeavour, for time eternity, to know about the inner functioning of our body, be it at the microscopic or macroscopic or macroscopic scale. In his 1859 book, On the Origin of Species, Charles Darwin propounded the earth shattering theory of Natural Selection. A reconciliation in the statistic nature and biological nature of this excitingly new phenomenon was discovered posthumously in the works of Gregor Mendel, who blamed, factors - now called genes, as the sole reason for passage of traits or a slightly adjacent term heredity.

Mendel’s factors led scientist on frantic chase for the physical location of these genes and after around half century Alfred Hershey and Martha Chase, in the year 1952, proved definitely the seat of gene to be DNA [1], thought of as an useless bio-molecule till then. Followed by Har Gobind Khorana’s discovery of the genetic code led to a flurry of research in the field of molecular biology that has led bare infront of scientist many exciting inner workings of the cell. This research is often accompanied by a behemoth of data, be it numerical, or be it images. A host of deep learning and machine learning techniques have been thrown at these these data-sets to tease out patterns which can be of immense practical use to the human race.

1.1 Biological Primer

The process of formation of proteins, or the Central Dogma, is surprisingly not so much of a chemical concept but rather an informational one [2]. The strings of coded information in the DNA provide a template for the structure of protein, but the DNA is enslaved by its geography, i.e, it is only found inside the nucleus while the protein are found in the cytoplasm (outside the nucleus). To carry this information, another molecule called RNA (specifically messenger-RNA) is employed. siRNA are single stranded molecules which bind with mRNA (the intermediary molecule in between DNA and Protein) and stops the formation of the protein [3]. One way to study the function of a particular gene is by silencing it and then studying the resultant cell phenotype. This is also called loss-of-function analyses of the cell [4]. The total silencing renders the action of a particular gene useless, which is termed as gene-knockout and it is a low through put technique as compared to gene-knockdown. Another factor to keep in mind here is the off-target effects of the siRNA used, which might affect not only the mRNA it is designed to inhibit but other mRNAs with partial matches.

2 Data-Set

To understand the data fully, we need to understand the experimental setup under which the data is collected. This will help in understanding what are the sources of spurious effects which are introduced during the process of data collection (there maybe other unclassified sources as well).

2.1 Experimental Setup

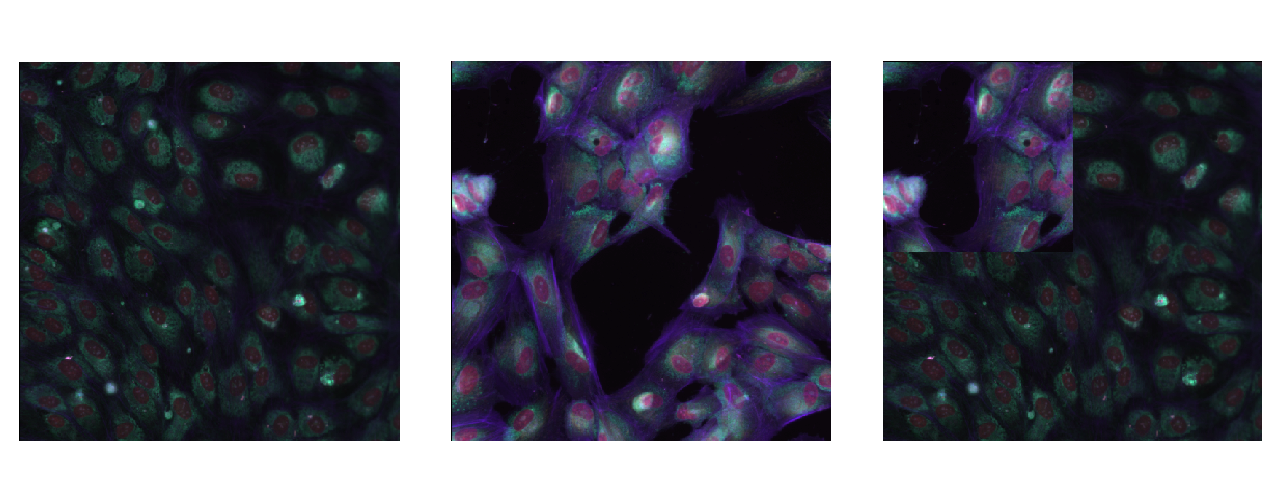

The experiment is conducted on single cell type, by choosing one of the four available cell types - HUVEC (Human Umbilical Vein Endothelial Cell), RPE (Retinal Pigment Epithelium), HepG2 (Human Liver Cancer Cell ) and U2OS (Human Bone Osteosarcoma Epithelial Cells). The images are taken from cell cultures.

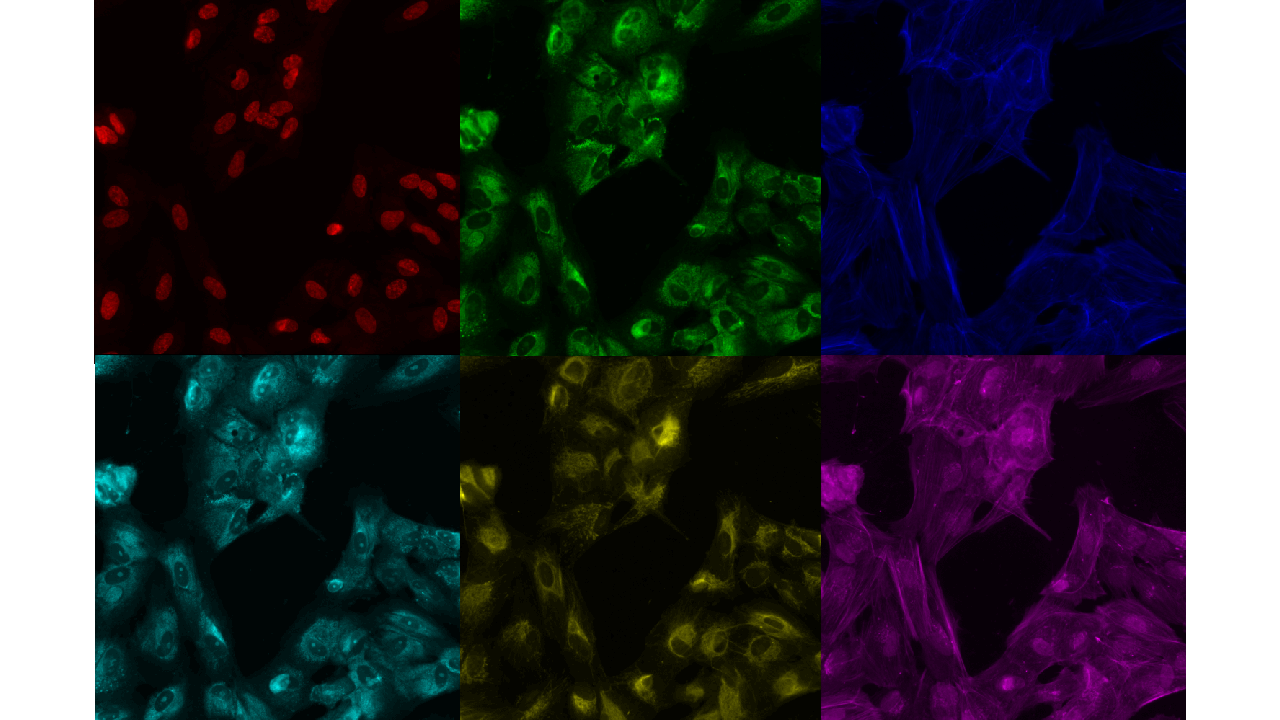

The siRNA is the object of interest, which is going to create the biological variability, or on a simpler level, changes which we are going to observe. Cell cultures are created in wells of plate which is can hold 384 such well (). There are in total 51 experiments conducted in the collection of database. One well can be thought as a mini-test tube. There are two imaging sites per well, images from the border wells are discarded as they might be affected by environmental noise like temperature differentials. The image obtained is of the size - i.e, there are six channels per image (for comparison in a standard image there are three channels - Red, Green and Blue).

2.2 Role of Controls

Controls play a very crucial role in determining the performance of the model as well as calibrating the model

Negative control is a well which is left untreated in the experiments, there is one negative control per plate. A negative control is included in the experiment to distinguish reagent specific effects from non-reagent specific effects in the siRNA-treated cells [4]. When the image from the negative control well is given as an input the model should not make confident predictions, this is a kind of validity check

Positive control is a well which is treated with reagents whose affect on the cell culture is known and well studied [4]. Positive controls are used to measure how efficient the reagent is. In this context when images from the positive control are given as input the model should predict the reagent class with maximum confidence. This again serves as a sanity check.

2.3 Related Statistics

There are in total images in the data-set. Out of which are in the train set and are in the test set. Since the images comprise of six channels, the total number of gray-scale images in the data-set is . There in total classes.

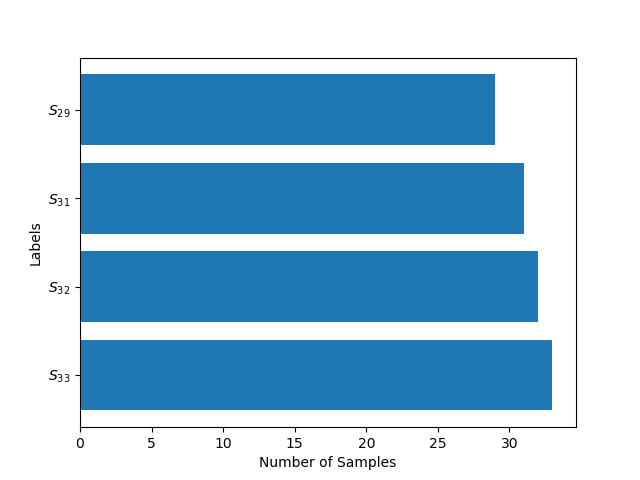

The number of samples per class, , is a very important number while training the data-set. A data-set might have a high yet a very low in effect rendering the size of the data-set misleading. The following figure 2 shows the distribution of :

In figure 2 is the set of Classes which have same Number of Samples (). In Table 1 we can see the number of classes which fall in the particular sets.

| Class Set | Number of Classes |

|---|---|

2.4 Pixel Value Distribution

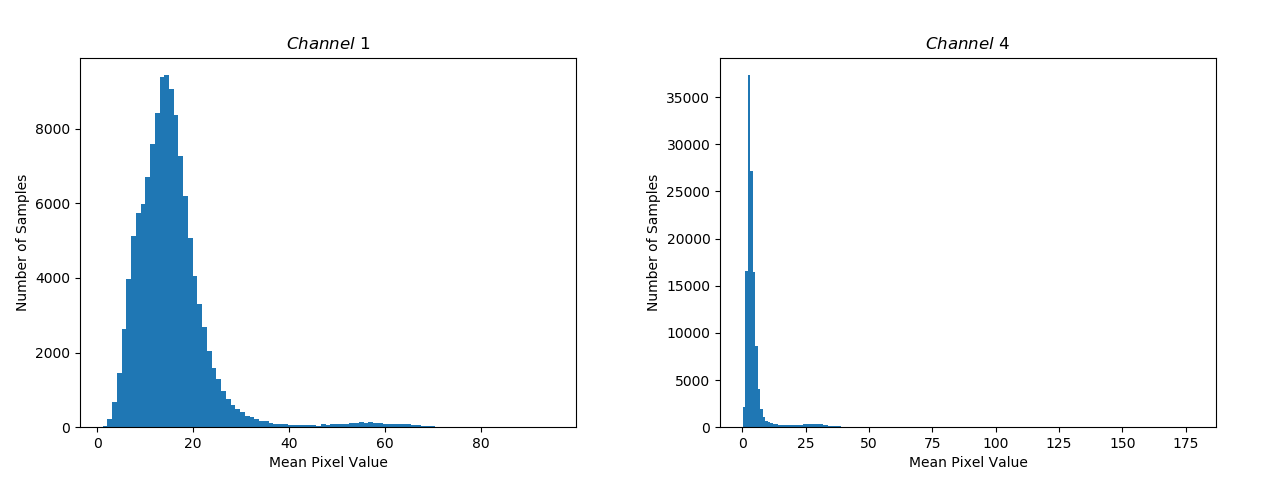

To get a good bearing on the distribution of pixel values in the data-set, we can look at the distribution of mean pixel value per image according to different channels.

The variance in the mean Pixel Values for Channel 1 is the highest and is the lowest for Channel 4 (Figure 3).

3 Methods and Techniques

In this section we discuss techniques such as selection of backbone for the deep-learning model, the design of loss, prediction schema and methods used to understand the inner-workings of the optimized model.

3.1 Backbone

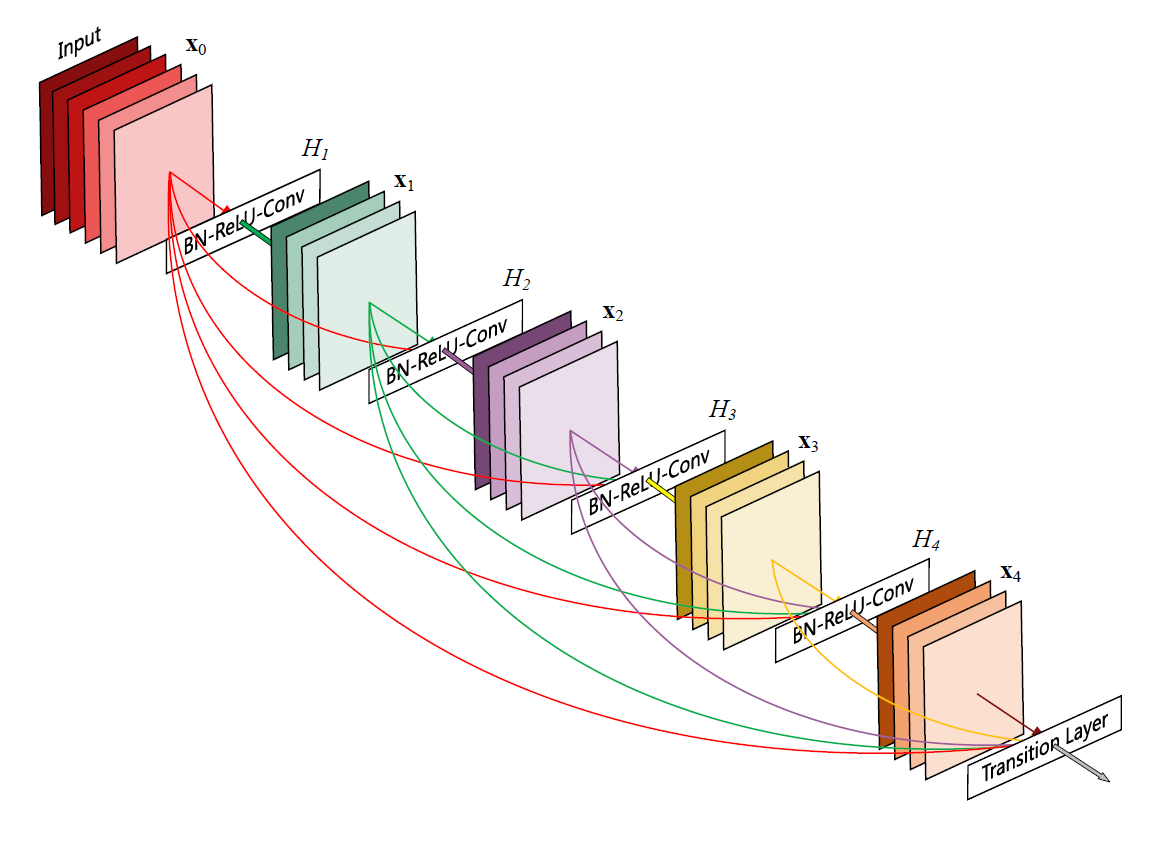

Densenet takes the idea of skip connections (introduced in Resnets [5]) one step further. In Resnet where each layer is connected to the next layer with a shortcut, in Densenet apart from the next layer each layer is connected to every other subsequent layer.

Resnets:

| (1) |

Densenets:

| (2) |

where is the feature map after the layer. is a composite function comprising of activations, non-linearity, pooling etc.

But this is all the similarity that exists between the two networks. Dense takes a departure in the way it introduces these connections, instead of summing up the input to the output, it is rather concatenated with it [6]. In equation [2] the vector is the concatenation of the feature maps outputted in layers .This can only be done if the dimension of the channels in the input are same as the output, therefore it is hard to maintain these skip connections through the entire network. These dense connectivity layers are packed into a module, called a dense block as shown in Figure 4. This is a miniature feed forward network in itself which has this added advantage of skip connections. These dense blocks are connected using transition layers. It is at these transition layers where the change in the dimensions of the feature map takes place.

3.2 Losses

Loss functions are a way to get measure of distance in the embedding space. Since the model tries to minimise this function, it encourages certain kind of structures on the embeddings produced by the model. We will trace the evolution of loss function for a particular type of problem - that of Face Recognition, because it is this class where the current task also finds its place. The difficulty which is posed by the problems of this class is that the intravariations within a class can be larger than inter-differences.

Softmax Loss is a go-to function which provides useful supervision signal in object detection problems. But due to the difficulty posed in the previous section, a vanilla softmax loss gives unsatisfactory results.

| (3) |

where is the batch-size and is the number of classes.

Based on the observations of Parde et al [7], one can infer about the quality of the input image by the L2-norm of the features learnt by the softmax loss function. This gave rise to L2-softmax [8], which proposes to enforce all the feature to have the same L2-Norm.

| (4) |

There have been other kinds of normalizations proposed for both weights and the features in the softmax loss function and it has become a common strategy in softmax.

While the Softmax Loss did help in solving the difficulty at hand up to some extent, practitioners in the Face Recognition community wanted that the samples should be separated more strictly to avoid misclassifying the difficult samples [9]. Arc Loss provides a way to deal with the misclassification of difficult samples. Arc Loss can be motivated in very straight forward way using the softmax loss function which is written as in equation [3], for simplicity we will assume . Writing , where is the angle between the weight and feature . Fixing the norm of to be as done in equation [4], following Wang et al [10] we also fix the feature by using and re-scaling it to . Making the changes we get,

| (5) |

further addition of an angular margin penalty between and results in intra-class compactness and inter-class discrepancy

| (6) |

3.3 Pseudo Labelling

Pseudo Labelling is a technique borrowed from the semi-supervised learning domain. The network is trained with labeled as well unlabelled data simultaneously. In place of labels, Pseudo Labels are used which is nothing but picking the class which has the maximum predicted probability [11].

Let be the pseudo label for a particular sample . Let be the predicted probabilities for the sample , then:

The labelled and unlabelled samples are used simultaneously to calculate the loss value as follows:

| (7) |

where are the predicted probabilities for unlabelled samples and is a coefficient balancing the effect of labeled and unlabelled samples on the network.

It is important to achieve a proper scheduling of , a higher value will disrupt the training and a low value will negate any benefits which can be derived from pseudo-labelling [12].

One way to schedule is as follows:

where is the epoch at which the pseudo-labelling starts and increases linearly until it attains its final value at where it is equal to .

The reasons proposed for the success of this technique is Low Density Separation between Classes, according to the cluster assumption the decision boundaries between clusters lie in low density regions, pseudo labelling helps the network output to be insensitive to variations in the directions of low-dimensional manifold [11].

3.4 CutMix

Data augmentation is broad variety of techniques which is used to increase the generalisation capabilities of the network, either by increasing the available train data-set or increasing the feature capturing strength of filters. There are some standard augmentation techniques such as flipping and rotating the input image which try to make the network agnostic of the pose of features in the image. Even after the application of said transforms the ability of the network to learn local features is not enhanced, there are few techniques which try to promote the object localisation capabilities.

One strategy to use is CutMix. A cropped-up part of one image is placed on top of another image and the labels are manipulated accordingly. More area one image has in the final image, the final label is higher for that image too. To achieve this first we need to make a bounding box which is the region removed from image . Let the coordinates of the bounding box = . The bounding box is sampled as follows:

| (8) |

where and is the CutMix coefficient which is sampled from a beta distribution with usually set to . Also note which is the cropped area ratio. Using this we get our mask which is equal to for all the points inside the bounding box . Therefore the final image and label thus become,

| (9) |

3.5 Activation Maps

There have been various techniques [13], [14] proposed in recent times to understand the workings of deep-CNNs. One major break through in this has been the technique called Class Activation Maps (CAM) [15], which proposes to produce these visualizations using global average pooling. A better and more sophisticated version has been proposed based on this work called Grad-CAM.

To go forward, we have to answer a pertinent question - What makes a good visual explanation? This can be answered in two parts. First, the technique should be able to clearly show the difference in localization properties of model for different classes, further it should show the discriminative behaviour in these localizations. Second, the visual explanations should a good resolution, therefore capturing the fine grain details about models attention. These two points are the focus of Grad-CAM.

Grad-CAM is gradient weighted global average pooling technique [16], which works by first computing the importance weights ,

| (10) |

An is computed for a particular class and Activation Map where the partial differentiation term inside the global average pooling function is the gradient of the class activation, (before the softmax) with respect to activation map

After this summation of each activation map, weighted by the importance in taken, to generate the Grad-CAM visualization

| (11) |

4 Experiments

4.1 Backbone

As compared to Resnet, a pre-trained densenet (trained on Imagenet dataset) performed better in general and was used for further training. The version used is the memory-efficient implementation of Densenet-161. The input is a six channel image of the dimensions , the dimensions of the transforming image along with the operation acting on it are tracked in the following table:

| Output Size | Layer-Specification |

|---|---|

| Conv, stride = 2 | |

| MaxPool, stride = 2 | |

| 6 | |

| 12 | |

| 36 | |

| 24 |

4.2 Inclusion of Cell Type

The way a siRNA reagent interacts with the cell to produce the end results, depends upto some extent on the cell-type as well. This information is incorporated in the model after the Densenet operations. There are four unique cell types, this is represented as a one-hot vector . The output of Densenet (a tensor of size ) is flattened using adaptive average pooling to bring it down to size () which is then further reduced in dimensions to produce a vector . Concatenating and we end up with a vector, .

4.3 Loss Selection

The loss function acts on a embedding, which is the output the final layer of the model. The final loss function is composite function made up of Softmax [3.2] and Arc-Margin [3.2] loss functions. Using the functions defined in [3] and [6] we get the composite loss,

| (12) |

The hyperparameter and in Equation 6, the value of is .

A composite loss function is used to produce a stable training regime. A loss function composed entirely of ended up in an oscillatory behavior. The inclusion helped stabilize the training process.

4.4 Accuracy and Loss

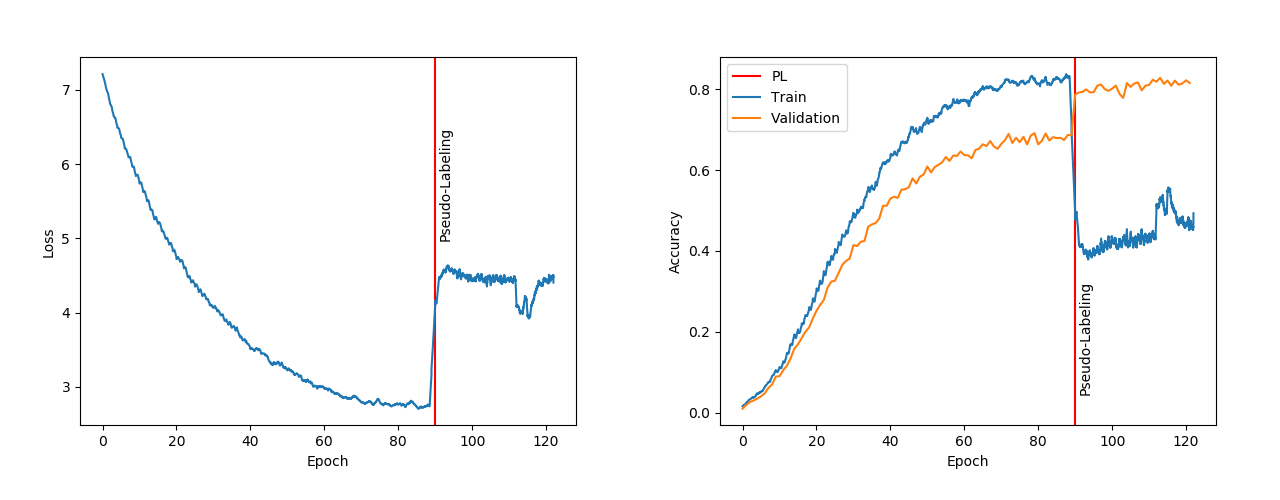

As can be seen in the Figure 6 the generalising capabilities of the network were improved almost instantaneously with the introduction of Pseudo-Labelling [3.3].

As defined in section 3.4, CutMix helps the model generalise better. The coefficient in equation [9] was sampled from the distribution with the value of .

Pseudo-Labelling proved better at helping the model generalise on the validation set. Though after the introduction of pseudo-labelling the training loss and training accuracy [6] both took a hit. We will get a better insight into the benefits of pseudo-labelling in the next section.

4.5 Template and Feature Vector

One way to look at the deep-learning model is through the lens of template and feature vectors. The first part of the model takes the image, and does the convolution and pooling operations, giving a feature vector . After this a dot product is taken between and , which is the template for the class. The resulting operation can be summarised for every class using,

| (13) |

where and , where is the number of classes. After taking softmax of we get . The value of is the probability that the image belongs to class . The key take away here is that,

Therefore a model will generalise well for which ’s are spread apart. Further all the feature vectors of the same class should form a cluster and feature vectors of different classes should be far apart.

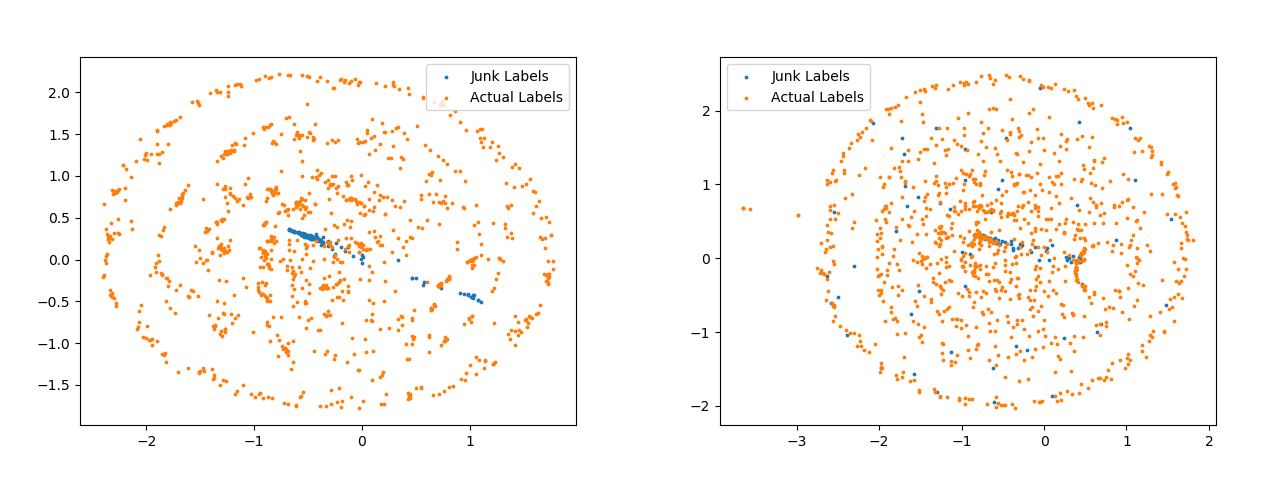

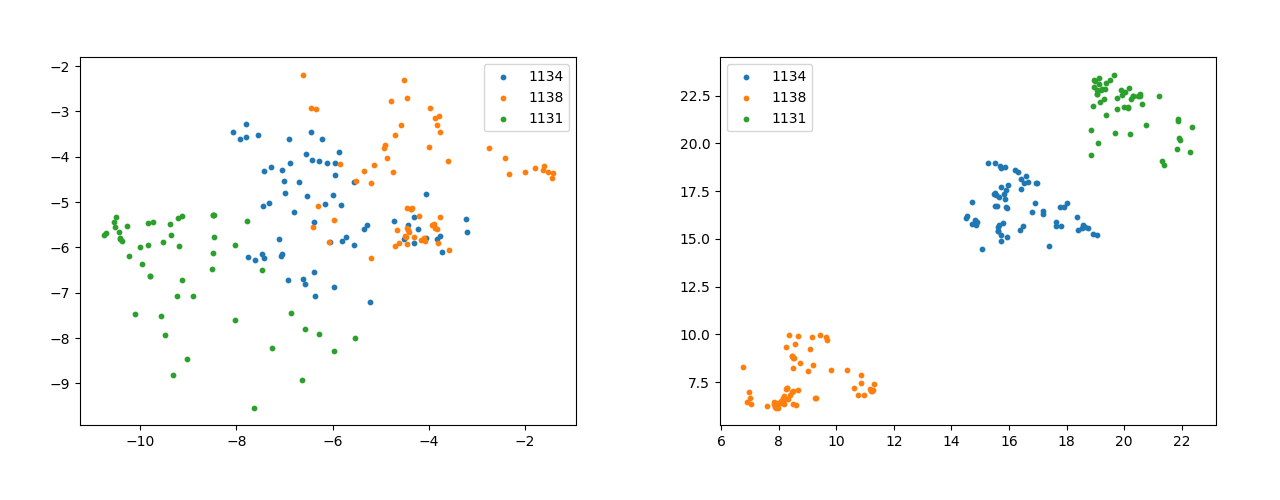

In this model we have separate template vectors for the two types of losses. The Figure 7 shows the difference between the template vectors obtained obtained with and without pseudo-labelling. As compared to the vectors in left Figure 7 the vectors are more spread apart in the right Figure 7.

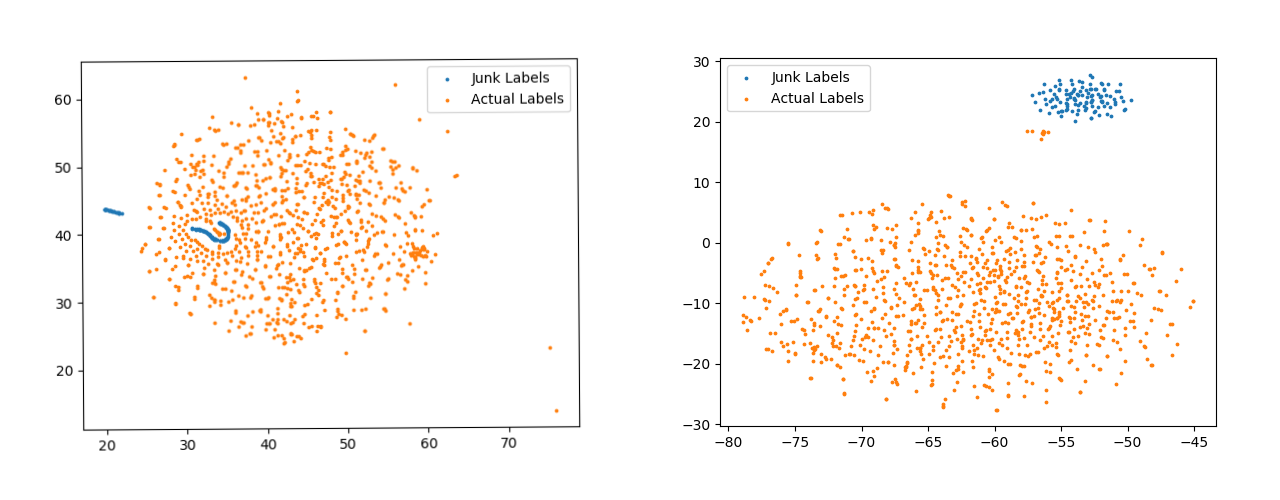

Figure 8 are for softmax template vectors. Apart from the spread of points, another thing to notice here are the blue points. The points shown with blue color are templates for Junk Classes. These are the classes for which there are no samples present in the data-set. The templates generated from the model trained with pseudo-labels has made a completely separate cluster for such classes. The distinction between the Junk Classes and Actual Classes is visible all the four Figure, 7, 8, but the distinction is best visible in the right Figure 8.

Another interesting plot to look for is that of the feature vectors. The plot without pseudo-labelling (Figure 9) has vague clusters and almost in-existent decision boundary. On the other hand the clusters in Figure 9 are much more well defined and tightly packed with a clear decision boundary separating the clusters. The significance further increases because of the fact that these three classes are the most prevalent in the data-set.

In the scatter Plot of class template vectors and feature vectors initially which is reduced to two dimensions using t-SNE [17]

4.6 Convolution Layers

Convolution layers act as filter which can be tuned in the training process to pick-up certain features and dampen others. The filter is usually square shaped and extends through all the channels of the image on which it is acting. Lets consider a square input image for simplicity (also the input image is square shaped in case). Let the input image be and the convolution filter be where is the number of input channels. The action of one filter produces a new Image with a single channel. When we club a lot of these kind of filters, we get that many images as output which can be stacked to form channels of the output image .

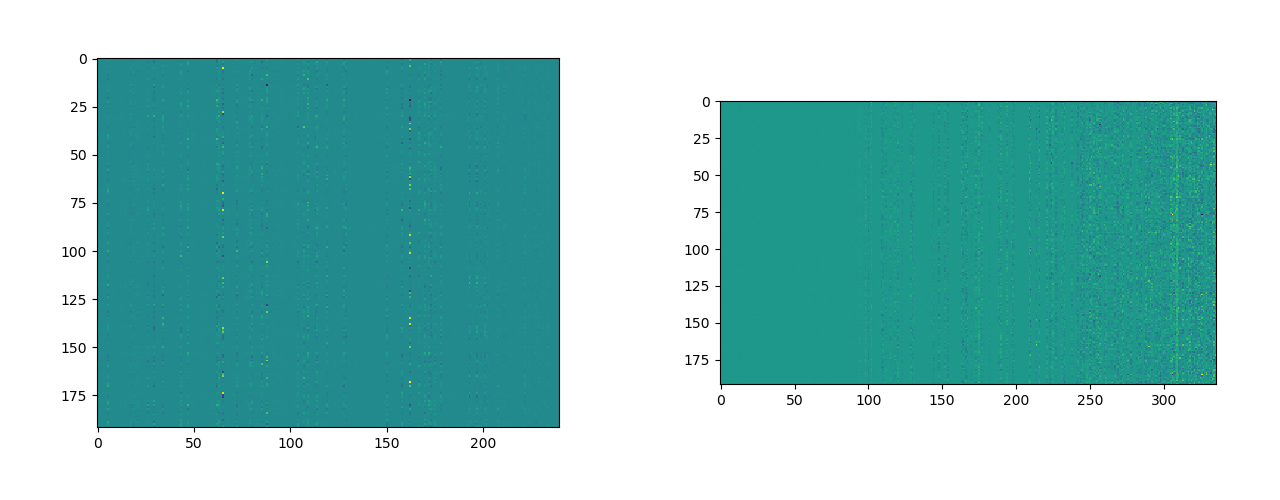

We are interested in filters of shape . The Figures in 10 shows two such filter layers. The image on the left [10] shows the part of the Denselayer (6) of Denseblock (1) [2].

Another way to think of a single convolution operation is as taking the weighted average of the channels of the input image .

| (14) |

where, is the filter of the convolution layer, is the channel of the output image and is the channel of the input image.

What is important to note in both the figures 10, is the vertical bands of one-color, which are repeated horizontally, forming contiguous blocks of same color.

A band of same color signifies that instead of weighing each input channel () differently they multiplied by the same number, hence an average of sorts, lets call such and output if this happens for the filter.

A block of same color signifies that these average images are repeated multiple times in the output image. If this happens for such filters, a lot of variability in the feature map is lost, which is not a good sign.

This phenomenon is observed predominantly in two of the layers shown in Figure 10.

4.7 Grad-CAM

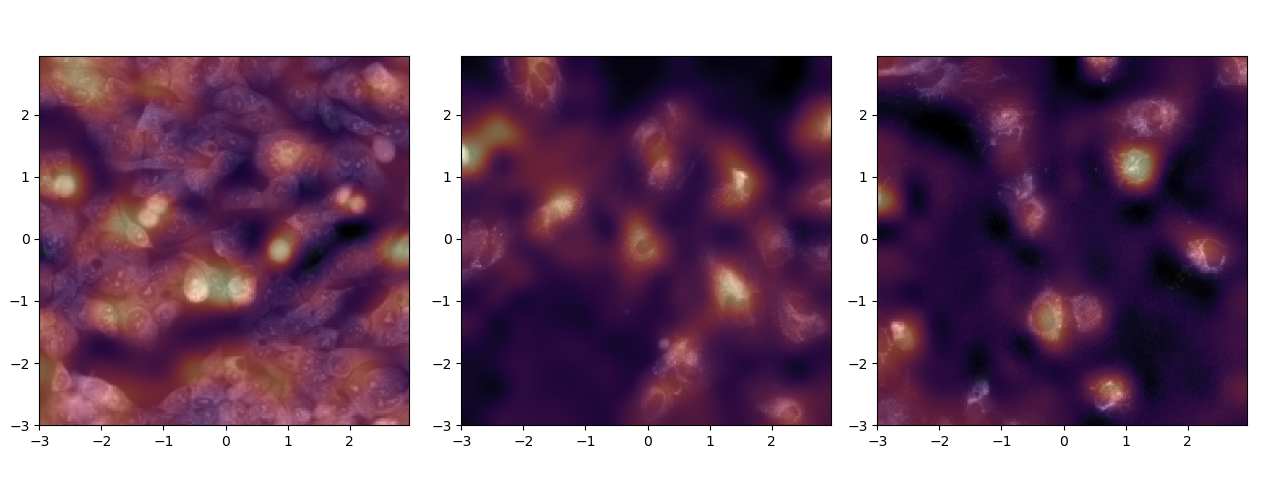

Visualization techniques such as Grad-CAM [3.5] provide us tools to better understand which part of the section of the image impacts the prediction in a positive way. This information is crucial to understand the feature selection capabilities of the network.

In figure 11, it is quite evident that the model seem to paying attention very specific features in the image. Further it would not be wrong to say that these features are of significance, given that it is the Endoplasmic Reticulum in the left, Mitochondria in the middle and Nucleolus in the right.

5 Discussion

Where does a model look at while deciding the proper classification? As seen in the previous section thanks advancements in visualization techniques like Grad-CAM, this question has become answerable to a certain extent.

A question going forward now that can be put up is - Can we benefit from this information? Usually when we train the model for an image classification task, the human capabilities are at par with the model (there maybe minor differences on data-sets of large scale, where sometimes the model outperforms the human or vice-versa but the magnitude is not even of one order). For problems such as these, the details about where the model pays attention serves nothing more than just a sanity check. For example a model trained to identify dogs should pay attention to the dog in the image, if a model does so we are assured that it has indeed learned to look in the right direction.

Now consider a possibility where the humans can not classify the images because there is no semantic object in the images to classify upon (which is the case in the problem at hand) and now suppose we train an agent which can do this task and is really good at it too. Wouldn’t it be interesting now to look at where the agent is looking?

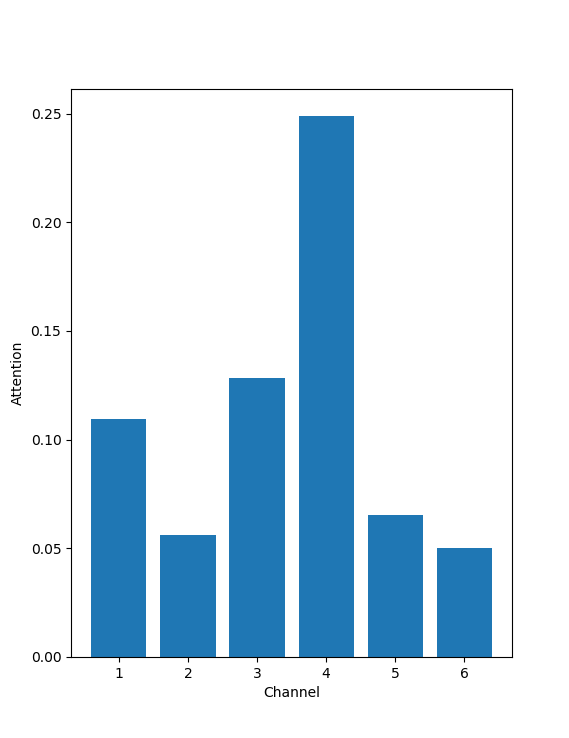

The figure 12 is a crude representation of where the model is looking at. As can be seen most attention is payed on the Channel 5 of the image, for a given cell-type and reagent label. This should be an indication that Channel 5 is the most affected by the reagent and is the Channel which is most crucial in the prediction of the image.

Attention in this case is nothing but as follows:

| (15) |

Where is any CAM visualization and is the channel of the image. This is the vector ( is the number of channels) is then -normalized to get the final attention. A higher number for a channel suggests that the attention map is concordant with channel hence the channel is significant.

References

- [1] Anthony JF Griffiths, Jeffrey H Miller, David T Suzuki, Richard C Lewontin, and William M Gelbart. Mendel’s experiments. In An Introduction to Genetic Analysis. 7th edition. WH Freeman, 2000.

- [2] Michel Morange. The central dogma of molecular biology. Resonance, 14(3):236–247, 2009.

- [3] Hassan Dana, Ghanbar Mahmoodi Chalbatani, Habibollah Mahmoodzadeh, Rezvan Karimloo, Omid Rezaiean, Amirreza Moradzadeh, Narges Mehmandoost, Fateme Moazzen, Ali Mazraeh, Vahid Marmari, et al. Molecular mechanisms and biological functions of sirna. International journal of biomedical science: IJBS, 13(2):48, 2017.

- [4] Haiyong Han. Rna interference to knock down gene expression. In Disease Gene Identification, pages 293–302. Springer, 2018.

- [5] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [6] Gao Huang, Zhuang Liu, Laurens Van Der Maaten, and Kilian Q Weinberger. Densely connected convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4700–4708, 2017.

- [7] Connor J Parde, Carlos Castillo, Matthew Q Hill, Y Ivette Colon, Swami Sankaranarayanan, Jun-Cheng Chen, and Alice J O’Toole. Deep convolutional neural network features and the original image. arXiv preprint arXiv:1611.01751, 2016.

- [8] Rajeev Ranjan, Carlos D Castillo, and Rama Chellappa. L2-constrained softmax loss for discriminative face verification. arXiv preprint arXiv:1703.09507, 2017.

- [9] Wang Mei and Weihong Deng. Deep face recognition: A survey. arXiv preprint arXiv: 1804.06655, 2018.

- [10] Hao Wang, Yitong Wang, Zheng Zhou, Xing Ji, Dihong Gong, Jingchao Zhou, Zhifeng Li, and Wei Liu. Cosface: Large margin cosine loss for deep face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5265–5274, 2018.

- [11] Dong-Hyun Lee. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Workshop on challenges in representation learning, ICML, volume 3, page 2, 2013.

- [12] Yves Grandvalet and Yoshua Bengio. Entropy regularization. Semi-supervised learning, pages 151–168, 2006.

- [13] Matthew D Zeiler and Rob Fergus. Visualizing and understanding convolutional networks. In European conference on computer vision, pages 818–833. Springer, 2014.

- [14] Aravindh Mahendran and Andrea Vedaldi. Understanding deep image representations by inverting them. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 5188–5196, 2015.

- [15] Bolei Zhou, Aditya Khosla, Agata Lapedriza, Aude Oliva, and Antonio Torralba. Learning deep features for discriminative localization. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2921–2929, 2016.

- [16] Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, pages 618–626, 2017.

- [17] Laurens van der Maaten and Geoffrey Hinton. Visualizing data using t-sne. Journal of machine learning research, 9(Nov):2579–2605, 2008.