Distributed Estimation for Interconnected Systems with Arbitrary Coupling Structures

Abstract

This paper is concerned with the problem of distributed estimation for time-varying interconnected dynamic systems with arbitrary coupling structures. To guarantee the robustness of the designed estimators, novel distributed stability conditions are proposed that use only local information and the information from neighbors. Then, simplified stability conditions, which do not require timely exchange of neighbors’ estimator gain information, are further developed for systems with delayed communication. By merging these subsystem-level stability conditions and the optimization-based estimator gain design, distributed, stable and optimal estimators are proposed. Notably, the optimization solutions can be easily obtained by standard software packages, and it is also shown that the designed estimators are scalable in the sense of adding or subtracting subsystems. Finally, an illustrative example is employed to show the effectiveness of the proposed methods.

Index Terms:

Time-varying interconnected systems; Distributed stability conditions; Distributed estimation; Optimal estimators.

I Introduction

With the development of communication and sensor technology, the scale of systems is consistently increasing as they are getting more and more connected. As early as the 1960s, the concept of interconnected systems had been proposed [1], and interconnected systems have received more and more attention in recent decades due to their wide applications in power systems [2], multi-robot systems [3], complex networks [4, 5], and biological networks [6]. Generally, interconnected systems are high-dimensional complex systems composed of numerous dispersed subsystems, which can be state-coupled with their neighboring subsystems. The increased complexity of interconnected systems in terms of both system topologies and dynamics has prevented traditional estimation approaches from achieving satisfactory performance [7]. This can be mainly attributed to the poor scalability of centralized structure in traditional approaches. Firstly, the spatial distribution of subsystems will lead to high communication burden and field deployment cost for centralized methods. Meanwhile, the centralized methods also suffer heavy computational burden with the increase of the dimensions of interconnected systems. In addition, the intricate coupling structures of interconnected systems are not exploited in centralized methods, making it necessary to re-ensure stability when adding or subtracting subsystems. Therefore, it is imperative to consider advanced estimation approaches for interconnected systems to guarantee the accuracy and the stability of estimators.

Over the past several decades, different decentralized/distributed estimation approaches have been developed in the fields of multi-agent systems [8, 9], multi-sensor systems [10, 11, 12], and interconnected systems [13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23] to decrease communication overhead and computational complexity. In these approaches, local estimators are designed based on their own information and the information from their neighboring subsystems. However, the arbitrary couplings among subsystems pose a more significant challenge to distributed analysis for interconnected systems, especially in terms of stability. For this reason, most existing distributed estimation approaches for interconnected systems are based on special coupling structures or communication structures. With structural assumptions, the designed distributed estimators can provide better estimation performance and their stability can be ensured by local analysis. For example, the optimal locally unbiased filter was proposed in [13] with a specific structure for information exchange, while the centralized and distributed moving horizon estimators were developed in [14] for sparse banded interconnected systems. The sparsity structure was also exploited to decompose interconnected systems into interconnected overlapping subsystems with coupled states that can be locally observed; then the distributed Kalman filter [15] and the consensus-based decentralized estimator [16] were designed. Meanwhile, a sub-optimal distributed Kalman filtering problem was addressed in [17] for a class of sequentially interconnected systems. Note that it is difficult to transform most interconnected systems into these structures. A promising idea to address the distributed estimation problem without any structural constraints is to combine stability conditions and distributed estimator design methods.
For instance, by adding constraints on stability conditions for general interconnected systems, distributed estimators with a decoupling strategy were designed in [18, 19, 20] and a moving horizon estimator was proposed in [21] under the assumption of uncorrelated local estimation errors. Besides, distributed estimators with a plug-and-play fashion were developed in [22, 23] by exploiting the properties of the infinity norm for small gain based stability conditions. However, how to design stable and distributed estimation methods based on local and neighboring information for general interconnected systems is still an open question.

Since the 1960s, the stability problem for general interconnected systems has received a great deal of attention [24, 25, 26]. To the best of our knowledge, besides the centralized analysis of stability for overall systems, the stability analysis methods for general interconnected systems can be divided into three categories: 1) methods based on scalar or vector Lyapunov functions [1, 27, 28]; 2) methods based on the small gain theorem [29, 30]; 3) methods based on dissipativity theory [31, 32]. For the first category, the stability conditions involving M-matrices are derived by investigating the internal stability of both the subsystems and the overall interconnected systems. Unfortunately, tests for M-matrices are successful only when the couplings among subsystems are weak. In contrast, the stability conditions for the second category are obtained by analyzing the input-output stability of subsystems, where the couplings are treated as input terms from neighboring subsystems. Small gain theorem based methods also require weak coupling conditions, but the results are less conservative and can lead to relatively simple design guidelines [24]. Another kind of input-output stability result, in the third category, is based on the concept of dissipativity and does not necessarily require weak coupling conditions owing to its centralized analysis. However, the above stability conditions are not fully distributed, which means the knowledge of the dynamics and the couplings from neighboring subsystems is not enough in the analysis process. How to develop these stability conditions into scalable distributed conditions is still challenging. One way to address this problem is to derive the distributed stability conditions by totally local analysis [18, 19, 20, 21, 22, 23], where the stability of subsystems is locally and sequentially analyzed.
Nevertheless, this approach is much more conservative than the centralized results, i.e., weak coupling conditions or structural assumptions are still required. Another promising idea is the subsystem-level analysis for centralized stability conditions by decomposing them into distributed ones. For example, the work in [33] focused on decomposing a centralized dissipativity condition into distributed dissipativity conditions of individual subsystems. Note that these conditions require huge communication burden to exchange message matrices among subsystems and cannot be generalized to time-varying interconnected systems.

It should be pointed out that the distributed estimator designed in [19] only provides stability conditions with specific subsystem coupling structures, which are further interpreted as the directed acyclic graphs of couplings in [20]. As for general subsystem connection structures, the design of distributed estimators with subsystem-level stability conditions is still challenging, and has not yet been fully solved. Motivated by the above analysis, we shall investigate the distributed estimation problem for general time-varying interconnected systems. The main contributions of this paper can be summarized as follows:

• Distributed stability analysis. The distributed stability conditions, which only require subsystem-level knowledge of dynamics and couplings, are proposed for local estimators. Then, the effect of couplings on distributed conditions is discussed.

• Distributed stability under delayed communication. The simplified distributed stability conditions are proposed for time-varying interconnected systems with one-step communication delay. It is shown that the simplified conditions do not need real-time exchange of subsystems’ gain information and can ease the communication burden.

• Distributed estimator design. By combining the distributed stability conditions and the optimization-based estimator gain design, recursive, stable and optimal estimators for time-varying noisy interconnected systems are proposed, where an upper bound of the local estimation error covariance is minimized. The proposed estimators are fully distributed, that is, based only on local and neighboring information.

Notations: Define , where is a natural number excluding zero, and denote the set of -dimensional real vectors by . Give sets and , represents the set of all elements of A that are not in , and is the intersection set of and . The superscript ‘’ represents the transpose, while the symmetric terms in a symmetric matrix are denoted by ‘’. The inverse of the matrix is denoted by , and represents the trace of the matrix . The identity matrix with appropriate dimensions is represented as ‘’, and the matrix with all zero elements is denoted by ‘’. The notation is a positive definite (negative definite) matrix, and is a positive semi-definite (negative semi-definite) matrix. The notation means a column vector whose elements are , while stands for a block diagonal matrix. The mathematical expectation is denoted by , and is the 2-norm of matrix . Given a block matrix , represents the th block. The maximum eigenvalue of matrix is represented as .

II Problem Formulation

II-A Time-varying Interconnected System Model

Consider a time-varying interconnected system composed of subsystems, where the state and measurement dynamics of the th subsystem are described as follows:

| (1) |

The vectors and denote the state and the measurement of the subsystem , respectively. Moreover, , , , and are bounded matrices with appropriate dimensions, while the system noise and the measurement noise are uncorrelated Gaussian white noises satisfying

| (2) |

where and are the known covariances of and , respectively. if and otherwise. The set of neighbors for subsystem is denoted by , and the number of elements is . Therefore, the set can be described as

| (3) |

The coupling structure of the system is determined by whether the matrix is a null matrix. Since there are no constraints on the spatial distribution of subsystems, the coupling structure can be arbitrary. Then, the following subset is defined:

| (4) |

where the number of elements for is .
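As an illustration of how a coupling structure can be read off from the coupling matrices, the following minimal sketch builds neighbor sets from a block matrix. It assumes that subsystem j is a neighbor of subsystem i whenever the coupling block from j into i is not a null matrix; the definition in (3) may differ (for example, it may also include the reverse direction). The function name `neighbor_sets` and the example blocks are hypothetical.

```python
import numpy as np

def neighbor_sets(A_blocks):
    """Return {i: set of j != i whose coupling block A_blocks[i][j] is non-zero}.

    Assumption: A_blocks[i][j] is the coupling matrix from subsystem j
    into subsystem i (A_blocks[i][i] is the local dynamics and is ignored).
    """
    n = len(A_blocks)
    return {
        i: {j for j in range(n) if j != i and np.any(A_blocks[i][j] != 0)}
        for i in range(n)
    }

# Hypothetical three-subsystem example with a directed cycle 1 -> 0, 2 -> 1, 0 -> 2.
Z = np.zeros((2, 2))
I2 = np.eye(2)
A_blocks = [
    [I2, 0.5 * I2, Z],
    [Z, I2, 0.2 * I2],
    [0.1 * I2, Z, I2],
]
neighbors = neighbor_sets(A_blocks)
```

No sparsity or banded pattern is required by this construction, which matches the arbitrary coupling structures considered in the text.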

Remark 1. Compared with the work in [13, 14, 15, 16, 17], the interconnected system model addressed in this paper does not require any structural assumptions (e.g., the sparsity assumption on couplings). In this case, the model in (1) is more general and can cover a large part of practical situations. For example, the heavy duty vehicle systems [34] with aerodynamic interconnections can be modeled as interconnected systems with strongly connected topologies in the form of (1). On the other hand, there is no constraint on the coupling strength for the interconnected system model in this paper, which is different from the work in [22, 23]. In other words, the upper bound of can be arbitrarily large. However, the analysis of distributed stability and the distributed estimation problem for general interconnected systems without any weak coupling assumptions will be more challenging.

To collaboratively achieve system tasks, subsystems need to exchange their information via communication networks. Therefore, the distributed communication structure in the following assumption is required.

Assumption 1 (Communication). Each subsystem can communicate with its neighbors.

Remark 2. Notice that the communication structure in Assumption 1 is distributed and has a limited range of information broadcast due to the limitation on network bandwidth and the energy constraints of subsystems. Unlike the centralized communication structure, in which one subsystem communicates with all the other subsystems, the considered distributed communication structure is more practical. On the other hand, we restrict ourselves to time-varying interconnected systems with constantly varying dynamics and couplings because of their wider applications. Taking blocked power systems as an example, the couplings among different blocks change with the real-time power dispatching [35]. For time-varying interconnected systems, the distributed stability conditions in [33] are no longer suitable, and thus novel distributed stability analysis approaches are required.

II-B Problem of Interest

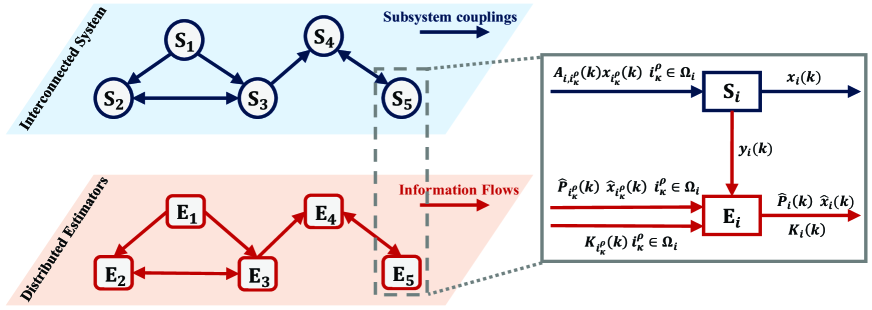

The structure of distributed estimators for interconnected systems with local information flows is depicted in Fig. 1. It is assumed that subsystems can only know their own dynamics, and thus the local measurements and the estimates from neighbors are used for state reconstruction. The estimator for the th subsystem is proposed as

| (5) |

where and are the one-step prediction and the estimate of subsystem state , respectively. Then, the estimation error iteration for the th subsystem is calculated by (1) and (5) as

| (6) |

where , while and are the one-step prediction error and the estimation error, respectively. The one-step prediction error covariance and the estimation error covariance can be calculated as

| (7) |
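The estimator (5) is not reproduced in this text, so the following is only a minimal sketch of one local update under an assumed predictor-corrector form: the prediction uses the local estimate and the neighbors' estimates through the coupling matrices, and the correction uses the local measurement with a given gain. All names (`local_estimator_step`, `A_ii`, `A_ij`, `C_i`, `K_i`) are hypothetical, and the gain is taken as given rather than designed.

```python
import numpy as np

def local_estimator_step(A_ii, A_ij, C_i, K_i, x_hat_i, x_hat_nbrs, y_i):
    """One assumed prediction/correction step for subsystem i.

    A_ij and x_hat_nbrs are dicts keyed by neighbor index j.
    Returns (one-step prediction, updated estimate).
    """
    # Prediction: local dynamics plus contributions of neighboring estimates.
    x_pred = A_ii @ x_hat_i
    for j, A in A_ij.items():
        x_pred = x_pred + A @ x_hat_nbrs[j]
    # Correction: innovation from the local measurement only.
    x_new = x_pred + K_i @ (y_i - C_i @ x_pred)
    return x_pred, x_new

# Hypothetical numbers for a two-state subsystem with one neighbor.
x_pred, x_new = local_estimator_step(
    A_ii=0.9 * np.eye(2),
    A_ij={1: 0.1 * np.eye(2)},
    C_i=np.array([[1.0, 0.0]]),
    K_i=np.array([[0.5], [0.0]]),
    x_hat_i=np.array([1.0, 1.0]),
    x_hat_nbrs={1: np.array([2.0, 0.0])},
    y_i=np.array([1.3]),
)
```

Only local data and neighbors' estimates enter the update, which is the information pattern described for Fig. 1.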

The major concern of the distributed estimation problem is to design suitable gain matrices such that the estimation error is stable and the estimation performance index is minimized. Specifically, the following definition is introduced to describe the property of stability for local estimators.

Definition 1 (Mean-square uniformly bounded). For the interconnected system in (1), the proposed estimator (5) is mean-square uniformly bounded if for arbitrarily large , there is (independent of ) such that

| (8) |

However, it is usually difficult for subsystems to timely obtain the cross-covariances by only local communication. Therefore, an upper bound of the estimation error covariance is used instead and the performance index for local estimation is designed as . Here, the optimal estimator gain design for subsystems can be formulated as an optimization problem:

| (9) | ||||

where is a subspace of stable estimator gains for subsystem at the instant .

In what follows, the augmented system dynamics and the augmented estimator iteration will be presented. By defining , we can obtain the overall system dynamics as

| (10) |

where with and

| (11) |

The upper bounds of bounded matrices are , , , , and , respectively. Then, let us denote and . The following augmented estimator and the augmented estimation error iteration are obtained:

| (12) |

where and .

Note that the stability of each local estimator depends on the stability of its neighboring estimators due to the interconnected estimation error . Hence, it is difficult to determine and design a stable estimator in a totally local analysis. On the other hand, a totally centralized analysis for the augmented estimation error system needs the knowledge of dynamics and couplings from the overall system, which cannot apply to large-scale interconnected systems.
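The dependence of local stability on neighbors can be seen in a toy example. The sketch below assumes two scalar error systems, each locally stable with factor 0.5, coupled symmetrically with strength c; these numbers are illustrative and are not taken from the paper. The eigenvalues of the augmented matrix are 0.5 ± c, so the overall error system is unstable once the coupling is strong enough even though each local part is stable.

```python
import numpy as np

def spectral_radius(M):
    """Largest eigenvalue magnitude of a square matrix."""
    return float(np.max(np.abs(np.linalg.eigvals(M))))

def augmented_error_matrix(local, coupling):
    """Hypothetical 2x2 augmented error dynamics for two scalar subsystems."""
    return np.array([[local, coupling], [coupling, local]])

weak = spectral_radius(augmented_error_matrix(0.5, 0.3))    # eigenvalues 0.8 and 0.2
strong = spectral_radius(augmented_error_matrix(0.5, 0.6))  # eigenvalues 1.1 and -0.1
```

In this toy case a purely local check (0.5 < 1) passes for both coupling strengths, while only the weakly coupled case is stable overall.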

Consequently, the aim of this paper is to address the following problems:

1) Distributed stability conditions analysis: Analyze the distributed stability conditions such that the proposed estimator is mean-square uniformly bounded, where only subsystem-level knowledge of dynamics and couplings is required for each estimator.

2) Distributed estimator design: Design distributed, stable and optimal estimators for time-varying interconnected systems with arbitrary coupling structures, where an upper bound of local estimation error covariance is minimized.

Remark 3. To design a fully distributed estimator, both the iteration form and the stability conditions for the estimator need to rely only on local communication, computation and storage. Though the estimator in (5) only uses the information of the local measurement and neighboring estimates, the local estimation errors are still interconnected. Therefore, the major difficulty in distributed estimator design for general interconnected systems is to calculate the optimal estimator gain and maintain the stability without any global interconnection information of the estimation errors.

III Main Results

In this section, we first present distributed conditions to guarantee the stability of local estimators. Then, a fully distributed estimation approach is proposed by merging optimal and stable estimator gain designs.

III-A Distributed Stability Conditions

Let us denote the augmented estimation error covariance as and it is calculated by

| (13) | ||||

where the matrices and are the augmented noise covariances. Then, the centralized stability conditions are derived by the following proposition. Its proof appears in the Appendix.

Proposition 1. If the following centralized stability condition is satisfied

| (14) |

where is a finite positive number, then the proposed distributed estimator (5) is stable in the sense of mean-square uniformly bounded (8).

Under the distributed communication structure, the knowledge of dynamics and couplings from the overall system is hard to obtain for local estimators. Therefore, the following theorem provides distributed conditions to ensure the stability for all local estimators.

Theorem 1 (Distributed stability conditions). The following distributed conditions are sufficient to ensure the stability for the proposed distributed estimator (5):

C1) For each subsystem

| (15) |

C2) For each pair of neighbors

| (16) |

where is a finite positive number, while parameter satisfies and

| (17) |

These conditions are equivalent to the following inequalities:

| (18) |

where

| (19) |

Proof. According to Schur complement lemma [36], the condition (C2) and the first inequality in the condition (C1) are equivalent to or for each pair of neighbors . By the definition of in (4), one can conclude that . Then, define the permutation matrix

| (20) |

By left and right multiplication of with and , the following equivalent inequality is derived:

| (21) |

where

| (22) |

By augmenting all the matrices in (21), one has that

| (23) | ||||

Then, define the permutation matrix

| (24) |

where and is a matrix with dimension that contains all zero elements, but an identity matrix of dimension at rows . By the property of positive definite matrix, if , then the matrix by left and right multiplication of with and is negative definite, i.e.,

| (25) | ||||

Inequality (25) is equivalent to

| (26) |

By Schur complement lemma, one has that

| (27) |

According to Proposition 1, inequality (27) and the second inequality in the condition (C1) are sufficient to ensure the mean-square boundedness (8). This completes the proof.
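For reference, the Schur complement lemma invoked in this proof can be stated in its standard form as follows. The symbols $M$, $A$, $B$ and $C$ are generic placeholders (with $A$ and $C$ symmetric) and are not the matrices of (15)–(27).

```latex
\[
M = \begin{bmatrix} A & B \\ B^{\mathrm{T}} & C \end{bmatrix} \prec 0
\quad \Longleftrightarrow \quad
C \prec 0 \ \text{ and } \ A - B\, C^{-1} B^{\mathrm{T}} \prec 0 .
\]
```

The same equivalence holds with $\succ 0$ in place of $\prec 0$ throughout, which is the form typically used to convert a quadratic matrix inequality in the gain into a linear matrix inequality.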

Remark 4. Intuitively, the stability of an independent subsystem without any couplings is not influenced by its neighboring subsystems, and thus the local mean-square uniform boundedness condition is enough to ensure the stability. However, the stability condition for a subsystem that is coupled with its neighbors will be tighter than the local mean-square uniform boundedness condition. Therefore, the distributed stability conditions in Theorem 1 contain two parts: the condition (C1) ensures that the local estimation error system without interconnected terms is stable, while the condition (C2) is an additional requirement for the stability of systems with coupling relationships.

Remark 5. For the distributed control problem of interconnected systems with known system states, the distributed conditions for stabilizing systems can be obtained by a similar derivation of Theorem 1. When the process dynamics (1) is controlled by an additional input term “”, the distributed state feedback controllers and “” can be designed, then the distributed stabilization conditions can be obtained by decomposing the matrix inequality with the property of positive definite matrix, where and are the corresponding augmented matrices.

The determination of the parameter is a trade-off between the stability and the performance of estimators, where smaller can provide more conservative margin of distributed stability and potentially worse estimation performance. On the other hand, the parameter only influences the ultimate boundedness of estimators and can be chosen as a large number to avoid estimation performance degradation.

Notice that the above distributed stability conditions need subsystem to know the gain from subsystem in a timely manner. However, one-step communication delay, which naturally arises in networked environments, is inevitable and needs to be taken into account when the estimator gain information is transmitted over the communication network from neighboring subsystems. To extend the result of Theorem 1 to more general communication environments with one-step transmission delay, the following conditions, which do not require synchronous knowledge of neighboring estimator gains, are further proposed.

Corollary 1. For each subsystem, if the following inequalities are satisfied:

| (28) |

where is a finite positive number, and is constrained by

| (29) |

where the parameter satisfies , then the proposed distributed estimator is stable in the sense of mean-square uniformly bounded (8).

Proof. The following upper bounds can be derived from the inequality (28):

| (30) |

By the Schur complement lemma, the first inequality in (30) can be converted to

| (31) |

Hence, the upper bounds of and can be obtained as

| (32) |

By the second and the third inequalities of (30), one has the following inequality:

| (33) |

Therefore, it can be concluded that

| (34) | ||||

Under the constraints in (29) and taking , the condition (C2) in Theorem 1 is derived. This completes the proof.

To balance the stability margin of each subsystem, the parameters and should be proportional to the size of the couplings, and a feasible parameter selection is given by

| (35) |

Remark 6. By Corollary 1, the distributed stability conditions are simplified into finding an appropriate time-invariant parameter . Unlike the conditions in [33] that require huge communication burden to exchange message matrices among subsystems, the calculation of in this paper only needs subsystems to communicate with their neighbors to exchange the knowledge of and . Therefore, this procedure can be achieved offline with less communication overhead. Notice that the stability result with less communication and computational burden is more suitable for time-varying interconnected systems with different couplings and dynamics at each instant.

The distributed calculation of can be implemented in the following Algorithm.

Remark 7. The small gain theorem for interconnected systems can be stated as follows. Suppose that each local estimation error system (6) satisfies the local mean-square uniform boundedness condition , then the augmented estimation error system in (12) is stable if the set of small gain conditions (, if ) holds for each . The small gain conditions mean that the composition of the coupling matrices along every closed cycle is stable. However, it is hard to apply the small gain theorem to design distributed estimators or controllers for interconnected systems with arbitrary coupling structures. For the distributed estimation problem, feedback is introduced to adjust the size of in a distributed manner such that the augmented estimation error system is stable, while the coupling matrices cannot be adjusted. The small gain theorem requires that , which is not always satisfied and is irrelevant to the estimator design. A natural question is what distributed conditions a subsystem needs to meet with its neighbors such that the overall system is stable. To address this question, the distributed stability conditions are derived by decomposing the centralized stability condition in this paper. The result in Theorem 1 turns out to be a set of matrix inequalities for each pair of neighbors. Therefore, each subsystem only needs to satisfy these matrix inequalities with its neighbors, and then the stability of the overall system can be ensured.

Remark 8. Compared with the stability analysis by Lyapunov functions [1, 27, 28], the proposed distributed stability conditions in Corollary 1 are less conservative in the requirement of weak coupling assumptions. According to the inequality (29), the strength of coupling can be arbitrarily large as long as the stability parameter is designed small enough. On the other hand, the conditions in Corollary 1 can be directly applied to the distributed estimation problem when the parameters are determined by Algorithm 1 offline.

III-B Optimization-based Distributed Estimator

In what follows, we would like to design optimal estimators for time-varying interconnected systems in a distributed way. We have the following results on optimization-based distributed estimator design. First of all, let us define the following matrices:

| (36) |

where is an upper bound of and is the th diagonal element of . Then, the gain design for the proposed distributed estimator (5) is provided in the following Theorem.

Theorem 2. For the time-varying interconnected system (1), the gain matrix of the proposed distributed estimator (5) is obtained by minimizing an upper bound of estimation error covariance and keeping the designed estimator mean-square uniformly bounded, as the following optimization problem:

| (37) | ||||

where is an upper bound of one-step prediction error covariance and is calculated as

| (38) |

with the upper bound of estimation error covariance calculated as

| (39) |

Proof. The cross-covariances among subsystems are difficult to calculate online by local communication, which means that direct calculation of the one-step prediction error covariance in (7) is not feasible. Therefore, an upper bound of the estimation error covariance is constructed and used for the gain design problem. Let be the th component of , while is defined as the th component of . By resorting to the well-known Hölder inequality, one has that

| (40) | ||||

Thus, the following upper bound of is derived:

| (41) |

Then, applying the inequality (41) to (7), it turns out that

| (42) |

Therefore, an upper bound of is constructed by . In this case, an upper bound of local estimation error covariance is derived as

| (43) | ||||

Then, it is proposed to construct an upper bound of as satisfying

| (44) |

The optimal estimator gain is obtained by minimizing this upper bound , which turns out to be an optimization problem:

| (45) | ||||

By Schur complement lemma, the inequality constraint in (45) is converted into

| (46) |

Then, the first inequality constraint in (37) is further derived by using Schur complement lemma again. Adding the distributed stability constraints in Theorem 1 or Corollary 1, the optimization problem in Theorem 2 is formulated. This completes the proof.
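The role of the Hölder-type step in the proof can be illustrated numerically. Inequalities (40) and (41) are not reproduced in this text, so the sketch below checks a standard bound of the same kind rather than the paper's exact expression: for any n vectors, the outer product of their sum is bounded above (in the positive semi-definite order) by n times the sum of their individual outer products. This is what allows cross terms between neighbors to be replaced by terms that each depend on a single neighbor. The function name and the random test data are hypothetical.

```python
import numpy as np

def sum_outer_bound_gap(vectors):
    """Return n * sum_j a_j a_j^T - (sum_j a_j)(sum_j a_j)^T for a list of vectors.

    The returned matrix equals sum_{i<j} (a_i - a_j)(a_i - a_j)^T,
    so it is positive semi-definite.
    """
    n = len(vectors)
    total = np.sum(vectors, axis=0)
    lhs = np.outer(total, total)
    rhs = n * sum(np.outer(v, v) for v in vectors)
    return rhs - lhs

rng = np.random.default_rng(0)
vectors = [rng.standard_normal(3) for _ in range(4)]
gap = sum_outer_bound_gap(vectors)
min_eig = float(np.min(np.linalg.eigvalsh(gap)))  # non-negative up to rounding
```

The price of removing the cross terms is the factor n, which is one source of conservatism in any covariance upper bound built this way.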

Remark 9. The inequality constraints (18) and (28) can be rewritten as linear matrix inequality forms, so the optimization problem in Theorem 2 can be directly solved by the function “mincx” of MATLAB LMI toolbox [36]. In addition, the information used in the optimization problem (37) is from subsystem and its neighbors, and the computational complexity is mainly determined by the dimensions of these subsystems. Therefore, the proposed estimators are recursive and fully distributed, and can be deployed for large-scale interconnected systems with only local communication and computation requirements.

Remark 10. Notice that the work in [19, 20] only provides stability conditions with specific subsystem coupling structures, while the designed distributed estimators in Theorem 2 directly use the newly proposed subsystem-level stability conditions for general interconnected systems. This design methodology, which combines optimality and stability, can overcome the disadvantages of totally local estimator analysis in terms of the stability problem. Moreover, the developed stability conditions enable plug-and-play operations, which means that a newly added subsystem does not influence the stability of previous subsystems and its own stability can be ensured by collecting its neighbors’ information. Thus, there is no need to redesign the stability parameters, and this property is helpful for the deployment of distributed estimators.

From Theorem 2, the computational procedures of the distributed estimation for general interconnected systems with and without one-step communication delay can be summarized by Algorithm 2. For systems with ideal communication, the real-time transmission of estimator gains is feasible and the required information for the inequality constraints in (18) can be obtained timely. However, the stability conditions in (18) will not work any more when one-step communication delay is taken into consideration. Instead, offline calculation of for the inequality constraints in (28) can solve the problem caused by communication delay.

IV Simulation Examples

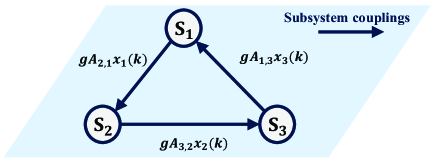

To illustrate the effectiveness of the proposed distributed estimators, a numerical study result is reported in this section. Let us consider the following interconnected system with three subsystems:

| (47) |

where

| (48) |

and and are identity matrices. The parameter is used to adjust the strength of couplings, and the coupling structure is described in Fig. 2. The process noises are Gaussian noises with covariance , and the measurement noises are Gaussian noises with covariances , and , respectively. The values for are calculated for different coupling strengths by tuning the parameter , and the result is shown in Table 1.

| Coupling Strength | 0.5 | 1 | 1.5 | 2 | 2.5 | 3 | 3.5 | 4 |
|---|---|---|---|---|---|---|---|---|
| | 1.08 | 1.08 | 1.08 | 1.08 | 1.08 | 1.08 | 1.08 | 1.08 |
| | 1.21 | 1.01 | 0.90 | 0.81 | 0.75 | 0.70 | 0.66 | 0.63 |
| | 1.84 | 1.57 | 1.36 | 1.19 | 1.05 | 0.94 | 0.86 | 0.78 |

As the connection between subsystems gets stronger, the calculated value of decreases such that the stability conditions become stricter. This observation is consistent with the fact that the convergence rate and stability margin of the overall system are related to the size of its transition matrix.

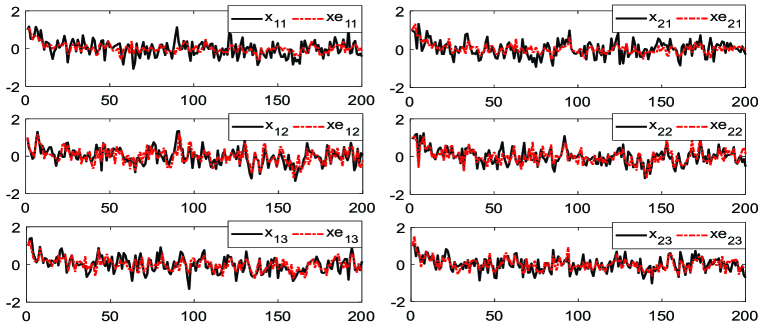

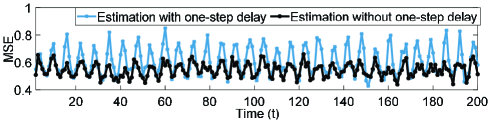

Then, the proposed optimization-based distributed estimators are deployed to estimate the states of this interconnected system. Under one-step communication delay, the trajectories of the states and the corresponding estimated values by Theorem 2 for this interconnected system are plotted in Fig. 3 when . As shown in Fig. 3, the proposed distributed estimator can track the real states well under a large coupling strength with communication delay. To compare the performance of the distributed estimators with and without the influence of one-step communication delay, Monte Carlo simulations with 100 runs have been performed by randomly varying the realization of process and measurement noises. The mean square error (MSE) is introduced to evaluate the performance, where

| (49) |

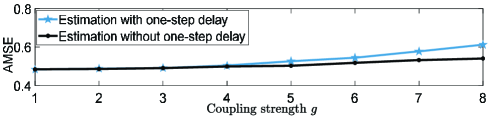

with being the state estimation error at the instant in the th simulation. Fig. 4 depicts the MSE performance comparison for the two cases in Algorithm 2. The result shows that the estimation accuracy can be maintained at a satisfactory level when one-step communication delay is taken into consideration. Moreover, to evaluate the dependence of performance on different coupling strengths, the AMSE (i.e., the asymptotic MSE, defined as the average of the MSE computed over the whole time interval) is reported in Fig. 5. As the stability constraints get stricter under stronger couplings, the estimation accuracy becomes worse. This performance degradation is caused by the conservatism introduced by the time-invariant stability parameters.
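The MSE and AMSE described above can be computed from stored Monte Carlo errors as in the following minimal sketch. Since the body of (49) is not reproduced here, the sketch assumes that the MSE at each instant is the average over runs of the squared Euclidean norm of the estimation error; the exact normalization in (49) may differ. The function names `mse_per_instant` and `amse`, the array layout, and the synthetic error data are hypothetical.

```python
import numpy as np

def mse_per_instant(errors):
    """errors has shape (runs, steps, state_dim).
    Returns the MSE at each time instant, averaged over the runs
    (assumed form: mean over runs of the squared error norm)."""
    errors = np.asarray(errors, dtype=float)
    squared_norms = np.sum(errors ** 2, axis=2)   # shape (runs, steps)
    return squared_norms.mean(axis=0)             # shape (steps,)

def amse(errors):
    """Average of the per-instant MSE over the whole time interval."""
    return float(mse_per_instant(errors).mean())

# Synthetic placeholder errors: 100 runs, 50 instants, 6 states.
# These are NOT the simulation results of the paper.
rng = np.random.default_rng(0)
errors = rng.normal(scale=0.1, size=(100, 50, 6))

mse = mse_per_instant(errors)
print("MSE shape:", mse.shape)
print("AMSE:", amse(errors))
```

In practice, `errors` would be filled with the difference between the true and estimated states recorded in each run, once with and once without the communication delay, so that the two MSE curves can be compared.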

V Conclusion

In this paper, we presented new results for subsystem-level stability analysis and distributed estimator design for time-varying interconnected systems with arbitrary coupling structures. The proposed distributed stability conditions can ensure mean-square uniform boundedness without requiring knowledge of the dynamics and couplings of the overall interconnected system. Then, simplified conditions that do not need real-time exchange of subsystems’ gain information were developed for systems with one-step communication delay. In particular, we showed that the distributed stability conditions do not need any assumption on the coupling structure and can be easily extended when a new subsystem is added to the original interconnected system. These conditions were applied to the distributed estimator design problem for time-varying interconnected systems, and novel optimization-based estimator design approaches were proposed. Notice that the designed estimators are fully distributed, in that only local information and the information from neighbors are required for the estimator iteration and the stability conditions. Finally, an illustrative example was employed to show the effectiveness of the proposed methods.

Several topics for future research are left open. Extending the presented distributed stability conditions to the co-design of distributed estimators and controllers will be important. Another interesting extension is the development of secure estimators for interconnected systems with stability constraints. Due to the frequent information exchange among subsystems and the broadcast nature of the communication medium, practical interconnected systems are more vulnerable to various attacks. To prevent system information from being collected by eavesdroppers to generate sophisticated attacks, the design of defense mechanisms is required and will be part of our future work. Meanwhile, the influence of cyber attacks will propagate among subsystems, and how to detect cyber attacks through subsystem cooperation is an important and interesting problem.

VI Appendix

Proof of Proposition 1. From the conditions in (14), an upper bound of can be derived as

| (50) |

By the augmented estimation error covariance in (13), if , then

| (51) |

It turns out that

| (52) |

If , then and

| (53) |

If at all instants, then . Now, we can conclude that is bounded as

| (54) |

By the boundedness of , one also has that is bounded. This completes the proof.

References

- 1 F. N. Bailey, “The application of Lyapunov’s second method to interconnected systems,” Journal of the Society for Industrial and Applied Mathematics, Series A: Control, vol. 3, no. 3, pp. 443–462, 1965.

- 2 V. Kekatos and G. B. Giannakis, “Distributed robust power system state estimation,” IEEE Transactions on Power Systems, vol. 28, no. 2, pp. 1617–1626, 2013.

- 3 Z. Feng, G. Hu, Y. Sun, and J. Soon, “An overview of collaborative robotic manipulation in multi-robot systems,” Annual Reviews in Control, vol. 49, pp. 113–127, 2020.

- 4 M. Dickison, S. Havlin, and H. E. Stanley, “Epidemics on interconnected networks,” Physical Review. E, Statistical, Nonlinear, and Soft Matter Physics, vol. 85, no. 6, p. 066109, 2012.

- 5 W. Li, Y. Jia, and J. Du, “State estimation for stochastic complex networks with switching topology,” IEEE Transactions on Automatic Control, vol. 62, no. 12, pp. 6377–6384, 2017.

- 6 Y. Huang, I. Tienda-Luna, and Y. Wang, “Reverse engineering gene regulatory networks,” IEEE Signal Processing Magazine, vol. 26, no. 1, pp. 76–97, 2009.

- 7 J. Lian, “Special section on control of complex networked systems (CCNS): Recent results and future trends,” Annual Reviews in Control, vol. 47, pp. 275–277, 2019.

- 8 C. Kwon and I. Hwang, “Sensing-based distributed state estimation for cooperative multiagent systems,” IEEE Transactions on Automatic Control, vol. 64, no. 6, pp. 2368–2382, 2019.

- 9 P. Yang, R. A. Freeman, and K. M. Lynch, “Multi-agent coordination by decentralized estimation and control,” IEEE Transactions on Automatic Control, vol. 53, no. 11, pp. 2480–2496, 2008.

- 10 R. Olfati-Saber, “Distributed Kalman filtering for sensor networks,” in 2007 46th IEEE Conference on Decision and Control, (New Orleans, LA, USA), pp. 5492–5498, IEEE, Dec. 2007.

- 11 B. Chen, W. A. Zhang, and L. Yu, “Distributed finite-horizon fusion Kalman filtering for bandwidth and energy constrained wireless sensor networks,” IEEE Transactions on Signal Processing, vol. 62, no. 4, pp. 797–812, 2014.

- 12 W. A. Zhang and L. Shi, “Sequential fusion estimation for clustered sensor networks,” Automatica, vol. 89, pp. 358–363, 2018.

- 13 C. Sanders, E. Tacker, T. Linton, and R. Ling, “Specific structures for large-scale state estimation algorithms having information exchange,” IEEE Transactions on Automatic Control, vol. 23, no. 2, pp. 255–261, 1978.

- 14 A. Haber and M. Verhaegen, “Moving horizon estimation for large-scale interconnected systems,” IEEE Transactions on Automatic Control, vol. 58, no. 11, pp. 2834–2847, 2013.

- 15 U. A. Khan, “Distributing the Kalman filter for large-scale systems,” IEEE Transactions on Signal Processing, vol. 56, no. 10, pp. 4919–4935, 2008.

- 16 S. S. Stanković, M. S. Stanković, and D. M. Stipanović, “Consensus based overlapping decentralized estimation with missing observations and communication faults,” Automatica, vol. 45, no. 6, pp. 1397–1406, 2009.

- 17 B. Chen, G. Hu, D. W. C. Ho, and L. Yu, “Distributed Kalman filtering for time-varying discrete sequential systems,” Automatica, vol. 99, pp. 228–236, 2019.

- 18 B. Chen, G. Hu, D. W. C. Ho, and L. Yu, “Distributed estimation for discrete-time interconnected systems,” in 2019 Chinese Control Conference (CCC), (Guangzhou, China), pp. 3708–3714, IEEE, July 2019.

- 19 B. Chen, G. Hu, D. W. C. Ho, and L. Yu, “Distributed estimation and control for discrete time-varying interconnected systems,” IEEE Transactions on Automatic Control, 2021. doi: 10.1109/TAC.2021.3075198.

- 20 Y. Zhang, B. Chen, L. Yu, and D. W. C. Ho, “Distributed Kalman filtering for interconnected dynamic systems,” IEEE Transactions on Cybernetics, 2021. doi: 10.1109/TCYB.2021.3072198.

- 21 M. Farina, G. Ferrari-Trecate, and R. Scattolini, “Moving horizon partition-based state estimation of large-scale systems,” Automatica, vol. 46, no. 5, pp. 910–918, 2010.

- 22 S. Riverso, M. Farina, R. Scattolini, and G. Ferrari-Trecate, “Plug-and-play distributed state estimation for linear systems,” in 52nd IEEE Conference on Decision and Control, (Firenze), pp. 4889–4894, IEEE, 2013.

- 23 S. Riverso, D. Rubini, and G. Ferrari-Trecate, “Distributed bounded-error state estimation based on practical robust positive invariance,” International Journal of Control, vol. 88, no. 11, pp. 2277–2290, 2015.

- 24 N. Sandell, P. Varaiya, M. Athans, and M. Safonov, “Survey of decentralized control methods for large scale systems,” IEEE Transactions on Automatic Control, vol. 23, no. 2, pp. 108–128, 1978.

- 25 A. N. Michel, “On the status of stability of interconnected systems,” IEEE Transactions on Systems, Man, and Cybernetics, vol. 13, no. 4, pp. 439–453, 1983.

- 26 H. Ito, “A geometrical formulation to unify construction of Lyapunov functions for interconnected iISS systems,” Annual Reviews in Control, vol. 48, pp. 195–208, 2019.

- 27 A. N. Michel, “Stability analysis of interconnected systems,” SIAM Journal on Control, vol. 12, no. 3, pp. 554–579, 1974.

- 28 W. M. Haddad and S. G. Nersesov, Stability and Control of Large-Scale Dynamical Systems: A Vector Dissipative Systems Approach. Princeton: Princeton University Press, 2011.

- 29 S. N. Dashkovskiy, B. S. Rüffer, and F. R. Wirth, “Small gain theorems for large scale systems and construction of ISS Lyapunov functions,” SIAM Journal on Control and Optimization, vol. 48, no. 6, pp. 4089–4118, 2010.

- 30 H. Ito, “State-dependent scaling problems and stability of interconnected iISS and ISS systems,” IEEE Transactions on Automatic Control, vol. 51, no. 10, pp. 1626–1643, 2006.

- 31 P. Moylan and D. Hill, “Stability criteria for large-scale systems,” IEEE Transactions on Automatic Control, vol. 23, no. 2, pp. 143–149, 1978.

- 32 M. Vidyasagar, ed., Input-output analysis of large-scale interconnected systems. Decomposition, well-posedness and stability. Berlin/Heidelberg: Springer-Verlag, 1981.

- 33 E. Agarwal, S. Sivaranjani, V. Gupta, and P. J. Antsaklis, “Distributed synthesis of local controllers for networked systems with arbitrary interconnection topologies,” IEEE Transactions on Automatic Control, vol. 66, no. 2, pp. 683–698, 2021.

- 34 A. A. Alam, A. Gattami, and K. H. Johansson, “An experimental study on the fuel reduction potential of heavy duty vehicle platooning,” in 13th International IEEE Conference on Intelligent Transportation Systems, pp. 306–311, Sept. 2010.

- 35 J. Yang, W. A. Zhang, and F. Guo, “Dynamic state estimation for power networks by distributed unscented information filter,” IEEE Transactions on Smart Grid, vol. 11, no. 3, pp. 2162–2171, 2020.

- 36 S. Boyd, L. El Ghaoui, E. Feron, and V. Balakrishnan, eds., Linear Matrix Inequalities in System and Control Theory. Philadelphia, PA, USA: Society for Industrial and Applied Mathematics, 1994.