Distributed Learning for Principle Eigenspaces without Moment Constraints

Distributed Principal Component Analysis (PCA) has been studied to deal with the case when data are stored across multiple machines and communication cost or privacy concerns prohibit the computation of PCA in a central location. However, the sub-Gaussian assumption in the related literature is restrictive in real application where outliers or heavy-tailed data are common in areas such as finance and macroeconomic. In this article, we propose a distributed algorithm for estimating the principle eigenspaces without any moment constraint on the underlying distribution. We study the problem under the elliptical family framework and adopt the sample multivariate Kendall’tau matrix to extract eigenspace estimators from all sub-machines, which can be viewed as points in the Grassman manifold. We then find the “center” of these points as the final distributed estimator of the principal eigenspace. We investigate the bias and variance for the distributed estimator and derive its convergence rate which depends on the effective rank and eigengap of the scatter matrix, and the number of submachines. We show that the distributed estimator performs as if we have full access of whole data. Simulation studies show that the distributed algorithm performs comparably with the existing one for light-tailed data, while showing great advantage for heavy-tailed data. We also extend our algorithm to the distributed learning of elliptical factor models and verify its empirical usefulness through real application to a macroeconomic dataset.

Keyword: Elliptical distribution; Distributed learning; Grassman manifold; Spatial Kendall’s tau matrix; Principle Eigenspaces.

1 Introduction

Principal component analysis (PCA) is one of the most important statistical tool for dimension reduction, which extracts latent principal factors that contribute to the most variation of the data. For the classical setting with fixed dimension, the consistency and asymptotic normality of empirical principal components have been raised since Anderson (1963). In the last decade, high-dimensional PCA gradually attracted the attention of statisticians, see for example Onatski (2012), Wang & Fan (2017), Kong et al. (2021), Bao et al. (2022). The existing work on high-dimensional PCA typically assume the Gaussian/sub-Gaussian tail property of the underlying distribution, which is really an idealization of the complex random real world. Heavy-tailed data are common in research fields such as financial engineering and biomedical imaging (Jing et al., 2012; Kong et al., 2015; Fan et al., 2018; Li et al., 2022). Thus it is desperately needed to find a robust way to do principal component analysis for heavy-tailed data. Elliptical family provides one a proper way to capture the different tail behaviour of various common distributions such as Gaussian and -distribution, and has been widely studied in various high-dimensional statistical problems, see for example, Han & Liu (2014); Yu et al. (2019); Hu et al. (2019); Chen et al. (2021). Han & Liu (2018) presented a robust alternative to PCA, called Elliptical Component Analysis (ECA), for analyzing high dimensional, elliptically distributed data, in which multivariate Kendall’s tau matrix plays a central role. In essence, for elliptical distributions, the eigenspace of the population Kendall’s tau matrix coincides with that of the scatter matrix The multivariate Kendall’s tau is first introduced in Choi & Marden (1998) for testing dependence, and is later adopted for estimating covariance matrix and principal components (Taskinen et al., 2012). Properties of ECA in low dimension were considered by Hallin et al. (2010), Hallin et al. (2014), and some recent works related to high-dimensional ECA include but not limited to Feng & Liu (2017); Chen et al. (2021); He et al. (2022). Noticeably, He et al. (2022) extended ECA to factor analysis under the framework of elliptical factor model.

With rapid developments of information and technology, the modern datasets exhibit the characteristic of extremely large-scale and we are now embracing the big-data era. Efficient statistical inference algorithms on such enormous dataset is unprecedentedly desirable. Distributed computing provides an effective way to deal with large-scale datasets. In addition to computation efficiency, distributed computing is also relatively robust to possible failures in the subsevers. There are lots of other reasons for establishing rigorous statistical theories on distributed computing, such as privacy protection, data ownerships and limitation of data storage. Two patterns of data segmentation were considered in the literature over the past few years, which are horizontal and vertical. “Horizontal” reserves all the features in each subserver, while data are scattered to different machines. Conversely, “Vertical” means that features are divided into several parts, each storage has full access of data but part of the features, which is common in signal processing and sensor networks. Some representative works include but not limited to Qu et al. (2002), Zhang et al. (2012), Fan et al. (2019) and Fan et al. (2021). In particular, Fan et al. (2019) proposed a distributed PCA algorithm: first each submachine computes the top eigenvectors and transmits them to the central machine; then the central machine aggregates the information from all the submachines and conducts a PCA based on the aggregated information.

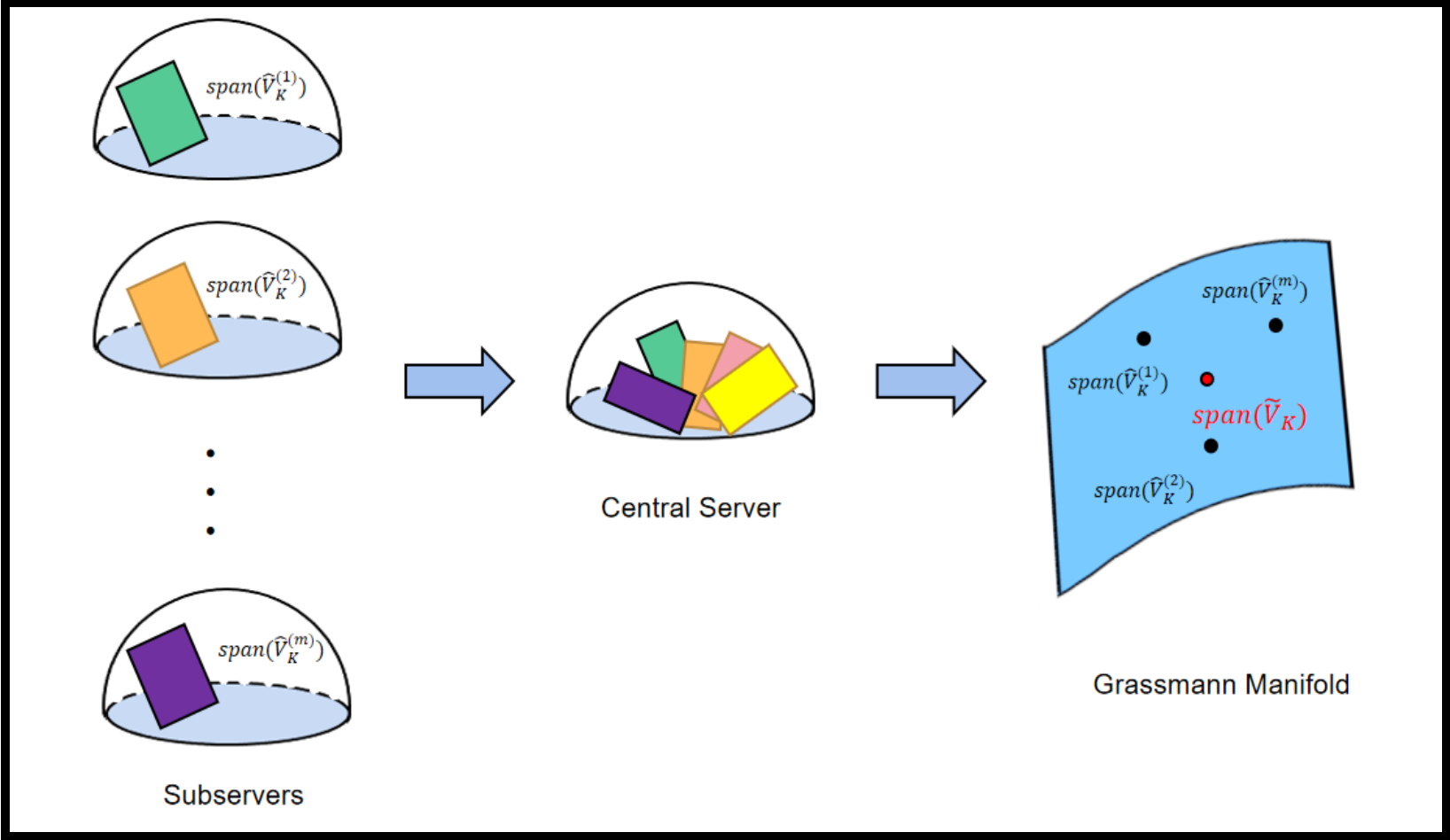

In the current work, we consider a robust alternative to the existing distributed PCA algorithm. We adopt the horizontal division and propose a distributed algorithm for estimating the principle eigenspaces without any moment constraint on the underlying distribution. In detail, assume that we have subservers under the elliptical family framework, we first extract the leading orthonormal eigenvectors (as columns of ) of the local sample multivariate Kendall’s tau matrix with observations on the -th subserver, for . We next transport these eigenspace estimators to the central server, where denotes the linear space spanned by the columns of . In essence, the transported local eigenspace estimators can be viewed as points (representatives of equivalent class) in the Grassmann manifold, see Figure 1 for better illustration. We then find the “center” ( the red point in Figure 1) of these points by minimizing the projection metrics on Grassmann manifolds in the central server, which is analogous to the physical notion of barycenter. Mathematically, the “center” satisfies

and it can be shown that is exactly the leading eigenvectors of the average projection matrix .

The contributions of the current work include the following aspects: firstly we for the first time propose a robust alternative to distributed principal PCA. The proposed distributed algorithm is easy to implement and avoids huge transportation costs between central processor and subservers; secondly we investigate the bias and variance for the robust distributed estimator and derive its convergence rate which depends on the effective rank of the scatter matrix, eigengap, and the number of submachines. We show that the distributed estimator performs as if we have full access of whole data; thirdly, we extend the distributed algorithm to the elliptical factor model in a horizontal partition regime, which is the first distributed algorithm for robust factor analysis as far as we know.

The rest of this paper is organized as follows. Section 2 includes some notations and preliminary results on elliptical family and multivariate Kendall’tau matrix. In Section 3, we present the details on our robust distributed algorithm for estimating principal eigenspace. In Section 4, we investigate the theoretical properties of the robust distributed estimator. In Section 5, we extend the distributed algorithm to the distributed elliptical factor analysis. Simulation results are given in Section 6. We also applied the distributed algorithm to analyze a large-scale real macroeconomic dataset. Further discussions and future work direction are left in Section 7.

2 Notations and Preliminaries

We first introduce some notations used throughout the article. Vectors and matrices will be written in bold symbol, scalars are written by regular letters. For two sequences , if there is a universal constant , such that , for which we also adopt another notation , while means , as . Index set is simply denoted by . For vectors, we define to be the unit vector with 1 in the -th component, is the -norm. For random variable , Orlicz norm is defined as . If random vectors share the same distribution, we write . For matrix , its transpose is ; is the -th largest eigenvalue of ; is the spectral norm and is the Frobenius norm. Given a fixed , the eigen gap of is , and is the effective rank of . The space spanned by the columns of is denoted by . For two matrices with orthogonal columns (), the spatial distance between and is .

Elliptical Distribution and Spatial Kendall’s Tau Matrix

Elliptical family is a large distribution family containing common distributions such as Gaussian and -distribution, which is widely used for modeling heavy-tailed data in finance and macroeconomics. To begin with, we recall its definition and some of its nice properties. For further details on the elliptical distribution, see for example Hult & Lindskog (2002).

In the following we give two equivalent definitions, one by its characteristic function, the other by its stochastic representation. A random vector belongs to the elliptical family, denoted by , if its characteristic function has the form:

where is a proper function defined on , is the location parameter, is called scatter matrix with . Equivalently,

where is a random vector uniformly distributed on the unit sphere in , is a scalar random variable indepent of , is a deterministic matrix satisfying . Thus we can also denote as and and are mutually determined. Elliptically distributed random vectors have the same nice properties as Gaussian random vectors, such as linear combination of elliptically distributed random vectors also follows elliptical distribution and their marginal distributions are also elliptical.

For Gaussian distribution, scatter matrix is the covariance matrix up to a constant. For non-Gaussian distribution, even with infinite variance (such as Cauchy distribution), scatter matrix still measures the dispersion of a random vector. To estimate the principal eigenspace of elliptically distributed data, simply performing PCA to the sample covariance matrix is not satisfactory due to the possible inexistence of the population covariance matrix. To address the problem, we turn to a tool, the spatial Kendall’s tau matrix. For and its independent copy , the population spatial Kendall’s tau matrix of is defined as:

The spatial Kendall’s tau matrix was first introduced in Choi & Marden (1998) and has been used for a lot of statistical problems such as covariance matrix estimation and principal eigenspace estimation, see Visuri et al. (2000), Fan et al. (2018) and Han & Liu (2018). A critical property of spatial Kendall’s tau matrix is that for elliptical vector , it shares the same eigenvectors of scatter matrix with the same ordering of eigenvalues, see Han & Liu (2018) for detailed proof. Suppose are independent data points from , the sample spatial Kendall’s tau matrix is naturally defined as a U-statistic:

3 Distributed PCA without Moment Constraint

In this section, a new distributed algorithm for estimating principal eigenspace robustly is introduced in detail, which generalizes the distributed PCA by Fan et al. (2019) to the elliptical family setting.

Suppose there are samples of in total, and we are interested in recovering the eigenspace spanned by the leading eigenvectors of the scatter matrix . The samples spread across machines, and on the -th machine, there are samples. For , let denote the samples stored on the -th machine. First calculate the local sample spatial Kendall’ tau matrix on the -th server as

We further compute the leading eigenvectors of sample Kendall’s tau matrix on the -th server and denote as the matrix with columns being these eigenvectors. We next transport these to the central server. The communication cost of the proposed distributed algorithm is of order . In contrast, to share all the data or entire spatial Kendall’tau matrix, the communication cost will be of order . In most cases , the distributed algorithm requires much less communication cost than naive data aggregation.

The transported local estimator would span an eigenspace , which can be viewed as points in the Grassmann manifold, as illustrated in Figure 1. We then find the “center” of these points by minimizing the projection metrics on Grassmann manifolds in the central server, i.e,

Denote and , which are the projection matrices of and respectively. Then we have:

The first two terms on the right hand side are fixed, so it’s equivalent to maximizing the last term, namely . Thus it’s clear that is the leading eigenvectors of the average projection matrix

At last we take as the final distributed estimator of the principal eigenspace. The detailed algorithm is summarized in Algorithm 1.

Fan et al. (2019) proposed a distributed PCA method in which they explain the aggregation procedure from the perspective of semidefinite programming (SDP) problem and minimize the sum of squared losses. In the current work, we provide a rationale of the aggregation procedure from the perspective of the Grassmann manifold, which is quite easy to understand. The viewpoint from the Grassmann manifold is similar to Li et al. (2022), where they proposed manifold PCA for matrix elliptical factor model but not from the perspective of distributed computation.

4 Theoretical properties

In this section, we investigate the theoretical property of the distributed estimator . We focus on the distance of the spaces spanned by the columns of and those of . We adopt the metric in Fan et al. (2019) to analyse the statistical error of as an estimator of . At the beginning, we give some assumptions for technical analysis.

Assumption 4.1.

Assume that , for a fixed , , the scatter matrix satisfies:

-

i)

There exists a universal positive constant such that the ratio of the maximal eigenvalue to minimal eigenvalue of the scatter matrix satisfies:

-

ii)

There is a relatively significant difference between the -th eigenvalue and the ()-th eigenvalue:

Assumption 4.1 imposes conditions on the eigengap and condition number of the scatter matrix, which is common in the high-dimensional PCA literature and is mild in the following sense. In terms of elliptical factor models in He et al. (2022), that’s , the scatter matrix of has the low rank plus sparsity structure , where is the scatter matrix of idiosyncratic errors , see also Section 5. Assume that there exists latent factors, the loading matrix satisfies the pervasive condition as , with being a positive definite matrix, and the eigenvalues of are bounded from below and above, then the scatter matrix would satisfy the conditions in Assumption 4.1.

In the following analysis, we decompose the error into two parts: the bias term and the variance term. In detail,

where is the leading eigenvectors of . In the following we present the upper bound of the variance term and the bias term in Theorem 4.1 and Theorem 4.2 respectively.

Theorem 4.1.

The measure of concentration is essential in high-dimensional statistical analysis. Fan et al. (2019) exerted the sub-Gaussian assumption to derive a form of concentration inequality. However, the proposed algorithm maps each eigenspace estimator to the Grassmann manifolds where concentration inequalities are easily derived due to the compactness therein, thus the results also hold even for Cauchy distribution without any moment. Next we give the upper bound of the bias term.

Theorem 4.2.

Under Assumption 4.1, further assume that . Then we have

By combining the results of the bias term and the variance term, we have the following corollary.

Corollary 4.1.

Under Assumption 4.1, further assume that . Then there exist positive constants such that

if the number of subservers satisfies for some constant , we have

By Corollary 4.1 and the theoretical result in Han & Liu (2018), we conclude that our algorithm is not only communication-efficient but also outputs an estimator which shares the same convergence rate as the ECA estimator with all samples available at one machine when is not larger than the bounds given in Corollary 4.1. This is also confirmed in the following simulation study.

5 Extension to Elliptical factor models

In this section, we assume that the data points has a elliptical factor model, i.e., ,

where are the latent factors, is the factor loading matrix and ’s are the idiosyncratic errors. Note that for the elliptical factor model, the loading space rather than is identifiable. We assume that random vectors are generated independently and identically from the elliptical distribution , where , see He et al. (2022) for more details on elliptic distribution. By the property of the elliptical family, the scatter matrix of has the form: . We assume that the loading matrix satisfies the pervasive condition as , where is a positive definite matrix, and the eigenvalues of are bounded from below and above. Then satisfies Assumption 4.1. Naturally, we use the space spanned by the leading eigenvectors of the sample Multivariate Kendall’s tau matrix as the estimator of the loading space . In the article, we assume that the observations spread across machines, and on the -th machine, there are observations. For , let denote the observations stored on the -th machine.

By the proposed distributed algorithm, we further propose a distributed procedure to estimate the factor loading space and the factor scores. The algorithm goes as follows: firstly for , compute the leading eigenvectors of the sample Kendall’s tau matrix on the -th server, and let ; then transport to the central processor; then perform spectral decomposition for the average of projection operators to get its leading eigenvectors , and let . At last is set as the final estimator of . If we are also interested in the factor scores on each server, then we simply transport back to each subserver, and solve a least squares problem to get the estimators of the factor scores. We summarized the procedure in Algorithm 2.

6 Simulation Studies and Real Example

6.1 Simulation Studies

In this section, we investigate the empirical performance of the proposed algorithm in terms of estimating loading space in factor models. In essence, our algorithm is a distributed Elliptical Component Analysis procedure, thus is briefly denoted as D-ECA. We compare ours with the distributed PCA (denoted as D-PCA) algorithm in Fan et al. (2019) as well as an oracle algorithm in which the full samples are available at one machine and elliptical component analysis (denoted as F-ECA) is performed.

We adopt a simplified data-generating scheme from the elliptical factor model, similar as that in He et al. (2022):

where and are jointly generated from elliptical distributions. We set and draw independently from the standard normal distribution. We use a fixed number of observations on each server, i.e., , but vary the number of servers and the dimensionality . The factors and the idiosyncratic errors are generated in the following ways: (i) are i.i.d. jointly elliptical random variables from multivariate Gaussian distributions ; (ii) are i.i.d. jointly elliptical random variables from multivariate centralized distributions with .

We compare the performance of the distributed algorithms by the distance between the estimated loading space and the real loading space. We adopt a distance between linear spaces also utilized in He et al. (2022) Precisely, for two matrices with orthonormal columns and , the distance between their column spaces is defined as:

The measure equals to 0 if and only if the column spaces of and are the same, and equals to 1 if and only if they are orthogonal. Indeed, we have .

| 20 | 5 | D-PCA | 0.034(0.006) | 0.080(0.019) | 0.126(0.034) | 0.259(0.066) |

| D-ECA | 0.035(0.006) | 0.038(0.006) | 0.040(0.007) | 0.042(0.007) | ||

| F-ECA | 0.034(0.005) | 0.038(0.006) | 0.039(0.006) | 0.041(0.007) | ||

| 10 | D-PCA | 0.024(0.005) | 0.057(0.013) | 0.092(0.022) | 0.169(0.031) | |

| D-ECA | 0.025(0.005) | 0.027(0.005) | 0.028(0.004) | 0.029(0.004) | ||

| F-ECA | 0.025(0.005) | 0.027(0.005) | 0.028(0.004) | 0.028(0.004) | ||

| 20 | D-PCA | 0.016(0.002) | 0.040(0.008) | 0.064(0.013) | 0.124(0.026) | |

| D-ECA | 0.017(0.002) | 0.019(0.008) | 0.019(0.003) | 0.020(0.004) | ||

| F-ECA | 0.017(0.002) | 0.019(0.008) | 0.019(0.003) | 0.020(0.004) | ||

| 50 | 5 | D-PCA | 0.032(0.003) | 0.076(0.014) | 0.126(0.026) | 0.247(0.038) |

| D-ECA | 0.033(0.003) | 0.037(0.003) | 0.037(0.003) | 0.040(0.003) | ||

| F-ECA | 0.033(0.003) | 0.036(0.003) | 0.037(0.003) | 0.040(0.003) | ||

| 10 | D-PCA | 0.023(0.002) | 0.054(0.008) | 0.085(0.012) | 0.167(0.023) | |

| D-ECA | 0.023(0.002) | 0.026(0.002) | 0.026(0.002) | 0.028(0.002) | ||

| F-ECA | 0.023(0.002) | 0.026(0.002) | 0.026(0.002) | 0.027(0.002) | ||

| 20 | D-PCA | 0.016(0.002) | 0.038(0.004) | 0.060(0.008) | 0.116(0.013) | |

| D-ECA | 0.017(0.002) | 0.018(0.002) | 0.019(0.002) | 0.020(0.002) | ||

| F-ECA | 0.017(0.002) | 0.018(0.002) | 0.019(0.002) | 0.020(0.002) | ||

| 100 | 5 | D-PCA | 0.032(0.002) | 0.077(0.014) | 0.123(0.020) | 0.240(0.031) |

| D-ECA | 0.033(0.002) | 0.036(0.002) | 0.037(0.002) | 0.039(0.002) | ||

| F-ECA | 0.033(0.002) | 0.036(0.002) | 0.036(0.002) | 0.039(0.002) | ||

| 10 | D-PCA | 0.023(0.001) | 0.054( 0.007) | 0.087(0.011) | 0.165(0.018) | |

| D-ECA | 0.023(0.001) | 0.026(0.001) | 0.026(0.001) | 0.028(0.002) | ||

| F-ECA | 0.023(0.001) | 0.025(0.001) | 0.026(0.001) | 0.028(0.002) | ||

| 20 | D-PCA | 0.016(0.001) | 0.037(0.003) | 0.059(0.006) | 0.116(0.010) | |

| D-ECA | 0.017(0.001) | 0.018(0.001) | 0.019(0.001) | 0.020(0.001) | ||

| F-ECA | 0.016(0.001) | 0.018(0.001) | 0.018(0.001) | 0.019(0.001) | ||

The simulation results are presented in Table 1, from which we mainly draw the following essential conclusions. Firstly, it confirms that distributed ECA is a more robust method compared with the distributed PCA. As revealed by the results, our distributed ECA has a pretty good performance even when data is extremely heavy-tailed, while performs comparably with the D-PCA under Gaussian distribution. Secondly, the performance of distributed ECA is comparable with that of the F-DCA, which indicates that the distributed algorithm works as well in the case when we have all observations in one machine and coincides with our theoretical analysis. Thus the distributed algorithm enjoys high computation efficiency while barely loses any accuracy.

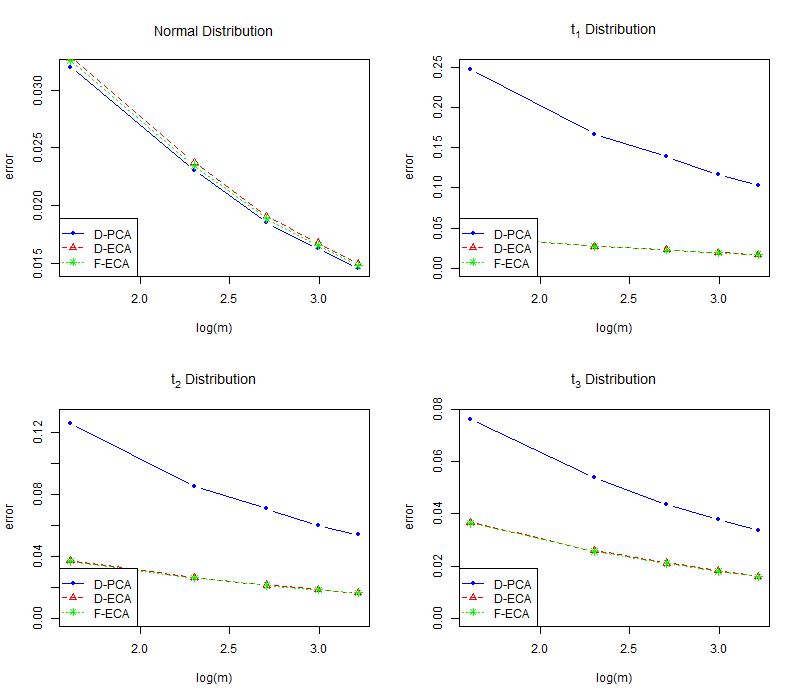

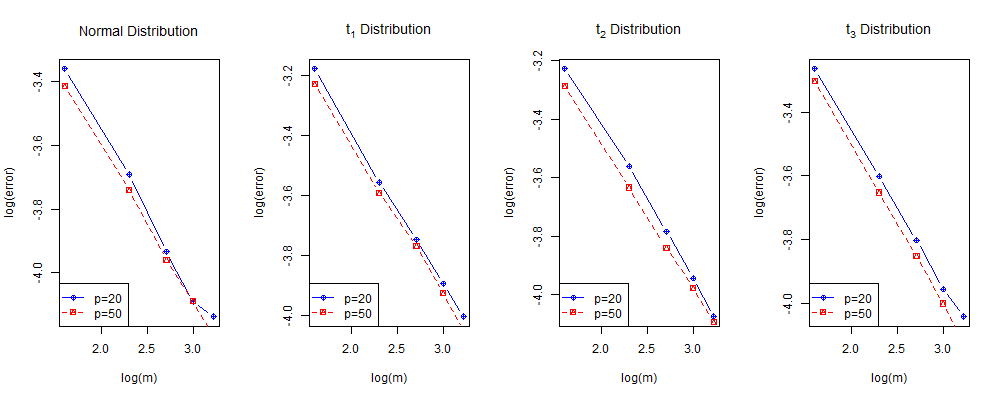

Figure 2 demonstrates the decay rate of the error as the number of servers increases when , where denotes the orthonormal output from the D-ECA . From Figure 2, we can see that the relationship between and is linear. Then we consider a regression model as follows:

The fitting result is that, for and for . As these experiments are conducted for fixed , the slope is consistent with the power of in the error bounds in Section 3, by noting that .

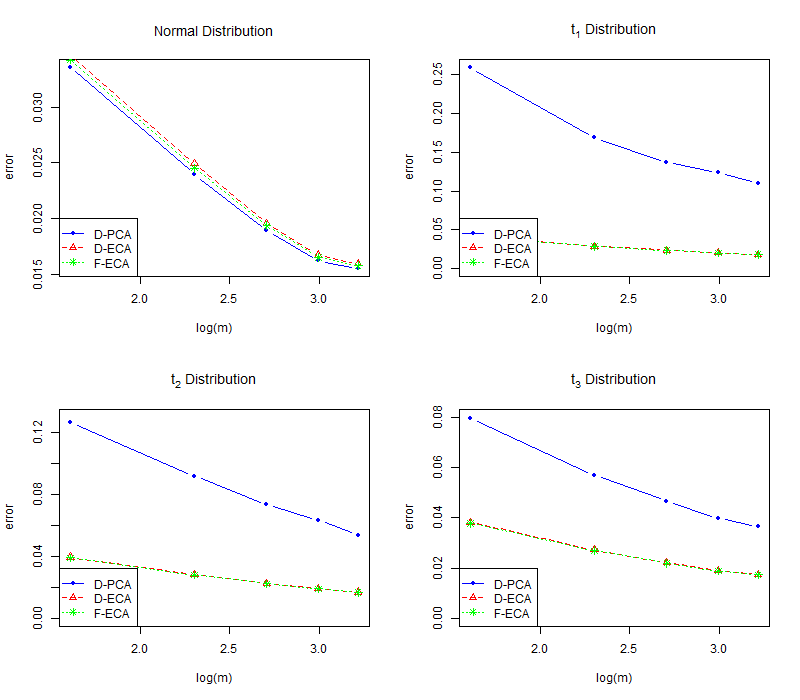

Figure 3 shows the errors of D-PCA, D-ECA and F-ECA with varying and fixed under different distributions. It is obvious from Figure 3 (see more simulation results in the supplement) that the error of D-PCA is much higher than that of D-ECA and F-ECA under the -distribution and the differences increase as the degrees of freedom declines, which again demonstrates that distributed ECA is a much more robust method than distributed PCA. Meanwhile, the errors of D-ECA and F-ECA are very close. Thus we confirm the conclusion that distributed ECA is a computation efficient method without losing much accuracy. In conclusion, when data are stored across different machines due to various kinds of reasons including communication cost, privacy, data security, our D-ECA can be regarded as an alternative to ECA based on full-samples. Also, it performs much more robust than distributed PCA in Fan et al. (2019).

6.2 Real example

We apply the distributed algorithm for elliptical factor model to an macroeconomic real dataset, i.e., U.S. weekly economic data which are available at Federal Reserve Economic Data (https://fred.stlouisfed.org/). Our dataset consists of 40 variables from October 2nd, 1996, to April 11th, 2018 . We divide the dataset into four equal subsets and store them on four servers . The series can roughly be classified into 5 groups: (1) Assets (series 1-3), (2) Loans (series 4-14), (3) Deposits and Securities, briefly denoted as DAS (series 15-26), (4) Liabilities (series 27-32), (5) Currency (series 33-40). By preliminary test, we find that more than two-thirds of the variables show the characteristics of heavy-tails, which indicates ECA is more suitable than PCA in analyzing the dataset.

We compare the forecasting performance of factors obtained by D-ECA, F-ECA, F-PCA, and D-PCA respectively. We adopt the rolling window prediction schemes which employs a fixed-length window of the most recent data (i.e., 10 weekly observations) to train the factor models and conduct out-of-sample forecasting. To measure the forecasting performance of the extracted factors, we establish the -step ahead prediction model:

We only regress on without considering the lagged variable of as we find that including the lagged variables in the model is less effective, which is consistent with the conclusion of Stock & Watson (2002).

| 1 | D-ECA/F-ECA | 0.98609 | 0.98390 | 1.00540 | 0.98852 | 1.00038 |

| D-ECA/F-PCA | 0.98727 | 0.98329 | 1.00378 | 0.98944 | 0.99921 | |

| F-PCA/F-ECA | 1.00089 | 1.00081 | 1.00366 | 1.00124 | 1.00333 | |

| 2 | D-ECA/F-ECA | 0.96390 | 0.98154 | 0.97463 | 0.97224 | 0.97600 |

| D-ECA/F-PCA | 0.96576 | 0.98136 | 0.97796 | 0.97125 | 0.97828 | |

| F-PCA/F-ECA | 0.99825 | 1.00355 | 0.98876 | 1.00359 | 0.99126 | |

| 3 | D-ECA/F-ECA | 0.97880 | 0.98323 | 1.00612 | 0.94963 | 1.00575 |

| D-ECA/F-PCA | 0.97804 | 0.98261 | 1.00620 | 0.94734 | 1.00565 | |

| F-PCA/F-ECA | 1.00277 | 1.00819 | 1.00747 | 1.00701 | 1.00720 | |

| 4 | D-ECA/F-ECA | 0.93355 | 0.96147 | 0.96971 | 0.95796 | 0.96653 |

| D-ECA/F-PCA | 0.93786 | 0.96351 | 0.94353 | 0.96158 | 0.96692 | |

| F-PCA/F-ECA | 0.99195 | 1.00563 | 0.99795 | 1.00233 | 0.99923 |

From Table 2, we see that the ahead prediction errors of D-ECA and F-ECA are similar. For 2-step ahead forecast and 4-step ahead forecast, the D-ECA prediction errors of these five groups are smaller than these of F-ECA. The prediction errors of D-ECA are not as good as those of F-ECA only for the 1-step ahead forecast and 3-step ahead forecast for DAS and Currency. On the other hand, whether distributed or full sample, the prediction errors of ECA is smaller than PCA. We also find that the F-ECA is better than the F-PCA in the 1-step ahead forecast and 3-step ahead forecast. The 2-step and 4-step prediction errors of Loans and Liabilities of F-ECA are better than those of F-PCA.

Overall, the factors extracted by the distributed algorithms tend to have higher forecasting performance than the algorithms with the full data. When it is extremely difficult to aggregate large datasets due to the issues of communication cost, privacy concern, data security, ownership and among many others, the distributed ECA algorithm provides a solution and may have higher forecasting performance than performing ECA/PCA to the whole data.

7 Discussion

We proposed a robust distributed algorithm to estimate principal eigenspace for high dimensional, elliptically distributed data, which are stored across multiple machines. The distributed algorithm reduce the transmission cost, and performs much better than distributed PCA when data are extremely heavy-tailed. The algorithm goes as follows: in the first step, we compute the leading eigenvectors of sample Kendall’s tau matrix on each server and then transport them to the central server; in the second step, we take an average of the projection operators and perform a spectral decomposition on it to get the leading eigenvectors, which spans the final estimated eigenspace. We derive the convergence rate of the robust distributed estimator. Numerical studies show that the proposed algorithm works comparably with the full sample ECA while performs better than distributed PCA when data are heavy-tailed. We also extend the algorithm to learn the elliptical factor model in a distributed manner, and the theoretical analysis is more challenging and we leave it as future work. It’s also interesting to study the “vertical” division for distributed robust PCA and elliptical factor model.

Acknowledgements

The authors gratefully acknowledge National Science Foundation of China (12171282, 11801316), National Statistical Scientific Research Key Project (2021LZ09), Young Scholars Program of Shandong University, Project funded by China Postdoctoral Science Foundation (2021M701997).

References

- (1)

- Anderson (1963) Anderson, T. W. (1963), ‘Asymptotic theory for principal component analysis’, The Annals of Mathematical Statistics 34(1), 122–148.

- Bao et al. (2022) Bao, Z., Ding, X., Wang, J. & Wang, K. (2022), ‘Statistical inference for principal components of spiked covariance matrices’, The Annals of Statistics 50(2), 1144–1169.

- Chen et al. (2021) Chen, X., Zhang, J. & Zhou, W. (2021), ‘High-dimensional elliptical sliced inverse regression in non-gaussian distributions’, Journal of Business & Economic Statistics 0(0), 1–12.

- Choi & Marden (1998) Choi, K. & Marden, J. (1998), ‘A multivariate version of kendall’s ’, Journal of Nonparametric Statistics 9(3), 261–293.

- Fan et al. (2021) Fan, J., Guo, Y. & Wang, K. (2021), ‘Communication-efficient accurate statistical estimation’, Journal of the American Statistical Association pp. 1–11.

- Fan et al. (2018) Fan, J., Liu, H. & Wang, W. (2018), ‘Large covariance estimation through elliptical factor models’, Annals of statistics 46(4), 1383.

- Fan et al. (2019) Fan, J., Wang, D., Wang, K. & Zhu, Z. (2019), ‘Distributed estimation of principal eigenspaces’, Annals of statistics 47(6), 3009.

- Feng & Liu (2017) Feng, L. & Liu, B. (2017), ‘High-dimensional rank tests for sphericity’, Journal of Multivariate Analysis 155, 217–233.

- Hallin et al. (2010) Hallin, M., Paindaveine, D. & Verdebout, T. (2010), ‘Optimal rank-based testing for principal components’, The Annals of Statistics 38(6), 3245–3299.

- Hallin et al. (2014) Hallin, M., Paindaveine, D. & Verdebout, T. (2014), ‘Efficient r-estimation of principal and common principal components’, Journal of the American Statistical Association 109(507), 1071–1083.

- Han & Liu (2014) Han, F. & Liu, H. (2014), ‘Scale-invariant sparse PCA on high-dimensional meta-elliptical data’, J. Amer. Statist. Assoc. 109(505), 275–287.

- Han & Liu (2018) Han, F. & Liu, H. (2018), ‘Eca: High-dimensional elliptical component analysis in non-gaussian distributions’, Journal of the American Statistical Association 113(521), 252–268.

- He et al. (2022) He, Y., Kong, X., Yu, L. & Zhang, X. (2022), ‘Large-dimensional factor analysis without moment constraints’, Journal of Business & Economic Statistics 40(1), 302–312.

- Hu et al. (2019) Hu, J., Li, W., Liu, Z. & Zhou, W. (2019), ‘High-dimensional covariance matrices in elliptical distributions with application to spherical test’, The Annals of Statistics 47(1), 527 – 555.

- Hult & Lindskog (2002) Hult, H. & Lindskog, F. (2002), ‘Multivariate extremes, aggregation and dependence in elliptical distributions’, Advances in Applied probability 34(3), 587–608.

- Jing et al. (2012) Jing, B.-Y., Kong, X.-B. & Liu, Z. (2012), ‘Modeling high-frequency financial data by pure jump processes’, The Annals of Statistics 40(2), 759–784.

- Kong et al. (2021) Kong, X.-B., Lin, J.-G., Liu, C. & Liu, G.-Y. (2021), ‘Discrepancy between global and local principal component analysis on large-panel high-frequency data’, Journal of the American Statistical Association 0(0), 1–12.

- Kong et al. (2015) Kong, X.-B., Liu, Z. & Jing, B.-Y. (2015), ‘Testing for pure-jump processes for high-frequency data’, The Annals of Statistics 43(2), 847–877.

- Li et al. (2022) Li, Z., He, Y., Kong, X. & Zhang, X. (2022), ‘Manifold principle component analysis for large-dimensional matrix elliptical factor model’, arXiv preprint arXiv:2203.14063 .

- Onatski (2012) Onatski, A. (2012), ‘Asymptotics of the principal components estimator of large factor models with weakly influential factors’, Journal of Econometrics 168(2), 244–258.

- Qu et al. (2002) Qu, Y., Ostrouchov, G., Samatova, N. & Geist, A. (2002), Principal component analysis for dimension reduction in massive distributed data sets, in ‘Proceedings of IEEE International Conference on Data Mining (ICDM)’, Vol. 1318, p. 1788.

- Stock & Watson (2002) Stock, J. H. & Watson, M. W. (2002), ‘Macroeconomic forecasting using diffusion indexes’, Journal of Business & Economic Statistics 20(2), 147–162.

- Taskinen et al. (2012) Taskinen, S., Koch, I. & Oja, H. (2012), ‘Robustifying principal component analysis with spatial sign vectors’, Statistics & Probability Letters 82(4), 765–774.

- Vershynin (2010) Vershynin, R. (2010), ‘Introduction to the non-asymptotic analysis of random matrices’, arXiv preprint arXiv:1011.3027 .

- Visuri et al. (2000) Visuri, S., Koivunen, V. & Oja, H. (2000), ‘Sign and rank covariance matrices’, Journal of Statistical Planning and Inference 91(2), 557–575.

- Wang & Fan (2017) Wang, W. & Fan, J. (2017), ‘Asymptotics of empirical eigenstructure for high dimensional spiked covariance’, Annals of Statistics 45, 1342–1374.

- Yu et al. (2019) Yu, L., He, Y. & Zhang, X. (2019), ‘Robust factor number specification for large-dimensional elliptical factor model’, Journal of Multivariate analysis 174, 104543.

- Yu et al. (2015) Yu, Y., Wang, T. & Samworth, R. J. (2015), ‘A useful variant of the davis–kahan theorem for statisticians’, Biometrika 102(2), 315–323.

- Zhang et al. (2012) Zhang, Y., Wainwright, M. J. & Duchi, J. C. (2012), ‘Communication-efficient algorithms for statistical optimization’, Advances in neural information processing systems 25.

Supplementary Materials for “Distributed Learning for Principle Eigenspaces without Moment Constraints”

Yong He 111Institute of Financial Studies, Shandong University, China; e-mail:heyong@sdu.edu.cn , Zichen Liu111Institute of Financial Studies, Shandong University, China; e-mail:heyong@sdu.edu.cn , Yalin Wang111Institute of Financial Studies, Shandong University, China; e-mail:heyong@sdu.edu.cn

This document provides detailed proofs of the main paper and additional simulation results. We start with some auxiliary lemmas in Section A and the proof of main theorems is provided in Section B. Section C provides the additional simulation results.

Appendix A Auxiliary Lemmas

Lemma A.1.

Let follows a continuous elliptical distribution, that is, with . K is the population multivariate Kendall’s tau matrix, its rank is assumed to be . Then:

where is a standard multivariate Gaussian distribution. In addition, K and share the same eigenspace with the same descending order of the eigenvalues.

This lemma is the foundation of principal component analysis for elliptically distributed data, and the proof can be found in Han & Liu (2018).

Lemma A.2.

Under Assumption 3.1 in the main paper, there exist a positive constant and a large , for :

Proof.

First, we claim that when ,

It is obviously true by:

By Theorem 3.5 in Han & Liu (2018), for large ,

According to the claim above, we know that as . Also,

By the assumption on ratio of eigenvalues, we have:

where the last inequality holds because:

Consequently, there exists a large , such that when :

∎

Lemma A.3.

When , we have:

Proof.

Since , we have:

By Weyl theorem,

Similarly,

Hence,

∎

Lemma A.4.

Let : be a matrix value kernel function. Let be independent observations of an random variable . Suppose that, for any , exists and there exist two constants such that

We then have

The proof of this lemma can be found in Han & Liu (2018).

Lemma A.5.

are samples from some elliptical distribution. Population and sample Kendall’s tau matrix are denoted by K and , respectively. If , we have:

Proof.

It is easily seen that . Since K and are positive semidefinite matrices, we have , and thus .

Note that kernel function of Kendall’s tau matrix is:

By some simple algebra,

According to Lemma A.4, let , then

We need to find a function with respect to and , which satisfies and

| (A.1) |

It is equivalent to:

It is easy to see that the right term is an increasing function of , so the last inequality above is equivalent to:

Let , it is easily to verify that it satisfies (A.1). Therefore,

By (5.14) in Vershynin (2010), we have

∎

Appendix B Proof of the Main Theorems

Proof of Theorem 4.1

Proof.

According to Davis-Kahan theorem in Yu et al. (2015), we have:

Note that is a bounded random variable, thus it is sub-Gaussian, naturally with finite Orlizc norm. Hence according to Lemma 4 in Fan et al. (2019),

In fact, we have

where the second inequality is by Jensen’s inequality. Combining

and the generalized Davis-Kahan theorem in Yu et al. (2015), which is

we have

Hence, combining all of the equations above and Lemma A.5, we have

| (B.1) |

According to Lemma A.2 and equation (B.1), it is not hard to show that

∎

Proof of Theorem 4.2

Appendix C Additional Simulation results

Figure 4 show the errors of D-PCA, D-ECA and F-ECA with varying and and fixed under different distributions. It is obvious from the Figure 4 that the error of D-PCA is much higher than that of D-ECA and F-ECA under the -distribution and the differences increase as the degrees of freedom declines, which again proves distributed ECA is a much more robust method than distributed PCA. Meanwhile, the errors of D-ECA and F-ECA are very close. Thus we confirm the conclusion that distributed ECA is a computation efficient method without losing much accuracy. In conclusion, when data are stored across different machines due to various kinds of reasons including communication cost, privacy, data security, our D-ECA can be regarded as an alternative to ECA based on full-samples.