Distributionally Robust Optimal and Safe Control of Stochastic Systems via Kernel Conditional Mean Embedding [extended version]

Abstract

We present a novel distributionally robust framework for dynamic programming that uses kernel methods to design feedback control policies. Specifically, we leverage kernel mean embedding to map the transition probabilities governing the state evolution into an associated repreducing kernel Hilbert space. Our key idea lies in combining conditional mean embedding with the maximum mean discrepancy distance to construct an ambiguity set, and then design a robust control policy using techniques from distributionally robust optimization. The main theoretical contribution of this paper is to leverage functional analytic tools to prove that optimal policies for this infinite-dimensional min-max problem are Markovian and deterministic. Additionally, we discuss approximation schemes based on state and input discretization to make the approach computationally tractable. To validate the theoretical findings, we conduct an experiment on safe control for thermostatically controlled loads (TCL).

I Introduction

We focus on discrete-time stochastic control problems, where states evolve according to an underlying stochastic transition kernel. The main objective is to design a feedback control policy that either minimizes an objective function or that maximizes the probability of satisfying temporal properties, such as safety or reach-avoid specifications [1, 2].

Drawing inspirations from recent advancements in the data-driven control literature [3, 4], which advocates for techniques that enable the design of feedback controllers based on available data, we introduce a novel design approach based on dynamic programming that leverages available trajectories of the system. To address sampling errors resulting from finite data, we employ techniques from “distributionally robust” optimization and control [5, 6, 7, 8], which have gained significant attention in the community. These techniques induce robustness in the space of probability measures through the creation of the so-called ambiguity sets. In other words, distributionally robust techniques compute the worst-case expected value of a function, when the underlying measure belongs to such an ambiguity set. In this paper, we relate these ideas to the class of min-max control problems initially investigated in the seminal work [9] and further explored in [10].

A key feature of our approach is the use of kernel methods [11, 12], specifically conditional mean embedding and Maximum Mean Discrepancy (MMD) [13]. These methods are employed to embed probability distributions into the associated reproducing kernel Hilbert space (RKHS) and measure distance between two probabilities distributions by using MMD. We create an ambiguity set represented as a ball in the space of probability measures, while the mean embedding provides an estimate of the center of the generated ambiguity set. This allows us to reason about uncertainty and ensure robustness in the decision-making process.

The construction of a min-max control problem through the ambiguity set formulation is relevant, especially in data-limited scenarios where the empirical estimate may not be rich enough to approximate the true conditional mean embedding. While conditional mean embedding and its empirical estimate have been applied in the context of Bayesian inference [14], dynamical systems [15] and more recently for reachability analysis [16, 17], we are not aware of any work that leverages this framework for control synthesis using distributionally robust dynamic programming. In fact, distributionally robust optimization (DRO) with ambiguity sets defined via MMD and kernel mean embedding has only been recently studied [12], where the authors established the strong duality result for this class of problems. Notably, kernel DRO problems has not received much attention, with [18] being the sole exception.

Before summarizing our main contributions, we highlight the widespread use of kernel methods, MMD, and kernel mean embedding within the machine learning and system identification literature [19, 13, 20, 11]. For instance, Muandet et al. [11] express the expectation of any function of the subsequent state as an inner product between such a function and the conditional mean embedding. When the transition probability is unknown, and we have access to state-input trajectories, the empirical estimate of the conditional mean embedding has been used to approximate the inner product computation [16, 21].

Moreover, distributionally robust dynamic programming has been studied in several recent contributions [22, 23, 24, 25], where ambiguity sets are defined in an exogenous manner, independent of the current state and action. While this is a reasonable assumption when the state evolution is uncertain in a parametric manner (e.g., the state transition being governed by known dynamics affected by a parametric uncertainty or additive disturbance), the more general case of state evolution given by a stochastic transition kernel requires defining the stochastic state evolution and its associated ambiguity set as a function of the current state and chosen action. This class of ambiguity sets is referred to as decision-dependent ambiguity sets and often poses challenges for tractable reformulations [26, 27]. Our main contributions are summarized as: (1) We introduce a novel framework that provides a solution to discrete-time stochastic control problems based on available system trajectories. The key objective is to derive robust control policies against sample errors due to finite data, achieved through the construction of ambiguity sets; (2) We simultaneously employ kernel mean embedding and MMD distance in space of probability distributions, enabling the formulation and solution to a distributionally robust dynamic programming; (3) We leverage certain functional analytic tools and an existing result in the literature to demonstrate that the set of optimal policies obtained through our formulation can be chosen to be Markovian and deterministic.

II Preliminaries

II-A Reproducing kernel Hilbert spaces (RKHS) and kernel mean embeddings

Let be a measurable space, where is an abstract set and represents a -algebra on (please refer to Chapter 1 in [28] for more details). Consider a measurable function , called a kernel, that satisfies the following properties.

-

•

Boundedness: For any , we have that .

-

•

Symmetry: For any , we have ;

-

•

Positive semidefinite (PSD): For any finite collection of points , where for all , and for any vector , we have that

In other words, the Gram matrix whose -th entry is given by is a positive semidefinite matrix for any choice of points .

A kernel function satisfying the above three properties is called a positive semidefinite kernel.

Two consequences are in place with the presence of a positive semidefinite kernel. First, every semipositive definite kernel is associated with a reproducing kernel Hilbert space (RKHS) , which is defined as

| (1) |

defined as the closure of all possible finite dimensional subspaces induced by the kernel . The space is equipped with the inner product . We may notice that the boundedness, symmetry and PSD properties above ensure that such an inner product is well-defined; hence, equipped with this inner product is a Hilbert space (see [29] for more details). By definition, for any function there exist a sequence of integers and a sequence of functions such that , which then implies that

thus justifying the reproducing kernel property of the Hilbert space . Alternatively, by the Riesz representation theorem (Theorem 4.11 in [29]), any Hilbert space with the reproducing kernel property can be identified with the RKHS of a PSD kernel. The interested reader is referred to [13] and references therein for more details. Second, there exists a feature map with the property . The canonical feature map is given by , and we use the notation .

We now introduce the notion of kernel mean embedding of probability measures [13, 11]. Let be the set of probability measures on , and be a random variable defined on with distribution . The kernel mean embedding is a mapping defined as

| (2) |

We have the following result from [13, 11] on the reproducing property of the expectation operator in the RKHS.

Lemma 1 (Lemma 3.1 [11]).

If , then and .

Lemma 1 implies that is indeed an element of the RKHS , and that the expectation of any function of the random variable can be computed by means of an inner product between the corresponding function and the kernel mean embedding. Let be a collection of independent samples from the distribution and the consider the random variable as

| (3) |

In other words, is the mean embedding of the empirical distribution induced by the samples.

II-B Kernel-based ambiguity sets

There are several ways to introduce a metric in the space of probability measures (see [30] for more details). In the context of RKHS and the kernel mean embedding introduced in the previous section, a common metric is a type of integral probability metric [13] called the Maximum mean discrepancy (MMD), which is defined as

| (4) |

In this work, we consider data-driven MMD ambiguity sets induced by observed samples defined as

| (5) |

where is the empirical distribution defined earlier. Thus, contains all distributions whose kernel mean embedding is within distance of the kernel mean embedding of the empirical distribution. The above ambiguity set also enjoys a sharp uniform convergence guarantees of as shown in [31]. Details are omitted for brevity.

II-C RKHS embedding of conditional distributions

We now consider random variables of the form taking values over the space . Let be the RKHS of real valued functions defined on with positive definite kernel and feature map . For any distribution , we define the bilinear covariance operator [32] as

| (6) |

For each fixed , we define a cross-covariance operator as the unique operator, which is well-defined due to Riesz representation theorem, such that, for all , we have

Similarly, we define the operator , and one may notice that , where is the adjoint operator of . When , the analogous operator is called the covariance operator. Of interest to our approach is the conditional mean embedding of the conditional distributions.

For two measurable spaces and , where and are associated -algebras, a stochastic kernel is a measurable mapping from to the set of probability measures equipped with the topology of weak convergence. Please refer to [33], Chapter 7, for a more detailed definition.

Definition 1 (Definition 4.1 [11]).

Given a stochastic kernel , its conditional mean embedding is a mapping such that, for all , the following property holds

| (7) |

that is, the inner product of the conditional mean embedding with a function in coincides with the conditional expectation of under the stochastic kernel .

A crucial result to the success of conditional mean embedding in machine learning applications is the relation proved in [32, 34] between the mappings in Definition 1 and the covariance and cross-covariance operators and as shown in the sequel.

Proposition 1 (Corollary 3, [34]).

The importance of Proposition 1 lies in the fact that it allows us to estimate the mapping by means of available data. In fact, in many applications, the joint or conditional distributions involving and are not known, rather we have access to i.i.d. samples drawn from the joint distribution induced by an underlying stochastic kernel (namely, the system dynamics, as discussed in the next section).

Remark 1.

The main step of the proof of Proposition 1 consists in showing that, for all , the relation holds. Details are omitted for brevity.

Let be the Gram matrix associated with , that is, the matrix whose -th entry given by . The empirical estimate of the conditional mean embedding was derived in [35] and is stated below.

Theorem 1 (Theorem 4.2 [11]).

An empirical estimate of the conditional mean embedding is given by

| (8) |

where with , being the identity matrix of dimension and being a regularization parameter.

III Kernel Distributionally Robust Optimal Control

We now connect the abstract mathematical framework introduced in the previous sections in the context of optimal control problems. Let be a probability measure space, where ; we use such measure-theoretic framework to formalize statements about the stochastic process in the sequel. Consider the discrete-time stochastic system given by

| (9) |

where and denote the state and control input at time , with being the set of admissible control inputs at time , and denotes the initial state. Observe that we denote the system dynamics by a stochastic kernel defined over the state-input pair, which describes a probability distribution over the next state. It is a standard machinery to assign the semantics for the dynamical system in (9) in such a way that it defines a unique probability measure in the space of sequences with value in using the Kolmogorov extension theorem. See [38] for more details.

We define a sequence of history-dependant (or non-Markovian) control policies , which maps a sequence of state-input pair into a probability measure with support in the space of admissible inputs . The collection of admissible control policies over a horizon of length is given by the set

composed by the set control policies with support on the admissible input set associated with the current system state. In case the control policy depends only on the current state, i.e., for all that agree on the last entry, then we call such a policy a Markovian policy.

Inspired by a growing interest in data-driven control methods [16, 21, 3], our objective is to design an admissible control policy that minimizes a performance index with respect to the generated trajectories of the system dynamics given in (9) by relying on available data set , composed by a sequence of state-input-next-state tuple. To account for the sampling error due to the finite dataset, we leverage techniques from distributionally robust optimization [7, 6, 5, 23] by creating a state-dependant ambiguity set around the estimate of the system dynamics, which is obtained using the mathematical framework introduced in Section II.

Formally, and referring to the notation used in Section II, let us identify the space with the cartesian product and with the state space of the dynamics in (9). Let and be the corresponding kernels that induce, respectively, the RKHS and . Let be the stochastic kernel associated with the state-transition matrix, and and be the conditional mean embeddings as in Definition 1 and Theorem 1, respectively; the latter obtained from the dataset .

A key novelty of our approach is the construction of an ambiguity set using the kernel mean embedding introduced in (2):

| (10) |

which is a collection of probability measures over whose mean embedding is close to the conditional mean embedding of the system dynamics. Notice the dependence of the current state-action pair through the conditional mean embedding. Similar to the control policy definition, we define a collection of admissible dynamics for a given time-horizon . Formally, for a given sequence of state-input pairs of size we define the set of admissible dynamics as

| (11) |

where denotes the ambiguity set in (10).

Our main goal is to solve a distributionally robust dynamic programming using the ingredients presented so far. To this end, let be the time-horizon, and consider the corresponding set of control policies and admissible dynamics . For any and , and for any initial state , where is the first entry of the admissible dynamics , we denote by the induced measure on the space of sequences in of length . For a given stage cost , we define the finite horizon expected cost as

| (12) |

where denotes the expectation operator with respect to . Our goal is to find a policy that solves the distributionally robust control problem given by

| (13) |

Problem (13) consists of a min-max infinite-dimensional problem, since the collection of control policy and admissible dynamics are infinite-dimensional spaces; and it has been studied in several related communities [5] under different lenses. This paper departs from related contributions within the control community [21, 16], showing that under technical conditions and leveraging compactness-related arguments that there exist optimal Markovian policies for the solution of (13). To this end, we consider the following technical conditions.

Assumption 1.

Let be a weakly compact set such that if and only if for some . The following conditions hold.

-

1.

The stage cost function in (12) is lower semicontinuous. Besides, there exists a constant and a continuous function such that

-

2.

For every bounded, continuous function , the functional , defined as is continuous on .

-

3.

There exists a constant such that for all .

-

4.

The set is compact for each . Furthermore, the set-valued mapping is upper semi-continuous.

Theorem 2.

Proof.

Our proof strategy is inspired by the analysis in [9]. Without loss of generality, we may assume that the cost function is bounded, that is, , (otherwise one could consider a weighted norm using the function appearing in item of Assumption 1 – see [9] for more details.) For each bounded function , we define the functionals and , and the mapping as

| (14) | |||

| (15) | |||

| (16) |

Specifically, (16) defines the distributionally robust dynamic programming (DP) operator under MMD ambiguity defined in (10), which is an infinite-dimensional optimization problem. Given any initial function , the value function of the distributionally robust control problem can be defined iteratively as

| (17) |

for . We are now ready to show that problem (13) admits a non-randomized, Markov policy which is optimal. To this end, we need to show (1) lower semi-continuity of the functional and (2) that the inner supremum in the definition of is achieved.

To show lower semi-continuity, we follow a similar approach as the proof of [9, Theorem 3.1] and [23, Theorem 1]. The primary challenge is to show that the functional used in (17) preserves the lower semi-continuity of the value function. We show this via induction. Let be lower semicontinuous. Following identical arguments as [9, Lemma 3.3], it can be shown that is lower semicontinuous on , provided one can show that the mapping is weakly111Due to the fact that we are dealing with some infinite-dimensional spaces, we rely on the topological notion of continuity. A function between two topological spaces (please refer to [39] for an introduction to these concepts) and , is continuous if for all we have that . The notions of weakly continuous, weakly compact, etc, are used due to the fact that we equip the infinite-dimensional spaces and with the topology. We refer the reader to [29], Chapter 4, for more details about these concepts. compact and lower semi-continuous (i.e., the condition analogous to [9, Assumption 3.1(g)]).

To show weak compactness of , let us first study properties of the set

where is the conditional mean embedding mapping of Definition 1 using the notation of Section III. Note that is a convex subset of the RKHS ; hence, by [29, Theorem 3.7], it is also weakly closed. Since Hilbert spaces are reflexive Banach spaces, then by Kakutani’s theorem (see [29, Theorem 3.17]) we show that the set is weakly compact. Now, observe that

where the mapping , defined in (2), is continuous due to boundedness and continuity of the kernel, thus weakly continuous. Since is also surjective222For any function there exists a sequence such that . Let and notice that , thus showing that is a surjective mapping., we have by the open mapping theorem ([29, Theorem 2.6]) that is also weakly compact.

For a fixed , we now show that the mapping is lower semicontinuous. We define the distance function from a distribution to a convex subset of as333This distance is only well-defined due to the weak compactness result shown above, as it would allow us to take convergent subsequences for any sequence achieving the infimum in this definition.

Let be given, with , , and . Thus,

Consider a sequence with and . From [40, Proposition 1.4.7], it follows that the lower semi-continuity of is equivalent to

To this end, we compute

from the definition of the ambiguity set. In the second last step, the second term goes to as due to the continuity of the kernel function and the definition of in Proposition 1. As a result, and thus, .

With the above properties of the mapping in hand, it can be shown following identical arguments as the proof of [9, Theorem 3.1] that both and are lower semicontinuous and there exists a function such that is the minimizer of (16) for . This concludes the proof.

∎

Remark 2.

Note that the ambiguity sets considered in related works on distributionally robust control [23, 22] do not depend on the current state and action, i.e., the mapping is independent of , and as a result, properties such as compactness and lower semi-continuity are easily shown. In contrast, the ambiguity set considered here is more general and directly captures the dependence of the transition kernel on the current state-action pair. In the DRO literature, such ambiguity sets are referred to as decision-dependent ambiguity sets [41, 27, 26], which are relatively challenging to handle, and less explored in the past.

By showing that there exist deterministic and Markovian policies in the optimal set of (13), Theorem 2 allows to reduce the search of general history-dependant policies to functions , which maps at each time instant a state to an admissible action. However, the result of Theorem 2 does not lead to a computationally tractable formulation towards solving problem (13). To this end, we now describe two approximation schemes that enable us to obtain a computational solution to (13). The first approximation is related to the fact that we are aiming towards a data-driven solution of (13); hence, the ambiguity set in (10) is replaced by its sample-based counterpart, where its center is defined according to the estimate of the conditional mean embedding mapping introduced in Proposition 1. That is, we consider instead the ambiguity set

| (18) |

Under this approximation and exploiting the rectangular structure of the admissible dynamics in (11), one may observe that the inner supremum in (10) is computed separately for each time instant and that if we replace by in the corresponding inner optimization we obtain, for any ,

| (19) |

where the last equality is a consequence of the reproducing property and

Following [12], the support of can then be computed as

where denote the coefficients of the empirical estimate of the conditional mean embedding as given in (8) evaluated at the state-action pair at the time instant , and where represents the collected sample444That is, for each , is a sample from . Within the machine learning literature, the transition kernel is usually referred to as a “generative model”. along the observed trajectories of the dynamics. To compute the norm , we solve, similar to Theorem 1, a regression problem given by

| (20) |

where are arbitrary points in the domain of the function . The solution of this regression problem is given by where

Variable is the Gram matrix associated with the available points, namely, it is the positive semidefinite matrix whose -entry is given by . Notice that the collection of points used to estimate may not necessarily coincide with those points used to estimate the conditional mean embedding in Theorem 1; we denote this in our notation by adding a prime as a superscript. Same comment applies for the regularizer appearing in (20) and in the expression of in Theorem 1.

We now discuss how to solve for optimal control inputs using a value iteration approach. To this end, we introduce our second approximation of the distributionally robust dynamic programming (13) by discretizing the action space. At a given state , we discretize the input space , denoting the resulting discrete set as . To define the value function, we modify slightly the set of admissible dynamics in (11) by fixing the initial distribution to a given point . In other words, we let555 The symbol , for some , represents the Diract measure, i.e., a probability measure that assigns unit mass to the point . for some . Then, for a given function , we define recursively the collection of functions , where , as , and

| (21) |

For a given , the inner supremum problem is an instance of (19) and can be computed using the techniques discussed in this section.

III-A Distributionally robust approach to forward invariance or safe control

While the discussion thus far has focused on the general optimal control problem, this approach can also be leveraged for synthesizing control inputs meeting safety specifications. Using the notation of previous sections, let be the time-horizon and be a measurable safe set. For an admissible control policy , admissible dynamics , and initial state , the probability of the state trajectory being safe is given by (more details can be found in [1])

| (22) |

where denote the solution of (9) under the dynamics and policy . Our goal is to solve the problem

| (23) |

using the mathematical framework proposed in this paper. In fact, one can show that an analogous result of Theorem 2 holds for (23), namely, there exists an optimal Markovian and deterministic policy. Hence, using similar approximations as in the previous section, function in (23) can be approximated recursively as (see [1] for more details)

| (24) |

for , where is the indicator function of the safe set . In the numerical example reported in the following section, we compute the safe control inputs by solving the inner problem in an identical manner as discussed in the previous subsection.

IV Numerical examples

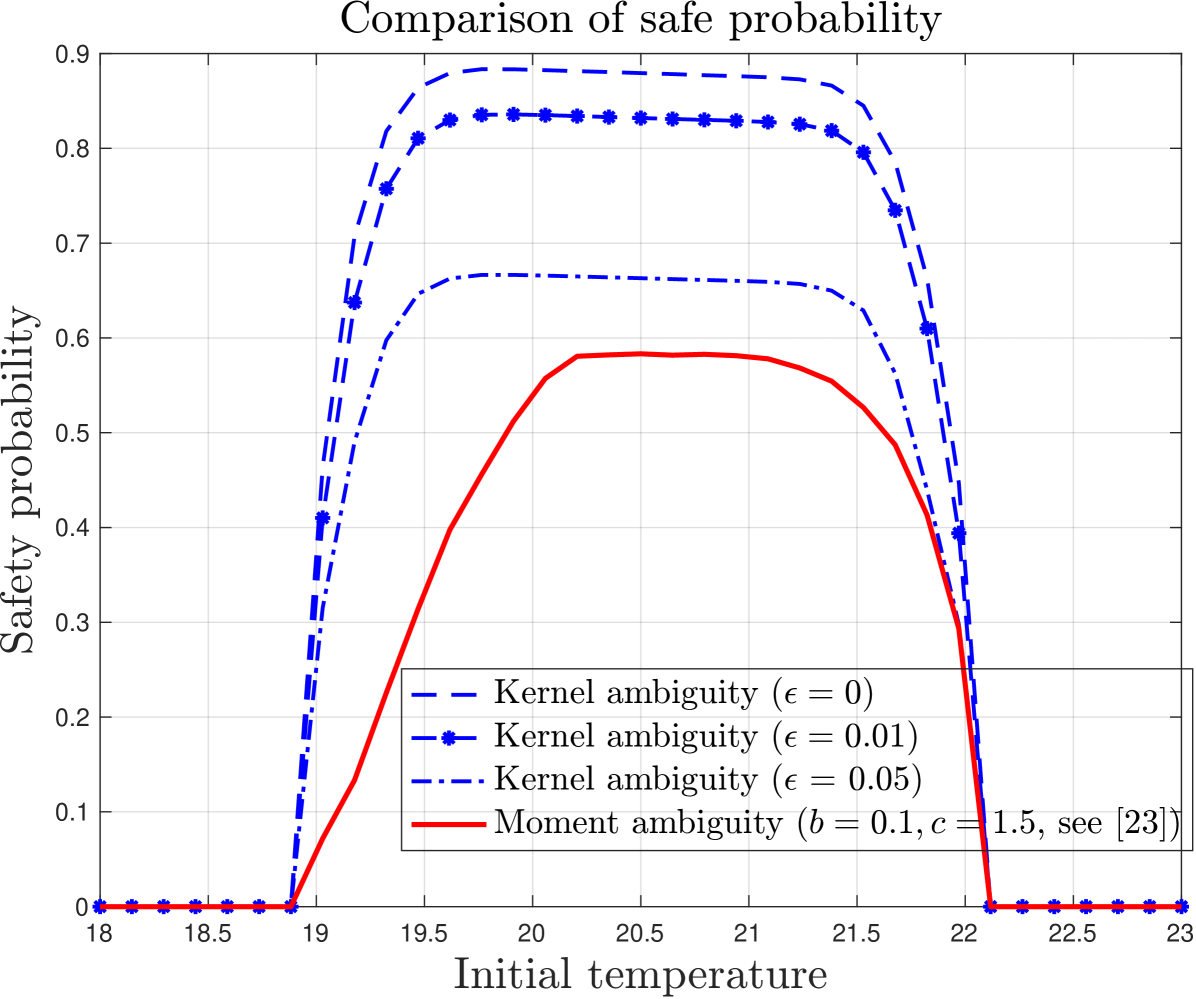

Inspired by papers [1, 23], we apply our methods to study safety probability of a thermostatically controlled load given by the dynamics

| (25) |

where the state is the temperature, is a binary control input, representing whether the load is on or off, and is a stochastic disturbance taking values in the uncertainty space . The parameters of (25) are given by , where , , , , , and . Our goal is keep the temperature within the range for minutes.

Our goal is to compute a control policy purely on available sampled trajectories for the model (25) and without solving an optimization problem at each iteration. To this end, we let be a collection of observed transitions from the model, where the pair is the set of random chosen points in the set and represents the observed transitions from such a state-input pair. We then solve the dynamic programming recursion given in (24) by partitioning the state space uniformly from to with 35 points, and using data points to estimate the conditional kernel mean embedding map (see Theorem 1) and to compute the norm of the value function, as shown in (20). We choose as the regularisation parameter, and use the kernel functions and given by

| (26) |

with and where , for the numerical examples. The choice of the kernel has shown better results for this problem when compared with a Gaussian kernel. Figure 1 shows the obtained value function for different values of the radius , where we notice a decrease in the returned value function with the increase in the size of the ambiguity set. The -axis represents the safety probability and the -axis is the temperature; notice that the value function is zero outside the safe set . We also compare the returned value function with the one obtained using the method proposed in [23] (we refer to the reader to this paper for the definition of the parameters and shown in the legend).

V Conclusion

We analyzed the problem of distributionally robust (safe) control of stochastic systems where the ambiguity set is defined as the set of distributions whose kernel mean embedding is within a certain distance from the empirical estimate of the conditional kernel mean embedding derived from data. We showed that there exists a non-randomized Markovian policy that is optimal and discussed how to compute the value function by leveraging strong duality associated with kernel DRO problems. Numerical results illustrate the performance of the proposed formulations and the impact of the radius of the ambiguity set. There are several possible directions for future research, including deriving efficient algorithms to perform value iteration without resorting to discretization, representing multistage state evolution using composition of conditional mean embedding operators, and performing a thorough empirical investigation on the impact of dataset size on the performance and computational complexity of the problem.

References

- [1] A. Abate, M. Prandini, J. Lygeros, and S. Sastry, “Probabilistic reachability and safety for controlled discrete time stochastic hybrid systems,” Automatica, vol. 44, no. 11, pp. 2724–2734, 2008.

- [2] S. Summers and J. Lygeros, “Verification of discrete time stochastic hybrid systems: A stochastic reach-avoid decision problem,” Automatica, vol. 46, no. 12, pp. 1951–1961, 2010.

- [3] C. Persis and P. Tesi, “Formulas for data-driven control: stabilization, optimality, and robustness,” IEEE Transactions on Automatic Control, vol. 65, no. 3, pp. 909–924, 2020.

- [4] J. Berberich, J. Kohler, M. A. Muller, and F. Allgower, “Data-driven model predictive control with stability and robustness guarantees,” IEEE Transactions on Automatic Control, vol. 66, no. 4, pp. 1702–1717, 2021.

- [5] A. Shapiro, “Distributionally robust stochastic programming,” SIAM Journal on Optimization, vol. 27, no. 4, pp. 2258–2275, 2017.

- [6] P. Mohajerin Esfahani and D. Kuhn, “Data-driven distributionally robust optimization using the wasserstein metric: performance guarantees and tractable reformulations,” Mathematical Programming, vol. 171, no. 1, pp. 115–166, 2018.

- [7] A. Hota, A. Cherukuri, and J. Lygeros, “Data-driven chance constrained optimization under wasserstein ambiguity sets,” in 2019 American Control Conference (ACC), IEEE, 2019.

- [8] L. Aolaritei, N. Lanzetti, H. Chen, and F. Dörfler, “Distributional uncertainty propagation via optimal transport,” arXiv, 2023.

- [9] J. González-Trejo, O. Hernández-Lerma, and L. Hoyos-Reyes, “Minimax control of discrete-time stochastic systems,” SIAM Journal on Control and Optimization, vol. 41, no. 5, pp. 1626–1659, 2002.

- [10] J. Ding, M. Kamgarpour, S. Summers, A. Abate, J. Lygeros, and C. Tomlin, “A stochastic games framework for verification and control of discrete time stochastic hybrid systems,” Automatica, vol. 49, no. 9, pp. 2665–2674, 2013.

- [11] K. Muandet, K. Fukumizu, B. Sriperumbudur, and B. Schölkopf, “Kernel mean embedding of distributions: A review and beyond,” Foundations and Trends® in Machine Learning, vol. 10, no. 1-2, pp. 1–141, 2017.

- [12] J. Zhu, W. Jitkrittum, M. Diehl, and B. Schölkopf, “Kernel distributionally robust optimization: Generalized duality theorem and stochastic approximation,” in International Conference on Artificial Intelligence and Statistics, pp. 280–288, PMLR, 2021.

- [13] A. Smola, A. Gretton, L. Song, and B. Schölkopf, “A Hilbert space embedding for distributions,” in International Conference on Algorithmic Learning Theory, pp. 13–31, Springer, 2007.

- [14] K. Fukumizu, L. Song, and A. Gretton, “Kernel Bayes’ rule: Bayesian inference with positive definite kernels,” The Journal of Machine Learning Research, vol. 14, no. 1, pp. 3753–3783, 2013.

- [15] B. Boots, A. Gretton, and G. J. Gordon, “Hilbert space embeddings of predictive state representations,” in Proceedings of the Twenty-Ninth Conference on Uncertainty in Artificial Intelligence, pp. 92–101, 2013.

- [16] A. Thorpe and M. Oishi, “Model-free stochastic reachability using kernel distribution embeddings,” IEEE Control Systems Letters, vol. 4, no. 2, pp. 512–517, 2019.

- [17] A. Thorpe and M. Oishi, “SOCKS: A stochastic optimal control and reachability toolbox using kernel methods,” in 25th ACM International Conference on Hybrid Systems: Computation and Control, ACM, 2022.

- [18] Y. Chen, J. Kim, and J. Anderson, “Distributionally robust decision making leveraging conditional distributions,” in 2022 IEEE 61st Conference on Decision and Control (CDC), pp. 5652–5659, IEEE, 2022.

- [19] G. Pillonetto, M. Quang, and A. Chiuso, “A new kernel-based approach for nonlinear system identification,” IEEE Transactions on Automatic Control, vol. 56, no. 12, pp. 2825–2840, 2011.

- [20] S. Grünewälder, G. Lever, L. Baldassarre, S. Patterson, A. Gretton, and M. Pontil, “Conditional mean embeddings as regressors,” in Proceedings of the 29th International Coference on International Conference on Machine Learning, pp. 1803–1810, 2012.

- [21] A. Thorpe, K. Ortiz, and M. Oishi, “State-based confidence bounds for data-driven stochastic reachability using Hilbert space embeddings,” Automatica, vol. 138, p. 110146, 2022.

- [22] I. Yang, “Wasserstein distributionally robust stochastic control: a data-driven approach,” IEEE Transactions on Automatic Control, vol. 66, no. 8, pp. 3863–3870, 2020.

- [23] I. Yang, “A dynamic game approach to distributionally robust safety specifications for stochastic systems,” Automatica, vol. 94, pp. 94–101, 2018.

- [24] M. Schuurmans and P. Patrinos, “A general framework for learning-based distributionally robust MPC of Markov jump systems,” IEEE Transactions on Automatic Control, vol. 68, no. 5, pp. 2950–2965, 2023.

- [25] M. Fochesato and J. Lygeros, “Data-driven distributionally robust bounds for stochastic model predictive control,” in 2022 IEEE 61st Conference on Decision and Control (CDC), pp. 3611–3616, IEEE, 2022.

- [26] F. Luo and S. Mehrotra, “Distributionally robust optimization with decision dependent ambiguity sets,” Optimization Letters, vol. 14, pp. 2565–2594, 2020.

- [27] N. Noyan, G. Rudolf, and M. Lejeune, “Distributionally robust optimization under a decision-dependent ambiguity set with applications to machine scheduling and humanitarian logistics,” INFORMS Journal on Computing, vol. 34, no. 2, pp. 729–751, 2022.

- [28] D. Salamon, Measure and Integration. European Mathematical Society, 2016, 2016.

- [29] H. Brezis, Functional Analysis, Sobolev Spaces and Partial Differential Equations. Springer, 2011.

- [30] D. Panchenko, Lecture Notes on Probability Theory. 2019.

- [31] Y. Nemmour, H. Kremer, B. Schölkopf, and J. Zhu, “Maximum mean discrepancy distributionally robust nonlinear chance-constrained optimization with finite-sample guarantee,” in IEEE Conference on Decision and Control (CDC), pp. 5660–5667, 2022.

- [32] C. Baker, “Joint measures and cross-covariance operators,” Transactions of the American Mathematical Society, vol. 186, pp. 273–273, 1973.

- [33] D. Bertsekas and S. Shreve, Stochastic Optimal Control: The Discrete-time Case. Athena Scientific, 1978.

- [34] K. Fukumizu, F. Bach, and M. Jordan, “Dimensionality reduction for supervised learning with reproducing kernel Hilbert spaces,” Journal of machine learning research, 2004.

- [35] L. Song, J. Huang, A. Smola, and K. Fukumizu, “Hilbert space embeddings of conditional distributions with applications to dynamical systems,” in Proceedings of the 26th Annual International Conference on Machine Learning, pp. 961–968, 2009.

- [36] S. Grunewalder, G. Lever, L. Baldassarre, M. Pontil, and A. Gretton, “Modelling transition dynamics in MDPs with RKHS embeddings,” in International Conference on Machine Learning (ICML), pp. 1603–1610, 2012.

- [37] C. Micchelli and M. Pontil, “On learning vector-valued functions,” Neural computation, vol. 17, no. 1, pp. 177–204, 2005.

- [38] R. Durrett, Probability: Theory and Examples. Cambridge University Press, 2010.

- [39] J. Munkres, Topology: A First Course. Prentice Hall, 1974.

- [40] J.-P. Aubin and H. Frankowska, Set-valued analysis. Springer Science & Business Media, 2009.

- [41] K. Wood, G. Bianchin, and E. Dall’Anese, “Online projected gradient descent for stochastic optimization with decision-dependent distributions,” IEEE Control Systems Letters, vol. 6, pp. 1646–1651, 2021.