Does Optimal Control Always Benefit from Better Prediction? An Analysis Framework for Predictive Optimal Control

Abstract

The “prediction + optimal control” scheme has shown good performance in many applications of automotive, traffic, robot, and building control. In practice, the prediction results are simply considered correct in the optimal control design process. However, in reality, these predictions may never be perfect. Under a conventional stochastic optimal control formulation, it is difficult to answer questions like “what if the predictions are wrong”. This paper presents an analysis framework for predictive optimal control where the subjective belief about the future is no longer considered perfect. A novel concept called the hidden prediction state is proposed to establish connections among the predictors, the subjective beliefs, the control policies and the objective control performance. Based on this framework, the predictor evaluation problem is analyzed. Three commonly-used predictor evaluation measures, including the mean squared error, the regret and the log-likelihood, are considered. It is shown that neither using the mean square error nor using the likelihood can guarantee a monotonic relationship between the predictor error and the optimal control cost. To guarantee control cost improvement, it is suggested the predictor should be evaluated with the control performance, e.g., using the optimal control cost or the regret to evaluate predictors. Numerical examples and examples from automotive applications with real-world driving data are provided to illustrate the ideas and the results.

keywords:

Model predictive control, optimal control, data-based control1 Introduction

In many automotive control[1][2], traffic control [3], robot control [4] and building control [5] applications, optimal control decisions need to be made in the presence of an uncertain future. This uncertain future is usually caused by complicated human behaviors or highly complex environment systems, and it can have a relative large impact on the control system performance[6]. The control policies in these applications need to be adjusted according to different potential future scenarios[7]. A common way to handle this is to use a predictor to forecast the future, and then apply the optimal controller with respect to this forecasted future[8]. The “prediction + optimal control” scheme has shown good performance in practice. There have also been theoretical results showing that predictions for certain control problems are beneficial[9]. In this paper, we refer to this type of control method as predictive optimal control.

Predictive optimal control is closely related to model predictive control (MPC). MPC was originally used to handle constraints to achieve recursive feasibility[10]. In some MPC applications, the prediction stages can forecast certain future external signal values that impacts the system dynamics[11]. The word external here means that this signal is neither a state nor an output of the system to be controlled. In this paper, we call this external signal the generalized disturbance. For our problems of interest, we use the phrase predictive optimal control instead of MPC, mainly because we want to to emphasize that a future generalized disturbance has to be forecasted, and the major goal of the control is to minimize a certain cost. Meanwhile, in this predictive optimal control framework, the prediction horizon and prediction update frequency is flexible. This still fits the general MPC framework, but it may be different from the commonly-used receding horizon MPC.

In many predictive optimal control applications, the future to be predicted has intrinsic uncertainties[12]. The to-be-predicted generalized disturbance may be future human maneuvers or ambient factors such as the temperature[13][14]. The future values of these signals are uncertain at the time of the forecast. In practice, the prediction results may be in either stochastic or deterministic forms[15]. Many applications simply use deterministic predictions as it is easier to compute its corresponding optimal solution[16]. With more data and higher computing power, probabilistic forecast, also called stochastic prediction, is drawing more and more attentions from the control community. Probabilistic forecast has been used in applications such as weather forecast[17]. Different measures for evaluating stochastic predictions have been developed [18][19]. There are techniques in Bayesian decision theory[20] [21] and stochastic MPC [22][23] utilizing a stochastic prediction for decision-making and control.

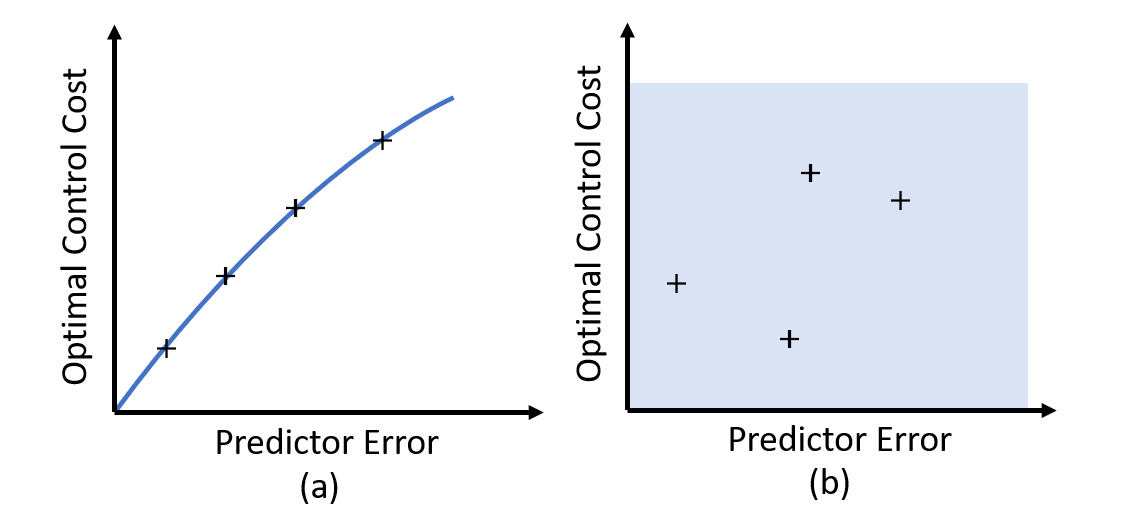

Since predictive optimal control has shown good performance, naturally, we are interested in investigating how we should design predictors and improve predictors. One important question is whether we can decouple the predictor design and the control system design. In practice, we sometimes put a lot of efforts into improving the predictor, and hope this can lead to better predictive optimal control performance. In this paper, the control performance is measured by the cost function defined in the optimal control. When we try to improve the control by improving the prediction, the underlying assumption is that there is a monotonic relationship (like the one shown in Fig.1 (a)) between the predictor error and the optimal control cost. However, in literatures, this assumption is rarely verified. Actually, as we will show in this paper, the relation between the predictor and the optimal control performance is complicated. Our theoretical analysis and numerical examples show that the relationship can be like Fig.1 (b), which means as the predictor improves, the optimal control cost may get worse.

The motivation of this paper is to provide tools to build connections between the predictor and the optimal control performance. Prediction of a complicated process involving human behaviors or complex systems may never be perfect. We need to be able to analyze the predictive optimal control system while admitting that the prediction may be wrong. To thoroughly analyze this problem, we need to build an analysis framework describing the predictive optimal control process.

Build a rigorous analysis framework for predictive optimal control is a nontrivial task for the following three reasons. First, while it is easy to tell if a deterministic prediction is wrong, how we should handle the stochastic prediction case and define a truth is not obvious, especially in scenarios where we cannot collect data repeatedly (i.e. it is almost impossible to re-create a driving scene with exactly the same environment, the same surrounding vehicles and drivers with the same status). Second, the “prediction + optimal control” scheme usually runs in a dynamic way with receding horizons, which means new prediction may override previous ones after an update, and new control policies may update accordingly. This adds up to the complexity. Third, the predictive optimal control involves a subjective predictor and an objective optimal control cost, which brings difficulties in notations. The complexities in describing the prediction performance and control performance are also somewhat related to the Bayesian vs. frequentist discrepancy in statistics. The predictor usually relies on some a priori assumptions, and the predicted probability distribution may be a subjective belief, which can be considered a Bayesian approach. However, the control performance to be optimized is the objective long-run cost, which fits the frequentists’ point of view. In order to analyze predictive optimal control problems, we need to consider some perspectives from the Bayesians and frequentists simultaneously in one framework.

The tricky relationship between prediction performance and optimization performance has been noticed by some researchers, and it has been analyzed from data’s point of views. In “machine-learning-based prediction + optimization” problems, there is a growing interest in decision-focused learning, which uses loss functions measuring the optimization results in the upstream machine learning prediction model training[24][25][26]. For most dynamic system predictive optimal control applications, the predictor design is still separated from the optimal control process. Meanwhile, there are many efforts on robust MPC focusing on generating good control policies despite imperfect predictions[22][27]. However, formulations which can describe impact of wrong prediction for dynamic systems has not yet been reported in literatures.

In stochastic predictive optimal control, we need to deal with two types of descriptions of the future generalized disturbance: one predicted subjective probability distribution (which we call the belief), and one “true” probability distribution (which will be precisely defined later). In this paper, we focus on two questions:

-

1.

Q1 (What): What is the proper framework that describes the relationships among the predicted probability distribution, the (to-be-defined) “true” probability distribution, the control performance, and other elements in predictive optimal control?

-

2.

Q2 (How): With limited data and generally-unknown “true” probability distribution, how should we evaluate the predictors which generate the subjective probability distributions?

We believe Q1 has been answered in this paper, and a general answer to Q2 is provided. More investigations of specific types of predictive optimal control will be needed for a comprehensive answer to Q2 in future studies.

In this paper, we presents a framework describing the relationship among the elements of predictive optimal control that can be used to analyze the impact of wrong predictions. We incorporate a stochastic environment model and define a new concept called the hidden prediction state to connect the subjective belief and the objective truth. Both the single-observation fixed-end-horizon case and the updating-observation receding-horizon case are considered. Then we use this framework to consider the predictor evaluation problem, in the practical case with limited data availability. Three commonly-used predictor evaluation measures, including the mean squared error, the regret and the log-likelihood, are discussed. We show that a better predictor with respect to the mean squared error or the log-likelihood may actually lead to worse control performance, and this may happen even if the predictor is arbitrarily close to the global optimal. Evaluating the predictor along with the control performance, such as using the control cost or the regret measure, can avoid this. The results are illustrated in numerical examples and simulation examples from automotive applications.

The paper is structured as follows. Section 2 provides the general problem formulation of predictive optimal control. Section 3 presents an analysis framework for predictive optimal control with a environment model. Section 4 discusses predictor evaluation measures. In Section 5, we use the proposed framework to analyze the relationship between the predictor performance and the control performance. Section 6 provides examples to illustrate the ideas and the results. Section 7 concludes this paper.

2 The Predictive Optimal Control Problem

2.1 The Optimal Control Problem

We consider the following discrete-time dynamic system

| (1) |

where , , and . The state and the generalized disturbance can be directly measured at step . is usually considered as the output of a complex system, which is called the environment. is the control input.

This dynamic system represents the physical system to be controlled, such as a vehicle, a robot, a machine, or a building. The measured generalized disturbance may be the human input such as the pedal position and steering of the vehicle, or the ambient factors such as surrounding traffic behavior or the temperature. We assume that has a relatively large impact on the dynamics and the cost. Therefore, when we design the control , we want to consider the impact of .

We consider a finite-horizon problem of steps. The cost in this finite horizon is the sum of running costs from step 0 to step and the terminal cost,

| (2) | ||||

The goal is to find a policy to minimize the cost .

If the complete disturbance sequence (the brackets here mean an ordered sequence) is known at step 0, the problem can be solved as a deterministic optimal control problem. Many tools such as dynamic programming and Pontryagin’s minimum principle can be used to solve it.

However, in practice we usually do not know in advance. In our problem, is unknown before step . If we do not know the future values of , we can no longer simply apply the deterministic optimal control. Instead, we can consider this sequence as a stochastic signal. It can be represented by a random matrix of dimension , or equivalently, a random vector of dimension .

The control objective is to find a feedback policy that uses available information to minimize the cost, or more rigorously, a certain expectation of the cost. In the context of optimal control in this paper, better control performance means a smaller cost expectation.

2.2 Components of Predictive Optimal Control

To handle the future uncertainties of the disturbance sequence , it is common to use a predictor to forecast it. We consider the general case where the forecast result is a discrete or continuous probability distribution of . If one specific disturbance sequence is forecasted instead of a probability distribution, we may consider it as a distribution with a one or near-one probability at this specific sequence, and zero or near-zero probability at all other sequences. We call this probability distribution our belief.

Definition 1 (Belief).

A belief is a subjective probability distribution of the future disturbance sequence .

We use to denote this probability distribution of . , where is the set of all possible probability distributions of . We write , which means follows the distribution . When there is no ambiguity, we do not distinguish the disturbance sequence probability distribution and its data representation, which may be a high-dimensional vector to represent a probability mass function, or a vector of parameters for a probability density function.

To obtain a belief , we need to observe the environment for necessary information. We assume that is the observation from the environment. The observation may be in the form of sensor readings, images, videos, or data received via communication, and their histories. We can define the concept of predictors as follows.

Definition 2 (Predictor).

A predictor is a mapping from an observation to a belief.

With the belief generated by the predictor, we can compute the optimal control. As we believe our belief, the expected cost to be minimized can be defined as

| (3) |

where our belief tells us that and . We use to denote the optimal policy that minimizes the above expected cost when . This optimal control problem is well-defined, though computing the exact optimal policy may be challenging if is complicated. In practice, an approximated solution is usually applied [28].

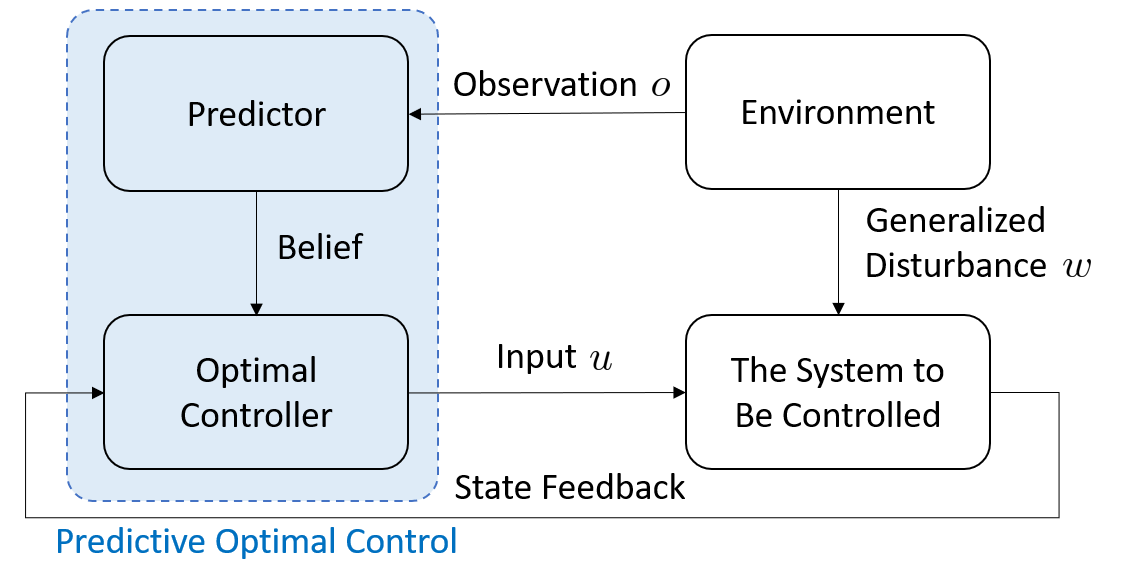

The predictive optimal control structure shown in Fig. 2 has been used in many control applications: first using a predictor to process the observation to obtain a belief , then computing (or approximately computing) the corresponding optimal control policy , finally applying the control to the dynamic system in (1). The conventional predictive optimal control formulation in practice just stops here, and it cannot provide further insights about the “prediction + optimal control” scheme. It assumes that is correct, so we use it in our optimal control design. However, since our belief is estimated, in most cases, it is actually different from the truth.

2.3 What If the Beliefs Are Wrong

In real-world applications, usually we know that our belief is imperfect. This brings up a lot of interesting questions. In the rest of this paper, we will focus on the case where we know our belief is not perfect, and we would like to find out how it impacts the control performance, and how we can improve our it.

The true probability distribution of the future disturbance sequence is denoted by . We will define this true probability distribution in Section 3.2. For now, let us assume there exists a well-defined one.

If we are not sure whether our belief is true, we have a problem immediately: since we do not know the true distribution , we cannot evaluate the cost expectation in (3) in the sense that . Based on our imperfect belief , we can only obtain an optimized policy with respect to this imperfect belief. As is only a subjective probability distribution which is not directly associated with any actual random vectors defined in our formulation so far, we use the notation to denote the believed expectation of a function when the random vector follows the believed distribution . We will stop using the ambiguous expectation notation in (3) for subject beliefs. Instead, the believed expected cost to be minimized is now defined as

| (4) |

Under this notation, the true expectation can be re-written as

| (5) |

Our optimal policy obtained using the imperfect belief minimizes (4), not (5). It initially seems that there is not much we can do if our best estimation is . However, a complete analysis framework will give us insights about the relationship between the predictor and the control performance, thus guide our predictor design.

3 An Analysis Framework

In this section, we present an analysis framework to consider the impact of imperfect predictions. We will first introduce the environment model with the hidden predictions state, then discuss what a true probability distribution is, and finally integrate recurrent prediction schemes with the presented model.

3.1 The Hidden Prediction State

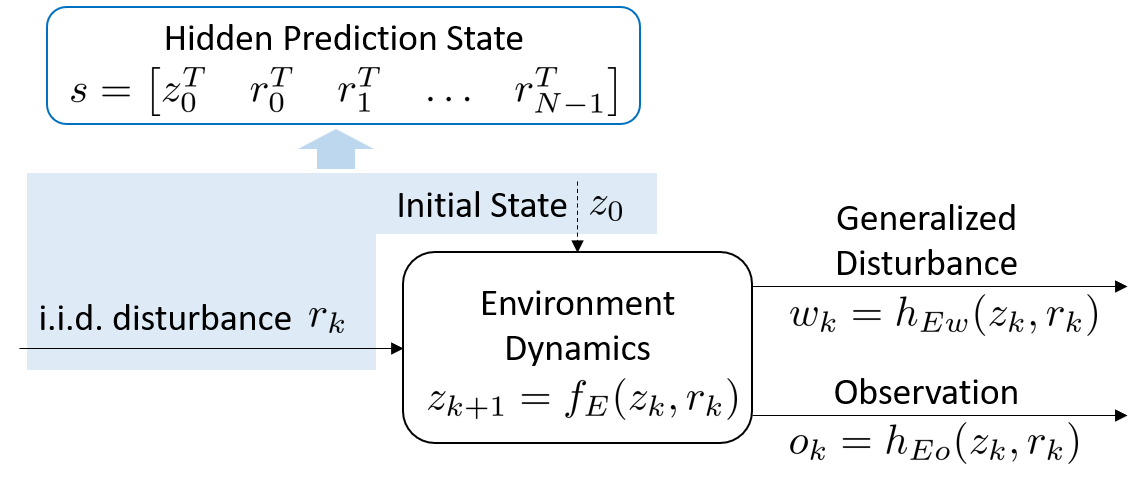

We assume that the environment that generates the disturbance is a dynamic system which can be described by the following equations,

| (6) | ||||

where is the state of the environment system, , , …, are independent and identically distributed (i.i.d.) random disturbance, is the measured output, which is also called the observation of the environment, and is the output of the environment system that impacts our system to be controlled. Since we assume that the value of is measured, contains all the information of . From the point of view of the system to be controlled, is the generalized disturbance. Since the environment is usually very complex, such as the human beings and the weather system, the dimensions of and can be very high and the exact formulas of (6) may never be known.

Let us define the hidden prediction state

| (7) |

as shown in Fig. 3. According to the environment model in (6), the generalized disturbance sequence can be completely determined by the hidden prediction state, which is a random vector, . Therefore, we write as is uniquely determined when is given. The observations can also be completely determined by . Therefore, we write

| (8) |

and use the notations

| (9) | ||||

for short.

In general, cannot be directly observed at step 0 due to two reasons. First, the environment state and environment disturbance may not be estimated by only measuring . Second, the i.i.d. random disturbances , , …, at future steps cannot be known at step 0.

Since there may be multiple ways building the environment model, the selection of the hidden prediction state is not unique. In predictive optimal control applications, it is not necessary to formulate the environment and define the hidden prediction state at all. However, the environment model in (6) and the definition of the hidden prediction state in (7) are the cores of the analysis framework, and they can help us in analyzing the problems when the predictions are not perfect.

3.2 The True Probability Distribution

In the previous section, we assume that there is a true probability distribution . Now let us define it with the environment model.

We consider the belief obtained at step 0. With the environment in (6), our belief is determined by applying the predictor on the observation , therefore we can write

| (10) |

When there is no ambiguity, we may also write instead of for conciseness. We investigate multiple potential ways to define the true probability distributions.

3.2.1 The A Posteriori Truth

One may argue that we will be able to know the realized disturbance sequence at step , so the true probability of the realized disturbance sequence is one, and the probabilities of all other disturbance sequence are zero. So for the belief , is the a posteriori truth.

3.2.2 The A Priori Truth

One may argue that there are intrinsic uncertainties in as the future values of the i.i.d. environment disturbance can never be known at step 0. In a prediction, the true probability distribution should be determined by what has happened by the time of the prediction, not from the future. This probability distribution should be determined at step 0. Therefore for the belief , the truth is the following conditional probability distribution

| (11) |

where , are the and component of respectively. We call this a priori true probability distribution , or for short.

3.2.3 The Observable Truth

One may also argue that since our only observation of the environment is , the best estimation of the distribution should be limited not just by the time of the prediction, but also by the information we have. Therefore for the belief , the truth is the following conditional probability distribution

| (12) |

We call this observable (not related to the observability in control theory) true probability distribution , or for short.

All the above three arguments about the true probability distributions make sense. As is the observable probability distribution that could be learned given enough data, we consider it as the benchmarking truth in this paper. Making close to is an intuitive way of improving the prediction, but how to define the difference between and is an interesting question which will be discussed in Section 4.

3.3 Recurrent Predictions

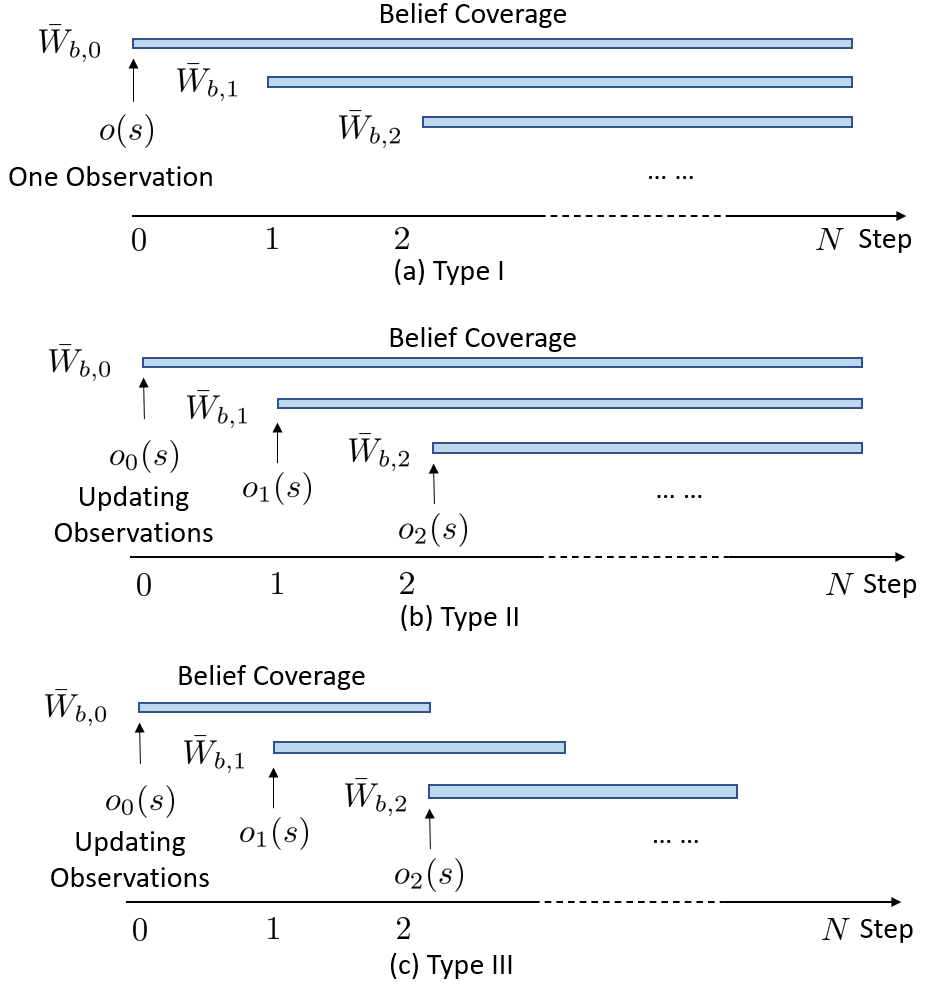

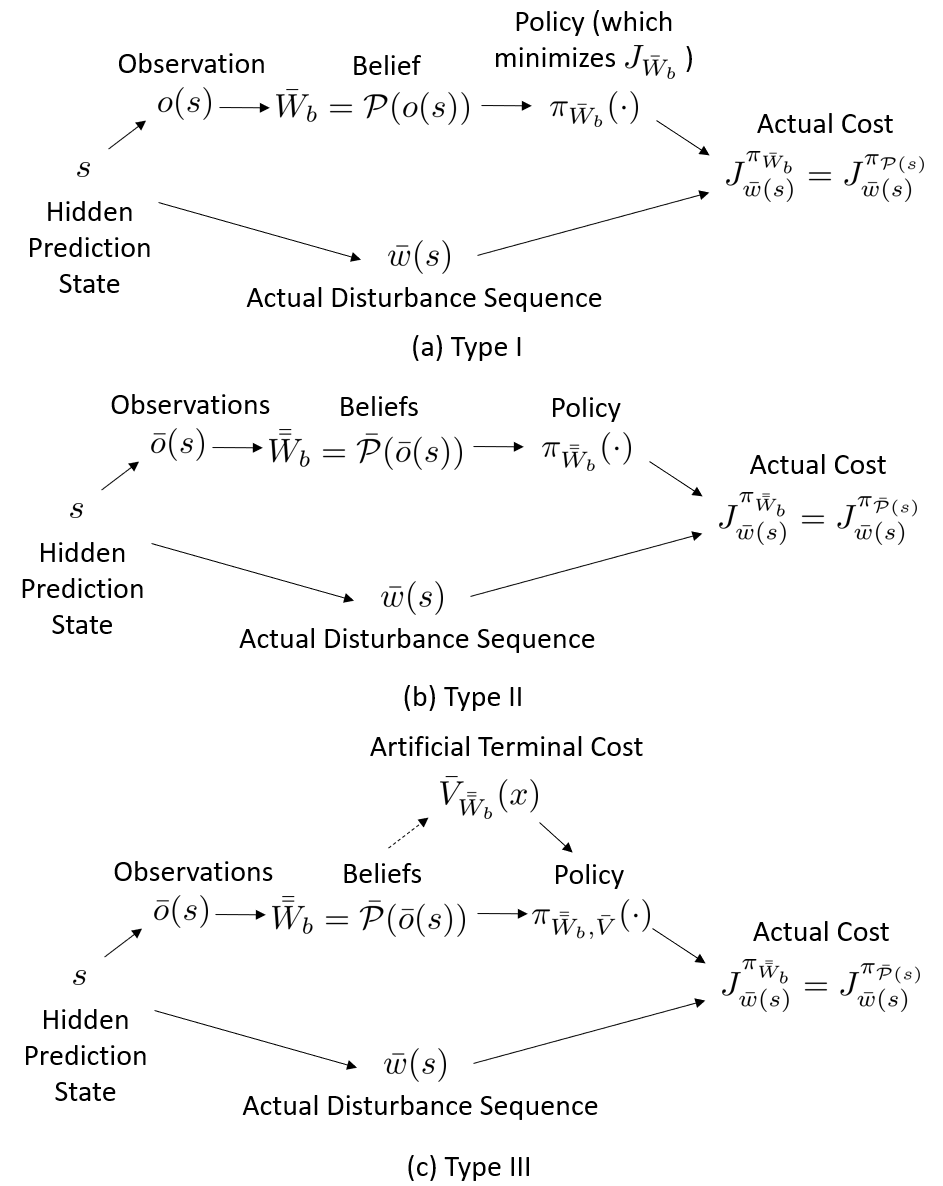

In the predictive optimal control implementation under the structure in Fig. (2), the prediction runs recurrently at every control step. Depending on whether new observations are used after the initial step and how long the prediction horizon is, we consider three typical types of recurrent predictions.

3.3.1 Type I: No Subsequent Observations After Step 0

We first consider the case where all information we obtain from the environment is and , which means that there are no subsequent observations after step 0. This type is commonly used when the process of obtaining the observation or forecasting is expensive, e.g., the observation is obtained in a pay-per-use way, or the initial forecasting computation takes a long time. At step 0, given a predictor , our belief is determined by the observation . We denote this belief at step 0 as . At step 1, even though no new observation of is available, is still measured so the forecasted belief can be updated according to the value of . This update is essentially computing a conditional probability given the original belief and . In some context, this is also called a filtration process. The filtration is repeated at every step. We denote the updated belief at step k as . The filtration update is

| (13) |

Each new belief contains the information of the probability distribution of the disturbance sequence from the current step to the terminal step, as shown in Fig. 4 (a).

Given a belief , the optimal policy minimizes the expected cost defined in (4). The policy can be obtained as a feedback control using all available information at each step. All available information includes the history of the measured state and the measured generalized disturbance . Since (1) tells us that when and are given, is Markovian. We can use the current in the feedback, instead of using all the history of . In general, the generalized disturbance is not Markovian. Actually it can be considered as the output of a partially observable Markov decision process. Therefore, we need to include the history of in the control feedback. However, when the belief update is just a filtration, even if is not Markovian, is Markovian. Therefore, we can use in the feedback, instead of the history of with the belief . The optimal policy can be computed recursively using dynamic programming. This feedback policy is in the form of

| (14) |

is completely determined by the initial belief , which means it is determined by the hidden prediction state along with the predictor .

We use to denote the cost of system (1) with a fixed initial state when the disturbance sequence is and the policy is the optimal policy obtained with the belief by minimizing (4). As shown in Fig. 5 (a), given a predictor , this actual cost is completely determined by .

We assume that any disturbance sequence with a non-zero probability in has a non-zero probability in . With this assumption, the policy obtained using can be applied when the true disturbance sequence distribution is . This is why we may want to consider a deterministic prediction as a distribution with a near-one (instead of one) probability at this specific sequence, and near-zero (instead of zero) probability at all other sequences.

3.3.2 Type II: A New Observation at Every Step

In Type II, a new observation is obtained at every step. Therefore, the belief is updated according to the new observation at every step. Each belief is still about the disturbance sequence from the current step to the terminal step, as shown in Fig. 4 (b). The belief is updated at every step using the new observation and the predictor,

| (15) |

where the predictor may be dependent on . We denote the sequence of beliefs as , and we write

| (16) |

as these beliefs are determined by the predictors and the sequence of observations .

In predictive optimal control, the control policy at step is determined using the belief , just like at any step of Type I. So the at every step, the feedback control policy is in the form

| (17) |

The policies in all steps form a sequence of policies . Each policy covers the time horizon from step to step , with the prediction made at step . Each of them depends on their observation , thus ultimately depends on . At step , we apply policy , then discard it since a new policy will be obtained at step . Combining the first steps (the step- where ) of each element of the policy sequence, we obtain one single policy, which can be denoted as . We also write this policy as , or equivalently, . The the actual cost is , which can also be written as . As shown in Fig. 5 (b), this cost is determined uniquely by in a similar way as Type I.

3.3.3 Type III: A New Observation at Every Step with Receding Horizon Prediction

In Type III, the prediction is made on a time window of fixed-length in a receding horizon way. This is commonly used in predictive optimal control applications. When the final step of the window is not , an artificial terminal cost is usually designed for the optimal control problem. The cost minimized at step is

| (18) |

where is the window length. The value of the artificial terminal cost will impact the control policy, therefore it will impact the actual cost. In this case, given the predictors, the actual cost is determined by both and the design of .

We may consider the artificial terminal cost as an estimation of the optimal cost-to-go, that is,

| (19) |

Then this type of problem can be considered as an approximation of Type II. If this artificial terminal cost is exactly the optimal cost-to-go, it is the same as Type II.

4 Predictor Evaluation

In this section, we use the developed framework to discuss predictor evaluation in the context of predictive optimal control. We will focus on the Type I problem, which is the foundation of all predictive control problems.

4.1 Just Being Accurate Is Not Enough

An accurate predictor means that when the predictor says the probability distribution of is , the distribution is indeed . However, just being accurate is not good enough for predictors. To show this, we will define it rigorously first.

Definition 3 (Maximum Indistinguishable Observation Set).

Give a predictor and a belief , the maximum indistinguishable observation set , is the set of all observations based on which the prediction output is ,

| (20) |

Definition 4 (Accurate).

A predictor is accurate over an observation set if belief generated by this predictor over any observation , equals the probability distribution of conditioned on , where is the maximum indistinguishable observation set of and .

This definition means that if we collect all realized disturbance sequence data for any fixed belief from an accurate predictor, the collected data distribution will match this belief. Simply being accurate does not mean a good predictor. For example, a blind predictor is a predictor that generates the same belief for all observations. An accurate blind predictor does not use any information from the observation , yet it offers accurate beliefs. It is accurate, but not very informative.

4.2 The Goal is , But Getting There Is A Challenge

The goal of prediction is to obtain the true distribution for every observation . To show this, we will prove that if our belief is the same as , we obtain the optimal control performance, as long as we use no more information about than .

Given a predictor , the predictive optimal control cost expectation is . By the law of total expectation and the definition of ,

| (21) |

By definition, is the optimal strategy that minimizes for every . Since we use no more information about than , given any , this cost is the best that we can achieve. Therefore, given any , minimizes the predictive optimal control actual cost . So it minimizes the predictive optimal control cost expectation.

The goal of our predictor result is . However, the path to achieve this goal is full of challenges. First, in practice, we may never be able to directly compare the predicted belief with our target as is unknown. Second, in general, there is no guarantee that predictors generating beliefs closers to lead to better control performance. Even locally around , as long as , we do not have such guarantees (details will be discussed in Section 5 and examples are provided in Section 6). Nevertheless, this ultimate goal still provides us an incentive to keep optimizing the predictor towards .

4.3 Available Data for Evaluation

In most predictive optimal control applications, it is not possible to obtain enough data to estimate for every . In practice, the data samples are in the form of pairs. For a few specific , we may have many pair samples such that can be directly estimated. However, for most , we usually do not have enough samples to estimate . For example, in human-driven vehicle speed prediction, where the to-be-predicted is the vehicle speed, and the observation is the driving scenario data including the vehicle status, driver status, road conditions, and traffic conditions, etc. We can collect many samples in the form of pairs under many driving scenarios. But for a specific driving scenario, it is very difficult to have repeated data showing how a driver behaves differently each time, as the scenario keeps changing. In practical situations, we usually make some assumptions on the predictor and parameterize the predictor so that it can be trained using pairs. We also need to evaluate predictor performance using many pairs, while not assuming we have repeated data for every specific to learn .

4.4 Predictor Evaluation Using Available Data

With one pair, given a predictor , we can compute a belief . Using a beleif and the corresponding realized disturbance sequence , we can define some one-time prediction performance measures. With multiple pairs, the predictor ’s performance can be evaluated by aggregating these one-time prediction performance measures.

4.4.1 One-Time Prediction Performance Measures

We consider two types of measures: the error-based measures, and the probability-based measures. Given a realized disturbance sequence and a belief , the one-time prediction performance measure is in the form of . If satisfies that

| (22) |

and

| (23) |

where means the probability of in the belief , we say is an error-based measure. Furthermore, when only if , this error measure is called a strict one-time prediction error measure. With a slight abuse of notation, we write if . Then minimizes any error-based measure by definition.

Here are two examples of error-based measures: the expected mean squared error (MSE), and the regret. The commonly-used MSE,

| (24) |

is a strict one-time prediction error measure. The expectation here is the subjective expectation with respect to the belief . The regret

| (25) |

is a non-strict one-time prediction error measure. It is defined as the difference between the optimal cost with the predicted belief, and the optimal cost with the a posteriori disturbance sequence. Computing the regret is usually much more difficult than computing the MSE, as it involves solving a stochastic optimal control problem. It is essentially evaluating the control performance of a specific dynamic system with this prediction, instead of evaluating the prediction alone.

Besides the error measures, we may also use a probability-based measure such as the log-likelihood,

| (26) |

where is believed probability of the realized disturbance sequence, and is the believed probability density at the realized disturbance sequence. We use the log-likelihood because we will sum up or average these one-time prediction performance to evaluate the predictor performance. There is a minus sign because we want to keep this probability-based measure consistent with the error-based measures, which are to be minimized.

4.4.2 Predictor Performance Measures

If we compute the average of multiple one-time prediction performance measures from one predictor’s result, we obtain a predictor performance measure, whose expectation is

| (27) | ||||

Based on our definition of the observable true distribution , for a given, fixed observation , the expectation of the one-time prediction performance measure of a belief can be computed using ,

| (28) |

The right-hand side of the (28) can be computed just using two probability distributions and . Therefore, we define

| (29) |

5 Predictor Performance vs. Control Performance

We are interested in the following two properties of predictor measures: (1) best-P-lowest-C: whether the best performed predictor always leads to the lowest predictive optimal control cost, (2) better-P-lower-C: whether better performed predictors always lead to lower predictive optimal control costs. The performance and cost here mean the expectation of the performance measure and the cost. Since the predictor performance measures are in the form of (30), we analyze this by investigating different predictor performance measures under each given observation .

5.1 MSE: A Poor Measure

if we choose the expected MSE as the one-time prediction performance measure, then,

| (31) | ||||

In this case, may not even locally minimize . This means that when using MSE, even with a large amount of data, we will miss the preditor’s ultimate goal . When there is no constraint, the best may be a deterministic prediction, which is inferior to the best stochastic predictor in terms of the control performance. With the proposed framework, examples can be easily constructed to show that the MSE-based measure is neither a best-P-lowest-C measure nor a better-P-lower-C measure (see Section 6).

5.2 Regret: A Good But Computationally Expensive Measure

If the regret-based predictor performance measure is used, then

| (32) | ||||

The second term is the posteriori optimal cost, which is a constant given . The first term is the same as the expected cost. The regret is essentially the control cost with a constant offset. globally minimizes . Furthermore, decreasing will lead to a decrease of the expected cost. Evaluating the predictors using regret-based error measures is essentially evaluating the control performance after connecting the prediction and the optimal control process. Actually, we can just compute the first term , which is the control cost under the predictor, to evaluate this predictor. The regret is both a best-P-lowest-C and a better-P-lower-C measure.

5.3 Log-Likelihood: A Probably-Fine Measure

If we use the probability-based measure log-likelihood , in the discrete case,

| (33) | ||||

This expectation is related to two probability distributions: the subjective belief and the observable true probability distribution (it is different from the entropy , which describes the property of one probability distribution). The best predictor is for all due to convexity, which means this measure is best-P-lowest-C. However, there is no guarantee to make it better-P-lower-C.

5.4 Summaries

A summary of the three predictor measures is provided in Table 1. Neither the MSE nor the log-likelihood measure have the Better-P-lower-C property. For a general predictive control problem, , there is no guarantee that predictors with better MSE or log-likelihood lead to better control performance. It implies that the predictor design cannot be simply decoupled from the downstream optimal control problem, and the predictor needs to be evaluated along with the control system performance, e.g., using the control cost or the regret as the predictor performance measure.

| Predictor Measure | Best-P-lowest-C | Better-P-lower-C | Computation |

|---|---|---|---|

| MSE | No | No | Low |

| Regret | Yes | Yes | High |

| Log-likelihood | Yes | No | Low |

6 Illustrative Examples

6.1 A Simple Linear System Example

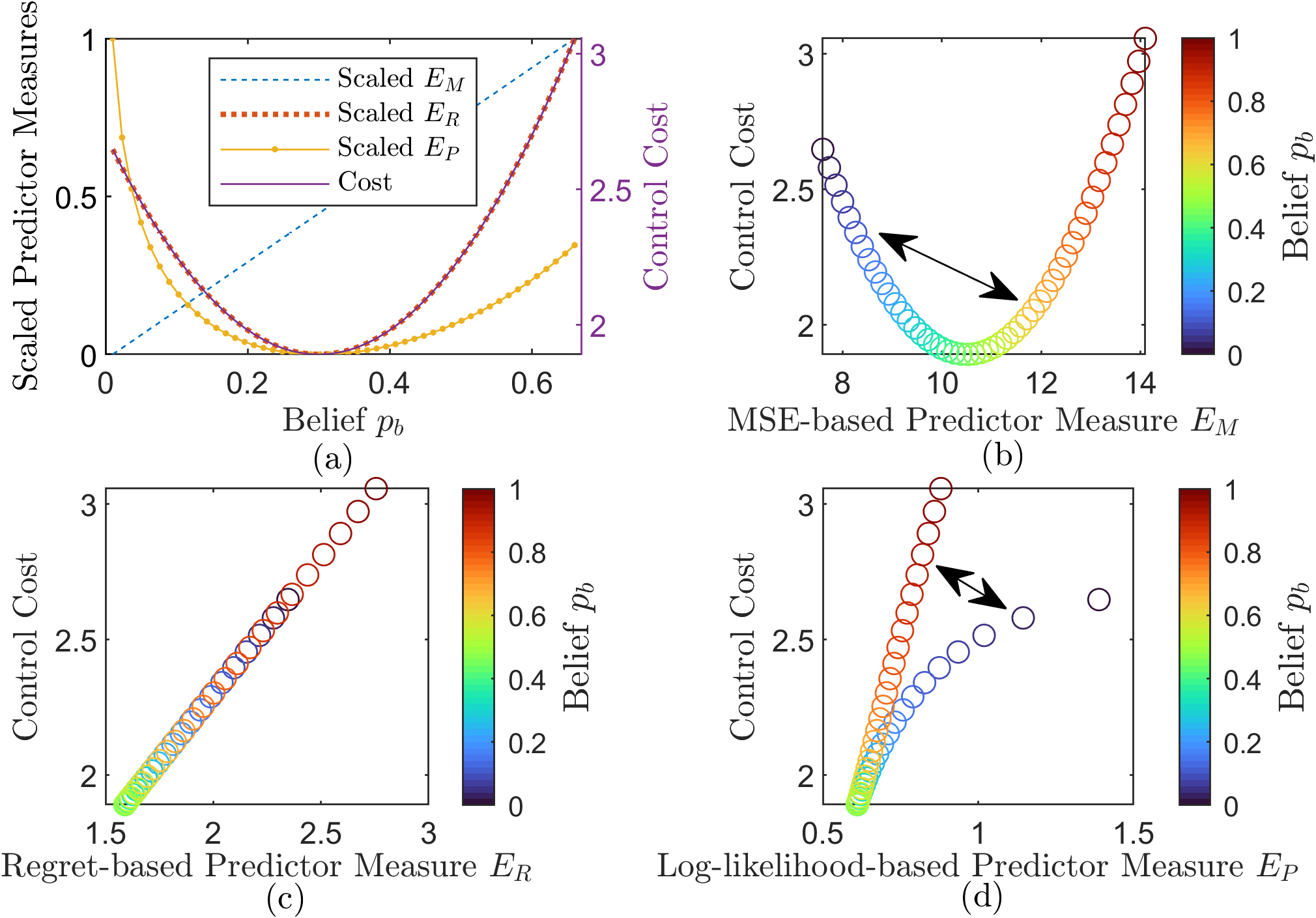

We provide a numerical example where the predictor measure gets better while the control cost gets worse in a Type I problem, and illustrate the differences of the three predictor measures shown in Table 1. Consider the simple linear system,

| (34) |

where , , with a quadratic cost function . There is no observation information other than . The input will be determined after is measured. So the key of this predictive optimal control is to forecast the value of and determine accordingly.

Assume that . Therefore, the observable true probability distribution of is in the form of

| (35) |

In addition, we assume that . In this example, the hidden prediction state can be considered as . If we know the value of and can use it to design the optimal control accordingly, the ideal optimal policy is

| (36) | ||||

and the ideal optimal cost expectation is,

| (37) |

A predictor gives a prediction of as and . generates a belief of . The predictive optimal solution is in the same form as (36) while replacing with . With this belief, the predictive optimal control cost expectation is

| (38) |

Obviously, minimizes this cost expectation.

When using the MSE, the regret, and the log-likelihood measure, the predictor performance measures are

| (39) | ||||

| (40) | ||||

| (41) |

respectively. In the regret and the log-likelihood, minimizes the measures, as they are best-P-lowest-C measures. Furthermore, for the regret, is just the cost expectation with a constant offset. However, in MSE, depending on the sign of , takes its minimal MSE value at or , which is different from its optimal result in terms of the optimal control cost.

We use to illustrates the better-P-lower-C properties of the measures. The trend of the predictor measures and the cost expectation is shown in Fig. 6 as the belief changes. In the MSE and the log-likelihood case, it is possible to improve the predictor measure while making the optimal control cost worse.

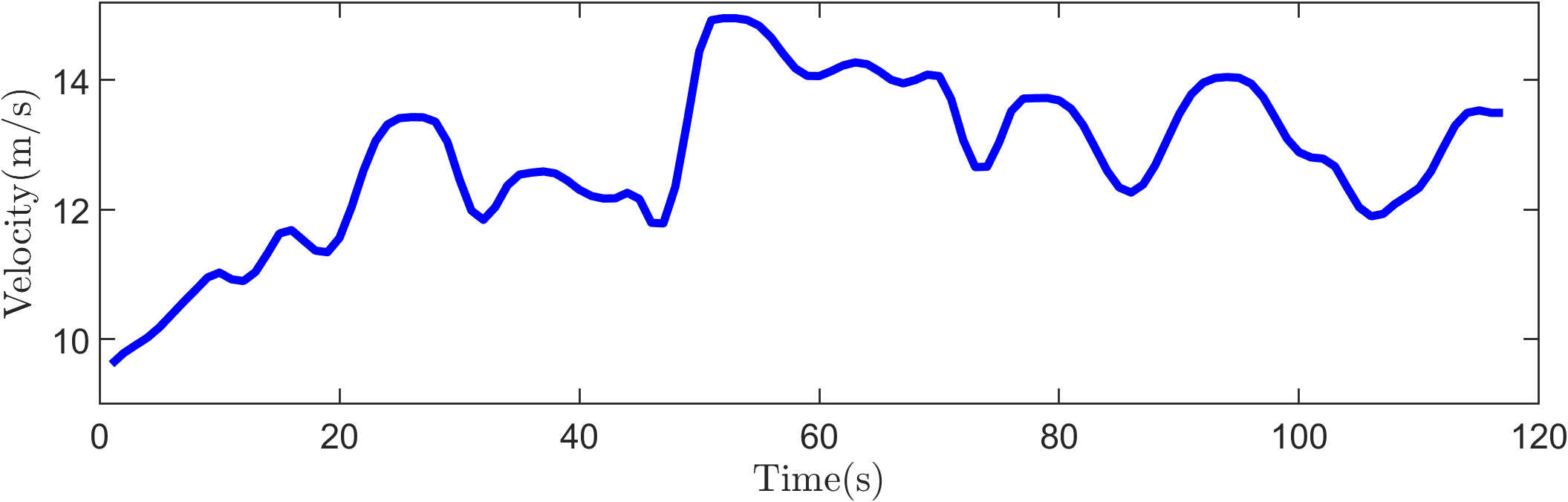

6.2 Automotive Examples with Real-World Driving Data

We use a hybrid electric vehicle example in the form of a Type III problem to demonstrate the relationship between predictors and control performance in the real world. Given a pre-defined driving cycle as a time-velocity table, optimal control can be used to determine the most efficient energy management strategy for a hybrid electric vehicle. With uncertain future driving cycles, predictive optimal control can be used and the future vehicle velocities are forecasted. We use real-world driving data from the Next Generation Simulation (NGSIM) [29]. We consider the energy management strategy for a simple hybrid electric vehicle powertrain shown in [30]. The hybrid electric vehicle powertrain model is as follows,

| (42) |

where , , is the battery state of charge (SOC), is a constant, is a variable representing the battery or the inverse of the battery efficiency, depending on the sign of the motor power, represents the motor efficiency or the inverse of the motor efficiency, is the motor speed, is the motor torque. We assume the following static relationship is known,

| (43) |

where is the total torque demand to the powertrain, is the vehicle velocity, is the vehicle acceleration. The control input is the motor torque . Once is determined, the engine torque is determined as

| (44) |

The cost function is defined as

| (45) | ||||

where , and are weights, is the fuel consumption determined by and , and is the target battery SOC to be maintained. This cost function considers the electric energy consumed, the fuel consumed, and the battery SOC variation from the target value during the steps.

In this problem, (or equivalently, ) is the generalized disturbance. Observation is just and its history. A predictor tries to forecast future , that is, the future velocity and acceleration. The future velocity and acceleration is dependent on various factors including the driving style, the road and traffic condition. In the proposed analysis framework, these factors are considered in the environment dynamics equations in (6). The hidden prediction state associated with this environment dynamic system may be a high-dimensional vector. But the exact formulas associated with the environment and the hidden prediction state are not needed for applying predictive optimal control.

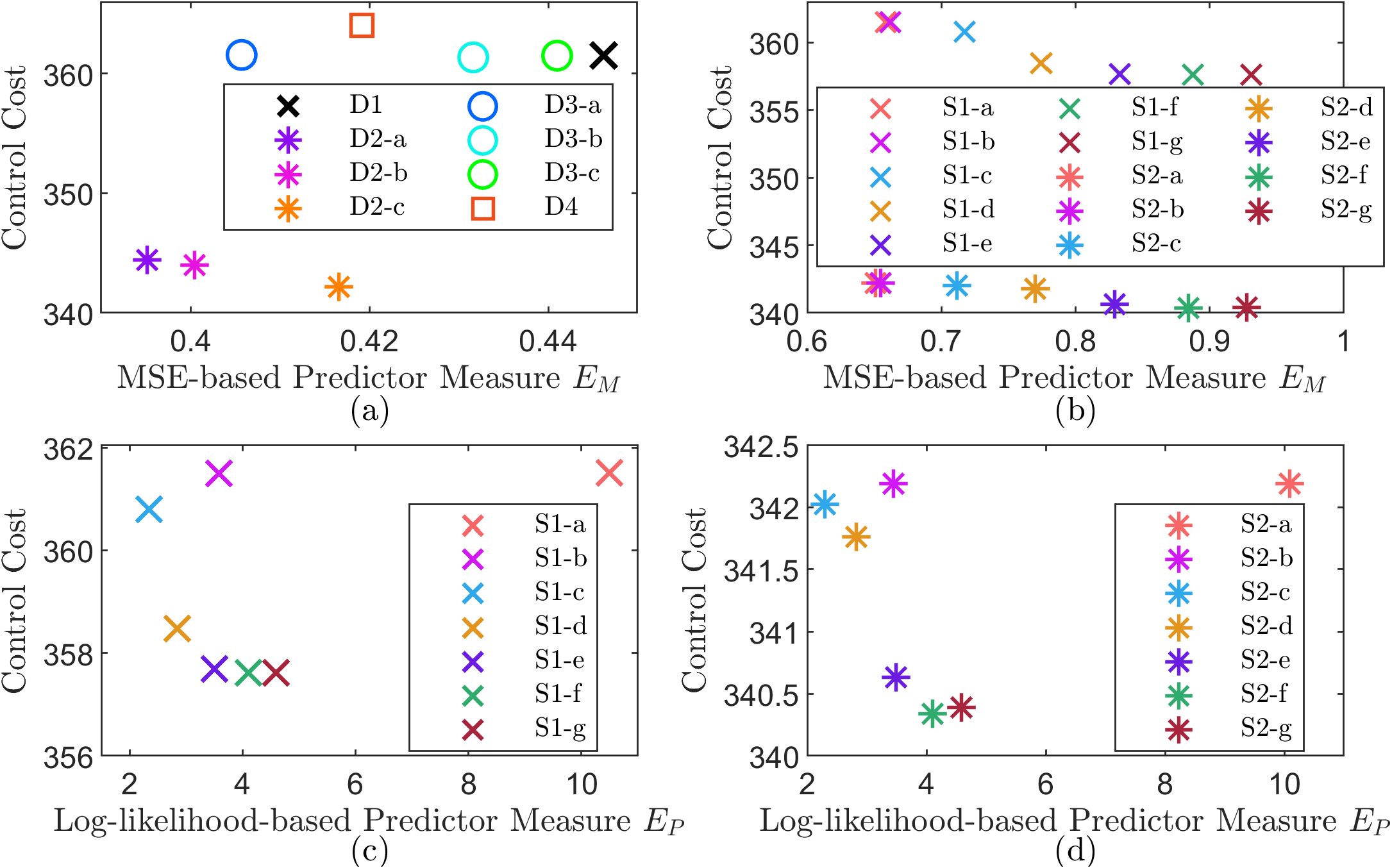

Four types of deterministic predictors and two types of stochastic predictors are designed.

(D1) Constant velocity predictor.

| (46) |

(D2) Linear decay acceleration predictor.

| (47) |

where is a constant parameter.

(D3) Exponential decay acceleration predictor.

| (48) |

where is a constant parameter.

(D4) Deterministic Long Short-Term Memory (LSTM) predictor,

| (49) |

where , , and is an LSTM network. The network is composed of an LSTM layer and a fully connected layer, where the LSTM layer is and the fully connected layer is . The LSTM network is trained using about six thousand vehicle trajectories from NGSIM data.

(S1) Zero-mean stochastic acceleration predictor.

| (50) |

where is a constant, stands for normal distribution.

(S2) Stochastic linear decay acceleration predictor.

| (51) | ||||

where and are constants.

In simulation, 22 predictors are created based on the above six types. Three different linear decay acceleration predictors D2-a, D2-b and D2-c are used, where , and respectively. Three different exponential decay acceleration predictors D3-a, D3-b and D3-c are used, where , and respectively. Seven different exponential decay acceleration predictors S1-a, S1-b, …, S1-g are used, where , and , respectively. Seven different exponential decay acceleration predictors S2-a, S2-b, …, S2-g are used, where , and , respectively. For all S2 predictors, is set to 5 to match the best D2 predictor.

We run simulation over a 112-second driving data from NGSIM, as shown in Fig. 7. The predictive optimal control is applied in a receding horizon fashion as the Type III problem. The energy management strategy runs at 1 Hz, and the prediction horizon is 5 steps.

We use dynamic programming to approximately solve the optimal control problems after each prediction. For stochastic predictors, the continuous probability distributions are discretized first. Each normally-distributed random variable is approximated by a discrete random variable with 5 possible values, before dynamic programming is applied.

The simulation results are shown in Fig. 8. In this Type III problem, the MSE and log-likelihood is defined as the average of multiple predictions. All four plots in Fig. 8 show similar patterns to Fig. 1 (b). Therefore, when using the MSE or the log-likelihood as the predictor error measure, a better predictor does not necessarily mean better optimal control performance. Comparing the deterministic predictor with its corresponding stochastic predictors (D1 vs S1, and D2 vs S2), it can be seen that in general the MSE-based predictor measures are larger for stochastic predictors. However, the stochastic predictors may lead to lower control cost. This implies that we should be cautious when directly comparing stochastic predictors with deterministic predictors. It is still suggested that the predictors should not be evaluated alone, but with the optimal control task performance.

7 Conclusions

In this paper, an analysis framework for predictive optimal control is presented. An environment model which generates the to-be-predicted signal is included in the framework. The truth of the to-be-predicted signal is properly defined with a hidden prediction state describing the current and future uncertainties in the environment. We use the proposed analysis framework to rethink the predictor evaluation problem. It is shown that improving the predictor using a general performance measure may not guarantee the improvement in control performance. It is suggested that for a general predictive control problem, the predictor should be evaluated along with the control system performance.

Acknowledgement

This work was supported by the National Natural Science Foundation of China under Grant 52188102 and Grant 52272416.

References

- [1] X. Zeng, J. Wang, A Parallel Hybrid Electric Vehicle Energy Management Strategy Using Stochastic Model Predictive Control With Road Grade Preview, IEEE Transactions on Control Systems Technology 23 (6) (2015) 2416–2423. doi:10/f7vxn2.

- [2] N. Wan, C. Zhang, A. Vahidi, Probabilistic Anticipation and Control in Autonomous Car Following, IEEE Transactions on Control Systems Technology 27 (1) (2019) 9. doi:10.1109/TCST.2017.2762288.

- [3] B.-L. Ye, W. Wu, K. Ruan, L. Li, T. Chen, H. Gao, Y. Chen, A survey of model predictive control methods for traffic signal control, IEEE/CAA Journal of Automatica Sinica 6 (3) (2019) 623–640. doi:10.1109/JAS.2019.1911471.

- [4] D. Fridovich-Keil, A. Bajcsy, J. F. Fisac, S. L. Herbert, S. Wang, A. D. Dragan, C. J. Tomlin, Confidence-aware motion prediction for real-time collision avoidance, The International Journal of Robotics Research 39 (2-3) (2020) 250–265. doi:10.1177/0278364919859436.

- [5] J. Drgoňa, J. Arroyo, I. C. Figueroa, D. Blum, K. Arendt, D. Kim, E. P. Ollé, J. Oravec, M. Wetter, D. L. Vrabie, L. Helsen, All you need to know about model predictive control for buildings, Annual Reviews in Control 50 (2020) 190–232. doi:https://doi.org/10.1016/j.arcontrol.2020.09.001.

- [6] C. Tang, Y. Liu, H. Xiao, L. Xiong, Integrated decision making and planning framework for autonomous vehicle considering uncertain prediction of surrounding vehicles, in: 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), 2022, pp. 3867–3872. doi:10.1109/ITSC55140.2022.9922564.

- [7] C. Hubmann, J. Schulz, M. Becker, D. Althoff, C. Stiller, Automated driving in uncertain environments: Planning with interaction and uncertain maneuver prediction, IEEE Transactions on Intelligent Vehicles 3 (1) (2018) 5–17. doi:10.1109/TIV.2017.2788208.

- [8] M. Tomizuka, Model based prediction, preview and robust controls in motion control systems, in: Proceedings of 4th IEEE International Workshop on Advanced Motion Control - AMC ’96 - MIE, Vol. 1, 1996, pp. 1–6 vol.1. doi:10.1109/AMC.1996.509370.

-

[9]

C. Yu, G. Shi, S.-J. Chung, Y. Yue, A. Wierman,

Competitive control with delayed

imperfect information, in: 2022 American Control Conference (ACC), IEEE,

2022.

arXiv:2006.07569,

doi:10.23919/acc53348.2022.9867421.

URL http://arxiv.org/abs/2006.07569 - [10] D. Q. Mayne, J. B. Rawlings, C. V. Rao, P. O. M. Scokaert, Constrained model predictive control: Stability and optimality, Automatica 36 (6) (2000) 26. doi:10/brxgpc.

-

[11]

Y. Zhang, C. Edwards, M. Belmont, G. Li,

Robust

model predictive control for constrained linear system based on a sliding

mode disturbance observer, Automatica 154 (2023) 111101.

doi:https://doi.org/10.1016/j.automatica.2023.111101.

URL https://www.sciencedirect.com/science/article/pii/S0005109823002613 -

[12]

C. S. Vallon, F. Borrelli,

Data-Driven

Strategies for Hierarchical Predictive Control in Unknown

Environments, IEEE Transactions on Automation Science and Engineering

19 (3) (2022) 1434–1445.

doi:10.1109/TASE.2021.3137769.

URL https://ieeexplore.ieee.org/document/9675285/ -

[13]

S. Dey, T. Marzullo, X. Zhang, G. Henze,

Reinforcement

learning building control approach harnessing imitation learning, Energy and

AI 14 (2023) 100255.

doi:https://doi.org/10.1016/j.egyai.2023.100255.

URL https://www.sciencedirect.com/science/article/pii/S2666546823000277 -

[14]

A. Mugnini, F. Ferracuti, M. Lorenzetti, G. Comodi, A. Arteconi,

Day-ahead

optimal scheduling of smart electric storage heaters: A real quantification

of uncertainty factors, Energy Reports 9 (2023) 2169–2184.

doi:https://doi.org/10.1016/j.egyr.2023.01.013.

URL https://www.sciencedirect.com/science/article/pii/S2352484723000136 -

[15]

A. Panagiotelis, P. Gamakumara, G. Athanasopoulos, R. J. Hyndman,

Probabilistic

forecast reconciliation: Properties, evaluation and score optimisation,

European Journal of Operational Research 306 (2) (2023) 693–706.

doi:https://doi.org/10.1016/j.ejor.2022.07.040.

URL https://www.sciencedirect.com/science/article/pii/S0377221722006087 -

[16]

Q. Hu, M. R. Amini, I. Kolmanovsky, J. Sun, A. Wiese, J. B. Seeds,

Multihorizon Model

Predictive Control: An Application to Integrated Power and

Thermal Management of Connected Hybrid Electric Vehicles, IEEE

Transactions on Control Systems Technology 30 (3) (2022) 1052–1064.

doi:10.1109/TCST.2021.3091887.

URL https://ieeexplore.ieee.org/document/9478061/ - [17] T. N. Palmer, Stochastic weather and climate models, Nature Reviews Physics 1 (7) (2019) 463–471. doi:10.1038/s42254-019-0062-2.

- [18] A. S. Tay, K. F. Wallis, C. C. Al, Density forecasting: A survey, Journal of Forecasting 19 (4) (2000) 235–254. doi:10.1002/1099-131x(200007)19:4<235::aid-for772>3.0.co;2-l.

- [19] T. Gneiting, M. Katzfuss, Probabilistic Forecasting, Annual Review of Statistics and Its Application 1 (1) (2014) 125–151. doi:10.1146/annurev-statistics-062713-085831.

- [20] T. Denœux, Decision-making with belief functions: A review, International Journal of Approximate Reasoning 109 (2019) 87–110. doi:10.1016/j.ijar.2019.03.009.

- [21] F. Petropoulos, D. Apiletti, V. Assimakopoulos, M. Z. Babai, D. K. Barrow, S. B. Taieb, C. Bergmeir, R. J. Bessa, J. Bijak, J. E. Boylan, J. Browell, C. Carnevale, J. L. Castle, P. Cirillo, M. P. Clements, C. Cordeiro, F. L. C. Oliveira, S. De Baets, A. Dokumentov, J. Ellison, P. Fiszeder, P. H. Franses, D. T. Frazier, M. Gilliland, M. S. Gönül, P. Goodwin, L. Grossi, Y. Grushka-Cockayne, M. Guidolin, M. Guidolin, U. Gunter, X. Guo, R. Guseo, N. Harvey, D. F. Hendry, R. Hollyman, T. Januschowski, J. Jeon, V. R. R. Jose, Y. Kang, A. B. Koehler, S. Kolassa, N. Kourentzes, S. Leva, F. Li, K. Litsiou, S. Makridakis, G. M. Martin, A. B. Martinez, S. Meeran, T. Modis, K. Nikolopoulos, D. Önkal, A. Paccagnini, A. Panagiotelis, I. Panapakidis, J. M. Pavía, M. Pedio, D. J. Pedregal, P. Pinson, P. Ramos, D. E. Rapach, J. J. Reade, B. Rostami-Tabar, M. Rubaszek, G. Sermpinis, H. L. Shang, E. Spiliotis, A. A. Syntetos, P. D. Talagala, T. S. Talagala, L. Tashman, D. Thomakos, T. Thorarinsdottir, E. Todini, J. R. T. Arenas, X. Wang, R. L. Winkler, A. Yusupova, F. Ziel, Forecasting: Theory and practice, International Journal of Forecasting 38 (3) (2022) S0169207021001758. arXiv:2012.03854, doi:10.1016/j.ijforecast.2021.11.001.

- [22] A. Mesbah, Stochastic model predictive control: An overview and perspectives for future research, IEEE Control Systems Magazine 36 (6) (2016) 30–44. doi:10.1109/MCS.2016.2602087.

- [23] U. Rosolia, X. Zhang, F. Borrelli, Data-Driven Predictive Control for Autonomous Systems, Annual Review of Control, Robotics, and Autonomous Systems 1 (1) (2018) 259–286. doi:10/gg4z5d.

- [24] J. Mandi, E. Demirovi?, P. J. Stuckey, T. Guns, Smart Predict-and-Optimize for Hard Combinatorial Optimization Problems, Proceedings of the AAAI Conference on Artificial Intelligence 34 (02) (2020) 1603–1610. doi:10.1609/aaai.v34i02.5521.

-

[25]

A. N. Elmachtoub, P. Grigas, Smart

”Predict, then Optimize”, Management Science 68 (1) (2020) 9–26.

arXiv:1710.08005,

doi:10.1287/mnsc.2020.3922.

URL http://arxiv.org/abs/1710.08005 - [26] B. Wilder, B. Dilkina, M. Tambe, Melding the Data-Decisions Pipeline: Decision-Focused Learning for Combinatorial Optimization, Proceedings of the AAAI Conference on Artificial Intelligence 33 (01) (2019) 1658–1665. doi:10.1609/aaai.v33i01.33011658.

- [27] F. A. Bayer, M. Lorenzen, M. A. Müller, F. Allgöwer, Robust economic Model Predictive Control using stochastic information, Automatica 74 (2016) 151–161. doi:10.1016/j.automatica.2016.08.008.

- [28] R. Jing, X. Zeng, Predictive Optimal Control with Data-Based Disturbance Scenario Tree Approximation, in: 2021 American Control Conference (ACC), IEEE, New Orleans, LA, USA, 2021, pp. 992–997. doi:10.23919/ACC50511.2021.9483341.

- [29] U. D. of Transportation Federal Highway Administration, Next generation simulation (NGSIM) vehicle trajectories and supporting data, provided by ITS DataHub through Data.transportation.gov. Accessed 2023-02-01 from http://doi.org/10.21949/1504477” (2016). doi:http://doi.org/10.21949/1504477.

- [30] X. Zeng, J. Wang, Optimizing the energy management strategy for plug-in hybrid electric vehicles with multiple frequent routes, IEEE Transactions on Control Systems Technology 27 (1) (2019) 394–400. doi:10.1109/tcst.2017.2768042.