DoNet: Deep De-overlapping Network for Cytology Instance Segmentation

Abstract

Cell instance segmentation in cytology images is of significant importance for biological analysis and cancer screening, yet it remains challenging due to 1) extensive overlapping translucent cell clusters that cause ambiguous boundaries, and 2) the confusion of mimics and debris with nuclei. In this work, we propose a De-overlapping Network (DoNet) based on a decompose-and-recombine strategy. A Dual-path Region Segmentation Module (DRM) explicitly decomposes the cell clusters into intersection and complement regions, followed by a Semantic Consistency-guided Recombination Module (CRM) for integration. To further introduce the containment relationship of the nucleus in the cytoplasm, we design a Mask-guided Region Proposal Strategy (MRP) that integrates the cell attention maps for inner-cell instance prediction. We validate the proposed approach on the ISBI2014 and CPS datasets. Experiments show that DoNet outperforms other state-of-the-art (SOTA) cell instance segmentation methods. The code is available at https://github.com/DeepDoNet/DoNet.

1 Introduction

Cytology images are essential for cancer screening and early diagnosis through the qualitative and quantitative identification of cellular morphology, nuclear size, nuclear-cytoplasmic ratio, and other cytological features [12, 25, 15]. However, visually examining tens of thousands of cells under the microscope is inherently tedious and suffers from inter- and intra-observer variability. Computational techniques enable efficient and accurate characterization of cells from cytology images [12, 16]. Among them, cell segmentation is a fundamental and widely studied task, since cell-level identification is a prerequisite for further assessment and analysis [23, 3].

Deep learning (DL) methods have shown promising results for cell and nucleus segmentation in histopathology images [14, 5, 17, 6]. However, cytology segmentation remains challenging for two reasons. First, cells in a cytology image tend to cluster, leading to overlap. The translucent cytoplasm of neighboring cells (see Figure 1) overlaps under low-contrast staining, resulting in ambiguous cellular boundary predictions. This phenomenon is particularly evident in cervical cell images. Second, hard mimics are widespread in the background, along with technical artefacts such as bubbles, which can mislead instance segmentation models [4]. In cervical cell images, for example, widespread white blood cells and mucus stains lead to false nuclei predictions. To address these challenges, several works [24, 33] adopt a segment-then-refine paradigm, while others [40, 39] use detection-based frameworks, e.g., Mask R-CNN [11]. However, these methods do not explicitly model the interaction between the intersection and complement sub-regions within a translucent cell cluster, which limits their understanding of cross-region relationships.

Amodal instance segmentation tackles the occlusion problem by inferring the integral object from its partially visible region [22]. Inspired by the fact that humans can infer the occluded region of an object despite ambiguity, these methods learn the integral object mask (amodal mask) for better occlusion reasoning [9, 37], for example by synthesizing occluded data-label pairs and aggregating global information to enhance perception. Compared with natural scenes, cell instances in cytology images are mostly semi-transparent. Therefore, an occlusion (overlapping) region exists in both the occluding and the occluded instances. However, treating semi-transparent overlapping regions as general occlusion regions is not optimal, since they differ in appearance from non-overlapping regions and can in fact provide richer shape information than general occlusion regions.

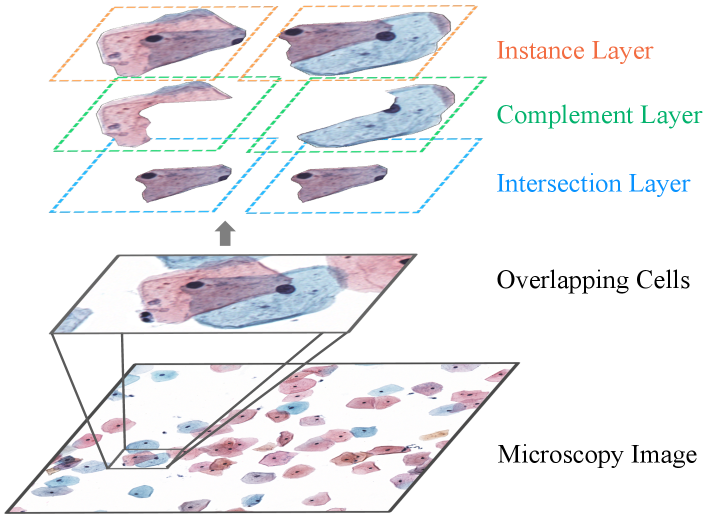

Motivated by amodal perception, we propose a decompose-and-recombine strategy for translucent cell instance segmentation, named De-overlapping Network (DoNet). Figure 1 provides the schematic diagram. For each cell cluster with more than one cellular sub-region, DoNet first implicitly learns the hidden interaction among sub-regions by predicting instance masks from clusters. It then explicitly models the components and their relationships via the intersection layer, complement layer, and instance layer to enhance its perceptual capability.

Specifically, we first adopt Mask R-CNN to obtain coarse predictions, followed by a novel Dual-path Region Segmentation Module (DRM) that combines features and coarse masks from the first stage to decompose cell clusters into intersection and complement sub-regions. Then, a Semantic Consistency-guided Recombination Module (CRM) is designed to encourage consistency between the refined instances and the merged sub-region predictions. Furthermore, to impose the morphological constraint that nuclei lie inside cellular regions, we propose a Mask-guided Region Proposal module (MRP) that encourages the model to focus on intra-cellular areas during nuclei segmentation.

The overall contributions are summarized as follows:

- A novel de-overlapping network for cell instance segmentation with a decompose-and-recombine strategy, which decomposes cell regions with the DRM and implicitly and explicitly models the semantic relationship among intersection, complement, and instance (cell) components via the CRM. These designs equip the network with enhanced perceptual capability in overlapping cellular sub-regions.

- A mask-guided region proposal module (MRP) that leverages the cytoplasm attention map for intra-cellular nuclei refinement. It imposes the biological prior of cellular instances on the module, effectively mitigating the influence of mimics widespread in the background.

2 Related Work

Cytology Instance Segmentation: Challenges such as cell clustering, hard mimics, and semi-transparent overlapping cytoplasm make it difficult to segment cellular instances (e.g., cells, nuclei, cytoplasm) in cytology images. These challenges have inspired numerous insightful works, especially after the ISBI2014 challenge [33]. Two mainstream approaches have been proposed for overlapping cell segmentation: the segment-then-refine stream and the end-to-end training stream.

Early approaches to this challenge primarily combine pixel-level segmentation models with post-processing techniques, such as the seeded watershed algorithm [19], random walk [38], conditional random fields [35], and star-convex parameterization [34]. To further improve segmentation performance, other methods introduce morphological priors. Song et al. design a dynamic multi-template deformation model that leverages case-specific shape constraints to guide the inference of overlapping cell boundaries [30]. Tareef et al. refine cytoplasm boundaries via a learnable shape prior, which is dynamically modeled as a fusion of shape templates [32].

Moving away from complex post-processing, many later works turn to end-to-end training, typically with Mask R-CNN as the baseline. The first attempt in this direction was [29], a model for nuclear instance segmentation in liquid-based cytology smears. Based on the appearance similarity between different cells, Zhou et al. propose IRNet to exploit instance relations for overlapping cervical cell segmentation [40]. To leverage unlabeled data, Zhou et al. further propose MMT-PSM, a mean-teacher-based semi-supervised learning scheme [39].

The above two streams have achieved notable performance improvements, yet they still lack sufficient perceptual capability for overlapping regions, leading to sub-optimal results such as ambiguous cell boundaries. In this study, we propose a de-overlapping network, DoNet, to implicitly and explicitly model the interaction between the integral instance and its sub-regions.

Occluded Instance Segmentation: Instance segmentation, a fundamental task in computer vision, infers a bounding box and a segmentation mask for each object instance. Most notable approaches follow the detect-then-segment paradigm, such as Mask R-CNN [11] and its variants [13, 2]. However, these approaches cannot handle occlusion well, which is common in applications such as robotic manipulation, scene parsing, and autonomous driving [1, 10]. Because of inconsistencies within a Region of Interest (RoI), these models have limited perceptual capability, leading to ambiguous segmentation boundaries. To tackle this issue, occluded instance segmentation aims to leverage visible regions to accurately perceive the entirety of an occluded instance. The capability of inferring and reasoning about occluded objects is termed amodal perception [9]; hence, occluded instance segmentation is also called amodal instance segmentation.

Research on amodal instance segmentation started with [22], which posed this challenging task and gave a synthesis-based solution. That work also established the first amodal instance segmentation dataset, COCOA, based on the COCO dataset. Since then, several notable solutions have achieved state-of-the-art performance on this task. Earlier approaches first leverage visible instance segmentation and then infer the amodal mask [9, 1]. For example, Occlusion R-CNN (ORCNN) extends Mask R-CNN with two mask heads, a visible mask head and an amodal mask head, which directly predict the visible and amodal masks; the occluded (invisible) mask is then obtained by subtracting the visible mask from the amodal mask [9]. Qi et al. [28] perform amodal instance segmentation with multi-level coding and establish a large-scale amodal instance segmentation dataset. Xiao et al. [37] leverage shape prior knowledge to infer amodal masks using a memory codebook. Other models, such as BCNet [18], abandon the visible-instance-based formulation and directly model the relationship between the occluder and the occludee. To address the lack of amodal mask ground truth, ASBU [26] introduces a weakly supervised amodal segmenter that generates pseudo ground truth via boundary uncertainty estimation.

Essentially, the above occluded instance segmentation methods aim to enhance amodal perception and reasoning, which inspires us to explore the interaction between instance sub-regions and the integral instance. Whereas amodal segmentation focuses on inferring invisible regions from partially visible ones, our focus is to resolve the inconsistency between the intersection and complement regions.

3 Methodology

3.1 Overview

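Problem formulation: Following the standard setting of cell instance segmentation, we are given a dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$ of images $x_i$ and corresponding annotations $y_i = \{(b_{i,j}, c_{i,j}, M_{i,j})\}_{j=1}^{n_i}$, each of which contains a bounding box $b_{i,j}$, an object category $c_{i,j}$, and an instance mask $M_{i,j}$, where $n_i$ denotes the number of instances in the $i$-th image. Here, we focus on segmenting the nucleus and cytoplasm of each cell instance. For each cell cluster, based on the spatial relationship among the cells, we decompose the instance mask $M_{i,j}$ into the intersection region $M^{\mathrm{int}}_{i,j} = M_{i,j} \wedge \bigvee_{k \neq j} M_{i,k}$ and the complement region $M^{\mathrm{com}}_{i,j} = M_{i,j} \wedge \neg \bigvee_{k \neq j} M_{i,k}$ via logical operations, where $k$ ranges over the other cells in the same cluster (see Figure 1); these sub-regions are used to model the relationship among components in DoNet.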
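The logical decomposition into intersection and complement regions can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' code: we assume the cytoplasm masks of one image are stacked into a boolean array of shape (n, H, W), and the function name `decompose_cluster` is hypothetical. A pixel of cell j belongs to its intersection region when at least one other cell also covers it.

```python
import numpy as np

def decompose_cluster(masks: np.ndarray):
    """Split each instance mask into intersection and complement regions.

    masks: boolean array (n, H, W), one cytoplasm mask per cell
    (hypothetical layout chosen for this sketch).
    Returns (intersection, complement), both boolean (n, H, W).
    """
    masks = masks.astype(bool)
    # Number of instances covering each pixel.
    coverage = masks.sum(axis=0)
    # Since cell j itself contributes 1, coverage >= 2 on a pixel of cell j
    # means some other cell k != j also covers it.
    overlapped = coverage >= 2
    intersection = masks & overlapped[None]
    complement = masks & ~overlapped[None]
    return intersection, complement

# Two 1x4 "cells" that share column 2.
a = np.zeros((2, 1, 4), dtype=bool)
a[0, 0, :3] = True
a[1, 0, 2:] = True
inter, comp = decompose_cluster(a)
print(inter[0, 0].astype(int))  # [0 0 1 0]
print(comp[0, 0].astype(int))   # [1 1 0 0]
```

By construction the two regions are disjoint and their union recovers each original mask.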

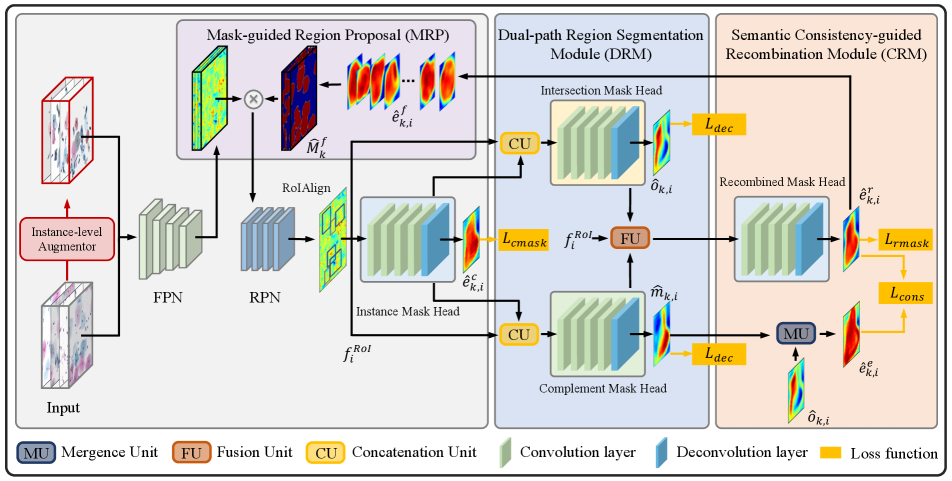

Workflow: The flowchart of DoNet is shown in Figure 2. It takes Mask R-CNN [11] as the base model, followed by a Dual-path Region Segmentation Module (DRM) that takes instance features as input and predicts the intersection and complement regions of the cytoplasm via the intersection and complement layers (Section 3.2). After cell clusters are decomposed according to their spatial relationships, a Consistency-guided Recombination Module (CRM) takes instance features from DRM and the RoIAlign layer to generate recombined masks for consistency regularization (Section 3.2). The recombined cytoplasm prediction is then used as prior knowledge for intra-cellular object prediction through the Mask-guided Region Proposal (MRP) (Section 3.3). We present the details of each component in the following sections.

Coarse Mask Segmentation: Mask R-CNN has shown competitive performance in instance segmentation [11, 13, 9], so we adopt it in DoNet for coarse mask segmentation. Mask R-CNN consists of two stages. The first stage uses a Feature Pyramid Network (FPN) for feature extraction and a Region Proposal Network (RPN) to generate candidate object bounding boxes. The second stage extracts Region-of-Interest (RoI) features via the RoIAlign layer, then predicts object classes and bounding boxes with the detection head and semantic masks with the Instance Mask Head ($\mathcal{H}_{\mathrm{ins}}$). Because of limited perception capability in overlapping regions, the mask predicted by $\mathcal{H}_{\mathrm{ins}}$ may contain ambiguous boundaries. We therefore denote it $\hat{M}^{\mathrm{ins}}$ and treat it as the coarse mask, which provides information for sub-region decomposition in DRM and suppresses interference from background mimics. Following the standard losses in [11], the multi-task loss for coarse mask segmentation is

$$\mathcal{L}_{\mathrm{coarse}} = \mathcal{L}_{\mathrm{reg}} + \mathcal{L}_{\mathrm{cls}} + \mathcal{L}_{\mathrm{seg}}, \tag{1}$$

where $\mathcal{L}_{\mathrm{reg}}$ denotes the smooth-L1 loss for bounding box regression, $\mathcal{L}_{\mathrm{cls}}$ denotes the cross-entropy (CE) loss for classification, and $\mathcal{L}_{\mathrm{seg}}$ denotes the pixel-wise CE loss for segmentation.
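The three terms of Eq. (1) can be illustrated with a small NumPy sketch over a batch of RoIs. The choices here are our assumptions: a smooth-L1 threshold `beta=1.0`, and per-pixel sigmoid binary cross-entropy (the form used by the Mask R-CNN mask head) as the pixel-wise CE. Detectron2's actual implementation may differ in normalization and in how foreground RoIs are selected.

```python
import numpy as np

def smooth_l1(pred, target, beta=1.0):
    # Smooth-L1 box regression loss, averaged over all coordinates.
    diff = np.abs(pred - target)
    loss = np.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)
    return loss.mean()

def softmax_ce(logits, labels):
    # Cross-entropy over class logits (R, K) with integer labels (R,).
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

def pixel_bce(mask_logits, mask_targets):
    # Per-pixel sigmoid BCE in its numerically stable form:
    # max(x, 0) - x * y + log(1 + exp(-|x|)).
    x, y = mask_logits, mask_targets
    return (np.maximum(x, 0) - x * y + np.log1p(np.exp(-np.abs(x)))).mean()

def coarse_loss(box_pred, box_gt, cls_logits, cls_gt, mask_logits, mask_gt):
    # L_coarse = L_reg + L_cls + L_seg, an unweighted sum as in Eq. (1).
    return (smooth_l1(box_pred, box_gt)
            + softmax_ce(cls_logits, cls_gt)
            + pixel_bce(mask_logits, mask_gt))
```

With all-zero predictions, a two-class problem, and all-foreground masks, the loss reduces to 0 + log 2 + log 2.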

3.2 Decompose-and-recombine Strategy

Previous amodal perception solutions infer the integral structure of occluded instances from visible regions [9, 37]. However, they ignore information from overlapping regions, which tends to be sub-optimal when translucent objects are superposed in complex ways. To strengthen the model's perception of overlapping translucent regions, we design the decompose-and-recombine strategy.

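Dual-path Region Segmentation Module (DRM): As shown in Figure 2, DRM consists of an Intersection Mask Head ($\mathcal{H}_{\mathrm{int}}$) and a Complement Mask Head ($\mathcal{H}_{\mathrm{com}}$) with the same architecture. Let $F^{\mathrm{ins}}$ denote the rich semantic feature before the coarse mask prediction in the Instance Mask Head. Both $\mathcal{H}_{\mathrm{int}}$ and $\mathcal{H}_{\mathrm{com}}$ take the concatenation of $F^{\mathrm{ins}}$ and the coarse mask $\hat{M}^{\mathrm{ins}}$ as input to predict the intersection and complement regions in clusters. See the supplementary material for details of the Concatenation Unit. Specifically, each head consists of 4 convolutional layers that generate RoI feature maps, followed by one deconvolutional layer that upsamples them into the semantic mask. We apply the pixel-wise CE loss $\mathcal{L}_{\mathrm{CE}}$ to both heads as an explicit constraint for decomposition:

$$\mathcal{L}_{\mathrm{dec}} = \mathcal{L}_{\mathrm{CE}}(\hat{M}^{\mathrm{int}}, M^{\mathrm{int}}) + \mathcal{L}_{\mathrm{CE}}(\hat{M}^{\mathrm{com}}, M^{\mathrm{com}}), \tag{2}$$

where $\hat{M}^{\mathrm{int}}$ and $\hat{M}^{\mathrm{com}}$ are the predicted intersection and complement masks, and $M^{\mathrm{int}}$ and $M^{\mathrm{com}}$ are the corresponding ground truths.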
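The DRM input and the decomposition loss of Eq. (2) can be sketched as below. The Concatenation Unit is only described in the supplementary material, so `concat_unit` is a hypothetical stand-in that appends the sigmoid of the coarse mask logits as one extra channel to the RoI feature. The convolutional heads themselves are omitted, and the tensor sizes are toy values, not the paper's.

```python
import numpy as np

def bce_with_logits(x, y):
    # Numerically stable per-pixel sigmoid binary cross-entropy, averaged.
    return (np.maximum(x, 0) - x * y + np.log1p(np.exp(-np.abs(x)))).mean()

def concat_unit(roi_feat, coarse_logits):
    """Hypothetical Concatenation Unit.

    roi_feat: (C, S, S) feature before the coarse mask prediction;
    coarse_logits: (S, S) coarse mask logits.
    Returns a (C + 1, S, S) input shared by the intersection and
    complement heads.
    """
    coarse_prob = 1.0 / (1.0 + np.exp(-coarse_logits))
    return np.concatenate([roi_feat, coarse_prob[None]], axis=0)

def decomposition_loss(int_logits, com_logits, int_gt, com_gt):
    # L_dec: pixel-wise CE on the intersection head plus the complement head.
    return bce_with_logits(int_logits, int_gt) + bce_with_logits(com_logits, com_gt)

# Toy sizes only; the real channel count and RoI resolution are not assumed.
rng = np.random.default_rng(0)
x = concat_unit(rng.random((8, 14, 14)), np.zeros((14, 14)))
print(x.shape)  # (9, 14, 14)
```

Feeding the coarse mask as a probability rather than raw logits is a design choice of this sketch; either variant is plausible given the text.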

Semantic Consistency-guided Recombination Module (CRM): To further enhance the perception capability for overlapping instances, CRM is designed to encourage DoNet to perceive integral instances. Specifically, let $F^{\mathrm{int}}$ and $F^{\mathrm{com}}$ denote the features before the last layer of the intersection mask head and the complement mask head. These rich semantic features of the overlapping and non-overlapping regions are treated as residual information for the complex areas; they are fused with the RoI feature $F^{\mathrm{roi}}$ and then fed into the recombined mask head. See the supplementary material for details of the Fusion Unit. Note that we reuse the Instance Mask Head ($\mathcal{H}_{\mathrm{ins}}$) here to predict the integral instance. This combination enables CRM to leverage contextual information of overlapping instances, improving perception capability. The refined mask $\hat{M}^{\mathrm{rec}}$ from CRM is optimized with the pixel-wise CE segmentation loss $\mathcal{L}_{\mathrm{CE}}$ against the ground-truth instance mask $M$:

$$\mathcal{L}_{\mathrm{rec}} = \mathcal{L}_{\mathrm{CE}}(\hat{M}^{\mathrm{rec}}, M). \tag{3}$$

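To further enhance the feature representation of the relationships among components, we add a semantic consistency regularization between the recombined prediction $\hat{M}^{\mathrm{rec}}$ and the merged intersection and complement predictions $\hat{M}^{\mathrm{int}}$ and $\hat{M}^{\mathrm{com}}$:

$$\mathcal{L}_{\mathrm{cons}} = \mathcal{L}_{\mathrm{CE}}\big(\hat{M}^{\mathrm{rec}}, \Phi(\hat{M}^{\mathrm{int}}, \hat{M}^{\mathrm{com}})\big), \tag{4}$$

where $\mathcal{L}_{\mathrm{CE}}$ is the pixel-wise CE loss and $\Phi(\cdot,\cdot)$ represents the merging of the intersection and complement regions in the Mergence Unit, $\Phi(\hat{M}^{\mathrm{int}}, \hat{M}^{\mathrm{com}}) = \sigma(\hat{M}^{\mathrm{int}}) \oplus \sigma(\hat{M}^{\mathrm{com}})$. The two sub-region masks are first normalized with the Sigmoid function $\sigma$; we then compute their mask exclusive-OR ($\oplus$) to merge them while suppressing redundant pixels predicted by both heads.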
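The Mergence Unit is described as a Sigmoid followed by a mask exclusive-OR, but the text does not say how XOR is evaluated on soft masks. The sketch below assumes the common probabilistic relaxation p + q - 2pq, which equals boolean XOR on {0, 1} inputs and down-weights pixels claimed by both heads. We also assume the consistency term is a pixel-wise BCE that treats the merged map as a soft target; whether gradients flow into that target is not specified. All function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def merge_subregions(int_logits, com_logits):
    # Normalize both sub-region predictions to [0, 1].
    p_int, p_com = sigmoid(int_logits), sigmoid(com_logits)
    # Assumed soft exclusive-OR: high where exactly one head fires,
    # low where both (redundant) or neither fire.
    return p_int + p_com - 2.0 * p_int * p_com

def consistency_loss(rec_logits, int_logits, com_logits):
    # Assumed form: BCE between the recombined mask logits and the merged
    # sub-region map used as a soft target.
    target = merge_subregions(int_logits, com_logits)
    x = rec_logits
    return (np.maximum(x, 0) - x * target + np.log1p(np.exp(-np.abs(x)))).mean()

i = np.array([10.0, -10.0, 10.0])   # intersection head: pixels 0 and 2
c = np.array([-10.0, 10.0, 10.0])   # complement head: pixels 1 and 2
print(np.round(merge_subregions(i, c), 3))  # [1. 1. 0.]
```

Pixel 2, predicted by both heads, is suppressed in the merged map, which matches the stated purpose of the exclusive-OR.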

3.3 Mask-guided Region Proposal

Cytology images inevitably contain abundant cellular debris and non-target objects, e.g., white blood cells and mucus. These objects often look similar to nuclei, being small, round, and stained dark purple, which makes identification harder for the model. To avoid this interference, we propose a Mask-guided Region Proposal module (MRP) that encourages the model to generate nuclei proposals in intra-cellular regions.

For each image, we aggregate all the recombined instance predictions from CRM into a semantic mask $A$. The raw predictions are first mapped back to the image according to the bounding boxes predicted by the detection head and summed, followed by a Sigmoid function that normalizes them into probabilities. $A$ is then used as an attention score to re-weight the original FPN features $F^{\mathrm{fpn}}$ via element-wise multiplication:

$$\tilde{F}^{\mathrm{fpn}} = A \odot F^{\mathrm{fpn}}, \tag{5}$$

where $\tilde{F}^{\mathrm{fpn}}$ denotes the re-weighted features used for nuclei proposal prediction in MRP. Thus, extracellular pixels with a low probability of being cytoplasm are suppressed, which reduces false positives from background objects (e.g., white blood cells, mucus, and others). In addition, MRP builds an information interaction between different stages, which further strengthens feature representation.
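The MRP steps (paste RoI predictions back by their boxes, sum, apply a Sigmoid, multiply into the FPN features) can be sketched in NumPy. The text does not state the resizing method, the value given to pixels covered by no box, or how the attention map is matched to each FPN level's resolution, so nearest-neighbour resizing, the hypothetical `bg_logit` parameter, and level names such as `"p2"` are assumptions of this sketch.

```python
import numpy as np

def paste_and_sum(roi_logits, boxes, image_hw, bg_logit=0.0):
    """Map RoI-level mask logits back to image space and sum overlapping ones.

    roi_logits: list of (h_r, w_r) arrays; boxes: integer (x0, y0, x1, y1)
    per RoI, assumed to lie inside the image. bg_logit is a hypothetical
    value assigned to pixels covered by no box.
    """
    H, W = image_hw
    canvas = np.zeros((H, W))
    covered = np.zeros((H, W), dtype=bool)
    for logits, (x0, y0, x1, y1) in zip(roi_logits, boxes):
        bh, bw = y1 - y0, x1 - x0
        # Nearest-neighbour resize of the RoI mask to the box size.
        rows = np.arange(bh) * logits.shape[0] // bh
        cols = np.arange(bw) * logits.shape[1] // bw
        canvas[y0:y1, x0:x1] += logits[np.ix_(rows, cols)]
        covered[y0:y1, x0:x1] = True
    canvas[~covered] = bg_logit
    return canvas

def mrp_reweight(fpn_feats, attention):
    """Element-wise re-weighting of every FPN level by the attention map.

    fpn_feats: dict level name -> (C, h, w); attention: (H, W) in [0, 1],
    downsampled to each level by nearest-neighbour sampling (assumption).
    """
    H, W = attention.shape
    out = {}
    for name, feat in fpn_feats.items():
        _, h, w = feat.shape
        rows = np.arange(h) * H // h
        cols = np.arange(w) * W // w
        out[name] = feat * attention[np.ix_(rows, cols)][None]
    return out

# One confident cytoplasm RoI in the top-left quarter of an 8x8 image.
logits = paste_and_sum([np.full((4, 4), 6.0)], [(0, 0, 4, 4)], (8, 8), bg_logit=-6.0)
A = 1.0 / (1.0 + np.exp(-logits))  # Sigmoid -> probabilities
out = mrp_reweight({"p2": np.ones((2, 4, 4))}, A)
```

Features inside the predicted cell keep nearly full magnitude, while those outside are scaled towards zero before the RPN sees them.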

3.4 End-to-end Learning

Extended dataset with synthetic clusters: The semi-transparency of the cytoplasm alleviates the effect of heavy overlap, allowing cytologists to delineate the contours of cellular instances based on expert knowledge. However, the difficulty of labeling and unavoidable label noise limit the availability of large-scale annotated datasets. To tackle this problem, we propose an instance-level data augmentor for overlapping cells, which generates large-scale synthetic cell clusters with controllable overlapping ratios and transparency from the annotated cellular instances. This synthetic dataset further improves generalization by providing more diverse data from which to implicitly learn the concept of an instance and its components. See the supplementary material for details of the synthetic pipeline. Unless otherwise specified, we do not use this synthetic dataset in the following comparisons.
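The synthetic pipeline itself is described only in the supplementary material. The sketch below is a hypothetical illustration of the two controls the text names. Transparency is modeled by simple alpha compositing of a cell crop. The overlapping ratio is defined, as our own assumption, as the fraction of the pasted cell that lands on existing cells, and it is controlled by choosing among candidate positions. All names are illustrative.

```python
import numpy as np

def overlap_ratio(existing_mask, placed_mask):
    # Fraction of the pasted cell that falls on already-present cells.
    area = placed_mask.sum()
    return float((existing_mask & placed_mask).sum()) / area if area else 0.0

def choose_offset(existing_mask, cell_mask, target_ratio, candidates):
    # Pick the candidate (row, col) whose overlap ratio is closest to the target.
    H, W = existing_mask.shape
    h, w = cell_mask.shape

    def ratio_at(pos):
        placed = np.zeros((H, W), dtype=bool)
        placed[pos[0]:pos[0] + h, pos[1]:pos[1] + w] = cell_mask
        return overlap_ratio(existing_mask, placed)

    return min(candidates, key=lambda p: abs(ratio_at(p) - target_ratio))

def paste_cell(image, cell_rgb, cell_mask, top_left, alpha):
    """Alpha-blend a cell crop onto a copy of the image (translucent paste).

    alpha in (0, 1] is the hypothetical opacity of the pasted cytoplasm.
    Returns the blended image and the pasted cell's full-size mask.
    """
    out = image.astype(float)  # astype returns a copy
    y, x = top_left
    h, w = cell_mask.shape
    region = out[y:y + h, x:x + w]  # view into out
    m = cell_mask[..., None].astype(float) * alpha
    region[:] = (1 - m) * region + m * cell_rgb
    full_mask = np.zeros(image.shape[:2], dtype=bool)
    full_mask[y:y + h, x:x + w] = cell_mask
    return out, full_mask

existing = np.zeros((6, 6), dtype=bool)
existing[0:3, 0:3] = True
cell = np.ones((3, 3), dtype=bool)
pos = choose_offset(existing, cell, 0.6, [(0, 0), (0, 1), (3, 3)])
print(pos)  # (0, 1): 6 of 9 pixels overlap
```

A multiplicative (absorbance-style) blend may model stained translucent cytoplasm more faithfully; alpha compositing is used here only for simplicity.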

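Overall Loss Function: The proposed cell instance segmentation framework is trained end-to-end in a supervised manner. The overall objective is

$$\mathcal{L} = \mathcal{L}_{\mathrm{coarse}} + \lambda_1 \mathcal{L}_{\mathrm{dec}} + \lambda_2 \mathcal{L}_{\mathrm{rec}} + \lambda_3 \mathcal{L}_{\mathrm{cons}}, \tag{6}$$

where $\mathcal{L}_{\mathrm{coarse}}$ denotes the losses for RoI extraction and coarse mask prediction, $\mathcal{L}_{\mathrm{dec}}$ the decomposition loss for intersection and complement region segmentation, $\mathcal{L}_{\mathrm{rec}}$ the segmentation loss for refined masks, and $\mathcal{L}_{\mathrm{cons}}$ the semantic consistency loss between the integral instance and its sub-regions. $\lambda_1$, $\lambda_2$, and $\lambda_3$ are trade-off parameters controlling the importance of each component.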

4 Experiments

4.1 Experimental Setup

We evaluate DoNet on two cytology image datasets for overlapping cell instance segmentation:

ISBI2014 [24]: This widely used dataset comes from the Overlapping Cervical Cytology Image Segmentation Challenge (https://cs.adelaide.edu.au/~carneiro/isbi14_challenge/index.html) and consists of 8 extended depth-of-focus (EDF) real cervical cytology images and corresponding synthetic images. It contains high-quality pixel-level annotations for both nuclei and cytoplasm. Following the challenge setting [24], we use 45, 90, and 810 images for training, validation, and testing, respectively, to evaluate DoNet in a supervised setting.

CPS [39]: This liquid-based cytology dataset contains 137 labeled images with a total of 4439 cytoplasm and 4789 nuclei annotations. We conduct 3-fold cross-validation on this dataset.

Evaluation metrics: To measure the overall performance of DoNet, we use four evaluation metrics commonly used in instance segmentation: aggregated Jaccard index (AJI), average Dice coefficient (Dice), F1-score (F1) [20], and mean Average Precision (mAP) [11]. To compare our results on ISBI2014 with previous studies [24], we further adopt the challenge metrics: Dice, object-based false negative rate ($\mathrm{FNR}_o$), and pixel-based true positive rate ($\mathrm{TPR}_p$).
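Of these metrics, AJI is the least standard, so a minimal NumPy sketch may help. It follows the usual description of AJI from Kumar et al. [20]: each ground-truth instance is matched to the predicted instance with the highest IoU, matched intersections and unions are accumulated, and every predicted instance never selected as a match is added to the union. Instances are passed as stacks of boolean masks rather than a label map, so overlapping cytoplasm can be represented. Tie-breaking and the handling of empty inputs are our own choices, and the function name is hypothetical.

```python
import numpy as np

def aji(gt_masks, pred_masks):
    """Aggregated Jaccard Index for boolean stacks (n_g, H, W) and (n_p, H, W)."""
    inter_sum = union_sum = 0
    used = set()
    for g in gt_masks:
        # (best IoU, matched prediction index, intersection, union)
        best = (0.0, None, 0, int(g.sum()))
        for j, p in enumerate(pred_masks):
            inter = int((g & p).sum())
            if inter == 0:
                continue
            union = int((g | p).sum())
            if inter / union > best[0]:
                best = (inter / union, j, inter, union)
        _, j, inter, union = best
        inter_sum += inter
        union_sum += union  # an unmatched GT contributes only its own area
        if j is not None:
            used.add(j)
    # Predictions never chosen as a best match count as pure false positives.
    for j, p in enumerate(pred_masks):
        if j not in used:
            union_sum += int(p.sum())
    return inter_sum / union_sum if union_sum else 1.0
```

For example, one perfectly segmented cell plus one spurious prediction of equal area gives AJI = 4 / 8 = 0.5, showing how false positives such as background mimics lower the score.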

| Methods | ISBI2014 mAP | ISBI2014 Dice | ISBI2014 F1 | ISBI2014 AJI | CPS mAP | CPS Dice | CPS F1 | CPS AJI |
|---|---|---|---|---|---|---|---|---|
| Mask R-CNN [11] | 59.09 | 91.15 | 92.54 | 77.07 | 48.28 ± 3.10 | 89.32 ± 0.50 | 85.07 ± 2.01 | 69.20 ± 2.27 |
| Cascade R-CNN [2] | 62.45 | 91.29 | 92.51 | 77.91 | 47.87 ± 3.27 | 89.24 ± 0.44 | 83.33 ± 1.65 | 68.86 ± 3.55 |
| Mask Scoring R-CNN [13] | 63.56 | 91.28 | 91.87 | 75.14 | 48.38 ± 3.13 | 89.39 ± 0.24 | 82.98 ± 1.86 | 67.45 ± 2.45 |
| HTC [8] | 59.62 | 91.39 | 88.08 | 75.00 | 47.60 ± 3.56 | 89.08 ± 0.51 | 81.30 ± 2.56 | 66.35 ± 2.84 |
| Occlusion R-CNN [9] | 62.35 | 91.75 | 93.18 | 78.64 | 48.14 ± 2.84 | 89.08 ± 0.28 | 85.69 ± 2.28 | 69.51 ± 2.45 |
| Xiao et al. [37] | 57.34 | 91.70 | 92.75 | 78.29 | 48.53 ± 2.85 | 89.29 ± 0.24 | 85.46 ± 2.60 | 69.37 ± 2.88 |
| DoNet | 64.02 | 92.13 | 93.23 | 79.05 | 49.43 ± 3.83 | 89.54 ± 0.25 | 85.51 ± 2.33 | 70.08 ± 2.84 |
| DoNet w/ Aug. | - | - | - | - | 49.65 ± 3.52 | 89.50 ± 0.38 | 86.30 ± 2.01 | 70.56 ± 2.34 |

Implementation details: We use Mask R-CNN [11] as implemented in Detectron2 [36] as the baseline model, with a ResNet-50-based FPN backbone in all experiments. During training, we adopt SGD with a momentum of 0.9 as the optimizer. The initial learning rate is set to 0.001 with a linear warm-up over the first 1k iterations. We train the network for 60k iterations, decreasing the learning rate by a factor of 0.1 after 50k and 55k iterations.
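In Detectron2, settings like these are normally expressed through solver options such as `SOLVER.BASE_LR`, `SOLVER.MOMENTUM`, `SOLVER.WARMUP_ITERS`, `SOLVER.MAX_ITER`, `SOLVER.STEPS`, and `SOLVER.GAMMA`. The plain-Python sketch below reproduces the resulting step schedule so it can be inspected without the library. The warm-up starting multiplier is not given in the text; 1e-3 is an assumed value (to our knowledge, Detectron2's default `SOLVER.WARMUP_FACTOR` is 1/1000). The function name is hypothetical.

```python
import bisect

def learning_rate(it, base_lr=1e-3, warmup_iters=1000, warmup_factor=1e-3,
                  steps=(50_000, 55_000), gamma=0.1):
    """Step learning-rate schedule with linear warm-up.

    warmup_factor (the multiplier at iteration 0) is an assumed value.
    """
    # Each milestone that has been reached multiplies the LR by gamma.
    factor = gamma ** bisect.bisect_right(steps, it)
    if it < warmup_iters:
        # Linear interpolation from warmup_factor to 1 over the warm-up.
        alpha = it / warmup_iters
        factor *= warmup_factor * (1 - alpha) + alpha
    return base_lr * factor

for it in (0, 500, 1_000, 50_000, 55_000):
    print(it, learning_rate(it))
```

The learning rate ramps from 1e-6 to 1e-3 over the first 1k iterations, stays there until 50k, and then drops to 1e-4 and finally 1e-5 at 55k.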

4.2 Results

We quantitatively compare the cell instance segmentation results on ISBI2014 and CPS in Table 1 with state-of-the-art methods for general instance segmentation (Mask R-CNN [11], Cascade R-CNN [2], Mask Scoring R-CNN [13], HTC [8]) and amodal instance segmentation (Occlusion R-CNN [9], Xiao et al. [37]). Note that the amodal and general instance segmentation methods perform differently on the two datasets owing to their varying degrees of overlap. Our method achieves the highest scores on all metrics except F1 on CPS, where Occlusion R-CNN is marginally higher (85.69 vs. 85.51). Specifically, DoNet gains 1.67% in mAP and 0.41% in AJI over Occlusion R-CNN [9] on ISBI2014, and 0.90% in mAP and 0.71% in AJI over Xiao et al. [37] on CPS. We also evaluate $\mathrm{FNR}_o$ and $\mathrm{TPR}_p$ for DoNet to compare against the winners of the ISBI2014 challenge [27, 33] and their follow-up works [21, 31], most of which follow the segment-then-refine paradigm (see Table 2).

Furthermore, introducing the synthetic clusters as instance-level augmentation brings DoNet further improvements of 0.22% in mAP and 0.48% in AJI on the CPS dataset. We attribute this mainly to the synthetic overlapping cells enhancing the model's occlusion reasoning capability, which is consistent with the conclusions in [22].

4.3 Ablation Study

Effects of Network Components: We perform ablation studies to investigate the contribution of each component of DoNet; the results are shown in Table 3. Adding DRM to explicitly decompose integral instances into intersection and complement sub-regions increases the average mAP of cytoplasm and nuclei on ISBI2014 by 1.92% (from 59.09 to 61.01). However, adding DRM alone, without fusing structural and morphological information, may mislead the model, as observed on the more complex CPS dataset, where mAP drops from 48.28 to 48.03. To alleviate this problem, DoNet adopts the decompose-and-recombine strategy by adding CRM after DRM. This brings total mAP improvements of 4.34% and 0.84% on ISBI2014 and CPS, respectively, by strengthening the model's perception of overlapping regions while preserving morphological information.

| Base | DRM | CRM | MRP | ISBI2014 mAP | ISBI2014 Dice | ISBI2014 F1 | ISBI2014 AJI | CPS mAP | CPS Dice | CPS F1 | CPS AJI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ✓ | | | | 59.09 | 91.15 | 92.54 | 77.07 | 48.28 ± 3.10 | 89.32 ± 0.50 | 85.07 ± 2.01 | 69.20 ± 2.27 |
| ✓ | ✓ | | | 61.01 | 91.61 | 92.86 | 78.06 | 48.03 ± 3.48 | 89.13 ± 0.30 | 84.63 ± 2.57 | 68.56 ± 2.57 |
| ✓ | ✓ | ✓ | | 63.43 | 91.87 | 94.16 | 79.88 | 49.12 ± 3.26 | 89.47 ± 0.31 | 84.82 ± 2.73 | 69.26 ± 2.63 |
| ✓ | ✓ | ✓ | ✓ | 64.02 | 92.13 | 93.23 | 79.05 | 49.43 ± 3.83 | 89.54 ± 0.25 | 85.51 ± 2.33 | 70.08 ± 2.84 |

| Ins. head | Int. head | Com. head | FU | Cons. loss | mAP Cyt. | mAP Nuc. | mAP Avg. | Dice Cyt. | Dice Nuc. | Dice Avg. | F1 Cyt. | F1 Nuc. | F1 Avg. | AJI Cyt. | AJI Nuc. | AJI Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ✓ | | | | | 50.71 | 67.46 | 59.09 | 90.72 | 91.59 | 91.15 | 86.96 | 98.13 | 92.54 | 70.55 | 83.60 | 77.07 |
| ✓ | ✓ | | | | 54.84 | 65.94 | 60.39 | 91.56 | 91.54 | 91.55 | 87.00 | 97.68 | 92.34 | 72.07 | 82.67 | 77.37 |
| ✓ | ✓ | ✓ | | | 57.41 | 66.49 | 61.95 | 91.79 | 91.53 | 91.66 | 88.67 | 97.98 | 93.32 | 74.00 | 83.27 | 78.63 |
| ✓ | ✓ | ✓ | ✓ | | 58.40 | 67.82 | 63.11 | 92.22 | 91.74 | 91.98 | 89.21 | 98.07 | 93.64 | 74.94 | 83.70 | 79.32 |
| ✓ | ✓ | ✓ | ✓ | ✓ | 59.31 | 67.56 | 63.43 | 92.03 | 91.71 | 91.87 | 90.13 | 98.18 | 94.16 | 75.86 | 83.91 | 79.88 |

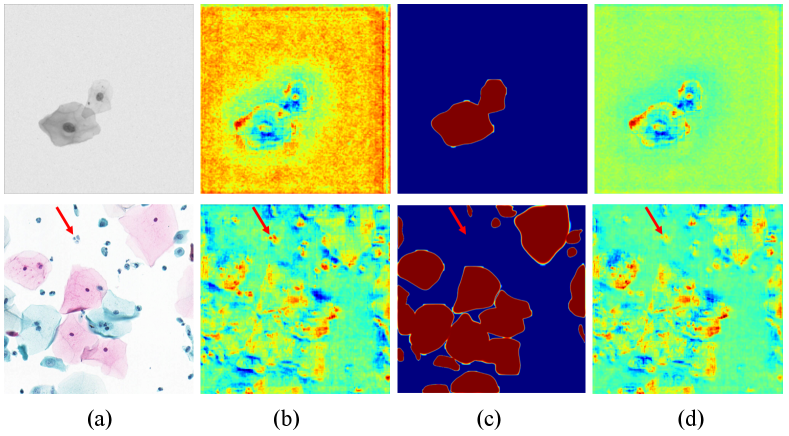

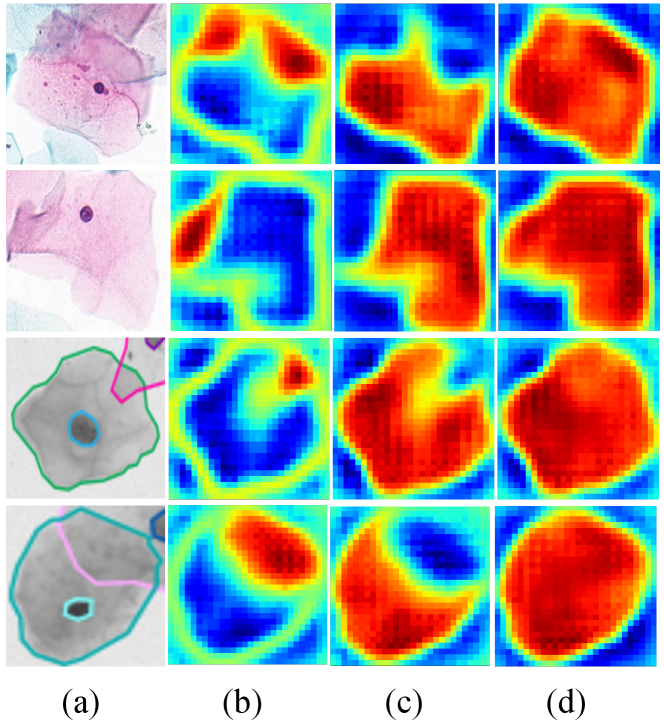

Applying MRP to mitigate the side effects of background mimics yields further mAP improvements of 0.59% and 0.31% on ISBI2014 and CPS, respectively. Figure 3 visualizes the MRP operation: background objects (e.g., mucus and karyoclasis, indicated by red arrows) that produce strong responses in the feature map are suppressed, encouraging the RPN to concentrate on cellular instances during nuclei region proposal.

Design Choice for DRM: We provide detailed comparisons on the ISBI2014 dataset to demonstrate the effectiveness of the components of DRM: 1) $\mathcal{H}_{\mathrm{ins}}$: the instance mask head, for coarse segmentation only; 2) $\mathcal{H}_{\mathrm{int}}$: the intersection mask head, for overlapping region segmentation; 3) $\mathcal{H}_{\mathrm{com}}$: the complement mask head, for non-overlapping region segmentation.

As seen in Table 4, adding the intersection mask head improves the average mAP by 1.30%, and the additional complement mask head brings a further 1.56% gain. Cytoplasm improves more markedly, with mAP rising by 6.70% (from 50.71 to 57.41), which is in line with our design intent.

Design Choice for CRM: The goal of semantic consistency regularization is to enhance the model's reasoning about overlapping regions by learning the concept of recombined instances from sub-regions. We compare the following design choices (Table 4): 1) CU + FU: integration of rich semantic features as inputs via the Concatenation Unit and the Fusion Unit; 2) $\mathcal{L}_{\mathrm{cons}}$: consistency regularization between the recombined prediction and the merged predictions of the intersection and complement sub-regions. As seen in Table 4, aggregating the rich semantic features of overlapping and non-overlapping regions via the Fusion Unit increases the average mAP on the ISBI2014 dataset by 1.16%. Adding consistency regularization further improves the cytoplasm mAP by 0.91%.

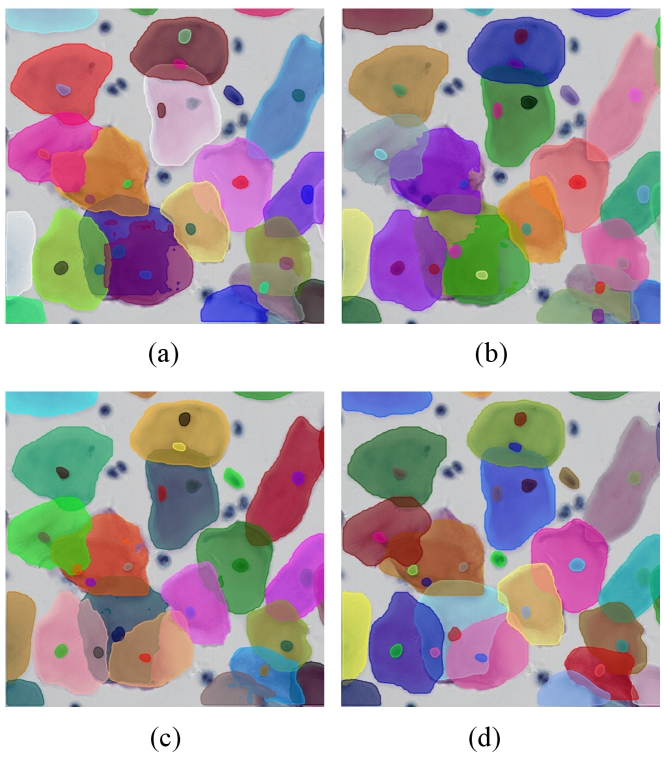

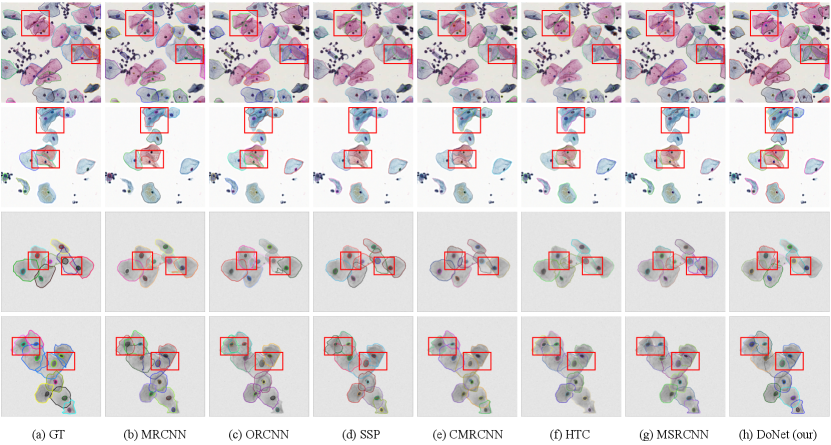

Figure 4 visualizes the results of DoNet and other typical instance segmentation methods, including standard (Mask R-CNN), multi-task (DoNet w/o CRM), and amodal (Occlusion R-CNN) instance segmentation models. The comparison shows the importance of the interaction among sub-regions introduced by CRM and the strong perceptual capability of DoNet in overlapping regions.

4.4 Qualitative Analysis and Discussion

We visualize the heatmap of the intersection region, the complement region, and the integral instance in Figure 6. The proposed method successfully identifies sub-regions based on the overlapping concept, even in low-resolution areas with high transparency.

Furthermore, we provide qualitative comparisons on the CPS (top) and ISBI2014 (bottom) datasets in Figure 5, where DoNet outperforms the other instance segmentation methods. Red rectangles mark details that highlight the main differences among these results. In the CPS results, overlapping cells with different staining (e.g., dark red and blue) show significant appearance inconsistency between cellular sub-regions. Previous methods with limited perceptual capability (e.g., Mask R-CNN [11] and Occlusion R-CNN [9]) have difficulty capturing the relationship between pixels in the intersection and complement sub-regions, leading to ambiguous segmentation contours. In contrast, DoNet effectively distinguishes different instance boundaries and better perceives the integrity of cells. The same advantage is observed on the ISBI2014 dataset, whose cellular instances have low contrast.

5 Conclusion

In this paper, we propose a de-overlapping network (DoNet) to address the overlapping challenges in cytology instance segmentation. DoNet enhances the model's perception of overlapping regions by implicitly and explicitly modeling the interaction between cellular sub-regions and the integral instance. Extensive experiments demonstrate the advantages of DoNet, suggesting its potential not only for overlapping object perception in the medical domain but also for occluded instance segmentation in general vision applications.

Acknowledgement

This work was supported by National Natural Science Foundation of China (No. 62202403) and Beijing Institute of Collaborative Innovation Program (No. BICI22EG01).

References

- [1] Seunghyeok Back, Joosoon Lee, Taewon Kim, Sangjun Noh, Raeyoung Kang, Seongho Bak, and Kyoobin Lee. Unseen object amodal instance segmentation via hierarchical occlusion modeling. In 2022 International Conference on Robotics and Automation (ICRA), pages 5085–5092. IEEE, 2022.

- [2] Zhaowei Cai and Nuno Vasconcelos. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 6154–6162, 2018.

- [3] Zhizhong Chai, Luyang Luo, Huangjing Lin, Hao Chen, Anjia Han, and Pheng-Ann Heng. Deep semi-supervised metric learning with dual alignment for cervical cancer cell detection. In 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), pages 1–5. IEEE, 2022.

- [4] Haoxuan Che, Haibo Jin, and Hao Chen. Learning robust representation for joint grading of ophthalmic diseases via adaptive curriculum and feature disentanglement. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2022: 25th International Conference, Singapore, September 18–22, 2022, Proceedings, Part III, pages 523–533. Springer, 2022.

- [5] Hao Chen, Xiaojuan Qi, Lequan Yu, Qi Dou, Jing Qin, and Pheng-Ann Heng. Dcan: Deep contour-aware networks for object instance segmentation from histology images. Medical image analysis, 36:135–146, 2017.

- [6] Hao Chen, Xiaojuan Qi, Lequan Yu, and Pheng-Ann Heng. Dcan: deep contour-aware networks for accurate gland segmentation. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, pages 2487–2496, 2016.

- [7] Jiajia Chen, Baocan Zhang, et al. Segmentation of overlapping cervical cells with mask region convolutional neural network. Computational and Mathematical Methods in Medicine, 2021, 2021.

- [8] Kai Chen, Jiangmiao Pang, Jiaqi Wang, Yu Xiong, Xiaoxiao Li, Shuyang Sun, Wansen Feng, Ziwei Liu, Jianping Shi, Wanli Ouyang, et al. Hybrid task cascade for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4974–4983, 2019.

- [9] Patrick Follmann, Rebecca König, Philipp Härtinger, Michael Klostermann, and Tobias Böttger. Learning to see the invisible: End-to-end trainable amodal instance segmentation. In 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 1328–1336. IEEE, 2019.

- [10] Andreas Geiger, Philip Lenz, and Raquel Urtasun. Are we ready for autonomous driving? the kitti vision benchmark suite. In 2012 IEEE conference on computer vision and pattern recognition, pages 3354–3361. IEEE, 2012.

- [11] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, pages 2961–2969, 2017.

- [12] Rana S Hoda, Syed A Hoda, et al. Fundamentals of Pap test cytology. Springer Science & Business Media, 2007.

- [13] Zhaojin Huang, Lichao Huang, Yongchao Gong, Chang Huang, and Xinggang Wang. Mask Scoring R-CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6409–6418, 2019.

- [14] Elima Hussain, Lipi B Mahanta, Chandana Ray Das, Manjula Choudhury, and Manish Chowdhury. A shape context fully convolutional neural network for segmentation and classification of cervical nuclei in pap smear images. Artificial Intelligence in Medicine, 107:101897, 2020.

- [15] Hao Jiang, Sen Li, Weihuang Liu, Hongjin Zheng, Jinghao Liu, and Yang Zhang. Geometry-aware cell detection with deep learning. mSystems, 5(1):e00840–19, 2020.

- [16] Hao Jiang, Yanning Zhou, Yi Lin, Ronald CK Chan, Jiang Liu, and Hao Chen. Deep learning for computational cytology: A survey. Medical Image Analysis, page 102691, 2022.

- [17] Cheng Jin, Zhengrui Guo, Yi Lin, Luyang Luo, and Hao Chen. Label-efficient deep learning in medical image analysis: Challenges and future directions. arXiv preprint arXiv:2303.12484, 2023.

- [18] Lei Ke, Yu-Wing Tai, and Chi-Keung Tang. Deep occlusion-aware instance segmentation with overlapping bilayers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4019–4028, 2021.

- [19] Marek Kowal, Michał Żejmo, Marcin Skobel, Józef Korbicz, and Roman Monczak. Cell nuclei segmentation in cytological images using convolutional neural network and seeded watershed algorithm. Journal of Digital Imaging, 33(1):231–242, 2020.

- [20] Neeraj Kumar, Ruchika Verma, Sanuj Sharma, Surabhi Bhargava, Abhishek Vahadane, and Amit Sethi. A dataset and a technique for generalized nuclear segmentation for computational pathology. IEEE Transactions on Medical Imaging, 36(7):1550–1560, 2017.

- [21] Hansang Lee and Junmo Kim. Segmentation of overlapping cervical cells in microscopic images with superpixel partitioning and cell-wise contour refinement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 63–69, 2016.

- [22] Ke Li and Jitendra Malik. Amodal instance segmentation. In European Conference on Computer Vision, pages 677–693. Springer, 2016.

- [23] Huangjing Lin, Hao Chen, Xi Wang, Qiong Wang, Liansheng Wang, and Pheng-Ann Heng. Dual-path network with synergistic grouping loss and evidence driven risk stratification for whole slide cervical image analysis. Medical Image Analysis, 69:101955, 2021.

- [24] Zhi Lu, Gustavo Carneiro, and Andrew P Bradley. An improved joint optimization of multiple level set functions for the segmentation of overlapping cervical cells. IEEE Transactions on Image Processing, 24(4):1261–1272, 2015.

- [25] Ritu Nayar and David C Wilbur. The Bethesda system for reporting cervical cytology: definitions, criteria, and explanatory notes. Springer, 2015.

- [26] Khoi Nguyen and Sinisa Todorovic. A weakly supervised amodal segmenter with boundary uncertainty estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 7396–7405, 2021.

- [27] M Nosrati and Ghassan Hamarneh. A variational approach for overlapping cell segmentation. ISBI Overlapping Cervical Cytology Image Segmentation Challenge, pages 1–2, 2014.

- [28] Lu Qi, Li Jiang, Shu Liu, Xiaoyong Shen, and Jiaya Jia. Amodal instance segmentation with KINS dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3014–3023, 2019.

- [29] Nitiwat Sompawong, Jintapatee Mopan, Pakinee Pooprasert, Wanwisa Himakhun, Komsun Suwannarurk, Jarun Ngamvirojcharoen, Tee Vachiramon, and Charturong Tantibundhit. Automated Pap smear cervical cancer screening using deep learning. In Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pages 7044–7048. IEEE, 2019.

- [30] Youyi Song, Ee-Leng Tan, Xudong Jiang, Jie-Zhi Cheng, Dong Ni, Siping Chen, Baiying Lei, and Tianfu Wang. Accurate cervical cell segmentation from overlapping clumps in Pap smear images. IEEE Transactions on Medical Imaging, 36(1):288–300, 2016.

- [31] Afaf Tareef, Yang Song, Heng Huang, Dagan Feng, Mei Chen, Yue Wang, and Weidong Cai. Multi-pass fast watershed for accurate segmentation of overlapping cervical cells. IEEE Transactions on Medical Imaging, 37(9):2044–2059, 2018.

- [32] Afaf Tareef, Yang Song, Heng Huang, Yue Wang, Dagan Feng, Mei Chen, and Weidong Cai. Optimizing the cervix cytological examination based on deep learning and dynamic shape modeling. Neurocomputing, 248:28–40, 2017.

- [33] Daniela M Ushizima, Andrea GC Bianchi, and Claudia M Carneiro. Segmentation of subcellular compartments combining superpixel representation with Voronoi diagrams. Technical report, Lawrence Berkeley National Lab. (LBNL), Berkeley, CA (United States), 2015.

- [34] Florin C Walter, Sebastian Damrich, and Fred A Hamprecht. MultiStar: Instance segmentation of overlapping objects with star-convex polygons. In Proceedings of the IEEE International Symposium on Biomedical Imaging, pages 295–298. IEEE, 2021.

- [35] Tao Wan, Shusong Xu, Chen Sang, Yulan Jin, and Zengchang Qin. Accurate segmentation of overlapping cells in cervical cytology with deep convolutional neural networks. Neurocomputing, 365:157–170, 2019.

- [36] Yuxin Wu, Alexander Kirillov, Francisco Massa, Wan-Yen Lo, and Ross Girshick. Detectron2. https://github.com/facebookresearch/detectron2, 2019.

- [37] Yuting Xiao, Yanyu Xu, Ziming Zhong, Weixin Luo, Jiawei Li, and Shenghua Gao. Amodal segmentation based on visible region segmentation and shape prior. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 2995–3003, 2021.

- [38] Han Zhang, Hongqing Zhu, and Xiaofeng Ling. Polar coordinate sampling-based segmentation of overlapping cervical cells using attention U-Net and random walk. Neurocomputing, 383:212–223, 2020.

- [39] Yanning Zhou, Hao Chen, Huangjing Lin, and Pheng-Ann Heng. Deep semi-supervised knowledge distillation for overlapping cervical cell instance segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 521–531. Springer, 2020.

- [40] Yanning Zhou, Hao Chen, Jiaqi Xu, Qi Dou, and Pheng-Ann Heng. IRNet: Instance relation network for overlapping cervical cell segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 640–648. Springer, 2019.