11email: lanlbn@cqut.edu.cn

DSSAU-Net:U-Shaped Hybrid Network for Pubic Symphysis and Fetal Head Segmentation

Abstract

In the childbirth process, traditional methods involve invasive vaginal examinations, but research has shown that these methods are both subjective and inaccurate. Ultrasound-assisted diagnosis offers an objective yet effective way to assess fetal head position via two key parameters: Angle of Progression (AoP) and Head-Symphysis Distance (HSD), calculated by segmenting the fetal head (FH) and pubic symphysis (PS), which aids clinicians in ensuring a smooth delivery process. Therefore, accurate segmentation of FH and PS is crucial. In this work, we propose a sparse self-attention network architecture with good performance and high computational efficiency, named DSSAU-Net, for the segmentation of FH and PS. Specifically, we stack varying numbers of Dual Sparse Selection Attention (DSSA) blocks at each stage to form a symmetric U-shaped encoder-decoder network architecture. For a given query, DSSA is designed to explicitly perform one sparse token selection at both the region and pixel levels, respectively, which is beneficial for further reducing computational complexity while extracting the most relevant features. To compensate for the information loss during the upsampling process, skip connections with convolutions are designed. Additionally, multiscale feature fusion is employed to enrich the model’s global and local information. The performance of DSSAU-Net has been validated using the Intrapartum Ultrasound Grand Challenge (IUGC) 2024 test set provided by the organizer in the MICCAI IUGC 2024 competition111https://codalab.lisn.upsaclay.fr/competitions/18413#learn_the_details, where we win the fourth place on the tasks of classification and segmentation, demonstrating its effectiveness. The codes will be available on GitHub.

Keywords:

DSSAU-Net DSSA Skip Connection Multiscale Feature Fusion.1 Introduction

Difficult labor is a major cause of maternal mortality and morbidity, referring to situations where, despite strong uterine contractions, parts of the fetus do not pass through the birth canal. Therefore, during labor, to prevent such occurrences, the position of the fetus needs to be monitored repeatedly. Traditional vaginal examinations are subjective and potentially invasive [2, 7], and some related methods are difficult to perform reliably [8]. The advent of ultrasound-assisted diagnosis has provided a non-invasive, accurate alternative for assessing fetal position and cervical dilation, gradually gaining acceptance in obstetrics [9]. Some studies [1, 11, 12, 14] have shown that intrapartum ultrasound assessment can help more accurately evaluate the positions of the fetal head and the pubic symphysis. The International Society of Ultrasound in Obstetrics and Gynecology recommends ultrasound assessment before considering instrumental vaginal delivery or suspecting delayed labor. Two reliable ultrasound parameters—the angle of progression (AoP) and the head-symphysis distance (HSD)—are used to predict the outcome of instrumental vaginal delivery [10].

In ultrasound-assisted diagnosis, the AoP and HSD are key parameters for assessing the progress of labor. These parameters rely on the segmentation of the fetal head (FH) and pubic symphysis (PS), aiding clinicians in real-time monitoring of the fetal delivery status. Therefore, many methods have begun to focus on improving the segmentation performance of FH and PS. For example, the Fetal Head–Pubic Symphysis Segmentation Network (FH-PSSNet) [6] uses an encoder-decoder framework, which incorporates a dual attention module, a multi-scale feature screening module, and a direction guidance block, for automatic AoP measurement. The Dual-path Boundary-guided Residual Network (DBRN) [5] integrates a multiscale weighted module (MWM), an enhanced boundary module (EBM), and a boundary-guided dual-attention residual module (BDRM) to address the challenges of implementing fully automated and accurate FH-PS segmentation in cases of low contrast or ambiguous anatomical boundaries. Additionally, BRAU-Net [3] uses only region-level sparse tokens for FH-PS segmentation, which is not robust enough for small targets due to speckle noise, ultrasound artifacts, and blurred target boundaries.

In contrast to these methods, we consider combining a sparse attention mechanism with convolution and adopting a multiscale feature fusion approach to achieve more effective segmentation of FH and PS. To this end, in this paper, we propose a novel U-shaped network architecture with sparse self-attention, termed DSSAU-Net, for the segmentation of the FH and PS. Specifically, the adopted sparse self-attention, derived from the idea of concentrating tokens at both region and pixel levels [18, 16], is a content-aware, dynamic mechanism, which is explicitly designed as dual selection operations and has been used in our other unpublished work. We call this sparse mechanism Dual Sparse Selection Attention (DSSA). DSSA significantly reduces computational complexity while extracting more accurate features. Additionally, to effectively extract multiscale features, inspired by UperNet [15] and Pyramid Pooling Module (PPM) [17], we stacked the building blocks constructed with this attention mechanism into a symmetric U-shaped encoder-decoder structure, in which the feature maps of different resolutions from the decoder component are fused to yield better segmentation results. Finally, we conduct experiments on the Intrapartum Ultrasound Grand Challenge 2024 dataset and verify the superiority of our method.

2 Method

In this section, we will first introduce the attention mechanism used in DSSAU-Net: Dual Sparse Selection Attention (DSSA). Next, we will provide a detailed description of the core module, the DSSA block, which is built upon this attention mechanism. Finally, we will outline the overall architecture of the network.

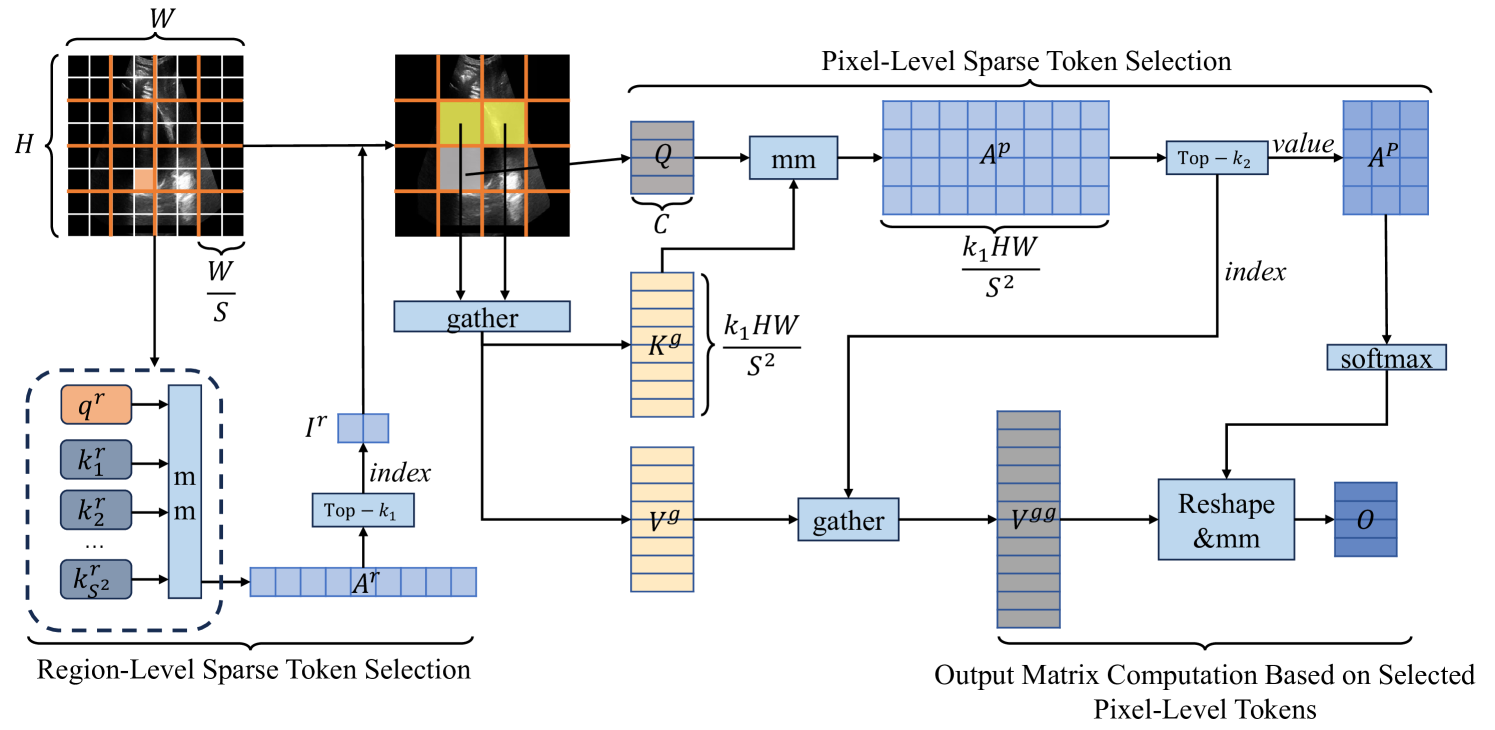

2.1 Dual Sparse Selection Attention (DSSA)

The core idea of DSSA is to explicitly perform sparse token selections at both region and pixel levels. It involves three main steps. First, for a given region-level token where the pixel-level token is located, mostly relevant regions are selected and irrelevant ones are filtered out according to the scores of attention matrix between region-level query and key . Second, for a given pixel-level token, mostly relevant pixels are selected, and irrelevant ones are filtered out by the scores of attention matrix between pixel-level query and key . Finally, the output matrix is computed by matrix multiplication between the normalized attention matrix and the pixel-level value . The conceptual diagram of DSSA is shown in Fig. 1. Following BiFormer [18], for a given input image of size , we divide the image into non-overlapped regions, and obtain the region image . Then, the region tokens: query, key, value, , can be derived with linear projections:

| (1) |

where presents the number of regions, indicates that a token is region-level, are corresponding projection weight matrices for the query, key, value, respectively.

As for the region-level sparse token selection, each region-level token query and key can be derived by averaging all the pixel-level tokens on the region token and key . The attention map between region-level queries and keys is obtained through matrix multiplication, representing the semantic relevance between regions. Then, we employ a top- operation to select the most relevant regions for each region containing a given pixel-level token and record their indices in . The process is described as follows:

| (2) |

| (3) |

To fully leverage the GPU’s acceleration capabilities, the filtered region-level tokens need to be gathered back into a matrix.

| (4) |

where are gathered key and value tensors, which will be further used to the pixel-level sparse token selection.

Concerning the pixel-level sparse token selection, for any pixel-level query in Q, we compute the relevance (i.e., attention matrix) between this query and the gathered pixel-level key in , indicated as as follows:

| (5) |

where means that this token is pixel-level. Additionally, since the first round of region-level sparse token selection involves an averaging operation within a region, which may retain noise features due to the overall high relevance. This could negatively impact the model’s ability to effectively extract features. Therefore, in , we perform a second round of pixel-level sparse token selection using a top- operation, which not only selects the most relevant tokens but implicitly removes noises. The values and indices corresponding to the selected tokens are stored and denoted as and , respectively:

| (6) |

| (7) |

where is determined by the scaling factor :

| (8) |

Similarly, to leverage GPU acceleration, is gathered based on to form :

| (9) |

Finally, is weighted with . Additionally, to preserve more fine-grained information beneficial for pixel-level segmentation, we add a local context enhancement term () to the final output , which is a depth-wise convolution:

| (10) |

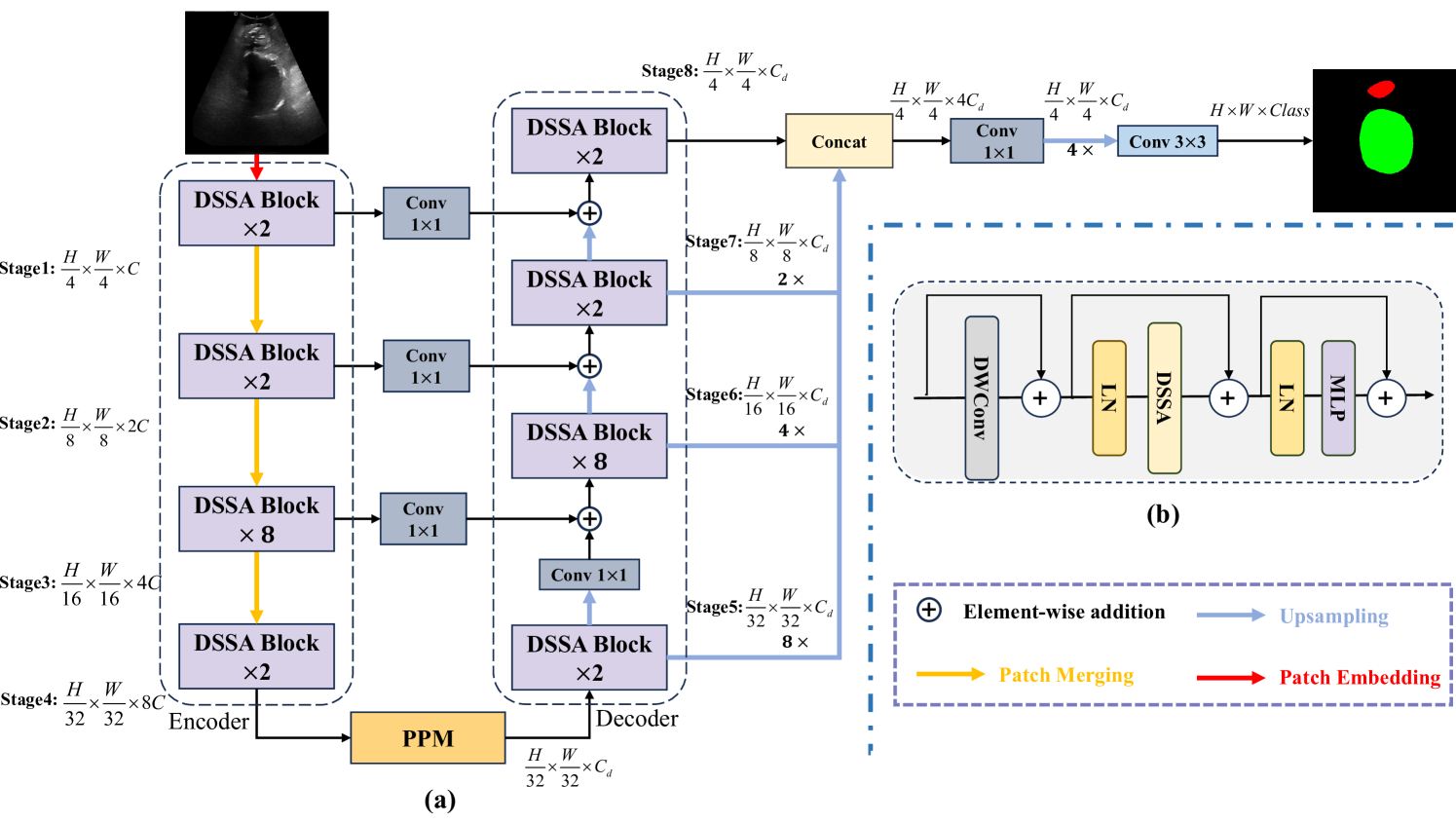

2.2 DSSA Block

Based on this novel concept of DSSA attention, we can construct the core block of the model, the DSSA block, as shown in Fig. 2(b). Specifically, a depth-wise convolution is first used to encode relative positional information, followed by a layer normalization. DSSA is then applied to compute attention, followed by another a layer normalization, and the result is fed into a two-layer Multilayer Perceptron (MLP) module. During this process, three residual connections are employed to help alleviate the vanishing gradient and enhance the stability of the model. The DSSA block can be formulated as:

| (11) |

| (12) |

where represents the output of each block, while indicates the output of the internal modules. denotes the -th block.

2.3 DSSAU-Net Architecture

DSSAU-Net is a hybrid CNN-Transformer architecture with the encoder-decoder structure built upon DSSA blocks, as shown in Fig. 2(a). As for Stage1, it consists of an overlapped patch embedding layer with two 3×3 convolutions and two DSSA blocks, which is used to transform the input image of sizes into the resulting feature map of size :

| (13) |

in Stage2, a Patch Merging layer is applied to reduce the number of tokens, and a linear embedding layer is used to increase the dimension to . Thus, the size of the feature map output by Stage2, also containing two DSSA blocks, is :

| (14) |

after that, similar process is repeated twice, the size of the corresponding feature maps and generated by Stage3 and Stage4 is and , respectively, which are formulated as:

| (15) |

| (16) |

On the encoder side, the number of blocks in each stage is set to 2, 2, 8, and 2, respectively.

Since aggregates contextual information from different stages, inspired by UperNet [15], we use the Pyramid Pooling Module (PPM) [17] to take full advantage of the rich semantic information contained in . On the decoder side, the dimension of the feature map is fixed to . This setup helps reduce the number of parameters and ensures that features at different scales are equally important. Subsequently, the feature map of size is fed into the hierarchically built decoder from Stage5 to Stage8. During this process, the feature map in each stage is processed with DSSA attention and fused with the feature map of the same resolution from the encoder. The resulting feature map is then upsampled to the output size of . The overall process can be described as:

| (17) |

| (18) |

| (19) |

| (20) |

where presents element-wise addition.

Additionally, to effectively fuse the multiscale features in different stages, , , and are upsampled to the same resolution as . All these upsampled feature maps are then concatenated along the channel dimension. After that, a convolution layer is applied, followed by upsampling, and another convolution layer. Finally, a feature map of resolution is output to predict pixel-level segmentation. The process can be formulated as:

| (21) |

| (22) |

2.4 Loss Function

We adopt a hybrid loss function to train DSSAU-Net, combining dice loss () and cross-entropy loss (). The dice loss helps alleviate class imbalance problems, while the cross-entropy loss ensures accurate pixel classification. These losses are defined as follows:

| (23) |

| (24) |

| (25) |

where is the predicted probability of the -th pixel, and is the ground truth of the -th pixel.

3 Experiments and Results

3.1 Datasets

We conduct experiments on the competition dataset. The original dataset consists of 2,575 training images and 40 validation images. During training, all images are resized to .

3.2 Metrics

In this work, we employ three primary evaluation metrics to measure the segmentation performance of the model, namely the Dice Similarity Coefficient (DSC), the Hausdorff Distance (HD), and the Average Surface Distance (ASD). The specific calculations are presented as follows:

| (26) |

| (27) |

| (28) |

Additionally, to further demonstrate the effectiveness of our method in the ultrasound-assisted delivery process, during the final testing phase, we develop an automated program to calculate the Angle of Progression (AoP) and the Head-Symphysis Distance (HSD).

3.3 Experimental Settings

This work is conducted on a Geforce RTX 3090 GPU with 24GB of memory. We meticulously design the experimental details. Specifically, the input images are resized to a resolution of 256256, and the number of regions in each DSSA stage is set to 88. In the encoder, the number of channels in each stage is set to [96, 192, 384, 768], and the of decoder is set to 64. Additionally, the initial learning rate is set to 1e-4. To improve the model’s generalization ability, we introduce several data augmentation strategies, such as rotation, flipping, and contrast adjustment. The backbone network uses weights pre-trained on the ImageNet dataset. The scaling factor is set to 1/8.

3.4 Results

We train our DSSAU-Net on the training set and evaluate the performance of DSSAU-Net on the validation set mentioned in Subsection 3.1. The evaluation is conducted using three segmentation metrics: DSC, HD, and ASD, as well as two key parameters related to ultrasound-assisted diagnosis: AoP and HSD. The results are presented in Table 1. It can be seen that DSSAU-Net achieves excellent segmentation results, with DSC, HD, and ASD reaching up to 86.43, 31.08, and 8.39, respectively, and has a low number of parameters and FLOPs. This reflects the effectiveness of both the designed U-shaped hierarchical network for the pubic symphysis and fetal head segmentation task and the DSSA mechanism in reducing computational resource consumption.

To further show the performance of DSSAU-Net, we compare it with the methods used by other teams in the MICCAI IUGC 2024 competition. The performance of classification and segmentation on the test set provided by the organizer, along with the final rank, is shown in Table 2. Our result wins fourth place in the classification and segmentation tasks in the competition. It can be seen that although DSSAU-Net did not achieve the highest final overall ranking, its performance on the segmentation task is still commendable. DSSAU-Net ranks second on both the HD and ASD metrics and third on the DSC metric, achieving an overall second place in the segmentation task. This demonstrates the strong capability of DSSAU-Net in handling segmentation tasks. However, its performance on the classification task is suboptimal, but this is in line with our expectations since our main goal is to develop a more accurate segmentation network. Overall, although DSSAU-Net performs well on the competition task and wins fourth place, it still has a large gap compared to the top-ranked team’s method. These gaps motivate us to continue our efforts to refine the network architecture and improve feature fusion strategies in future work.

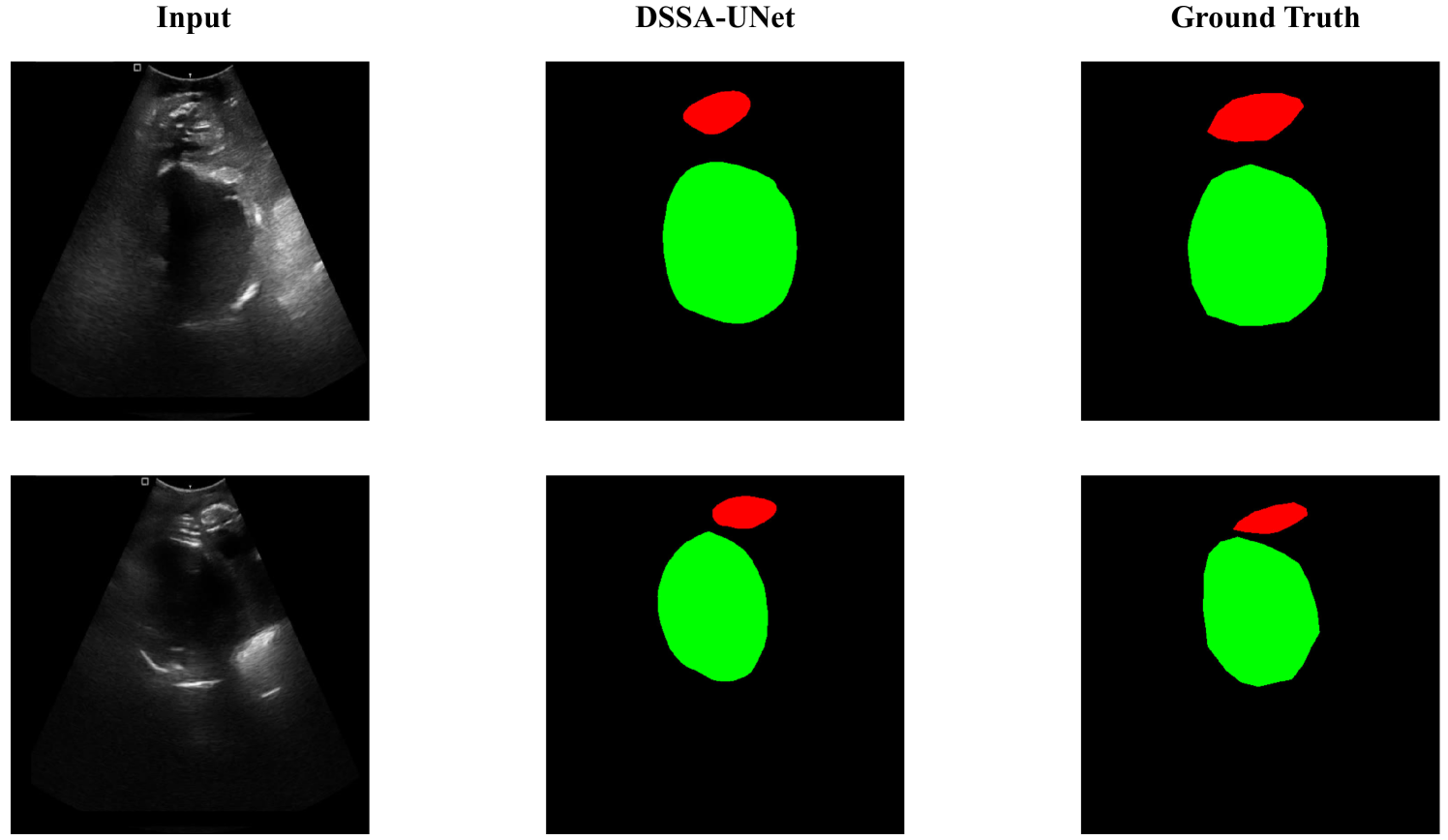

Additionally, we visualize the segmentation results and compare them with the ground truth, as shown in Fig. 3. One can see that the segmented masks generated by our approach closely match the boundaries and shape of ground truth.

| Methods | AoP(∘) | HSD(mm) | DSC(%) | HD(mm) | ASD(mm) | Params(M) | FLOPs(G) |

| DSSAU-Net | 9.88 | 10.52 | 86.34 | 31.08 | 8.39 | 29.25 | 7.15 |

| Group | Classification | Segmentation | Biometry | Rank | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Acc | F1 | AUC | MCC | DSC | ASD | HD | AoP | HSD | ||

| ganjie | 74.41 | 75.55 | 78.02 | 36.48 | 84.75 | 13.00 | 38.72 | 10.43 | 11.45 | 1 |

| vicbic | 56.70 | 63.06 | 68.68 | 12.86 | 88.57 | 9.43 | 28.42 | 9.49 | 10.39 | 2 |

| BioMedIA | 66.19 | 54.22 | 71.26 | 21.45 | 86.33 | 12.22 | 41.11 | 9.16 | 11.68 | 3 |

| CQUT-Smart | 63.19 | 67.80 | 62.25 | 24.23 | 85.35 | 11.07 | 37.38 | 10.75 | 10.79 | 4 |

| nkdinsdale95 | 67.89 | 74.88 | 75.71 | 25.19 | 81.69 | 14.39 | 40.44 | 15.33 | 15.23 | 5 |

| baseline | 47.99 | 45.15 | 49.40 | 7.58 | 78.68 | 22.65 | 89.10 | 13.20 | 19.72 | 6 |

| hhl hotpot | 57.07 | 69.30 | 43.83 | 0 | 47.67 | 82.34 | 242.07 | 62.45 | 58.06 | 7 |

| serikbay | 42.93 | 0 | 44.10 | 0 | 72.63 | 56.21 | 134.79 | 40.14 | 89.21 | 8 |

3.5 Ablation Study

In this section, to deeply explore the specific impact of each component of the DSSAU-Net on overall performance, we follow the above experimental settings and conduct a series of ablation studies on the provided training set and validation set. Specifically, we systematically analyze the effects of different factors, including the number of skip connections, the multiscale feature fusion module, and the selection of key hyperparameters in the proposed Dual Sparse Selection Attention.

3.5.1 Effect of the Number of Skip Connections:

Skip connection helps compensate for information loss during downsampling, which has been proved by previous studies[13, 4]. However, different network structures have varying complexities, and an excessive number of skip connections may not only be detrimental to segmentation performance but also increase the complexity of the network. Therefore, we conduct ablation experiments at resolutions of 1/4, 1/8, and 1/16 to explore the impact of different numbers of skip connections on the performance of DSSAU-Net. The results are shown in Table 3. When the skip connection is not used, the model has the worst performance on all segmentation metrics and biometry parameters. As the number of skip connections increases, the segmentation performance of the model gradually improves. The best performance is achieved when all skip connections are utilized at three resolutions. This is because multiscale skip connections are beneficial for compensating for the spatial information loss caused by downsampling and integrating high-level semantic information from deeper layers into the corresponding decoder layers, thereby enhancing segmentation performance. Thus, we report our final results conditioned on using all skip connections.

| # Skip Connection | Connection Place | AoP | HSD | DSC | HD | ASD | |||

|---|---|---|---|---|---|---|---|---|---|

| no skip | 1/4 | 1/8 | 1/16 | ||||||

| 0 | 10.18 | 12.14 | 85.84 | 32.90 | 9.23 | ||||

| 1 | 10.05 | 12.42 | 86.03 | 31.55 | 9.15 | ||||

| 2 | 9.98 | 11.79 | 86.11 | 31.57 | 8.89 | ||||

| 3 | 9.88 | 10.52 | 86.34 | 31.08 | 8.39 | ||||

3.5.2 Effect of Multiscale Feature Fusion Module:

The multiscale feature fusion (MFF) module is the core component of DSSAU-Net used to achieve accurate segmentation. Thus, we design the ablation experiment to analyze its effectiveness. The results are presented in Table 4. It is evident that compared to DSSAU-Net without the MFF module, the performance of DSSAU-Net with the MFF module improves by 0.37, 1.2, and 0.81 in terms of DSC, HD, and ASD, respectively. Consistent improvement can also be observed with respect to AoP and HSD. We believe that the reason may be that the shallow feature maps have higher resolution and contain more local spatial information, while the deep feature maps have lower resolution and cover rich global semantic information. Both of these advantages can be leveraged by the MFF module, thereby achieving more accurate segmentation.

| Method | AoP | HSD | DSC | HD | ASD |

|---|---|---|---|---|---|

| DSSAU-Net(w/o MFF) | 10.39 | 12.53 | 85.97 | 32.28 | 9.20 |

| DSSAU-Net | 9.88 | 10.52 | 86.34 | 31.08 | 8.39 |

3.5.3 Effect of the Number of Top- Tokens:

The dual sparse selection mechanism in DSSA not only significantly reduces computational complexity but also enables the extraction of accurate features. However, selecting different numbers of region-level tokens and pixel-level tokens may impact the performance of DSSAU-Net. Therefore, we design the ablation study to choose appropriate and values for the region-level tokens and pixel-level tokens. For a fair comparison, this experiment does not use pre-trained weights. The results are presented in Table 5. One can see that when is set to [1, 4, 16, 64], a better segmentation performance is achieved compared to [2, 8, 32, 64]. We analyze that this is because selecting too many regions may introduce more noise tokens, which is detrimental to learning effective features. Additionally, we fix to [1, 4, 16, 64] and further study the effect of the values of . It can be seen that when setting to 1/8, the model can achieve the best performance. There is a similar observation when is set to [2, 8, 32, 64] and is set to 1/8, the model also performs better compared to other values. This is easily understandable because when fewer tokens are selected during the pixel-level sparse selection, the model can learn fewer features. Conversely, selecting more tokens introduces more noise. Moreover, it can be seen from Table 5 that the FLOPs vary significantly with different values of , demonstrating the advantage of DSSA in reducing computational complexity. Therefore, we chose a compromise value of as the hyperparameter of the model.

| AoP | HSD | DSC | HD | ASD | FLOPs (G) | ||

|---|---|---|---|---|---|---|---|

| 1,4,16,64 | 1/4 | 13.79 | 16.94 | 82.61 | 40.16 | 10.84 | 7.17 |

| 1/8 | 13.50 | 16.89 | 83.42 | 38.15 | 10.41 | 7.15 | |

| 1/16 | 15.24 | 17.54 | 82.68 | 41.62 | 10.93 | 7.14 | |

| 2,8,32,64 | 1/4 | 15.57 | 17.17 | 82.61 | 41.60 | 11.01 | 7.39 |

| 1/8 | 15.54 | 17.24 | 82.73 | 40.49 | 10.79 | 7.35 | |

| 1/16 | 15.84 | 19.16 | 82.72 | 42.52 | 29.25 | 7.32 |

4 Discussion

In this paper, we have validated the performance of DSSAU-Net in ultrasound-assisted delivery. However, From Fig. 3, it can be seen that although DSSAU-Net has successfully segmented both PS (presentation of the fetal head) and FH (fetal head), closely aligning with the ground truth, there is still room for improvement in the precision of edge segmentation. For instance, segmenting the boundaries between target and background is challenging due to low contrast. Therefore, enhancing DSSAU-Net’s capability to extract local information and improving its performance under low-contrast imaging conditions will be the focus of future work.

5 Conclusion

In this work, we propose DSSAU-Net, a CNN-Transformer hybrid network for fetal head and pubic symphysis segmentation. The network is constructed by stacking efficient DSSA blocks, forming symmetrical encoder-decoder structure. Additionally, we introduce skip connections with convolution operations, and a pyramid pooling module to capture richer semantic information. The results on the challenge dataset demonstrate that our approach is capable of achieving accurate and efficient medical image segmentation. In future work, we will further explore more efficient attention mechanisms and investigate the fusion of feature information across different levels to enhance the model’s ability for both global and local modeling.

References

- [1] Bellussi, F., Ghi, T., Youssef, A., Cataneo, I., Salsi, G., Simonazzi, G., Pilu, G.: Intrapartum ultrasound to differentiate flexion and deflexion in occipitoposterior rotation. Fetal Diagnosis and Therapy 42(4), 249–256 (2017)

- [2] Boyle, A., Reddy, U.M., Landy, H.J., Huang, C.C., Driggers, R.W., Laughon, S.K.: Primary cesarean delivery in the united states. Obstetrics & Gynecology 122(1), 33–40 (2013)

- [3] Cai, P., Lu, J., Li, Y., Lan, L.: Pubic symphysis-fetal head segmentation using pure transformer with bi-level routing attention (2023), https://arxiv.org/abs/2310.00289

- [4] Cao, H., Wang, Y., Chen, J., Jiang, D., Zhang, X., Tian, Q., Wang, M.: Swin-unet: Unet-like pure transformer for medical image segmentation. In: European conference on computer vision. pp. 205–218. Springer (2022)

- [5] Chen, Z., Lu, Y., Long, S., Campello, V.M., Bai, J., Lekadir, K.: Fetal head and pubic symphysis segmentation in intrapartum ultrasound image using a dual-path boundary-guided residual network. IEEE Journal of Biomedical and Health Informatics (2024)

- [6] Chen, Z., Ou, Z., Lu, Y., Bai, J.: Direction-guided and multi-scale feature screening for fetal head–pubic symphysis segmentation and angle of progression calculation. Expert Systems with Applications 245, 123096 (2024)

- [7] Cohen, S., Lipschuetz, M., Yagel, S.: Is a prolonged second stage of labor too long? Ultrasound in Obstetrics & Gynecology 50(4), 423–426 (2017)

- [8] Dupuis, O., Ruimark, S., Corinne, D., Simone, T., André, D., René-Charles, R.: Fetal head position during the second stage of labor: comparison of digital vaginal examination and transabdominal ultrasonographic examination. European Journal of Obstetrics & Gynecology and Reproductive Biology 123(2), 193–197 (2005)

- [9] Fiorentino, M.C., Villani, F.P., Di Cosmo, M., Frontoni, E., Moccia, S.: A review on deep-learning algorithms for fetal ultrasound-image analysis. Medical image analysis 83, 102629 (2023)

- [10] Ghi, T., Eggebø, T., Lees, C., Kalache, K., Rozenberg, P., Youssef, A., Salomon, L., Tutschek, B.: Isuog practice guidelines: intrapartum ultrasound. Ultrasound in Obstetrics & Gynecology 52(1), 128–139 (2018)

- [11] Malvasi, A., Tinelli, A., Barbera, A., Eggebø, T., Mynbaev, O., Bochicchio, M., Pacella, E., Di Renzo, G.: Occiput posterior position diagnosis: vaginal examination or intrapartum sonography? a clinical review. The Journal of Maternal-Fetal & Neonatal Medicine 27(5), 520–526 (2014)

- [12] Ramphul, M., Ooi, P.V., Burke, G., Kennelly, M.M., Said, S.A., Montgomery, A.A., Murphy, D.J.: Instrumental delivery and ultrasound: a multicentre randomised controlled trial of ultrasound assessment of the fetal head position versus standard care as an approach to prevent morbidity at instrumental delivery. BJOG: An International Journal of Obstetrics & Gynaecology 121(8), 1029–1038 (2014)

- [13] Ronneberger, O., Fischer, P., Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In: Medical image computing and computer-assisted intervention–MICCAI 2015: 18th international conference, Munich, Germany, October 5-9, 2015, proceedings, part III 18. pp. 234–241. Springer (2015)

- [14] Rueda, S., Fathima, S., Knight, C.L., et al.: Evaluation and comparison of current fetal ultrasound image segmentation methods for biometric measurements: A grand challenge. IEEE Transactions on Medical Imaging 33(4), 797–813 (2014)

- [15] Xiao, T., Liu, Y., Zhou, B., Jiang, Y., Sun, J.: Unified perceptual parsing for scene understanding. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision – ECCV 2018. pp. 432–448. Springer International Publishing, Cham (2018)

- [16] Zhao, G., Lin, J., Zhang, Z., Ren, X., Su, Q., Sun, X.: Explicit sparse transformer: Concentrated attention through explicit selection (2019), https://arxiv.org/abs/1912.11637

- [17] Zhao, H., Shi, J., Qi, X., Wang, X., Jia, J.: Pyramid scene parsing network. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2881–2890 (2017)

- [18] Zhu, L., Wang, X., Ke, Z., Zhang, W., Lau, R.: Biformer: Vision transformer with bi-level routing attention. In: 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 10323–10333 (2023)