Dual Information Enhanced Multi-view Attributed Graph Clustering

Abstract

Multi-view attributed graph clustering is an important approach to partition multi-view data based on the attribute feature and adjacent matrices from different views. Some attempts have been made in utilizing Graph Neural Network (GNN), which have achieved promising clustering performance. Despite this, few of them pay attention to the inherent specific information embedded in multiple views. Meanwhile, they are incapable of recovering the latent high-level representation from the low-level ones, greatly limiting the downstream clustering performance. To fill these gaps, a novel Dual Information enhanced multi-view Attributed Graph Clustering (DIAGC) method is proposed in this paper. Specifically, the proposed method introduces the Specific Information Reconstruction (SIR) module to disentangle the explorations of the consensus and specific information from multiple views, which enables GCN to capture the more essential low-level representations. Besides, the Mutual Information Maximization (MIM) module maximizes the agreement between the latent high-level representation and low-level ones, and enables the high-level representation to satisfy the desired clustering structure with the help of the Self-supervised Clustering (SC) module. Extensive experiments on several real-world benchmarks demonstrate the effectiveness of the proposed DIAGC method compared with the state-of-the-art baselines.

Index Terms:

Multi-view attributed graph clustering, deep multi-view clustering, contrastive learning.I Introduction

Clustering is one of the most fundamental tasks of unsupervised learning [1, 2, 3, 4, 5, 6, 7]. The basic idea is to divide the data into several disjoint clusters, where the samples in the same cluster are highly similar to each other, and the samples in different clusters are less related. With the rapid growth of social media and the internet, data has become more complex since it is collected, processed, and stored in various ways. For example, on the movie network data, a movie can be connected with other movies not only with the co-actor relationship but also with the co-director relationship. Besides, each movie has its own keywords as the attribute features. This kind of data is termed as multi-view attributed graph data [8], which contains one attribute feature matrix and multiple adjacent matrices.

Since the adjacent matrix reflects the topological structure of graph data, numerous graph-based multi-view clustering methods [9, 10, 11] have been developed to explore such information, e.g., multi-view spectral clustering [12, 13, 14, 15], graph-based multi-view subspace clustering [16, 17, 18, 19, 20, 21], tensor learning-based multi-view clustering [22, 23] and ect.. The multi-view spectral clustering methods [24, 25, 26] are derived from the single-view spectral clustering methods, which maximize the agreements between different views and explicitly employ the normalized cut on the graph for a soft assignment fitting views. The graph-based multi-view subspace clustering methods [27, 28, 29, 30, 31] are based on the assumption that all views are shared with one latent embedding. They construct the self-representation matrices for all views and perform the single-view clustering method, e.g., -means, on the consensus representation matrix for the cluster partition. Moreover, tensor learning-based multi-view clustering methods [32, 33, 34, 35] also show promising clustering performance on multi-view graph data, which stack the representation matrices into a three-order tensor to explore the high-order correlation among all views. Despite great success, they only consider the topological structures, i.e, the adjacent matrices of the graph data, and pay little attention to the node attribute information [36].

To fully explore the topological information and the node attribute information of graph data, some efforts have been made in the Graph Neural Network (GNN)-based clustering methods in recent years [37]. Compared with the aforementioned shallow models, GNN has powerful nonlinear feature extraction ability, and the features extracted by GNN can directly be applied to the downstream tasks, e.g. clustering. The Graph Convolutional Network (GCN)-based methods [38] define a convolutional operation on the graph data for feature extraction. Motivated by the great success of autoencoders, Graph Auto-Encoder (GAE) network-based methods [39] extract deep embeddings in a self-training manner. The GNN-based multi-view clustering methods consider the complementary information between different views by extracting the deep representation of each view, and fusing them into the consensus one to mine the essential information for clustering.

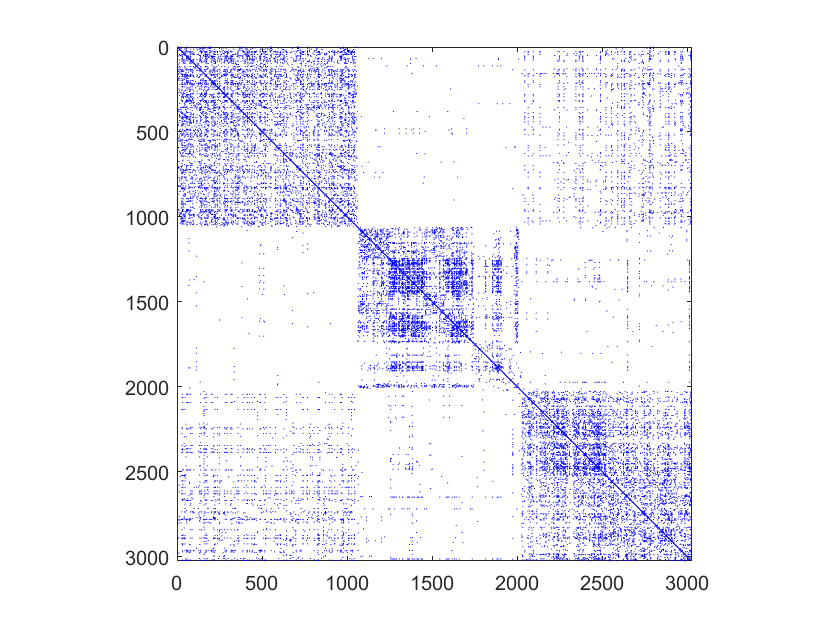

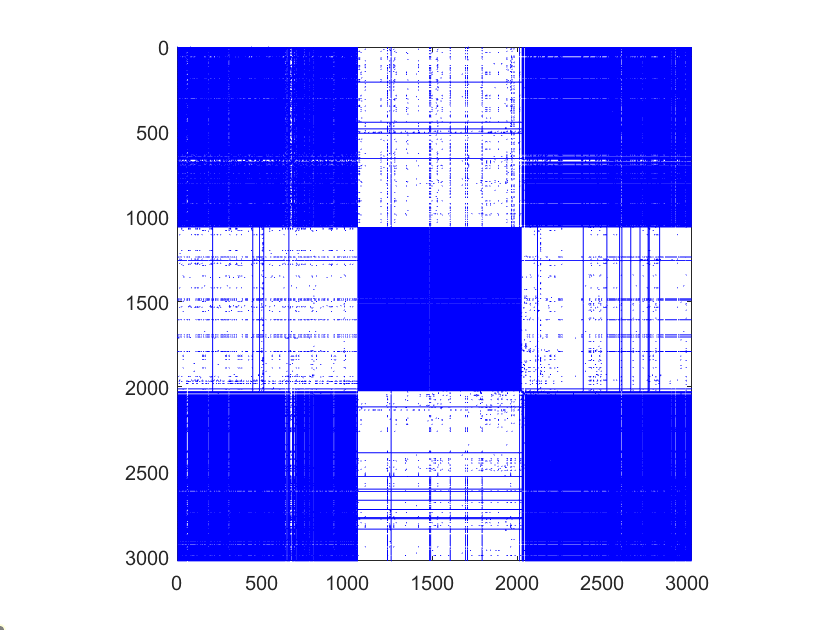

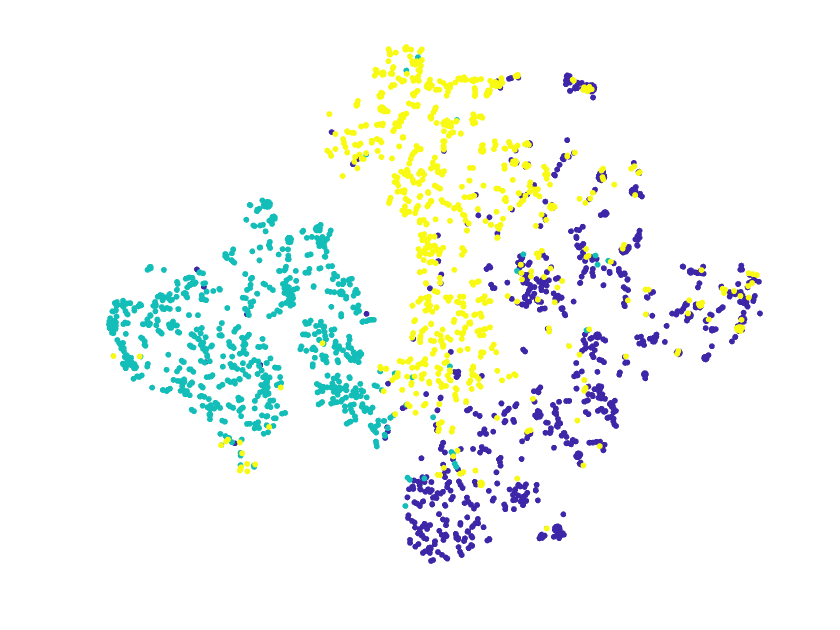

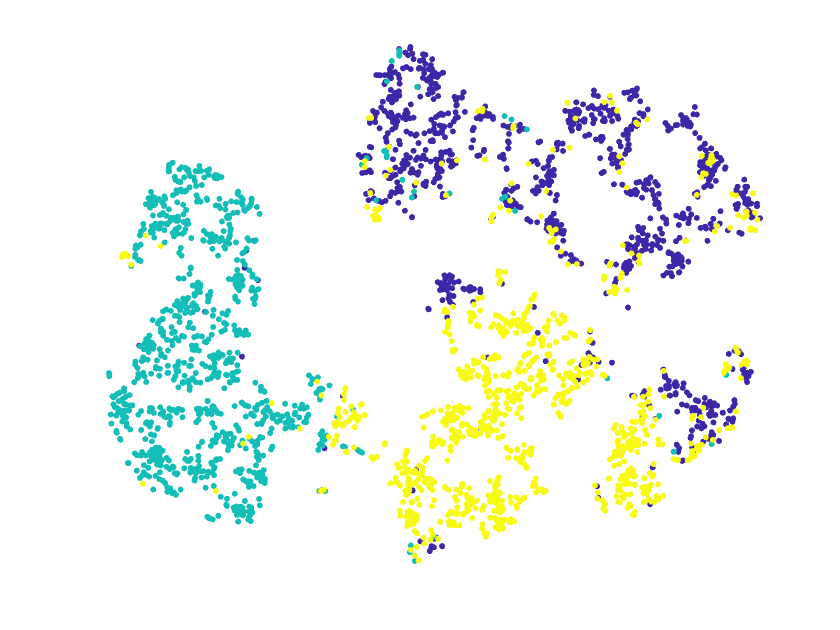

Despite significant performance, there are two main issues to be addressed for the existing deep multi-view attributed graph clustering methods: (1) Existing methods do not consider the inherent specific information embedded in each view when computing the consensus representation, which may interfere with the performance of the downstream tasks, i.e., clustering. The purpose of the GNN-based multi-view attributed graph clustering method is to find a cluster partition from the consensus representation that fits all views. For multi-view attributed graph data, as illustrated in Figure 1, the adjacency matrix of each view has its own special structure, but this structure information could not be dealt with appropriately, resulting in degraded clustering performance. (2) They use low-level representations to construct the consensus representation, which may introduce meaningless information and affect the downstream clustering task. The existing methods obtain the cluster partition directly from the low-level representations of each view, and some of them reconstruct the multiple adjacent matrices from one consensus representation. The target of multi-view clustering is to find a consensus cluster partition from all views while the reconstruction process aims to reconstruct the diversity of different views from the same representation, which induces the conflict between the two tasks and leads to sub-optimal clustering results.

To solve the aforementioned problems, in this paper, a novel Dual Information enhanced multi-view Attributed Graph Clustering (DIAGC) method is proposed, where the explorations of the consensus and specific information are disentangled elegantly. Besides, it fully utilizes the non-linearity of the low-level representations to generate the latent high-level clustering-oriented representation. Specifically, the proposed DIAGC method employs the Mutual Information Maximization (MIM) module and Specific Information Reconstruction (SIR) module to mine the rich information embedded in the deep representations of each view for the latent high-level representation. Besides, to avoid interferences from the inherent specific information embedded in different views, the Specific Information Reconstruction (SIR) module is introduced in DIAGC, enabling the GCN encoder to extract the purer consensus representation. The Self-supervised Clustering (SC) module forces the latent high-level representation to be a clustering-oriented one. By training these modules simultaneously, the modules can boost each other for better clustering results in a mutual manner.

In general, the contributions of this paper can be summarized as follows:

-

1.

To the best of our knowledge, this is the first attempt to disentangle the consensus and specific information learning for deep multi-view attributed graph clustering.

-

2.

A novel Dual Information enhanced multi-view Attributed Graph Clustering (DIAGC) method is developed, where the latent high-level clustering-oriented representation learning as well as the specific information reconstruction are seamlessly integrated into a unified framework.

-

3.

The experimental results on several real-world benchmarks compared with the state-of-the-art baselines demonstrate the effectiveness of the proposed DIAGC method.

The organization of this paper is as follows. In Section II, the related works are outlined. In Section III, some basic notations and the problem definitions are briefly reviewed. Section 2 introduces the proposed DIAGC method in detail. The experimental setups, real-world benchmarks, state-of-the-art baselines and experimental results are presented and analyzed in Section V. The conclusion of this paper is given in Section VI.

II Related Work

II-A Multi-view Clustering

The basic idea of multi-view clustering is to explore the consensus and complementary information embedded in multi-view data and learn a cluster partition that fits all views. Multi-view spectral clustering methods aim to learn a soft cluster indicator matrix directly from the original data. For instance, Kumar et al. [12] employ the co-regularized scheme to maximize the agreement between views. Xia et al. [13] propose a robust Markov-chain-based multi-view spectral clustering method where a low-rank transition matrix calculated by graphs is regarded as the input. Wang et al. [14] consider the clustering quality of each view and the clustering results agreement between different views. In [27], the self-weighting learning strategy is introduced to learn a block diagonal matrix for clustering from multiple graph inputs. In [28], similarity matrix learning, unified graph learning and indicator learning are incorporated into a unified framework. Liu et al. [29] consider the intra-view and inter-view correlations together and propose an adaptively weighted multi-view spectral clustering method. For graph-based multi-view subspace clustering methods, the self-representation matrices are constructed from the original graph data for clustering. In [40], the Hilbert Schmidt Independence Criterion (HSIC) term is introduced to explore the complementary information between the self-representation matrices from different views. Tang et al. [41] construct a consensus self-representation matrix with low-rank constraints for clustering. Zhang et al. [42] propose a one-step framework to explore the intra-view structure and the inter-view consistency simultaneously. In [43], the underlying correlations among different views and the specific information are explored to strengthen clustering performance. Recently, tensor learning-based multi-view clustering methods have achieved great progress. Xie et al. [44] stack the self-representation matrices from different views into a three-order tensor, and impose the t-SVD-based Tensor Nuclear Norm (TNN) on it to explore the high-order correlation among all views. Gao et al. [22] utilize the prior knowledge of the singular values and propose a weighted TNN for multi-view clustering. Wu et al. extend [13] by stacking the transition matrices into a tensor with the TNN constraint [45]. In [46], Chen et al. employ the weighted TNN for multi-view spectral clustering.

Unfortunately, the above methods can only process the attribute feature matrices or adjacent matrices separately, which can not fully explore the properties of the multi-view attributed graph data. To fill this gap, Lin et al. [8] introduce the graph filter for the attributed graph representation learning, where the high-order neighborhood correlation is also developed for clustering.

II-B Deep Attributed Graph Clustering

The main purpose of GNN is to learn the discriminative deep representation from the attributed graph. Graph Auto-Encoder (GAE) [39] based methods encode the adjacent matrix and attribute feature matrix into a deep representation, and then decode it with the decoder. Pan et al. [47] propose an adversarial graph embedding framework for clustering, where a discriminator is employed to force the deep representation to match the prior distribution. Wang et al. [48] incorporate cluster centroid learning and deep representation learning in a self-optimizing manner. In [38], the Graph Convolutional Network (GCN) is introduced to extract the multi-level deep representation of the attributed graph data for clustering. Sun et al. [49] employ the dual-decoder network to reconstruct the attribute feature matrix and the adjacent matrix of the input graph simultaneously. The above-mentioned methods are designed for single-view attributed graph data, and the complementary information of multi-view attributed graph data may not be fully exploited without considering the diversity of the inputs. Inspired by [50], Cheng et al. [51] introduce a consistent embedding encoder to maximize the agreements between views for a consensus representation fitting the target distribution. Fan et al. [52] define a most informative view with the modularity and decode the deep representation calculated from it to reconstruct multiple adjacent matrices. However, all GNN-based deep multi-view attributed graph clustering methods reconstruct the affinity matrix of each view from the consensus representation without explicitly considering the impact of the view-specific information, ultimately leading to sub-optimal clustering performance.

II-C Information Maximization

Information Maximization (or contrastive learning) has achieved great success in the field of computer vision and inspired many studies on unsupervised learning. Velickovic et al. [53] maximize the mutual information between the local patches and the global graph to obtain a compact representation. In [54], the information maximization is developed to maximize the agreement between the deep representations obtained by conducting GNN on the input graph and the augmented one. Pan et al. [55] employ a contrastive learning term to maximize the mutual information between each attribute node and its -nearest neighbors. In [56], the graph information bottleneck is proposed, which aims to balance the expressiveness and robustness of the deep representation. Yu et al. [57] employ the graph information bottleneck theory to address the sub-graph recognition problem. Suresh et al. [37] propose a graph information bottleneck-based edge augmentation module to adaptively drop the redundancy edges on the input graph. To further reduce the redundancy information between the input graph and adaptive augmented one, Gong et al. develop a variant by imposing the orthogonal constraint on the deep representations [58].

III Basic Notations and Problem Definition

| Notation | Meaning |

|---|---|

| The hyper-parameter | |

| The dimension of each attribute node | |

| The number of layers in the GE module | |

| The number of layers in the SIR module | |

| The freedom degree of the Student’s -distribution | |

| The number of clusters | |

| The parameters of the -th layer in the SIR module | |

| The attributed graph | |

| The multi-view attributed graph | |

| The edge set | |

| The node set | |

| The number of nodes | |

| The number of edges | |

| The number of views | |

| The adjacent matrix | |

| The degree matrix of the adjacent matrix | |

| The identity matrix | |

| The normalized adjacent matrix | |

| The attribute feature matrix | |

| The hidden representation of the -th encoder layer | |

| The parameters of the -th layer in the GE module | |

| The low-level representation | |

| The latent high-level clustering-oriented representation | |

| The reconstructed hidden representation | |

| The reconstructed specific information matrix | |

| The reconstructed adjacent matrix | |

| The soft label distribution | |

| The target distribution | |

| The clustering results |

Given an attributed graph , where is the edge set and is the node set, the numbers of edges and nodes are denoted as and , respectively. is the attribute feature matrix and is the dimension of each attribute node. is the adjacent matrix of where denotes that node and node are connected by an edge from and vice versa. The degree matrix of is and . Then, can be further normalized as by calculating , where is the identity matrix. For clarity, the basic notations used throughout this paper are summarized in Table I.

Definition 1 (Multi-view Attributed Graph): The multi-view attributed graph is consisting of an attribute feature matrix and multiple adjacent matrices , where is the number of views.

Definition 2 (Multi-view Attributed Graph Clustering): Given a multi-view attributed graph, the multi-view attributed graph clustering aims to mine a consensus cluster partition fitting all views.

IV The Proposed Method

In this section, we propose a Dual Information enhanced multi-view Attributed Graph Clustering (DIAGC) method.

IV-A Motivation

Generally, most existing deep multi-view attributed graph clustering methods only focus on the consensus information between views while neglecting the specific information of each view. As illustrated in Figure 1, the adjacent matrices of the ACM dataset are distinct between different views, indicating that each view has its own specific information. For multi-view attributed graph data, except for the study of the consensus representation, how to discover the specific information of different views remains a challenging problem. In addition, these methods reconstruct the attribute graph of each view from the consensus representation, which may cause the conflict between learning the consensus representation and reconstructing the view-specific attribute graph. Specifically, the consensus objective aims to learn the common information across multiple views as much as possible while the reconstruction objective expects the common representation to preserve the view-specific information of different views. Furthermore, these methods obtain the final cluster partition directly from the low-level representations, which can not fully mine the latent high-level information, i.e., the clustering-oriented information embedded in all views.

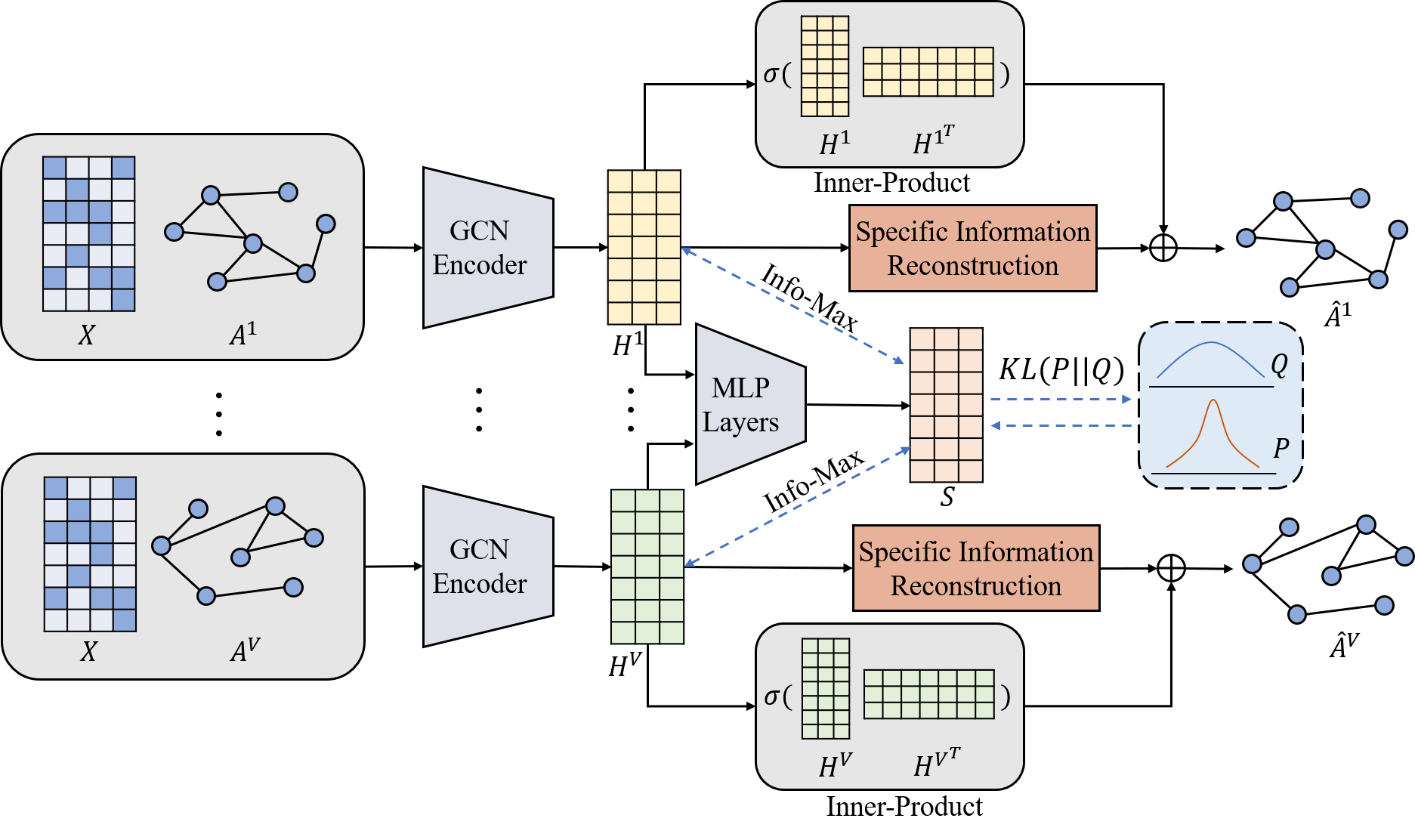

To this end, the basic idea of the DIAGC method is to disentangle the learning procedure of the consensus and view-specific information for acquiring a view-consistent cluster partition, and maximize the mutual information between the low-level representations and the latent high-level clustering-oriented one. For clarity, the overall framework of DIAGC is illustrated in Figure 2.

IV-B Methodology

The proposed DIAGC method consists of four modules, i.e., Graph Encoder (GE) module, Mutual Information Maximization (MIM) module, Specific Information Reconstruction (SIR) module and Self-supervised Clustering (SC) module.

IV-B1 Graph Encoder (GE) Module

To capture the low-level representations of each view, the graph encoder module is introduced. Specifically, the graph encoder is utilized as a non-linearity feature extractor to mine the deep non-linearity correlations embedded in the attribute graph of each view:

| (1) |

| (2) |

where denotes the parameters of the -th layer, is the number of layers, is the activation function, is the hidden representation and is the low-level representation of the -th view.

IV-B2 Mutual Information Maximization (MIM) Module

Mutual information maximization [48] encourages the same samples between different views to be similar. To explore the view-consensus high-level information embedded in each , we employ the Mutual Information Maximization (MIM) module:

| (3) |

where is the -th row of , and is the latent high-level representation recovered from all views. In particular, is generated by:

| (4) |

where is a sub-network consisting of several fully connected layers. When maximizing the mutual information between and , rather than simply adding together, can capture the intrinsic information of each view. Besides, the sub-network can also mine the nonlinear relationships between different views, which can fully utilize the nonlinear fitting ability for the latent high-level representation. Thus, we define a mutual information loss function as follows:

| (5) |

IV-B3 Specific Information Reconstruction (SIR) Module

Reconstructing the specific information enables the MIM module to capture the more essential information that fits all views. Specifically, the specific information reconstruction module is realized by a three-layer graph neural network:

| (6) |

| (7) |

where is the reconstructed -th layer hidden representation of the -th view, denotes the parameters of the -th layer, is the number of layers and is the reconstructed specific information matrix of the -th view.

IV-B4 Self-supervised Clustering (SC) module

Since multi-view attributed graph clustering is an unsupervised task, the ground-truth labels are not available, which introduces difficulties in the network training. To tackle such a problem, self-supervised clustering module is employed to guide the network training. Specifically, the -divergence is utilized:

| (8) |

where is the soft label distribution, i.e., measures the possibility of the -th instance belonging to the -th cluster, and is the target distribution. In our study, is calculated by the Student’s -distribution [59]:

| (9) |

where is the cluster centroids, is the freedom degree of the Student’s -distribution. And the target distribution is defined as:

| (10) |

where the second power on is utilized to make the target distribution denser. It enhances the learning process of , i.e., pushing away samples belonging to different clusters and gathering samples of the same cluster together. To initialize the cluster centroid in Eq. (9), the -means clustering method is performed on before the network training. After that, is updated adaptively in each training iteration. The SC module enables to be a high-level clustering-oriented representation.

| Dataset | #Views | #Nodes | #Feature dimensions | #Edges of the adjacent matrix | Attribute content | #Clusters |

| ACM | 2 | 3025 | 1830 | Co-Subject (29,281) | Keywords of the paper | 3 |

| Co-Author (2,210,761) | ||||||

| DBLP | 3 | 4057 | 334 | Co-Author (11,113) | Keywords of the author | 4 |

| Co-Conference (5,000,495) | ||||||

| Co-Term (6,776,335) | ||||||

| IMDB | 2 | 4780 | 1232 | Co-Actor (98,010) | Keywords of the movie plot | 3 |

| Co-Director (21,018) |

IV-C Overall Objective Function and Algorithm Summary

For the network training, we jointly optimize the mutual information loss, the reconstruction loss and the self-supervised clustering loss together. The total objective function can be defined as follows:

| (11) |

where is a hyper-parameter and is the reconstruction loss. In our study, is defined as:

| (12) |

where is the reconstructed adjacent matrix of the -th view. is calculated by:

| (13) |

where the first term is to capture the consensus information of the low-level representations, while the second term aims to reconstruct the view-specific information. is defined as:

| (14) |

It is worth noting that the reason for reconstructing from instead of is to ensure that is free from the interference of specific information, which may lead to sub-optimal solutions. With the help of Eq. (5) and Eq. (12), the exploration of the consensus and specific information are disentangled elegantly, i.e., the MIM module ensures contains the view-common information while the SIR module reconstructs the features that are characteristic of the -th view. After iterations, the final clustering results are obtained by performing the -means clustering method on .

For clarity, the overall algorithm of the proposed DIAGC method is outlined in Algorithm 1.

V Experiments

In this section, extensive experiments are conducted to validate the effectiveness of the proposed DIAGC method. Three widely used real-world multi-view attributed graph datasets and thirteen state-of-the-art baselines are adopted for the experiment. All experiments are conduced in Python 3.6 64-bit edition on an Intel(R) Xeon(R) Gold 6248R CPU @3.0GHz Ubuntu 20.04 workstation with 256 GB of RAM and RTX 3090 GPU.

V-A Dataset Description

The following three datasets are used in the experiments.

-

1.

ACM [60]: It is a paper network dataset containing 3025 papers as the attribute feature matrix. Besides, two kinds of relationships, i.e., co-paper and co-author relationships, are regarded as two views of the adjacent matrices, which can be divided into three categories111 https://dl.acm.org/..

-

2.

DBLP [61]: It is an author network dataset consisting of 4057 authors with three kinds of relationships, i.e., co-author, co-term and co-conference, as three different views of the adjacent matrices. Four categories of papers are collected: machine learning, information retrieval, database and data mining222 https://dblp.uni-trier.de/..

-

3.

IMDB [52]: It is a movie network dataset that contains 4780 movies with two kinds of relationships, i.e., co-actor and co-director. The movies are divided into three categories: drama, action and comedy333https://www.imdb.com/..

The statistical information of these datasets is summarized in Table II.

V-B Baselines

Thirteen clustering methods are compared with the proposed method. The details of these methods are provided as follows:

-

1.

LINE [62]: It is a deep single-view attributed graph clustering method, which is designed to preserve the first-order and the second-order proprieties of the attributed graph data. In our study, its best clustering results among all views are reported with LINEbest and its average clustering results of all views reported with LINEavg444https://github.com/tangjianpku/LINE..

-

2.

MNE [63]: It is a scalable multi-view network embedding model representing the adjacent relationships from different views of multi-view attributed graph data into a unified embedding space555https://github.com/HKUST-KnowComp/MNE..

-

3.

PMNE [64]: The Project Multi-layer Network Embedding (PMNE) method consists of three sub-methods, i.e., network aggregation-based method (PMNEn), result aggregation-based method (PMNEr) and layer co-analysis-based method (PMNElc). These methods project the multi-layer network into a delegated vector space for clustering.

-

4.

GAE [39]: The Graph Auto-Encoder (GAE) is one of the most representative methods of single-view attributed graph clustering, which is composed of one graph encoder and one graph decoder. The best clustering results of GAE among all views are reported with GAEbest and its average clustering results of all views are reported as GAEavg666https://github.com/tkipf/gae..

-

5.

ARGA [47]: The Adversarially Regularized Graph Autoencoder (ARGA) method is a single-view attributed graph clustering method, where a discriminator is utilized to ensure the deep representation calculated by encoder matching a prior distribution777https://github.com/GRAND-Lab/ARGA.

-

6.

DEAGC [48]: It is a goal-directed deep single-view attributed graph clustering method utilizing a self-optimizing module to learn a clustering-oriented deep representation.

-

7.

SDCN [38]: The Structural Deep Clustering Network (SDCN) method integrates the deep representations extracted by two deep neural networks to recover the distinct representations embedded in the single-view data888https://github.com/bdy9527/SDCN.

-

8.

RMSC [13]: It is a Markov-chain-based multi-view clustering method which takes each individual adjacent matrix as the transition probability matrix, and then obtains the shared low-rank representation from them999https://github.com/frash1989/ELM-MVClustering/tree/master/RMSC-ELM..

-

9.

PwMC [27]: It is a multi-view clustering method that adaptively learns the weights of each view for a consensus representation with low-rank constraint101010https://github.com/kylejingli/SwMC-IJCAI17/blob/master/SwMC.

-

10.

SwMC [27]: It is a self-weighted multi-view clustering method introducing a self-conducted weight learning scheme to remove the explicitly defined weight factor.

-

11.

O2MA [52]: It is a deep multi-view attributed graph clustering method, which learns the deep representation from a most informative graph view and reconstructs all views from the deep representation111111https://github.com/googlebaba/WWW2020-O2MAC..

-

12.

O2MAC [52]: It is a variant of O2MA containing a self-supervised clustering module with the clustering loss to generate the cluster-orient deep representation.

-

13.

MvAGC [8]: It is a multi-view attributed graph clustering method which adopts the graph filter learning term to capture the high-order neighborhood information121212https://github.com/sckangz/MvAGC..

V-C Evaluation Metrics

Four widely used evaluation metrics, namely, ACCuracy (ACC), F1-score (F1), Normalized Mutual Information (NMI) and Adjusted Rand Index (ARI) [52] are employed to evaluate the clustering performance. Let denote the ground-truth cluster partition, where consists of the nodes belonging to the -th cluster. Assuming that the cluster partition obtained by the clustering method is , ACC is calculated by:

| (15) |

where is the number of the elements of the set.

F1 is obtained by:

| (16) |

where and are precision and recall. They can be calculated by the following formulas:

| (17) |

where denotes the number of true positives, denotes the number of false positives and denotes the number of false negatives.

NMI is calculated by:

| (18) |

The formulation of ARI can be written as:

| (19) |

where is the expected value, denotes the rand index:

| (20) |

where is the number of true negatives. Larger ACC, F1, NMI and ARI indicate better clustering performance.

| Dateset | ACM | |||

|---|---|---|---|---|

| Method | ACC | F1 | NMI | ARI |

| LINEbest | 0.6479 | 0.6595 | 0.3941 | 0.3433 |

| LINEavg | 0.6479 | 0.6595 | 0.3941 | 0.3432 |

| MNE | 0.6370 | 0.6479 | 0.2999 | 0.2486 |

| PMNEn | 0.6936 | 0.6955 | 0.4648 | 0.4302 |

| PMNEr | 0.6492 | 0.6618 | 0.4063 | 0.3453 |

| PMNElc | 0.6998 | 0.7003 | 0.4755 | 0.4431 |

| GAEbest | 0.8216 | 0.8225 | 0.4914 | 0.5444 |

| GAEavg | 0.6990 | 0.7025 | 0.4771 | 0.4378 |

| ARGA | 0.8433 | 0.8451 | 0.5454 | 0.6064 |

| DAEGC | 0.8694 | 0.8707 | 0.5618 | 0.5935 |

| SDCN | 0.9045 | 0.9042 | 0.6831 | 0.7391 |

| RMSC | 0.6315 | 0.5746 | 0.3973 | 0.3312 |

| PwMC | 0.4162 | 0.3783 | 0.0332 | 0.0395 |

| SwMC | 0.3831 | 0.4709 | 0.0838 | 0.0187 |

| O2MA | 0.8880 | 0.8894 | 0.6515 | 0.6987 |

| O2MAC | 0.9042 | 0.9053 | 0.6923 | 0.7394 |

| MvAGC | 0.8975 | 0.8986 | 0.6735 | 0.7212 |

| DIAGC | 0.9170 | 0.9177 | 0.7161 | 0.7697 |

| Dateset | DBLP | |||

|---|---|---|---|---|

| Method | ACC | F1 | NMI | ARI |

| LINEbest | 0.8689 | 0.8546 | 0.6676 | 0.6988 |

| LINEavg | 0.8750 | 0.8660 | 0.6681 | 0.7056 |

| MNE | - | - | - | - |

| PMNEn | 0.7925 | 0.7966 | 0.5914 | 0.5265 |

| PMNEr | 0.3835 | 0.3688 | 0.0872 | 0.0689 |

| PMNElc | - | - | - | - |

| GAEbest | 0.8859 | 0.8743 | 0.6925 | 0.7410 |

| GAEavg | 0.5558 | 0.5418 | 0.3072 | 0.2577 |

| ARGA | 0.5816 | 0.5938 | 0.2951 | 0.2392 |

| DAEGC | 0.6205 | 0.6175 | 0.3249 | 0.2103 |

| SDCN | 0.6805 | 0.6771 | 0.3950 | 0.3915 |

| RMSC | 0.8994 | 0.8248 | 0.7111 | 0.7647 |

| PwMC | 0.3253 | 0.2808 | 0.0190 | 0.0159 |

| SwMC | 0.6538 | 0.5602 | 0.3760 | 0.3800 |

| O2MA | 0.9040 | 0.8976 | 0.7257 | 0.7705 |

| O2MAC | 0.9074 | 0.9013 | 0.7287 | 0.7780 |

| MvAGC | 0.9277 | 0.9225 | 0.7727 | 0.8276 |

| DIAGC | 0.9320 | 0.9273 | 0.7811 | 0.8357 |

| Dateset | IMDB | |||

|---|---|---|---|---|

| Method | ACC | F1 | NMI | ARI |

| LINEbest | 0.4268 | 0.2870 | 0.0031 | - |

| LINEavg | 0.4719 | 0.2985 | 0.0063 | - |

| MNE | 0.3958 | 0.3316 | 0.0017 | 0.0008 |

| PMNEn | 0.4958 | 0.3906 | 0.0359 | 0.0366 |

| PMNEr | 0.4697 | 0.3183 | 0.0014 | 0.0115 |

| PMNElc | 0.4719 | 0.3882 | 0.0285 | 0.0284 |

| GAEbest | 0.4298 | 0.4062 | 0.0402 | 0.0473 |

| GAEavg | 0.4442 | 0.4172 | 0.0413 | 0.0491 |

| ARGA | 0.4119 | 0.3685 | 0.0063 | - |

| DAEGC | - | - | - | - |

| SDCN | 0.4697 | 0.3183 | 0.0285 | 0.0284 |

| RMSC | 0.2702 | 0.3775 | 0.0054 | 0.0018 |

| PwMC | 0.2453 | 0.3164 | 0.0023 | 0.0017 |

| SwMC | 0.2671 | 0.3714 | 0.0056 | 0.0004 |

| O2MA | 0.4697 | 0.4229 | 0.0524 | 0.0753 |

| O2MAC | 0.4502 | 0.4159 | 0.0421 | 0.0564 |

| MvAGC | 0.5633 | 0.3783 | 0.0371 | 0.0940 |

| DIAGC | 0.5839 | 0.4301 | 0.0658 | 0.1316 |

V-D Comparison Experiments

In the comparison experiments, we run each method 10 times and report their average values in terms of ACC, F1, NMI and ARI in Table III, Table IV and Table V. From these tables, we have the following observations:

-

1.

The proposed DIAGC method achieves the best clustering performance compared with the baselines. These results verify that DIAGC could leverage the complementary information between different views to generate a more comprehensive representation for clustering.

-

2.

Compared with the earlier multi-view clustering methods, i.e., RMSC, PwMC and SwMC, most of deep learning-based methods achieve a large margin in terms of the evaluation metrics on the three multi-view attributed graph datasets. The results indicate that exploring the non-linearity relationships embedded in data is crucial for learning more discriminative representation.

-

3.

The GCN-based single-view clustering methods, i.e., GAE, AGRA, DAEGC and SDCN, perform much better than other single-view methods. The reason may be that GCN could incorporate both structural information embedded in the adjacent matrix and attribute information to mine the essential representation of attributed graph data.

-

4.

O2MA and O2MAC are both GCN-based multi-view attributed graph clustering methods. They utilize the consensus representation generated by GCNs as the deep representation for clustering and reconstruct adjacent matrices of all views from it. However, these two methods ignore the specific information of each view, which results in sub-optimal clustering performance. From Table III, it can be seen that the proposed DIAGC method performs much better than these two methods on all the benchmarks. Taking the ACM dataset as an example, it achieves 6.46% and 2.38% improvements in terms of NMI than O2MA and O2MAC, respectively. The reason is that the proposed DIAGC method could disentangle the consensus and the specific information learning procedures of each view. Such a process enables the network to generate a more clustering-friendly representation by eliminating the influence caused by the inherent specific information embedded in each view. With the help of specific information reconstruction module, the proposed DIAGC method could outperform all the deep baselines on the three datasets.

-

5.

On the IMDB dataset, all the baselines do not perform very well, which is caused by its sparsity. Even so, the proposed method can still achieve the best clustering performance on the IMDB dataset, which further validates its effectiveness.

In conclusion, the proposed DIAGC method can achieve more accurate clustering results than the existing state-of-the-art methods.

| Dateset | MIM | SIR | SC | ACC | F1 | NMI | ARI |

|---|---|---|---|---|---|---|---|

| ACM | ✓ | ✓ | 0.3901 | 0.3114 | 0.0609 | 0.007 | |

| ✓ | ✓ | 0.7709 | 0.7719 | 0.5117 | 0.4975 | ||

| ✓ | ✓ | 0.8955 | 0.8963 | 0.6603 | 0.7167 | ||

| ✓ | ✓ | ✓ | 0.9170 | 0.9177 | 0.7161 | 0.7697 | |

| DBLP | ✓ | ✓ | 0.2916 | 0.1909 | 0.0268 | 0.0010 | |

| ✓ | ✓ | 0.9295 | 0.9254 | 0.7706 | 0.8290 | ||

| ✓ | ✓ | 0.9300 | 0.9255 | 0.7708 | 0.8309 | ||

| ✓ | ✓ | ✓ | 0.9320 | 0.9273 | 0.7811 | 0.8357 | |

| IMDB | ✓ | ✓ | 0.5423 | 0.2614 | 0.0022 | 0.0089 | |

| ✓ | ✓ | 0.5073 | 0.4238 | 0.0509 | 0.1049 | ||

| ✓ | ✓ | 0.4473 | 0.4221 | 0.0561 | 0.0650 | ||

| ✓ | ✓ | ✓ | 0.5839 | 0.4301 | 0.0658 | 0.1316 |

V-E Ablation Study

To further validate the effectiveness of MIM, SIR and SC modules of the proposed DIAGC method, in this subsection, the ablation study is conducted and the experiment results are reported in Table VI. It is noteworthy that without the MIM module, the SC module is performed on the representation generated by the linear sum of all the deep representations, i.e., the SC module is performed on , and the final clustering results are obtained by performing the -means on . Without SIR module, the in Eq. (11) is reformulated as:

| (21) |

From Table VI, it can be seen that without the MIM module, the clustering results of DIAGC drop dramatically on all the benchmarks. The reason may be that is generated by linearly summing the deep representations of all views with the same weight, and such a naive learning strategy breaks the non-linear structure constructed by GNN, where there are only the low-level representations. In addition, the clustering results for all benchmarks are degraded in the absence of the SIR module, because the low-level representations computed by GCN without the SIR module are disturbed by the inherent specific information embedded in each view. Moreover, the effectiveness of the SC module is also validated and it improves the clustering results in terms of NMI by 5.58%, 1.03% and 0.97% on the ACM, DBLP and IMDB datasets, respectively. These results demonstrate that all the modules in the proposed DIAGC method are essential to improve the clustering performance.

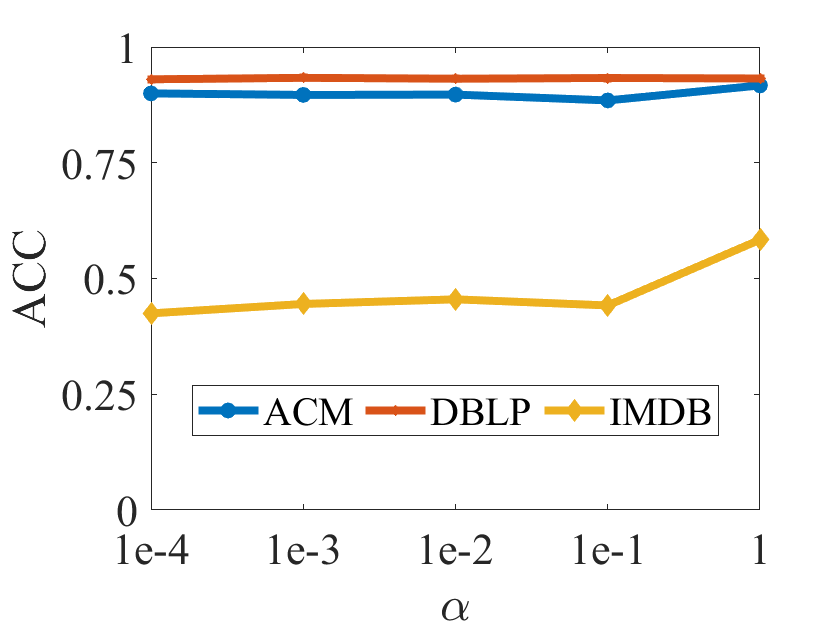

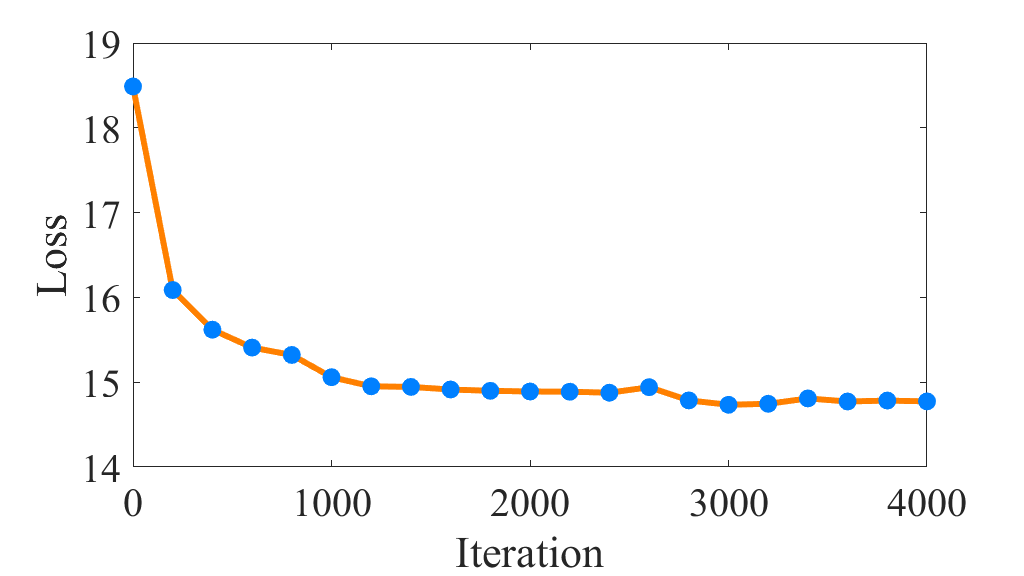

V-F Parameter Analysis

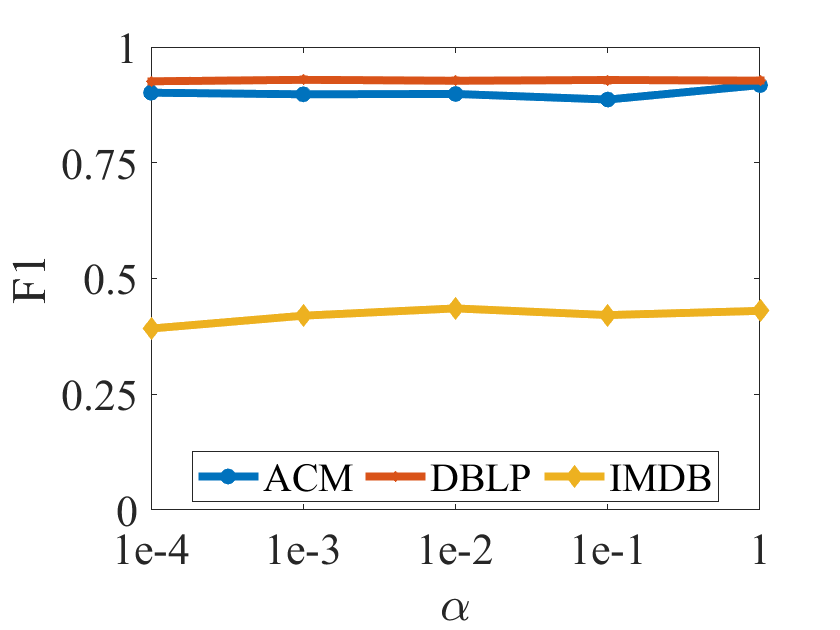

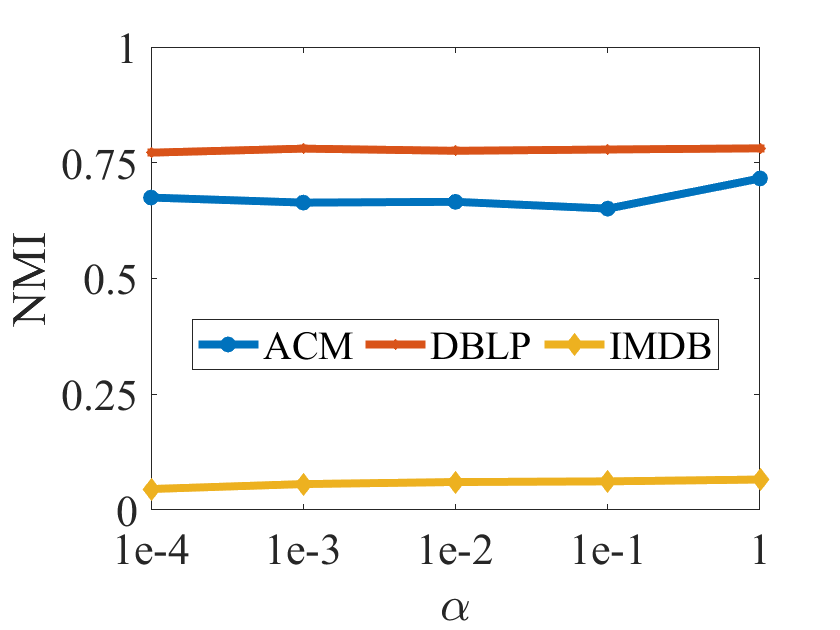

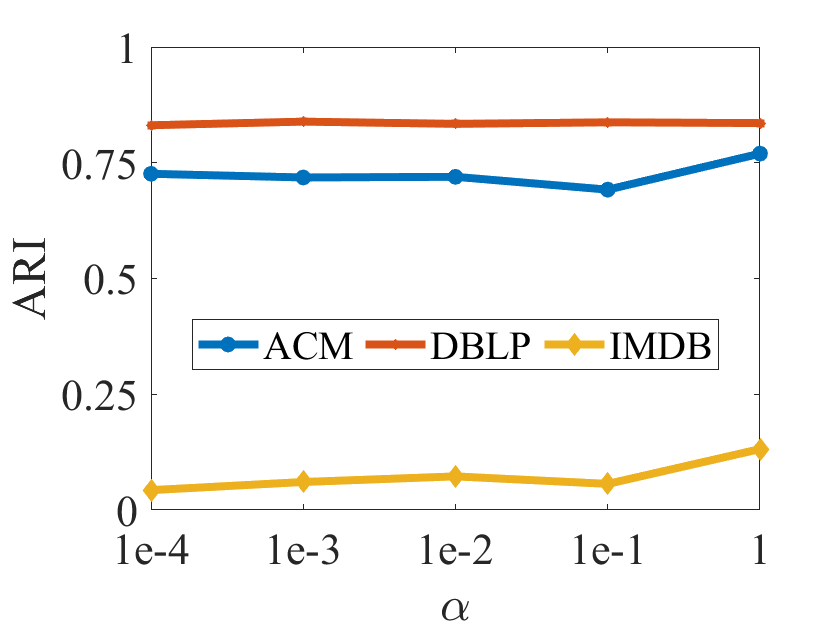

In this subsection, we would conduct the parameter analysis on all the benchmarks with the hyper-parameter , which is tuned in the range of . Figure 3 plots the clustering results in terms of ACC, F1, NMI and ARI on all the benchmarks, respectively. According to Figure 3, we can observe that the clustering performance of DIAGC is relatively stable over all the benchmarks with different values of , which indicates the robustness of DIAGC to obtain the promising clustering performance.

V-G Visualization

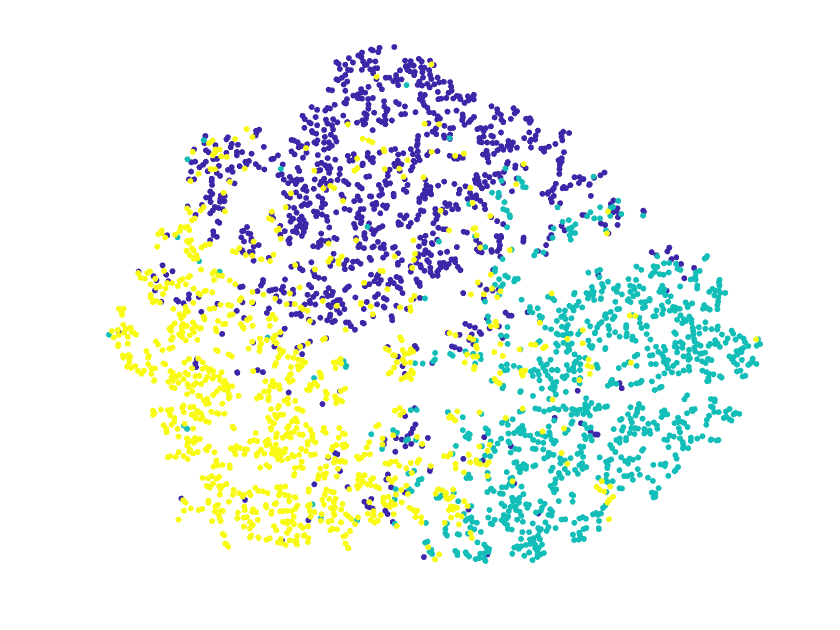

The visualization experiments of the proposed method compared with some baselines will be presented in this subsection to validate the superiority of the proposed DIAGC method. Specifically, we project the deep representations learned from the different models into a two-dimensional space. Then, the t-SNE visualization method is performed with all the representations on the ACM dataset. As shown in Figure 4, the DEAGC method does not perform well on the ACM dataset as it could not divide the data appropriately. The O2MAC method could separate the green data points from the rest of the data, but the other clusters are still blurry. One reason may be that the O2MAC method can only encode one view to calculate the deep representation, without considering the complementary information from different views. From Figure 4(c), it can be seen that the cluster structures obtained by DIAGC are generally clear, which validates the effectiveness of the proposed method.

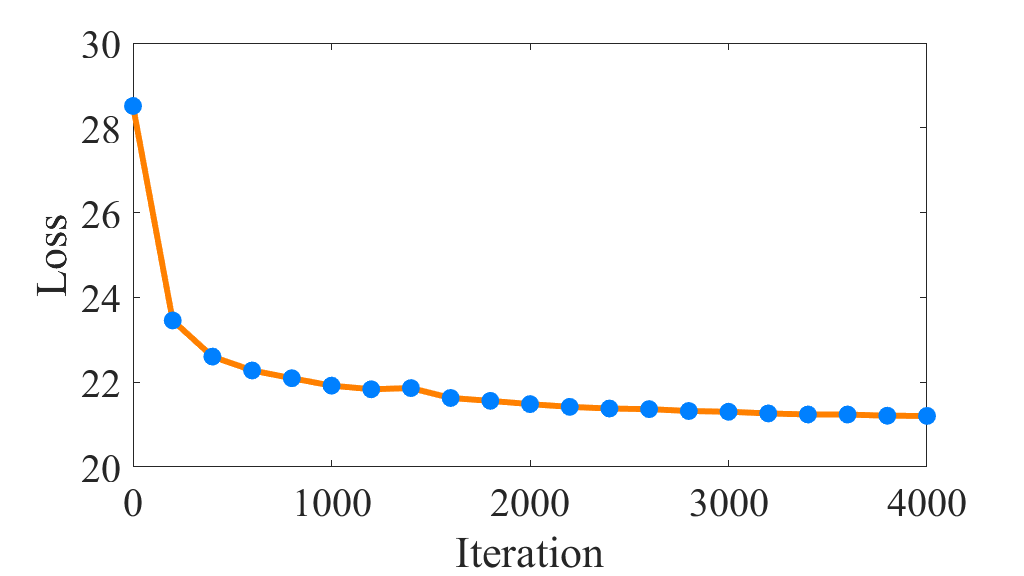

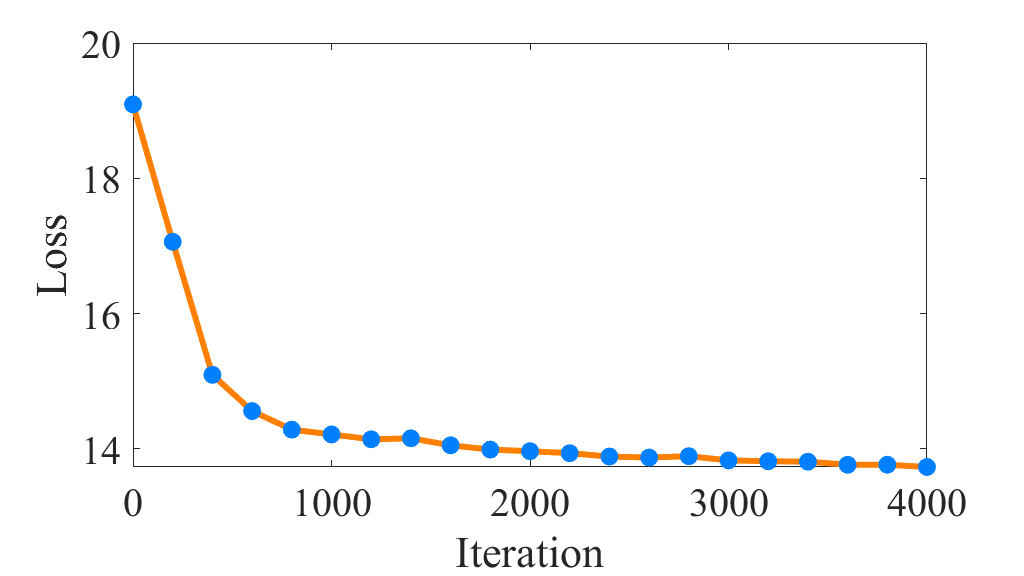

V-H Convergence Analysis

In this subsection, the convergence property of the proposed DIAGC method would be verified. Specifically, we plot the convergence curves about Eq. (11) versus iterations in Figure 5. As shown in Figure 5, the convergence curves are generally monotonically decreasing except for the small amplitude fluctuation caused by . Since the clustering centroids of are recalculated at each iteration, some bias would be inevitably introduced, resulting in that the between the soft label distribution and the target distribution is not always decreasing. Even though, the overall convergence curves of the proposed DIAGC method are decreasing and remain steady on all the benchmarks, which indicates the desirable convergence property of the proposed method.

VI Conclusions

In this paper, a novel Dual Information enhanced multi-view Attributed Graph Clustering (DIAGC) method is proposed. To the best of our knowledge, this is the first attempt to disentangle the consensus and specific information learning. Specifically, the proposed DIAGC method introduces the specific information reconstruction module to disentangle the exploration of the consensus and specific information, which enables the GCN encoder to calculate the more essential low-level representations. Further, the latent high-level representation is recovered from the low-level ones, and the consensus information of all views is retained by the mutual information maximization module. Finally, the self-supervised clustering module is employed to enforce the high-level representation to be the clustering-oriented one. The experimental results on several real-world benchmarks demonstrate the effectiveness of the proposed method.

References

- [1] B. J. Frey and D. Dueck, “Clustering by passing messages between data points,” Science, vol. 315, no. 5814, pp. 972–976, 2007.

- [2] Q. Song, J. Ni, and G. Wang, “A fast clustering-based feature subset selection algorithm for high-dimensional data,” IEEE Trans. Knowl. Data Eng., vol. 25, no. 1, pp. 1–14, 2013.

- [3] P. Chatterjee and P. Milanfar, “Clustering-based denoising with locally learned dictionaries,” IEEE Trans. Image Process., vol. 18, no. 7, pp. 1438–1451, 2009.

- [4] J. Fisher, P. Christen, Q. Wang, and E. Rahm, “A clustering-based framework to control block sizes for entity resolution,” in SIGKDD, 2015, pp. 279–288.

- [5] J. Dai and S. Wang, “Clustering-based spectrum sharing strategy for cognitive radio networks,” IEEE J. Sel. Areas Commun., vol. 35, no. 1, pp. 228–237, 2017.

- [6] Y. Zhang, E. Chen, B. Jin, H. Wang, M. Hou, W. Huang, and R. Yu, “Clustering based behavior sampling with long sequential data for CTR prediction,” in SIGIR, 2022, pp. 2195–2200.

- [7] M. Chen, J.-Q. Lin, X.-L. Li, B.-Y. Liu, C.-D. Wang, D. Huang, and J.-H. Lai, “Representation learning in multi-view clustering: A literature review,” Data Sci. Eng., vol. 7, no. 3, pp. 225–241, 2022.

- [8] Z. Lin and Z. Kang, “Graph filter-based multi-view attributed graph clustering,” in IJCAI, 2021, pp. 2723–2729.

- [9] L. Shu and L. J. Latecki, “Integration of single-view graphs with diffusion of tensor product graphs for multi-view spectral clustering,” in ACML, vol. 45, 2015, pp. 362–377.

- [10] F. Nie, J. Li, and X. Li, “Parameter-Free auto-weighted multiple graph learning: A framework for multiview clustering and semi-supervised classification,” in IJCAI, 2016, pp. 1881–1887.

- [11] C. Hou, F. Nie, H. Tao, and D. Yi, “Multi-View unsupervised feature selection with adaptive similarity and view weight,” IEEE Trans. Knowl. Data Eng., vol. 29, no. 9, pp. 1998–2011, 2017.

- [12] A. Kumar, P. Rai, and H. D. III, “Co-regularized multi-view spectral clustering,” in NIPS, 2011, pp. 1413–1421.

- [13] R. Xia, Y. Pan, L. Du, and J. Yin, “Robust multi-view spectral clustering via low-rank and sparse decomposition,” in AAAI, 2014, pp. 2149–2155.

- [14] C.-D. Wang, J.-H. Lai, and P. S. Yu, “Multi-View clustering based on belief propagation,” IEEE Trans. Knowl. Data Eng., vol. 28, no. 4, pp. 1007–1021, 2016.

- [15] Y. Chen, X. Xiao, Z. Hua, and Y. Zhou, “Adaptive transition probability matrix learning for multiview spectral clustering,” IEEE Trans. Neural Networks Learn. Syst., vol. 33, no. 9, pp. 4712–4726, 2022.

- [16] P. Zhang, X. Liu, J. Xiong, S. Zhou, W. Zhao, E. Zhu, and Z. Cai, “Consensus one-step multi-view subspace clustering,” IEEE Trans. Knowl. Data Eng., vol. 34, no. 10, pp. 4676–4689, 2022.

- [17] S. Wang, X. Liu, X. Zhu, P. Zhang, Y. Zhang, F. Gao, and E. Zhu, “Fast parameter-free multi-view subspace clustering with consensus anchor guidance,” IEEE Trans. Image Process., vol. 31, pp. 556–568, 2022.

- [18] S. Liu, S. Wang, P. Zhang, K. Xu, X. Liu, C. Zhang, and F. Gao, “Efficient one-pass multi-view subspace clustering with consensus anchors,” in AAAI, 2022, pp. 7576–7584.

- [19] J. Lv, Z. Kang, B. Wang, L. Ji, and Z. Xu, “Multi-view subspace clustering via partition fusion,” Inf. Sci., vol. 560, pp. 410–423, 2021.

- [20] X. Xiao, Y. J. Gong, Z. Hua, and W. N. Chen, “On reliable multi-view affinity learning for subspace clustering,” IEEE Trans. Multim., vol. 23, pp. 4555–4566, 2021.

- [21] C.-D. Wang, M. Chen, L. Huang, J.-H. Lai, and P. S. Yu, “Smoothness regularized multiview subspace clustering with kernel learning,” IEEE Trans. Neural Networks Learn. Syst., vol. 32, no. 11, pp. 5047–5060, 2021.

- [22] Q. Gao, W. Xia, Z. Wan, D. Y. Xie, and P. Zhang, “Tensor-SVD based graph learning for multi-view subspace clustering,” in AAAI, 2020, pp. 3930–3937.

- [23] Y. Chen, S. Wang, C. Peng, Z. Hua, and Y. Zhou, “Generalized nonconvex low-rank tensor approximation for multi-view subspace clustering,” IEEE Trans. Image Process., vol. 30, pp. 4022–4035, 2021.

- [24] X. Liu, X. Zhu, M. Li, L. Wang, C. Tang, J. Yin, D. Shen, H. Wang, and W. Gao, “Late fusion incomplete multi-view clustering,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 41, no. 10, pp. 2410–2423, 2019.

- [25] X. Peng, Z. Huang, J. Lv, H. Zhu, and J. T. Zhou, “COMIC: multi-view clustering without parameter selection,” in ICML, vol. 97, 2019, pp. 5092–5101.

- [26] P. Zhou, L. Du, X. Liu, Y. D. Shen, M. Fan, and X. Li, “Self-paced clustering ensemble,” IEEE Trans. Neural Networks Learn. Syst., vol. 32, no. 4, pp. 1497–1511, 2021.

- [27] F. Nie, J. Li, and X. Li, “Self-weighted multiview clustering with multiple graphs,” in IJCAI, 2017, pp. 2564–2570.

- [28] H. Wang, Y. Yang, and B. Liu, “GMC: graph-based multi-view clustering,” IEEE Trans. Knowl. Data Eng., vol. 32, no. 6, pp. 1116–1129, 2020.

- [29] B.-Y. Liu, L. Huang, C.-D. Wang, S. Fan, and P. S. Yu, “Adaptively weighted multiview proximity learning for clustering,” IEEE Trans. Cybern., vol. 51, no. 3, pp. 1571–1585, 2021.

- [30] Y. Liang, D. Huang, C.-D. Wang, and P. S. Yu, “Multi-View graph learning by joint modeling of consistency and inconsistency,” IEEE Trans. Neural Networks Learn. Syst., pp. 1–15, 2022.

- [31] F. Nie, S. Shi, J. Li, and X. Li, “Implicit weight learning for multi-view clustering,” IEEE Trans. Neural Networks Learn. Syst., pp. 1–14, 2021.

- [32] Y. Chen, S. Wang, X. Xiao, Y. Liu, Z. Hua, and Y. Zhou, “Self-Paced enhanced low-rank tensor kernelized multi-view subspace clustering,” IEEE Trans. Multim., vol. 24, pp. 4054–4066, 2022.

- [33] Y. Chen, X. Xiao, C. Peng, G. Lu, and Y. Zhou, “Low-Rank tensor graph learning for multi-view subspace clustering,” IEEE Trans. Circuits Syst. Video Technol., vol. 32, no. 1, pp. 92–104, 2022.

- [34] L. Fu, J. Yang, C. Chen, and C. Zhang, “Low-rank tensor approximation with local structure for multi-view intrinsic subspace clustering,” Inf. Sci., vol. 606, pp. 877–891, 2022.

- [35] J.-Q. Lin, M.-S. Chen, C.-D. Wang, and H. Zhang, “A tensor approach for uncoupled multiview clustering,” IEEE Trans. Cybern., pp. 1–14, 2022.

- [36] W. Xia, S. Wang, M. Yang, Q. Gao, J. Han, and X. Gao, “Multi-view graph embedding clustering network: Joint self-supervision and block diagonal representation,” Neural Networks, vol. 145, pp. 1–9, 2022.

- [37] S. Suresh, P. Li, C. Hao, and J. Neville, “Adversarial graph augmentation to improve graph contrastive learning,” in NIPS, 2021, pp. 15 920–15 933.

- [38] D. Bo, X. Wang, C. Shi, M. Zhu, E. Lu, and P. Cui, “Structural deep clustering network,” in WWW, 2020, pp. 1400–1410.

- [39] T. N. Kipf and M. Welling, “Variational graph auto-encoders,” CoRR, vol. abs/1611.07308, 2016.

- [40] X. Cao, C. Zhang, H. Fu, S. Liu, and H. Zhang, “Diversity-induced multi-view subspace clustering,” in CVPR, 2015, pp. 586–594.

- [41] C. Tang, X. Zhu, X. Liu, M. Li, P. Wang, C. Zhang, and L. Wang, “Learning a joint affinity graph for multiview subspace clustering,” IEEE Trans. Multim., vol. 21, no. 7, pp. 1724–1736, 2019.

- [42] G. Y. Zhang, Y. R. Zhou, X. He, C. D. Wang, and D. Huang, “One-step kernel multi-view subspace clustering,” Knowl. Based Syst., vol. 189, 2020.

- [43] T. Zhou, C. Zhang, X. Peng, H. Bhaskar, and J. Yang, “Dual shared-specific multiview subspace clustering,” IEEE Trans. Cybern., vol. 50, no. 8, pp. 3517–3530, 2020.

- [44] Y. Xie, D. Tao, W. Zhang, Y. Liu, L. Zhang, and Y. Qu, “On unifying multi-view self-representations for clustering by tensor multi-rank minimization,” Int. J. Comput. Vis., vol. 126, no. 11, pp. 1157–1179, 2018.

- [45] J. Wu, Z. Lin, and H. Zha, “Essential tensor learning for multi-view spectral clustering,” IEEE Trans. Image Process., vol. 28, no. 12, pp. 5910–5922, 2019.

- [46] M.-S. Chen, C.-D. Wang, and J.-H. Lai, “Low-rank tensor based proximity learning for multi-view clustering,” IEEE Transactions on Knowledge and Data Engineering, pp. 1–1, 2022.

- [47] S. Pan, R. Hu, G. Long, J. Jiang, L. Yao, and C. Zhang, “Adversarially regularized graph autoencoder for graph embedding,” in IJCAI, 2018, pp. 2609–2615.

- [48] C. Wang, S. Pan, R. Hu, G. Long, J. Jiang, and C. Zhang, “Attributed graph clustering: A deep attentional embedding approach,” in IJCAI, 2019, pp. 3670–3676.

- [49] D. Sun, D. Li, Z. Ding, X. Zhang, and J. Tang, “Dual-decoder graph autoencoder for unsupervised graph representation learning,” Knowl. Based Syst., vol. 234, p. 107564, 2021.

- [50] J. Xie, R. B. Girshick, and A. Farhadi, “Unsupervised deep embedding for clustering analysis,” in ICML, vol. 48, pp. 478–487.

- [51] J. Cheng, Q. Wang, Z. Tao, D. Y. Xie, and Q. Gao, “Multi-view attribute graph convolution networks for clustering,” in IJCAI, 2020, pp. 2973–2979.

- [52] S. Fan, X. Wang, C. Shi, E. Lu, K. Lin, and B. Wang, “One2Multi graph autoencoder for multi-view graph clustering,” in WWW, 2020, pp. 3070–3076.

- [53] P. Velickovic, W. Fedus, W. L. Hamilton, P. Liò, Y. Bengio, and R. D. Hjelm, “Deep graph infomax,” in ICLR (Poster), 2019.

- [54] T. Zhao, Y. Liu, L. Neves, O. J. Woodford, M. Jiang, and N. Shah, “Data augmentation for graph neural networks,” in AAAI, 2021, pp. 11 015–11 023.

- [55] E. Pan and Z. Kang, “Multi-view contrastive graph clustering,” in NIPS, 2021, pp. 2148–2159.

- [56] T. Wu, H. Ren, P. Li, and J. Leskovec, “Graph information bottleneck,” in NIPS, 2020.

- [57] J. Yu, T. Xu, Y. Rong, Y. Bian, J. Huang, and R. He, “Graph information bottleneck for subgraph recognition,” in ICLR, 2021.

- [58] L. Gong, S. Zhou, W. Tu, and X. Liu, “Attributed graph clustering with dual redundancy reduction,” in IJCAI, 2022, pp. 3015–3021.

- [59] L. van der Maaten and G. Hinton, “Visualizing data using t-sne,” JMLR, vol. 9, no. 86, pp. 2579–2605, 2008.

- [60] J. Tang, J. Zhang, L. Yao, J. Li, L. Zhang, and Z. Su, “ArnetMiner: extraction and mining of academic social networks,” in SIGKDD, 2008, pp. 990–998.

- [61] S. Pan, J. Wu, X. Zhu, C. Zhang, and Y. Wang, “Tri-Party deep network representation,” in IJCAI, 2016, pp. 1895–1901.

- [62] J. Tang, M. Qu, M. Wang, M. Zhang, J. Yan, and Q. Mei, “LINE: large-scale information network embedding,” in WWW, 2015, pp. 1067–1077.

- [63] H. Zhang, L. Qiu, L. Yi, and Y. Song, “Scalable multiplex network embedding,” in IJCAI, 2018, pp. 3082–3088.

- [64] W. Liu, P. Y. Chen, S. Yeung, T. Suzumura, and L. Chen, “Principled multilayer network embedding,” in ICDM Workshops, 2017, pp. 134–141.