EC12219

Applications of optimal nonlinear control to a whole-brain network of FitzHugh-Nagumo oscillators

Abstract

We apply the framework of optimal nonlinear control to steer the dynamics of a whole-brain network of FitzHugh-Nagumo oscillators. Its nodes correspond to the cortical areas of an atlas-based segmentation of the human cerebral cortex, and the inter-node coupling strengths are derived from Diffusion Tensor Imaging data of the connectome of the human brain. Nodes are coupled using an additive scheme without delays and are driven by background inputs with fixed mean and additive Gaussian noise. Optimal control inputs to nodes are determined by minimizing a cost functional that penalizes the deviations from a desired network dynamic, the control energy, and spatially non-sparse control inputs. Using the strength of the background input and the overall coupling strength as order parameters, the network’s state-space decomposes into regions of low and high activity fixed points separated by a high amplitude limit cycle all of which qualitatively correspond to the states of an isolated network node. Along the borders, however, additional limit cycles, asynchronous states and multistability can be observed. Optimal control is applied to several state-switching and network synchronization tasks, and the results are compared to controllability measures from linear control theory for the same connectome. We find that intuitions from the latter about the roles of nodes in steering the network dynamics, which are solely based on connectome features, do not generally carry over to nonlinear systems, as had been previously implied. Instead, the role of nodes under optimal nonlinear control critically depends on the specified task and the system’s location in state space. Our results shed new light on the controllability of brain network states and may serve as an inspiration for the design of new paradigms for non-invasive brain stimulation.

I Introduction

The widespread use of noninvasive electrical brain stimulation in clinical applications has sparked ongoing interest in studying the effects of external inputs on brain activity. Stimulation with electric fields in the range of V/m can already modulate oscillatory brain activity [1, 2], affect cross-regional synchronization [3, 4], and modulate cognitive performance [5]. Clinical studies have successfully demonstrated the efficacy of targeted transcranial stimulation in the treatment of neurological and psychiatric disorders and diseases such as epilepsy [6, 7], schizophrenia [8, 9], Alzheimer’s disease [10] and depression [11].

Brain network models offer a way to simulate and understand the human brain as a nonlinear dynamical system, in which each brain region is represented by a node, and the node dynamics is defined by a model of the average neural activity in that region [12]. Nodes interact with each other according to empirically measured human structural neural connectivity, which quantifies how neural activity in one brain region is coupled to the activity of connected regions.

Parcellation of human brains has yielded various brain atlases [13], which provide information on spatial and functional segregation, dividing the brain into distinct areas [14, 15]. The relative connectivity strength between these areas is defined by the number of axonal fibers, which can be estimated using structural neuroimaging scans of individual subjects. This results in a network model of the human brain where the edges reflect the relative strengths of axonal fiber bundles and the nodes represent individual brain regions. These nodes are then equipped with a dynamical system modeling the average neuronal activity in that region [16]. It has been repeatedly shown that when the parameters of brain network models are fitted to functional brain data, optimal operating points were close to the bifurcation lines of these models. This ensures that the model is in a state in which noise fluctuations can be amplified and produce realistic spatial correlation structures which are similar to empirical functional connectivity measurements [17, 18, 19, 20].

Previous theoretical investigations into the impact of external perturbations mostly relied on the assumption of a linear node dynamics, allowing the application of methods from linear control theory [21]. Thus, one can draw conclusions on the effects of external inputs to the system, based on the network topology and independent of its dynamical state [22, 23]. On the other hand, linear node dynamics cannot reproduce the dynamics of neural processes close to bifurcations [17, 24]. In order to describe the transitions from one dynamical regime to another, Muldoon et al. [25] consider nonlinear node dynamics. They conclude that the effects of stimulation-based control can be predicted by diagnostics from linear control theory based only on the structural connectivity of the network.

In this work we go beyond linear control theory and explore the framework of optimal nonlinear control [26] for the assessing the impact of perturbations on networks of coupled nonlinear systems. Optimal control is an optimization method that derives control policies based on the minimization of a cost functional which depends on the state and control variables. Here, we define a cost functional that penalizes the deviation to the desired network dynamics, the control energy, and the spatial sparsity. The latter allows us to find optimal control signals that apply to only a few control sites, as introduced by [27] and further studied by [28]. We used methods presented by Tröltzsch in [29] for their calculation, while the handling of the stochastic term is based on the work of Stannat et al. [30]. The resulting mathematical formulation is analogous to the formulation presented by Casas et al. [31] for partial differential equations.

We evaluate the framework of optimal control for a brain network of FitzHugh-Nagumo (FHN) [32, 33] oscillators with additive coupling and white Gaussian noise, where each oscillator represents a brain region and where the connections between them were chosen according to the human connectome derived from diffusion tensor imaging (DTI) measurements. FHN oscillators are well studied models for neural dynamics and detailed analyses of the dynamical states exist for single oscillators [34] and various network configurations, like two coupled units [35, 36], lattices [37], rings and hierarchical architectures [38], as well as random and small-world topologies [39, 40] and multiplex networks [41, 42, 43]. Similar empirical DTI-measured brain connectivities were used with FHN dynamics to model highly synchronized epileptic-seizure-like states [44, 45], unihemispheric sleep [46], and the functional organization of the resting brain [47, 48, 49].

We consider two different classes of control problems, targeted attractor switching between multistable network states and increasing network synchronization. The solutions obtained for given energy, precision, and sparseness constraints are well interpretable and result in intuitively sensible optimal control inputs for all network nodes over time.

We then correlate the control energies to controllability measures from linear control theory. We confirm the findings of Muldoon et al. [25] for the investigated state transition (from the low fixed-point state to the oscillatory regime). For other control tasks, however, we show, that diagnostics from linear control theory do not correlate with results from optimal nonlinear control. Conclusions drawn from the structural connectivity alone lead to contradictions, which can only be resolved if the dynamical state of the network and the nonlinear interactions between nodes are taken into account. Applications of nonlinear control theory to whole-brain models enables us to investigate control mechanisms also close to bifurcations. It thus may help in the search for more effective paradigms for realistic transcranial brain stimulation protocols.

II Nonlinear optimal control

II.1 Network model and control inputs

We consider networks of equivalent -dimensional and “noisy” nodes with additive and zero-delay internode coupling. Internode coupling strength is described by a dimensional adjacency matrix which is scaled by a global coupling strength . We allow for an instantaneous and additive control input to the network, which is described by independent control variables with . The equations describing the controlled network dynamics thus read:

| (1) |

where denotes the Kronecker product. The state of the network is described by a vector , where characterize the individual states of the nodes. The local node dynamics is given by with . All nodes additionally receive Gaussian white noise of similar intensity , which may be correlated within but is uncorrelated across the nodes of the network. The stochastic variables with are independently drawn from a Gaussian distribution, , with zero mean and unit variance. Within node correlations are quantified by the dimensional local noise scheme , while the across-node statistical independence is assured by a N-dimensional identity matrix . The internode coupling term consists of the Kronecker product of the adjacency matrix and the dimensional local coupling scheme , where the purpose of the former is to describe the relative interaction strength between nodes while the purpose of the latter is to distribute the between-node interactions across the variables which describe the local node dynamics. The matrix in the control term allows for the control of multiple nodes with different strength. The dimensional matrix implements the local control scheme, which is similar for every node in the network. Initial conditions are denoted by .

II.2 The cost functional and the optimality condition

The control is considered to be optimal (), if it minimizes an appropriate cost functional. To this end, we construct a cost functional for a state switching task, where the control input drives the network model from one stable state to another (see Section IV.1), and for a node synchronization task, where the control input increases the degree of synchronization among its nodes (see Section IV.2). For finite noise, i.e. for finite values of in Eq. (1), the overall cost functional is defined as a mean over noise realizations [30],

| (2) |

where denotes the cost functional for one noise realization and the angle brackets denote the mean. In the noise-free case no averaging is performed. The state dependent term only implicitly depends on the control and penalizes the deviation from the desired output. It will be different for the switching, , and synchronization, , tasks (see below). The control dependent term accounts for the cost of the control itself.

For the state switching task, we consider a noise-free system (), and the state dependent cost functional is defined in terms of the deviation of the controlled state to a predefined target state :

| (3) | ||||

where we consider the control being active within the time period . In order to penalize the precision only towards the end of the controlled time interval rather than during the transition between the initial and target states, the precision-penalizing variable can be time-dependent (see Section IV.1).

The state dependent cost functional for the synchronization task is defined in terms of the deviations of the normalized pairwise cross-correlations for all nodes and from , the desired mean cross-correlation in the synchronized target state:

| (4) |

The cross-correlation in component is defined as

| (5) |

with and where and denotes the temporal mean and the standard deviation. The mean over all values

| (6) |

is the component-wise network cross-correlation. Later we specialize to and suppress the index .

The input dependent cost functional penalizes the energy of the control signal and enforces its directional sparsity [27, 28]. It is given by

| (7) |

where and are the weights for the energy and sparsity terms. The first term corresponds to the L2-regulation and the second term to the L1-regulation of the cost-functional. Typically, increasing the sparsity reduces the number of controlled nodes, i.e. the nodes for which the control input is non-zero for at least part of the time period , while a higher penalty on the energy term typically leads to an overall reduction of control strengths.

Our goal is to find the optimal control that minimizes the cost functional for chosen , , and , leading to the minimization problem

| (8) |

Similarly to Troeltzsch et al. and Casas et al. [31, 29, 50], we analyze the gradient of the cost functional, which has to vanish when evaluated at the optimal control . By applying the method of Lagrange multipliers, we obtain an expression for the optimality condition that depends on the adjoint states corresponding to the Lagrange multipliers. The control is optimal, if

| (9) |

for , where is the derivative of the sparsity term, Eq. (7), with respect to the control inputs . The adjoint states are governed by a set of linear differential equations:

| (10) |

where is the Jacobian matrix of the state equations of the dynamical system, and is the integrand of the cost functional, . The adjoint state satisfies the boundary condition , and the differential equation is solved backwards in time.

II.3 Numerical solution of the minimization problem

The optimization problem is numerically solved using the conjugate gradient method. We integrate the equations for the network state and the adjoint given in Eqs. (1) and (10) (see Appendix B for details). The direction for each step of the conjugate gradient algorithm is defined by the Polak-Ribiere method [51], while its step size is derived using simple bisection [52]. We apply the Fletcher and Reeves algorithm [53] as presented in the following.

We initialize at iteration by choosing an initial control and drawing noise realizations . The corresponding states , Eq. (1), and adjoint states , Eq. (10), are calculated for every noise realization. Then as given in Eq. (9) is evaluated. The descent direction is initialized with . We then iterate until convergence:

-

while :

-

1.

compute the step size using bisection

-

2.

set

-

3.

calculate the states and the adjoint states for every noise realization and compute the mean

-

4.

evaluate the gradient

-

5.

compute using the Polak-Ribiere method:

-

6.

set the direction

-

7.

if is not a descent direction, set

-

8.

set

-

1.

Gradient-based optimization is only guaranteed to converge to local optima of the cost function. In general, however, multiple initial conditions converged to the same optimum. The only exception occurred for the state-switching task for high values of the sparseness parameter , for which a solution with finite values of coexists with the solution (see Appendix B for details).

III The whole-brain network

III.1 The local node dynamics

We consider a single FitzHugh-Nagumo (FHN) oscillator, with the activity variable and the linear recovery variable :

| (13) |

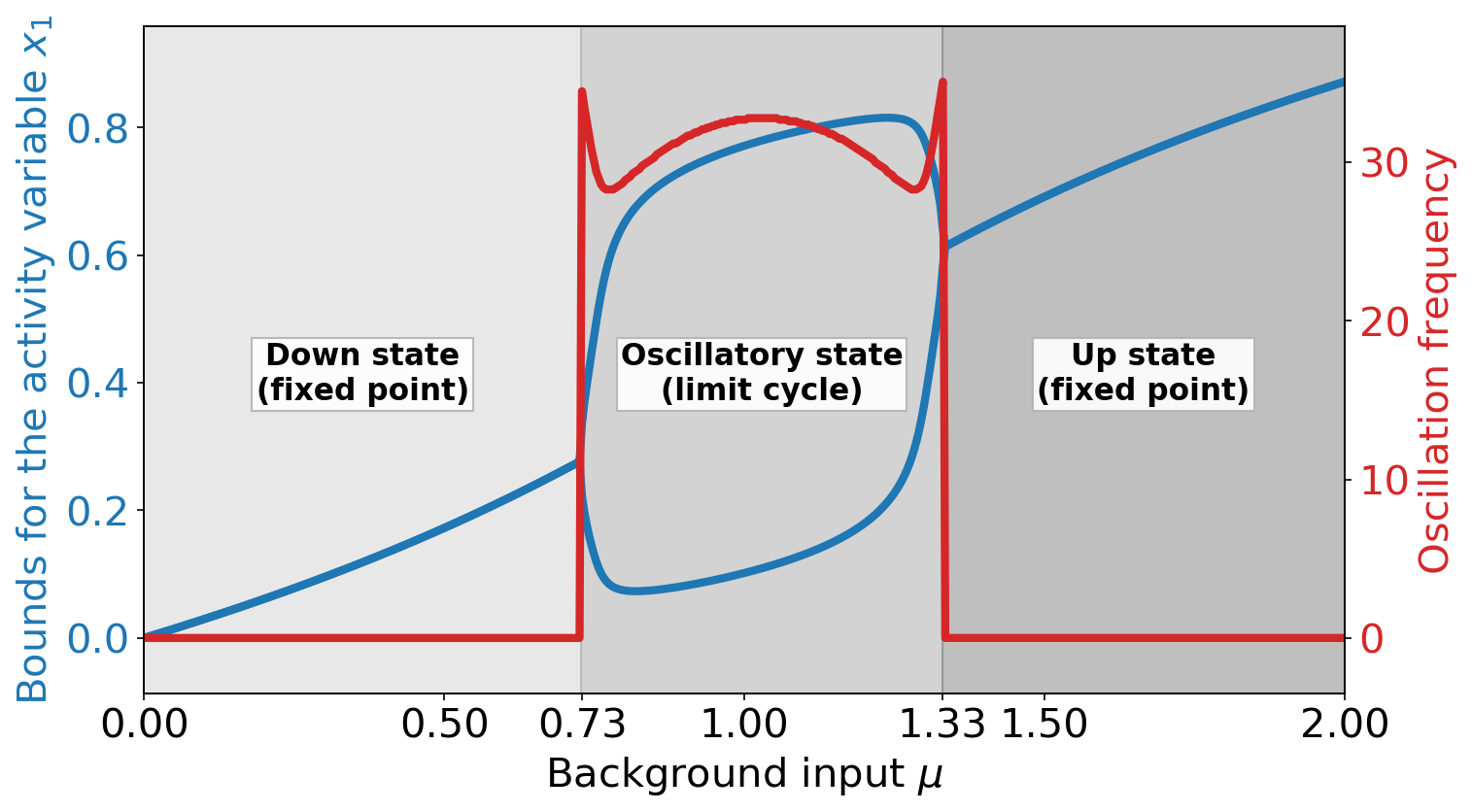

where is a node-independent, constant background input and . The parameters in are chosen to obtain the bifurcation diagram shown in Fig. 1. This bifurcation diagram, depending on the background input , shows three distinct states. In the down state, the node is in a stable fixed point and has a low constant value of the activity variable (in blue). In the oscillatory state, the activity variable oscillates at an input-dependent frequency (in red). In the up state, the node is again in a stable fixed point with a high constant value of the activity variable .

The succession of these states in the single node dynamics – a supercritical Andronov-Hopf bifurcation from the down state to the oscillatory state and another supercritical Andronov-Hopf bifurcation from the oscillatory state to the up state [34, 54] – closely resembles the states found in large random networks of excitatory and inhibitory spiking neuron models and their corresponding mean-field description [55]. The mean firing rate of these models changes as a function of background input in a way which is qualitatively similar to the value of the activity variable shown in Fig. 1. For this reason one can interpret the value of the activity variable as the difference between the output firing rate of a cortical node to a baseline value.

III.2 The brain network model

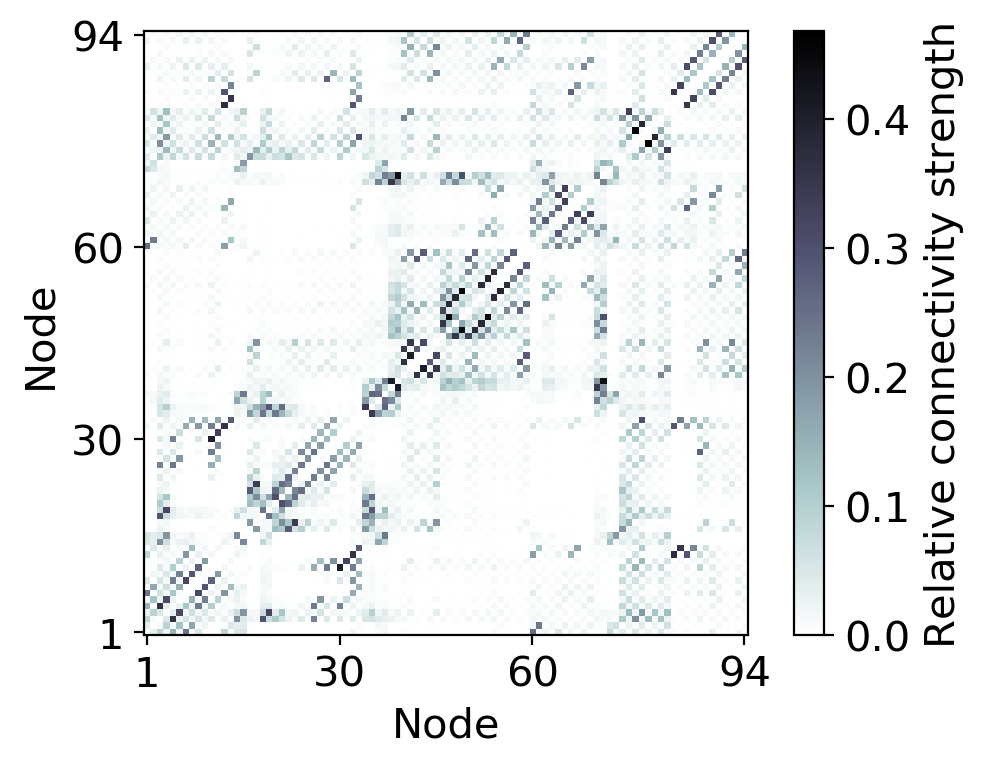

The topology of the whole-brain network model is derived from diffusion tensor imaging (DTI) data of 12 human subjects (all male and 26-30 years old) from the Human Connectome Project [56] (see Appendix D for subject IDs). The nodes in the network correspond to the cortical and subcortical regions defined by the AAL2 atlas-based segmentation [14] (cerebellum excluded). We performed probabilistic fiber tracking (using the Functional Magnetic Resonance Imaging of the Brain Software Library (FSL) [57], details on the processing pipeline in Appendix D) to determine the relative connection strength (edges) between these brain regions (nodes). The pairwise structural connectivity is summarized in the weighted adjacency matrix shown in Fig. 2. Since DTI data carries no directional information, must be symmetric. For the following, we define the weighted degree of a node as the sum over all afferent connection strengths, i.e. .

As motivated in Section III.1, the dynamics of each node in the network is described by a FHN oscillator [Eq. (13)]. The general network dynamics, Eq. (1), then simplifies to

| (14) |

for all nodes . This implies setting the control matrix , which means that all individual nodes can potentially receive independent control inputs. The local coupling, control, and noise schemes are set to , i.e. the indvidual FHN nodes receive node-external inputs only through their activity variables .

We do not consider finite delays for reasons of simplicity. Finite delays induce additional dynamical states. Although of high interest in terms of whole-brain modeling, these would not add to the conclusions drawn in Sections IV and V about the impact of non-linear optimal control and its comparison with diagnostics [23] derived from linear control and based on connectome properties only. Please refer to Section VI for a discussion of the biological plausibility of this assumption.

III.3 State space exploration

In this section, we describe and characterize the different dynamical states that can emerge in a whole-brain network of coupled FHN oscillators for a large range of parameter configurations. Having such an overview of the dynamical landscape of the system will allow us to formulate well defined control tasks in Section IV. Throughout the state space exploration we do not apply any control input [].

Initially we consider the noise free case (). Here, only two free model parameters remain: the global coupling strength and the time independent background input which is the same for each FHN oscillator. The state space of the FHN network is explored by simulating the network dynamics for wide ranges of these parameters (see Appendix B for details). The initial conditions are drawn randomly from a uniform distribution in the interval or taken as the state vector of the last time step of the previous simulation with same and slightly smaller (continuation). In order to avoid analyzing transient effects, we show and evaluate the network states only after a sufficiently long transient time .

If oscillations are present, the dynamical state is characterized by the strength of synchronization quantified by the total cross-correlation, Eq. (6), and by the dominant frequency . The latter is given by the frequency of the highest peak of the combined power spectrum of all nodes,

| (15) |

where with Fourier transform , denotes the power spectral density of an individual node.

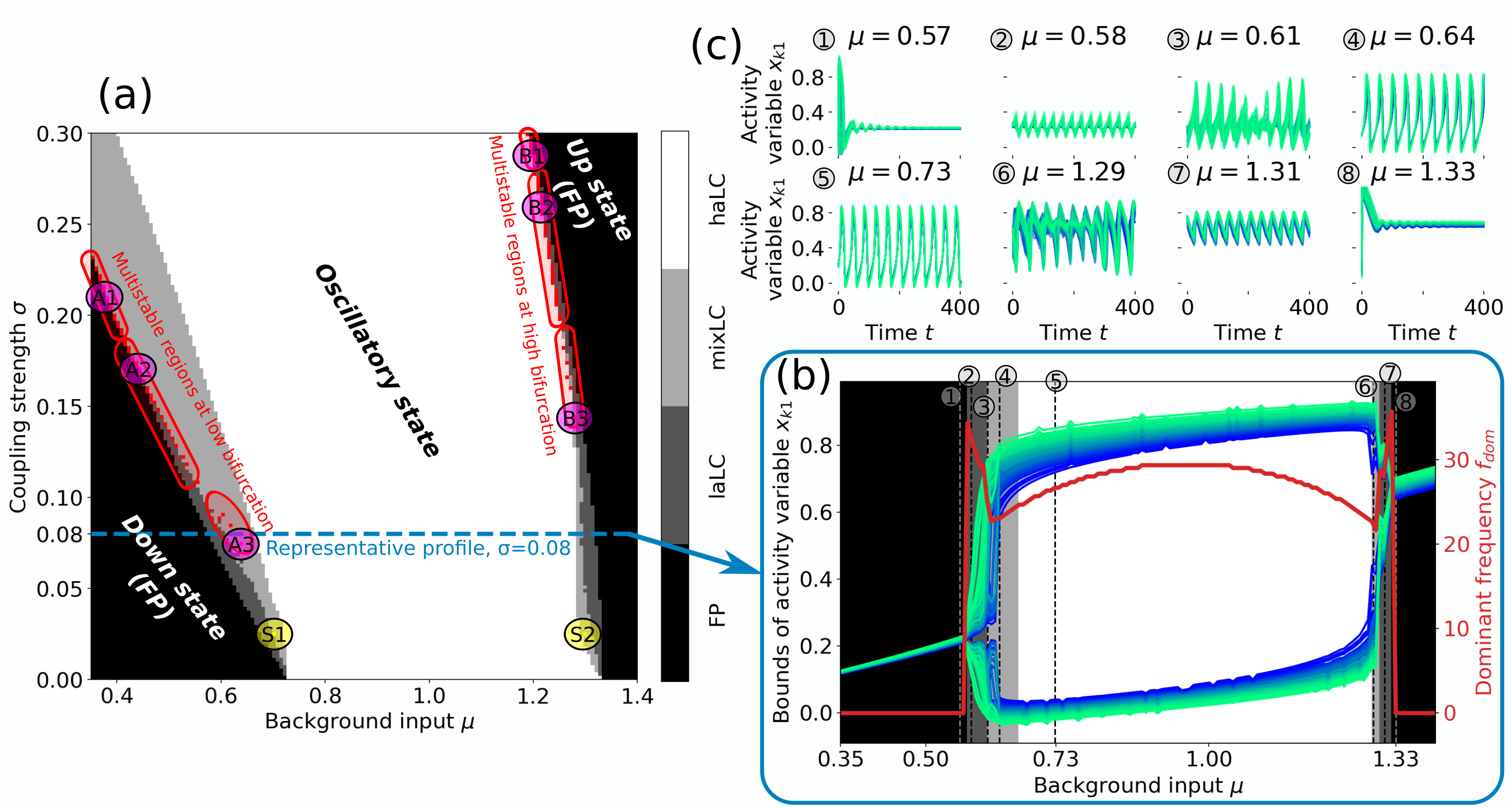

Figure 3 provides an overview of the state space of the FHN network. Similar to the case of a single FHN oscillator (cf. Fig. 1), the network shows two regions in parameter space, for which a stable fixed point (FP) exists. For small values of and the activity variable has a low value for all nodes [down state, cf. Fig. 3c(1)]; for large values of and the activity value is high [up state, cf. Fig. 3c(8)]. Figure 3a shows that the dynamics can transition from an down state to an oscillatory state, as well as from an oscillatory state to an up state by either increasing or . For convenience, we call these transitions low and high bifurcation, respectively.

Within the oscillatory regime, we observe network states that are qualitatively different in their appearance, which we explain in the following by their different underlying mechanisms. A single, uncoupled FHN node oscillates in its limit cycle (LC) if it receives a background input in the range of (cf. Fig. 1). We therefore expect a node in the network to show sustained oscillation (indiv. LC) – and consequently affecting the dynamics of the network – if its combined input is in this interval. We thus call a node “to be in its individual LC regime” if

| (16) |

for at least one point in time within the considered time interval. This criterion is further motivated by observations of the dynamics (cf. Fig. S1 in [78]) which show qualitative changes in behavior depending on whether it is met by all, some, or none of the nodes in the network.

If all nodes fulfill Eq. (16), we observe the network to be in a synchronous, high amplitude limit cycle [haLC in Fig. 3a, cf. Fig. 3c(5)]. If, however, no node fulfills Eq. (16), the network either inherits the fixed point solution from the individual nodes, or a new state emerges due to network effects. We observe both cases: The first one is true in both down and up state of the network in Fig. 3a. The second case appears for low and intermediate coupling strength at the transition from down or up state to oscillatory state. In these states the FP of the network dynamics is unstable due to coupling effects and a self-sustained low amplitude limit cycle [laLC in Fig. 3a, cf. Fig. 3c(2,7)] emerges. The geometric interpretation of this laLC is a rotation around the FP that would be transient for an isolated node, but is prevented from converging to a fixed-point by the network couplings.

It is also possible that only a fraction of the network nodes fulfill the criterion in Eq. (16), which can lead – even in the absence of noise and without network delays – to asynchronous and apparent aperiodic behavior [mixLC in Fig. 3a, cf. Fig. 3c(3,6), further indications for aperiodicity are provided in [78]]. On the network level, this can be seen as the result of the different frequencies of the two coexisting network limit cycles, laLC and haLC, interacting. Close to the bifurcation the frequency of the individual nodes (cf. Fig. 1), and their trajectories (cf. Fig. S3 in [78]), are particularly sensitive to their respective input. If the additive coupling input between the nodes is not sufficient to entrain a common frequency, this may result in asynchronous and potentially aperiodic network oscillations. States that are classified as mixLC are, however, not necessarily asynchronous. If the driving force of nodes that fulfill the criterion in Eq. (16) is high enough, it will lead to frequency entrainment. Therefore, the oscillations of the driven nodes [where Eq. (16) is not fulfilled] are similar to the ones of the driver nodes, resulting in dynamics that are similar to the haLC state [cf. Fig. 3c(4,5)].

The distinction between different dynamical states and types of network oscillations (laLC, mixLC, haLC) is particularly interesting since the transitions between these are the regions in state space, where we find multistable network states. Multistability is detected by simulating the network dynamics for 21 different initial conditions and comparing the resulting time series by calculating their node-wise correlation in the activity variable. We ignore the first arb. units of each time series to avoid analyzing transient effects and then compare the interval (equiv. to 10–35 periods) of one initial condition with of all other traces (second interval is sufficiently longer to account for all phase shifts). If the auto-correlation of at least one initial condition is close to one, this indicates the existence of a stable (periodic) state, and if the cross-correlation between two (or more) initial conditions is different from one, this indicates bi-(or multi-)stability. Red pixels in Fig. 3a show states with more than one stable solution, which are observed along both, the low and high bifurcation.

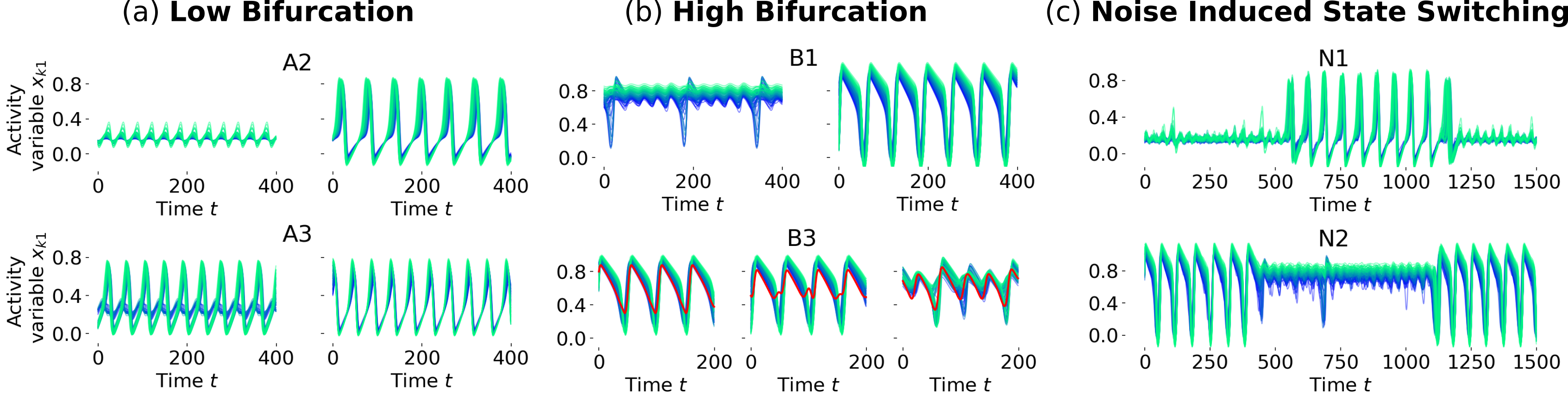

Numerically we found regions of bistability between FP and mixLC (A1), laLC and mixLC (A2, B2), and mixLC and haLC (B1), as well as multistability in mixLC with different numbers of nodes fulfilling the criterion in Eq. (16) (A3, B3) (cf. Fig. 3a). The corresponding time series of the activity variables are shown in Fig. 4. The automatic detection of multistability might not capture all multistable states, especially because some of the states may be very similar (cf. Fig. 4b, bottom panel). More detailed analyses could thus also uncover bistabilities between up state and mixLC, as well as between mixLC and haLC at the low bifurcation. We inspected the detected multistable states visually to assure that their difference are not due to transient effects and confirm their assignment to the specified multistable regions in Fig. 3a. We do not observe state switches in this noise-free case even for very long simulation times ( arb. units simulated for multiple initial conditions in each multistable region, A1–3, B1–3, of Fig. 3a), meaning that the states are stable and no temporal intermittency is found.

Adding noise to the network most strongly affects the dynamics of the states close to the bifurcations. The influence of noise on synchronous, mono-stable oscillatory states is, as expected, comparably small (cf. Figs. S4d, e in [78]). If the network dynamics is in a FP far away from the bifurcation line, the additional Gaussian white noise results in uncorrelated fluctuations in the activity variable (cf. Fig. S4i in [78]). Parametrizations that without noise would lead to a stable FP close to the bifurcation, show oscillations when sufficient noise is introduced (cf. Figs. S4a, h in [78]). The clear distinction between the FP and laLC network states in the noise-free case (cf. Fig. 3a) is therefore blurred for noisy dynamics. For asynchronous mixLC states, the additional noise can have a synchronizing effect (cf. Fig. S4c in [78]). This can be explained by more nodes fulfilling the criterion in Eq. (16), resulting in a stronger drive for the remaining nodes and therefore more synchronous oscillation. These effects lead to a shift of the bifurcation lines compared to the noise-free case and consequently also affects the location of multistable network states.

In the case of multistability, we observe that this stochastic additional input can lead to sufficient perturbations that drive the dynamics from one attractor to the other. This results in noise-induced state switching, as shown in Fig. 4c, at the bifurcation lines of the noisy state space. Such noise-induced transitions are a known phenomenon in systems of coupled oscillators [58] and may be exploited for the control of the neural dynamics in practice [59].

IV Optimal control of the brain network dynamics

IV.1 Switching between bistable network states

In this section, we present optimal control inputs that induce a switch between previously identified multistable states. All control inputs optimize the cost functional given in Eq. (2) with the state dependent terms given in Eq. (3) and the energy and sparsity terms given in Eq. (7). Energy and sparsity terms are evaluated over the whole time interval during which the control is active. The cost functional itself, however, considers the deviation from the target state only the end of the control period. For this we set for and else. The convergence criterion of the numerical solution of the minimization problem, as described in Section II.3, is set to for all our applications. We ensured for all presented examples that the method actually converges and that results do not change for lower values for . We computed the optimal control for different phase shifts of the initial and target states with respect to the control onset and present the results for the phase shift which leads to the smallest total control energy .

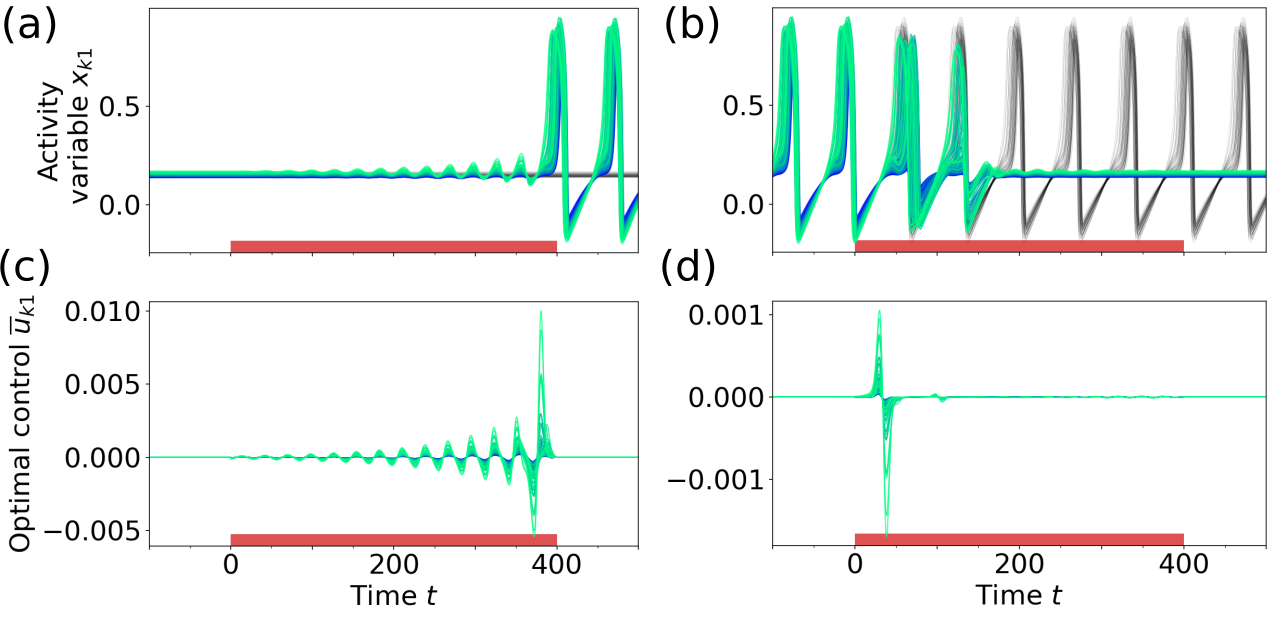

Figure 5 shows the result of applying optimal control at point A1 in Fig. 3a, where a low activity fixed point coexists with an oscillatory mixed state. The weight for the sparseness term in Eq. (7) was set to zero. Since the target state in this task is always stable, the network remains in this state once it is reached also after the control is turned off. If the initial state is the down state FP, the obtained optimal control input oscillates synchronously for all nodes with increasing amplitude. The frequency of the control input [] corresponds to the frequency of the fixed point’s focus [, measured by perturbing the FP], inducing resonance effects in the node activity. This strategy can be well observed in Video 1 (in [78]), showing the node oscillations and the respective optimal control inputs in state space.

When switching from the oscillatory to the down-state, as in Fig. 5d, the optimal control input consists of one short biphasic pulse applied to all of the nodes followed by minor corrections. It is a known result from control theory, that applying a biphasic control pulse around an extreme point is an efficient way to drive an oscillating system to a stop. Interestingly, the optimal control strategy in this example is not to apply the pulse at an extreme point of the activity variables , but rather in a way that the gradient of the control is highest when the phase velocity of the limit cycle oscillation is the lowest (best observed in Video 2 in [78]). Although the deviation from the target state is only penalized at the end of the control interval, the pulse is applied early in order to give the system enough time to come to rest.

Figure 6 shows the result of applying optimal control at point B1 in Fig. 3a, where a low amplitude limit cycle (laLC) around the high activity up-state coexists with an oscillatory mixed state (mixLC, cf. Section III). When switching from the laLC to the mixLC state (Figs. 6a, c), the frequency of the control input [] is again adapted to the frequency of the initial state [], utilizing resonance effects. While the nodes in the laLC state all oscillate with the same frequency (), their phase speed is not the same at all times [reflected by lower synchrony , best observed in Video 3 in [78]]. This results in a less synchronous oscillation of the control signals in Fig. 6c () compared to the control inputs at the low bifurcation [Fig. 5c, ]. In the other direction (Figs. 6b, d, Video 4 in [78]), the optimal control finds a short, off-phase biphasic pulse analogous to Fig. 5d. As a result, the amplitude of the network oscillation decreases to almost zero (for in Fig. 6b). Since there is no stable FP, the oscillation amplitude increases again over time and converges to the laLC target state approx. 550 time units after the control pulse (see also Fig. S5 in [78]).

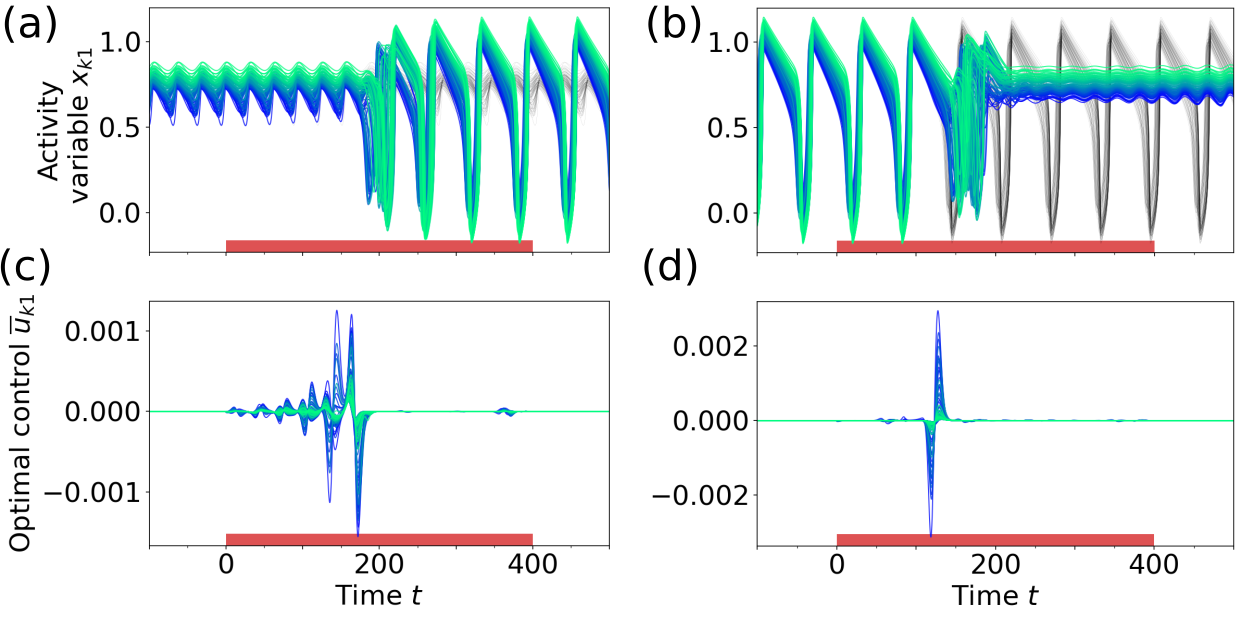

By increasing the sparsity parameter of the cost functional, we can tune the number of controlled nodes. Figure 7 shows the control energy [Eq. (12)] of the optimal control input to each node as a function of the sparsity parameter . For low values of , all nodes receive a finite control signal. When is increased, less nodes are controlled, until becomes so large, that the target state can no longer be reached. As expected, decreasing the number of controlled nodes needs to be compensated for with a higher control energy.

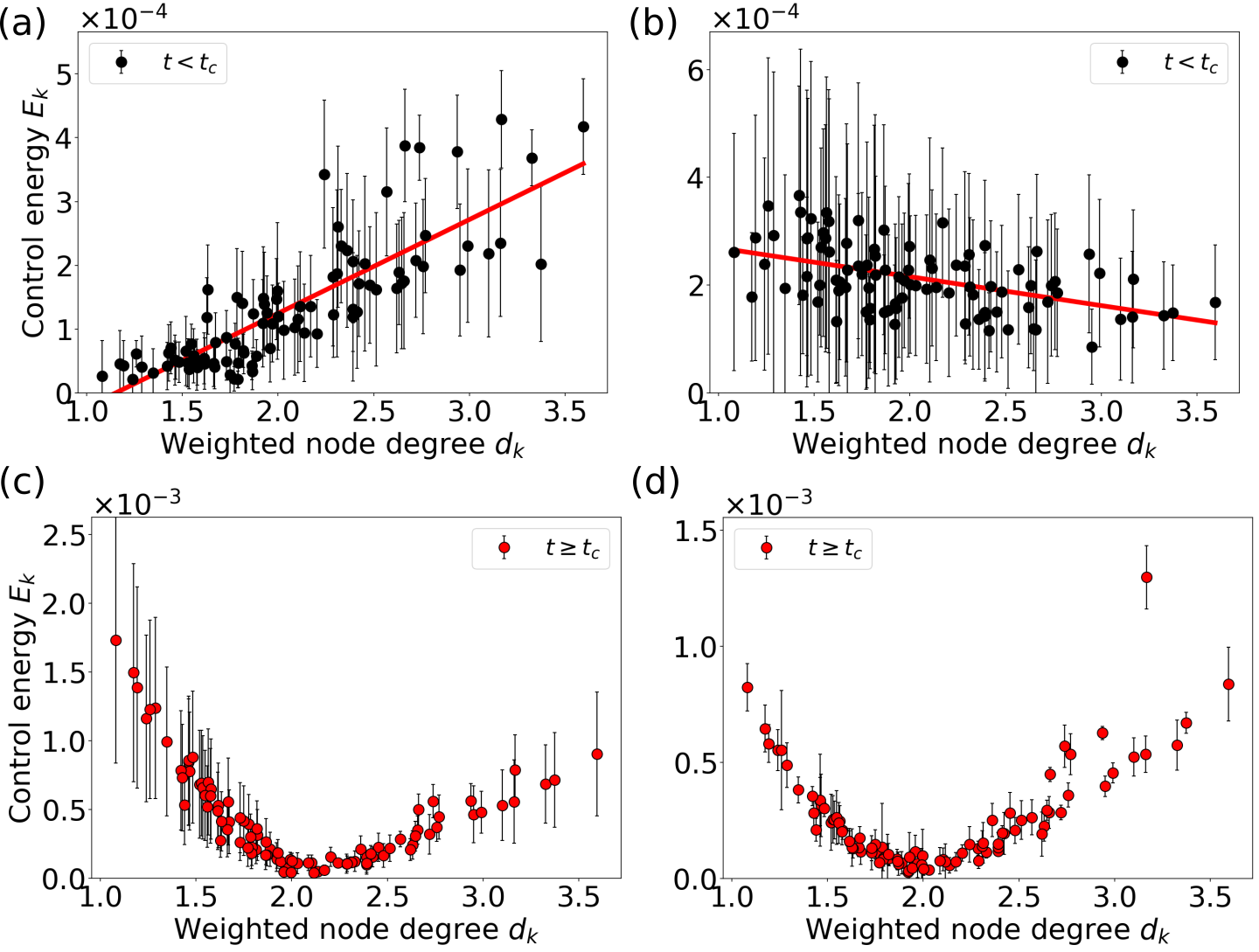

The results also show that at the low bifurcation (Figs. 7a, c), nodes with a high weighted degree receive, independent of the switching direction, a stronger control signal and remain controlled even for high values of . This shows that at the low bifurcation it is most important to control the network hubs since they act as driver nodes for attractor switching. At the high bifurcation (Figs. 7b, d), on the other hand, nodes with low weighted degree receive the strongest control inputs.

This different behavior can be explained based on the additive coupling scheme between the nodes (cf. Section III), which causes nodes with higher degree to typically receive stronger inputs. When choosing parameters close to the low bifurcation, these high degree nodes therefore have higher oscillation amplitudes (cf. Fig. 1) and are consequently driving the oscillation of the remaining nodes in the network. Close to the high bifurcation the nodes with lower degree, who receive less inputs, are more likely to remain in the limit cycle regime. Consequently – and in contrast to the dynamics close to the low bifurcation – the low degree nodes drive the oscillation of the network hubs. Videos 1 and 3 (in [78]) illustrate how the control inputs on the respective driver nodes force the network dynamics to the high-amplitude oscillation target states.

The optimal control inputs to the control sites have well interpretable shapes. When the objective is to transition from a low-amplitude state to one with a higher amplitude (mixLC, Figs. 5a and 6a), the optimal control strategy utilizes the characteristics of the flow field in state space and synchronously drives the network with its resonant frequency from one attractor towards the other. Switching in the opposite direction, and therefore leaving this basin of attraction, is achieved most efficiently with one strong, biphasic pulse. This deflects the system from its initial mixLC trajectory and causes it to drop to the attractor with a lower amplitude.

IV.2 Synchronizing the network dynamics

In this section, we apply the nonlinear control method to find the optimal inputs to synchronize the dynamics of the individual nodes. For this application we use the state dependent cost functionals given in Eq. (4) to penalize the deviation from the target cross correlation (, fully synchronous state) and in Eq. (7) to penalize the energy of the control and enforce its sparsity in space.

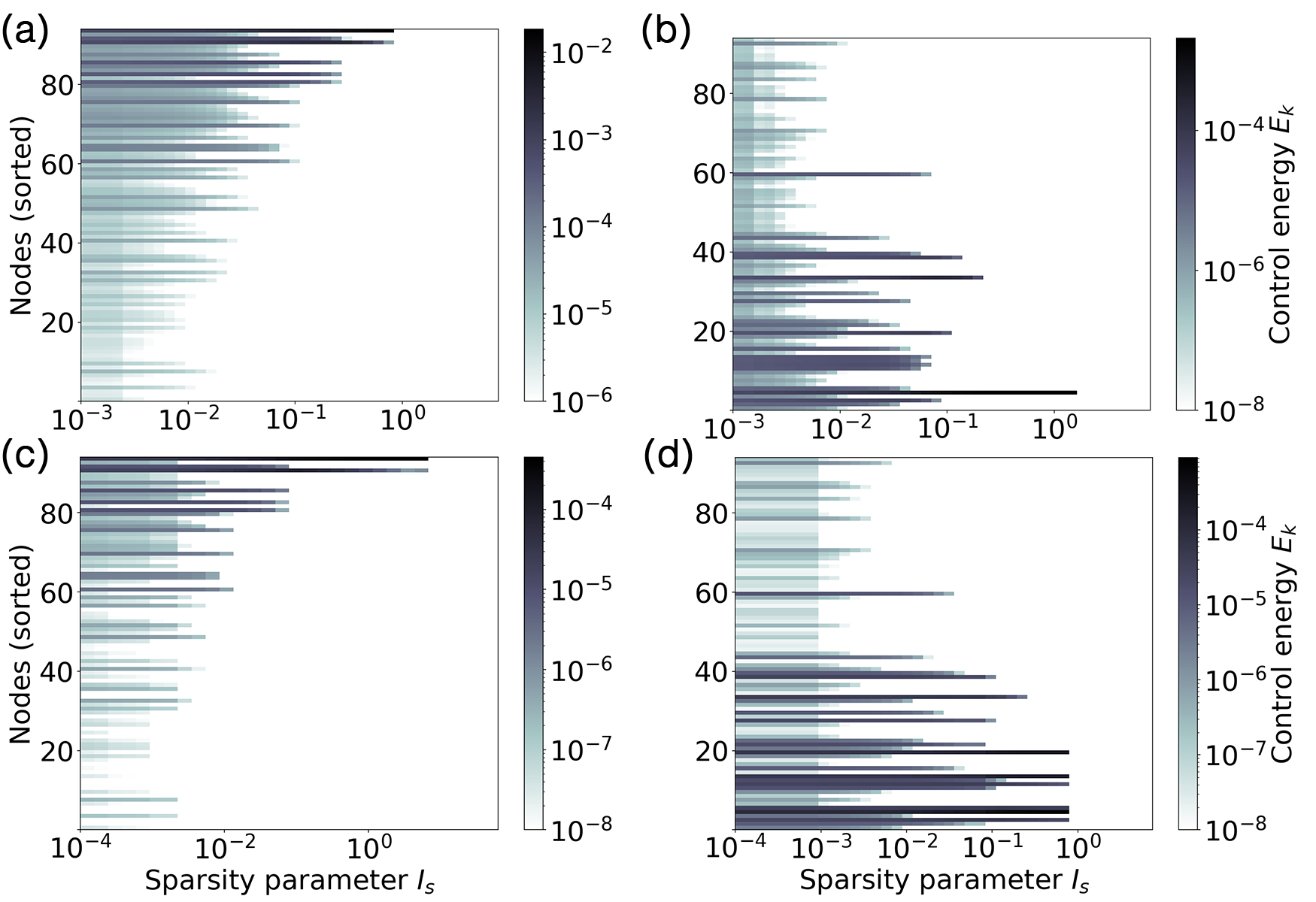

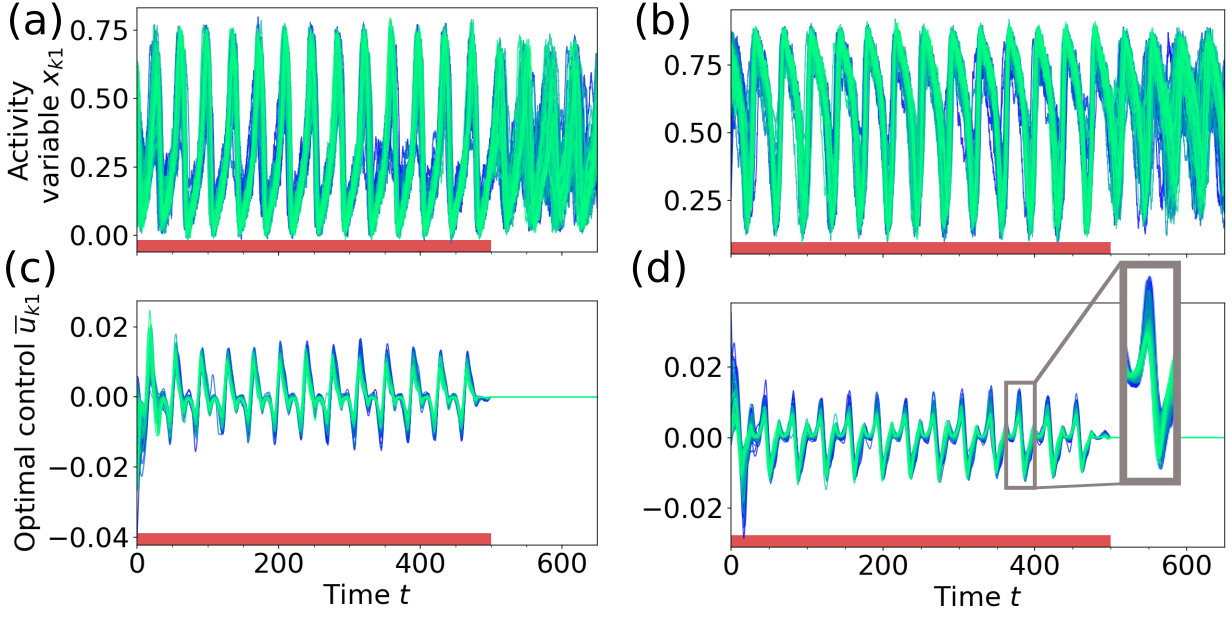

We parameterize the system to be in the two asynchronous regions marked by the labels S1 and S2 in Fig. 3a, close to the low and high bifurcation lines. The optimal control method is then applied to synchronize the dynamics for the noise-free (Fig. 8) and noisy (Fig. 10) case. Both figures show the controlled (synchronous) and uncontrolled (asynchronous) time series and the corresponding optimal control inputs. Since the dynamics in points S1 and S2 are monostable, the synchronous state is not a stable solution and the system returns to its original state as soon as the control is switched off. The network dynamics and the optimal control strategies are best seen in Videos 5-8 (in [78]).

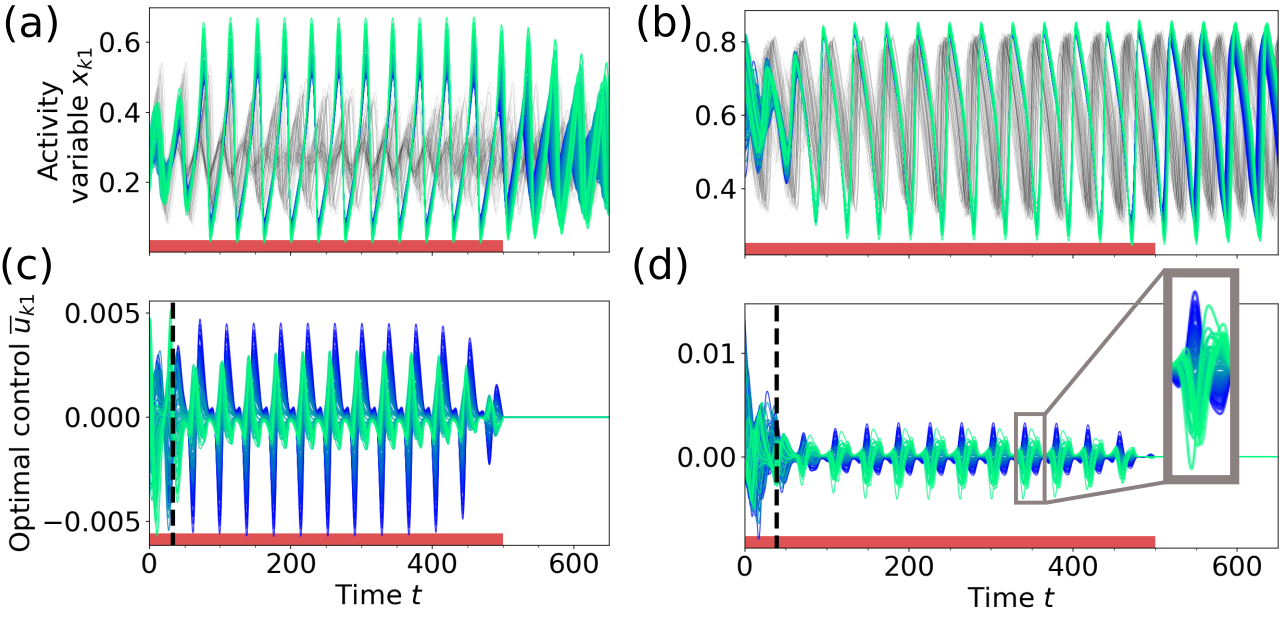

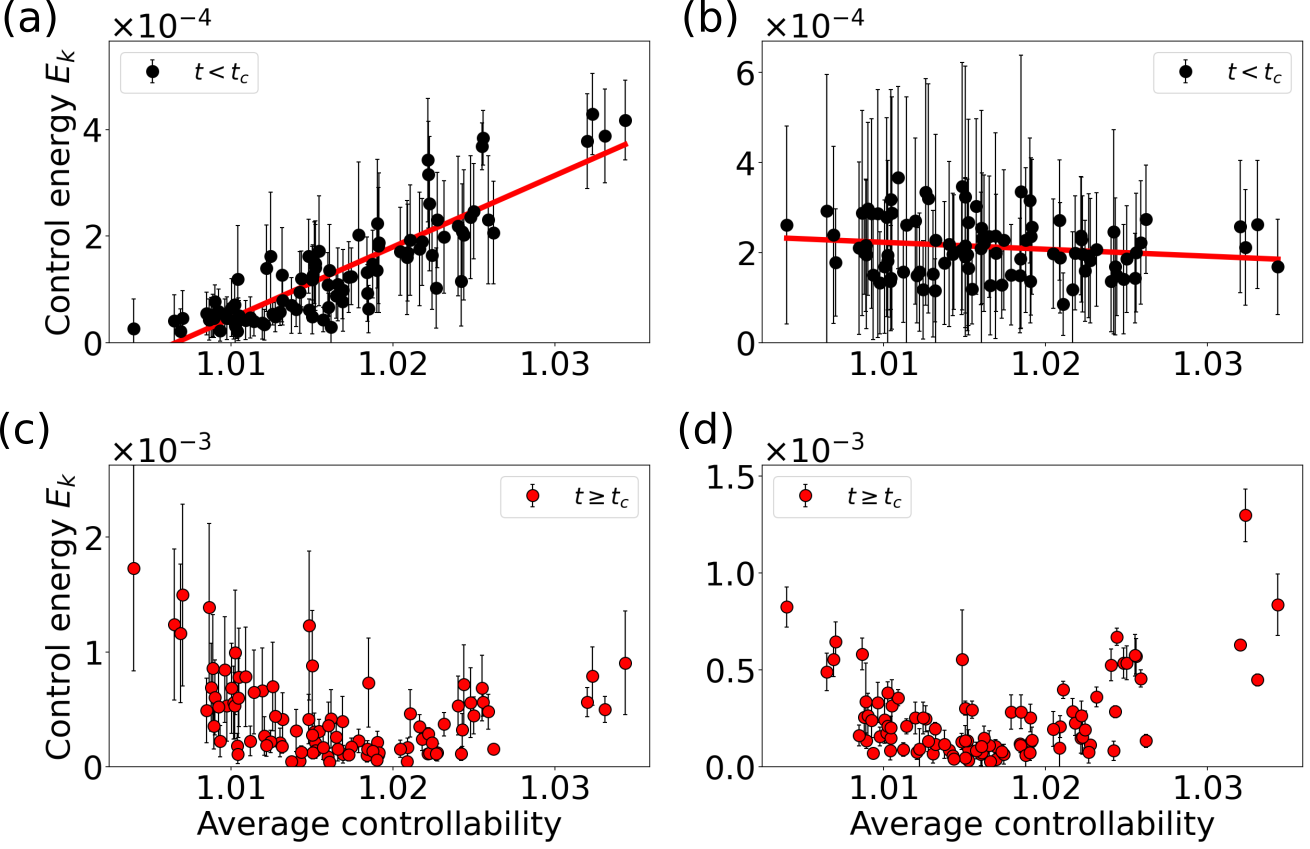

Figure 8 shows how the optimal control inputs synchronize the oscillation of all nodes in the network. In the uncontrolled state (in gray, Figs. 8a, b) the nodes at both bifurcations oscillate with similar frequencies (mean and standard deviation of oscillation frequencies across nodes at S1: and at S2: ). Close inspection of the control inputs in Figs. 8c and d shows that the optimal control acts in two phases. First, the control aligns the phases of all oscillators until all nodes are synchronized for the first time, which we defined as the critical time (please refer to [78] for details about the computation of ). Second, a periodic input maintains the synchronous oscillation during the period the control is active.

For times , Fig. 9a shows that at the low bifurcation the energies of the control inputs to the nodes are positively correlated with the weighted node degrees. Thus, it is beneficial to focus the control input on the network hubs for alignment. A possible interpretation for this strategy is that the optimal control utilizes the influence of the network hubs on the remaining nodes to force them on the synchronous limit cycle trajectory. However, we again observe a different behavior at the high bifurcation, as shown in Fig. 9b. Here, is negatively correlated with the weighted node degree for times . In this case, the network coupling is much smaller compared to the background input and the node’s phase space oscillations are similar to the trajectory of the limit cycle of an uncoupled node (cf. Video 6 in [78]). The negative correlation in Fig. 9b suggests that if the dynamics of the nodes are similar in the first place, it is beneficial to focus the control on more weakly coupled nodes and align their phase space trajectory with the trajectory of the network hubs. Similar to the attractor switching at the high bifurcation (cf. Figs. 7b, d), the control of the low degree nodes drives the network oscillation towards the target state.

In the second phase, for , the high degree nodes without control would have a higher (or lower) phase velocity than the nodes with intermediate degree, while the opposite is true for nodes with low degree. The optimal control strategy is thus to act on the low and high degree nodes in opposite directions, which can be observed as antiphase control inputs shown in Figs. 8c and d, where nodes with intermediate degree receive only small control inputs. (Videos 5 and 6 in [78] show how the control inputs keep the nodes together in phase space.) This is reflected in the arch-like form of the mean energies of the control inputs at both bifurcations, as seen in Figs. 9c and d, with high control energies for nodes with low or high degree. As a result, the optimal control achieves a mean network cross-correlation of in the time interval , compared to the uncontrolled scenario with at point S1 (Fig. 8a), and compared to at point S2 (Fig. 8b).

Figure 10 shows results for the application of optimal control to the synchronization task for the case of finite noise. We obtain a mean network cross-correlation of (analysis of the deviation from in [78], uncontrolled: ) at point S1 (Fig. 10a) and (uncontrolled: ) at point S2 (Fig. 10b). We conclude that the optimal control input successfully synchronizes the network dynamics, just as in the noise-free case.

The control strategy that leads to the respective target state, however, changes substantially when noise is added to the system. The control input in the noise-free case is tailored individually for each node, slowing the phase space velocity of some nodes down and speeding others up at the same time (cf. blue inset in Fig. 8d, mean cross-correlation of the control inputs during this control period is 0.03). In contrast to that, the control input for the noisy network dynamics has a similar shape for all nodes (cf. blue inset in Fig. 10d, mean cross-correlation of the control inputs during control period is 0.88) with varying amplitude depending on the weighted node degree .

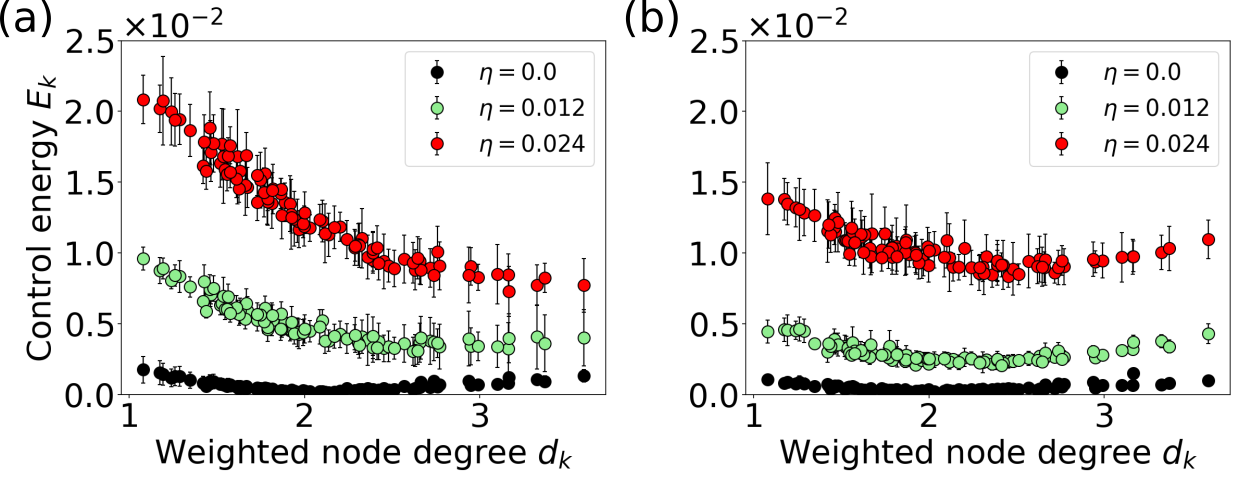

This change in control strategy is also reflected in Fig. 11, which shows the control energy per node for different noise levels. The control energy increases for all nodes with increasing noise strength, both at the low (Fig. 11a) and the high bifurcation (Fig. 11b). With increasing noise level , we observe a gradual transition of the optimal control strategy. Instead of balancing out the phase velocity differences between nodes towards the phase of nodes with intermediate degree (cf. Videos 5 and 7 in [78]), the control in the noisy case forces the system on a limit cycle with a slightly higher oscillation amplitude (cf. Videos 6 and 8 in [78]). This strategy requires a higher control energy but is independent of the specific realization of the noise.

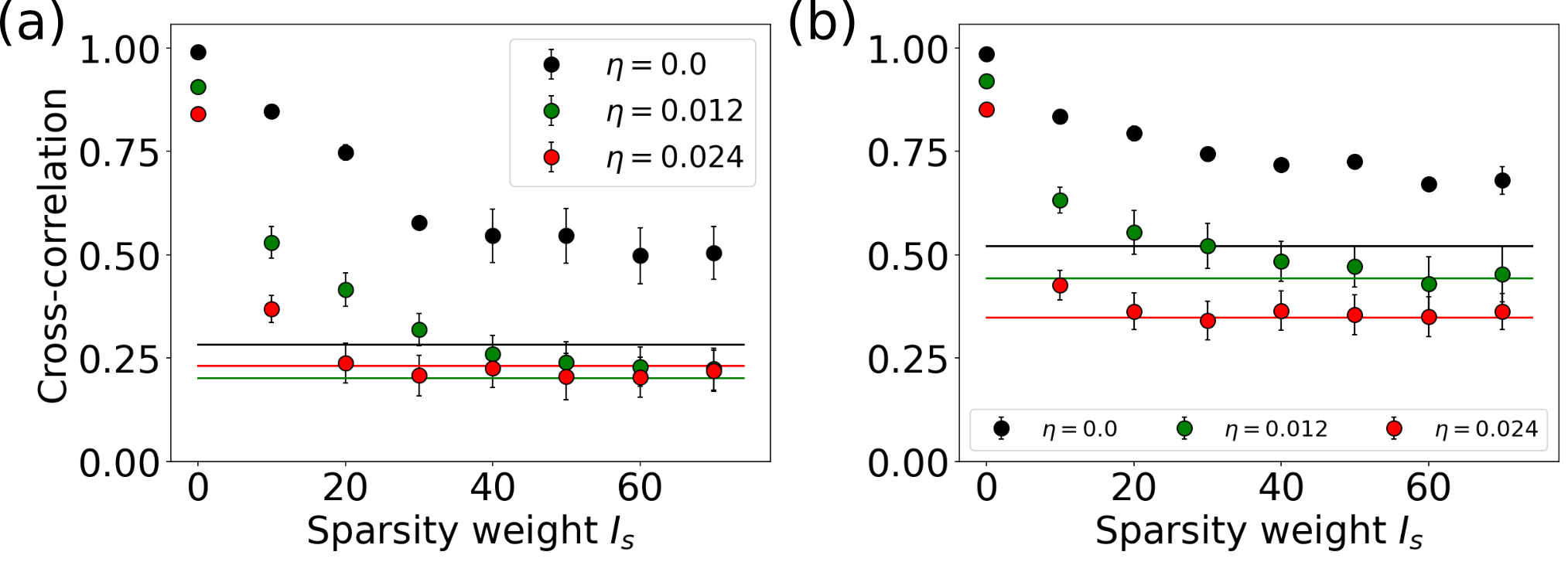

By imposing sparsity constraints on the system, we can investigate to what extent synchronization can be achieved with fewer control sites. Figure 12 shows the relation of the synchrony in the network, measured by the average cross-correlation [Eq. (6)], to the sparsity parameter . The average cross-correlation decreases with increasing sparsity parameter at both locations in state space and for all noise levels. This shows that, under the imposed constraints, it is not possible to fully synchronize the network with sparse control. Optimally synchronizing aperiodic states is a collective effort. Unlike state switching it cannot be achieved by controlling only a few sites, because the reduced number of control sites cannot sufficiently be compensated for by a higher control energy. Especially in the case of noisy network dynamics, where the control must drive all nodes, the network’s synchrony quickly drops to the value of the uncontrolled network when is increased. For fixed the number of controlled nodes and the control sites optimal for synchronizing the network changes for each initial condition (cf. Fig. S1 in [78]), as it depends on details of the exact network dynamics.

V Comparison with controllability measures derived from linear control theory

When relating functional properties of a neural system to properties of the underlying connectome, neural activity is often approximated by linear dynamical systems [60, 61, 23]. This has obvious benefits for analyzing the effects of perturbations. Calculating optimal control inputs for linear systems, as in Refs. [12, 22], can be done analytically and with little computational effort, and conclusions about the effects of external inputs can be drawn from controllability measures, which depend on network topology only [21].

Two of these measures have previously been applied to quantify the impact of perturbations in a whole-brain network setting [23, 25, 22]. Modal controllability refers to the ability of a node to control each evolutionary mode of a dynamical network [62], and the average controllability is given by the average control input energy to the respective node over all possible target states [25, 23] (mathematical description in Appendix C). Nodes with high average controllability require only low energy input to move a linear system into “easy-to-reach” states, while nodes with high modal controllability require a high control energy input to have an effect on the dynamics but are crucial when the target state of the system is “difficult-to-reach” [23, 63, 21]. It has also been shown that the average controllability of a node is strongly correlated with its weighted degree ( for our structural connectivity matrix) while the modal controllability is known to have a strong inverse correlation (, see Fig. S7 in [78]).

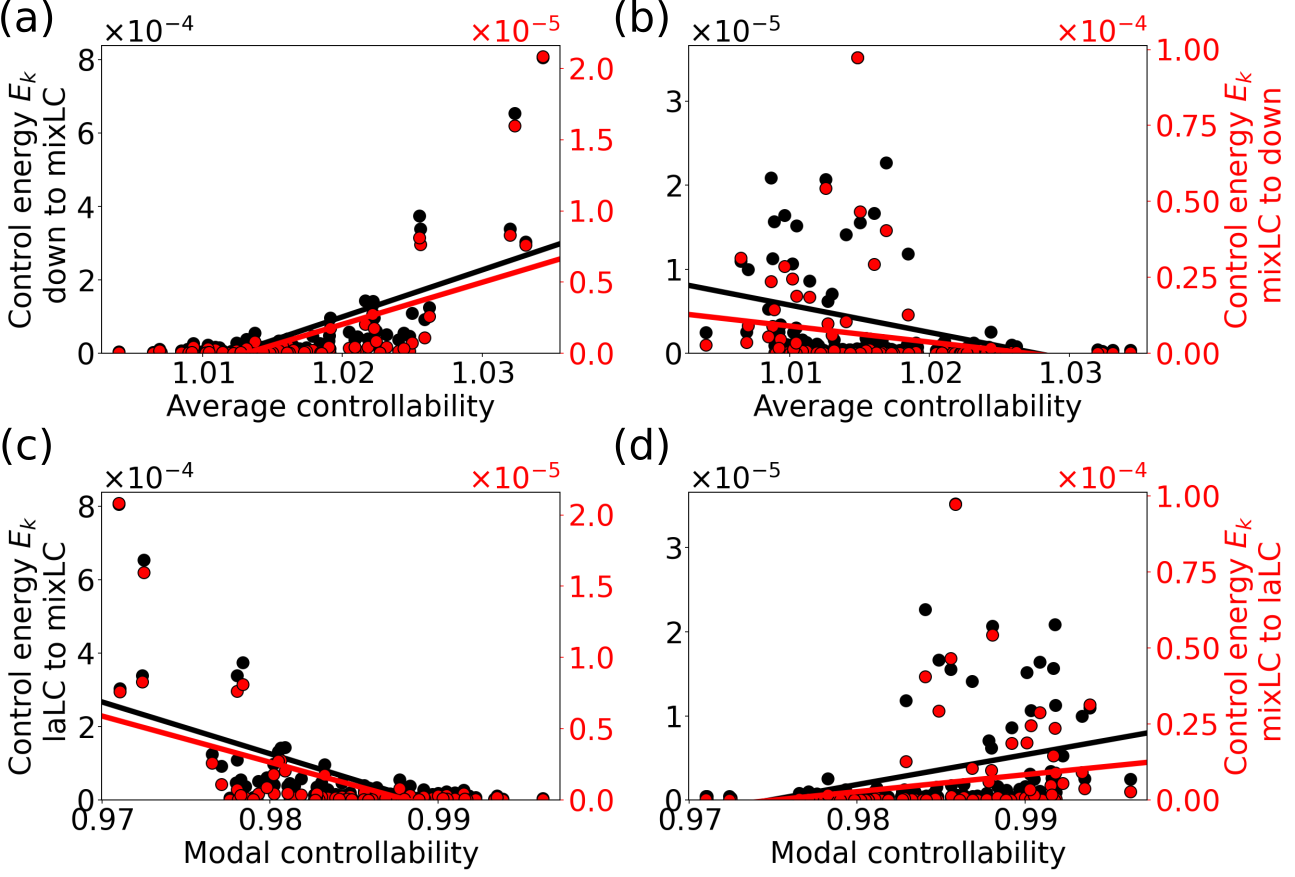

We now apply the diagnostics from linear control theory to the brain network model, Eq. (14). Figure 13 shows the correlations of the energies of the optimal control inputs with the average and modal controllability for the attractor switching tasks. At the low bifurcation A1 (Figs. 13a, c) we find, that the energy of the control input for a node is positively correlated with its average controllability and negatively correlated with its modal controllability, both irrespective of the switching direction. Therefore, the most efficient strategy for switching between the attractors is to control the network hubs with high average controllability which then force the other nodes towards the target state. These results can be considered consistent with predictions from linear control theory [23] and previous results on the global impact of stimulation [25].

At the high bifurcation at location B2 (Figs. 13b, d), however, we observe the opposite trend, with the control energy being negatively correlated with the average and positively correlated with the modal controllability. Predictions based on linear control theory alone are indifferent to the actual dynamics and therefore not able to distinguish between the two cases. Yet, nodes with high modal controllability play a much more important role in affecting the global dynamics at this location in state space.

For the synchronization task, we observe a similar pattern. While there is a clear correlation between the optimal node-wise control energies with the average controllability when aligning the phases (i.e. ) at the low bifurcation (Fig. 14a), no correlation is observed at the high bifurcation (Fig. 14b). (The corresponding results involving modal controllability are shown in Fig. S8 in [78].) In the case of maintaining the synchronous network dynamics (, Figs. 14c, d), we observe – at both bifurcations – a similar but less obvious arclike shape as in Figs. 9c, d. For noisy network dynamics (cf. Fig. S9 in [78]), however, is negatively correlated with the average controllability at both bifurcations (cf. Fig. S9 in [78]).

This shows that in our nonlinear setting, linear controllability measures do not provide additional insights compared to the weighted node degree, and that intuitions based on these measures can be misleading.

Diagnostics from linear control theory have previously been claimed to be predictive for a node’s role in driving brain state transitions [23, 22] or for the global impact of local brain stimulation [25]. Our results however show, that the optimal control inputs and sites in nonlinear systems not only depend

on the structural network connectivity, but also on the location in state space, the control task, and other factors like the amount of noise.

VI Discussion

In this contribution we apply techniques from the optimal control of nonlinear dynamical systems to the dynamics of brain network models. Nodes were equipped with FitzHugh-Nagumo oscillators, since they are simple and well studied nonlinear models for neural dynamics. Changing the background input for the FHN nodes or the global coupling strength of the network can both lead to transitions between two different stable fixed points and two different limit cycle attractors (laLC and haLC). The interaction between nodes in different oscillation states (mixLC) can lead to an asynchronous network dynamics. At the bifurcations, we also find different coexisting stable states.

The general mathematical framework of the optimal control of partial differential equations [29] is adapted for noisy dynamical systems on graphs, where the local network dynamics, the noise level, the network connectivity, and the local coupling schemes [Eq. (1)] can be freely chosen. The state dependent part of the cost functional is averaged over multiple noise realizations and penalizes the deviation of the network dynamics from a task dependent control target [Eq. (3) for attractor switching, Eq. (4) for synchronization]. The part of the cost functional which depends on the control input [Eq. (7)] penalizes the control energy and non-sparse solutions. The presented method is applicable to any network that can be described in the form of Eq. (1), including models of power grids, social networks, or climate dynamics.

A common problem for gradient based methods are potential local optima of the cost functional which may prevent the convergence to a globally optimal solution. To alleviate this problem we performed the minimization as described in Section II.3 with different initial conditions for the control time-series and choose the result with minimal cost. The set of initial conditions included as well as valid control time-series taken from different parametrizations.

We use the optimal control method to cause targeted attractor switching between previously identified coexisting stable states. When no sparsity is enforced, we show that it is optimal to resonantly drive all nodes to transition to the high amplitude oscillatory state. When the task is to switch to a state with lower amplitude or no oscillation, the optimal strategy is to apply a precisely timed biphasic pulse. The nodes that receive the largest control energy are the same for both of these switching directions and are also the ones that are still controlled when we enforce sparsity in space. Depending on the location in state space, either nodes with high degree (at the low bifurcation) or low degree (at the high bifurcation) are the ones that most efficiently drive the network dynamics from the initial to the target state. When sparsity is enforced, controlling only a small number of these driving nodes with increased control energies is sufficient to switch from one attractor to another in an optimal way.

In the second application, we show that our method can also be used to control global properties of the network dynamics, such as the average cross-correlation between node activities in the oscillating regime. Immediately with control onset, the control signal acts on all nodes to align their phases. As soon as this is achieved, the control maintains the synchronous oscillation with periodic control signals. Which nodes receive larger control input for the initial alignment again depends on the location in the state space. Both at the low and high bifurcation, individually adapted control inputs lead to a successful synchronization of the network dynamics. While the average cross-correlation is increased also with sparse control, the synchronous target state is only achieved in an optimal way, when all nodes in the network receive a finite control input. This suggests that synchronizing all nodes needs collective intervention, while attractor switching can be caused by controlling a few selected nodes only. The introduction of noise to the system makes the dynamics of each node less predictable, resulting in a loss in specificity and more similar control inputs to the nodes. Consequently, the optimal control of noisy network dynamics requires higher total control energy (cf. Fig. 11) while resulting in a lower precision (cf. Fig. 12 for ).

The information on the different states and bifurcations, which we show to be a decisive factor for choosing the optimal control sites in our applications, is lost when techniques from linear control theory are applied. While predictions do qualitatively agree for certain dynamical systems, control tasks, and locations in state space (cf. [25]), this agreement does not hold in a general setting. It would, however, be worthwhile investigating for what classes of dynamical systems and control tasks “controllability measures” can be defined, which only depend on the properties of the connectome.

In this contribution, the techniques of nonlinear optimal control were applied to a simplified model of the global brain dynamics. The bifurcations in our FHN model (cf. Fig. 1) phenomenologically capture the state transitions found in more complex, biophysically motivated network models [55] as we have adapted the FHN parameters to resemble their dynamics. Consequently, the activity variables of the FHN nodes can be interpreted as relative output firing rates of cortical nodes, and their total input can – at least qualitatively – be interpreted as a proxy of the local field potential [64]. Given this interpretation, model down-(up-)states are related to down-(up-)states in cortical physiology, while an oscillatory regime of the whole-brain model corresponds to a brain state with oscillations present. Locations at the lower bifurcation of the network model were implicated as proper “operating points” for modeling the brain’s resting state activity [17, 18, 19], while locations at the high bifurcation can be considered a cartoon model for the global brain dynamics during non-Rapid Eye Movement (non-REM) sleep (see [20] for a biophysically more detailed model).

In this work we consider a network with instantaneous couplings, although coupling delays in human white matter are finite. The interpretation of results regarding the model’s state space should thus be limited to cortical states whose dynamics is slow compared to these delays. Given that delays are of the order of approx. 5-15 ms [65] brain oscillations with periods of approx. 1 s or less would qualify. These include the so-called slow oscillations, which are the prominent brain rhythm during non-REM sleep and which have already been subject to external perturbation experiments in human neuroscience and clinical settings [66, 67].

In lieue of a biologically more detailed model of the effects of control inputs on whole-brain dynamics, delays must be included as soon as brain oscillations with frequencies above a few Hz are of interest. Relative delays can be computed from DTI-based estimates of the length of white matter fiber tracts and included in the coupling terms of Eqs. (1) and (14).

When relative delays are scaled by a global delay constant, the ratio of the coupling delays to the FHN oscillation period can be adapted to match the physiologically observed ratios for any given brain rhythm of interest. The formalism of nonlinear optimal control summarized in Section II must extended, though, to cover finite delays by modifying the coupling term in Eq. (1) to, e.g., for the case of constant delays. The adapted coupling term reappears when computing the adjoint state with the differential equation [Eq. (10)], and thus the iterative algorithm for the numerical optimization (cf. Section II.3) has to be adapted accordingly in the calculation of step 3.

Here we used a highly simplified model of the global brain dynamics to showcase the applicability of nonlinear optimal control and its added value beyond connectome-based diagnostics derived from linear control theory. When applied to biophysically more realistic models of whole-brain activity (cf. [20]), nonlinear optimal control may serve as a tool to evaluate the impact of external stimulation and to facilitate the design of new brain stimulation protocols. Non-invasive brain stimulation like transcranial current stimulation [68, 69, 70] is a highly promising technique to perturb the global brain dynamics with the goal to improve sensory [71], motor [72], and cognitive abilities [66] of human subjects. Using a cost functional which penalizes spatial sparseness and control energy may – for example – help reducing the required current applied to a subject’s brain as well as focusing the electrical perturbation on the relevant brain areas only. While exact target trajectories are unlikely to be available in a practical setting, state-dependent cost functionals which refer to global quantities [cf. Eq. (4) for the synchronization task] may well be. Particularly, reformulating the control formalism in frequency space will be beneficial here. It would for example allow for computing optimal interventions for changing the power of certain brain rhythms, which can be monitored by electroencephalography and which are common control targets in clinical settings (cf. [67]). As shown in other computational and physiological studies [55, 73] and emphasized again by our results, the timing of the control inputs may also be crucial for successful interventions. Here we see a high potential of nonlinear optimal control in guiding electrical brain stimulation under electro- or magnetoencephalography [74, 75], a setting which allows for such precisely timed control inputs.

For quantitative predictions, an application of non-linear optimal control to biophysically grounded network models (cf. [20]) is desirable, where the coupling of neurons to externally applied electric fields is included in a biophysically realistic way. Still, results obtained with simplified brain-network models will strongly facilitate biologically detailed but computationally expensive in-silico experiments and may already inspire physiological studies on brain-stimulation to explore new control paradigms for clinical stimulation protocols with potentially better control results, reduced energy expenditure, and higher spatial sparseness.

VII Acknowledgments

We thank Dr. Michael Scholz, Cristiana Dimulescu, and Lena Salfenmoser for scientific discussions, and Prof. Dr. Eckehard Schöll for his comments on the manuscript.

This work was funded by the German Research Foundation (163436311 – SFB 910, 327654276 – SFB 1315, and under Germany’s Excellence Strategy – EXC 2002/1 “Science of Intelligence” – 390523135).

Human connectivity data were provided by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by the 16 NIH Institutes and Centers that support the NIH Blueprint for Neuroscience Research; and by the McDonnell Center for Systems Neuroscience at Washington University.

Appendix A Mathematical formulation of the optimal control problem

A.1 Optimization problem: general formulation

In this section the mathematical foundations for controlling the network dynamics are laid. We use nonlinear sparse optimal control. All derivations below are similar to the ones in Refs. [31, 29], where sparse optimal control was applied to a system of partial differential equations with diffusive coupling.

We consider a network of nodes with -dimensional dynamics each. Its dynamics is defined by a system of stochastic differential equations,

| (17) |

with the state vector and . denotes the Kronecker product. The local node dynamics is governed by with . The coupling term consists of the -dimensional adjacency matrix and the -dimensional local coupling scheme . with denotes the vector of control inputs, is a diagonal dimensional control matrix, and the -dimensional local control scheme. The noise term consists of the Kronecker product of an N-dimensional identity matrix and the -dimensional local noise scheme . The independent white Gaussian stochastic process is given by with standard deviation . Boundary conditions are given by

| (18) |

Since our system is stochastic while the control is deterministic, the optimal control must minimize a cost functional which is an expectation over many realizations of the noise. It is defined as

| (19) |

where the angle brackets denote the expectation.

Our goal is to find the optimal control that minimizes the above mean cost functional. Hence, the variational inequality

| (20) |

holds when regarding every dimension separately. We linearize around the optimal solution and write to obtain

| (21) |

Since above inequality (21) has to hold for all and , we find the stronger necessary condition

| (22) |

for the optimal solution.

Inserting the expression from the right side of Eq. (19) yields

| (23) |

Since Eq. (23) must hold for all components , we write

| (24) |

where denotes the element-wise multiplication (Schur product). Here we assume that the cost functional can be separated into a part , which depends on control inputs only and which is deterministic, and a part , which is a function of the network state and only indirectly depends on the applied control, i.e.:

| (25) |

Equivalently, one can write

| (26) |

Applying the chain rule in Eq. (24) yields

| (27) |

where is the Jacobian matrix of the state with respect to the control vector , evaluated at the optimal control .

To calculate the above derivative we must find an expression for the Jacobian matrix . However, the dependence of the network state on the control inputs cannot be provided in closed form. The Lagrange Formalism enables us to derive an alternative expression for the second term on the right-hand side of Eq. (27), which can be evaluated to find the optimal control.

A.2 The method of Lagrange multipliers

We can use the formalism of Lagrange multipliers to formulate conditions for the optimization of the cost functional, Eq. (19), that is subject to the constraint given in Eq. (17) and the boundary conditions given in Eq. (18). Our approach is similar to the method used in [68], and we will obtain a result that resembles what is found in [31] for a system reaction-diffusion equations.

We define the mean Lagrange function as

| (28) |

Equation (17) for the dynamics of the network state has to be satisfied for all times , which is ensured by the time integration of the constraint. with is the -dimensional vector of time-dependent Lagrange multipliers.

Linearizing around the optimal solution [cf. Eqs. (20), (24)], we obtain the optimality conditions

| (29) |

and

| (30) |

with and .

After inserting the Lagrange function, Eq. (28), into Eq. (30), we apply partial integration and the chain rule, and obtain

| (31) |

The boundary term

| (32) |

must hold for every realization. Since could be finite, the boundary condition for the vector of Lagrange multipliers is

| (33) |

Equation (31) is fulfilled if the integrand vanishes. We thus obtain a -dimensional system of linear ordinary differential equations for the so-called adjoint states ,

| (34) |

where denotes the Jacobian matrix of the local dynamics with respect to the network state . The adjoint states are obtained for every realization by solving Eq. (34) backwards in time obeying the boundary conditions (33).

The first term in Eq. (35) vanishes [see Eq. (24)]. Furthermore, after applying partial integration and the chain rule, above Eq. (35) simplifies to

| (36) |

where the boundary term of the partial integration was set to zero because of the boundary conditions imposed on and .

Appendix B Numerical integration method and local optima

We integrate the equations for the network dynamics [Eq. (1)] and the adjoint state [Eq. (10)] using the fourth order Runge-Kutta (RK4) method. A small step size of arb. units is used to ensure numeric stability and small truncation errors. The noise term is scaled with the factor , such that the effective noise strength does not depend on the integration step size.

When solving the minimization problem for the optimal control numerically (see Section II.3) using gradient based optimization, we may converge to a local minimum of the cost functional. In order to alleviate this problem, we choose multiple initial conditions for the conjugate gradient algorithm. In the case of synchronizing the network dynamics, the control scheme was robust to changes in . The coexistence of multiple local minima of the cost functional was observed only in the case of switching between network states using sparse control (cf. Fig. 7). For larger values for [cf. Eq. (7)] we find one minimum at (smaller cost for the sparsity term) coexisting with another minimum corresponding to a control input affecting a small number of nodes (smaller cost for the precision term). Which of these local optima has minimal cost depends on the value of .

To ensure that the global optimum was found in the latter case for a given value of , we systematically varied the initial conditions. Starting with , we first calculated the optimal control signal, then increased the value of , and used the previous optimal control as the new initial condition (continuation). Likewise, starting with a high enough value for , this process is repeated, but for decreasing values of . For a given value of the control input with the lower cost was chosen for further analysis.

Appendix C Definitions of average and modal controllability

We consider a simplified linear network dynamics of the form

| (40) |

with activity vector , adjacency matrix , control matrix and control inputs . In order to calculate the average controllability of node in the network, we choose the input matrix to target a single node, i.e. for and , otherwise. The controllability Gramian of this system is defined as

| (41) |

and the average controllability as its trace (cf. [23])

| (42) |

Network nodes with high average controllability have a large impact on the network dynamics. This makes them important control sites since by controlling these nodes a large number of possible activity vectors can be reached with little control energy. They are consequently said to be able to move the system to many easy-to-reach states [23].

The modal controllability is defined based on on an eigenvalue decomposition of the network adjacency matrix ,

| (43) |

where are the elements of the eigenvector matrix and are the corresponding eigenvalues [23]. Nodes with high modal controllability tend to be sparsely connected. They are capable of pushing the network dynamics towards activity vectors which require substantial control energy to be reached. Thus, nodes with high modal controllability are said to be capable of steering the network into difficult-to-reach states [23].

For a detailed description and derivation of these regional controllability measures please refer to Refs. [23, 25, 63]. We use the matlab code provided with the publication of Ref. [23] to compute the controllability measures [79]. For their calculation, only the adjaciency matrix (cf. Fig. 2) is required.

Appendix D Diffusion Tensor Imaging

D.1 Subjects

We use the Diffusion Tensor Imaging (DTI) data of 12 human subjects from the Human Connectome Project (Young Adults HCP) [56] with the following IDs: 101309, 121416, 211215, 211619, 212116, 213522, 219231, 220721, 268749, 284646, 303119, 329844. All subjects are male, healthy, and 26-30 years old.

D.2 Data processing pipeline

Data acquisition and preprocessing is described in Ref. [56]. We used BEDPOSTX (Bayesian Estimation of Diffusion Parameters Obtained using Sampling Techniques) from the FSL toolbox [57] for building up distributions on diffusion parameters, which automatically determines the number of crossing fibres per voxel. Based on these diffusion parameters at each voxel, we then applied the PROBTRACKX (probabilistic tractography) algorithm from the same toolbox with 5000 samples per voxel. The resulting connectivity matrix for each subject was normalized by dividing the connections between any two regions by the number of voxels in the source region multiplied by 5000. Self connections were deleted, , and we averaged the structural connectivity matrices of the individual subjects to obtain the adjacency matrix . Probabilistic fiber tracking does not provide information about directionality; hence the resulting graph must be undirected. Following standard procedures (cf. [76]), the structural connectivity matrix was symmetrized , as any deviations from symmetry are artifacts of the processing method. Since application of the PROBTRACKX algorithm typically results in a certain number of false positive fibers and thus assigns non-zero connection strengths to all pairs of brain regions, we enforced a sparsity of 20% [77] by discarding all connections with a relative connection strength smaller than (0.15% of strongest relative connection strength).

References

- Zaehle et al. [2010] T. Zaehle, S. Rach, and C. Herrmann, Transcranial alternating current stimulation enhances individual alpha activity in human EEG, PLoS One 5, e13766 (2010).

- Neuling et al. [2013] T. Neuling, S. Rach, and C. Herrmann, Orchestrating neuronal networks: sustained after-effects of transcranial alternating current stimulation depend upon brain states, Frontiers in Human Neuroscience 7, 161 (2013).

- Polanía et al. [2012] R. Polanía, M. A. Nitsche, C. Korman, G. Batsikadze, and W. Paulus, The importance of timing in segregated theta phase-coupling for cognitive performance, Current Biology 22, 1314 (2012).

- Strüber et al. [2014] D. Strüber, S. Rach, S. Trautmann-Lengsfeld, A. Engel, and C. Herrmann, Antiphasic 40 Hz oscillatory current stimulation affects bistable motion perception, Brain Topography 27, 158 (2014).

- Ladenbauer et al. [2017] J. Ladenbauer, J. Ladenbauer, N. Külzow, R. de Boor, E. Avramova, U. Grittner, and A. Flöel, Promoting sleep oscillations and their functional coupling by transcranial stimulation enhances memory consolidation in mild cognitive impairment, Journal of Neuroscience 37, 7111 (2017).

- Sun et al. [2011] W. Sun, W. Fu, W. Mao, D. Wang, and Y. Wang, Low-frequency repetitive transcranial magnetic stimulation for the treatment of refractory partial epilepsy, Clinical EEG and Neuroscience: Official Journal of the EEG and Clinical Neuroscience Society (ENCS) 42, 40 (2011).

- Fregni et al. [2006] F. Fregni, P. Otachi, A. Valle, P. Boggio, G. Thut, S. Rigonatti, A. Pascual-Leone, and K. Valente, A randomized clinical trial of repetitive transcranial magnetic stimulation in patients with refractory epilepsy, Annals of Neurology 60, 447 (2006).

- Hasan et al. [2013] A. Hasan, P. Falkai, and T. Wobrock, Transcranial brain stimulation in schizophrenia: targeting cortical excitability, connectivity and plasticity, Current Medicinal Chemistry 20, 405 (2013).

- Cole et al. [2015] J. Cole, C. Bernacki, A. Helmer, N. Pinninti, and J. O’reardon, Efficacy of transcranial magnetic stimulation (TMS) in the treatment of schizophrenia: A review of the literature to date, Innovations in Clinical Neuroscience 12, 12 (2015).

- Fried [2016] I. Fried, Brain stimulation in Alzheimer’s disease, Journal of Alzheimer’s Disease 54, 789 (2016).

- George et al. [2013] M. S. George, J. J. Taylor, and E. B. Short, The expanding evidence base for rtms treatment of depression, Current Opinion in Psychiatry 26, 13 (2013).

- Bassett and Sporns [2017] D. S. Bassett and O. Sporns, Network neuroscience, Nature Neuroscience 20, 353 (2017).

- Amunts et al. [2014] K. Amunts, M. Hawrylycz, D. Van Essen, J. Van Horn, N. Harel, J.-B. Poline, F. De Martino, J. Bjaalie, G. Dehaene-Lambertz, S. Dehaene, P. Valdes-Sosa, B. Thirion, K. Zilles, S. Hill, M. Abrams, P. Tass, W. Vanduffel, A. Evans, and S. Eickhoff, Interoperable atlases of the human brain, Neuroimage 99, 525 (2014).

- Rolls et al. [2015] E. T. Rolls, M. Joliot, and N. Tzourio-Mazoyer, Implementation of a new parcellation of the orbitofrontal cortex in the automated anatomical labeling atlas, Neuroimage 122, 1 (2015).

- Eickhoff et al. [2018] S. B. Eickhoff, B. T. Yeo, and S. Genon, Imaging-based parcellations of the human brain, Nature Reviews Neuroscience 19, 672 (2018).

- Breakspear [2017] M. Breakspear, Dynamic models of large-scale brain activity, Nature Neuroscience 20, 340 (2017).

- Deco and Jirsa [2012] G. Deco and V. K. Jirsa, Ongoing cortical activity at rest: criticality, multistability, and ghost attractors, Journal of Neuroscience 32, 3366 (2012).

- Deco et al. [2013] G. Deco, A. Ponce-Alvarez, D. Mantini, G. L. Romani, P. Hagmann, and M. Corbetta, Resting-state functional connectivity emerges from structurally and dynamically shaped slow linear fluctuations, Journal of Neuroscience 33, 11239 (2013).

- Demirtaş et al. [2019] M. Demirtaş, J. B. Burt, M. Helmer, J. L. Ji, B. D. Adkinson, M. F. Glasser, D. C. Van Essen, S. N. Sotiropoulos, A. Anticevic, and J. D. Murray, Hierarchical heterogeneity across human cortex shapes large-scale neural dynamics, Neuron 101, 1181 (2019).

- Cakan et al. [2020] C. Cakan, C. Dimulescu, L. Khakimova, D. Obst, A. Flöel, and K. Obermayer, A deep sleep model of the human brain: how slow waves emerge due to adaptation and are guided by the connectome, arXiv preprint arXiv:2011.14731 (2020).

- Tang and Bassett [2018] E. Tang and D. S. Bassett, Colloquium: Control of dynamics in brain networks, Reviews of Modern Physics 90, 031003 (2018).

- Gu et al. [2017] S. Gu, R. F. Betzel, M. G. Mattar, M. Cieslak, P. R. Delio, S. T. Grafton, F. Pasqualetti, and D. S. Bassett, Optimal trajectories of brain state transitions, Neuroimage 148, 305 (2017).

- Gu et al. [2015] S. Gu, F. Pasqualetti, M. Cieslak, Q. K. Telesford, B. Y. Alfred, A. E. Kahn, J. D. Medaglia, J. M. Vettel, M. B. Miller, S. T. Grafton, et al., Controllability of structural brain networks, Nature Communications 6, 1 (2015).

- Golos et al. [2015] M. Golos, V. Jirsa, and E. Daucé, Multistability in large scale models of brain activity, PLoS Computational Biology 11, e1004644 (2015).

- Muldoon et al. [2016] S. F. Muldoon, F. Pasqualetti, S. Gu, M. Cieslak, S. T. Grafton, J. M. Vettel, and D. S. Bassett, Stimulation-based control of dynamic brain networks, PLoS Computational Biology 12, e1005076 (2016).

- Berkovitz and Medhin [2012] L. D. Berkovitz and N. G. Medhin, Nonlinear optimal control theory (CRC press, 2012).

- Herzog et al. [2012] R. Herzog, G. Stadler, and G. Wachsmuth, Directional sparsity in optimal control of partial differential equations, SIAM Journal on Control and Optimization 50, 943 (2012).

- Casas et al. [2017] E. Casas, R. Herzog, and G. Wachsmuth, Analysis of spatio-temporally sparse optimal control problems of semilinear parabolic equations, ESAIM: Control, Optimisation and Calculus of Variations 23, 263 (2017).

- Tröltzsch [2010] F. Tröltzsch, Optimal control of partial differential equations: theory, methods, and applications, Vol. 112 (American Mathematical Soc., 2010).

- Stannat and Wessels [2020] W. Stannat and L. Wessels, Deterministic control of stochastic reaction-diffusion equations, Evolution Equations & Control Theory (2020).

- Casas et al. [2013] E. Casas, C. Ryll, and F. Tröltzsch, Sparse optimal control of the schlögl and FitzHugh–Nagumo systems, Computational Methods in Applied Mathematics 13, 415 (2013).

- FitzHugh [1961] R. FitzHugh, Impulses and physiological states in theoretical models of nerve membrane, Biophysical Journal 1, 445 (1961).

- Nagumo et al. [1962] J. Nagumo, S. Arimoto, and S. Yoshizawa, An active pulse transmission line simulating nerve axon, Proceedings of the IRE 50, 2061 (1962).

- Kostova et al. [2004] T. Kostova, R. Ravindran, and M. Schonbek, FitzHugh–Nagumo revisited: Types of bifurcations, periodical forcing and stability regions by a lyapunov functional, International Journal of Bifurcation and Chaos 14, 913 (2004).

- Schöll et al. [2009] E. Schöll, G. Hiller, P. Hövel, and M. A. Dahlem, Time-delayed feedback in neurosystems, Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 367, 1079 (2009).

- Eydam et al. [2019] S. Eydam, I. Franović, and M. Wolfrum, Leap-frog patterns in systems of two coupled FitzHugh–Nagumo units, Physical Review E 99, 042207 (2019).

- Shepelev and Vadivasova [2019] I. Shepelev and T. Vadivasova, Variety of spatio-temporal regimes in a 2d lattice of coupled bistable FitzHugh–Nagumo oscillators. Formation mechanisms of spiral and double-well chimeras, Communications in Nonlinear Science and Numerical Simulation 79, 104925 (2019).

- Plotnikov et al. [2016] S. A. Plotnikov, J. Lehnert, A. L. Fradkov, and E. Schöll, Synchronization in heterogeneous FitzHugh–Nagumo networks with hierarchical architecture, Physical Review E 94, 012203 (2016).

- Lehnert et al. [2011] J. Lehnert, T. Dahms, P. Hövel, and E. Schöll, Loss of synchronization in complex neuronal networks with delay, EPL (Europhysics Letters) 96, 60013 (2011).

- Cakan et al. [2014] C. Cakan, J. Lehnert, and E. Schöll, Heterogeneous delays in neural networks, The European Physical Journal B 87, 1 (2014).

- Nikitin et al. [2019] D. Nikitin, I. Omelchenko, A. Zakharova, M. Avetyan, A. L. Fradkov, and E. Schöll, Complex partial synchronization patterns in networks of delay-coupled neurons, Philosophical Transactions of the Royal Society A 377, 20180128 (2019).

- Omelchenko et al. [2019] I. Omelchenko, T. Hülser, A. Zakharova, and E. Schöll, Control of chimera states in multilayer networks, Frontiers in Applied Mathematics and Statistics 4, 67 (2019).

- Ruzzene et al. [2020] G. Ruzzene, I. Omelchenko, J. Sawicki, A. Zakharova, E. Schöll, and R. G. Andrzejak, Remote pacemaker control of chimera states in multilayer networks of neurons, Physical Review E 102, 052216 (2020).

- Chouzouris et al. [2018] T. Chouzouris, I. Omelchenko, A. Zakharova, J. Hlinka, P. Jiruska, and E. Schöll, Chimera states in brain networks: Empirical neural vs. modular fractal connectivity, Chaos: An Interdisciplinary Journal of Nonlinear Science 28, 045112 (2018).

- Gerster et al. [2020] M. Gerster, R. Berner, J. Sawicki, A. Zakharova, A. Škoch, J. Hlinka, K. Lehnertz, and E. Schöll, FitzHugh–Nagumo oscillators on complex networks mimic epileptic-seizure-related synchronization phenomena, Chaos: An Interdisciplinary Journal of Nonlinear Science 30, 123130 (2020).

- Ramlow et al. [2019] L. Ramlow, J. Sawicki, A. Zakharova, J. Hlinka, J. C. Claussen, and E. Schöll, Partial synchronization in empirical brain networks as a model for unihemispheric sleep, EPL (Europhysics Letters) 126, 50007 (2019).

- Ghosh et al. [2008] A. Ghosh, Y. Rho, A. McIntosh, R. Kötter, and V. Jirsa, Cortical network dynamics with time delays reveals functional connectivity in the resting brain, Cognitive Neurodynamics 2, 115 (2008).

- Vuksanović and Hövel [2015] V. Vuksanović and P. Hövel, Dynamic changes in network synchrony reveal resting-state functional networks, Chaos: An Interdisciplinary Journal of Nonlinear Science 25, 023116 (2015).

- Messé et al. [2015] A. Messé, M.-T. Hütt, P. König, and C. C. Hilgetag, A closer look at the apparent correlation of structural and functional connectivity in excitable neural networks, Scientific Reports 5, 7870 (2015).

- Buchholz et al. [2013] R. Buchholz, H. Engel, E. Kammann, and F. Tröltzsch, On the optimal control of the schlögl-model, Computational Optimization and Applications 56, 153 (2013).

- Polak and Ribiere [1969] E. Polak and G. Ribiere, Note sur la convergence de méthodes de directions conjuguées, ESAIM: Mathematical Modelling and Numerical Analysis-Modélisation Mathématique et Analyse Numérique 3, 35 (1969).

- Nocedal and Wright [2006] J. Nocedal and S. Wright, Numerical optimization (Springer Science & Business Media, 2006).

- Fletcher and Reeves [1964] R. Fletcher and C. M. Reeves, Function minimization by conjugate gradients, The Computer Journal 7, 149 (1964).

- Izhikevich [2007] E. M. Izhikevich, Dynamical systems in neuroscience (MIT press, 2007).

- Cakan and Obermayer [2020] C. Cakan and K. Obermayer, Biophysically grounded mean-field models of neural populations under electrical stimulation, PLoS Computational Biology 16, 1 (2020).

- Van Essen et al. [2013] D. C. Van Essen, S. M. Smith, D. M. Barch, T. E. Behrens, E. Yacoub, K. Ugurbil, W.-M. H. Consortium, et al., The WU-Minn human connectome project: an overview, Neuroimage 80, 62 (2013).

- Jenkinson et al. [2012] M. Jenkinson, C. F. Beckmann, T. E. Behrens, M. W. Woolrich, and S. M. Smith, FSL, Neuroimage 62, 782 (2012).

- hor [1984] Noise-induced transitions in physics, chemistry, and biology, in Noise-Induced Transitions: Theory and Applications in Physics, Chemistry, and Biology (Springer Berlin Heidelberg, Berlin, Heidelberg, 1984) pp. 164–200.