Edge Rewiring Goes Neural: Boosting Network Resilience without Rich Features

Abstract

Improving the resilience of a network is a fundamental problem in network science, which protects the underlying system from natural disasters and malicious attacks. This is traditionally achieved via successive degree-preserving edge rewiring operations, with the major limitation of being transductive. Inductively solving graph-related tasks with sequential actions is accomplished by adopting graph neural networks (GNNs) coupled with reinforcement learning under the scenario with rich graph features. However, such frameworks cannot be directly applied to resilience tasks where only pure topological structure is available. In this case, GNNs can barely learn useful information, resulting in prohibitive difficulty in making actions for successively rewiring edges under a reinforcement learning context. In this paper, we study in depth the reasons why typical GNNs cause such failure. Based on this investigation, we propose ResiNet, the first end-to-end trainable inductive framework to discover Resilient Network topologies while balancing network utility. To this end, we reformulate resilience optimization as an MDP equipped with edge rewiring action space, and propose a pure topology-oriented variant of GNN called Filtration enhanced Graph Neural Network (FireGNN), which can learn from graphs without rich features. Extensive experiments demonstrate that ResiNet achieves a near-optimal resilience gain on various graphs while balancing the utility, and outperforms existing approaches by a large margin.

1 Introduction

Network systems, such as infrastructure systems, supply chains, routing networks, and peer-to-peer computing networks, are vulnerable to malicious attacks. Network resilience, in the context of network science, is a measurement characterizing the ability of a system to defend itself from such failures and attacks [1]. A resilient network should continue to function and maintain an acceptable level of utility when the network partially fails. Therefore, resilience is crucial when designing new systems or upgrading existing systems toward high reliability.

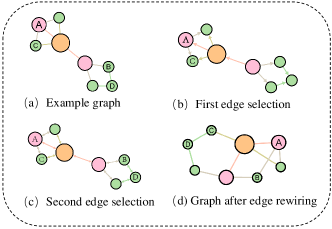

Current network resilience optimization methods improve the resilience of networks over graph topologies based on an atomic operation called edge rewiring [1, 2, 3]. Concretely, for a given graph and two existing edges and , an edge rewiring operation alters the graph structure by removing and and adding and , where and . Edge rewiring operation has some nice properties against simply addition or deletion of edges: 1) it preserves the node degree--the number of edges and the total degree of a graph, while addition/deletion may cause change; 2) it achieves minimal changes in terms of utility measure in terms of graph Laplacian, while addition/deletion may lead to an unpredictable network utility degradation [4, 5]. The challenge of the resilience task lies in determining which two edges are selected for rewiring, with the complexity of for successive steps of edge rewiring.

Traditional non-learning-based resilience optimization methods typically fall into the categories of evolutionary computation [6] or heuristic-based [1, 2, 3, 7], with the following limitations:

-

•

Transductivity. Traditional methods are transductive since they search the resilience topology on a particular problem instance. This search procedure is performed for every individual graph without generalization.

- •

-

•

Utility Loss. Rewiring operation in resilience optimization may potentially lead to an degradation of the network utility, which may jeopardize the network functioning.

Although the learning-based paradigm equipped with GNNs has proved powerful for a large variety of graph tasks with rich features [9, 10, 11, 12, 13, 14, 15], it still remains opaque how to effectively adapt such approaches to resilience optimization where only topological structure is available. One may infer that such a lack of feature can significantly hinder the learning ability, and a case agreeing with this has been discovered in solving the traveling salesman problem (TSP): Boffa et al. showed that the performance of GNNs degenerates largely when node coordinates are missing (but pairwise distance is given), compared to the case when coordinates are available [16]. Although Boffa et al. adopted a distance encoding strategy to alleviate the performance gap in TSP [16, 17], we empirically found that this encoding strategy is not working well for the more challenging resilience task (See Sec. 5.2). As such, it is demanding to devise a novel method that can be applicable for the resilience task without rich features. Readers are referred to Appendix D for a more detailed analysis.

In this work, we present ResiNet, the first inductive learning-based framework for discovering resilient network topology using successive edge rewiring operations. To overcome the above limitation of GNNs in modeling graphs without rich features, we specially design a topology-oriented variant of GNN called Filtration enhanced GNN (FireGNN). FireGNN creates a series of subgraphs (the filtration) by successively removing the node with the highest degree from the graph and then learns to aggregate the node representations from each subgraph. This filtration process innovation is inspired by persistent homology and the approximation of the persistence diagram [18, 19, 20].

The main contributions of this paper are summarized as follows:

-

1)

We propose ResiNet, the first data-driven learning-based framework to boost network resilience inductively in a degree-preserving manner with moderate loss of the network utility. ResiNet forms resilience optimization into a successive sequential decision process of edge rewiring operations. Extensive experiments show that ResiNet achieves near-optimal resilience gain while balancing network utilities. Existing approaches are outperformed by a large margin.

-

2)

FireGNN, our technical innovation serving as the graph feature extractor, is capable of learning meaningful representations from pure topological structures, which provides sufficient training signals to learn an RL agent to perform successive edge rewiring operations inductively.

2 Related work

Network resilience.

Modern network systems are threatened by various malicious attacks, such as the destruction of critical nodes, critical connections and critical subset of the network via heuristics/learning-based attack [21, 22, 23, 24, 25, 26, 21, 27]. Network resilience was proposed and proved as a suitable measurement for describing the robustness and stability of a network system under such attacks [1]. Around optimizing network resilience, various defense strategies have been proposed to protect the network functionality from crashing and preserve network’s topologies to some extent. Commonly used manipulations of defense include adding additional edges [28, 29], protecting vulnerable edges [30] and rewiring two edges [1, 2, 31]. Among these manipulations, edge rewiring fits well to real-world applications as it induces fewer functionality changes to the original network and does not impose additional loads to the vertices (degree-preserving) [1, 3, 7]. By now, there has been no learning-based inductive strategy for the resilience task.

GNNs for graph-related tasks.

GNNs are powerful tools to learn from relational data, providing meaningful representations for the downstream task. Several successful applications using GNNs as backbones include node classification [32, 33, 34], link prediction [35, 32, 33], graph property estimation [36, 32, 35, 37], and combinatorial problems on graphs (e.g., TSP [9, 10, 11, 12, 38], vehicle routing problem [13, 14, 15], graph matching [39] and adversarial attack on GNNs [4, 40]). Although standard message passing GNNs are powerful, their expressive power is upper-bounded by the 1-Weisfeiler-Lehman (1-WL) test [36]. Many advanced techniques are designed for enabling GNNs with greater expressive power over the 1-WL test, such as distance encoding [17] and high-order GNNs [41]. Such strategies are empirically observed to be more expressive than 1-WL versions under rare feature cases [42, 16]. For example, distance encoding was employed by Boffa et al. in a learning-based TSP solver, achieving better performance than standard GNNs when only pairwise distance is given [16]. However, it still obviously under-performs compared to the case when GNNs are fully trained with node coordinates. Till now, it remains prohibitively challenging to adapt GNNs to graphs effectively without rich feature, or even with pure topology, as in the resilience task.

Extended related work.

The related work on network resilience and utility, multi-views graph augmentation for GNNs and deep graph generation is deferred to Appendix A.

3 Problem definition

An undirected graph is defined as , where is the set of nodes, is the set of edges, is the adjacency matrix, and is the -dimensional node feature matrix111A graph without rich feature only has the topology structure and the node feature is not available.. The degree of a node is defined as , and a node with degree 0 is called an isolated node.

Given the network resilience metric and the utility metric , let denote the set of graphs with the same node degrees as . The objective of boosting the resilience of is to find a target graph , which maximizes the network resilience while preserving the network utility. Formally, the problem of maximizing network resilience is formulated as

where is the scalar weight that balances the resilience and the utility.

Consistent with the conventional setting in network science, two families of resilience metrics and two examples of utility metrics are used in our experiments, with detailed definitions deferred to Appendix B.

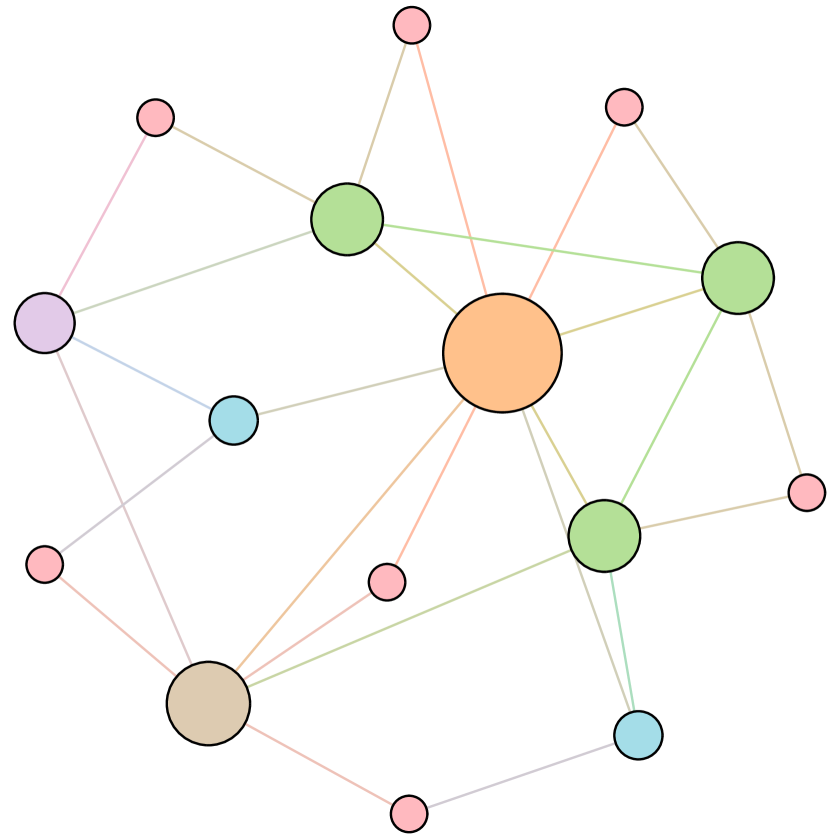

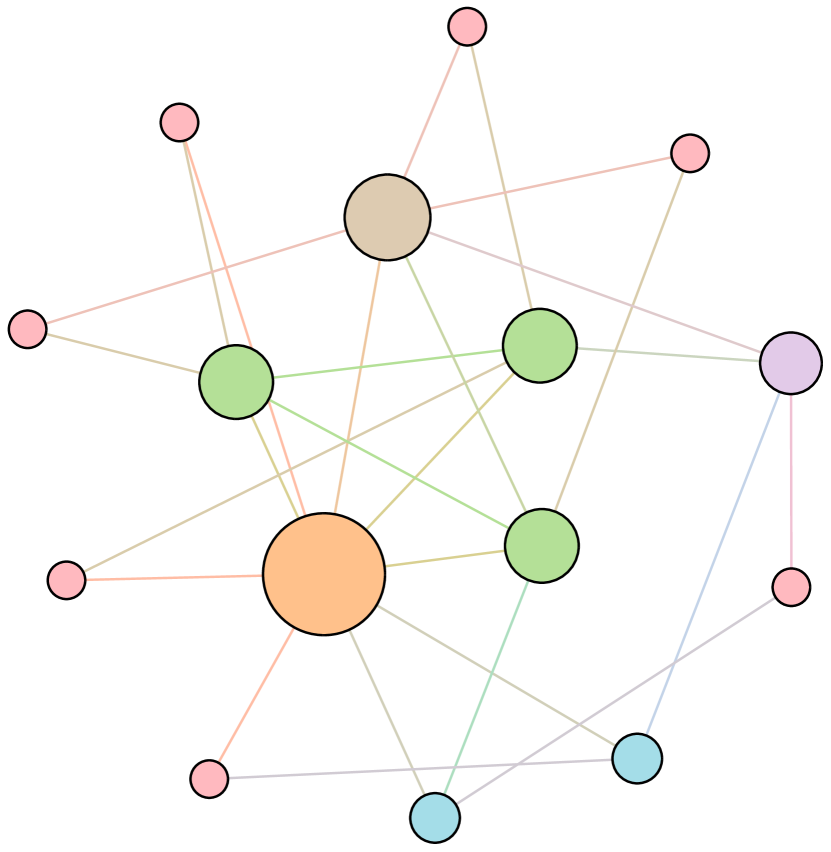

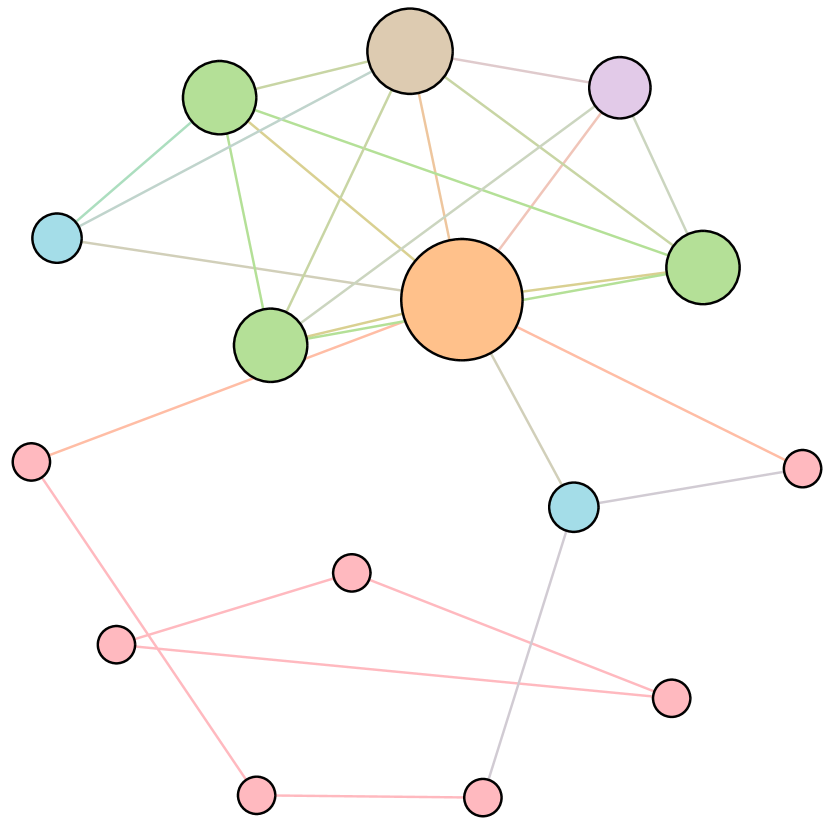

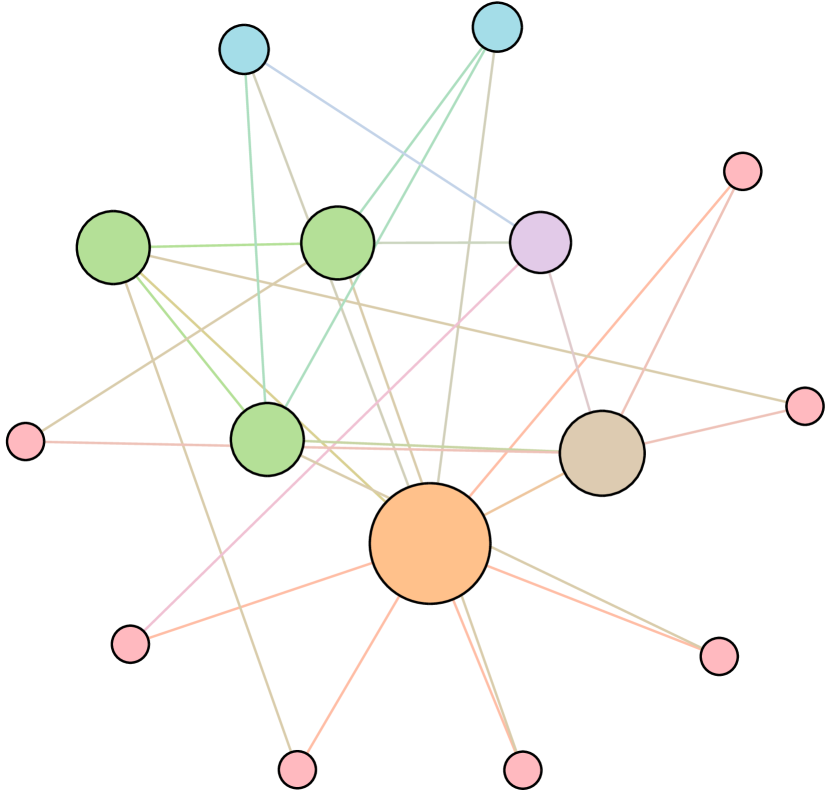

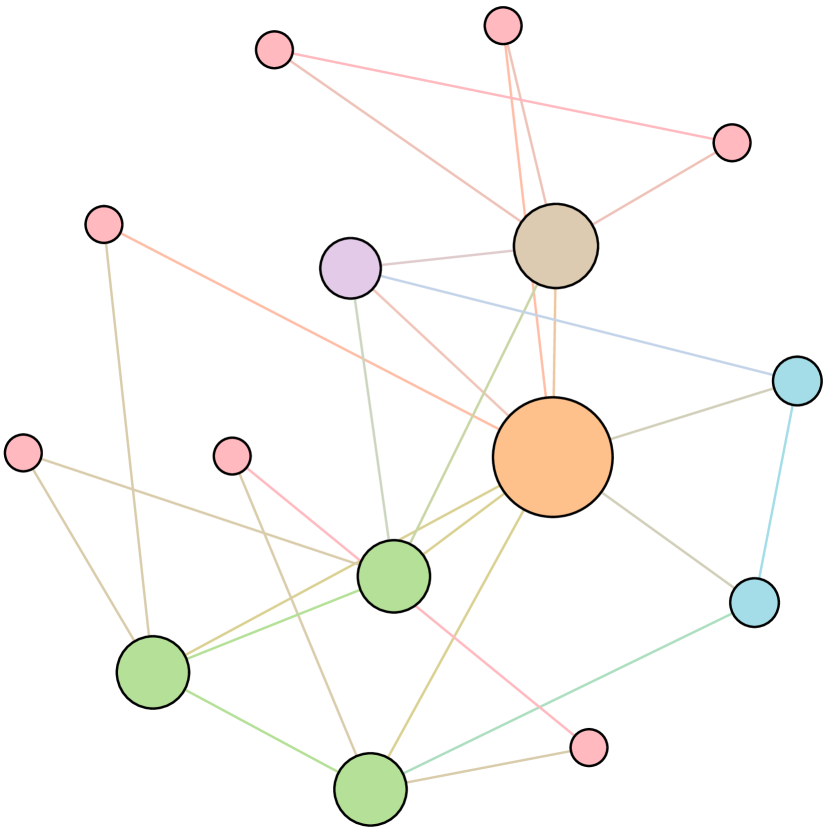

To satisfy the constraint of preserving degree, currently, the edge rewiring operation is the default atomic action for obtaining new graph candidates from . As is shown in Figure 1, at each step, two existing edges and are first selected. Then the edge rewiring alters the graph structure by removing and and adding and , where . Combinatorially, a total of successive steps of edge rewiring has the complexity of .

4 Proposed approach: ResiNet

In this section, we formulate the task of boosting network resilience as a reinforcement learning task by learning to select two edges and rewire them successively. We first present the graph resilience-aware environment design and describe our innovation FireGNN in detail. Finally, we present the graph policy network that guides the edge selection and rewiring process.

4.1 Boosting network resilience via edge rewiring as Markov decision process

We formulate the network resilience optimization problem via successive edge rewiring operations into the MDP framework. The Markov property denotes that the graph obtained at time step relies only on the graph at time step and the rewiring operation, reducing the complexity from original to .

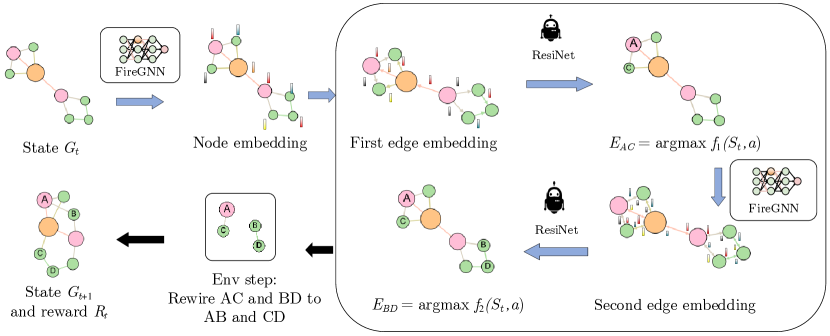

As illustrated in Figure 2, the environment performs the resilience optimization in an auto-regressive step-wise way through a sequence of edge rewiring actions guided by ResiNet. Given an input graph, the agent first decides whether to terminate or not. If it chooses not to terminate, it selects one edge from the graph to remove, receives the very edge it just selected as the auto-regression signal, and then selects another edge to remove. Four nodes of these two removed edges are re-combined, forming two new edges to be added to the graph. The optimization process repeats until the agent decides to terminate. The details of the design of the state, the action, the transition dynamics, and the reward are presented as follows.

State.

The fully observable state is formulated as , where is the current input graph at step . The detailed node feature initialization strategy is given in Appendix C.3.

Action.

ResiNet is equipped with a node permutation-invariant, variable-dimensional action space. Given a graph , the action is to select two edges and the rewiring order. The agent first chooses an edge and a direction . Then conditioning on the state, , and the direction the agents chooses an edge such that and a direction . The heads of the two edges reconnect as a new edge , and so does the tail . As , and , refer to the same rewiring operation, the choice of the direction of is randomized (this randomized bit is still an input of choosing ). This effectively reduces the size of the action space by half. In this way, The action space is the set of all feasible pairs of , with a variable size no larger than .

Transition dynamics.

The formulation of the action space implies that if the agent does not terminate at step , the selected action must form an edge rewiring. This edge rewiring is executed by the environment and the graph transits to the new graph.

Note that in some other work, infeasible operations are also included in the action space (to make the action space constant through the process) [43, 44]. In these work, if the operation is infeasible, it is not executed, and the state is not changed. This reduces the sample efficiency, causes biased gradient estimations [45], and makes the process to be prone to stuck at the state (which requires manually disabling the specific action in the next step). ResiNet takes advantage of the state-dependent variable action space composed of only feasible operations.

Reward.

ResiNet aims to optimize the resilience while preserving the utility, forming a complicated and possibly unknown objective function. Despite this, by [46], an MDP that maximizes a complicated objective is up to an MDP that maximizes the linear combination of resilience and utility for some coefficient factor. This fact motivates us to design the reward as the step-wise gain of such a linear combination as

where and are the resilience and the utility functions, respectively. The cumulative reward up to time is then the total gain of such a linear combination.

4.2 FireGNN

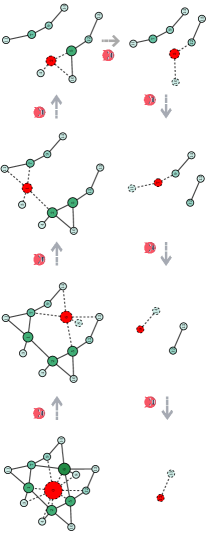

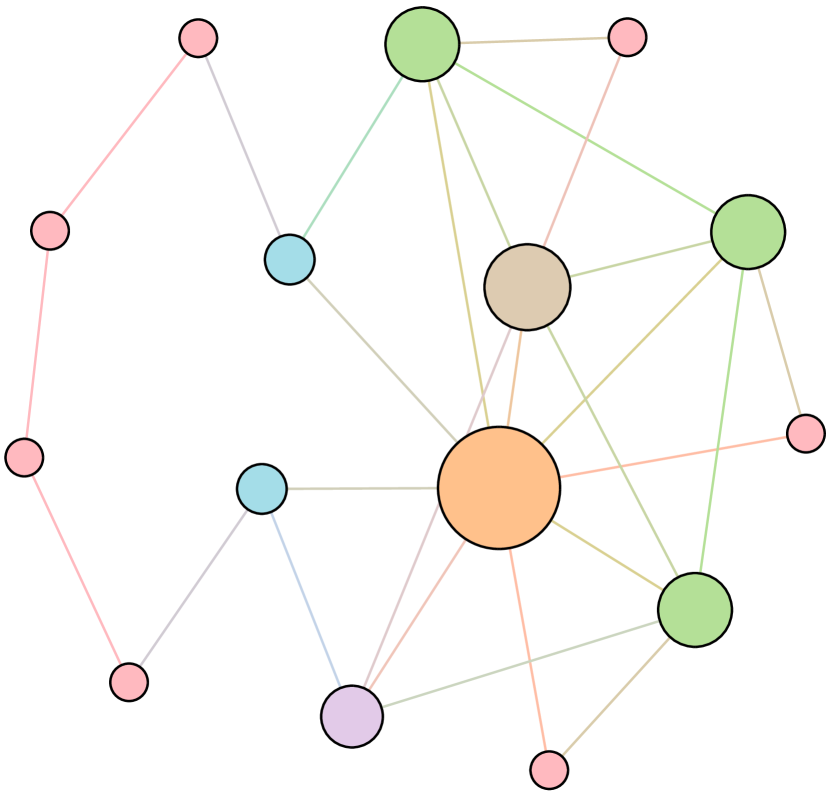

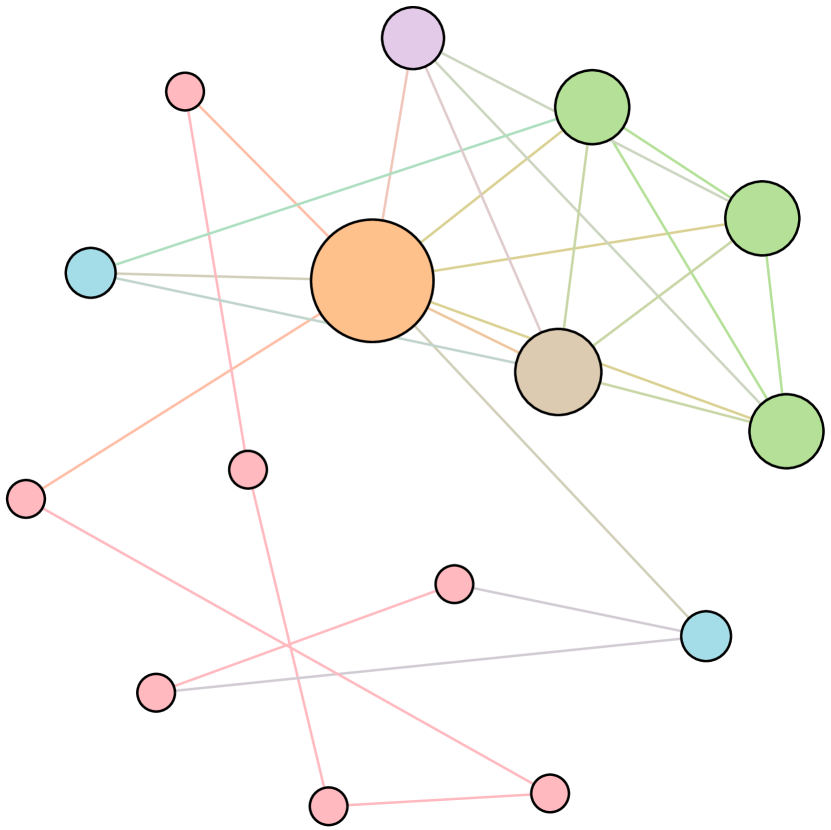

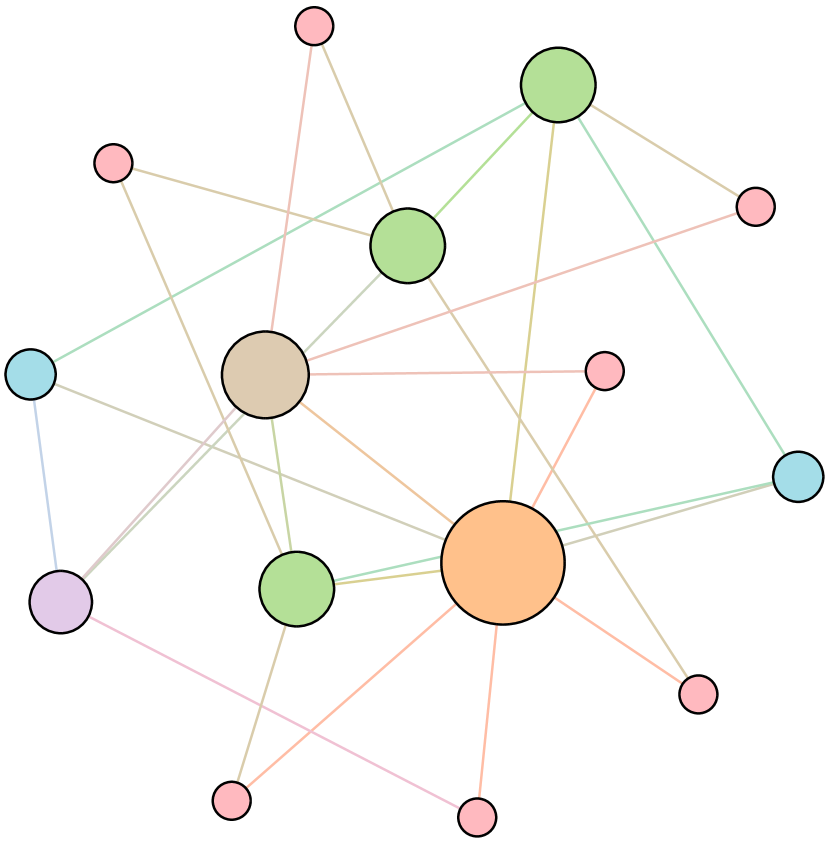

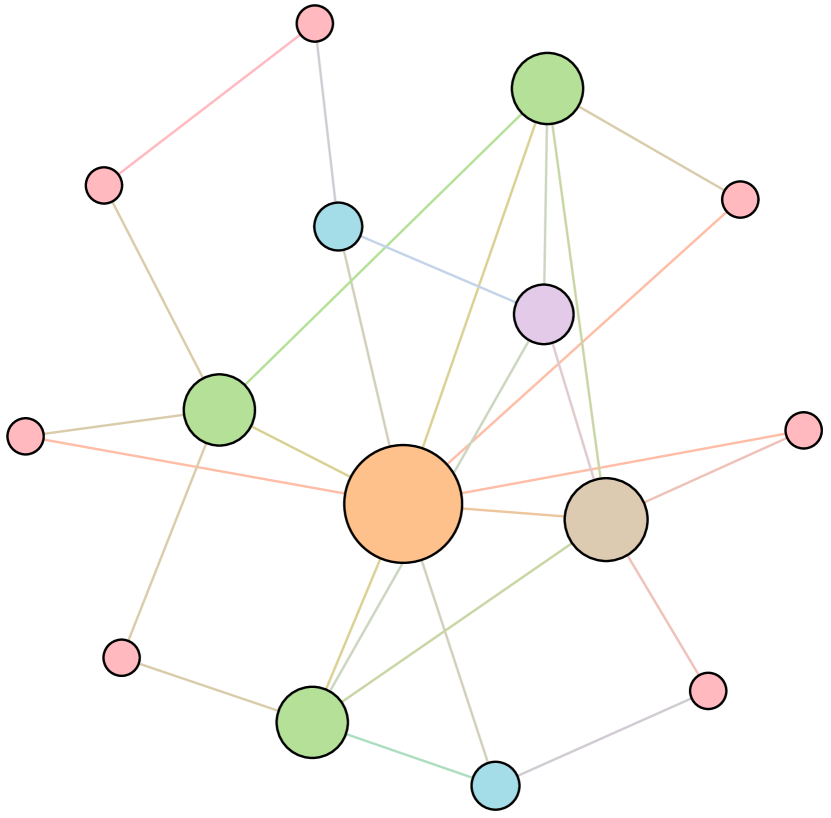

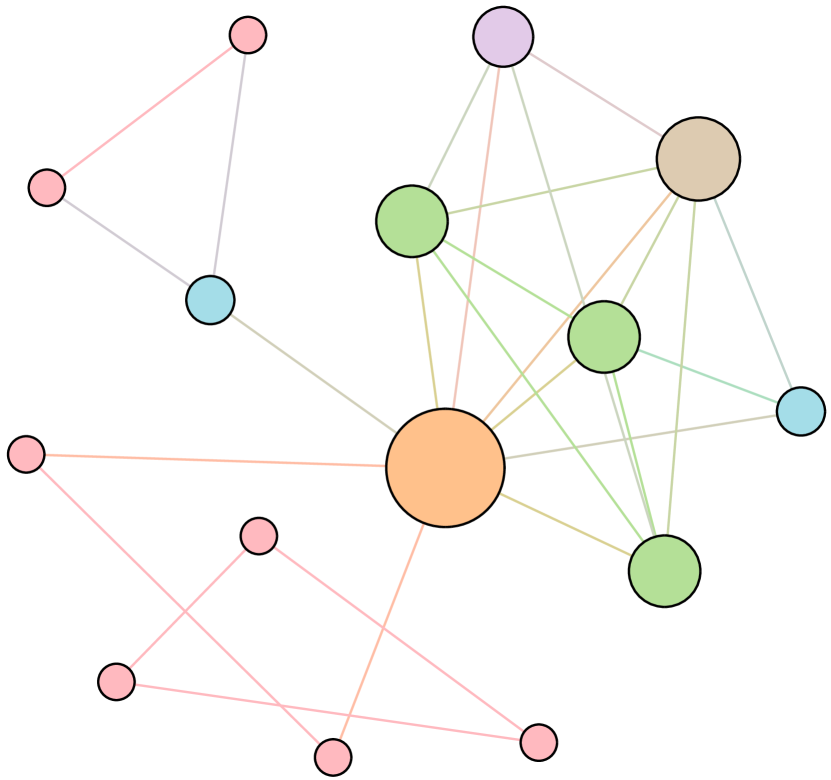

Motivated by graph filtration in persistent homology [18], we design the filtrated graph enhanced GNN termed FireGNN to model graphs without rich features, or even with only topology. As shown in Figure 3, for a given input graph , FireGNN transforms from the static version to a temporal version consisting of a sequence of subgraphs, by repeatedly removing the node with the highest degree222Removing the node with the highest degree leads to an efficient minimal number of resultant subgraphs, comparing to the uniformly random removal of nodes.. Observing a sequence of nested subgraphs of grants FirGNN the capability to observe how evolves towards being empty. Then FireGNN aligns and aggregates the node, edge and graph embedding from each subgraph, leading to meaningful representations in node, edge, and graph levels. Formally, the filtration in FireGNN is constructed as

where denotes the remaining graph after removing nodes with highest node degrees, denotes the node with highest degree in current subgraph , DEGREE measures the node degree, is the original graph, and contains no edge. The sequence of the nested subgraphs of is termed the filtrated graph .

Node embedding.

Regular GNN only operates on the original graph to obtain the node embedding for each node as where denotes a standard GNN model. In FireGNN, by using the top subgraphs in a graph filtration, the final node embedding of is obtained by

where denotes a node-level aggregation function, is the node embedding of in the -th subgraph , and . In practice, is discarded when calculating if is isolated or not included in .

Edge embedding.

The directed edge embedding of the edge from node to node in each subgraph is obtained by combining the embeddings of the two end vertices in as

where denotes an aggregation function for obtaining edge embedding from two end vertices (typically chosen from , , , , and ). is a multilayer perceptron (MLP) model that ensures the consistence between the dimensions of edge embedding and graph embedding.

The final embedding of the directed edge of the filtrated graph is given by

where denotes an edge-level aggregation function.

Graph embedding.

With the node embedding of each subgraph available, the graph embedding of each subgraph is calculated by a readout functions (e.g., , ) on all non-isolated nodes in as

The final graph embedding of the filtrated graph is given by

where denotes a graph-level aggregation function.

4.3 Edge rewiring policy network

Having presented the details of the graph resilience environment and FireGNN, in this section, we describe the policy network architecture of ResiNet in detail, which learns to select two existing edges for rewiring at each step. At time step , the policy network uses FireGNN as the graph extractor to obtain the directed edge embedding and the graph embedding from the filtrated graph , and outputs an action representing two selected rewired edges, leading to the new state and the reward .

To be inductive, we adapt a special autoregressive node permutation-invariant dimension-variable action space to model the selection of edges from graphs with arbitrary sizes and permutations. The detailed mechanism of obtaining the action based on edge embedding and graph embedding is presented as follows, further reducing the complexity from to .

Auto-regressive latent edge selection.

An edge rewiring action at time step involves the prediction of the termination probability and the selection of two edges and and the rewiring order. The action space of is binary, however, the selection of two edges imposes a huge action space in , which is too expensive to sample from even for a small graph. Instead of selecting two edges simultaneously, we decompose the joint action into , where and are two existing edges which do not share any common node (recall that and are directed edges for an undirected graph). Thus the probability of is formulated as

Predicting the termination probability.

The first policy network takes the graph embedding as input and outputs the probability distribution of the first action that decides to terminate or not as

where is implemented by a two layer MLP. Then .

Selecting edges.

If the signal given by the agent decides to continue to rewire, two edges are then selected in an auto-regressive way. The signal of continuing to rewire is input to the selection of two edges as a one-hot encoding vector . The second policy network takes the graph embedding and as input and outputs a latent vector . The pointer network [47] is used to measure the proximity between and each edge embedding in to obtain the first edge selection probability distribution. Then, to select the second edge, the graph embedding and the first selected edge embedding and are concatenated and fed into the third policy network . obtains the latent vector for selecting the second edge using a respective pointer network. The overall process can be formulated as

where is a two-layer MLP model, denotes the concatenation operator, is the embedding of the first selected edge at step , and is a pointer network.

5 Experiments

In this section, we demonstrate the advantages of ResiNet over existing non-learning-based and learning-based methods in achieving superior network resilience, inductively generalizing to unseen graphs, and accommodating multiple resilience and utility metrics. Moreover, we show that FireGNN can learn meaningful representations from graph data without rich features, while current GNNs (including GNNs with stronger power than 1-WL test) fail. Our implementation is already open sourced333Link to code and datasets: https://github.com/yangysc/ResiNet.

5.1 Experimental settings

Datasets.

Synthetic datasets, real EU power network [48] and Internet peer-to-peer networks [49, 50] are used to demonstrate the performance of ResiNet in transductive and inductive settings. The details of data generation and the statistics of the datasets are presented in Appendix C.1. Following the conventional experimental settings in network science, the maximal node size is set to be around 1000 [1], taking into account: 1) the high complexity of selecting two edges at each step is ; 2) evaluating the resilience metric is time-consuming for large graphs.

Baselines.

We compare ResiNet with existing graph resilience optimization algorithms, including non-learning-based methods and learning-based algorithms. Non-learning-based methods (upper half of Table 1) include the hill climbing (HC) [1], the greedy algorithm [2], the simulated annealing (SA) [31], and the evolutionary algorithm (EA) [6]. Since there is no previous learning-based baseline, we specifically devise two counterparts based on our framework by replacing FireGNN with existing well-known powerful GNNs (DE-GNN [17] and -GNN [41]) (lower half of Table 1). The classical GIN model is used as the backbone for FireGNN and DE-GNN [36]. All devised counterparts and selected variants other than FireGNN cannot be successfully applied to the resilience task in an inductive fashion, when only topological structures are available.

The ResiNet’s training setup is detailed in Appendix C.2. All algorithms are repeated for 3 random seeds using default hyper-parameters.

Metrics.

Various definitions of resilience and utility used for evaluating algorithms are deferred to Appendix B.

5.2 Comparisons to the baselines

| Method | BA-15 | BA-50 | BA-100 | BA-500 | BA-1000 | EU | P2P-Gnutella05 | P2P-Gnutella09 | |

|---|---|---|---|---|---|---|---|---|---|

| HC | 26.8 (10) | 30.0 (20) | 24.1 (20) | 6.4 (20) | 66.6 (20) | 19.8 (20) | 6.2 (20) | 8.4 (20) | |

| 18.6 (11.3) | 22.1 (20) | 14.9 (20) | 5.9 (20) | 16.4 (20) | 16.3 (20) | 5.2 (20) | 7.0 (20) | ||

| SA | 21.6 (17.3) | 11.9 (20) | 12.5 (20) | 3.8 (20) | 42.9 (20) | 14.9 (20) | 3.9 (20) | 3.7 (20) | |

| 16.8 (19.0) | 11.4 (20) | 13.4 (20) | 4.0 (20) | 15.4 (20) | 14.0 (20) | 6.3 (20) | 4.8 (20) | ||

| Greedy | 23.5 (6) | 48.6 (13) | 64.3 (20) | ✗ | ✗ | 0.5 (3) | ✗ | ✗ | |

| 5.3 (15) | 34.7 (13) | 42.7 (20) | ✗ | ✗ | 0.3 (3) | ✗ | ✗ | ||

| EA | 8.5 (20) | 6.4 (20) | 4.0 (20) | 8.5 (20) | 174.1 (20) | 8.2 (20) | 2.7 (20) | 0 (20) | |

| 6.4 (20) | 4.7 (20) | 2.8 (20) | 5.6 (20) | 18.7 (20) | 9.3 (20) | 3.7 (20) | 0.1 (20) | ||

| DE-GNN-RL | 13.7 (2) | 0 (1) | 0 (1) | 1.6 (20) | 41.7 (20) | 9.0 (20) | 2.2 (20) | 0 (1) | |

| 10.9 (2) | 0 (1) | 0 (1) | 2.7 (20) | 20.1 (14) | 2.1 (20) | 0 (1) | 1.0 (20) | ||

| -GNN-RL | 13.7 (2) | 0 (1) | 0 (1) | 0 (1) | 8.8 (20) | 4.5 (20) | -0.2 (20) | 0 (1) | |

| 6.3 (2) | 0 (1) | 0 (1) | 0 (20) | -24.9 (20) | 4.8 (20) | -0.1 (20) | 0 (1) | ||

| ResiNet | 35.3 (6) | 61.5 (20) | 70.0 (20) | 10.2 (20) | 172.8 (20) | 54.2 (20) | 14.0 (20) | 18.6 (20) | |

| 26.9 (20) | 53.9 (20) | 53.1 (20) | 15.7 (20) | 43.7 (20) | 51.8 (20) | 12.4 (20) | 15.1 (20) |

In this section, we compare ResiNet to baselines in optimizing the combination of resilience and utility with weight coefficient . Following conventional setting, the graph connectivity-based metric is used as resilience metric [1] and the global efficiency is used as utility metric [51, 52].

Table 1 records the metric gain and the required number of rewiring operations of different methods under the same rewiring budget. ResiNet outperforms all baselines consistently on all datasets. Note that this performance may be achieved by ResiNet under a much fewer number of rewiring operations, such as on BA-15 with . In contrast, despite approximately searching for all possible new edges, the greedy algorithm is trapped in a local optimum (as it maximizes the one-step resilience gain) and is too expensive to optimize the resilience of a network with more than 300 nodes. For SA, the initial temperature and the temperature decay rate need to be carefully tuned for each network. EA performs suboptimally with a limited rewiring budget due to the numerous rewiring operations required in the internal process (e.g., the crossover operator). Learning-based methods (DE-GNN-RL and -GNN-RL) using existing GNNs coupled with distance encoding cannot learn effectively compared to ResiNet, supporting our claim about the effectiveness of FireGNN on graphs without rich features.

All baselines are compared under the same rewiring budget of 20 since each edge rewiring introduces economic costs. We record the performance and speed of each algorithm for a maximal rewiring budget of 200 in Table 5. Solving the resilience task under such a large rewiring budget (200) will not be applicable in practice due to the high cost of many rewiring operations in general, and as such this result is only for the completeness of the presentation.

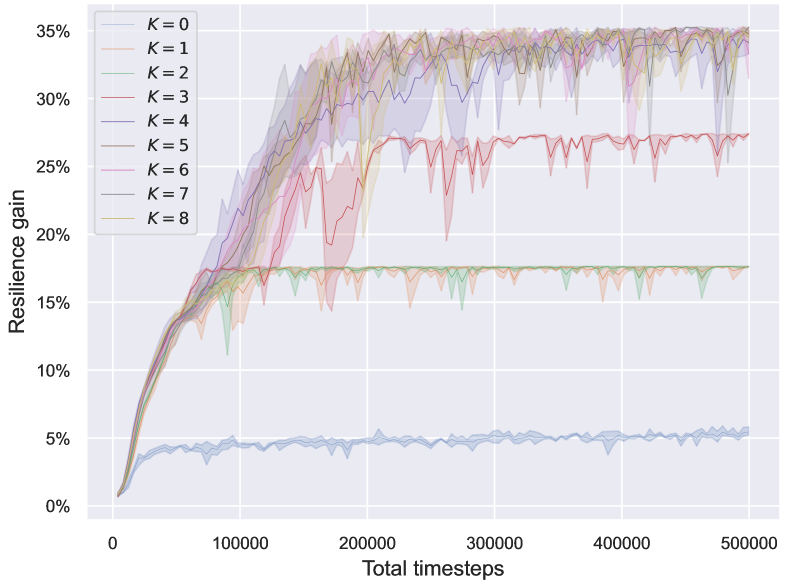

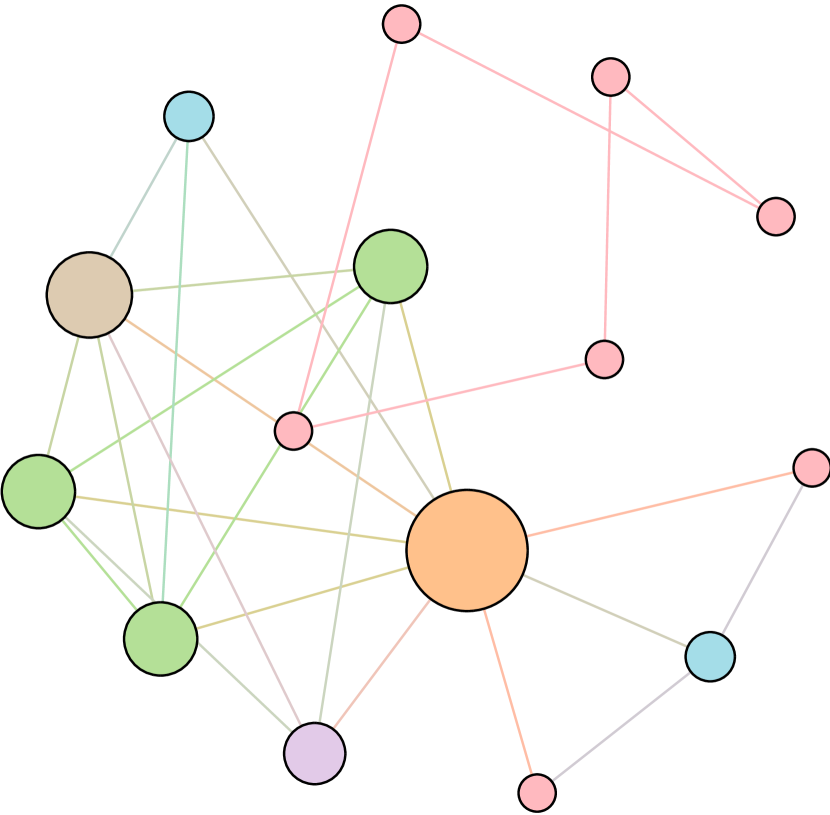

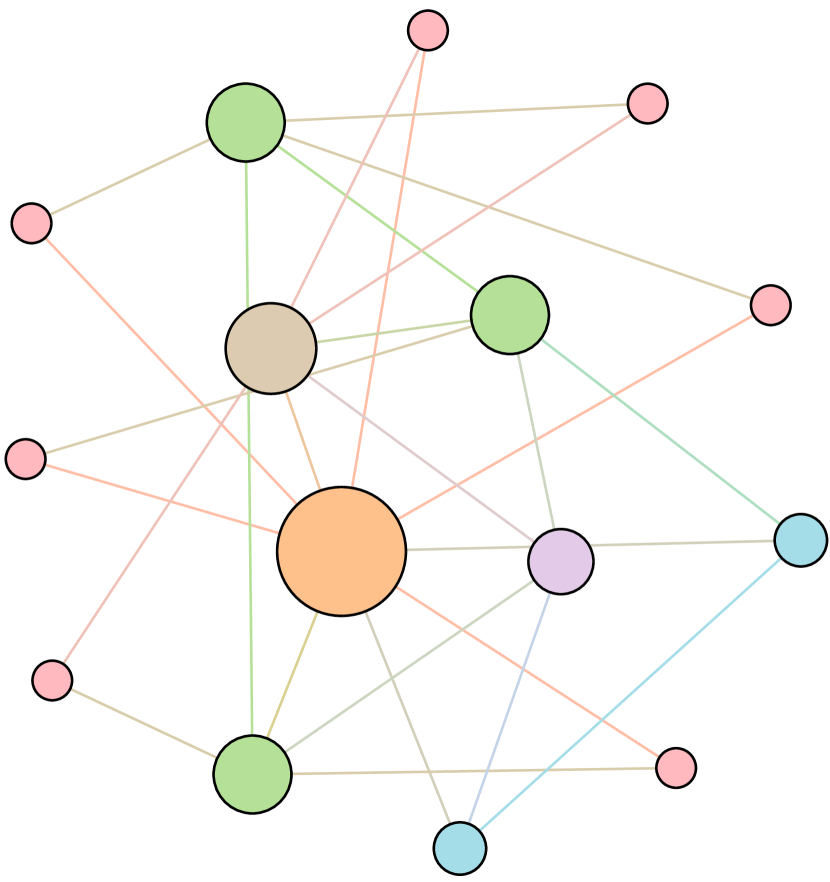

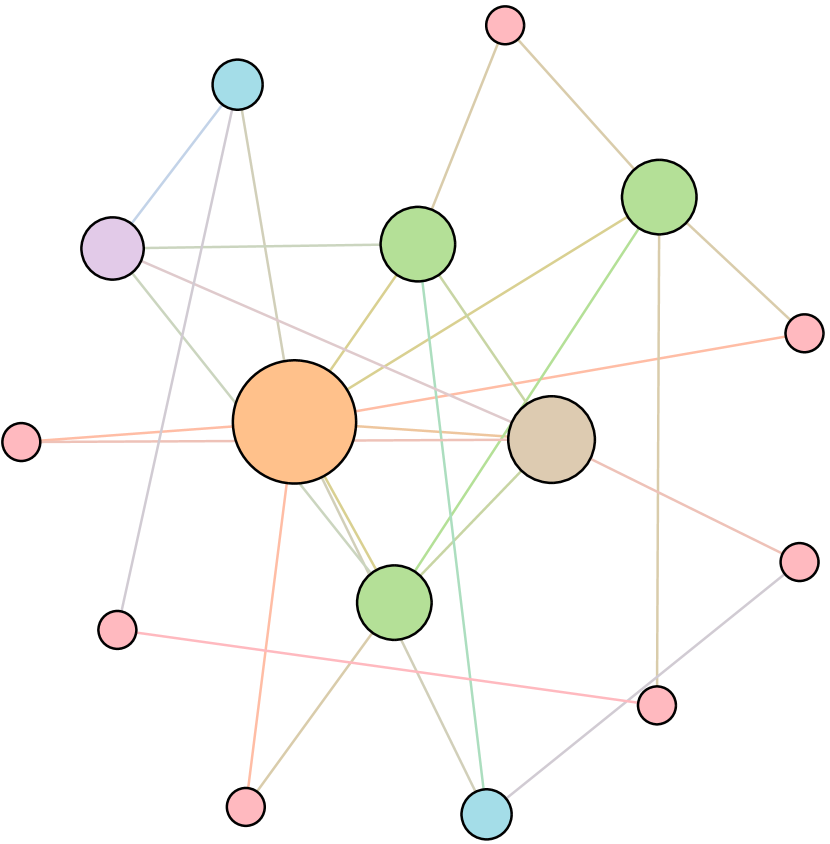

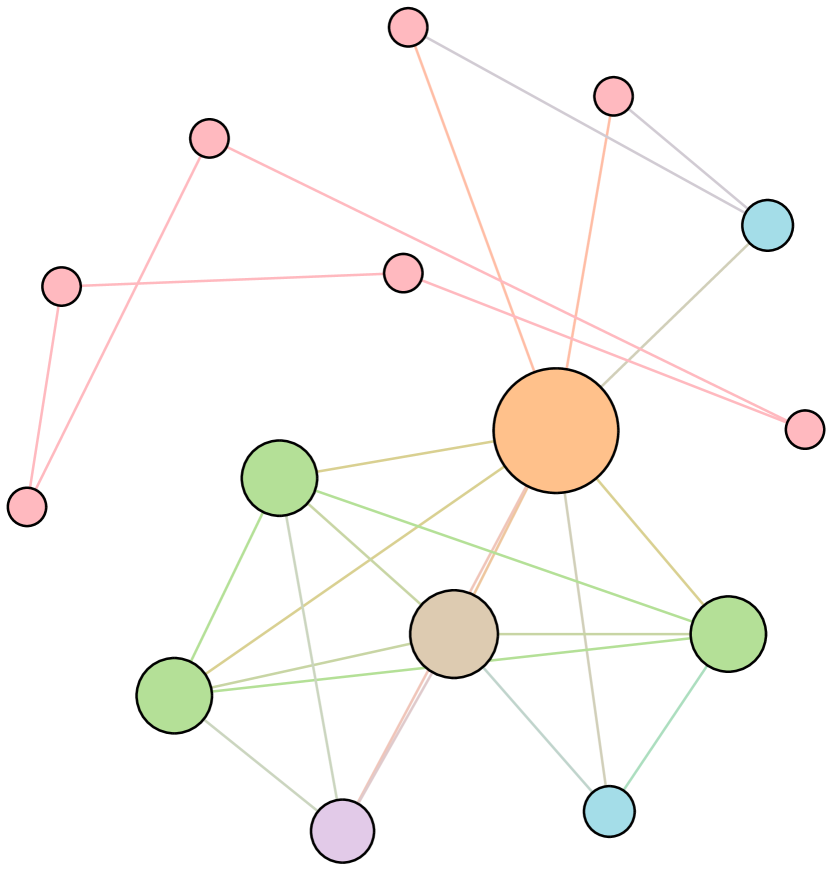

5.3 Ablation study of FireGNN

In FireGNN, the filtration order determines the total number of subgraphs involved in calculating the final node embedding, edge embedding, and graph embedding, where means that only the original graph is used and means that all subgraphs are used. We run a grid search on BA-15 to explore the effect of the filtration order on ResiNet. As shown in Figure 4(a), without FireGNN, ResiNet only achieves a minor gain of around 5%. The performance improved significantly with and ResiNet obtained the optimal resilience gain of about 35% on BA-15 when . We only report the performance when since BA-15 loses all connections when losing more than critical nodes.

FireGNN degenerates to existing GNNs when the filtration order is 0. Aside from the BA-15 dataset, the comparisons between ResiNet and other learning-based methods on other large datasets further validate the effectiveness of FireGNN. Table 1 shows that without FireGNN (replaced by DE-GNN or -GNN), it is generally challenging for ResiNet to find a positive gain and ResiNet cannot learn to select the correct edges with the incorrect learned edge embeddings.

Experimental results in Table 1 validate that FireGNN is critical for the network resilience task without rich features, by exploiting node information of each subgraph in a filtration process. Empirically, we found that ResiNet avoids the following problematic phenomena of existing GNNs during the training. As each rewiring only changes a graph by 4 edges, the graph embedding and the edge embedding may not vary significantly between two consecutive steps. Thus, existing GNNs fail to provide enough information for the RL agent to make correct edge selections. For example, we empirically found that with existing GNNs the RL agent can be stuck into an action loop, where after the rewiring of and to obtain and for at step , the agent may choose to reverse the rewiring (rewire and ) for at step , returning to and then trapped in an infinite loop between and .

5.4 Generalization

In this section, we conduct extensive experiments to show that ResiNet generalizes to unseen graphs, different utility and resilience metrics.

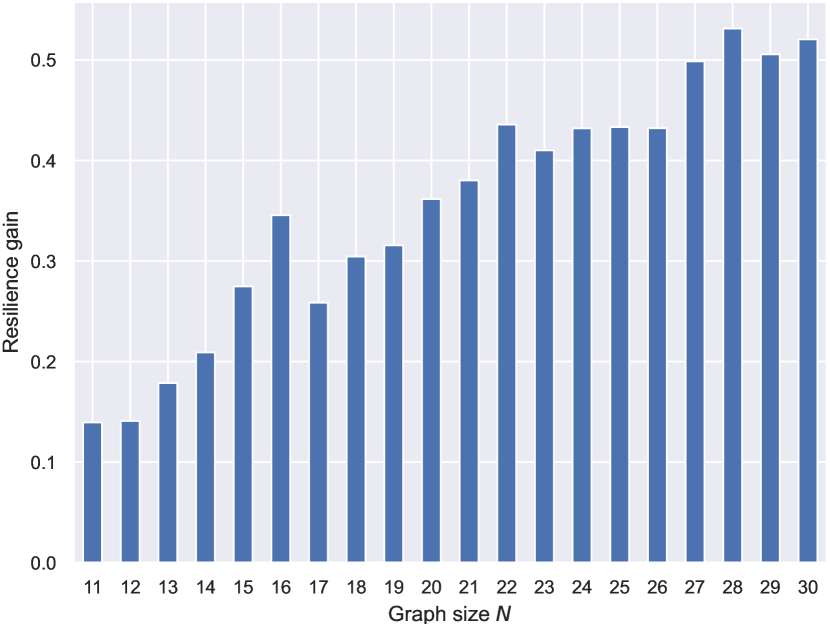

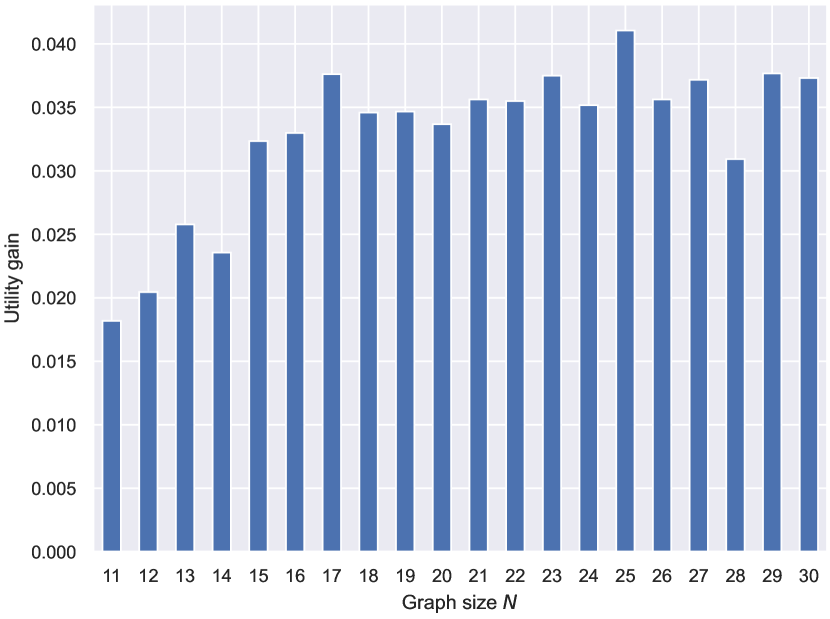

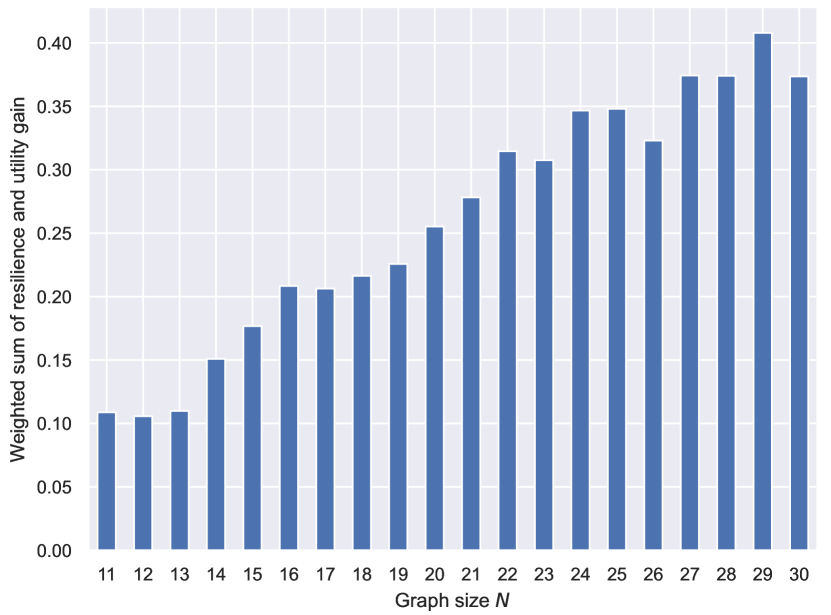

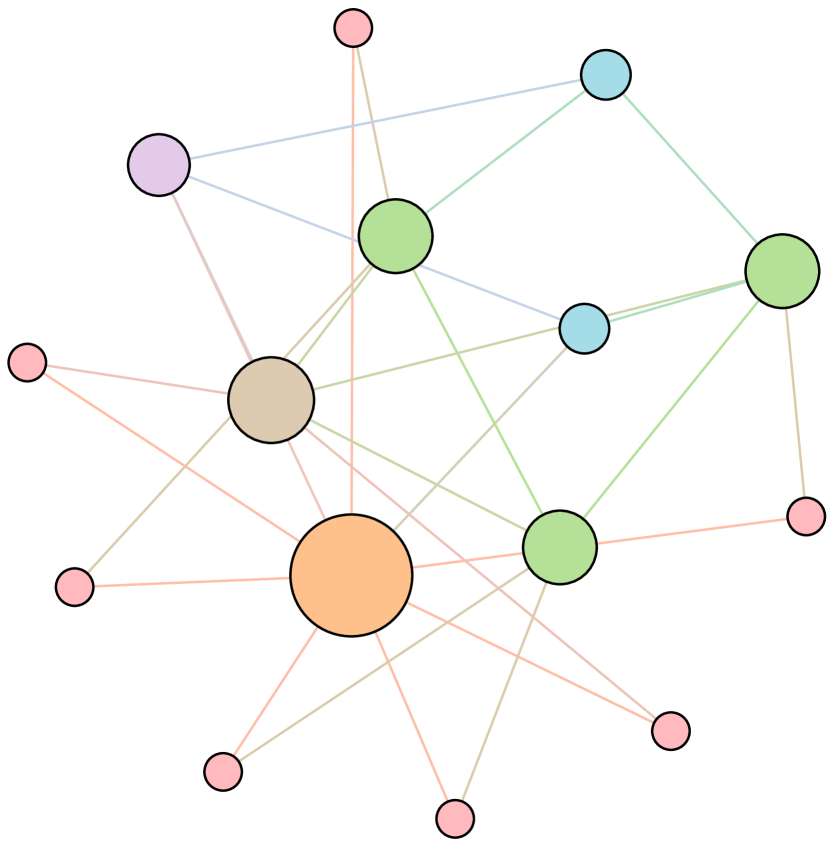

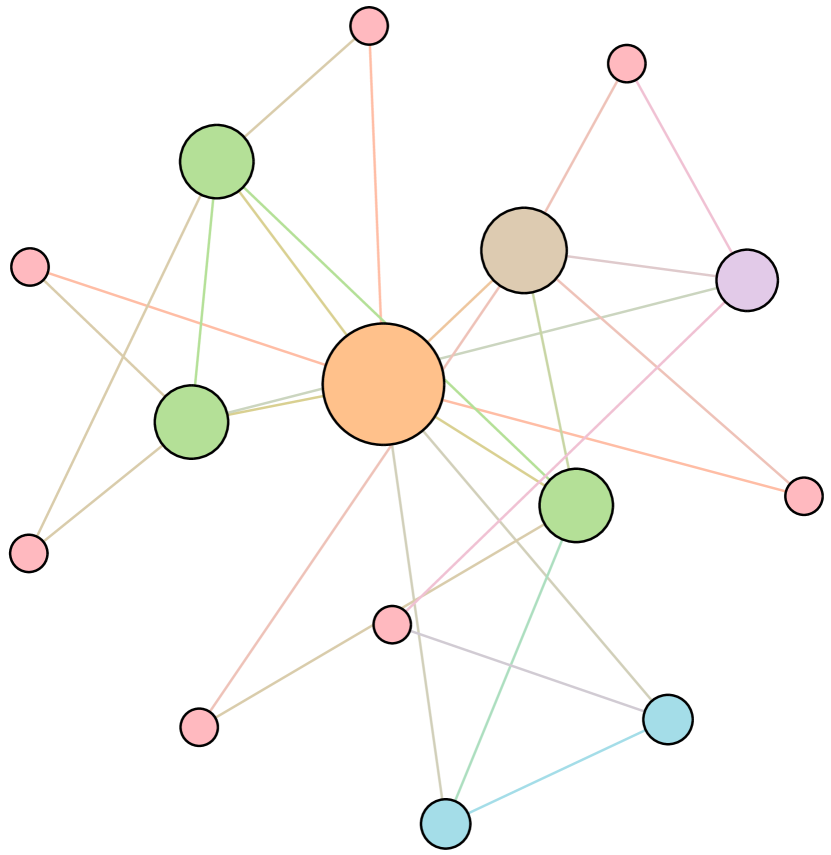

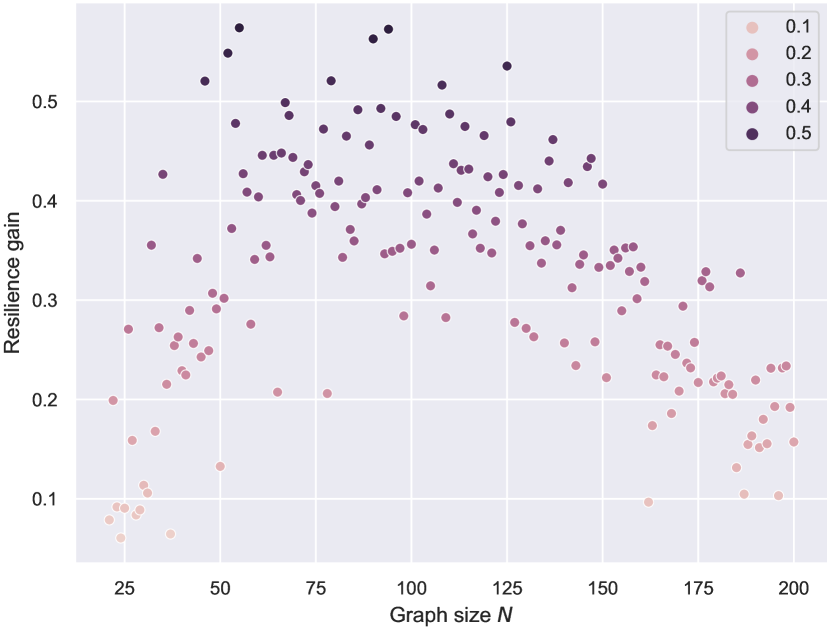

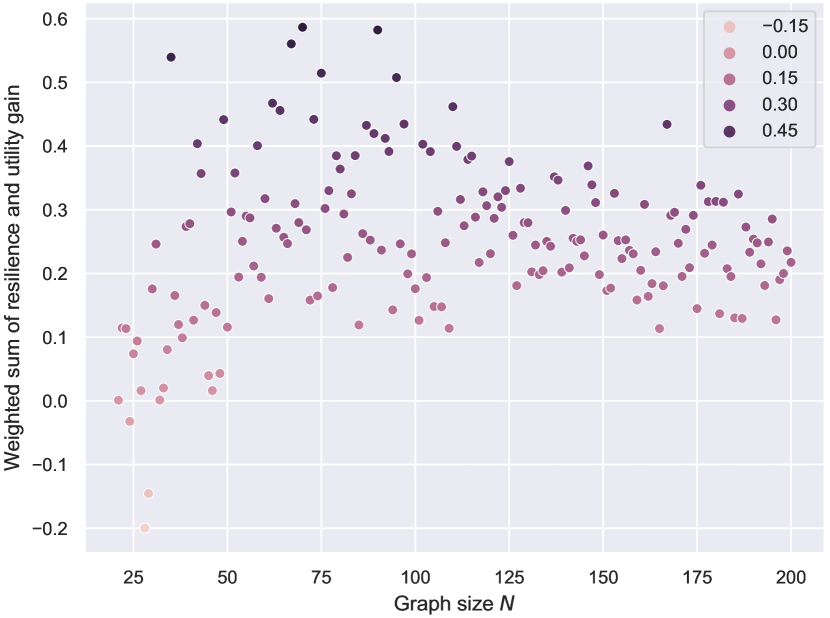

To demonstrate the inductivity of ResiNet, we first train ResiNet on two different datasets with the data setting listed in Table 2, and then evaluate its performance on an individual test dataset. The test dataset is not observed to ResiNet during the training process and fine-tuning is not allowed. We report the averaged resilience gain for the graphs of the same size for each dataset. The performance of ResiNet on BA-10-30 is shown in Figure 5 and the results of other datasets are deferred to Figure 7 in Appendix E. Figure 5 shows a nearly linear improvement of resilience with the increase of graph size, which is also consistent with the results in the transductive setting that larger graphs usually have a larger room to improve.

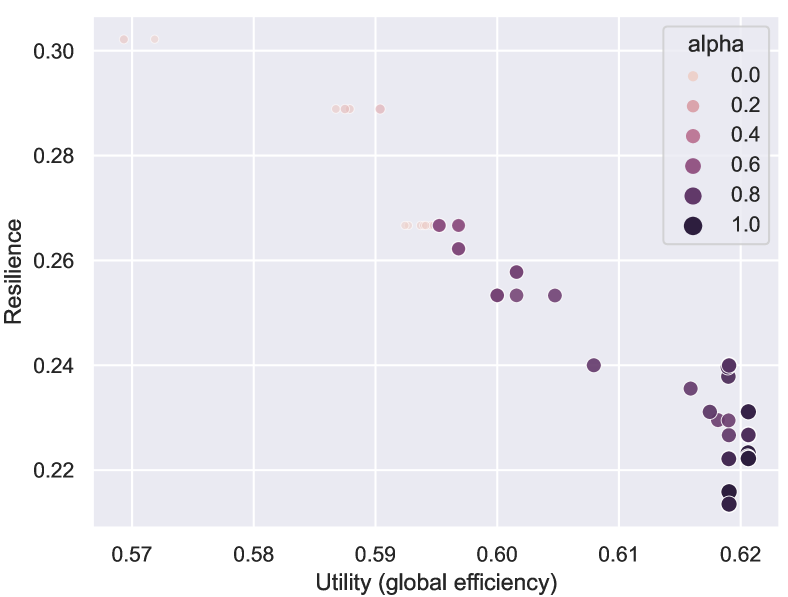

To demonstrate that ResiNet can learn from networks to accommodate different utility and resilience metrics, we conduct experiments based on the BA-15 using multiple resilience and utility metrics. The Pareto points shown in Figure 4(b) denote the optimum under different objectives on BA-15, implying that ResiNet can obtain the approximate Pareto frontier. Surprisingly, the initial gain of resilience (from around 0.21 to around 0.24) is obtained without loss of the utility, which incentivizes almost every network to conduct such optimization to some extent when feasible. More results are included in Appendix E.1 and the optimized network structures are visualized in Figure 6 and Figure 8.

6 Conclusion

In this work, we propose a general learning-based framework, ResiNet, for the discovery of resilient network topologies with minimal changes to the graph structure. ResiNet is the first inductive framework that formulates the task of boosting network resilience as an MDP of successive edge rewiring operations. Our technical innovation, FireGNN, as the graph feature extractor in ResiNet, is motivated by persistent homology. FireGNN alleviates the insufficiency of current GNNs (including GNNs more powerful than 1-WL test) on modeling graphs without rich features. FireGNN can learn meaningful representations on the resilience task to provide sufficient gradients for training the RL agent while current GNNs fail. Our framework is practically feasible as it preserves the utility of the networks when boosting resilience. Both ResiNet and FireGNN are potentially general enough to be applied to solve various graph problems without rich features.

Limitation. Similar to existing baselines, the exact objective oracle in ResiNet is time-consuming for evaluation on giant graphs. Future work should investigate how to combine FireGNN with techniques for handling out-of-distribution data to learn a reliable neural-version oracle for fast evaluation.

Negative social impact. The authors do not foresee the negative social impact of this work.

References

- [1] Christian M Schneider, André A Moreira, José S Andrade, Shlomo Havlin, and Hans J Herrmann. Mitigation of malicious attacks on networks. Proceedings of the National Academy of Sciences, 108(10):3838--3841, 2011.

- [2] Hau Chan and Leman Akoglu. Optimizing network robustness by edge rewiring: a general framework. Data Mining and Knowledge Discovery, 30(5):1395--1425, 2016.

- [3] Lei Rong and Jing Liu. A heuristic algorithm for enhancing the robustness of scale-free networks based on edge classification. Physica A: Statistical Mechanics and its Applications, 503:503--515, 2018.

- [4] Yao Ma, Suhang Wang, Tyler Derr, Lingfei Wu, and Jiliang Tang. Graph adversarial attack via rewiring. In KDD, pages 1161--1169. ACM, 2021.

- [5] Daniel A Jaume, Adrián Pastine, and Victor Nicolas Schvöllner. 2-switch: transition and stability on graphs and forests. arXiv preprint arXiv:2004.11164, 2020.

- [6] Mingxing Zhou and Jing Liu. A memetic algorithm for enhancing the robustness of scale-free networks against malicious attacks. Physica A: Statistical Mechanics and its Applications, 410:131--143, 2014.

- [7] A Yasin Yazıcıoğlu, Magnus Egerstedt, and Jeff S Shamma. Formation of robust multi-agent networks through self-organizing random regular graphs. IEEE Transactions on Network Science and Engineering, 2(4):139--151, 2015.

- [8] Damon Mosk-Aoyama. Maximum algebraic connectivity augmentation is NP-hard. Operations Research Letters, 36(6):677--679, 2008.

- [9] Zhuwen Li, Qifeng Chen, and Vladlen Koltun. Combinatorial optimization with graph convolutional networks and guided tree search. In Advances in Neural Information Processing Systems, 2018.

- [10] Chaitanya K. Joshi, Thomas Laurent, and Xavier Bresson. An efficient graph convolutional network technique for the travelling salesman problem. CoRR, abs/1906.01227, 2019.

- [11] Zhang-Hua Fu, Kai-Bin Qiu, and Hongyuan Zha. Generalize a small pre-trained model to arbitrarily large TSP instances. In Proceedings of the AAAI Conference on Artificial Intelligence, 2020.

- [12] Elias B. Khalil, Hanjun Dai, Yuyu Zhang, Bistra Dilkina, and Le Song. Learning combinatorial optimization algorithms over graphs. In Advances in Neural Information Processing Systems, 2017.

- [13] MohammadReza Nazari, Afshin Oroojlooy, Lawrence V. Snyder, and Martin Takác. Reinforcement learning for solving the vehicle routing problem. In Advances in Neural Information Processing Systems, 2018.

- [14] Bo Peng, Jiahai Wang, and Zizhen Zhang. A deep reinforcement learning algorithm using dynamic attention model for vehicle routing problems. CoRR, abs/2002.03282, 2020.

- [15] James Jian Qiao Yu, Wen Yu, and Jiatao Gu. Online vehicle routing with neural combinatorial optimization and deep reinforcement learning. IEEE Transactions on Intelligent Transportation Systems, 20(10):3806--3817, 2019.

- [16] Matteo Boffa, Zied Ben-Houidi, Jonatan Krolikowski, and Dario Rossi. Neural combinatorial optimization beyond the TSP: existing architectures under-represent graph structure. In Proceedings of the AAAI Conference on Artificial Intelligence, 2022.

- [17] Pan Li, Yanbang Wang, Hongwei Wang, and Jure Leskovec. Distance encoding: Design provably more powerful neural networks for graph representation learning. In Advances in Neural Information Processing Systems, 2020.

- [18] Herbert Edelsbrunner and John Harer. Persistent homology-A survey. Contemporary Mathematics, 453:257--282, 2008.

- [19] Mehmet E Aktas, Esra Akbas, and Ahmed El Fatmaoui. Persistence homology of networks: methods and applications. Applied Network Science, 4(1):1--28, 2019.

- [20] Christoph Hofer, Florian Graf, Bastian Rieck, Marc Niethammer, and Roland Kwitt. Graph filtration learning. In International Conference on Machine Learning, 2020.

- [21] Changjun Fan, Li Zeng, Yizhou Sun, and Yang-Yu Liu. Finding key players in complex networks through deep reinforcement learning. Nature Machine Intelligence, pages 1--8, 2020.

- [22] Kangfei Zhao, Zhiwei Zhang, Yu Rong, Jeffrey Xu Yu, and Junzhou Huang. Finding critical users in social communities via graph convolutions. IEEE Transactions on Knowledge and Data Engineering, pages 1--1, 2021.

- [23] Fan Zhang, Ying Zhang, Lu Qin, Wenjie Zhang, and Xuemin Lin. Finding critical users for social network engagement: The collapsed k-core problem. In Proceedings of the AAAI Conference on Artificial Intelligence, 2017.

- [24] Petter Holme, Beom Jun Kim, Chang No Yoon, and Seung Kee Han. Attack vulnerability of complex networks. Physical Review E, 65(5):056109, 2002.

- [25] Swami Iyer, Timothy Killingback, Bala Sundaram, and Zhen Wang. Attack robustness and centrality of complex networks. PloS One, 8(4):e59613, 2013.

- [26] Marco Grassia, Manlio De Domenico, and Giuseppe Mangioni. Machine learning dismantling and early-warning signals of disintegration in complex systems. Nature Communications, 12(1):1--10, 2021.

- [27] Sourav Medya, Tianyi Ma, Arlei Silva, and Ambuj Singh. A game theoretic approach for k-core minimization. In Proceedings of the International Conference on Autonomous Agents and MultiAgent Systems, 2020.

- [28] Wenguo Li, Yong Li, Yi Tan, Yijia Cao, Chun Chen, Ye Cai, Kwang Y Lee, and Michael Pecht. Maximizing network resilience against malicious attacks. Scientific Reports, 9(1):1--9, 2019.

- [29] Vincenza Carchiolo, Marco Grassia, Alessandro Longheu, Michele Malgeri, and Giuseppe Mangioni. Network robustness improvement via long-range links. Computational Social Networks, 6(1):1--16, 2019.

- [30] Xiangrong Wang, Evangelos Pournaras, Robert E Kooij, and Piet Van Mieghem. Improving robustness of complex networks via the effective graph resistance. The European Physical Journal B, 87(9):1--12, 2014.

- [31] Pierre Buesser, Fabio Daolio, and Marco Tomassini. Optimizing the robustness of scale-free networks with simulated annealing. In International Conference on Adaptive and Natural Computing Algorithms, pages 167--176. Springer, 2011.

- [32] Thomas N Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. In International Conference on Learning Representations, 2017.

- [33] Will Hamilton, Zhitao Ying, and Jure Leskovec. Inductive representation learning on large graphs. In Advances in Neural Information Processing Systems, 2017.

- [34] Petar Velickovic, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Liò, and Yoshua Bengio. Graph attention networks. In International Conference on Learning Representations, 2018.

- [35] Guohao Li, Chenxin Xiong, Ali Thabet, and Bernard Ghanem. DeeperGCN: All you need to train deeper GCNs. arXiv preprint arXiv:2006.07739, 2020.

- [36] Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. How powerful are graph neural networks? In International Conference on Learning Representations, 2019.

- [37] Cristian Bodnar, Fabrizio Frasca, Nina Otter, Yuguang Wang, Pietro Lio, Guido F Montufar, and Michael Bronstein. Weisfeiler and lehman go cellular: CW networks. In Advances in Neural Information Processing Systems, 2021.

- [38] Benjamin Hudson, Qingbiao Li, Matthew Malencia, and Amanda Prorok. Graph neural network guided local search for the traveling salesperson problem. In International Conference on Learning Representations, 2022.

- [39] Tianshu Yu, Runzhong Wang, Junchi Yan, and Baoxin Li. Deep latent graph matching. In International Conference on Machine Learning, 2021.

- [40] Hanjun Dai, Hui Li, Tian Tian, Xin Huang, Lin Wang, Jun Zhu, and Le Song. Adversarial attack on graph structured data. In International Conference on Machine Learning, 2018.

- [41] Christopher Morris, Martin Ritzert, Matthias Fey, William L Hamilton, Jan Eric Lenssen, Gaurav Rattan, and Martin Grohe. Weisfeiler and Leman go neural: Higher-order graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, 2019.

- [42] Jiaxuan You, Jonathan Gomes-Selman, Rex Ying, and Jure Leskovec. Identity-aware graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, 2021.

- [43] Jiaxuan You, Bowen Liu, Zhitao Ying, Vijay S. Pande, and Jure Leskovec. Graph convolutional policy network for goal-directed molecular graph generation. In Advances in Neural Information Processing Systems, 2018.

- [44] Rakshit Trivedi, Jiachen Yang, and Hongyuan Zha. GraphOpt: Learning optimization models of graph formation. In International Conference on Machine Learning, pages 9603--9613. PMLR, 2020.

- [45] Shengyi Huang and Santiago Ontañón. A closer look at invalid action masking in policy gradient algorithms. CoRR, abs/2006.14171, 2020.

- [46] Kazuyoshi Wakuta. Vector-valued markov decision processes and the systems of linear inequalities. Stochastic Processes and Their Applications, 56(1):159--169, 1995.

- [47] Oriol Vinyals, Meire Fortunato, and Navdeep Jaitly. Pointer networks. In Advances in Neural Information Processing Systems, 2015.

- [48] Qiong Zhou and Janusz W Bialek. Approximate model of european interconnected system as a benchmark system to study effects of cross-border trades. IEEE Transactions on Power Systems, 20(2):782--788, 2005.

- [49] Jure Leskovec, Jon Kleinberg, and Christos Faloutsos. Graph evolution: Densification and shrinking diameters. ACM transactions on Knowledge Discovery from Data (TKDD), 1(1):2--es, 2007.

- [50] Matei Ripeanu, Ian Foster, and Adriana Iamnitchi. Mapping the gnutella network: Properties of large-scale peer-to-peer systems and implications for system design. arXiv preprint cs/0209028, 2002.

- [51] Vito Latora and Massimo Marchiori. Economic small-world behavior in weighted networks. The European Physical Journal B-Condensed Matter and Complex Systems, 32(2):249--263, 2003.

- [52] Stefano Boccaletti, Vito Latora, Yamir Moreno, Martin Chavez, and D-U Hwang. Complex networks: Structure and dynamics. Physics Reports, 424(4-5):175--308, 2006.

- [53] Weihua Hu, Bowen Liu, Joseph Gomes, Marinka Zitnik, Percy Liang, Vijay S. Pande, and Jure Leskovec. Strategies for pre-training graph neural networks. In International Conference on Learning Representations, 2020.

- [54] Jiezhong Qiu, Qibin Chen, Yuxiao Dong, Jing Zhang, Hongxia Yang, Ming Ding, Kuansan Wang, and Jie Tang. GCC: graph contrastive coding for graph neural network pre-training. In KDD ’20: The 26th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2020.

- [55] Petar Velickovic, William Fedus, William L. Hamilton, Pietro Liò, Yoshua Bengio, and R. Devon Hjelm. Deep graph infomax. In International Conference on Learning Representations, 2019.

- [56] Yuning You, Tianlong Chen, Yongduo Sui, Ting Chen, Zhangyang Wang, and Yang Shen. Graph contrastive learning with augmentations. In Advances in Neural Information Processing Systems, 2020.

- [57] Kaveh Hassani and Amir Hosein Khasahmadi. Contrastive multi-view representation learning on graphs. In Proceedings of the International Conference on Machine Learning, 2020.

- [58] Wei Jin, Yao Ma, Xiaorui Liu, Xianfeng Tang, Suhang Wang, and Jiliang Tang. Graph structure learning for robust graph neural networks. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2020.

- [59] Xiaojie Guo, Lingfei Wu, and Liang Zhao. Deep graph translation. CoRR, abs/1805.09980, 2018.

- [60] Emmanuel Bengio, Moksh Jain, Maksym Korablyov, Doina Precup, and Yoshua Bengio. Flow network based generative models for non-iterative diverse candidate generation. Advances in Neural Information Processing Systems, 34, 2021.

- [61] Vito Latora and Massimo Marchiori. Efficient behavior of small-world networks. Physical Review Letters, 87(19):198701, 2001.

- [62] Réka Albert and Albert-László Barabási. Statistical mechanics of complex networks. Reviews of Modern Physics, 74(1):47, 2002.

- [63] Béla Bollobás and Oliver Riordan. Robustness and vulnerability of scale-free random graphs. Internet Mathematics, 1(1):1--35, 2004.

- [64] Jure Leskovec and Christos Faloutsos. Sampling from large graphs. In Proceedings of the 12th ACM SIGKDD International conference on Knowledge Discovery and Data Mining, 2006.

- [65] Günter Klambauer, Thomas Unterthiner, Andreas Mayr, and Sepp Hochreiter. Self-normalizing neural networks. In Advances in Neural Information Processing Systems, 2017.

- [66] Tianle Cai, Shengjie Luo, Keyulu Xu, Di He, Tie-Yan Liu, and Liwei Wang. GraphNorm: A principled approach to accelerating graph neural network training. In Proceedings of the International Conference on Machine Learning, 2021.

- [67] Keyulu Xu, Chengtao Li, Yonglong Tian, Tomohiro Sonobe, Ken-ichi Kawarabayashi, and Stefanie Jegelka. Representation learning on graphs with jumping knowledge networks. In International Conference on Machine Learning, 2018.

- [68] John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. CoRR, abs/1707.06347, 2017.

- [69] Marcin Andrychowicz, Anton Raichuk, Piotr Stańczyk, Manu Orsini, Sertan Girgin, Raphaël Marinier, Leonard Hussenot, Matthieu Geist, Olivier Pietquin, Marcin Michalski, Sylvain Gelly, and Olivier Bachem. What matters for on-policy deep actor-critic methods? A large-scale study. In International Conference on Learning Representations, 2021.

- [70] Deheng Ye, Zhao Liu, Mingfei Sun, Bei Shi, Peilin Zhao, Hao Wu, Hongsheng Yu, Shaojie Yang, Xipeng Wu, Qingwei Guo, et al. Mastering complex control in moba games with deep reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, 2020.

- [71] P. Diederik Kingma and Lei Jimmy Ba. Adam: A method for stochastic optimization. In International Conference on Learning Representations, 2015.

- [72] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in Neural Information Processing Systems, 2017.

- [73] Irwan Bello, Hieu Pham, Quoc V Le, Mohammad Norouzi, and Samy Bengio. Neural combinatorial optimization with reinforcement learning. In International Conference on Learning Representations, 2016.

- [74] Chaitanya K Joshi, Quentin Cappart, Louis-Martin Rousseau, and Thomas Laurent. Learning the travelling salesperson problem requires rethinking generalization. Constraints, pages 1--29, 2022.

- [75] Wouter Kool, Herke Van Hoof, and Max Welling. Attention, learn to solve routing problems! In International Conference on Learning Representations, 2018.

- [76] Maximilian Böther, Otto Kißig, Martin Taraz, Sarel Cohen, Karen Seidel, and Tobias Friedrich. What’s wrong with deep learning in tree search for combinatorial optimization. In International Conference on Learning Representations, 2022.

Appendix

Appendix A Extended related work

Network resilience and utility.

Network utility refers to the system’s quality to provide a specific service, for example, transmitting electricity in power networks and transmitting packages in routing networks. A popular metric for network utility is the network efficiency [51, 52]. Network resilience measures the ability to prevent utility loss under failures and attacks. In many previous work, despite that network resilience could be improved, the utility may dramatically drop at the same time [28, 29, 30, 1, 2, 31]. This contradicts the idea behind improving network resilience and will be infeasible in real-world applications. Our goal is to enhance network resilience with moderate loss of network utility by network structure manipulations.

Multi-views graph augmentation for GNNs.

Multi-views graph augmentation is one efficient way to improve the expressive power of GNNs or combine domain knowledge, which is adapted based on the task’s prior [53]. For example, GCC generates multiple subgraphs from the same ego network [54]. DGI maximizes the mutual information between global and local information [55]. GCA adaptively incorporates various priors for topological and semantic aspects of the graph [56]. [57] contrasts representations from first-order neighbors and a graph diffusion. DeGNN[58] was proposed as an automatic graph decomposition algorithm to improve the performance of deeper GNNs. These techniques rely on the existence of rich graph feature and the resultant GNNs cannot work well on graphs without rich features. In the resilience task, only the graph topological structure is available. Motivated by the calculation process of persistent homology [18], we apply the filtration process to enhance the expressive power of GNNs for handling graphs without rich features.

Deep graph generation.

Deep graph generation models learn the distribution of given graphs and generate more novel graphs. Some work use the encoder-decoder framework by learning latent representation of the input graph through the encoder and then generating the target graph through the decoder. For example, GCPN [43] incorporates chemistry domain rules on molecular graph generation. GT-GAN [59] proposes a GAN-based model on malware cyber-network synthesis. GraphOpt [44] learns an implicit model to discover an underlying optimization mechanism of the graph generation using inverse reinforcement learning. GFlowNet learns a stochastic policy for generating molecules with the probability proportional to a given reward based on flow networks and local flow-matching conditions [60]. Graph structure learning aims to learn an optimized graph structure and corresponding graph representations [58]. However, constrained version of graph generation is still under development and none of existing methods can generate desired graphs with the exact node degree preserving constraint, which is required by the resilience task.

Appendix B Definitions of different objective functions

In this section, we present resilience definitions and utility definitions used in our experiments.

B.1 Resilience definitions

Three kinds of resilience metrics are considered:

-

•

The graph connectivity-based measurement is defined as [1]

where is the fraction of nodes in the largest connected remaining graph after removing nodes from graph according to certain attack strategy. The range of possible values of is , where these two extreme values correspond to a star network and a fully connected network, respectively.

-

•

The spectral radius () denotes the largest eigenvalue of an adjacency matrix.

-

•

The algebraic connectivity () represents the second smallest eigenvalue of the Laplacian matrix of .

B.2 Utility definitions

In this paper, the global and local communication efficiency are used as two measurements of the network utility, which are widely applied across diverse applications of network science, such as transportation and communication networks [51, 52].

The average efficiency of a network is defined as inversely proportional to the average over pairwise distances [61] as

where denotes the total nodes in a network and is the length of the shortest path between a node and another node .

We can calculate the global and local efficiency given the average efficiency.

- •

-

•

The local efficiency of a network measures a local average of pairwise communication efficiencies and is defined as [61]

where is the local subgraph including only of a node ’s one-hop neighbors, but not the node itself. The range of is [0, 1].

Appendix C Implementation details of ResiNet

This section provides the implementation details of ResiNet, including dataset, network structure training strategies, and node feature construction.

C.1 Dataset

We first present the data generation strategies. Table 2 summarizes the statistics of each dataset. Synthetic datasets are generated using the Barabasi-Albert (BA) model (known as scale-free graphs) [62], with the graph size varying from =10 to =1000. During the data generation process, each node is connected to two existing nodes for graphs with no more than 500 nodes, and each node is connected to one existing node for graphs with near 1000 nodes. BA graphs are chosen since they are vulnerable to malicious attacks and are commonly used to test network resilience optimization algorithms[63]. We test the performance of ResiNet on both transductive and inductive settings.

-

•

Transductive setting. The algorithm is trained and tested on the same network.

-

–

Randomly generated synthetic BA networks, denoted by BA-, are adopted to test the performance of ResiNet on networks of various sizes, where is the graph size.

-

–

The Gnutella peer-to-peer network file sharing network from August 2002 [49, 50] and the real EU power network [48] are used to validate the performance of ResiNet on real networks. The random walk sampling strategy is used to derive a representative sample subgraph with hundreds of nodes from the Gnutella peer-to-peer network [64].

-

–

-

•

Inductive setting. Two groups of synthetic BA networks denoted by BA-- are randomly generated to test ResiNet’s inductivity, where is the minimal graph size, and indicates the maximal graph size. We first randomly generate the fixed number of BA networks as the training data to train ResiNet and then evaluate ResiNet’s performance directly on the test dataset without any additional optimization.

| Dataset | Node | Edge | Action Space Size | Train/Test | Setting |

|---|---|---|---|---|---|

| BA-15 | 15 | 54 | 5832 | ✗ | Transductive |

| BA-50 | 50 | 192 | 73728 | ✗ | Transductive |

| BA-100 | 100 | 392 | 307328 | ✗ | Transductive |

| BA-500 | 500 | 996 | 1984032 | ✗ | Transductive |

| BA-1000 | 1000 | 999 | 1996002 | ✗ | Transductive |

| EU | 217 | 640 | 819200 | ✗ | Transductive |

| p2p-Gnutella05 | 400 | 814 | 1325192 | ✗ | Transductive |

| p2p-Gnutella09 | 300 | 740 | 1095200 | ✗ | Transductive |

| BA-10-30 () | 10-30 | 112 | 25088 | 1000/500 | Inductive |

| BA-20-200 () | 20-200 | 792 | 1254528 | 4500/360 | Inductive |

C.2 ResiNet setup

In this section, we provide detailed parameter setting and training strategies for ResiNet.

Our proposed FireGNN is used as the graph encoder in ResiNet, including a 5-layer defined GIN [36] as the backbone. The hidden dimensions for node embedding and graph embedding in each hidden layer are set to 64 and the SeLU activation function [65] is used after each message passing propagate. Graph normalization strategy is adopted to stabilize the training of GNN [66]. The jumping knowledge network [67] is used to aggregate node features from different layers of the GNN.

The overall policy is trained by using the highly tuned implementation of proximal policy optimization (PPO) algorithm [68]. Several critical strategies for stabilizing and accelerating the training of ResiNet are used, including advantage normalization [69], the dual-clip PPO (the dual clip parameter is set to 10) [70], and the usage of different optimizers for policy network and value network. Additionally, since the step-wise reward range is small (around 0.01), we scale the reward by a factor of 10 to aim the training of ResiNet. The policy head model and value function model use two separated FireGNN encoder networks with the same architecture. ResiNet is trained using two separate Adam optimizers [71] with batch size 256 and a linearly decayed learning rate of 0.0007 for the policy network and a linearly decayed learning rate of 0.0007 for the value network. The aggregation function of FireGNN is defined as an attention mechanism-based linear weighted combination.

Hardware: We run all experiments for ResiNet on the platform with two GEFORCE RTX 3090 GPU and one AMD 3990X CPU.

C.3 Node feature construction

The widely-used node degree feature cannot significantly benefit the network resilience optimization of a single graph due to the degree-preserving rewiring. Therefore, we construct node features for each input graph to aid the transductive learning and inductive learning, including

-

•

The distance encoding strategy [17]. Node degree feature is a part of it.

-

•

The 8-dimensional position embedding originating from the Transformer [72] as the measurement of the vulnerability of each node under attack. If the attack order is available, we can directly encode it into the position embedding. If the attack order is unknown, node degree, node betweenness, and other node priority metrics can be used for approximating the node importance in practice. In our experiments, we used the adaptive node degree for the position embedding.

C.4 Baseline setup

All baselines share the same action space with ResiNet and use the same action masking strategy to block invalid actions as ResiNet does. The maximal objective evaluation is consistent for all algorithms. Other settings of baselines are consistent with the default values in their paper. The early-stopping strategy is used for baselines, which means that the search process terminates if no improvement is obtained in successive 1000 objective function calling trials. For

Appendix D Deep analysis of why regular GNNs fail in the resilience task

It is well-known that GNNs generally work well for graphs with rich features. Unluckily, the graph network in the resilience task has no node/edge/graph feature, with only the topological structure available. No rich feature means that the output of the GNNs is not distinguishable, and then it is difficult for the RL agent to distinguish different vertices/edges, causing large randomness in the output of the policy. This may cause the rewiring process to alternate between two graphs, forming an infinite loop. And we suspect that this infinite loop failure may explain the poor empirical performance of optimizing network resilience by selecting edges using existing GNNs and reinforcement learning (RL). The infinite loop failure is presented as follows.

Consider the graph with nodes and containing two edges and . The agent selects and for rewiring, leading to with news edges and . A frequent empirical failure of regular GNNs for the resilience task is the infinite loop phenomenon. The agent would select and at step , returning back to and forming a cycled loop between and . Formally, the infinite loop is formulated as

where SIM is a similarity metric, and are embeddings of node and graph at step , and (,) is one edge.

Table 3 compares and summarizes different graph related tasks’ characteristics. We can see that the resilience task is more challenging from many aspects. No prior rule like action masking or negative penalty can be used to avoid selecting visited nodes as in TSP. For the resilience task, all previously visited edges are also possibly valid to be selected again, resulting in insufficient training signals.

The desired GNN model should not depend on rules like action masking to distinguish edge and graph representations for graphs with little node features. Our proposed FireGNN fulfills these requirements to obtain proper training signals. FireGNN has a distinct expressive power and learns to create more meaningful and distinguishable features for each edge. FireGNN is not a simple aggregation of higher-order information of a static graph. It was inspired by homology filtration and the multi-view graph augmentation. Persistence homology motivates us to aggregate more distinct node features by observing how the graph evolves towards being empty, leading to more distinct and meaningful features for each node/edge, thus avoiding the infinite loop. Extensive experimental results in Table 1 validate the necessity and effectiveness of FireGNN. Existing GNNs perform worse while FireGNN performs well.

Approach Task RL component Problem Training & Inference State Action Reward Complexity Size Extrapolate Encoder Action Masking Scalability S2V-DQN [12] MVC node level add node to subset -1 ✗ S2V ✓ 500 Max-Cut node level add node to subset change in cut weight ✗ S2V ✓ 300 TSP node level add node to tour change in tour cost ✗ S2V ✓ 300 Local search [38] TSP edge level relocate node in tour global regret ✓ GNN ✓ 100 RNN-RL [73] TSP node level add node to tour change in tour cost ✗ RNN ✓ 100 GNN-RL [74] TSP node level add node to tour change in tour cost ✓ GNN ✓ 50 Attention-RL [75] TSP node level add node to tour change in tour cost ✗ Attention ✓ 100 VRP node level add node to tour change in tour cost ✗ Attention ✓ 100 Local search [76] MIS node level add node to subset change in IS size ✓ GNN ✓ 800 ResiNet Resilience graph level edge rewiring change in resilience and utility ✓ FireGNN ✗ 1000

Appendix E Extended experimental results

In this section, we present additional experimental results.

This section provides additional experimental results, including optimizing different resilience and utility metrics and validating ResiNet’s inductivity on larger datasets. Finally, we describe future work.

E.1 Learning to balance more utility and resilience metrics

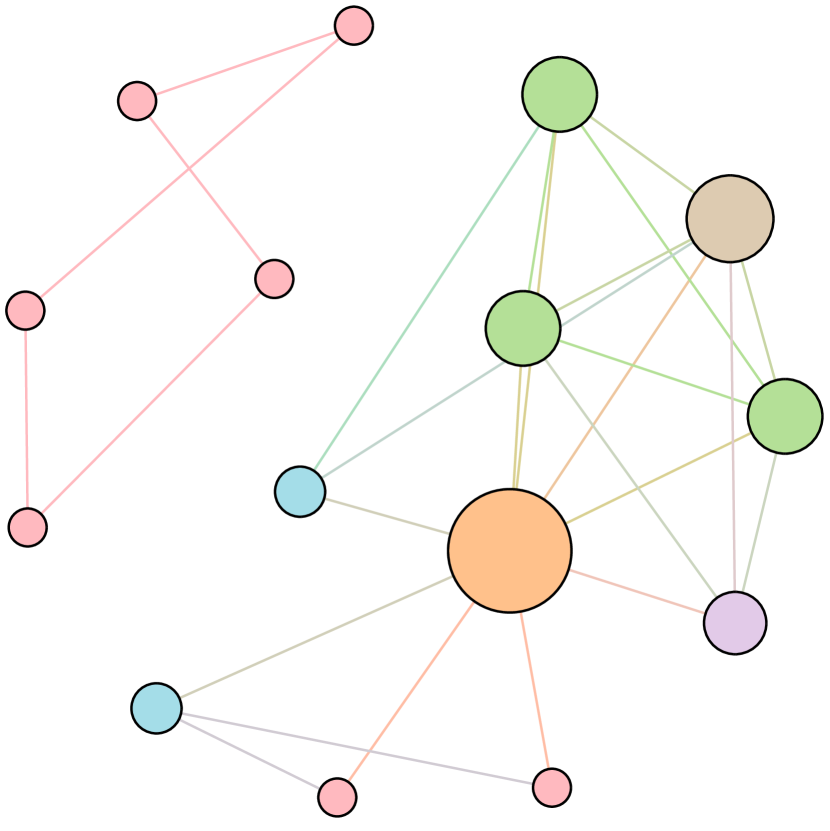

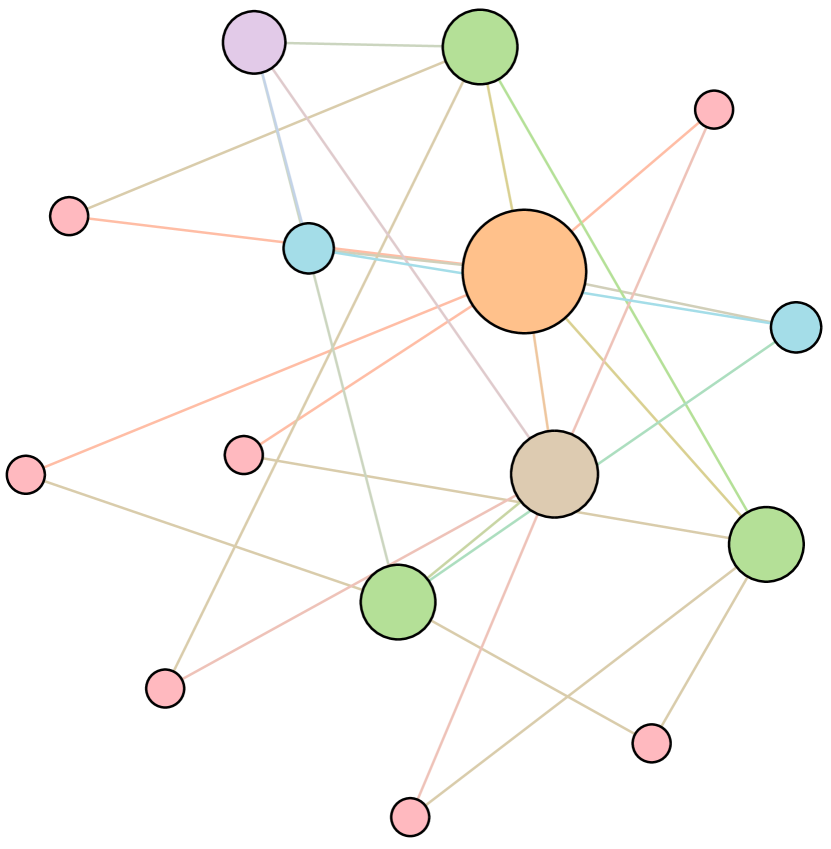

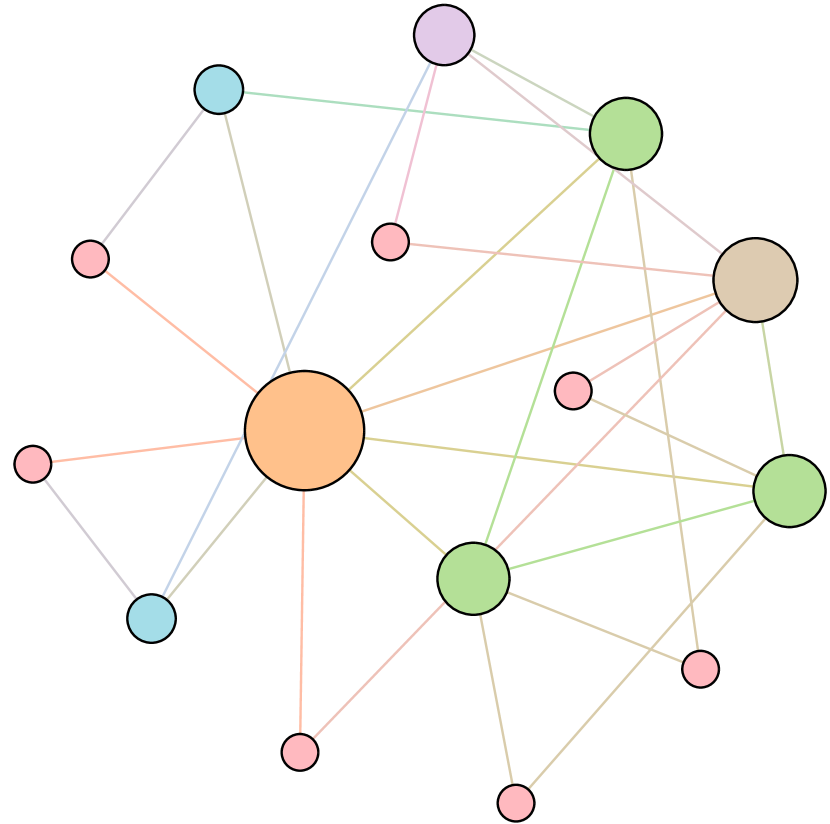

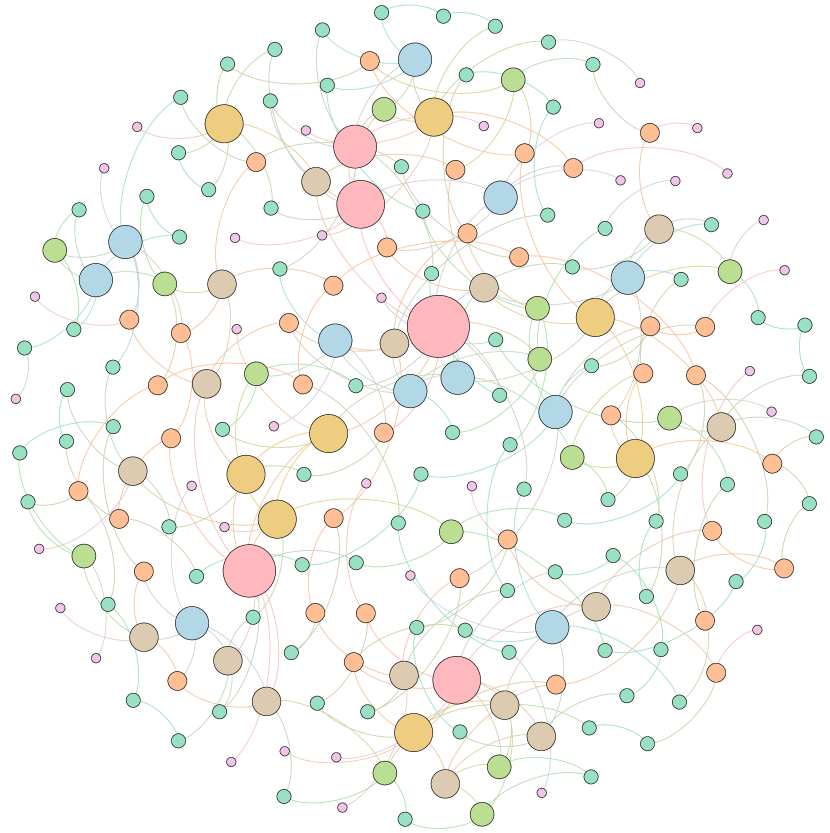

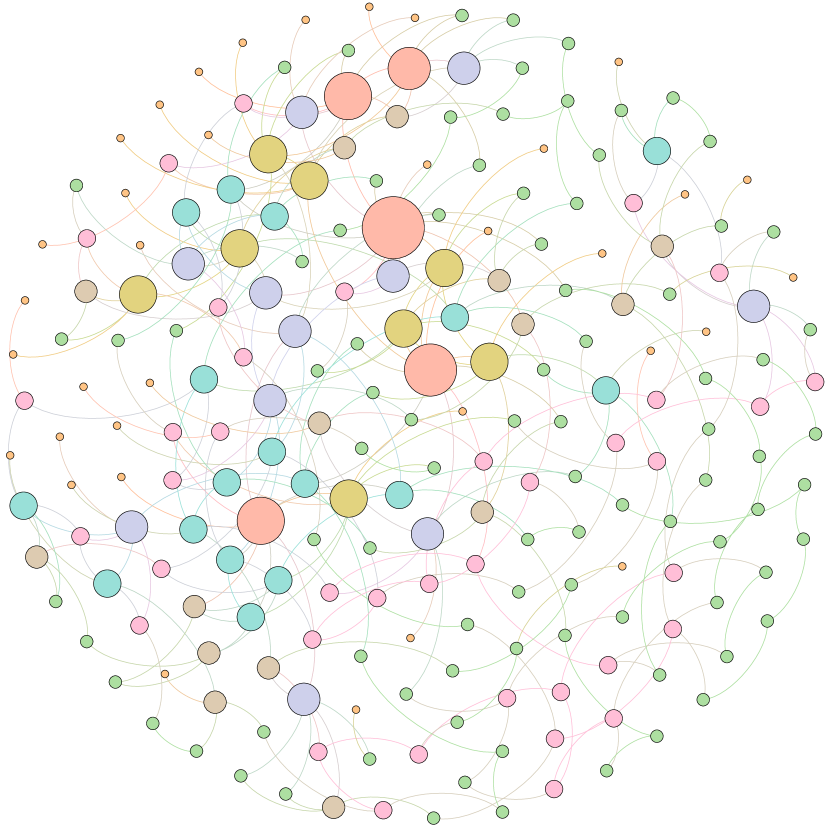

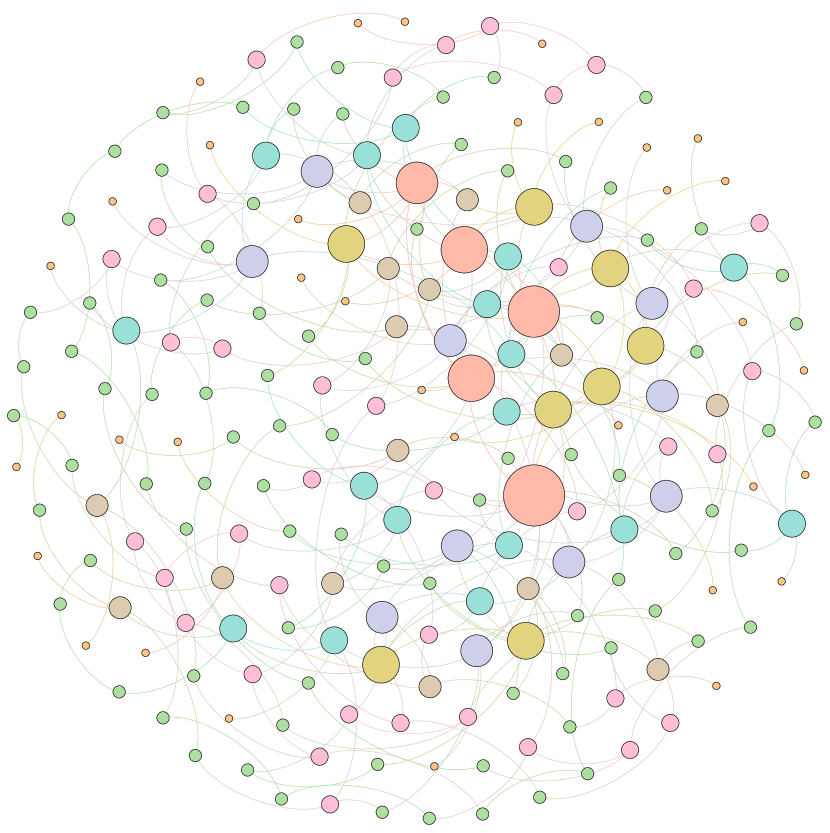

As shown in Figure 6, we conduct extensive experiments on the BA-15 network to demonstrate that ResiNet can learn to optimize graphs with different resilience and utility metrics and to defend against other types of attacks besides the node degree-based attack, such as the node betweenness-based attack.

Table 4 records the improvements in percentage of ResiNet for varying objectives on the BA-15 dataset. As visualized in Figure 6, ResiNet is not limited to defend against the node degree-based attack (Figure 6 (b)-(j)) and also learns to defend against the betweenness-based attack (Figure 6 (k)-(s)). Total three resilience metrics are used, with denoting the graph connectivity-based resilience metric, being the spectral radius and representing the algebraic connectivity. Total two utility metrics are adopted, including the global efficiency and the local efficiency . Not surprisingly, the optimized network with an improvement of about 3.6% for defending the betweenness-based attack also has a higher resilience (around 7.8%) against the node-degree attack. This may be explained as the similarity between node degree and betweenness for a small network.

| Objective | Gain (%) | Objective | Gain(%) |

|---|---|---|---|

| 35.3 | 14.6 | ||

| 15.3 | 15.3 | ||

| 48.2 | 43.2 | ||

| 14.2 | + | 13.1 | |

| + | 14.6 | + | 15.0 |

| + | 34.0 | + | 31.3 |

| + | 24.4 | + | 39.4 |

| + | 17.3 | + | 21.2 |

| + | 9.4 | + | 15.1 |

E.2 Inductivity on larger datasets

Even with limited computational resources, armed with the autoregressive action space and the power of FireGNN, ResiNet can be trained fully end-to-end on graphs with thousands of nodes using RL. We demonstrate the inductivity of ResiNet on graphs of different sizes by training ResiNet on the BA-20-200 dataset, which consists of graphs with the size ranging from 20 to 200, and then report its performance on directly guiding the edges selections on unseen test graphs. The filtration order is set to 1 for the computational limitation. As shown in Figure 7, we can see that ResiNet has the best performance for . The degrading performance with the graph size may be explained by the fact that larger graphs require a larger filtration order for ResiNet to work well. A more stable performance improvement of ResiNet is observed with the increment of graph size when trained to optimize network resilience and utility simultaneously, and ResiNet possibly finds a strategy to balance these two metrics.

E.3 Inspection of optimized networks

Moreover, to provide a deeper inspection into the optimized network structure, we take the EU power network as an example to visualize its network structure and the optimized networks given by ResiNet with different objectives. Compared to the original EU network, Figure 8 (b) is the network structure obtained by only optimizing the graph connectivity-based resilience. We can observe a more crowded region on the left, consistent with the ‘‘onion-like’’ structure concluded in previous studies. If we consider the combination gain of both resilience and utility, we observe a more compact clustering ‘‘crescent moon’’-like structure as shown in Figure 8 (c).

E.4 Performance comparisons under a large rewiring budget

In this section, we present the resilience improvement and the required number of edge rewiring of each algorithm under a large rewiring budget of 20. The running speed is also presented to compare the running time efficiency of each algorithm.

As shown in Table 5, traditional methods improve the network resilience significantly compared to ResiNet under a large rewiring budget of 200. However, traditional methods are still undesired in such a case since a solution with a large rewiring budget is not applicable in practice due to the vast cost of adding many new edges into a real system. For example, the actual number of rewiring budget for EA is hard to calculate since it is a population-based algorithm, so it is omitted in Table 5. All baselines adopt the early-stopping strategy that they will terminate if there is no positive resilience gain in a successive 1000 steps.

Table 6 indicates that the time it takes for the benchmark algorithm to solve the problem usually increases as the test data set size increases. In contrast, our proposed ResiNet is suitable for testing on a large dataset once trained.

| Method | BA-15 | BA-50 | BA-100 | BA-500 | BA-1000 | EU | P2P-Gnutella05 | P2P-Gnutella09 | |

|---|---|---|---|---|---|---|---|---|---|

| HC | 26.8 (10.0) | 52.1 (47.0) | 76.9 (97.3) | 45.8 (200) | 302.5 (200) | 71.9 (152.7) | 37.5 (193.3) | 40.2 (137.7) | |

| 18.6 (11.3) | 43.1 (62.7) | 56.9 (121) | 30.0 (200) | 66.3 (200) | 63.2 (200) | 27.7 (200) | 34.7 (196.3) | ||

| SA | 26.8 (20) | 49.7 (59.0) | 84.5 (119.7) | 43.2 (200) | 271.8 (200) | 73.5(160.3) | 37.1 (200) | 37.2 (134) | |

| 17.8 (21) | 41.1 (79.7) | 57.7 (127.7) | 31.4 (200) | 64.9 (200) | 62.8 (200) | 37.1 (200) | 35.2 (200) | ||

| Greedy | 23.5 (6) | 48.6 (13) | 64.3 (20) | ✗ | ✗ | 0.5 (3) | ✗ | ✗ | |

| 5.3 (15) | 34.7 (13) | 42.7 (20) | ✗ | ✗ | 0.3 (3) | ✗ | ✗ | ||

| EA | 35.3 (✗) | 50.2 (✗) | 61.9 (✗) | 9.9 (200) | 174.1 (200) | 66.2 (✗) | 2.3 (200) | 0 (200) | |

| 27.1 (✗) | 38.3 (✗) | 46.6 (✗) | 6.8 (200) | 18.7 (200) | 58.4 (✗) | 3.2 (200) | 0 (200) | ||

| DE-GNN-RL | 13.7 (2) | 0 (1) | 0 (1) | 1.6 (20) | 41.7 (20) | 9.0 (20) | 2.2 (20) | 0 (1) | |

| 10.9 (2) | 0 (1) | 0 (1) | 2.7 (20) | 20.1 (14) | 2.1 (20) | 0 (1) | 1.0 (20) | ||

| -GNN-RL | 13.7 (2) | 0 (1) | 0 (1) | 0 (1) | 8.8 (20) | 4.5 (20) | -0.2 (20) | 0 (1) | |

| 6.3 (2) | 0 (1) | 0 (1) | 0 (20) | -24.9 (20) | 4.8 (20) | -0.1 (20) | 0 (1) | ||

| ResiNet | 35.3 (6) | 61.5 (20) | 70.0 (20) | 10.2 (20) | 172.8 (20) | 54.2 (20) | 14.0 (20) | 18.6 (20) | |

| 26.9 (20) | 53.9 (20) | 53.1 (20) | 15.7 (20) | 43.7 (20) | 51.8 (20) | 12.4 (20) | 15.1 (20) |

| Method | BA-15 | BA-50 | BA-100 | BA-500 | BA-1000 | EU | P2P-Gnutella05 | P2P-Gnutella09 | |

|---|---|---|---|---|---|---|---|---|---|

| HC | 1.0 (1.0) | 1.1 (6.4) | 1.3 (22.2) | 21.9 (354.1) | 80.3 (1288.3) | 3.1 (94.2) | 15.3 (358.1) | 4.5 (89.1) | |

| 1.5 (11.5) | 1.1 (12.8) | 2.0 (49.0) | 40.9 (589.5) | 148.7 (2603.7) | 5.3 (193.7) | 24.7 (462.8) | 7.0 (190.6) | ||

| SA | 0.5 (0.5) | 0.3 (6.6) | 0.6 (22.6) | 12.2 (313.0) | 45.7 (1051.8) | 2.4 (91.2) | 10.8 (286.4) | 2.6 (89.4) | |

| 0.7 (1.7) | 0.7 (13.2) | 1.7 (47.5) | 33.9 (568.9) | 99.8 (2166.3) | 5.0 (193.5) | 23.9 (454.5) | 6.3 (188.5) | ||

| Greedy | 0.2 (6.0) | 34.1 (34.5) | 766.3 (✗) | ✗ | ✗ | 3061.7 (✗) | ✗ | ✗ | |

| 0.7 (0.7) | 64.1 (65.4) | 1478.9 (✗) | ✗ | ✗ | 6192.6 (✗) | ✗ | ✗ | ||

| EA | 0.01 (✗) | 0.1 (✗) | 1.6 (✗) | 2.5 (✗) | 10.3 (✗) | 0.2 (✗) | 1.6 (✗) | 0.4 (✗) | |

| 0.01 (✗) | 0.1 (✗) | 0.8 (✗) | 4.7 (✗) | 15.0 (✗) | 0.4 (✗) | 3.0 (✗) | 0.8 (✗) | ||

| DE-GNN-RL | 0.1 (✗) | 0.1 (✗) | 0.1 (✗) | 14.9 (✗) | 70.3 (✗) | 3.6 (✗) | 8.7 (✗) | 0.5 (✗) | |

| 0.1 (✗) | 0.1 (✗) | 0.2 (✗) | 13.7 (✗) | 60.9 (✗) | 4.5 (✗) | 1.0 (✗) | 6.7 (✗) | ||

| -GNN-RL | 0.02 (✗) | 0.03 (✗) | 0.07 (✗) | 1.3 (✗) | 56.5 (✗) | 2.6 (✗) | 8.2 (✗) | 0.5 (✗) | |

| 0.02 (✗) | 0.04 (✗) | 0.08 (✗) | 18.3 (✗) | 76.1 (✗) | 3.6 (✗) | 11.5 (✗) | 0.6 (✗) | ||

| ResiNet | 0.5 (✗) | 1.8 (✗) | 2.2 (✗) | 17.5 (✗) | 66.8 (✗) | 4.5 (✗) | 14.7 (✗) | 9.3 (✗) | |

| 0.5 (✗) | 1.9 (✗) | 2.4 (✗) | 18.0 (✗) | 67.5 (✗) | 5.2 (✗) | 15.0 (✗) | 10.3 (✗) |