Efficient AlphaFold2 Training using Parallel Evoformer and Branch Parallelism

Abstract

The accuracy of AlphaFold2, a frontier end-to-end structure prediction system, is already close to that of the experimental determination techniques. Due to the complex model architecture and large memory consumption, it requires lots of computational resources and time to train AlphaFold2 from scratch. Efficient AlphaFold2 training could accelerate the development of life science. In this paper, we propose a Parallel Evoformer and Branch Parallelism to speed up the training of AlphaFold2. We conduct sufficient experiments on UniFold implemented in PyTorch and HelixFold implemented in PaddlePaddle, and Branch Parallelism can improve the training performance by 38.67% and 36.93%, respectively. We also demonstrate that the accuracy of Parallel Evoformer could be on par with AlphaFold2 on the CASP14 and CAMEO datasets. The source code is available on https://github.com/PaddlePaddle/PaddleFleetX.

1 Introduction

Proteins are exceptionally critical for life science, as it plays a wide range of functions in organisms. A protein comprises a chain of amino acid residues and folds into a 3D structure to play its functions. Since the 3D structure determines the protein’s functions, studying the 3D structure helps to understand the mechanism of the protein’s activities. However, it is time-consuming and complex to study protein structure determination through experimental technologies, e.g., X-ray crystallography and nuclear magnetic resonance (NMR). Until now, the experimental methods have determined about two hundred thousand protein structures (Sussman et al. 1998; Burley et al. 2020), only a fairly small portion of hundreds of millions of publicly available amino acid sequences (The UniProt Consortium 2016). Therefore, efficient protein structure estimation methods are in great demand.

Many institutions (Jumper et al. 2021; Yang et al. 2015; Du et al. 2021; Baek et al. 2021; Peng and Xu 2011) made their efforts to develop AI-based protein structure prediction systems due to the efficiency and the capacity of the deep neural networks. In particular, thanks to the fantastic performance in the challenging 14th Critical Assessment of Protein Structure Prediction (CASP14) (Kryshtafovych et al. 2021a), AlphaFold2 (Jumper et al. 2021) from DeepMind has attracted lots of public attention. The accuracy of AlphaFold2 approaches that of the experimental determination technologies. AlphaFold2 is an end-to-end protein estimation pipeline that directly estimates the 3D coordinates of all the atoms in the proteins. A novel and well-designed architecture is proposed to promote the estimation accuracy, which jointly models multiple sequence alignments (MSAs) for evolutionary relationships and pairwise relations between the amino acids to learn the spatial relations.

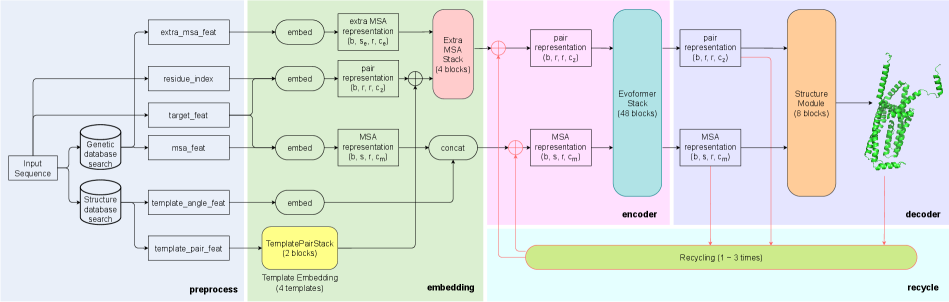

Although the accuracy of AlphaFold2 is satisfactory for protein structure prediction, it also takes 11 days to train end-to-end on 128 TPUv3 cores from scratch, limiting its wide usage. The structure of the AlphaFold2 is complex, as shown in Figure 2, which leads to high training overhead. Specifically, there are three main reasons: First, the AlphaFold2 is relatively deep, and the Evoformer block has two computing branches and cannot be calculated in parallel. Second, the official open-source implemented total batch size is limited to 128, and each device has only 1 batch size, which cannot be extended to more devices in parallel to accelerate training through data parallelism. Third, although the parameters of AlphaFold2 are only 93M, the number of parameter tensors reaches 4630. The time overhead of accessing these small tensors in different training stages of each iteration is not negligible.

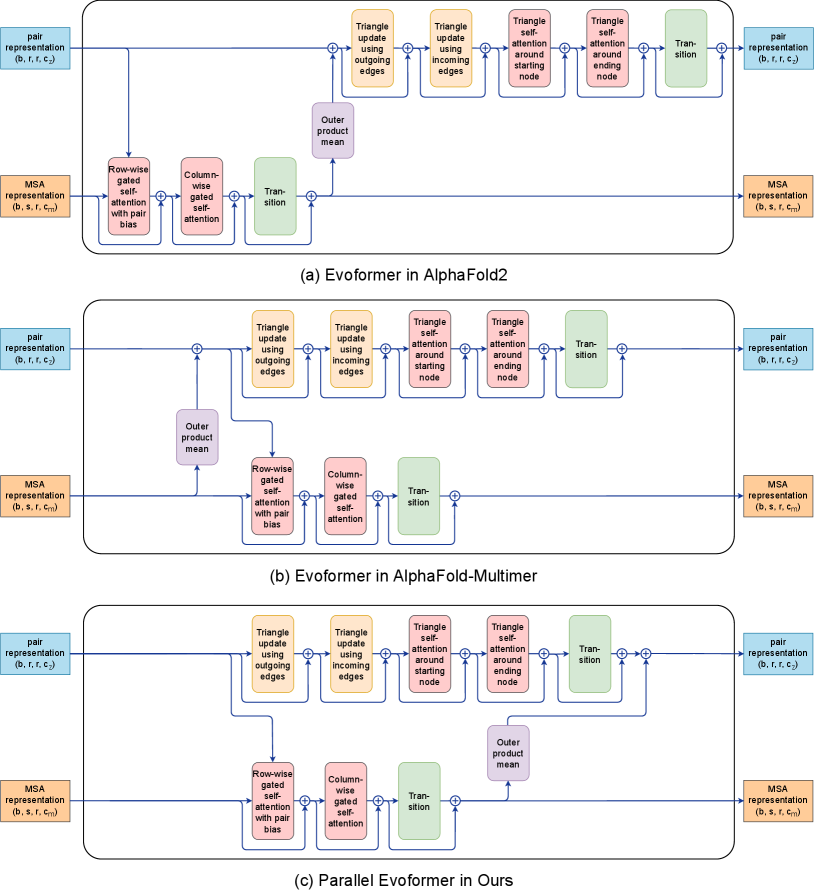

To this end, this paper proposes two optimization techniques for two of the above three problems to achieve efficient AlphaFold2 training under the premise of fully aligning hyperparameters (network model configuration and total batchsize of 128 with 1 protein sample per device). First, inspired by AlphaFold-mutimer (Evans et al. 2021), we modify the two serial computing branches in the Evoformer block into a parallel computing structure, named Parallel Evoformer, as shown in Figure 1. Second, we propose a novel Branch Parallelism (BP) for Parallel Evoformer, which can break the barrier of parallel acceleration that cannot be scaled to more devices through data parallelism due to a batch size of 1 on each device.

The method proposed in this paper to efficiently train AlphaFold2 models is general and not limited to deep learning frameworks and the version of re-implemented AlphaFold2. We perform extensive experimental verification on UniFold implemented in PyTorch and HelixFold implemented in PaddlePaddle. Extensive experimental results show that Branch Parallelism can achieve similar training performance improvements on both UniFold and HelixFold, which are 38.67% and 36.93% higher, respectively. We also demonstrate that the accuracy of Parallel Evoformer could be on par with AlphaFold2 on the CASP14 and CAMEO datasets.

The main contributions of this paper can be summarized as follows:

-

•

We improve the Evoformer in AlphaFold2 to Parallel Evoformer, which breaks the computational dependency of MSA and pair representation, and experiments show that this does not affect the accuracy.

-

•

We propose Branch Parallelism for Parallel Evoformer, which splits different computing branches across more devices in parallel to speed up training efficiency. This breaks the limitation of data parallelism in the official implementation of AlphaFold2.

-

•

We reduce the end-to-end training time of AlphaFold2 to 4.18 days on UniFold and 4.88 days on HelixFold, improving the training performance by 38.67% and 36.93%, respectively. It achieves efficient AlphaFold2 training, saving R&D economic costs for biocomputing research.

2 Background

2.1 Overview of AlphaFold2

Comparing to the traditional protein structure prediction model which usually consists of multiple steps, AlphaFold2 processes the input protein sequence and predicts the 3D protein structure through an end-to-end procedure. In general, AlphaFold2 takes the amino acid sequence as input and then search against protein databases to obtain MSAs and similar templates. By using MSA information, we can detect correlations between the parts of similar sequences that are more likely to mutate. The templates with regards to the input sequence, on the other hand, provide structural information for the model to predict the final structure.

The overall framework of AlphaFold2 can be divided into five parts: Preprocess, Embedding, Encoder, Decoder, and Recycle, which is shown in Figure 2. The Preprocess part mainly parses the input raw sequence and generates MSA-related and template-related features via genetic database search and structure database search. The features are then embedded into MSA representation, pair representation and extra MSA representation during Embedding part. These representations contain sufficient co-evolutionary information among similar sequences and geometric information of residue pairs within the sequence. The third part consists of 48-layer Evoformer blocks that iteratively refine and exchange information between the MSA representation and the pair representation. After obtaining the refined representations, the 8-block Structure Module acts as a decoder to directly generate the final structure. Moreover, AlphaFold2 adopts Recycling technique to improve the accuracy of prediction by passing the representations through Evoformer and Structure Module repeatedly.

2.2 Evoformer

Evoformer is the core module of AlphaFold2, which includes two tracks that handle MSA representation and pair representation, as well as the communication scheme between them. As shown in Figure 1(a), MSA representation is processed with Row-wise gated self-attention with pair bias, Column-wise gated self-attention and Transition, while pair representation is further processed with Triangle update, Triangle self-attention and Transition. The outer product mean is used to pass the information between the two tracks.

3 Related Work

3.1 Evoformer Improvment for AlphaFold2

Although many biological or pharmaceutical studies are based on AlphaFold2 after its open source in July 2021, few works focused on improving the main modules of AlphaFold2. ParaFold (Zhong et al. 2022) aims to accelerate the inference pipeline of AlphaFold2 by performing MSA searches with multiprocessing and distributing different procedures within Evoformer and Structure Module to CPUs or GPUs, instead of optimizing the network structure of AlphaFold2. In order to predict larger proteins, AlphaFold-Multimer (Evans et al. 2021) makes minor architectural modifications of AlphaFold2, including swapping the attention and triangular multiplicative update layers in the template stack which aligns the order in the Evoformer stack, and moving the outer product mean to the start of the Evoformer block which ensures MSA stack and pair stack can be processed in parallel. Recently, several works proposed new training schemes and improved main structures of AlphaFold2 which enable the prediction of proteins accurately from single primary sequence alone. Meta’s ESMFold (Lin et al. 2022) replaces the axial attention with a standard attention in order to adapt to Evoformer block. OmegaFold from HeliXon (Wu et al. 2022) optimizes Evoformer with simplified node attention and edge attention to capture complex interaction patterns among amino acids. HelixFold-Single (Fang et al. 2022) designs an adaptor to align the output of pretrained protein language model to Evoformer and the column-wise gated self-attention is removed due to no necessity of exchanging the messages within the MSAs.

3.2 Distributed Parallel Strategy

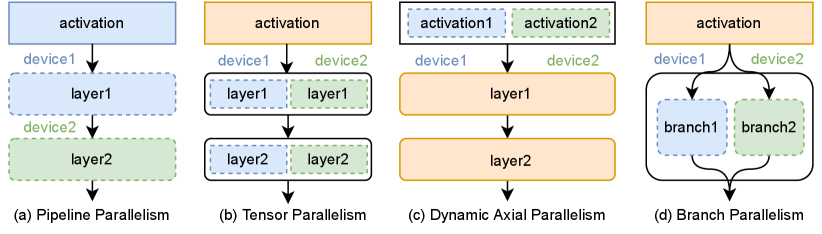

To improve training speed, data parallelism (DP) (Li et al. 2020) is the most popular and efficient method in deep learning distributed training. Each worker has a copy of the model parameters, and parallelism operates in the data dimension. Each device accepts mini-batch samples while scaling to more devices at the expense of increasing the total batch size. DeepSpeed’s ZeRO (Rajbhandari et al. 2020) and FairScale’s Fully Sharded Data Parallel (Baines et al. 2021) reduce redundant storage of tensors through communication costs. Model parallelism (MP) (Narayanan et al. 2021) uses more accelerators to train large-scale models, which can be divided into pipeline model parallelism (PP) and tensor model parallelism (TP). PP splits the whole model to multiple devices at layer dimension and will introduce the idle time named pipeline bubble, and TP distributes the parameter in individual layers to multiple devices at the tensor dimension. Dynamic axial parallelism (DAP) (Cheng et al. 2022) is proposed to solve the inefficient problem of training the AlphaFold2 model with a small parameter shape and a large activation memory consumption. Our proposed branch parallelism is different from other parallel computing methods. For the feature of two computing branches in Evoformer block, BP splits the two computing branches of MSA stack and pair stack into different devices for parallel computing. The difference between the parallel methods is shown in Figure 3.

4 Implementation

4.1 Parallel Evoformer

Evoformer of AlphaFold2 has two computational branches with axial self-attention in the MSA stack; triangular multiplicative updates and triangular self-attention in the pair stack; and an information exchange mechanism that outer product mean and attention biasing to allow communication between the stacks, as shown in Figure 1(a). The two computing branches of the original Evoformer are serial computing, where the input of the pair stack depends on the output of the MSA stack. AlphaFold-Multimer (Evans et al. 2021) moves the outer product mean to the start of the Evoformer block. Its main idea is to save the activation memory of the MSA stack and pair stack during backpropagation at training time and to process both stacks in parallel at inference time, as shown in Figure 1(b). However, the calculation of the pair stack still depends on the output of the outer product mean, which leaves room for improvement in parallel efficiency. In order to achieve independent computing and fully parallel computing of the two branches, we propose to move the outer product mean to the end of the Evoformer block, named Parallel Evoformer, see in Figure 1(c). The main trunk of the AlphaFold2 consists of 52 (48+4) Evoformer blocks. Instead of moving the outer product mean to the start of the Evoformer block, Parallel Evoformer still allows the pair representation and the MSA representation to evolve independently within a given block, with all cross communication happening at the end of the block. Since the overall process across the Evoformer block is learnable, the position of the outer product mean doesn’t affect the accuracy of the final prediction as the number of the Evoformer blocks increases, which is further proved by our experiments shown in Figure 5.

4.2 Branch Parallelism

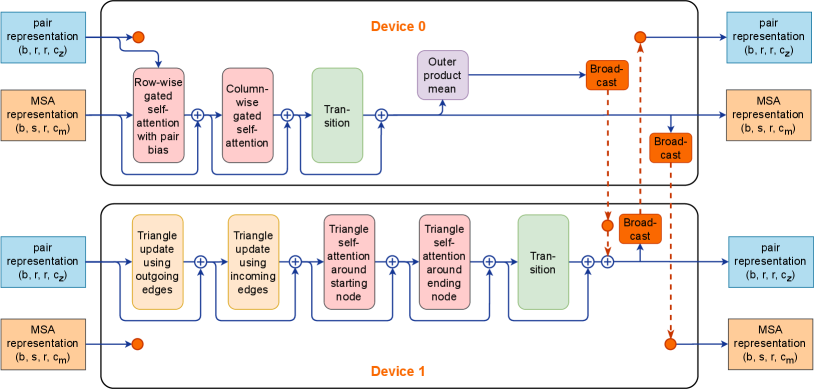

In order for the two computing branches in Parallel Evoformer to be computed in parallel, we propose Branch Parallelism (BP), and Figures 4 shows the details. BP is a novel parallel technique, which can be applied not only to the AlphaFold2 Evoformer model structure, but also to a model structure with multiple parallel computing branches and an approximate amount of computation. BP splits the calculation branch across multiple devices. One device calculates the MSA stack and the other calculates the pair stack. To preserve strict calculation semantics, BP inserts Broadcast to and AllReduce communication.

Specifically, in the forward stage, we do not need to split the input in each Evoformer block as the first device uses the MSA and pair representation to calculate the MSA stack and outer product mean branch then the outputs are broadcasted to the second device. The second device uses the pair representation to calculate the pair stack branch, adds the outer product mean broadcasted from the first device, and then broadcasts the output to the first device. In the backward stage, we insert Broadcast to synchronize the gradient of the outer product mean from the second device and AllReduce to accumulate the sum of the gradient of the input pair representation in each Evoformer block. At the end of backward propagation of the whole Evoformer module, we need an extra broadcast of the gradient of MSA representation. Finally, we adopt the AllReduce or Broadcast communication to synchronize the gradients of Evoformer model parameters.

BP does not split the intermediate activation for the Evoformer block dominated by small kernels, as a result, the same computational intensity is retained. At the same time, BP can compute the two branches of Evoformer completely in parallel. However, BP also has its limitations: it can be scaled up to as many devices as the number of computational branches and requires a similar amount of computation from each branch.

4.3 Hybrid Parallelism

DAP (Cheng et al. 2022) splits the activation to reduce the memory footprint. In addition, DAP also has high parallel efficiency with a large shape of input, e.g. in the fine-tuning stage. Therefore, we can combine BP and DAP to train the AlphaFold2 model when CPU launch overheads are not the performance bottleneck. Since BP and DAP just split the computing branches and the activation across multiple devices respectively, the same protein is processed on each device. To improve communication efficiency, BP and DAP work within a single node where the inter-GPU communication bandwidth is high. With data parallelism, we can scale the total mini-batch to 128. We call this technique BP-DAP-DP hybrid parallelism.

5 Experiments

5.1 Experimental Setup

Third-party implementation of AlphaFold2

Since the original AlphaFold2 only open sourced the inference code but not the training code, multiple teams have re-implemented and optimized AlphaFold2 training on different deep learning frameworks. We perform experimental verification on UniFold (Li et al. 2022) implemented in PyTorch (Paszke et al. 2019) and HelixFold (Wang et al. 2022) implemented in PaddlePaddle (Ma et al. 2019).

Datasets

For training, we follow the settings reported in the paper of AlphaFold2 to collect the training data, including 25% of samples from RCSB PDB (https://www.rcsb.org/) (Berman et al. 2000; Burley et al. 2020) and 75% of self-distillation samples. For evaluation, we collect two datasets: CASP14 and CAMEO. We collect 87 domain targets from CASP14 (https://predictioncenter.org/casp14/index.cgi) (Jumper et al. 2021; Kinch et al. 2021; Kryshtafovych et al. 2021b). We also collect 371 protein targets from CAMEO (https://www.cameo3d.org/) (Robin et al. 2021), ranging from 2021-09-04 to 2022-02-19.

Settings of Model Architectures

We use two model settings to assess the AlphaFold2 model training speed improved by this paper. The model settings are shown in Table 1, with initial training setting corresponding to model 1 and fine-tuning setting corresponding to model 1.1.1 reported in the supplementary information of paper AlphaFold2.

Hyperparameter setting

All our experiments are run on NVIDIA A100 (40G) and the mini-batch size is 1 on each device. We strictly follow the settings in AlphaFold2. As a feature in AlphaFold2, recycling iteration is to randomize a number from 1 to 4 in each step, performs the forward pass multiple times, and then performs the backward pass. To compare performance quickly and fairly, we firstly fix the random seed, then run 105 training steps, discard the first 5 steps, and finally calculate the average speed for the last 100 steps. After our extensive experimental verification, the average speed of 100 steps can get a similar global speed. We fix , then the random seed for each step is calculated by . Unless otherwise specified, we use AMP for training, using the Float32 parameter and BFloat16 intermediate activation.

| Training Process | Model setting | ||||

|---|---|---|---|---|---|

| Initial training | Model 1 | 4 | 256 | 128 | 1024 |

| Fine-tuning | Model 1.1.1 | 4 | 384 | 512 | 5120 |

5.2 Effectiveness of Parallel Evoformer

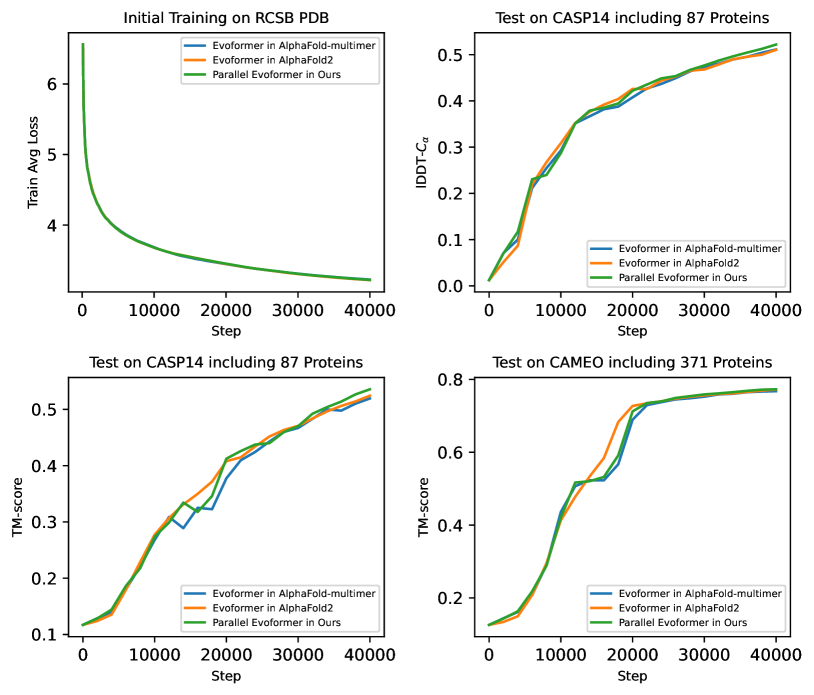

To demonstrate the effectiveness of the Evoformer block modification, we test 3 different Evoformer blocks shown in Figure 1. We train it from scratch on a single node with 8 A100 GPUs and the batch size is 1 per GPU. The training dataset consists of 120,000 proteins from the RCSB PDB, the learning rate is 5e-5, the global gradient clipping value is 0.1, the number of warm-up steps is 1000 and total training step is 40,000. Figure 5 shows the training loss, TM-score and lDDT-Cα metrics on the CASP14 and CAMEO test sets. The results show that Parallel Evoformer can achieve competitive accuracy with Evoformer in AlphaFold2 and AlphaFold-Mutimer. The training speed of the 3 different Evoformer blocks is the same. We also report the computational overhead ratio for 52 (48+4) Evoformer blocks, as shown in Table 2. It means that the position of the outer product mean does not affect the accuracy and training speed of AlphaFold2.

5.3 Training Performance

| Training Process | Evoformer | s/step | |||

|---|---|---|---|---|---|

| Evoformer | Other | Total | (%) | ||

| Initial training | AlphaFold2 | 3.12 | 1.88 | 5.00 | 62.40% |

| AlphaFold-Multimer | 3.09 | 1.89 | 4.98 | 62.04% | |

| Parallel Evoformer | 3.11 | 1.89 | 5.00 | 62.20% | |

| Fine-tuning | AlphaFold2 | 12.81 | 3.69 | 16.50 | 77.63% |

| AlphaFold-Multimer | 12.86 | 3.65 | 16.51 | 77.89% | |

| Parallel Evoformer | 12.83 | 3.67 | 16.50 | 77.75% | |

| Implementation | Training Process | Dtype | BP | s/step | protein/s | (%) |

|---|---|---|---|---|---|---|

| UniFold | Initial training | FP32 | 1 | 7.04 | 18.18 | - |

| FP32 | 2 | 5.41 | 23.65 | +30.12% | ||

| HelixFold | Initial training | FP32 | 1 | 6.48 | 19.75 | - |

| FP32 | 2 | 4.55 | 28.13 | +42.41% | ||

| UniFold | Initial training | BF16 | 1 | 4.16 | 30.76 | - |

| BF16 | 2 | 3.02 | 42.38 | +37.74% | ||

| Fine-tuning | BF16 | 1 | 15.02 | 8.52 | - | |

| BF16 | 2 | 10.70 | 11.96 | +40.37% | ||

| HelixFold | Initial training | BF16 | 1 | 4.92 | 26.01 | - |

| BF16 | 2 | 3.55 | 36.05 | +38.59% | ||

| Fine-tuning | BF16 | 1 | 16.45 | 7.78 | - | |

| BF16 | 2 | 12.29 | 10.41 | +33.84% |

Performance Comparison of BP and DAP

The author of FastFold (Cheng et al. 2022) has open-sourced DAP. To compare with the performance of DAP, we firstly use PaddlePaddle to reproduce the open-source code of FastFold, called PPFold. Then we add the implementation of BP on PPFold to compare the performance of DAP and BP.

Table 5 shows the performance comparison of FastFold and PPFold. As can be seen in the table, DAP=2 uses 2 GPUs compared to DAP=1, but there is a performance drop in both FastFold and PPFold as the last two dimensions of the inputs are relatively small with a low computational intensity. DAP splits the input into smaller tensors, and the computational intensity is not improved. In addition, a lot of additional communication overhead is introduced, resulting in a decrease in performance. BP splits the computing branches across different GPUs, which can be calculated in parallel while maintaining computational intensity, and only introducing a small amount of communication overhead. Thus, the performance is improved by 67.45%. Similar performance is also observed in end-to-end training, see Table 6.

These require an extra declaration. This paper does not compare BP with other distributed parallelisms such as TP and PP. There are two reasons for this. First, FastFold has compared DAP, TP and PP on AlphaFold2. The experimental results show that the acceleration efficiency of DAP is higher than that of TP and PP. Second, implementing TP and PP on AlphaFold2 requires a lot of work.

| Implementation | Training Process | Hardware | Step Time (s) | Protein/s | Training Time (days) | Total (days) | (%) |

|---|---|---|---|---|---|---|---|

| AlphaFold2-DP | Initial training | 128 × TPUv3 | 7.513 | 17.037 | 6.793 | 10.961 | |

| Fine-tuning | 30.729 | 4.165 | 4.167 | ||||

| OpenFold-DP | Initial training | 128 × A100(40G) | 8.9 | 14.382 | 8.047 | 10.849 | |

| Fine-tuning | 20.657 | 6.196 | 2.801 | ||||

| UniFold-DP | Initial training | 128 × A100(40G) | 4.16 | 30.76 | 3.761 | 5.798 | - |

| Fine-tuning | 15.02 | 8.52 | 2.037 | ||||

| UniFold-BP | Initial training | 256 × A100(40G) | 3.02 | 42.38 | 2.730 | 4.181 | +38.67% |

| Fine-tuning | 256 × A100(40G) | 10.70 | 11.96 | 1.451 | |||

| HelixFold-DP† | Initial training | 128 × A100(40G) | 4.925 | 25.989 | 4.453 | 6.685 | - |

| Fine-tuning | 16.458 | 7.777 | 2.232 | ||||

| HelixFold-BP | Initial training | 256 × A100(40G) | 3.555 | 36.005 | 3.214 | 4.882 | +36.93% |

| Fine-tuning | 256 × A100(40G) | 12.298 | 10.407 | 1.668 |

Performance of Branch Parallelism

Branch Parallelism is a general distributed parallelism strategy that can be applied to AlphaFold2 models implemented by different deep learning frameworks, such as UniFold implemented in PyTorch and HelixFold implemented in PaddlePaddle. We conduct extensive experiments with Float32 and BFloat16 data types, in the mode of initial training and fine-tuning, on UniFold and HelixFold, respectively. As shown in Table 3, the performance improvement of BP on Float32 is lower than that on BFloat16, but both still have more than 30% performance speedups. In general, on UniFold and HelixFold, two different AlphaFold2 implementations both have similar performance speedups. With the default BFloat16 data type, UniFold improves by 37.74% and 40.37% in the initial training and fine-tuning training stages, respectively. Similarly, HelixFold also achieved 38.59% and 33.84% performance speedup respectively. The performance improvement is lower than about 40%. The main reason is that the main module of the AlphaFold2 model is the Evoformer block, but there are other module computing overheads, as shown in Table 2. Branch Parallelism is only calculated in parallel in the two branches of the Evoformer block.

| Method | DAP | BP | Fwd + Bwd Time / Layer (ms) | |

| FastFold | 1 | 1 | 30.98 | - |

| 2 | 1 | 32.25 | -3.94% | |

| PPFold | 1 | 1 | 32.47 | - |

| 2 | 1 | 33.21 | -2.22% | |

| 1 | 2 | 19.39 | +67.45% |

Performance of Hybrid Parallelism

To illustrate the effectiveness of BP combined with other parallel strategies, we conduct experiments with different configurations using hybrid parallelism on HelixFold. As shown in Table 6, in the initial training, where the dimensions involved in the computation are relatively small, the performance of DAP=2 drops compared to that of unused, showing a negative gain. When BP=2, the performance is improved by 38.51%, indicating a positive benefit. Conversely, in the fine-tuning, where the dimensions of MSA depth and the length of amino acid sequences increase, DAP achieves a higher performance improvement than BP by splitting larger activations across multiple GPUs for parallel computing. However, the hybrid parallelism of DAP=2 and BP=2 has the approximate throughput of DAP=4 and BP=1 while DAP=4 and BP=2 have higher throughput than DAP=8 and BP=1, demonstrating that when the activation is divided to a certain size, the parallelism efficiency of BP is higher than that of DAP.

| Training Process | DAP | BP | s/step | protein/s | (%) |

| Initial training | 1 | 1 | 4.925 | 25.989 | - |

| 2 | 1 | 5.170 | 24.758 | -4.73% | |

| 1 | 2 | 3.555 | 36.005 | +38.51% | |

| Fine-tuning | 1 | 1 | 16.458 | 7.777 | - |

| 2 | 1 | 11.110 | 11.521 | +48.13% | |

| 1 | 2 | 12.298 | 10.408 | +33.82% | |

| 2 | 2 | 7.887 | 16.229 | +108.66% | |

| 4 | 1 | 7.883 | 16.237 | +108.77% | |

| 8 | 1 | 7.315 | 17.498 | +124.97% | |

| 4 | 2 | 5.700 | 22.456 | +188.71% |

End-to-end training Training Performance

We make a comparison among AlphaFold2 (Jumper et al. 2021), OpenFold (Ahdritz et al. 2021), HelixFold (Wang et al. 2022) and UniFold (Li et al. 2022), in terms of hardware, time cost of each step, training throughput and total training time as shown in Table 4. We also report training performance using Branch Parallelism on UniFold and HelixFold. The *-DP method uses 128 accelerator cards only with data parallelism, such as TPUv3 core or A100, while the *-BP method uses 256 accelerator cards in combination with data parallelism and branch parallelism.

Compared with the original AphaFold2-DP, UniFold-DP has been optimized, and the training time has been reduced from 10.961 days to 5.798 days, with an increase of 89%. We add Branch Parallelism to UniFold to obtain UniFold-BP, which further shortens the training time to 4.181 days, and improves the training performance by 38.67%.

Similarly, HelixFold has been optimized by operator fusion and tensor fusion to improve the training throughput to obtain the HelixFold-DP performance and the training time has been reduced from 10.961 days to 6.685 days. We use Branch Parallelism to further improve training throughput. The total training time was shortened from 6.685 days to 4.882 days, with an increase of 36.93%.

Sufficient experimental results show that Branch Parallelism has similar performance improvements in different deep learning frameworks and optimized AlphaFold2 implementations, which is enough to illustrate the effectiveness and generalization of Branch Parallelism.

6 Conclusion

As the end-to-end training of AlphaFold2 takes lots of computational resources, it is a great burden for the individuals and institutions who are interested in applying AlphaFold2. This paper improves the Evoformer block of AlphaFold2 into Parallel Evoformer, which can be extended to more accelerators by the proposed Branch Parallelism to speed up training. After optimization, the training time was shortened to 4.18 days on UniFold and 4.88 days on HelixFold. We believe that the efficient AlphaFold2 training proposed in this paper can help accelerate research progress in the field of protein structure prediction.

References

- Ahdritz et al. (2021) Ahdritz, G.; Bouatta, N.; Kadyan, S.; Xia, Q.; Gerecke, W.; and AlQuraishi, M. 2021. OpenFold.

- Baek et al. (2021) Baek, M.; DiMaio, F.; Anishchenko, I.; Dauparas, J.; Ovchinnikov, S.; Lee, G. R.; Wang, J.; Cong, Q.; Kinch, L. N.; Schaeffer, R. D.; et al. 2021. Accurate prediction of protein structures and interactions using a three-track neural network. Science, 373(6557): 871–876.

- Baines et al. (2021) Baines, M.; Bhosale, S.; Caggiano, V.; Goyal, N.; Goyal, S.; Ott, M.; Lefaudeux, B.; Liptchinsky, V.; Rabbat, M.; Sheiffer, S.; Sridhar, A.; and Xu, M. 2021. FairScale: A general purpose modular PyTorch library for high performance and large scale training. https://github.com/facebookresearch/fairscale.

- Berman et al. (2000) Berman, H. M.; Westbrook, J.; Feng, Z.; Gilliland, G.; Bhat, T. N.; Weissig, H.; Shindyalov, I. N.; and Bourne, P. E. 2000. The Protein Data Bank. Nucleic Acids Research, 28(1): 235–242.

- Burley et al. (2020) Burley, S. K.; Bhikadiya, C.; Bi, C.; Bittrich, S.; Chen, L.; Crichlow, G. V.; Christie, C. H.; Dalenberg, K.; Di Costanzo, L.; Duarte, J. M.; Dutta, S.; Feng, Z.; Ganesan, S.; Goodsell, D. S.; Ghosh, S.; Green, R. K.; Guranović, V.; Guzenko, D.; Hudson, B. P.; Lawson, C.; Liang, Y.; Lowe, R.; Namkoong, H.; Peisach, E.; Persikova, I.; Randle, C.; Rose, A.; Rose, Y.; Sali, A.; Segura, J.; Sekharan, M.; Shao, C.; Tao, Y.-P.; Voigt, M.; Westbrook, J.; Young, J. Y.; Zardecki, C.; and Zhuravleva, M. 2020. RCSB Protein Data Bank: powerful new tools for exploring 3D structures of biological macromolecules for basic and applied research and education in fundamental biology, biomedicine, biotechnology, bioengineering and energy sciences. Nucleic Acids Research, 49(D1): D437–D451.

- Cheng et al. (2022) Cheng, S.; Wu, R.; Yu, Z.; Li, B.; Zhang, X.; Peng, J.; and You, Y. 2022. FastFold: Reducing AlphaFold Training Time from 11 Days to 67 Hours. arXiv preprint arXiv:2203.00854.

- Du et al. (2021) Du, Z.; Su, H.; Wang, W.; Ye, L.; Wei, H.; Peng, Z.; Anishchenko, I.; Baker, D.; and Yang, J. 2021. The trRosetta server for fast and accurate protein structure prediction. Nature protocols, 16(12): 5634–5651.

- Evans et al. (2021) Evans, R.; O’Neill, M.; Pritzel, A.; Antropova, N.; Senior, A.; Green, T.; Žídek, A.; Bates, R.; Blackwell, S.; Yim, J.; Ronneberger, O.; Bodenstein, S.; Zielinski, M.; Bridgland, A.; Potapenko, A.; Cowie, A.; Tunyasuvunakool, K.; Jain, R.; Clancy, E.; Kohli, P.; Jumper, J.; and Hassabis, D. 2021. Protein complex prediction with AlphaFold-Multimer. bioRxiv.

- Fang et al. (2022) Fang, X.; Wang, F.; Liu, L.; He, J.; Lin, D.; Xiang, Y.; Zhang, X.; Wu, H.; Li, H.; and Song, L. 2022. HelixFold-Single: MSA-free Protein Structure Prediction by Using Protein Language Model as an Alternative. arXiv preprint arXiv:2207.13921.

- Jumper et al. (2021) Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. 2021. Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873): 583–589.

- Kinch et al. (2021) Kinch, L. N.; Schaeffer, R. D.; Kryshtafovych, A.; and Grishin, N. V. 2021. Target classification in the 14th round of the critical assessment of protein structure prediction (CASP14). Proteins: Structure, Function, and Bioinformatics, 89(12): 1618–1632.

- Kryshtafovych et al. (2021a) Kryshtafovych, A.; Schwede, T.; Topf, M.; Fidelis, K.; and Moult, J. 2021a. Critical assessment of methods of protein structure prediction (CASP)—Round XIV. Proteins: Structure, Function, and Bioinformatics, 89(12): 1607–1617.

- Kryshtafovych et al. (2021b) Kryshtafovych, A.; Schwede, T.; Topf, M.; Fidelis, K.; and Moult, J. 2021b. Critical assessment of methods of protein structure prediction (CASP)—Round XIV. Proteins: Structure, Function, and Bioinformatics, 89(12): 1607–1617.

- Li et al. (2020) Li, S.; Zhao, Y.; Varma, R.; Salpekar, O.; Noordhuis, P.; Li, T.; Paszke, A.; Smith, J.; Vaughan, B.; Damania, P.; et al. 2020. Pytorch distributed: Experiences on accelerating data parallel training. arXiv preprint arXiv:2006.15704.

- Li et al. (2022) Li, Z.; Liu, X.; Chen, W.; Shen, F.; Bi, H.; Ke, G.; and Zhang, L. 2022. Uni-Fold: An Open-Source Platform for Developing Protein Folding Models beyond AlphaFold. bioRxiv.

- Lin et al. (2022) Lin, Z.; Akin, H.; Rao, R.; Hie, B.; Zhu, Z.; Lu, W.; Santos Costa, A. d.; Fazel-Zarandi, M.; Sercu, T.; Candido, S.; and Rives, A. 2022. Language models of protein sequences at the scale of evolution enable accurate structure prediction. bioRxiv.

- Ma et al. (2019) Ma, Y.; Yu, D.; Wu, T.; and Wang, H. 2019. PaddlePaddle: An open-source deep learning platform from industrial practice. Frontiers of Data and Domputing, 1(1): 105–115.

- Narayanan et al. (2021) Narayanan, D.; Shoeybi, M.; Casper, J.; LeGresley, P.; Patwary, M.; Korthikanti, V. A.; Vainbrand, D.; Kashinkunti, P.; Bernauer, J.; Catanzaro, B.; et al. 2021. Efficient large-scale language model training on gpu clusters. arXiv preprint arXiv:2104.04473.

- Paszke et al. (2019) Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; Desmaison, A.; Kopf, A.; Yang, E.; DeVito, Z.; Raison, M.; Tejani, A.; Chilamkurthy, S.; Steiner, B.; Fang, L.; Bai, J.; and Chintala, S. 2019. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32, 8024–8035. Curran Associates, Inc.

- Peng and Xu (2011) Peng, J.; and Xu, J. 2011. RaptorX: exploiting structure information for protein alignment by statistical inference. Proteins: Structure, Function, and Bioinformatics, 79(S10): 161–171.

- Rajbhandari et al. (2020) Rajbhandari, S.; Rasley, J.; Ruwase, O.; and He, Y. 2020. Zero: Memory optimizations toward training trillion parameter models. In SC20: International Conference for High Performance Computing, Networking, Storage and Analysis, 1–16. IEEE.

- Robin et al. (2021) Robin, X.; Haas, J.; Gumienny, R.; Smolinski, A.; Tauriello, G.; and Schwede, T. 2021. Continuous Automated Model EvaluatiOn (CAMEO)—Perspectives on the future of fully automated evaluation of structure prediction methods. Proteins: Structure, Function, and Bioinformatics, 89(12): 1977–1986.

- Sussman et al. (1998) Sussman, J. L.; Lin, D.; Jiang, J.; Manning, N. O.; Prilusky, J.; Ritter, O.; and Abola, E. E. 1998. Protein Data Bank (PDB): database of three-dimensional structural information of biological macromolecules. Acta Crystallographica Section D: Biological Crystallography, 54(6): 1078–1084.

- The UniProt Consortium (2016) The UniProt Consortium. 2016. UniProt: the universal protein knowledgebase. Nucleic Acids Research, 45(D1): D158–D169.

- Wang et al. (2022) Wang, G.; Fang, X.; Wu, Z.; Liu, Y.; Xue, Y.; Xiang, Y.; Yu, D.; Wang, F.; and Ma, Y. 2022. HelixFold: An Efficient Implementation of AlphaFold2 using PaddlePaddle. arXiv preprint arXiv:2207.05477.

- Wu et al. (2022) Wu, R.; Ding, F.; Wang, R.; Shen, R.; Zhang, X.; Luo, S.; Su, C.; Wu, Z.; Xie, Q.; Berger, B.; Ma, J.; and Peng, J. 2022. High-resolution de novo structure prediction from primary sequence. bioRxiv.

- Yang et al. (2015) Yang, J.; Yan, R.; Roy, A.; Xu, D.; Poisson, J.; and Zhang, Y. 2015. The I-TASSER Suite: protein structure and function prediction. Nature methods, 12(1): 7–8.

- Zhong et al. (2022) Zhong, B.; Su, X.; Wen, M.; Zuo, S.; Hong, L.; and Lin, J. 2022. ParaFold: Paralleling AlphaFold for Large-Scale Predictions. In International Conference on High Performance Computing in Asia-Pacific Region Workshops, HPCAsia 2022 Workshop, 1–9. New York, NY, USA: Association for Computing Machinery. ISBN 9781450395649.