Efficient correlation-based discretization of continuous variables for annealing machines

Abstract

Annealing machines specialized for combinatorial optimization problems have been developed, and some companies offer services to use those machines. Such specialized machines can only handle binary variables, and their input format is the quadratic unconstrained binary optimization (QUBO) formulation. Therefore, discretization is necessary to solve problems with continuous variables. However, there is a severe constraint on the number of binary variables with such machines. Although the simple binary expansion in the previous research requires many binary variables, we need to reduce the number of such variables in the QUBO formulation due to the constraint. We propose a discretization method that involves using correlations of continuous variables. We numerically show that the proposed method reduces the number of necessary binary variables in the QUBO formulation without a significant loss in prediction accuracy.

I Introduction

Combinatorial optimization problems aim to find the optimal combination satisfying certain constraints. It is crucial to obtain combinatorial optimization problems quickly in practice. However, a computational explosion occurs when the number of combinations becomes enormous; a brute-force method cannot solve this problem.

Simulated and quantum annealing principles in physics play a crucial role in developing specialized machines for combinatorial optimization problems. Typical examples include D-Wave Advantage by D-Wave Systems Inc.[1], Digital Annealer by Fujitsu Ltd.[2], and CMOS Annealing Machine by Hitachi Ltd.[3] The input form of these annealing machines is a quadratic form of binary variables called the quadratic unconstrained binary optimization (QUBO) formulation, which is equivalent to the Ising model. Hence, one should convert the original combinatorial optimization problems to the QUBO formulation. Then, the specialized machines quickly explore the optimal solution based on simulated or quantum annealing principles. Reference [4] gives various examples of the Ising models and QUBO formulations for famous combinatorial optimization problems.

Although specialized machines give only approximate solutions, we can use them in various practical situations. Many research institutes and companies are investigating applications of annealing machines to address social issues. Such applications include machine learning [5, 7, 6], financial optimization [8], space debris removal [9], traffic flow [10, 11], medical imaging [12], and E-commerce [13]. As mentioned above, the annealing machines can solve combinatorial optimization problems that are difficult to solve with conventional computers within reasonable calculation time. Hence, they are attracting much attention as a new computational technology.

Annealing machines with the QUBO formulation can also solve problems with continuous variables; binary expansions of the original continuous variables are used [14]. The corresponding QUBO formulation is then derived. There are studies on the binary expansion method with which annealing machines solve linear regression problems [15] and support vector machines [16]. Annealing machines reduce the computational time by a factor of 2.8 for relatively large linear regression problems [15]; this study showed the superiority of annealing machines.

However, annealing machines have the limitation that the number of variables is not very large. Practical optimization problems require many variables, which makes it impossible to embed the QUBO formulation. For example, D-Wave Advantage can only solve problems up to about 5000 qubits. As mentioned above, continuous optimization problems require the discretization of variables. Previous studies used a naive discretization with a fixed number of binary variables for each continuous variable. Hence, the number of necessary binary variables increases proportionally with the number of continuous variables. It is problematic for a large-scale optimization problem with continuous variables. A rough discretization decreases the prediction accuracy because of insufficient resolution.

We propose an efficient discretization method for annealing machines, which involves the correlation between continuous variables. We carry out a short sampling for the original problems with continuous variables in advance. Then, the information on the correlations leads to a problem-specific discretization. The basic idea is as follows. We share the binary variables between the continuous variables that tend to be roughly the same value. Thus, the number of binary variables can be reduced without degrading the prediction accuracy. We investigated the effectiveness of the proposed method through a numerical experiment on linear regression problems.

The remainder of the paper is as follows. In Sec. 2 we give a brief review of the formulation for the annealing machines. We review the linear regression problem and its expression as a QUBO formulation in Sec. 3. In Sec. 4, we present the proposed efficient correlation-based discretization method. In Sec. 5, we explain the numerical experiment to investigate the effectiveness of the proposed method. Finally, we conclude the paper in Sec. 6.

II Basic Formulation for Annealing Machines

II.1 Ising model and QUBO formulation

The Ising model is one of the most fundamental models to describe the properties of magnetic materials. Let denote a variable for the -th spin. Then, the energy function of the Ising model with the state vector is defined as follows:

| (1) |

where corresponds to the two-body interaction between the spins and , and is the external magnetic field on .

The QUBO formulation has the following cost function for the state vector :

| (2) |

where is -th binary variable in , and is the strength of the interaction between the binary variables and . This formulation is equivalent to the Ising model via the variable transformation with

| (3) |

Conversion between and is also possible.

From the computational viewpoint, annealing machines are domain-specific, and their role is simple; to find the ground state that minimizes Eqs. (1) or (2). Despite the domain-specific characteristic, we can solve various combinatorial optimization problems with annealing machines. The reason is that various optimization problems are converted into the QUBO formulation [4]; we formulate the Ising model or QUBO formulation so that the ground state coincides with the optimal solution of the combinatorial optimization problem. Hence, we can solve combinatorial optimization problems with the annealing machines.

As stated above, the Ising model and the QUBO formulation are equivalent. However, the QUBO formulation is more suitable for computation, and then we employ the QUBO formulation below.

II.2 Two types of annealing machines

The QUBO formulation is widely applicable to different types of annealing machines.

There are two main types of annealing machines currently under development. One uses simulated annealing (SA) and the other uses quantum annealing (QA). Although the mechanisms of the search process differ, both types accept the QUBO formulation as the input for specific hardware. We briefly review why the QUBO formulation is suitable for both types of machines.

SA is an algorithm based on thermal fluctuations. When the temperature is high, the thermal fluctuations are large. The algorithm searches the large area in the state spaces. A gradual decrease in temperature indicates the settling down to the ground state. Numerically, the probability of a state change is often defined as follows:

| (4) |

where is the energy difference when a certain state is changed, and is temperature. The ground state is adequately obtained if the temperature decreases slowly enough [17]. Note that in Eq. (4) is evaluated using Eq. (2). The algorithm uses the cost function of the QUBO formulation. It indicates that the QUBO formulation is suitable as the input for machines.

QA was proposed by Kadowaki and Nishimori and uses quantum fluctuation [18]. Instead of the classical binary spin variables, we need quantum spins denoted by the Pauli matrix. Introducing the state vector with the component of the Pauli matrices, , and the component for -th spin, , the Hamiltonian is defined as follows:

| (5) |

where is a control parameter. The second term in the right-hand side (r.h.s.) of Eq. (5) corresponds to the quantum fluctuations. The initial state is prepared as the ground state under quantum fluctuations. By gradually weakening the quantum effect, i.e., , QA seeks the ground state determined by the first term on the r.h.s. of Eq. (5). QA can use more rapid scheduling than SA [19]. Such a computation method is also called adiabatic quadratic computing [20].

The annealing mechanism of QA differs from that of SA. However, the final ground state is defined only by the first term on the r.h.s. of Eq. (5). It is straightforward to obtain the first term from the QUBO formulation in Eq. (2). Hence, practical QA machines, such as the D-Wave Advantage, accept the QUBO formulation as the input.

The main topic of this paper is the discretization of continuous variables. Although the annealing mechanisms vary between SA and QA, we can use the following discussions with the QUBO formulation for both SA and QA machines. Because of the simpler notations, only the classical cases, i.e., SA cases, are considered in the following sections; we do not consider any quantum effects.

III Linear Regression and QUBO Formulation

III.1 Linear regression problems

Linear regression is one of the fundamental methods in data analysis with which we seek the relationship between a response variable and explanatory variables. Although matrix operations are sufficient to solve simple linear regression problems, further computational efforts are necessary in large-scale cases or when there are regularization terms. Hence, the use of an annealing machine is desirable. With these problems in mind, Date and Potok discussed the implementation and increasing the speed of simple linear regression problems using an annealing machine [15]. Linear regression problems also yield an example to investigate discretization methods of continuous variables. We briefly review Date and Potok’s discussion.

A linear regression model is defined as

| (6) |

where is the -th parameter, and is the -th input variable. They are summarized in the following forms:

| (7) | ||||

| (8) |

where the first component in is a dummy variable; the dummy variable is convenient for the vector expression of Eq. (6) as follows:

| (9) |

In linear regression problems, we seek the model parameters in Eq. (6) that are suitable for our obtained data. Let be a set of training data, where is a -dimensional input vector, and is an output for -th data. We introduce an output vector and matrix defined as

| (10) |

Then, a conventional linear regression problem has the following cost function:

| (11) |

In Eq. , is meaningless in optimizing . Hence, Eq. comes down to the following problem:

| (12) |

Of course, it is possible to find the minimum for Eq. (12) via simple matrix operations. However, the matrix operations include a matrix inversion with a high computational cost. Annealing machines directly solve the optimization problem in the form of Eq. (12).

III.2 QUBO formulation for linear regression

As denoted in Sec. I, Date and Potok showed that the QUBO formulation and the annealing machines enable faster computation than conventional classical computers even in simple linear regression problems without any regularization term [15]. We now explain the QUBO formulation for simple linear regression problems.

First, we introduce as a basis vector. For later use, the components in are in ascending order of the absolute value. An example of this vector is .

Second, we introduce a binary matrix . Then, the discretization of the linear regression weight is

| (13) |

which indicates that the binary variable acts like a flag to determine whether the basis is used for the expression. For the above example of , we can express the values with . Note that there may not be a one-to-one correspondence between and ; we can use redundant expressions for original variables. Although the redundant basis could yield good annealing performance, this point is not our main research topic.

Third, let denote the flattened one-dimensional vector derived from the binary matrix . Then, we rewrite Eq. (13) in a matrix form as follows:

| (14) |

where is the basis matrix derived from the Kronecker product of the -dimensional unit matrix and . An example of the matrix is given later.

IV Proposed Efficient Correlation-based Discretization Method

IV.1 Problems with naive method

As mentioned in Sec. III.2, the size of the binary vector is because we assign binary variables to each continuous variable with the naive discretization method. However, for large-scale problems, this method is likely to exceed the size limit of the annealing machine. How can we reduce the number of binary variables within the size limit of the annealing machine?

The simplest method is to reduce the components of the basis assigned in the binary expansion. However, this discretization reduces the precision of the original continuous variables and the ranges that the variables can represent.

One may employ the discretization method for each continuous variable. The flexible discretization may yield good performance. However, we cannot determine the required expression for each original continuous variable before solving the problem.

It is also possible to choose randomly continuous variables with reduced bases; this randomized method might yield a good accuracy on average. However, it could degrade the accuracy of a realization.

In summary, the reduction in the basis degrades the precision of each original continuous variable and yields narrow ranges. Hence, this reduction could lead to poor prediction accuracy for the linear regression.

IV.2 Basic idea of proposed method

To avoid the degradation in precision and narrower ranges, we exploit the same binary variables for discretization. For example, expresses and as

When we consider two large negative values, will commonly be used. Hence, the coefficient of is always . If two variables are strongly correlated, the coefficients of for them will be the same. That is, the change in the coefficients of largely affects these two variables, which indicates the strong correlation between them. Hence, we can use the same binary variable for in this example.

Note that using the same binary variable does not degrade the precision of approximation, and the range is unchanged. These are different from the naive methods in Sec. IV.1. Note that we must find pairs of continuous variables with strong correlations. Hence, we need a preliminary evaluation of correlations in the original continuous variables. Although this evaluation takes some computational effort, only rough estimations for the correlations are sufficient; the computational cost is not high.

The basic idea of the proposed method is summarized as follows: evaluate correlations, find strongly correlated pairs, and reduce redundant binary variables. In the following sections, we first explain how to reduce the number of binary variables and then give the entire procedure to derive the QUBO formulation.

IV.3 Choice of basis matrix

As reviewed in Sec. III.2, continuous parameters are discretized using Eq. (14). For example, we here consider , , . Then, Eq. (14) yields the following discretization:

| (16) |

That is, bits are assigned to each of the continuous variables and , and the total number of binary variables is .

We assume that the continuous variables and are strongly correlated. We then modify matrix by considering the strong correlation between the continuous variables. In accordance with the modification, the size of is also reduced. The result of the modification is as follows:

| (17) |

Note that the redundant binary variable is deleted by sharing the binary variable with and . If further reduction is necessary, we can use the following expression:

| (18) |

In summary, we seek a reduced matrix and the corresponding vector for the binary variables with the following form:

| (19) |

Note that the binary variable with a large absolute value is shared first. As mentioned in Sec. III.2, is in ascending order. Therefore, the order of sharing is . For a linear regression problem, there is a change in the optimization variables, and Eq. (15) is modified as

| (20) |

Note that the cost function is still in the QUBO formulation.

IV.4 Procedure to obtain reduced QUBO formulation

To reduce the redundant binary variables, we should find strongly correlated pairs in advance. Hence, we carry out a short sampling procedure for the original problem with the continuous variables with a conventional computer. We then choose correlated pairs that share the same binary variables. We determine the correlated pairs using the correlation matrix with a certain threshold.

Note that the threshold is determined by how many pairs we want to make; the limitation of the number of spins in the annealing machines affects this.

The procedure is summarized as follows:

-

(i)

Sample short-time series data of continuous variables using Monte Carlo methods.

-

(ii)

Calculate the correlations between continuous variables from the time series data.

-

(iii)

Define a basis matrix and binary vector using the information on the correlations. That is, reduce matrix and for the variable pairs whose correlation values exceed a certain threshold.

-

(iv)

Derive the QUBO formulation by using matrix and the reduced .

We give some comments on this procedure.

First, we need additional samplings in step (i). If the sampling step takes a long time, this procedure could not be practical even if the optimization is fast in annealing machines. However, we do not need highly accurate information on the correlations; only a rough estimation is sufficient for the pairing in step (ii). Hence, the short-time sampling is sufficient, and its computational cost is low.

Second, one may obtain the correlations analytically in some cases, for example, in Gaussian random Markov fields. In such cases, we can skip the Monte Carlo samplings in step (i).

Third, the number of reduced binary variables depends on the threshold with which we define the strongly correlated pairs. As stated above, we simply set the threshold in accordance with how much we want to reduce the number of variables.

V Numerical Experiment

As mentioned above, continuous variables with strong correlations tend to have the same value. Hence, the proposed method reduces the number of binary variables without a significant loss in prediction accuracy.

To verify the performance of the proposed method, we applied it to a linear regression problem. We calculate the mean absolute errors by generating artificial data. We compared the performance of the proposed method with that of the random reduction methods.

V.1 Artificial data generation

We use the following linear function to generate artificial data:

| (21) |

Hence, the number of parameters is . First, we generate from a uniform distribution and we set it as the -th input data . Observation noise is then sampled from the standard normal distribution. By adding to the true output as

| (22) |

we have the -th data .

We repeat this process times and split the generated data into two sets, i.e., training and test. The size of the training data set is , and the remaining data pairs are used as the test data set. Since the problem is not difficult to solve, we use a small amount of the training data.

V.2 Samplings and evaluating the correlations

Once we fix the training data, the cost function in Eq. (12) is determined. As the preparation step, we then obtain short-time series data from the Monte Carlo sampling for the linear regression cost function in Eq. (12), which has the original continuous variables. Samples are generated from the Gibbs-Boltzmann distribution with the aid of the Metropolis rule. We generate the candidates for the Metropolis rule by adding a random variable generated from to a randomly selected parameter. The sampling interval is steps, and the length of the time-series data is . The sampling temperature is , although we confirmed that the other temperatures are also suitable to obtain a rough estimation of the correlations.

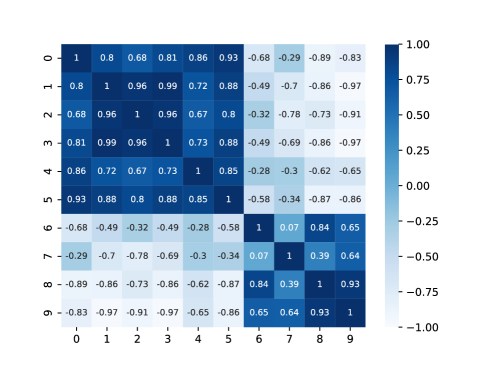

Figure 1 shows an example of correlation matrices. There are several correlated pairs. The highest correlated one is . Although and are candidates, we do not use them because and appear in the pair . We then select the next pairs and . We set the threshold to . Hence, we reduced the binary variables from these three pairs, i.e., , , and for the case in Fig. 1.

V.3 Creating basis matrix and binary vector

Although we do not know detailed information about parameters in advance, it is necessary to define a basis vector in Eq. (13). Since our aim was to show the effectiveness of the proposed method, we choose the basis vector as follows:

| (23) |

Hence, in Eq. (13). Note that the choice of the basis vector is sufficient to represent the target function in Eq. (21). The yields the basis matrix via .

Next, we redefine matrix with the aid of the above-mentioned highly correlated pairs. For the selected pairs, we share certain binary variables, as exemplified in Sec. IV.3; the order is from highest absolute value to lowest. In the experiment, we changed the number of shared binary variables to each pair and investigated the decrease in the prediction accuracy.

Finally, we redefine in accordance with the redefined matrix . We then obtain the final QUBO formulation in Eq. (20) for the annealing machines.

V.4 Annealing procedure

We use a simple SA algorithm to find optimal solutions of the derived QUBO formulation. The SA simulations ran on a MacBookAir with Inter Core i5. In SA, the temperature schedule was as follows:

| (24) |

where is the temperature at the -th iteration, is the initial temperature, and is the decay rate. One iteration is defined as updates in accordance with the conventional Metropolis rule. After the iterations, we output the state that minimizes the QUBO formulation during the search procedures. Table 1 shows the SA parameters.

The obtained leads to the final estimation for the continuous variables via Eq. (19).

| Number of iterations | |

|---|---|

| Initial temperature | |

| Decay rate for temperature scheduling |

V.5 Numerical results

V.5.1 Performance in prediction accuracy

First, we investigated how the number of reductions affects the prediction accuracy. We used another reduction method for comparison, with which we randomly select the same number of pairs of continuous variables. We conducted -fold cross-validation and evaluated the mean absolute errors (MAEs) and the standard deviations. Note that the correlation matrix and selected pairs vary with the training data set. Hence, the number of selected pairs varied with each training data set.

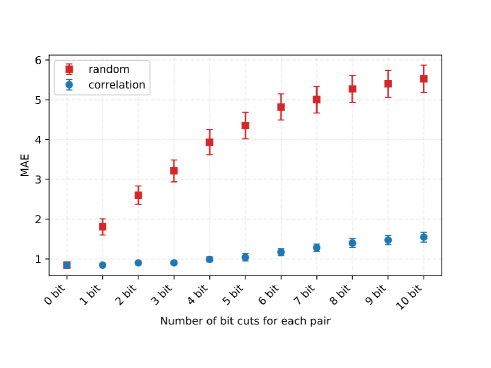

Figure 2 shows the numerical results. The horizontal axis represents the number of reduced binary variables for each pair; for example, ‘2bit cut’ indicates that a selected pair of continuous variables share two binary variables. Note that the total number of reduced binary variables was generally larger than the value of the horizontal axis because we selected several pairs of variables. The filled squares show the errors with the reduction method using randomly selected pairs. The filled circles correspond to those with the proposed method. The error bars indicate the standard deviations.

Since we added the noise with the standard normal distribution, the minimum value of MAE was about . Figure 2 shows that the random reduction method performed worse due to increasing the number of shared bits. Even the -bit cut resulted in a sudden decrease in the prediction accuracy.

We see a moderate increase in the MAE for the proposed, indicating its validity. Even the -bit cut case yielded better accuracy than the -bit cut case with the random reduction method, although this extreme case is not practical.

Therefore, it is possible to reduce several shared binary variables without significant degradation in prediction accuracy.

V.5.2 Performance in variable reduction and computational time

| Number of reduced binary variables per pair | Total number of binary variables (average for trials) | Computational time [sec] (average for trials) |

|---|---|---|

Table 2 shows the number of binary variables and computational time; the values in the table are the average for ten trials in the cross-validation procedure. The first row in the table corresponds to the naive application of the QUBO formulation without any reduction.

We see that the reduction with variables per pair yielded about a 20% reduction in the total number of variables. Even in the -bit cut case, the prediction accuracy did not degrade much, as we see in Fig. 2. As stated in Sec. I, the limitation of available variables is severe with annealing machines. From a practical viewpoint, the proposed method will be crucial to embedding the QUBO formulation into the annealing machines. The reduction in variables also contributed to rapid computation, as shown in Table 2.

VI Conclusion

We proposed an efficient correlation-based discretization method for annealing machines. The numerical experiment showed that it is possible to reduce the number of binary variables without a large loss in prediction accuracy. Recall that there are the following two constraints with annealing machines:

-

1.

We need the QUBO formulation as the input; i.e., only binary variables are acceptable.

-

2.

There is a severe limitation on the number of binary variables; the number of binary variables for annealing machines is not large.

Compared with naive methods used in previous studies, the proposed method adequately addresses these two constraints. Note that we can flexibly increase or decrease the total number of binary variables by changing the correlation threshold. When one wants to use annealing machines for large-scale problems, the proposed method could be of great help in practice.

This study was the first attempt at a correlation-based reduction idea. Although the proposed method was verified, further studies are needed with other examples and more practical ideas. There are remaining issues. First, the proposed method handles only correlated pairs. There are various other ways to combine correlated variables. We will investigate which type of combination yields good performance. Second, we assumed the fully connecting type annealing machines. Some annealing machines are loosely coupled, such as the D-Wave Advantage and CMOS Annealing Machine. We need another procedure to embed the derived QUBO formulation into such machines.

References

- [1] C. McGeoch and P. Farré, D-Wave Tech. Report Series 14-1049A-A (2020).

- [2] M. Aramon, G. Rosenberg, E. Valiante, T. Miyazawa, H. Tamura, and H. G. Katzgraber, Front. Phys. 7, 48 (2019).

- [3] T. Takemoto, K. Yamamoto, C. Yoshimura, M. Hayashi, M. Tada, H. Saito, M. Mashimo, and M. Yamaoka, IEEE Int. Solid-State Circ. Conf. 64, 64 (2021).

- [4] A. Lucas, Front. Phys. 2, 2 (2014).

- [5] V. S. Denchev, N. Ding, S. V. N. Vishwanathan, and H. Neven, Proc. 29th Int. Conf. Mach. Learn., 1003 (2012).

- [6] S. Izawa, K. Kitai, S. Tanaka, R. Tamura, and K. Tsuda, Phys. Rev. Res. 4, 023062 (2022).

- [7] A. Davis and D. Prasanna, Quantum Inf. Process. 20, 294 (2021).

- [8] G. Rosenberg, P. Haghnegahdar, P. Goddard, P. Carr, K. Wu, and M. L. de Prado, IEEE J. Selected Topics in Signal Process. 10, 1053 (2016).

- [9] D. Snelling, E. Devereux, N. Payne, M. Nuckley, G. Viavattene, M. Ceriotti, S. Workes, G. di Mauro, and H. Brettle, Proc. 8th Eur. Conf. Space Debris (online) (2021).

- [10] M. Ohzeki, A. Miki, M. J. Miyama, and M. Terabe, Front. Comput. Sci. 1, 9 (2019).

- [11] F. Neukart, G. Compostella, C. Seidel, D. von Dollen, S. Yarkoni, and B. Parney, Front. ICT 4, 29 (2017).

- [12] D. P. Nazareth and J. Spaans, Phys. Med. Biol. 60, 4137 (2015).

- [13] N. Nishimura, K. Tanahashi, K. Suganuma, M. J. Miyama, and M. Ohzeki, Front. Comput. Sci. 1, 2 (2019).

- [14] K. Tamura, T. Shirai, H. Katsura, S. Tanaka, and N. Togawa, IEEE Access 9, 81032 (2021).

- [15] P. Date and T. Potok, Sci. Rep. 11, 21905 (2021).

- [16] D. Willsch, M. Willsch, H. de Raedt, and K. Michielsen, Comput. Phys. Commun. 248, 107006 (2020).

- [17] S. Geman and D. Geman, IEEE Trans. Pattern Anal. Mach. Intell. PAMI-6, 721 (1984).

- [18] T. Kadowaki and H. Nishimori, Phys. Rev. E 58, 5355 (1998).

- [19] S. Suzuki and M. Okada, J. Phys. Soc. Jpn. 74, 1649 (2005).

- [20] E. Farhi, J. Goldstone, S. Gutman, J. Lapan, A. Lundgren, and D. Preda, Science 292, 472 (2001).