Efficient Estimation of Sensor Biases for the 3-Dimensional Asynchronous Multi-Sensor System

Abstract

An important preliminary procedure in multi-sensor data fusion is sensor registration, and the key step in this procedure is to estimate sensor biases from their noisy measurements. There are generally two difficulties in this bias estimation problem: one is the unknown target states which serve as the nuisance variables in the estimation problem, and the other is the highly nonlinear coordinate transformation between the local and global coordinate systems of the sensors. In this paper, we focus on the 3-dimensional asynchronous multi-sensor scenario and propose a weighted nonlinear least squares (NLS) formulation by assuming that there is a target moving with a nearly constant velocity. We propose two possible choices of the weighting matrix in the NLS formulation, which correspond to classical and weighted NLS estimation and maximum likelihood (ML) estimation, respectively. To address the intrinsic nonlinearity, we propose a block coordinate descent (BCD) algorithm for solving the formulated problem, which alternately updates different kinds of bias estimates. Specifically, the proposed BCD algorithm involves solving linear LS problems and nonconvex quadratically constrained quadratic program (QCQP) problems with special structures. Instead of adopting the semidefinite relaxation technique, we develop a much more computationally efficient algorithm based on the alternating direction method of multipliers (ADMM) to solve the nonconvex QCQP subproblems. The convergence of the ADMM to the global solution of the QCQP subproblems is established under mild conditions. The effectiveness and efficiency of the proposed BCD algorithm are demonstrated via numerical simulations.

Index Terms:

Alternating direction methods of multipliers, block coordinate decent algorithm, nonlinear least square, sensor registration problemI Introduction

In the past decades, multi-sensor data fusion has attracted a lot of research interests in various applications, e.g., tracking system [2, 3]. Compared with the single sensor system, the performance can be significantly improved by integrating inexpensive stand-alone sensors into an integrated multi-sensor system, which is also a cost-effective way in practice. However, the success of data fusion not only depends on the data fusion algorithms but also relies on an important calibration process called sensor registration. The sensor registration process refers to expressing each sensor’s local data in a common reference frame, by removing the biases caused by the improper alignment of each sensor [4]. Consequently, the key step in the sensor registration process is to estimate the sensor biases.

I-A Related Works

An early work [4] for sensor registration dates back to the 1980s, where the authors identified various factors which dominate the alignment errors in the multi-sensor system and established a bias model to compensate the alignment errors. Under the assumption that there exists a bias-free sensor, the maximum likelihood (ML) estimation approach [4, 5, 6] and the least squares (LS) estimation approach [7] were proposed. However, the bias-free sensor assumption is not practical and a more common situation in practice is that all sensors contain biases. For the general situation where all sensors are biased, various approaches have been proposed in the last four decades. From the perspective of parameter estimation, these approaches can be divided into three types: LS estimation [8, 9], ML estimation [10, 11, 6], Bayesian estimation [12, 13, 14, 15, 16, 17, 18, 19, 20, 21]. Note that the sensor registration problem not only contains unknown sensor biases to be estimated, but also the target states, i.e., positions of the target at different time instances. The major differences among the above three types of approaches lie in the treatment for the unknown target states. For the LS approach, the target states are expressed as nonlinear functions of sensor biases in a common coordinate system, where the target states are eliminated and only sensor biases need to be estimated. As for the ML approach, a joint ML function for the sensor biases and the target states is formulated and maximized in an iterative manner (with two steps per iteration), i.e., one step to estimate the sensor biases and one step to estimate the target’s states. Instead of jointly estimating the sensor biases and the target states as in the ML approach, the (recursive) Bayesian approach exploits the Markov property of the transition of the target states between different time instances, i.e., the probability distribution of the target state at the next time instance only depends on the target state at the current time instance. The sensor biases and the target states are recursively estimated at every time instance.

Since sensor measurements are in its local polar coordinate system, the nonlinear coordinate transformation between the local polar and global Cartesian coordinates introduces an intrinsic nonlinearity in the sensor registration problem. The approaches mentioned above adopted different kinds of approximation schemes to deal with the nonlinearity, i.e., using linear expansions to approximate some nonlinear functions [8, 5, 9, 7, 4, 10, 12, 11, 6, 13, 14, 15, 16, 17] which usually leads to an uncontrolled model mismatch or using a large number of discrete samples (particles) to approximate some nonlinear functions which often requires an expensive computational cost when the number of sensors is large [18, 20, 21]. In addition to the schemes of dealing with the nonlinearity, many of the above works only consider the synchronous case, i.e., all sensors simultaneously measure the position of the target at the same time instance, which may not be always satisfied in many practical scenarios.

Recent works in [22, 23] proposed a semidefinite relaxation (SDR) based block coordinate descent (BCD) optimization approach to address the nonlinearity issue in the asynchronous sensor registration problem in the 2-dimensional scenario. The approach assumes the presence of one reference target moving at a nearly constant velocity (e.g., commercial planes and drones in the real world) and is effective in handling the nonlinearity while also guaranteeing an exact recovery of all sensor biases in the noiseless case. Consequently, it is interesting to explore such BCD algorithm in the 3-dimensional scenario, where more kinds of biases are involved and the estimation problem becomes even more highly nonlinear, i.e., more trigonometric functions with respect to different biases are multiplied together in the coordinate transformation. Furthermore, the BCD algorithm in [22, 23] needs to solve convex semidefinite programing (SDP) subproblems at each iteration, whose computational cost is (where is the total number of sensors). Such a high computational complexity limits the practical use of the BCD algorithm though it can well deal with the nonlinearity issue. An interesting question is, instead of solving SDPs, whether there is an algorithm which has a lower computational complexity and can solve the original nonconvex problem with a guaranteed convergence. This paper provides a positive answer to the above question.

I-B Our Contributions

In this paper, we consider the 3-dimensional sensor registration problem, where more kinds of biases and noises are involved. Few works tackle the 3-dimensional registration problem except some notable works [12, 11, 7, 6, 1]. However, all of these works assume that sensors work synchronously. In this paper, we consider a practical scenario where all sensors are biased and work asynchronously. The major contributions are summarized as below:

3-dimensional asynchronous sensor registration: We consider the sensor registration problem in a general scenario, where sensors work asynchronously in 3-dimension and all sensors are biased. Such a scenario is of great practical interest but has not been well studied yet. Compared with most of existing researches which consider the 2-dimensional scenario, there are more kinds of sensor biases in the 3-dimensional scenario, i.e., polar measurement biases and orientation angle biases, which increases the intrinsic nonlinearity in the bias estimation problem.

A weighted nonlinear least squares formulation: By exploiting the prior knowledge that the target moves with a nearly constant velocity, we propose a weighted nonlinear LS formulation for the 3-dimensional asynchronous multi-sensor registration problem, which takes different practical uncertainties into consideration, i.e., noise in sensor measurements and dynamic maneuverability in the target motion. The proposed formulation incorporates the covariance information of different practical uncertainties by appropriately choosing the weight matrix, which enables it to efficiently handle situations with different uncertainties.

A computationally efficient BCD algorithm: We exploit the special structure of the proposed weighted nonlinear LS formulation and develop a computationally efficient BCD algorithm for solving it. The proposed algorithm alternately updates different kinds of biases by solving linear LS problems and nonconvex quadratically constrained quadratic program (QCQP) problems. Compared with the previous work [1] which utilizes the SDR-based technique for solving the nonconvex QCQP subproblems with a computational complexity of , we develop a low computational cost algorithm based on the alternating direction method of multipliers (ADMM) for solving those QCQP subproblems with a global optimality guarantee (under mild conditions), which only requires a computational complexity of .

I-C Organization and Notations

The organization of this paper is as follows. In Section II, we introduce basic models of sensor measurements and the target motion. In Section III, we propose a nonlinear LS formulation for estimating all kinds of biases. To effectively solve the proposed nonlinear LS formulation, we develop a BCD algorithm in Section IV. Numerical simulation results are presented in Section V.

We adopt the following notations in this paper. Normal case letters, lower case letters in bold, and upper case letters in bold represent scalar numbers, vectors, and matrices, respectively. and represent the transpose of matrix and the inverse of invertible matrix , respectively. represents the matrix formed by elements of matrix from rows to and columns to . denotes the -th component of and denotes the Euclidean norm of . is the expectation operator with respect to random variable . represents the set of -dimensional real vectors. Other notions will be explained when they appear for the first time.

II Sensor Measurement Model

Consider a 3-dimensional multi-sensor system with () sensors located at different known positions . Assume there is a reference target moving with a nearly constant velocity in the space111Such a reference target can be selected from the branches of civilian airplanes. and sensors measure the relative polar coordinates (i.e., range, azimuth, and elevation) at different time instances in an asynchronous mode, i.e., polar coordinates of the target at different time instances are measured by different sensors. After a time period in which a set of local measurements are collected by sensors, all sensors’ measurements are sent to a fusion center and compactly, all sensors’ measurements are sorted and mapped onto a time axis, indexed by time instance . Without loss of generality, we make the following (mild) regularity assumption.

Assumption 1.

Assume that only one sensor observes the target at each time instance and every sensor has at least one measurement in the whole observation interval. In addition, the total number of observations is greater than the number of sensors, i.e., .

At time instance , let the corresponding sensor be indexed by and be the position of the target in the global Cartesian coordinate system. Then this target’s position in sensor ’s local Cartesian coordinate system is denoted as , which can be expressed as a function with respect to given as:

| (1) |

where

| (2) | ||||

In the above, is a rotation matrix, which represents the coordinate rotation from the local to the global Cartesian coordinate systems, and parameters there are rotation angles corresponding to roll, pitch, and yaw, respectively. Parameter in (1) is the presumed rotation angles which is measured by sensor ’s orientation system and is the unknown rotation angle biases. Note that is due to the imperfection of the orientation system of sensor and we call them the orientation biases in this paper. Hence, the term represents the true rotation angles of sensor .

In the following, we will discuss another category of sensor biases arising from imperfections in the sensor measurement system. Define the Cartesian-to-polar coordinate transformation function as

where , , and are polar coordinates corresponding to range, azimuth, and elevation, respectively. Then based on (1) and the fact , the polar coordinates of measured by sensor is denoted as which can be expressed as

| (3) | ||||

In (3), is the measurement biases of sensor is the zero-mean Gaussian noise with

where , and are variances corresponding to range, azimuth, and elevation, respectively.

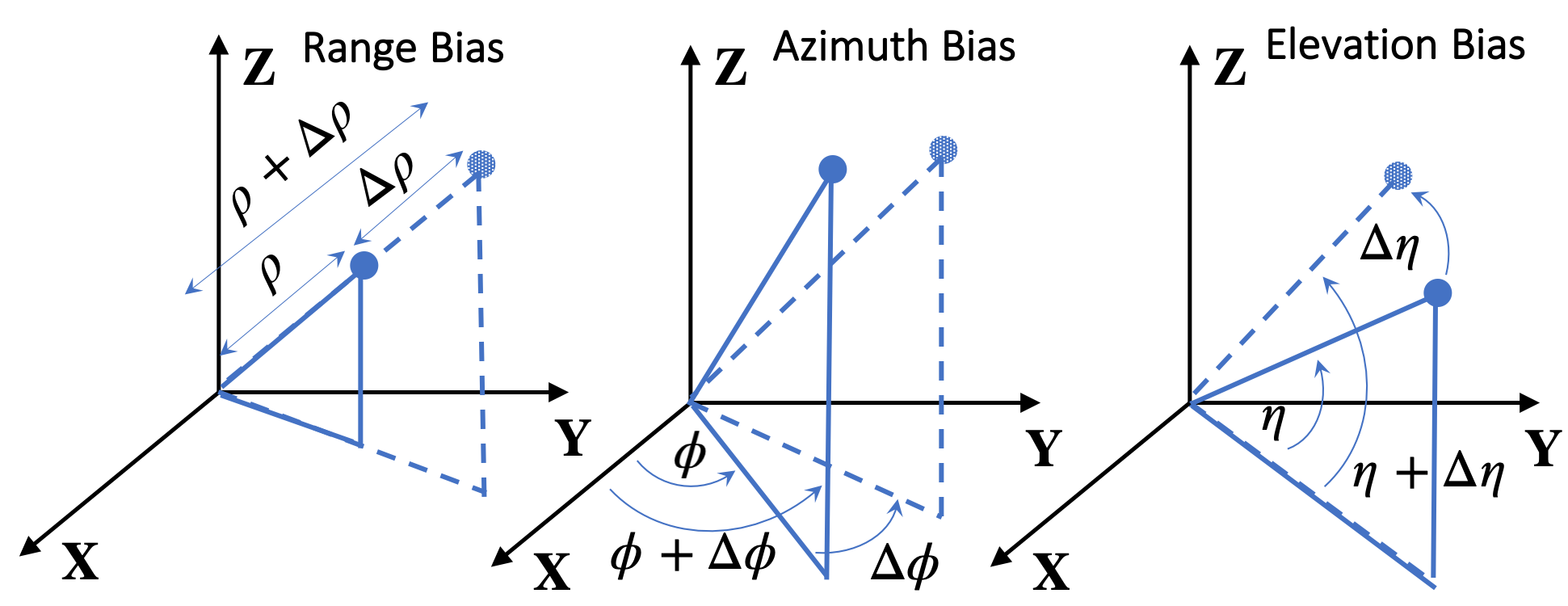

In view of the measurement model in (3), there are two types of biases, i.e., orientation biases and measurement biases . Both of them affect the expression for the global Cartesian coordinates . For each sensor, there are in total six biases, i.e., , and . Each of these biases has a different impact on and the geometric illustration of them is shown in Fig. 1. We note that there is an intrinsic ambiguity among these six kinds of biases, and this is formally stated in Proposition 1.

Proposition 1.

Let , then any pair satisfying gives the same in (3).

Proof.

See Appendix A. ∎

Proposition 1 states that biases and cannot be distinguished since they are intrinsically coupled in the measurement model (3). Only their sum affects the measurements and they can be treated as one kind of bias [7]. In the rest part of this paper, we fix and only consider estimating .

Consequently, the goal of the 3-dimensional multi-sensor registration problem is to determine sensor biases

from sensor measurements . It is worth noting that determining the sensor biases solely from (3) is a challenging task since the target position at time instance is unknown. Any combination of and can satisfy (3). However, by leveraging the fact that the target moves with nearly constant velocity, the registration problem can be addressed.

III A Weighted Nonlinear LS Formulation

In this section, we first introduce the unbiased spherical-to-Cartesian coordinate transformation to represent local noisy measurements in the common Cartesian coordinate system as well as the nearly-constant-velocity motion model for the target. Then, we propose a weighted nonlinear least squares formulation for estimating sensor biases.

According to the sensor measurement model in (3), the target position can be expressed by a nonlinear transformation with respect to and , given in Proposition 2.

Proposition 2 (Unbiased Coordinate Transformation [24]).

Given the measurement model in (3), and suppose take the value of the true bias. Then, we have

| (4) |

where is a zero-mean random noise and

is the unbiased polar-to-Cartesian transformation function with and being the compensation factors.

Note that in Proposition 2 compensates the effects of the Gaussian noise (in ) after the nonlinear mapping and can be viewed as a function of . The compensation factors ( and ) in ensures but is no longer a Gaussian noise due to the nonlinear mapping.

Next, assume that the reference target moves with a nearly constant velocity [25], i.e.,

| (5) | ||||

where and are the position and velocity of the target at time instance , respectively, is the interval time between time instances and , and and are the motion process noises for position and velocity at time instance , respectively. The process noises and obey the Gaussian distribution

| (6) |

In the above, is the value of the noise power spectral density of the target motion and denotes the Gaussian distribution with mean and covariance .

Combining Proposition 2 with the target motion model in (5), we can immediately connect the measurements at time instances and with each other, given in Proposition 3.

Proposition 3.

By Proposition 3, we can establish the following nonlinear equations for the sensor biases estimation problem:

| (7) | ||||

where is also a zero-mean random noise.

Equations in (7) is a combination of sensor measurement model (3) and target motion model (5). They can be regarded as the constructed measurement models for parameters and , where and are the zero-mean noise.

Based on (7), we propose to minimize the squares of and , which results in the following nonlinear least squares formulation for estimating sensor biases:

| (8) |

where denotes the weighted norm associated with the positive definite symmetric matrix , i.e., . Notice that the LS form in (8) is derived from the nearly constant velocity model (5), where the term represents the mismatch of the position of the target in the global coordinate system and the other term corresponds to the mismatch of the nearly constant velocity. The current LS formulation cannot be directly applied to handle reference targets with strong maneuverability, such as those performing a constant turn motion. However, the fundamental concept of linking measurements at different time instances using the maneuver motion model is still relevant and can be further explored for more practical scenarios.

The choices of the weight matrix in (8) could be either the identity matrix or the (approximate) covariance matrix of and . More specifically, with , problem (8) is a classical nonlinear LS problem based on the zero-mean property of noises and . Instead, let be the approximated covariance matrix with respect to given in [24], then problem (8) with

| (9) |

becomes a weighted NLS estimation. It is worth mentioning that two comprehensive works [26, 27] have provided different formulations for evaluating and to resolve the incompatibility issue in [24]. However, as the focus of this paper is to utilize optimization techniques to handle the nonlinearity in the bias estimation problem, we have chosen to use the basic version of the unbiased coordinate transformation [24]. Nevertheless, the optimization techniques developed in this paper are also applicable to other unbiased coordinate transformations.

The mathematical difficulty of solving problem (8) (no matter how to choose ) lies in its nonlinearity, as contains multiple products of trigonometric functions. In the next section, we will exploit the special structures of problem (8) and develop a BCD algorithm for solving it (with any positive definite matrix ).

IV A Block Coordinate Descent Algorithm

The proposed BCD algorithm iteratively minimizes the objective function with respect to one type of the biases with the others being fixed. The intuition of such decomposition is that different types of biases affect the measurements differently. By dividing the optimization variables into multiple blocks according to the type of bias, we can leverage the distinct mathematical effects of each type of biases on problem (8). In particular, each subproblem with respect to one type of biases has a compact form, which can be solved uniquely under mild conditions. This enables us to develop a computationally efficient algorithm.

Let , and similar to the other kinds of biases. The objective function in (8) can be expressed as , and the proposed BCD algorithm at iteration is

| (10a) | |||

| (10b) | |||

| (10c) | |||

| (10d) | |||

| (10e) | |||

| (10f) | |||

In the next, we derive the solutions to the above subproblems (10a)–(10f). For simplicity, we will omit the iteration index in the coming derivation.

IV-A LS Solution for Subproblems (10a) and (10b)

By (8), we have the following convex quadratic reformulation for subproblem (10a):

| (11) |

where is the coefficient matrix, is the constant vector, and is a positive definite matrix. Detailed expressions of them are given in Appendix C-A of the Supplementary Material. Setting the gradient to be zero, the closed-form solution to subproblem (10a) is

| (12) |

where denotes the pseudo-inverse operator.

The subproblem (10b) for updating also has a closed-form solution since its objective is also convex quadratic with respect to . Subproblem (10b) can be reformulated as

| (13) |

where is the coefficient matrix, and is the constant vector. Detailed expressions of them are given in Appendix C-B of the Supplementary Material. Similar to subproblem (10a), the closed-form solution of (10b) is

| (14) |

IV-B Solution for Subproblems (10c)–(10f)

Since in (8) is linear with respect to the trigonometric functions of the angle biases, all the four subproblems (10c)-(10f) can be reformulated into a same QCQP form. For better presentation, we use to denote one kind of these angle biases and the corresponding subproblem can be reformulated as

| (15) |

where

and set is defined as

In (15), is the coefficient matrix and is the constant vector. Detailed expressions of them are given in Appendix C-C of the Supplementary Material.

Problem (15) is a non-convex problem due to its non-convex equality constraints. In general, such class of problem is known to be NP-hard [28] and the SDR based approach [29, 30] was used to solve it. In particular, in [23], the authors utilized the SDR technique to solve a specific case of problem (15) where it can be reformulated as a complex QCQP, and proved that the SDR is tight, i.e., the global minimizer of the QCQP problem can be obtained by solving the SDR under a mild condition. This motivates us to ask whether problem (15) can also be globally solved and whether there are some efficient algorithms better than solving the SDR.

Note that the non-convex constraints in problem (15) enjoy an “easy-projection” property. In particular, let denote the projection of any non-zero point onto . Then admits the following closed-form solution:

where is a diagonal matrix defined as

and denotes the Kronecker product. Such “easy-projection” property together with recent results of the ADMM for nonconvex problems [31] provides us a potential way of efficiently solving problem (15) with a guaranteed convergence. The choice of the ADMM algorithm over other potential methods such as the gradient projection (GP) method is discussed in Remark 1. In the next, we will apply the ADMM for solving it and also show that the ADMM is able to globally solve problem (15) under mild conditions.

Remark 1 (ADMM versus GP).

Note that the GP method is a good option for solving problem (15), whose convergence to a stationary point can be guaranteed under the Kurdyka-Lojasiewicz framework [32]. In this paper, we choose the ADMM rather than the GP method for solving problem (15) is because the ADMM shows a more stable numerical behavior than the GP method and in particular the ADMM is more stable to the choice of the step size than the GP method. This is particularly important for solving our interested problem (8) as we need to solve subproblems in the form of (15) many times in the proposed BCD algorithm.

Based on such “easy-projection” property, we introduce an auxiliary variable to split problem (15), which makes all subproblems in the ADMM iterations admit closed-form solutions. In particular, subproblem (15) is reformulated as

| (16) |

where is the indicator function for set , given as

| (17) |

The augmented Lagrangian function for problem (16) is

| (18) |

where is the penalty parameter and is the Lagrange multiplier for the equality constraint . Then, the ADMM updates at iteration are

| (19a) | ||||

| (19b) | ||||

| (19c) | ||||

Note that subproblem (19a) is an unconstrained strongly convex quadratic problem and subproblem (19b) is actually a projection problem, both of which can be solved in closed forms. Details are given in Algorithm 1.

Next, we discuss the convergence property of Algorithm 1. Before doing this, we first study the structures of coefficients and in problem (15). Note that and can be viewed as random matrices/vectors and the randomness is caused by those zero-mean Gaussian noises, i.e., in (3) and in (5). To understand the effects of these noises, let us suppose that there is no noise, i.e., , for all , and all the other biases and velocity take the true values. Then by the same derivations in Appendix C of the Supplementary Material (with compensation factors ), we attain new and . Intuitively, and represent the “true” parts of and by removing measurement noise , motion process noises and , and errors of other biases and velocity. Consequentially, the “errors” in and can be defined as

| (20) |

Technically, decomposing and in (20) provides a natural way of characterizing the convergence behavior of Algorithm 1, given in Theorem 1.

Theorem 1.

Proof.

See Appendix B. ∎

The first statement of Theorem 1 is a direct result of [31, Corollary 2], which ensures the convergence of the ADMM for a class of the nonconvex problem (with a Lipschitiz continuous gradient) over a compact manifold. The second statement of Theorem 1 on the uniqueness of the stationary point is due to the special structure of problem (16). The entire sequence convergence in Theorem 1 is a combined result of the general convergence of the ADMM in [31, Corollary 2] and the special structure of problem (16).

IV-C Proposed BCD Algorithm

The proposed BCD algorithm for solving problem (8) is summarized as Algorithm 2. Three remarks on its convergence, computational complexity, and comparison with other BCD variants are given in order.

Convergence of Algorithm 2. The well-known convergence analysis of BCD [33, 34] shows that if all subproblems in the proposed BCD Algorithm 2 have unique solutions and can be globally solved, then the iterates can converge to a stationary point of the non-convex problem (8). The uniqueness and the global optimality of solutions (in (12)) for subproblem (10a) and (in (14)) for subproblem (10b) can be guaranteed if and are of full rank. For subproblems (10c)–(10f), the full rankness of together with sufficiently small and in (20) further ensure that each of the corresponding subproblems has a unique solution and can be numerically attained by the proposed ADMM Algorithm 1 (cf. Theorem 1). According to the definitions of , , and in Appendix C of the Supplementary Material and Assumption 1, we know that (i) in (11) is always of full rank since each sensor has at least one measurement; (ii) in (13) and in (15) are of full rank with probability one (whose proof is provided in Appendix D of the Supplementary Material). Based on the above discussion, we can conclude that Algorithm 2 can converge to a stationary point of the non-convex problem (8) if those and along the BCD iterates are all sufficiently small. Finally, we remark that this sufficient small condition provides a theoretical understanding for the convergence of Algorithm 2 and in general it depends on the initial point. Our numerical simulation results in Section V suggest that such a condition always holds in various scenarios by simply initializing all biases to be zeros. The results in the noiseless setting (Section V-A) even verify the global optimality of Algorithm 2.

Computational complexity of Algorithm 2. All subproblems in the proposed BCD updates (10a)–(10f) take two special forms, i.e., unconstrained linear LS problems (i.e., subproblems (11) and (13)) and quadratic programs with special non-convex constraints (i.e., subproblem (15)). It can be shown that the computational complexity of solving subproblem (11), (13), and (15) is , , and (where is the total iteration number of the ADMM), respectively. It is worthwhile mentioning that the computational complexity of solving (15) by using the ADMM, which is is generally much less than that of using the SDR technique, which is [29]. The computational time comparison in Table II (Section V-B2) demonstrates the much better computational efficiency of using the ADMM over the SDR based approach for solving subproblem (15).

BCD variants. Finally, we remark that there are several nice extensions of the BCD framework such as the block successive minimization method [35] and the maximum block improvement (MBI) method [36]. Both of them can be applied to solve problem (8) with guaranteed convergence (with a suitable approximation of the objective function). The sequential optimization version of MBI [36] can effectively handle nonconvex constraints. In this paper, we still use the basic version of BCD because the nonconvex BCD subproblems with respect to angle biases enjoy the unique solution property (cf. Theorem 1), which reveals an interesting insight into the considered bias estimation problem that the true bias can be recovered under the ideal case (i.e., and ). MBI also enjoys the same property of the uniqueness of the subproblems but it requires more computational cost than classical BCD. A parallel implementation of MBI can improve its computational efficiency but this is beyond the scope of this paper. Simulation results in Section V-A further show that all biases are exactly recovered by our proposed BCD Algorithm 2 in the noiseless case.

V Simulation Results

In this section, we present simulation results to illustrate the performance of using our proposed BCD Algorithm 2 to solve the proposed formulation (8).

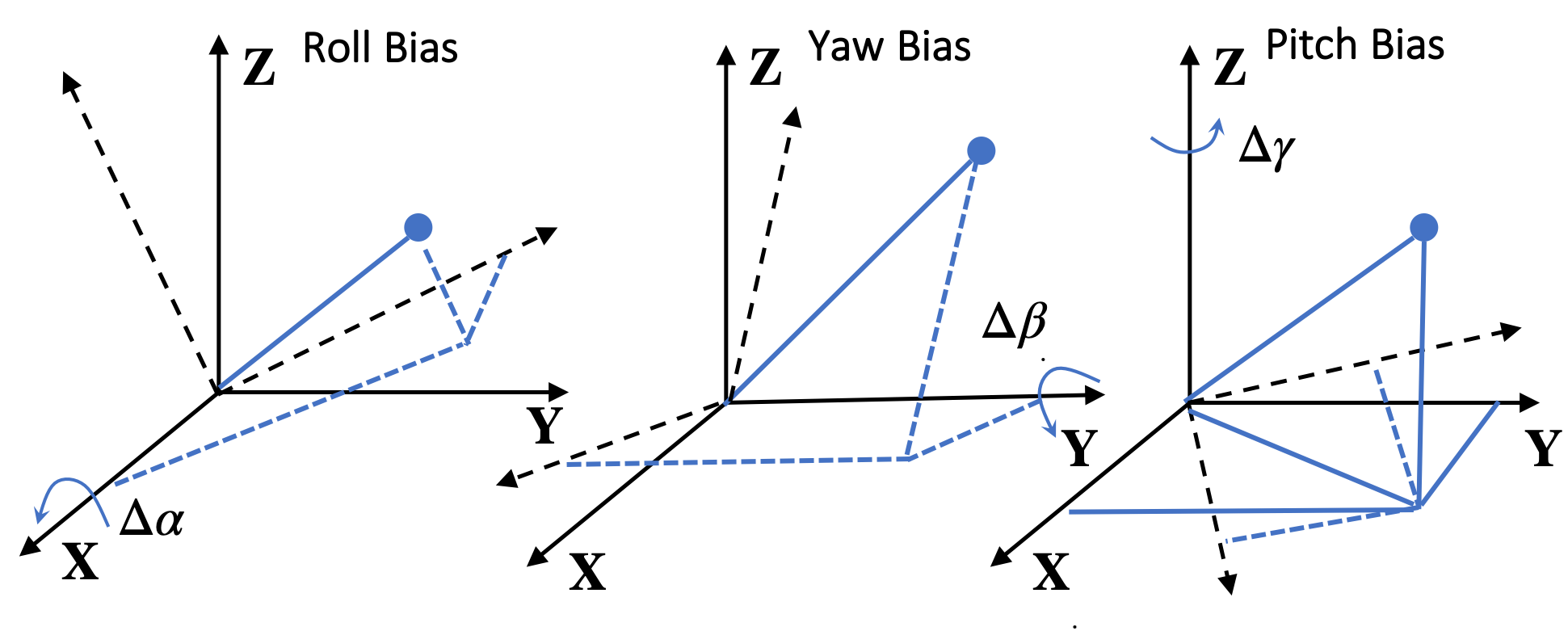

Scenario setting: We consider a scenario with four sensors and one target in the 3-dimensional space, as illustrated in Fig. 2. The locations and biases of each sensor are listed in Table I. The target moves with a nearly constant velocity km/s with the initial position being km. The presumed rotation angle is fixed at for all . The sensors work in an asynchronous mode that four sensors measure the target positions every s with different starting times, i.e., s, s, s, s, respectively. Moreover, each sensor sends stamped measurements to a fusion center for sensor registration and the total observation last s. All simulations are done on a laptop (Intel Core i7) with Matlab2018b.

Baseline approaches: We select three representative approaches in the literature and extend them to the considered 3-dimensional case where all sensors are biased and asynchronous. The first approach is the augmented state Kalman filtering (ASKF) approach [37] which treats sensor biases as the augmented states and uses the Kalman filter to jointly estimate sensor biases and target states. The second approach is the LS approach [7] which solves the proposed NLS formulation in (8) using the linear approximation (referred as linearized LS). The third approach is the ML approach [11, 6], which is originally proposed for the synchronous multi-sensor system [11] or for the scenario with one sensor being bias-free [6]. To extend it to the considered 3-dimensional case with a stable numerical behavior, we first estimate the target state by the smoothed Kalman filter (SKF) and then estimate sensor biases by the Gaussian-Newton method [33]. For simplicity, we refer this approach as SKF-GN.

Proposed algorithms’ parameter selection: In all of our following simulations, the parameters in the BCD and ADMM algorithms are selected as follows: the proposed BCD algorithm is initialized with all biases being zeros and the proposed ADMMs for solving subproblems (10c)–(10f) are also initialized with the corresponding angle biases being zeros; the penalty parameter in the ADMM is set to be the average of the diagonal elements of matrix in (15); the proposed BCD algorithm is terminated when the maximum difference between two consecutive BCD iterates is less than and the ADMM is terminated when both primal and dual residuals are less than .

| Position | ||||||

|---|---|---|---|---|---|---|

| Sensor 1 | -0.5 | -2 | -2 | 1 | -1 | |

| Sensor 2 | 0.3 | -2 | 2 | -1 | -1 | |

| Sensor 3 | -0.4 | -2 | 2 | -2 | 2 | |

| Sensor 4 | -0.2 | -1 | -2 | -1 | 1 |

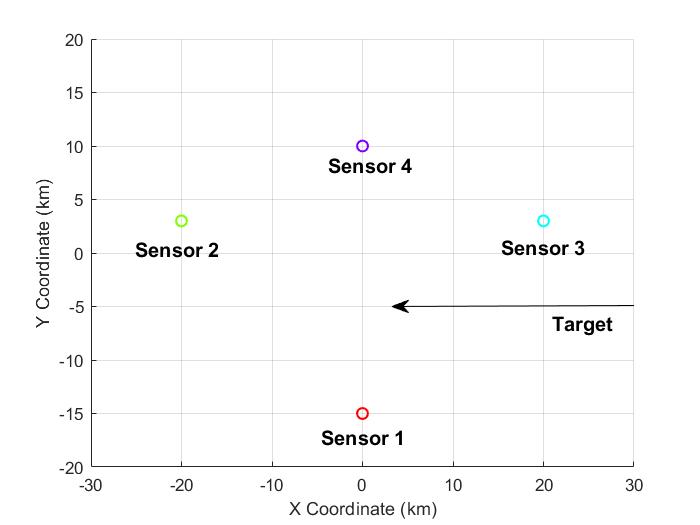

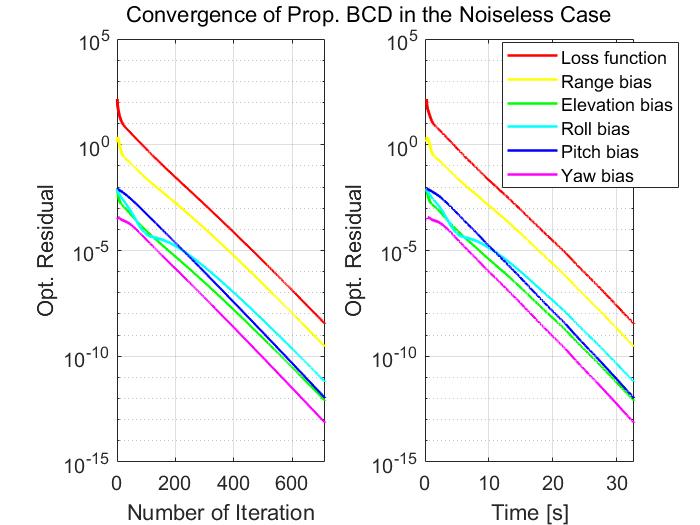

V-A Global Optimality of Proposed BCD in the Noiseless Case

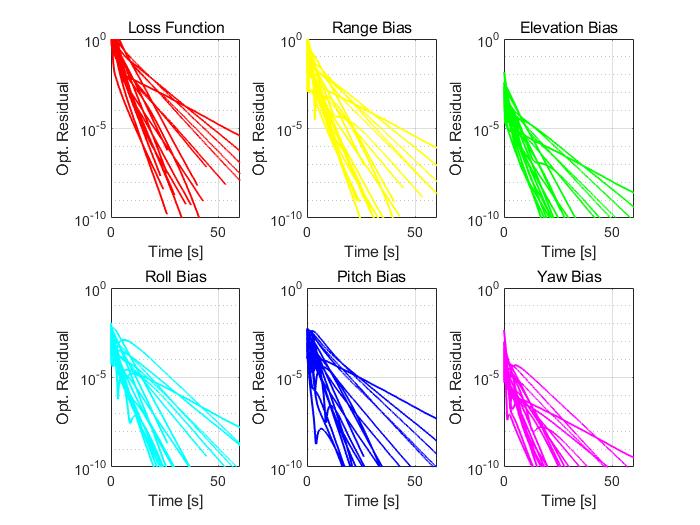

In this subsection, we test the convergence behavior of the proposed BCD for solving problem (8) with , . We consider the noiseless case with the measurement noise variance , and the target motion noise . In such a case, the true biases achieve a zero loss and hence they are a global solution of problem (8). Fig. 3 plots the optimization residuals over the number of iterations and the elapsed time. The optimization residuals for different kinds of biases are defined as the sum of the squared estimation error over all sensors, e.g., the optimization residual for the range bias is , where is the estimated range bias of sensor . The convergence curves in Fig. 3 demonstrate the global optimality of the proposed BCD algorithm in the noiseless case, i.e., the loss function converges to zero and the finally returned solution by the BCD algorithm is very close to the true biases. The similar convergence behavior has also been observed in many other scenarios. In particular, Fig. 4 shows the convergence curves of the proposed BCD algorithm in randomly generated scenarios222 The sensors’ positions are uniformly placed over the region km and sensors’ biases are generated from the Gaussian distribution, i.e., , and ..

V-B Estimation Performance in the Noisy Case

In this subsection, we evaluate the estimation performance of the proposed BCD algorithm in the noisy case. Note that the global optimality in the noisy case is generally hard to examine, as the global solution of the corresponding problem is unknown. However, our numerical results show that the solution found by the BCD algorithm achieves a pretty satisfactory estimation performance.

In the rest of simulations, we use the root mean square error (RMSE) as the estimation performance metric. The root of hybrid Cramer-Rao lower bound (RHCRLB) [38, 7] is used as the performance benchmark. The RMSEs and RHCRLBs in all of the following figures are obtained by averaging 100 independent Monte Carlo runs. We will compare the proposed BCD algorithm with the aforementioned three approaches under different setups.

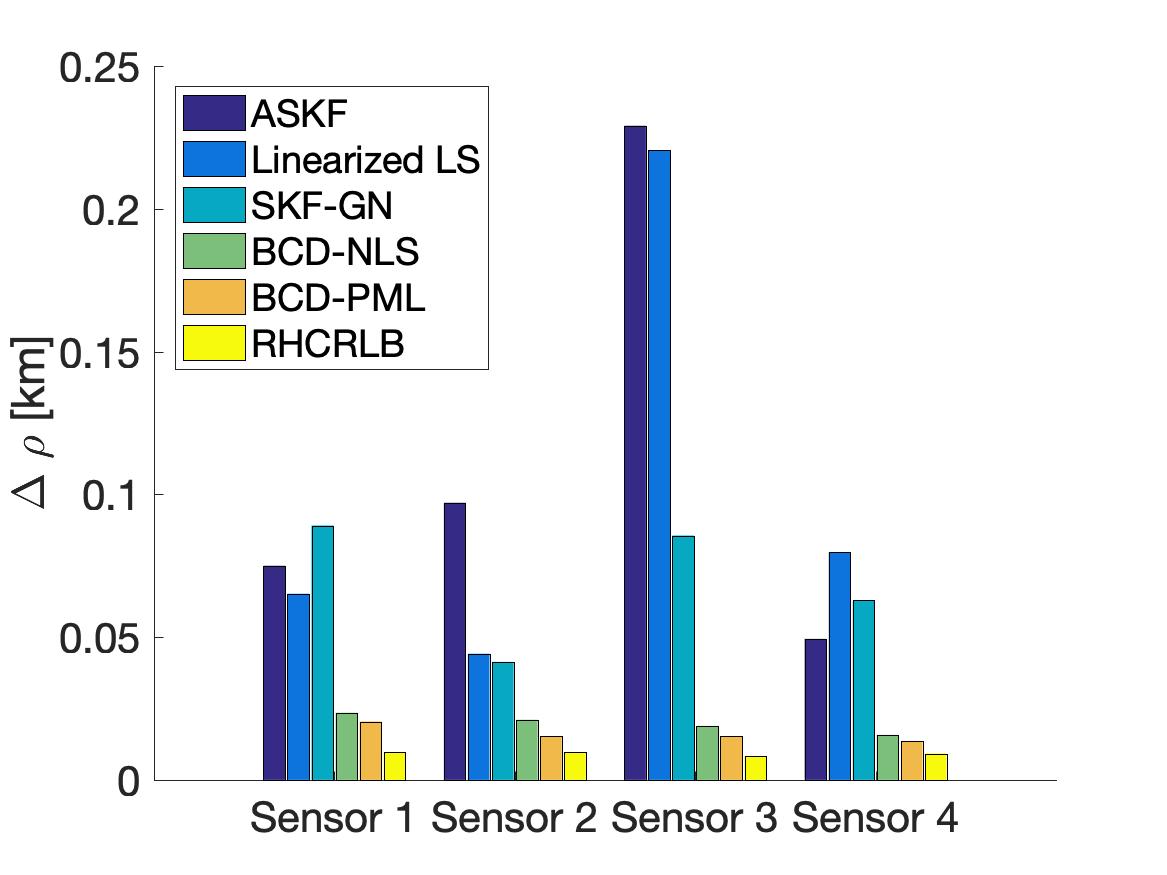

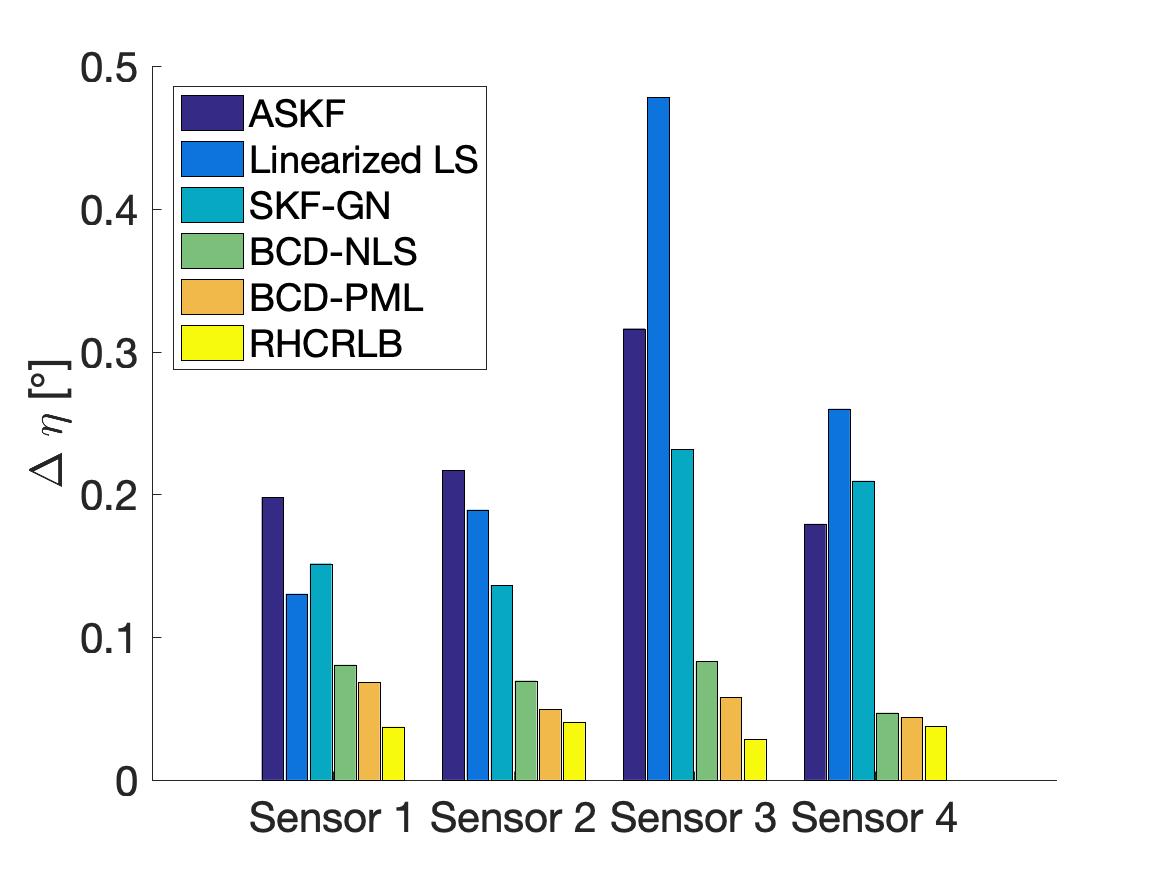

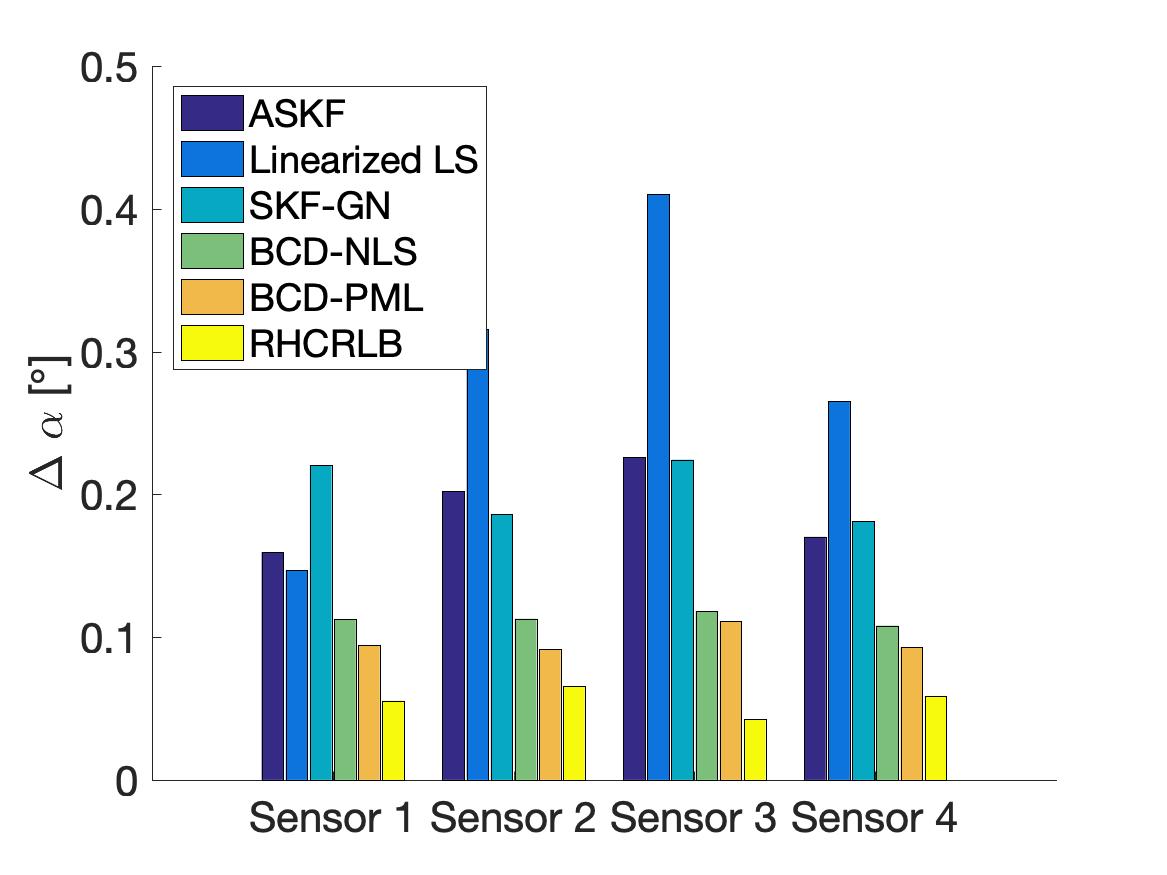

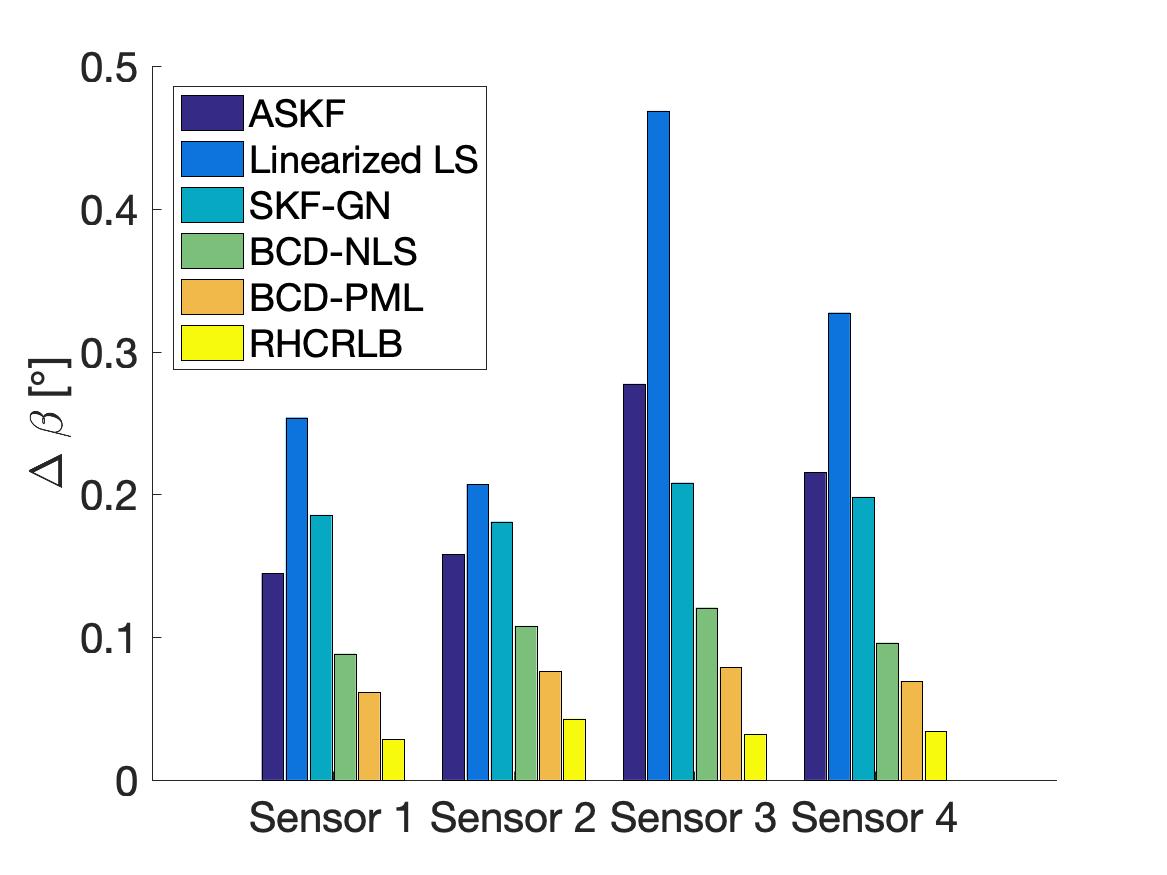

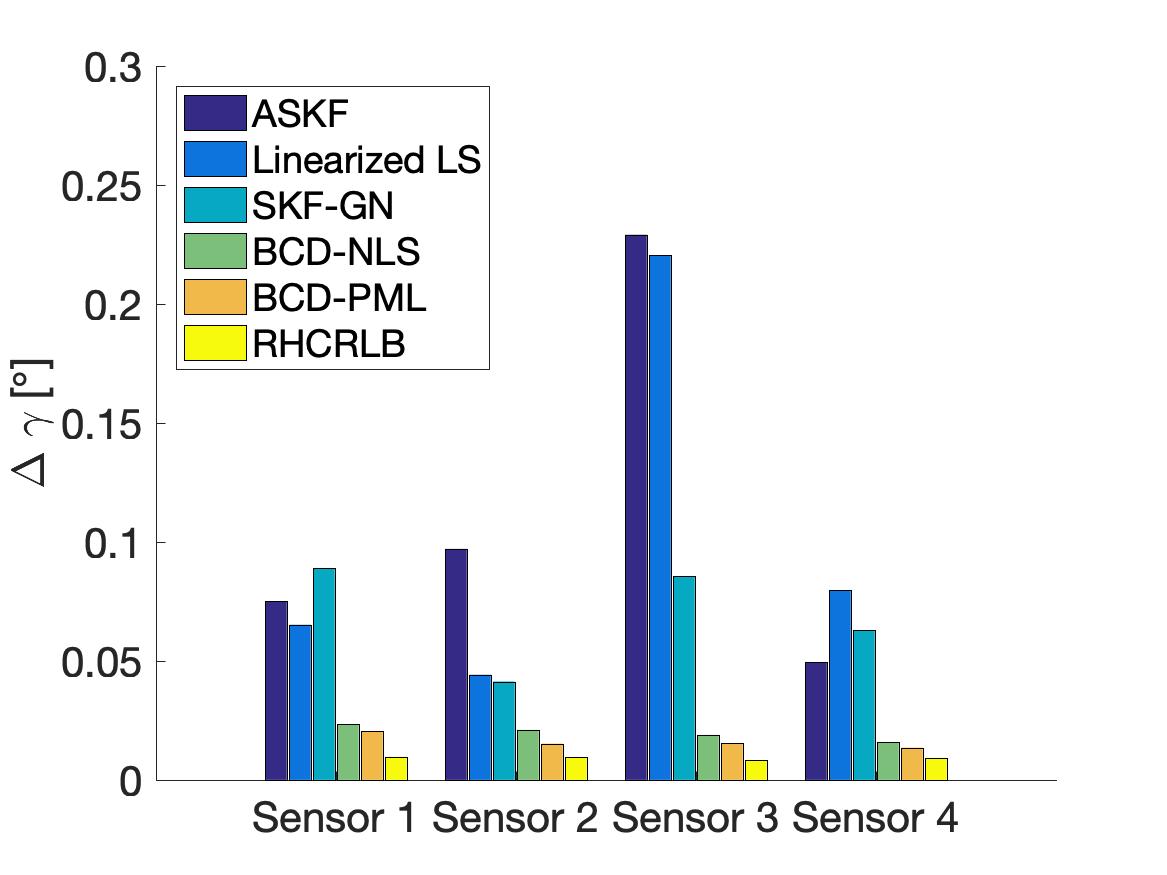

V-B1 Estimation Performance Comparison

In this part, we compare the estimation performance of different approaches with , , and . Two different choices of in the proposed formulation (8) are considered: one is which corresponds to classical nonlinear LS estimation (referred as BCD-NLS), and the other is in (9) which can be regarded as pseudo ML estimation (referred as BCD-PML). The RMSEs of the range bias of different sensors are shown in Fig. 5 and the RMSEs of different kinds of angle biases are shown in Fig. 6. We can observe that the two proposed approaches (i.e., BCD-NLS and BCD-PML) achieve much smaller RMSEs than the other three approaches. The main reason for this is that all the other three approaches utilize the linear approximation to deal with the nonlinearity, and such approximation usually results into a model mismatch which degrades the estimation performance. Notice that the only difference between Linearized LS and BCD-NLS is the algorithm used for solving problem (8) with . More specifically, Linearized LS utilizes the linear approximation which admits a closed-form solution for estimating biases while BCD-NLS uses the proposed BCD algorithm to deal with the nonlinearity. Clearly, BCD-NLS achieves smaller RMSE. Furthermore, by incorporating the second-order statistics, BCD-PML achieves a smaller RMSE than BCD-NLS, which demonstrates the effectiveness of the proposed choice of in (9).

V-B2 Computational Time Comparison

In this part, we compare the computational time of different approaches. The averaged computational time of different approaches is summarized in Table II. Notice that the SDR technique in [23] can be extended to deal with the nonlinearity in the 3-dimensional scenario333Under mild conditions, the SDR technique gives the same solution of problem (15) obtained by the proposed ADMM. In particular, the proof in Appendix B can be modified to show that the SDR admits a unique rank-one solution if and in (15) are sufficiently small. This implies that the SDR technique and the ADMM give the same solution.. We also include the computational time of employing the SDR technique to solve QCQP subproblems in form of (15) to demonstrate the computational effectiveness of the proposed ADMM. Values in the bracket in Table II correspond to the computational time of the BCD algorithm where CVX [39] is used to solve the SDRs of the corresponding QCQP subproblems. In our simulations, we observe that the difference of the final solutions returned by the BCD algorithm with the SDR technique and the ADMM (for solving the subproblems) is less than or equal to . This implies the two approaches return almost the same solutions.

From Table II, it can be observed that the linear approximation simplifies the estimation procedure which makes the three approaches, i.e., ASKF, Linearized LS, and SKF-GN, take much less computational time than the proposed two approaches. This is because all of these three approaches are based on the linear approximation, which simplify the estimation procedure but also degrades the estimation performance. Comparing our proposed algorithms with the ADMM and the SDR technique (in bracket) being used for solving the subproblems, we can observe that the proposed ADMM significantly reduces the computational time. We remark that sensor biases in practice usually change slowly [7], i.e., in hours or days, and thus the computational time of the proposed approaches is acceptable for practical applications.

| ASKF | Linearized LS | SKF-GN | BCD-NLS | BCD-PML |

| 0.03 | 0.02 | 0.16 | 34.61 (1309.21) | 33.28 (1289.54) |

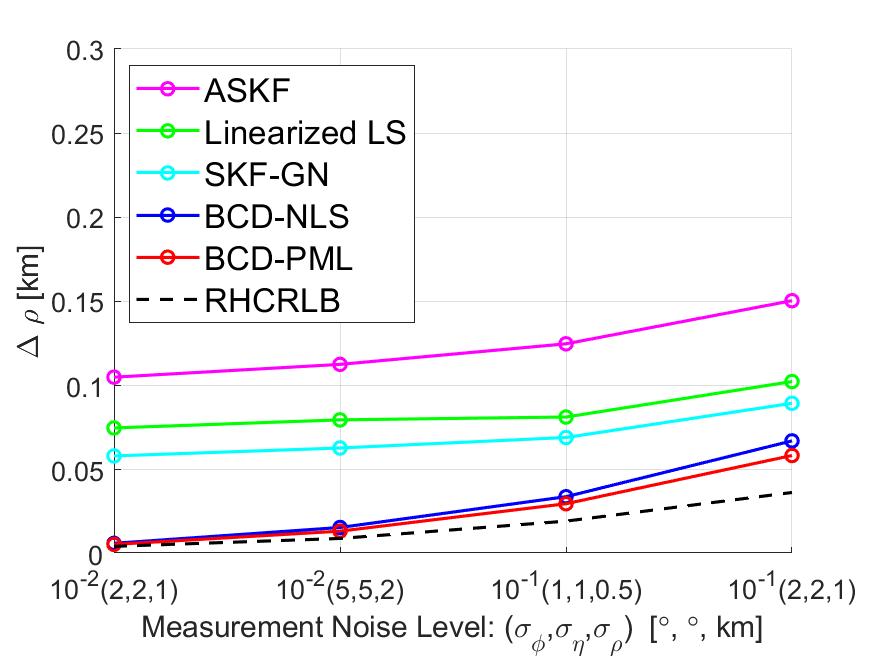

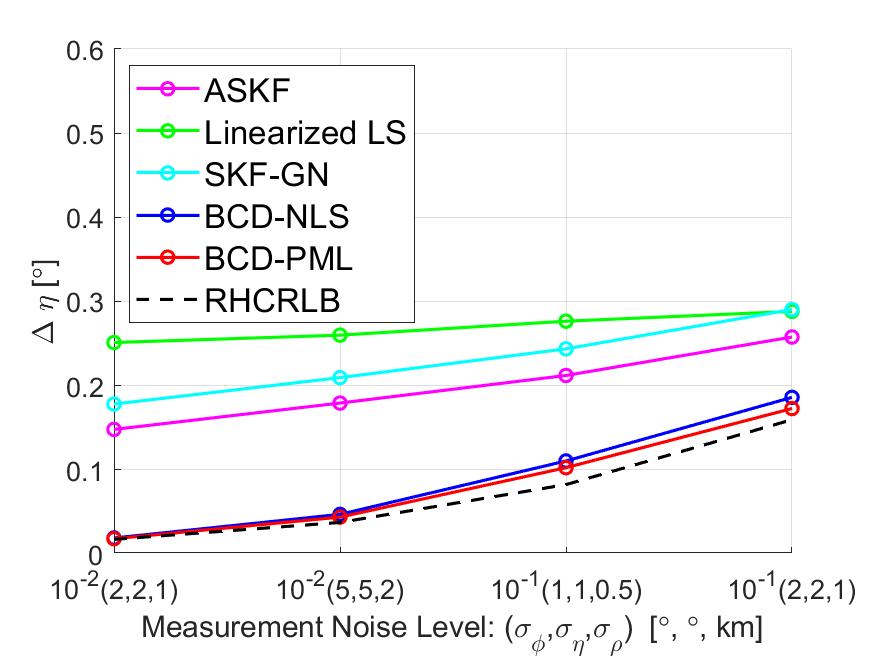

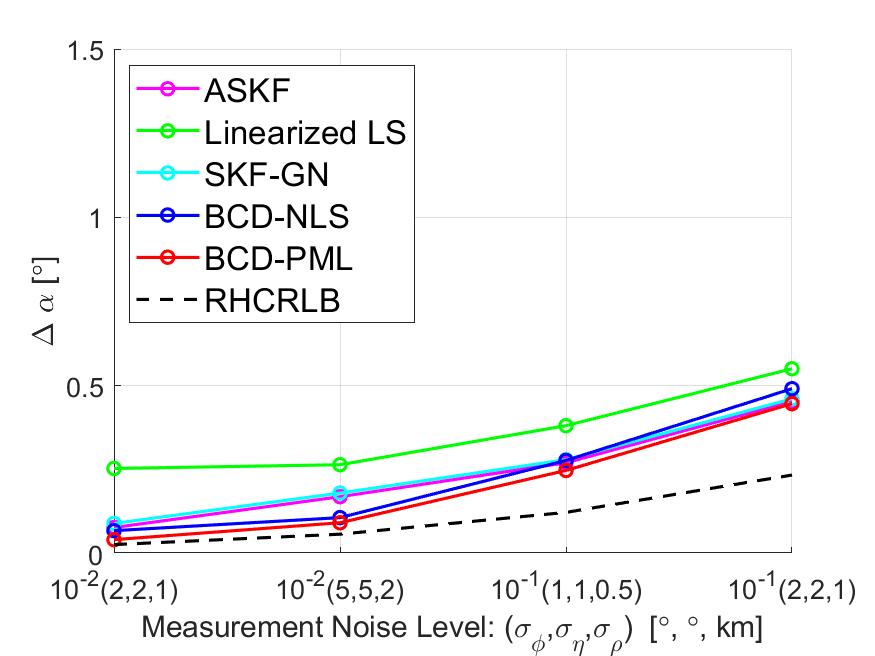

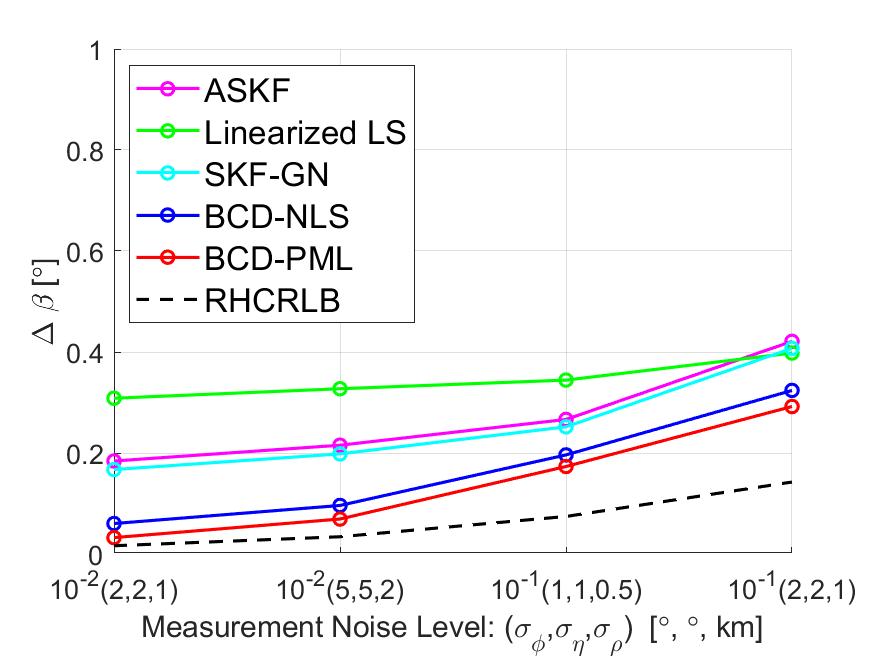

V-B3 Impact of Measurement Noise

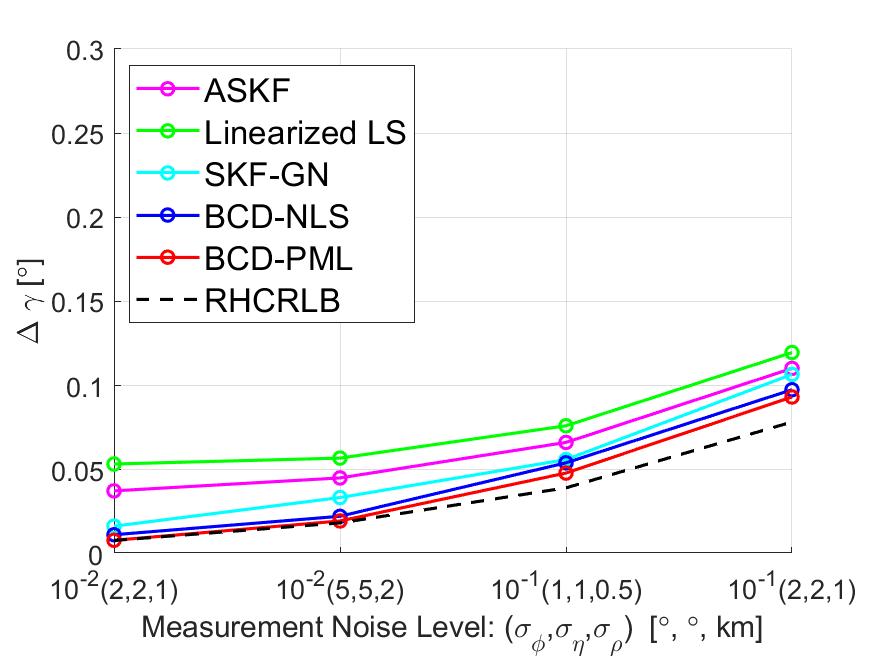

In this part, we present some simulation results to illustrate the effect of the measurement noise on the estimation performance. We fix the motion noise density and set different values of the measurement noise level . Since the measurement noise level has similar impacts on the estimation performance of the four sensors, we only present the averaged RMSEs and RHCRLBs over four sensors. The comparison of the range biases is presented in Fig. 7 and the comparison of other biases is presented in Fig. 10. It can be observed that BCD-PML achieves the smallest RMSEs among all cases and BCD-NLS is a little worse than BCD-PML. For the other three approaches, due to the model mismatch error introduced by the linear approximation procedure, they all suffer a “threshold” effect when the measurement noise is small, i.e., . In contrast, the proposed BCD algorithm exploits the special problem structure and utilizes the nonlinear optimization techniques to deal with the nonlinearity issue. Therefore, BCD-NLS and BCD-PML do not have the ‘threshold’ effects and their RMSEs are quite close to RHCRLBs when the measurement noise level is small.

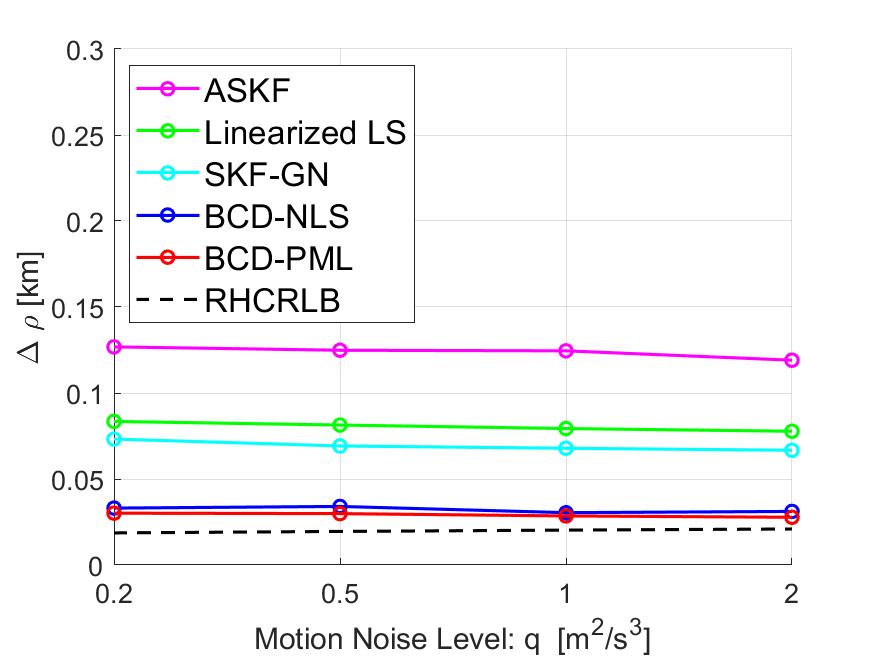

V-B4 Impact of Target Motion Noise

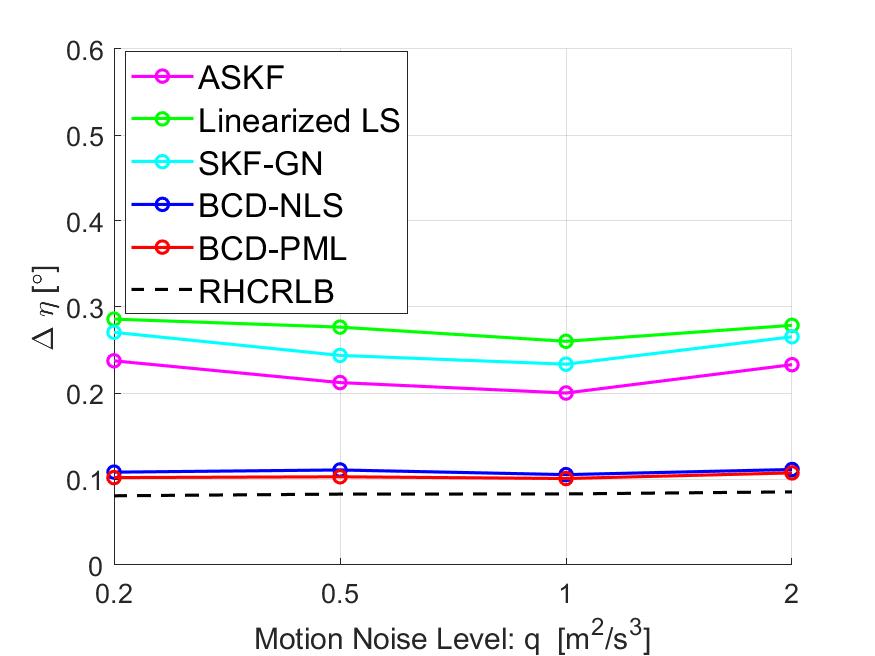

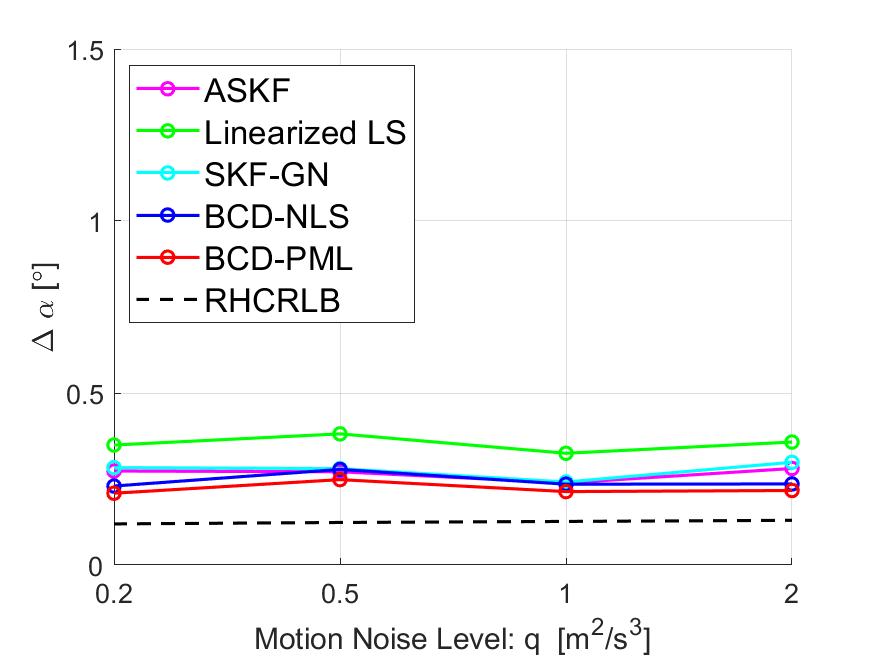

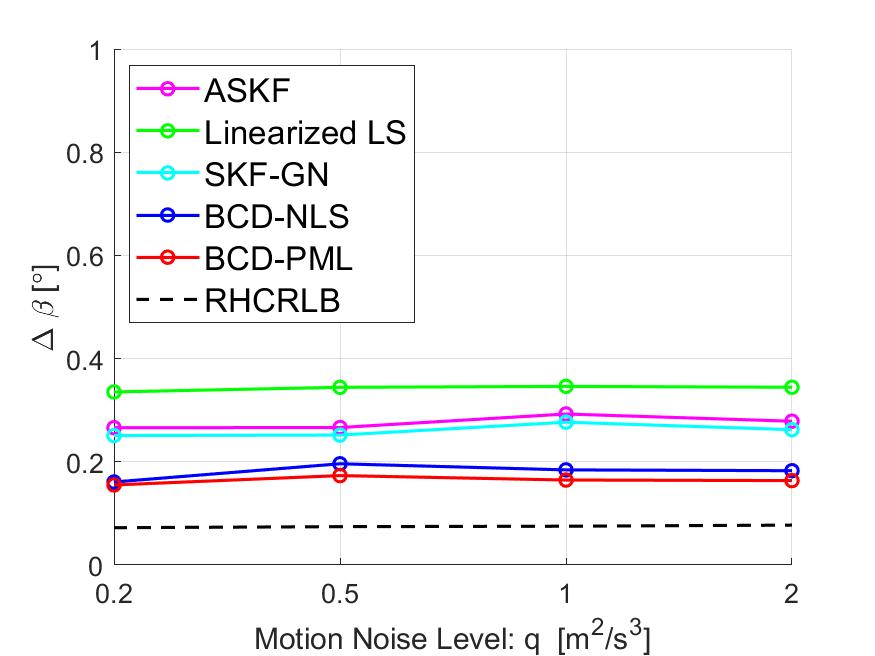

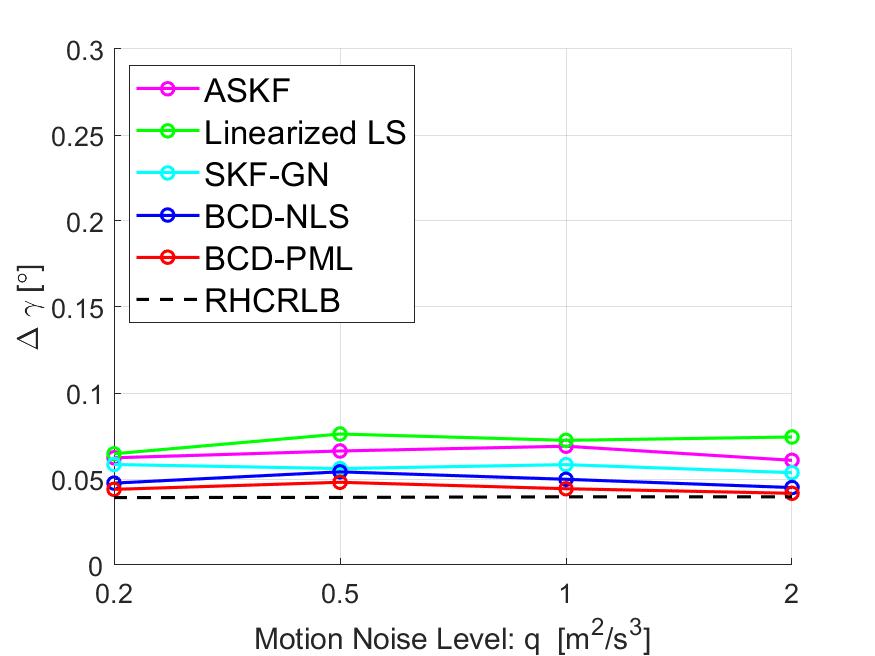

In this part, we present some simulation results to illustrate the effect of the target motion noise on the estimation performance. We fix the measurement noise level to be and and change the values of the motion noise density in interval . Similar to Section V-B3, we compare the averaged RMSEs of the range in Fig. 8 and the RMSEs of other angle biases in Fig. 11. It can be observed that all approaches are somehow robust to the motion process noise. Similarly, we can observe that the proposed approaches perform better than the other three approaches and their RMSEs are very close to RHCRLBs.

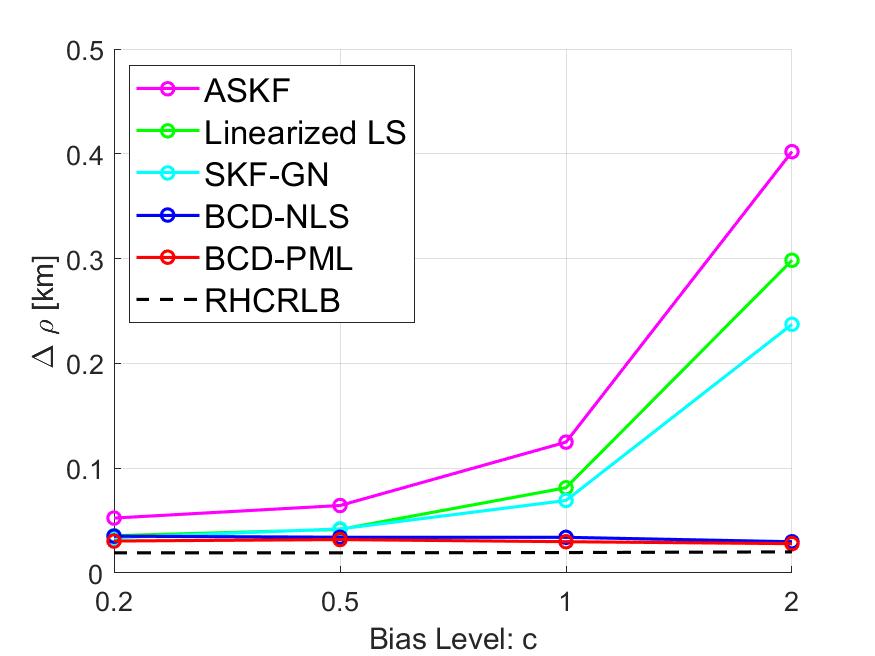

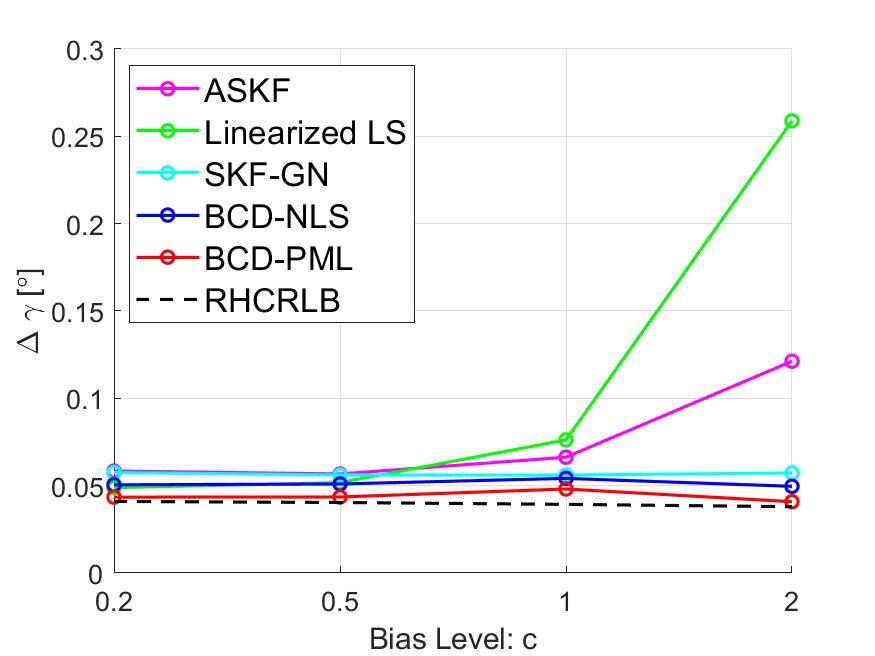

V-B5 Impact of Sensor Bias

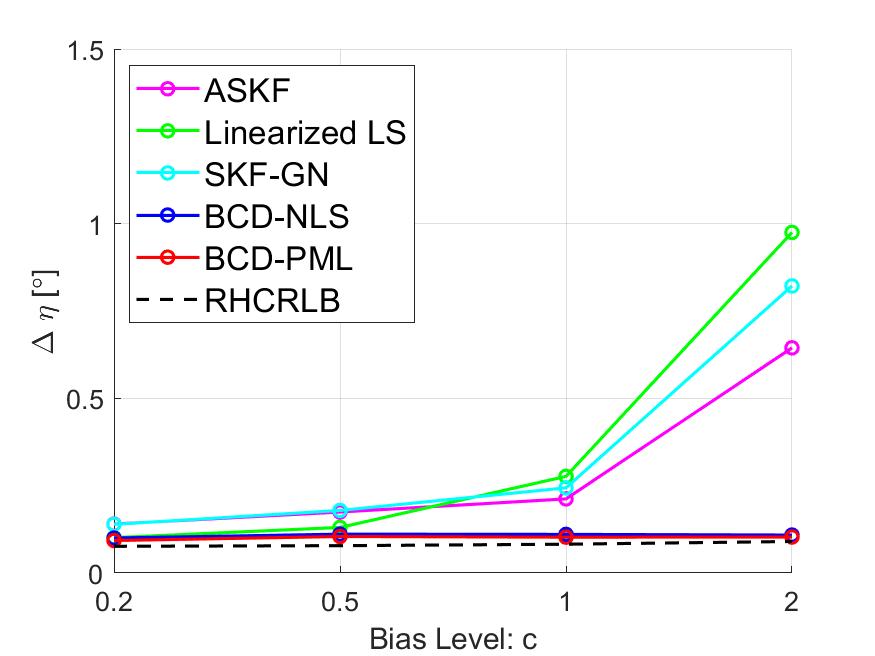

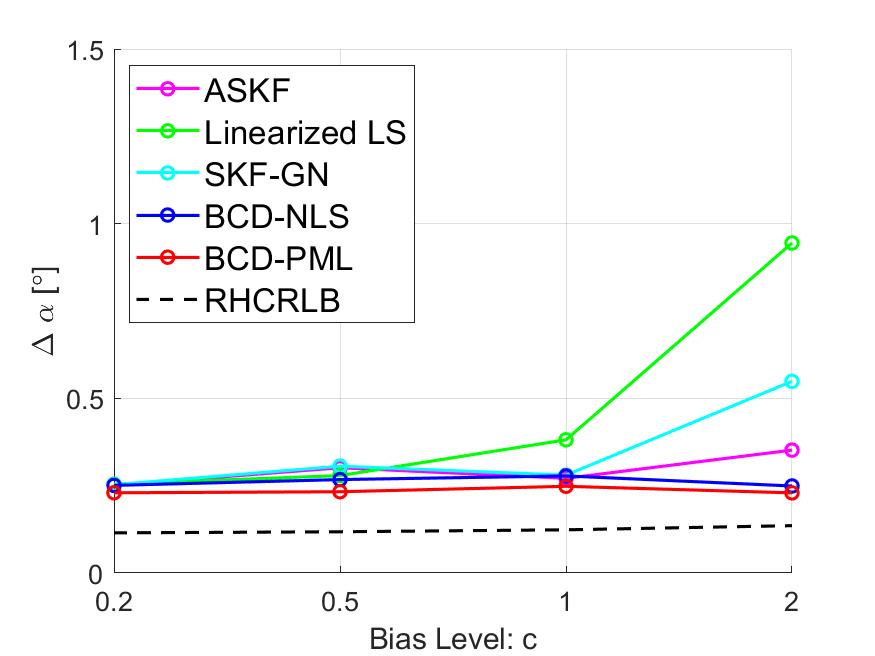

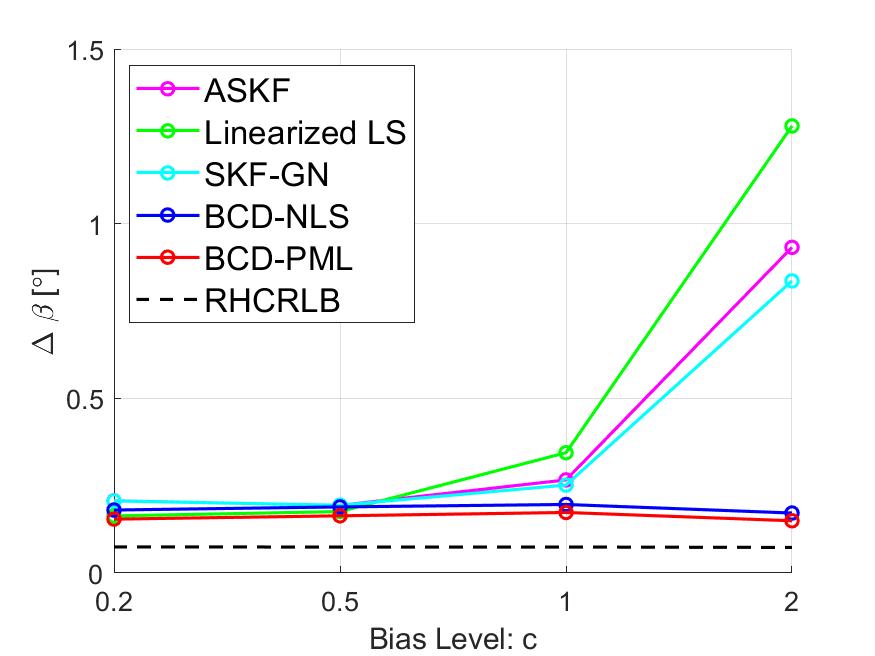

To illustrate the effect of the bias level on the estimation performance (especially for the other three approaches which use the linear approximation), we present some simulation results with different levels of the sensor biases. Specifically, we fix , , and , and change the bias values in Table I by multiplying them with a positive constant , i.e., . The averaged RMSEs of the range biases and other angle biases are compared in Figs. 9 and 12, respectively. In the case where the bias level is small, i.e., , all approaches have similar RMSEs since the linear approximation leads to a smaller model mismatch error. However, as increases, the RMSEs of the other three approaches increase very fast but the proposed two approaches are robust to the bias level.

VI Conclusion and Future Work

In this paper, we have presented a weighted nonlinear LS formulation for the 3-dimensional asynchronous multi-sensor registration problem by assuming the existence of a reference target moving with a nearly constant velocity. By choosing appropriate weight matrices, the proposed formulation includes classical nonlinear LS and ML estimation as special cases. Moreover, we have proposed an efficient BCD algorithm for solving the formulated problem and also have developed a computationally efficient algorithm based on the ADMM to globally solve nonconvex subproblems with respect to angle biases in the BCD iteration. Numerical simulation results demonstrate the effectiveness and efficiency of the proposed formulation and the BCD algorithm. In particular, our proposed approaches can achieve significantly better estimation performance than existing celebrated approaches based on the linear approximation and our proposed approaches are the most robust to the sensor bias level.

It is important to note that the proposed formulation (8) implicitly assumes that all observed data are originated by a single moving target. Other important issues in practical multi-sensor systems such as missed detection, multiple targets, and clutters [40] are not considered. Such more complicated scenarios are of greatly practical interests, and dealing with the data association problem (with biased data) raised there is necessary [19]. Combining the developed optimization technique together with advanced multi-target tracking techniques [41] would be an interesting direction to further explore, especially for sensors on moving platforms. Finally, we remark that the use of the rotation matrix in (2) may lead to a gimbal lock problem when the pitch angle is . However, in the context of this study, where sensors are fixed at a ground location with fixed positions and attitude angles, this issue can be avoided by pre-aligning the rotation angles between the local and global Cartesian coordinates being zeros. In contrast, for sensors mounted on moving platforms, it is advisable to use quaternions to prevent gimbal lock from occurring. Exploring techniques for compensating biases in quaternion representations would be an interesting future research.

Appendix A Proof of Proposition 1

Since the result is independent with respect to noise , we assume for notational simplicity. Define such that . Then, by (3) and omitting the subscripts and , we have

This completes the proof.

Appendix B Proof of Theorem 1

In this appendix, we use notation to denote the true value of the corresponding bias and define . Also, for notational simplicity, we ignore the subscript of and . Since the objective function in (15) is a quadratic function whose gradient is Lipschitz continuous and the constraint set is a smooth compact manifold, then by [31, Corollary 2], for a sufficiently large penalty parameter , the sequence generated by Algorithm 1 has at least one limit point and any limit point is a stationary point of the augmented Lagrangian function . This completes the first statement in Theorem 1.

Next, we prove the second statement. From the definition of in (18), its stationary point is defined as

| (21a) | |||

| (21b) | |||

| (21c) | |||

In the above, denotes the Fréchet subdifferential [42], and by Proposition 4 below, we have , where and . Then, (21) can be simplified as follows:

| (22) |

where and . By (20), we have and .

Express by , i.e., . Then the constraint in (22) can be eliminated and the left-hand side of (22) can be regarded as a function with respect to , , , and , denoted as ; see (24) further ahead. Thus, (22) is further re-expressed as

Then, by Proposition 5 below, there exist two continuous functions and in the neighborhood of and these two functions are uniquely defined in this neighborhood such that

holds with and . The uniqueness of these two functions implies that, for any sufficiently small , is the only solution (depending on ) such that (22) holds. Hence, by the equivalence between (21) and (22),

is the unique stationary point of (for sufficiently small ). Furthermore, combining with [31, Corollary 2], we know that is the unique limit point of the sequence . By [31, Lemma 6], is bounded, and hence every subsequence of converges Otherwise, there exists some subsequence of converging to a point which is different from and this contradicts the fact that is the unique limit point. As such, the entire sequence converges to .

Finally, if and , corresponds to the gradient of with respect to at point . Then, by Proposition 6, it is straightforward to check that , where corresponds to the true bias. Therefore, the unique stationary point is . This completes the proof the second statement of Theorem 1.

Proposition 4.

For the indicator function defined in (17), its Fréchet subdifferential at point is

Proof.

We first reformulate the indicator function as where

| (23) |

Then, the Fréchet subdifferential of at point is the Fréchet normal cone of set [42, Proposition 1.18], which is defined as

where the notation represents with . By expressing and with , we have

where (or ) denotes that approaches from (or ). In the above, (i) is due to , (ii) is by , and (iii) is by the L’Höpital’s rule. Hence,

and

For , denoting

we have

where is the Kronecker product. ∎

Proposition 5.

Express by , i.e., , then can be expressed as , where

| (24) | ||||

Further, there exist two continuous functions and in the neighborhood of and these two functions are uniquely defined in this neighborhood such that .

Proof.

By (20), we have and . From Proposition 6 below, we know that (22) holds for , and . This is equivalent to . Moreover, by [43, Theorem 9.3], if the Jacobian matrix of with respect to and is invertible at point , then Proposition 5 holds. Next, we show that the Jacobian matrix at is indeed invertible.

Proposition 6.

If and , then we have , where is the true value of the corresponding bias.

Proof.

According to the definitions of and and the derivations in Appendix C, we know that and hold when , for all , , and all the other biases and velocity take the true values. In this case, the constructed measurement equations in (7) become

where , take the true bias values. Since problem (15) is an equivalent reformulation of problem (8) with respect to one type of bias , then the above equation can also be reformulated with respect to as below

where corresponds to the true bias value. This completes the proof. ∎

References

- [1] S. Jiang, W. Pu, and Z.-Q. Luo, “Optimal asynchronous multi-sensor registration in 3 dimensions,” in Proc. of IEEE Global Conference on Signal and Information Processing (GlobalSIP), 2018, pp. 81–85.

- [2] Y. Bar-Shalom, “Multitarget-Multisensor Tracking: Advanced Applications,” Norwood, MA, Artech House, 1990.

- [3] J. Yan, W. Pu, S. Zhou, H. Liu, and M. S. Greco, “Optimal resource allocation for asynchronous multiple targets tracking in heterogeneous radar networks,” IEEE Transactions on Signal Processing, vol. 68, pp. 4055–4068, 2020.

- [4] W. L. Fischer, C. E. Muehe, and A. G. Cameron, “Registration errors in a netted air surveillance system,” DTIC Document, Tech. Rep., 1980.

- [5] M. P. Dana, “Registration: A prerequisite for multiple sensor tracking,” Multitarget-Multisensor Tracking: Advanced Applications, 1990.

- [6] S. Fortunati, F. Gini, A. Farina, and A. Graziano, “On the application of the expectation-maximisation algorithm to the relative sensor registration problem,” IET Radar Sonar Navigation, vol. 7, no. 2, pp. 191–203, Feb. 2013.

- [7] S. Fortunati, A. Farina, F. Gini, A. Graziano, M. S. Greco, and S. Giompapa, “Least squares estimation and Cramér-Rao type lower bounds for relative sensor registration process,” IEEE Transactions on Signal Processing, vol. 59, no. 3, pp. 1075–1087, Mar. 2011.

- [8] H. Leung, M. Blanchette, and C. Harrison, “A least squares fusion of multiple radar data,” in Proceedings of RADAR, vol. 94, 1994, pp. 364–369.

- [9] D. C. Cowley and B. Shafai, “Registration in multi-sensor data fusion and tracking,” in Proc. 1993 American Control Conference, June 1993, pp. 875–879.

- [10] Y. Zhou, H. Leung, and P. C. Yip, “An exact maximum likelihood registration algorithm for data fusion,” IEEE Transactions on Signal Processing, vol. 45, no. 6, pp. 1560–1573, Jun. 1997.

- [11] B. Ristic and N. Okello, “Sensor registration in ECEF coordinates using the MLR algorithm,” in Proc. of the Sixth International Conference of Information Fusion, 2003, pp. 135–142.

- [12] R. E. Helmick and T. R. Rice, “Removal of alignment errors in an integrated system of two 3-D sensors,” IEEE Transactions on Aerospace and Electronic Systems, vol. 29, no. 4, pp. 1333–1343, Oct. 1993.

- [13] N. Nabaa and R. H. Bishop, “Solution to a multisensor tracking problem with sensor registration errors,” IEEE Transactions on Aerospace and Electronic Systems, vol. 35, no. 1, pp. 354–363, Jan. 1999.

- [14] N. N. Okello and S. Challa, “Joint sensor registration and track-to-track fusion for distributed trackers,” IEEE Transactions on Aerospace and Electronic Systems, vol. 40, no. 3, pp. 808–823, July 2004.

- [15] X. Lin and Y. Bar-Shalom, “Multisensor target tracking performance with bias compensation,” IEEE Transactions on Aerospace and Electronic Systems, vol. 42, no. 3, pp. 1139–1149, July 2006.

- [16] Z. Li, S. Chen, H. Leung, and E. Bosse, “Joint data association, registration, and fusion using EM-KF,” IEEE Transactions on Aerospace and Electronic Systems, vol. 46, no. 2, pp. 496–507, April 2010.

- [17] E. Taghavi, R. Tharmarasa, T. Kirubarajan, Y. Bar-Shalom, and M. Mcdonald, “A practical bias estimation algorithm for multisensor-multitarget tracking,” IEEE Transactions on Aerospace and Electronic Systems, vol. 52, no. 1, pp. 2–19, February 2016.

- [18] A. Zia, T. Kirubarajan, J. P. Reilly, D. Yee, K. Punithakumar, and S. Shirani, “An EM algorithm for nonlinear state estimation with model uncertainties,” IEEE Transactions on Signal Processing, vol. 56, no. 3, pp. 921–936, Mar. 2008.

- [19] D. F. Crouse, Y. Bar-Shalom, and P. Willett, “Sensor bias estimation in the presence of data association uncertainty,” in Proc. of Signal and Data Processing of Small Targets 2009, vol. 7445. SPIE, 2009, pp. 281–302.

- [20] R. Mahler and A. El-Fallah, “Bayesian unified registration and tracking,” in Proc. of SPIE Defense, Security, and Sensing. International Society for Optics and Photonics, 2011.

- [21] B. Ristic, D. E. Clark, and N. Gordon, “Calibration of multi-target tracking algorithms using non-cooperative targets,” IEEE Journal of Selected Topics in Signal Processing, vol. 7, no. 3, pp. 390–398, June 2013.

- [22] W. Pu, Y.-F. Liu, J. Yan, S. Zhou, H. Liu, and Z.-Q. Luo, “A two-stage optimization approach to the asynchronous multi-sensor registration problem,” in Proc. of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Mar. 2017, pp. 3271–3275.

- [23] W. Pu, Y.-F. Liu, J. Yan, H. Liu, and Z.-Q. Luo, “Optimal estimation of sensor biases for asynchronous multi-sensor data fusion,” Mathematical Programming, vol. 170, no. 1, pp. 357–386, 2018.

- [24] X. Song, Y. Zhou, and Y. Bar-Shalom, “Unbiased converted measurements for tracking,” IEEE Transactions on Aerospace and Electronic Systems, vol. 34, no. 3, pp. 1023–1027, 1998.

- [25] X. R. Li and V. P. Jilkov, “Survey of maneuvering target tracking. part i. dynamic models,” IEEE Transactions on Aerospace and Electronic Systems, vol. 39, no. 4, pp. 1333–1364, 2004.

- [26] Z. Duan, C. Han, and X. R. Li, “Comments on” unbiased converted measurements for tracking”,” IEEE transactions on aerospace and electronic systems, vol. 40, no. 4, p. 1374, 2004.

- [27] S. Bordonaro, P. Willett, and Y. Bar-Shalom, “Decorrelated unbiased converted measurement kalman filter,” IEEE Transactions on Aerospace and Electronic Systems, vol. 50, no. 2, pp. 1431–1444, 2014.

- [28] Z.-Q. Luo and T.-H. Chang, “SDP relaxation of homogeneous quadratic optimization: Approximation bounds and applications,” in Convex Optimization in Signal Processing and Communications, 2010, pp. 117–165.

- [29] Z.-Q. Luo, W.-K. Ma, A. M.-C. So, Y. Ye, and S. Zhang, “Semidefinite relaxation of quadratic optimization problems,” IEEE Signal Processing Magazine, vol. 27, no. 3, pp. 20–34, May 2010.

- [30] C. Lu, Y.-F. Liu, W.-Q. Zhang, and S. Zhang, “Tightness of a new and enhanced semidefinite relaxation for MIMO detection,” SIAM Journal on Optimization, vol. 29, no. 1, pp. 719–742, 2019.

- [31] Y. Wang, W. Yin, and J. Zeng, “Global convergence of ADMM in nonconvex nonsmooth optimization,” Journal of Scientific Computing, vol. 78, no. 1, pp. 29–63, 2019.

- [32] H. Attouch, J. Bolte, and B. F. Svaiter, “Convergence of descent methods for semi-algebraic and tame problems: Proximal algorithms, forward–backward splitting, and regularized gauss–seidel methods,” Mathematical Programming, vol. 137, no. 1, pp. 91–129, 2013.

- [33] D. P. Bertsekas, Nonlinear Programming (Third Edition). Athena Scientific Belmont, 1999.

- [34] S. J. Wright, “Coordinate descent algorithms,” Mathematical Programming, vol. 151, no. 1, pp. 3–34, 2015.

- [35] M. Razaviyayn, M. Hong, and Z.-Q. Luo, “A unified convergence analysis of block successive minimization methods for nonsmooth optimization,” SIAM Journal on Optimization, vol. 23, no. 2, pp. 1126–1153, 2013.

- [36] A. Aubry, A. De Maio, A. Zappone, M. Razaviyayn, and Z.-Q. Luo, “A new sequential optimization procedure and its applications to resource allocation for wireless systems,” IEEE Transactions on Signal Processing, vol. 66, no. 24, pp. 6518–6533, 2018.

- [37] Y. Zhou, “A Kalman filter based registration approach for asynchronous sensors in multiple sensor fusion applications,” in Proc. of IEEE International Conference on Acoustics, Speech, and Signal Processing, vol. 2. IEEE, May 2004, pp. ii–293.

- [38] Y. Noam and H. Messer, “Notes on the tightness of the hybrid cramer-rao lower bound,” IEEE Transactions on Signal Processing, vol. 57, no. 6, pp. 2074–2084, June 2009.

- [39] M. Grant and S. Boyd, “CVX: Matlab software for disciplined convex programming, version 2.1,” http://cvxr.com/cvx, Mar. 2014.

- [40] Y. Bar-Shalom, T. E. Fortmann, and P. G. Cable, Tracking and Data Association. Acoustical Society of America, 1990.

- [41] F. Meyer, T. Kropfreiter, J. L. Williams, R. Lau, F. Hlawatsch, P. Braca, and M. Z. Win, “Message passing algorithms for scalable multitarget tracking,” Proceedings of the IEEE, vol. 106, no. 2, pp. 221–259, 2018.

- [42] A. Y. Kruger, “On Fréchet subdifferentials,” Journal of Mathematical Sciences, vol. 116, no. 3, pp. 3325–3358, 2003.

- [43] L. H. Loomis and S. Sternberg, Advanced Calculus. Reading, Massachusetts: Addison–Wesley, 1968.

- [44] R. A. Horn and C. R. Johnson, Matrix Analysis. Cambridge University Press, 1985.

Supplementary Material

Appendix C Derivations of Subproblems (10a)–(10f)

Denote where is the diagonalizing operation for stacking matrices as a block diagonal matrix. Also, for , define vector .

C-A Derivation for Subproblem (10a)

C-B Derivation for Subproblem (10b)

C-C Derivation for Subproblems (10c)–(10f)

For notational simplicity, we firstly define as

which will be used in this subsection.

C-C1 Subproblem (10c)

C-C2 Subproblem (10d)

C-C3 Subproblem (10e)

C-C4 Subproblem (10f)

Appendix D Full Rankness of Matrices and

This section is to show, under Assumption 1, matrices in (13) and in (15) are of full rank with probability one.

First, we note that the structures of and are closely related to a binary matrix (with ), where is the total number of measurements, and is the number of sensors. Each entry represents that sensor has a measurement at time instance , and otherwise. Once is of full (column) rank, then it is not hard to show that and are also of full (column) rank (this will be discussed later). By Assumption 1, we know that each row of is a one-hot row vector. Then, by applying proper row permutation of , we have

and hence is always of full (column) rank.

Next, we discuss the relation between and matrices and . We take as an example and the analysis is similar for other matrices , , , and . First, we need to expand to a matrix , where in the -th row of is replaced by

and in is replaced by . Vectors and are defined in Appendix C-C2. According to the definition of , the event that is not of rank 2 is with zero probability, since this event requires that the measurement noise (contained in ) satisfies the following equation

for a constant . To be specific, denote

Then, we see that is uniquely determined by the polar coordinate and the above equation becomes

It is easy to check that the above linear equation (in terms of and ) has the unique solution However, as are continuous random variables, the event that happens is of zero probability.

Finally, let denote the submatrix stacked by the rows of from index to (). Then by Assumption 1 (i.e., each sensor has at least one measurement and ), we know that is of full (column) rank with probability one. Performing proper elementary row operations for , we get , where and . Due to the above simple row operations and the fact that that (with ) is of full (column) rank with probability one (which can be proved using the same argument as in the proof that is of full column rank), one can show that is also of full (column) rank with probability one. Noticing that we immediately obtain that is also of full (column) rank with probability one.

Finally, we use the following toy example to illustrate the above proof. Suppose that there are two sensors and three measurements. Without loss of generality, we assume that sensor has measurement at time instance and sensor has measurement at time instance Now there are two different cases where sensor has measurement at time instance and sensor has measurement at time instance We first consider the case where sensor has measurement at time instance In this case, we have

and

One can show that is of full (column) rank with probability one and hence in the above is also of full (column) rank with probability one. In the second case where sensor has measurement at time instance , we have

and

Again, and in the above are of full (column) rank with probability one.