Efficient recycled algorithms for quantitative trait models on phylogenies

University of Otago, Dunedin, New Zealand

)

Abstract

We present an efficient and flexible method for computing likelihoods of phenotypic traits on a phylogeny. The method does not resort to Monte-Carlo computation but instead blends Felsenstein’s discrete character pruning algorithm with methods for numerical quadrature. It is not limited to Gaussian models and adapts readily to model uncertainty in the observed trait values. We demonstrate the framework by developing efficient algorithms for likelihood calculation and ancestral state reconstruction under Wright’s threshold model, applying our methods to a dataset of trait data for extrafloral nectaries (EFNs) across a phylogeny of 839 Labales species.

Keywords: Likelihood algorithm, quantitative traits, continuous traits, comparative method, numerical quadrature, numerical integration.

Introduction

Models for the evolution of continuous traits on a phylogeny are an essential tool of evolutionary genomics. These models are used to describe a huge range of biological phenomena, including morphological characteristics (Felsenstein, 2002, Harmon et al., 2010, Ronquist, 2004, Stevens, 1991), expression levels (Khaitovich et al., 2005, 2006), geography (Lemey et al., 2010), and gene frequency dynamics (Cavalli-Sforza and Edwards, 1967, Felsenstein, 1981a, Sirén et al., 2011). Model-based statistical techniques can detect or test for association between different traits, reconstruct ancestral states, and infer subtle features of the evolutionary process that generated them.

The usefulness of continuous trait models is contingent on our ability to compute with them. Early work of Felsenstein (Felsenstein, 1968, 1973) demonstrated that if traits are evolving according to Brownian motion then we can compute likelihoods quickly and (up to numerical precision) exactly. Felsenstein’s method extends directly to other Gaussian processes, notably the Ornstein-Uhlenbeck (OU) process (Felsenstein, 1988, Hansen, 1997, Lande, 1976). These methods break down for more complex models, in which case researchers have typically resorted to Monte Carlo strategies (e.g. (Landis et al., 2013, Ronquist, 2004)).

Computing the probability of a qualitative character is essentially a numerical integration (quadrature) problem. For most models, if we know the value of the trait at each ancestral node in the phylogeny we can quickly compute the various transition probabilities. Since we do not usually know these ancestral trait values we integrate them out. This is a multi-dimensional integration problem with one dimension for each ancestral node (or two dimensions for each node if we are modelling covarying traits).

Methods for estimating or approximating integrals are usually judged by their rate of convergence: how quickly the error of approximation decreases as the amount of work (function evaluations) increases. Consider the problem of computing a one-dimensional integral

| (1) |

where is a ‘nice’ function with continuous and bounded derivatives. Simpson’s rule, a simple textbook method reviewed below, can be shown to have an rate of convergence, meaning that, asymptotically in , evaluating 10 times more points reduces the error by a factor of . In contrast, a standard Monte Carlo method has a rate of convergence of , meaning that evaluating 10 times more points will only reduced the error by a factor of around 3 For this reason, numerical analysis texts often refer to Monte Carlo approaches as ‘methods of last resort.’

Despite this apparently lacklustre performance guarantee, Monte-Carlo methods have revolutionised phylogenetics in general and the analysis of quantitative characters in particular. The reason is their partial immunity to the curse of dimensionality. Methods like Simpson’s rule are not practical for a high number of dimensions as the asymptotic convergence rate, quoted above, is only achieved for an infeasibly large number of function evaluations . The effective convergence rate for small can be very poor, and typically worse than Monte-Carlo. In contrast, there are Monte Carlo approaches which achieve close to convergence irrespective of dimension. This has been critical when computing with complex evolutionary models with as many dimensions as there are nodes in the phylogeny.

The main contribution of our paper is to demonstrate how to efficiently and accurately compute likelihoods on a phylogeny using a sequence of one-dimensional integrations. We obtain a fast algorithm with convergence guarantees that far exceed what can be obtained by Monte Carlo integration. Our approach combines two standard tools: classical numerical integrators and Felsenstein’s pruning algorithm for discrete characters (Felsenstein, 1981b). Indeed, the only real difference between our approach and Felsenstein’s discrete character algorithm is that we use numerical integration techniques to integrate states at ancestral nodes, instead of just carrying out a summation.

The running time of the algorithm is , where is the number of points used in the numerical integration at each node and is the number of taxa (leaves) in the tree. Using Simpson’s method, we obtain a convergence rate of , meaning that if we increase by a factor of we will obtain an estimate which is accurate to four more decimal places.

To illustrate the application of our general framework, we develop an efficient algorithm for computing the likelihood of a tree under the threshold model of Sewell Wright and Felsenstein (Felsenstein, 2005, 2012, Wright, 1934). We also show how to infer marginal trait densities at ancestral nodes. We have implemented these algorithms and used them to study evolution of extrafloral nectaries on an 839-taxon phylogeny Marazzi et al. (2012).

The combination of numerical integrators and the pruning algorithm opens up a large range of potential models and approaches which we have only just begun to explore. In the discussion, we briefly review developments in numerical integration techniques that could well be brought to bear on these problems, and a few suggestions of directions and problems which can now be addressed.

Material and Methods

Models for continuous trait evolution

Phylogenetic models for continuous trait evolution, like those for discrete traits, are specified by the density of trait values at the root and the transition densities along the branches. We use to denote the density for the trait value at the root, where is a set of relevant model parameters. We use to denote the transitional density for the value at node , conditional on the trait value at its parent node . Here, represents a bundle of parameters related to node such as branch length, population size, and mutation rate. All of these parameters could vary throughout the tree.

To see how the model works, consider how continuous traits might be simulated. A state is sampled from the root density . We now proceed through the phylogeny from the root to the tips, each time visiting a node only after its parent has already been visited. For each node , we generate the value at that node from the density , where is the simulated trait value at node , the parent of node . In this way, we will eventually generate trait values for the tips.

We use to denote the random trait values at the tips and to denote the random trait values at the internal nodes, ordered so that children come before parents. Hence is the state assigned to the root. Let

| (2) |

denote the set of branches in the tree. The joint density for all trait values, observed and ancestral, is given by multiplying the root density with all of the transition densities

| (3) |

The probability of the observed trait values is now determined by integrating out all of the ancestral trait values:

| (4) |

In these integrals, the bounds of integration will vary according to the model.

The oldest, and most widely used, continuous trait models assume that traits (or transformed gene frequencies) evolve like Brownian motion (Cavalli-Sforza and Edwards, 1967, Felsenstein, 1973). For these models, the root density is Gaussian (normal) with mean and unknown variance . The transition densities are also Gaussian, with mean (the trait value of the parent) and standard deviation proportional to branch length. Note that there are identifiability issues which arise with the inference of the root position under this model, necessitating a few tweaks in practice.

It can be shown that when the root density and transitional densities are all Gaussian, the joint density (4) is multivariate Gaussian. Furthermore, the covariance matrix for this density has a special structure which methods such as the pruning technique of Felsenstein (1973) exploit. This technique continues to work when Brownian motion is replaced by an OU process (Felsenstein, 1988, Hansen, 1997, Lande, 1976).

Landis et al. (2013) discuss a class of continuous trait models which are based on Lévy processes and include jumps. At particular times, as governed by a Poisson process, the trait value jumps to a value drawn from a given density. Examples include a compound Poisson process with Gaussian jumps and a variance Gamma model with Gamma distributed jumps. Both of these processes have analytical transition probabilities in some special cases.

Lepage et al. (2006) use the Cox-Ingersoll-Ross (CIR) process to model rate variation across a phylogeny. Like the OU process (but unlike Brownian motion), the CIR process is ergodic. It has a stationary Gamma density which can be used for the root density. The transition density is a particular non-central Chi-squared density and the process only assumes positive values.

Kutsukake and Innan (2013) examine a family of compound Poisson models, focusing particularly on a model where the trait values make exponentially distributed jumps upwards or downwards. In the case that the rates of upward and downward jumps are the same, the model reduces to the variance Gamma model of Landis et al. (2013) and has an analytical probability density function.

Sirén et al. (2011) propose a simple and elegant model for gene frequencies whereby the root value is drawn from a Beta distribution and each transitional density is Beta with appropriately chosen parameters.

Trait values at the tips are not always observed directly. A simple, but important, example of this is the threshold model of Wright (1934), explored by Felsenstein (2005). Under this model, the trait value itself is censored and we only observe whether or not the value is positive or negative. A similar complication arises when dealing with gene frequency data as we typically do not observe the actual gene frequency but instead a binomially distributed sample based on that frequency (Sirén et al., 2011).

If the trait values at the tip are not directly observed we integrate over these values as well. Let denote the probability of observing given the trait value . The marginalised likelihood is then

| (5) |

Numerical integration

Analytical integration can be difficult or impossible. For the most part, it is unusual for an integral to have an analytical solution, and there is no general method for finding it when it does exist. In contrast, numerical integration techniques (also known as numerical quadrature) are remarkably effective and are often easy to implement. A numerical integration method computes an approximation of the integral from function values at a finite number of points. Hence we can obtain approximate integrals of functions even when we don’t have an equation for the function itself. See Cheney and Kincaid (2012) for an introduction to numerical integration, and Dahlquist and Björck (2008) and Davis and Rabinowitz (2007) for more comprehensive technical surveys.

The idea behind most numerical integration techniques is to approximate the target function using a function which is easy to integrate. In this paper we will restrict our attention to Simpson’s method which approximates the original function using piecewise quadratic functions. To approximate an integral we first determine equally spaced points

| (6) |

We now divide the integration into intervals

| (7) |

Within each interval , there is a unique quadratic function which equals at each the three points , and . The integral of this quadratic on the interval is . Summing over , we obtain the approximation

| (8) |

With a little rearrangement, the approximation can be written in the form

| (9) |

where when is odd and when is even, with the exception of and which both equal . Simpson’s method is embarrassingly easy to implement and has a convergence rate of . Increasing the number of intervals by a factor of decreases the error by a factor of . See Dahlquist and Björck (2008) and Davis and Rabinowitz (2007) for further details.

It should be remembered, however, that the convergence rate is still only an asymptotic bound, and gives no guarantees on how well the method performs for a specific function and choice of . Simpson’s method, for example, can perform quite poorly when the function being integrated has rapid changes or soft peaks. We observed this behaviour when implementing threshold models, as described below. Our response was to better tailor the integration method for the functions appearing. We noted that the numerical integrations we carried out all had the form

| (10) |

where and varied. Using the same general approach as Simpson’s rule, we approximated , rather than the whole function , by a piecewise quadratic function . We could then use standard techniques and tools to evaluate numerically. The resulting integration formula, which we call the Gaussian kernel method, gives a significant improvement in numerical accuracy.

A further complication is that, in models of continuous traits, the trait value often ranges over the whole real line, or at least over the set of positive reals. Hence, we need to approximate integrals of the form

| (11) |

though the methods discussed above only apply to integrals on finite intervals. We truncate these integrals, determining values and such that the difference

| (12) |

between the full integral and the truncated integral can be bounded analytically. Other strategies are possible; see Dahlquist and Björck (2008) for a comprehensive review.

A pruning algorithm for integrating continuous traits

Felsenstein has developed pruning algorithms for both continuous and discrete characters (Felsenstein, 1981a, b). His algorithm for continuous characters works only for Gaussian processes. Our approach is to take his algorithm for discrete characters and adapt it to continuous characters.

The (discrete character) pruning algorithm is an application of dynamic programming. For each node , and each state , we compute the probability of observing the states for all tips which are descendants of node , conditional on node having ancestral state . This probability is called the partial likelihood at node given state . Our algorithm follows the same scheme, with one major difference. Since traits are continuous, we cannot store all possible partial likelihoods. Instead, we store likelihoods for a finite set of values and plug these values into a numerical integration routine.

Let be the index of a node in the tree not equal to the root, let node be its parent node. We define the partial likelihood, to be the likelihood for the observed trait values at the tips which are descendants of node , conditional on the parent node having trait value . If node is a tip with observed trait value we have

| (13) |

recalling that is the density for the value of the trait at node conditional on the value of the trait for its parent. More generally, we may only observe some value for which we have the conditional probability conditional on the trait value . In this case the partial likelihood is given by

| (14) |

Suppose node is not the root and that it has two children . Since trait evolution is conditionally independent on disjoint subtrees, we obtain the recursive formula

| (15) |

Finally, suppose that node is the root and has two children . We evaluate the complete tree likelihood using the density of the trait value at the root,

| (16) |

The bounds of integration in (14)—(16) will vary according to the model.

We use numerical integration techniques to approximate (14)—(16) and dynamic programming to avoid an exponential explosion in the computation time. Let denote the number of function evaluations for each node. In practice, this might vary over the tree, but for simplicity we assume that it is constant. For each node , we select trait values

| (17) |

How we do this will depend on the trait model and the numerical integration technique. If, for example, the trait values vary between and and we are applying Simpson’s method with intervals we would use for .

We traverse the tree starting at the tips and working towards the root. For each non-root node and we compute and store an approximation of , where node is the parent of node . Note that this is an approximation of rather than of since is the partial likelihood conditional on the trait value for the parent of node . The value approximation is computed by applying the numerical integration method to the appropriate integral (14)—(16), where we replace function evaluations with approximations previously computed. See below for a worked example of this general approach.

The numerical integration methods we use run in time linear in the number of points being evaluated. Hence if is the number of tips in the tree, the algorithm will run in time . For the integration techniques described above, the convergence rate (in ) for the likelihood on the entire tree had the same order as the convergence rate for the individual one-dimensional integrations (see below for a formal proof of a specific model). We have therefore avoided the computational blow-out typically associated with such high-dimensional integrations, and achieve this without sacrificing accuracy.

Posterior densities for ancestral states

The algorithms we have described compute the joint density of the states at the tips, given the tree, the branch lengths, and other parameters. As with discrete traits, the algorithms can be modified to infer ancestral states for internal nodes in the tree. Here we show how to carry out reconstruction of the marginal posterior density of a state at a particular node. The differences between marginal and joint reconstructions are reviewed in (Yang, 2006, pg 121).

First consider marginal reconstruction of ancestral states at the root. Let and be the children of the root. The product equals the probability of the observed character conditional on the tree, branch lengths, parameters and a state of at the root. The marginal probability of , ignoring the data, is given by the root density . Integrating the product of and gives the likelihood , as in (16). Plugging these into Bayes’ rule, we obtain the posterior density of the state at the root:

| (18) |

With general time reversible models used in phylogenetics, the posterior distributions at other nodes can be found by changing the root of the tree. Unfortunately the same trick does not work for many qualitative trait models, including the threshold model we study here. Furthermore, recomputing likelihoods for each possible root entails a large amount of unneccessary computation.

Instead we derive a second recursion, this one starting at the root and working towards the tips. A similar trick is used to compute derivatives of the likelihood function in Felsenstein and Churchill (1996). For a node and state we let denote the likelihood for the trait values at tips which are not descendants of node , conditional on node having trait value . If node is the root , then is for all .

Let node be any node apart from the root, let node be its parent and let node be the other child of (that is, the sibling of node ). We let denote the trait value at node . Then can be written

| (19) |

This integral can be evaluated using the same numerical integrators used when computing likelihoods. Note that is the conditional density of the parent state given the child state, which is the reverse of the transition densities used to formulate the model. How this is computed will depend on the model and its properties; see below for an implementation of this calculation in the threshold model.

Once has been computed for all nodes, the actual (marginal) posterior densities are computed from Bayes’ rule. Letting be the children of node ,

| (20) |

Case study: threshold models

In this section we show how the general framework can be applied to the threshold model of Wright (1934) and Felsenstein (2005, 2012). Each trait is modelled by a continuously varying liability which evolves along branches according to a Brownian motion process. While the underlying liability is continuous, the observed data is discrete: at each tip we observe only whether the liability is above or below some threshold.

We will use standard notation for Gaussian densities. Let denote the density of a Gaussian random variable with mean and variance ; let

| (21) |

denote its cumulative density function, with inverse .

Let denote the (unobserved) liability values at the tips and internal nodes. As above we assume that the whenever node is a child of node , so that the root has index .

The liability value at the root has a Gaussian density with mean and variance :

| (22) |

Consider any non-root node and let be the index of its parent. Let denote the length of the branch connecting nodes and . Then has a Gaussian density with mean and variance :

| (23) |

Following Felsenstein (2005), we assume thresholds for the tips are all set at zero. We observe if the liability is positive, if the liability is negative, and if data is missing. We can include the threshold step into our earlier framework by defining

| (24) |

The likelihood function for observed discrete values is then given by integrating over liability values for all nodes on the tree:

| (25) |

The first step towards computing is to bound the domain of integration so that we can apply Simpson’s method. Ideally, we would like these bounds to be as tight as possible, for improved efficiency. For the moment we will just outline a general procedure which can be adapted to a wide range of evolutionary models.

The marginal (prior) density of a single liability or trait value at a single node is the density for that liability value marginalizing over all other values and data. With the threshold model, the marginal density for the liability at node is Gaussian with mean (like the root) and variance equal to the sum of the variance at the root and the transition variances on the path from the root to node . If is the set of nodes from the root to node , then

| (26) |

The goal is to constrain the error introduced by truncating the integrals with infinite domain. Let be the desired bound on this truncation error. Recall that the number of internal nodes in the tree is . Define

| (27) |

and

| (28) |

so that the probability lies outside the interval is at most . By the inclusion-exclusion principle, the joint probability for any internal node is at most . We use this fact to bound the contribution of the regions outside these bounds.

| (29) | ||||

| (30) | ||||

| (31) | ||||

| (32) | ||||

| (33) | ||||

| (34) |

We therefore compute values for and use these bounds when carrying out integration at the internal nodes. We define

| (35) |

for for each internal node .

The next step is to use dynamic programming and numerical integration to compute the approximate likelihood. Let node be a tip of the tree, let node be its parent and let be the binary trait value at this tip. For each we use standard error functions to compute

| (36) | |||||

| (37) |

Here is the density of a Gaussian with mean and variance .

Now suppose that node is an internal node with parent node and children and . Applying Simpson’s rule to the bounds to (15) we have for each :

| (38) | |||||

| (39) |

Suppose node is the root, and are its children. Applying Simpson’s rule to (16) gives

| (40) | |||||

| (41) |

Pseudo-code for the algorithm appears in Algorithm 1. Regarding efficiency and convergence we have:

Theorem 1.

Algorithm 1 runs in time and approximates with error.

Proof

The running time follows from the fact that for each of the nodes in the tree we carry out applications of Simpson’s method.

Simpson’s rule has convergence on functions with bounded fourth derivatives (Dahlquist and Björck, 2008). The root density and each of the transition densities are Gaussians, so have individually have bounded fourth derivatives. For each node , let denote the number of tips which are descendents of the node. Using induction on (15), we see that for all nodes , the fourth derivate of is .

Letting we have from above that the error introduced by replacing the infinite domain integrals with integrals on introduces at most error. Using a second induction proof on (15) and (38) together with the bound on fourth derivatives we have that is at most for all nodes , where node is the parent of node . In this way we obtain error bound of on the approximation of .

| Algorithm 1: Compute probability of a threshold character. | ||||

| Input: | ||||

| : Number of intervals in numerical integration. | ||||

| : branch lengths in tree. | ||||

| : mean and variance of root density | ||||

| : variance of transition densities (per unit branch length) | ||||

| observed character () | ||||

| Output: | ||||

| Probability of observed character under the threshold model. | ||||

| Construct the vector . | ||||

| Construct the vector as in (9) | ||||

| Compute the path length from the root to each node . | ||||

| Initialize for all nodes and . | ||||

| For all | ||||

| For all tip nodes | ||||

| Let be the index of the parent of node | ||||

| For | ||||

| If | ||||

| else if | ||||

| For all internal nodes , excluding the root | ||||

| Let be the index of the parent of node | ||||

| Let be the indices of the children of node | ||||

| For | ||||

| Let be indices of the the children of the root. | ||||

We can estimate posterior densities using the recursion (19) followed by equation (20). The conditional density

| (42) |

can be obtained by plugging the transitional density

| (43) |

and the two marginal densities (26)

| (44) |

into the identity . We thereby obtain the recursion

| (45) |

which we estimate using Simpson’s method. Algorithm estimates values of the posterior densities at each node, evaluated using the same set of grid points as used in Algorithm 1. An additional round of numerical integration can be used to obtain posterior means and variances.

| Algorithm 2: Compute posterior probabilities | |||

| Input: | |||

| , , , , and as in Algorithm 1 | |||

| Vector , likelihood and arrays computed in Algorithm 1. | |||

| Output: | |||

| Arrays for each internal node . | |||

| Construct the vectors , , , , and path lengths as in Algorithm 1. | |||

| for all . | |||

| For all | |||

| Let be the index of the parent of node . | |||

| Let be the index of the sibling of node . | |||

| For | |||

| For all | |||

| Let be the children of node . | |||

| For all | |||

Evolutionary precursors of plant extrafloral nectaries

To study the methods in practice, we reanalyse trait data published by Marazzi et al. (2012), using a fixed phylogeny. Marazzi et al. (2012) introduce and apply a new discrete state model for morphological traits which, in addition to states for presence and absence, incorporates an intermediate ‘pre-cursor’ state. Whenever the intermediate state is observed at the tips it is coded as ‘absent’. The motivation behind the model is that the intermediate state represents evoutionary pre-cursors, changes which are necessary for the evolution of a new state but which may not be directly observed. These pre-cursors could explain repeated parallel evolution of a trait in closely related traits (Marazzi et al., 2012). They compiled a data set recording presence or absence of plant extrafloral nectaries (EFNs) across a phylogeny of 839 species of Fabales, fitting their models to these data.

The threshold model also involves evolutionary pre-cursors in terms of changes in ancestral liabilities. We use these models, and our new algorithms to analyse the EFN dataset. Our analysis also makes use of the time-calibrated phylogeny inferred by Simon et al. (2009), although unlike Marazzi et al. (2012) we ignore phylogenetic uncertainty.

Experimental protocol

We conduct three separate experiments. For the first experiment, we examine the rate of convergence of the likelihood algorithm as we increase . This is done for the ‘All’ EFN character (Character 1 in Marazzi et al. (2012)) for a range of estimates for the liability variance at the root, . The interest in stems from its use in determining bounds for each node, with the expectation that as increases, the convergence of the integration algorithm will slow. The mean liability at the root, was determined from the data using Maximum Likelihood estimation.

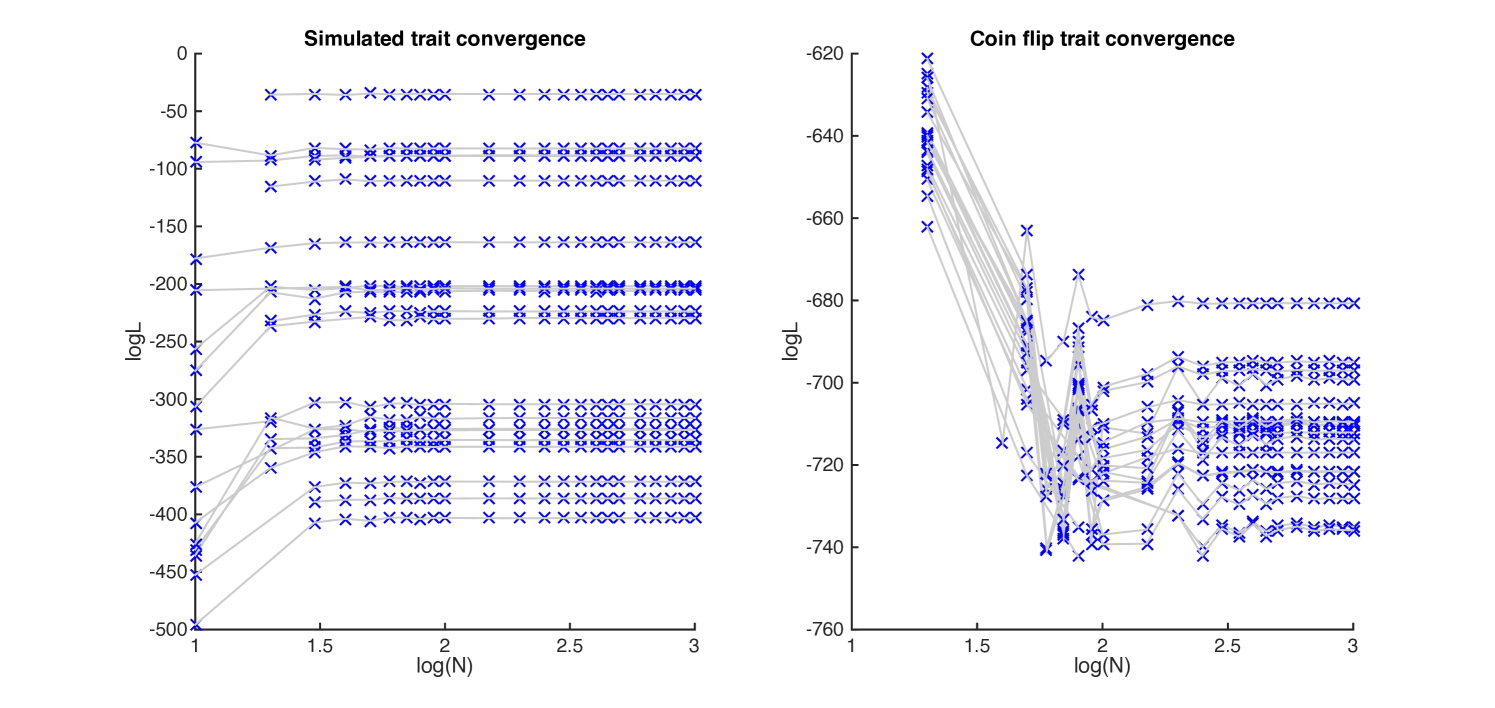

We also examined convergence of the algorithm on randomly generated characters. We first evolved liabilities according to the threshold model, using the parameter settings obtained above. To examine the difference in performance for non-phylogenetic characters we also simulated binary characters by simulated coin flipping. Twenty replicates were carried out for each case.

The second experiment extends the model comparisons carried out in Marazzi et al. (2012) to include the threshold models. For this comparison we fix the transitional variance at one, since changing this values corresponds to a rescaling of the Brownian process, with no change in likelihood. With only one character, the maximum likelihood estimate of the root variance is zero, irrespective of the data. This leaves a single parameter to infer: the value of the liability at the root state. We computed a maximum likelihood estimate for the state at the root, then applied our algorithm with a sufficiently large value of to be sure of convergence. The Akaike Information Criterion (AIC) was determined and compared with those obtained for the model of Marazzi et al. (2012).

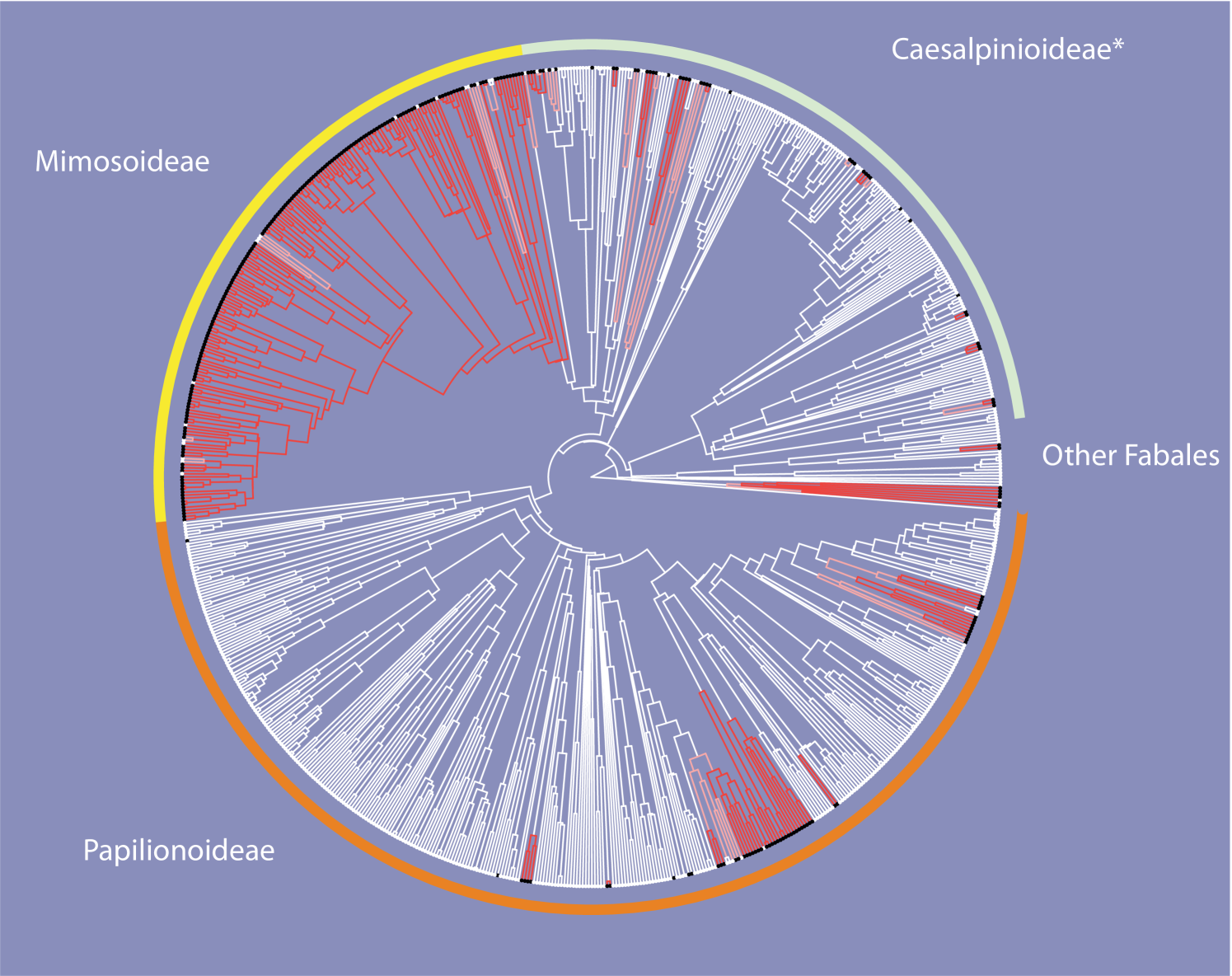

For the third experiment, we determine the marginal posterior densities for the liabilities at internal nodes, using Algorithm 2. These posterior probabilities are then mapped onto the phylogeny, using shading to denote the (marginal) posterior probability that a liability is larger than zero. We therefore obtain a figure analogous to Supplementary Figure 7 of Marazzi et al. (2012).

Results

Convergence of the algorithm

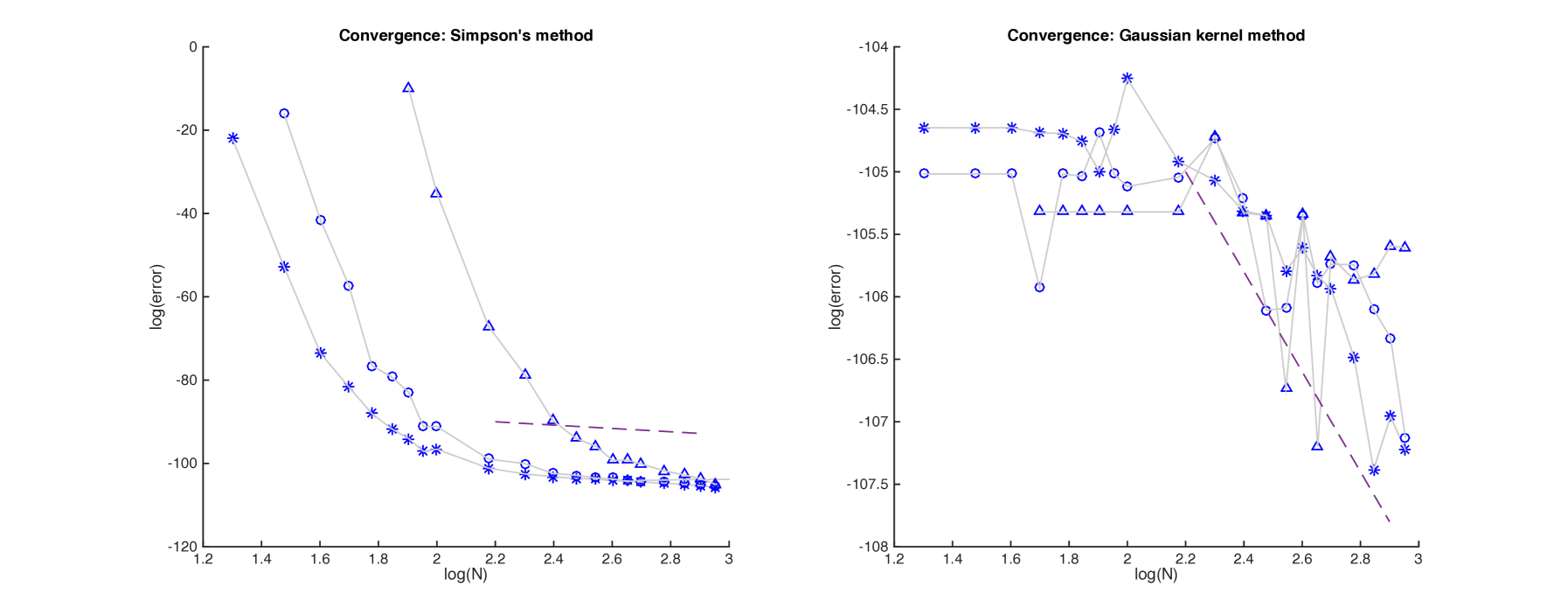

Plots of error versus are given in Figure 1, both for Simpson’s method (left) and for the modified Gaussian kernel method (right). For larger , the error in a log-log plot decreases with slope at most (as indicated), corresponding to convergence of the method. Log-log plots of error versus for the simulated data are given in Figure 2. In each case, the method converges for by .

While the level of convergence for both algorithms is correct, the accuracy of the method based on Simpson’s method is far worse. When a branch length is short, the transition density becomes highly peaked, as does the function being integrated. Such functions are difficult to approximate with piecewise quadratics, and Simpson’s method can fail miserably. Indeed, for , we would often observe negative estimated probabilities, or estimates greater than one! (These were omitted from the plots). While we can always bound estimates computed by the algorithm, a sounder approach is to improve the integration technique. This we did using the Gaussian kernel method, and the result was far improved accuracy for little additional computation. For the remainder of the experiments with this model we used the Gaussian kernel method when carrying out numerical integration.

Model comparison

Marazzi et al. (2012) describe AIC comparisons between their pre-cursor model and a conventional binary trait model. We extend this comparison to include the threshold model. This is a one parameter model, the parameter being the value of the liability at the root. We used the MATLAB command fminsearch with multiple starting points to compute the maximum likelihood estimate for this value. The resulting log-likelihood was , giving an AIC of . This compares to an AIC of for the (two parameter) binary character model and an AIC of for the (one parameter) precursor model of Marazzi et al. (2012).

We analyzed the five other EFN traits in the same way, and present the computed AIC values in Table 1, together with AIC values for the two parameter binary state model and one parameter precursor model computed by Marazzi et al. (2012) (and the 2 parameter precursor model for trait 6). We see that the threshold model fits better than either the binary or precursor models for all of the six traits.

| Trait | Model | AIC | ||

|---|---|---|---|---|

| 1 (All) | Binary | 2 | -251.7 | 507.4 |

| Precursor | 1 | -246.7 | 495.4 | |

| Threshold | 1 | -240.6 | 483.2 | |

| 2 (Leaves) | Binary | 2 | -240.3 | 484.6 |

| Precursor | 1 | -234.5 | 470.9 | |

| Threshold | 1 | -230.6 | 463.1 | |

| 3 (Inflorescence) | Binary | 2 | -108.3 | 220.5 |

| Precursor | 1 | -110.9 | 223.9 | |

| Threshold | 1 | -108.3 | 218.5 | |

| 4 (Trichomes) | Binary | 2 | -86.7 | 177.3 |

| Precursor | 1 | -86.9 | 325.3 | |

| Threshold | 1 | -85.8 | 173.5 | |

| 5 (Substitutive) | Binary | 2 | -163.0 | 330.1 |

| Precursor | 1 | -161.6 | 325.3 | |

| Threshold | 1 | -161.3 | 324.6 | |

| 6 (True) | Binary | 2 | -132.3.1 | 268.7 |

| Precursor | 1 | -131.1 | 264.3 | |

| Precursor | 2 | -126.7 | 257.3 | |

| Threshold | 1 | -125.3 | 252.6 |

It is not clear, a priori, why the threshold model would appear to fit some data better than the precursor model since they appear to capture similar evolutionary phenomena. It would be useful to explore this observation more thoroughly, given the new computational tools, perhaps incorporating phylogenetic error in a manner similar to Marazzi et al. (2012).

Inferring ancestral liabilities

Figure 3 gives a representation of how the (marginal) posterior liabilities change over the tree. Branches are divided into three classes according to the posterior probability that the liability is positive, with lineages with posterior probability colored red, lineages with posterior probability colored white, and remaining lineages colored pink.

This diagram can be compared to Supplementary Figure 7, of Marazzi et al. (2012). The representations are, on the whole, directly comparable. An positive liability corresponds, roughly, to an ancestral precursor state. Both analyses suggest multiple origins of a precursor state, for example for a large clade of Mimosoidae. Interestingly, there are several clades where the analysis of Marazzi et al. (2012) suggests widespread ancestral distribution of the precursor state whereas our analysis indicates a negative liability at the same nodes.

Once again, our analysis is only preliminary, our goal here simply being to demonstrate what calculations can now be carried out.

Discussion

We have introduced a new framework for the computation of likelihoods from continuous characters, and illustrated the framework using an efficient algorithm for evaluating (approximate) likelihoods under Wright and Felsenstein’s threshold model.

This framework opens up possibilities in several directions. The numerical integration, or numerical quadrature, literature is vast. In this article, we have focused in on a popular and simple numerical integration method, and our algorithm should be seen as a proof of principle rather than a definitive threshold likelihood method. There is no question that the numerical efficiency of Algorithm 1 could be improved significantly through the use of more sophisticated techniques: better basis functions or adaptive quadrature methods for a start.

The connection with Felsenstein’s (discrete character) pruning algorithm also opens up opportunities for efficiency gains. Techniques such as storing partial likelihoods, or approximating local neighborhoods, are fundamental to efficient phylogenetic computations on sequence data (Felsenstein, 1981b, Larget and Simon, 1998, Pond and Muse, 2004, Stamatakis, 2006, Swofford, 2003). These tricks could all be now applied to the calculation of likelihoods from continuous traits.

Finally, we stress that the algorithm does not depend on special characteristics of the continuous trait model, beyond conditional independence of separate lineages. Felsenstein’s pruning algorithm for continuous characters is limited to Gaussian processes and breaks down if, for example, the transition probabilities are governed by Levy processes (Landis et al., 2013). In contrast, our approach works whenever we can numerically evaluation transition densities, an indeed only a few minor changes would transform our Algorithm 1 to one implementing on a far more complex evolutionary process.

Acknowledgements

This research was supported by an Allan Wilson Centre Doctoral Scholarship to GH, financial support to DB from the Allan Wilson Centre, a Marsden grant to DB, and financial support to all authors from the University of Otago.

References

- Cavalli-Sforza and Edwards [1967] L. L. Cavalli-Sforza and A. W. Edwards. Phylogenetic analysis. models and estimation procedures. American journal of human genetics, 19(3 Pt 1):233, 1967.

- Cheney and Kincaid [2012] E. Cheney and D. Kincaid. Numerical Mathematics and Computing. Cengage Learning, 2012.

- Dahlquist and Björck [2008] G. Dahlquist and Å. Björck. Chapter 5: Numerical integration. Numerical Methods in Scientific Computing, 1, 2008.

- Davis and Rabinowitz [2007] P. J. Davis and P. Rabinowitz. Methods of numerical integration. Courier Corporation, 2007.

- Felsenstein [1968] J. Felsenstein. Statistical inference and the estimation of phylogenies. 1968.

- Felsenstein [1973] J. Felsenstein. Maximum likelihood and minimum-steps methods for estimating evolutionary trees from data on discrete characters. Systematic zoology, pages 240–249, 1973.

- Felsenstein [1981a] J. Felsenstein. Evolutionary Trees From Gene Frequencies and Quantitative Characters: Finding Maximum Likelihood Estimates. Evolution, 35(6):1229–1242, Nov. 1981a. ISSN 0014-3820. doi: 10.2307/2408134. URL http://www.jstor.org/stable/2408134.

- Felsenstein [1981b] J. Felsenstein. Evolutionary trees from dna sequences: a maximum likelihood approach. Journal of molecular evolution, 17(6):368–376, 1981b.

- Felsenstein [1988] J. Felsenstein. Phylogenies and quantitative characters. Annual Review of Ecology and Systematics, pages 445–471, 1988.

- Felsenstein [2002] J. Felsenstein. Quantitative characters, phylogenies, and morphometrics. Morphology, shape and phylogeny, pages 27–44, 2002.

- Felsenstein [2005] J. Felsenstein. Using the quantitative genetic threshold model for inferences between and within species. Philosophical Transactions of the Royal Society B: Biological Sciences, 360(1459):1427–1434, 2005.

- Felsenstein [2012] J. Felsenstein. A comparative method for both discrete and continuous characters using the threshold model. The American Naturalist, 179(2):145–156, 2012.

- Felsenstein and Churchill [1996] J. Felsenstein and G. A. Churchill. A hidden markov model approach to variation among sites in rate of evolution. Molecular Biology and Evolution, 13(1):93–104, 1996.

- Hansen [1997] T. F. Hansen. Stabilizing selection and the comparative analysis of adaptation. Evolution, pages 1341–1351, 1997.

- Harmon et al. [2010] L. J. Harmon, J. B. Losos, T. Jonathan Davies, R. G. Gillespie, J. L. Gittleman, W. Bryan Jennings, K. H. Kozak, M. A. McPeek, F. Moreno-Roark, T. J. Near, et al. Early bursts of body size and shape evolution are rare in comparative data. Evolution, 64(8):2385–2396, 2010.

- Khaitovich et al. [2005] P. Khaitovich, S. Pääbo, and G. Weiss. Toward a neutral evolutionary model of gene expression. Genetics, 170(2):929–939, 2005. URL http://www.genetics.org/content/170/2/929.short.

- Khaitovich et al. [2006] P. Khaitovich, W. Enard, M. Lachmann, and S. Pääbo. Evolution of primate gene expression. Nature Reviews Genetics, 7(9):693–702, Sept. 2006. ISSN 1471-0056. doi: 10.1038/nrg1940. URL http://www.nature.com.ezproxy.otago.ac.nz/nrg/journal/v7/n9/full/nrg1940.html.

- Kutsukake and Innan [2013] N. Kutsukake and H. Innan. Simulation-based likelihood approach for evolutionary models of phenotypic traits on phylogeny. Evolution, 67(2):355–367, 2013.

- Lande [1976] R. Lande. Natural selection and random genetic drift in phenotypic evolution. Evolution, pages 314–334, 1976.

- Landis et al. [2013] M. J. Landis, J. G. Schraiber, and M. Liang. Phylogenetic analysis using lévy processes: finding jumps in the evolution of continuous traits. Systematic biology, 62(2):193–204, 2013.

- Larget and Simon [1998] B. Larget and D. Simon. Faster likelihood calculations on trees. Pittsburgh, Pennsylvania: Department of Mathematics and Computer Science, Duquesne University, pages 98–02, 1998.

- Lemey et al. [2010] P. Lemey, A. Rambaut, J. J. Welch, and M. A. Suchard. Phylogeography takes a relaxed random walk in continuous space and time. Molecular biology and evolution, 27(8):1877–1885, 2010.

- Lepage et al. [2006] T. Lepage, S. Lawi, P. Tupper, and D. Bryant. Continuous and tractable models for the variation of evolutionary rates. Mathematical biosciences, 199(2):216–233, 2006.

- Marazzi et al. [2012] B. Marazzi, C. Ané, M. F. Simon, A. Delgado-Salinas, M. Luckow, and M. J. Sanderson. Locating evolutionary precursors on a phylogenetic tree. Evolution, 66(12):3918–3930, 2012.

- Pond and Muse [2004] S. L. K. Pond and S. V. Muse. Column sorting: Rapid calculation of the phylogenetic likelihood function. Systematic biology, 53(5):685–692, 2004.

- Ronquist [2004] F. Ronquist. Bayesian inference of character evolution. Trends in Ecology & Evolution, 19(9):475–481, 2004. URL http://www.sciencedirect.com/science/article/pii/S0169534704001831.

- Simon et al. [2009] M. F. Simon, R. Grether, L. P. de Queiroz, C. Skema, R. T. Pennington, and C. E. Hughes. Recent assembly of the cerrado, a neotropical plant diversity hotspot, by in situ evolution of adaptations to fire. Proceedings of the National Academy of Sciences, 106(48):20359–20364, 2009.

- Sirén et al. [2011] J. Sirén, P. Marttinen, and J. Corander. Reconstructing population histories from single nucleotide polymorphism data. Molecular biology and evolution, 28(1):673–683, 2011.

- Stamatakis [2006] A. Stamatakis. Raxml-vi-hpc: maximum likelihood-based phylogenetic analyses with thousands of taxa and mixed models. Bioinformatics, 22(21):2688–2690, 2006.

- Stevens [1991] P. F. Stevens. Character states, morphological variation, and phylogenetic analysis: a review. Systematic Botany, pages 553–583, 1991. URL http://www.jstor.org/stable/2419343.

- Swofford [2003] D. L. Swofford. PAUP*. Phylogenetic analysis using parsimony (* and other methods). Version 4.. 2003.

- Wright [1934] S. Wright. An analysis of variability in number of digits in an inbred strain of guinea pigs. Genetics, 19(6):506, 1934.

- Yang [2006] Z. Yang. Computational molecular evolution, volume 21. Oxford University Press Oxford, 2006.