Empirical eigenvalue based testing for structural breaks in linear panel data models

Abstract.

Testing for stability in linear panel data models has become an important topic in both the statistics and econometrics research communities. The available methodologies address testing for changes in the mean/linear trend, or testing for breaks in the covariance structure by checking for the constancy of common factor loadings. In such cases when an external shock induces a change to the stochastic structure of panel data, it is unclear whether the change would be reflected in the mean, the covariance structure, or both. In this paper, we develop a test for structural stability of linear panel data models that is based on monitoring for changes in the largest eigenvalue of the sample covariance matrix. The asymptotic distribution of the proposed test statistic is established under the null hypothesis that the mean and covariance structure of the panel data’s cross sectional units remain stable during the observation period. We show that the test is consistent assuming common breaks in the mean or factor loadings. These results are investigated by means of a Monte Carlo simulation study, and their usefulness is demonstrated with an application to U.S. treasury yield curve data, in which some interesting features of the 2007-2008 subprime crisis are illuminated.

Key words and phrases:

panel data, change point detection, time series, empirical eigenvalues, CUSUM process, weak convergence

1. Introduction

We consider in this paper the problem of testing for the presence of a structural break in linear panel data models. Structural breaks in panel data may result from any of a number of sources. For example, if the data under consideration consists of U.S. macroeconomic indicators, then the onset of a recession, or the introduction of a new technology, may be evidenced by changes in the correlations between indicators or linear model parameters fitted from the data.

Change point analysis has been extensively developed to study such features in data; we refer to Aue and Horváth (2012) for a recent survey of the field in the context of time series. Adapting change point methodology to the panel data setting presents a difficulty since the dimension, or number of cross sectional units (N), may be larger in relation to the sample size (T) than is typical in classical change point analysis. This encourages asymptotic frameworks in which both N and T tend jointly to infinity.

Most of the literature in this direction addresses either testing for changes in the mean, or testing for changes in the correlation structure as measured by changes in common factor loadings. With regard to testing for and estimating changes in the mean, we refer to Bai (2010), who derives a least squares change point estimator. Kim (2011, 2014) and Baltagi et al. (2015) extend this methodology to account for changes in linear trends in the presence of cross sectional dependence modeled by common factors. Horváth and Hušková (2012) develop a test for a structural change in the mean based on the CUSUM estimator. Li et al. (2014) and Qian and Su (2014) consider multiple structural breaks in panel data, and Kao et al. (2014) consider break testing under cointegration.

Estimating and testing for changes in the covariance of scalar and vector valued time series of a fixed dimension are considered in Galeano and Peña (2007), Aue et al. (2009), and Wied et al. (2012). With regard to testing for changes in the factor structure of panel data, Breitung and Eickmeier (2011) develop methodology that relies on testing for constancy of the least squares estimates obtained by regression on the principal component factors. Their test depends on estimating the number of common factors according to the information criterion developed in Bai and Ng (2002). In both the testing procedure and the method used to determine the number of common factors, it is presumed that the mean remains constant.

When external shocks induce a change to the stochastic structure of panel data, it is unclear whether the change would affect the mean, the covariance structure, or both. Methods for detecting changes in the mean appear to be somewhat robust to small changes in the covariance structure of the panels. However, the methods proposed in Breitung and Eickmeier (2011) to test for changes in the common factor loadings are sensitive to both changes in the mean and large changes in the covariance, as evidenced by non-monotonic power. This was recently addressed in Yamamoto and Tanaka (2015), in which a correction is proposed, but it raises the question of whether alternatives to estimating principal components and the number of common factors might be effective in detecting instability in panel data.

The alternative that we explore here relies on analyzing the largest eigenvalues of the covariance matrix. Using the largest eigenvalues of a covariance matrix as a simplified summary of the covariance structure of multivariate time series has served an important role in finance and econometrics for quite some time. This idea is utilized in Markowitz portfolio optimization (cf. Markowitz (1952, 1956)), and to model co–movements of markets and stocks as a barometer for risk (cf. Keogh et al. (2004) and Zovko and Farmer (2007)), among other applications.

In this paper, we propose methodology for testing structural stability in linear panel data models that is based on a process derived from the largest eigenvalue of the covariance matrix based on an increasing proportion of the total sample. The asymptotic distribution of the eigenvalue process is established assuming structural stability. Furthermore, we show that functionals of the eigenvalue process diverge when there is a common break in the mean or covariance as measured by the common factor loadings.

The rest of the paper is organized as follows. In Section 2, we present the linear panel data models and assumptions considered in the paper, as well as the main asymptotic results for the largest eigenvalue under the null hypothesis of stability of the model parameters. Section 3 contains the details of applying the results of Section 2 to the change point problem, including asymptotic consistency results under the mean break and factor loading break alternatives. In Section 4, we discuss the practical implementation of the test, and present the results of a Monte Carlo simulation study. Section 5 contains an application of the methodology developed in the paper to US treasury yield curve data. Analogous results for smaller eigenvalues are considered in Section 6. All proofs of the technical results are collected in Section 7.

2. Models, assumptions, and asymptotics under

We consider the model

| (2.1) |

where denotes the cross section of the panel at time , denotes the initial mean of the cross section that changes to at the unknown time , denotes a real valued common factor with initial loadings that may change to , and denote the idiosyncratic errors. It is presumed that both the common factor and idiosyncratic errors may be serially correlated. As we develop asymptotics, we assume that the number of cross sections depends on the observation period , and is allowed to tend to infinity with . We make the assumption that for the sake of simplicity; these results could be extended to the more general case of a vector valued common factor and factor loading.

We are interested in testing the null hypothesis that the model parameters remain stable during the observation period , i.e.

When holds, the model of (2.1) reduces to

| (2.2) |

Let denote the matrix transpose, and define the vectors . We define

| (2.3) |

to be the sample covariance matrix based on the proportion of the sample, where

In order to test , we utilize the processes derived from the largest eigenvalues of . We focus our attention at first on the process derived from the largest eigenvalue, and the primary objective of this section is to establish the weak convergence of under . Analogous results for processes derived from the smaller eigenvalues are provided in Section 6. We note that an alternative to using is to use , which are equivalent with the largest eigenvalues of

| (2.4) |
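As a rough illustration of the objects described above, the following Python sketch computes the largest eigenvalue of a covariance matrix built from the first ⌊Tu⌋ observations of a panel. The function names, the centering by the full-sample mean, the normalization by T, and the use of power iteration are all assumptions made for this sketch; they are not claimed to match the exact definition in (2.3).

```python
import math

def partial_covariance(X, u):
    """Covariance matrix built from the first floor(T*u) rows of X.

    X is a list of T observation vectors, each of length N.
    Assumptions of this sketch: centering uses the full-sample mean
    and the sum of outer products is divided by T.
    """
    T, N = len(X), len(X[0])
    k = int(math.floor(T * u))
    mean = [sum(row[i] for row in X) / T for i in range(N)]
    C = [[0.0] * N for _ in range(N)]
    for row in X[:k]:
        d = [row[i] - mean[i] for i in range(N)]
        for i in range(N):
            for j in range(N):
                C[i][j] += d[i] * d[j] / T
    return C

def largest_eigenvalue(C, iters=500):
    """Largest eigenvalue of a symmetric positive semidefinite matrix.

    Uses plain power iteration from a fixed, slightly asymmetric start
    vector; it can fail if that vector is orthogonal to the leading
    eigenvector, which is ignored here for simplicity.
    """
    N = len(C)
    v = [1.0 + 0.1 * i for i in range(N)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    lam = 0.0
    for _ in range(iters):
        w = [sum(C[i][j] * v[j] for j in range(N)) for i in range(N)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0
        v = [x / norm for x in w]
        lam = norm  # for unit v, ||C v|| tends to the largest eigenvalue
    return lam

def eigenvalue_path(X, grid):
    """Largest eigenvalue of the partial covariance on a grid of u values."""
    return [largest_eigenvalue(partial_covariance(X, u)) for u in grid]
```

Calling `eigenvalue_path(X, [t / T for t in range(1, T + 1)])` traces the largest eigenvalue as the proportion of the sample grows. In practice a linear algebra library would replace the hand-written power iteration.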

Assuming that holds, does not depend on , and in this case we define the eigenvalues and eigenvectors of by

| (2.5) |

where , and denotes the Euclidean norm in . Since is allowed to depend on , both the eigenvalues and eigenvectors may evolve as . Throughout this paper, we make use of the following assumptions:

Assumption 2.1.

The eigenvalues satisfy that for some constant .

Assumption 2.2.

The common factor loadings satisfy that

Assuming that the eigenvalues of are distinct is necessary to derive a normal approximation for their estimates, and is a common assumption in the literature. We assume that the common factors and idiosyncratic errors satisfy a fairly general weak dependence condition.

Definition 2.1.

We say that a stationary time series is an approximable Bernoulli shift with rate function if and for some measurable function where are independent and identically distributed random variables, and with and the are independent and identically distributed copies of .

The space of stationary processes that may be represented as Bernoulli shifts is enormous; we refer to Wu (2005) for a discussion. Examples include stationary ARMA, ARCH, and GARCH processes. The rate function describes the rate at which such processes can be approximated with sequences exhibiting a finite range of dependence. In many examples of interest, the rate function may be taken to decay exponentially in the lag parameter.
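To make the approximation idea concrete, the sketch below treats a causal AR(1) process as a Bernoulli shift and builds a coupled version that keeps the m most recent innovations and replaces all older ones with independent copies. The AR coefficient, the truncation depth, and all function names are assumptions chosen for illustration. For standard normal innovations the L2 distance between the two versions is sqrt(2 ρ^(2m) / (1 − ρ²)), which decays exponentially in m.

```python
import math
import random

def ar1_from_innovations(eps, rho):
    """X = sum_j rho**j * eps[j], where eps[0] is the most recent innovation."""
    return sum((rho ** j) * e for j, e in enumerate(eps))

def coupled_pair(rho, m, depth, rng):
    """Return (X, X_m): X_m shares the m most recent innovations with X
    and uses independent copies for all older innovations."""
    eps = [rng.gauss(0.0, 1.0) for _ in range(depth)]
    eps_star = [rng.gauss(0.0, 1.0) for _ in range(depth)]
    mixed = eps[:m] + eps_star[m:]
    return ar1_from_innovations(eps, rho), ar1_from_innovations(mixed, rho)

def l2_distance(rho, m, depth=60, reps=4000, seed=0):
    """Monte Carlo estimate of sqrt(E (X - X_m)^2), past truncated at depth."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        x, xm = coupled_pair(rho, m, depth, rng)
        total += (x - xm) ** 2
    return math.sqrt(total / reps)

def l2_distance_exact(rho, m):
    """Closed form of sqrt(E (X - X_m)^2) for standard normal innovations."""
    return math.sqrt(2.0 * rho ** (2 * m) / (1.0 - rho ** 2))
```

Comparing `l2_distance(0.5, m)` with `l2_distance_exact(0.5, m)` for a few values of m shows the geometric decay that an exponentially decaying rate function describes.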

Assumption 2.3.

(a) is with rate function for constants and , and .

(b) The sequences , are each with rate functions for constants and . There exist constants and such that .

(c) The sequences and are independent.

The least restrictive moment condition that could be assumed in order to obtain a normal approximation for the empirical eigenvalues is four moments. Our assumption of twelve moments comes from the fact that we apply a third order Taylor series expansion for the difference between the empirical eigenvalue process and , (cf. Hall and Hosseini–Nasab (2009)) and twelve moments are needed to get an upper bound for the highest order term that is uniform with respect to . The condition in Assumption 2.3 that is nonrestrictive; it makes the model (2.2) identifiable. In order to state the main result, we define

Theorem 2.1.

| (2.6) |

then

where is a Wiener process, denotes weak convergence in the Skorokhod topology, and

Theorem 2.1 shows that the distribution of the largest eigenvalue process may be approximated by a Brownian motion. We note that the norming sequence , which is essentially the long run variance of the quadratic forms , may change with . In fact, we show in Section 7 that if , then under , as , if . The necessity of including the logarithm term in the rate condition (2.6) comes from the fact that we establish weak convergence on the entire unit interval. This condition can be improved by considering convergence on an interval that is bounded away from zero.

Theorem 2.2.

Conditions (2.6) and (2.7) require that the sample size is asymptotically larger than the squared dimension . The case when is proportional to has received considerable attention in the probability and statistics literature. Assuming that is based on independent and identically distributed entries, the distribution of converges to a Tracy-Widom distribution (cf. Johnstone (2008)). For a survey of the theory of eigenvalues of large random matrices, we refer to Aue and Paul (2014).

3. Changepoint detection

3.1. Estimating the norming sequence

Consistent estimation of is required in order to apply Theorems 2.1 and 2.2 to test . As is defined as the long run covariance of the quadratic forms , we propose a natural nonparametric estimator. We define by

Let where

and is the least squares change point estimator for a change in the mean defined in Section 3 of Bai (2010). Estimating the mean under the alternative of a mean change is done to ensure monotonic power in that case. Let be a kernel/weight function that is continuous and symmetric about the origin in with bounded support, and satisfying . Examples of such functions include the Bartlett and Parzen kernels; further examples and discussion may be found in Taniguchi and Kakizawa (2000). We define the estimator for by

| (3.1) |

where denotes a smoothing bandwidth parameter, and

with

The results in Theorems 2.1, 2.2, and 3.1 can be used to test for the stability of the largest eigenvalue, which, as we show below, suggests stability of the model parameters.
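A kernel long run variance estimator of the kind described in this subsection can be sketched as follows for a generic scalar series. This is only an illustration: the input `z` stands in for the estimated quadratic forms, the Bartlett kernel is used as one of the examples named above, and the function names and the bandwidth argument `h` are assumptions of the sketch rather than the paper's notation.

```python
def bartlett(x):
    """Bartlett kernel: 1 - |x| on [-1, 1] and zero elsewhere."""
    ax = abs(x)
    return 1.0 - ax if ax <= 1.0 else 0.0

def autocovariance(z, lag):
    """Sample autocovariance of z at a nonnegative lag, normalized by len(z)."""
    n = len(z)
    zbar = sum(z) / n
    return sum((z[t] - zbar) * (z[t + lag] - zbar) for t in range(n - lag)) / n

def long_run_variance(z, h):
    """Kernel estimator gamma_0 + 2 * sum_{l >= 1} K(l / h) * gamma_l."""
    n = len(z)
    total = autocovariance(z, 0)
    for lag in range(1, n):
        w = bartlett(lag / h)
        if w == 0.0:
            break  # the Bartlett weight is zero for every lag >= h
        total += 2.0 * w * autocovariance(z, lag)
    return total
```

For the short series `[1, -1, 1, -1]` with `h = 2`, the lag-zero autocovariance is 1, the lag-one autocovariance is -0.75 with weight 0.5, and the estimate is 0.25.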

Corollary 3.1.

The continuous mapping theorem and Corollary 3.1 imply that

| (3.4) |

The limiting distribution on the right hand side of (3.4) is commonly referred to as the Kolmogorov distribution. An approximate test of size of is to reject if is larger than the critical value of the Kolmogorov distribution. One could also consider alternate functionals of to test . The distributions of many functionals of are well–known (cf. Shorack and Wellner (1986), pp. 142–149).
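Critical values and p-values of the Kolmogorov distribution, i.e. the distribution of the supremum of the absolute value of a Brownian bridge on the unit interval, can be obtained from its classical series representation. The sketch below is a minimal standard-library version; the function names, the number of series terms, and the bisection bounds are choices made for this illustration.

```python
import math

def kolmogorov_cdf(x, terms=100):
    """P(sup_{0<=u<=1} |B(u)| <= x) for a Brownian bridge B.

    Uses the series 1 - 2 * sum_{k>=1} (-1)^(k-1) * exp(-2 k^2 x^2).
    """
    if x <= 0.0:
        return 0.0
    s = sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * x * x)
            for k in range(1, terms + 1))
    return 1.0 - 2.0 * s

def kolmogorov_critical_value(alpha, lo=0.2, hi=3.0, tol=1e-10):
    """Upper alpha critical value, found by bisection on the CDF."""
    target = 1.0 - alpha
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if kolmogorov_cdf(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def kolmogorov_p_value(stat):
    """Asymptotic p-value for an observed value of the sup statistic."""
    return 1.0 - kolmogorov_cdf(stat)
```

The 10%, 5%, and 1% critical values computed this way are approximately 1.224, 1.358, and 1.628, so a test at the 5% level rejects when the statistic exceeds roughly 1.358.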

3.2. Consistency under alternatives

We now turn our attention to studying the consistency of tests for based on under the mean break and factor loading break alternatives. Following the literature, we assume that the change does not occur too close to the end points of the sample:

| (3.5) |

First we consider the case of a break in the mean, i.e. the model

| (3.6) |

holds. Let and assume

| (3.7) |

Theorem 3.2.

We note that assumptions (3.5) and (3.7) also appeared in Horváth and Hušková (2012) where the optimality of these conditions is discussed. It is clear that if is large, relatively small changes can be detected by . As a consequence of the proof of Theorem 3.2, it follows that

i.e. a change in the mean is asymptotically entirely captured by the largest eigenvalue of the partial covariance matrices.
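A heuristic population-level calculation suggests why a mean break loads onto the largest eigenvalue. The notation here is an assumption made for this sketch: the break occurs at the fraction $\theta$ of the sample, the mean vector shifts from $\mu$ to $\mu + \delta$, and the covariance matrix $C$ of the cross section is the same before and after the break. Pooling the two regimes with weights $\theta$ and $1-\theta$ gives

```latex
\begin{align*}
\bar{\mu} &= \theta \mu + (1-\theta)(\mu + \delta) = \mu + (1-\theta)\delta, \\
\Sigma_{\mathrm{pooled}}
  &= C + \theta\,(\mu - \bar{\mu})(\mu - \bar{\mu})^{\top}
       + (1-\theta)\,(\mu + \delta - \bar{\mu})(\mu + \delta - \bar{\mu})^{\top} \\
  &= C + \theta(1-\theta)^{2}\,\delta\delta^{\top}
       + (1-\theta)\theta^{2}\,\delta\delta^{\top} \\
  &= C + \theta(1-\theta)\,\delta\delta^{\top}, \\
\lambda_{1}(\Sigma_{\mathrm{pooled}})
  &\ge \theta(1-\theta)\,\lVert \delta \rVert^{2}.
\end{align*}
```

The last inequality holds because $C$ is positive semidefinite. The break therefore adds a rank-one term whose only nonzero eigenvalue is $\theta(1-\theta)\lVert\delta\rVert^{2}$, which grows with the number of cross sections affected by the change.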

The condition (3.7) suggests how a local change in the mean alternative may be considered. For example, if and , is fixed , we need that for (3.7) to hold, which describes at what rate may tend to zero while maintaining consistency.

Next we consider the model

| (3.9) |

i.e. the means of the panels remain the same but the loadings change at time . Let .

Theorem 3.3.

hold, then , as .

Roughly speaking, it is possible that the covariance might change on a subspace that is orthogonal to the first eigenvector (or more generally the first eigenvectors), and then if this change is not sufficiently large, the first eigenvalue cannot have power to detect it. Condition (3.10) is sufficient to imply that this does not occur.

4. Finite Sample Performance

In order to demonstrate how the result in (3.4) is manifested in finite samples, we present here the results of a Monte Carlo simulation study involving several different data generating processes (DGP’s) that follow (2.1). All simulations were carried out in the R programming language (cf. R Development Core Team (2010)). In order to compute the long run variance estimate defined in (3.1), we used the “sandwich” package (cf. Zeileis (2006)), in particular the “kernHAC” function. The Parzen kernel with the corresponding bandwidth defined in Andrews (1991) was employed.

4.1. Empirical Size

We begin by presenting the results on the empirical size of the test for stability based on the largest eigenvalue by considering two examples of synthetic data generated according to model (2.2). We use the notation to denote that the sequence of random variables are independent and identically distributed with distribution . Let and denote iid standard normal random variables, and let denote independent first-order autoregressive processes with parameter based on standard normal errors. We generated observations according to (2.2) and the DGP’s

(IID): , , ,

and

(AR-1): , , .

The purpose of choosing random parameters , which define the standard deviations of the idiosyncratic errors, and is twofold. Firstly, this forces Assumption 2.1 to hold. Secondly, this choice highlights that the methodology is relatively robust to variations in the parameter values.
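A data generating process of this general type can be sketched in Python as follows. All numerical choices here are hypothetical placeholders and are not the parameter values used in the study: the AR coefficient `phi`, the standard normal draws for the means and loadings, and the uniform range for the idiosyncratic standard deviations are assumptions made purely for illustration. Setting `phi = 0` gives serially independent factors and errors.

```python
import random

def ar1_series(T, phi, rng, burn_in=100):
    """AR(1) series of length T with standard normal innovations."""
    x, out = 0.0, []
    for t in range(T + burn_in):
        x = phi * x + rng.gauss(0.0, 1.0)
        if t >= burn_in:
            out.append(x)
    return out

def simulate_panel(N, T, phi=0.5, seed=0):
    """One-factor panel X[t][i] = mu_i + gamma_i * eta_t + sigma_i * e_{i,t}.

    Hypothetical choices: mu_i and gamma_i are standard normal draws and
    sigma_i is uniform on [0.5, 1.5]; eta and each e_i are AR(1) series.
    """
    rng = random.Random(seed)
    mu = [rng.gauss(0.0, 1.0) for _ in range(N)]
    gamma = [rng.gauss(0.0, 1.0) for _ in range(N)]
    sigma = [rng.uniform(0.5, 1.5) for _ in range(N)]
    eta = ar1_series(T, phi, rng)
    e = [ar1_series(T, phi, rng) for _ in range(N)]
    return [[mu[i] + gamma[i] * eta[t] + sigma[i] * e[i][t] for i in range(N)]
            for t in range(T)]
```

Each call returns a list of T cross sections of length N, and fixing `seed` makes a simulated panel reproducible across runs.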

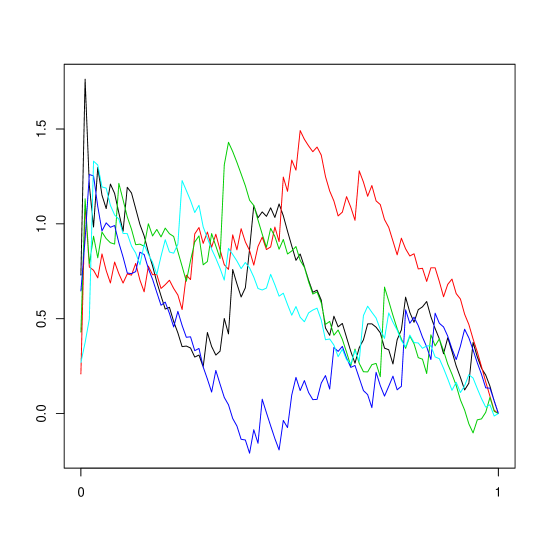

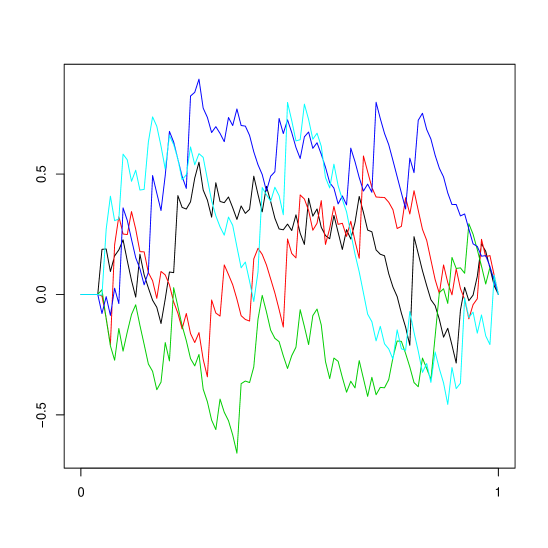

Five simulated paths of the process are shown in the left hand panel of Figure 4.1 when and , under IID. The most notable feature is that each process always starts with a spike near the origin, i.e. is much larger than when is small. The reason for this is that, when is small, is computed from a matrix that is low rank, and hence will tend to be closer to the norm of the observation vectors, which is on the order of , than to the eigenvalue that is being estimated. This problem is ameliorated when decreases or increases, but significantly affects the results for many practical values of and .

| DGP | | IID | | | | | | AR-1 | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| N | T | 10% | 5% | 1% | 10% | 5% | 1% | 10% | 5% | 1% | 10% | 5% | 1% |
| 10 | 50 | 18.1 | 11.2 | 3.8 | 8.8 | 4.9 | 1.8 | 26.7 | 18.4 | 10.0 | 24.7 | 17.9 | 8.4 |
| | 100 | 8.3 | 3.5 | 0.7 | 9.2 | 3.6 | 0.7 | 17.1 | 10.3 | 3.4 | 9.2 | 3.6 | 0.7 |
| | 200 | 8.7 | 4.1 | 0.7 | 8.6 | 4.3 | 1.0 | 11.7 | 5.7 | 2.0 | 10.4 | 5.1 | 1.6 |
| 20 | 50 | 18.6 | 12.3 | 5.5 | 9.5 | 4.8 | 0.7 | 23.7 | 16.5 | 8.0 | 25.8 | 17.8 | 8.7 |
| | 100 | 8.5 | 3.6 | 0.6 | 9.1 | 4.5 | 0.3 | 14.9 | 9.4 | 3.4 | 14.9 | 9.0 | 3.7 |
| | 200 | 8.4 | 4.2 | 0.6 | 8.8 | 3.3 | 0.5 | 11.8 | 6.5 | 2.0 | 12.4 | 6.8 | 1.5 |
| 50 | 50 | 23.3 | 13.7 | 5.3 | 10.2 | 3.9 | 0.7 | 24.8 | 17.3 | 8.8 | 24.4 | 18.6 | 0.9 |
| | 100 | 8.8 | 3.5 | 0.6 | 9.0 | 4.2 | 1.0 | 17.8 | 11.6 | 4.0 | 15.3 | 8.5 | 3.5 |
| | 200 | 10.0 | 5.0 | 1.3 | 8.9 | 3.8 | 0.5 | 13.0 | 7.1 | 2.1 | 12.2 | 6.4 | 1.7 |

In order to correct for this, we define

for a trimming parameter . Five corresponding paths of are illustrated in the right panel of Figure 4.1, with .

Table 4.1 contains the percentages of the test statistic that are larger than the 10%, 5%, and 1% critical values of the Kolmogorov distribution. The results can be summarized as follows:

(1) When is small (), then the size of the test may be inflated by two sources. One of them is the spiked effect, and this is particularly pronounced when is small and is large. If the temporal dependence in the data is low, then increasing can allow the test to achieve good size even for small and relatively large . However, strong temporal dependence can cause size inflation for small that cannot be accounted for by increasing .

(2) Another source of size inflation that is present for larger values of may be attributed to estimating the variance under the alternative of a break in the mean. This may be improved by considering alternative variance estimation approaches, such as those developed in Kejriwal (2009).

(3) The differences in the results between the IID and AR-1 DGP’s were small for larger values of , indicating that the variance estimation is performing well.

(4) For , the empirical sizes are close to nominal in all cases.

4.2. Empirical Power

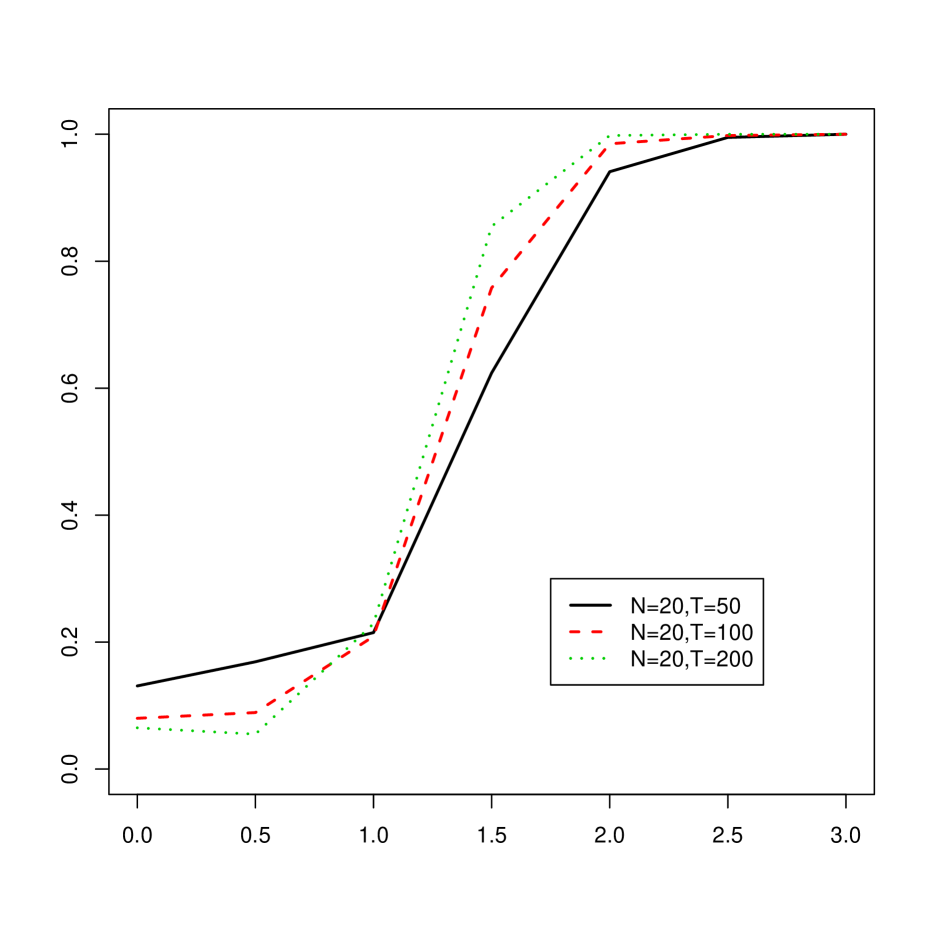

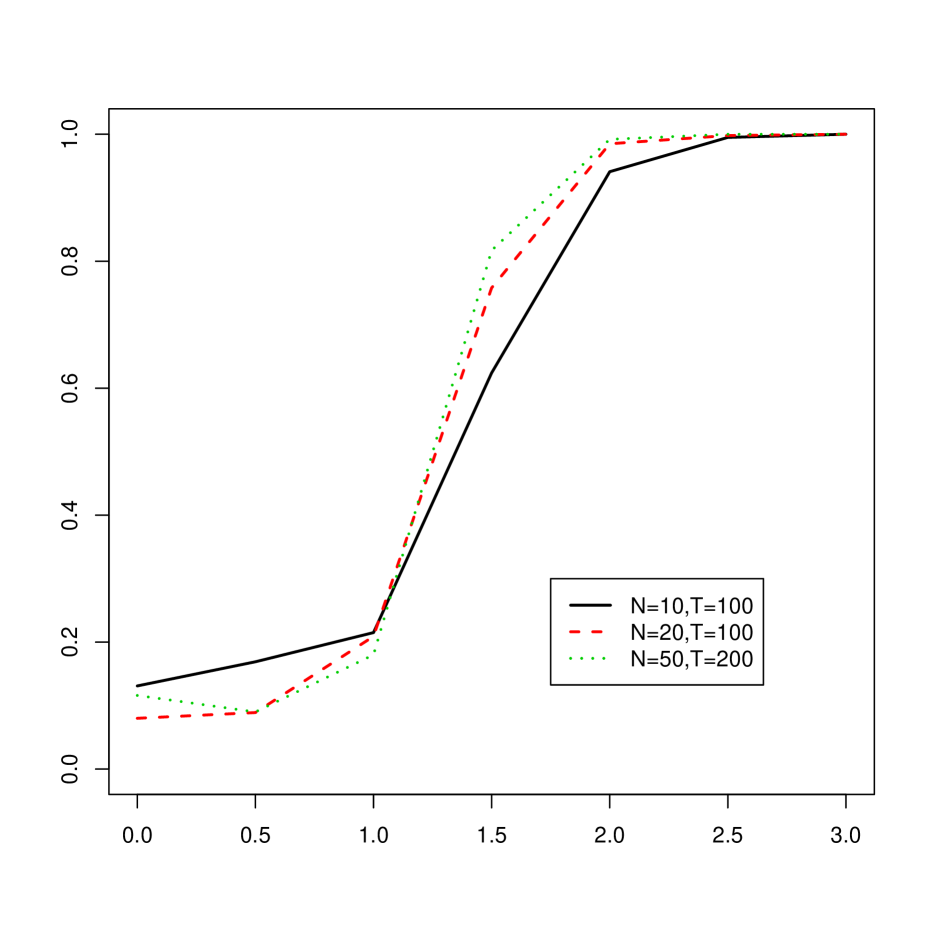

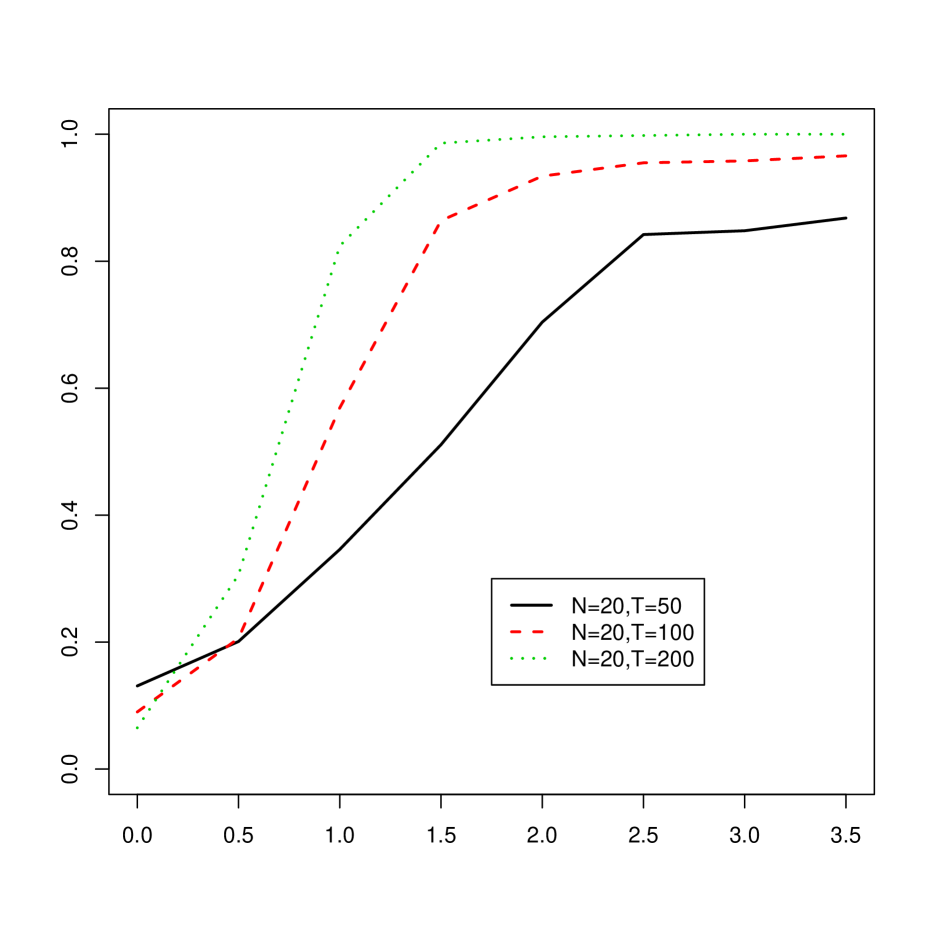

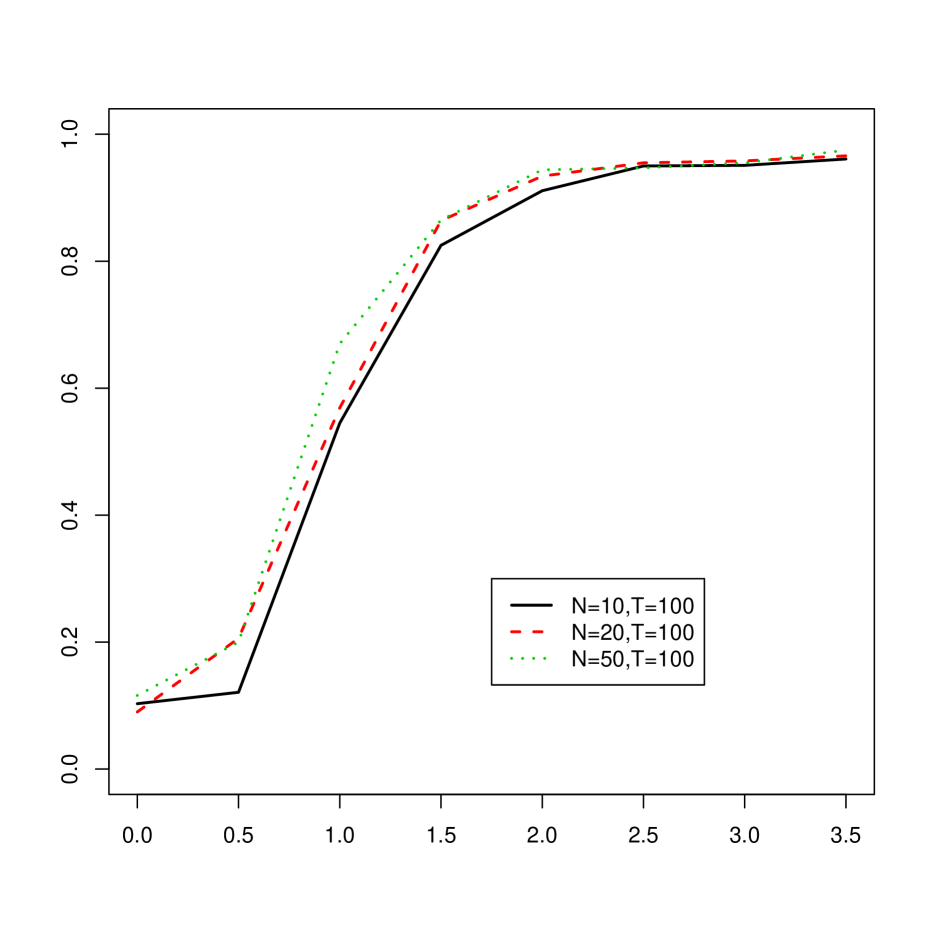

In order to study the power of our test under both the mean break and loading break alternatives, we considered two processes that satisfy (2.1) with with . Throughout the simulations below, we set , i.e. the break was in the middle of the sample. We also studied the situation in which breaks occurred towards the endpoints of the sample. The results in those cases tended to be worse, but not more so than expected. We define the DGP’s

MB(): , where

and

LB(): , where

In each case we take the other terms in (2.1), i.e. the idiosyncratic errors, common factor, and factor loadings, to satisfy AR-1. We let the parameters and vary between and at increments of , and let , and . The results are displayed in terms of power curves in Figures 4.2 and 4.3 in the case of a mean break alternative (MB()) and in Figures 4.4 and 4.5 in the case of breaks in the factor loadings (LB()) when the significance level of the test was fixed at 5%. We summarize the results as follows:

Mean Break:

(1) In the case of a mean break, for each value of and that we considered there is a substantial gain in power for exceeding 1.5. We note that data generated according to AR-1 have cross-sectional standard deviations of on average 1.6, and, when , the average squared size of the change in the mean of each cross section is 1.33. Thus testing based on the largest eigenvalue appeared to be very sensitive to changes in the mean.

(2) Due to the estimation of the variance under a mean break, the test exhibited monotonic power.

(3) Increasing with fixed improved the empirical power, as expected, and the same was observed when was fixed and increased. The latter occurrence is likely attributable to the fact that as increases, changes in the mean occur in more cross sections, and the size is inflated in these cases due to the spiked effect.

Loading Break:

(1) In the case of a break in the factor loadings, even smaller changes relative to the size of the standard deviation of the idiosyncratic errors resulted in dramatic increases in power.

(2) We noticed that for smaller values of the power seemed to level off for larger breaks in the common factors, and never reached more than .

(3) For larger , the power approached 1 at a much faster rate for breaks in the factor loadings, and this occurrence seemed to be independent of the value of .

(4) Increasing resulted in reduced power in this case, although the effects of changing were not particularly pronounced.

5. Application to U.S. Yield Curve Data

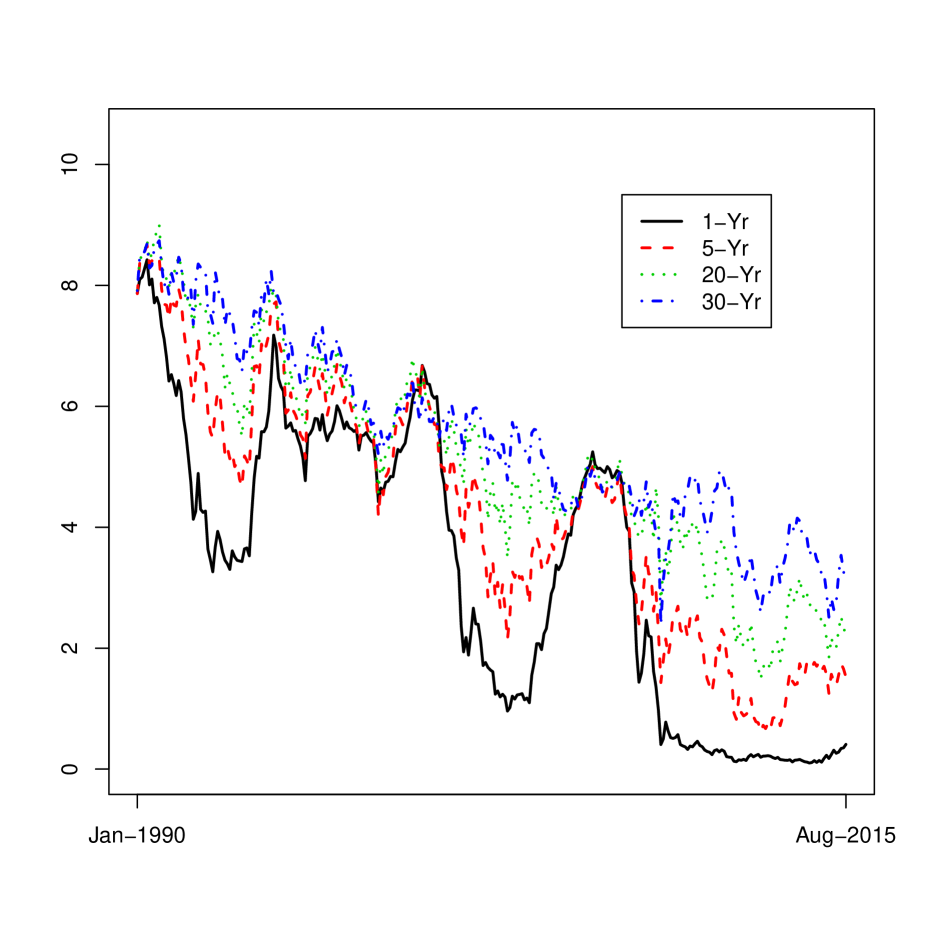

Following Yamamoto and Tanaka (2015), we consider an application of our methodology to test for structural breaks in U.S. Treasury yield curve data considered in Gürkaynak et al. (2007), which is available at http://www.federalreserve.gov/econresdata, and which the authors graciously maintain. The data consists of yields for fixed interest securities with maturities between one and thirty years with one year increments (). We studied a portion of this data set spanning from January 1st, 1990 to August 28th, 2015, that we further reduced from daily to monthly observations by considering only the data from the last day of each month. Figure 5.1 illustrates the yield curves corresponding to 1, 5, 10, and 30 year maturities.

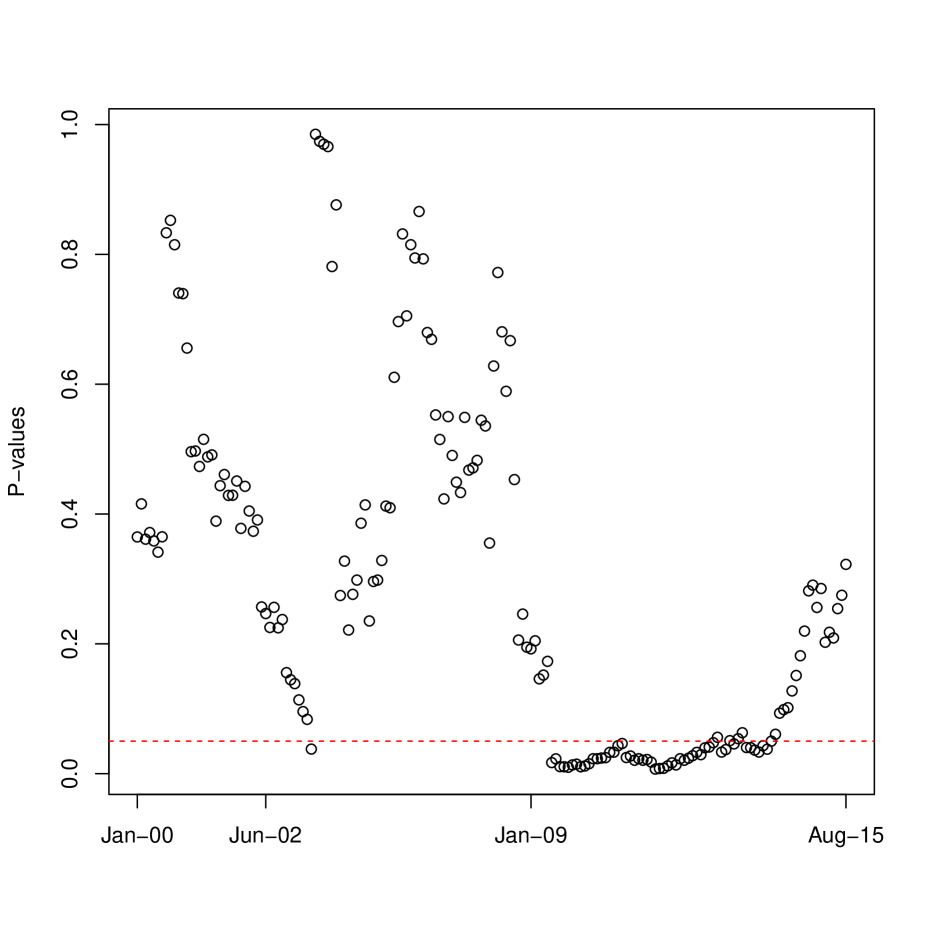

In order to remove the effects of stochastic trends, and to allow for a comparison of our results to Yamamoto and Tanaka (2015), we first differenced each series. We applied the hypothesis test for stability of the largest eigenvalue based on with trimming parameter to sequential blocks of the first differenced data of length 10 years, corresponding to 120 monthly observations in each sample (). The first block contained data spanning from January, 1990 to December, 1999, and the last block contained data spanning from September, 2005 to August, 2015, which constituted a total of 172 tests. The P-value from each test is plotted against the end date of the corresponding 10 year block in Figure 5.2.

The most notable result of this analysis is the persistent instability of the largest eigenvalue evident in the samples that end in late 2008 to early 2009. This seems to correspond with the subprime crisis, which sparked what has been termed the “Great Recession”. The stability of the largest eigenvalue seems to return near the end of 2013. This may be indicative of the economic recovery, and provides a way of dating the end of the recession. The findings of structural breaks in the correlation structure of the yield curves during the 2007-2009 recession are consistent with those of Yamamoto and Tanaka (2015).

Also notable is the lack of persistent instability in relation to the 2001 economic recession. This illuminates a difference between the two recessions: The 2001 recession may be better modeled as a first order structural break, which is not as evident in the first differenced yield curve series, whilst the 2009 recession, which generated numerous policy changes and endured for a longer period, is manifested as a change in the largest eigenvalue.

6. Results for smaller eigenvalues

In this section, we provide analogous results to Theorems 2.1 and 2.2 for the smaller eigenvalues. Namely, we aim to establish the weak convergence of the –dimensional process

where for ,

Let

| (6.1) |

| (6.2) |

| (6.3) | ||||

We use the notation and .

Remark 6.1.

If, for example, we assume that for all , then is a diagonal matrix with

The expression for also simplifies since by the orthonormality of the ’s we have

If we further assume that each of the sequences are Gaussian, then , and also reduces to a diagonal matrix with .

Theorem 6.1.

If and the conditions of Theorem 2.1 hold, and

| (6.5) |

then we have that where is a –dimensional Wiener process, i.e. is Gaussian with and .

Remark 6.2.

If , as , then according to Lemma 7.4. In this case the weak limit of is the –dimensional Wiener process , since .

To state the next result we introduce the covariance matrix : and .

Theorem 6.2.

If and the conditions of Theorem 2.1 hold, and

| (6.6) |

then we have that converges weakly in to , where is a –dimensional Wiener process, i.e. is Gaussian with and .

Remark 6.3.

Remark 6.4.

7. Technical Results

7.1. Proof of Theorems 2.1, 2.2, 6.1 and 6.2

Throughout these proofs we use the terms of the form to denote unimportant numerical constants. We can assume without loss of generality that , and so we define

Proof.

It is easy to see that

and therefore

Using assumption (2.2) we obtain that

First we prove that

| (7.1) |

It follows from Proposition 4 of Berkes et al. (2011) that under Assumption 2.3(a) and Assumption 2.3(b) we have for any that

| (7.2) |

and therefore the maximal inequality of Móricz et al. (1982) implies (7.1). Next we show that

| (7.3) |

Following the arguments leading to (7.2) one can verify that for any

| (7.4) |

with some constant for all . Hence for any we have via Rosenthal’s inequality (cf. Petrov (1995), p. 59) and (7.4) that

Using again the maximal inequality of Móricz et al. (1982) we conclude

by Assumption 2.2. This completes the proof of (7.3).

Similarly to (7.3) we show that

| (7.5) |

First we note

and by Jensen’s inequality we have

Using again Proposition 4 of Berkes et al. (2011) we get for all that

and therefore the maximal inequality of Móricz et al. (1982) yields

This completes the proof of (7.5).

The upper bounds in (7.1)–(7.5) imply

and

Assumption 2.2 implies that the proof of Lemma 7.1 is complete. ∎

Let denote the largest eigenvalues of .

Proof.

It is well–known (cf. Dunford and Schwartz (1988)) that

with some absolute constant and therefore the result follows from Lemma 7.1. ∎

Let

Proof.

According to formula (5.17) of Hall and Hosseini–Nasab (2009) we have for all that

where

and and denote the element of and , respectively. Hence

where

with

where denotes the element of the matrix . By inequality (2.30) in Petrov (1995, p. 58) we conclude

and hence

Using the definitions of and we write

Utilizing Assumption 2.3(a), we obtain along the lines of (7.2) that , so by the stationarity of and the maximal inequality of Móricz et al. (1982) we obtain that

Similarly, for all

Hence for all we have by Assumption 2.2 that

| (7.6) |

Using (7.6) we conclude for all

which shows that

| (7.7) |

∎

Since is defined via (2.5) up to a sign, we can assume without loss of generality that .

Lemma 7.4.

| (7.10) |

and

| (7.11) |

Proof.

By (2.2) we have

where is the diagonal matrix with in the diagonal. We can write

It follows from the definition of and that

and

| (7.12) |

where is defined in Assumption 2.3(b). Thus we conclude

By assumption and therefore . Hence and . Thus we get

| (7.13) |

completing the proof of (7.8). Since , (7.9) follows from (7.12). For all we have

by (7.13) which gives (7.10). Since and by Assumption 2.3(b), the last claim of this lemma follows from (7.10). ∎

Proof.

It follows from (2.5) that , if . Hence we get

First we assume that . It follows from the definition of that

where is defined in Assumption 2.1. Let and write with

Thus we get for any via Markov’s inequality that

| (7.15) | ||||

Using (2.2) we obtain with that

since for we have . Clearly, on account of , the Cauchy–Schwarz inequality implies

Following the proof of (7.2), we get from Assumption 2.3(a) that

| (7.16) |

Let

where and are defined in Assumption 2.3(a) and Assumption 2.3(b), respectively. By independence we have

By the independence of the variables and the Rosenthal inequality (cf. Petrov (1995)) we conclude

where is a constant, on account of Assumption 2.3(b) and . Due to the independence of and , if , we can apply again the Rosenthal inequality to get

resulting in

| (7.17) |

Hence the moment inequality in Berkes et al. (2011) yields

| (7.18) |

Similarly to (7.18) we have

| (7.19) |

Let

and

where is defined in Assumption 2.3(b). Clearly,

and

Thus we get by the Cauchy–Schwarz inequality that

Using again Rosenthal’s and Jensen’s inequalities, we obtain that

and similarly

Thus we have

| (7.20) |

and therefore Proposition 4 of Berkes et al. (2011) implies

| (7.21) |

Putting together (7.16)–(7.21) we conclude

| (7.22) |

Since is a stationary sequence, (7.22) and the maximal inequality of Móricz et al. (1982) imply

| (7.23) |

Now we use (7.15) with resulting in

implying

This completes the proof of (7.14).

Next we assume that . It is easy to see that for for

If , then the proof of (7.22) shows that

and therefore by Assumption 2.1 for any we have

By (7.21) we have along the lines of the proof of (7.15)

| (7.24) | ||||

where in the last step we used (7.10). Also, (7.18) and (7.19) imply via the maximal inequality in Móricz et al. (1982) that

| (7.25) |

and

| (7.26) |

Using now (7.25) and (7.26) we conclude that

Since by Lemma 7.4 we have that , the proof of (7.14) is complete when . It is easy to see that by (7.24) and Lemma 7.4

on account of (7.25) and (7.26). According to Lemma 7.4 we have that , completing the proof of Lemma 7.5.

∎

Lemma 7.6.

Proof.

First we define the –dependent processes

and

where and are defined in Assumption 2.3(a) and Assumption 2.3(b), respectively. We show that for any

| (7.27) |

| (7.28) |

and

| (7.29) |

for all and . It follows from Assumption 2.3(a) and the Cauchy–Schwarz inequality that

| (7.30) | ||||

By stationarity, we get that

| var | |||

Since is independent of , if , we obtain that

The independence of and , (7.30), and Hölder’s inequality yield

with . The same argument gives that

On the other hand, applying again (7.30) and the Cauchy–Schwarz inequality we conclude

Chebyshev’s inequality now implies (7.27). The proofs of (7.28) and (7.29) go along the lines of (7.27), we only need to replace (7.30) with (7.17) and (7.20), respectively. Next we show that for each , converges in to the Gaussian process , with , and

with

| (7.31) |

| (7.32) |

and

| (7.33) | ||||

Let and . We can write

where

The variables are –dependent and therefore , , are asymptotically independent. Hence we need only show the asymptotic normality of for all . For every fixed the variables form an –dependent stationary sequence with zero mean,

and , where does not depend on nor on . Due to the –dependence, these properties imply the asymptotic normality of . Applying the Cramér–Wold device (cf. Billingsley (1968)), we get that the finite dimensional distributions of converge to that of . Since as , and and are Gaussian processes we conclude that converges in to . On account of (7.27)–(7.29) we obtain that the finite dimensional distributions of converge to that of . It is shown in the proof of Lemma 7.1 that

and

Due to the stationarity of , the tightness follows from Theorem 8.4 of Billingsley (1968). ∎
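The role of the –dependence in the normal approximation above can be checked numerically. The following is only an illustrative sketch and not part of the proof: it assumes a toy moving average sequence X_t = e_t + ... + e_{t-m} with i.i.d. standard normal innovations (the function names and the parameters n, m, reps, seed are hypothetical), and compares the simulated variance of the normalized partial sum with the sum of the autocovariances up to lag m, which is the limiting variance for an m-dependent stationary sequence.

```python
import random


def ma_partial_sum(n, m, rng):
    """Return S_n / sqrt(n) for X_t = e_t + ... + e_{t+m}, e_t i.i.d. N(0, 1).

    The sequence X_t is stationary and m-dependent by construction.
    """
    e = [rng.gauss(0.0, 1.0) for _ in range(n + m)]
    window = sum(e[:m + 1])            # value of X at the first time point
    total = window
    for t in range(1, n):
        window += e[t + m] - e[t - 1]  # slide the summation window by one step
        total += window
    return total / n ** 0.5


def simulated_variance(n=200, m=2, reps=1000, seed=0):
    """Sample variance of S_n / sqrt(n) over independent replications."""
    rng = random.Random(seed)
    draws = [ma_partial_sum(n, m, rng) for _ in range(reps)]
    mean = sum(draws) / reps
    return sum((d - mean) ** 2 for d in draws) / (reps - 1)


def long_run_variance(m):
    """Sum of autocovariances gamma(h) = m + 1 - |h| over |h| <= m."""
    return sum(m + 1 - abs(h) for h in range(-m, m + 1))
```

For m = 2 the long run variance of this toy sequence is 9, and `simulated_variance()` should be close to that value up to Monte Carlo error.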

Proof of Theorem 6.1. By Lemmas 7.2, 7.3 and 7.5 we have that

Also,

since by Lemma 7.6

By the Cauchy–Schwarz inequality we have that and therefore

The weak convergence of is proven in Lemma 7.6, which completes the proof of Theorem 6.1.

∎

Proof of Theorem 6.2.

Thus Lemma 7.6 yields

According to Lemma 7.6 and since by Lemma 7.4, we conclude

| (7.34) |

| (7.35) | ||||

Combining (7.34) and (7.35) with Lemma 7.6, we obtain that converges weakly in to , where and . The computation of the covariance function of finishes the proof of Theorem 6.2.

∎

Proof of Theorem 2.2 and Remark 6.5. Theorem 2.2 follows from Remark 6.5. Remark 6.5 follows from Theorems 6.1 and 6.2 when the condition (2.6) is replaced with (2.7). This requires replacing Lemma 7.5 with the result that for all

which follows from (7.23) and Markov’s inequality.

∎

7.2. Proof of Theorems 3.1 and 3.2

We prove a more general result concerning consistent estimates for norming sequences for each eigenvalue process. Let

and define

where

where

We show that if as , then

| (7.36) |

Moreover, if as , then

| (7.37) |

and for ,

| (7.38) |

We can assume without loss of generality that . Elementary algebra gives that

It is easy to see that

and therefore by Markov’s inequality we have

| (7.39) | ||||

Using the same arguments as above, for every one can find such that

| (7.40) |

for every there is such that

| (7.41) |

We note

By (2.2) and assumption we get that from Assumption 2.3(a)–Assumption 2.3(b) and Assumption 2.2

using the arguments in the proof of Lemma 7.1. Due to stationarity we have

and

Hence for every there is such that

| (7.42) |

Putting together (7.39)–(7.41) we conclude

where

where

with .

It follows from Dunford and Schwartz (1988) and Assumption 2.1 that with some constant

| (7.43) |

where are random signs. We write

and since we can assume without loss of generality that we get from the proof of Lemma 7.1

Also,

and

Thus we have

Similarly,

We conclude from (7.43) that

| (7.44) |

Next we define

where

where .

We write

For ,

According to the definitions of and ,

where , from which it follows that

| (7.45) |

According to the Cauchy–Schwarz and triangle inequalities,

According to (7.44) . Furthermore, since , and has bounded support, the Cauchy–Schwarz and triangle inequalities imply that

| (7.46) |

for some constant . Hence, according to (7.46) and Markov’s inequality, we obtain that

| (7.47) |

Similar arguments applied to the remaining terms in show that

| (7.48) |

It follows from (2.2) and Assumption 2.3(b) that

and

Since

| (7.49) |

if we show that

| (7.50) |

we get immediately that

To this end, we have that

and

with some constant , since we can assume without loss of generality that if .

Now we assume that the conditions of Theorem 6.2 are satisfied. First we prove (7.37). It follows from (2.2) and (7.49) that

Following the proof of one can verify that

completing the proof of (7.37). The proof of (7.38) goes along the lines of that of (7.36) and therefore the details are omitted. ∎
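The norming sequences treated above are estimated with a kernel of bounded support and a bandwidth that grows with the sample size. As a generic illustration of this type of estimator, and not the exact estimator of Theorems 3.1 and 3.2, the following sketch computes a Bartlett kernel long run variance estimate for a scalar series. The function names and the weight convention 1 - k/(h + 1) are assumptions made for the example.

```python
def autocovariance(x, lag):
    """Sample autocovariance of the series x at a nonnegative lag."""
    n = len(x)
    mean = sum(x) / n
    return sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag)) / n


def bartlett_long_run_variance(x, h):
    """Kernel estimate gamma(0) + 2 * sum_{k=1}^{h} w_k * gamma(k).

    The weights w_k = 1 - k / (h + 1) come from the Bartlett kernel, which
    vanishes outside [-1, 1], so only lags up to the bandwidth h enter.
    """
    estimate = autocovariance(x, 0)
    for k in range(1, h + 1):
        weight = 1.0 - k / (h + 1.0)
        estimate += 2.0 * weight * autocovariance(x, k)
    return estimate
```

With h = 0 the estimate reduces to the sample variance. Larger bandwidths pick up more of the serial dependence at the price of a larger variance of the estimate, which is the trade-off behind the bandwidth conditions used in this subsection.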

Proof of Theorem 3.2. We can assume without loss of generality that . Using (2.1) we have

where

with

and

It follows along the same lines as the proof of (7.23) that

for with . Hence

and

which completes the proof of Theorem 3.2.

∎

The proof of Theorem 3.3 is based on the following lemma.

Lemma 7.7.

Proof.

Since the means of the panels do not change during the observation period in (3.9), we can assume without loss of generality that . It follows from Theorems 6.1 and 6.2 that for any that

One can show that Lemmas 7.1 and 7.2 hold with minor modifications under model (3.9), and thus

where is the largest eigenvalue of . Simple arithmetic shows that

where

and

It follows along the lines of the proof of (7.44) that

and thus if denotes the largest eigenvalue of , then we also have that

Let be the largest eigenvalue of . Then one can show using the arguments establishing Theorems 6.1 and 6.2 that

Assumption (7.51) implies that there is an for all sufficiently large

and therefore there is a constant such that

Observing that and , the proof of (3.8) is complete. ∎
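The behaviour of the largest empirical eigenvalue under a change can also be explored numerically. The sketch below is an illustration under stated assumptions rather than the procedure of the paper: it uses a CUSUM-type process of the form T^{1/2}(λ(k/T) − (k/T)λ(1)), where λ(k/T) is the largest eigenvalue of the partial-sample covariance matrix built from the first k observations, it omits the norming sequence, and it uses a toy panel with a common variance change. The helper names `simulate_panel`, `largest_eigenvalue` and `eigenvalue_cusum` are hypothetical.

```python
import random


def simulate_panel(T, N, break_at, scale, seed):
    """Toy panel: i.i.d. N(0, 1) entries, scaled by `scale` from time break_at on."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0 if t < break_at else scale) for _ in range(N)]
            for t in range(T)]


def largest_eigenvalue(a, iters=100):
    """Power iteration with a Rayleigh quotient for a symmetric PSD matrix."""
    n = len(a)
    v = [1.0 / n ** 0.5] * n
    lam = 0.0
    for _ in range(iters):
        w = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        if norm == 0.0:
            return 0.0
        v = [x / norm for x in w]
        lam = sum(v[i] * sum(a[i][j] * v[j] for j in range(n)) for i in range(n))
    return lam


def eigenvalue_cusum(data):
    """Values T^{1/2} * (lambda(k/T) - (k/T) * lambda(1)) for k = 1, ..., T."""
    T, N = len(data), len(data[0])
    mean = [sum(row[i] for row in data) / T for i in range(N)]
    partial = [[0.0] * N for _ in range(N)]
    lams = []
    for row in data:
        c = [x - m for x, m in zip(row, mean)]
        for i in range(N):
            for j in range(N):
                partial[i][j] += c[i] * c[j] / T   # partial-sample covariance
        lams.append(largest_eigenvalue(partial))
    full = lams[-1]
    return [T ** 0.5 * (lams[k] - (k + 1) / T * full) for k in range(T)]
```

Comparing `max(abs(v) for v in eigenvalue_cusum(...))` for a panel generated with `scale=1.0` and one with `scale=3.0` shows how a change in the covariance structure inflates the maximum of the process. The power iteration is only adequate for small N; a proper eigenvalue routine would be preferable in practice.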

References

- [1] Ang, A. and Chen, J.: Asymmetric correlation of equity portfolios. Journal of Financial Economics 63(2002), 443–494.

- [2] Aue, A., Hörmann, S., Horváth, L. and Reimherr, M.: Break detection in the covariance structure of multivariate time series models. The Annals of Statistics 37(2009), 4046–4087.

- [3] Aue, A. and Horváth, L.: Structural breaks in time series. Journal of Time Series Analysis 34(2013), 1–16.

- [4] Aue, A. and Paul, D.: Random matrix theory in statistics: a review. Journal of Statistical Planning and Inference 150(2014), 1–29.

- [5] Bai, J.: Common breaks in means and variances for panel data. Journal of Econometrics 157(2010), 78–92.

- [6] Bai, J. and Ng, S.: Determining the number of factors in approximate factor models. Econometrica 70(2002), 191–221.

- [7] Berkes, I., Hörmann, S. and Schauer, J.: Split invariance principles for stationary processes. The Annals of Probability 39(2011), 2441–2473.

- [8] Billingsley, P.: Convergence of Probability Measures. Wiley, New York, 1968.

- [9] Breitung, J. and Eickmeier, S.: Testing for structural breaks in dynamic factor models. Journal of Econometrics 163(2011), 71–84.

- [10] Dunford, N. and Schwartz, J.T.: Linear Operators, General Theory (Part 1). Wiley, New York, 1988.

- [11] Galeano, P. and Peña, D.: Covariance changes detection in multivariate time series. Journal of Statistical Planning and Inference 137(2007), 194–211.

- [12] Gürkaynak, R., Sack, B. and Wright, J.: The US treasury yield curve: 1961 to the present. Journal of Monetary Economics 54(2007), 2291–2304.

- [13] Horváth, L. and Hušková, M.: Change–point detection in panel data. Journal of Time Series Analysis 33(2012), 631–648.

- [14] Hall, P. and Hosseini–Nasab, M.: Theory for high–order bounds in functional principal components analysis. Mathematical Proceedings of the Cambridge Philosophical Society 146(2009), 225–256.

- [15] Johnstone, I.: Multivariate analysis and Jacobi ensembles: largest eigenvalue, Tracy–Widom limits and rates of convergence. The Annals of Statistics 36(2008), 2638–2716.

- [16] Kao, C., Trapani, L. and Urga, G.: Testing for breaks in cointegrated panels. Econometric Reviews (2015), to appear.

- [17] Kejriwal, M.: Test of a mean shift with good size and monotonic power. Economics Letters 102(2015), 78–82.

- [18] Keogh, G., Sharifi, S., Ruskin, H. and Crane, M.: Epochs in market sector index data–empirical or optimistic? In: The Application of Econophysics 2004, pp. 83–89, Springer, New York.

- [19] Kim, D.: Estimating a common deterministic time trend break in large panels with cross sectional dependence. Journal of Econometrics 164(2011), 310–330.

- [20] Kim, D.: Common breaks in time trends for large panel data with a factor structure. Econometrics Journal (2014), in press.

- [21] Li, D., Qian, J. and Su, L.: Panel Data Models with Interactive Fixed Effects and Multiple Structural Breaks. (2015), under revision.

- [22] Markowitz, H.: Portfolio selection. Journal of Finance 7(1952), 77–91.

- [23] Markowitz, H.: The optimization of a quadratic function subject to linear constraints. Naval Research Logistics Quarterly 3(1956), 111–133.

- [24] Móricz, F., Serfling, R. and Stout, W.: Moment and probability bounds with quasi-superadditive structure for the maximal partial sum. The Annals of Probability 10(1982), 1032–1040.

- [25] Petrov, V.V.: Limit Theorems of Probability Theory. Clarendon Press, Oxford, 1995.

- [26] Qian, J. and Su, L.: Shrinkage Estimation of Common Breaks in Panel Data Models via Adaptive Group Fused Lasso. Working Paper, (2014) Singapore Management University.

- [27] R Development Core Team (2008). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria.

- [28] Rényi, A.: On the theory of order statistics. Acta Mathematica Academiae Scientiarum Hungaricae 4(1953), 191–227.

- [29] Shorack, G.R. and Wellner, J.A.: Empirical Processes with Applications to Statistics. Wiley, New York, 1986.

- [30] Taniguchi, M. and Kakizawa, Y.: Asymptotic Theory of Statistical Inference for Time Series. Springer, New York, 2000.

- [31] Wu, W.: Nonlinear System Theory: Another Look at Dependence. Proceedings of The National Academy of Sciences of the United States 102(2005), 14150–14154.

- [32] Wied, D., Krämer, W. and Dehling, H.: Testing for a change in correlation at an unknown point in time using an extended functional delta method. Econometric Theory 28(2012), 570–589.

- [33] Yamamoto, Y. and Tanaka, S.: Testing for factor loading structural change under common breaks. Journal of Econometrics 189(2015), 187–206.

- [34] Zeileis, A.: Object-Oriented Computation of Sandwich Estimators. Journal of Statistical Software 16(2006), 1–16.

- [35] Zovko, I.I. and Farmer, J.D.: Correlations and clustering in the trading of members of the London Stock Exchange. In: Complexity, Metastability and Nonextensivity: An International Conference, AIP Conference Proceedings, 2007, Springer, New York.