Enhance Gender and Identity Preservation in Face Aging Simulation for Infants and Toddlers

Abstract

Realistic age-progressed photos provide invaluable biometric information in a wide range of applications. In recent years, deep learning-based approaches have made remarkable progress in modeling the aging process of the human face. Nevertheless, it remains a challenging task to generate accurate age-progressed faces from infant or toddler photos. In particular, the lack of visually detectable gender characteristics and the drastic appearance changes in early life contribute to the difficulty of the task. We propose a new deep learning method inspired by the successful Conditional Adversarial Autoencoder (CAAE, 2017) model. In our approach, we extend the CAAE architecture to 1) incorporate gender information, and 2) augment the model’s overall architecture with an identity-preserving component based on facial features. We trained our model using the publicly available UTKFace dataset and evaluated our model by simulating up to 100 years of aging on 1,156 male and 1,207 female infant and toddler face photos. Compared to the CAAE approach, our new model demonstrates noticeable visual improvements. Quantitatively, our model exhibits an overall gain of 77.0% (male) and 13.8% (female) in gender fidelity measured by a gender classifier for the simulated photos across the age spectrum. Our model also demonstrates a 22.4% gain in identity preservation measured by a facial recognition neural network.

Index Terms:

Deep learning, age progression/regression, gender consistency, identity preservation, generative adversarial networks, conditional adversarial autoencoder, image generation, face recognition.I Introduction

Face progression, also known as face aging, is the process of rendering face images to different age groups to simulate an identity-preserving aging effect. Generating accurate prediction images from a reference photo facilitates many practical applications to help create a safe and secure society. For example, the quality of age-progressed photos plays a critical role in finding long lost children, identifying fugitives, as well as developing age-invariant security systems. Furthermore, being described as ”half art and half science”, face progression also benefits the entertainment, cosmetology, and biometrics industries.

In recent years, deep learning-based approaches have made remarkable progress in modeling the aging process of the human face [1, 2, 3, 4, 5, 6]. Nevertheless, generating accurate age-progressed faces is still a challenging task. This is particularly true when the original face photos are of young children. For example, infants and toddlers often lack salient gender characteristics. Thus, a machine learning model may guess a child’s sex incorrectly and produce an inaccurate simulation. Furthermore, even with a correct guess, such models can fail to capture the drastic physical changes which occur during early childhood and adolescence. As a result, predicting future appearance based on a two-year-old face photo is significantly harder than extrapolating from a 20-year-old one.

In this paper, we introduce a new deep learning model to address the two challenges mentioned above. Our approach is inspired by the highly successful Conditional Adversarial Autoencoder (CAAE) [5] model. First, we incorporate additional gender information into the latent vector of the CAAE architecture. We evaluate the gender consistency of the generated face images using a gender classifier based on a deep neural network model [7]. Our study suggests that the CAAE model is prone to generate female faces when sex characteristics are not evident on the original photo. Providing additional gender information is essential in helping the model make proper adjustments.

The second contribution of our study is to enhance identity preservation during the aging simulation for young faces. Before entering early adulthood, the human body undergoes rapid physical growth and puberty development. These physiological changes (e.g., height, weight, and voice changes) are accompanied by substantial changes in facial features. For example, compared to the archetypal adult face, an infant’s face tends to have bigger eyes, shorter and flatter eyebrows, and a smaller and turned-up nose. As a result, it is challenging to preserve identity from baby photos in simulating the aging process. To address this issue, we leverage the popular VGG[8] architecture to maximize the similarity of high-level facial features between the original and simulated faces. Compared to the baseline CAAE model, our approach demonstrates significant improvement in identity preservation as measured by a face recognition deep neural network developed by Schroff et al. [9].

II Related Work

II-A Face Age Progression/Regression

Human face progression/regression has always been an active area of research due to its broad applications across various disciplines [10, 11, 12, 13]. In its early stages, face progression techniques simulated biomechanical aspects of human skin (e.g., anisotropy, visco-elasticity, etc.) to artificially produce wrinkles, creases, and folds [14]. These simulations accounted for many specific aging factors, including the facial muscles [15, 16], cranium [17], facial skin [18, 14, 19, 20], depth of wrinkles [21, 22], facial structure [23, 24], etc. While successful, these methods involved parameter tweaking and domain-specific knowledge. As the state-of-the-arts transitioned to image data driven approaches, methods which modeled face aging as a mapping from young to old faces became more common. For example, Park et al. [25] used sequential face data to approximate the aging process as a Markov process. Shu et al. presented an efficient and effective Kinship-Guided Age Progression (KinGAP) approach [26], which can automatically generate personalized aging images with guidance of the senior kinship face. One limitation of these methods is the difficulty of collecting a large dataset with chronologically sequential faces for each individual.

Another data-based approach is the prototype-based method, which first divides all the faces into different age groups. The technique then estimates the average face within a predefined age group denoted as the prototype. Differences between prototypes form the axes, along which faces can smoothly transition across the aging spectrum. Prototype-based methods are simple, straightforward, and fast. However, since the generated faces are averaged, high frequency details such as wrinkles are not accurately captured. To address this issue, researchers developed models [27, 28, 29], which captured person-specific facial features via sparse representation.

II-B Deep Learning-based Approaches

In recent years, deep learning techniques have made remarkable progress in the quality of face progression/regression using large datasets of raw face images. Wang et al.[30] employed a recurrent neural network [31] model to capture the common transition patterns among all age groups. Leveraging the slowly evolving intermediate images, their model offers more realistic and smoothly progressing faces than other non-temporal models. The limitation of their approach is that it can only perform one directional (i.e., from young to old) simulation.

Another class of popular deep learning-based methods is derived from the novel generative adversarial networks (GANs)[32] framework, in which a model trains a generator and a discriminator simultaneously. While the generator strives to generate realistic faces, the discriminator acts as the adversary to distinguish fake (i.e., generated) vs. real faces. At equilibrium, the generator is forced to produce photo-realistic faces to fool the discriminator. Generative approaches facilitate both face progression and rejuvenation on a face photo of any age.

One variant of the GAN architecture is the conditional generative adversarial network (C-GAN) [33]. C-GANs models provide the generator with additional information to control the scope (e.g., a specific age) of the generated images. These models [1, 34, 30] are effective, but they require paired images in training their models, i.e., pictures of the same person across the entire age spectrum, which is difficult to collect. Recent work by Zhang et al. [5] overcomes this difficulty by combining the concepts of C-GAN and autoencoder[35]. Their conditional adversarial autoencoder (CAAE) model embeds age-specific information in the latent vector of the autoencoder. Additionally, the authors employed an extra discriminator to impose a uniform prior on the latent distribution of the input data. We give a more detailed introduction to the CAAE model in Section III-A because our model is an extension to its architecture.

II-C Our Contribution

Most of the existing studies focus on the photo-realistic quality of the generated faces, including richer face texture (e.g., wrinkles), smooth transitions, and lesser ghosting effects. Evaluations are typically conducted using visual examinations centered on adult and senior faces. In our study, we focus on face aging simulation on baby photos in which the gender information is not detectable and the facial features undergo drastic changes over the years. To the best of our knowledge, there is no prior work addressing this task that is both challenging and of significant practical value. We also establish rigorous quantitative metrics to evaluate the efficacy of our approach.

III Methods

In this section, we first give a brief introduction to the conditional adversarial autoencoder (CAAE) model [5]. We then present our proposed approach which extends the CAAE architecture with gender and identity preserving components.

III-A Conditional Adversarial Autoencoder

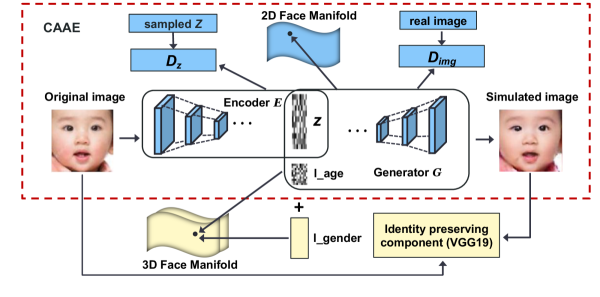

As illustrated within the dashed bounding box in Fig. 1, a CAAE [5] model consists of three separate neural networks: a convolutional autoencoder (AE, i.e., Encoder + Generator) and two adversarial discriminators (i.e., and ). For the AE component, the encoder () maps the input face to a vector which can be interpreted as the personality of a particular face input . The generator () regenerates the input photo based on the latent vector and an additional age label (). Thus, a concatenated latent vector serves as the input to the generator. Incorporating specific age information into the latent space facilitates clustering face images by age groups. Consequently, the model produces smooth age transitions and forces the output face to be photo-realistic and plausible for a given age.

The second component is the discriminator which regularizes the latent space using a uniform distribution. The uniform prior forces to evenly populate the latent space without large gaps. Aassuming that images of different personalities and ages form a 2D manifold () along the two corresponding directions, generating age-progressed photos can be viewed as traversing the along the age direction. Thus, continuity in the latent space is desirable because it ensures sufficient intermediate data points to guide the model along the traversal.

The last component is an inherited concept from the effective GAN[32] methodology. In particular, while the generator strives to produce as realistic as possible photos for a given age, acts as an adversary to discriminate between real and generated images. During the training process, contributes to the loss function based on how well it detects generated images, while the generator is evaluated based on how well its constructed images can fool the discriminator. Thus, this adversarial process forces the generator to produce high quality images that are indistinguishable from the real ones.

The overall loss of the CAAE model is the sum of corresponding losses from its three components. Following the same notation as in [5], the objective function can be formulated as follows:

| (1) | ||||

where denotes norm, denotes the distribution of the training data, and denotes the random sampling process from the prior distribution of . The first line of equation (1) is the loss of the CAE component. Specifically, and represent the image reconstruction loss and total variation respectively. The coefficients and balance the smoothness and high resolution. The remaining four terms are the standard adversarial loss for discriminators and respectively.

III-B Our Approach

Fig. 1 presents the structure of our proposed model. The components outside the dashed box are extensions to the CAAE model.

III-B1 Integrating Gender Information

One limitation of the CAAE model is that it does not account for gender differences in the input data. While gender can generally be inferred from adult photos, this is not the case with infant or toddler pictures. We demonstrate in our experimental results that the CAAE model tends to age-progress a baby photo toward the female direction. To address this issue, we augment the latent space with additional gender information. As illustrated in Fig. 1, the input to our generator is where . We can further interpret our model as a 3D extension to the 2D manifold of the CAAE model with gender being the third dimension.

We evaluate the efficacy of integrating the new gender indicator using simulated aging images constructed from 0-5 years old photos. Besides visual examinations, we quantify the generated images with a gender score measured by a gender classifier. We present our gender classifier and the evaluation results in Section IV.

III-B2 Enhancing Identify Preservation

Our second improvement is to leverage a VGG [8] model to preserve each individual’s identity in the face aging process. The VGG network was introduced by Simonyan and Zisserman in 2014. It employs deep convolutional layers and is one of the most popular architectures for image feature extraction. In our approach, we utilize a pre-trained VGG19 (i.e., 19 convolutional layers) model to minimize the differences in high-level facial features between the input and generated images. Specifically, we apply the VGG19 model to both the input and the generated images and compute the distance between the two feature maps after the last convolutional layer. The difference is added to the total training loss to force the model to maintain the same personalities as the original faces in its aging simulation process.

We evaluate the efficacy of this new identity preservation component using a Face Recognition (FR) score from the FaceNet system[9] developed by Google in 2015. Specifically, the FaceNet system maps a pair of images to an embedded Euclidean space, and the distance (e.g., ) in the embedded space measures the similarity of two identities. We present the FR score calculation and the evaluation of the VGG component in Section IV.

III-B3 Objective Function

We make two modifications to the objective function in Equation (1) to serve the two new components in our model. First, we augment the terms of adversarial loss of with additional gender information (). Formally, the last two lines in Equation (1) are adjusted as follows:

Next we add a new term to Equation (1) representing the feature map differences between the input and simulated images. Formally, the loss can be expressed as:

where denotes the feature map vector of the last convolutional layer after applying VGG19 to an image. is the gender indicator. All other symbols have been kept the same as in Equation (1).

IV Experiments

In this section, we first describe the data we used to conduct our study. We then introduce two quantitative measures to evaluate the quality of gender and identify preservation for the age-progressed photos. Lastly, we present the experimental results of our model in comparison to the baseline CAAE model.

IV-A Data Acquisition

We conduct our experiments using the UTKFace dataset[36], a large-scale face dataset with a long age span (from 0 to 116 years old). The dataset consists of 12,391 male and 11,317 female face images with annotations of age, gender, and ethnicity. We divided the photos into ten groups (i.e., 0-5, 6-10, 11-15, 16-20, 21-30, 31-40, 41-50, 51-60, 61-70, and ¿70) according to the stages of life in which the human face goes through significant changes. Additionally, the face images are cropped to 228228 and aligned to make training more tractable.

IV-B Quantitative Metrics

Besides visually examining the quality of generated photos, we introduce ”gender score” and ”FR (Face Recognition) score” to assess the sex and identity consistencies between the original and simulated images.

IV-B1 Gender Score

To quantitatively evaluate gender fidelity during the face aging process, we employ a binary gender classifier () to measure masculinity and femininity in the generated photos. We define the male/female gender score for a given age group as the accuracy when applying to all of the expected male/female photos in the group. For example, the male gender score for the 16-20-year old group is the accuracy of applying to those photos in the group that are simulated from male input. Thus, a higher gender score is more desirable because it indicates higher consistency with the expected sex.

Our gender classifier is based on the architecture of the deep neural network model introduced by Levi and Hassner [7] in 2015. We trained our model using a total of 8,659 male and 7,936 female adult instances from the UTKFace dataset with a 70%, 15%, 15% split for model training, validation, and testing respectively. Table I presents the gender scores of each age group in the test data. We observe that our gender classifier has an over 90% average accuracy for both classes. Furthermore, the model has a more balanced performance in the middle section (21 to 70 years old) of the age spectrum. Since we are interested in the relative performance of various models within a specific class and age group, the imbalanced performance at the two tails is insignificant because the same biases will apply to all models.

| Age Group | Male | Female |

|---|---|---|

| 0.77 (138/179)* | 0.70 (128/183) | |

| 0.75 (45/60) | 0.96 (75/78) | |

| 0.72 (29/40) | 0.93 (51/55) | |

| 0.88 (52/59) | 0.96 (102/106) | |

| 0.93 (471/504) | 0.96 (684/714) | |

| 0.97 (373/383) | 0.92 (226/245) | |

| 0.98 (171/174) | 0.94 (84/89) | |

| 0.98 (236/240) | 0.90 (84/93) | |

| 0.98 (122/125) | 0.91 (49/54) | |

| 70 | 0.95 (86/91) | 0.73 (61/84) |

| Overall accuracy | 0.93 (1723/1855) | 0.91 (1544/1701) |

*: (M/N) denotes the correctly classified instances M over the total instances N for a particular gender category and age group.

IV-B2 FR (Face Recognition) Score

We institute a second quantitative measure to evaluate the identity-preserving effect in the age-progressed photos. To this end, we utilize a pre-trained FaceNet classifier[9], a state-of-the-art face recognition system developed by Google in 2015. The system achieved record-high accuracy on a range of face recognition benchmark datasets, including accuracy in the Wild (LFW) dataset[37] for face verification. Conceptually, FaceNet learns a direct mapping from face images to a compact Euclidean space where distances correspond to a measure of face similarity.

We define the FR score for a particular age group to be the accuracy of applying the FaceNet classifier to all generated and corresponding input image pairs within the group. A higher FR score indicates an overall higher likelihood of being the same person; thus, it can assess the identity-preserving effects of age-progressed photos. Clearly, the FaceNet model’s performance is dependent on the distance threshold that the classifier uses to decide if two faces are considered from the same person.

| Age Group | Male Samples | Female Samples | ||||||

|---|---|---|---|---|---|---|---|---|

| CAAE | CAAE-G | CAAE-V | CAAE-GV | CAAE | CAAE-G | CAAE-V | CAAE-GV | |

| (19.5%)* | (-0.1%) | (5.2%) | (8.0%) | (5.3%) | (14.7%) | |||

| (40.3%) | (3.5%) | (25.6%) | (7.1%) | (3.9%) | (15.0%) | |||

| (61.2%) | (-2.5%) | (38.2%) | (5.6%) | (3.6%) | (11.0%) | |||

| (129.4%) | (6.1%) | (100.6%) | (0.1%) | (-0.1%) | (6.4%) | |||

| (152.2%) | (-7.6%) | (126.4%) | (6.0%) | (2.0%) | (10.2%) | |||

| (133.9%) | (-2.3%) | (113.1%) | (7.1%) | (0.2%) | (13.5%) | |||

| (110.2%) | (-6.8%) | (95.4%) | (9.5%) | (1.5%) | (16.0%) | |||

| (106.1%) | (-5.6%) | (93.2%) | (11.6%) | (5.1%) | (21.3%) | |||

| (101.2%) | (-4.3%) | (89.7%) | (11.8%) | (6.0%) | (18.8%) | |||

| 70 | (94.0%) | (-5.9%) | (83.0%) | (-3.1%) | (7.5%) | (10.9%) | ||

| Average | 0.82 (94.8%) | (-2.5%) | 0.75 (77.0%) | (6.4%) | (3.5%) | 0.84 (13.8%) | ||

*: Numbers in parenthesis are the percentage gains over the CAAE model

CAAE-G: CAAE model + gender component

CAAE-V: CAAE model + VGG component

CAAE-GV: CAAE model + both gender and VGG components

IV-C Experimental Results

Our model consists of two extensions to the baseline CAAE model, each of which serves a different purpose. To examine the individual and combined effects of these two components, we present and analyze the results of four models, CAAE, CAAE-G, CAAE-V, and CAAE-GV, which denote the baseline, baseline+gender, baseline+VGG, and baseline+gender+VGG respectively. Each model is trained using the UTKFace dataset and applied to 1156 male and 1207 female infant and toddler photos to generate simulated faces in ten age groups.

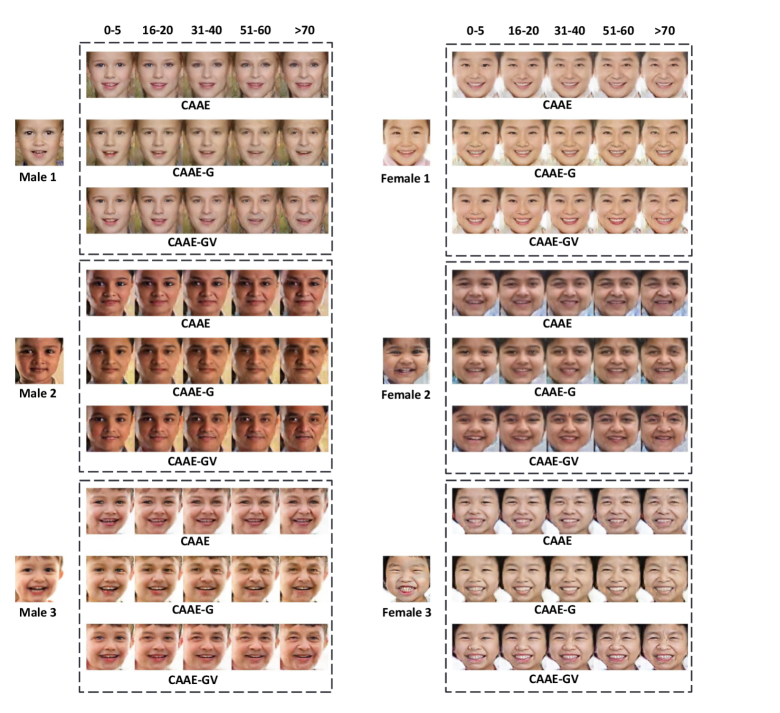

Fig. 2 presents sample simulated faces across different models and the age spectrum. We selected three test samples from each of the male and female categories. For each test photo, we display a simulation block of three rows, each of which consists of predicted faces by the indicated model for the 0-5, 16-20, 31-40, 51-60, and 70 age buckets. Note that the CAAE-V model was excluded in Fig. 2 because we found that the effects of adding the VGG component alone are not visually pronounced. Nevertheless, we analyze its impact quantitatively using the gender and FR scores.

IV-C1 Visual Evaluation of the Gender Component

We examine the visual effects of the augmented gender indicator by comparing the faces in the top two rows in each simulation block in Fig. 2. We observe that, for male samples, there is a pronounced difference in masculinity between the two rows of simulated faces. Furthermore, the discrepancies are more salient as age progresses. The baseline CAAE model (i.e., top row) tends to project the faces toward the female direction, and the CAAE-G model (i.e., second row) corrects the mistake and generates the faces along the correct gender trajectory.

For the female samples, the difference in gender characteristics between the CAAE and CAAE-G models is not as notable as that of the male ones. This is expected if the baseline model is biased toward females. Nevertheless, we do observe the differences and it is arguable that the second rows exhibit more femininity compared to the first rows. To establish a more rigorous evaluation, we employ the ”gender score” (described in Section IV-B1) to quantify the fidelity of the sex category in the simulated photos compared to the ground-truth.

The third row in each of the simulation blocks presents the results of the CAAE-GV model. We observe that the gender advantage of the CAAE-G model is preserved and the faces are more realistic due to the additional VGG components. We quantitatively confirm this observation in Section IV-C4.

IV-C2 Quantitative Evaluation of the Gender Component

Tables II present the gender scores of different models over the age spectrum for both male and female categories. For the male samples, we observe that the CAAE-G model demonstrates an average gain of 94.8% across all age groups. This confirms our visual observation discussed in the above section. We further observe that the improvements are consistent across all age groups and there is a monotonic upward trend until the 31-40 group. The trend then reverts itself afterwards to decrease monotonically. There is a sharp gain (i.e., from 61.2% to 129.4%) when entering the 16-20 group. This is consistent with physical development during adolescence when gender features become apparent in facial images.

For the female category, we observe that the CAAE-G model demonstrates an overall 6.4% gain for the female test samples over the baseline model. Compared to the male case, the gains are consistent but at a much smaller scale. They are also more evenly distributed over the age spectrum. We conclude that the additional gender information also helps to produce more sex consistent photos in female samples in all age groups. Furthermore, compared to the male category, the considerably higher gender scores in the CAAE column for the female samples suggests that the baseline model has a bias towards the female gender in its simulations.

The CAAE-V columns in Table II demonstrate that the VGG component does not have a high influence on the gender characteristics in the simulated faces. This is plausible because the purpose of the VGG discriminator is for preserving the facial features that are essential for identity verification. In our study, all input photos are from infants and toddlers whose gender features are not fully developed on their faces. Indeed, the CAAE-V model exhibits a marginal improvement (3.5%) for the female class and a marginal negative impact (-2.5%) for the male class over the baseline model. However, we present next that the VGG component leads to a significant improvement in preserving individual identities in the face aging simulations.

| CAAE | CAAE-G | CAAE-V | CAAE-GV | |

|---|---|---|---|---|

| min | (10.8%) | (31.6%) | 0.17 (49.8%) | |

| max | (14.5%) | (15.4%) | 4.81 (10.7%) | |

| mean | (2.3%) | (0.8%) | 1.77 (5.8%) | |

| SD | (-3.1%) | (-3.1%) | (-8.2%) | |

| 10-PCTL | (6.3%) | 0.98 (4.0%) | 0.85 (16.8%) | |

| 20-PCTL | (4.4%) | (4.4%) | 1.07 (14.1%) | |

| 30-PCTL | (5.3%) | (3.9%) | 1.27 (12.3%) | |

| 40-PCTL | (4.8%) | (1.9%) | 1.47 (10.1%) | |

| 50-PCTL | (2.9%) | (1.2%) | 1.68 (7.2%) | |

| 60-PCTL | (2.3%) | (0.4%) | 1.91 (4.9%) | |

| 70-PCTL | (0.8%) | (-0.7%) | 2.14 (3.0%) | |

| 80-PCTL | (0.4%) | (-0.7%) | 2.44 (0.7%) | |

| 90-PCTL | (0.2%) | (-0.9%) | 2.79 (1.5%) |

*: Numbers in parenthesis are the percentage gains over the CAAE model

IV-C3 Visual Evaluation of the VGG Component

The effect of the VGG component can be visualized by comparing the second and third rows in each of the simulation blocks in Fig. 2. While both models have integrated the gender indicator, the CAAE-GV model (i.e., third row) has the extra VGG loss (described in Section III-B2) incorporated into its model training process.

We observe that the third row has more vivid facial expressions and better skin tones in general compared to the second row. For all examples, faces in the third row also develop more natural wrinkles and nasolabial folds in the older groups. Consequently, the CAAE-GV model produces more realistic simulations compared to the CAAE-G model. We confirm our observation more rigorously using the ”FR score” in the next section.

IV-C4 Quantitative Evaluation of the VGG Component

As described in Section IV-B2, we institute an ”FR (Face Recognition) score” to quantify the degree of identity preservation throughout the face aging process. Because the FR score relies on the distance measure in the embedded Euclidean space, we first examine the statistics of the distance between the original image and the age-progressed faces for different models.

Table III presents, for each model, the distribution of distances between the test samples and their corresponding generated images. The smaller the distance, the more likely the two faces belong to the same person. We observe that the CAAE-GV model produces the smallest mean (1.77) among the four distributions with a 5.8% gain over the baseline model, while adding gender information or the VGG component alone results in a 2.3% and 0.8% gain respectively. Similarly, for the min/max statistics, the CAAE-GV model demonstrates 49.8%/10.7% improvements over the baseline model, while the CAAE-G and CAAE-V models exhibit 10.8%/14.5% and 31.6%/15.4% gains respectively. Furthermore, the advantage of the CAAE-GV model over the other three models is consistent across all percentile groups.

Next, we compare the FR score of the four models. Recall that an FR score represents the FaceNet model’s classification accuracy in determining if the simulated face is the same as the original person’s. This accuracy depends on the distance threshold we use to make the verification decision. A lower threshold (i.e., closer distance) requires a higher similarity between the paired images to be identified as the same person. On the other hand, a higher threshold relaxes the verification criteria. In the extreme case, when the threshold is set above the max distance of the distributions in Table III, all FR scores will degenerate to 1. Thus, the FR score improvement with a lower threshold is more substantial than that with a higher threshold, even though lower thresholds are associated with lower FR scores.

Table IV presents the FR score for different models using three distance thresholds. We observe that in each model’s corresponding column, the FR score increases as expected when we relax the identity verification criteria. When we set a high verification standard (threshold=1.6), the CAAE-GV model exhibits a 22.4% gain over the baseline CAAE model. The CAAE-G and CAAE-V models offer a 12.9% and 5.3% gain, respectively. As we lower the criteria (i.e., increase the threshold), the advantage of our model reduces due to the expected diminishing FR score differences among all four models.

| Threshold | CAAE | CAAE-G | CAAE-V | CAAE-GV |

|---|---|---|---|---|

| 1.6 | 0.38 | 0.43 (12.9%)* | 0.40 (5.3%) | 0.47 (22.4%) |

| (3.9%) | (0.8%) | (8.2%) | ||

| (-0.2%) | (-1.0%) | (0.3%) |

*: Numbers in parenthesis are the percentage gains over the CAAE model

V Conclusion

In this paper, we focused on the challenging task of age progression/regression for photos of infants and toddlers. We proposed two enhancements to the existing CAAE architecture to help ensure gender and identity consistencies in the face aging process. In our approach, we augmented the input vector of the generator with gender information since young faces lack salient gender characteristics. We further strengthened the model with a new identity preservation component based on facial features extracted by the VGG19 convolutional neural network. Our experimental results demonstrate significant visual and quantitative improvements over the CAAE model for our particular task. Our methods and findings can be adopted by other deep learning approaches in the face progression and regression studies.

References

- [1] S. Liu, Y. Sun, D. Zhu, R. Bao, W. Wang, X. Shu, and S. Yan, “Face aging with contextual generative adversarial nets,” in Proceedings of the 25th ACM international conference on Multimedia, 2017, pp. 82–90.

- [2] A. Radford, L. Metz, and S. Chintala, “Unsupervised representation learning with deep convolutional generative adversarial networks,” arXiv preprint arXiv:1511.06434, 2015.

- [3] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 2223–2232.

- [4] G. Antipov, M. Baccouche, and J.-L. Dugelay, “Face aging with conditional generative adversarial networks,” in 2017 IEEE international conference on image processing (ICIP). IEEE, 2017, pp. 2089–2093.

- [5] Z. Zhang, Y. Song, and H. Qi, “Age progression/regression by conditional adversarial autoencoder,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 5810–5818.

- [6] J. Song, T. He, L. Gao, X. Xu, A. Hanjalic, and H. T. Shen, “Binary generative adversarial networks for image retrieval,” in Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- [7] G. Levi and T. Hassner, “Age and gender classification using convolutional neural networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 2015, pp. 34–42.

- [8] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- [9] F. Schroff, D. Kalenichenko, and J. Philbin, “Facenet: A unified embedding for face recognition and clustering,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 815–823.

- [10] X. Shu, G. S. Xie, Z. Li, and J. Tang, “Age progression: Current technologies and applications,” Neurocomputing, vol. 208, pp. 249–261, 2016.

- [11] N. Ramanathan, R. Chellappa, and S. Biswas, “Computational methods for modeling facial aging: A survey,” Journal of Visual Languages & Computing, vol. 20, no. 3, pp. 131–144, 2009.

- [12] N. Ramanathan, R. Chellappa, S. Biswas, et al., “Age progression in human faces: A survey,” Journal of Visual Languages and Computing, vol. 15, pp. 3349–3361, 2009.

- [13] Y. Fu, G. Guo, and T. S. Huang, “Age synthesis and estimation via faces: A survey,” IEEE transactions on pattern analysis and machine intelligence, vol. 32, no. 11, pp. 1955–1976, 2010.

- [14] L. Boissieux, G. Kiss, N. M. Thalmann, and P. Kalra, “Simulation of skin aging and wrinkles with cosmetics insight,” in Computer Animation and Simulation 2000. Springer, 2000, pp. 15–27.

- [15] J. Suo, X. Chen, S. Shan, W. Gao, and Q. Dai, “A concatenational graph evolution aging model,” IEEE transactions on pattern analysis and machine intelligence, vol. 34, no. 11, pp. 2083–2096, 2012.

- [16] A. C. Berg and S. C. Justo, “Aging of orbicularis muscle in virtual human faces,” in Proceedings on Seventh International Conference on Information Visualization, 2003. IV 2003. IEEE, 2003, pp. 164–168.

- [17] J. T. Todd, L. S. Mark, R. E. Shaw, and J. B. Pittenger, “The perception of human growth,” Scientific american, vol. 242, no. 2, pp. 132–145, 1980.

- [18] Y. Bando, T. Kuratate, and T. Nishita, “A simple method for modeling wrinkles on human skin,” in 10th Pacific Conference on Computer Graphics and Applications, 2002. Proceedings. IEEE, 2002, pp. 166–175.

- [19] W.-S. Lee, Y. Wu, and N. Magnenat-Thalmann, “Cloning and aging in a vr family,” in Proceedings IEEE Virtual Reality (Cat. No. 99CB36316). IEEE, 1999, pp. 61–68.

- [20] Y. Wu, P. Beylot, and N. M. Thalmann, Skin aging estimation by facial simulation. IEEE, 1999.

- [21] N. Ramanathan and R. Chellappa, “Modeling shape and textural variations in aging faces,” in 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition. IEEE, 2008, pp. 1–8.

- [22] J. Suo, S.-C. Zhu, S. Shan, and X. Chen, “A compositional and dynamic model for face aging,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 32, no. 3, pp. 385–401, 2009.

- [23] N. Ramanathan and R. Chellappa, “Modeling age progression in young faces,” in 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), vol. 1. IEEE, 2006, pp. 387–394.

- [24] A. Lanitis, C. J. Taylor, and T. F. Cootes, “Toward automatic simulation of aging effects on face images,” IEEE Transactions on pattern Analysis and machine Intelligence, vol. 24, no. 4, pp. 442–455, 2002.

- [25] U. Park, Y. Tong, and A. K. Jain, “Age-invariant face recognition,” IEEE transactions on pattern analysis and machine intelligence, vol. 32, no. 5, pp. 947–954, 2010.

- [26] X. Shu, J. Tang, H. Lai, Z. Niu, and S. Yan, “Kinship-guided age progression,” Pattern Recognition, vol. 59, pp. 156–167, 2016.

- [27] X. Shu, J. Tang, Z. Li, H. Lai, L. Zhang, S. Yan, et al., “Personalized age progression with bi-level aging dictionary learning,” IEEE transactions on pattern analysis and machine intelligence, vol. 40, no. 4, pp. 905–917, 2017.

- [28] H. Yang, D. Huang, Y. Wang, H. Wang, and Y. Tang, “Face aging effect simulation using hidden factor analysis joint sparse representation,” IEEE Transactions on Image Processing, vol. 25, no. 6, pp. 2493–2507, 2016.

- [29] W. Wang, Y. Yan, S. Winkler, and N. Sebe, “Category specific dictionary learning for attribute specific feature selection,” IEEE Transactions on Image Processing, vol. 25, no. 3, pp. 1465–1478, 2016.

- [30] W. Wang, Z. Cui, Y. Yan, J. Feng, S. Yan, X. Shu, and N. Sebe, “Recurrent face aging,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 2378–2386.

- [31] M. Schuster and K. K. Paliwal, “Bidirectional recurrent neural networks,” IEEE transactions on Signal Processing, vol. 45, no. 11, pp. 2673–2681, 1997.

- [32] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in neural information processing systems, 2014, pp. 2672–2680.

- [33] M. Mirza and S. Osindero, “Conditional generative adversarial nets,” arXiv preprint arXiv:1411.1784, 2014.

- [34] X. Shu, J. Tang, H. Lai, L. Liu, and S. Yan, “Personalized age progression with aging dictionary,” in Proceedings of the IEEE international conference on computer vision, 2015, pp. 3970–3978.

- [35] G. E. Hinton and R. R. Salakhutdinov, “Reducing the dimensionality of data with neural networks,” science, vol. 313, no. 5786, pp. 504–507, 2006.

- [36] J. Geralds, “Utkface large scale face dataset,” github.com.

- [37] G. B. Huang, M. Mattar, T. Berg, and E. Learned-Miller, “Labeled faces in the wild: A database for studying face recognition in unconstrained environments,” 2008.