Enhancing Conditional Image Generation with Explainable Latent Space Manipulation

††copyright: none1. Abstract

In the realm of image synthesis, achieving fidelity to a reference image while adhering to conditional prompts remains a significant challenge. This paper proposes a novel approach that integrates a diffusion model with latent space manipulation and gradient-based selective attention mechanisms to address this issue. Leveraging Grad-SAM (Gradient-based Selective Attention Manipulation), we analyze the cross attention maps of the cross attention layers and gradients for the denoised latent vector, deriving importance scores of elements of denoised latent vector related to the subject of interest. Using this information, we create masks at specific timesteps during denoising to preserve subjects while seamlessly integrating the reference image features. This approach ensures the faithful formation of subjects based on conditional prompts, while concurrently refining the background for a more coherent composition. Our experiments on places365 dataset demonstrate promising results, with our proposed model achieving the lowest mean and median Frechet Inception Distance (FID) scores compared to baseline models, indicating superior fidelity preservation. Furthermore, our model exhibits competitive performance in aligning the generated images with provided textual descriptions, as evidenced by high CLIP scores. These results highlight the effectiveness of our approach in both fidelity preservation and textual context preservation, offering a significant advancement in text-to-image synthesis tasks.

2. Introduction

Image synthesis, particularly in the context of adhering to both reference images and conditional prompts, poses a significant challenge in contemporary research. While numerous approaches exist, achieving fidelity to reference images while integrating specified conditional prompts remains elusive. This paper presents a novel methodology aimed at addressing this challenge through the integration of a diffusion model with latent space manipulation and gradient-based selective attention mechanisms.

In recent years, diffusion models have emerged as powerful tools for image generation, allowing for the creation of high-quality images by iteratively denoising a latent representation. However, preserving the fidelity of a reference image while adhering to conditional prompts requires further refinement. State-of-the-art models like Stable Diffusion have demonstrated remarkable capabilities in generating images based on a conditional reference image. However, they face challenges when adhering to both the reference image and an additional text condition simultaneously. These models typically operate by controlling the level of noise added to the latent representation, with lesser noise injection required to retain properties from the reference image. This approach often leads to a compromise in faithfully rendering the subjects specified in the text condition, as the reduced number of denoising timesteps limits the model’s ability to accurately generate these subjects.

To address this limitation, we propose the integration of Absolute Gradient-based Selective Attention Manipulation for images (Abs-Grad-SAM) motivated from Grad-SAM (Barkan et al., 2022), a technique that analyzes cross-attention maps and gradients within the denoised latent space to generate importance scores. By leveraging Abs-Grad-SAM, we derive importance scores for elements of the denoised latent vector related to the subject of interest. Since we are dealing with images and the Grad-SAM (Barkan et al., 2022) technique is originally designed for language models, here, instead of using ReLU, we utilize the absolute values of gradients. This adaptation is crucial as attention can impact pixel values both positively and negatively, requiring a more comprehensive consideration of gradient magnitudes.

This enables the creation of masks at specific timesteps during denoising, facilitating the preservation of subjects derived from the text condition while seamlessly integrating background elements from the reference image. Consequently, our approach ensures faithful adherence to both the text prompt and the reference image, overcoming the trade-off between subject integrity and background preservation faced by existing methods.

In this paper, we present preliminary findings. Through comprehensive experiments conducted on the Places 365 dataset (Bobaaayoung, Year), we demonstrate the superiority of our proposed method over baseline models. Specifically, our method yields improved Frechet Inception Distance (FID) scores and CLIP scores, indicative of higher-quality image synthesis. Furthermore, our approach generates images that are not only of superior quality but also inherently explainable, accurately reflecting both the specified conditional prompts and the features of reference images. We also address challenges such as object overlap and interference, thus advancing the state-of-the-art in image synthesis techniques.

3. Related Works

Denoising Diffusion Probabilistic Models: DDPMs (Ho et al., 2020) have emerged as a powerful framework for high-quality image synthesis. These models operate by gradually removing noise from an initial noisy latent representation, guided by a neural network trained to predict the noise distribution. [Ho et al., 2020] (Ho et al., 2020) introduced DDPMs and demonstrated their effectiveness in generating high-quality images on datasets like CIFAR-10 and LSUN. [Nichol and Dhariwal, 2021] (Nichol and Dhariwal, 2021) extended DDPMs to enable class-conditional image generation, while [Rombach et al., 2022] (Rombach et al., 2022) proposed Latent Diffusion Models (LDMs) that apply diffusion models in the latent space of pre-trained autoencoders, enabling high-resolution synthesis with reduced computational requirements.

Text-to-Image Diffusion Models: Building upon the success of DDPMs, researchers have explored techniques to generate images conditioned on text prompts. [Rombach et al., 2022] (Rombach et al., 2022) introduced cross-attention layers into the diffusion model architecture, enabling text-to-image synthesis. The Stable Diffusion model [Rombach et al., 2022] (Rombach et al., 2022) demonstrated state-of-the-art performance in this domain. However, these models often struggle with faithfully rendering all aspects of the text prompt, leading to the phenomenon of ”catastrophic neglect” [Chefer et al., 2023] (Chefer et al., 2023). To control the trade-off between sample quality and diversity, classifier guidance [Nichol and Dhariwal, 2021] (Nichol and Dhariwal, 2021) was introduced, which combines the score estimate of the diffusion model with the estimates of a separately trained image classifier. Alternatively, classifier-free guidance [Ho and Salimans, 2022] (Ho and Salimans, 2022) jointly trains a conditional and an unconditional diffusion model, and combines their respective score estimates to achieve a similar trade-off without requiring a separate classifier. Nichol et al. (2022) (Nichol et al., 2022) introduced GLIDE, a text-guided diffusion model for photorealistic image generation and editing. They compare two guidance strategies—CLIP guidance and classifier-free guidance—and find that the latter is preferred by human evaluators for both photorealism and caption similarity, often producing photorealistic samples. Additionally, they demonstrate the capability of their models for image inpainting, enabling powerful text-driven image editing.

Improving Diffusion Model Performance: Several works have aimed to enhance the performance of diffusion models, particularly in the context of text-to-image generation. [Chefer et al., 2023] (Chefer et al., 2023) proposed the Attend-and-Excite technique, which guides the model to attend to all subject tokens in the text prompt and strengthen their activations, improving the faithfulness of the generated images. [Hoogeboom and Salimans, 2022] (Hoogeboom and Salimans, 2022) showed that blurring can be equivalently defined through a Gaussian diffusion process with non-isotropic noise, bridging the gap between inverse heat dissipation and denoising diffusion, and proposed Blurring Diffusion Models that offer the benefits of both standard Gaussian denoising diffusion and inverse heat dissipation. [Lee et al., 2022] (Lee et al., 2022) introduced a progressive deblurring approach for diffusion models, enabling coarse-to-fine image synthesis by diffusing and deblurring different frequency components of an image at different speeds.. [Ji et al., 2023] (Huang et al., 2023) proposed Self-Attention Control for Diffusion Models Training (SAT), leveraging attention maps to refine intermediate samples during training. [Ruiz et al. 2023] (Ruiz et al., 2023) presented DreamBooth, a method for fine-tuning text-to-image diffusion models to generate personalized images of subjects based on a few reference images. Saharia et al. (2022) (Saharia et al., 2022) introduced Imagen, a text-to-image diffusion model with an unprecedented degree of photorealism and a deep level of language understanding, leveraging techniques from denoising diffusion probabilistic models and latent diffusion models to enhance faithfulness and context adherence in image generation.

In our work, we build upon the advancements in denoising diffusion probabilistic models and latent diffusion models, aiming to improve the faithfulness and context adherence of text-to-image generation. We leverage techniques like attention-based guidance and latent space manipulation to address challenges such as catastrophic neglect and attribute binding, while preserving the strengths of diffusion models in generating high-quality and diverse images.

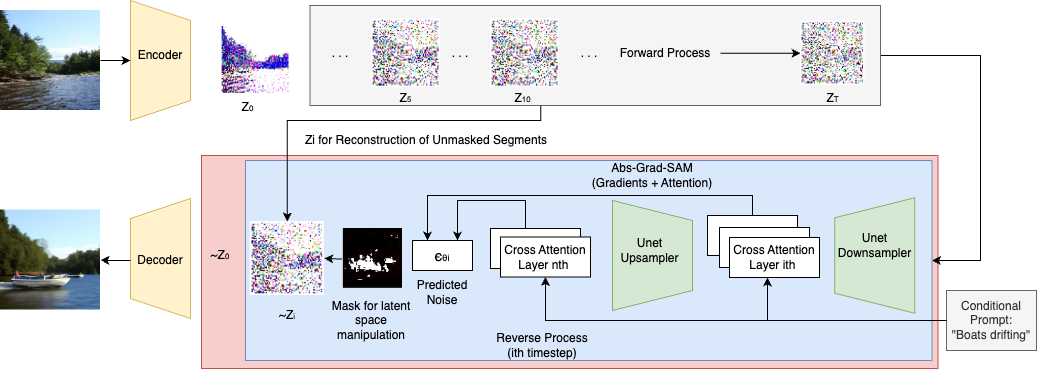

4. Methodology

In our approach, we extend upon the breakthroughs achieved by the state-of-the-art Stable Diffusion model (SD) introduced by Rombach et al. (Rombach et al., 2022) in 2022. Unlike traditional image-based methods, SD operates within the latent space of an autoencoder architecture. Initially, an encoder () is trained to transform input images () into spatial latent vectors (). We then utilize a denoising diffusion probabilistic model (DDPM) (Ho et al., 2020) to first add noise to the latent vector through a forward diffusion process, and subsequently denoise the noisy latent vector through a reverse diffusion process over the learned latent space. This process generates a denoised version of the input latent vector at each timestep and ultimately we get the final denoised latent vector . Throughout the denoising procedure, the diffusion model is conditioned on an additional input condition. Following denoising, a decoder () reconstructs the input image, ensuring that the output of the decoder () closely resembles the desired image (). Leveraging this latent space framework allows for efficient manipulation and enhancement of image representations, laying the foundation for our integration of importance scores based on Abs-Grad-SAM (Barkan et al., 2022) and latent space manipulation techniques.

4.1. Mask Creation

The denoising diffusion probabilistic model (DDPM) utilizes a UNet architecture to predict noise at each timestamp. Conditional guidance is provided through the use of cross-attention (Vaswani et al., 2017) modules within the UNet. These cross-attention maps within the UNet has varying spatial dimensions and comprises a query, derived from the transformation of the input latent vector, and a key, derived from the input text embedding. Specifically, we define a cross attention map weights as as a tensor in where is the number of attention heads, is the spatial dimension of the latent vector, and is the input text size.

For each subject within the input text processed by the cross-attention layer, we compute the Jacobian of gradients of the predicted noise with respect to the corresponding attention weights. This calculation is mathematically represented as:

where indexes the channel, represent the attention-head, represent the spatial coordinates, indexes the token, and indexes the variable in the attention map weight vector.

Now, based on the Jacobian calculated and the attention scores for a particular subject , we calculate the importance score of predicted noise elements corresponding to that subject based on the findings from Grad-SAM (Barkan et al., 2022). Mathematically, importance score is given by:

where represents the gradients of the particular subject in the Jacobian of gradients, and denotes the Hadamard product.

Based on the calculated importance scores, we create a mask based on a dynamic threshold as described:

Additionally, to smooth these masks, we apply a Gaussian filter and morphological dilation on them. Similarly, we compute the importance score for all the subjects in the input text and generate a mask for each of them. Subsequently, we take union of these masks to create the corresponding mask for the cross-attention layer, which selectively masks all the relevant subjects in the latent vector. Mathematically, we denote this final mask as is given by,

Finally, we compute the intersection of these binary masks from all cross-attention layers to derive the ultimate mask , serving as the composite representation.:

4.2. Latent Space Manipulation

In latent space manipulation, we manipulate the final denoised latent vector for the particular timestamp . We utilize the previously formed mask to retain the formation of subjects in the image while altering the background from the reference image by replacing the unmasked elements with the corresponding elements from the latent vector obtained by denoising the reference image up to timestep . Mathematically, the manipulated latent vector is given by:

| (1) |

We perform this latent space manipulation for selected timesteps, which we identify by fine-tuning on a validation set. The choice of these timesteps plays a crucial role in determining the quality of the final output image, as manipulating the latent vector at different timesteps can lead to variations in the preservation of subject details and the incorporation of background elements from the reference image.

4.3. Mask Refinement and Smoothing

While the initial masks generated from the importance scores capture the desired regions, their boundaries often exhibit abrupt transitions, which can lead to visual artifacts in the synthesized images. To mitigate this issue and ensure smooth transitions between the preserved subjects and the integrated background elements, we apply two post-processing operations on the importance scores: Gaussian blurring and morphological dilation.

Mathematically, the Gaussian blurring operation can be expressed as:

where represents the importance score, is the Gaussian kernel with standard deviation , and denotes the convolution operation. By applying Gaussian blurring to the generated importance score and use them for generating masks, we effectively smooth the boundaries, reducing abrupt transitions and promoting gradual changes between the preserved and integrated regions.

Morphological dilation is another image processing technique which involves convolving the input mask with a structuring element, typically a small binary kernel. The dilation operation can be mathematically expressed as:

where is the importance score, is the structuring element (e.g., a disk or square kernel), and is the domain of the structuring element. By applying dilation to the the scores regions, we slightly expand their boundaries, ensuring a smoother transition between the preserved subjects and the integrated background.

The combination of Gaussian blurring and morphological dilation on the importance scores helps generated masks to mitigate visual artifacts and promote seamless integration of the preserved subjects with the background elements from the reference image. The specific parameters, such as the standard deviation for Gaussian blurring and the structuring element for dilation, are fine-tuned empirically to achieve the desired level of smoothness and boundary preservation.

5. Experiments and Results

5.1. Evaluation Setup

In our experiments, we utilized the Stable Diffusion v1-5 (Rombach and Esser, 2022) model for text-to-image generation tasks. We downloaded the necessary tokenizer files from the Hugging Face model hub and for text embeddings, we employed the CLIP model. This setup allowed us to leverage the capabilities of Stable Diffusion v1-5 for generating high-quality images based on text prompts, while utilizing CLIP for text embeddings to facilitate the generation process.

Additionally, our experiments involved utilizing the Place365 dataset, a subset of which was obtained from kaggle (Bobaaayoung, Year). This dataset consisted of images resized to 256x256 pixels for data compression purposes. We carefully curated the dataset by selecting and identifying specific photos from various categories. The final conditional images dataset had a structured organization, including both artificial and natural scenes each containing several subcategories. Under the artificial category, we have athletic field, industrial area, residential area, downtown, and park. Under the natural category, we have arid area, forest, inland water body, mountain, and ocean. For each subcategory, specific prompts were provided to guide the image generation process.

To assess the quality of the generated images, we relied on two key metrics: Frechet Inception Distance (FID) (Heusel et al., 2017) scores and CLIP scores (Hessel et al., 2021). FID scores measure the similarity between the distribution of real and generated images in feature space [3], while CLIP scores evaluate the alignment between generated images and corresponding textual prompts [4].

5.2. Results Analysis

In this section, we present the results of our experiments on text-to-image generation tasks. Initially, we employed the Stable Diffusion v1-5 model (Rombach and Esser, 2022) as our baseline model, coupled with CLIP for text embeddings. To enhance the fidelity of generated images and ensure alignment with the provided conditional image, we fine-tuned the baseline model, identifying optimal hyperparameters. This fine-tuned model is referred to as SD tuned. Subsequently, leveraging the dynamic masking technique outlined in the paper, we developed our model. We utilized the Place365 dataset (Bobaaayoung, Year) and evaluated the generated images using two key metrics: Frechet Inception Distance (FID) (Heusel et al., 2017) and CLIP scores (Hessel et al., 2021).

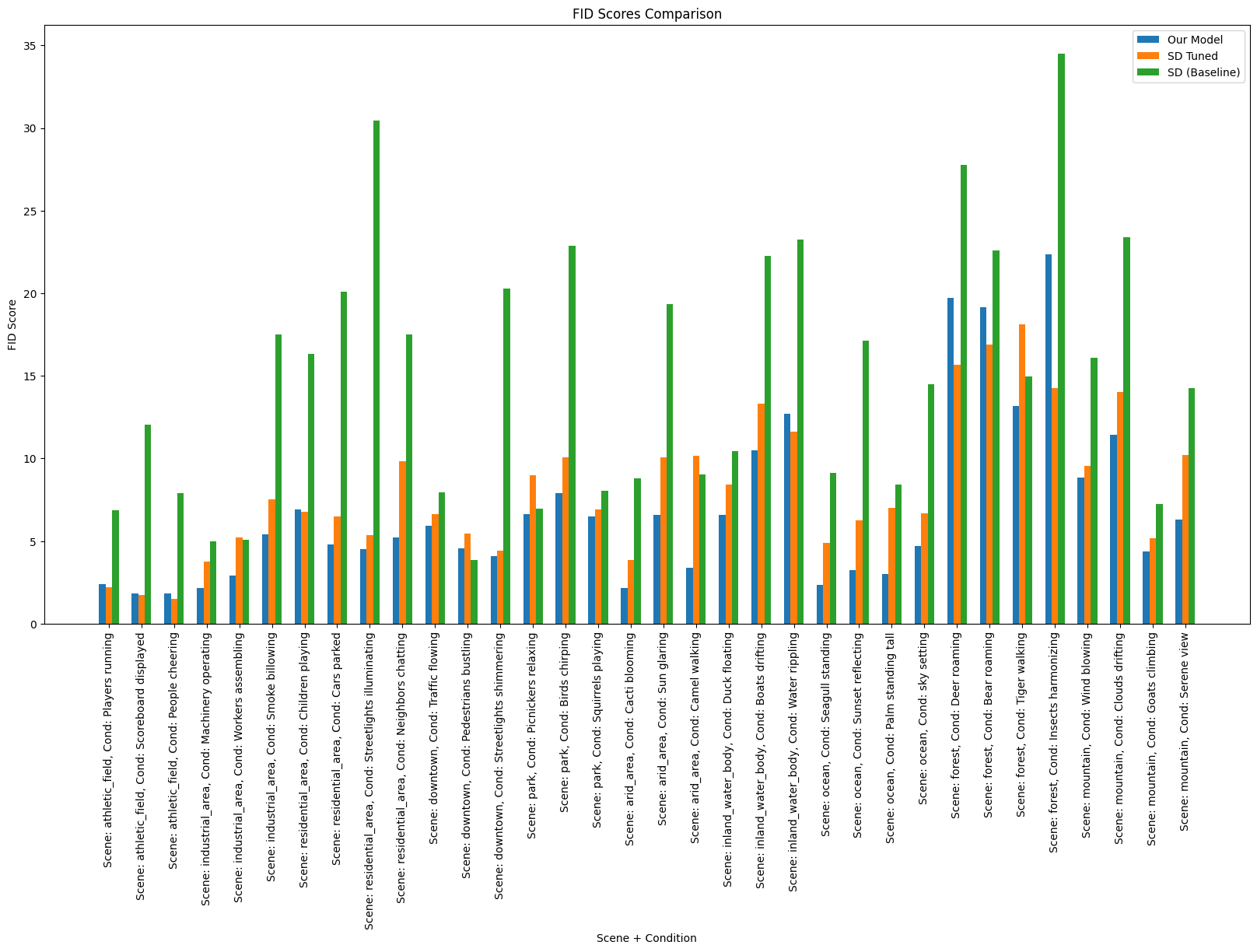

5.2.1. Frechet Inception Distance (FID) Analysis

In the FID scores comparison plot, each bar represents the FID score obtained by a specific model configuration, distinguished by different colors. The x-axis enumerates various scenes and subcategories, providing insights into how each model performs across different visual contexts. Figure 3 shows the FID scores obtained for each model.

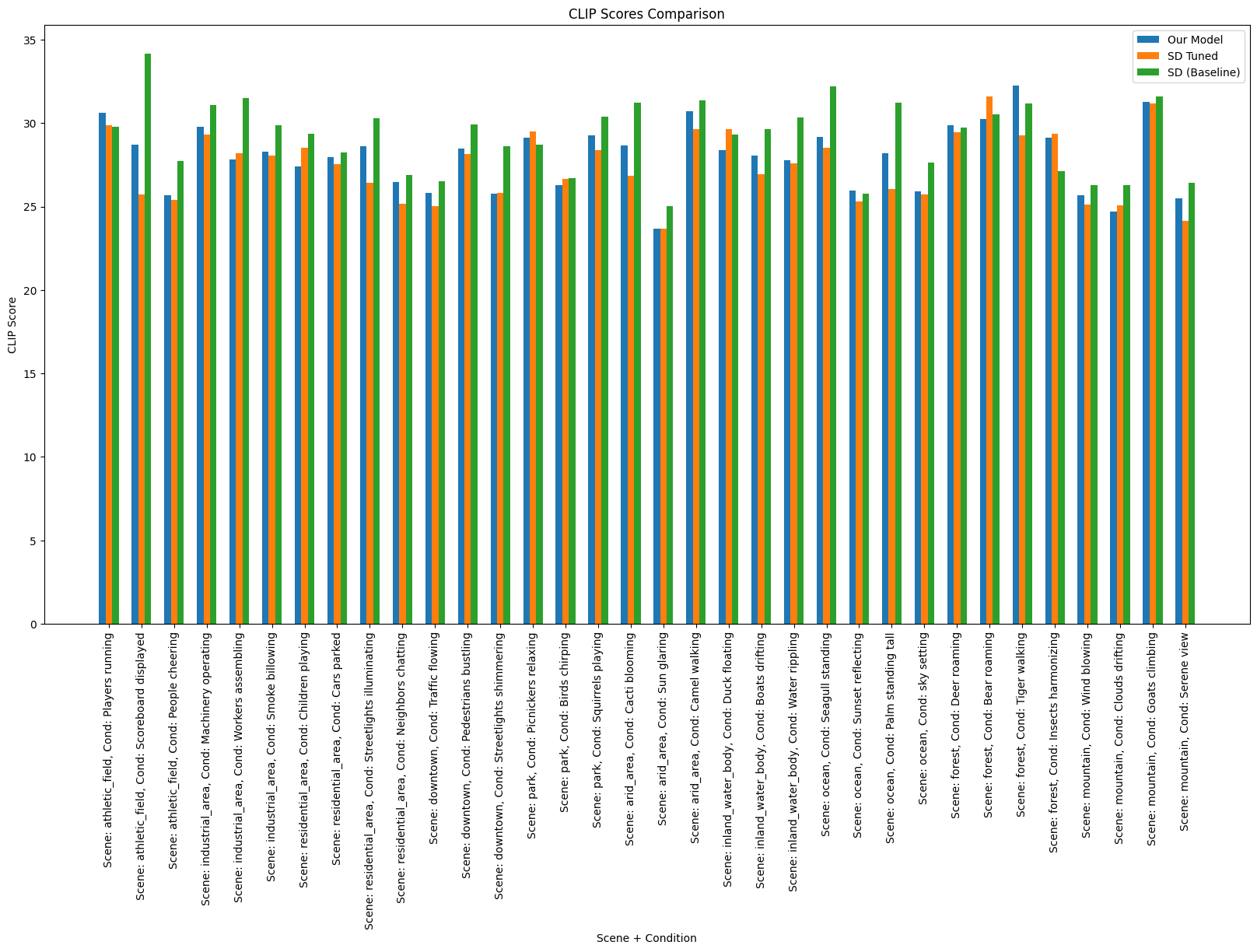

5.2.2. CLIP Score Analysis

The CLIP scores comparison plot follows a similar format as FID scores, with each bar illustrating the CLIP score achieved by a particular model. Figure 4 illustrates the CLIP scores obtained for each model.

5.2.3. Aggregated Results

| Model | FID Scores | CLIP Scores | ||||

|---|---|---|---|---|---|---|

| Our Model |

|

|

||||

| SD Tuned |

|

|

||||

| SD (Baseline) |

|

|

Our model demonstrates notable performance in both fidelity preservation, as indicated by the Frechet Inception Distance (FID) scores, and textual context preservation, as demonstrated by the CLIP scores. FID scores provide crucial insights into the similarity between the generated images and the real images from the dataset. Lower FID scores imply better fidelity, suggesting that the generated images closely resemble the real images in terms of visual features. Our model achieved the lowest mean and median FID scores compared to the SD Tuned and SD (Baseline) models. With a mean FID score of 6.89 and a median FID score of 5.32, our model demonstrates a high degree of fidelity, maintaining consistency across various scenes and categories. These results indicate our model’s effectiveness in capturing the visual characteristics of the scenes from the Place365 dataset, producing images that closely align with real images in terms of visual quality and features. CLIP scores offer insights into the alignment between the generated images and the textual descriptions provided as input. Higher CLIP scores suggest better alignment, indicating that the generated images effectively capture the intended textual context. Despite primarily focusing on fidelity, our model excels in preserving textual context as well.

With a mean CLIP score of 27.98 and a median CLIP score of 28.24, our model achieves competitive performance in aligning the generated images with the provided textual descriptions. This highlights our model’s capability to not only produce visually appealing images but also ensure that these images accurately reflect the intended textual context. Overall, our model’s balanced performance in both fidelity preservation and textual context preservation demonstrates its effectiveness in text-to-image generation tasks, providing high-quality image generation while maintaining fidelity to the provided textual descriptions.

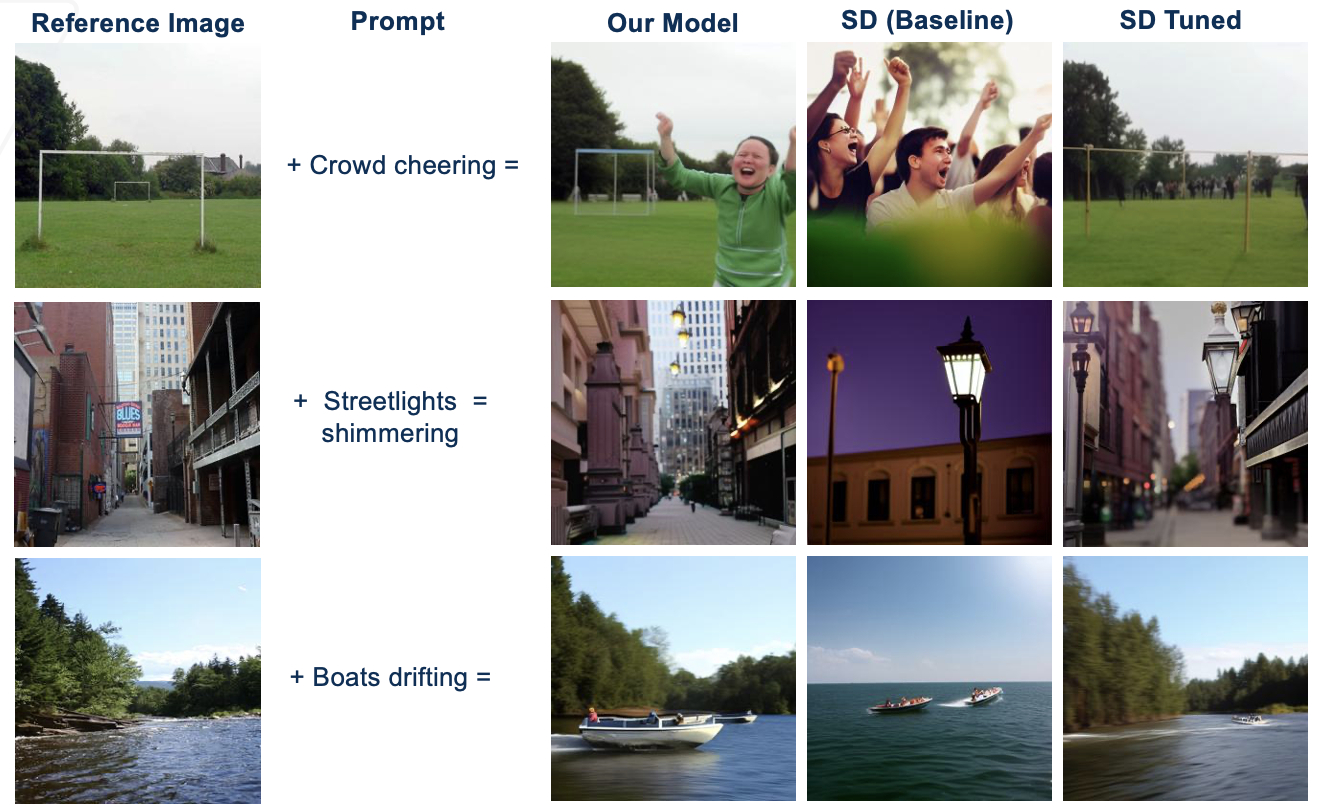

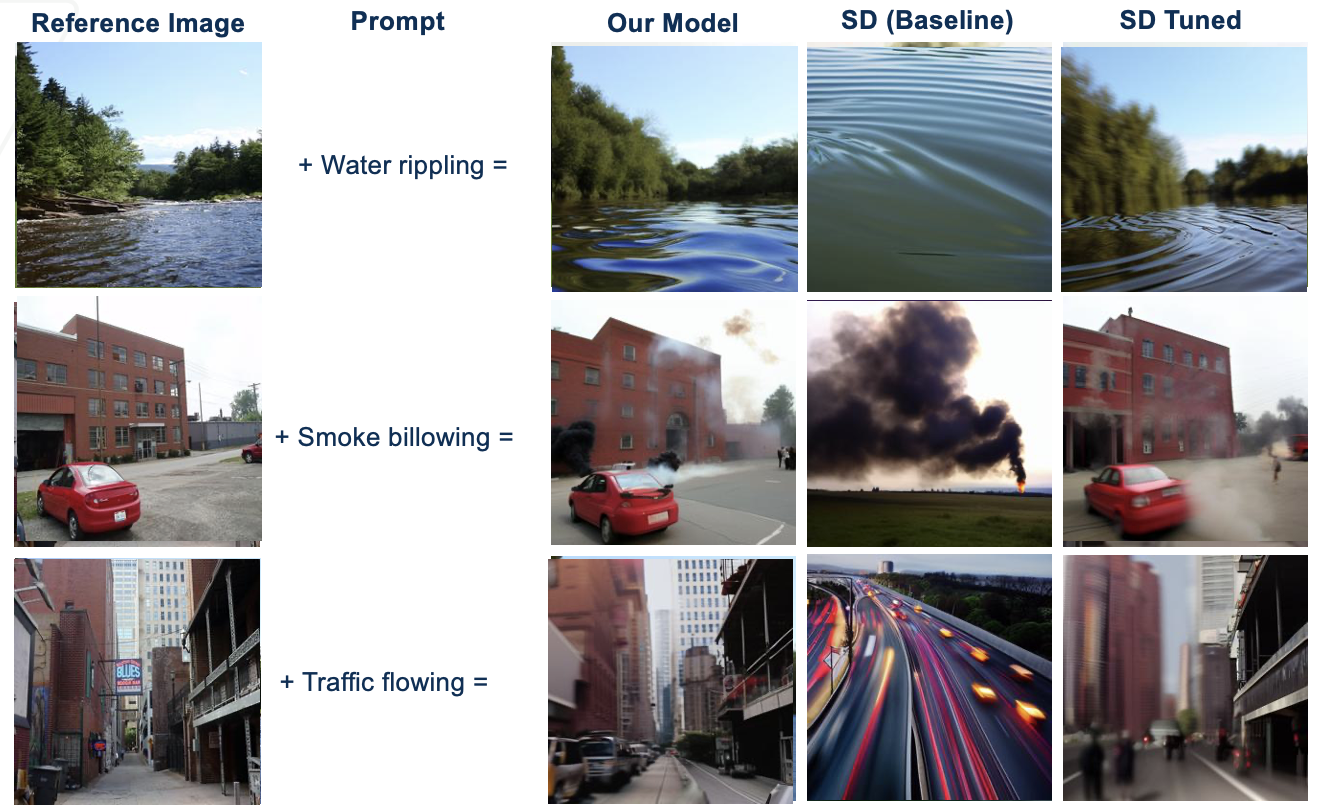

5.3. Qualitative Results

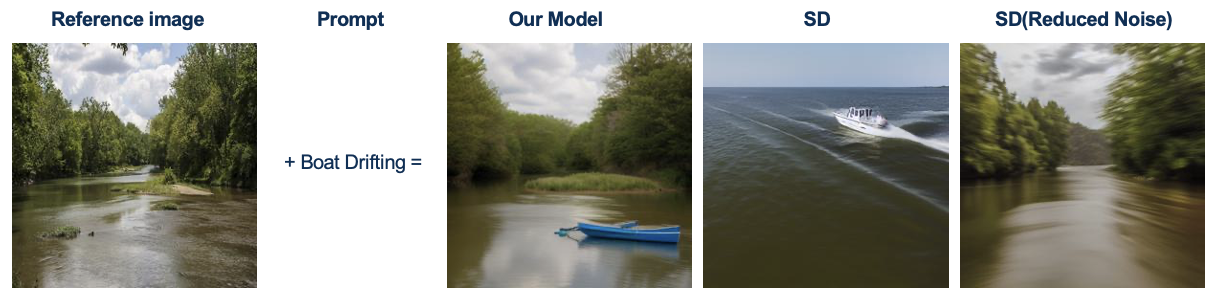

Based on qualitative analysis of the images presented in comparative analysis, it is apparent that our model exhibits a notably higher adherence to the specified condition in comparison to the tuned model. While the baseline model strictly adheres to the condition, it falls short in integrating essential features and contextual cues from the reference image, primarily attributable to the increased noise present in its latent vector.

Conversely, the tuned model displays adeptness in capturing features from the reference image, facilitated by a latent vector having reduced noise levels. However, this diminished noise level contributes to a compromised fidelity in incorporating conditional elements into the image. Our proposed model effectively preserves the contextual integrity of the image while concurrently upholding fidelity to the prescribed condition.

6. Conclusion

In this study, we explored text-to-image generation tasks using the Stable Diffusion model coupled with Abs-Grad-SAM technique for latent space manipulation. Through experimentation, we fine-tuned the baseline model and developed our model by leveraging the dynamic masking technique. We evaluated the generated images using Frechet Inception Distance (FID) scores and CLIP scores, which provided insights into fidelity preservation and textual context alignment, respectively.

Our model exhibited superior performance compared to both the baseline SD model and the fine-tuned SD model (SD Tuned). With lower FID scores indicating better fidelity and competitive CLIP scores suggesting effective alignment with textual prompts, our model demonstrated balanced performance in both visual quality and textual context preservation.

Furthermore, the incorporation of the Abs-Grad-SAM method enabled us to manipulate latent space vectors in an explainable manner, potentially enhancing image precision and control over the generation process. While our experiments utilized importance scores from a single cross-attention layer, future research could explore leveraging all cross-attention layers for even more precise image generation.

Overall, our findings underscore the effectiveness of our model in text-to-image generation tasks, offering high-quality image generation while maintaining fidelity to textual descriptions. This research contributes to advancing the capabilities of generative models and opens avenues for further exploration in controlled image generation and explainable latent space manipulation.

7. Acknowledgements

This research was conducted as part of the CS 7476 Advanced Computer Vision course in Spring 2024. I thank Professor James Hays and the course Teaching Assistant Akshay Krishnan for their guidance and support throughout the project.

References

- (1)

- Barkan et al. (2022) Oren Barkan, Edan Hauon, Avi Caciularu, Ori Katz, Itzik Malkiel, Omri Armstrong, and Noam Koenigstein. 2022. Grad-SAM: Explaining Transformers via Gradient Self-Attention Maps. arXiv preprint arXiv:2204.11073 (2022). arXiv:2204.11073 [cs.LG] https://arxiv.org/abs/2204.11073

- Bobaaayoung (Year) Bobaaayoung. Year. Place365 Dataset. https://www.kaggle.com/datasets/bobaaayoung/place365?select=DATA-1. Accessed on: Date.

- Chefer et al. (2023) Hila Chefer, Yuval Alaluf, Yael Vinker, Lior Wolf, and Daniel Cohen-Or. 2023. Attend-and-Excite: Attention-Based Semantic Guidance for Text-to-Image Diffusion Models. arXiv preprint arXiv:2301.13826 (2023). arXiv:2301.13826 [cs.CV]

- Hessel et al. (2021) Jack Hessel, Ari Holtzman, Maxwell Forbes, Ronan Le Bras, and Yejin Choi. 2021. CLIPScore: A Reference-free Evaluation Metric for Image Captioning. EMNLP 2021 (2021). https://doi.org/10.48550/arXiv.2104.08718 arXiv:2104.08718 [cs.CV]

- Heusel et al. (2017) Martin Heusel, Hubertus Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In Advances in Neural Information Processing Systems. 6626–6637.

- Ho et al. (2020) Jonathan Ho, Ajay Jain, and Pieter Abbeel. 2020. Denoising Diffusion Probabilistic Models. arXiv preprint arXiv:2006.11239 (2020). arXiv:2006.11239 [cs.LG] https://doi.org/10.48550/arXiv.2006.11239 [Submitted on 19 Jun 2020 (v1), last revised 16 Dec 2020 (this version, v2)].

- Ho and Salimans (2022) Jonathan Ho and Tim Salimans. 2022. Classifier-Free Diffusion Guidance. arXiv preprint arXiv:2207.12598 (2022). arXiv:2207.12598 [cs.LG]

- Hoogeboom and Salimans (2022) Emiel Hoogeboom and Tim Salimans. 2022. Blurring Diffusion Models. arXiv preprint arXiv:2209.05557 (2022). arXiv:2209.05557 [cs.LG]

- Huang et al. (2023) Jing Huang, Tianyi Zhang, and Wei Shi. 2023. SAT: Self-Attention Control for Diffusion Models Training. In LGM3A ’23: Proceedings of the 1st Workshop on Large Generative Models Meet Multimodal Applications. Association for Computing Machinery, 15–22. https://doi.org/10.1145/3607827.3616838

- Lee et al. (2022) Sangyun Lee, Hyungjin Chung, Jaehyeon Kim, and Jong Chul Ye. 2022. Progressive Deblurring of Diffusion Models for Coarse-to-Fine Image Synthesis. arXiv preprint arXiv:2207.11192 (2022). arXiv:2207.11192 [cs.CV]

- Nichol and Dhariwal (2021) Alex Nichol and Prafulla Dhariwal. 2021. Improved Denoising Diffusion Probabilistic Models. arXiv preprint arXiv:2102.09672 (2021). arXiv:2102.09672 [cs.LG]

- Nichol et al. (2022) Alex Nichol, Prafulla Dhariwal, Aditya Ramesh, Pranav Shyam, Pamela Mishkin, Bob McGrew, Ilya Sutskever, and Mark Chen. 2022. GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models. arXiv preprint arXiv:2112.10741 (2022). https://doi.org/10.48550/arXiv.2112.10741 arXiv:2112.10741 [cs.CV]

- Rombach et al. (2022) Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022. High-Resolution Image Synthesis with Latent Diffusion Models. arXiv preprint arXiv:2112.10752 (2022). arXiv:2112.10752 [cs.CV] https://arxiv.org/abs/2112.10752

- Rombach and Esser (2022) Robin Rombach and Patrick Esser. 2022. Stable Diffusion v1-5. https://huggingface.co/runwayml/stable-diffusion-v1-5. Hugging Face model hub.

- Ruiz et al. (2023) Nataniel Ruiz, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, and Kfir Aberman. 2023. DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation. arXiv preprint arXiv:2208.12242 (2023). https://doi.org/10.48550/arXiv.2208.12242 arXiv:2208.12242 [cs.CV]

- Saharia et al. (2022) Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily Denton, S. Kamyar Seyed Ghasemipour, Burcu Karagol Ayan, S. Sara Mahdavi, Rapha Gontijo Lopes, Tim Salimans, Jonathan Ho, David J Fleet, and Mohammad Norouzi. 2022. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. arXiv preprint arXiv:2205.11487 (2022). https://doi.org/10.48550/arXiv.2205.11487 arXiv:2205.11487 [cs.CV]

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. 2017. Attention Is All You Need. arXiv preprint arXiv:1706.03762 (2017). https://doi.org/10.48550/arXiv.1706.03762 arXiv:1706.03762 [cs.CL]