Entropic probability and context states

Abstract

In a previous paper, we introduced an axiomatic system for information thermodynamics, deriving an entropy function that includes both thermodynamic and information components. From this function we derived an entropic probability distribution for certain uniform collections of states. Here we extend the concept of entropic probability to more general collections, augmenting the states by reservoir and context states. This leads to an abstract concept of free energy and establishes a relation between free energy, information erasure, and generalized work.

1 Introduction

In [1], we developed an axiomatic system for thermodynamics that incorporated information as a fundamental concept. This system was inspired by previous axiomatic approaches [2, 3] and discussions of Maxwell’s demon [4, 5]. The basic concept of our system is the eidostate, which is a collection of possible states from the point of view of some agent. A review of our axioms and a few of their consequences can be found in the Appendix. The axioms imply the existence of additive conserved quantities called components of content and an entropy function that identifies reversible and irreversible processes. The entropy includes both thermodynamic and information components.

One of the surprising things about this axiomatic system is that, despite the absence of probabilistic ideas in the axioms, a concept of probability emerges from the entropy $S$. If state $a$ is an element of a uniform eidostate $A$, then we can define

| $P(a \mid A) = \dfrac{2^{S(a)}}{2^{S(A)}}$ | (1) |

States in $A$ with higher entropy are assigned higher probability. As we will review below, this distribution has a uniquely simple relationship to the entropies of the individual states $a$ and the overall eidostate $A$.

The emergence of an entropic probability distribution motivates us to ask several questions. Can this idea be extended beyond uniform eidostates? Can we interpret an arbitrary probability distribution over a set of states as an entropic distribution within a wider context? What does the entropic probability tell us about probabilistic processes affecting the states within our axiomatic system? In this paper we will address these questions.

2 Coin-and-box model

We first review a few of the ideas of the system in [1] by introducing a simple model of the axioms. None of our later results depend on this model, but a definite example will be convenient for explanatory purposes. Our theory deals with configurations of coins and boxes; as we will see below, the states are arrangements of coins, memory records, and closed boxes containing coins. States are combined together using the operation, which simply stands for ordered pairing of two states. If , is not the same as , and is distinct from . Thus, the combination operation is neither commutative nor associative.

We construct our states from some elementary pieces:

- Coin states, which can be either (heads) or (tails) or combinations of these. It is also convenient to define a stack state to be a particular combination of coin states : . The coin value of a compound of coin states is just the total number of coins involved. A finite set of coin states is said to be -uniform if every element has the same -value.

- Record states . As the name suggests, these should be interpreted as specific values in some available memory register. The combination of two record states is another record state. Thus, , , , etc., are all distinct record states. Record states are not coins, so .

- Box states. For any -uniform set of coin states , there is a sequence of box states . Intuitively, this represents a kind of closed box containing coins, so that . If then we denote the corresponding “basic” box states by .

An eidostate is any finite, non-empty, -uniform set of states. The operation on eidostates is just the Cartesian product of the sets, and always yields another eidostate. For convenience, we identify the state with the singleton eidostate .

We now must define the relation , which tells us which states can be transformed into which other states. We will first give some elementary relations:

- Two eidostates are similar (written ) if they are composed of the same Cartesian factors, perhaps combined in a different way. If , then . (The notation means and .) As far as the relation is concerned, we can freely rearrange the “pieces” in a compound eidostate.

- For coin states, .

- If is a record state, for any . In a similar way, for an empty box state, .

- If is a -uniform eidostate of coin states, .

Now we add some rules that allow us to extend these to more complex situations. In what follows, , , , etc., are eidostates, and is a state.

- Transitivity. If and , then .

- Augmentation. If , then .

- Cancelation. If , then .

- Subset. If and , then .

- Disjoint union. If and are both disjoint unions and , and both and , then .

Using these rules we can prove a lot of relations. For example, for a basic box state we have . From the subset rule we have (but not the reverse). Then we can say,

| (2) |

from which we can conclude (via transitivity and cancelation) that . The use of a basic box allows us to “randomize” the state of one coin.

Or consider two coin states and distinct record states and . Then

| (3) |

from which we can show that . That is, we can set an unknown coin state to , if we also make a record of which state it is. A similar argument establishes the following:

| (4) | |||||

The eidostate (called a bit state) can be deleted at the cost of a coin absorbed by the basic box. The basic box is a coin-operated deletion device; and since each step above is reversible, we can also use it to dispense a coin together with a bit state (that is, an unknown bit in a memory register).

These examples help us to clarify an important distinction. What is the difference between the box state and the eidostate ? Could we simply replace all box states with a simple combination of possible coin eidostates? We cannot, because such a replacement would preclude us from using the subset rule to obtain Equation 2. The whole point of the box state is that the detailed state of its contents is entirely inaccessible for determining possible processes. Putting a coin in a box effectively randomizes it.

It is not difficult to show that our model satisfies all of the axioms presented in the Appendix, with the mechanical states in identified as coin states. The key idea in the proof is that we can reversibly reduce any eidostate to one with a special form:

| (5) |

where is a stack state of coins and is an information state containing possible record states. Relations between eidostates are thus reduced to relations between states of this form. We note that the coin value is conserved in every relation, and no relation allows us to decrease the value of . In our model, there is just one independent component of content ( itself), and the entropy function is . (We use base-2 logarithms throughout.)

3 The entropy formula and entropic probability

Now let us return to the general axiomatic system. A uniform eidostate is one for which, given any two states , either or . (We may write this disjunction as .) The set of all uniform eidostates is called . Then the axioms imply the following theorem (Theorem 8 in [1]):

Theorem.

There exist an entropy function and a set of components of content on with the following properties:

- (a) For any , .

- (b) For any and component of content , .

- (c) For any , if and only if and for every component of content .

- (d) for all .

The entropy function is determined (up to a non-mechanical component of content) by the relations among the eidostates.

We can compute the entropy of a uniform eidostate $A$ in terms of the entropies of its elements $a$. This is

| $S(A) = \log \left( \sum_{a \in A} 2^{S(a)} \right)$ | (6) |

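It is this equation that motivates our definition of the entropic probability of $a$ within the eidostate $A$:

| $P(a \mid A) = \dfrac{2^{S(a)}}{2^{S(A)}} = \dfrac{2^{S(a)}}{\sum_{a' \in A} 2^{S(a')}}$ | (7) |

Then $0 \leq P(a \mid A) \leq 1$ and the probabilities sum over $A$ to 1. As we have mentioned, the entropy function may not be quite unique; nevertheless, two different admissible entropy functions lead to the same entropic probability distribution. Even better, our definition gives us a very suggestive formula for the entropy of $A$:

| $S(A) = \sum_{a \in A} P(a \mid A) \, S(a) - \sum_{a \in A} P(a \mid A) \log P(a \mid A)$ | (8) |

| $\phantom{S(A)} = \langle S(a) \rangle + H(\vec{P})$ | (9) |

where the mean $\langle \cdots \rangle$ is taken with respect to the entropic probability, and $H(\vec{P})$ is the Shannon entropy of the distribution $P(a \mid A)$ [6, 7].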

Equation 9 is very special. If we choose an arbitrary distribution (say $P'(a)$), then with respect to this probability we find

| $\langle S(a) \rangle_{P'} + H(\vec{P}') \leq S(A)$ | (10) |

with equality if and only if $P'$ is the entropic distribution [7]. Therefore we might define the entropic probability to be the distribution that maximizes the sum of average state entropy and Shannon entropy, a kind of “maximum entropy” characterization.
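The relations in this section can be checked numerically. The following is a minimal Python sketch, not part of the axiomatic development. It assumes base-2 logarithms, an eidostate entropy of the form log2 of the sum of 2^S(a) over the states, and entropic probabilities proportional to 2^S(a). The entropy values and all function names are hypothetical and chosen only for illustration.

```python
import math


def eidostate_entropy(state_entropies):
    """Entropy of a uniform eidostate, assuming S(A) = log2(sum of 2**S(a))."""
    return math.log2(sum(2.0 ** s for s in state_entropies))


def entropic_probabilities(state_entropies):
    """Entropic probability of each state, assuming P(a|A) = 2**(S(a) - S(A))."""
    s_total = eidostate_entropy(state_entropies)
    return [2.0 ** (s - s_total) for s in state_entropies]


def shannon_entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def mean_plus_shannon(probs, state_entropies):
    """Average state entropy plus Shannon entropy for a given distribution."""
    mean_s = sum(p * s for p, s in zip(probs, state_entropies))
    return mean_s + shannon_entropy(probs)


# Hypothetical state entropies (in bits) for a three-state uniform eidostate.
entropies = [1.0, 2.0, 0.5]
probs = entropic_probabilities(entropies)
s_a = eidostate_entropy(entropies)

# An arbitrary distribution that differs from the entropic one.
other = [0.2, 0.5, 0.3]

print("S(A)                         =", s_a)
print("<S> + H, entropic            =", mean_plus_shannon(probs, entropies))
print("<S> + H, arbitrary           =", mean_plus_shannon(other, entropies))
```

With these illustrative numbers the entropic distribution reproduces S(A) up to rounding, while the arbitrary distribution gives a strictly smaller value, in line with the maximum-entropy characterization.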

4 Uniformization

A unique entropic probability rule arises from our relations among eidostates, which in the real world might summarize empirical data about possible state transformations. But so far, this entropic probability distribution is only defined within a uniform eidostate .

In part this makes sense. An eidostate represents the knowledge of an agent, i.e., that the state must be one of those included in the set. This is the knowledge upon which the agent will assign probabilities, which is why we have indicated the eidostate as the condition for the distribution. Furthermore, these might be the only eidostates, since the axioms themselves do not guarantee that any non-uniform eidostates exist. (Some models of the axioms have them, and some do not.) But can we generalize the probabilities to distributions over non-uniform collections of states?

Suppose is a finite set of states, possibly not uniform. Then we say that is uniformizable if there exists a uniform eidostate , where the states are mechanical states in . The idea is that the states in , which vary in their components of content, can be extended by mechanical states that “even out” these variations. Since is uniform, then for any . The abstract process is said to be adiabatically possible [2]. Mechanical states have , so the entropy of the extended is just

| (11) |

which is independent of our choice of the uniformizing mechanical states.

What is the significance of this entropy? Suppose and are not themselves uniform, but their union is uniformizable. Then we may construct uniform eidostates and such that either or , depending on whether or the reverse. In short, the entropies of the extended eidostates determine whether the set of states can be turned into the set , if we imagine that these states can be augmented by mechanical states, embedding them in a larger, uniform context.

Given the entropy of the extended state, we can define

| (12) |

This extends the entropic probability to the uniformizable set .

Let us consider an example from our coin-and-box model. We start out with the non-uniform set . These two basic box states have different numbers of coins. But we can uniformize this set by adding stack states, so that is a uniform eidostate. The entropy of a basic box state is , so we have

| (13) |

The entropic probabilities are thus

| (14) |

5 Reservoir states

So far, we have uniformized a non-uniform set by augmenting its elements with mechanical states, which act as a sort of “reservoir” of components of content. These mechanical states have no entropy of their own. But we can also consider a procedure in which the augmenting states act more like the states of a thermal reservoir in conventional thermodynamics.

We begin with a mechanical state , and posit a sequence of reservoir states , which have the following properties.

- For any , .

- if and only if .

The reservoir states form a ladder. We can ascend one rung in the ladder by “dissolving” the mechanical state into the reservoir. If we have more than one reservoir state, we can ascend one ladder provided we descend another by the same number of rungs.

For any and , we have that , so that

| (15) |

is a non-negative constant for the particular sequence of reservoir states. This sequence is characterized by the state and the entropy increment . Note that we can write , where .

For example, in our coin-and-box model, the basic box states act as a sequence of reservoir states with a mechanical (coin) state and an entropy increment . The more general box states form a reservoir state sequence with and , where and is the number of states in . For each of these box-state reservoir sequences, .

One particular type of reservoir is a mechanical reservoir consisting of the states , , , etc. We denote the th such state by . For the reservoir states, . If we have a finite set of states that can be uniformized by the addition of the states, they can also be uniformized by a corresponding set of non-mechanical reservoir states :

| (16) |

As before, we can find the entropy of this uniform eidostate and define entropic probabilities. But the reservoir states now contribute to the entropy and affect the probabilities.

First, the entropy:

| (17) |

The entropic probability—which now depends on the choice of reservoir states—is

| (18) |

The reservoir states affect the relative probabilities of the states. For example, suppose for a pair of states in . We might naively think that these states would end up with the same entropic probability, as they would if we uniformized by mechanical states. But since we are uniformizing using the reservoir states, it may be that and have different entropies. Then the ratio of the probabilities is

| (19) |

which may be very different from 1.
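The effect of the reservoir on the relative probabilities can be illustrated with a short Python sketch. It assumes that the entropy of the reservoir state on rung n is the rung-0 entropy plus n times a constant entropy increment (called `sigma` here), and that the entropic probability of each augmented state is proportional to 2 raised to the total entropy of the state and its reservoir partner. The rung-0 entropy is common to every term and cancels. The function name, the argument names, and the numerical values are hypothetical.

```python
def reservoir_probabilities(state_entropies, rungs, sigma):
    """Entropic probabilities for states paired with reservoir states.

    state_entropies[i] is the entropy of state i in bits, rungs[i] is the
    ladder rung of its reservoir partner, and sigma is the assumed constant
    entropy increment per rung. The rung-0 reservoir entropy cancels out.
    """
    weights = [2.0 ** (s + n * sigma) for s, n in zip(state_entropies, rungs)]
    total = sum(weights)
    return [w / total for w in weights]


# Two states with equal entropy whose reservoir partners sit one rung apart.
probs = reservoir_probabilities([0.0, 0.0], rungs=[1, 0], sigma=1.0)
ratio = probs[0] / probs[1]

# With a vanishing increment the reservoir no longer distinguishes the states.
flat = reservoir_probabilities([0.0, 0.0], rungs=[1, 0], sigma=0.0)

print("probabilities:", probs)
print("ratio:", ratio)
print("sigma = 0:", flat)
```

In this sketch the probability ratio equals 2 raised to sigma times the difference in rungs, so equal-entropy states receive unequal probabilities whenever their reservoir partners differ.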

Again, let us consider our coin-and-box model. We begin with the non-uniform set . Each of these states has the same entropy , that is, zero. We choose to uniformize using basic box states . For instance, we might have

| (20) |

Recalling that , the entropy is

| (21) |

This yields probabilities

| (22) |

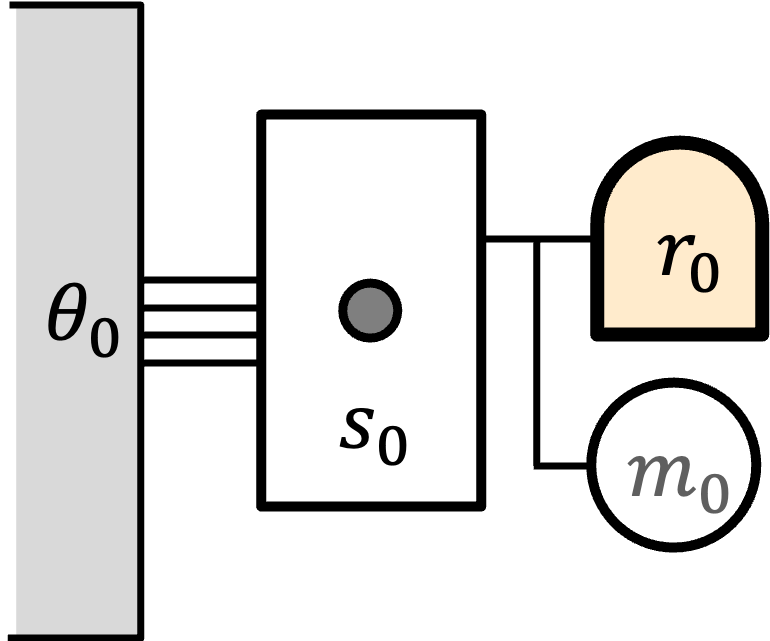

As an illustration of these ideas, consider the version of Maxwell’s demon shown in Figure 1.

The demon is a reversible computer with an initial memory state . It is equipped with a reversible battery for storing energy, initially in mechanical state . The demon interacts with a one-particle “Szilard” gas, in which the single particle can move freely within its volume (state ). The gas is maintained in thermal equilibrium with a heat reservoir, whose initial state is . We might denote the overall initial state by .

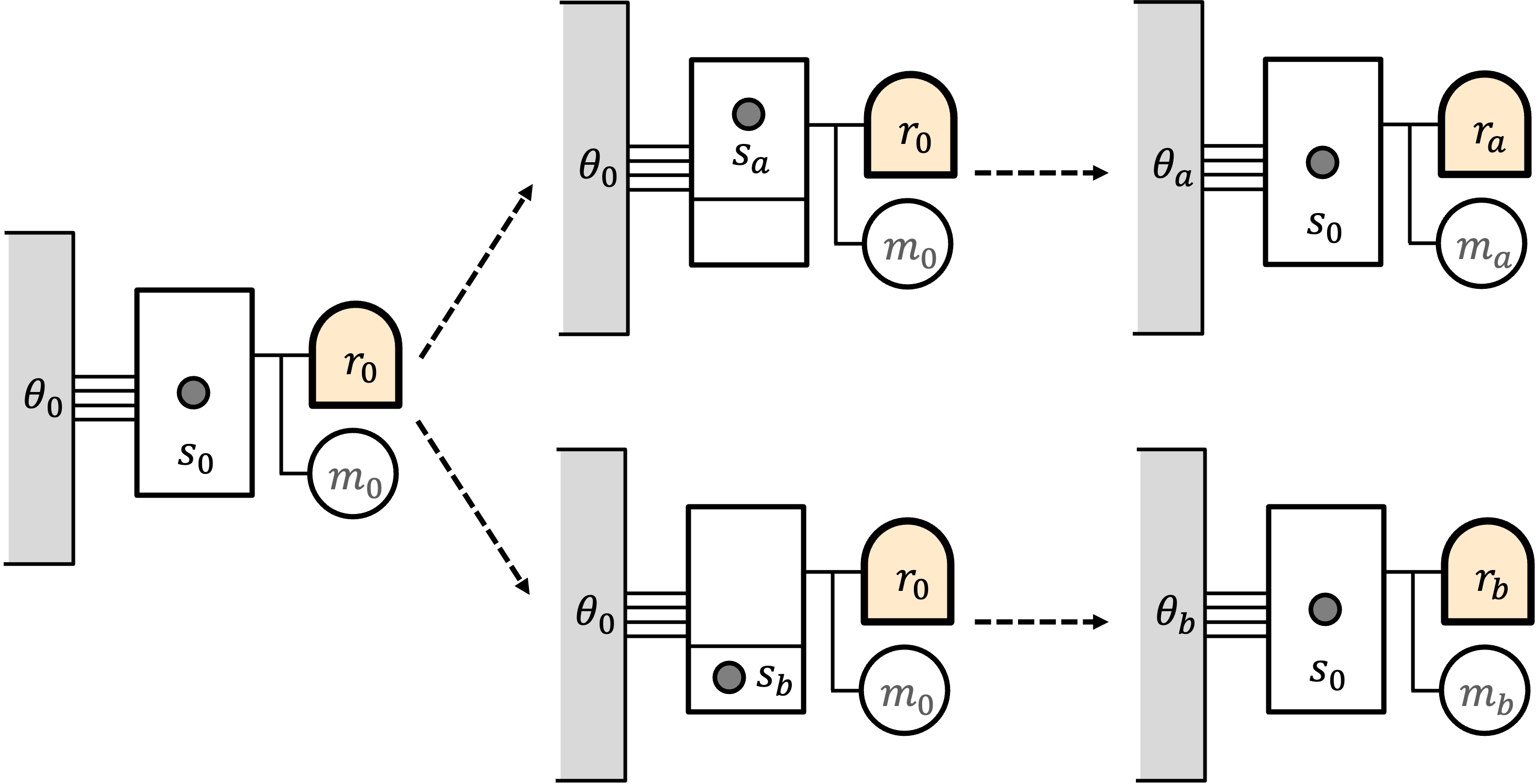

Now the demon introduces a partition into the gas, separating the enclosure into unequal subvolumes, as in Figure 2. The two resulting states are and , which are not equally probable. The probabilities here are entropic probabilities due to the difference in entropy of and .

Now the demon records the location of the particle in its memory and uses this to control the isothermal expansion of the one-particle gas. The work is stored in the battery. At the end of this process, the demon retains its memory record, and the battery is in one of two mechanical states and . The gas is again in state . But different amounts of heat have been extracted from the reservoir during the expansion, so the reservoir has two different states and .

The overall final eidostate might be represented as

| (23) |

The states of the demon and the gas, and , have different energies and the same entropy. It is the reservoir states and that (1) make uniform (constant energy), and (2) introduce the entropy differences leading to different entropic probabilities for the two states.

A conventional view would suppose that the unequal probabilities for the two final demon states come from their history; that is, that the probabilities are inherited from the unequal partition of the gas. In the entropic view, the unequal probabilities are due to differences in the environment of the demon, represented by the different reservoir states and . The environment, in effect, serves as the “memory” of the history of the process.
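The demon example can be made quantitative under standard textbook assumptions, which are not spelled out in the text above. The Python sketch below assumes that a one-particle ideal gas expanding isothermally from a fraction f of the volume to the full volume draws heat kT ln(1/f) from the reservoir, lowering the reservoir entropy by log2(1/f) bits. It also assumes that the demon, battery, and gas contribute the same entropy in both branches, so that only the reservoir entropy matters. The initial reservoir entropy `s0`, the partition fraction, and the function names are hypothetical.

```python
import math


def final_reservoir_entropy(s0_bits, fraction):
    """Reservoir entropy (bits) after isothermal expansion from `fraction`
    of the volume to the full volume, assuming a one-particle ideal gas."""
    return s0_bits - math.log2(1.0 / fraction)


def entropic_probs(entropies):
    """Probabilities proportional to 2**entropy, normalized to sum to 1."""
    total = sum(2.0 ** s for s in entropies)
    return [2.0 ** s / total for s in entropies]


# Hypothetical setup: the partition leaves 1/4 of the volume on one side.
fraction_small = 0.25
s0 = 10.0  # arbitrary initial reservoir entropy in bits; it cancels out

reservoir_entropies = [
    final_reservoir_entropy(s0, fraction_small),
    final_reservoir_entropy(s0, 1.0 - fraction_small),
]
probs = entropic_probs(reservoir_entropies)

print("final reservoir entropies:", reservoir_entropies)
print("entropic probabilities:", probs)
```

Under these assumptions the entropic probabilities of the two final states coincide with the volume fractions of the partition, which is the sense in which the reservoir carries the record of the process history.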

6 Context states

When we uniformize a non-uniform by means of a sequence of reservoir states, the reservoir states affect the entropic probabilities. We can use this idea more generally.

For example, in our coin-and-box model, suppose we flip a coin but do not know how it lands. This might be represented by the eidostate . Without further information, we would assign the coin states equal probability 1/2, which is the simple entropic probability. But suppose we have additional information about the situation that would lead us to assign probabilities 1/3 and 2/3 to the coin states. This additional information—this context—must be reflected in the eidostate. The example in Equation 14 tells us that this does the job:

| (24) |

The extended coin-flip state includes extra context so that the entropic probability reflects our additional information.

In general, we can adjust our entropic probabilities by incorporating context states. Suppose we have a uniform eidostate , but we wish to specify a particular non-entropic distribution over these states. Then for each we introduce eidostates , leading to an extended eidostate

| (25) |

which we assume is uniform. The ’s are the context states. Our challenge is to find a set of context states so that the entropic probability in equals the desired distribution .

We cannot always do this exactly, but we can always approximate it as closely as we like. First, we note that we can always choose our context eidostates to be information states. The information state containing record states has entropy . Now for each , we closely approximate the ratio by a rational number; and since there are finitely many of these numbers, we can represent them using a common denominator. In our approximation,

| (26) |

Now choose for each . The entropy of becomes

| (27) | |||||

From this, we find that the entropic probability is

| (28) |

as desired.

We find, therefore, that the introduction of context states allows us to “tune” the entropic probability to approximate any distribution that we like. This is more than a trick. The distribution represents additional implicit information (beyond the mere list of states ), and such additional information must have a physical representation. The context states are that representation.
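The tuning procedure described in this section can be sketched in Python. The sketch assumes that an information state with n record states has entropy log2(n), so that the entropic probability of a state paired with such a context is proportional to n times 2^S(a). Matching a target distribution then requires n proportional to the target probability divided by 2^S(a), approximated by rationals with a common denominator. The function names, the `max_denominator` parameter, and the sample numbers are hypothetical.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def context_sizes(target, state_entropies, max_denominator=1000):
    """Number of record states in the context information state for each state.

    Approximates target[i] / 2**S(a_i) by a rational number and rescales all
    of them to a common denominator, keeping the integer numerators.
    """
    ratios = [
        Fraction(p / 2.0 ** s).limit_denominator(max_denominator)
        for p, s in zip(target, state_entropies)
    ]
    if any(r <= 0 for r in ratios):
        raise ValueError("every target probability must be positive and resolvable")
    common = reduce(lambda x, y: x * y // gcd(x, y),
                    (r.denominator for r in ratios), 1)
    return [int(r * common) for r in ratios]


def tuned_probabilities(sizes, state_entropies):
    """Entropic probabilities of states paired with their context states."""
    weights = [n * 2.0 ** s for n, s in zip(sizes, state_entropies)]
    total = sum(weights)
    return [w / total for w in weights]


# A coin flip with equal state entropies and desired probabilities 1/3, 2/3.
target = [1.0 / 3.0, 2.0 / 3.0]
entropies = [0.0, 0.0]
sizes = context_sizes(target, entropies)
tuned = tuned_probabilities(sizes, entropies)

print("context sizes:", sizes)
print("tuned probabilities:", tuned)
```

Raising `max_denominator` tightens the rational approximation, at the price of larger context information states.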

7 Free energy

The tools we have developed can lead to some interesting places. Suppose we have two sets of states, and , endowed with a priori probability distributions and , respectively. We wish to know when the states in can be turned into the states in , perhaps augmented by reservoir states. That is, we wish to know when .

We suppose we have a mechanical state , leading to a ladder of mechanical reservoir states . The mechanical state is non-trivial, in the sense that for any . This means that there is a component of content such that . The set can be uniformized by augmenting the and states by mechanical reservoir states.

However, we still need to realize the and probabilities. We do this by introducing as context states a corresponding ladder of reservoir states such that is very small. Essentially, we assume that the reservoir states are “fine-grained” enough that we can approximate any positive number by for some positive or negative integer . Then, if we augment the and states by combinations of and states, we can uniformize and also tune the entropic probabilities to match the a priori and . The final overall uniform eidostate is

| (29) |

for integers , , and . The uniformized and eidostates are subsets of this, and thus are themselves uniform eidostates. The entropic probabilities have been adjusted so that

| (30) |

We now choose a component of content such that . Since the overall state is uniform, it must be true that

| (31) |

for all choices of . Of course, if all of these values are the same, we can average them together and obtain

| (32) |

We can write the average change in the -value of the mechanical state as

| (33) |

Since all of the states lie within the same uniform eidostate, if and only if —that is,

| (34) |

From this it follows that

| (35) |

If we substitute this inequality into Equation 33, we obtain

| (36) |

We can get insight into this expression as follows. Given the process ,

- is the average increase in -value of the mechanical state, which we can call . Intuitively, this might be regarded as the “work” stored in the process.

- We can denote the change in the Shannon entropy of the probabilities by . Since each or state could be augmented by a corresponding record state, this is the change in the information entropy of the stored record.

- For each state , we can define the free energy . We call this free “energy”, even though does not necessarily represent energy, because of the analogy with the familiar expression for the Helmholtz free energy in conventional thermodynamics. The average change in the free energy is

| (37) |

The free energy depends on the particular reservoir states only via the ratio . Given this value, depends only on the and states, together with their a priori probabilities.

To return to our coin-and-box example, suppose we use the basic box states as reservoir states , and we choose the coin number as our component of content. Then and , so that the free energy function . (If we use different box states as reservoir states, the ratio is different.)

With these definitions, Equation 36 becomes

| (38) |

Increases in the average stored mechanical work, and decreases in the stored information, must be paid for by a corresponding decrease in the average free energy.

Many useful inferences can be drawn from this. For example, the erasure -cost of one bit of information in the presence of the -reservoir is . This cost can be paid from either the mechanical -reservoir state or the average free energy, or from a combination of these. This amounts to a very general version of Landauer’s principle [8], one that involves any type of mechanical component of content.

Appendix

In this appendix we review some of the main definitions and axioms of the theory, as well as some of its key results. For more details, please see [1].

The theory is built on a few essential elements:

- A set of states and a set of eidostates. Each eidostate is a finite collection of states. Without too much confusion, we may identify a state with the singleton eidostate , so that can be regarded as a subset of .

- An operation by which eidostates are combined. This is just the Cartesian product of the sets. Two eidostates and are similar () if they are formed by the same Cartesian factors, perhaps put together in a different way.

- A relation on . We interpret to mean that eidostate may be transformed into eidostate . A process is a pair of eidostates, and it is said to be possible if either or . An eidostate is uniform if, for all , is possible.

- Special states in called record states. State is a record state if there exists another state such that . An information state is an eidostate containing only record states; the set of these is called . A bit state is an information state with exactly two elements, and a bit process is a process of the form .

Given this background, we can present our axioms.

- Axiom I (Eidostates.) is a collection of sets called eidostates such that:

  - (a) Every is a finite nonempty set with a finite prime Cartesian factorization.

  - (b) if and only if .

  - (c) Every nonempty subset of an eidostate is also an eidostate.

- Axiom II (Processes.) Let eidostates , and .

  - (a) If , then .

  - (b) If and , then .

  - (c) If , then .

  - (d) If , then .

- Axiom III If and is a proper subset of , then .

- Axiom IV (Conditional processes.)

  - (a) Suppose and . If and then .

  - (b) Suppose and are uniform eidostates that are each disjoint unions of eidostates: and . If and then .

- Axiom V (Information.) There exist a bit state and a possible bit process.

- Axiom VI (Demons.) Suppose and such that .

  - (a) There exists such that .

  - (b) For any , either or .

- Axiom VII (Stability.) Suppose and . If for arbitrarily large values of , then .

- Axiom VIII (Mechanical states.) There exists a subset of mechanical states such that:

  - (a) If , then .

  - (b) For , if then .

- Axiom IX (State equivalence.) If is a uniform eidostate then there exist states such that and .

A component of content is a real-valued additive function on the set of states . (Additive in this context means that .) Components of content represent quantities that are conserved in every possible process. In a uniform eidostate , every element has the same values of all components of content, so we can without ambiguity refer to the value . The set of uniform eidostates is denoted . This set includes all singleton states in , all information states in and so forth, and it is closed under the operation.

References

- [1] Austin Hulse, Benjamin Schumacher, and Michael D. Westmoreland. Axiomatic information thermodynamics. Entropy, 20(4):237, 2018.

- [2] R. Giles. Mathematical Foundations of Thermodynamics. Pergamon Press Ltd., Oxford, 1964.

- [3] Elliott H. Lieb and Jakob Yngvason. A guide to entropy and the second law of thermodynamics. Notices of the American Mathematical Society, 45:571–581, 1998.

- [4] Leo Szilard. On the decrease of entropy in a thermodynamic system by the intervention of intelligent beings. Zeitschrift für Physik, 53:840–856, 1929. (English translation in Behavioral Science, 9:301–310, 1964.)

- [5] Charles H. Bennett. The thermodynamics of computation—a review. International Journal of Theoretical Physics, 21:905–940, 1982.

- [6] C. E. Shannon. A mathematical theory of communication. Bell System Technical Journal, 27:379–423,623–656, 1948.

- [7] Thomas M. Cover and Joy A. Thomas. Elements of Information Theory (Second Edition). John Wiley and Sons, Hoboken, 2006.

- [8] R. Landauer. Irreversibility and heat generation in the computing process. IBM Journal of Research and Development, 5:183–191, 1961.