Estimating Parking Occupancy using Smart Meter Transaction Data

Abstract.

The excessive search for parking, known as cruising, generates pollution and congestion. Cities are looking for approaches that will reduce the negative impact associated with searching for parking. However, adequately measuring the number of vehicles in search of parking is difficult and requires sensing technologies.

In this paper, we develop an approach that eliminates the need for sensing technology by using parking meter payment transactions to estimate parking occupancy and the number of cars searching for parking. The estimation scheme is based on Particle Markov Chain Monte Carlo. We validate the performance of the Particle Markov Chain Monte Carlo approach using data simulated from a queue. We show that the approach generates asymptotically unbiased Bayesian estimates of the parking occupancy and of underlying model parameters such as arrival rates, average parking time, and the payment compliance rate. Finally, we estimate parking occupancy and cruising using parking meter data from SFpark, a large-scale parking experiment, and compare the resulting occupancy estimates against ground truth data from the parking sensors. Our approach is easily replicated and scalable because it requires only data that cities already possess, namely historical parking payment transactions.

1. Introduction

Finding an available parking space is an annoyance of modern life. The search for parking has external costs as well, given that excess driving increases traffic congestion and pollutes the environment. In response to these negative externalities, city officials are deploying parking information and pricing systems. Parking occupancy information and market-based parking pricing strategies reduce both the time to find a parking space and pollution [fabusuyi2014, pierce2013getting, millard2014curb, chatman2014theory]. These strategies require cities to install both parking occupancy sensors and automated payment stations. However, on-street parking sensors are expensive to install and maintain, and many cities cannot afford to deploy them. In contrast to parking sensors, automated payment stations are more affordable and more widely deployed. In this paper, we attempt to answer the following question: is it possible to estimate parking occupancy and the number of drivers searching for parking using only parking meter payment data?

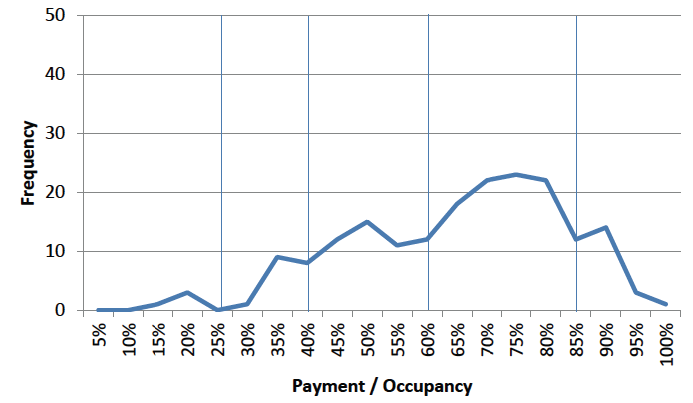

Two challenges stand in the way of answering this question. First, a large fraction of drivers do not pay at all or do not pay enough to cover their parking time. For example, in San Francisco the non-payment rate is roughly 30 percent, driven in part by the perennial problem with the abuse of disabled parking placards for on-street parking [SIRA2014]. Figure 1 plots the probability distribution of the non-payment rates in San Francisco. During metered hours, only 70% of parking time is paid for on the modal block. The second challenge is that drivers almost never pay for the exact amount of parking that they need, as they either pay too much or too little.

These are the challenges that our estimation approach seeks to address. The specific contributions of our approach are:

- The introduction of a modeling framework to infer parking occupancy and the time to find parking given a sequence of parking meter payments, using a variant of the Particle Markov Chain Monte Carlo (PMCMC) method. We show that this algorithm generates asymptotically unbiased estimates of parking occupancy and of the underlying model parameters, such as arrival rates, average parking time, and non-compliance rates (§4.3).

- The use of a stochastic queueing model to provide constraints for inferring the unobserved parking occupancy and the model parameters (§3.1).

- The sample path construction for the queue, which is the foundation of our queueing inference method. The sample path formulation leads to a flexible modelling framework for inference. Our proposed method extends to time-varying model parameters (arrival rates, service rates, etc.). For ease of exposition, we focus on the case of constant model parameters. Furthermore, our method extends to the case of random order of service. There exists an analogous sample path construction of a GI/GI/s queue with random order of service (see Sutton and Jordan [sutton2011bayesian]). For ease of exposition, we focus on the first-come-first-served service discipline.

The balance of the paper is organized as follows. Section 2 reviews the existing literature. Section 3 describes the application of a stochastic queueing model to parking occupancy. Section 4 presents the computational algorithm and explains how the queueing behavior is inferred from smart parking meter transaction data. Section 5 reports numerical experiments, and Section 6 concludes.

2. Literature Review

Traffic congestion has a huge negative impact not just on local municipalities but also on the global economy. It increases pollution, wastes energy, and costs billions in lost time and productivity. A significant contributor to traffic congestion is vehicles searching for parking and it is estimated that in busy areas, an average of 30 percent of traffic congestion is from drivers cruising for open parking spots [shoup2006cruising]. Also contributing to this problem is the increase in vehicle ownership [Schaller2021], a development which adds to the scarcity of parking spaces, particularly, on-street parking. Monitoring on-street parking is a much more difficult task than monitoring garage parking, which is relatively easy to compute through gate counts of entering and exiting vehicles. And cities often price on-street parking significantly lower than off-street parking, leading drivers rationally to choose to cruise [millard2014curb].

Intelligent transportation systems (ITS) such as smart parking solutions are being used to help relieve such congestion and help drivers navigate this situation by providing real-time and predictive information about current and future traffic and parking availability [fabusuyi2014]. One well-known smart parking solution to the “cruising for parking” problem was SFpark [SIRA2014]. SFpark was a Federal Highway Administration (FHWA) funded parking management project in San Francisco, California from 2009 to 2014. The City of San Francisco installed close to 12,000 magnetometer sensors in 8000 parking spaces, creating a wireless sensor network to collect and distribute information on parking availability [6]. Though conceived as a demonstration project, the problems with replicating the program elsewhere include the enormous cost, which is out of the reach of most cities, the limited life-cycle of the fixed sensors, and the variable pricing mechanism used by SFpark [fabusuyi2018].

Apart from the demonstration program, other approaches of managing on-street parking include sensing using smartphones [nawaz2013] or through sensors attached to vehicles [mathur2010] which requires drivers to opt into the program. Crowdsensing, via on-vehicle sensors, is another opt-in system since it depends entirely on the willingness of drivers to attach the sensors to their vehicles and to provide information for others to use [Liao2016]. Other forms of sensing include a combination of on-vehicle sensors and crowdsourcing [roman2018]. User opt-in is required in this type of project as well.

Detecting parking occupancy is also carried out via vehicle-to-infrastructure (V2I) communication. An example is a novel on-street parking management system that detects parking occupancy using low-cost Bluetooth beacon transmitters installed in vehicles that are connected to receivers located near on-street parking spots [Chen2019]. Some of these solutions are being advocated by the private sector or by the private sector in partnership with academia. These include the Integrated Smart Parking Solution from Siemens, which uses radar sensors mounted on streetlights [Siemens2015]. Mounting the radar sensors on streetlights lets them scan a bigger area than can be covered from a single vehicle. The radar sensors monitor both traffic flows and parking spots to help provide information to drivers searching for parking.

Ford Motor Company and Georgia Institute of Technology (Georgia Tech) have collaborated to build a system that gives information about on-street parking availability using sonars and radars on existing cars [Ford2015]. This technology is available to Ford drivers as a paid service. Other systems use vehicles’ pre-installed parking sensors to better classify on-street areas into either legal or illegal parking spots in order to give more accurate information to drivers searching for parking [coric2013]. Using data from parking meters or kiosks is yet another method of collecting valuable parking information for drivers, given that parking meters or kiosks are responsible for 95 percent of on-street paid parking spots [Yang2017]. These transaction data have been used with other data not only to provide real-time information on parking availability but also to forecast short-term on-street parking occupancy. Yang et al. [Yang2019], for example, use a deep neural network-based model that combines parking meter transactions with traffic and weather conditions to predict parking occupancy. An earlier approach by Yang et al. [Yang2017] simulated individual payment and parking behavior using a probabilistic payment model which, when accumulated over all transactions, creates time-varying occupancy estimates with a low error rate.

The use of parking kiosks or meters to estimate parking occupancy is, however, complicated by the fact that drivers can neglect to pay for parking, pay more than required for their time spent, or pay less than required. We can use the parking meter payments in a queueing process model of on-street parking to create a queueing inference engine (QIE) [larson1990queue, bertsimas1992deducing, mandelbaum1998estimating]. Our approach extends this literature in three significant ways. First, our underlying queueing model has no restriction on the inter-arrival time distribution. Second, our parking meter data make up a subset of service commencement times, in contrast to the QIE, whose observations are the complete set of departure times. Additionally, we only observe a noisy estimate of the service times, namely the payment amounts. Finally, we introduce a completely different framework based on simulating the queueing process and on Markov chain Monte Carlo methods.

To our knowledge, no existing work applies a QIE approach to estimating on-street parking occupancy from parking meter transaction data. Our approach improves on the San Francisco Municipal Transportation Agency (SFMTA) regression-based model for estimating hourly parking occupancy using payment data [SIRA2014, demisch2016demand]. First, our model provides predictions at the time scale of observed payments, while SFMTA’s regression-based approach works at the hourly level. Second, our framework learns the model parameters on the fly, while previous methods require the user to tune the regression for each geographic region. As a result, our method is easier to replicate and can be deployed more broadly than the regression-based approach.

3. Models

3.1. Parking Model

The unit of analysis is a single on-street parking block with parking spaces. We model parking occupancy at the block level as a stochastic queueing model. We define to be a sequence of random independent inter-arrival times of the drivers with a general distribution and as a sequence of random independent parking (service) times with a general distribution. These inter-arrival and service times are the random input primitives to the queue. The queue transforms the random input primitives into arrival times, service start times, and departure times. The physics of the queue determine the values of these outputs. The queue transforms the random primitives according to the set of equations (see [kiefer1955theory, krivulin1994recursive, sutton2011bayesian])

| (1) |

where the outputs are the sequences of arrival times and departure times . Intermediate variables assist the transformation; is the service start time of -th arrival, the -th arrival parks at space , and is the first time a parking space becomes available to serve the -th arrival. This transformation is one-to-one and invertible [sutton2011bayesian]. Additionally, the likelihood of any set of arrival and departure times is

| (2) |

where is the density of the inter-arrival times with parameters and is the density of the service times with parameters . This product form of the likelihood is due to the independence of the inter-arrival times and the service times.

These equations correspond to drivers parking at available spaces in the order they arrive. We can extend our results to random order of service by slightly modifying the queue input and output equations [sutton2011bayesian]. For ease of presentation, we focus on the first-come-first-served (FCFS) service discipline.

Using this transformation, we construct the number of parked cars, the number of cars searching for parking, and the time needed to find an available parking space.
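A minimal sketch of a first-come-first-served queue transformation of this kind is given below. It maps inter-arrival and parking times to arrival, parking start, and departure times, and then counts parked and searching cars at a given time. The function names, the variable names, and the use of a heap to track when each space next becomes free are illustrative assumptions, not the paper's notation or implementation.

```python
import heapq
import numpy as np

def simulate_fcfs_block(inter_arrivals, service_times, num_spaces):
    """Map inter-arrival and parking times to arrival, start, and departure times."""
    arrivals = np.cumsum(np.asarray(inter_arrivals, dtype=float))
    # Min-heap of the times at which each space next becomes free.
    free_at = [0.0] * num_spaces
    heapq.heapify(free_at)
    starts = np.empty_like(arrivals)
    departures = np.empty_like(arrivals)
    for n, (a, v) in enumerate(zip(arrivals, service_times)):
        earliest_free = heapq.heappop(free_at)  # first time a space is available
        starts[n] = max(a, earliest_free)       # park on arrival, or when a space frees up
        departures[n] = starts[n] + v
        heapq.heappush(free_at, departures[n])
    return arrivals, starts, departures

def counts_at(t, arrivals, starts, departures):
    """Number of cars parked and number still searching at time t."""
    parked = int(np.sum((starts <= t) & (t < departures)))
    searching = int(np.sum((arrivals <= t) & (t < starts)))
    return parked, searching
```

The time a driver spends looking for a space is `starts - arrivals` under these assumptions. For example, with two spaces, unit inter-arrival times, and parking times of five, the third driver arrives at time 3 and parks at time 6.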

3.2. Payment Observation Model

We now introduce payments into the parking model. We assume that a driver pays immediately after parking. The driver pays at an automated payment station that aggregates payments for all spaces on the block. After the -th driver parks, the time remaining on the meter is

where is the amount of time paid for by the -th parking driver and is the time of the -th payment. The payment amount relates to the true parking time through a general joint distribution. For the sake of simplicity, we assume that the payment amount is a mixture of two distributions. The first component of the mixture is an exponential distribution with a mean equal to the true parking time. The second component of the mixture is a point mass at zero, whose weight is the probability, , of a driver not paying for parking.

The observations consist of the time of each payment and the time remaining on the meter . We focus on analyzing a batch of observations .
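The two-component payment mixture described above can be sketched as follows. The names `simulate_payments` and `nonpay_prob` are hypothetical, and the sketch covers only the payment amounts, not the time remaining on the meter.

```python
import numpy as np

def simulate_payments(parking_times, nonpay_prob, rng):
    """Draw a paid duration for each driver from the two-component mixture."""
    parking_times = np.asarray(parking_times, dtype=float)
    # With probability nonpay_prob the driver pays nothing (point mass at zero).
    pays = rng.random(parking_times.shape) >= nonpay_prob
    # Otherwise the paid time is exponential with mean equal to the true parking time.
    paid = rng.exponential(scale=parking_times)
    return np.where(pays, paid, 0.0)
```

Because the exponential component has the true parking time as its mean, individual payments can fall well above or below the time actually needed, which matches the over- and under-payment behavior discussed in the introduction.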

3.3. State Space

An important feature of the model is that not all parking drivers pay for parking. Therefore, the number of payments does not correspond to the number of arriving vehicles. We now introduce a random variable to track the number of arriving vehicles. Let the random variable be the number of arriving drivers before the -th observed payment. Since the -th payment occurs at time , the number of arrivals up to the -th payment satisfies

| (3) |

where is a geometric random variable with rate

The number of arrivals before the -th payment, , evolves according to a Markov chain at payment times. It is a counting process with independent increments. The number of arrivals until payments has a negative binomial distribution with parameter and .

The state space is the total number of arrivals and the corresponding collection of arrival times, service start times, and departure times of all customers in the queue at the time of the -th payment

The state has two components, the number of arrivals before the -th payment and the queueing quantities for these arrivals. The dimension of grows randomly with due to the unknown number of arrivals between payments.
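The counting process for arrivals between payments can be illustrated with a short simulation. The sketch assumes that each arriving driver pays independently with a fixed probability `pay_prob` and that the geometric count includes the paying driver. Both the name and the counting convention are assumptions made for illustration.

```python
import numpy as np

def arrivals_until_payments(num_payments, pay_prob, rng):
    """Cumulative number of arrivals at each of the observed payments."""
    # Arrivals per payment, counting the paying driver: geometric on {1, 2, ...}.
    gaps = rng.geometric(pay_prob, size=num_payments)
    # Partial sums of independent geometric increments are negative binomial.
    return np.cumsum(gaps)
```

Under these assumptions the expected number of arrivals by the k-th payment is k divided by `pay_prob`, so with 80% compliance ten payments correspond to 12.5 arrivals on average.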

4. Inference

Let be the parameters of the inter-arrival time, service time and payment distributions in the parking and payment models. Our goal is to estimate the

| (4) |

where is the prior distribution on the model parameters

where the process has an initial density and the payments are independent of each other given the state and have a common density where is the state of the system. The transition probability is

This joint density has the form of a Feynman-Kac model. The rich literature on Feynman-Kac models (see Del Moral [del2000branching]) provides the theoretical foundation for the proposed computational algorithms.

4.1. Particle Filter

The queue transformation constrains the likely values of the random primitives given the payment observations. First, we consider the case where the parameters of the inter-arrival, service time, and payment distributions are known. Our goal is to compute the posterior distribution of the state given payment observations . The traditional Kalman filter [kalman1960new] does not apply here because the state dynamics are not linear and the state does not have a Gaussian distribution. The queueing inference engine techniques [larson1990queue, bertsimas1992deducing, mandelbaum1998estimating] do not apply either, due to the general nature of the distribution of the random primitives.

The solution is to simulate sample trajectories from using Sequential Monte Carlo (SMC), also known as a particle filter [gordon1993novel, doucet2009tutorial]. The particle filter approximates the posterior distribution over state trajectories using particles. The particle filter proceeds in three steps. First, for each particle we simulate a one-step state transition. Next, we compute an importance weight for each particle based on the likelihood of observing the current payment given the state of the particle. Finally, we propagate, kill, or replicate each particle in proportion to its importance weight. Particles with larger weights have a higher likelihood of replicating, and those with smaller weights tend to die out. In this way, the surviving particles approximate samples from the posterior distribution . For any number of particles, the particle filter produces an unbiased estimate of the posterior distribution [doucet2009tutorial]. It also produces an unbiased estimate of the likelihood of the observations

| (5) |

where is the importance weight of the -th particle at step .

The two ingredients of the particle filter are the state transition probabilities and the observation likelihood model. The particle filter requires only the ability to simulate the one step transitions of the state. The observation likelihood model is more complex to compute than the state transitions.
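The three steps of the particle filter (propagate, weight, resample) can be sketched generically as below. This is a plain bootstrap filter with multinomial resampling, written for an arbitrary model supplied through three user-defined callables. The callables `init`, `transition`, and `log_obs_density` are hypothetical placeholders and do not implement the parking model.

```python
import numpy as np

def bootstrap_filter(observations, init, transition, log_obs_density, num_particles, rng):
    """Return the final particles and a log-likelihood estimate."""
    particles = init(num_particles, rng)
    log_lik = 0.0
    for y in observations:
        particles = transition(particles, rng)    # step 1: simulate one-step transitions
        log_w = log_obs_density(y, particles)     # step 2: importance weights
        shift = log_w.max()                       # subtract the max for numerical stability
        w = np.exp(log_w - shift)
        log_lik += shift + np.log(w.mean())       # accumulate the likelihood estimate
        idx = rng.choice(num_particles, size=num_particles, p=w / w.sum())
        particles = particles[idx]                # step 3: replicate or kill particles
    return particles, log_lik
```

Only the ability to simulate `transition` is required. The observation density enters solely through the weights, which is the term that the next subsection replaces with a simulation-based approximation.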

4.2. Approximate Bayesian Computation (ABC)

In this section, we construct the observation likelihood, , of the -th observation given the state. The observations consist of the payment time and time remaining on the meter just after the -th payment. The time of the -th payment must correspond to either an arrival time or a departure time of a previous arrival. However, the structure of the departure times is complex and does not preserve arrival order. Further, the departure times depend on residual service times that are difficult to compute for general service distributions. The amount of the -th payment depends on the service time of the corresponding driver, who need not be the -th arrival. These factors make the likelihood challenging to compute in closed form; we therefore simulate an estimate of the likelihood density.

For each particle we simulate realizations of the -th payment given the state . This simulation is fast due to the sample path construction of the queue and payment models. A particle receives a high weight if the simulated -th payments are “close” to the observed -th payment, and receives a low weight otherwise. This is called approximate Bayesian computation (ABC) [marjoram2003markov, beaumont2002approximate]. Jasra et al. [jasra2012filtering] and Calvet et al. [calvet2014accurate] successfully use ABC in the particle filtering context. The full algorithm appears in Algorithm 1. We approximate the likelihood density function by using a kernel-based distance metric in the observation space

| (6) |

where is the bandwidth of the kernel and is the number of simulations.
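A kernel-based likelihood estimate of this type can be sketched as follows. The sketch assumes a Gaussian product kernel with a single bandwidth, which is one common choice and not necessarily the kernel used in the paper. The callable `simulate_obs` is a hypothetical stand-in for simulating a payment observation from a particle's state.

```python
import numpy as np

def abc_log_likelihood(observed, simulate_obs, state, num_sims, bandwidth, rng):
    """Kernel estimate of log p(observed | state) from simulated observations."""
    sims = np.array([simulate_obs(state, rng) for _ in range(num_sims)], dtype=float)
    sims = sims.reshape(num_sims, -1)
    obs = np.asarray(observed, dtype=float).reshape(1, -1)
    dim = obs.shape[1]
    # Gaussian kernel: simulations close to the observation contribute more.
    sq_dist = np.sum(((sims - obs) / bandwidth) ** 2, axis=1)
    log_kernel = -0.5 * sq_dist - dim * np.log(bandwidth) - 0.5 * dim * np.log(2 * np.pi)
    m = log_kernel.max()
    return m + np.log(np.mean(np.exp(log_kernel - m)))
```

A smaller bandwidth reduces the smoothing bias but needs more simulations for a stable estimate, which is the trade-off behind the scaling conditions in Section 4.3.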

4.3. Particle Marginal Metropolis-Hastings (PMMH)

We now turn to jointly estimating the state and the model parameters. We are interested in the parameters of the inter-arrival time distribution (), the service time distribution () and payment probability (). We implement the Particle Markov Chain Monte Carlo method (PMCMC) [andrieu2010particle]. This combines two powerful methods: Markov Chain Monte Carlo and the particle filter. Specifically, we use the Particle Marginal Metropolis-Hastings (PMMH) method. The outputs of PMMH are an estimate of the data likelihood, , and samples from the posterior distribution .

The key idea of the approach is that the particle filter generates state proposals within the Metropolis-Hastings algorithm. The particle filter also generates an estimate of the likelihood which is part of the proposal acceptance probability. We accept joint state and parameters proposals that explore parts of the posterior distribution that are more likely. In essence, we are searching over time evolution of the state space and parameters that are the most likely given the observed sequence of payments.

Here is how it works. The input to the method is a batch of payment observations, . First, we generate proposed values for the parameters using a Gaussian random walk proposal mechanism. Next, we run the particle filter to generate a proposed state trajectory under the posterior distribution and we generate a likelihood estimate under the proposed parameters. We accept the proposed parameters and state trajectory according to an acceptance probability that depends on the likelihood and the parameter proposal distribution. We iterate this process, which results in unbiased estimates of the joint distribution over the parameters and the state trajectory. The estimates are consistent for any number of particles. The output of PMMH is a distribution of accepted state proposal-parameter pairs. Each accepted proposal of the MCMC step consists of both a state trajectory and corresponding model parameters.
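The loop described above can be sketched as follows. This is a simplified illustration that tracks only the parameters and omits the state trajectory. The callable `log_lik_hat` is a hypothetical stand-in for one run of the particle filter, and `log_prior` and `step_size` are likewise assumed names.

```python
import numpy as np

def pmmh(log_lik_hat, log_prior, theta0, step_size, num_iters, rng):
    """Random-walk Metropolis-Hastings driven by a noisy likelihood estimate."""
    theta = np.atleast_1d(np.asarray(theta0, dtype=float))
    log_post = log_lik_hat(theta, rng) + log_prior(theta)
    chain = np.empty((num_iters, theta.size))
    for i in range(num_iters):
        # Gaussian random-walk proposal for the parameters.
        proposal = theta + step_size * rng.normal(size=theta.size)
        log_post_prop = log_prior(proposal)
        if np.isfinite(log_post_prop):
            # Fresh likelihood estimate at the proposed parameters.
            log_post_prop += log_lik_hat(proposal, rng)
        # The proposal is symmetric, so its density cancels in the ratio.
        if np.log(rng.random()) < log_post_prop - log_post:
            theta, log_post = proposal, log_post_prop
        chain[i] = theta
    return chain
```

The likelihood estimate of the current state is stored and reused until the next acceptance instead of being recomputed. Reusing it is what lets a noisy but unbiased estimate still target the exact posterior.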

We modify the PMMH algorithm by plugging in the ABC particle filter to generate the state proposals. The full algorithm appears in Algorithm 2. In their discussion of Andrieu et al. [andrieu2010particle], Cornebise and Peters [cornebise2009comments] advocate this approach when the likelihood is intractable. Our main result shows that the ABC-PMMH method generates unbiased estimates of the target joint distribution as the number of particles and simulated ABC observations go to infinity.

Theorem 4.1.

The ABC-PMMH converges to the correct posterior distribution when the number of particles grows appropriately with the size of the kernel density estimator, that is

when the number of particles scales with the number of simulated payments

and the bandwidth of the kernel density estimator decreases with according to .

Proof.

The Particle Marginal Metropolis-Hastings acceptance probability depends on the likelihood estimate generated by the particle filter

where is the number of steps (payments) and is the number of particles. This is an unbiased estimator of the likelihood [del2000branching] for any number of particles.

However, the expression for the density function is intractable. Therefore, we estimate the density using a kernel density estimator with bandwidth ,

where are simulated from the true distribution . The corresponding observation likelihood estimate generated by the particle filter is

If , then the posterior distribution converges to the correct posterior distribution by applying Theorem 4 of Andrieu et al. [andrieu2010particle].

The difference between the true and approximate log likelihood is bounded above

The last inequality follows from the uniform convergence results for kernel density estimators. ∎

Theorem 4.2 (Fan and Yao [fan2003nonlinear] ).

If the density has a bounded second derivative and the bandwidth is chosen so that , then

We adopt the PMMH method for two reasons. First, the approach allows for flexibility in model specification and only requires the ability to simulate sample paths of the model. Second, PMMH handles state spaces with random dimensions. Our state space is of an unknown dimension due to the unknown number of arrivals between payments. In the literature this problem is called trans-dimensional [green2003trans]. One way to perform estimation on trans-dimensional problems is the reversible jump Markov chain Monte Carlo (RJMCMC) method [green1995reversible]. This popular method requires the development of problem-specific and often complex proposal moves [karagiannis2013annealed]. Several papers use PMCMC methods in trans-dimensional problems. For example, PMCMC has been used to construct phylogenetic trees [persing2015simulation] and to estimate volatility in asset pricing models [bauwens2014marginal].

Algorithm 2 (ABC-PMMH)

- Initialization:

  - Pick an initial value of parameters: .

  - Run Algorithm 1 targeting and the data likelihood .

  - Sample and the likelihood estimate .

- Iteration:

  - Sample from parameter proposals: .

  - Run Algorithm 1 targeting and the likelihood .

  - Sample and the likelihood .

  - Accept proposed parameters and workload trajectories with probability

  - If accepted, set and . If not accepted, set and .

5. Numerical Experiments

In this section we test the performance of the PMMH approach to jointly estimating parking occupancy and the model parameters. First, we validate the approach by estimating parking occupancy and the model parameters using simulated data from a queue assuming all customers pay for parking (). Next, we jointly estimate the parking occupancy and the model parameters under partial payment compliance. Finally, we apply the method to parking meter payment data from SFpark, a large scale parking intervention policy in San Francisco.

5.1. Simulated Data

First, we assume that the drivers pay for parking 100% of the time. In order to validate the estimation technique, we simulate 40 payments using the parking (Section 3.1) and payment (Section 3.2) models with parameters and with spaces. We perform the inference according to Algorithm 2 by simulating a candidate set of state trajectories and parking model parameters . Next, we run a particle filter to generate samples of the state from the posterior distribution, and an estimate of the data likelihood. The proposed state is a sample from the particles and the model parameters. We accept or reject the proposal with a probability proportional to the estimated likelihood of the observed payments. We iterate this procedure until we accept a predefined number of proposals in excess of a burn-in period.

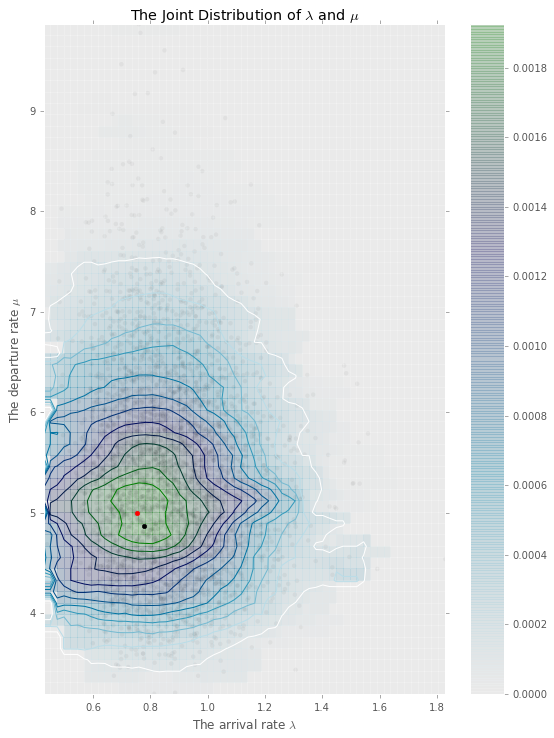

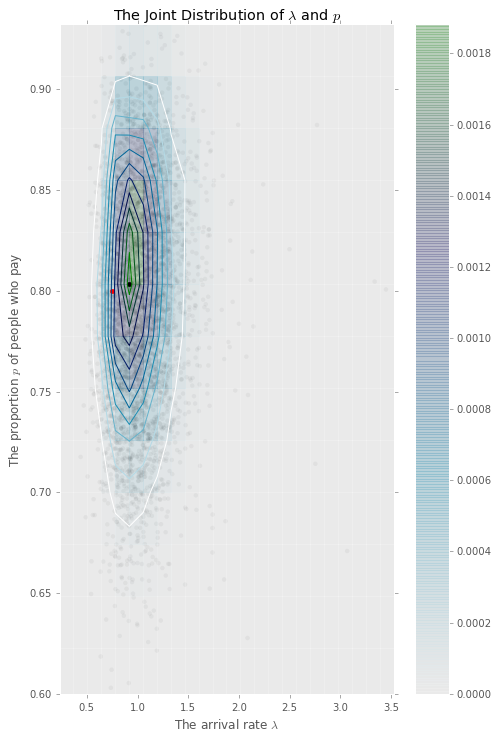

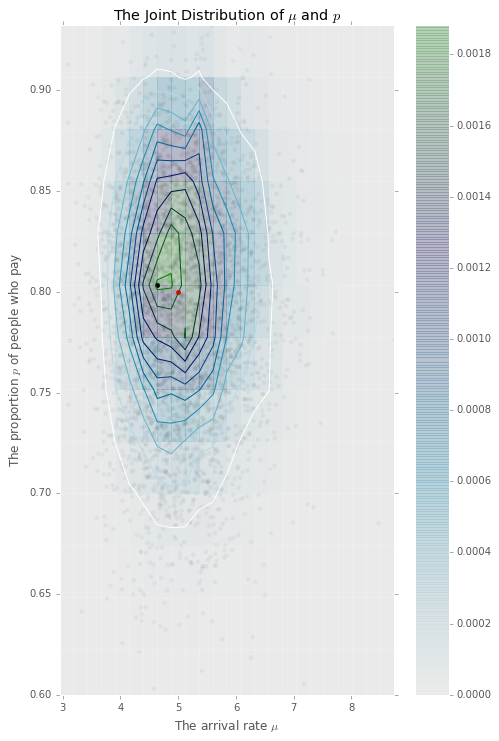

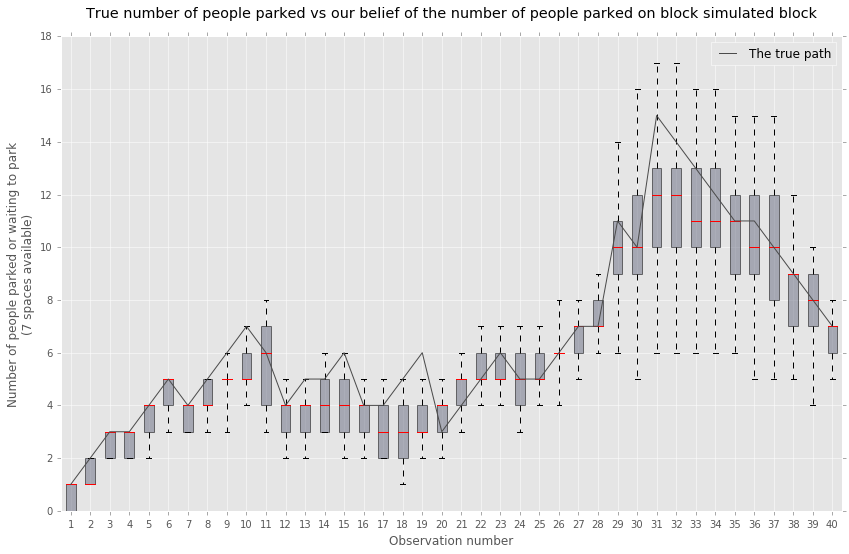

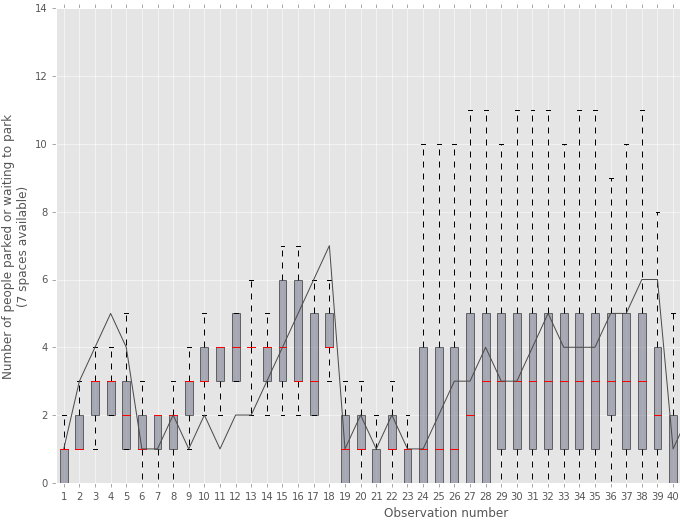

The output of the PMMH is a joint posterior distribution of the model parameters and state trajectories. Figure 6 is the contour plot of the accepted model parameters. From this joint distribution, we select the most likely parameter-trajectory pair, , with the largest value of the data likelihood. Figure 2 plots the mean and confidence intervals of accepted trajectories generated by the PMMH algorithm. The true path is computed from the parking queueing model corresponding to the observed payments. We find that the true trajectory falls well within the 5% and 95% confidence bounds of the estimated trajectory distribution. The corresponding root mean square error is 1.12 cars.

Next, we assume that 80% of drivers pay for their parking while 20% do not. We simulate a new sequence of payments from a model with the same parameters and 7 parking spaces. Figure 7 displays the contour plots of the accepted model parameters . From this joint distribution, we select the most likely parameter-trajectory pair, , which has the largest value of the data likelihood. Figure 2 plots the mean and confidence intervals of accepted trajectories generated by the PMMH algorithm. The true path is computed from the parking queueing model corresponding to the observed payments.

There is a noticeable decrease in estimation accuracy caused by the increased number of model parameters. When , the particle filter estimates the number of arriving drivers between consecutive payments. This trans-dimensional (see Green [green1995reversible]) feature of the problem increases the number of variables that need to be inferred. This increases the complexity and decreases the accuracy of the estimator. The resulting root mean square error is 1.65 cars.
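The trajectory summaries reported in this section (pointwise mean, 5% and 95% bounds, and root mean square error) can be computed from a set of accepted trajectories as sketched below. The sketch assumes that all trajectories are evaluated on a common time grid and that the error is measured between the mean trajectory and the true path. Both are illustrative assumptions, since the text does not spell out these details.

```python
import numpy as np

def summarize_trajectories(trajectories, truth):
    """Pointwise mean, 5%/95% bounds, RMSE of the mean, and coverage of the truth."""
    trajectories = np.asarray(trajectories, dtype=float)  # shape (num_accepted, num_times)
    truth = np.asarray(truth, dtype=float)                # shape (num_times,)
    mean = trajectories.mean(axis=0)
    lower, upper = np.percentile(trajectories, [5, 95], axis=0)
    rmse = float(np.sqrt(np.mean((mean - truth) ** 2)))
    # Fraction of time points at which the true occupancy lies inside the band.
    coverage = float(np.mean((truth >= lower) & (truth <= upper)))
    return mean, lower, upper, rmse, coverage
```

The coverage value gives a numerical counterpart to the visual check that the true path stays inside the plotted band.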

5.2. Field Experiment: SFpark

We now apply the PMMH to SFpark, a large-scale smart parking initiative in the City of San Francisco. The goal is to reduce traffic by helping drivers find parking. SFpark uses real-time parking information and demand-responsive pricing to achieve this goal. SFpark’s slogan is “circle less and live more” (http://SFpark.org/about-the-project/). The program included more than 7,000 on-street parking spaces.

We use two data sources in this experiment: occupancy snapshots and payment transactions. The payment transactions are inputs to the model, while the occupancy data set serves as ground truth to test the accuracy of the model predictions. Many cities now have electronic parking payment meters or stations for on-street parking. These payment data are collected and stored in the memory of the parking meters, and are commonly transmitted wirelessly from the parking meter to the vendor.

We use the same occupancy dataset as in Millard-Ball et al. [millard2014curb]. We collected the occupancy data set by developing a web application that interacts with the SFpark API. These data provide snapshots of parking availability and capacity for each side of the street. The data contains occupancy snapshots at 5 minute intervals for 340 blocks. The observations are from January 1, 2012 to February 14, 2012. The API data set contains 1,730,770 observations during metered hours.

The input dataset to the model consists of a payment data set that contains the date, time, block id, and payment amount for every transaction. One row of the payment data has the form . These payment data cover the same observation window as the occupancy data. There are over 3 million payment transactions during the observation period January 1, 2012 to February 14, 2012.

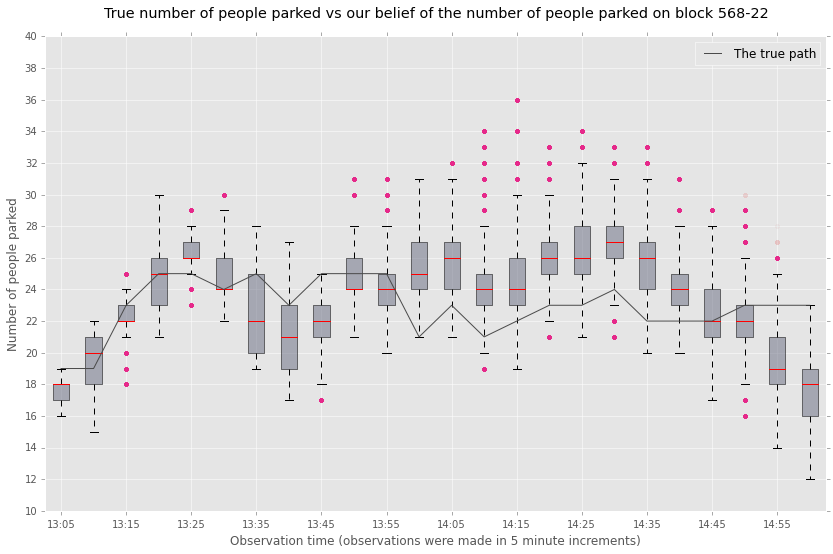

In this experiment, we use 160,000 particles for each run of the particle filter. In order to speed up the Metropolis-Hastings algorithm, we use an adaptive random walk mechanism to generate parameter proposals [peters2010ecological]. The burn-in period of the adaptive Metropolis-Hastings step was 200 accepts, with 3,800 accepts after burn-in. We selected the 2200 and 2400 blocks of Mission Street in San Francisco for the inference. Figure 4 shows a plot of the estimated and true occupancy trajectory over a 2-hour time window on the 2200 block of Mission Street in San Francisco on January 12, 2013. Figure 5 shows a plot of the estimated and true occupancy trajectory over a 2-hour time window on the 2400 block of Mission Street in San Francisco on January 18, 2013. As expected, the mean squared error is larger for the field test than for the simulated data. However, the performance of our approach is superior to SFpark’s Sensor Independent Rate Adjustment (SIRA) regression approach.
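One common form of adaptive random walk proposal is sketched below. It mixes a small fixed Gaussian step with a Gaussian step whose covariance is learned from the chain history and scaled by 2.38²/d. Whether this matches the exact mechanism of the cited reference is an assumption, and the names `adaptive_proposal`, `beta`, and `base_scale` are illustrative.

```python
import numpy as np

def adaptive_proposal(history, current, rng, beta=0.05, base_scale=0.1):
    """Propose new parameters from a mixture of fixed and adapted Gaussian steps."""
    current = np.atleast_1d(np.asarray(current, dtype=float))
    history = np.asarray(history, dtype=float).reshape(-1, current.size)
    d = current.size
    if len(history) <= 2 * d or rng.random() < beta:
        # Too little history, or the safeguard component: small isotropic step.
        cov = (base_scale ** 2 / d) * np.eye(d)
    else:
        # Adapted component: empirical covariance of past parameter values.
        cov = (2.38 ** 2 / d) * np.atleast_2d(np.cov(history, rowvar=False))
    return rng.multivariate_normal(current, cov)
```

Adapting the covariance lets the proposal take larger steps along directions in which the posterior is wide, which tends to reduce the number of particle filter runs wasted on rejected proposals.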

5.3. Sensor Independent Rate Adjustment Approach

SFpark developed a method to estimate the hourly parking occupancy rate from hourly payment rate data [SIRA2014, demisch2016demand]. The occupancy rate is the total occupied time divided by the sum of occupied and unoccupied time. The payment rate is the total paid time divided by the sum of the total paid time and unpaid time. The method is based on multiple linear regression, where dummy variables correspond to the various neighborhoods in San Francisco. SFpark currently uses SIRA to generate monthly occupancy estimates. These occupancy estimates influence the monthly changes in parking prices.
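A regression of this general shape can be sketched as follows: the occupancy rate is regressed on the payment rate with one indicator column per neighborhood. This is a generic least-squares illustration under assumed variable names, not SFpark's actual SIRA specification, whose covariates and coefficients are not given here.

```python
import numpy as np

def fit_rate_regression(payment_rate, neighborhood, occupancy_rate):
    """Least-squares fit of occupancy rate on payment rate plus neighborhood dummies."""
    payment_rate = np.asarray(payment_rate, dtype=float)
    labels, codes = np.unique(neighborhood, return_inverse=True)
    dummies = np.eye(len(labels))[codes]          # one indicator column per neighborhood
    X = np.column_stack([payment_rate, dummies])  # the dummies act as per-neighborhood intercepts
    coef, *_ = np.linalg.lstsq(X, np.asarray(occupancy_rate, dtype=float), rcond=None)
    return labels, coef

def predict_occupancy(payment_rate, neighborhood, labels, coef):
    """Predicted occupancy rate; a linear fit is not constrained to lie in [0, 1]."""
    codes = np.searchsorted(labels, neighborhood)
    return coef[0] * np.asarray(payment_rate, dtype=float) + coef[1:][codes]
```

Because the fit is linear, nothing prevents a predicted occupancy rate above 100% when the payment rate is high, and a separate set of dummy coefficients has to be estimated for each region.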

Our method produces occupancy estimates at a finer time resolution than the SIRA method. In addition to parking occupancy, we estimate the arrival rates and parking times, which SIRA does not. We also tested our method against SFpark’s SIRA method. For the same time and location used above, the true hourly average occupancy was 84%. Our method estimated the hourly average occupancy at 88%, while SIRA estimated an hourly average occupancy of 105%.
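Expressed as absolute errors in percentage points, the figures above give:

$$
|88\% - 84\%| = 4 \text{ points}, \qquad |105\% - 84\%| = 21 \text{ points}.
$$

Because the occupancy rate is defined as occupied time divided by the sum of occupied and unoccupied time, it cannot exceed 100%, so the SIRA estimate of 105% lies outside the feasible range.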

5.4. Implications for practice

As cities and urban areas become more data rich, examples of data being repurposed or given parallel lives will become more common; we have just demonstrated one. In addition, our numerical methods show that revisions to the estimates could be made in real time, thus allowing the dynamic pricing of parking spaces in a manner that reflects both the temporal and spatial dimensions of demand. We have demonstrated the effectiveness of our approach in achieving SFpark’s occupancy objective, given that our estimates are more reliable than SIRA’s. We have also shown how our approach could be replicated nationwide, a feature that delivers on a core objective of SFpark as a demonstration project. This could be achieved at minimal cost compared with in-ground sensors, which are invasive and expensive to install and become less reliable when their batteries start to fail.

The ability to modify parking rates, particularly in real time, has implications for alleviating parking search related congestion. Although urban planners know the areas where parking is scarce, they do not have a good way of quantifying the magnitude of the problem. Once these neighborhoods are identified, we can look to a variety of remedies, such as: changing parking rates to incentivize drivers to choose off-street parking instead of searching for on-street parking; rezoning areas so that more off-street parking is available; increasing off-street parking with direct investments; or implementing congestion pricing in neighborhoods with high rates of cruising.

All four of the remedies mentioned above can be viewed as policies that either explicitly or implicitly change the price of parking. Changing the price of on-street parking is the most direct way to reduce drivers’ incentive to search for on-street parking and is the path that policy makers in SFpark took. Investing directly in off-street parking and rezoning for more off-street parking increase off-street parking supply and should reduce the price of off-street parking. These approaches are often difficult to implement in practice, and their effects are difficult to estimate beforehand. Using congestion pricing in high traffic areas functions as an implicit increase in the price of both on-street and off-street parking. Congestion pricing has other benefits since it reduces traffic overall; however, it is difficult to implement in a targeted manner.

6. Conclusion

This paper introduces an inference framework for estimating parking occupancy from parking meter payment data. Given a historical record of parking payment transactions, our method provides estimates of second-by-second parking occupancy. This framework allows cities to use existing infrastructure (parking meters) to estimate parking occupancy without installing specialized parking sensors. The inference methodology extends a simulation-based approach, the Particle Markov Chain Monte Carlo method, with an approximate Bayesian computation step that computes the likelihood weights. We show that this method yields asymptotically unbiased estimates of the posterior distribution.
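The role of an approximate Bayesian computation step in weighting particles can be illustrated with a minimal sketch. Everything here is a toy assumption rather than the model used in this paper: the Gaussian kernel, the tolerance `epsilon`, the 20-space block, the binomial payment model with a compliance rate of 0.8, and the observed count of 12 paid spaces are all hypothetical.

```python
import numpy as np

def abc_weights(simulated, observed, epsilon):
    """Weight each particle by a Gaussian kernel on the distance between its
    simulated observation and the actual observation (ABC-style weights)."""
    distance = np.abs(np.asarray(simulated, dtype=float) - observed)
    log_w = -0.5 * (distance / epsilon) ** 2
    log_w -= log_w.max()          # stabilize before exponentiating
    w = np.exp(log_w)
    return w / w.sum()            # normalized weights

def resample(particles, weights, rng):
    """Multinomial resampling: draw particles in proportion to their weights."""
    idx = rng.choice(len(particles), size=len(particles), p=weights)
    return np.asarray(particles)[idx]

rng = np.random.default_rng(0)
# Hypothetical particle states: number of occupied spaces on a 20-space block.
occupancy = rng.integers(0, 21, size=1000)
# Toy observation model: each parked car pays with probability 0.8.
simulated_paid = rng.binomial(occupancy, 0.8)
# Compare simulated paid counts with a hypothetical observed count of 12.
weights = abc_weights(simulated_paid, observed=12, epsilon=1.0)
posterior_mean = np.sum(weights * occupancy)
resampled = resample(occupancy, weights, rng)
```

The kernel replaces an explicit likelihood evaluation: particles whose simulated payments resemble the observed payments receive more weight, which is why only a simulator of the parking process is needed.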

The framework has the virtue of being unsupervised; that is, no training set of ground truth parking occupancy data is required to perform the inference. The inference relies solely on the underlying model for the parking search process. Although we employed the queue with first-come, first-served service, the PMMH framework supports any simulated parking search model. With the inference framework introduced and its basic theoretical properties established, subsequent efforts may focus on developing the most appropriate parking search queuing model. This methodology can serve as the basis for a tool that could enable a parking information system for citizens and give policy makers and city planners the ability to evaluate the impact of parking policy interventions such as pricing modifications, information provision, and time limit changes.

References

Appendix A Joint Distributions