
Evaluation of cinematic volume rendering open-source and commercial solutions for the exploration of congenital heart data

Abstract

Detailed anatomical information is essential to optimize medical decisions for surgical and pre-operative planning in patients with congenital heart disease. The visualization techniques commonly used in clinical routine for the exploration of complex cardiac data are based on multi-planar reformations, maximum intensity projection, and volume rendering, which rely on basic lighting models prone to image distortion. In contrast, cinematic rendering (CR), a three-dimensional visualization technique based on physically-based rendering methods, can create volumetric images with high fidelity. However, CR involves many parameters that affect the visualization results, so the outcome depends on the user's experience, and detailed evaluation protocols are required to compare available solutions. In this study, we analyzed the impact of the most relevant parameters in a CR pipeline developed in the open-source version of the MeVisLab framework for the visualization of the heart anatomy of three congenital patients and two adults from CT images. The resulting visualizations were compared to those of a commercial tool used in clinics by means of a questionnaire filled in by clinical users; the two solutions obtained similar scores for definition of structures, depth perception, texture appearance, realism, and diagnostic ability.

Keywords: Cinematic rendering, open-source, commercial tool, congenital heart data

Introduction

Congenital heart disease (CHD) is one of the most frequently diagnosed congenital defects, affecting approximately 0.8% to 1.2% of live births worldwide [2]. As the cardiovascular morphology varies greatly between individual patients, clinicians need a comprehensive understanding of the spatial relationship between the cardiac structures in order to make optimal medical decisions. As a result, a growing number of computer-aided software tools are available to assist radiologists in this process. One of the most widely used methods is volume rendering, which has been found to represent human structures in an artificial way and to be prone to image distortions [8] due to its reliance on basic lighting models. In contrast, cinematic rendering (CR) is a novel post-processing tool [10] that renders volumetric medical data using physically-based advanced lighting models [12]. CR resembles the casting of billions of light rays from all possible directions to create volumetric images with a remarkable level of realism [6].


Benefits of cinematic over volume rendering are increasingly being reported in medical applications, such as faster comprehension of anatomy [9] and pre-operative planning [17]. Additionally, studies have shown that more accurate visualization of medical data benefits imaging tasks, including the delineation of complex congenital heart pathologies [15]. Apart from its clinical utility, CR also has the potential to be useful in patient communication [7] and education [1].

The most widely used commercial implementation of CR [5] is offered by Siemens Healthineers as part of their syngo.via platform (https://www.siemenshealthineers.com/digital-health-solutions/cinematic-rendering). Several other license-limited solutions are also available, including Global Illumination Vitrea by Canon Medical Informatics (https://www.vitalimages.com/global-illumination/) and MeVisLab (https://www.mevislab.de/download/). Vitrea offers global illumination methods for rendering volumetric data in a photo-realistic manner, while MeVisLab offers a path tracer module, which is a significantly enhanced version of the ExposureRender [12] framework by Thomas Kroes. For instance, MeVisLab has recently been used for post-surgical assessment in oncologic head and neck reconstructive surgery, comparing path tracing and volume rendering techniques [4]. While these vendor-provided solutions often have high rendering capabilities and are used in advanced healthcare centers, their cost can be prohibitive for smaller institutions or individual researchers. There are, however, some open-source alternatives. Voreen (https://www.uni-muenster.de/Voreen) and Inviwo (http://www.inviwo.org) offer better volumetric rendering capabilities by implementing ray casting with global illumination. Yet, neither of these applications uses volumetric path tracing or equivalent state-of-the-art volumetric rendering techniques. Another open-source and freely available solution, which supports CR in web browsers, is VolView [18]. These solutions are typically free to use, customizable to meet specific needs, and can be used by anyone with an internet connection, regardless of their location or financial resources. Although these solutions are affordable and flexible, there is a lack of research comparing different solutions in cardiac applications to identify the strengths and weaknesses of open-source software tools relative to commercial solutions.

In this study, we utilized a free version of MeVisLab to design a pipeline for cinematically rendering a CHD dataset. We conducted a detailed evaluation of several critical parameters to enhance the shape and depth perception of the heart anatomy. Furthermore, we assessed the performance of the developed open-source rendering pipeline by comparing it with a commercial solution available in clinics. This evaluation was conducted using a questionnaire filled out by cardiology experts.

1 Materials and methods

1.1 Patient cases and reconstructions

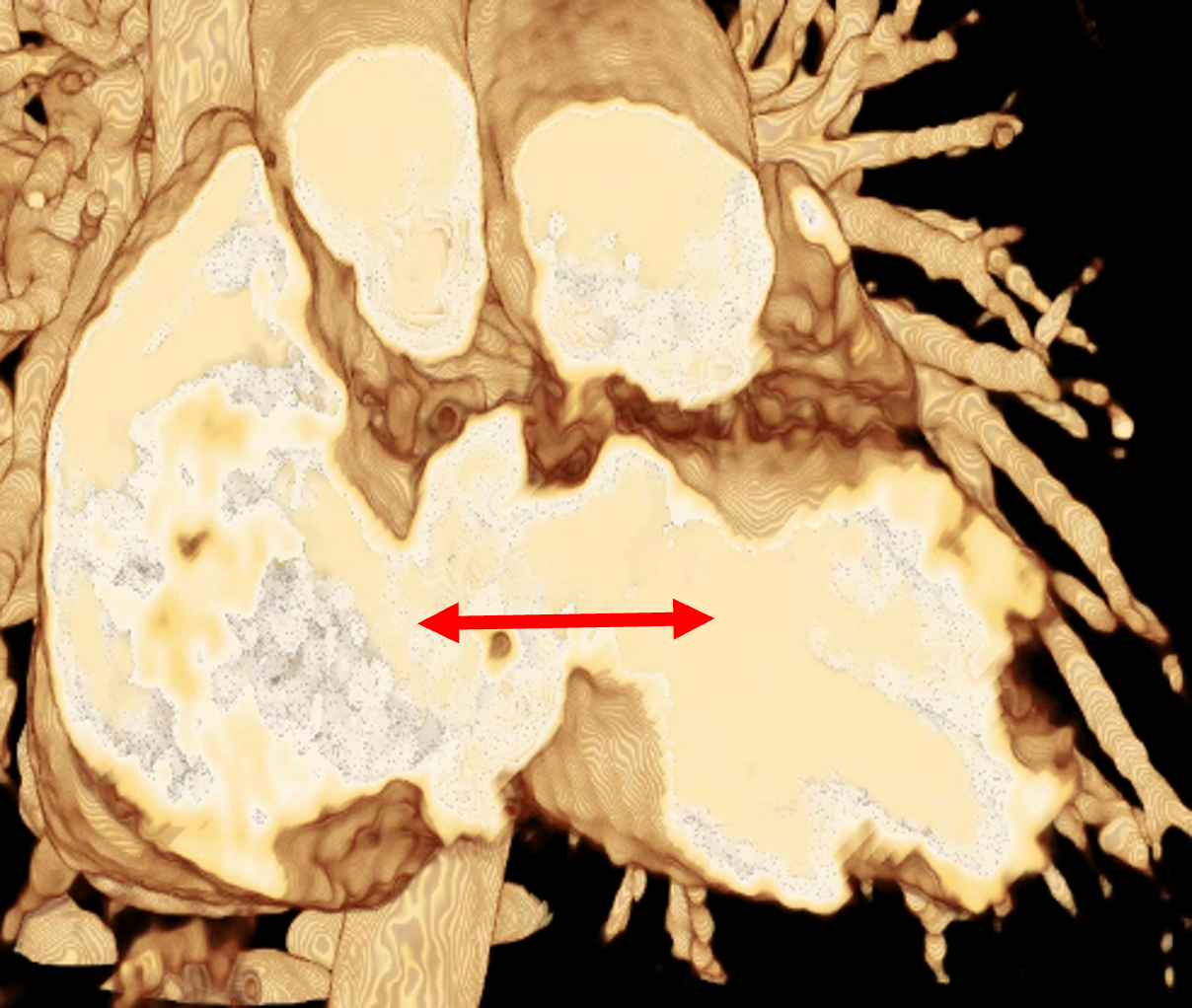

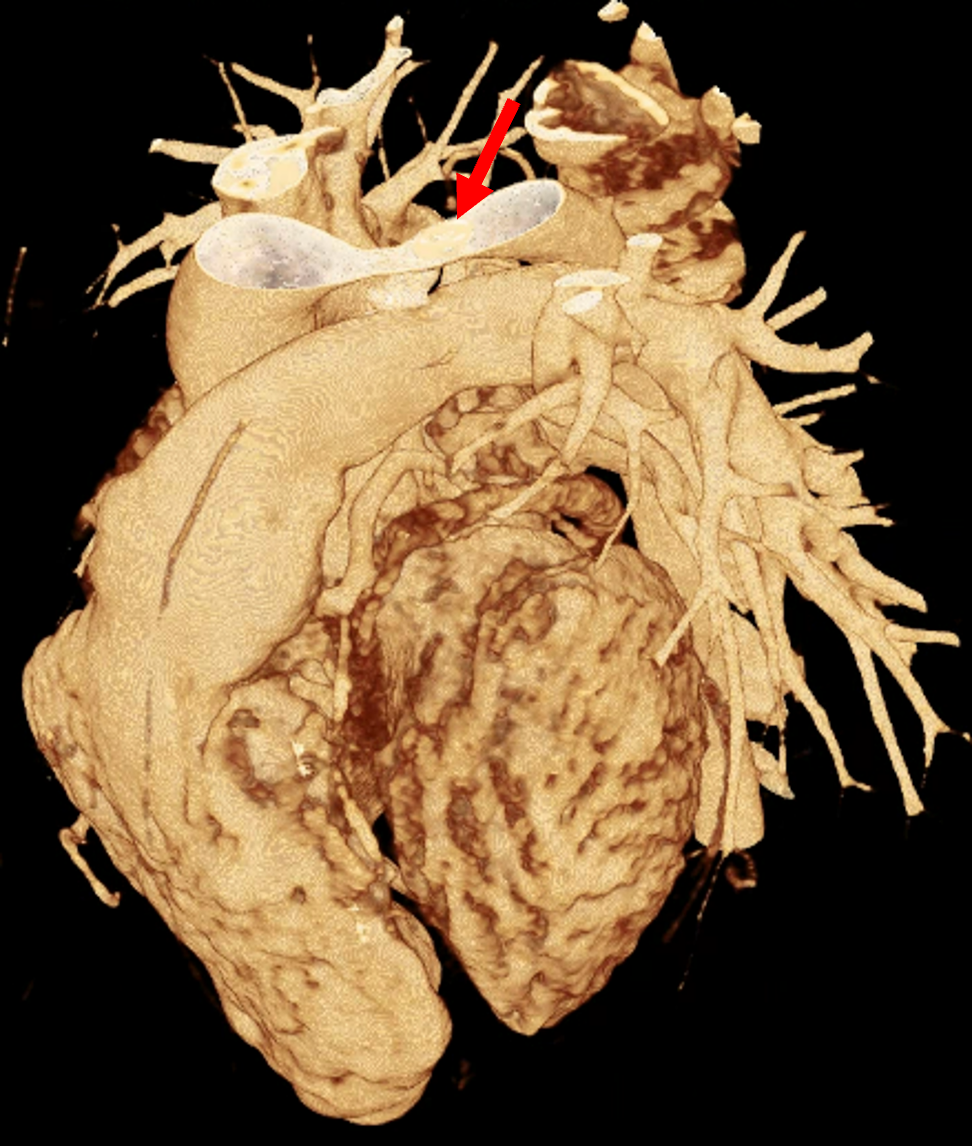

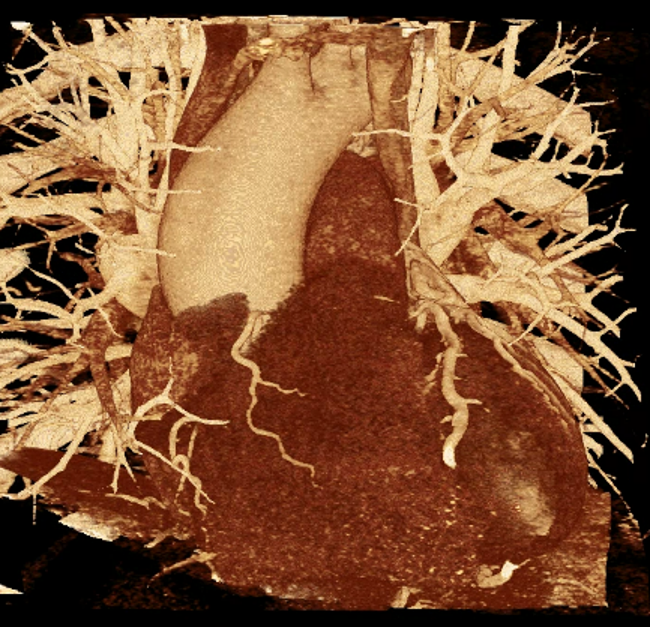

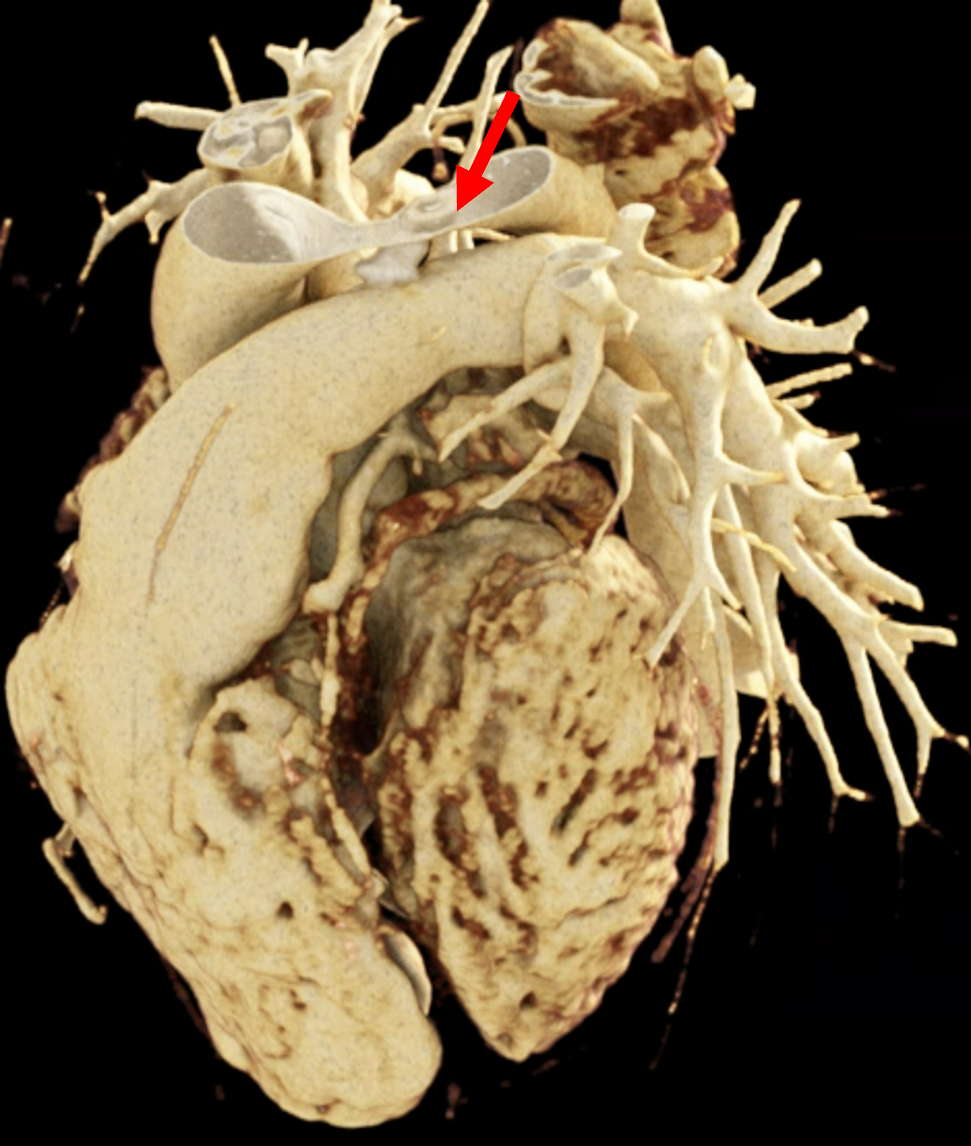

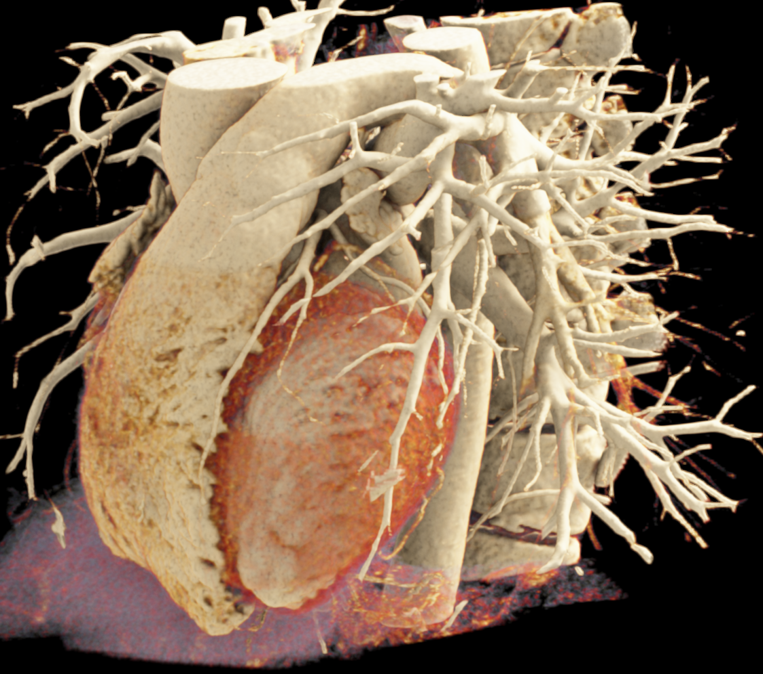

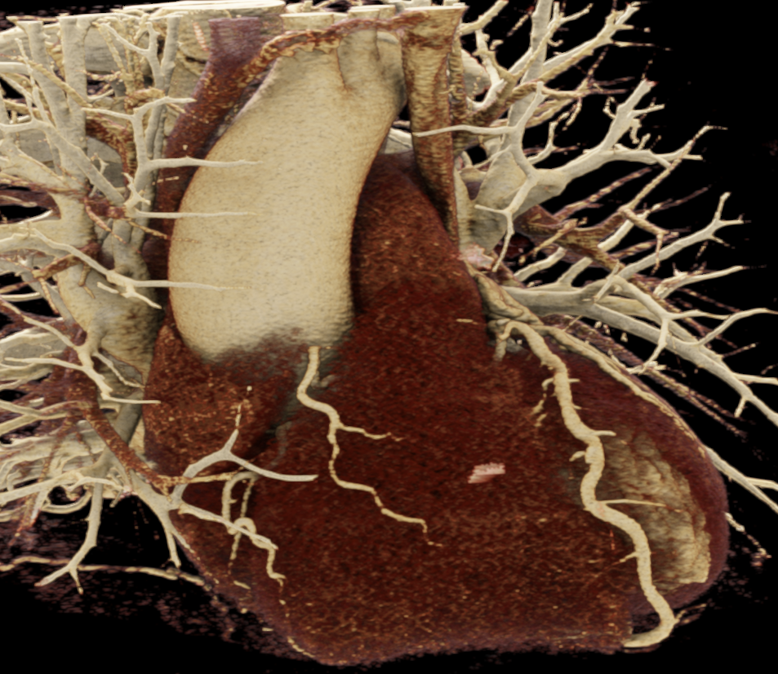

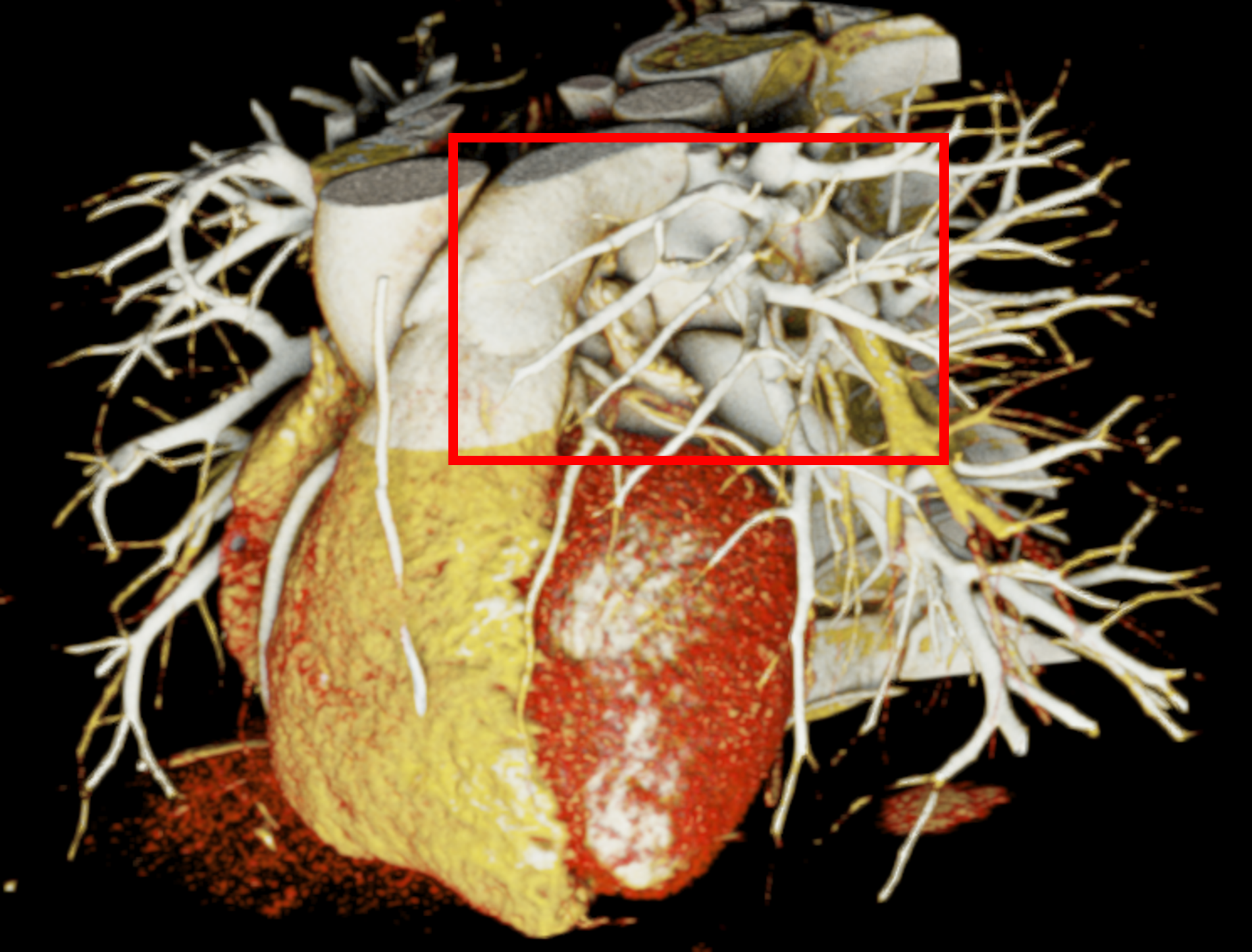

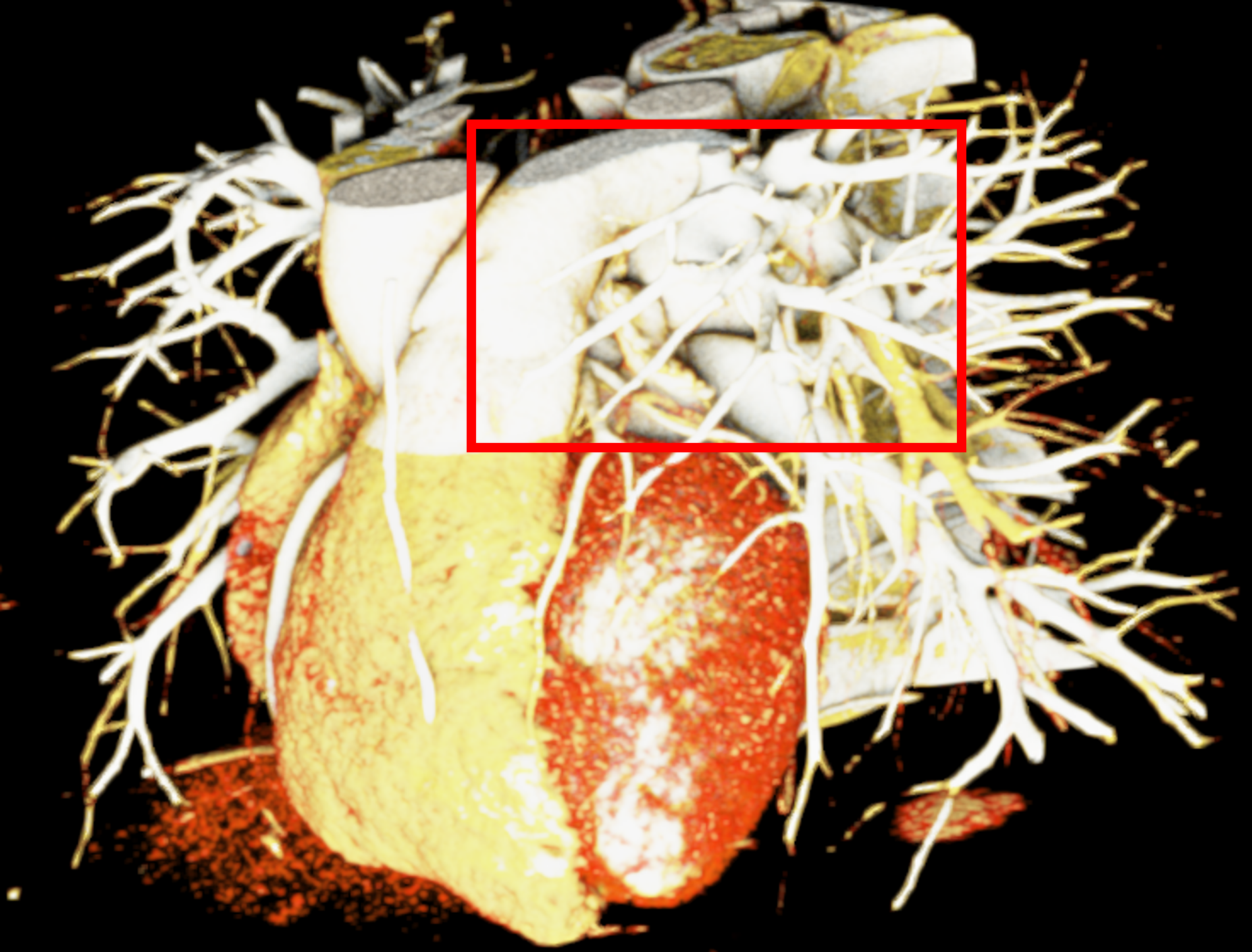

We included clinical data of three congenital heart disease patients who underwent CT imaging for diagnosis or treatment, along with CT data of two normal adult hearts. A brief summary of patient data is provided in Table 1. To create CR visualizations from CT data, anonymized DICOM reconstructions were transferred to both environments: MeVisLab and a workstation with prototype commercial software (syngo.via cinematic VRT, Siemens Healthineers). Each case was displayed using the same zoom and rotation settings in both software tools; the cutting tool was applied to eliminate any bones and remaining lines that could obscure the view. To ensure comparability, the generated reconstructions were captured using the same angle of view, color, and opacity settings (see Figure 1).

| Case | Sex (M/F) | Age | Condition | Manufacturer | Voxel spacing |
|---|---|---|---|---|---|
| 1 | F | 3 years | Pulmonary atresia | GE Medical | |
| 2 | M | 4 days | Ventricular septal defect | Canon Medical | |
| 3 | M | 2 years | Occluded arterial duct | Siemens | |
| 4 | F | 46 years | Normal heart | GE Medical | |
| 5 | M | 63 years | Normal heart | Philips | |

1.2 Cinematic rendering pipeline and sensitivity analysis

To build the cinematic rendering pipeline, MeVisLab (version 3.5.0) was installed on a personal computer (AMD FX(tm) eight-core processor, 3.50 GHz, 32 GB RAM, 64-bit operating system). The pipeline followed a visual programming approach, combining various modules to load imaging data, perform pre-processing, design transfer functions, set material and lighting properties, and render the volume, followed by post-processing. These steps are briefly explained below.

Data loading and pre-processing. The first step of the rendering involved loading patient data, usually in the form of a series of slices. MeVisLab’s DirectDicomImport module was used to directly import the image files. To reduce noise in the acquired data, the GaussSmoothing module in MeVisLab was applied.
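As an illustration of the noise-reduction step, the following is a minimal sketch of Gaussian smoothing along one axis in plain Python. It is not the implementation of MeVisLab's GaussSmoothing module; the function names, the 3-sigma kernel radius, and the edge-replication border handling are assumptions made for this example. Because a Gaussian filter is separable, a volume could be smoothed by applying the same 1D filter along each axis in turn.

```python
import math


def gaussian_kernel(sigma, radius=None):
    """Return a normalized 1D Gaussian kernel (weights sum to 1)."""
    if radius is None:
        # Assumption: truncate the kernel at roughly three standard deviations.
        radius = max(1, int(math.ceil(3.0 * sigma)))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_1d(signal, sigma):
    """Convolve a 1D signal with a Gaussian kernel, replicating border samples."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    smoothed = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp index at the borders
            acc += w * signal[j]
        smoothed.append(acc)
    return smoothed


if __name__ == "__main__":
    # Hypothetical intensity profile with a single noisy spike.
    profile = [0.0] * 5 + [100.0] + [0.0] * 5
    print(smooth_1d(profile, sigma=1.0))
```

A larger sigma removes more noise but also blurs thin structures, which is the trade-off to keep in mind for small vessels.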

Transfer function. After pre-processing, the next step involved designing a transfer function. A transfer function maps voxel values to visual properties like color and opacity, enabling the distinction of anatomical structures in the image. The SoLUTEditor module allowed interactive editing of RGBA lookup tables to design transfer functions. We generated a range of preset transfer functions, saving them in CSV format for easy loading via a Python script. The module’s window level and width option allowed for setting a color range for displaying specific anatomical structures, similar to conventional CT reconstructions.
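The idea of a transfer function stored as a CSV preset can be sketched in a few lines of Python. This is only an illustrative sketch and does not use the MeVisLab or SoLUTEditor API: the function names, the CSV column layout, the control-point values, and the window level and width below are all hypothetical.

```python
import csv
import io


def window_to_unit(value, level, width):
    """Map an intensity to [0, 1] using a window level (center) and width."""
    low = level - width / 2.0
    t = (value - low) / width
    return min(1.0, max(0.0, t))


def lookup_rgba(value, control_points):
    """Linearly interpolate RGBA between control points.

    control_points is a list of (intensity, r, g, b, a) tuples with strictly
    increasing intensities; values outside the range are clamped.
    """
    if value <= control_points[0][0]:
        return tuple(control_points[0][1:])
    if value >= control_points[-1][0]:
        return tuple(control_points[-1][1:])
    for left, right in zip(control_points, control_points[1:]):
        if left[0] <= value <= right[0]:
            t = (value - left[0]) / (right[0] - left[0])
            return tuple(l + t * (r - l) for l, r in zip(left[1:], right[1:]))


def save_preset(control_points):
    """Serialize control points to CSV text (hypothetical column layout)."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["intensity", "r", "g", "b", "a"])
    writer.writerows(control_points)
    return buf.getvalue()


def load_preset(text):
    """Parse CSV text written by save_preset back into control points."""
    reader = csv.reader(io.StringIO(text))
    next(reader)  # skip the header row
    return [tuple(float(x) for x in row) for row in reader]


if __name__ == "__main__":
    # Hypothetical preset on windowed intensities in [0, 1].
    preset = [(0.0, 0.0, 0.0, 0.0, 0.0),
              (0.5, 0.8, 0.2, 0.2, 0.3),
              (1.0, 1.0, 1.0, 0.9, 0.9)]
    restored = load_preset(save_preset(preset))
    t = window_to_unit(120.0, level=40.0, width=400.0)  # illustrative window
    print(t, lookup_rgba(t, restored))
```

Separating the windowing step from the lookup keeps the preset reusable: the same color and opacity ramp can be shifted to a different intensity range by changing only the level and width.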

Shading and lighting. Once the data was mapped to the transfer function, lighting and shading were applied to the volume using a physically-based rendering (PBR) workflow. PBR has two principal workflows [14]: metal/roughness and specular/glossiness. In PBR, shading is achieved using Bidirectional Reflectance Distribution Functions (BRDFs), which are mathematical models that describe how light reflects off surfaces based on their physical properties. The SoPathTracerMaterial module in MeVisLab provides a range of materials, of which Material_Microfacet is based on the specular/glossiness workflow of PBR, whereas Material_Principled is based on the metal/roughness workflow. We used Material_Principled, a physically-based material whose parameters are based on the Disney BRDF model [3]. We adjusted parameters such as base color, metallic, roughness, and specular properties to achieve realistic material appearances. For lighting, the pipeline included the SoPathTracerAreaLight and SoPathTracerBackgroundLight modules to simulate realistic lighting effects in the 3D scene. The intensity, color, and position of both lights were adjusted to create the desired lighting effect.

[Figure panels: (a), (b), (c)]

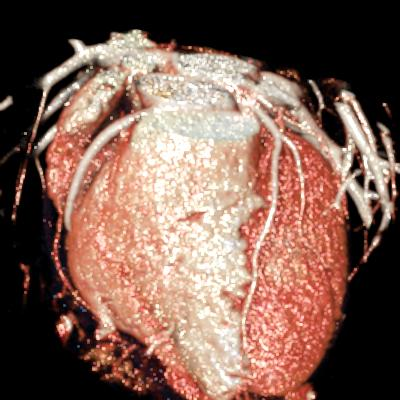

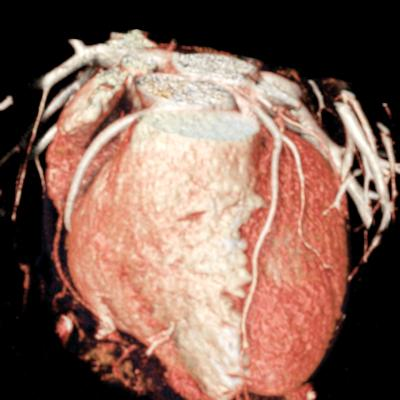

To determine which parameters should be adjusted and which values achieved the most realistic appearance, a sensitivity analysis was performed. Specifically, the roughness parameter was tested with constant metallic and specular values of 0.5, producing three images with different roughness levels, as shown in Figure 2. The impact of lighting on the final image was also examined by varying the number and position of lights. For instance, placing two light sources in the same position tends to create blurry reflections (overexposure), whereas a single light source preserves the details of the image better, as can be seen in Figure 3. Moreover, adjusting the position of the light sources improved the visualization of specific regions, including shadows and depth. We also tested the effects of area and background lighting, with the area light positioned at the top right and the background light source positioned behind the objects being lit, providing overall illumination of the scene. As illustrated in Figure 4, the use of area lighting alone tended to overexpose certain areas and obscure details. In contrast, the use of white background lighting alone resulted in a more diffuse and natural-looking image, while combining area and background lighting produced dynamic effects: the area light highlighted specific features of the volume, and the background light created a sense of depth and dimensionality.
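To give some intuition for why roughness changes the appearance so strongly, the sketch below evaluates the GGX (Trowbridge-Reitz) normal distribution, which the Disney BRDF uses for its specular lobe with alpha = roughness squared. This is a standalone illustration, not the code of Material_Principled, and the three roughness values are hypothetical rather than the ones used for Figure 2.

```python
import math


def ggx_ndf(cos_theta_h, roughness):
    """GGX normal distribution D(h) with the Disney remapping alpha = roughness**2."""
    alpha = roughness * roughness
    a2 = alpha * alpha
    denom = cos_theta_h * cos_theta_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def ndf_normalization(roughness, steps=20000):
    """Midpoint-rule integral of D(h) * cos(theta) over the hemisphere.

    A valid normal distribution integrates to 1 regardless of roughness.
    """
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        c = math.cos(theta)
        total += ggx_ndf(c, roughness) * c * math.sin(theta) * 2.0 * math.pi * d_theta
    return total


if __name__ == "__main__":
    # Hypothetical roughness levels for a low, medium and high setting.
    for roughness in (0.2, 0.5, 0.9):
        peak = ggx_ndf(1.0, roughness)
        print(f"roughness={roughness}: peak={peak:.3f}, "
              f"integral={ndf_normalization(roughness):.4f}")
```

A low roughness concentrates the distribution around the surface normal, giving a small and bright highlight, while a high roughness spreads the same energy over a wide lobe, giving a matte look.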

[Figure panels: (a), (b)]

[Figure panels: (a), (b), (c)]

Image-based lighting (IBL). IBL is a computer graphics technique that employs a high-dynamic-range image (HDRI) as a light source. In PBR, background light refers to the light coming from the environment surrounding the rendered scene. We utilized the SoPathTracerBackgroundLight module to support IBL with cubemaps for rendering the volume (Figure 5), demonstrating how different lighting strategies can influence the overall appearance of the rendered image. For instance, the use of two area light sources in the first image provides a more focused and detailed illumination, while IBL with HDRI creates a more realistic and immersive illumination by capturing lighting and reflection information from the surroundings.

[Figure panels: (a), (b), (c)]

Rendering. Once color, material, and lighting were adjusted, the SoPathTracer module was integrated for rendering. This module uses a Monte Carlo path tracing method to simulate light transport through the anatomy and create photo-realistic images. Path tracing is a common technique in computer graphics [11] that generates paths of scattering events from the camera to the light sources, thereby accounting for multiple scattering. Monte Carlo integration is used to solve the multi-dimensional and non-continuous rendering equation (Equation 1), which considers the properties of the scene and the physical interactions of light with the materials:

$$L_o(x, \omega_o) = L_e(x, \omega_o) + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, \cos\theta_i \,\mathrm{d}\omega_i \tag{1}$$

where $L_e$ and $L_o$ are the emitted (from the surface) and outgoing radiances, respectively, at point $x$ in direction $\omega_o$; $f_r$ is the bidirectional reflectance distribution function (BRDF), which describes how much of the light arriving from direction $\omega_i$ is reflected in direction $\omega_o$; $L_i$ is the incoming radiance at point $x$ from direction $\omega_i$; $\theta_i$ is the angle between the surface normal and $\omega_i$; and $\Omega$ is the hemisphere of incoming directions around the surface normal.
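The Monte Carlo estimate of the reflection integral in the rendering equation can be sketched for a single surface point as follows. This is a deliberately simplified illustration, not the SoPathTracer implementation: it assumes a Lambertian BRDF (albedo divided by pi), a single bounce, uniform hemisphere sampling, and an incoming radiance given by a user-supplied function. All function and parameter names are hypothetical.

```python
import math
import random


def sample_uniform_hemisphere(rng):
    """Return a unit direction uniformly distributed over the hemisphere around +z."""
    z = rng.random()  # for uniform hemisphere sampling, cos(theta) is uniform in [0, 1)
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)


def estimate_outgoing_radiance(albedo, incoming_radiance, emitted=0.0,
                               n_samples=100_000, seed=0):
    """Monte Carlo estimate of emitted + integral of f_r * L_i * cos(theta).

    The surface normal is assumed to be +z, so cos(theta) is the z component
    of the sampled incoming direction.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)  # density of uniform hemisphere sampling
    brdf = albedo / math.pi      # Lambertian BRDF (assumption for this sketch)
    total = 0.0
    for _ in range(n_samples):
        w_i = sample_uniform_hemisphere(rng)
        cos_theta = w_i[2]
        total += brdf * incoming_radiance(w_i) * cos_theta / pdf
    return emitted + total / n_samples


if __name__ == "__main__":
    # Constant white environment: the exact reflected radiance equals the albedo.
    print(estimate_outgoing_radiance(0.8, lambda w: 1.0))
    # Environment that is brighter towards the normal: exact value is 2/3 * albedo.
    print(estimate_outgoing_radiance(0.8, lambda w: w[2]))
```

The statistical error of such an estimate shrinks with the square root of the number of samples, which is why path-traced images start noisy and become cleaner as more iterations accumulate.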

After setting up all the light sources and material properties, the user can interact with the rendering and adjust the camera projection type. Furthermore, post-processing tools such as the SoPostEffectAmbientOcclusion module were applied to improve the shadowing effect and depth perception. Moreover, the SoVolumeCutting and clip plane modules were added to allow image editing, enabling the exposure and display of specific regions of interest, as well as isolating the heart from adjacent structures such as bones and vessels.

open-source solutions.

| Anatomical structure | Definition of structure (Commercial) | Definition of structure (Open) | Depth perception (Commercial) | Depth perception (Open) | Texture appearance (Commercial) | Texture appearance (Open) | Fidelity (Commercial) | Fidelity (Open) | Diagnostic ability (Commercial) | Diagnostic ability (Open) |
|---|---|---|---|---|---|---|---|---|---|---|
| Atria | 4.00 | 3.67 | 4.67 | 4.33 | 3.67 | 3.33 | 3.67 | 3.33 | 4.67 | 4.00 |
| Ventricles | 4.00 | 3.33 | 4.67 | 4.33 | 4.00 | 3.67 | 4.00 | 3.67 | 4.67 | 4.00 |
| Great Arteries | 4.33 | 4.00 | 4.67 | 4.33 | 4.67 | 4.67 | 4.00 | 3.67 | 4.33 | 4.00 |
| Coronary Arteries | 2.33 | 2.33 | 4.67 | 4.33 | 4.67 | 4.33 | 3.67 | 3.33 | 4.33 | 4.00 |
| Average | 3.67 | 3.33 | 4.67 | 4.33 | 4.25 | 4.00 | 3.83 | 3.50 | 4.50 | 4.00 |

2 Evaluation of cinematic rendering

2.1 Assessment protocol

The overall evaluation consisted of subjective assessments of photo-realistic static snapshots. Three independent domain experts conducted the evaluations: two cardiac radiologists with 8 years of experience and one pediatric cardiologist with 15 years of experience. The snapshots were acquired in such a way as to enable independent ratings for various anatomical structures, including the atria, ventricles, great arteries, and coronary arteries. Each snapshot was scored on a Likert scale from 1 to 5 (from very unsatisfied to very satisfied). Specifically, five questions were asked per case, related to the following visual characteristics, adapted from [13, 16]:

- Definition of structure describes the sharpness of the edges, e.g., for performing an anatomical measurement.

- Depth perception describes the ability to perceive spatial relationships in 3D (e.g., anterior/posterior).

- Texture appearance refers to the appearance of the surfaces in terms of their degree of roughness and metalness.

- Fidelity is a characteristic analyzing the sensation of resembling real cardiac tissue on screen.

- Diagnostic ability refers to the effectiveness of the rendered images in supporting clinical diagnosis.

The questionnaire also included questions about using open-source and commercial solutions for CR. Two questions used a Likert scale to assess the reliability of open-source tools and whether the investigators would recommend them to others. The third question asked for an open-ended opinion about the preference for using open-source or commercial tools for advanced rendering.

2.2 Results

Figure 1 presents conventional volume rendering alongside cinematic renderings of all five analyzed cases. The top row shows the volume rendering, while the middle and bottom rows display cinematic renderings created using the commercial solution and the open-source framework, respectively. In the open-source framework, all cases underwent CR with two area light sources, background white light, and distinct material properties, followed by a post-processing step. Furthermore, noise removal was applied during pre-processing for the congenital cases, as they often had higher noise levels than the normal adult hearts. Table 2 complements these visual results with an overview of the evaluation findings. The analysis was performed by averaging the scores of all anatomical structures for each characteristic. All investigators found the renderings from the commercial solution to be superior or equivalent to the open-source reconstructions for all analyzed anatomical structures and visual characteristics. However, both solutions obtained satisfaction scores of a similar scale, with only small differences overall. Case 3 notably highlights the importance of CR: the occluded arterial duct (indicated by the red arrow) is challenging to identify using volume rendering alone, whereas both CR solutions offer comparable visual results. For the Great Arteries, the analysis reveals only minor differences of approximately 0.33 to 0.34 points in most visual characteristics, while texture appearance received identical scores (4.67) for both solutions, showing that both capture texture details effectively. Moreover, case 2, involving a ventricular septal defect, was more visually appealing when rendered with the open-source solution; however, the analysis indicates that the commercial CR outperformed it across all visual characteristics.

Furthermore, in perceiving the depth of anatomical structures, both CR solutions performed similarly, with the same scores for every structure (4.67 for the commercial tool and 4.33 for the open-source pipeline). Additionally, the evaluators found that the edges of anatomical structures were better visualized with the commercial tool, with a mean difference in the definition of structure characteristic of 0.34 with respect to the open-source renderings. The lowest scores for both CR solutions corresponded to the definition of the coronary arteries, due to their complex anatomy.
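The per-characteristic averages and the differences between the two solutions can be recomputed directly from the scores reported in Table 2. The sketch below does this in plain Python; the data values are copied from the table, while the variable and function names are our own. Because the table entries are already rounded to two decimals, the recomputed averages can differ from the reported ones in the last digit.

```python
CHARACTERISTICS = [
    "Definition of structure",
    "Depth perception",
    "Texture appearance",
    "Fidelity",
    "Diagnostic ability",
]

# Scores from Table 2 as (commercial, open-source) pairs, one per characteristic.
TABLE_2 = {
    "Atria": [(4.00, 3.67), (4.67, 4.33), (3.67, 3.33), (3.67, 3.33), (4.67, 4.00)],
    "Ventricles": [(4.00, 3.33), (4.67, 4.33), (4.00, 3.67), (4.00, 3.67), (4.67, 4.00)],
    "Great Arteries": [(4.33, 4.00), (4.67, 4.33), (4.67, 4.67), (4.00, 3.67), (4.33, 4.00)],
    "Coronary Arteries": [(2.33, 2.33), (4.67, 4.33), (4.67, 4.33), (3.67, 3.33), (4.33, 4.00)],
}


def characteristic_means(table):
    """Return {characteristic: (mean commercial, mean open-source, difference)}."""
    n_structures = len(table)
    result = {}
    for idx, name in enumerate(CHARACTERISTICS):
        commercial = sum(row[idx][0] for row in table.values()) / n_structures
        open_source = sum(row[idx][1] for row in table.values()) / n_structures
        result[name] = (commercial, open_source, commercial - open_source)
    return result


if __name__ == "__main__":
    for name, (com, opn, diff) in characteristic_means(TABLE_2).items():
        print(f"{name}: commercial={com:.2f}, open-source={opn:.2f}, difference={diff:.2f}")
```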

3 Discussion and conclusions

Cinematic rendering for complex cardiac data is a promising 3D visualization tool, which is mainly performed on commercial tools in clinical environments. These tools have high-performance capabilities but are relatively costly, thus not being accessible to everyone. In the present study, we have designed a photo-realistic rendering pipeline using the open-source SDK version of MeVisLab as an alternative.

To achieve the best possible cinematic rendering visualizations, we performed a sensitivity analysis to identify the most relevant parameters (e.g., material properties) and their effect on the final results. A limitation of fixing the values of material properties is that the same value will not produce the same texture appearance in every case, since materials behave differently for each anatomy and the result also depends on the image quality [9]. We also analyzed the effects of lighting and found that the number of light sources and their positions are crucial for creating visually compelling and informative images with better depth perception. Moreover, area lights offer focused and detailed lighting, while background lighting with HDRI provides more realistic and immersive illumination by capturing lighting and reflection information from the surrounding environment.

An evaluation protocol was jointly designed with cardiologists to compare the CRs provided by the developed open-source pipeline and by a commercial solution available in a hospital environment, assessing several visual characteristics of different cardiac structures. The results obtained from three independent cardiologists were consistent in recognizing an overall superior performance of the commercial solution, with slightly higher scores on the Likert scale, especially for the definition of structures, texture appearance, and diagnostic ability. However, the open-source solution scored 4.00 or higher on several visual characteristics and was similar to the commercial alternative for fidelity and depth perception. Both types of CRs performed worst for the definition of structures, due to the low scores given to the coronary arteries. The cardiologists also expressed their satisfaction with the reliability of open-source rendering tools and would recommend them to others for advanced rendering purposes. Their overall preference was for open-source tools, mainly due to their cost-effectiveness.

Computationally, both solutions were highly dependent on suitable hardware. The quality of the renderings depended on the number of rays traced, which in turn depended on the available computational power. For example, on a workstation with a GPU RTX 1050, the image was completely rendered in 29 seconds (300 iterations) with the open-source solution. With a GPU NVIDIA RTX A6000, however, it took only 3 seconds to completely render the same image.

The main strength of the open-source CR pipeline developed in this study is that it allows continuous improvement (GitHub Project). It is a "white box" tool in which parameters such as transfer functions, materials, and lighting can be tuned to obtain better visualizations, according to the clinician's requirements for specific patients. Considering the accessibility of the open-source solution and the similarity of its satisfaction scores to those of the commercial tool, the developed pipeline is an exciting alternative, mainly for educational purposes and to support medical diagnosis as a pre-operative planning tool. Future work will be devoted to a more extensive evaluation with more cases, evaluators, and software tools, including web-based solutions that democratize the use of open-source CR in hospitals independently of their resources.

Acknowledgements.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 101016496 (SimCardioTest).

References

- [1] J. Binder, C. Krautz, K. Engel, R. Grützmann, F. A. Fellner, P. H. Burger, and M. Scholz. Leveraging medical imaging for medical education—a cinematic rendering-featured lecture. Annals of Anatomy-Anatomischer Anzeiger, 222:159–165, 2019.

- [2] B. J. Bouma and B. J. Mulder. Changing landscape of congenital heart disease. Circulation research, 120(6):908–922, 2017.

- [3] B. Burley and Walt Disney Animation Studios. Physically-based shading at Disney. In ACM SIGGRAPH, vol. 2012, pp. 1–7, 2012.

- [4] N. Cardobi, R. Nocini, G. Molteni, V. Favero, A. Fior, D. Marchioni, S. Montemezzi, and M. D’Onofrio. Path tracing vs. volume rendering technique in post-surgical assessment of bone flap in oncologic head and neck reconstructive surgery: A preliminary study. Journal of Imaging, 9(2), 2023.

- [5] D. Comaniciu, K. Engel, B. Georgescu, and T. Mansi. Shaping the future through innovations: From medical imaging to precision medicine. Medical image analysis, 33:19–26, 2016.

- [6] E. Dappa, K. Higashigaito, J. Fornaro, S. Leschka, S. Wildermuth, and H. Alkadhi. Cinematic rendering–an alternative to volume rendering for 3D computed tomography imaging. Insights into imaging, 7(6):849–856, 2016.

- [7] L. C. Ebert, W. Schweitzer, D. Gascho, T. D. Ruder, P. M. Flach, M. J. Thali, and G. Ampanozi. Forensic 3D visualization of CT data using cinematic volume rendering: a preliminary study. American Journal of Roentgenology, 208(2):233–240, 2017.

- [8] M. Eid, C. N. De Cecco, J. W. Nance Jr, D. Caruso, M. H. Albrecht, A. J. Spandorfer, D. De Santis, A. Varga-Szemes, and U. J. Schoepf. Cinematic rendering in CT: a novel, lifelike 3D visualization technique. American Journal of Roentgenology, 209(2):370–379, 2017.

- [9] M. Elshafei, J. Binder, J. Baecker, M. Brunner, M. Uder, G. F. Weber, R. Grützmann, and C. Krautz. Comparison of cinematic rendering and computed tomography for speed and comprehension of surgical anatomy. JAMA surgery, 154(8):738–744, 2019.

- [10] F. A. Fellner. Introducing cinematic rendering: a novel technique for post-processing medical imaging data. Journal of Biomedical Science and Engineering, 9(3):170–175, 2016.

- [11] J. T. Kajiya. The rendering equation. In Proceedings of the 13th annual conference on Computer graphics and interactive techniques, pp. 143–150, 1986.

- [12] T. Kroes, F. H. Post, and C. P. Botha. Exposure render: An interactive photo-realistic volume rendering framework. PloS one, 7(7):e38586, 2012.

- [13] B. Preim, A. Baer, D. Cunningham, T. Isenberg, and T. Ropinski. A survey of perceptually motivated 3D visualization of medical image data. In Computer Graphics Forum, vol. 35, pp. 501–525. Wiley Online Library, 2016.

- [14] P. M. Rea, ed. Biomedical Visualisation. Springer International Publishing, 2022. doi: 10.1007/978-3-030-87779-8

- [15] F. Röschl, A. Purbojo, A. Rüffer, R. Cesnjevar, S. Dittrich, and M. Glöckler. Initial experience with cinematic rendering for the visualization of extracardiac anatomy in complex congenital heart defects. Interactive cardiovascular and thoracic surgery, 28(6):916–921, 2019.

- [16] T. Steffen, S. Winklhofer, F. Starz, D. Wiedemeier, U. Ahmadli, and B. Stadlinger. Three-dimensional perception of cinematic rendering versus conventional volume rendering using CT and CBCT data of the facial skeleton. Annals of Anatomy-Anatomischer Anzeiger, 241:151905, 2022.

- [17] L. M. Wollschlaeger, J. Boos, P. Jungbluth, J.-P. Grassmann, C. Schleich, D. Latz, P. Kroepil, G. Antoch, J. Windolf, and B. M. Schaarschmidt. Is CT-based cinematic rendering superior to volume rendering technique in the preoperative evaluation of multifragmentary intraarticular lower extremity fractures? European Journal of Radiology, 126:108911, 2020.

- [18] J. Xu, G. Thevenon, T. Chabat, M. McCormick, F. Li, T. Birdsong, K. Martin, Y. Lee, and S. Aylward. Interactive, in-browser cinematic volume rendering of medical images. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization, pp. 1–8, 2022.