Evaluation of point forecasts for extreme events using consistent scoring functions

Abstract

We present a method for comparing point forecasts in a region of interest, such as the tails or centre of a variable’s range. This method cannot be hedged, in contrast to conditionally selecting events to evaluate and then using a scoring function that would have been consistent (or proper) prior to event selection. Our method also gives decompositions of scoring functions that are consistent for the mean or a particular quantile or expectile. Each member of each decomposition is itself a consistent scoring function that emphasises performance over a selected region of the variable’s range. The score of each member of the decomposition has a natural interpretation rooted in optimal decision theory. It is the weighted average of economic regret over user decision thresholds, where the weight emphasises those decision thresholds in the corresponding region of interest.

Keywords: Consistent scoring function; Decision theory; Forecast ranking; Forecast verification; Point forecast; Proper scoring rule; Rare and extreme events.

1 Introduction

Extreme events occur in many systems, from atmospheric to economic, and present significant challenges to society. Hence the accurate prediction of extreme events is of vital importance. In many such situations, competing forecasts are produced by a variety of forecast systems and it is natural to want to compare the performance of such forecasts with emphasis on the extremes.

In this context, it is critical that the methodology for requesting, evaluating and ranking competing forecasting systems is decision-theoretically coherent. Since the future is not precisely known, ideally forecasts ought to be probabilistic in nature, taking the form of a predictive probability distribution and assessed using a proper scoring rule (Gneiting and Katzfuss, 2014). Nonetheless, in many contexts and for a variety of reasons, point forecasts (i.e. single-valued forecasts taking values from the prediction space) are issued and used. For decision-theoretically coherent evaluation of point forecasts, either the scoring function (such as the squared error or absolute error scoring function) that will be used to assess predictive performance should be advertised in advance, or a specific functional (such as the mean or median) of the forecaster’s predictive distribution should be requested and evaluation then conducted using a scoring function that is consistent for that functional (Gneiting, 2011a ). The use of proper scoring rules and consistent scoring functions encourage forecasters to quote honest, carefully considered forecasts.

To compare competing forecasts for the extremes, one may be tempted to use what would otherwise be a proper scoring rule or consistent scoring function, but restrict evaluation to a subset of events for which extremes were either observed, or forecast or perhaps both. However, such methodologies promote hedging strategies (as illustrated in Section 2) and can result in misguided inferences. This gives rise to the forecaster’s dilemma, whereby “if forecast evaluation proceeds conditionally on a catastrophic event having been observed [then] always predicting a calamity becomes a worthwhile strategy” (Lerch et al., 2017).

Nonetheless, for predictive distributions Gneiting and Ranjan, (2011) showed that a suitable alternative exists in the threshold-weighted continuous ranked probability score, which is a weighted version of the commonly used continuous ranked probability score (CRPS). The weight is selected to emphasise the region of interest (such as the tails or some other region of a variable’s range) and induces a proper scoring rule. This technique extends in a natural way to point forecasts targeting the median functional, since the CRPS is a generalisation of the absolute error scoring function. The UK Met Office has recently applied this method to report the performance of temperature forecasts, with emphasis on climatological extremes (Sharpe et al., 2020).

Despite this progress, Lerch et al., (2017) offer this summary of the general situation for evaluating point forecasts at the extremes: “there is no obvious way to abate the forecaster’s dilemma by adapting existing forecast evaluation methods appropriately, such that particular emphasis can be put on extreme outcomes.” In this paper we remedy this situation. We construct consistent scoring functions that can be used evaluate point forecasts that emphasise performance in the region of interest for important classes of functionals, including expectations and quantiles. Moreover, the relevant specific case of these constructions agrees with the extension of the threshold-weighted CRPS to the median functional.

The main idea of this paper can be illustrated using the squared error scoring function , which is consistent for point forecasts of the expectation functional. Suppose that the outcome space is partitioned as , where and for some in . Corollary 3.2 gives the decomposition , where each scoring function is consistent for expectations whilst also emphasising predictive performance on the interval . In particular, if then . Given a point forecast and corresponding observation , the explicit formula for is

| (1.1) |

Here denotes the indicator function, so that equals if and otherwise.

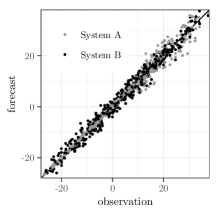

The performance of competing point forecasts for expectations can then be compared by computing the mean scores , and for each forecast system, over the same set events. To illustrate, consider two forecast systems A and B, whose error characteristics are depicted by the scatter plot of Figure 1. System B is homoscedastic (i.e., has even scatter about the diagonal line throughout the variable’s range) whereas System A is heteroscedastic (with relatively small errors over lower parts of the variable’s range and relatively large over higher parts). For this set of events, the mean squared error for each system is very similar and there is no statistical significance in their difference. However, with , the mean score of System A is significantly higher than that of B (i.e., B is superior when emphasis is placed on the region ) whilst the mean score of System A is significantly lower than that of B (i.e., A is superior when emphasis is placed on the region ). Full details for this case study are given in Section 3.5.

This example is illustrative. Decompositions of the outcome space can consist of more than two intervals, and the boundary between intervals can also be ‘blurred’ by selecting suitable weight functions. Each decomposition of the outcome space then induces a decomposition

| (1.2) |

of a given consistent scoring function , whose summands are also consistent for the functional of concern and with each emphasising forecast performance in the relevant region of the outcome space. Such decompositions are presented for the consistent scoring functions of quantiles, expectations, expectiles, and Huber means. The approach is unified, in that the same decomposition of the outcome space induces decompositions of the CRPS and of a variety of scoring functions for point forecasts. Details are given in Section 3 with illustrative examples.

Mathematically, the main result of this paper is a corollary of the mixture representation theorems for the consistent scoring functions of quantiles, expectiles (Ehm et al., 2016) and Huber functionals (Taggart, 2021). This furnishes each member of the decomposition (1.2) with an interpretation rooted in optimal decision theory. Certain classes of optimal decision rules elicit a user action if and only if a point forecast exceeds a particular decision threshold . The score is a measure of economic regret, relative to decisions based on a perfect forecast, of using the point forecast when the observation realises, averaged over all decision thresholds belonging to corresponding interval of the partition. Precise details are given in Section 4 and illustrated with the aid of Murphy diagrams.

2 Hedging strategies for naïve assessments of forecasts of extreme events

We illustrate how a seemingly natural approach for comparing predictive performance at the extremes creates opportunities for hedging strategies.

A meteorological agency maintains two forecast systems A and B, each of which produces predictive distributions for hourly rainfall at a particular location. System A is fully automated and hence cheaper to support than B. System B has knowledge of the System A forecast prior to issuing its own forecast. The agency requests the mean value of their predictive distributions with a lead time of 1 day. These point forecasts are assessed using the squared error scoring function, which is consistent for forecasts of the mean (i.e., the forecast strategy that minimises one’s expected score is to issue the mean value of one’s predictive distribution). For a two year period, the bulk of observed and forecast values fall in the interval and there is no statistically significant difference between the performance of the two systems when scored using the squared error function.

However, the maintainers of System B claim that B performs better for the extremes and that bulk verification statistics fail to highlight this. The agency decides to use forecasts from A for the majority of cases, but will test whether B is significantly better than A at forecasting the heavy rainfall events. The agency considers four options for selecting hourly events to assess, after which the squared error scoring function will be used to compare predictive performance on those events. If System B does not perform significantly better than A over the next 12 months then it will be decommissioned.

For a given forecast case, let and denote the predictive distributions produced by each system, and and the respective point forecasts issued. Suppose that the random variable has a distribution specified by . For each option, the maintainers of System B have a strategy to optimise their expected score; that is, to choose such that is minimised.

-

Option 1:

Only assess events where the observation is at least 20 mm.

Strategy: If then , which denotes the predictive distribution of conditioned on the event , exists. In this case, forecast and otherwise forecast . -

Option 2:

Only assess events where either or is at least 20 mm.

Strategy: If then . Otherwise if then else . This will ensure that the event is assessed whenever System B expects that a forecast of 20 will receive a more favourable score than than a forecast of . -

Option 3:

Only assess events where either , or the observation is at least 20 mm.

Strategy: If then . Else if then . Otherwise, the only other way the event will be assessed is if the observation is at least 20mm, so forecast . -

Option 4:

Only assess events where .

Strategy: In this case there is nothing that System B can to do influence which events will be assessed. Therefore .

Of these, only Option 4 does not expose the agency to a hedging strategy from System B. However, under Option 4 the developers of A may employ the strategy of forecasting , so that no further comparison of systems is made.

There is an Option 5: use a scoring function that is consistent for the mean functional and that emphasises performance at the extremes. We turn attention to this now.

3 Decompositions of scoring functions

We work in a setting where point forecasts and observations belong to some interval of the real line (possibly with ). In Section 3.1 we introduce partitions of unity, which are used to ‘subdivide’ the outcome space into subregions of interest. We then illustrate in Section 3.2 how such subdivisions induce decompositions of the CRPS, where each member of the decomposition emphasises performance on the corresponding subregion of whilst retaining propriety. To obtain analogous decompositions for consistent scoring functions, we recall in Section 3.3 that the consistent scoring functions for quantiles and expectations (among others) have general mathematical forms. The aim is to find which specific instances of the general form emphasise performance on the subregions specified by the partition of unity. This is answered in Section 3.4. Section 3.5 contains examples of such decompositions, and opens with a worked example showing how to find the formula for the squared error decomposition of Equation (1.1).

3.1 Partitions of unity

Recall that the support of a function , denoted , is defined by

In this paper, we say that is a partition of unity on if is a finite set of measurable functions such that and

whenever . (For readers unfamiliar with the concept of measurability, any piecewise continuous function is measurable.) We will call each a weight function. We note that these differ from typical definitions in that we do not require to be continuous or have bounded support.

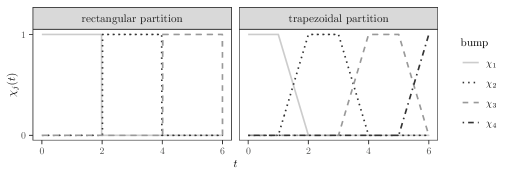

Figure 2 illustrates two different partitions of unity for the interval . The rectangular partition of unity is consists of rectangular weight functions, each of the form

| (3.1) |

for suitable constants satisfying , both depending on . The trapezoidal partition of unity consists of trapezoidal weight functions, each typically having the form

| (3.2) |

for suitable constants satisfying , all depending on , with appropriate modification for the end cases.

More generally, if is a set of piecewise nonnegative measurable functions with the property that

whenever , then a partition of unity can be constructed by defining

3.2 Decomposition of the CRPS

Each partition of unity induces a corresponding decomposition of the CRPS. Given a predictive distribution , expressed as a cumulative density function, and a corresponding observation , the CRPS is defined by

and for each the threshold-weighted CRPS by

Both are proper scoring rules (Gneiting and Ranjan, 2011). Thus the has a decomposition

where each component is proper and emphasises performance in the region determined by the weight . The Sydney rainfall forecasts example of Section 3.5 illustrates the application of such a decomposition. We now establish analogous decompositions for a wide range of scoring functions.

3.3 Consistent scoring functions

For decision-theoretically coherent point forecasting, forecasters need a directive in the form of a statistical functional (Gneiting, 2011a ) or a scoring function which should be minimised (Patton, 2020). A statistical functional is a (potentially set-valued) mapping from a class of probability distributions to the real line . Examples include the mean (or expectation) functional, quantiles and expectiles (Newey and Powell, 1987), the latter recently attracting interest in risk management (Bellini and Di Bernardino, 2017). A consistent scoring function is a special case of a proper scoring rule in the context of point forecasts, and rewards forecasters who make careful honest forecasts.

Definition 3.1.

(Gneiting, 2011a ) A scoring function is consistent for the functional relative to a class of probability distributions if

| (3.3) |

for all probability distributions , all and all . The function is strictly consistent if is consistent and if equality in Equation (3.3) implies that .

The consistent scoring functions for many commonly used statistical functionals have general forms.

Given and , define the ‘quantile scoring function’ by

| (3.4) |

The name QSF is justified because, subject to slight regularity conditions, a scoring function is consistent for the -quantile functional if and only if where is nondecreasing (Gneiting, 2011b ; Gneiting, 2011a ; Thomson, 1979). The absolute error scoring function for the median functional arises from Equation (3.4) when and . The commonly used -quantile scoring function

| (3.5) |

arises when .

Given a convex function with subderivative and , define the function by

| (3.6) |

(The subderivative is a generalisation of the derivative for convex functions and coincides with the derivative when the convex function is differentiable.) Subject to weak regularity conditions, a scoring function is consistent for the -expectile functional if and only if where is convex (Gneiting, 2011a ; Savage, 1971). The expectation (or the mean) functional corresponds to the special case , with the squared error scoring function for expectations arising from Equation (3.6) when and . A special case of the squared error scoring function is the Brier score, where and observations typically take values in .

Given with subderivative and , define the function by

| (3.7) |

where is the ‘capping’ function defined by . Subject to slight regularity conditions, a scoring function is consistent for the Huber mean functional (with tuning parameter ) if and only if where is convex (Taggart, 2021). The Huber loss scoring function

arises from when , and is used by the Bureau of Meteorology to score forecasts of various parameters. The Huber mean is an intermediary between the median and the mean functionals, and is a robust measure of the centre of a distribution (Huber, 1964; Taggart, 2021).

3.4 Decomposition of consistent scoring functions

We now state the main result of this paper, namely that the consistent scoring functions for the quantile, expectile and Huber mean functionals can be written as a sum of consistent scoring functions with respect to the chosen partition of unity. It is presented as a corollary since it follows from the mixture representation theorems of Ehm et al., (2016) and Taggart, (2021).

Corollary 3.2.

Suppose that is a partition of unity on . For each in , fix any points and in .

-

(a)

If is a nondecreasing differentiable function and then

where is nondecreasing and defined by

(3.8) Moreover, if is an interval and then on .

-

(b)

If is a convex twice-differentiable function, and then

(3.9) and

where is convex and defined by

(3.10) Moreover, if is an interval and then on .

See Appendix A for the proof. Appendix B states closed-form expressions for and for commonly used scoring functions when the partition of unity is rectangular or trapezoidal. We note that the natural analogue of Corollary 3.2 for the consistent scoring functions of Huber functionals (which are generalised Huber means) also holds.

One can show that , and are independent of the choice of points and in . In practice, the choice of and may be determined for computational convenience, such as selecting (if it exists) the minimum of .

Finally, we discuss strict consistency. Suppose that , and are strictly consistent for the quantile, expectile or Huber mean functionals respectively for some class of probability distributions. This occurs when is strictly positive, or when is strictly convex, perhaps subject to mild regularity conditions on (Gneiting, 2011a ; Taggart, 2021). If is strictly positive on then , and are also strictly consistent for their respective functionals. An example of such a partition of unity on is given by

and . Here, induces scoring functions that emphasise performance on but do not completely ignore performance on any subinterval of .

3.5 Examples

We begin by demonstrating how to find explicit formulae for a particular decomposition.

Example 3.3 (Decomposition of the squared error scoring function).

Let denote the scoring function . Note that , where via Equation (3.6), so that Corollary 3.2 applies. We use a simple rectangular partition of unity, where

and . Corollary 3.2 gives the corresponding decomposition , where . To find the explicit formula for , we compute the function using Equation (3.10). Integrating twice with the choice gives

Thus , which yields the explicit formula given by Equation (1.1) via Equation (3.6). The explicit formula for follows easily from the identity .

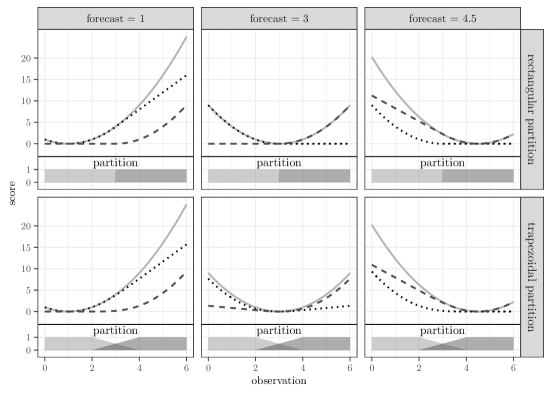

Figure 3 illustrates the decomposition with respect to the rectangular partition of unity with , and also with respect to a trapezoidal partition. For each forecast and observation , the solid line represents the score , the dotted line the score corresponding to the weight function with support on the left of the interval, and the dashed line the score corresponding to the weight function with support on the right of the interval. Note that when the forecast and observation both lie outside the support of then .

Example 3.4 (Decomposition of a quantile scoring function).

Let denote the scoring function

so that where and . The decomposition is illustrated in Figure 4 with respect to two different partitions of unity. The solid line represents , the dotted line and the dashed line .

Example 3.5 (Synthetic data).

Suppose that the climatological distribution of an unknown quantity is normal with . Two forecast systems A and B issue point forecasts for targeting the mean functional. Their respective forecast errors are identically independently normally distributed with error characteristics (where is the observation) and . Hence the standard deviation of is lower than the standard deviation of when is less than while the reverse is true when is greater than . This is evident in the varying degree of scatter about the diagonal line in forecast–observation plot of Figure 1.

We sample 10000 independent observations and corresponding forecasts, and compare both forecast systems using the squared error scoring function along with the two components of its decomposition . The decomposition is induced from a rectangular partition of unity on with and . The mean scores for each system are shown in Table 1, along with a 95% confidence interval of the difference in mean scores.

An analysis of this sample concludes that neither System A nor System B is significantly better than its competitor as measured by , since belongs to the confidence interval of the difference in their mean scores. However, one can infer with high confidence that A performs better when scored by , since is well outside the corresponding confidence interval. Similarly, one may conclude with high confidence that B performs better when scored by .

Based on the results in the table, one would use the forecast from A if both forecasts are less than 10, and the forecast from B if both forecasts are greater than 10. If the forecasts lie on opposite sides of 10, one option is to take the average of the two forecasts. We revisit this example again in Section 4 from the perspective of users and optimal decision rules.

| Mean score | System A | System B | 95% confidence interval of difference |

|---|---|---|---|

| 4.14 | 4.02 | (-0.12, 0.36) | |

| 0.61 | 2.65 | (-2.16, -1.92) | |

| 3.53 | 1.36 | (1.97, 2.36) |

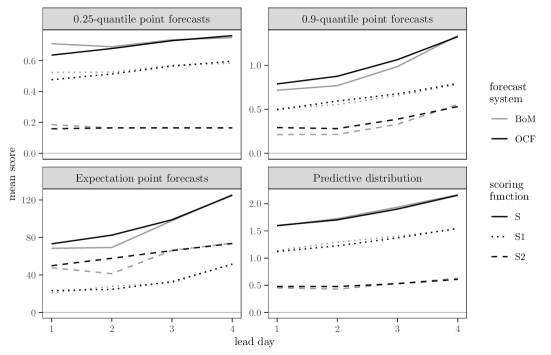

Example 3.6 (Sydney rainfall forecasts).

Two Bureau of Meteorology forecast systems BoM and OCF issue predictive distributions for daily rainfall at Sydney Observatory Hill. Climatologically, daily rainfall exceeds 35.8 mm on average 12 times a year, and 42.2 mm on average 6 times a year. We partition the outcome space using a trapezoidal partition of unity, where if , if and if . Naturally, .

Over the entire outcome space, the quality of each system’s forecasts is assessed using the CRPS (for predictive distributions), the squared error scoring function (for expectation point forecasts), and the standard quantile scoring function of Equation (3.5) (for quantile point forecasts). Moreover, each of these scores are decomposed as using the common partition . Their mean scores by forecast lead day, for the period July 2018 to June 2020, are shown in Figure 5. This example illustrates that the same partition of unity can be used to hone in on particular regions of interest across a variety of forecast types, using an assessment method that cannot be hedged. When performance is assessed with emphasis on heavier rainfall via , BoM is better than OCF at lead days 1 and 2 for expectile and 0.9-quantile forecasts, marginally better with its predictive distributions but worse than OCF at lead day 1 for 0.25-quantile forecasts.

4 Decision theoretic interpretation of scoring function decompositions

Mixture representations and elementary scoring functions facilitate a decision-theoretic interpretation of the scoring function decompositions of Corollary 3.2.

To avoid unimportant technical details, in this section assume that , is a nondecreasing differentiable function on and is convex twice-differentiable function on . Suppose that is any one of the scoring functions , or . Thus is consistent for some functional (either a specific quantile, expectile or Huber mean). Then admits a mixture representation

| (4.1) |

where each is an elementary scoring function for and the mixing measure is nonnegative (Ehm et al., 2016; Taggart, 2021). That is, is a weighted average of elementary scoring functions. Explicit formulae for these elementary scoring functions and mixing measures are given in Tables 2 and 3.

The elementary scoring functions and their corresponding functionals arise naturally in the context of optimal decision rules. In a certain class of such rules, a predefined action is taken if and only if the point forecast for some unknown quantity exceeds a certain decision threshold . The usefulness of the forecast for the problem at hand can be assessed via a loss function , where encodes the economic regret, relative to a perfect forecast, of applying the decision rule with forecast when the observation is realised. Typically whenever lies between and (e.g., the forecast exceeded the decision threshold but the realisation did not, resulting in regret), and otherwise (i.e., a perfect forecast would have resulted in the same decision, resulting in no regret).

| Functional | Elementary score |

|---|---|

| -quantile | |

| -expectile | |

| -Huber mean |

For the decision rule to be optimal over many forecast cases, the point forecast should be one that minimises the expected score , where for the predictive distribution . Binary betting decisions (Ehm et al., 2016) and simple cost–loss decision models (where the user must weigh up the cost of taking protective action as insurance against an event which may or may not occur (Richardson, 2000)) give rise to some -quantile of being the optimal point forecast. The -expectile functional gives the optimal point forecast for investment problems with cost basis , revenue and simple taxation structures (Ehm et al., 2016). The Huber mean generates optimal point forecasts when profits and losses are capped in such investment problems (Taggart, 2021). Though the language here is financial, such investment decisions can be made using weather forecasts (Taggart, 2021, Example 5.4). In each case, the particular score is, up to a multiplicative constant, the elementary score in Equation (4.1) for the appropriate functional .

Thus the representation (4.1) expresses as a weighted average of elementary scores, each of which encodes the economic regret associated with a decision made using the forecast with decision threshold . The weight on each is determined by the mixing measure , as detailed in Table 3. Relative to , the scoring function weighs the economic regret for decision threshold by a factor of , thus privileging certain decision thresholds over others.

| Scoring function | |

|---|---|

| , | |

| , |

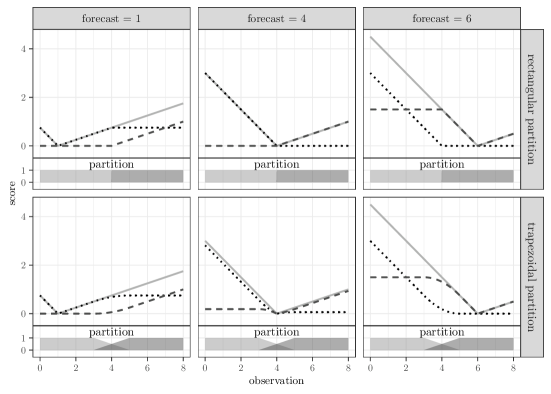

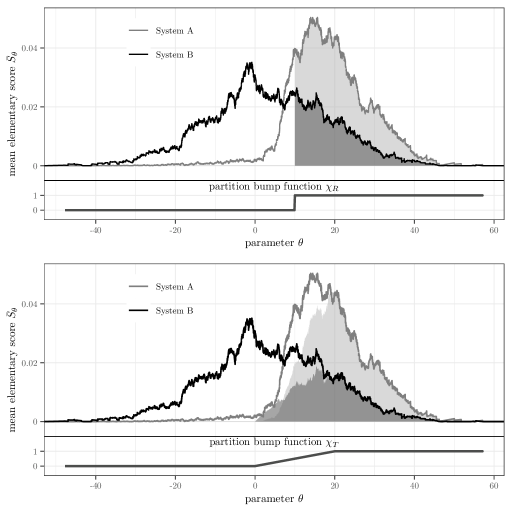

We illustrate these ideas using the point forecasts generated by Systems A and B from the Synthetic data example (Section 3.5). These point forecasts target the mean functional, and so induce optimal decision rules for investment problems with cost basis . The decision rule is to invest if and only if the forecast future value of the investment exceeds the fixed up-front cost of the investment. As previously noted, neither forecast system performs significantly better than the other when scored by squared error. However, for certain decision thresholds , the mean elementary score (which measures the average economic regret) of one system is clearly superior to the other. This is illustrated by the Murphy diagram of Figure 6, which is a plot of against (Ehm et al., 2016). Since a lower score is better, users with a decision threshold exceeding about should use forecasts from B, while those with a decision threshold less than should use forecasts from A.

Let denote the same decomposition of the squared error scoring function used for the Synthetic data example (Section 3.5), induced from a rectangular partition of unity. To aid simplicity of interpretation, assume for the user group under consideration that there is one constant such that the each elementary score equals multiplied by the economic regret. We observe the following.

For , and so by Table 3. So by Equation (4.1), the mean squared error for each forecast system is proportional to the total area under the respective Murphy graph. Hence mean squared error can be interpreted as being proportional to the mean economic regret averaged across all decision thresholds.

For , , where is the weight function on the top panel of Figure 6. From Equation (4.1), the mean score for each forecast system is proportional to the area under the respective Murphy curve for decision thresholds in . This is illustrated on the top panel of Figure 6 with shaded regions. System B has the smaller area and mean score and is hence superior. So can be interpreted as being proportional to the mean economic regret averaged across all decision thresholds in . Similarly, can be interpreted as being proportional to the mean economic regret averaged across all decision thresholds less than .

The bottom panel of Figure 6 illustrates the effect of using a trapezoidal weight function . The corresponding scoring function applies zero weight to economic regret experienced by users with decision thresholds less than 0, and increasing weight to thresholds greater than zero until a full weight of 1 is applied for decision thresholds greater than or equal to 20. The areas of the shaded regions are proportional to the mean score for Systems A and B.

5 Conclusion

We have shown that the scoring functions that are consistent for quantiles, expectations, expectiles or Huber means can be decomposed as a sum of consistent scoring functions, each of which emphasises predictive performance in a region of the variable’s range according to the selected partition of unity. In particular, this allows the comparison of predictive performance for the extreme region of a variable’s range in a way that cannot be hedged and is not susceptible to misguided inferences.

The decomposition is consonant with the analogous decomposition of the CRPS using the threshold-weighting method, and hence by extension with known decompositions for the absolute error scoring function.

Such decompositions are a consequence of the mixture representation for each of the aforementioned classes of consistent scoring functions and their associated functionals. This has two implications. First, each score in the decomposition can be interpreted as the weighted average of economic regret associated with optimal decision rules, where the average is taken over all user decision thresholds and the weight applied is given by the corresponding weight function in the partition of unity. Second, such decompositions will also exist for the consistent scoring functions of other functionals that have analogous mixture representations.

Acknowledgements

The author thanks Jonas Brehmer, Deryn Griffiths, Michael Foley and Tom Pagano for their constructive comments, which helped improve the quality of this paper.

Appendix A Proof of Corollary 1

As mentioned in Section 3.4, Corollary 3.2 essentially follows from the mixture representation of theorems of Ehm et al., (2016) and Taggart, (2021) by decomposing the mixing measure. However, we provide a direct proof to avoid some technical hypotheses of those theorems.

Proof.

We prove for the case . The proof for the cases and are similar.

To begin, note that if whenever for some real constants and then . So without loss of generality we may assume that and in the definition of whenever for some constants and in .

Suppose that is a convex twice-differentiable function and . Given the partition of unity , define by Equation (3.10). Then

which establishes Equation (3.9). The convexity of follows from the fact that , since is convex. Finally, suppose that and . Now

and since lies between and , it follows that also lies in . But on and hence the inner integral vanishes. This shows that on . ∎

Appendix B Formulae for commonly used scoring functions

Table 4 presents closed-form expressions for of Equation (3.8) in the case when and for of Equation (3.10) in the case when , each induced by rectangular weight functions of the form (3.1) or trapezoidal weight functions of the form (3.2). These expressions facilitate the computation of decompositions for the absolute error, standard quantile, squared error and Huber loss scoring functions. Note also that for each weight function we have .

| weight function | expression |

|---|---|

References

- Bellini and Di Bernardino, (2017) Bellini, F. and Di Bernardino, E. (2017). Risk management with expectiles. The European Journal of Finance, 23(6):487–506.

- Ehm et al., (2016) Ehm, W., Gneiting, T., Jordan, A., and Krüger, F. (2016). Of quantiles and expectiles: consistent scoring functions, choquet representations and forecast rankings. J. R. Statist. Soc. B, 78:505–562.

- (3) Gneiting, T. (2011a). Making and evaluating point forecasts. Journal of the American Statistical Association, 106(494):746–762.

- (4) Gneiting, T. (2011b). Quantiles as optimal point forecasts. International Journal of forecasting, 27(2):197–207.

- Gneiting and Katzfuss, (2014) Gneiting, T. and Katzfuss, M. (2014). Probabilistic forecasting. Annual Review of Statistics and Its Application, 1:125–151.

- Gneiting and Ranjan, (2011) Gneiting, T. and Ranjan, R. (2011). Comparing density forecasts using threshold-and quantile-weighted scoring rules. Journal of Business & Economic Statistics, 29(3):411–422.

- Huber, (1964) Huber, P. J. (1964). Robust estimation of a location parameter. Annals of Mathematical Statistics, 35:73–101.

- Lerch et al., (2017) Lerch, S., Thorarinsdottir, T. L., Ravazzolo, F., and Gneiting, T. (2017). Forecaster’s dilemma: Extreme events and forecast evaluation. Statistical Science, 32(1):106–127.

- Newey and Powell, (1987) Newey, W. K. and Powell, J. L. (1987). Asymmetric least squares estimation and testing. Econometrica, 55:819–847.

- Patton, (2020) Patton, A. J. (2020). Comparing possibly misspecified forecasts. Journal of Business & Economic Statistics, 38(4):796–809.

- Richardson, (2000) Richardson, D. S. (2000). Skill and relative economic value of the ECMWF ensemble prediction system. Quarterly Journal of the Royal Meteorological Society, 126(563):649–667.

- Savage, (1971) Savage, L. J. (1971). Elicitation of personal probabilities and expectations. Journal of the American Statistical Association, 66(336):783–801.

- Sharpe et al., (2020) Sharpe, M., Bysouth, C., and Gill, P. (2020). New operational measure to assess extreme events using site-specific climatology. Presented at 2020 International Verification Methods Workshop Online. Retreived from https://jwgfvr.univie.ac.at/presentations-and-notes/ on 5 January 2021.

- Taggart, (2021) Taggart, R. (2021). Point forecasting and forecast evaluation with generalized Huber loss. Electronic Journal of Statistics, to appear.

- Thomson, (1979) Thomson, W. (1979). Eliciting production possibilities from a well-informed manager. Journal of Economic Theory, 20:360–380.