∎

Excitable Networks for Finite State Computation with Continuous Time Recurrent Neural Networks

Abstract

Continuous time recurrent neural networks (CTRNN) are systems of coupled ordinary differential equations that are simple enough to be insightful for describing learning and computation, from both biological and machine learning viewpoints. We describe a direct constructive method of realising finite state input-dependent computations on an arbitrary directed graph. The constructed system has an excitable network attractor whose dynamics we illustrate with a number of examples. The resulting CTRNN has intermittent dynamics: trajectories spend long periods of time close to steady-state, with rapid transitions between states. Depending on parameters, transitions between states can either be excitable (inputs or noise needs to exceed a threshold to induce the transition), or spontaneous (transitions occur without input or noise). In the excitable case, we show the threshold for excitability can be made arbitrarily sensitive.

Keywords:

Continuous Time Recurrent Neural Network Nonlinear Dynamics Excitable Network Attractor1 Introduction

It is natural to try to understand computational properties of neural systems through the paradigm of network dynamical systems, where a number of dynamically simple units (i.e. with attracting equilibria or periodic orbits) interact to give computation as an emergent property of the system. This is the as much the case for biological models of information processing, pattern generation and decision making as it is for artificial neural networks inspired by these. A variety of specific models have been developed to describe the dynamics and training of recurrent networks comprised of coupled neurons in biological and artificial settings. The particular challenge that we address here is the construction of arbitrarily complex, but specified, dynamical structures that enable discrete (finite-state) computation in an input-driven system that is nonetheless continuous in both state and time. Clearly, invariant objects of the autonomous system (such as equilibria, periodic orbits and chaotic attractors) only form part of the picture and an input-dependent (non-autonomous) approach such as manjunath2012theory is needed.

To help understand the response of systems to inputs the authors introduced in Ashwin2016a a notion of a “network attractor”, namely an invariant object in phase space that may contain several local invariant sets, but also systems of interconnections between them. This generalises the notion of “heteroclinic network” and “winnerless competition/stable heteroclinic channels” afraimovich2004origin which have been used to describe a range of sequence generation, computational and cognitive effects in neural systems: see for example rabinovich2001dynamical ; rabinovich2006generation ; afraimovich2004heteroclinic ; rabinovich2020sequential ; hutt2017sequences . These models connect computational states (represented as saddles in the dynamics). In the presence of inputs or noise, the switching between saddles is a useful model for spontaneous computational processes but there are two problems. One of these is that the states are dynamically unstable and so there are spontaneous transitions that are not noise driven. The other is that heteroclinic chains are destroyed by arbitrarily small perturbations, unless there are special structures in phase space (symmetries or invariant subspaces).

Network attractors joining stable equilibria Ashwin2016a overcome both of these issues. In this paper we show that arbitrarily complex network attractors exist in idealised class of model is the continuous time recurrent neural network (CTRNN) beer1995dynamics . A CTRNN is a set of differential equations each with one scalar variable that represents the level of activation of a neuron, and feedback via a saturating nonlinearity or “activation function”. These models have been extensively investigated in the past decades as simple neurally-inspired systems that can nonetheless (without input) have complex dynamics by virtue of the nonlinearities present beer1995dynamics . They can also be trained to perform arbitrarily complex tasks depending on time-varying input tuci2002evolutionary ; yamauchi1994sequential . They are frequently used in investigations of evolutionary robotics blynel2003exploring and (in various equivalent formulations chow2019before ) as models for neural behaviour in biological or cognitive systems. For example, the classical work of Hopfield and Tank hopfield1985neural considers such systems with symmetric weights to solve optimization problems, while more recently, bhowmik2016reservoir discusses CTRNN models for episodic memory, and several other biological and cognitive applications are discussed in nikiforou2019dynamics .

CTRNNs are often referred to as “universal dynamical approximators” funahashi1993approximation , meaning that the trajectory of a CTRNN can approximate, to arbitrary precision, any other prescribed (smooth) trajectory in . However, this does not mean that the dynamics of CTRNNs are simple to understand, or that it is easy to form the above approximation. It also raises the question of how a “trained” CTRNN performs a complex task. Gradient descent or more general evolutionary training algorithms train the network by navigating through a high dimensional landscape of possible feedback weightings and moving these towards a setting that is sufficiently optimal for the task. It may be possible to give a clear description of the resulting nonlinear dynamics of the autonomous (constant input) CTRNN, but we want to understand not only this but also how inputs affect the state of the system.

The main theoretical result in this paper focusses on “excitable network attractors”: these consist of a finite set of local attracting equilibria and excitable connections between them (see Appendix A). It was demonstrated in Ashwin2018sensitive that an excitable network attractor can be used to embed an arbitrary Turing machine within a class of purpose-designed coupled dynamical system with two different cell types. Rather than relying on an optimization approach to design the system, that paper gave a constructive method for designing a realisation of any desired network attractor. However, this construction required specialist dynamical cells with quite complex nonlinear couplings between them, and a comparatively large number of cells. It was recently shown in ceni2020interpreting that trained RNNs in the form of echo-state networks can realise excitable networks for certain tasks and that structural errors in the trained network can explain errors in imperfectly trained systems.

The current paper demonstrates that CTRNN dynamics is sufficiently rich to realise excitable networks with arbitrary graph topology, simply by specifying appropriate connection weights. The construction algorithm in the proof of Theorem 2.1, assigns one of only four values to each of the connection weights to realise an arbitrary graph on vertices as an excitable network on states (subject to some minor constraints on its connectivity), using a CTRNN with cells. The CTRNN we consider in this paper (see for example beer1995dynamics , which corresponds to a continuous time Hopfield model hopfield1985neural in the symmetric coupling case ) are ordinary differential equations

| (1) |

where is the internal state of the cells of the system, is a matrix of connection weights, is a (sigmoid) activation function that introduces a saturating nonlinearity into the system and is an input. We say system (1) is input-free if for all and .

We consider two cases for , a smooth function

| (2) |

and a piecewise affine function

| (3) |

In both cases, is monotonic increasing with

and a maximum derivative at , equal to In both cases, and are parameters, and we are interested in the case . In general, the function need not be the same in every component of (1), but here we make a simplifying assumption that it is.

Note that both activation functions (2) and (3) have piecewise constant limits in the singular limit . Such limiting systems are of Fillipov type and have been explored in various biological contexts, especially for gene regulatory dynamics. These can also have rich dynamics as discussed in the literature (for example gouze2003aclass ; harris2015bifurcations ) but we do not consider this limit here.

The main contribution of Section 2 is to give, in Theorem 2.1, a construction of a connection weight matrix such that the dynamics of the input-free system (1) contains (or realises: definition given below) an excitable network attractor, as defined in Ashwin2016a . We prove this (with details in Appendix B) for the case of the piecewise affine function . In section 3 we present evidence that this is also true for the smooth case for an open set of parameters. Qualitatively, this means the system will contain a number of stable equilibrium states, and small inputs (either deterministic, or noisy) will push the trajectory from one stable equilibrium into the basin of attraction of another. In this way, transitions can be made around the network, and the transition time between states tends to be much smaller than the residence times of the trajectory in neighbourhoods of the states. In particular, we can choose so that the network attractor has (almost) any desired topology. In Appendix A we recall formal definitions of network attractors from Ashwin2016a ; Ashwin2018sensitive .

In section 3 we consider several examples of simple graphs, and demonstrate that the desired networks do indeed exist in the systems as designed. We also perform numerical bifurcation analysis to demonstrate the connection between periodic orbits in the input-free deterministic system (1) and excitable networks in the same system with additive noise, that is, the system of stochastic differential equations (SDEs):

| (4) |

where are independent standard Wiener processes. Here, the noise plays the role of inputs that propel the trajectory around the network, although of course this occurs in a random manner. In sections 3.3 and 3.4 we consider graphs that have multiple edges leading out from a single vertex, and show that additional equilibria may appear in the network attractor where two or more cells are active simultaneously. We further show that the existence of these additional equilibria can be suppressed by choosing one of the parameters used in the construction of the weight matrix to be sufficiently large.

Section 4 concludes by relating our results to other notions of sequential computation. We also conjecture some extensions of the results shown in this paper.

2 Construction of a CTRNN with a network attractor

Let be an arbitrary directed graph between vertices, and let be the adjacency matrix of . That is, if there is a directed edge from vertex to vertex , and otherwise.

Let be an invariant set for a system of ordinary differential equations. We say is an excitable network that realises a graph for some amplitude if for each vertex in there is a unique stable equilibrium in , and if there is an excitable connection with amplitude in from to whenever there is an edge in from to . The existence of an excitable connection means that there exists a trajectory with initial condition within a distance of , which asymptotes in forward time to (formal definitions are given in Appendix A).

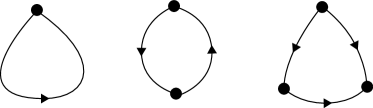

For the purposes of our construction of a network attractor, we assume that contains no loops of order one, no loops of order two, and no -cliques. Figure 1 shows each of these graph components schematically.

In terms of the adjacency matrix, for to contain no loops of order one requires that

| (5) |

for to contain no loops of order two requires that

| (6) |

and for to contain no -cliques requires that

| (7) |

In our earlier work, we have demonstrated a network design which can admit order-two loops and -cliques Ashwin2016a , although this previous construction is not motivated by neural networks per se, and requires a higher dimensional system of ODEs (for a given graph) than the one presented here.

Before we move into the details of the construction, we briefly discuss our terminology. A graph has vertices, which correspond to (stable) equilibria in the phase space of the dynamical system (1). Also within the phase space, there exist excitable connections (sometimes abbreviated to connections) between the equilibria, which correspond to the edges of the graph. When a trajectory in the phase space moves between neighbourhoods of equilibria along a path close to one of these connections, we say that a transition between the equilibria has occurred. We refer to each of the components of the dynamical system (1) as a cell, and say that a cell is active if is close to one.

2.1 Realization of arbitrary directed graphs as network attractors

We construct a weight matrix that depends only on the adjacency matrix , and on four parameters , , and . It is given by

| (8) |

where is the Kronecker . This choice of ensures that , if there is a directed edge in from vertex to (i.e. ), if there is a directed edge in from vertex to vertex (i.e. ), and otherwise. In later sections we allow for different weights along different edges by allowing to depend on and (i.e. ). We give an overview of how each of the parameters affect the dynamics of the system in section 2.2.

We write to be a vector of all parameter values. The next result shows that for the piecewise affine activation function and suitable choice of parameters, there is an embedding of as an excitable network attractor for the input-free system.

Theorem 2.1

For any directed graph with vertices containing no loops of order one, no loops of order two, and no -cliques, and any small enough , there is an open set such that if the parameters then the dynamics of input-free equation (1) on cells with piecewise affine activation (3) and defined by (8) contains an excitable network attractor with threshold , that realises the graph .

Recall that by realises we mean that all edges in the graph are present as transitions between stable equilibria using perturbations of size at most .

Proof

We give the main ideas behind the proof here, deferring some of the details to Appendix B. We construct an excitable network attractor in for (1) with piecewise activation function (3) and weight matrix (8). For any , we show there exist parameters (with small) and stable equilibria () that are connected according to the adjacency matrix by excitable connections with amplitude . Below, we provide an explicit set of parameters that make such a realisation, and note that the realisation will hold for an open set of nearby parameters.

We show in Appendix B that the equilibria have components (cells) that are close to one of four values , , , related to the edges attached to the corresponding vertex in the graph . For any , we use the following parameters:

| (9) |

and then and are given by

| (10) |

We then set

| (11) |

We use square brackets and subscripts to identify the components of points in phase space, that is, is the th component of . Each has:

-

•

Exactly one cell that is Active:

-

•

A number of cells that are Leading: (if )

-

•

A number of cells that are Trailing: (if )

-

•

All remaining cells are Disconnected: ().

Note that the requirement of no loops of order one or two and no -cliques implies that this labelling is well defined.

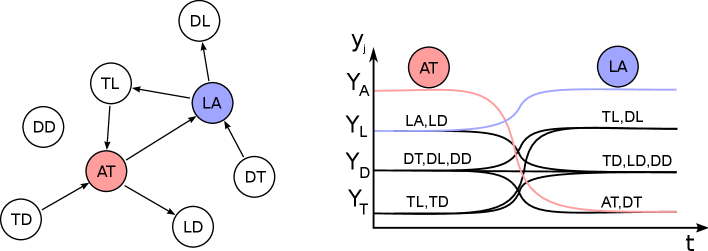

From equilibrium , there is an excitable connection to any of the equilibria with , that is, any of the Leading cells can become the Active cell. During a transition, the remaining cells can be classified into six types, which are identified in figure 2, and depend (for each ) on the values of the four entries in the adjacency matrix , , and . We label cell as AT (Active–Trailing) and cell as LA (Leading–Active). Note that the lack of two cycles means that the cases with or (a total of seven possibilities) cannot occur, and the lack of -cliques mean that the cases with , , or also cannot occur (which includes the cases where a cell would switch from Leading to Trailing). The remaining six possibilities are listed below.

-

•

Type DD: ; the cell is Disconnected throughout.

-

•

Type TD: , ; the cell switches from Trailing to Disconnected.

-

•

Type LD: , ; the cell switches from Leading to Disconnected.

-

•

Type TL: , ; the cell switches from Trailing to Leading.

-

•

Type DT: , ; the cell switches from Disconnected to Trailing.

-

•

Type DL: , ; the cell switches from Disconnected to Leading.

The right panel in figure 2 shows how a transition from cell AT active to cell LA active will occur in a general network.

To prove the existence of an excitable connection giving a realisation we consider a perturbation from to the point

where is the unit basis vector, and we show in Appendix B that, for small enough , is in the basin of attraction of if . This means there is an excitable connection from to in this case. ∎

We believe that for small enough and suitable choice of weights, the realisation of in Theorem 2.1 can be made almost complete in the sense analogous to the similar notion for heteroclinic networks ashwin2020almost , namely that the set

has zero measure. If a network realization is almost complete then almost all trajectories starting close to some will remain close, or will follow a connection corresponding to the realization. We do not have a proof of this, though Appendix B.2 shows that for small enough , is in the basin of attraction of if and only if . This does not preclude the possibility that there exist perturbations in other than resulting in trajectories asymptotic to , or indeed to other attractors. Our numerical investigations suggest that by choosing non-zero and large enough, any connections to other equilibria can be suppressed. This is discussed more in sections 3.4 and 4.

2.2 Excitable networks for smooth nonlinearities

For small enough , the smooth activation function (2) can be made arbitrarily close to the piecewise activation function (3), and so we expect Theorem 2.1 to also apply in the smooth case, but do not give a proof here. However, throughout section 3 we use the smooth activation function (2) in our examples. We use

| (12) |

as our default parameter set (compare this choice of parameters with that given in equations (9) and (10), with ), though there will be an open set of nearby parameters with analogous behaviour. In section 3 we provide several examples of using this choice of weight matrix to realise a graph .

From equation (11) we can see that the parameter choices directly affect the location of the equilibria in phase space. As we will see in the following sections, the parameters also have further effects on the dynamics. In particular, the relative sizes of the parameters and determine whether the dynamics are excitable or spontaneous: essentially, for small enough, needs to be smaller than to observe excitable dynamics. If is too large, then the equilibria cease to exist: periodic orbits can exist instead. The parameter controls how fast a Trailing cell decays, and the parameter controls the suppression effects when there is more than one Leading cell. We discuss the effects of in more detail in section 3.3.

For a smooth activation function such as (2) that is invertible on its range, there is a useful change of coordinates to (a similar transformation is made in beer1995dynamics ). The input-free equations (1) then become

| (13) |

where each (which is the domain of the function ), and

Each vertex in the graph is realised in the phase space of the variables by a stable equilibrium with one of the close to and the remainder close to . With a slight abuse of notation, we still refer to these equilibria as . As we will see in the examples which follow, the parameters are chosen such that the dynamics are close to a saddle-node bifurcation; more precisely such that the system is near a codimension bifurcation where there are saddle-nodes of the equilibria . This bifurcation has a global aspect analogous to a saddle-node on an invariant circle (SNIC) or saddle-node homoclinic bifurcation in that coupling weights are such that there are global connections between the saddle nodes that reflect the network structure.

If parameters are on one side of the saddle-node bifurcations then for each there will exist a pair of equilibria, one of which will be stable (this is the equilibrium ), and a nearby saddle. A small perturbation can then move the trajectory out of the basin of attraction of the stable equilibrium and effect a transition to another equilibrium: this is an excitable connection from .

If parameters are on the other side of the saddle-node bifurcations then the flow through the corresponding region of phase space will be slow but we will still observe intermittent dynamics as the trajectory passes through this region (strogatz1994nonlinear, , p99). In this case, we refer to a region where (a) there is a unique local minimum of and (b) a large subset of initial conditions in this region pass close to this minimum, as a bottleneck region , and refer to a spontaneous transition past . (This is also called the ghost of a saddle-node bifurcation in strogatz1994nonlinear .) If all the connections corresponding to edges in the graph are excitable, then the system contains an excitable network attractor. If all connections are spontaneous, then we typically see a periodic orbit, although we do not prove this.

3 Examples for the smooth activation function

3.1 Two vertex graph

For our first example we consider the connected graph with two vertices and a single edge joining them, that is , and for . We use bifurcation analysis to show that the transition between spontaneous and excitable dynamics is caused by a saddle-node bifurcation, and find an approximation to the location of the saddle-node bifurcation in parameter space.

The two-dimensional system of equations is:

| (14) | ||||

| (15) |

In the variables, this becomes

| (16) | ||||

where . We note the following properties of the function , with :

If , then has local extrema at and , where

| (17) |

The and nullclines of system (16) are at, respectively

| (18) |

In figure 3, we show a sketch of the phase space of (16); the nullcline is shown in blue, and the nullcline in red. Solid dots show stable equilibria, open dots show unstable equilibria and arrows show the direction of flow. Note that we do not include any nullclines at or because they are not in the domain of equations (16). As is decreased (in the figures, moving from left to right), a saddle-node bifurcation creates a pair of equilibrium solutions.

Lemma 1

If , and , then a saddle node bifurcation occurs in system (16) when

| (19) |

To begin the proof, we note that the saddle-node bifurcation will occur when the points and (marked by squares in the left-hand panel of figure 3) coincide. These points are defined as the intersection of the nullclines with the line at , i.e. at the local extrema of the nullcline.

Let the coordinate of be

Let the coordinate of be , and write , for some . Substituting this, along with , into the expression for the nullcline in (18) gives

Expanding in terms of the small quantities and , this gives

which we rearrange to find

Since we are assuming , then is exponentially small, that is for all , thus, .

The points and collide when , that is, when

For the default parameters (12), except for , we find (4 s.f.). Note that this means for we are close to saddle node and there is an excitable connection with small . More generally, note that for any fixed , as we have as expected from Theorem 2.1.

The following result gives an approximation of the positions of the equilibria that are created in the saddle-node bifurcation. Methods similar to those used in this proof are used in later sections for larger networks.

Lemma 2

If , , and , then if , the system (16) has a pair of equilibria at

Recall that . Thus,

Using the earlier results on the location of the nullcline, we will have equilibria when

Expanding about and writing gives,

where the final line follows because . Substituting for then gives the result.

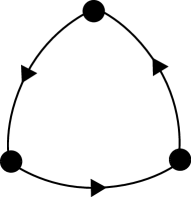

3.2 Three vertex cycle

Our second example is the cycle between three vertices shown schematically in figure 4. As a heteroclinic cycle between equilibria, this system has been studied extensively in the fields of populations dynamics ML75 , rotating convection BH80 and symmetric bifurcation theory GH88 .

We give some numerical examples of the dynamics of this system as realised by the CTRNN excitable network, and use the continuation software AUTO auto to show that the transition from excitable to spontaneous dynamics occurs at a saddle-node on an invariant circle (SNIC) bifurcation generating a periodic orbit.

The deterministic equations realising this graph are:

| (20) | ||||

We also consider the noisy case, using the setup given in equations (4).

Figure 5 show sample time series for two different parameter sets. On the left, we show a noisy realisation with , and on the right, a periodic solution in the deterministic system (equations (1)) with .

Note that in both cases, for this system the variables oscillate between three values: high (), intermediate (), and low (), as the cells shift between Active, Trailing and Leading. Only the first of these corresponds to , as can be seen in the time series plots of the variables in the lower panels of the figure, the other two correspond to .

Figure 6 shows a bifurcation diagram of this system as is varied. Stable solutions are shown in red. There is a saddle-node on an invariant circle (SNIC) bifurcation at . For , the diagram shows a stable equilibrium solution with (and , ). As increases through , this equilibrium disappears in a SNIC bifurcation creating a stable periodic orbit. Note that the period of the periodic orbit asymptotes to as the SNIC bifurcation is approached. Due to the symmetry, there are of course two further pairs of equilibria, one pair with , , , and another with , , . The symmetry causes three saddle-node bifurcations to occur simultaneously, creating the periodic orbit. If we were to instead choose the to be different in each of the lines in (20), the saddle-nodes would occur independently, and a periodic orbit would exist only if all three ’s were greater than .

The time-series on the left-hand side of figure 5 has . Without noise, at these parameter values, the system would remain at one equilibrium point indefinitely. The noise acts as inputs pushing the trajectory along the excitable connections. The time series on the right-hand side of figure 5 has , and shows the periodic orbit which has resulted from the SNIC bifurcation.

We note that this SNIC bifurcation occurs at approximately the same value of as the saddle-node bifurcation found in section 3.1. This is not surprising; using similar methods to those in the previous section, we can show that to lowest order in , the SNIC bifurcation occurs when . For there thus exists an excitable network in the sense defined in appendix A.

3.3 Four node Kirk–Silber network

For our next example, we consider a graph with the structure of the Kirk–Silber network KS94 , shown schematically in figure 7. This graph has one vertex which has two outgoing edges, and the dynamics here are somewhat different to vertices with only one outgoing edge. The bulk of this section is devoted to an analysis of these differences, in particular, the possibility of an additional equilibrium in the network attractor with two active cells.

The corresponding deterministic equations for this network are (moving immediately into the variables):

| (21) | ||||

We can break the symmetry between and by choosing . In fact, in what follows, we will frequently write , for , and choose for simplicity.

We consider first the dynamics near each of the vertices that have exactly one outgoing edge (vertices 1, 3 and 4 in the graph; see figure 7). Again using the same techniques that were used in section 3.1 (lemma 2), we can show that for , and , , there exist equilibria solutions at, for example

That is, there is a transition from excitable to spontaneous dynamics (in this case between cells and , but also between cells and and cells and ) as is increased through .

The dynamics close to the vertex with two outgoing connections (vertex 2) is modified by the presence of the additional parameter . Consider the three dimensional subsystem of (21) with , that is:

| (22) | ||||

We can perform a similar calculation to that shown in section 3.1 to show that there is a section of the null-surface which lies asymptotically close to the surface . Equilibria solutions exist on this null-surface if the and null-surfaces intersect there, that is, if there are solutions to the pair of equations

| (23) | ||||

| (24) |

We assume without loss of generality that (i.e. ) and then the arrangement of these curves is in one of the configurations shown in figure 8. If (lower panel), equilibria solutions exist (i.e. the red and blue curves intersect) for a range of and with both larger than : that is, the transition to spontaneous dynamics happens at a larger value of (than if ). If (upper panel), the opposite happens: that is, the transition to spontaneous dynamics occurs at a smaller value of . Solving these equations exactly requires solving a quartic equation, and the resulting expression is not illuminating. We label the value of at which this transition from spontaneous to excitable dynamics occurs as , and note that this is a function of , , , as well as more generally, the number of Leading directions from that cell.

For the specific system (21), with , we thus have two conditions. If then the system is excitable along all connections. If then a periodic orbit will exist. If neither of these conditions holds then we will see excitable connections in some places and spontaneous transitions in others.

In figure 9 we show some example time-series of the system (21) (in the coordinates). In panel (a), parameters are such that , so we see a periodic solution in the deterministic system. Note that the (yellow) coordinate becomes close to during this trajectory, but the (purple) coordinate does not: it switches between , and . In panel (b), parameters are such that , so without noise the trajectory would remain at a single equilibrium solution. Here we add noise with , and the trajectory can be seen exploring the network. Note that there are some transitions between ( is red) and ( is yellow), and some from to ( is purple). In panels (c) and (d), we increase further away from the saddle-node bifurcation (further into the regime of spontaneous transitions) and observe some qualitative differences in the trajectories. In the deterministic case (c), the periodic solution now transitions from near the bottleneck region to a region of phase space where and are both Active. We label this region of phase space as . In the noisy case (d) (which is also in the spontaneous regime), the trajectory makes transitions from the bottleneck region to each of , , and to .

In the above simulations, we have used , but we observe that the type of qualitative behaviour observed depends both on the parameters and . Specifically, a sufficiently negative provides a suppression effect, meaning that only a single cell can be active at any one time, but the transitional value of depends on . In figure 10 we show maximum values of and along the periodic orbits as is varied. It can be seen clearly here that the transition between periodic orbits which visit (where is significantly larger than ) and those which visit (where ) is quite sharp.

We extend these results to show the behaviour as both parameters and are varied in figure 11. The data in this figure shows the observed behaviours for both noisy and deterministic systems as the parameters and are varied. The red lines are the curves (dotted) and (dashed). If is above both of these lines then all transitions are spontaneous, and so a periodic orbit exists in the system. If lies below either one (or both) of these lines, then at least one of the transitions will be excitable and so there will be no periodic solutions. The black line shows the boundary between those periodic solutions which visit (to the left of the black line) and those which visit (to the right of the black line), as determined by the location of the sharp transition in calculations similar to those shown in figure 10 for a range of . The background colours are results from noisy simulations. The colour indicates the ratio of transitions to to the total number of transitions to , and . Interestingly, the noisy solutions require a much larger value of than the deterministic ones to have a significant proportion of transitions to .

These changes in qualitative dynamics can be explained in terms of the three-dimensional subsystem with , given by equations (22). In this three-dimensional system, there are stable equilibria at

as well as further unstable/saddle equilibria. Recall that these equilibria are not on the boundaries of the box (which are not part of the domain). In figure 12 we show solutions from the full four-dimensional system (21) projected onto the three-dimensional space with . In panel (a), we show the periodic solutions from the deterministic systems for five different values of , ranging from (left curve, dark purple), to (right curve, red) in increments of . It can be seen that the first two of these trajectories approach the saddle equilibria (marked as a blue dot) from one side of its stable manifold, and the latter three from the other. The first three thus visit , and the latter three visit . It is the transition of the periodic orbit across the stable manifold which results in the rapid change in the qualitative behaviour of the periodic orbit, and likely indicates that a homoclinic bifurcation to this saddle point separates these behaviours. That is, the sharp transition in in figure 10 should actually extend to on both sides. In panel (b), we show both noisy and deterministic trajectories with . Note that only one of the noisy trajectories follows the deterministic trajectory closely: the majority visit .

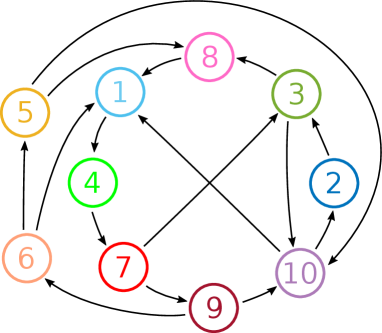

3.4 A ten node network

In this section, we demonstrate the method of construction described in section 2 for a larger network. Specifically, we randomly generated a directed graph between 10 vertices, with the constraints that it contained no one-loops, two-loops or -cliques, and such that the graph does not have feed-forward structure (i.e. you cannot get ‘stuck’ in a subgraph by following the arrows). The graph we consider is shown in figure 13.

We ran one simulation of the deterministic CTRNN system (1), and two simulations of the noisy CTRNN system (4), and in each case, randomly generated the entries for the in the equation (8). For the deterministic system, the entries of were chosen independently from the uniform distribution . For the noisy systems, the entries of were chosen independently from the uniform distribution . The remaining parameters were set at the default parameter values given in (12) except for the deterministic system, and one of the noisy systems, and for the noisy systems. Note that for the deterministic parameter values there are bottlenecks in the phase space close to the locations in phase space for the excitable states that are present for the parameter values used in the stochastic cases.

The results of the simulations are shown in figures 14, 15 and 16. For the deterministic simulation (figure 14), we see that the system has an attracting period orbit, which visits the nodes in the order . The entries of were randomly generated as described above, and we do not give them all here for space reasons, but we note that in all cases in which a vertex in this cycle has two ‘choices’ for which direction to leave (in the graph shown in figure 13), the attracting periodic orbit chooses the more unstable direction. That is, if is a vertex in the above cycle, and if and are connections in the directed graph, with being a part of the attracting periodic orbit, then . Although we do not prove here that this will always be the case, it is intuitively what one might expect, that is, the connection from to is stronger than the connection from to .

In the noisy simulation with (figure 15), the equilibria are not visited in a regular pattern, but random choices are made at each equilibria from which there is more than one direction in which to leave. See, for instance, the transition at , and the transition at . The length of time spent near each equilibria is also irregular; note for instance, the variable amount of time spent near and . In this simulation, because the transverse parameter is sufficiently negative, only one node is active at any given time.

By contrast, the simulation in figure 16 has . Here, the transitions again are made randomly, but without the suppression provided by the transverse parameter it is possible to have multiple cells active at once. For instance, at around , both cells 1 and 5 become active at the same time; they were both leading cells from the previously active cell 6. As cells 1 switches off, cell 4 becomes active, and as cell 5 switches off, cell 8 becomes active. The system continues to have two active cells around , at which point a third cell also becomes active. If the trajectory were to run for longer then the number of active cells could decrease again, if an active cell suppresses more than one previously active cells.

The entire excitable network attractor for this level of noise is clearly more complicated than the design shown in figure 13, in that additional equilibria (with more than one active cell) are accessible to those encoded and described in Theorem 2.1. An interesting extension of this work would be to understand which additional equilibria appear in a network attractor generated from a given directed graph in this manner: figure 16 suggests that at least seven levels of cell activity are needed to uniquely describe the states that can appear when more than one cell becomes “active”. In particular, when a cell is Trailing to more than one Active cell, the value of for that cell is even lower than (compare the top panel of figure 16 with the schematic in figure 2).

4 Discussion

The main theoretical result of this paper is Theorem 2.1, which states that it is possible to design the connection weight matrix of a CTRNN such that there exists a network attractor with a specific graph topology embedded within the phase space of the CTRNN. The graph topology is arbitrary except for minor restrictions: namely there should be no loops of order one or two, and no -cliques. Theorem 2.1 assumes a piecewise affine activation function, but the examples in Section 3 suggest that the results generalise to CTRNN using any suitable smooth activation function. More generally, note that the coupled network is in some sense close to simultaneous saddle-node bifurcations. However, the units are not weakly coupled and indeed this is necessary to ensure that when one cell becomes active, the previous active cell is turned off.

Theorem 2.1 proves the existence of an excitable network with threshold where not only the connection weights, but also and (properties of the activation function) may depend on . We believe that a stronger result will be true, namely that can be chosen independent of properties of the activation function, and also that this can be made an almost complete realisation by appropriate choice of parameters.

Conjecture 1

Assume the hypotheses on the directed graph with vertices as in Theorem 2.1 hold. Assume that is small and . Then there is a such that for any there is an open set . If the parameters then the dynamics of input-free equation (1) with cells and piecewise affine activation function (3) and defined by (8) contains an excitable network attractor with threshold that gives an almost complete realisation of the graph .

The construction in Theorem 2.1 uses a comparatively sparse encoding of network states - each of the vertices in the network is associated with precisely one of the cells being in an active state. Indeed, the connection weights (8) assign one of only four possible weights to each connection, depending on whether that cell can become active next, was active previously or neither. Other choices of weights will allow more dense encoding: and many more than excitable states within a network of cells. However the combinatorial properties of the dynamics seem to be much more difficult to determine and presumably additional connection weightings will be needed, not the just four values considered in Theorem 2.1.

Section 3 illustrates specific examples of simple excitable networks for smooth activation function (2) on varying parameters - this requires numerical continuation to understand dependence on parameters even for fairly low dimension. For this reason we expect that a proof of an analogous result to Theorem 2.1 for the smooth activation function may be a lot harder. These examples also give some insight into bifurcations that create the excitable networks.

In general there is no reason that the realisation constructed in Theorem 2.1 is almost complete (in the sense that almost all initial conditions in evolve towards some with , analogous to (ashwin2020almost, , Defn 2.6)). If it is not then other attractors may be reachable from the excitable network. Conjecture 1 suggests that the realisation can be made almost complete for small enough : we expect that for this we will require to be sufficiently negative. If is too large we cannot expect almost completeness: there are other stable equilibria (notably the origin) that can be reached with large perturbations, and the simulation in figure 16 shows that other equilibria may be reachable from the network. It will be a challenge to strengthen our results to show that the excitable network is an almost complete realization. However, the examples studied in section 3 confirm that, at least for relatively simple graphs, this conjecture is reasonable.

Our theoretical results are for networks with excitable connections. We expect much of the behaviour described here is present in the spontaneous case, if the coupling weights are chosen such that equilibria are replaced with bottlenecks. In the absence of noise we expect to see a deterministic switching between slow moving dynamics within bottlenecks. This dynamical behaviour is very reminiscent of the stable heteroclinic channels described, for example, in afraimovich2004heteroclinic ; rabinovich2020sequential . However, stable heteroclinic switching models require structure in the form of multiplicative coupling or symmetries that are not present in CTRNN or related Wilson-Cowan neural models wilson1972excitatory (see chow2019before for a recent review of related neural models). Other models showing sequential excitation include chow2019before : this relies on a fast-slow decomposition to understand various different modes of sequential activation in a neural model of rhythm generation. It will be an interesting challenge to properly describe possible output dynamics of our model in the case of bottlenecks.

We remark that asymmetry of connection weights is vital for constructing a realisation as an excitable networks - indeed, the lack of two-cycles precludes . While this may be intuituively obvious, it was not so obvious that we also need to exclude one-cycles and -cliques in the graph to make robust realisations.

Finally, although we do not consider specific natural or machine learning applications of CTRNN here, the structures found here may give insights that give improved training for CTRNN. In particular, it seems plausible that CTRNN may use excitable networks to achieve specific input-output tasks (especially those requiring internal states). For example, recent work ceni2020interpreting demonstrates that echo state networks can create excitable networks in their phase space to encode input-dependent behaviour. It is also likely there are novel optimal training strategies that take advantage of excitable networks, for example, choosing connection weights that are distributed close to one of the four values we use.

Acknowledgements.

We gratefully acknowledge support from the Marsden Fund Council from New Zealand Government funding, managed by Royal Society Te Apārangi. PA acknowledges funding from EPSRC as part of the Centre for Predictive Modelling in Healthcare grant EP/N014391/1. CMP is grateful for additional support from the London Mathematical Laboratory.Appendix A Definition of excitable network

This appendix (which extends ideas in Ashwin2016a ) gives formal definitions for excitable networks considered in this paper. We say a system has an excitable connection for amplitude from one equilibrium to another if

(where is the open ball of radius centred at ) and this connection has threshold if

We define the excitable network of amplitude between the equilibria to be the set

We say the excitable network for amplitude realises a graph if each vertex in corresponds to an equilibrium in and there is an edge in from to if only if there is a connection in for amplitude from to .

Appendix B Proof of Theorem 2.1

As in equations (9) and (10), for any we choose

| (25) |

and then and are given by

| (26) |

We define equilibria as in Section 2.1, where we write the th component of the equilibrium as :

| (27) |

where

are the values of the Active, Leading, Trailing and Disconnected components, respectively. Note that

| (28) |

As mentioned before, the hypotheses of Theorem 2.1 imply that this labelling is well defined and Figure 2 shows how a transition from cell 1 active to cell 2 active will occur in a general network. It is simple to check that (27) is an equilbrium solution of (1) with activation function (3). Moreover, is linearly stable with eigenvalues . We define then note that (28) implies that

in terms of the Kronecker .

We consider two cases. Case 1 is where and we expect to see a connection from to . Case 2 is and we don’t expect a connection.

B.1 Case 1

Suppose (recall that the lack of -cycles means that ), and then we define two regions of phase space, which we label and , as follows:

The regions and the dynamics within them are shown schematically in figure 17.

Within , if then the dynamics are governed by the equations

where

| (29) |

where the type of coordinate (see figure 2) is given in parentheses on each line. Within , the dynamics are governed by the equations

| (30) |

where

| (31) |

The equilbrium lies in the interior of the region , whereas lies in the interior of the region . We show that there is a connection of amplitude from to any with , by considering a trajectory starting at

where is a unit vector in the -direction: clearly (see figure 17). Note that

| (32) |

where etc are given in equation (11), and . We define , and then

Now, we note that . We next show that a trajectory with initial condition at will asymptotically approach . We show first that the trajectory enters in finite time. Then, since in , the flow is linear, with stable equilibrium , all trajectories in eventually approach .

Define to be the region between and , namely,

First consider the dynamics of all , with . This means that for all points in . While the trajectory remains in , it can be shown that is negative along the line with . Hence, none of the will leave .

Within , the dynamics of and are governed by

| (33) | ||||

| (34) |

Consider the equation for in , and , namely:

Recall that , and . We can use these bounds to show that

Furthermore, if and , then . In particular, we note that for , with , is negative and bounded below zero.

Now, consider the equation for in , and , namely:

We use this to compute along the lower boundary of the three regions, , and , that is, the line , and we find

Since and , we see that in all three cases.

Combining our knowledge of and tells us that a trajectory which starts in , or more specifically, a trajectory starting in a small neighbourhood of will have monotonic decreasing component until (at least) . Furthermore, the component cannot decrease below . Thus the trajectory will move through and into in a bounded time.

Within , is a linearly stable fixed point. In summary, we have demonstrated that if then there is a connection from a -neighbourhood of to . Moreover, as the equilibria are linearly stable and having a connection is an open condition, the realisation will persist for an open set of parameters.

B.2 Absence of excitable connections for edges absent from

Now suppose that . Then the dynamics for and is shown schematically in figure 18. Equilibria are shown with dots, and all equilibria shown in this figure are linearly stable. Note that the equilibrium has , and . It is clear that there are no small perturbations which allow for a connection between and .

For , if then for any and such that , all trajectories starting in

with . In particular, perturbations of the form (32) will return to . This is because this set is remains in the region of validity for the equivalent of (30) for which the only attractor is . In the case and a similar argument holds as the phase portrait corresponds to figure 17 reflected in the diagonal.

This shows that no perturbations of amplitude within the plane that give a connection from to of amplitude . However, we cannot rule out the existence of connections from other locations in to . This would be needed to prove that the realisation is almost complete.

References

- (1) Afraimovich, V., Zhigulin, V., Rabinovich, M.: On the origin of reproducible sequential activity in neural circuits. Chaos: An Interdisciplinary Journal of Nonlinear Science 14(4), 1123–1129 (2004)

- (2) Afraimovich, V.S., Rabinovich, M.I., Varona, P.: Heteroclinic contours in neural ensembles and the winnerless competition principle. International Journal of Bifurcation and Chaos 14(04), 1195–1208 (2004)

- (3) Ashwin, P., Castro, S.B., Lohse, A.: Almost complete and equable heteroclinic networks. Journal of Nonlinear Science 30(1), 1–22 (2020)

- (4) Ashwin, P., Postlethwaite, C.: Designing Heteroclinic and Excitable Networks in Phase Space Using Two Populations of Coupled Cells. J. Nonlinear Science 26(2), 345–364 (2016). DOI 10.1007/s00332-015-9277-2

- (5) Ashwin, P., Postlethwaite, C.: Sensitive finite-state computations using a distributed network with a noisy network attractor. IEEE Transactions on Neural Networks and Learning Systems 29(12), 5847–5858 (2018)

- (6) Beer, R.D.: On the dynamics of small continuous-time recurrent neural networks. Adaptive Behavior 3(4), 469–509 (1995)

- (7) Bhowmik, D., Nikiforou, K., Shanahan, M., Maniadakis, M., Trahanias, P.: A reservoir computing model of episodic memory. In: 2016 International Joint Conference on Neural Networks (IJCNN), pp. 5202–5209. IEEE (2016)

- (8) Blynel, J., Floreano, D.: Exploring the T-maze: Evolving learning-like robot behaviors using CTRNNs. In: Applications of Evolutionary Computing, pp. 593–604. Springer Berlin Heidelberg, Berlin, Heidelberg (2003)

- (9) Busse, F.M., Heikes, K.E.: Convection in a rotating layer: A simple case of turbulence. Science 208, 173–175 (1980)

- (10) Ceni, A., Ashwin, P., Livi, L.: Interpreting recurrent neural networks behaviour via excitable network attractors. Cognitive Computation 12(2), 330–356 (2020)

- (11) Chow, C.C., Karimipanah, Y.: Before and beyond the Wilson–Cowan equations. Journal of Neurophysiology 123(5), 1645–1656 (2020). DOI 10.1152/jn.00404.2019

- (12) Doedel, E.J., Champneys, A.R., Dercole, F., Fairgrieve, T.F., Kuznetsov, Y.A., Oldeman, B., Paffenroth, R., Sandstede, B., Wang, X., Zhang, C.: AUTO-07P: Continuation and bifurcation software for ordinary differential equations (2007)

- (13) Doedel, E.J., Champneys, A.R., Fairgrieve, T.F., Kuznetsov, Y.A., Sandstede, B., Wang, X.J.: AUTO97: Continuation and bifurcation software for ordinary differential equations. Tech. rep., Department of Computer Science, Concordia University, Montreal, Canada (1997). (Available by FTP from ftp.cs.concordia.ca in directory pub/doedel/auto)

- (14) Funahashi, K., Nakamura, Y.: Approximation of dynamical systems by continuous time recurrent neural networks. Neural Networks 6, 801–806 (1993)

- (15) Gouzé, J.L., Sari, T.: A class of piecewise linear differential equations arising in biological models. Dynamical Systems 17, 299–316 (2003)

- (16) Guckenheimer, J., Holmes, P.: Structurally stable heteroclinic cycles. Math. Proc. Camb. Phil. Soc. 103, 189–192 (1988)

- (17) Harris, J., Ermentrout, B.: Bifurcations in the Wilson–Cowan equations with nonsmooth firing rate. SIAM Journal on Applied Dynamical Systems 14(1), 43–72 (2015)

- (18) Hopfield, J.J., Tank, D.W.: Neural computation of decisions in optimization problems. Biological Cybernetics 52(3), 141–152 (1985)

- (19) Hutt, A., beim Graben, P.: Sequences by metastable attractors: Interweaving dynamical systems and experimental data. Frontiers in Applied Mathematics and Statistics 3, 11 (2017). DOI 10.3389/fams.2017.00011

- (20) Kirk, V., Silber, M.: A competition between heteroclinic cycles. Nonlinearity 7, 1605–1621 (1994)

- (21) Manjunath, G., Tiño, P., Jaeger, H.: Theory of input driven dynamical systems. ESANN 2012 proceedings (2012)

- (22) May, R., Leonard, W.: Nonlinear aspects of competition between three species. SIAM J. Appl. Math. 29, 243–253 (1975)

- (23) Nikiforou, K.: The dynamics of continuous-time recurrent neural networks and their relevance to episodic memory. Ph.D. thesis, Imperial College, London (2019)

- (24) Rabinovich, M., Volkovskii, A., Lecanda, P., Huerta, R., Abarbanel, H., Laurent, G.: Dynamical encoding by networks of competing neuron groups: winnerless competition. Physical review letters 87(6), 068102 (2001)

- (25) Rabinovich, M.I., Huerta, R., Varona, P., Afraimovich, V.S.: Generation and reshaping of sequences in neural systems. Biological cybernetics 95(6), 519–536 (2006)

- (26) Rabinovich, M.I., Zaks, M.A., Varona, P.: Sequential dynamics of complex networks in mind: Consciousness and creativity. Physics Reports (2020)

- (27) Strogatz, S.H.: Nonlinear dynamics and chaos: With applications to physics, biology, chemistry, and engineering. Addison-Wesley (1994)

- (28) Tuci, E., Quinn, M., Harvey, I.: An evolutionary ecological approach to the study of learning behavior using a robot-based model. Adaptive Behavior 10(3-4), 201–221 (2002)

- (29) Wilson, H.R., Cowan, J.D.: Excitatory and inhibitory interactions in localized populations of model neurons. Biophysical Journal 12(1), 1–24 (1972)

- (30) Yamauchi, B.M., Beer, R.D.: Sequential behavior and learning in evolved dynamical neural networks. Adaptive Behavior 2(3), 219–246 (1994)