Exploring Fast Fourier Transforms on the Tenstorrent Wormhole

Abstract

Whilst numerous areas of computing have adopted the RISC-V Instruction Set Architecture (ISA) wholesale in recent years, it is yet to become widespread in HPC. RISC-V accelerators offer a compelling option where the HPC community can benefit from the specialisation offered by the open nature of the standard but without the extensive ecosystem changes required when adopting RISC-V CPUs. In this paper we explore porting the Cooley–Tukey Fast Fourier Transform (FFT) algorithm to the Tenstorrent Wormhole PCIe RISC-V based accelerator. Built upon Tenstorrent’s Tensix architecture, this technology decouples the movement of data from compute, potentially offering increased control to the programmer. Exploring different optimisation techniques to address the bottlenecks inherent in data movement, we demonstrate that for a 2D FFT whilst the Wormhole n300 is slower than a server-grade 24-core Xeon Platinum CPU, the Wormhole draws around 8 times less power and consumes around 2.8 times less energy than the CPU when computing the Fourier transform.

Keywords:

RISC-V Tenstorrent Wormhole Fourier Transforms FFTs Cooley-Tukey accelerator1 Introduction

The recent availability of high core count commodity available RISC-V CPUs [2] is driving increased interest in the role of RISC-V for HPC [3]. However, there is still some way to go for the ecosystem to fully support CPU based RISC-V supercomputers, and instead a more gradual short term adoption route is likely to be in RISC-V based PCIe accelerator cards. The major benefit of these is that they fit into existing, x86 or AArch64, systems as an add-ons. Several vendors are developing such accelerator cards, often aimed at Artificial Intelligence (AI) and Machine Learning (ML) workloads, driven by the current boom in AI. Indeed, each vendor is taking a different approach to the design of their technology based upon a set of principals that they consider important, and this demonstrates the flexibility provided by RISC-V where hardware designers can use the standard in the manner most suitable to them.

Whether initially designed for ML or HPC, RISC-V accelerator hardware fundamentally provides the building blocks to accelerate mathematical operations. Consequently, there is a role for these accelerator technologies to be leveraged by the HPC community, and one such RISC-V based accelerator card is the Wormhole developed by Tenstorrent. Available for purchase at a modest price, this commodity card is, as of 2025, one of the few RISC-V based accelerators that are publicly available. The availability and moderate price-point not only means that these can be leveraged in best-of-class supercomputers, but furthermore that they are also suitable for smaller HPC machines and even workstations. Indeed, Tenstorrent have opened up their entire software stack and work in the open, in collaboration with the wider community.

In this paper we calculate the Discrete Fourier Transform (DFT) by porting the Cooley–Tukey Fast Fourier Transform (FFT) algorithm to the Tenstorrent Wormhole accelerator. An algorithm that is ubiquitous, not only in scientific computing but also far more generally such as signal processing, our hypothesis was that the decoupling of data movement from compute provided by the Tenstorrent architecture has the potential to deliver performance and energy efficiency improvements compared to running on a CPU. The paper is structured as follows; in Section 2 we survey the Tenstorrent Tensix architecture and describe the FFT algorithm, before reporting the experimental setup in Section 3 used throughout this paper. Section 4 describes the design of the FFT algorithm for the Tenstorrent architecture and then explores the efficacy of optimisation techniques that aim at reducing the overhead of data movement. We then compare all 24-cores of a Xeon Platinum Cascade Lake CPU against 64 Tensix cores on the n300 for a 2D FFT in Section 5, before drawing conclusions and discussing further work in Section 6.

The novel contributions of this paper are:

-

•

We undertake, to the best of our knowledge, the first study of porting the FFT algorithm to a RISC-V PCIe accelerator.

-

•

An exploration of optimisation strategies for data reordering on the Tenstorrent Tensix architecture.

-

•

A performance and energy efficiency analysis of an FFT solver on the Tenstorrent Wormhole against a server grade CPU.

2 Background

2.1 The Tenstorrent architecture

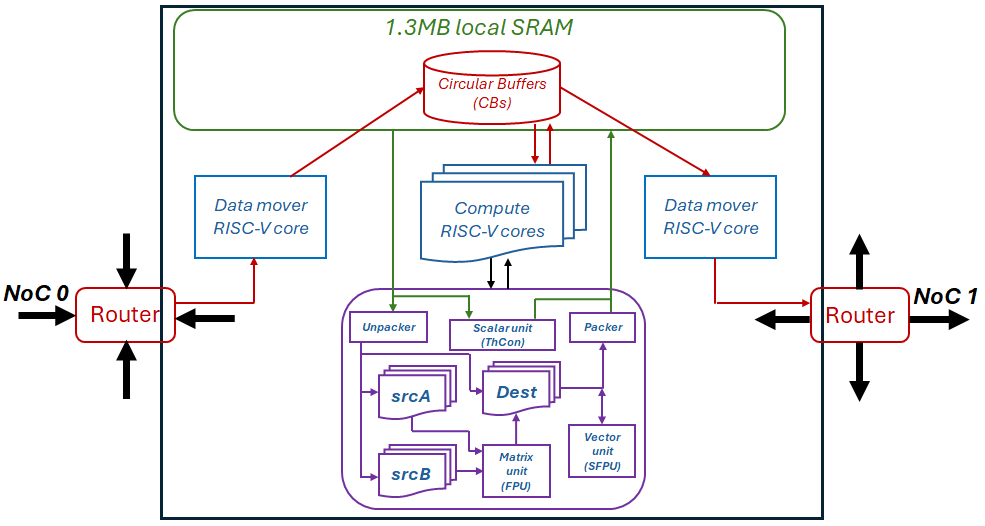

The Grayskull, Wormhole and Blackhole PCIe accelerator cards, developed by Tenstorrent, are built around the Tensix architecture. As sketched in Figure 1, each Tensix core contains five RISC-V CPUs, known as baby cores, 1.3MB of local SRAM, two routers each of which are connected to separate Networks on Chip (NoCs), and compute engine. Out of the five RISC-V baby cores, one of these is for moving data into the Tensix core, one for moving data out, and three drive the compute engine. The compute engine itself provides scalar (ThCon), vector (SFPU) and matrix (FPU) units that support a range of precisions up to FP32, which we use throughout this paper, although the matrix unit relaxes IEEE compliance. Out of the three RISC-V baby cores driving the compute engine, one called UNPACK issues instructions to the unpacker in the compute engine, which copies data from SRAM into the source registers, srcA and srcB. The MATH RISC-V baby compute core issues instructions to the ThCon, SFPU and FPU units, directing them to undertake operations on source registers. The third RISC-V baby compute core, PACK, packs (or copies) result data from the dst register to SRAM. The srcA and srcB registers are 4KiB in size, holding a maximum of 1024 single precision floating point numbers, and the dst register is of size 32KiB and segmented into 16 chunks [4]. To avoid being a bottleneck, input and result values are never transferred through the RISC-V compute baby cores, but instead instruct the unpacker and packer in the compute unit to accesses SRAM directly.

The dst register is used as both an input to, and output from, the vector unit and this is why it is split into 16 segments. Consequently, compute can undertaken by the matrix unit and results can then be provided to the vector unit for further processing. Indeed, the matrix unit only supports a restricted set of operations including matrix multiplication, addition, subtraction, multiplication, transposition and reduction. By contrast, the vector unit is more general and in addition to binary arithmetic also provides support for operations including squares, square root, cos, tan, sin, and conditionals. In-fact, the programmer can write their own code directly for the SFPU and the compiler will then vectorise this. The matrix unit can perform 2048 floating point multiplications and 2048 floating point additions per cycle. The vector unit comprises eight registers, each with thirty two 32-bit lanes.

The abstraction of Circular Buffers (CBs) represents data held in local SRAM. These are First In First Out (FIFO) queues and follow a producer-consumer model, where a page of data is requested in the CB by the producer, populated and pushed to be made available. The consumer blocks on the availability of a page, and once it is, then consumes the data before freeing the memory so that this can be reused. CBs combine semantics around memory and synchronisation, enabling coordination between the RISC-V baby cores. An example is the common situation where a data mover core requests a CB page, then fills this with data read from external DRAM and pushes this. The RISC-V compute cores then consume this CB, with the UNPACK core instructing the unpacker in the compute engine to copy data from this page in the CB into a target register.

In this work we focus on a Wormhole n300 which contains 120 Tensix cores that are provided as two, 60-core, chips running at 1 GHz. There is a total of 24GB of GDDR6, external, memory on the board which is split across twenty four banks each of 1GB, with twelve banks directly connected to each chip [6]. Whilst, at the time of writing, Tenstorrent have recently released their next generation accelerator, the Blackhole, the Wormhole is currently by far the more common technology and due to the same underlying architecture, lessons learnt on this generation will apply to the next.

The TT-Metalium framework, tt-metal, is Tenstorrent’s direct programming SDK which exposes access to the hardware, providing an API for kernel development. The SDK provides an API that programmers can use to undertake a range of low level activities such as the movement of data, driving the compute engine, and interacting with Circular Buffers (CBs). The programmer develops three kernels, one for each data movement RISC-V baby core and one for the compute cores.

Previous work [1] explored stencil applications on the first generation, Grayskull e150, Tenstorrent accelerator. This work demonstrated that it was possible for the architecture to perform comparatively to a server-grade CPU but at five times less energy. However, a major challenge was in reworking the algorithm to suit the architecture, with the final version of the code around 160 times faster on the Grayskull than the initial, naive, kernel implementation.

2.2 Fast Fourier Transforms (FFTs)

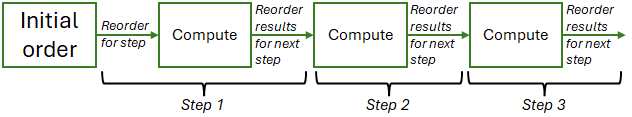

The calculation of the Discrete Fourier Transform (DFT) is of critical importance to a wide variety of applications ranging from digital signal processing to solving systems of partial differential equations. Converting between the spatial and frequency domains, the former represents a collection of values and the later describes the rate at which these values are changing. Fast Fourier Transforms (FFTs) are a class of fast algorithms that compute the DFT, with the Cooley–Tukey algorithm [5] being the most common and the focus in this work. Indeed, the FFT algorithm was described as the most important numerical algorithm of our lifetime [5] as it reduces the computational complexity to O(N log N). Cooley-Tukey follows a divide-and-conquer approach and simple implementations adopt a recursive form, however for performance this is expressed iteratively with an outer loop of log N steps.

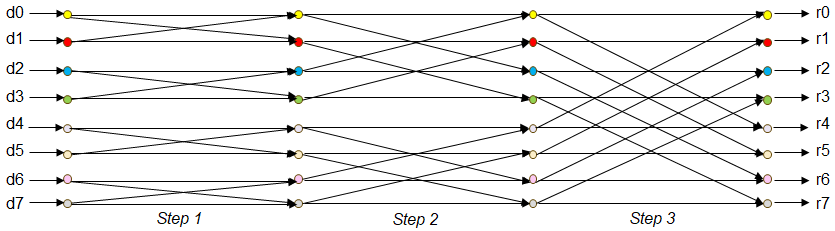

Figure 2 illustrates an FFT operating on eight input values, requiring three steps. We follow a 2-radix approach, were in the first step calculations involving neighbouring values are undertaken, in the second step intermediate results are then calculated between neighbour plus (or minus) 2, and in the third step intermediates with neighbour plus (or minus) four.

Consequently, in addition to the calculation itself, the movement of data is an important consideration as from one step to the next the compute requires different pairs of numbers. This is illustrated in Listing 1 where, for each step, individual points in groups of spectra are processed. There are two data elements per point, and it can be seen that the indices of these, d0_data_index and d1_data_index, are calculated at lines 6 and 7, with matching_second_point providing the offset for the second element, for instance 1, 2, 4 for steps 1, 2 and 3 in Figure 2. Values f0 and f1 are then calculated based upon twiddle factors and the second element of data at lines 9 and 10, before these values are applied to the real and imaginary components of the two data elements at lines 12 to 15. Twiddle factors are trigonometric constants that are multiplied by the data during execution of the algorithm.

3 Experimental setup

Results reported from the experiments run throughout this paper are averaged over five runs. All Tenstorrent runs were undertaken on a Wormhole n300 connected to the host system by PCIe Gen 3. The host machine contains a 26-core 8170 Skylake Intel Xeon Platinum CPU and 128 GB of DRAM. All experiments are built using version 0.56 of the tt-metal framework, and Clang 17 is used to compile host codes. CPU runs are undertaken on a 24-core 8260M Cascade Lake Xeon Platinum CPU, which is equipped with 512GB of DRAM and codes are compiled using GCC version 11.2. All codes are compiled at optimisation level three. Multi-core runs on the CPU are multi threaded using OpenMP. Energy usage on the CPU is based upon values reported by RAPL, and on the n300 from the Tenstorrent System Management Interface (TT-SMI).

All results reported in this paper are running single precision, FP32, which is the maximum precision supported by the n300. Unless otherwise stated, performance numbers reported for the Wormhole are execution time only.

4 Porting Fourier transforms to the Wormhole

In this section we focus on porting FFTs to the Wormhole and optimising for a single Tensix core. Whilst complex numbers are not directly supported by the Tensix’s compute engine, it is possible to work with the real and imaginary components individually as illustrated in Listing 1 and this is the approach adopted here.

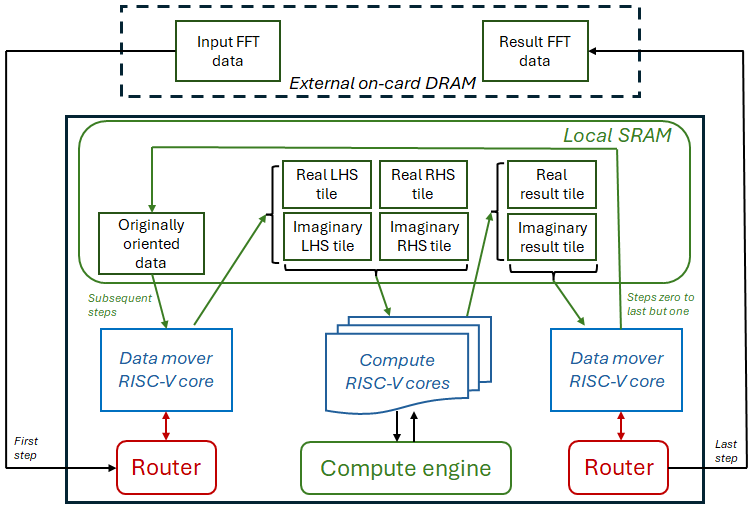

Figure 3 illustrates the design that we initially leveraged when porting the FFT algorithm to the Tensix core. For each step, the in data mover core will read the input data for that step. This is read either from external on-card DDR for the first step or from local SRAM for subsequent ones, and a page in four CBs is populated with the data correctly ordered for that specific step. These CBs are the computation’s Left Hand Side (LHS) and Right Hand Side (RHS) for both real and imaginary components. These are then used as an input to the compute core which provides these to the compute unit to undertake the calculations. The output of these calculations is stored in real and imaginary CBs which are consumed by the out data mover core which then reorders the data into the original order and will either store this in SRAM, ready to be consumed by the next step, or external DDR for the final results. Consequently, for each step we undertake two reorderings of data, from the original data order to the order required for pair wise operations by that current step, and then results are ordered from this stepwise ordering back to the original orientation.

Figure 3 is simplified somewhat, for instance twiddle factors are calculated by the compute engine on initialisation and stored in SRAM but these do not change from step to step. Listing 2 sketches the FFT compute kernel, at line 1 operating in steps, and lines 2 and 3 waiting for pages of real and imaginary data to be available in these CBs for the second element of data. Lines 5 to 13 then use the SFPU to compute f0 and f1 before the real and imaginary pages of the first element of data in the CBs are waited on at lines 15 and 16. Lines 21 to 28 then applying f0 and f1 to the real and imaginary components of the two data values before popping the input data CBs at lines 27 to 30, which will enable the pages of memory to be reused by the next step. The cb_int0 and cb_int1 CBs are circular buffers used to hold intermediate calculation data, and the cb_twiddle_r and cb_twiddle_i hold twiddle values.

The maths_sfpu_op function that is called in Listing 2 is sketched in Listing 3. We developed this as a helper function due to the boilerplate code that is required for each operation, where a lock on the dst register must first be acquired by the MATH RISC-V compute core, tiles are then copied in from page location 0 in CBs cb_in_1 and cb_in_2 to segments 0 and 1 of the dst register at lines 7 and 8, before the compute operation is performed on dst register segments 0 and 1 at lines 9 to 17 and results written to the 0 segment. The MATH RISC-V compute core then releases its lock on the dst register at line 18, before a page in the cb_tgt CB is waited on at line 22, and then the PACK RISC-V compute core acquires a lock on the dst register, packs values from the 0 segment of the dst register to a page in the CB, and releases the lock at lines 23 to 25, before making the page in cb_tgt CB available. Optionally, this function will also wait for pages in input CBs and free these up, and it can be see in Listing 2 that this is used when working with intermediate values. Whilst we focus here on the vector unit, SFPU, we also provide versions of this maths operation function for computing with matrix unit, FPU, which is similar to Listing 3 but somewhat simplified because the Metalium API call performs copying of input data into the srcA and srcB registers itself.

Table 1 reports performance of an FFT implementation for a problem size of 16384 elements on a single Xeon Platinum (Cascade Lake) CPU core, along with different versions on a single Tensix core in the Wormhole. The first version on the Wormhole is as illustrated in Figure 3 and Listing 2, using the SFPU for the calculation. It can be seen that it is around eight times slower than a single core is on the Xeon Platinum CPU.

| Version | Runtime (ms) |

| Xeon Platinum CPU single core | 1.85 |

| Initial | 14.39 |

| Chunked | 9.38 |

| Data copy by ThCon | 7.56 |

| 128-bit copies | 6.61 |

| Single data copy | 5.31 |

To explore the underlying reasons for this performance on the Tensix we experimented with disabling certain components in the algorithm and the performance that these individual components delivered is reported in Table 2. The major overhead is in the reordering of data, for instance when disabling data reordering for reading the runtime halves, and when also disabling write reordering the runtime is around a sixteenth of the original. Clearly data reordering is a significant bottleneck, and by comparing the performance when only read or write reordering is enabled in Table 2 it can be observed that read reordering is the more expensive of the two operations. Incidently, compute-only performance was comparable regardless of whether we used the FPU (matrix unit) or SFPU (vector unit) to undertake mathematical operations.

| External read | Read reorder | Compute | Write reorder | External write | Runtime (ms) |

| Y | Y | Y | Y | Y | 14.4 |

| Y | N | Y | Y | Y | 7.3 |

| N | N | Y | Y | Y | 7.3 |

| N | Y | Y | N | N | 10.5 |

| Y | Y | Y | N | N | 10.6 |

| N | N | Y | N | Y | 0.9 |

| N | N | Y | N | N | 0.9 |

Our initial approach placed the entire domain into single CB pages step by step, for instance loading and reordering all the data, then computing with this, and lastly reordering and writing this all out. Whilst placing the entire domain in a single page was the simplest approach, it meant that different components of the Tensix core could not run concurrently, for instance whilst data loading was occurring by the data mover RISC-V core, the compute engine and data writing kernels were idle. Consequently, we enhanced the code to operate in chunks, chunked in Table 1, where the entire domain is split into segments and consequently the components of the Tensix core can run in parallel on different chunks.

When reordering the data, this data is loaded into the data mover cores from SRAM and then written out to a new location. The cores themselves are fairly limited and designed more for driving components such as the routers, compute engine and marshalling control with the CBs rather than undertaking extensive data loads and stores. Instead, the compute engine contains a scalar unit, ThCon, which itself can load and write data and the hypothesis [4] was that this could reduce the runtime due to improved performance of compute engine to SRAM data transfers. Whilst there are no API calls to ThCon exposed directly by Metalium, it is possible to program this via intrinsics provided by the Tenstorrent Low Level Kernels (llk) underlying library. The code for this is sketched in Listing 4 which loads 32-bit data from SRAM. The address from_addr is decomposed into a base address and offset and then stored into registers 0 and 1 via the TT_SETDMAREG intrinsic. These registers are then provided to the TT_LOADIND intrinsic, with register 2 provided as the destination to hold the loaded value. There are four tiles of data being reordered, the LHS and RHS for both real and imaginary components, and we found the most effective approach was to distribute these across all three RISC-V compute cores; UNPACK, MATH and PACK which individually issue instructions to ThCon for their respective pages.

The result of this optimisation is reported as Data copy by ThCon in Table 1 where it can be seen that it reduced the runtime by around a millisecond. It is possible for ThCon to read and write data of size 8, 16, 32 or 128 bits, and due to us working with FP32 up until this point all data accesses were 32 bit. However, when the kernel reorders input data, stores are all contiguous and when reordering results for writing the loads are all contiguous. The kernel was therefore modified to unroll the reordering loop by four and to use 128-bit wide data accesses for contiguous data. This is reported as 128-bit copies in Table 1 and it can be seen that this further reduced the runtime.

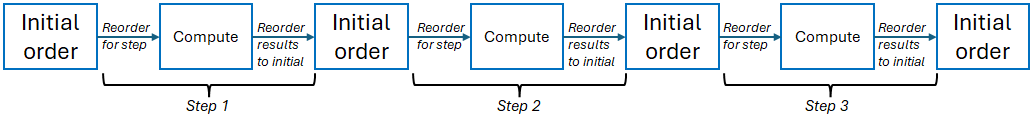

To this point, as per Figure 4, there were two data reorderings per step. This is because, at the end of a step, the data is reordered back to the original data order. Whilst this was the simplest approach from a code perspective, the reordering of data is expensive and we therefore modified this to instead reorder data to the arrangement required by the next step. This is illustrated in Figure 5, and it reduces the number of reorderings per step to one, apart from the initial and last step. However, this increases the complexity of the code which resulted in link errors where the compiler reported .́bssẃill not fit in region T̀RISC2_LOCAL_DATA_MEMḿissing. The bss section holds uninitialized data and this was overflowing, likely due to too many variables. To address this we edited the kernel_trisc2.ld linker script to increase the TRISC2_LOCAL_DATA_MEM from 1280 to 3328 bytes.

Performance of the one-copy optimisation is reported as Single data copy in Table 1 and, whilst it resulted in a performance improvement, the limited nature of this surprised us as we had expected a more considerable reduction in runtime. When investigating we found that this was due to all data accesses now being non-contiguous and therefore 32 rather than 128 bits wide. Effectively we had increased the number of individual memory accesses with a larger number of narrow accesses, whereas before we had fewer wider accesses.

5 Scaling up with a 2D FFT

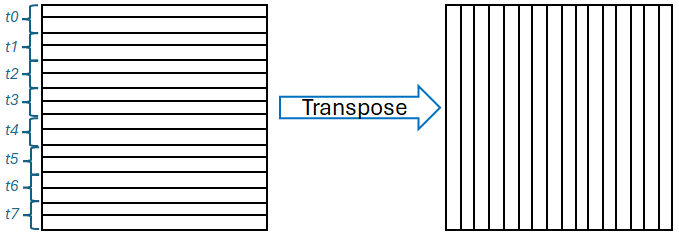

Thus far we have concentrated on running over a single Tensix core and in this section we scale up by studying a 2D FFT. Figure 6 illustrates the data orientation required by such an operation, where rows of data are distributed across the Tensix cores, here t0 to t7, and one dimensional FFTs are first executed across each row. The data is then globally transposed so that each Tensix core receives a column of data comprising parts made up from across each Tensix core. Each core then undertakes another FFT on its newly transposed local data. Ultimately, an FFT has been performed across each global row and down each global column to produce the overall result.

We enhanced our FFT implementation to work across a several locally held rows and leveraged the transpose routine from Tenstorrent’s tt-nn library to undertake transposition across the Tensix cores. For simplicity we assume an even decomposition of rows across Tensix cores, and with a problem size of 1024 by 1024 this limits us to 64 Tensix cores, each holding 16 local rows. Table 3 reports performance and power of a 2D FFT of size 1024 by 1024 elements parallelised via OpenMP on all 24 cores of the Xeon Platinum Cascade Lake CPU against 64 Tensix cores of the n300. It can be seen that whilst the CPU is faster than the n300, it draws over eight times more power, ultimately resulting in the n300 being 3.6 times more energy efficient.

| Version | Number of cores | Runtime (ms) | Average Power (Watts) | Energy usage (J) |

| Xeon Platinum CPU | 24 | 10.24 | 353 | 3.62 |

| Wormhole n300 | 64 | 23.56 | 42 | 0.99 |

6 Conclusions and future work

In this paper we have explored calculating the DFT by porting the Cooley-Tukey FFT algorithm to the Tensix architecture. We explored our general design approach of building up tiles of real and imaginary data which are then used as inputs to the compute engine, and abstracting the boilerplate around Metalium mathematical operators via C++ templated functions. We found data reordering was a significant bottleneck and explored optimisation techniques to address this, with the use of ThCon and 128-bit wide memory accesses especially effective.

Whilst the performance of a single Tensix core was around 2.8 times lower than a Xeon Platinum Cascade Lake CPU core, the Wormhole n300 contains many more energy efficient Tensix cores. Consequently we scaled up our approach and explored 2D FFT demonstrating that, whilst 64 Tensix cores in the n300 were slower than the Xeon Platinum CPU, they also drew around eight times less power, ultimately with the Wormhole being 3.6 times more energy efficient.

This paper has highlighted that there is great potential for Tenstorrent technology to benefit HPC workloads, especially due to its energy efficiency nature. However, given that these accelerators have been designed for ML workloads a challenge is to understand how to adapt the algorithms and tooling to best suit general purpose HPC. To this end, more integrated control of ThCon in Metalium would be beneficial, especially if this better abstracted the reordering of data. Furthermore, the ability to load in, and store, data from the matrix and vector registers based upon a user defined mapping directly would likely improve performance as it would avoid additional data copying.

In this paper we limited ourselves to holding the entirety of the domain in local SRAM and, with other data structures required for reordering and tiling, this resulted in a maximum problem size of 16384 FP32 elements. When scaling to 2D, our problem size was 1 million elements, and this is also small. The next step will be to support larger domains by reordering from external, on-card, DRAM, using SRAM as a temporary staging area. Furthermore, we might be able to obtain improved performance within a single Tensix core by reworking the one-copy data reordering scheme to work across contiguous memory, thus resulting in single 128-bit rather than four 32-bit memory accesses. Moreover, expanding the transposition to support an uneven distribution of rows across Tensix cores will enable us to leverage all 120 cores on the n300, likely closing the gap significantly with the Xeon Platinum CPU. Whilst in this paper we focussed on a single Wormhole card, a major bottleneck for multi-dimensional FFTs is the all-to-all communications required during transposition. This work acts as a foundation that can be built upon in the future to explore how the high performance network connecting multiple n300 cards can remove this bottleneck.

Acknowledgement

The CPU runs in this paper ran on NextGenIO which which funding from the EU Horizon 2020 research and innovation programme under grant agreement No 671591. This research was supported by an RSE personal research fellowship. We thank Tenstorrent, especially Pete Cawley, for their technical assistance. For the purpose of open access, the author has applied a Creative Commons Attribution (CC BY) licence to any Author Accepted Manuscript version arising from this submission.

References

- [1] Brown, N., Barton, R.: Accelerating stencils on the tenstorrent grayskull risc-v accelerator. In: SC24-W: Workshops of the International Conference for High Performance Computing, Networking, Storage and Analysis. pp. 1690–1700. IEEE (2024)

- [2] Brown, N., Jamieson, M.: Performance characterisation of the 64-core sg2042 risc-v cpu for hpc. In: International Conference on High Performance Computing. pp. 354–367. Springer (2025)

- [3] Brown, N., Jamieson, M., Lee, J., Wang, P.: Is risc-v ready for hpc prime-time: Evaluating the 64-core sophon sg2042 risc-v cpu. In: Proceedings of the SC’23 Workshops of The International Conference on High Performance Computing, Network, Storage, and Analysis. pp. 1566–1574 (2023)

- [4] Cawley, P.: Tenstorrent Wormhole Series (September 2025), https://www.corsix.org/

- [5] Strang, G.: Linear algebra and its applications (2000)

- [6] Tenstorrent: Wormhole Tensix Processor (August 2025), https://cdn.sanity.io/files/jpb4ed5r/production/6217a901d675d37ccb3cf1e8ba74f91a9f992577.pdf