Factorization over interpolation: A fast continuous orthogonal matching pursuit

Abstract

We propose a fast greedy algorithm to compute sparse representations of signals from continuous dictionaries that are factorizable, i.e., whose atoms can be separated as a product of sub-atoms. Existing algorithms strongly reduce the computational complexity of the sparse decomposition of signals in discrete factorizable dictionaries. Separately, existing greedy algorithms perform off-the-grid decompositions by applying interpolation strategies to a discretization of continuous dictionaries. Our algorithm combines the factorization and interpolation concepts to enable low-complexity computation of continuous sparse representations of signals. Simulations of its application to a radar system highlight its efficiency.

Introduction

Computing sparse representations is beneficial in many application fields such as radar signal processing, communications, and remote sensing [1, 2, 3]. This computation relies on the assumption that a signal decomposes as a linear combination of a few atoms taken from a dictionary . In this paper, we focus on continuous parametric dictionaries [4, 5, 6], which associate each parameter from the continuous parameter set with an atom . Thereby, the decomposition of reads

| (1) |

where for all , and .

In some applications [7, 8, 9], the atoms factorize as a product of sub-atoms, each depending on a distinct set of components of . In that case, greedy algorithms such as those presented in [10, 11] can leverage this property to strongly reduce the computational complexity of the decomposition.

These approaches, however, rely on a discretization of and assume that the parameters lie on the resulting grid [12]. Yet, parameter estimates obtained from such discretized models suffer from grid errors [13]. Although a denser grid reduces this effect, it sharply increases the dimensionality of the problem to solve. Continuous reconstruction algorithms do not require such dense grids, since they estimate the parameters off the grid [14, 15, 16]. In [17], a continuous version of Basis Pursuit is derived by building an interpolated model that approximates the atoms. In [18], the authors designed the Continuous Orthogonal Matching Pursuit (COMP) from the same interpolation concept.
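To make the grid-error argument concrete, the sketch below estimates a single off-grid parameter by picking the best-correlated atom on a grid of G points, as the selection step of an on-the-grid OMP would. The atom family (unit-norm complex exponentials indexed by a normalized frequency), the signal length, and the grid sizes are hypothetical choices for illustration only. It shows that the error is bounded by half the grid spacing and shrinks only as the number of atoms grows.

```python
import numpy as np

def atom(theta, n):
    """Hypothetical unit-norm atom: complex exponential with normalized frequency theta."""
    return np.exp(2j * np.pi * theta * np.arange(n)) / np.sqrt(n)

def on_grid_estimate(y, grid, n):
    """Return the grid value whose atom correlates best with y (one on-the-grid selection)."""
    dictionary = np.stack([atom(t, n) for t in grid], axis=1)  # n x G matrix of atoms
    scores = np.abs(dictionary.conj().T @ y)
    return grid[np.argmax(scores)]

n = 64
theta_true = 0.1234  # deliberately off every grid below
y = atom(theta_true, n)

results = {}
for G in (64, 256, 1024):
    grid = np.arange(G) / G  # uniform grid on [0, 1) with spacing 1/G
    est = on_grid_estimate(y, grid, n)
    results[G] = abs(est - theta_true)
    print(f"G={G:5d}  |error|={results[G]:.2e}  half spacing={0.5 / G:.2e}")
```

Each fourfold refinement of the grid multiplies the dictionary size by four, while the error can only fall below the half spacing of the new grid; this is the cost that off-the-grid methods try to avoid.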

We propose a Factorized COMP (F-COMP) that combines the concepts of interpolation and factorization to enable fast and accurate reconstruction of sparse signals. We apply our algorithm to the estimation of the ranges and speeds of targets using a Frequency Modulated Continuous Wave (FMCW) radar. Our simulations confirm the advantage of using coarse grids with off-the-grid algorithms over denser grids with on-the-grid algorithms.

Notations:

Matrices and vectors are denoted by bold uppercase and lowercase symbols, respectively. Tensors are denoted by bold calligraphic uppercase letters. The outer product is , is the Frobenius norm, , , and is the speed of light.

Problem Statement

We consider the problem of estimating the values of parameters from a measurement vector . This measurement is assumed to decompose as (1), with . The parameters are known to lie in a separable parameter domain such that with for each . For all , decomposes as

| (2) |

with for all . In (1), the atoms are taken from a continuous dictionary defined by .

In this paper, we consider the particular case of dictionaries of atoms that factorize in sub-atoms. More precisely, introducing the tensor reshaping

| (3) |

with , for all and , we assume that the atom decomposes as

| (4) |

In (4), each is a sub-atom taken from the continuous dictionary . In the tensor reshaped domain, the decomposition (1) becomes

| (5) |

where is the tensor-shaped measurement, i.e., .

Recovering the parameters from the factorized model (5) can be made fast. For instance, in [10, 11], the authors consider an adaptation of OMP, which we call Factorized OMP (F-OMP), that leverages the decomposition (4) to reduce the dimensionality of the recovery problem. Yet, F-OMP only enables the estimation of on-the-grid parameters taken from a finite discrete set of parameters. In the next section, we build a model on the same grid that enables the greedy estimation of off-the-grid parameters while similarly leveraging the factorization.
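The saving exploited by F-OMP-style methods can be checked numerically. Assuming, for illustration, two factors whose atoms combine as Kronecker products (which corresponds to a row-major reshape of the measurement into an N1 x N2 matrix), the correlations of a signal with all G1·G2 grid atoms can be computed with two small matrix products instead of one product with the full (N1·N2) x (G1·G2) dictionary. The exponential sub-dictionaries, the sizes, and the helper name `exp_dictionary` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N1, N2, G1, G2 = 16, 12, 40, 30

def exp_dictionary(n, grid):
    """Hypothetical sub-dictionary: one unit-norm complex exponential per grid value."""
    return np.exp(2j * np.pi * np.outer(np.arange(n), grid)) / np.sqrt(n)

A1 = exp_dictionary(N1, np.arange(G1) / G1)  # N1 x G1
A2 = exp_dictionary(N2, np.arange(G2) / G2)  # N2 x G2
y = rng.standard_normal(N1 * N2) + 1j * rng.standard_normal(N1 * N2)

# Naive: correlate y with the full (N1*N2) x (G1*G2) dictionary of Kronecker atoms.
full = np.kron(A1, A2)
corr_full = (full.conj().T @ y).reshape(G1, G2)

# Factorized: reshape y (row-major) into an N1 x N2 matrix and contract each mode.
Y = y.reshape(N1, N2)
corr_fact = A1.conj().T @ Y @ A2.conj()

print(corr_fact.shape, np.max(np.abs(corr_full - corr_fact)))
```

The naive product costs on the order of N1·N2·G1·G2 multiplications, whereas the two mode products cost about G1·N1·N2 + G1·N2·G2, so the gap widens as the grids get denser.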

Factorization over Interpolation

Starting from the general non-factorized model (1), Continuous OMP (COMP) [18] extends OMP and succeeds in greedily estimating off-the-grid parameters. COMP operates with a parameter grid obtained by sampling . The atoms of the continuous dictionary are approximated by a linear combination of several atoms defined on the grid. This combination interpolates, from the grid, the dictionary atoms whose parameters lie off the grid. Our algorithm, F-COMP, applies the same interpolation concept to the atoms , which factorize as in (4).
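The interpolation idea can be illustrated in one dimension with an order-1 Taylor pattern, the scheme used later in this paper following [17, 18]: the interpolation atoms are the nearest grid atom and its derivative, weighted by 1 and by the offset δ = θ − θ_g from the nearest grid point θ_g. The sketch assumes a hypothetical complex-exponential atom with an analytic derivative, and compares the interpolation error with simply snapping to the nearest grid atom.

```python
import numpy as np

n = 64
k = np.arange(n)

def atom(theta):
    """Hypothetical unit-norm atom a(theta) = exp(2j*pi*theta*k) / sqrt(n)."""
    return np.exp(2j * np.pi * theta * k) / np.sqrt(n)

def atom_derivative(theta):
    """Analytic derivative da/dtheta of the atom above."""
    return 2j * np.pi * k * atom(theta)

def taylor_interpolate(theta, grid):
    """Order-1 Taylor interpolation of a(theta) from the nearest grid point."""
    g = grid[np.argmin(np.abs(grid - theta))]
    basis = np.stack([atom(g), atom_derivative(g)], axis=1)  # interpolation atoms
    coeffs = np.array([1.0, theta - g])  # coefficients set by the Taylor pattern
    return basis @ coeffs, g

grid = np.arange(4 * n) / (4 * n)  # grid spacing 1/(4n)
theta = 0.1234
approx, g = taylor_interpolate(theta, grid)
nearest_err = np.linalg.norm(atom(theta) - atom(g))
taylor_err = np.linalg.norm(atom(theta) - approx)
print(f"nearest-grid error {nearest_err:.3f}, order-1 Taylor error {taylor_err:.3f}")
```

The interpolation atoms are fixed by the grid, and only the coefficients depend on the off-grid parameter, which is what lets a greedy solver work with a finite set of atoms.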

Let us define the separable grid such that , with for all . We propose a “factorization over interpolation” strategy where each off-the-grid atom is interpolated by on-the-grid atoms

| (6) |

In (6), the indices depend on the interpolation scheme and on , and each is the -th interpolation atom associated with the -th element of the grid . The coefficients are obtained from

| (7) |

where is a function determined by the chosen interpolation pattern [17, 18]. In this scheme, for all , we decompose the global interpolation atoms using interpolation sub-atoms denoted by , i.e.,

| (8) |

The factorization (8) follows from the properties of the interpolating dictionaries. This is the case, for instance, for the dictionaries describing the chirp-modulated FMCW radar signals detailed in Sec. 5. From such signals, we can efficiently estimate off-the-grid values of using the Factorized Continuous OMP described in the next section.
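For an order-1 Taylor pattern, the factorization (8) comes from the product rule: if an atom is a Kronecker product of two sub-atoms, its partial derivative with respect to the first parameter is the Kronecker product of the first sub-atom's derivative with the second sub-atom, and vice versa. The sketch below checks this against finite differences and uses the resulting factorized interpolation atoms to approximate an off-grid atom. The two-factor exponential sub-atoms, the grid point, and the offsets are hypothetical.

```python
import numpy as np

N1, N2 = 16, 12

def sub_atom(theta, n):
    """Hypothetical unit-norm sub-atom (complex exponential)."""
    return np.exp(2j * np.pi * theta * np.arange(n)) / np.sqrt(n)

def sub_atom_derivative(theta, n):
    """Analytic derivative of the sub-atom with respect to theta."""
    return 2j * np.pi * np.arange(n) * sub_atom(theta, n)

def atom(t1, t2):
    """Global atom as a Kronecker product of the two sub-atoms."""
    return np.kron(sub_atom(t1, N1), sub_atom(t2, N2))

def taylor_atoms(g1, g2):
    """Order-1 Taylor interpolation atoms at grid point (g1, g2), built from sub-atoms only."""
    a1, a2 = sub_atom(g1, N1), sub_atom(g2, N2)
    d1, d2 = sub_atom_derivative(g1, N1), sub_atom_derivative(g2, N2)
    return np.kron(a1, a2), np.kron(d1, a2), np.kron(a1, d2)

g1, g2 = 0.25, 0.40
a0, dt1, dt2 = taylor_atoms(g1, g2)

# Check the factorized partial derivatives against central finite differences.
h = 1e-6
fd1 = (atom(g1 + h, g2) - atom(g1 - h, g2)) / (2 * h)
fd2 = (atom(g1, g2 + h) - atom(g1, g2 - h)) / (2 * h)
deriv_err = max(np.max(np.abs(fd1 - dt1)), np.max(np.abs(fd2 - dt2)))

# Interpolate an off-grid atom from the factorized interpolation atoms.
t1, t2 = g1 + 0.004, g2 - 0.003
approx = a0 + (t1 - g1) * dt1 + (t2 - g2) * dt2
taylor_err = np.linalg.norm(atom(t1, t2) - approx)
nearest_err = np.linalg.norm(atom(t1, t2) - a0)
print(f"derivative check {deriv_err:.1e}; Taylor {taylor_err:.3f} vs nearest {nearest_err:.3f}")
```

Because every interpolation atom is itself a Kronecker product, correlations with all of them can be computed mode by mode, just like for the grid atoms in F-OMP.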

Factorized Continuous OMP

| (10) |

Alg. 1 formulates F-COMP for a generic interpolation scheme. F-COMP leverages the factorized interpolated model (6) to estimate off-the-grid parameters at a lower complexity than COMP [18]. It follows the same steps as COMP and greedily minimizes . In Alg. 1, we use to denote and , where estimates .
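The loop below is a simplified sketch in the spirit of Alg. 1, not a reproduction of it. It assumes two factors, hypothetical complex-exponential sub-atoms, an order-1 Taylor pattern, and an unconstrained least-squares fit in place of the constrained solver used by COMP [18]. The selection step correlates the residual with all grid atoms through the factorized contraction, every interpolation atom is a Kronecker product of sub-atoms, and each offset is read off as the real part of the derivative coefficient divided by the atom coefficient, clipped to half a grid step. Centring the sample indices is a design choice of this sketch: it makes the phase expansion symmetric around the middle sample, which tends to reduce the bias of the order-1 offset estimate. All names are illustrative.

```python
import numpy as np

def sub_atoms(n, thetas):
    """Hypothetical unit-norm exponential sub-atoms with centred indices, and their derivatives."""
    k = np.arange(n) - (n - 1) / 2
    atoms = np.exp(2j * np.pi * np.outer(k, thetas)) / np.sqrt(n)
    return atoms, 2j * np.pi * k[:, None] * atoms

def fcomp_sketch(Y, grid1, grid2, n_atoms):
    """Greedy off-the-grid estimation with factorized selection and order-1 Taylor interpolation."""
    N1, N2 = Y.shape
    A1, D1 = sub_atoms(N1, grid1)
    A2, D2 = sub_atoms(N2, grid2)
    half1, half2 = (grid1[1] - grid1[0]) / 2, (grid2[1] - grid2[0]) / 2
    y = Y.reshape(-1)
    residual = Y.copy()
    chosen, columns = [], []
    for _ in range(n_atoms):
        # Factorized selection: correlations with all grid atoms via two mode products.
        corr = np.abs(A1.conj().T @ residual @ A2.conj())
        for i, j in chosen:
            corr[i, j] = -np.inf  # do not select the same grid point twice
        i, j = np.unravel_index(np.argmax(corr), corr.shape)
        chosen.append((i, j))
        # Interpolation atoms of the selected grid point, each a Kronecker product of sub-atoms.
        columns += [np.kron(A1[:, i], A2[:, j]),
                    np.kron(D1[:, i], A2[:, j]),
                    np.kron(A1[:, i], D2[:, j])]
        Phi = np.stack(columns, axis=1)
        beta = np.linalg.lstsq(Phi, y, rcond=None)[0]  # joint fit over all selected points
        residual = (y - Phi @ beta).reshape(N1, N2)
    estimates = []
    for p, (i, j) in enumerate(chosen):
        c, c_d1, c_d2 = beta[3 * p:3 * p + 3]  # approximately [c, c*delta1, c*delta2]
        d1 = np.clip(np.real(c_d1 / c), -half1, half1)
        d2 = np.clip(np.real(c_d2 / c), -half2, half2)
        estimates.append((grid1[i] + d1, grid2[j] + d2))
    return estimates

# Usage: two off-grid components on grids with spacing 1/32 in each dimension.
N1 = N2 = 16
grid1 = np.arange(-N1, N1) / (2 * N1)
grid2 = np.arange(-N2, N2) / (2 * N2)
targets = [(0.105, -0.19, 1.0), (-0.27, 0.31, 0.7 * np.exp(0.5j))]
y = sum(c * np.kron(sub_atoms(N1, [t1])[0][:, 0], sub_atoms(N2, [t2])[0][:, 0])
        for t1, t2, c in targets)
estimates = fcomp_sketch(y.reshape(N1, N2), grid1, grid2, n_atoms=2)
for est in estimates:
    print(np.round(est, 4))
```

In this noiseless toy case both components are recovered well inside one grid cell, whereas snapping to the grid would leave an error of about a third of the grid spacing in the first parameter.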

Application to Radars

We applied F-COMP to the estimation of the ranges and radial speeds of point targets using a Frequency Modulated Continuous Wave (FMCW) radar that emits chirp-modulated waveforms. The model is similar to the one provided in [9]. The received radar signal is coherently demodulated and sampled at a regular sampling rate , with samples acquired per chirp and chirps acquired. Under a few assumptions on the radar system [9, 19, 20], the resulting sampled measurement vector is approximated for , and by

| (11) |

where . In (11), and are, respectively, the bandwidth and the carrier frequency of the transmitted waveform. Given (11), the measurement is reshaped as explained in Sec. 2 and expressed by (5) and (4), where ,

| (12) |

| (13) |

In our application, we used an order-1 Taylor interpolation, as described in [17, 18], to implement (6) and (8).

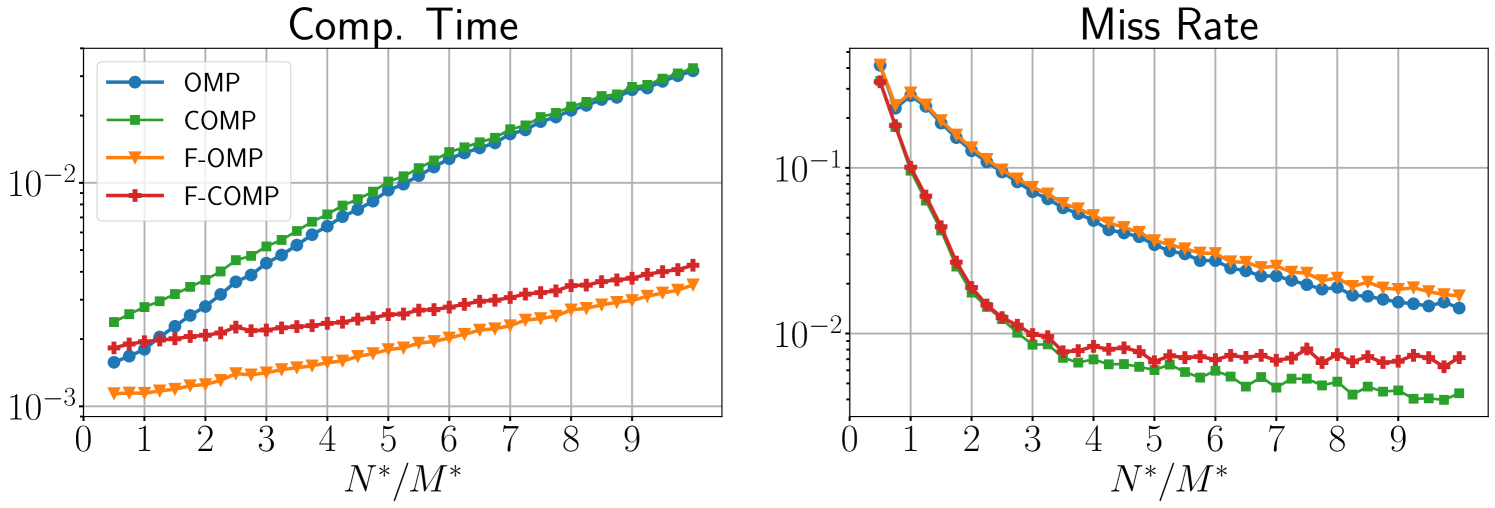

The radar model (11) is an approximation of the exact radar signal that enables the use of F-COMP. With the exact model, COMP can be used, and we expect more accurate estimates of ranges and speeds at a higher computation time than F-COMP. This is observed in Fig. 1, where an estimate counts as a miss when . The factorized algorithms (F-OMP and F-COMP) are faster but miss more often than their non-factorized counterparts (OMP and COMP). The continuous algorithms have a lower miss rate because they are not affected by grid errors. F-COMP offers the best trade-off between performance and computation time for most of the miss-rate (MR) values it can reach.
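The role of this approximation can be illustrated with a commonly used dechirped chirp-sequence phase model, assumed here for illustration and possibly different in detail from (11): sample n of chirp m from a point target at range R and radial speed v has phase 4π(f_c + Bn/N)(R + v m T_r)/c, where T_r is a hypothetical chirp repetition period. Dropping the range-speed coupling term 4πBnvmT_r/(Nc) turns the N x M measurement matrix into an outer product of a range sub-atom and a speed sub-atom (rank one per target), which is the structure the factorized algorithms require; the exact matrix is not rank one. The helper `chirp_matrix` and all numerical values are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light [m/s]

def chirp_matrix(R, v, B, fc, N, M, Tr, coupled=True):
    """N x M phase-only measurement of one point target (hypothetical model, see lead-in)."""
    n = np.arange(N)[:, None]  # fast time: sample index within a chirp
    m = np.arange(M)[None, :]  # slow time: chirp index
    if coupled:
        phase = 4 * np.pi / C * (fc + B * n / N) * (R + v * m * Tr)
    else:
        # Same expansion without the range-speed coupling term B*n*v*m*Tr/N
        phase = 4 * np.pi / C * (fc * R + fc * v * m * Tr + B * n * R / N)
    return np.exp(1j * phase)

params = dict(R=10.0, v=15.0, B=200e6, fc=24e9, N=64, M=64, Tr=100e-6)
ratios = {}
for coupled in (True, False):
    s = np.linalg.svd(chirp_matrix(coupled=coupled, **params), compute_uv=False)
    ratios[coupled] = s[1] / s[0]  # zero for an exact outer product (rank one)
    print(f"coupled={coupled}: sigma2/sigma1 = {ratios[coupled]:.2e}")
```

Under these assumptions, the decoupled matrix is rank one up to rounding, while the coupled one has a clearly non-zero second singular value; this is why the factorized model trades some accuracy for speed.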

Conclusion

In this work, we designed the Factorized Continuous OMP, which leverages the factorized structure of dictionaries to efficiently compute continuous sparse representations. We proposed an implementation of the algorithm for a practical radar application. Although this implementation remains simple, our simulations showed that F-COMP offers the best trade-off between performance and computation time. In future work, we may investigate extending more sophisticated, higher-order interpolation schemes to factorizable dictionaries.

References

- [1] R. Baraniuk and P. Steeghs. Compressive radar imaging. In 2007 IEEE Radar Conference, pages 128–133, April 2007.

- [2] L. Zheng and X. Wang. Super-resolution delay-Doppler estimation for OFDM passive radar. IEEE Transactions on Signal Processing, 65(9):2197–2210, May 2017.

- [3] Y. D. Zhang, M. G. Amin, and B. Himed. Sparsity-based DOA estimation using co-prime arrays. In IEEE International Conference on Acoustics, Speech, and Signal Processing, May 2013.

- [4] R. Gribonval. Fast matching pursuit with a multiscale dictionary of Gaussian chirps. IEEE Transactions on Signal Processing, 49:994–1001, June 2001.

- [5] R. M. Figueras i Ventura, P. Vandergheynst, and P. Frossard. Low-rate and flexible image coding with redundant representations. IEEE Transactions on Image Processing, 15(3):726–739, March 2006.

- [6] L. Jacques and C. De Vleeschouwer. A geometrical study of matching pursuit parametrization. IEEE Transactions on Signal Processing, 56:2835–2848, Aug 2008.

- [7] V. Winkler. Range Doppler detection for automotive FMCW radars. Pages 166–169, Nov 2007.

- [8] S. Lutz, D. Ellenrieder, T. Walter, and R. Weigel. On fast chirp modulations and compressed sensing for automotive radar applications. In 2014 15th International Radar Symposium (IRS), pages 1–6, June 2014.

- [9] T. Feuillen, A. Mallat, and L. Vandendorpe. Stepped frequency radar for automotive application: Range-doppler coupling and distortions analysis. In MILCOM 2016 - 2016 IEEE Military Communications Conference, pages 894–899, Nov 2016.

- [10] S. Zubair and W. Wang. Tensor dictionary learning with sparse Tucker decomposition. Pages 1–6, July 2013.

- [11] Y. Fang, B. Huang, and J. Wu. 2D sparse signal recovery via 2D orthogonal matching pursuit. Science China Information Sciences, 55, Jan 2011.

- [12] E. J. Candes and M. B. Wakin. An introduction to compressive sampling. IEEE Signal Processing Magazine, 25(2):21–30, March 2008.

- [13] H. Azodi, C. Koenen, U. Siart, and T. Eibert. Empirical discretization errors in sparse representations for motion state estimation with multi-sensor radar systems. Pages 1–4, April 2016.

- [14] G. Tang, B. N. Bhaskar, P. Shah, and B. Recht. Compressed sensing off the grid. IEEE Transactions on Information Theory, 59(11):7465–7490, Nov 2013.

- [15] K. V. Mishra, M. Cho, A. Kruger, and W. Xu. Super-resolution line spectrum estimation with block priors. pages 1211–1215, Nov 2014.

- [16] Y. Traonmilin and J-F. Aujol. The basins of attraction of the global minimizers of the non-convex sparse spikes estimation problem. ArXiv, abs/1811.12000, 2018.

- [17] C. Ekanadham, D. Tranchina, and E. P. Simoncelli. Recovery of sparse translation-invariant signals with continuous basis pursuit. IEEE Transactions on Signal Processing, 59(10):4735–4744, Oct 2011.

- [18] K. Knudson, J. Yates, A. Huk, and J. Pillow. Inferring sparse representations of continuous signals with continuous orthogonal matching pursuit. Advances in Neural Information Processing Systems, 27, April 2015.

- [19] Y. Liu, H. Meng, G. Li, and X. Wang. Velocity estimation and range shift compensation for high range resolution profiling in stepped-frequency radar. IEEE Geoscience and Remote Sensing Letters, 7:791–795, Nov 2010.

- [20] H. Bao. The research of velocity compensation method based on range-profile function. International Journal of Hybrid Information Technology, 7:49–56, March 2014.