FAQS: Communication-efficient Federate DNN Architecture and Quantization Co-Search for personalized Hardware-aware Preferences

Abstract

Due to user privacy and regulatory restrictions, federated learning (FL) has been proposed as a distributed learning framework for training deep neural networks (DNNs) on decentralized data clients. Recent advancements in FL have applied Neural Architecture Search (NAS) to replace the predefined one-size-fits-all DNN model, which is not optimal for all tasks with various data distributions, with searchable DNN architectures. However, previous methods suffer from the expensive communication cost raised by the frequent transmission of large model parameters between the server and clients. This difficulty is further amplified when NAS algorithms are combined, since they commonly require prohibitive computation and enormous model storage. To this end, we propose FAQS, an efficient personalized FL-NAS-Quantization framework that reduces the communication cost with three features: weight-sharing super kernels, bit-sharing quantization and masked transmission. FAQS has an affordable search time and demands a very limited size of transmitted messages in each round. By setting different personalized Pareto loss functions on local clients, FAQS can yield heterogeneous hardware-aware models for various user preferences. Experimental results show that FAQS achieves an average reduction of 1.58x in communication bandwidth per round compared with the normal FL framework and 4.51x compared with the FL+NAS framework.

Index Terms:

Federated Learning, Neural Architecture Search, Quantization, Communication-efficiency

I Introduction

Federated Learning (FL) is a promising distributed training approach for deep neural networks (DNNs) when decentralized data must be processed under user privacy and regulatory restrictions [1]. It allows multiple data clients to collaboratively train a global predefined model by exchanging intermediate parameters (e.g., the weights and biases) while keeping the raw local data samples private. As such, FL has been extensively applied in various challenging machine learning tasks such as data mining, computer vision and natural language processing. Despite its widespread adoption, one major challenge in FL is data heterogeneity, which means that the data distributions across clients are not independent and identically distributed (non-IID) in nature. As a result, it is difficult to manually design optimization schemes for predefined model architectures.

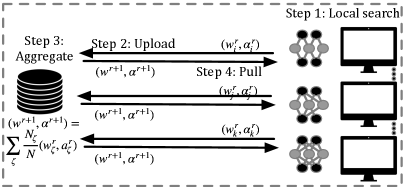

In order to relax this constraint, recent research has combined FL with Neural Architecture Search (NAS) algorithms to automatically generate high-performance models instead of exploiting predefined architectures [2]-[5]. As shown in Fig. 1, the common NAS-based FL framework [2] can be divided into 4 steps. First, a NAS agent is utilized as a client searcher to generate the network weights and the neural architecture parameters with several epochs of local training in each round. Second, every client node uploads its weights and architecture parameters to the central server. Third, the server aggregates all parameters to obtain a global version of the weights and architecture parameters. Finally, the clients pull the updated parameters for the following round of searching. However, the current framework faces two challenges. First, the NAS algorithms conducted on local clients require prohibitive computation and enormous model storage, which makes parameter transmission extremely expensive, because running many rounds of training on edge devices results in a huge communication cost between the server and clients. Second, it still searches for a unified global model, which may not perform optimally on all local clients.
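The four steps above can be sketched as a single communication round. This is a minimal illustration rather than the implementation of [2]: the parameter names `w` and `alpha`, the helper `local_search` (a stand-in that merely shifts every value) and plain unweighted averaging are assumptions made for the example.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def local_search(params: Params, step: float) -> Params:
    """Hypothetical stand-in for step 1: a real client would run several
    epochs of NAS training; here every value is simply shifted by `step`."""
    return {name: [v + step for v in values] for name, values in params.items()}


def aggregate(uploads: List[Params]) -> Params:
    """Step 3: element-wise average of the uploaded parameters."""
    n = len(uploads)
    return {
        name: [sum(u[name][i] for u in uploads) / n for i in range(len(values))]
        for name, values in uploads[0].items()
    }


def run_round(global_params: Params, client_steps: List[float]) -> Params:
    # Steps 1-2: each client searches locally, then uploads its result.
    uploads = [local_search(global_params, s) for s in client_steps]
    # Step 3: the server aggregates; step 4: clients pull the returned value.
    return aggregate(uploads)
```

Both the weights and the architecture parameters travel in both directions in every round, which is where the communication cost discussed in this paper comes from.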

Based on the previous observations, we propose FAQS, an efficient personalized FL-NAS-Quantization Co-Search framework that reduces the communication cost in three dimensions. First, we adopt the single-path NAS algorithm [12] as our search agent. It features a weight-sharing super kernel, which slims the redundant supernet down to a size similar to that of a normal network. Second, the network parameters are further compressed with mixed-precision bit-sharing quantization, which is conducted jointly with the neural architecture search to avoid extra search time. Third, only a part of the local network is transmitted, using masked parameters, when uploading or pulling, which saves a considerable amount of communication. All these factors contribute to generating personalized local models in a communication-efficient manner. In addition, FAQS can satisfy heterogeneous hardware-aware preferences by setting various personalized Pareto functions on different local clients.

The contributions of this work can be summarized as follows:

- We propose a communication-efficient personalized FL-NAS-Quantization framework to reduce the transmission cost between the server and clients. To the best of our knowledge, this is the first work to jointly optimize the NAS and quantization policy in an FL framework.

- We identify and leverage three techniques, namely the weight-sharing super kernel, bit-sharing quantization and masked transmission, to achieve an average reduction of 1.58x in communication bandwidth per round compared with the normal FL framework and 4.51x compared with the FL+NAS framework.

- We demonstrate that FAQS can yield heterogeneous hardware-aware models for various user preferences by setting different personalized Pareto functions on accuracy, latency and model size.

II Motivation

II-A Differential Neural Architecture Search for FL

Neural Architecture Search has attracted great research interest for automatically generating high-performance DNNs without human bias. Current NAS algorithms are typically based on three methods: Reinforcement Learning (RL) [6]-[10], Evolutionary Algorithms (EA) [16], [17] and gradient-based differentiable supernets (GD) [11]-[15]. Both RL and EA require an enormous search time to find the best operations due to ineffective search direction proposals. GD-based methods, in contrast, dramatically decrease the search time by formulating a differentiable search space, and have gained momentum in recent FL research on searching for efficient DNNs. Direct Federated NAS [3] utilizes DSNAS [21] with the federated averaging algorithm to search for a unified model. FedNAS [2] combines MiLeNAS [13] with the federated averaging algorithm to search for a global model. [22] explores the concept of differential privacy with DARTS [11] to analyze the trade-off between accuracy and privacy of a global model. However, all these methods face two challenges. First, prior research is based on differentiable multi-path NAS algorithms, which search over a supernet that encompasses all candidate architecture paths. Although this reduces the total number of search epochs to an acceptable quantity, the number of candidate paths grows exponentially with respect to the number of hyper-parameter types, which makes the whole supernet overweight. As a result, every client needs to transmit a very large local model to the central server in each round, which leads to a huge communication cost. Second, existing methods converge on the architecture and weight parameters of a single unified model. As a result, there can only be one predictive outcome, which may not perform optimally on the tasks of all local clients.

II-B Joint optimization on quantization and NAS

In order to achieve higher energy efficiency under a limited resource budget, DNNs must be carefully designed in two steps: the architecture design and the choice of quantization policy. However, taking the two steps separately is time-consuming and leads to a sub-optimal final deployment [20]. Recent research has attempted joint optimization of both quantization and NAS. [20] utilizes an RL agent based on a multi-objective evolutionary search algorithm to search for models that balance model size and accuracy. [19] formulates the co-search problem by fusing DNN search variables and hardware implementation variables (quantization and other implementation choices) into one solution space, and maximizes both algorithm accuracy and hardware implementation quality. [18] proposes a co-exploration framework that parameterizes the layer-wise quantization and searches these parameters jointly with the hyperparameters of the architecture. However, prior methods lack search efficiency. [18] and [20] simply wrap an outer loop around the original NAS framework to handle the quantization selection, and [19] treats the quantization policy as another multi-path dimension, which increases both the search time and the model size.

III FAQS Framework

In this section, we introduce the entire FAQS framework, an efficient personalized FL-NAS-Quantization Co-Search framework that reduces the communication cost between the server and clients. We first introduce the problem definition, including the FAQS problem formulation and the communication cost. Next, we explain the three key techniques used in our framework to reduce the communication cost in different dimensions. Finally, we give an overall explanation of the FAQS algorithm.

III-A Problem Definition

FAQS problem formulation: Mathematically, FAQS can be represented by the following optimization problem. In FAQS, the objective is to find a personalized model for each device that performs well on its local data distribution (we assume each client has the same number of samples for simplicity):

$$\min_{\theta_1,\dots,\theta_K}\ \frac{1}{K}\sum_{k=1}^{K}\mathcal{L}_k(\theta_k;\, D_k,\, \lambda_k) \tag{1}$$

where $K$ is the number of clients and $\theta_k = (w_k, t_k)$ denotes the trainable parameters of client $k$, including the weights $w_k$ and the architecture thresholds $t_k$. $D_k$ represents the local data of client $k$. $\mathcal{L}_k$ is the personalized loss function of client $k$ with softened Pareto coefficients $\lambda_k$ [19] on accuracy (measured by the cross-entropy loss), latency and model size. We utilize the differentiable latency and model-size losses used in [27], which take the weighted sum of the latency or model size of each block type as the final latency and model-size result for end-to-end training.

Communication Cost: The total communication cost (in bits) transmitted between the server and the clients over all rounds in FAQS can be formulated as:

$$C = 2\sum_{r=1}^{R}\sum_{k=1}^{K}\sum_{l=1}^{L} p_{k,l}^{r}\; N(w_{k,l}, t_{k,l})\; b_{k,l}^{r} \tag{2}$$

where $R$ represents the total number of FL rounds, $K$ is the number of clients involved in the collaborative training and $L$ is the total number of layers of a client. $w$ and $t$ are the learnable weights and architecture parameters, respectively. FAQS generates specific architectures based on thresholds instead of the weighted path-level coefficients used in multi-path NAS [2]. $p$ represents the proportion of the network parameters that are not masked during message transmission. $N$ is the total number of parameters, including weights (or gradients) and architecture parameters, while $b$ represents the number of bits per parameter of layer $l$ in client $k$. Since each round contains parameter pulling (from the server to the clients) and uploading (from the clients to the server), the communication cost is multiplied by 2 in the final result. For a better understanding of these parameters, we compare FAQS with FedAvg [26] and FedNAS+EDD [2], [19] (our implementation of EDD with FedNAS) on each variable. As shown in Table I, we focus on a single multi-path MobileNetV2 block [23], which is the basic searchable unit in [2] and [19]. By adopting the single-path weight-sharing super kernel and bit-sharing quantization, both the total number of parameters $N$ and the average number of quantization bits $b$ are reduced in FAQS. $p$ represents the percentage of the parameters that are actually transmitted. FedAvg and FedNAS+EDD both transmit the entire network for full training, while FAQS masks part of the network, inspired by [25]. In other words, FAQS only transmits the crucial parts that affect the gradient aggregation, hence $p$ can be decreased.

| Method | Transmitted proportion | Parameters | Bits per parameter | Cost (KB) | Personalized |
|---|---|---|---|---|---|
| FedAvg(MBV2) | 1 | 9k | 32(float) | 72 | no |
| FedNAS+EDD | 1 | 25k | 28(quant) | 175 | yes |
| FAQS(ours) | 0.51 | 9k | 16(quant) | 18.4 | yes |
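The cost column of Table I can be reproduced from the other three columns. The check below is a sketch under two assumptions that the table does not state explicitly: "9k" and "25k" mean 9,000 and 25,000 parameters, and 1 KB is 1,000 bytes. The factor of 2 accounts for pulling and uploading in the same round.

```python
def block_cost_kb(proportion: float, num_params: int, bits: float) -> float:
    """Pull + upload cost of one block in KB (assuming 1 KB = 1000 bytes)."""
    total_bits = 2 * proportion * num_params * bits
    return total_bits / 8 / 1000


# (transmitted proportion, number of parameters, bits per parameter)
rows = {
    "FedAvg(MBV2)": (1.0, 9_000, 32),
    "FedNAS+EDD": (1.0, 25_000, 28),
    "FAQS(ours)": (0.51, 9_000, 16),
}
for name, (p, n, b) in rows.items():
    print(f"{name}: {block_cost_kb(p, n, b):.1f} KB")
```

Under these assumptions the three rows evaluate to 72.0, 175.0 and 18.4 KB, matching the table.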

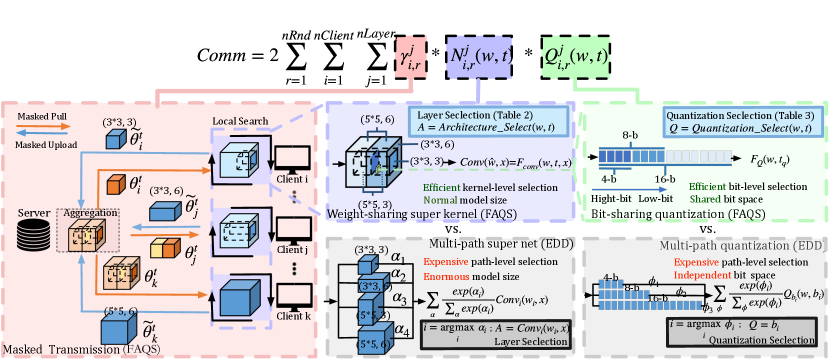

III-B Technique Details On Communication Reduce

As shown in Fig. 2, the FAQS framework adopts three techniques, each reducing a different parameter in Eq. 2, to generate personalized local models in a communication-efficient manner: the weight-sharing super kernel (top middle), bit-sharing quantization (top right) and masked transmission (left).

Weight-sharing super kernel: In this dimension, we reduce the total number of parameters in Eq. 2. The well-known differentiable multi-path NAS [11] (shown in Fig. 2, bottom middle) constructs and directly trains an over-parameterized network, named SuperNet, that contains all possible candidate paths. In contrast, the key idea of single-path NAS is to relax the architecture decisions over a weight-sharing over-parameterized kernel called the super kernel [12]. A super kernel has the maximum size among the candidate kernels. For MobileNetV2, it is a $5\times5$ kernel with an expansion ratio of 6. As shown in Fig. 2 (top middle), we divide the whole kernel into several parts. For the kernel size search, choosing $3\times3$ or $5\times5$ is equivalent to deciding whether we keep the subset $w_{5\times5\setminus3\times3}$ or not. For the expansion ratio and the skip operation, the decision is determined by whether we preserve the entire length, half of the length or none of the kernel.

$$w_k = w_{3\times3} + \sigma\big(g(w_{5\times5\setminus3\times3},\, t_{5\times5})\big)\cdot w_{5\times5\setminus3\times3} \tag{3}$$

$$\tilde{w} = \sigma\big(g(w_{k,3},\, t_{skip})\big)\cdot\Big(w_{k,3} + \sigma\big(g(w_{k,6\setminus3},\, t_{e})\big)\cdot w_{k,6\setminus3}\Big) \tag{4}$$

where $w_{3\times3}$ and $w_{5\times5\setminus3\times3}$ represent the weights of the $3\times3$ part and the $5\times5\setminus3\times3$ part of the original super kernel, and $w_k$ is the kernel shape selection output. $w_{k,3}$ and $w_{k,6\setminus3}$ represent the first and the second half of the expanded super kernel. $t_{5\times5}$, $t_{skip}$ and $t_{e}$ are three thresholds fed into three sigmoid functions $\sigma(\cdot)$ to decide whether we choose the subset $w_{5\times5\setminus3\times3}$, the skip operation and the expansion ratio, respectively. The original problem is a hard-decision problem, which can be softened by using these sigmoid functions. The function $g(\cdot)$ is used to calculate the indicator value that serves as the input of the sigmoid. We simply use the group Lasso term of the corresponding weight values and a threshold to make our decisions. For example, consider the following equation:

$$g(w_{5\times5\setminus3\times3},\, t_{5\times5}) = \lVert w_{5\times5\setminus3\times3}\rVert^{2} - t_{5\times5}$$

We can deduce from the result that if $g(\cdot)$ is much larger than zero, then most variables in $w_{5\times5\setminus3\times3}$ are likely to have important values, hence $\sigma(g(\cdot))$ is almost equal to 1. As a result, Eq. 3 yields a complete $5\times5$ kernel. By using this single-path NAS based on weight-sharing super kernels, the redundant supernet of multi-path NAS can be slimmed down to a size similar to that of a normal network, making the entire search agent light and efficient. The relationship between the block type and the indicator results is enumerated in Table II.

The final output of this layer can be calculated as a convolution of the transformed weight $\tilde{w}$ and the input $x$, or as a general function of the original weight $w$, the architecture thresholds $t$ and $x$.
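The soft kernel-size decision can be illustrated on a single 5x5 kernel. The sketch below assumes, following the single-path formulation of [12], that the indicator is the squared norm of the outer ring minus a threshold, and that a sigmoid of this indicator scales the ring. The function names and the threshold values used to exercise it are hypothetical.

```python
import math
from typing import List, Tuple

Kernel = List[List[float]]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def is_inner(i: int, j: int) -> bool:
    # The 3x3 kernel is the centre of the 5x5 super kernel.
    return 1 <= i <= 3 and 1 <= j <= 3


def soft_kernel_size(super_kernel: Kernel, threshold: float) -> Tuple[Kernel, float]:
    """Return the relaxed kernel and the gate applied to the outer ring."""
    ring = [super_kernel[i][j] for i in range(5) for j in range(5)
            if not is_inner(i, j)]
    # Assumed indicator: group-Lasso style squared norm minus the threshold.
    gate = sigmoid(sum(v * v for v in ring) - threshold)
    relaxed = [
        [v if is_inner(i, j) else gate * v for j, v in enumerate(row)]
        for i, row in enumerate(super_kernel)
    ]
    return relaxed, gate
```

When the ring carries large weights relative to the threshold, the gate approaches 1 and the layer behaves like a 5x5 kernel; when the ring is near zero, the gate approaches 0 and only the 3x3 centre remains, without storing a second kernel.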

Bit-sharing quantization: In this dimension, we focus on decreasing the average number of bits of all quantized variables in Eq. 2 when transmitting messages between the server and the clients. Quantization is a model compression method used when deploying DNNs. We follow the widely used quantization-aware training method [24], which uniformly rounds floating-point values to the nearest integer values based on the maximum and minimum representable values. We formulate the quantization process as follows:

$$\hat{w} = s^{-1}\big(Q(s(w),\, b)\big) \tag{5}$$

where $w$ is the real-valued weight vector, $\hat{w}$ is its approximate quantized value and $b$ is the number of bits. $s(\cdot)$ is a linear scaling function that normalizes the values of the vector into [0,1] and $s^{-1}(\cdot)$ is its inverse. In particular, $Q(\cdot,\cdot)$ is the quantization function, which takes the normalized real value and the number of bits and outputs the closest quantized value of the corresponding weight element. Different from the differentiable multi-path quantization policy in [19], which allocates independent bits to store each quantization policy (Fig. 2, bottom right), FAQS stores all quantization policies in a shared space. For example, for a (4, 8, 16)-bit search space, [19] takes 28 bits ($4+8+16$) to store each potential quantization choice independently, while FAQS only needs 16 fixed bits ($b=16$). As a result, about 42% of the model size can be saved on communication in FL. As shown in Fig. 2 (top right), instead of selecting a quantization policy out of independent multi-path quantization policies, the basic idea of bit-sharing is similar to that of weight-sharing super kernels. For each layer, the quantization policy is decided by whether or not we preserve bits 5-8 and bits 9-16 of a fixed 16-bit space.

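$$\hat{w}_{b} = \hat{w}_{1:4} + \sigma\big(g(\hat{w}_{5:8},\, t_{8})\big)\cdot\Big(\hat{w}_{5:8} + \sigma\big(g(\hat{w}_{9:16},\, t_{16})\big)\cdot \hat{w}_{9:16}\Big) \tag{6}$$

where $\hat{w}_{i:j}$ denotes bits $i$ to $j$ of the approximate quantized value $\hat{w}$ in Eq. 5 (left to right: high bit to low bit) and $\sigma(\cdot)$ is the sigmoid function. Similarly, $t_{8}$ and $t_{16}$ are two thresholds used as inputs of the respective indicator functions $g(\cdot)$ to determine whether we preserve bits 5-8 and bits 9-16. Similar to the weight-sharing super kernel above, consider the following function:

$$g(\hat{w}_{5:8},\, t_{8}) = \lVert \hat{w}_{5:8}\rVert^{2} - t_{8}$$

If $\lVert \hat{w}_{5:8}\rVert^{2}$ is much larger than the threshold $t_{8}$, most of these bits of the weights are non-zero. As a result, a quantization policy of at least 8 bits is needed for the fine-grained weight distribution. In contrast, when most of these bits are zero, we can approximately treat the original 16-bit weight as a 4-bit weight (bits 1-4). The relationship between the quantization policy and the indicator results is enumerated in Table III.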
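A minimal sketch of bit-sharing on integer codes: weights are first mapped to 16-bit codes with min/max uniform quantization, and a 4-, 8- or 16-bit policy then corresponds to keeping a prefix of the same code. The hard decision rule in `select_policy` (squared sum of the lower bit groups compared against thresholds) is an assumption standing in for the soft sigmoid relaxation, and all function names are hypothetical.

```python
from typing import List


def quantize_to_codes(weights: List[float], bits: int = 16) -> List[int]:
    """Scale weights into [0, 1] with min/max and round to integer codes."""
    lo, hi = min(weights), max(weights)
    levels = (1 << bits) - 1
    if hi == lo:
        return [0 for _ in weights]
    return [round((w - lo) / (hi - lo) * levels) for w in weights]


def keep_high_bits(code: int, keep: int) -> int:
    """Zero all but the `keep` most significant bits of a 16-bit code."""
    mask = ((1 << keep) - 1) << (16 - keep)
    return code & mask


def select_policy(codes: List[int], t8: float, t16: float) -> int:
    """Pick 4, 8 or 16 bits from the energy of the lower bit groups."""
    mid = sum(((c >> 8) & 0xF) ** 2 for c in codes)  # bits 5-8
    low = sum((c & 0xFF) ** 2 for c in codes)        # bits 9-16
    if mid <= t8:
        return 4
    if low <= t16:
        return 8
    return 16


# Storage per weight: independent policies vs. one shared 16-bit space.
independent_bits = 4 + 8 + 16
shared_bits = 16
saving = 1 - shared_bits / independent_bits
```

Because every policy is a prefix of the same 16-bit code, switching between 4, 8 and 16 bits never requires storing or transmitting a second copy of the weights.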

Masked transmission: In this part, we seek the opportunity to reduce the proportion of network parameters that is actually transmitted in Eq. 2. We are inspired by FedMask [25], which masks unimportant channels and only aggregates the overlapping weights, and the basic idea behind masked transmission is similar: we only transmit the important parts of the kernels generated by the local search agents. For example, as shown in Fig. 2 (left), for a particular layer in a given round, masked transmission means that we do not pull or upload the entire MBV2 block with the regressed values calculated by Eq. 3 and Eq. 4. Instead, discrete architectures are selected according to the mapping in Table II. In this example, three clients select three different MBV2 block shapes, where a block shape is defined by its kernel size $k$ and expansion ratio $e$. FAQS then only transmits the part of each block that matches the selected kernel shape and masks the other, unimportant values. In this way, a large amount of kernel weights can be left out and the transmitted proportion of the network parameters can be reduced substantially. When it comes to weight aggregation, only the overlapping parts of the kernels are aggregated. In the example, one part of the kernel is shared among all three clients, a second part is covered by the shapes of only two clients, and the remaining part is covered by a single client. As a result, the shared part is aggregated over all clients, the second part is aggregated and pulled only for the two clients that selected it, and the last part is kept exclusively by the one client that selected it. Since the complete block does not need to be transmitted for all clients in each round, the transmitted proportion is greatly reduced, yielding a communication-efficient FL framework.
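The aggregation rule can be sketched by treating each transmitted kernel part as a named entry. In this illustration the part names (`shared`, `extra`, `largest`) and the three example clients are hypothetical; the point is only that every part is averaged over the clients that actually transmitted it, and each client pulls back just the parts it selected.

```python
from typing import Dict, Iterable, List

Upload = Dict[str, List[float]]


def aggregate_masked(uploads: List[Upload]) -> Upload:
    """Average each kernel part over the clients that uploaded it."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for upload in uploads:
        for part, values in upload.items():
            if part not in sums:
                sums[part] = [0.0] * len(values)
                counts[part] = 0
            sums[part] = [a + b for a, b in zip(sums[part], values)]
            counts[part] += 1
    return {part: [v / counts[part] for v in total]
            for part, total in sums.items()}


def pull(global_parts: Upload, selected: Iterable[str]) -> Upload:
    """A client downloads only the parts of its selected block shape."""
    return {part: global_parts[part] for part in selected}


# Three hypothetical clients with nested block shapes.
uploads = [
    {"shared": [1.0]},
    {"shared": [2.0], "extra": [4.0]},
    {"shared": [3.0], "extra": [6.0], "largest": [9.0]},
]
merged = aggregate_masked(uploads)
```

Here `shared` is averaged over three clients, `extra` over two, and `largest` is returned unchanged to the single client that owns it.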

III-C Overall Algorithm

As shown in Algorithm 1, FAQS is generally a two-step algorithm. First, a two-level nested loop is adopted to generate personalized local models in a communication-efficient manner. Second, after the final epoch of local search and server aggregation, each local model is discretely selected for finetuning to yield the final model with its architecture, quantization policy and weights. To be more specific, FAQS first initializes each client with trainable parameters, including the weights and the architecture thresholds. In each round, every client conducts a local joint search over the neural architecture and the quantization policy. The local search function is simple. The original kernel weights are first quantized in a fixed 16-bit format using Eq. 5 and Eq. 6. Then the quantized weights are transformed into a regressed combination of the different parts of the kernel using Eq. 3 and Eq. 4. We regard this transformed weight as the direct form of the kernel weights; it is itself a function of the original weights and the thresholds. The personalized batch loss is a function of the transformed weight and the Pareto coefficients. As a result, we can directly conduct mini-batch gradient descent on the weights and the thresholds. Afterwards, we discretely select the masked weights of each layer according to Table II and transmit them to the server for aggregation. After the final round of local search, FAQS generates the architecture and quantization selection based on the current weights and thresholds according to Table II and Table III. Finetuning starts from the masked weights, and the final local model is generated with its architecture, quantization policy and weights.

| Model | Searched item | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | Top-1 accuracy (%) | Latency | Model size |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | block (k, e) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | (3,6) | 85.1 | 8.9 | 19.2 |
| | block (k, e) | (3,6) | (3,3) | (3,3) | (3,3) | (5,6) | (5,3) | (3,3) | (3,3) | (5,6) | (5,3) | (3,3) | (3,3) | (5,6) | (5,6) | (3,6) | (3,6) | 88.2 | 8.6 | 15.1 |
| | bits | 16 | 16 | 16 | 8 | 16 | 16 | 8 | 8 | 16 | 16 | 16 | 8 | 16 | 8 | 16 | 16 | | | |
| | block (k, e) | (5,6) | (5,3) | skip | skip | (5,6) | (5,6) | skip | skip | (5,6) | (5,6) | skip | skip | (5,6) | (5,6) | (5,6) | (5,6) | 83.6 | 5.2 | 15.4 |
| | bits | 16 | 16 | - | - | 16 | 16 | - | - | 16 | 16 | - | - | 16 | 16 | 16 | 16 | | | |
| | block (k, e) | (3,6) | (3,3) | (3,3) | skip | (3,6) | (3,6) | skip | (3,3) | (3,6) | (3,3) | (3,3) | (3,3) | (3,6) | (3,6) | (3,6) | (3,6) | 86.1 | 7.8 | 11.3 |
| | bits | 16 | 16 | 16 | - | 16 | 8 | - | 8 | 16 | 8 | 8 | 8 | 16 | 8 | 16 | 16 | | | |

IV Evaluations

Experiments are conducted on the standard classification benchmark to evaluate our method. We implement FAQS for distributed computing with nine nodes each equipped with a GPU (NVIDIA Tesla V100). We set up our experiment in a cross-silo setting for simplicity with one node representing the server and eight nodes representing the clients.

IV-A Experimental Setup

Dataset preparation: For a fair comparison, we select the CIFAR10 dataset for the image classification task, which is commonly used as a benchmark in FL and NAS [2], [19]. We generate non-IID data across clients by exploiting the LDA distribution [2] with its parameter set to 0.2.
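One common way to realize such a non-IID split is to draw, for every class, the share given to each client from a Dirichlet distribution. The sketch below is an assumption about the procedure, not the exact partitioning code of [2]; it uses only the standard library, and the function names and the seed are hypothetical. Note that it does not enforce equal client sizes.

```python
import random
from typing import Dict, List, Sequence


def dirichlet(alpha: float, size: int, rng: random.Random) -> List[float]:
    """Sample a symmetric Dirichlet vector via normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(size)]
    total = sum(draws)
    if total == 0.0:  # guard against an (extremely unlikely) underflow
        return [1.0 / size] * size
    return [d / total for d in draws]


def partition_non_iid(labels: Sequence[int], num_clients: int,
                      alpha: float, seed: int = 0) -> List[List[int]]:
    """Split sample indices across clients, class by class."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    clients: List[List[int]] = [[] for _ in range(num_clients)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        shares = dirichlet(alpha, num_clients, rng)
        start, cumulative = 0, 0.0
        for c in range(num_clients):
            cumulative += shares[c]
            # The last client takes the remainder so nothing is dropped.
            end = len(indices) if c == num_clients - 1 else min(
                len(indices), round(cumulative * len(indices)))
            clients[c].extend(indices[start:end])
            start = end
    return clients
```

A small parameter such as 0.2 concentrates each class on a few clients, so the local label distributions differ strongly; larger values move the split towards IID.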

Search space: For the neural architecture search space, our framework builds upon the hierarchical MBV2-like search space used in [23], [12], [14], [19]. The main goal is to decide the type of each mobile inverted bottleneck convolution (MBConv). To be more specific, an MBConv layer is defined by the kernel size $k$ of the depthwise convolution and by the expansion ratio $e$. In particular, we consider layers with kernel sizes of $\{3, 5\}$ and expansion ratios of $\{3, 6\}$. The backbone has 4 blocks, and each block consists of 4 groups of a point-wise 1*1 convolution, a k*k depthwise convolution and a linear 1*1 convolution. To reduce the complexity of the problem, we only quantize weights and assume that all layers within a group share the same quantization policy. The quantization choices are limited to (4, 8, 16) bits.
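To see why sharing one super kernel is lighter than keeping every candidate path, the weights of each candidate MBConv group can be counted directly from the three convolutions listed above. The channel width of 16 is a hypothetical value, and biases and normalization parameters are ignored.

```python
from itertools import product


def mbconv_weights(kernel: int, expansion: int, c_in: int, c_out: int) -> int:
    """Weights of a 1x1 expand, a kxk depthwise and a 1x1 linear convolution."""
    hidden = c_in * expansion
    pointwise = c_in * hidden
    depthwise = hidden * kernel * kernel
    linear = hidden * c_out
    return pointwise + depthwise + linear


candidates = list(product((3, 5), (3, 6)))  # (kernel size, expansion ratio)
channels = 16  # hypothetical width, chosen only for illustration

per_candidate = {ke: mbconv_weights(*ke, channels, channels) for ke in candidates}
multi_path = sum(per_candidate.values())  # every path stores its own weights
single_path = per_candidate[(5, 6)]       # all candidates live in the super kernel
```

With this width, a multi-path block stores 14,112 weights while the single-path super kernel stores 5,472, because the smaller candidates are subsets of the (5, 6) kernel rather than separate copies.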

IV-B Training and Communication Efficiency

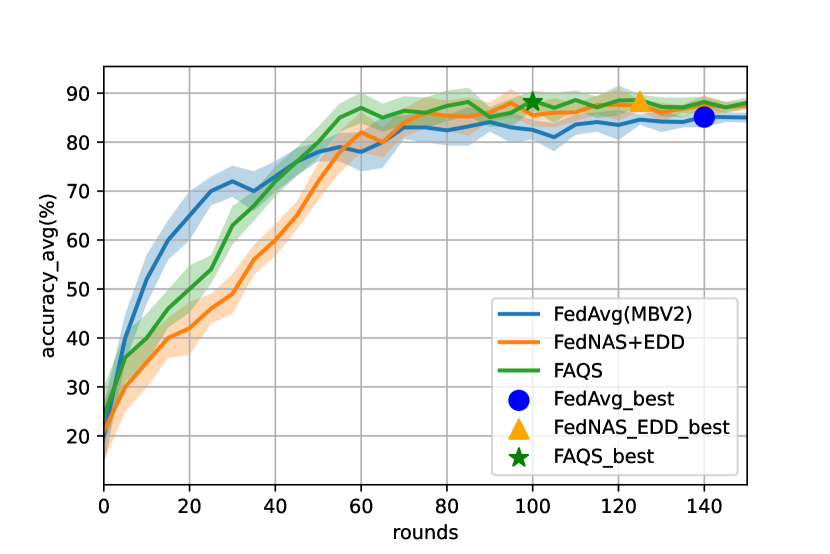

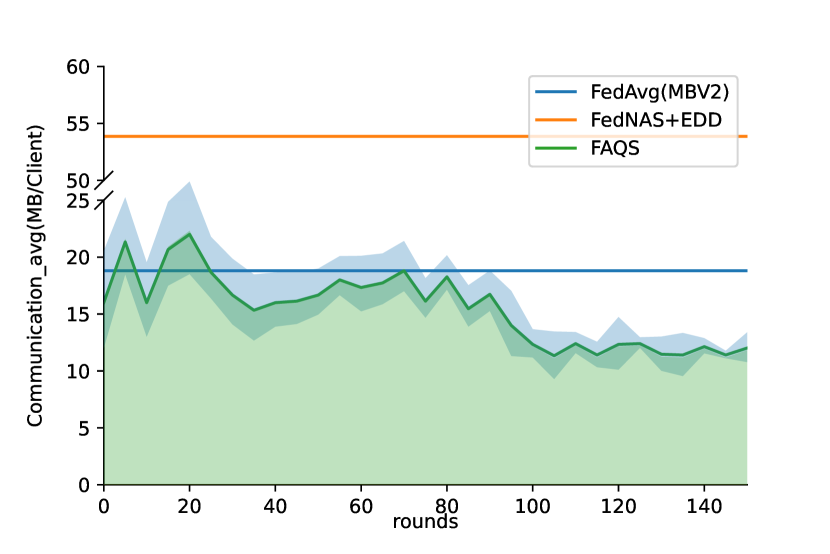

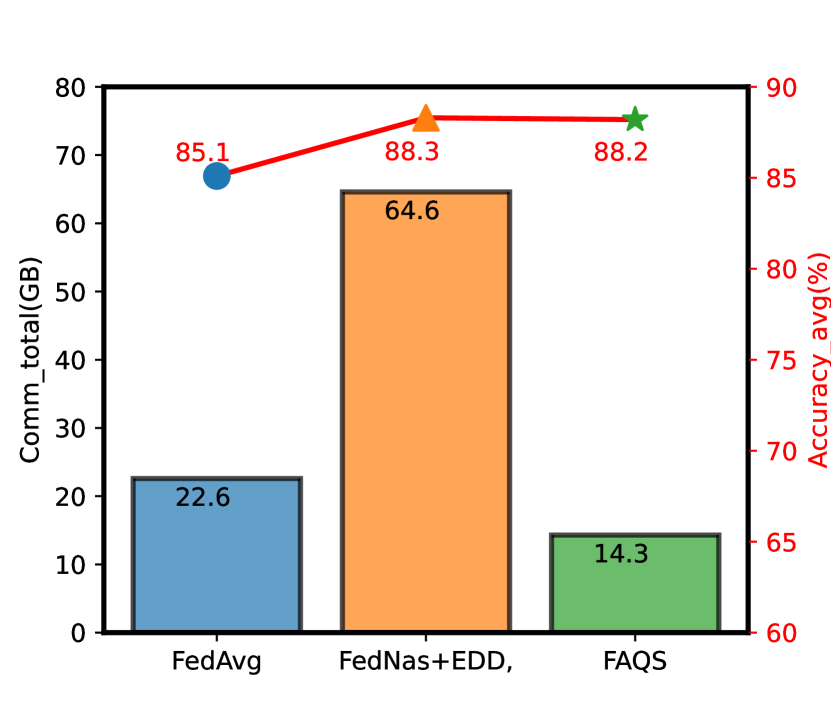

For a fair comparison with FedAvg and FedNAS+EDD, we first conduct simple experiments without hardware-aware preferences (i.e., with the latency and model-size coefficients set to zero), as FedAvg does not apply latency or model size analysis. As shown in Fig. 3 (left), FedAvg converges faster than FedNAS+EDD and FAQS because it has no additional trainable architecture parameters. However, both FedNAS+EDD and FAQS outperform FedAvg in final top-1 accuracy (88.3, 88.2 and 85.1, respectively) given enough training rounds (100 rounds). Meanwhile, FAQS demands less search time than FedNAS+EDD due to the efficiency of weight-sharing super kernels and bit-sharing quantization. Fig. 3 (middle) plots the average communication bits of each round, so the total communication cost can be calculated as the area under the curves. As shown in Fig. 3 (right), FAQS achieves an average reduction of 1.58x in communication bandwidth per round compared with normal FedAvg and 4.51x in total communication compared with the FedNAS+EDD framework.

IV-C Personalized Hardware-aware Preferences

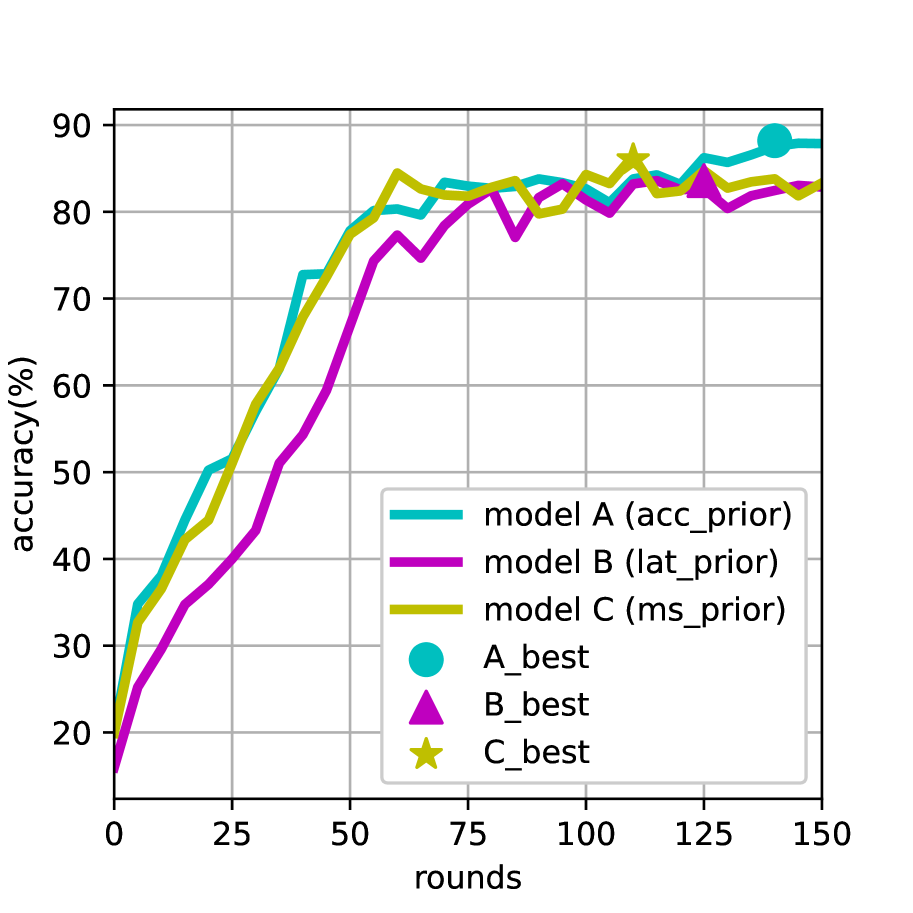

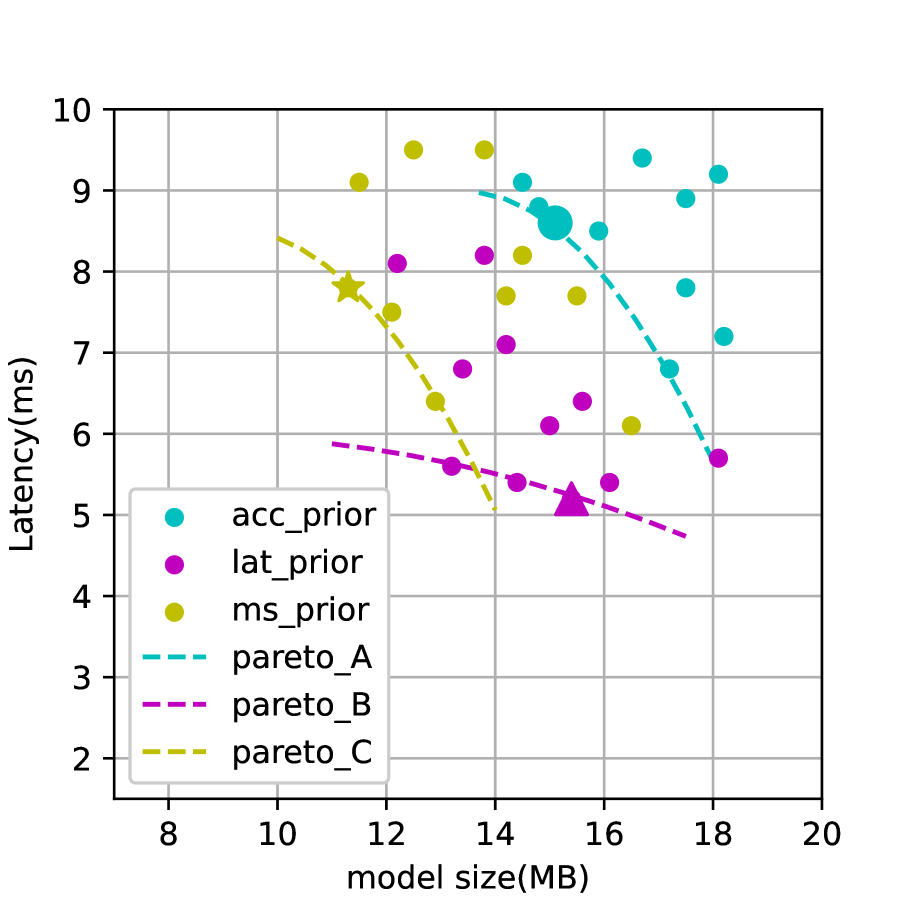

In order to demonstrate the ability to generate heterogeneous models for personalized hardware-aware preferences, we use 8 groups of Pareto losses with different coefficients, one for each node. Running FAQS for 150 rounds, we record the accuracy curves and the scatter plots of latency and model size for three clients. The client with coefficients (0.8,0.1,0.1) prefers accuracy much more than latency and model size, while the clients with (0.4,0.5,0.1) and (0.4,0.1,0.5) are lat-prior and ms-prior, respectively. As shown in Fig. 4 (left), the model searched on the accuracy-prior node outperforms those of the lat-prior and ms-prior nodes in accuracy, while both the lat-prior and ms-prior nodes yield high-performance models very close to the corresponding Pareto boundaries shown in Fig. 4 (right). The searched architectures and quantization policies are detailed in Table VI along with their performance and hardware-aware parameters. We give a brief analysis of the searched architectures and quantization policies. As the accuracy-prior client prefers accuracy much more than latency and model size, it tends to search for deep and wide architectures with long (16-bit) quantization. Latency is the main optimization target for the lat-prior client. As a result, it prefers wide but shallow architectures, since the GPU device is good at parallel computation. Preserving large kernels can make up for the accuracy loss introduced by shallow architectures. For the ms-prior client, many layers adopt narrow and shallow block types to reduce the model size. Similar to the findings in [14], both the lat-prior and ms-prior models have quite shallow layers but long quantization bit lengths in the early stages, because the features of early stages are relatively small and need higher resolution. Besides, both of them prefer larger MBConv blocks and longer quantization bit lengths in downsampled layers to prevent severe accuracy loss.

V Conclusion

In this paper, we have presented FAQS, the first joint neural architecture and quantization optimization framework in federated learning. FAQS reduces the communication cost by utilizing weight-sharing super kernels, bit-sharing quantization and masked transmission. Moreover, FAQS can yield heterogeneous hardware-aware models for various user preferences by setting different personalized Pareto functions on accuracy, latency and model size. Our evaluation shows that FAQS achieves an average reduction of 1.58x in communication bandwidth per round compared with the normal FL framework and 4.51x compared with the FL+NAS framework.

References

- [1] McMahan, Brendan, et al. ”Communication-efficient learning of deep networks from decentralized data.” Artificial intelligence and statistics. PMLR, 2017.

- [2] He, Chaoyang, Murali Annavaram, and Salman Avestimehr. ”Fednas: Federated deep learning via neural architecture search.” arXiv e-prints, pages arXiv–2004, 2020.

- [3] Garg, Anubhav, Amit Kumar Saha, and Debo Dutta. ”Direct federated neural architecture search.” arXiv preprint arXiv:2010.06223 (2020).

- [4] Zhu, Hangyu, and Yaochu Jin. ”Real-time federated evolutionary neural architecture search.” IEEE Transactions on Evolutionary Computation 26.2 (2021): 364-378.

- [5] Singh, Ishika, et al. ”Differentially-private federated neural architecture search.” arXiv preprint arXiv:2006.10559 (2020).

- [6] Zoph, Barret, and Quoc V. Le. ”Neural architecture search with reinforcement learning.” arXiv preprint arXiv:1611.01578 (2016).

- [7] Zoph, Barret, et al. ”Learning transferable architectures for scalable image recognition.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.

- [8] Baker, Bowen, et al. ”Designing neural network architectures using reinforcement learning.” arXiv preprint arXiv:1611.02167 (2016).

- [9] Zhong, Zhao, et al. ”Blockqnn: Efficient block-wise neural network architecture generation.” IEEE transactions on pattern analysis and machine intelligence 43.7 (2020): 2314-2328.

- [10] Pham, Hieu, et al. ”Efficient neural architecture search via parameters sharing.” International conference on machine learning. PMLR, 2018.

- [11] Liu, Hanxiao, Karen Simonyan, and Yiming Yang. ”Darts: Differentiable architecture search.” arXiv preprint arXiv:1806.09055 (2018).

- [12] Stamoulis, Dimitrios, et al. ”Single-path nas: Designing hardware-efficient convnets in less than 4 hours.” Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer, Cham, 2019.

- [13] He, Chaoyang, et al. ”Milenas: Efficient neural architecture search via mixed-level reformulation.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020.

- [14] Cai, Han, Ligeng Zhu, and Song Han. ”Proxylessnas: Direct neural architecture search on target task and hardware.” arXiv preprint arXiv:1812.00332 (2018).

- [15] Dong, Xuanyi, and Yi Yang. ”Searching for a robust neural architecture in four gpu hours.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019.

- [16] Xie, Lingxi, and Alan Yuille. ”Genetic cnn.” Proceedings of the IEEE international conference on computer vision. 2017.

- [17] Chen, Yukang, et al. ”Renas: Reinforced evolutionary neural architecture search.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019.

- [18] Lu, Qing, et al. ”On neural architecture search for resource-constrained hardware platforms.” arXiv preprint arXiv:1911.00105 (2019).

- [19] Li, Yuhong, et al. ”Edd: Efficient differentiable dnn architecture and implementation co-search for embedded ai solutions.” 2020 57th ACM/IEEE Design Automation Conference (DAC). IEEE, 2020.

- [20] Chen, Yukang, et al. ”Joint neural architecture search and quantization.” arXiv preprint arXiv:1811.09426 (2018).

- [21] Hu, Shoukang, et al. ”Dsnas: Direct neural architecture search without parameter retraining.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020.

- [22] Singh, Ishika, et al. ”Differentially-private federated neural architecture search.” arXiv preprint arXiv:2006.10559 (2020).

- [23] Sandler, Mark, et al. ”Mobilenetv2: Inverted residuals and linear bottlenecks.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.

- [24] Jacob, Benoit, et al. ”Quantization and training of neural networks for efficient integer-arithmetic-only inference.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.

- [25] Li, Ang, et al. ”Fedmask: Joint computation and communication-efficient personalized federated learning via heterogeneous masking.” Proceedings of the 19th ACM Conference on Embedded Networked Sensor Systems. 2021.

- [26] McMahan, Brendan, et al. ”Communication-efficient learning of deep networks from decentralized data.” Artificial intelligence and statistics. PMLR, 2017.

- [27] Wu, Bichen, et al. ”Fbnet: Hardware-aware efficient convnet design via differentiable neural architecture search.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019.