Fast and Feature-Complete Differentiable Physics for Articulated Rigid Bodies with Contact

Abstract

We present a fast and feature-complete differentiable physics engine, Nimble (nimblephysics.org), that supports Lagrangian dynamics and hard contact constraints for articulated rigid body simulation. Our differentiable physics engine offers a complete set of features that are typically only available in non-differentiable physics simulators commonly used by robotics applications. We solve contact constraints precisely using linear complementarity problems (LCPs). We present efficient and novel analytical gradients through the LCP formulation of inelastic contact that exploit the sparsity of the LCP solution. We support complex contact geometry, and gradients approximating continuous-time elastic collision. We also introduce a novel method to compute complementarity-aware gradients that help downstream optimization tasks avoid stalling in saddle points. We show that an implementation of this combination in a fork of an existing physics engine (DART) is capable of a 87x single-core speedup over finite-differencing in computing analytical Jacobians for a single timestep, while preserving all the expressiveness of original DART.

I Introduction

Many modern robotics problems are optimization problems. Finding optimal trajectories, guessing the physical parameters of a world that best fits our observed data, or designing a control policy to optimally respond to a dynamic environment are all types of optimization problems. Optimization methods can be sorted into two buckets: gradient-based, and gradient-free. Despite the well-known drawbacks of gradient-free methods (high sample complexity and noisy solutions), they remain popular in robotics because physics engines with the necessary features to model complex robots are generally non-differentiable. When gradients are required, we typically approximate them using finite differencing [41].

Recent years have seen many differentiable physics engines published [11, 19, 15, 42, 38, 10, 34, 29, 12], but none has yet gained traction as a replacement for popular non-differentiable engines [9, 41, 26]. We hypothesize that an ideal differentiable physics engine needs to implement an equivalent feature set to existing popular non-differentiable engines, as well as provide excellent computational efficiency, in order to gain adoption. In this paper, we extend the existing physics engine DART [26], which is commonly used in robotics and graphics communities, and make it differentiable. Our resulting engine supports all features available to the forward simulation process, meaning existing code and applications will remain compatible while also enjoying new capabilities enabled by efficient analytical differentiability.

A fully-featured physics engine like DART is complex and has many components that must be differentiated. Some components (such as collision detection and contact force computation) are not naively differentiable, but we show that under very reasonable assumptions we can compute useful Jacobians regardless. In order to differentiate through contacts and collision forces, we introduce an efficient method for differentiating the contact Linear Complementarity Problem (LCP) that exploits sparsity, as well as novel contact geometry algorithms and an efficient continuous-time approximation for elastic collisions. As a result, our engine is able to compute gradients with hard contact constraints up to 87 times faster than finite differencing methods, depending on the size of the dynamic system. Our relative speedup over finite differencing grows as the system complexity grows. In deriving our novel method to differentiate through the LCP, we also gain an understanding of the nature of contact dynamics Jacobians that allows us to propose heuristic “complementarity-aware gradients” that may be good search directions to try, if a downstream optimizer is getting stuck in a saddle point.

We provide an open-source implementation of all of these ideas, as well as derivations from previous work [25, 7], in a fully differentiable fork of the DART physics engine, which we call Nimble. Code and documentation are available at nimblephysics.org.

To summarize, our contributions are as follows:

-

•

A novel and fast method for local differentiability of LCPs that exploits the sparsity of the LCP solution, which gives us efficient gradients through static and sliding contacts and friction without changing traditional forward-simulation formulations. Section IV

-

•

A novel method that manipulates gradient computation to help downstream optimization problems escape saddle points due to discrete contact states. Section IV-D

-

•

Fast geometric analytical gradients through collision detection algorithms which support various types of 3D geometry and meshes. Section V

-

•

A novel analytical approximation of continuous-time gradients through elastic contact, which otherwise can lead to errors in discrete-time systems. Section VI

-

•

An open-source implementation of all of our proposed methods (along with analytical gradients through Featherstone first described in GEAR [25]) in a fork of the DART physics engine which we call Nimble. We have created Python bindings and a PyPI package, pip install nimblephysics, for ease of use.

II Related Work

| Engine | Contact | Dynamic | Collision | Gradients |

|---|---|---|---|---|

| Force | State | Geometry | Method | |

| MuJoCo | customized | generalized | complete | finite |

| Degrave | impulse | cartesian | primitives | auto |

| DiffTaichi | impulse | caresian | primitives | auto |

| Heiden | iter LCP | generalized | primitives | auto |

| de A. B.-P. | direct LCP | cartesian | primitives | symbolic |

| Geilinger | customized | generalized | primitives | symbolic |

| Ours | direct LCP | generalized | complete | symbolic |

Differentiable physics simulation has been investigated previously in many different fields, including mechanical engineering [16], robotics [14], physics [23, 21] and computer graphics [32, 28]. Enabled by recent advances in automatic differentiation methods and libraries [31, 1, 18], a number of differentiable physics engines have been proposed to solve control and parameter estimation problems for rigid bodies [25, 11, 18, 15, 10, 12, 34] and non-rigid bodies [37, 27, 13, 20, 34, 17, 12]. While they share a similar high-level goal of solving “inverse problems”, the features and functionality provided by these engines vary widely, including the variations in contact handling, state space parameterization and collision geometry support. Table I highlights the differences in a few differentiable physics engines that have demonstrated the ability to simulate articulated rigid bodies with contact. Based on the functionalities each engine intends to support, the approaches to computing gradients can be organized in following categories.

Finite-differencing is a straightforward way to approximate gradients of a function. For a feature-complete physics engine, where analytical gradients are complex to obtain, finite-differencing provides a simpler method. For example, a widely used physics engine, MuJoCo [41], supports gradient computation via finite differencing. However, finite-differencing tends to introduce round-off errors and performs poorly for a large number of input variables.

Automatic differentiation (auto-diff) is a method for computing gradients of a sequence of elementary arithmetic operations or functions automatically. However, the constraint satisfaction problems required by many existing, feature-complete robotic physics engines are not supported by auto-diff libraries. To avoid this issue, many recent differentiable physics engines instead implement impulse-based contact handling, which could lead to numerical instability and constraint violation if the contact parameters are not tuned properly for the specific dynamic system and the simulation task. Degrave et al. [11] implemented a rigid body simulator in the Theano framework [1], while DiffTaichi [18] implemented a number of differentiable physics engines, including rigid bodies, extending the Taichi programming language [19], both representing dynamic equations in Cartesian coordinates and handling contact with impulse-based methods. In contrast, Tiny Differentiable Simulator [15] models contacts as an LCP, but they solve the LCP iteratively via Projected Gauss Siedel (PGS) method [24], instead of directly solving a constraint satisfaction problem, making it possible to compute gradient using auto-diff libraries.

Symbolic differentiation is another way to compute gradients by directly differentiate mathematical expressions. For complex programs like Lagrangian dynamics with constraints formulated as a Differential Algebraic Equations, symbolic differentiation can be exceedingly difficult. Earlier work computed symbolic gradients for smooth dynamic systems [25], and [7] simplified the computation of the derivative of the forward dynamics exploiting the derivative of the inverse dynamics. Symbolic differentiation becomes manageable when the gradients are only required within smooth contact modes [42] or a specific contact mode is assumed [38]. Recently, Amos and Kolter proposed a method, Opt-Net, that back-propagates through the solution of an optimization problem to its input parameters [2]. Building on Opt-Net, de Avila Belbute-Peres et al. [10] derived analytical gradients through LCP formulated as a QP. Their method enables differentiability for rigid body simulation with hard constraints, but their implementation represents 2D rigid bodies in Cartesian coordinates and only supports collisions with a plane, insufficient for simulating complex articulated rigid body systems. More importantly, computing gradients via QP requires solving a number of linear systems which does not take advantage of the sparsity of the LCP structure. Qiao et al. [34] built on [2] and improved the performance of contact handling by breaking a large scene into smaller impact zones. A QP is solved for each impact zone to ensure that the geometry is not interpenetrating, but contact dynamics and conservation laws are not considered. Solving contacts for localized zones has been previously implemented in many existing physics engines [41, 9, 26]. Adapting the collision handling routine in DART, our method by default utilizes the localized contact zones to speed up the performance. Adjoint sensitivity analysis [35] has also been used for computing gradients of dynamics. Millard et al. [29] combined auto-diff with adjoint sensitivity analysis to achieve faster gradient computation for higher-dof systems, but their method did not handle contact and collision. Geilinger et al. [12] analytically computed derivatives through adjoint sensitivity analysis and proposed a differentiable physics engine with implicit forward integration and a customized frictional contact model that is natively differentiable.

Approximating physics with neural networks is a different approach towards differentiable physics engine. Instead of forward simulating a dynamic system from the first principles of Newtonian mechanics, a neural network is learned from training data. Examples of this approach include Battaglia et. al [4], Chang et. al. [8], and Mrowca et. al [30].

Our engine employs symbolic differentiation to compute gradients through every part of the engine using hand-tuned C++ code. We introduce a novel method to differentiate the LCP analytically that takes advantage of the sparsity of the solution and is compatible with using direct methods to solve the LCP. In addition, our engine supports a richer set of geometry for collision and contact handling than has been previously available, including mesh-mesh and mesh-primitive collisions, in order to achieve a fully functional differentiable version of the DART physics engine for robotic applications.

III Overview

A physics engine can be thought of as a simple function that takes the current position , velocity , control forces and inertial properties , and returns the position and velocity at the next timestep, and :

| (1) |

In an engine with simple explicit time integration, our next position is a trivial function of current position and velocity, , where is the descritized time interval.

The computational work of the physics engine comes from solving for our next velocity, . We are representing our articulated rigid body system in generalized coordinates using the following Lagrangian dynamic equation:

| (2) |

where is the mass matrix, is the Coriolis and gravitational force, and is the contact impulse transformed into the generalized coordinates by the contact Jacobian matrix . Note that multiple contact points and/or other constraint impulses can be trivially added to Equation 2.

Every term in Equation 2 can be evaluated given , and except for the contact impulse , which requires the engine to form and solve an LCP:

| (3) |

The velocity of a contact point at the next time step, , can be expressed as a linear function in

| (4) |

where and . The LCP procedure can then be expressed as a function that maps to the contact impulse :

| (5) |

As such, the process of forward stepping is to find and resulting that satisfy Equation 2 and Equation 5.

The main task in developing a differentiable physics engine is to solve for the gradient of next velocity with respect to the input to the current time step, namely , and . The data flow is shown in Figure 2. For brevity we refer to the ouput of a function for a given timestep by the same name as the function with a subscript (e.g. ). The velocity at the next step can be simplified to

| (6) |

where . The gradients we need to compute at each time step are written as:

| (7) | ||||

| (8) | ||||

| (9) | ||||

| (10) |

We tackle the tricky intermediate Jacobians in sections that follow. In Section IV we will introduce a novel sparse analytical method to compute the gradients of contact force with respect to . Section V will discuss —how collision geometry changes with respect to changes in position. In Section VI we will tackle and , which is not as simple as it may at first appear, because naively taking gradients through a discrete time physics engine yields problematic results when elastic collisions take place. Additionally, the appendix gives a way to apply the derivations from [25] and [7] to analytically find , , and .

IV Differentiating the LCP

This section introduces a method to analytically compute and . It turns out that it is possible to efficiently get unambiguous gradients through an LCP in the vast majority of practical scenarios, without recasting it as a QP (which throws away sparsity information by replacing the complementarity constraint with an objective function). To see this, let us consider a hypothetical LCP problem parameterized by with a solution found during the forward pass: .

For brevity, we only include the discussion on normal contact impulses in this section and leave the extension to friction impulses in Appendix -A. Therefore, each element in indicates the normal impulse of a point contact. By complementarity, we know that if some element , then . Intuitively, the relative velocity at contact point must be 0 if there is any non-zero impulse being exerted at contact point . We call such contact points “Clamping” because the impulse is adjusted to keep the relative velocity . Let the set to be all indices that are clamping. Symmetrically, if , then the relative velocity is free to vary without the LCP needing to adjust to compensate. We call such contact points “Separating” and define the set to be all indices that are separating. Let us call indices where and “Tied.” Define the set to be all indices that are tied.

If no contact points are tied (), the LCP is strictly differentiable and the gradients can be analytically computed. When some contact points are tied (), the LCP has valid subgradients and it is possible to follow any in an optimization. The tied case is analogous to the non-differentiable points in a QP where an inequality constraint is active while the corresponding dual variable is also zero. In such a case, computing gradients via taking differentials of the KKT conditions will result in a low-rank linear system and thus non-unique gradients [2].

IV-A Strictly differentiable cases

Consider the case where . We shuffle the indices of , , and to group together members of and . The LCP becomes:

| (11) |

Since we know the classification of each contact that forms the valid solution , we rewrite the LCP constraints as follows:

| (12) |

From here we can see how the valid solution changes under infinitesimal perturbations to and . Since and , the LCP can be reduced to three conditions on :

| (13) | ||||

| (14) | ||||

| (15) |

We will show that these conditions will always be possible to satisfy under small enough perturbations in the neighborhood of a valid solution. Let us first consider tiny perturbations to and . If the perturbations are small enough, then Equation 15 will still be satisfied with our original , because we know Equation 15 already holds strictly such that there is some non-zero room to decrease any element of without violating Equation 15. Therefore,

| (16) |

Next let us consider an infinitesimal perturbation to and the necessary change on the clamping force to satisfy Equation 13:

| (17) |

Setting and assuming is invertible, the change of the clamping force is given as . Since is strictly greater than , it is always possible to choose an small enough to make and remain true. Therefore,

| (18) |

Note that is not always invertible because is positive semidefinite. We will discuss the case when is not full rank in Section IV-C along with a method to stabilize the LCP when there exists multiple LCP solutions in Appendix -B.

Lastly, we compute gradients with respect to . In practice, changes to only happen because we are differentiating with respect to parameters or , which also changes . As such, we introduce a new scalar variable, , which could represent any arbitrary scalar quantity that effects both and . Equation 13 can be rewritten as:

| (19) |

Because and are continuous, and the original solution is valid, any sufficiently small perturbation to will not reduce below 0 or violate Equation 15. The Jacobian with respect to can be expressed as:

| (20) |

Using and , along with the derivations of Featherstone presented in Appendix -F, it is possible to compute and for any specific .

Remark: Previous methods [10, 27, 34] cast an LCP to a QP and solved for a linear system of size derived from taking differentials of the KKT conditions of the QP, where is the dimension of the state variable and is the number of contact constraints. Our method also solves for linear systems to obtain , but the size of is often much less than due to the sparsity of the solution .

IV-B Subdifferentiable case

Now let us consider when . Replacing the LCP constraints with linear constraints will no longer work because any perturbation will immediately change the state of the contact and the change also depends on the direction of perturbation. Including the class of “tied” contact points to Equation 11, we need to satisfy an additional linear system,

| (21) |

where and and are both zero at the solution. Let be the index of a tied contact point. Consider perturbing the ’th element of by . If , cannot become negative to balance Equation IV-B because is positive semidefinite and must be nonnegative. Therefore, must become positive, resulting contact point being separated and remaining zero. If , then is immediately bumped into the “clamping” set because cannot be negative. Therefore, must become positive to balance Equation IV-B. The gradients for the clamping and separating cases are both valid subgraidents for a tied contact point. In an optimization, we can choose either of the two subgradients at random without impacting the convergence [6]. In practice, encountering elements in is quite rare for practical numerical reasons.

IV-C When is not full rank

When is not full rank, the solution to is no longer unique. Nevertheless, once a solution is computed using any algorithm and the clamping set is found, we can use the stabilization method proposed in Appendix -B to find the least-squares minimal that solves the LCP. The gradient of clamping forces can then be written as:

| (22) |

where is the pseudo inverse matrix of the low-rank . For numerical stability, we can solve a series of linear systems instead of explicitly evaluating .

IV-D Complementarity-aware gradients via contact constraints

Sometimes analytically correct Jacobians through the LCP can actually prevent an optimizer from finding a good solution. When a contact is clamping (), we effectively impose a constraint that the relative velocity at that contact point is zero no matter how we push or pull on the contact. This prevents the gradients from pointing towards any motions that require breaking contact, because our constraints will zero out gradients that lead to .

This behavior is caused by the complementarity constraint requiring that at most one of or can be non-zero. This phenomenon grows out of the short-sightedness of the gradient (it only considers an infinitely small neighborhood around the current state). While it is true that small attempts to push will result in no change to as compensates to keep , eventually we will reach a discontinuity where can no longer prevent the contact from separating. However, a gradient-based optimizer may never take steps in that direction because gradients in that direction are 0. In this section we propose a heuristic to opportunistically explore “projecting forward” to the discontinuity where the complementarity constraint flips during backpropagation, depending on the gradient of loss function with respect to and .

To make it more concrete, let us consider a simple example of a 2D circle attached to a linear actuator that can produce force along the vertical axis (Figure 3). Our goal is to lift the circle up to a target height above the ground by generating an upward velocity using the linear actuator. When the circle is resting on the ground under gravity, the contact point with the ground is classified as “clamping”. This means that any tiny perturbation in the control force of linear actuator will be met with an exactly offsetting contact force to ensure that the relative velocity between the circle and the ground remains zero. As such, no matter what gradient of loss function with respect to the control force we try to backpropagate through the contact, we will always get .

Consider another example where the same 2D circle is uncontrolled and resting on a linearly actuated platform (Figure 4). To lift the circle up, we need to utilize the contact force from the platform. If the initialization of the problem results in the contact point being classified as “separating”, no constraint force will be applied on the contact point, as if the contact did not exist. Therefore, the gradient of contact force with respect to the platform control force is zero, which may cause the optimization to be trapped in a saddle point.

In both examples, the issues can be resolved by classifying the contact point differently. If we know that to solve this problem we need to actuate the circle and the platform separately, we would set the contact point to be “separating.” This gives the optimizer a chance to explore a solution with contact breaking. On the other hand, if we know that we need to exploit the contact force between the circle and the platform, we would relabel a separating contact point to be “clamping”. This lets the optimizer search for the most effective contact force that reduces the loss function.

Is there a way to know which strategy to use in advance? In the general case, the answer seems to be no, but we can do better than naive gradients which stick with whatever contact classification they were initialized into and do not explore to break out of resulting saddle points. We propose a heuristic to explore more broadly at a constant additional cost.

We propose that always picking the contact strategy that results in the largest is a good heuristic during learning for avoiding saddle points. While we could try backprop through all possible strategies and pick the best one, that gets expensive as the number of contacts becomes larger than a small handful. Instead of exhaustively searching for all possible combinations of contact states with exponential complexity, we propose to only check two classifications:

-

1.

First, check the “correct” contact classification solved by the LCP.

-

2.

Second, we compute (the gradient of loss with respect to relative contact velocity), and use the elements of to compute the “clamping” and “separating” sets as follows. Take any indices that are trying to increase the relative velocity at that contact , which implies the optimizer is feebly trying to separate the objects, and put those indices into “separating” to remove the constraint that . Take any indices where , which implies the optimizer is trying to move the objects closer together (which would violate contact constraints), and put them into “clamping” to impose the constraint that .

The contact strategy with the larger is used during backpropagation for learning. We call the gradient produced by this exploratory procedure a “Complementarity-aware Gradient,” and find empirically that it can help avoid saddle points during trajectory optimization. We show an example of this in Section VII.

The complementarity-aware gradients do not guarantee to improve global convergence because the gradient is chosen for each contact point independently, which might not result in an aggregated gradient that moves in a globally optimal direction. However, if an optimization problem currently gets stuck in a saddle point, complementarity-aware gradients provide another tool for practitioners to try.

V Gradients through collision geometry

This section addresses efficient computation of , the relationship between position and joint impulse. In theory, we could utilize auto-diff libraries for the derivative computation. However, using auto-diff and passing every gradient through a long kinematic chain of transformations is inefficient for complex articulated rigid body systems. In contrast, computing the gradients symbolically shortcuts much computation by operating directly in the world coordinate frame.

Let be the screw axis for the ’th DOF, expressed in the world frame. Let the ’th contact point give an impulse , also expressed in the world frame. Let be the relationship between contact and joint . or means contact is on a child body of joint . Otherwise, . The total joint impulse caused by contact impulses for the ’th joint is given by:

| (23) |

Taking the derivative of Equation 23 gives

| (24) |

Evaluating is straightforward, but computing requires understanding how the contact normal and contact position change with changes in .

Let be a concatenation of torque and force in . The derivative with respect to the current joint position is:

| (25) |

It turns out that computing for curved primitives shape colliders (spheres and capsules) requires different treatment from meshes or polygonal primitives. To understand why, consider using a high polycount mesh to approximate a true sphere as shown in Figure 5.

On a mesh approximation of a sphere, infinitesimally moving the contact point by (by perturbing of the triangle) will not cause the normal of the contact face to change at all, because we remain on the same face of the mesh. So is always zero for a mesh or polygonal shape. However, on a true sphere, an infinitesimal perturbation of the contact point with the sphere (no matter how small) will cause the contact normal with the sphere to change. The way the contact normal changes with position (e.g. ) is often crucial information for the optimizer to have in order to solve complex problems, and mesh approximations to curved surfaces falsely set .

We refer the interested reader to Appendix -C for a discussion of how we compute and for different combinations of collider types.

VI Gradients through elastic contacts

DiffTaichi [18] pointed out an interesting problem that arises from discretization of time in simulating elastic bouncing phenomenon between two objects. The problems arise from the discrete time integration of position: . The Jacobians and are correct for most scenarios. However, when an elastic collision occurs, the discrete time integration scheme creates problems for differentiation. In a discrete time world, the closer the object is to the collision site at the beginning of the time step when collision happens, the closer to the collision site it ends up at the end of that time step. In continuous time, however, the closer the object begins to its collision site, the further away it ends up, because the object changes velocity sooner (Figure 6).

DiffTaichi [18] proposed using continuous-time collision detection and resolution during training, but noted that switching to discrete-time at test time did not harm performance. Since introducing full continuous-time collision detection is computationally costly for complex dynamic systems and scenes, we instead opt to find a and that approximates the behavior of continuous-time Jacobians when elastic collisions occur.

Let be the relative distance at contact at time and be the relative velocity. We further define as the coefficient of restitution at contact and as the time of collision of contact , where . Assuming the relative velocity is constant in this time step, we get and . From there we have:

| (26) |

After finding the above gradients for each collision independently, we need to find a pair of Jacobians, and , that approximate our desired behavior at each of the contact points as closely as possible.

| (27) |

where is the Jacobian matrix of contact , is the pseudo inverse Jacobian, and is the total number of contact points. Our goal is to find a Jacobian that satisfies the above equations (Equation VI) as closely as possible (details in Appendix -E). Once we find a satisfactory approximation for , we can get

| (28) |

| Environment | Analytical | Central | Speedup |

|---|---|---|---|

| Differences | |||

| Atlas | 16.1ms | 737ms | 45.8x |

| Half Cheetah | 0.870ms | 7.52ms | 8.64x |

| Jump-Worm | 0.484ms | 3.08ms | 6.36x |

| Catapult | 0.576ms | 3.69ms | 6.40x |

VII Evaluation

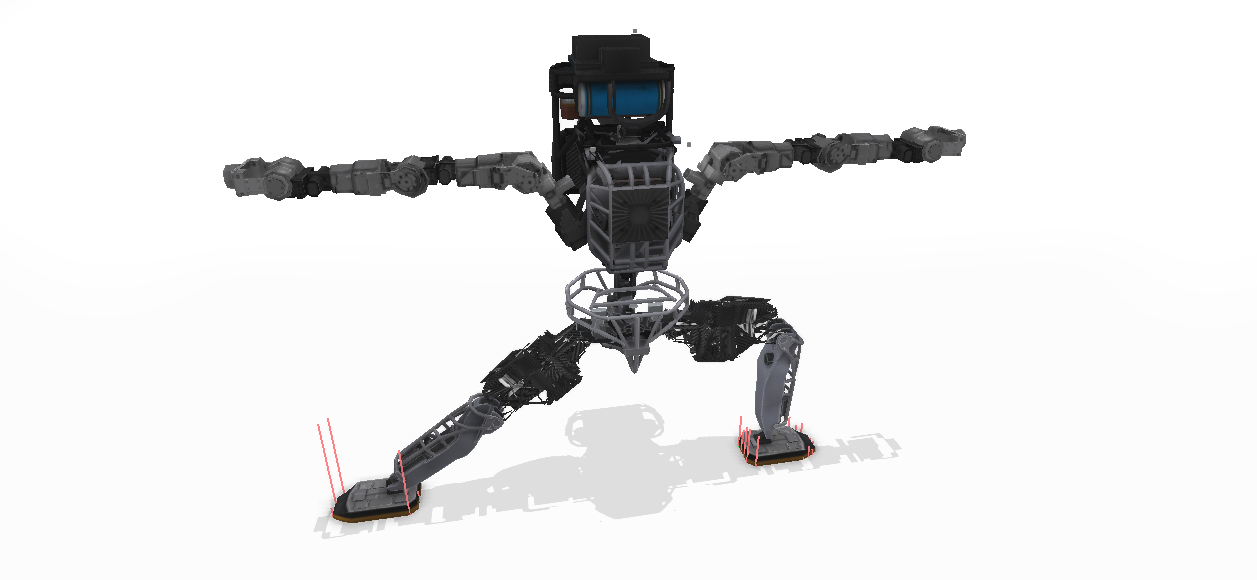

First, we evaluate our methods by testing the performance of gradient computation. In addition, we compare gradient-based trajectory optimization enabled by our method to gradient-free stochastic optimization, and discuss implications. We also demonstrate the effectiveness of the complementarity-aware gradients in a trajectory optimization problem. Finally, we show that our physics engine can solve optimal control problems for complex dynamic systems with contact and collision, including an Atlas humanoid jumping in the air.

VII-A Performance

Controlled comparison to existing methods can be challenging because they use different formulations for forward simulation, essentially simulating different physical phenomena. We therefore benchmark the performance of our method at the atomic level–measuring the computation time of a Jacobian for a single time step using a single-core CPU and comparing it to finite differencing methods, using the same forward simulation process provided by an existing non-differentiable physics engine (DART). This comparison removes factors due to differences in forward simulation, implementation techniques, and computation resources, and focuses on the speed gain solely contributed by our gradient computation method.

Table II contains abbreviated benchmark performance of our Jacobians against central differencing. We evaluate our results in four environments: the Atlas robot on the ground (33 DOFs, 12 contact points), Half Cheetah on the ground (9 DOFs, 2 contact points), Jump-Worm (5 DOFs, 2 contact points), and Catapult (5 DOFs, 2 contact points). For each environment, we compare the speed of evaluating all five primary Jacobians. For a complete table, including the speed of individual Jacobian evaluations, see Appendix -D.

VII-B Gradient-based vs gradient-free trajectory optimization

To demonstrate the benefit of analytical Jacobians of dynamics, we compare trajectory optimization on our catapult trajectory problem between Multiple Shooting and Stochastic Search (SS) [5], as well as cartpole and the double-pendulum using both Differential Dynamic Programming (DDP) [39, 22, 40] and Stochastic Search [5]. The gradient-based methods (DDP and Multiple Shooting) are able to converge much more quickly, because the additional convergence gained from analytical Jacobians more than offsets the time to compute them. See Figure 7.

VII-C Complementarity-aware gradients

To highlight the complementarity-aware gradients, we solve a trajectory optimization problem of a drone taking-off from the ground and reaching a fixed height in timesteps.

Because the drone is initialized resting on the ground, it has a contact with the ground that is classified as “clamping.” When we attempt to optimize the control on the drone using correct gradients, we get zero gradients and make no progress. By contrast, when we use our complementarity aware gradient, while we still see no change in loss for the first few iterations of SGD, we’re able to get non-zero gradients and escape the saddle point (Figure 7). See the supplementary video for the resulting drone trajectories.

VII-D Optimal control with contact

We present several trajectory optimization problems that involve complex contact dynamics, optimized using multiple shooting. We demonstrate “Catapult”, which is a 3-dof robot that is tasked with batting a free ball towards a target, in such a way that it exactly hits the target at the desired timestep (pictured in Figure 7). We also demonstrate “Jump-Worm”, which is a 5-dof worm-shaped robot that is attempting to jump as high as possible at the end of the trajectory. Both of these problems involve complex contact switching throughout the course of the trajectory. We also optimize a trajectory where the “Atlas” robot learns to jump. See the supplementary video for the resulting trajectories.

VIII Conclusions

We present a fast and feature-complete differentiable physics engine for articulated rigid body simulation. We introduce a method to compute analytical gradients through the LCP formulation of inelastic contact by exploiting the sparsity of the LCP solution. Our engine supports complex contact geometry and approximating continuous-time elastic collision. We also introduce a novel method to compute complementarity-aware gradients that help optimizers to avoid stalling in saddle points.

There a few limitations of our current method. Currently, computing , the way the joint torques produced by the contact forces change as we vary joint positions (which in turn varies contact positions and normals) takes a large portion of the total time to compute all Jacobians of dynamics for a given timestep. Perhaps a more efficient formulation can be found.

Our engine also doesn’t yet differentiate through the geometric properties (like link length) of a robot, or friction coefficients. Extending to these parameters is an important step to enable parameter estimation applications.

We are excited about future work that integrates gradients and stochastic methods to solve hard optimization problems in robotics. In running the experiments for this paper, we found that stochastic trajectory optimization methods could often find better solutions to complex problems than gradient-based methods, because they were able to escape from local optima. However, stochastic methods are notoriously sample inefficient, and have trouble fine-tuning results as they begin to approach a local optima. The authors speculate that there are many useful undiscovered techniques waiting to be invented that lie at the intersection of stochastic gradient-free methods and iterative gradient-based methods. It is our hope that an engine like the one presented in this paper will enable such research.

Acknowledgments

References

- Al-Rfou et al. [2016] Rami Al-Rfou, Guillaume Alain, Amjad Almahairi, Christof Angermueller, Dzmitry Bahdanau, Nicolas Ballas, Frédéric Bastien, Justin Bayer, Anatoly Belikov, Alexander Belopolsky, et al. Theano: A python framework for fast computation of mathematical expressions. arXiv e-prints, pages arXiv–1605, 2016.

- Amos and Kolter [2017] Brandon Amos and J Zico Kolter. Optnet: Differentiable optimization as a layer in neural networks. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pages 136–145. JMLR. org, 2017.

- Baraff [1994] David Baraff. Fast contact force computation for nonpenetrating rigid bodies. In Proceedings of the 21st annual conference on Computer graphics and interactive techniques, pages 23–34, 1994.

- Battaglia et al. [2016] Peter Battaglia, Razvan Pascanu, Matthew Lai, Danilo Jimenez Rezende, et al. Interaction networks for learning about objects, relations and physics. In Advances in neural information processing systems, pages 4502–4510, 2016.

- Boutselis et al. [2020] George I Boutselis, Ziyi Wang, and Evangelos A Theodorou. Constrained sampling-based trajectory optimization using stochastic approximation. In 2020 IEEE International Conference on Robotics and Automation (ICRA), pages 2522–2528. IEEE, 2020.

- Boyd et al. [2003] Stephen Boyd, Lin Xiao, and Almir Mutapcic. Subgradient methods. lecture notes of EE392o, Stanford University, Autumn Quarter, 2004:2004–2005, 2003.

- Carpentier and Mansard [2018] Justin Carpentier and Nicolas Mansard. Analytical derivatives of rigid body dynamics algorithms. In Robotics: Science and Systems (RSS 2018), 2018.

- Chang et al. [2016] Michael B Chang, Tomer Ullman, Antonio Torralba, and Joshua B Tenenbaum. A compositional object-based approach to learning physical dynamics. arXiv preprint arXiv:1612.00341, 2016.

- Coumans [2010] Erwin Coumans. Bullet physics engine. Open Source Software: http://bulletphysics. org, 1(3):84, 2010.

- de Avila Belbute-Peres et al. [2018] Filipe de Avila Belbute-Peres, Kevin Smith, Kelsey Allen, Josh Tenenbaum, and J Zico Kolter. End-to-end differentiable physics for learning and control. In Advances in Neural Information Processing Systems, pages 7178–7189, 2018.

- Degrave et al. [2019] Jonas Degrave, Michiel Hermans, Joni Dambre, and Francis Wyffels. A differentiable physics engine for deep learning in robotics. Frontiers in neurorobotics, 13:6, 2019.

- Geilinger et al. [2020] Moritz Geilinger, David Hahn, Jonas Zehnder, Moritz Bächer, Bernhard Thomaszewski, and Stelian Coros. Add: analytically differentiable dynamics for multi-body systems with frictional contact. ACM Transactions on Graphics (TOG), 39(6):1–15, 2020.

- Hahn et al. [2019] David Hahn, Pol Banzet, James M. Bern, and Stelian Coros. Real2sim: Visco-elastic parameter estimation from dynamic motion. ACM Trans. Graph., 38(6), November 2019.

- Heiden et al. [2019] Eric Heiden, David Millard, Hejia Zhang, and Gaurav S Sukhatme. Interactive differentiable simulation. arXiv preprint arXiv:1905.10706, 2019.

- Heiden et al. [2020] Eric Heiden, David Millard, Erwin Coumans, and Gaurav S Sukhatme. Augmenting differentiable simulators with neural networks. arXiv preprint arXiv:2007.06045, 2020.

- Hermans et al. [2014] Michiel Hermans, Benjamin Schrauwen, Peter Bienstman, and Joni Dambre. Automated design of complex dynamic systems. PLOS ONE, 9(1):1–11, 01 2014.

- Holl et al. [2020] Philipp Holl, Nils Thuerey, and Vladlen Koltun. Learning to control pdes with differentiable physics. In International Conference on Learning Representations, 2020.

- Hu et al. [2019a] Yuanming Hu, Luke Anderson, Tzu-Mao Li, Qi Sun, Nathan Carr, Jonathan Ragan-Kelley, and Frédo Durand. Difftaichi: Differentiable programming for physical simulation. arXiv preprint arXiv:1910.00935, 2019a.

- Hu et al. [2019b] Yuanming Hu, Tzu-Mao Li, Luke Anderson, Jonathan Ragan-Kelley, and Frédo Durand. Taichi: a language for high-performance computation on spatially sparse data structures. ACM Transactions on Graphics (TOG), 38(6):1–16, 2019b.

- Hu et al. [2019c] Yuanming Hu, Jiancheng Liu, Andrew Spielberg, Joshua B Tenenbaum, William T Freeman, Jiajun Wu, Daniela Rus, and Wojciech Matusik. Chainqueen: A real-time differentiable physical simulator for soft robotics. Proceedings of IEEE International Conference on Robotics and Automation (ICRA), 2019c.

- Iollo et al. [2001] A. Iollo, M. Ferlauto, and L. Zannetti. An aerodynamic optimization method based on the inverse problem adjoint equations. Journal of Computational Physics, 173(1):87–115, 2001.

- Jacobson and Mayne [1970] David H Jacobson and David Q Mayne. Differential dynamic programming. 1970.

- Jarny et al. [1991] Y. Jarny, M.N. Ozisik, and J.P. Bardon. A general optimization method using adjoint equation for solving multidimensional inverse heat conduction. International Journal of Heat and Mass Transfer, 34(11):2911–2919, 1991.

- Jourdan et al. [1998] Franck Jourdan, Pierre Alart, and Michel Jean. A gauss-seidel like algorithm to solve frictional contact problems. Computer Methods in Applied Mechanics and Engineering, 155(1):31–47, 1998.

- Kim [2012] Junggon Kim. Lie group formulation of articulated rigid body dynamics. Technical report, Technical Report. Carnegie Mellon University, 2012.

- Lee et al. [2018] Jeongseok Lee, Michael X Grey, Sehoon Ha, Tobias Kunz, Sumit Jain, Yuting Ye, Siddhartha S Srinivasa, Mike Stilman, and C Karen Liu. Dart: Dynamic animation and robotics toolkit. Journal of Open Source Software, 3(22):500, 2018.

- Liang et al. [2019] Junbang Liang, Ming Lin, and Vladlen Koltun. Differentiable cloth simulation for inverse problems. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc., 2019.

- McNamara et al. [2004] Antoine McNamara, Adrien Treuille, Zoran Popović, and Jos Stam. Fluid control using the adjoint method. ACM Trans. Graph., 23(3):449–456, August 2004.

- Millard et al. [2020] David Millard, Eric Heiden, Shubham Agrawal, and Gaurav S. Sukhatme. Automatic differentiation and continuous sensitivity analysis of rigid body dynamics, 2020.

- Mrowca et al. [2018] Damian Mrowca, Chengxu Zhuang, Elias Wang, Nick Haber, Li F Fei-Fei, Josh Tenenbaum, and Daniel L Yamins. Flexible neural representation for physics prediction. In Advances in neural information processing systems, pages 8799–8810, 2018.

- Paszke et al. [2017] Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. Automatic differentiation in pytorch. 2017.

- Popović et al. [2000] Jovan Popović, Steven M. Seitz, Michael Erdmann, Zoran Popović, and Andrew Witkin. Interactive manipulation of rigid body simulations. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH ’00, page 209–217. ACM Press/Addison-Wesley Publishing Co., 2000.

- Press et al. [2007] William H. Press, Saul A. Teukolsky, William T. Vetterling, and Brian P. Flannery. Numerical Recipes 3rd Edition: The Art of Scientific Computing. Cambridge University Press, USA, 3 edition, 2007.

- Qiao et al. [2020] Yi-Ling Qiao, Junbang Liang, Vladlen Koltun, and Ming C. Lin. Scalable differentiable physics for learning and control. In ICML, 2020.

- Rackauckas et al. [2018] Christopher Rackauckas, Yingbo Ma, Vaibhav Dixit, Xingjian Guo, Mike Innes, Jarrett Revels, Joakim Nyberg, and Vijay Ivaturi. A comparison of automatic differentiation and continuous sensitivity analysis for derivatives of differential equation solutions, 2018.

- Ridders [1982] C.J.F. Ridders. Accurate computation of f’(x) and f’(x) f”(x). Advances in Engineering Software (1978), 4(2):75–76, 1982.

- Schenck and Fox [2018] Connor Schenck and Dieter Fox. Spnets: Differentiable fluid dynamics for deep neural networks. In Proceedings of The 2nd Conference on Robot Learning, volume 87 of Proceedings of Machine Learning Research, pages 317–335. PMLR, 29–31 Oct 2018.

- Song and Boularias [2020] Changkyu Song and Abdeslam Boularias. Learning to slide unknown objects with differentiable physics simulations. In Robotics: Science and Systems, Oregon State University at Corvallis, Oregon, USA, 14–16 July 2020.

- Tassa et al. [2012] Yuval Tassa, Tom Erez, and Emanuel Todorov. Synthesis and stabilization of complex behaviors through online trajectory optimization. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 4906–4913. IEEE, 2012.

- Todorov and Li [2005] Emanuel Todorov and Weiwei Li. A generalized iterative lqg method for locally-optimal feedback control of constrained nonlinear stochastic systems. In Proceedings of the 2005, American Control Conference, 2005., pages 300–306. IEEE, 2005.

- Todorov et al. [2012] Emanuel Todorov, Tom Erez, and Yuval Tassa. Mujoco: A physics engine for model-based control. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 5026–5033. IEEE, 2012.

- Toussaint et al. [2018] Marc Toussaint, Kelsey R. Allen, K. Smith, and J. Tenenbaum. Differentiable physics and stable modes for tool-use and manipulation planning. In Robotics: Science and Systems, 2018.

| Environment | Jacobian | Analytical | Central Differences | Speedup | Ridders | Speedup |

|---|---|---|---|---|---|---|

| Time | Time | Time | ||||

| Atlas | All | 8.53ms | 749ms | 87.84x | 2229ms | 261x |

| 0.0204ms | 160ms | 7850x | 581ms | 28480x | ||

| 6.75ms | 161ms | 23.8x | 581ms | 86x | ||

| 0.016ms | 107.2ms | 6570.9x | 304ms | 19000x | ||

| 0.778ms | 160ms | 205.7x | 487ms | 626x | ||

| 0.981ms | 161ms | 163.9x | 524ms | 471x | ||

| Half Cheetah | All | 0.395ms | 8.64ms | 21.86x | 24ms | 60x |

| 0.0059ms | 1.69ms | 284x | 3.17ms | 537x | ||

| 0.254ms | 1.874ms | 7.35x | 7.92ms | 31.1x | ||

| 0.00358ms | 1.228ms | 342x | 2.42ms | 675.9x | ||

| 0.053ms | 1.98ms | 37.1x | 5.63ms | 106.2x | ||

| 0.077ms | 1.87ms | 24.1x | 5.75ms | 74.6x | ||

| Jump-Worm | All | 0.256ms | 3.82ms | 14.89x | 9.727ms | 37.9x |

| 0.00433ms | 0.732ms | 168.8x | 1.58ms | 364.8x | ||

| 0.174ms | 0.836ms | 4.78x | 2.84ms | 16.32x | ||

| 0.00307ms | 0.532ms | 173.1x | 0.81ms | 263.8x | ||

| 0.0343ms | 0.872ms | 25.4x | 2.17ms | 63.2x | ||

| 0.040ms | 0.851ms | 21.2x | 2.31ms | 57.75x | ||

| Catapult | All | 0.265ms | 4.36ms | 16.45x | 12.0ms | 45.2x |

| 0.0050ms | 0.845ms | 167.8x | 1.79ms | 358x | ||

| 0.181ms | 0.972ms | 5.36x | 3.71ms | 20.49x | ||

| 0.0036ms | 0.574ms | 156.7x | 0.958ms | 266x | ||

| 0.035ms | 1.01ms | 28.42x | 2.72ms | 77.7x | ||

| 0.039ms | 0.960ms | 24.3x | 2.85ms | 73.1x |

-A Frictional impulse

In DART, friction impulses are solved by the boxed LCP method, using the same implementation of the boxed variant of the Dantzig algorithm found in the Open Dynamics Engine, and originally proposed in [3]. Boxed LCPs are not theoretically guaranteed to be solvable, but are quite common in practice because of their speed and high-quality results. We therefore extend our formulation in Section IV to compute the gradient of frictional impulse magnitudes found in a boxed LCP solver with respect to and . Similar to normal impulses, each frictional impulse is classified into one of the two states:

Clamping ()

If the relative velocity along the frictional impulse direction is zero, and friction impulse magnitude is below its bound, then any attempt to push this contact will be met with an increase in frictional impulse holding the point in place. This means the contact point is clamping, which behaves will be treated in the same way as clamped normal forces.

Bounded ()

If the frictional impulse magnitude is at its bound (either positive or negative) then the contact point is sliding or about to slide along this friction direction. Bounded frictional impulse is quite like “Separating” in normal impulse. The difference is that frictional impulses in are not zero but at a non-zero bound based on the corresponding normal impulses.

Bounded frictional impulse can be expressed by , where each row of contains a single non-zero value with the friction coefficient, , for the corresponding normal impulse index in .

We define to be the matrix with just the columns of corresponding to the indices in . Likewise, we define containing just the columns of that are bounded. If we multiply we get the joint torques due to the clamping constraint forces. Similarly, if we multiply we get the joint torques due to bounded friction impulses. Since , we can modify to take bounded frictional impulses into account:

With this one small change, all the formulation in Section IV works with frictional forces. An interesting observation is that the bounded frictional cases are analogous to the separating cases for the normal forces, in that the force value is constrained at (or ), where is the corresponding normal force for the same contact. The only way the bounded force values will change is through the change of corresponding clamping normal force, which needs to be accounted for when computing the gradients.

Just like the overall boxed LCP problem is not solvable, is no longer guaranteed to be exactly invertible. In order to support this, we need to use the pseudoinverse of during the forward pass, and the gradient of the pseudoinverse when computing Jacobians.

-B LCP stabilization

When is not full rank, the solution to is no longer unique. To grasp this intuitively, consider a 2D case where a box of unit mass that cannot rotate is resting on a plane. The box has two contact points, with identical contact normals, and because the box is not allowed to rotate, the effect of an impulse at each contact point is exactly the same (it causes the box’s upward velocity to increase). This means that both columns of (one per contact) are identical. That means that is actually only rank one. Let’s assume we need a total upward impulse of to prevent the box from interpenetrating the floor. Because is a low rank, we’re left with one equation and two unknowns:

It is easy to see that this is just . And that means that we have an infinite number of valid solutions to the LCP.

In order to have valid gradients, our LCP needs to have predictable behavior when faced with multiple valid solutions. Thankfully, our analysis in the previous sections suggests a quite simple and efficient (and to the authors’ knowledge novel) LCP stabilization method. Once an initial solution is computed using any algorithm, and the clamping set is found, we can produce a least-squares-minimal (and numerically exact) solution to the LCP by setting:

This is possible with a single matrix inversion because the hard part of the LCP problem (determining which indices belong in which classes) was already solved for us by the main solver. Once we know which indices belong in which classes, solving the LCP exactly reduces to simple linear algebra.

As an interesting aside, this “LCP stabilization” method doubles as an extremely efficient LCP solver for iterative LCP problems. In practical physics engines, most contact points do not change from clamping () to separating () or back again on most time steps. With that intuition in mind, we can opportunistically attempt to solve a new at a new timestep by simply guessing that the contacts will sort into and in exactly the same way they did on the last time step. Then we can solve our stabilization equations for as follows:

If we guessed correctly, which we can verify in negligible time, then is a valid, stable, and perfectly numerically exact solution to the LCP. When that happens, and in our experiments this heuristic is right of the time, we can skip the expensive call to our LCP solver entirely. As an added bonus, because is usually not all indices, inverting can be considerably cheaper than inverting all of , which can be necessary in an algorithm to solve the full LCP.

When our heuristic does not result in a valid , we can simply return to our ordinary LCP solver to get a valid set and , and then re-run our stabilization.

As long as results from our LCP are stabilized, the gradients through the LCP presented in this section are valid even when is not full rank.

-C Contact point and normal stabilization and gradients

To compute gradients through each contact point and normal with respect to , we need to provide specialized routines for each type of collision geometry, including collisions between spheres, capsules, boxes, and arbitrary convex meshes. Deriving the routines is a matter of straightforward algebra. However in order for well-defined gradients to exist at all, each collision must have precisely specified contact points and normals, so that we can compute well-behaved gradients.

We describe in this appendix how we produce well-defined contact points and normals, since this is a design choice. The gradients of these methods are left as an exercise to the reader, who is invited to check their work against our open-source implementation.

-C1 Mesh-mesh collisions

Mesh-mesh collisions only have two types of contacts: vertex-face and edge-edge collisions. The other cases (vertex-edge, vertex-vertex, edge-face, face-face) are degenerate and easily mapped into vertex-face and edge-edge collisions.

Mesh-mesh collision detection algorithms like Gilbert-Johnson-Keerthi or Minkowski Portal Refinement are iterative, and so produce imprecise collision points and normals that can vary depending on initialization. Both algorithms produce a separating “witness plane” as part of their output, which is a 3D plane that approximately separates the two meshes (as much as possible, if they’re intersecting). Going from an approximate separating witness plane to precisely specified vertex-face and edge-edge collisions in the general case is complex. Take all the points on object A that lie within some tiny of the witness plane, and map them into 2D coordinates within the witness plane. Do likewise with the points on object B. Now we have two convex 2D shapes, because any linear slice of a convex shape is itself convex. Throw out any vertices that are not on the 2D convex hull of the point cloud for A and B respectively. Call the resulting convex shapes “witness hulls” of A and B. Now any vertices on the witness hull of A that lie within the witness hull of B are vertex-face collisions from A to B. Analogously, any vertices on the witness hull of B that lie within the witness hull of A are face-vertex collisions from A to B. Finally, any edges of the witness hulls A and B that intersect are edge-edge collisions.

For all vertex-face collisions, during the forward simulation, the collision detector places a collision at the point of the vertex, with a normal dictated by the face under collision. The body providing the vertex can only influence the collision location , and the body providing the face can only influence the collision normal .

For all edge-edge collisions, the collision detector first finds the nearest point on edge A to edge B (call that ), and the nearest point on edge B to edge A (call that ). Then the contact point is set to the average of and , which is . The normal is given by the cross product of the two edges. That means changing of Object A can affect the contact normal and the contact location along the other edge from Object B. For this reason, we need to construct our Jacobians globally.

-C2 Sphere-sphere collisions

These are straightforward. We have sphere A, with center and radius , and sphere B, with center and radius . The contact normal is the normalized vector pointing from to . The contact point is a weighted combination of the two sphere centers, .

-C3 Mesh-sphere collisions

These can be divided into three categories: sphere-face collisions, sphere-edge collisions, and sphere-vertex collisions. Starting from the simplest, a sphere-vertex collision places the contact point at the vertex and the normal points from the vertex to the sphere center.

A sphere-edge collision places the contact point on the closest point to the sphere center along the edge. The contact normal points from the collision point to the sphere center.

A sphere-face collision gets the contact normal from the normal of the colliding face. Call the contact normal , and define the sphere center as and radius as . For the simplicity of downstream gradient computation, we then place the contact point at the point on the sphere, projected along with the contact normal towards the contact: .

-C4 Pipe-pipe collisions

In a capsule-capsule collision, when both capsule cylinders are colliding, we call that a pipe-pipe collision. Mechanically, this is very similar to an edge-edge collision, only with added radius variables and . Let be the nearest point on the centerline of pipe A to the centerline of pipe B. Let be the nearest point on the centerline of pipe B to the centerline of pipe A. Then we say the contact point is . The contact normal points from to .

-C5 Pipe-sphere collisions

These look a lot like pipe-pipe collisions, except that is now fixed to the center of the sphere. Let be the nearest point on the centerline of the pipe to . Then we say the contact point is . The contact normal points from to .

-C6 Pipe-mesh collisions

This breaks down into two types of contact: vertex-pipe, and edge-pipe. Face-pipe contacts reduce to two edge-pipe contacts.

For vertex-pipe contacts, we put the contact point at the vertex, and point the normal towards the nearest point on the centerline of the pipe.

For edge-pipe contacts, we treat them as pipe-pipe contacts where the radius of the first pipe is 0.

-C7 Gradients

Once all the contact behavior is firmly established, it’s just a matter of rote calculus to compute derivatives. We refer the reader to our open source code for the implementation of those derivatives. Once a stable collision detection system and its derivatives are implemented that, it’s possible to efficiently compute .

-D Jacobian Benchmark Evaluation

In addition to speed, we are interested in the accuracy of our Jacobians, as gradients computed via finite differencing become more inaccurate as the system becomes more complex, which can lead to instability in optimization. As another baseline, we apply Ridders’ method [36] to efficiently calculate Jacobians to a higher-order error than central differencing, using the stopping criterion given in [33].

Table III contains the full benchmark performance of our analytical Jacobians against central differencing and the accurate Ridders’ extrapolated finite differences. For each environment, we compare the speed of evaluation of each individual component Jacobian as well as the total time.

-E Calculating the Bounce Approximation Jacobian

Recall the matrix that transforms contact forces to joint forces. By conservation of momentum , leading to:

| (29) |

Our matrix notation is somewhat misleading here, because we do not want our approximation to capture off-diagonals of . Because we construct our approximate by assuming each bounce is independent, we end up with 0s on the off-diagonals, but we know that assumption to be false. We just want to find a where the above equation matches the diagonals as closely as possible, ignoring other elements. The following derives this relation in closed form.

Since we are only interested in enforcing the diagonal entries, so we can write out a series of linear constraints we would like to attempt to satisfy. Let denote the ’th column of . Recall that .

| (30) |

We would like to find some approximate matrix that satisfies all of the above constraints as closely as possible. Stated directly as a least-squares optimization object:

| (31) |

The solution to this optimization can be found without iterative methods.

Let be the ’th column of , and be the ’th row and the ’th column of . Note that:

| (32) |

| (33) |

It becomes clear that we could construct a long vector , which will map to every column of placed end to end. We can also construct a matrix where every column is the vectors placed end to end (iterating over ). Now if we take the values of as entries of a vector , we can write our optimization problem as a linear equation:

| (34) |

This is a standard least squares problem, and is solved when:

| (35) |

Once we have a value of , we can reconstruct the original matrix by taking each column of the appropriate segment of .

This is almost always an under-determined system, and we want to default to having as close to as possible, rather than as close to 0 as possible. We can slightly reformulate our optimization problem where the least square objective tries to keep the diagonals at 1, rather than 0. If we define an arbitrary vector (for “center”), we can use the identity:

| (36) |

| (37) |

If we set to the mapping for , then we get a solution that minimizes the distance to the identity while satisfying the constraints, measured as the sum of the squares of all the terms.

Also, remember that to get our Jacobian with respect to velocity, we simply:

| (38) |

And that should approximately solve the “gradient bounce” problem. On timesteps where there is only a single bouncing contact, this will provide an exact solution. With more than one bounce in a single frame, this may be approximate.

-F Analytical derivatives through Featherstone

We present the derivation of analytical derivatives computation through the Featherstone algorithm. Although the intellectual computation should be credited entirely to [25] and [7], we specialized the derivations to obtain , , and for our implementation, rather than the entire inverse and forward dynamics. The detailed derivation might be of interest to some readers.

The partial derivative of the inverse of joint space inertia matrix can be computed from the partial derivative of the joint space inertia matrix through the relation [7]:

| (39) |

Algorithm 1 and 2 show the recursive algorithms to compute and , respectively. In Algorithm 3, the derivatives of the Coriolis force with respect to the joint position and the joint velocity are given.