Fast frequency modulation is encoded according to the listener expectations in the human subcortical auditory pathway

Abstract

Expectations aid and bias our perception. In speech, expected words are easier to recognise than unexpected words, particularly in noisy environments, and incorrect expectations can make us misunderstand our conversational partner. Expectations are combined with the output from the sensory pathways to form representations of speech in the cerebral cortex. However, it is unclear whether expectations are propagated further down to subcortical structures to aid the encoding of the basic dynamic constituent of speech: fast frequency-modulation (FM). Fast FM-sweeps are the basic invariant constituent of consonants, and their correct encoding is fundamental for speech recognition. Here we tested the hypothesis that subjective expectations drive the encoding of fast FM-sweeps characteristic of speech in the human subcortical auditory pathway. We used fMRI to measure neural responses in the human auditory midbrain (inferior colliculus) and thalamus (medial geniculate body). Participants listened to sequences of FM-sweeps for which they held different expectations based on the task instructions. We found robust evidence that the responses in auditory midbrain and thalamus encode the difference between the acoustic input and the subjective expectations of the listener. The results indicate that FM-sweeps are already encoded at the level of the human auditory midbrain and that encoding is mainly driven by subjective expectations. We conclude that the subcortical auditory pathway is integrated in the cortical network of predictive speech processing and that expectations are used to optimise the encoding of even the most basic acoustic constituents of speech.

Introduction

Expectations can have dramatic effects on sensory processing [1]. A prime example is speech perception, where expectations influence processing on many different levels. For instance, word recognition is often completed before the entire word has been heard [2], and strongly affected by semantic context [3], word prevalence [4], and prior knowledge [5].

Predictive coding is one of the leading frameworks explaining how expectations affect perceptual encoding [6, 7, 8]. Predictive coding has been suggested to form the basis for encoding of speech in the auditory system [5, 9, 10, 11, 12]. Predictive coding formulates perception as a constant process of hypothesis testing [7, 8]: neural centres at high-order levels perform predictions on the sensory world via a generative model, while neurons at lower levels test these predictions against the actual sensory input [13, 14]. A key hypothesis of the framework is that sensory neurons at lower levels do not encode the features of the stimuli but prediction error: the difference between the sensory input and the expectations of the observer on that sensory input. When the input matches the expectations, prediction error neurons do not need to communicate with higher-level centres, speeding up recognition and optimising the neural code.

In the human auditory cortex, speech sounds are encoded as prediction error [15, 16, 17, 18, 19, 20, 21]; whether that is also the case in the subcortical auditory pathway is unclear. Anatomical and physiological properties make the subcortical auditory pathway a prime candidate for predictive coding: The subcortical auditory pathway contains massive cortico-thalamic and cortico-collicular efferent systems [22, 23, 24, 25] that are well suited to transmit complex expectations to subcortical nuclei. Moreover, neural populations in the subcortical auditory pathway are endowed with much shorter time constants and faster access to acoustic information than neural populations in the cerebral cortex [26]. This feature renders subcortical auditory pathways better suited to test hypotheses on the incoming fast dynamics of speech sounds [27, 28, 29].

Stimulus-specific adaptation (SSA) has been used as a first attempt to test for predictive coding in the subcortical pathways. SSA is a phenomenon where individual neurons adapt to repetitions of a pure tone but show recovered responses to a frequency deviant [30]. SSA is present in single neurons of the rodent’s auditory thalamus (medial geniculate body; MGB) [31, 32, 33, 34] and auditory midbrain (inferior colliculus; IC) [31, 35, 36, 37, 38, 39], and in neural populations of the human IC and MGB [40, 41, 42, 43, 37, 44]. SSA can, however, be explained both by neural habituation or predictive coding (see [45] for a review). In the case of pure tones, we have recently used a novel SSA paradigm which revealed that SSA in human IC and MGB is driven largely by subjective expectations of the listeners, as hypothesised by predictive coding but not by neural habituation [40].

In contrast to pure tones, speech sounds comprise highly dynamic elements. These elements cannot be fully characterised by mixtures of static pure tones. The most ubiquitous of these dynamic elements are fast frequency-modulated (FM)-sweeps [46, 47]. Combinations of three fast FM-sweeps of different average frequency acoustically characterise consonants preceding a vowel. Accurately perceiving the modulation direction and rate of those FM-sweeps is crucial for speech comprehension; for instance, the phonemes /ba/ and /da/ differ only on the modulation direction of one of their comprised FM-sweeps [46].

Whilst pure tones are encoded according to their frequency along the tonotopic axis already at the basilar membrane [48], FM-sweeps are encoded in FM-direction and FM-rate selective neurons [49]. In humans, the lowest level in the auditory hierarchy with evidence for fast FM-direction [50, 51] and rate [52] selectivity is in auditory cortex. Although FM-sensitive neurons have been reported in the rodent IC and MGB [53, 54, 55, 49, 56], it is currently unclear whether FM is also encoded in subcortical stations of the human auditory pathway. If that was the case, an important question is whether FM-sweeps, as basic constituent of speech sounds, are encoded according to the subjective expectations of the listener.

Given the paramount importance of fast FM-sweeps for speech comprehension and the privileged temporal properties of the subcortical elements of the auditory pathway, we addressed two key questions. First, whether FM-rate and FM-direction are already encoded in neural populations of the human IC and MGB. Second, whether fast FM-sweeps are encoded in IC and MGB according to the principles of predictive coding; i.e., as prediction error with respect to the subjective expectations of the listener. To address these questions we measured blood-oxygen level dependent (BOLD) responses in two key subcortical structures of the auditory pathway, IC and MGB, while participants listened to sequences of FM-sweeps. The FM-sweeps were constructed as closely as possible to FM-sweeps characteristic of speech sounds, and designed in such a way that they all elicited the same pitch percept and the same average activity along the tonotopic axis [57, 58]. To test if encoding was mediated by predictive coding, we introduced abstract rules that assigned different likelihoods to different FM-sweeps without affecting stimulus statistics [40]. We reasoned that, if FM-sweeps were encoded according to their objective properties, an FM-sweep embedded in a specific statistical context should elicit the same activation no matter the expectations that participants have on its occurrence. Reversely, if FM-sweeps were encoded according to the principles of predictive coding, BOLD responses should directly depend on how well the sensory input fits the expectations of the listeners.

Once established that fast FM-sweeps are encoded as prediction error in IC and MGB, we addressed two additional questions. First, we investigated whether the same encoding mechanisms operate in primary (or lemniscal) and secondary (or non-lemniscal) MGB. Previous findings in the animal literature indicated that SSA and predictive coding are stronger in secondary subdivisions of the subcortical pathways (e.g., [31], a finding that is not apparent in humans [40, 41]). Second, we tested whether the topographic distribution of neural populations encoding prediction error in IC and MGB were replicated in FM-sweeps and pure tones. Similar topographies in both stimulus families would indicate a common mechanism for subcortical predictive coding.

Results

Experimental Design and Hypotheses

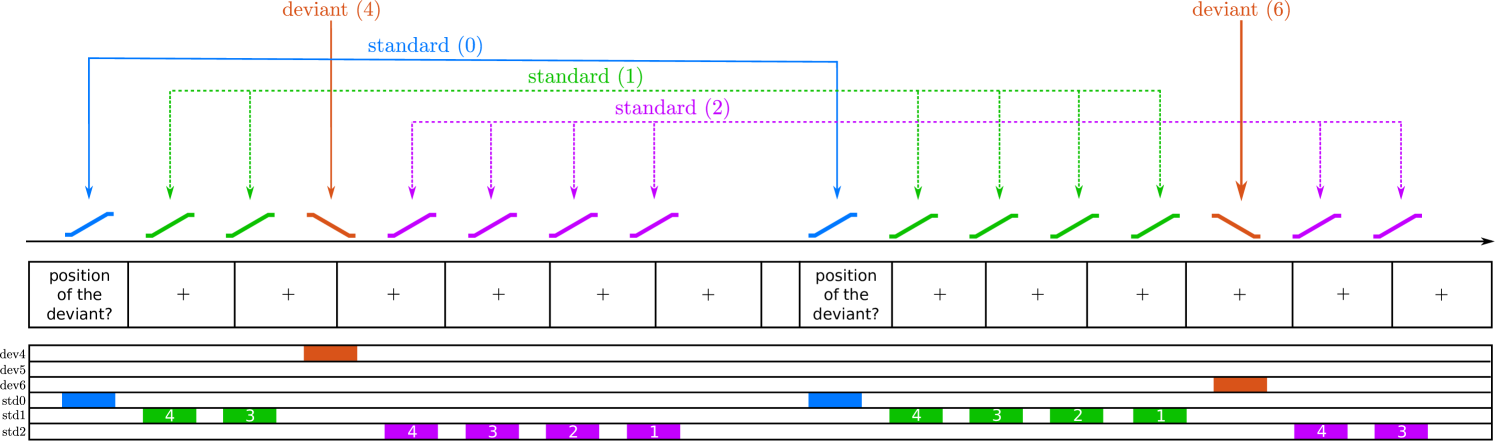

The stimuli were three fast FM-sweeps: One sweep with a fast negative FM-rate (frequency span Hz), one with a fast positive FM-rate (frequency span Hz), and one with a slow positive FM-rate (frequency span Hz; Fig 1A-B). We used 50 ms long sweeps in the frequency range of Hz so that they had the typical properties of formant transitions in speech [47]. The sweep average frequencies were adjusted so that all FM-sweeps elicited the same average activity along the tonotopic axis and were perceived as having the same pitch [58, 57]; this design guaranteed that FM-direction and FM-rate selective neurons were necessary to differentiate between any two sweeps in the paradigm.

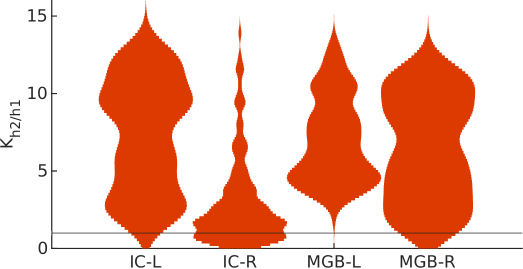

We arranged the stimuli in sequences of 8 FM-sweeps with 7 repetitions of the same sweep (standard) and one deviating sweep (deviant) (Fig 1C). Participants were instructed to report, with a button press, the position of the deviant within the sequence as fast and accurately as possible after identifying the deviant. Each sequence was characterised by the position of the deviant and : the absolute difference between the frequency spans of the deviant and the standard. With the three FM-sweeps we built three different combinations: one with Hz, where the two FM-sweeps have the same direction (up) but different modulation rate; one with Hz, where the two FM-sweeps have different rate and different direction; and one with Hz, where to two FM-sweeps have the same modulation rate ( Hz) but different direction (Fig 1B).

Expectations for each of the deviant positions were modulated by two abstract rules that were disclosed to the participants: 1) all sequences have a deviant, and 2) the deviant is always located in position 4, 5, or 6. The three deviant positions were used the same number of times along the experiment, so that the three deviant positions were equally likely at the beginning of the sequence. Therefore, the likelihood of finding a deviant in position 4 () after hearing 3 standards is . However, if the deviant is not located in position 4, it must be located in either position 5 or 6, which makes the likelihood of finding a deviant in position 5 () after hearing 4 standards . The likelihood of finding a deviant in position 6 () after hearing 5 standards is .

To address the first research question, whether neural populations of human IC and MGB encode FM-rate and FM-direction, we tested whether these two nuclei show SSA to the FM-sweeps used in the experiment; namely, if neural responses in IC and MGB adapt to repeated FM-sweeps while preserving high responsiveness to FM-sweeps that deviate from the standards in FM-rate or FM-direction (Fig 1D). Since all sweeps were designed to elicit the same average activation across the tonotopic axis and elicited the same pitch percept, neural populations showing SSA to these FM-sweeps necessarily comprise neurons that are sensitive to FM-rate and FM-direction.

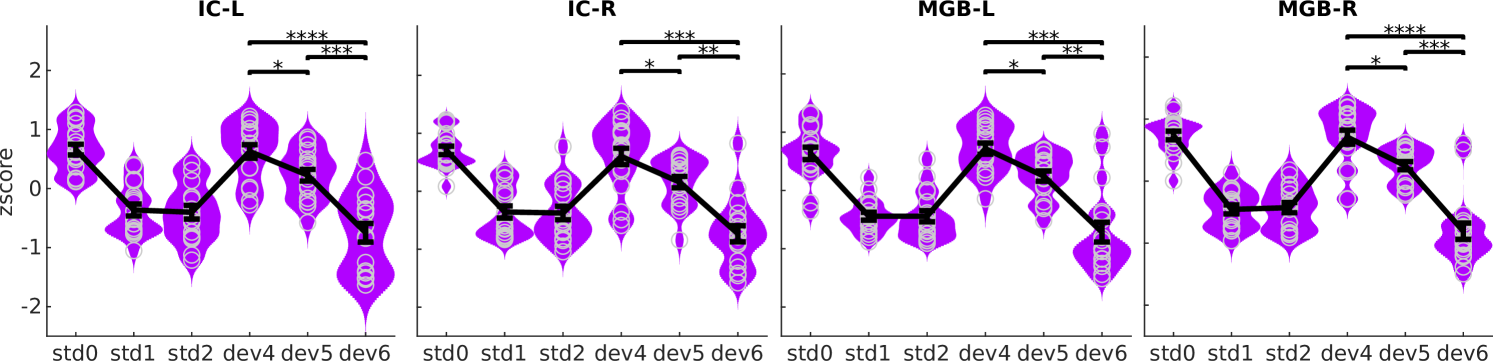

To address the second research question, whether IC and MGB responses encode FM-sweeps as prediction error with respect to the listener expectations, we used Bayesian model comparison. We considered two models. The first model assumed that adaptation to repeated fast FM-sweeps was mostly driven by habituation to the stimulus sequence properties, independently of participant’s expectations; namely, that neural populations habituate to repetitions of the standard, but show recovered responses to deviant irregardless of their position (habituation hypothesis; Fig 1E, h1). The second model assumed that adaptation was driven by predictive coding; namely, that neural responses to the deviants reflect prediction error with respect to the expectations of the participants (predictive coding hypothesis; Fig 1E, h2).

We measured BOLD responses in participants’ IC and MGB with an fMRI-sequence at 3-Tesla. The sequence was optimised to measure BOLD at relatively high spatial resolution (1.75 mm isotropic) while maintaining a high SNR (around 25).

Behavioural Responses

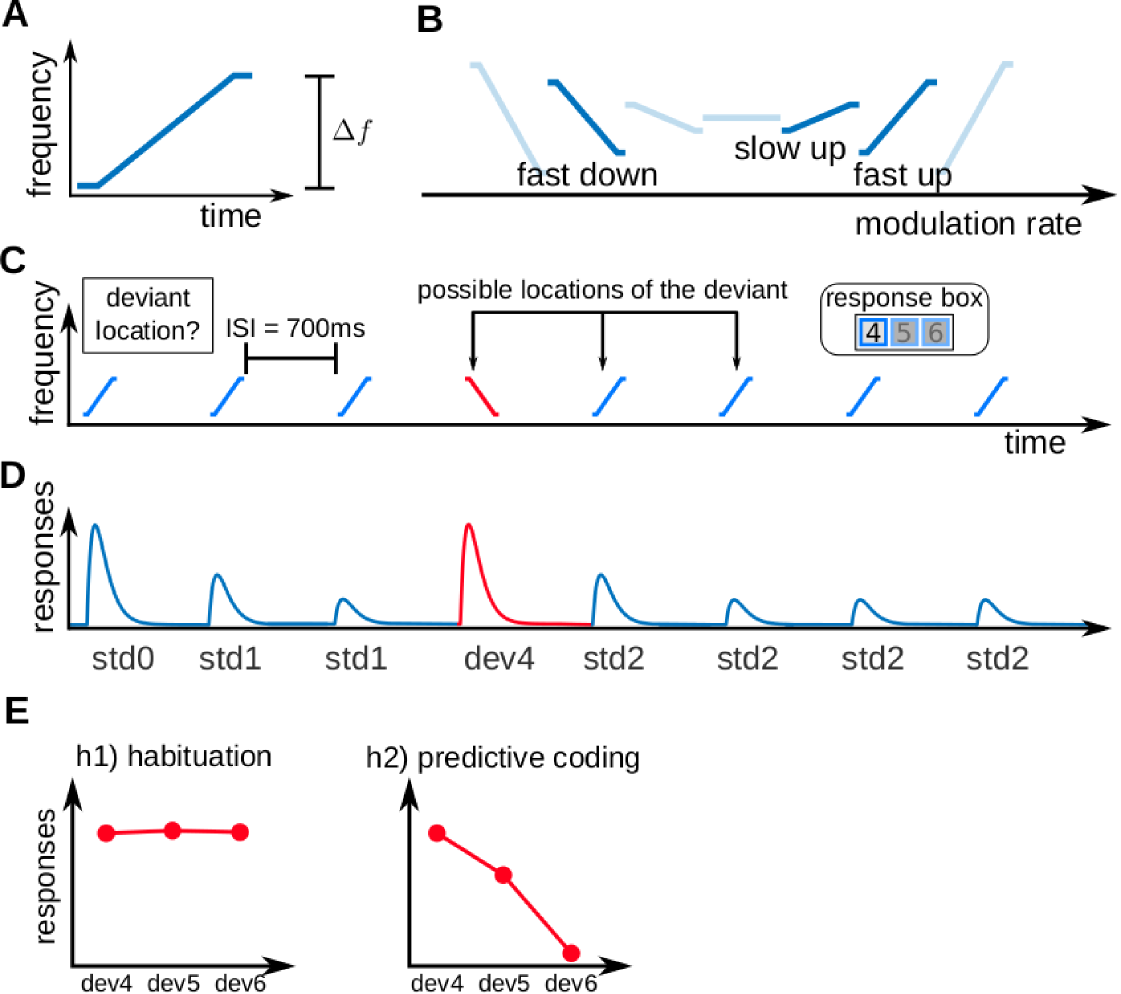

All participants showed accuracies over 0.96 to all deviant positions (Fig 2A). Accuracy was slightly higher for the two more expected deviant positions, but differences between conditions were not significant (, uncorrected). Reaction times (Fig 2B) showed a behavioural benefit of expectations: Participants reacted faster to more expected deviants (average ms, ms and ms for deviants at positions 4, 5, and 6, respectively (Fig 2C); all differences were significant with , corrected for 3 comparisons).

Human IC and MGB show stimulus specific adaptation (SSA) to FM-sweeps

We first studied whether the IC and MGB show SSA to fast FM-sweeps to test if the two nuclei are sensitive to FM-rate and FM-direction in humans. We estimated BOLD responses using a general linear model (GLM) with 6 different regressors: the first standard (), the standards after the first standard but before the deviant (), the standards after the deviant (), and deviants at positions 4, 5, and 6 (, , and , respectively; Fig 1D). The conditions and were parametrically modulated [59] according to their positions to account for possible variations in the responses over subsequent repetitions (see Methods and Fig S1).

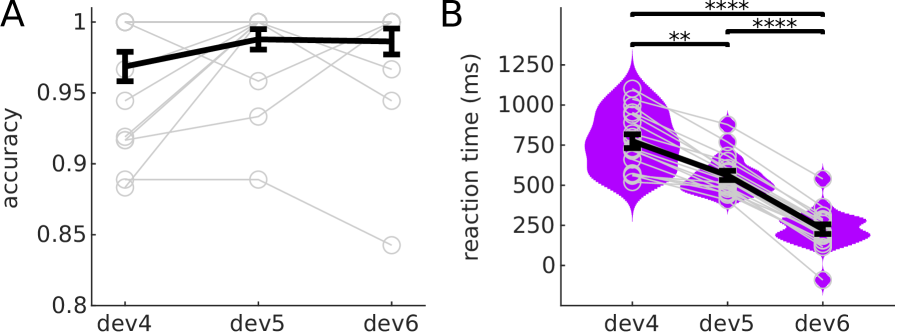

To compute SSA, we determined which voxels within the ICs and MGBs adapted to the standard (i.e., adaptation) and recovered responsiveness to deviants (i.e., deviant detection). SSA regions were then defined as the intersection between adaptation and deviant detection regions. ICs and MGBs were identified based on structural MRI data and an independent functional localiser (see Methods; IC and MGB ROIs; coloured patches in Fig 3). Within these ROIs, we used non-parametric ranksum tests (; one sample per participant) to find which voxels showed significant adaptation to repeated standards (contrast ). The associated -maps were thresholded so that the false-discovery-rate . Surviving voxels constituted the adaptation ROIs (blue and purple patches in Fig 3). The same procedure was used to delimit the deviant detection ROIs (red and purple patches in Fig 3): the set of voxels within each anatomical ROI that responded significantly stronger to deviants than to repeated standards (contrast ; note that we compare the responses to the repeated standards with as this is the deviant position for which participants have the lowest expectation). The four anatomical ROIs showed significant adaptation (peak ) and deviant detection (peak ; cluster size, exact peak -values and MNI coordinates are shown in Table 1; all -values corrected for four comparisons).

SSA regions were computed combining the unthresholded adaptation and deviant detection -maps. The uncorrected -value associated to SSA for a given voxel was . SSA -maps where thresholded to to compute the SSA ROIs (Fig 3, purple). The four anatomical ROIs had extensive SSA regions (cluster sizes larger than 90 ; peak ; exact peak -values and MNI coordinates are shown in Table 1; all -values corrected for four comparisons).

| contrast | ROI | cluster size | MNI coordinates (mm) | peak-level -value |

|---|---|---|---|---|

| adaptation | left IC | 130 voxels | ||

| right IC | 124 voxels | |||

| left MGB | 152 voxels | |||

| right MGB | 146 voxels | |||

| deviant detection | left IC | 92 voxels | ||

| right IC | 91 voxels | |||

| left MGB | 136 voxels | |||

| right MGB | 140 voxels | |||

| SSA | left IC | 91 voxels | ||

| right IC | 91 voxels | |||

| left MGB | 136 voxels | |||

| right MGB | 140 voxels |

Significant SSA was also found at the single-subject level in 15 of the 18 participants ( for each of the 15 participants, corrected for the 596 voxels included in a global subcortical auditory ROI that comprised bilateral IC and MGB), but not all participants showed significant SSA in all ROIs (IC-L: 8 participants, ; IC-R, MGB-L, MGB-R: 6 participants each, with ; all -values corrected for the number of voxels in the ROI and further corrected for four ROIs).

Human IC and MGB are sensitive to FM-direction and FM-rate

In the next step, we specifically tested whether the IC and MGB are similarly sensitive to FM-rate and FM-direction. To do that we analysed the data corresponding to: 1) trials where the standard and deviant differed only in modulation direction but not in absolute modulation rate; and 2) trials where the standard and deviant differed only in modulation rate but not in direction. If IC and MGB encode direction and rate, we would expect similar results in both partitions of the data. Conversely, if human IC and MGB are only sensitive to one of the two properties, we would expect null effects in the partition of the data where the standard and deviants differ only in that property.

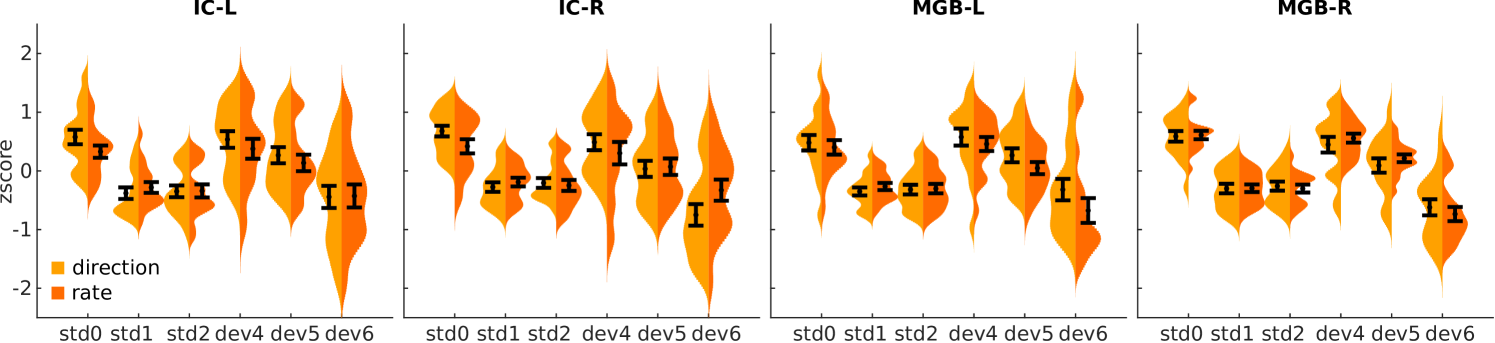

Results were similar in both partitions of the data (Fig 4), demonstrating that the human IC and MGB encode both FM-direction and FM-rate.

We further corroborated that the levels of SSA were comparable for both types of FM changes at the single-subject level. In order to characterise FM-sensitivity with a number for each subject and FM-sweep combination, we used the SSA index [30] (Eq (1); note that is equivalent to the deviant detection contrast used in Fig 3).

| (1) |

We measured the difference in to FM-direction () and FM-rate () in the voxels of the subject-specific SSA regions calculated in the previous section for each of the 15 subjects for which we obtained significant SSA. If FM-direction and FM-rate are both encoded in IC and MGB, we would expect no difference between these two partitions of the data. We measured the difference using Cohen’s ), where is the average of and is the pooled standard deviation. The difference ranged between and across participants. The expected value of the difference () overlapped with zero, indicating once again that both FM-direction and FM-rate are already encoded in the subcortical auditory pathway.

Expectations drive the encoding of FM-sweeps in IC and MGB

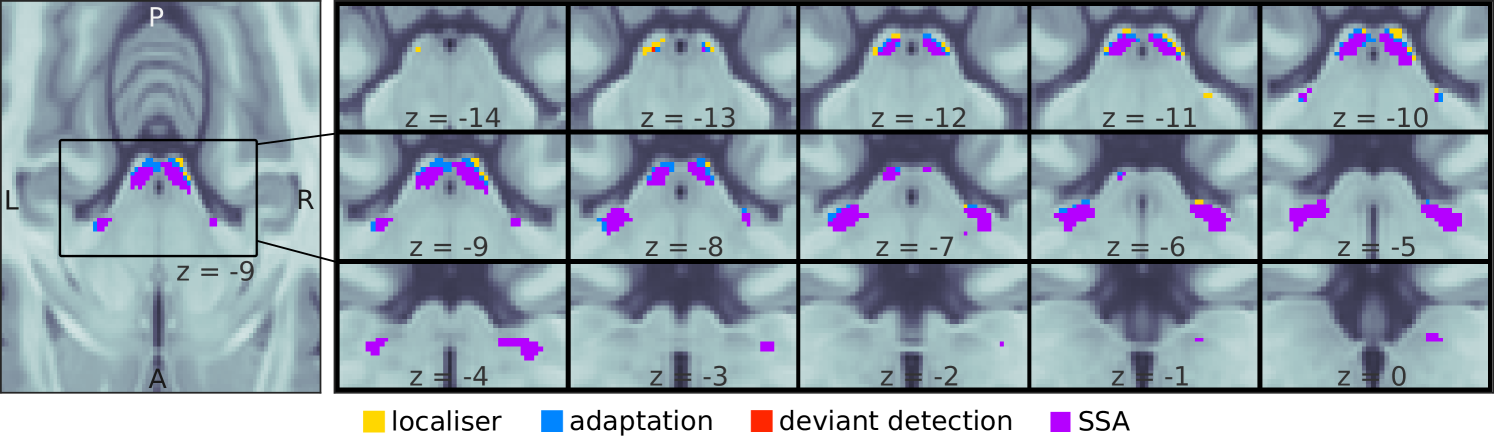

To address our second question, we evaluated whether the average BOLD responses to deviants in the three different positions were affected by participant’s subjective expectations within the SSA regions. In congruence with the predictive coding hypothesis (Fig 1E, h2), the response profile showed reduced responses for more expected deviants (Fig 5).

Formal statistical testing confirmed that responses to different deviant positions were different in all ROIs for all contrasts among deviant positions: ( and ), ( and ), and ( and ; all -values corrected for 12 comparisons). Exact -values and effect sizes are listed in Table 2. All statistical tests included one sample per participant, ROI, and deviant position.

| IC-L | ||||

| dev5 | dev6 | |||

| dev4 | ||||

| dev5 | ||||

| IC-R | ||||

| dev5 | dev6 | |||

| dev4 | ||||

| dev5 | ||||

| MGB-L | ||||

| dev5 | dev6 | |||

| dev4 | ||||

| dev5 | ||||

| MGB-R | ||||

| dev5 | dev6 | |||

| dev4 | ||||

| dev5 | ||||

To corroborate that differences were present at the single-subject level we run a correlation analysis for each of the 15 participants for which we obtained significant SSA. In each participant, we computed the Pearson’s correlation between the BOLD responses elicited by each deviant location with its likelihood of occurrence (namely, for deviant 4, for deviant 5, and for deviant 6). If BOLD responses reflect prediction error, we would expect a negative correlation between the likelihood and the responses. We found significantly negative correlations in all 15 participants (, all ; all Pearson tests had samples, 3 per run).

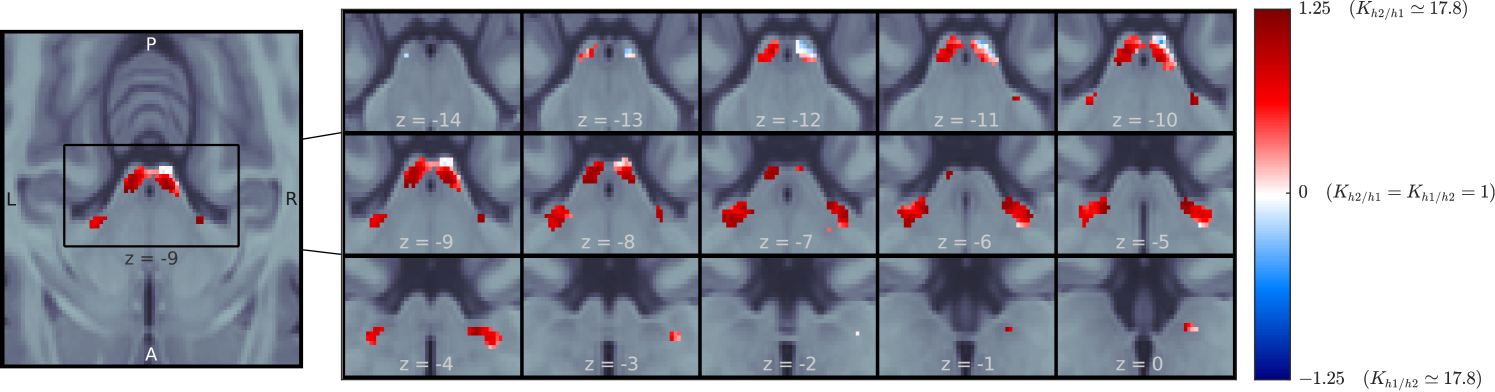

FM-sweeps are encoded as prediction error in the IC and MGB

We used Bayesian model comparison to formally evaluate the response properties in each voxel of the IC and MGB ROIs. This approach provides for a quantitative assessment of the likelihood that each of the two hypotheses (Fig 1E) can explain the responses in each voxel. This analysis is sensitive to possible region-specific effects that could have been averaged out when aggregating the z-scores across voxels in each ROI.

Following the methodology described in [60, 61], we first calculated the log-likelihood of each model in each voxel of the two ICs and MGBs in each participant. Each model yields different predictions on the relative amplitudes to different positions in the sequences (Fig 1E). We tested h1 and h2 to adjudicate between the habituation and predictive coding explanations of the responses. H1 assumed an asymptotic decay of the standards and recovered responses to the deviants; h2 assumed that the responses to both deviants and standards would depend on the participant’s expectations (Fig 6; for exact values, see Methods). Participant-specific log-likelihoods were used to compute the Bayes factor (i.e., the ratio of the posterior likelihoods) between h1 and h2.

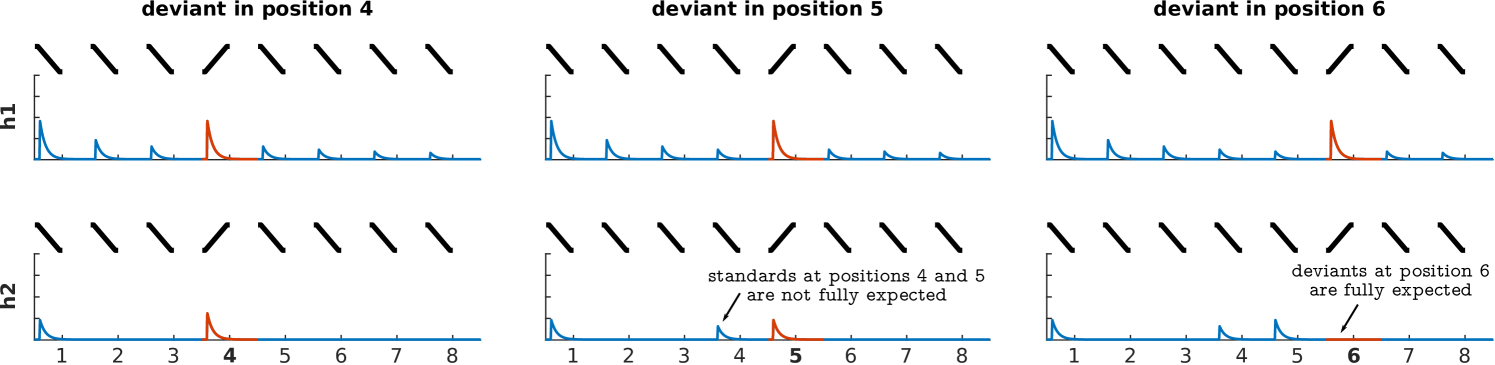

H2 was the best explanation for the data in the majority of voxels of the four ROIs (Figures 7 and 8): h2 was more likely than h1 in 99% and 80% of the left and right IC, respectively, and in all voxels of the left and right MGB.

To test whether the effect was present at the single-subject level, we computed independently for each subject in the subject-specific bilateral IC and MGB. Since we performed the group analyses over the entire ROIs (and not only the SSA regions), here we used the full anatomical ROIs of each participant. We measured for how many voxels within each region and participant h2 was the better explanation of the data. H2 was the better explanation of the data (more than 50% of voxels) in 16 (IC-L), 13 (IC-R), 13 (MGB-L), and 15 (MGB-R) participants. In 7 (IC-L), 5 (IC-R), 10 (MGB-L), and 8 (MGB-R) participants H2 was the better explanation even in more than 75% of voxels.

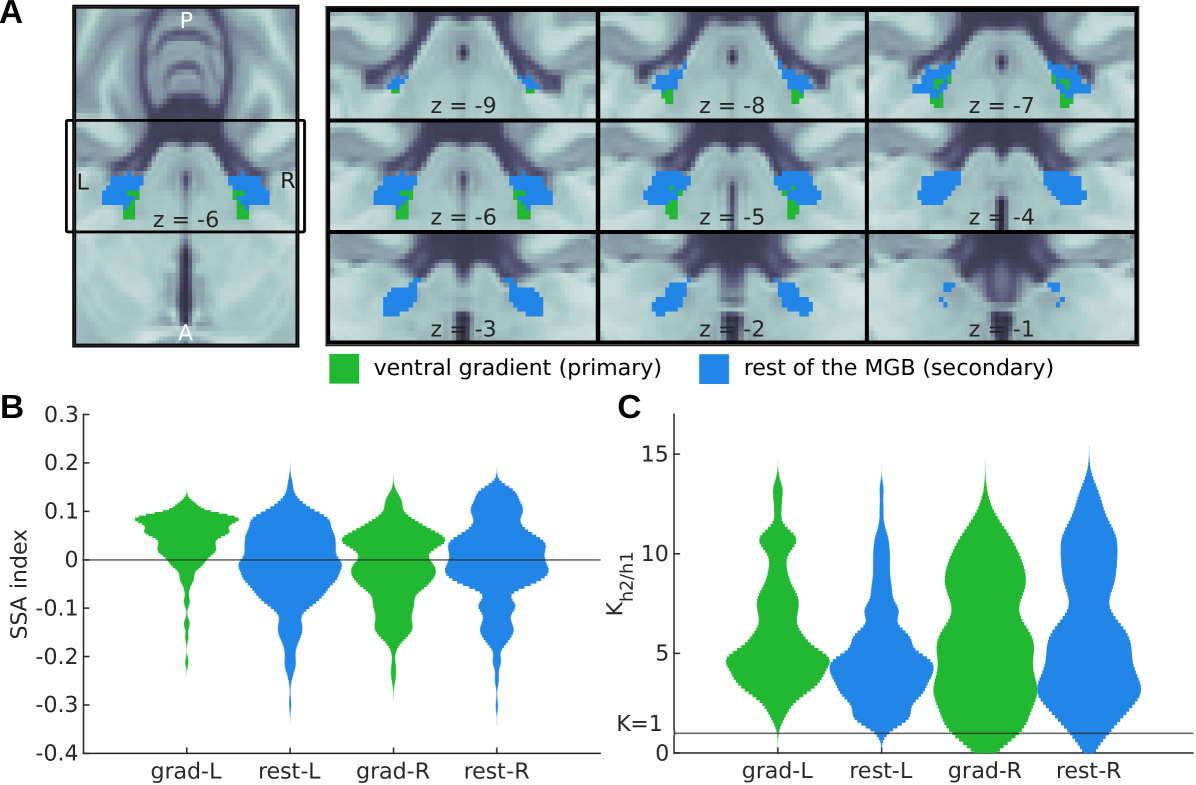

FM-sweeps are encoded as prediction error in primary and secondary MGB

The auditory pathway is anatomically subdivided into two sections: the primary (lemniscal) or secondary (non-lemniscal) pathways. The primary pathway is characterised by neurons that carry auditory information with high fidelity and it is generally regarded as responsible for the transmission of bottom-up sensory input [48]. The secondary pathway has wider tuning curves and it is generally regarded as responsible for the integration of contextual and multisensory information [48].

Both IC and MGB comprise regions that participate in both, the primary and secondary pathways [48]. The primary subdivision of the IC is its central nucleus, while the cortices constitute the secondary subdivisions. The primary subdivision of the MGB is its ventral section, while the medial and dorsal sections constitute the secondary subdivisions.

In rodents, SSA and prediction error to pure tones are significantly stronger in secondary subdivisions (e.g., [31]). In humans, prediction error is similarly strong in primary and secondary MGB for pure tones [40]. Here we test for differential representations of prediction error to FM-sweeps in MGB.

Distinguishing between the primary and secondary subsection of the IC and MGB non-invasively is technically challenging [62]. A recent study [63] distinguished two distinct tonotopic gradients of the MGB. The ventral tonotopic gradient was identified as the ventral or primary (vMGB) subsection of the MGB (see Fig 9A, green). Although the parcellation is based only on the topography of the tonotopic axes and their anatomical location, the region is the best approximation to-date of the vMGB in humans. No parcellation of the IC is available to-date.

Both primary and secondary subdivisions of bilateral MGB showed SSA. SSA strength was measured in each voxel using the SSA index (Eq (1)). Distributions of the across the voxels of each of the subdivisions were comparable in both hemispheres (Fig 9B) demonstrating that SSA is not confined to nor stronger in the secondary MGB.

Predictive coding (h2) was the best explanation for the responses of all voxels in the two subdivisions of the left MGB, and in 95% and 97% of the primary and secondary subdivisions of the right MGB, demonstrating that FM-sweeps are encoded as prediction error in both, primary and secondary subdivisions of bilateral MGB. Moreover, the distributions of the Bayes’ factor between the predictive coding (h2) and adaptation (h1) hypotheses were comparable across subdivisions (Fig 9C).

Prediction error to FM-sweeps and pure tones has similar topographic distributions in the IC

To study whether the same neural populations are in charge of encoding prediction error to FM-sweeps and pure tones, we compared the topographic distribution of the Bayes factor between the h2 and h1 in our data with the topographic distribution of the Bayes factor we obtained in a previous experiment, where we measured BOLD responses to the same experimental paradigm as here but using pure tones [40]. We computed the correlation between both across voxels of each of the four ROIs, as defined by the anatomical atlas from [64].

Distribution of to both families of stimuli was strongly correlated across voxels of bilateral IC (left, , ; right, , ; -values corrected for 4 comparisons), but not across voxels of the MGBs (left, , ; right, , ; uncorrected -values).

Discussion

The effects of expectations on speech recognition are readily evident in our daily lives. However, the neural mechanisms underlying the integration of expectations in at early stages of the speech processing pipeline are poorly understood. Here we have investigated how fast FM-sweeps, the dynamic building blocks of speech sounds, are encoded in the human subcortical auditory pathway, and how the subjective expectations of the listener influence their processing. Our study provided four main findings: first, we showed that the human IC and MGB comprise FM-direction and FM-rate selective neuronal populations. Second, we showed that responses in IC and MGB were driven by subjective expectations of the participants, demonstrating that the IC and MGB are integrated in a global network of predictive coding. The findings were robust and present at the single-subject level, demonstrating the generalisation power of the result. Third, we showed that the expectations determined the responses to FM-sweeps in primary and secondary subdivisions of bilateral MGB. Last, we showed that the topographic distribution of neural populations encoding the FM-sweeps as prediction error was similar to that of pure tones in the IC.

Combined, our results provide first demonstration that the human IC and MGB are actively engaged in the predictive processing of the building blocks of speech, fast FM-sweeps. This confirms the long-standing hypothesis that predictive coding combines high-level expectations with the exquisite temporal properties of the subcortical auditory pathway to promote the encoding of low-level features of the speech signal [65, 28]. This mechanism might be responsible for boosting encoding efficiency and aiding speech recognition.

Neurons that respond selectively to FM-direction and FM-rate have been located in rodents in the IC [66, 67, 68], MGB [54, 49], and auditory cortex [53, 55, 69, 56]. In contrast, FM-selectivity has only been reported in humans in auditory cortex [52] or higher-order areas of the cerebral cortex [50, 51]. Here, we have established that neural populations in the human IC and MGB show SSA to FM-direction and FM-rate. Since our FM-sweeps were matched in duration, pitch, and expected elicited activity along the tonotopic axis, this finding provides first evidence for FM-selectivity as early as in the IC in humans.

Animal studies have extensively shown that the SSA index to pure tones in IC and MGB increases with increasing rarity and frequency difference of the deviant with respect to the standard [70, 71, 72, 73, 39, 74, 33, 34, 75]. These studies implicitly assume that sensory neurons form expectations based on the local statistics of the stimuli. Whether adaptation driven by such potential expectations is a true reflection of predictive coding is, however, still a matter of debate [76, 77]. Modelling studies have demonstrated that identical behaviours can be produced by synaptic fatigue without the need of expectations [78, 79, 80]. Manipulating expectations orthogonally to stimulus regularities is the only way to assess if prediction error is computed with respect to a global model of the sensory world [45].

To-date, the only evidence (see [45] for a review) that subcortical nuclei encode stimuli according to subjective expectations independently of stimulus regularities was provided by our previous study on pure tones in human IC and MGB [40]. Here we used fast FM-sweeps that were explicitly designed to elicit the same activation across the tonotopic axis [58] to ensure that participants had to make use of FM-direction and FM-rate selective neurons to differentiate the deviant from the standards. The current findings demonstrate that the same principles apply to the encoding of dynamic FM-sweeps, even though FM is decoded much later than pure tone frequency in the auditory system [49].

Our results also showed that the topographic distribution of voxels encoding pure tones and FM-sweeps according to the principles of predictive coding were highly correlated in the IC, but not in the MGB. This divergence might indicate a different functional role of the IC and the MGB with respect to both families of stimuli; however, it might also be caused by a greater variability in the anatomical location and orientation of the MGB across subjects [62] and should be considered with caution until replications are available.

Although the expectations we induced in our participants had a relatively simple structure, they had a comparable level of abstraction as expectation stemming from grammatical rules [81], semantic context [3], or familiarity with the speaker’s style [82]. An integrated inverted hierarchy could propagate linguistic predictions all the way to formant transitions [45, 12, 28], and use neural centres in the IC and MGB to compute prediction error with respect to these predictions.

The expectations induced by our paradigm are most likely generated in the cerebral cortex. However, since we optimised our paradigm to study prediction error rather than the generation of expectations, we cannot test whether the subcortical responses we measured are driven or not by corticofugal projections. This possibility would be consistent with the massive corticofugal connections from cerebral cortex to MGB and IC [25, 83], and with results from animal studies where the deactivation of unilateral auditory cortex [32] or the TRN [84] led to reduction of SSA in the ventral MGB (but also see contradictory findings in non-lemniscal MGB [74] and non-lemniscal IC [85]).

Despite the fact that the MGB is at a higher processing stage than the IC, we found similar prevalence of predictive coding in both nuclei for FM-sweeps (Fig 7) as well as for pure tones [40]. These results contrast with a study in rodents concluding that the MGB encodes prediction error more strongly than the IC [31]. We speculate that this fundamental difference between the human and rodent studies is caused by the introduction of abstract rules in our paradigm: if prediction error is computed with respect to high-level expectations, there is no reason for prediction error to vary across the hierarchy, since the same expectations are used to compute prediction error at all levels. Rodent studies use passive listening tasks where expectations are induced by repetition. Without an explicit high-level model, prediction error can only be computed with respect to implicit local models that monitor local stimulus statistics and that may vary in complexity across processing stages.

Our study provides also novel insight into characteristics of SSA. Previous studies on subcortical SSA rested almost exclusively on pure tones [76, 77, 45]. Only three studies considered whether SSA generalised to other acoustic properties. Thomas et al. [86] reported SSA to FM-rate in the IC of the big brown bat; however, since big brown bats use FM for echolocation and the authors used stimuli in the rate range of echolocation signals, it was unclear whether this behaviour would generalise to auditory FM. Gao et al. [37] measured SSA using ramped and damped broadband noises in the IC, demonstrating that neurons in the IC adapt to intensity modulation. Last, Duque et al. [70] measured SSA to intensity, and showed that neurons in the IC do not adapt to nominal loudness. Our findings complement these results showing that the human IC and MGB adapt to fast FM characteristic of speech without loudness or spectral changes, and provides first evidence for SSA to acoustic properties other than pitch and loudness in the subcortical pathways.

Nuclei in the auditory pathway are organised in primary (or lemniscal) and secondary (or non-lemniscal) subdivisions. The lemniscal division of the auditory pathway has narrowly tuned frequency responses and is considered as responsible for the transmission of bottom-up information; the non-lemniscal division presents wider tuned frequency responses, is involved in multisensory integration, and is more heavily targeted by corticofugal connections [48]. Because of these properties, it has been previously suggested that prediction error may be encoded exclusively in secondary subdivisions of the IC and MGB [72, 76, 31]. In agreement with this hypothesis, SSA is stronger in secondary subdivisions of the rodent’s IC [38, 37, 87, 72, 88] and MGB [33, 74, 87].

In contrast, our results indicated an apparent lack of specialisation across subdivisions of the MGB during the encoding of FM-sweeps. Namely, both primary and secondary MGB were similarly responsive to FM, and they both encoded FM as prediction error. Similar results were apparent in our previous study when we investigated the encoding of pure tones [40]. This lack of specialisation would fit with the idea that expectations are used in the subcortical pathways to aid encoding: to optimise the resources of the subcortical stations requires to make use of the the narrow receptive fields of the primary subdivisions [48].

The fundamental difference between our results and the findings in animals might stem from a number of reasons. First, our design involved an active task: lemniscal pathways might only be strongly modulated by predictions when they carry behaviourally relevant sensory information. Second, the modulation of the subcortical auditory pathway might be fundamentally different in humans compared to other mammals, as they have to accomplish processing of such complex and dynamic signals as speech. Last, given the strength of the SSA effects reported in this study, it is possible that regions with weak SSA might have been contaminated with signal stemming from areas with strong SSA due to smoothing and interpolation necessary for the analysis of fMRI data.

Given the paramount role of predictions on speech perception [17, 5, 3, 89], atypical predictive coding in the subcortical sensory pathway could have profound repercussion at the cognitive level [90, 91, 45]. For instance, developmental dyslexia, a disorder characterised by difficulties with processing speech sounds, has been attributed to altered adaption dynamics to stimulus regularities [92, 93, 94], altered responses in the left MGB [91, 94], and atypical left hemispheric cortico-thalamic pathways [95, 96]. Understanding the mechanisms underlying the predictive processing of low-level features of speech in subcortical sensory pathways is an essential prerequisite to understand dysfunction.

Methods

This study was approved by the Ethics committee of the Technische Universtät Dresden, Germany (ethics approval number EK 315062019). All listeners provided written informed consent and received monetary compensation for their participation.

Participants

Eighteen German native speakers (12 female), aged 19 to 31 years (mean 24.6), participated in the study. None of them reported a history of psychiatric or neurological disorders, hearing difficulties, or current use of psychoactive medications. Normal hearing abilities were confirmed with pure tone audiometry (250 Hz to 8000 Hz); all participants had hearing threshold equal to or below 15 dB SPL in the frequency range of the FM sweeps (1000 Hz-3000 Hz). Participants were also screened for dyslexia (German SLRT-II test [97], RST-ARR [98], and rapid automatised naming (RAN) test of letters, numbers, objects, and colours [99]) and autism (Autism Spectrum Quotient; AQ [100]). All scores were within the neurotypical range (SLRT: , higher than the cut-off value of 16, following the same guidelines as [101]; RST-ARR: all , higher than the cut-off value of 16; RAN: maximum of 3 errors and seconds in each of the four categories; AQ: all participants , under or equal to the cut-off value of 32).

Since we had no estimations of the possible sizes of the effects, we maximised our statistical power by recruiting as many participant as we could fit in the MRI measurement time allocated to the study. This number was fixed to twenty before we started data collection, but two participants dropped out of the study during data collection. We maximised the amount of data collected for participant to reduce random error to a minimum and maximise the likelihood of measuring effects at the single-subject level.

Experimental paradigm

All sounds were 50 ms long (including 5 ms in/out ramps) sinusoidal FM sweeps. The frequency sweeps lasted for 40 ms and were preceded and followed by 5 ms long segments of constant frequency that overlapped with the in/our ramps. The sweeps were assembled in the frequency space to avoid discontinuities of the final waveforms.

We used a total of three sweeps during the experiment: a fast up sweep with starting frequency Hz and ending frequency Hz ( Hz); a slow up sweep with Hz and Hz ( Hz), and a fast down sweep with Hz and Hz ( Hz). The sweeps had different average frequencies to ensure that they elicited the same average spectral activity along the tonotopic axis and the same pitch percept (see [58] for details).

From those 3 sweeps we constructed 6 standard-deviant frequency combinations that were used the same number of times across each run, so that all sweeps were used the same number of times as deviant and standards. Each sequence consisted of 7 repetitions of the standard sweep and a single instance of the deviant sweep. Sweeps were separated by 700 ms inter-stimulus-intervals (ISI), the shortest possible ISI that allowed the participants to predict the fully expected deviant [40], amounting to a total duration of 5300 ms per sequence.

In each trial of the fMRI experiment, participants listened to one tone sequence and reported, as fast and accurately as possible using a button box with three buttons, the position of the deviant (4, 5 or 6). The inter-trial-interval (ITI) was jittered so that deviants were separated by an average of 5 seconds, up to a maximum of 11 seconds, with a minimum ITI of 1500 ms. We chose such ITI properties to maximise the efficiency of the response estimation of the deviants [102] while keeping a sufficiently long ITI to ensure that the sequences belonging to separate trials were not confounded.

All but one participant completed 9 runs of the main experiment across three sessions; participant 18 completed only 8 runs for technical reasons. Each run contained 6 blocks of 10 trials. The 10 trials in each block used one of the 6 possible sweep combinations, so that all the sequences within each block had the same standard and deviant. Thus, within a block only the position of the deviant was unknown, while the deviant’s FM-direction and FM-rate were known. The order of the blocks within the experiment was randomised. The position of the deviant was pseudorandomised across all trials in each run so that each deviant position happened exactly 20 times per run but an unknown amount of times per block. This constraint allowed us to keep the same a priori probability for all deviant positions in each block. In addition, there were 23 silent gaps of 5300 ms duration (i.e., null events of the same duration as the tone sequences) randomly located in each run [102]. Each run lasted around 10 minutes, depending on the reaction times of the participant.

Due to an undetected bug in the presentation code, information on the exact sweep combination used in each trial was unavailable for some runs. The bug affected the first three runs of participants 1, 2, 4, and 5; and the first six runs of participant 3. This information was not relevant for the analyses that aggregated the data across sweep combinations, and affected only the analyses of Fig 4, where we excluded the affected runs of participants 1, 2, 4, and 5, and participant 3 altogether.

We also run a functional localiser that was designed to activate the participant’s IC and MGB. Each run of the functional localiser consisted on 20 blocks of 16 seconds and lasted for about 6.5 minutes. Ten of the blocks were silent; the remaining blocks consisted on presentations of 16 sounds of one second duration each. Sounds were taken from a collection of 85 natural sounds collected by [62]. Participants were instructed to press a key when the same sound was repeated twice to ensure that they attended the sounds; behavioural data from the functional localiser was not used in the analysis.

Each session consisted on three runs of the main experiment, interspersed with two runs of the functional localiser. All runs were separated by breaks of a minimum of 1 minute to allow the participants rest. Fieldmaps and a whole-head EPI (see Data acquisition) were acquired between the third and fourth run. In the first session, we also measured an structural image before the fieldmaps. The first run of the first session was preceded by a practice run of four randomly chosen trials to ensure the participants had understood the task. We acquired fMRI during the practice run in order to allow the participants to undertake the training with MRI-noise.

Data acquisition

MRI data were acquired using a Siemens Trio 3 T scanner (Siemens Healthineers, Erlangen, Germany) with a 32-channel head coil.

Functional MRI data were acquired using echo planar imaging (EPI) sequences. We used partial coverage with 24 slices. The volume was oriented in parallel to the superior temporal gyrus such that the slices encompassed the IC, the MGB, and the superior temporal gyrus. In addition, we acquired one volume of an additional whole-head EPI with the same parameters (including the FoV) and 84 slices during resting to aid the coregistration process (see Data preprocessing).

The EPI sequence had the following acquisition parameters: TR = 1900 ms, TE = 42 ms, flip angle 66∘, matrix size , FoV 154 mm154 mm, voxel size 1.75 mm isotropic, bandwidth per pixel Hz/px, and interleaved acquisition. During functional MRI data acquisition, cardiac signal was acquired using a scanner pulse oximeter (Siemens Healthineers, Erlangen, Germany).

Structural images were recorded using an MPRAGE [103] T1 protocol with 1 mm isotropic resolution, TE = 1.95 ms, TR = 1000 ms, TI = 880 ms, flip angle 1 = 8∘, FoV = 256 mm256 mm.

Stimuli were presented using MATLAB (The Mathworks Inc., Natick, MA, USA) with the Psychophysics Toolbox extensions [104] and delivered through an Optoacoustics (Optoacoustics Ltd, Or Yehuda, Israel) amplifier and headphones equipped with active noise-cancellation. Loudness was adjusted independently for each participant to a comfortable level before starting the data acquisition.

Data preprocessing

The preprocessing pipeline was coded in Nipype 1.5.0 [105], and carried out using tools of the Statistical Parametric Mapping toolbox, version 12; Freesurfer, version 6 [106]; the FMRIB Software Library, version 5 (FSL) [107]); and the Advanced Normalization Tools, version 2.3 (ANTS) [108]. All data were coregistered to the Montreal Neurological Institute (MNI) MNI152 1 mm isotropic symmetric template.

First, we realigned the functional runs. We used SPM’s FieldMap Toolbox to calculate the geometric distortions caused in the EPI images due to field inhomogeneities. Next, we used SPM’s Realign and Unwarp to perform motion and distortion correction on the functional data. Motion artefacts, recorded using SPM’s ArtifactDetect, were later added to the design matrix (see Estimation of the BOLD responses).

Next, we used Freesurfer’s recon-all routine to calculate the boundaries between grey and white matter (these are necessary to register the functional data to the structural images) and ANTs to compute the transformation between the structural images and the MNI152 symmetric template.

Last, we coregistered the functional data to the structural image with Freesurfer’s BBregister, using the boundaries between grey and white matter of the structural data and the whole-brain EPI as an intermediate step. Data was analysed in the participant space, and then coregistred to the MNI152 template. Note that, since the resolution of the MNI space (1 mm isotropic) was higher than the resolution of the functional data (1.75 mm isotropic), the transformation resulted in a spatial oversampling.

All the preprocessing parameters, including the smoothing kernel size, were fixed before we started fitting the general linear model (GLM) and remained unchanged during the subsequent steps of the data analysis.

Physiological (heart rate) data was processed by the PhysIO Toolbox [109], that computes the Fourier expansion of each component along time and adds the coefficients as covariates of no interests in the model’s design matrix.

Estimation of the BOLD responses

First level analyses were coded in Nipype and carried out using SPM. Second level analyses were carried out using custom code in MATLAB. The coregistered data were first smoothed using a 2 mm full-width half-maximum kernel Gaussian kernel with SPM’s Smooth.

The first level GLM’s design matrix for the main experiment included 6 regressors: first standard (std0), standards before the deviant (std1), standards after the deviant (std2), and deviants in positions 4, 5, and 6 (dev4, dev5, and dev6, respectively; Fig 1). Conditions std1 and std2 were modelled using linear parametric modulation [59], whose linear factors were coded according to the position of the sound within the sequence to account for effects of habituation [40]. The first level GLM’s design matrix for the functional localiser included 2 conditions: sound and silence. On top of the main regressors, the design matrix also included the physiological PhysIO and artefact regressors of no-interest.

Definition of the anatomical and SSA ROIs

We used a recent anatomical atlas of the subcortical auditory pathway [64] to compute prior regions corresponding to the left IC, right IC, left MGB, and right MGB, respectively. The atlas comprises three different definitions of the ROIs calculated using 1) data from the big brain project, 2) postmortem data, and 3) fMRI in vivo-data. To compute the prior coarse region for each nuclei we combined the three masks and inflated the resulting regions with a Gaussian kernel of 1 mm fwdh.

The final IC and MGB regions were computed by combining the prior coarse regions with the results from the contrast of the functional localiser. Within each region, we thresholded the contrast to increasingly higher values until the number of surviving voxels equalled the volume of the region reported in [64]; namely, 146 voxels for each of the ICs, and 152 for each of the MGBs.

We used the coefficients of the GLM or beta estimates from the first level analysis to calculate the adaptation (Fig 3, blue patches) and deviant detection (red patches) ROIs, defined as the sets of voxels within the IC and MGB ROIs that responded significantly to the contrasts and , respectively. Significance was defined as , family-wise-error (FDR)-corrected for the number of voxels within each of the IC/MGB ROIs. SSA voxels are defined as voxels that show both, adaptation and deviant detection; thus, we calculated an upper bound of the -value maps for the SSA contrast as the maximum of the uncorrected -values associated to the adaptation and deviant detection contrasts. The SSA ROIs (Fig 3, purple patches) were calculated by FDR-correcting and thresholding the resulting -maps at . All calculations were performed using custom-made scripts (see Data and code availability).

Bayesian model comparison

The Bayesian analysis of the data consisted as well of first and second level analyses. In the first level, we used SPM via nipype to compute the log-evidence in each voxel of each participant for each of the four models (see Fig 6). The models were described using one regressor with parametric modulation whose coefficients corresponded to a simplified view of the expected responses according to each model (Table 3). The expected responses of each model were the same in all trials that had the same standard-deviant combination and deviant position. Given the model amplitude(s) and the timecourse of a voxel , SPM calculates the log-evidence of the linear model , where are the linear coefficients of each regressor and are noise terms.

| h1 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | |

|---|---|---|---|---|---|---|---|---|---|

| deviant at 4 | |||||||||

| deviant at 5 | |||||||||

| deviant at 6 | |||||||||

| h2 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | |

| deviant at 4 | |||||||||

| deviant at 5 | |||||||||

| deviant at 6 | |||||||||

Log-evidence maps for each participant were combined following the random-effects-equivalent procedure described in [60, 61] to compute the posterior probability maps associated to each model at the group level. We combined the maps using custom scripts (see Data and code availability). Histograms shown in Figures 7 and 9 are kernel-density estimates computed with the distribution of the posterior probabilities across voxels for each of the SSA ROIs.

Statistical analysis

All pairwise comparisons reported in the study were evaluated for significance using two-tailed Ranksum tests. Unless stated otherwise, -values for all analyses that comprised multiple testing were corrected using the Holm-Bonferroni method. A result was deemed statistically significant when the corrected .

Data and code availability

Derivatives (beta maps and log-likelihood maps, computed with SPM) and all code used for data processing and analysis are publicly available in https://osf.io/f5tsy/.

References

- [1] F. P. de Lange, M. Heilbron, and P. Kok, “How Do Expectations Shape Perception?,” Trends in Cognitive Sciences, vol. 22, no. 9, pp. 764–779, 2018.

- [2] W. Marslen-Wilson, “Linguistic Structure and Speech Shadowing at Very Short Latencies,” Nature, vol. 244, pp. 522–523, aug 1973.

- [3] M. H. Davis, M. A. Ford, F. Kherif, and I. S. Johnsrude, “Does semantic context benefit speech understanding through ”top-down” processes? Evidence from time-resolved sparse fMRI,” Journal of Cognitive Neuroscience, vol. 23, no. 12, pp. 3914–3932, 2011.

- [4] S. C. Sereno, C. C. Brewer, and P. J. O’Donnell, “Context Effects in Word Recognition: Evidence for Early Interactive Processing,” Psychological Science, vol. 14, no. 4, pp. 328–333, 2003.

- [5] E. Sohoglu, J. E. Peelle, R. P. Carlyon, and M. H. Davis, “Predictive top-down integration of prior knowledge during speech perception,” Journal of Neuroscience, vol. 32, no. 25, pp. 8443–8453, 2012.

- [6] R. P. N. Rao and D. H. Ballard, “Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects,” Nature Neuroscience, vol. 2, no. 1, pp. 79–87, 1999.

- [7] K. Friston, “Learning and inference in the brain.,” Neural networks: the official journal of the International Neural Network Society, vol. 16, pp. 1325–52, nov 2003.

- [8] K. Friston, “A theory of cortical responses.,” Philosophical transactions of the Royal Society of London. Series B, Biological sciences, vol. 360, no. 1456, pp. 815–36, 2005.

- [9] M. J. Pickering and S. Garrod, “An integrated theory of language production and comprehension,” Behavioral and Brain Sciences, vol. 36, no. 4, pp. 329–347, 2013.

- [10] G. R. Kuperberg and T. F. Jaeger, “What do we mean by prediction in language comprehension?,” Language, Cognition and Neuroscience, vol. 31, pp. 32–59, jan 2016.

- [11] T. E. Cope, E. Sohoglu, W. Sedley, K. Patterson, P. S. Jones, J. Wiggins, C. Dawson, M. Grube, R. P. Carlyon, T. D. Griffiths, M. H. Davis, and J. B. Rowe, “Evidence for causal top-down frontal contributions to predictive processes in speech perception,” Nature Communications, vol. 8, p. 2154, dec 2017.

- [12] K. J. Friston, N. Sajid, D. R. Quiroga-Martinez, T. Parr, C. J. Price, and E. Holmes, “Active listening,” Hearing Research, vol. 399, p. 107998, jan 2021.

- [13] M. W. Spratling, “A review of predictive coding algorithms,” Brain and Cognition, vol. 112, pp. 92–97, 2017.

- [14] G. B. Keller and T. D. Mrsic-Flogel, “Predictive Processing: A Canonical Cortical Computation,” Neuron, vol. 100, no. 2, pp. 424–435, 2018.

- [15] H. Blank and M. H. Davis, “Prediction Errors but Not Sharpened Signals Simulate Multivoxel fMRI Patterns during Speech Perception.,” PLoS Biology, vol. 14, no. 11, p. e1002577, 2016.

- [16] S. Ylinen, M. Huuskonen, K. Mikkola, E. Saure, T. Sinkkonen, and P. Paavilainen, “Predictive coding of phonological rules in auditory cortex: A mismatch negativity study,” Brain and Language, vol. 162, pp. 72–80, 2016.

- [17] H. Blank, M. Spangenberg, and M. H. Davis, “Neural Prediction Errors Distinguish Perception and Misperception of Speech,” The Journal of Neuroscience, vol. 38, pp. 6076–6089, jul 2018.

- [18] Y. Vidal, P. Brusini, M. Bonfieni, J. Mehler, and T. A. Bekinschtein, “Neural signal to violations of abstract rules using speech-like stimuli,” eNeuro, vol. 6, no. 5, 2019.

- [19] C. Signoret, L. M. Andersen, Ö. Dahlström, R. Blomberg, D. Lundqvist, M. Rudner, and J. Rönnberg, “The Influence of Form- and Meaning-Based Predictions on Cortical Speech Processing Under Challenging Listening Conditions: A MEG Study,” Frontiers in Neuroscience, vol. 14, no. September, pp. 1–15, 2020.

- [20] E. Sohoglu and M. H. Davis, “Rapid computations of spectrotemporal prediction error support perception of degraded speech,” eLife, vol. 9, pp. 1–25, 2020.

- [21] S. Hovsepyan, I. Olasagasti, and A. L. Giraud, “Combining predictive coding and neural oscillations enables online syllable recognition in natural speech,” Nature Communications, vol. 11, no. 1, pp. 1–12, 2020.

- [22] C. C. Lee and S. M. Sherman, “On the classification of pathways in the auditory midbrain, thalamus, and cortex,” Hearing Research, vol. 276, no. 1-2, pp. 79–87, 2011.

- [23] B. R. Schofield, “Central Descending Auditory Pathways,” in Auditory and Vestibular Efferents. (D. Ryugo and R. Fay, eds.), ch. 9, pp. 261–290, Springer Handbook of Auditory Research, 2011.

- [24] J. A. Winer, “Decoding the auditory corticofugal systems,” Hearing Research, vol. 207, no. 1-2, pp. 1–9, 2005.

- [25] J. a. Winer, “The human medial geniculate body,” Hearing Research, vol. 15, no. 3, pp. 225–247, 1984.

- [26] M. A. Steadman and C. J. Sumner, “Changes in neuronal representations of consonants in the ascending auditory system and their role in speech recognition,” Frontiers in Neuroscience, vol. 12, no. OCT, pp. 1–16, 2018.

- [27] A. L. Giraud, C. Lorenzi, J. Ashburner, J. Wable, I. Johnsrude, R. Frackowiak, and A. Kleinschmidt, “Representation of the temporal envelope of sounds in the human brain,” Journal of Neurophysiology, vol. 84, no. 3, pp. 1588–1598, 2000.

- [28] K. von Kriegstein, R. D. Patterson, and T. D. Griffiths, “Task-Dependent Modulation of Medial Geniculate Body Is Behaviorally Relevant for Speech Recognition,” Current Biology, vol. 18, no. 23, pp. 1855–1859, 2008.

- [29] A. F. Osman, C. M. Lee, M. A. Escabí, and H. L. Read, “A hierarchy of time scales for discriminating and classifying the temporal shape of sound in three auditory cortical fields,” Journal of Neuroscience, vol. 38, no. 31, pp. 6967–6982, 2018.

- [30] N. Ulanovsky, L. Las, and I. Nelken, “Processing of low-probability sounds by cortical neurons,” Nature Neuroscience, vol. 6, no. 4, pp. 391–398, 2003.

- [31] G. G. Parras, J. Nieto-Diego, G. V. Carbajal, C. Valdés-Baizabal, C. Escera, and M. S. Malmierca, “Neurons along the auditory pathway exhibit a hierarchical organization of prediction error,” Nature Communications, vol. 8, no. 1, 2017.

- [32] P. Bauerle, W. von der Behrens, M. Kossl, and B. H. Gaese, “Stimulus-Specific Adaptation in the Gerbil Primary Auditory Thalamus Is the Result of a Fast Frequency-Specific Habituation and Is Regulated by the Corticofugal System,” Journal of Neuroscience, vol. 31, no. 26, pp. 9708–9722, 2011.

- [33] F. M. Antunes, I. Nelken, E. Covey, and M. S. Malmierca, “Stimulus-Specific Adaptation in the Auditory Thalamus of the Anesthetized Rat,” PLoS ONE, vol. 5, no. 11, p. e14071, 2010.

- [34] L. A. Anderson, G. B. Christianson, and J. F. Linden, “Stimulus-Specific Adaptation Occurs in the Auditory Thalamus,” Journal of Neuroscience, vol. 29, no. 22, pp. 7359–7363, 2009.

- [35] B. L. Robinson, N. S. Harper, and D. McAlpine, “Meta-adaptation in the auditory midbrain under cortical influence,” Nature Communications, vol. 7, p. 13442, dec 2016.

- [36] Y. A. Ayala, A. Udeh, K. Dutta, D. Bishop, M. S. Malmierca, and D. L. Oliver, “Differences in the strength of cortical and brainstem inputs to SSA and non-SSA neurons in the inferior colliculus.,” Scientific reports, vol. 5, p. 10383, 2015.

- [37] P. P. Gao, J. W. Zhang, J. S. Cheng, I. Y. Zhou, and E. X. Wu, “The inferior colliculus is involved in deviant sound detection as revealed by BOLD fMRI,” NeuroImage, vol. 91, pp. 220–227, 2014.

- [38] D. Pérez-González, O. Hernández, E. Covey, and M. S. Malmierca, “GABA A-mediated inhibition modulates stimulus-specific adaptation in the inferior colliculus,” PLoS ONE, vol. 7, no. 3, 2012.

- [39] L. Zhao, Y. Liu, L. Shen, L. Feng, and B. Hong, “Stimulus-specific adaptation and its dynamics in the inferior colliculus of rat.,” Neuroscience, vol. 181, pp. 163–74, may 2011.

- [40] A. Tabas, G. Mihai, S. Kiebel, R. Trampel, and K. Von Kriegstein, “Abstract rules drive adaptation in the subcortical sensory pathway,” eLife, vol. 9, pp. 1–19, 2020.

- [41] R. Cacciaglia, C. Escera, L. Slabu, S. Grimm, A. Sanjuán, N. Ventura-Campos, and C. Ávila, “Involvement of the human midbrain and thalamus in auditory deviance detection,” Neuropsychologia, vol. 68, pp. 51–58, 2015.

- [42] M. Cornella, A. Bendixen, S. Grimm, S. Leung, E. Schröger, and C. Escera, “Spatial auditory regularity encoding and prediction: Human middle-latency and long-latency auditory evoked potentials,” Brain Research, vol. 1626, pp. 21–30, nov 2015.

- [43] C. Escera and M. S. Malmierca, “The auditory novelty system: An attempt to integrate human and animal research,” Psychophysiology, vol. 51, no. 2, pp. 111–123, 2014.

- [44] S. Grimm, C. Escera, L. Slabu, and J. Costa-Faidella, “Electrophysiological evidence for the hierarchical organization of auditory change detection in the human brain,” Psychophysiology, vol. 48, no. 3, pp. 377–384, 2011.

- [45] A. Tabas and K. von Kriegstein, “Adjudicating Between Local and Global Architectures of Predictive Processing in the Subcortical Auditory Pathway,” Frontiers in Neural Circuits, vol. 15, no. March, pp. 1–14, 2021.

- [46] A. M. Liberman, P. C. Delattre, L. J. Gerstman, and F. S. Cooper, “Tempo of frequency change as a cue for distinguishing classes of speech sounds,” Journal of Experimental Psychology, vol. 52, no. 2, pp. 127–137, 1956.

- [47] A. M. Liberman and M. Studdert-Kennedy, “Phonetic Perception,” in Perception, pp. 143–178, Springer Berlin Heidelberg, 1978.

- [48] B. Hu, “Functional organization of lemniscal and nonlemniscal auditory thalamus,” Experimental Brain Research, vol. 153, no. 4, pp. 543–549, 2003.

- [49] R. I. Kuo and G. K. Wu, “The Generation of Direction Selectivity in the Auditory System,” Neuron, vol. 73, no. 5, pp. 1016–1027, 2012.

- [50] I.-H. Hsieh, P. Fillmore, F. Rong, G. Hickok, and K. Saberi, “FM-selective Networks in Human Auditory Cortex Revealed Using fMRI and Multivariate Pattern Classification,” Journal of Cognitive Neuroscience, vol. 24, no. 9, pp. 1896–1907, 2012.

- [51] M. F. Joanisse and D. D. DeSouza, “Sensitivity of human auditory cortex to rapid frequency modulation revealed by multivariate representational similarity analysis,” Frontiers in Neuroscience, vol. 8, no. SEP, pp. 1–10, 2014.

- [52] H. Okamoto and R. Kakigi, “Encoding of frequency-modulation (FM) rates in human auditory cortex,” Scientific Reports, vol. 5, pp. 1–9, 2015.

- [53] L. I. Zhang, A. Y. Y. Tan, C. E. Schreiner, and M. M. Merzenich, “Topography and synaptic shaping of direction selectivity in primary auditory cortex,” Nature, vol. 424, pp. 201–205, jul 2003.

- [54] B. Lui and J. R. Mendelson, “Frequency modulated sweep responses in the medial geniculate nucleus,” Experimental Brain Research, vol. 153, pp. 550–553, dec 2003.

- [55] C.-q. Ye, M.-m. Poo, Y. Dan, and X.-h. Zhang, “Synaptic mechanisms of direction selectivity in primary auditory cortex,” Journal of Neuroscience, vol. 30, no. 5, pp. 1861–1868, 2010.

- [56] J. B. Issa, B. D. Haeffele, E. D. Young, and D. T. Yue, “Multiscale mapping of frequency sweep rate in mouse auditory cortex,” Hearing Research, vol. 344, pp. 207–222, 2016.

- [57] I. Nabelek, A. Nabelek, and I. J. Hirsh, “Pitch of Short Tone Bursts of Changing Frequency,” The Journal of the Acoustical Society of America, vol. 45, no. 1, pp. 293–293, 1970.

- [58] A. Tabas and K. Von Kriegstein, “Neural modelling of the encoding of fast frequency modulatio,” PLoS Computational Biology, vol. xxx, p. xxxxx, 2021.

- [59] J. P. O’Doherty, A. Hampton, and H. Kim, “Model-based fMRI and its application to reward learning and decision making,” Annals of the New York Academy of Sciences, vol. 1104, pp. 35–53, 2007.

- [60] M. Rosa, S. Bestmann, L. Harrison, and W. Penny, “Bayesian model selection maps for group studies,” NeuroImage, vol. 49, pp. 217–224, jan 2010.

- [61] K. E. Stephan, W. D. Penny, J. Daunizeau, R. J. Moran, and K. J. Friston, “Bayesian model selection for group studies,” NeuroImage, vol. 46, no. 4, pp. 1004–1017, 2009.

- [62] M. Moerel, F. De Martino, K. Uğurbil, E. Yacoub, and E. Formisano, “Processing of frequency and location in human subcortical auditory structures.,” Scientific reports, vol. 5, p. 17048, 2015.

- [63] G. Mihai, M. Moerel, F. De Martino, R. Trampel, S. Kiebel, and K. von Kriegstein, “Modulation of tonotopic ventral mgb is behaviorally relevant for speech recognition.,” eLife, 2019.

- [64] K. R. Sitek, O. F. Gulban, E. Calabrese, G. A. Johnson, A. Lage-castellanos, M. Moerel, S. S. Ghosh, and F. D. Martino, “Mapping the human subcortical auditory system using histology , postmortem MRI and in vivo MRI at 7T,” eLife, vol. 8, p. e48932, 2019.

- [65] I. B. Yildiz, K. von Kriegstein, and S. J. Kiebel, “From Birdsong to Human Speech Recognition: Bayesian Inference on a Hierarchy of Nonlinear Dynamical Systems,” PLoS Computational Biology, vol. 9, no. 9, 2013.

- [66] S. R. Hage and G. Ehret, “Mapping responses to frequency sweeps and tones in the inferior colliculus of house mice,” European Journal of Neuroscience, vol. 18, no. 8, pp. 2301–2312, 2003.

- [67] A.-A. Li, A.-Y. Zhang, Q.-C. Chen, and F.-J. Wu, “Effects of modulation range and presentation rate of FM stimulus on auditory response properties of mouse inferior collicular neurons.,” Sheng li xue bao : [Acta physiologica Sinica], vol. 62, no. 3, pp. 210–8, 2010.

- [68] H.-R. A. P. Geis and J. G. G. Borst, “Intracellular responses to frequency modulated tones in the dorsal cortex of the mouse inferior colliculus,” Frontiers in Neural Circuits, vol. 7, pp. 2002–2016, feb 2013.

- [69] M. Trujillo, M. M. Carrasco, and K. Razak, “Response properties underlying selectivity for the rate of frequency modulated sweeps in the auditory cortex of the mouse,” Hearing Research, vol. 298, pp. 80–92, 2013.

- [70] D. Duque, X. Wang, J. Nieto-Diego, K. Krumbholz, and M. S. Malmierca, “Neurons in the inferior colliculus of the rat show stimulus-specific adaptation for frequency, but not for intensity,” Scientific Reports, vol. 6, no. April, pp. 1–15, 2016.

- [71] D. Duque and M. S. Malmierca, “Stimulus-specific adaptation in the inferior colliculus of the mouse : anesthesia and spontaneous activity effects,” Brain Structure and Function, vol. 220, pp. 3385–3398, 2015.

- [72] Y. A. Ayala, A. Udeh, K. Dutta, D. Bishop, M. S. Malmierca, and D. L. Oliver, “Differences in the strength of cortical and brainstem inputs to SSA and non-SSA neurons in the inferior colliculus.,” Scientific reports, vol. 5, p. 10383, 2015.

- [73] Y. A. Ayala, D. Pérez-gonzález, D. Duque, I. Nelken, and M. S. Malmierca, “Frequency discrimination and stimulus deviance in the inferior colliculus and cochlear nucleus,” Frontiers in Neural Circuits, vol. 6, p. 119, 2013.

- [74] F. M. Antunes and M. S. Malmierca, “Effect of Auditory Cortex Deactivation on Stimulus-Specific Adaptation in the Medial Geniculate Body,” Journal of Neuroscience, vol. 31, no. 47, pp. 17306–17316, 2011.

- [75] M. S. Malmierca, S. Cristaudo, D. Pérez-González, and E. Covey, “Stimulus-specific adaptation in the inferior colliculus of the anesthetized rat.,” The Journal of Neuroscience, vol. 29, no. 17, pp. 5483–5493, 2009.

- [76] M. S. Malmierca, L. A. Anderson, and F. M. Antunes, “The cortical modulation of stimulus-specific adaptation in the auditory midbrain and thalamus: a potential neuronal correlate for predictive coding.,” Frontiers in systems neuroscience, vol. 9, p. 19, 2015.

- [77] G. V. Carbajal and M. S. Malmierca, “The Neuronal Basis of Predictive Coding Along the Auditory Pathway : From the Subcortical Roots to Cortical Deviance Detection,” Trends in Hearing, vol. 22, pp. 1–33, 2018.

- [78] D. Eytan, N. Brenner, and S. Marom, “Selective adaptation in networks of cortical neurons.,” The Journal of Neuroscience, vol. 23, no. 28, pp. 9349–9356, 2003.

- [79] R. Mill, M. Coath, T. Wennekers, and S. L. Denham, “A Neurocomputational Model of Stimulus-Specific Adaptation to Oddball and Markov Sequences,” PLoS Computational Biology, vol. 7, no. 8, 2011.

- [80] R. Mill, M. Coath, T. Wennekers, and S. L. Denham, “Characterising stimulus-specific adaptation using a multi-layer field model,” Brain Research, vol. 1434, pp. 178–188, jan 2012.

- [81] T. C. Gunter, A. D. Friederici, and H. Schriefers, “Syntactic Gender and Semantic Expectancy,” Journal of Cognitive Neuroscience, vol. 12, no. 4, 2000.

- [82] S. Regel, S. Coulson, and T. C. Gunter, “The communicative style of a speaker can affect language comprehension? ERP evidence from the comprehension of irony,” Brain Research, vol. 1311, no. July 2014, pp. 121–135, 2010.

- [83] J. A. Winer, “Three Systems of Descending Projections to the Inferior Colliculus,” in The Inferior Colliculus, pp. 231–247, New York: Springer-Verlag, 2005.

- [84] X.-J. Yu, X.-X. Xu, S. He, and J. He, “Change detection by thalamic reticular neurons.,” Nature Neuroscience, vol. 12, no. 9, pp. 1165–1170, 2009.

- [85] L. A. Anderson and M. S. Malmierca, “The effect of auditory cortex deactivation on stimulus-specific adaptation in the inferior colliculus of the rat,” European Journal of Neuroscience, vol. 37, no. 1, pp. 52–62, 2013.

- [86] J. M. Thomas, C. Morse, L. Kishline, A. O’Brien-Lambert, A. Simonton, K. E. Miller, and E. Covey, “Stimulus-specific adaptation in specialized neurons in the inferior colliculus of the big brown bat, Eptesicus fuscus,” Hearing Research, vol. 291, no. 1-2, pp. 34–40, 2012.

- [87] D. Duque, M. S. Malmierca, and D. M. Caspary, “Modulation of stimulus-specific adaptation by GABA(A) receptor activation or blockade in the medial geniculate body of the anaesthetized rat.,” The Journal of physiology, vol. 592, no. Pt 4, pp. 729–43, 2014.

- [88] Y. A. Ayala and M. S. Malmierca, “The effect of inhibition on stimulus-specific adaptation in the inferior colliculus,” Brain Structure and Function, vol. 223, pp. 1391–1407, 2018.

- [89] M. H. Davis and I. S. Johnsrude, “Hearing speech sounds: Top-down influences on the interface between audition and speech perception,” Hearing Research, vol. 229, pp. 132–147, jul 2007.

- [90] J. McFadyen, R. J. Dolan, and M. I. Garrido, “The influence of subcortical shortcuts on disordered sensory and cognitive processing,” Nature Reviews Neuroscience, vol. 21, no. May, 2020.

- [91] B. Diaz, F. Hintz, S. J. Kiebel, and K. von Kriegstein, “Dysfunction of the auditory thalamus in developmental dyslexia,” Proceedings of the National Academy of Sciences, vol. 109, no. 34, pp. 13841–13846, 2012.

- [92] T. K. Perrachione, S. N. Del Tufo, R. Winter, J. Murtagh, A. Cyr, P. Chang, K. Halverson, S. S. Ghosh, J. A. Christodoulou, and J. D. Gabrieli, “Dysfunction of Rapid Neural Adaptation in Dyslexia,” Neuron, vol. 92, no. 6, pp. 1383–1397, 2016.

- [93] M. Ahissar, Y. Lubin, H. Putter-Katz, and K. Banai, “Dyslexia and the failure to form a perceptual anchor,” Nature Neuroscience, vol. 9, no. 12, pp. 1558–1564, 2006.

- [94] B. Chandrasekaran, J. Hornickel, E. Skoe, T. Nicol, and N. Kraus, “Context-Dependent Encoding in the Human Auditory Brainstem Relates to Hearing Speech in Noise: Implications for Developmental Dyslexia,” Neuron, vol. 64, no. 3, pp. 311–319, 2009.

- [95] C. Müller-Axt, A. Anwander, and K. von Kriegstein, “Altered Structural Connectivity of the Left Visual Thalamus in Developmental Dyslexia,” Current Biology, vol. 27, pp. 3692–3698, 2017.

- [96] N. Tschentscher, A. Ruisinger, H. Blank, B. Diaz, and K. von Kriegstein, “Thalamus and the Motion-Sensitive Planum Temporale in Developmental Dyslexia,” The Journal of Neuroscience, vol. 39, no. 9, pp. 1720–1732, 2019.

- [97] K. Moll and K. Landerl, “Lese-und rechtschreibtest (slrt-ii). weiterentwicklung des salzburger lese-und rechtschreibtests (slrt), 2., korrigierte auflage mit erweiterten normen,” 2014.

- [98] N. Ibrahimović and S. Bulheller, Rechtschreibtest RST-ARR: aktuelle Rechtschreibregelung: Lückendiktate. Pearson Assessment & Information, 2013.

- [99] M. B. Denckla and R. Rudel, “Rapid “Automatized” Naming of Pictured Objects, Colors, Letters and Numbers by Normal Children,” Cortex, vol. 10, no. 2, pp. 186–202, 1974.

- [100] S. Baron-Cohen, S. Wheelwright, J. Hill, Y. Raste, and I. Plumb, “The ”Reading the Mind in the Eyes” Test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism,” Journal of Child Psychology and Psychiatry and Allied Disciplines, vol. 42, no. 2, pp. 241–251, 2001.

- [101] K. Gutschmidt, S. Wenninger, F. Montagnese, and B. Schoser, “Dyslexia and cognitive impairment in adult patients with myotonic dystrophy type 1: a clinical prospective analysis,” Journal of Neurology, 2020.

- [102] K. Friston, E. Zarahn, O. Josephs, R. Henson, and A. Dale, “Stochastic Designs in Event-Related fMRI,” NeuroImage, vol. 10, no. 5, pp. 607–619, 1999.

- [103] M. Brant-Zawadzki, G. D. Gillan, and W. R. Nitz, “MP RAGE: A three-dimensional, T1-weighted, gradient-echo sequence - Initial experience in the brain,” Radiology, vol. 182, no. 3, pp. 769–775, 1992.

- [104] D. H. Brainard, “The Psychophysics Toolbox,” Spatial Vision, vol. 10, no. 4, pp. 433–436, 1997.

- [105] K. Gorgolewski, C. D. Burns, C. Madison, D. Clark, Y. O. Halchenko, M. L. Waskom, and S. S. Ghosh, “Nipype: A Flexible, Lightweight and Extensible Neuroimaging Data Processing Framework in Python,” Frontiers in Neuroinformatics, vol. 5, 2011.

- [106] B. Fischl, D. H. Salat, E. Busa, M. Albert, M. Dieterich, C. Haselgrove, A. Van Der Kouwe, R. Killiany, D. Kennedy, S. Klaveness, A. Montillo, N. Makris, B. Rosen, and A. M. Dale, “Whole brain segmentation: Automated labeling of neuroanatomical structures in the human brain,” Neuron, vol. 33, no. 3, pp. 341–355, 2002.

- [107] M. Jenkinson, C. F. Beckmann, T. E. Behrens, M. W. Woolrich, and S. M. Smith, “Fsl,” NeuroImage, vol. 62, no. 2, pp. 782–790, 2012.

- [108] B. B. Avants, N. J. Tustison, G. Song, P. A. Cook, A. Klein, and J. C. Gee, “A reproducible evaluation of ANTs similarity metric performance in brain image registration,” NeuroImage, vol. 54, no. 3, pp. 2033–2044, 2011.

- [109] L. Kasper, S. Bollmann, A. O. Diaconescu, C. Hutton, J. Heinzle, S. Iglesias, T. U. Hauser, M. Sebold, Z. M. Manjaly, K. P. Pruessmann, and K. E. Stephan, “The PhysIO Toolbox for Modeling Physiological Noise in fMRI Data,” Journal of Neuroscience Methods, vol. 276, pp. 56–72, 2017.

Supplementary materials