Fast Nonlinear Risk Assessment for Autonomous Vehicles Using Learned Conditional Probabilistic Models of Agent Futures

Abstract

This paper presents fast non-sampling based methods to assess the risk for trajectories of autonomous vehicles when probabilistic predictions of other agents’ futures are generated by deep neural networks (DNNs). The presented methods address a wide range of representations for uncertain predictions including both Gaussian and non-Gaussian mixture models to predict both agent positions and control inputs conditioned on the scene contexts. We show that the problem of risk assessment when Gaussian mixture models (GMMs) of agent positions are learned can be solved rapidly to arbitrary levels of accuracy with existing numerical methods. To address the problem of risk assessment for non-Gaussian mixture models of agent position, we propose finding upper bounds on risk using nonlinear Chebyshev’s Inequality and sums-of-squares (SOS) programming; they are both of interest as the former is much faster while the latter can be arbitrarily tight. These approaches only require higher order statistical moments of agent positions to determine upper bounds on risk. To perform risk assessment when models are learned for agent control inputs as opposed to positions, we propagate the moments of uncertain control inputs through the nonlinear motion dynamics to obtain the exact moments of uncertain position over the planning horizon. To this end, we construct deterministic linear dynamical systems that govern the exact time evolution of the moments of uncertain position in the presence of uncertain control inputs. The presented methods are demonstrated on realistic predictions from DNNs trained on the Argoverse and CARLA datasets and are shown to be effective for rapidly assessing the probability of low probability events.

I Introduction

Prediction under uncertainty plays a key role in safety of autonomous vehicles. More precisely, in order for autonomous vehicles to drive safely on public roads, they need to predict the future states of other agents (e.g. human-driven vehicles, pedestrians, cyclists) and plan accordingly. Predictions, however, are inherently uncertain, so it is desirable to represent uncertainty in predictions of possible future states and reason about this uncertainty while planning. This desire is motivating ongoing work in the behavior prediction community to go beyond single mean average precision (MAP) prediction and develop methods for generating probabilistic predictions [1, 2, 3, 4]. In the most general sense, this involves learning joint distributions for the future states of all the agents conditioned on their past trajectories and other context specific variables (e.g. an agent is at a stop light, lane geometry, the presence of pedestrians, etc). However, learning such a distribution can often be intractable, so current works use a wide variety of different simplified representations for probabilistic predictions. For example [3] trains a conditional Variational Autoencoder (CVAE) to generate samples of possible future trajectories. Other works use generative adversarial networks (GANs) to generate multiple trajectories with probabilities assigned to each of them [4, 5]. As a discrete alternative, [6, 7] train a DNN to generate a probabilistic occupancy grid map with a probability assigned to each cell. However, such grid-based approaches effectively treat possible agents’ trajectories as belonging to a discrete space, while, in reality, agents may be at an uncountable number of points in continuous space. Many recent papers try to account for the continuous nature of uncertainty in space by learning Gaussian mixture models (GMMs) for vehicle positions [1, 8, 6] or coefficients of polynomials in that represent the vehicles’ positions [9]. Since learning uncertain models for position or pose can also sometimes produce results that are inconsistent with basic kinematics, some recent works develop DNNs that predict future control inputs which are then propagated through a kinematic model to predict future positions [10, 2].

Given a probabilistic prediction, an autonomous vehicle still needs to be able to rapidly evaluate the probability of a given plan resulting in a collision or, more generally, a constraint violation. We will refer to this problem as risk assessment and it is particularly challenging in the context of autonomous driving as 1) autonomous vehicles need to reason about low probability events to be safer than human drivers and 2) there are hard real time constraints on algorithm latency. Latency is a critical consideration for safety and will be a major consideration motivating the methods presented in this paper. While an algorithm with a latency of, for example, one second would often be acceptable in other robotics applications, it would be unacceptable for an autonomous vehicle traveling at 20 m/s on public roads. This requirement of low latency while retaining the ability to reason about low probability events makes naive Monte Carlo computationally intractable. To address this problem, adaptive and importance sampling methods have been proposed to estimate these probabilities with fewer samples [11, 12]. However, such methods i) do not provide any bound on the risk and any safety guarantees, ii) are not suitable for real-time risk assessment without parallelization and running on GPU, and iii) lead to performance that is highly sensitive to algorithm parameters and proposal distributions.

Non-sampling based methods are widely used in risk assessment problems. Although existing non-sampling based methods are fast, they are limited to a particular class of uncertainties and safety constraints. For example, Gaussian-Linear methods use Boole’s inequality [13, 14, 15] to estimate the probability of violation of linear constraints in the presence of Gaussian uncertainties. More precisely, the probability of a convex polytope is calculated as: . This results in a conservative upper bound on the risk. Chebyshev inequality based methods provide conservative bound on the risk of linear safety constraints using first two moments of uncertainties[16, 17]. Conditional-value-at-risk (CVaR) based methods use a conservative approximation of the indicator functions of the safety constraints, [18, 19]. To evaluate the CVaR based risk constraints sampling-based methods or Gaussian uncertainties are used, [20, 21].

Statement of Contributions: We present fast methods to assess the risk of trajectories for both Gaussian and non-Gaussian position and control models of other agents. In section IV, we begin by addressing the case when GMMs are used for agent position predictions. We show this particular case can be reduced to the problem of computing the CDF of a quadratic form in a multivariate Gaussian (QFMVG)- a well-studied problem in the statistics community for which methods exist that can rapidly solve it to arbitrary accuracy. To address the more general case when potentially non-Gaussian mixture models are used for agent position predictions, we apply statistical moment-based approaches to determine upper bounds on risk, which we will refer to as risk bounds.

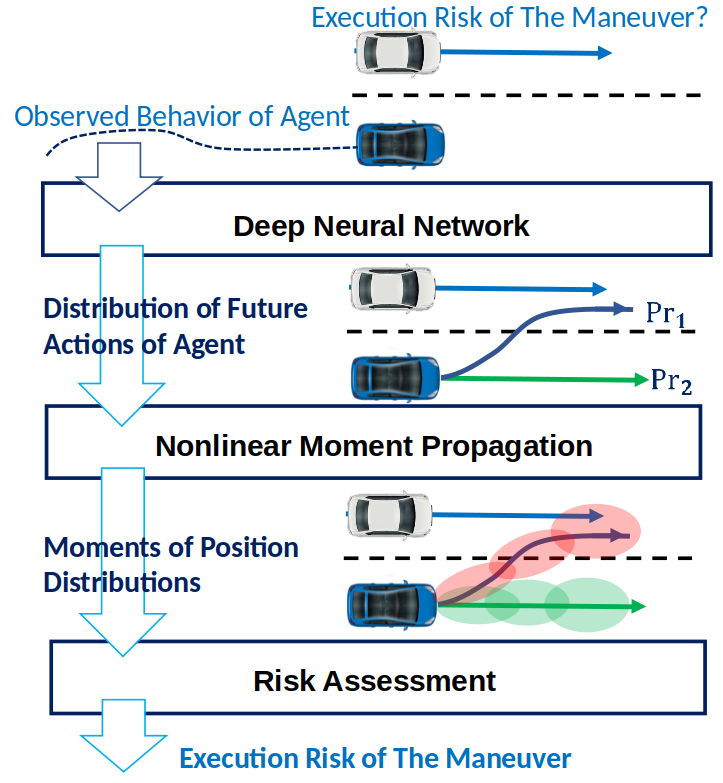

Namely, we propose using nonlinear Chebyshev’s Inequality and a univariate sums-of-squares (SOS) program that can be seen as a generalization of Chebyshev’s Inequality; the former is faster, while the latter can provide arbitrarily tight risk bounds. These moment-based approaches have the feature of being distributionally robust, producing risk bounds that are true for all possible distributions that take on the value of the given moments. To address uncertain models for control inputs, in Section V, we propagate the moments of predicted probabilistic control inputs through the nonlinear motion dynamics to obtain the exact moments of uncertain position over the planing horizon. For this purpose, given the nonlinear motion dynamics and moments of uncertain control inputs, we construct new deterministic moment-state linear systems that govern the exact time evolution of the moments of uncertain position. This enables the application of our non-Gaussian position risk assessment methods to the problem of risk assessment when models are learned for agent control inputs. Figure 1 illustrates our framework in this case. In Section VI, we present an encoder-decoder-based conditional predictor generating GMM predictions given the observed data and scene context. In Section VII, we demonstrate our methods on realistic predictions generated by DNNs trained on the Argoverse and CARLA datasets [22, 23]. Source code can be found at https://github.com/allen-adastra/risk_assess.

II Notation

Let denote the set of positive definite matrices. For any matrix and vector , let . Let denote the element in the row and column of . For any , let be the 2D rotation matrix parameterized by . For a vector and multi-index , let . For , let . For a vector valued function , denotes the component of .

Polynomials: Let be the set of real polynomials in the variables . Given polynomial , we represent as using the standard monomial basis of , and denotes the coefficients and . Also, let denotes the set of polynomials of degree at most .

Sum of Squares Polynomials: Polynomial is a sum of squares (SOS) polynomial if it can be written as a sum of finitely many squared polynomials, i.e., for some and for , SOS condition is a convex constraint that can be represented as a linear matrix inequality (LMI) in terms of coefficients of polynomial, i.e., , where is the vector of standard basis and is a positive semidefinite matrix in terms of the coefficients of the polynomial,

Moments of Probability Distribution: For a random vector and any , let denote its mean vector and covariance matrix respectively, and denote its characteristic function. Moments of random variables, are the generalization of mean and covariance and are defined as expected values of monomials of random variables. More precisely, given where , moment of order of random vector is defined as . Hence, the sequence of all moments of order is defined as expected values of all monomials of order . For example, sequence of the moments of order for is defined as .

Moment of order can be computed by applying partial derivatives of the characteristic function as follows:

| (1) |

for . Note that for any probability density function, the characteristic function always exists [24]. We will use finite sequence of the moments to represent Non-Gaussian probability distributions.

III Problem Statement

We define risk as the probability of an agent entering an ellipsoid around the ego vehicle. Thus, we are interested in computing the probability of other agents entering:

| (2) |

We argue ellipsoids are a useful representation as: 1) they can be fit relatively tightly to the profiles of vehicles, and 2) the sizes of both the ego vehicle and agent can be accounted for by properly scaling the size of the ellipsoid around the vehicle. Throughout the paper, agent positions at each time step are always defined in the frame of the planned future poses of the ego vehicle unless stated otherwise; Section IV-A shows how moments of distributions can be expressed in different frames. Given this formulation, the ellipsoid is parameterized by a constant matrix in the ego vehicle frame. In practice, multiple ellipsoids can be defined around the vehicle and an appropriate one selected at run-time. We restrict our focus to the single agent case and note that the risk in a multi-agent setting can be upper bounded by summing the risk associated with each agent.

If is some random vector for the position of the agent at time , then the risk associated with an agent across the whole step time horizon is:

| (3) |

By the inclusion-exclusion principle, the probability can be computed as the sum of the probabilities of the marginal events and the probabilities of all possible intersections of events:

| (4) |

In many works, the random variables are assumed to be independent across time or can be made to be independent across time by conditioning on a discrete mode [1, 8, 6]. If there is dependence across time, one would need the conditional distributions of the events which require additional information to be learned. As most work on behavior prediction currently assumes independence across time, this paper restricts its focus to the time independent case, and so:

| (5) |

Thus, the problem of risk assessment along the trajectory can be solved by computing the marginals at each time step , so the rest of the paper restricts its focus to the marginals.

IV Risk Assessment

In this section, we present solutions for both Gaussian and non-Gaussian risk assessment when moments of the random vector for agent position are known. We begin by addressing the problem of determining moments of agent positions in different frames to account for the ego vehicles planned trajectory. We then present our solution for the GMM case using numerical approximations of the CDFs of QFMVGs. To address the non-Gaussian case, we present methods based off Chebyshev’s Inequality and SOS programming. We assume basic knowledge of SOS programming; We refer the reader to [25, 26] for an overview of SOS programming and [27, 28, 29, 30, 31, 26] for moment-SOS based planning under uncertainty. Throughout this section, we assume the necessary moments of are known.

IV-A Changing Frames

Predictions are usually given in a global frame, so this section provides a method for transforming the global frame distribution moments into the ego vehicle frame. More generally, we are concerned with computing moments of in a new frame offset by and rotated by . As shown in the appendix, if is a mixture model, its moments can be computed in terms of moments of its components, so, in this section, let be a component of a mixture model. We propose only translating the moments and then accounting for the rotation by using instead of . The rotation can be accounted for by using instead of because:

| (6) | ||||

| (7) |

The translated moments can be computed by applying the binomial theorem to (here, the power is applied element-wise). Note that applying the binomial theorem to requires moments of up to order .

IV-B Risk Assessment for GMM Position Models

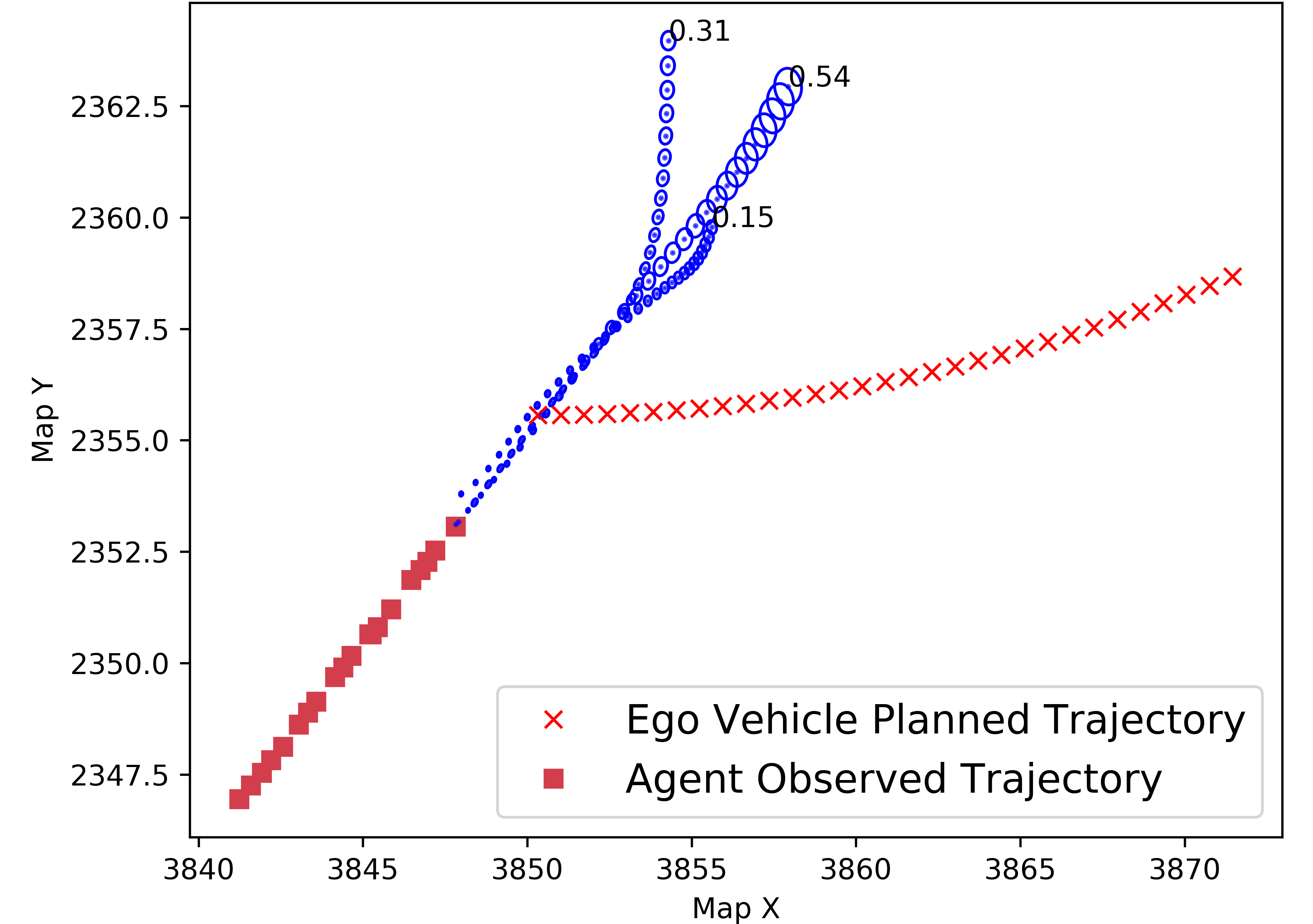

In this section, we provide a method to solve the risk assessment problem when the uncertain prediction is represented as a sequence of GMMs, , of the agents position with discrete modes determined by the Multinoulli . Many works currently learn GMMs for vehicle position as they express both multi-modal and continuous uncertainty [1, 8, 6]. As shown in Figure 2, they provide an intuitive representation of uncertainty in both the drivers high level decisions and low level execution. With time independence, the risk is:

| (8) |

Note that the above expression can be easily modified for the case when there is a single Multinoulli random variable that is constant across all time, an assumption used in, for example, [1]. The probabilities are learned parameters of GMMs, so the problem of risk assessment can be solved by computing for each agent, time step, and mode. Note that this is exactly the CDF of conditioned on which is a quadratic form in a multivariate Gaussian (QFMVG). Unfortunately, there does not exist a known closed form solution to exactly evaluate the CDF of QFMVGs, but fast approximation methods with bounded errors have been studied within the statistics community [32, 33, 34, 35, 36]. Several of these methods have been implemented in the R package CompQuadForm [37]. Of particular interest is the method of Imhof, which produces results with bounded approximation error by numerical inversion of the characteristic function of the QFMVG [36]. A faster, but less accurate, alternative is the method of Liu-Tang-Zhang which involves approximating the CDF of the QFMVG with the CDF of a non-central chi square distribution with parameters chosen to minimize the difference in kurtosis and skew between the approximate and target distributions [32].

IV-C Non-Gaussian Risk Assessment with Chebyshevs Inequality

As a consequence of the one-tailed Chebyshev’s Inequality, for any measurable function , whenever , we have that:

| (9) |

That is, the first two moments of are sufficient to establish a bound on the risk that the constraint is violated. We note that the requirement is not particularly restrictive because means the average case involves collision, thus corresponding to what is usually an unacceptable level of risk.

IV-C1 Applying Chebyshev’s Inequality to the Quadratic Form

To apply Chebyshev’s inequality to , we would need the first two moments of which can be expressed in terms of the first two moments of . The first moment can be expressed in terms of the mean vector and covariance matrix of [38]:

| (10) |

We can determine an expression for via an alternate representation for the quadratic form:

| (11) |

Thus, to compute the second moment of , we would need the moments of of order up to four.

IV-C2 Conservative Approximation with Half-Spaces

It’s possible to reduce the order of the moments that need to be propagated to two by instead approximating the ellipsoid as the intersection of half-spaces parameterized by and . The approximated set is thus:

| (12) |

Since the probability of any individual event is greater than the probability of the intersection of events, we have that:

| (13) |

So if we determine an upper bound on the probability of each with Chebyshev’s Inequality, the minimum of the Chebyshev bounds will be an upper bound on our risk. Since is an affine transformation of , its mean and variance can be expressed with the mean vector and covariance matrix of .

IV-D Non-Gaussian Risk Assessment with SOS Programming

When tighter risk bounds are desired than those obtained via Chebyshev’s Inequality, for any measurable function , an univariate SOS program can be used to upper-bound – the SOS program is univariate in the sense that it searches for a polynomial in a single indeterminant, not in the sense that there is only one decision variable [28]. The fact that the SOS program is univariate is significant because the key disadvantages of SOS, scalability and conservatism, are not as limiting for univariate SOS because: 1) the number of decision variables in the resulting SDP scales quadratically w.r.t. the order of the polynomial we are searching for and 2) the set of nonnegative univariate polynomials is equivalent to the set of univariate SOS polynomials, allowing univariate SOS to explore the full space of possible solutions.

We begin by noting that the probability of constraint violation is equivalent to the expectation of the indicator function of the sub-level set of :

| (14) |

where, is probability density function of and is the indicator function of the sub-level set of defined as if , and 0 otherwise.

The expectation of the indicator function, however, is not necessarily easily computable. To solve this problem, we find some polynomial with a more easily computable expectation that upper bounds the indicator function. If we can find some univariate polynomial, of order in some indeterminant with coefficients that upper bounds the indicator function, then clearly the following implication holds by substitution:

| (15) |

Given the coefficients , if we apply the expectation w.r.t. the density function of to both sides, then we can reduce the problem of finding an upper bound on to that of computing moments of the random variable :

| (16) |

where, is the moment of order of random variable . The moments of , in turn, are computable in terms of the moments of , i.e., , by expanding out the polynomial power and applying the linearity of expectation, [28]. For example, if , then:

| (17) |

The moments of can be computed using the moment generating function as in (1). In this section, we assume that we know the necessary moments of to compute the moments . We also normalize the moments, as doing so improves the numerical conditioning of the problem 111normalization is valid because for .

Now consider the following univariate SOS program in the indeterminant which can search for upper bound polynomial indicator function, i.e., , which minimizes the upper bound on risk [28]:

| (18a) | ||||

| (18b) | ||||

| (18c) | ||||

If the order of the polynomial is chosen to be for some , then we should have that and . If for some , then we should have that and . Note that constraints (18b) and (18c) are the nonnegativity constraints of the indicator function , i.e., if , and otherwise . More precisely, the constraint (18b) enforces:

| (19) |

Also, according to the constraint (18c), is constrained to be SOS; So it is globally nonnegative, i.e, . Hence, polynomial is an upper bound polynomial approximation of the indicator function, i.e., . Thus, according to (15) and (16), the optimal objective value of this SOS program yields an upper bound on .

Also, note that SOS optimization is a convex optimization. More precisely, it has a linear cost function in terms of the coefficients of the polynomial and convex constraints in the form of linear matrix inequalities in terms of the coefficients of the polynomials , and . Hence, we can solve the SOS optimization efficiently using the off-the-shelf LMI optimization solvers.

Remark 1: We can solve the optimization in to obtain the polynomial indicator function i.e., , in the offline step. Hence, we can compute the upper bound of the risk in terms of the obtained polynomial indicator function and moments of the uncertain states of the agent vehicle, in real-time, i.e.,

For more information see [28] and

https://github.com/jasour/Nonlinear-Risk-Assessment

Remark 2: The provided non-Gaussian risk assessment approaches in Sections IV.C and IV.D are less conservative than the existing non-sampling based methods. This is because, we are using higher order moments of uncertainties and also tight polynomial approximation of the indicator functions of safety constraints. One can improve the risk assessment results by increasing the number of the moments in SOS programming based approach.

V Moment Propagation

While directly learning distributions for agents future positions can be an effective strategy, one major disadvantage is it can produce physically unrealistic predictions. [10, 2] address this by learning distributions for control inputs and then propagating samples through a kinematic model. While the Kalman filter and its variants, such as the extended and unscented Kalman filters, can be used to propagate mean and covariance, they are not exact and do not immediately apply to higher order moments [39, 40, 41].

In this section, we provide an approach for nonlinear moment propagation that can, in principle, work for moments up to arbitrary order [42, 30]. Given a nonlinear motion model and a random vector for control inputs, , this section is concerned with the problem of computing statistical moments of the uncertain position s.t. the non-Gaussian risk assessment methods presented in Section IV can be applied. More precisely, we are looking for the moments of uncertain position of the form where . We use a stochastic version of the discrete-time Dubin’s car to both demonstrate the general approach and to address the problem of agent risk assessment:

| (20a) | ||||

| (20b) | ||||

| (20c) | ||||

| (20d) | ||||

Above, the control vector is where and are random variables describing the agent’s acceleration and steering at time and are assumed to be independent. is the position of some reference point on the agent in a fixed frame, is its speed, and is the angle of its velocity vector with respect to the fixed frame. The time steps for discretization are omitted for brevity; the values of the variables can simply be scaled accordingly.

To obtain the moments of the uncertain position over the planning horizon, we will propagate the moments of uncertain control inputs through the nonlinear stochastic Dubin’s model. To this end, we construct deterministic linear dynamical systems, i.e., mapping between the moments at time and , that govern the exact time evolution of the moments of uncertain position in the presence of uncertain control inputs. By obtaining such dynamical systems in terms of the moments, we can recursively propagate the initial moments of the uncertain position over the planning horizon.

V-A Motivating Example

To show how our moment propagation algorithm works, we begin by showing how the dynamics of the first order moment for the state in system can be found manually, i.e., mapping between and . Our proposed algorithm is essentially an automated version of this process. By substituting the equations in and applying the linearity of expectation, we arrive at the dynamics of the moment:

| (21) |

To complete the obtained mapping between and , we need to compute the update rule for the term . By substituting the equations in and applying the linearity of expectation, we arrive at the update rule of the moment as:

where, is the first order moment of uncertain control input and can be computed using it’s characteristic function as in (1). Also, and are the first order trigonometric moments of uncertain control input and can be computed using it’s characteristic function as shown in Appendix.B. To complete the obtained update rule, we need to compute the update rule of the terms , , and . By doing so, we can complete the dynamics of the moment in (21) in terms of a set of slack moments , , , and . This will produces a closed form set of equations that can recursively compute in terms of the moments of the initial uncertain states and moments of uncertain control inputs at each time step.

Similarly, we can obtain the dynamics of the higher order moments of the uncertain position, i.e., . This process, however, is tedious and is easily subject to human error, especially for larger moment orders . To address these issues, in [43, 44], we developed TreeRing algorithm that uses a dependency graph to identify all the slack moment states needed to construct the moment dynamical systems.

In this paper, we use a different approach and provide a general framework to construct the moment dynamical systems. The main idea is to transform the nonlinear stochastic Dubbin’s model in (20) into a equivalent new augmented linear-state system. In this case, due to the linear relation of the states of the augmented linear-state system at time and , moments of order of the states at time can be described only in terms of the moments of order of the states at time . Hence, we do not need to look for a set of slack moment states as described in (21).

V-B Equivalent Augmented Linear-State System

In [42], we show that nonlinear stochastic motion dynamics can be transformed in to equivalent linear-state dynamical systems by introducing suitable new state variables. In this section, we define such equivalent augmented linear-state system for stochastic nonlinear Dubin’s model (20) as follows:

| (22) |

where is the augmented state vector in terms of the position and a set of nonlinear functions of the states of the original nonlinear model in (20). Also, matrix is defined only in terms of the nonlinear functions of the uncertain control inputs and as follows:

Note that the obtained augmented system in (22) is linear in terms of the states and also equivalent to the original stochastic nonlinear Dubin’s model in (20). We use the obtained augmented linear-state system in (22) to obtain moment-state linear dynamical systems that govern the exact time evolution of the moments of the uncertain position states in nonlinear stochastic system (20).

V-C Moment-State Linear Systems

We define the moment-state linear systems for the obtained augmented linear-state system in (22) as follows [42]:

| (23) |

where, is the vector of all moments of order of the augmented state vector and is a matrix in terms of the moments of the uncertain control inputs. To construct the moment-state linear system in (23), we need the expected values of the monomials of order of the vector , e.g., . Since is a linear function of as in (22), we can describe the moments of order of completely in terms of the moments of order of . Hence, we do not need to look for a set of slack moment states as described in the motivating example of Section V-A.

For example, we obtain the moment-state linear system of order of the form where

is the vector of all moments of order of . Also, matrix is described in terms the first order moments of uncertain control input and first order trigonometric moments of uncertain control input as follows:

We can compute the moments of uncertain control inputs in terms of the characteristic functions as shown in (1) and Appendix.B. The obtained first order moment-state linear dynamical system describes the exact time evolution of the first order moments of the uncertain position of the original stochastic nonlinear system in (20). Similarly, we can obtain the moment-state linear system of the form (23) for higher moment order to describe the exact time evolution of the higher order moments of the uncertain position.

Note that we can construct the deterministic linear moment-state systems of the form (23) for different moment order in the offline step. Hence, we can use the obtained moment systems to propagate the moments of the uncertain initial states over the planning horizon in real-time.

More precisely, given initial moments and , the moments at time step can be obtained by recursion of

. Similarly, we can describe the moments by the solution of the linear moment system as .

Remark 3: The provided approach is not limited to the motion dynamics in . We can use different motion dynamics with different sources of uncertainties to model the uncertain behaviour of the agent vehicles. For more information see [42] and https://github.com/jasour/Uncertainty-Propagation

VI Deep Neural Network Predictor

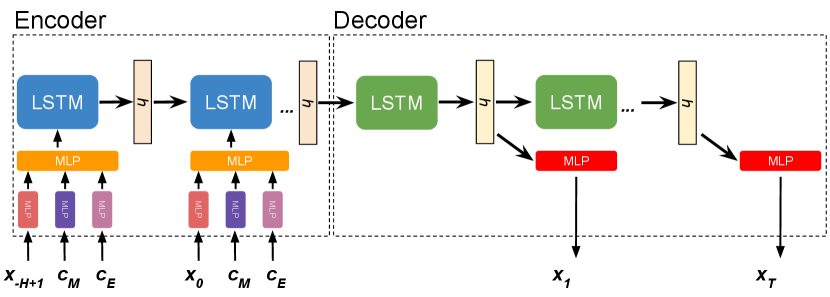

To obtain probabilistic future states of agents for risk assessment, we propose a conditional deep neural network that generates Gaussian mixture model parameters for positions or controls for a given sequence of observed positions over the past time steps and scene context. Although our framework works with any conditional prediction model that outputs GMM parameters for positions, we aim to generate accurate and realistic predictions by selecting an encoder-decoder-based predictor that utilizes long short-term memory (LSTM) units, because of the recent success of recurrent neural networks in trajectory prediction on different benchmarks [45, 4, 8, 10].

VI-A Input

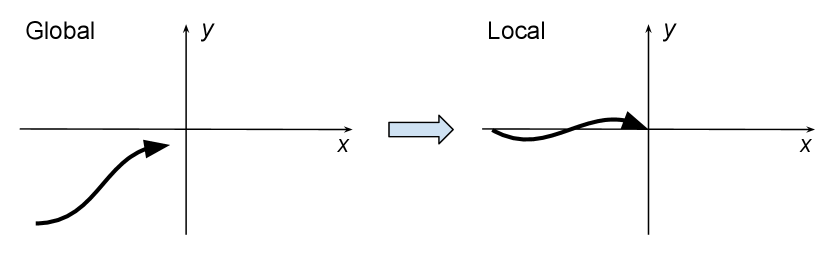

The input consists of observed vehicle positions for the past 20 timesteps, as well as scene context representing driving context such as lanes coordinates and the future trajectory of the ego car . Since the observed trajectory is usually collected in global coordinate, which can lead to bias in prediction, we normalize the past trajectory of the target vehicle, so that the last and first observed positions are at origin and the -axis, respectively, as shown in Figure 3.

The conditioning scene context is represented by a set of coordinates of the lanes that are close to the target vehicle (e.g., within 50 meters) and also the future planned trajectory of the ego car. The coordinates of the lanes and the ego car trajectory are normalized into the local coordinate of the target car. Such scene context provides additional cues on the possible future location of the target vehicle. Note that these contexts are usually not available across all driving platforms. Thus, we design a prediction model that is flexible in terms of the inputs, as we show in Section VI-C.

VI-B Output

The output consists of a sequence of GMM parameters over the prediction horizon as follows:

where represents the number of components in the GMM and represents the weight of th component at time step such that . Also, mean and covariance represent the predicted uncertain position and predicted uncertain control inputs in GMM position predictor and GMM control predictor, respectively.

VI-C Model Architecture

The encoder-decoder predictor is shown in Figure 4. The encoder is a sequence of LSTM units taking observed trajectories of the target agent and scene context as input and outputs a latent vector encoding agent hidden state. The input modalities are processed with separate multilayer perceptrions (MLPs) before being fed into the LSTM unit. This allows our model to handle different input options given their availability. The decoder is also a sequence of LSTM units that takes the latent vector and generates a set of GMM parameters from each LSTM unit with MLPs. For simplicity, we use three component mixture models. For each component, we generate a weight value, a mean vector, and a covariance matrix representing uncertainties of predictions.

VI-D Losses

Given the prediction and the groundtruth position or control values , the loss function is composed of 2 terms as follows:

| (24) |

where measures the loss between the predicted mean values and ground truth observations over the future trajectories, measures the negative log-likelihood loss between the predicted distributions and groundtruth observations, and is the weight coefficient for the loss.

VII Experiments

In this section, we demonstrate the performance of our system through two learning-based predictors that predict stochastic position and control for the target agent. For each predictor, we describe its network details and training procedure, before presenting risk assessment results. All computations were performed on a desktop with an Intel Core i9-7980XE CPU at 2.60 GHz. All Monte Carlo (MC) methods are implemented with vectorized NumPy operations to have a realistic assessment of run times for naive MC.

VII-A GMM Position Predictor

VII-A1 Training Details

To obtain probabilistic trajectory distributions of agents, we trained an encoder-decoder DNN described in Section VI, with the following details. Each MLP in Encoder is a 2-layer MLP followed by a ReLU activation function, where each layer consists of 32 hidden units. The LSTM units in both Encoder and Decoder has a hidden size of 32 with one layer. The MLP in Decoder is a single layer MLP that produces the desired output parameters. The model is trained and validated on a subset of the Argoverse dataset [22]. During training, we use a batch size of 32, learning rate of 0.0001, and .

VII-A2 Prediction Experiments

In order to validate the prediction performance of our model, we perform an ablation study and compare our model with baseline models, as summarized in Table I. The performance is evaluated over standard Argoverse trajectory forecasting metrics [22] such as minimum-of-N average displacement error (MoN ADE) and minimum-of-N final displacement error(MoN FDE), which measures the best prediction error over the entire prediction horizon and at the end of prediction horizon, respectively.

We first present prediction results from two physics-based baselines that assume constant velocity and constant acceleration when generating predictions. Although working well over short horizons such as a few seconds, physics-based models fail to generate accurate predictions over 3 seconds. Furthermore, we compare our model with other models whose inputs are i) unnormalized global trajectories, ii) normalized trajectories, iii) normalized trajectories and ego car trajectory, and iv) normalized trajectories and both map context and ego trajectory. We observe that normalizing target car’s past trajectory improve prediction performance by 12.67% in terms of MoN ADE metric. On the other hand, ego car’s trajectory is not as helpful as map context in terms of improving the prediction error.

| Model | MoN ADE (m) | MoN FDE (m) |

|---|---|---|

| Constant Velocity | 1.75 | 3.96 |

| Constant Acceleration | 3.17 | 8.12 |

| DNN Unnormalized | 1.50 | 2.80 |

| DNN Normalized | 1.31 | 2.64 |

| DNN Normalized+Ego | 1.30 | 2.62 |

| DNN Normalized+Ego+Map | 1.22 | 2.49 |

VII-A3 Risk Assessment Experiments

On a dataset of 500 scenarios similar to that shown in Figure 2, predictions were made and the risk was evaluated along a predefined trajectory for the ego vehicle as shown in Table II. To evaluate QFMVG’s, we tested both the methods of Imhof and Liu-Tang-Zhang. The methods proposed are much faster than naive Monte Carlo with far lower error. The method of Imhof with an error tolerance of was used as ground truth [36]. Only scenarios were used for error computation as results from scenarios with computed ground truth errors within tolerances (i.e: ) were neglected for error computation. We note that the method of Liu-Tang-Zhang empirically produces results with very small errors while being several times faster than the method of Imhof, which may prove useful in certain contexts.

| Method |

|

|

|

||||||

| Imhof | |||||||||

| Liu-Tang-Zhang | |||||||||

| MC | 106.9 | 0.38 | |||||||

| MC | 422.5 | 0.13 | |||||||

| MC | 1329 | 0.12 |

VII-B GMM Control Predictor

VII-B1 Training Details

We use a similar DNN as the GMM position predictor, but the output becomes instead a set of GMM parameters for control signals defined in (20). When training and validating our model, instead of using the Argoverse dataset which has noisy differentiated control data due to perception noise, we use our own data collected from a naturalistic driving simulator called CARLA [23] that provides accurate ground truth control values. The model is trained and validated on 10k samples collected in CARLA.

VII-B2 Chebyshev Experiments

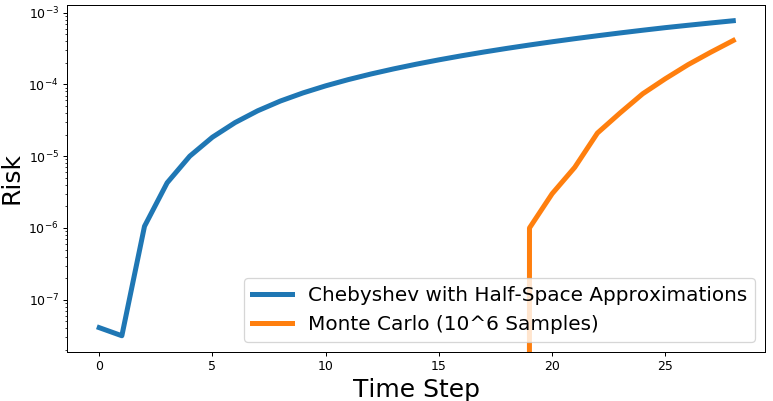

In this section the half-space approximation method with half-spaces was tested. The initial state of the agent vehicles was assumed to be known and deterministic. Random variables for control obtained using the DNN and moment-state dynamical systems were then used to compute the mean and covariance matrix of position at each time step. Over 50 scenarios, the mean time to evaluate the risk for a given trajectory for the Chebyshev method was 80ms while the Monte Carlo method with samples took 140 seconds. The average worst-case conservatism of the Chebyshev risk estimate for a given time step along a trajectory was (assuming the Monte Carlo results represent ground truth). Figure 5 shows the risk for both methods.

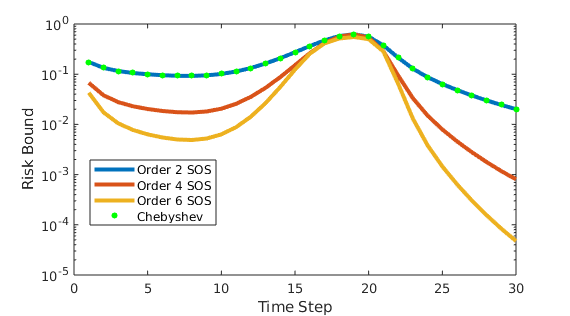

VII-B3 Comparing SOS + Chebyshev

Experiments were run to test and compare the Chebyshev and SOS methods described in (IV-C1) and (IV-D). For this experiment, higher order moments were obtained by automatic differentiation of the MVG moment generating function and the resulting moments of were normalized. YALMIP was used to transcribe the SOS programs into Semidefinite programs, and SeDuMi was used to solve the resulting semidefinite programs [46, 47]. As shown in Figure 6, we observe that 1) Chebyshev bound produces nearly the same result as the second order SOS program and 2) the SOS program with higher order moments can yield significantly better bounds, especially in the tails. The solve times for each time step only marginally increased for the higher order SOS programs; the mean solve times were 42, 44, and 49 ms for the second, fourth, and sixth order SOS formulations, respectively. While these solve times obtained by solving univariate SOS programs are much better than those often encountered with multivariate SOS programs, further advances in performance are needed for this to be used online.

VIII Conclusions

In this paper, we provided fast non-sampling based methods to propagate uncertainties and assess the risk for trajectories of autonomous vehicles when probabilistic predictions of other agents’ futures are generated by deep neural networks. Our experimental results with risk assessment methods for learned Gaussian mixture models of position and the Chebyshev and SOS program based risk assessment methods for learned non-Gaussian position and control models suggest that high performance implementations would be immediately practical for use in online applications. As future work, we will incorporate uncertainty propagation and risk assessment into motion planning algorithms, but we note that it may be easily incorporated into standard algorithms with “collision check” primitives such as RRTs and PRMs [26]. The SOS method does significantly improve upon the risk bounds from the Chebyshev method at the cost of additional computation; future research should further develop methods to make the SOS step offline to improve runtimes for online applications as proposed in [28]. Future work in both prediction and risk assessment should also work towards relaxing assumptions such as time independence.

Acknowledgements

This work was supported in part by Boeing grant MIT-BA-GTA-1 and by the Masdar Institute grant 6938857. Allen Wang was supported in part by a NSF Graduate Research Fellowship.

IX Appendix

IX-A Moments and Characteristic Functions of Mixture Models

Let denote the pdf of a -component mixture model , with pdf components and let denote the pdf of the category Multinoulli. Then, by definition . For any measurable function , by interchanging the order of integration and summation, the following holds true

| (25) | ||||

| (26) |

By letting or , the moments and characteristic function of can both be computed as the weighted sum of those of their components.

IX-B Moments of Trigonometric Random Variables

In this section, we show how trigonometric moments of the form , , and can be computed in terms of the characteristic function of the random variable , denoted by [42, 43]. We begin by applying Euler’s Identity to the definition of the characteristic function as follows:

| (27) | ||||

Thus, we have that and . This immediately gives us the ability to compute the first moments of our trigonometric random variables. For higher moments, the trigonometric power formulas can be used to express quantities of the form as the sum of quantities of the form where and similarly for [48]. Thus, higher moments of and can be computed using . More precisely, given trigonometric moments of order of the forms and reads as [42]:

| (28) |

| (29) |

where, .

Trigonometric moments of the form:

| (30) |

can also ultimately be computed in terms of . This can be seen if we make the substitutions and , then (30) can be expressed as:

| (31) |

By applying the binomial theorem to both expressions in parentheses, and multipying the resulting expressions, we find the entire expression in the expectation operator can be expressed as a polynomial in and . Thus, the entire expression can be written as the sum of terms of the form for which is in the definition of . More precisely, given , trigonometric moment of the form reads as [42]:

| (32) |

References

- [1] Y. Chai, B. Sapp, M. Bansal, and D. Anguelov, “Multipath: Multiple probabilistic anchor trajectory hypotheses for behavior prediction,” in Conference on Robot Learning (CoRL), 2019.

- [2] N. Rhinehart, K. M. Kitani, and P. Vernaza, “R2P2: A reparameterized pushforward policy for diverse, precise generative path forecasting,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 772–788.

- [3] N. Lee, W. Choi, P. Vernaza, C. B. Choy, P. H. Torr, and M. Chandraker, “Desire: Distant future prediction in dynamic scenes with interacting agents,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 336–345.

- [4] X. Huang, S. G. McGill, J. A. DeCastro, L. Fletcher, J. J. Leonard, B. C. Williams, and G. Rosman, “Diversitygan: Diversity-aware vehicle motion prediction via latent semantic sampling,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 5089–5096, 2020.

- [5] J. Li, H. Ma, and M. Tomizuka, “Interaction-aware multi-agent tracking and probabilistic behavior prediction via adversarial learning,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 6658–6664.

- [6] J. Hong, B. Sapp, and J. Philbin, “Rules of the road: Predicting driving behavior with a convolutional model of semantic interactions,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 8454–8462.

- [7] M. Bansal, A. Krizhevsky, and A. Ogale, “Chauffeurnet: Learning to drive by imitating the best and synthesizing the worst,” in Robotics: Science and Systems, 2019.

- [8] N. Deo and M. M. Trivedi, “Multi-modal trajectory prediction of surrounding vehicles with maneuver based lstms,” in 2018 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2018, pp. 1179–1184.

- [9] X. Huang, S. G. McGill, B. C. Williams, L. Fletcher, and G. Rosman, “Uncertainty-aware driver trajectory prediction at urban intersections,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 9718–9724.

- [10] H. Cui, T. Nguyen, F.-C. Chou, T.-H. Lin, J. Schneider, D. Bradley, and N. Djuric, “Deep kinematic models for physically realistic prediction of vehicle trajectories,” arXiv preprint arXiv:1908.00219, 2019.

- [11] E. Schmerling and M. Pavone, “Evaluating trajectory collision probability through adaptive importance sampling for safe motion planning,” in Robotics: Science and Systems, 2017.

- [12] J. Norden, M. O’Kelly, and A. Sinha, “Efficient black-box assessment of autonomous vehicle safety,” arXiv preprint arXiv:1912.03618, 2019.

- [13] L. Blackmore, M. Ono, and B. C. Williams, “Chance-constrained optimal path planning with obstacles,” IEEE Transactions on Robotics, vol. 27, no. 6, pp. 1080–1094, 2011.

- [14] L. Blackmore and M. Ono, “Convex chance constrained predictive control without sampling,” in AIAA Guidance, Navigation, and Control Conference, 2009, p. 5876.

- [15] B. Luders, M. Kothari, and J. How, “Chance constrained rrt for probabilistic robustness to environmental uncertainty,” in AIAA guidance, navigation, and control conference, 2010, p. 8160.

- [16] T. Summers, “Distributionally robust sampling-based motion planning under uncertainty,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 6518–6523.

- [17] Y. K. Nakka and S.-J. Chung, “Trajectory optimization for chance-constrained nonlinear stochastic systems,” in 2019 IEEE 58th Conference on Decision and Control (CDC). IEEE, 2019, pp. 3811–3818.

- [18] A. Nemirovski and A. Shapiro, “Convex approximations of chance constrained programs,” SIAM Journal on Optimization, vol. 17, no. 4, pp. 969–996, 2007.

- [19] L. J. Hong, Y. Yang, and L. Zhang, “Sequential convex approximations to joint chance constrained programs: A monte carlo approach,” Operations Research, vol. 59, no. 3, pp. 617–630, 2011.

- [20] A. Hakobyan, G. C. Kim, and I. Yang, “Risk-aware motion planning and control using cvar-constrained optimization,” IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 3924–3931, 2019.

- [21] D. D. Fan, K. Otsu, Y. Kubo, A. Dixit, J. Burdick, and A.-A. Agha-Mohammadi, “Step: Stochastic traversability evaluation and planning for safe off-road navigation,” arXiv preprint arXiv:2103.02828, 2021.

- [22] M.-F. Chang, J. Lambert, P. Sangkloy, J. Singh, S. Bak, A. Hartnett, D. Wang, P. Carr, S. Lucey, D. Ramanan et al., “Argoverse: 3d tracking and forecasting with rich maps,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 8748–8757.

- [23] A. Dosovitskiy, G. Ros, F. Codevilla, A. Lopez, and V. Koltun, “CARLA: An open urban driving simulator,” in Proceedings of the 1st Annual Conference on Robot Learning, 2017, pp. 1–16.

- [24] E. Çınlar, Probability and stochastics. Springer Science & Business Media, 2011, vol. 261.

- [25] P. A. Parrilo, “Semidefinite programming relaxations for semialgebraic problems,” Mathematical programming, vol. 96, no. 2, pp. 293–320, 2003.

- [26] A. Jasour, “Risk aware and robust nonlinear planning,” Course Notes for MIT 16.S498, rarnop.mit.edu, 2019.

- [27] A. Jasour and B. C. Williams, “Risk contours map for risk bounded motion planning under perception uncertainties,” in 2019 Robotics: Science and System (RSS), Germany, 2019.

- [28] A. Jasour, A. Hofmann, and B. C. Williams, “Moment-sum-of-squares approach for fast risk estimation in uncertain environments,” in 2018 IEEE Conference on Decision and Control (CDC), 2445–2451, 2018.

- [29] A. Jasour and B. C. Williams, “Sequential convex chance optimization for flow-tube based control of probabilistic nonlinear systems,” in 2019 IEEE Conference on Decision and Control (CDC), France, 2019.

- [30] A. Jasour, “Convex approximation of chance constrained problems: Application in systems and control,” in Dissertation in School of Electrical Engineering and Computer Science, The Pennsylvania State University, 2016.

- [31] A. Jasour, N. S. Aybat, and C. M. Lagoa, “Semidefinite programming for chance constrained optimization over semialgebraic sets,” SIAM Journal on Optimization, vol. 25, no. 3, pp. 1411–1440, 2015.

- [32] H. Liu, Y. Tang, and H. H. Zhang, “A new chi-square approximation to the distribution of non-negative definite quadratic forms in non-central normal variables,” Computational Statistics & Data Analysis, vol. 53, no. 4, pp. 853–856, 2009.

- [33] H. Solomon and M. A. Stephens, “Distribution of a sum of weighted chi-square variables,” Journal of the American Statistical Association, vol. 72, no. 360a, pp. 881–885, 1977.

- [34] S. Kotz, N. L. Johnson, and D. Boyd, “Series representations of distributions of quadratic forms in normal variables II. Non-central case,” The Annals of Mathematical Statistics, vol. 38, no. 3, pp. 838–848, 1967.

- [35] P. Duchesne and P. L. De Micheaux, “Computing the distribution of quadratic forms: Further comparisons between the liu–tang–zhang approximation and exact methods,” Computational Statistics & Data Analysis, vol. 54, no. 4, pp. 858–862, 2010.

- [36] J.-P. Imhof, “Computing the distribution of quadratic forms in normal variables,” Biometrika, vol. 48, no. 3/4, pp. 419–426, 1961.

- [37] P. L. De Micheaux, “Compquadform: distribution function of quadratic forms in normal variables,” R package version, vol. 1, no. 3, 2017.

- [38] S. B. Provost and A. Mathai, Quadratic forms in random variables: theory and applications. M. Dekker, 1992.

- [39] E. A. Wan and R. Van Der Merwe, “The unscented kalman filter for nonlinear estimation,” in Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No. 00EX373). Ieee, 2000, pp. 153–158.

- [40] R. E. Kalman and R. S. Bucy, “New results in linear filtering and prediction theory,” 1961.

- [41] S. J. Julier and J. K. Uhlmann, “Unscented filtering and nonlinear estimation,” Proceedings of the IEEE, vol. 92, no. 3, pp. 401–422, 2004.

- [42] A. Jasour, A. Wang, and B. C. Williams, “Moment-based exact uncertainty propagation through nonlinear stochastic autonomous systems,” arXiv:2101.12490, 2021.

- [43] A. Wang, X. Huang, A. Jasour, , and B. C. Williams, “Fast risk assessment for autonomous vehicles using learned models of agent futures,” Robotics: Science and System (RSS), 2020.

- [44] A. Wang, A. Jasour, , and B. C. Williams, “Non-gaussian chance-constrained trajectory planning for autonomous vehicles in the presence of uncertain agents,” IEEE Robotics Automation Letters (RA-L), vol. 5(4), pp. 6041–6048, 2020.

- [45] A. Alahi, K. Goel, V. Ramanathan, A. Robicquet, L. Fei-Fei, and S. Savarese, “Social LSTM: Human trajectory prediction in crowded spaces,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 961–971.

- [46] J. Löfberg, “YALMIP: A toolbox for modeling and optimization in MATLAB,” in In Proceedings of the CACSD Conference, Taipei, Taiwan, 2004.

- [47] J. F. Sturm, “Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones,” Optimization methods and software, vol. 11, no. 1-4, pp. 625–653, 1999.

- [48] D. Zwillinger, CRC standard mathematical tables and formulae. Chapman and Hall/CRC, 2002.