Fast User Adaptation for Human Motion Prediction in Physical Human–Robot Interaction

Abstract

Accurate prediction of human movements is required to enhance the efficiency of physical human–robot interaction. Behavioral differences across various users are crucial factors that limit the prediction of human motion. Although recent neural network-based modeling methods have improved their prediction accuracy, most did not consider an effective adaptations to different users, thereby employing the same model parameters for all users. To deal with this insufficiently addressed challenge, we introduce a meta-learning framework to facilitate the rapid adaptation of the model to unseen users. In this study, we propose a model structure and a meta-learning algorithm specialized to enable fast user adaptation in predicting human movements in cooperative situations with robots. The proposed prediction model comprises shared and adaptive parameters, each addressing the user’s general and individual movements. Using only a small amount of data from an individual user, the adaptive parameters are adjusted to enable user-specific prediction through a two-step process: initialization via a separate network and adaptation via a few gradient steps. Regarding the motion dataset that has 20 users collaborating with a robotic device, the proposed method outperforms existing meta-learning and non-meta-learning baselines in predicting the movements of unseen users.

Index Terms:

Physical human-robot interaction, deep learning methods, human motion prediction, fast user adaptation, meta-learning.I Introduction

With recent advancements in robotics, collaborative robots are now considered to move in physical contact with humans [1, 2, 3, 4]. One representative example of the physical human–robot interaction (pHRI) is a situation in which a person performs a task while receiving physical assistance from a robot [5]. Under robotic guidance, humans can utilize the repeatability and accuracy of the robot, which leads to improved productivity and reduced workload [6]. The movement of robots for human assistance can be planned based on human behavior prediction [7, 8]. However, if the robot mispredicts the next human motion and a conflict between the human’s intention and the robotic guidance occurs, it can lead to a decrease in the collaborative task performance and an increase in the discomfort of the human operator [9]. Therefore, expanding the robot’s capability to predict human motion is garnering considerable interest in the HRI field [10, 11].

The different behavioral patterns of individual human operators (i.e., users) are contributing factors that limit the prediction of human motion. People have different behaviors owing to a variety of factors, such as their motor skills or personal preferences [12]. Recent deep learning-based approaches have succeeded in enhancing the accuracy of human motion prediction; however, only a few studies have addressed how to respond to different users. Most of the previous methods employed a neural network model with fixed parameters to predict the movements of various users. A straightforward alternative that can cope with various users is to train a new model from scratch or to fine-tune a pretrained model for each new user. However, acquiring sufficient data for every new user is time-consuming and impractical in real-world applications. Therefore, training a single prediction model with user-adaptive characteristics is crucial for further advancement in human motion prediction.

To address this challenge, a meta-learning approach can be considered as a possible solution. Meta-learning, learning to learn, is a promising machine learning technique for solving the fast adaptation problem, i.e., enabling a model to rapidly adapt to previously unseen tasks with small amounts of data. In the wake of model-agnostic meta-learning (MAML) [13], optimization-based meta-learning algorithms have demonstrated significant success in fast adaptation problems, such as few-shot image classification tasks.

The meta-learning approach is effective for solving fast adaptation problems. However, it is unclear whether the meta-learning approach is effective in the fast user adaptation problem, that is, enabling a single model to swiftly adapt to previously unseen users with a small amount of their behavioral data. Adapting to different user behaviors in a cooperative situation with a robot has different characteristics from the problems previously addressed by the meta-learning approach, because of its unique situation. For example, the movements of users to perform a specific task can be divided into general movements (i.e., with low variance between users) to achieve a goal and individual movements (i.e., with high variance between users) affected by individual factors. Therefore, it is necessary to distinguish between them to adapt effectively to different user behaviors. This distinction has not been the focus of previous applications using the meta-learning approach.

We have recently demonstrated in [8] that the parameter adaptation of the user prediction model using MAML is effective in providing personalized haptic guidance to each user. This was the first attempt to apply meta-learning to a fast user adaptation problem. However, that study was limited to the direct application of MAML, which is not specifically designed to solve fast user adaptation problems. Therefore, the applicability of various meta-learning algorithms to fast user adaptation still remains unclear.

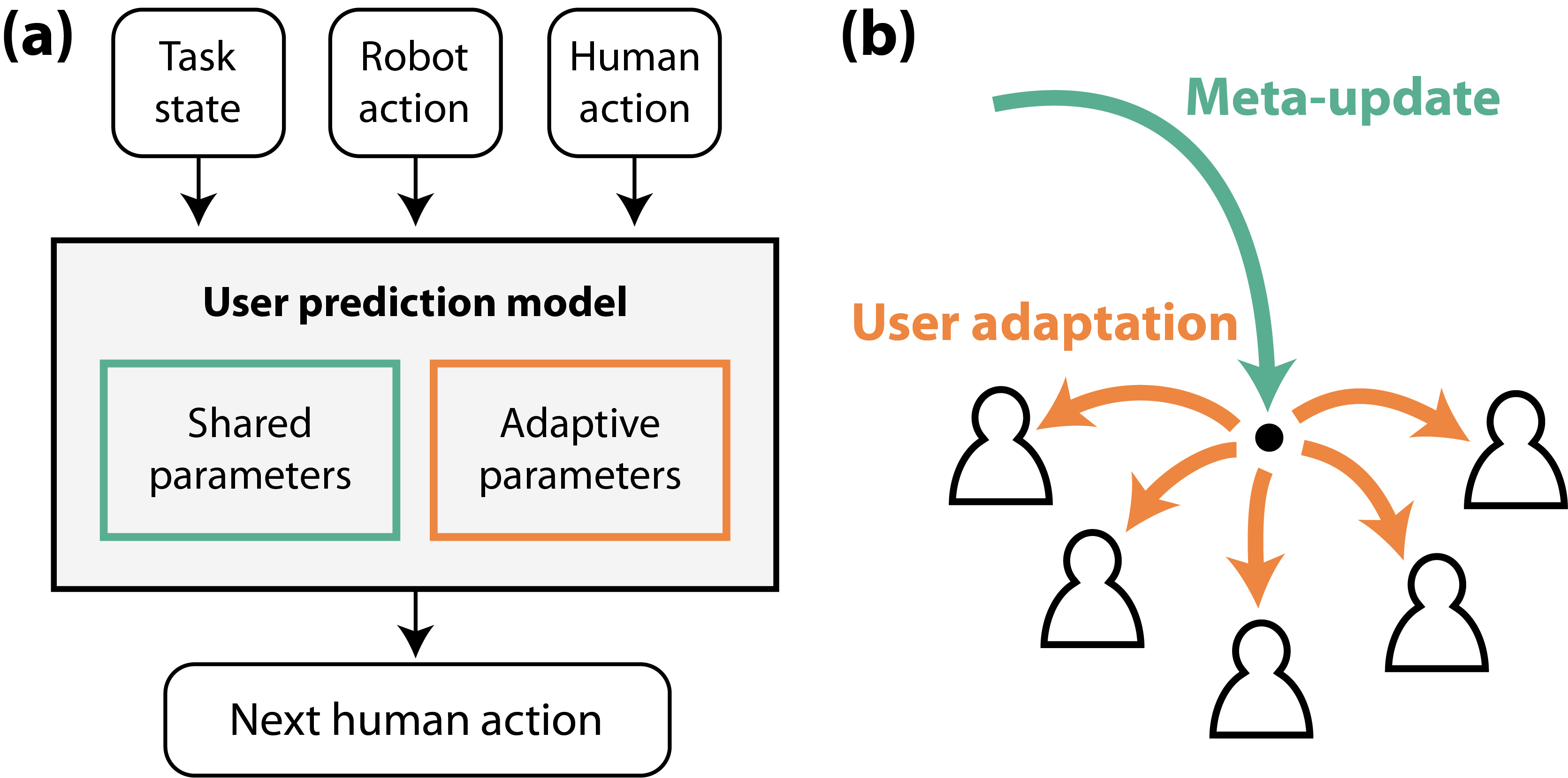

In this study, we demonstrate the feasibility of various existing meta-learning algorithms for solving fast user adaptation problems. Moreover, we propose a model structure and a meta-learning algorithm that is specialized for fast user adaptation. We focus on dividing human motions into common user movements and additional movements triggered by individual differences. Therefore, the proposed prediction model consists of shared and adaptive parameters, each of which is responsible for general and individual movements, respectively (Fig. 1(a)). In particular, the proposed method has two unique approaches: determining the user-specific initialization of the adaptive parameters via a separate network and enforcing the adaptive parameters to handle individual differences via a meta-loss function.

We evaluated the human motion prediction performance of the proposed method and compared it with several major meta-learning methods using a dataset acquired in a situation wherein a user performed a task while being assisted by a robotic device. The meta-learning methods exhibited better prediction performance than the non-meta-learning methods. This implies that meta-learning methods can be applied to solve fast user adaptation problems. In particular, our proposed meta-learning method with the initialization network and meta-loss function exhibited the best prediction accuracy among the meta-learning algorithms. In addition, we analyzed how the proposed model distinguished different users by visualizing the adaptive parameters that were adjusted to different users.

The contributions of this study are presented as follows: (1) We validate the applicability of existing meta-learning algorithms for fast user adaptation. To the best of our knowledge, this is the first attempt to compare the performance of meta-learning methods in solving fast user adaptation problems. (2) We propose a novel model structure and meta-learning algorithm specialized for enabling fast user adaptation in predicting human movements during pHRI. (3) We experimentally demonstrate that the proposed meta-learning method with our initialization network and meta-loss function exhibits the best accuracy compared to other meta-learning algorithms for predicting user movements during pHRI.

II Related Work

II-A Human Motion Prediction

Early studies on human motion prediction were developed based on probabilistic models such as the hidden Markov model [14, 15], Gaussian mixture regression [10], and probabilistic movement primitives [16]. The recent use of deep neural network architectures has resulted in a remarkable improvement in prediction performance. Fragkiadaki et al. [17] first proposed an encoder-recurrent-decoder model structure, which successfully predicted human body movements using a dataset spanning multiple subjects and activity domains. Subsequent studies have focused on utilizing context information as clues for prediction, such as the motion data of nearby people [18], or multimodal responses of the user [19]. In addition, there have been attempts to consider other learning techniques that increase prediction accuracy and robustness. For example, variational autoencoder [20] or adversarial learning [21, 22] frameworks have been adopted in motion prediction.

We focus on a learning framework that enables the proposed model to effectively respond to differences between individuals for human motion prediction, which has not been addressed in the aforementioned studies that employed fixed model parameters for all users. To solve this problem, we present a meta-learning approach that can quickly adapt the prediction model to novel users.

There have been attempts to apply a meta-learning technique to adapt the prediction model to novel tasks. Proactive and adaptive meta-learning (PAML) [23] integrates MAML and model regression networks [24] to learn an effective adaptation strategy. MoPredNet [25] is a method with a parameter generation module that utilizes external memory. These two previous studies [23, 25] focused on predicting human motion across specific categories (e.g., walking, eating, or smoking) without any interaction with a robot.

Our work is distinguished from the prior works in that we consider a situation wherein a human and a robot physically interact. In the pHRI situation of our work (i.e., virtual air hockey environment, described in Section IV-A), the user behavior is strongly affected by the robotic guidance at every timestep, making it difficult to predict the user behavior over a long time horizon because the interacting robot’s guidance over the horizon is already unpredictable. The robotic guidance depends on the opponent’s play, which is obviously unpredictable. In [8], it has been shown that even one-step human motion prediction can improve the user’s task performance under a certain pHRI situation. Therefore, we focus on predicting the user’s movement at the immediate next timestep given the dynamically changing and unpredictable robotic guidance of the current timestep. It is worth mentioning that we have tested and compared the prediction performance when using the data of the past several timesteps as the input and when using the data of the current timestep only, but there was no significant performance difference. Therefore, we decided to use the knowledge at the current timestep for the prediction.

II-B Optimization-based Meta-learning

Optimization-based meta-learning is a technique that allows a model to learn a new task quickly via an optimization procedure based on small data samples. A powerful and representative example is MAML [13], a meta-learning algorithm with a dual-structured training procedure consisting of inner and outer loop updates. The training procedure for MAML aims to attain model parameters that can reach task-specific parameters of a new task within a few gradient steps. Since MAML outperformed previous methods, such as the meta-learner with recurrent layers [26], various other optimization-based algorithms have followed, for example, Reptile [27], which simplifies the second-order gradient computation of the MAML; LEO [28], which performs adaptation in the low-dimensional embedding of model parameters; and multimodal MAML [29], which pursues a more diverse task distribution through parameter modulation.

The aforementioned meta-learning algorithms updated all of the parameters of every layer during the adaptation process. However, Raghu et al. [30] discovered that the entire parameter adaptation changed the parameters of the last layer. Therefore, the authors proposed the ANIL algorithm that adapts only the parameters of the last layer while fixing the parameters of the body layers. Even with this simplification, ANIL exhibited a performance comparable to that of the other meta-learning algorithms utilizing the entire parameter adaptation. CAVIA [31] separates adaptive parameters (which are updated during the adaptation and condition the body layers) and shared parameters (which are fixed). By separating these parameters, CAVIA outperformed MAML despite adapting fewer parameters.

Fast user adaption can be a new application of existing meta-learning algorithms. However, the applicability of the existing meta-learning algorithms and a comparison of their performance have not yet been studied. We propose a novel meta-learning framework specialized in user movement prediction after investigating the user prediction performance of existing meta-learning algorithms. Inspired by the CAVIA model, our prediction model consists of shared and adaptive parameters. Shared and adaptive parameters predict general and individual user movements, respectively. Our model responds to movement differences between individual users by adjusting adaptive parameters. In the CAVIA algorithm, the adaptive parameters are updated for each new task from a zero vector. This initialization method can impede the ability to adapt to various tasks within a few gradient steps. Hence, we propose a model structure that determines the effective user-specific initialization of adaptive parameters. In addition, we suggest a meta-loss function for the meta-update that induces the adaptive parameters to handle individual differences exclusively.

III Proposed Method

III-A Problem Definition

The goal of our meta-learning approach is to train a human motion prediction model that can quickly adapt to a new user with small data samples. In a situation wherein a user performs a task while being guided by a robot, the prediction problem can be formulated as follows: to predict , which indicates the human action at the next timestep, when given an input , which indicates knowledge at the current timestep consisting of , interaction state (e.g., state of the cooperative task), , robot action, and , human action. We let denote the dataset collected from one user of index . consists of the interaction data of the timestep length (i.e., pairs of size ).

For the fast user adaptation problem, two batches and are given, each consisting of pairs of size () and sampled from the same dataset without overlapping. is employed to adapt the parameters of the prediction model to user , and the accuracy of the mapping of the adapted model for is investigated. For the model training, a meta-dataset is employed, which is composed of datasets spanning multiple users (e.g., ). Another meta-dataset , collected from users who do not overlap with the users of , is adopted to evaluate the performance of the fast user adaptation on previously unseen users.

III-B Model Overview

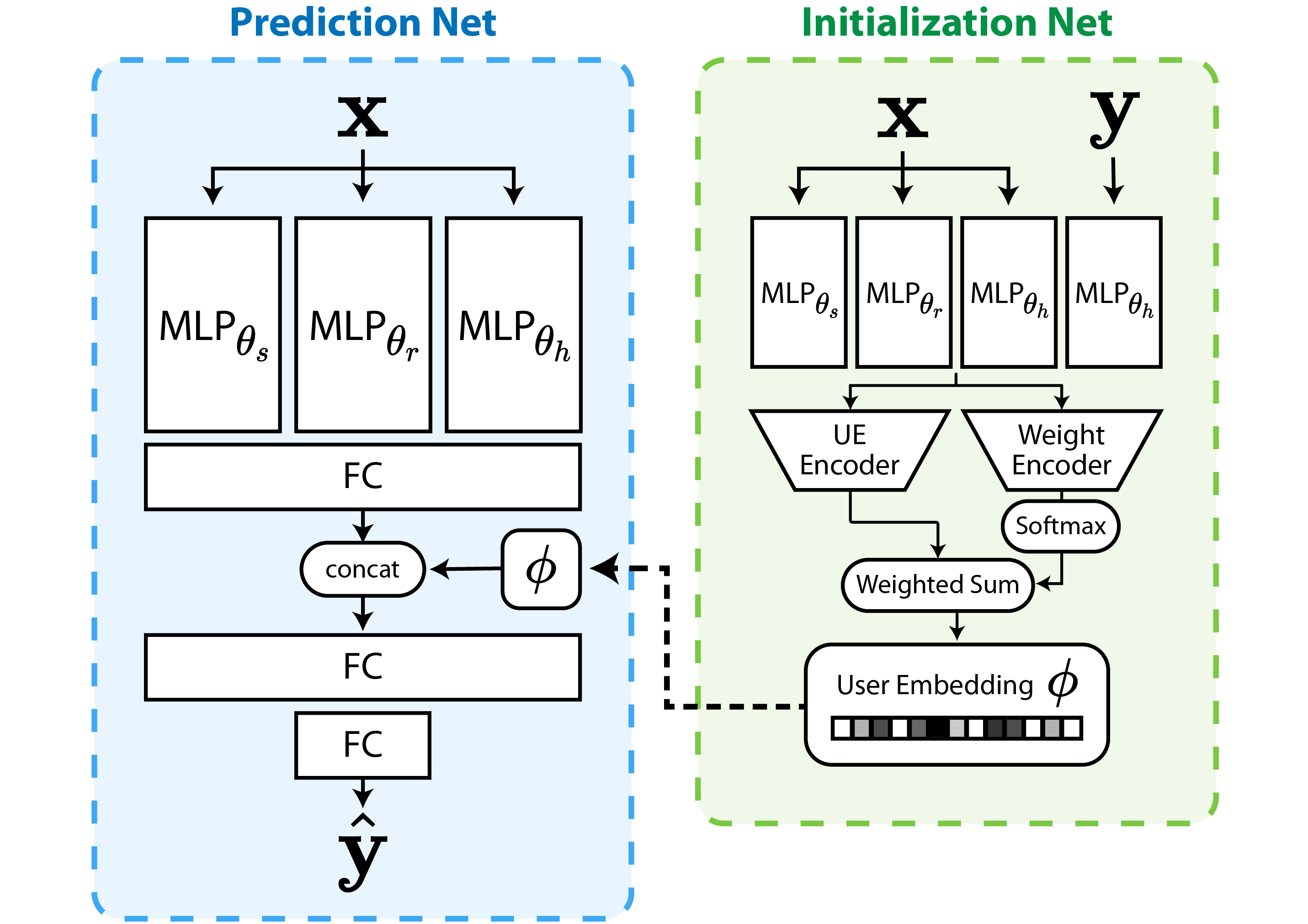

Our model parameters consist of , which is shared across all users, and , which is adapted according to each user. Because exhibits user-specific values after a series of initialization and adaptation processes, we refer to as the user embedding (UE). To implement a prediction model conditioned by , we concatenated to a hidden state vector of the model’s middle layer and adopted the concatenated vector as the input of the next layer, which was originally proposed in [31]. In addition, we propose a model structure that determines the effective initial values of user embedding for a user based on small data samples . Accordingly, as illustrated in Fig. 2, the entire structure of our model is composed of two parts: a prediction network that outputs user-specific predicted movements of the user, and an initialization network that outputs the effective initial point of user embedding before adaptation. The shared parameters contains all trainable parameters of the prediction network and the initialization network, except for the user embedding (see Fig. 3(c)).

In the prediction network, three types of inputs (i.e., and ) were fed into separate multilayer perceptron (MLP) blocks (with parameters , and , which are subsets of ) that extract each feature. Subsequently, feature vectors passed through the integrating layers to produce , which is the predicted user movement. The user embedding was concatenated with the input vector of the second integrating layer to allow user-specific prediction.

The initialization network received the entire consisting of pairs of as the input. To extract the features of the , MLPs that shared the parameters and from the prediction network were employed, and an MLP with parameter was again adopted to extract the features of . Note that and are vectors of the same format that represent human actions. To obtain a representative corresponding to pairs of , we first obtained the user embedding candidates and weight values, each corresponding to one pair, by feeding the feature vectors into a UE encoder and weight encoder, respectively. The weight value indicates the extent to which the corresponding pair expresses the user characteristics. The relative importance of the corresponding pair among the batches was determined by passing the weight value through the softmax function. Therefore, the representative (, where is the size of ) was acquired by matrix multiplication of the weight values () and user embedding candidates (). We employed three-layered MLPs for each UE and weight encoder. For the UE encoder, because it is unnecessary to embed different users to have the same bias, we deleted the bias term of the last layer.

III-C Meta-learning Procedure

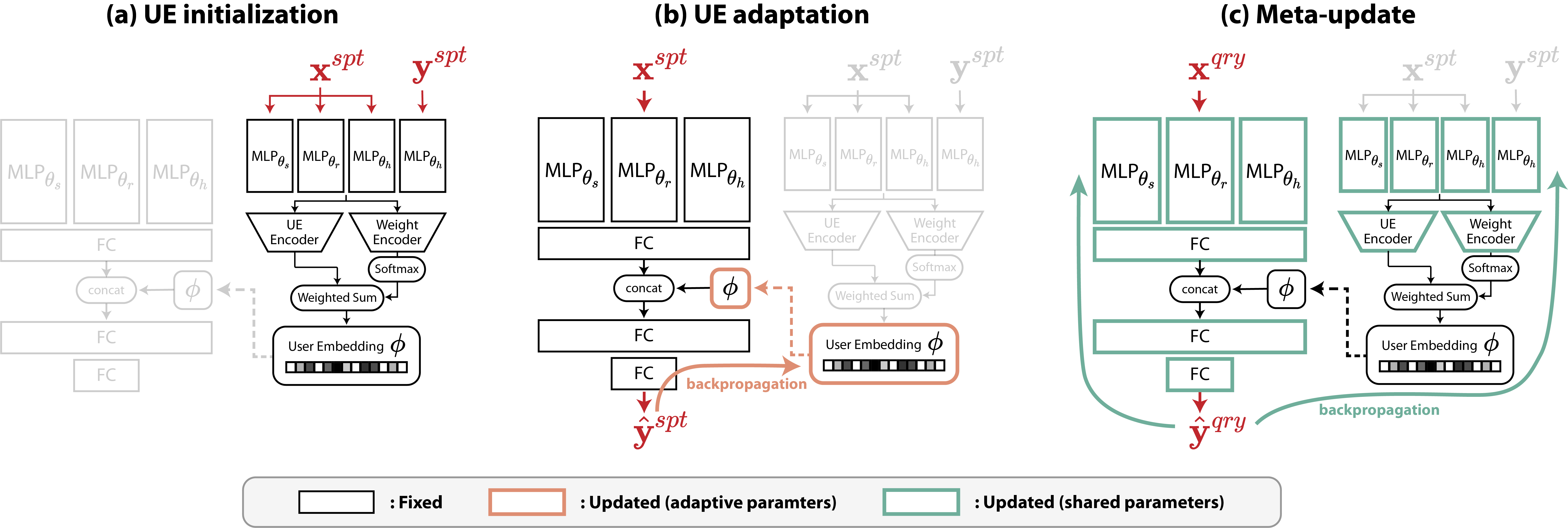

The meta-training process of the proposed model is realized in three steps: (1) UE initialization, (2) UE adaptation, and (3) meta-update, as summarized in Algorithm 1 and Fig. 3. User-specific parameters (i.e., ) are determined via the UE initialization and UE adaptation steps, and the parameters shared across various users (i.e., ) are learned via the meta-update step.

During the first step, UE initialization, is obtained by passing through the initialization network described in Section III-B. Subsequently, in the UE adaptation step, is updated to by backpropagating , which is the regression loss of the prediction network conditioned by when using . Either one or a few gradient steps relative to can be taken. For example, using one gradient step, can be computed as follows:

| (1) |

where denotes the inner learning rate. In the case of adopting multiple gradient steps, decaying the inner learning rate by the decay rate for every update can benefit a delicate adaptation.

In the meta-update step, we update using , while fixing the determined by . The meta-update is performed by overseeing the user-specific prediction results and values of the adapted from multiple users. The meta-loss we propose consists of three terms expressed as:

| (2) |

where represents the number of users sampled for the one meta-update, whereas and are the weights of each loss term. The first term aims to update to reduce the regression loss of in the prediction network with adapted user-specific parameters , which allows to infer general human movements across the users. The second term, which is inspired by [28], enables the initialization network to output an effective close to the adapted . The denotes that we consider it as a constant, therefore the derivative of with respect to is zero. The last term encourages the average of multiple , each adapted to a different user, to move toward a zero vector. The common nonzero bias from multiple indicates the general movement tendency of the users. Therefore, by forcibly reducing the bias, we induce a general tendency to learn by . In addition, the of different users are induced to be disentangled around zero and eventually learned to address individual differences. Taken together, is updated with a gradient step of the meta-loss; therefore,

| (3) |

where indicates the outer learning rate. The prediction and initialization networks were gradually trained by repeatedly performing the three steps using the sampled batch for each iteration. During the evaluation phase, the meta-update step is omitted, and only the UE initialization and UE adaptation processes are performed to achieve user-adapted motion prediction.

IV Experiment and Results

IV-A Data Acquisition

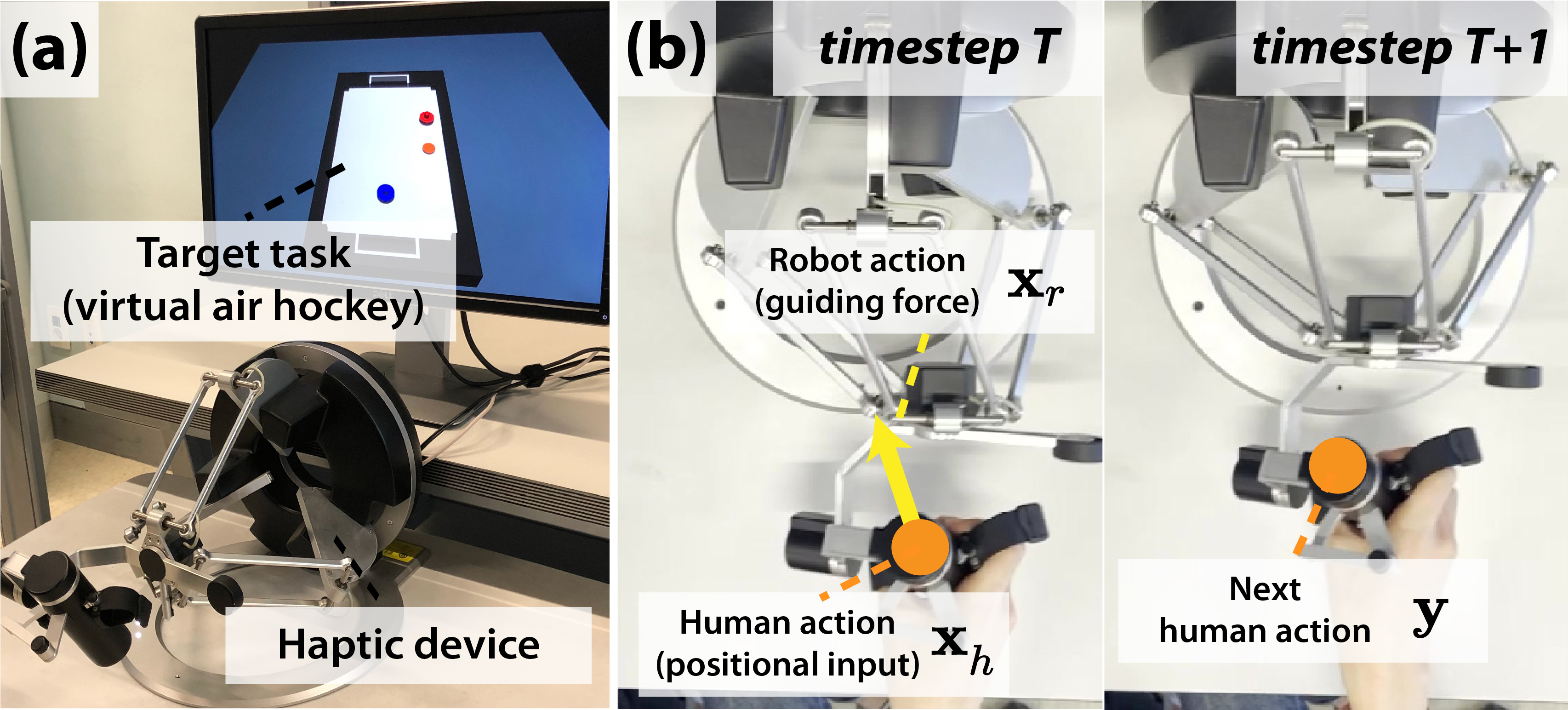

To evaluate our meta-learning approach for the fast user adaptation problem, it is necessary to collect a multi-person motion dataset acquired in a situation wherein a human physically interacts with a robot. A representative pHRI situation occurs when a user performs a target task with haptic guidance from a robot. The haptic guidance system, which has been recognized as a promising human–machine interface [5], can be defined as a system in which the control input of the target task is determined by the interaction between the force exerted by the user and the guiding force of the robot [32]. Because users are free to decide how much guiding force they will accept every moment, different users exhibit different responses to the guiding force. Therefore, it is possible to obtain a wide variety of human motion data, which is suitable for evaluating fast user adaptation performance.

We utilized a dataset consisting of motion data from 20 participants performing a target task with haptic guidance, which was collected in our previous work [8]. In the experimental environment, as illustrated in Fig. 4(a), the participants were instructed to play a virtual air hockey game controlled with a haptic device. In the hockey environment, a user receives points by smashing the puck with their paddle and putting the puck into the opponent’s goalpost. Conversely, the user loses points if they fail to defend a puck heading to their goal. Through the haptic device, the participants consistently received a guiding force to assist them; however, they were allowed to choose whether to follow the guidance. The robotic guiding force dynamically changed according to the opponent’s play.

The entire dataset is composed of the following three data types suitable for our prediction model structure, as described in Section III-B. First, the human action data (corresponding to and y) consist of 2-D position vectors of the end-effector of the haptic device determined by the user, which was transmitted to the target task as the control input. Second, the robot action data (corresponding to ) consist of 2-D force vectors that the robot exerts on the user. Finally, the state data of the target task (corresponding to ) consist of the 2-D position and velocity vectors of the paddles and the puck in the virtual air hockey environment. Fig. 4(b) presents an example of the interaction process between a user and a robot that occurred on a haptic device. In the timestep , the user action corresponds to the position vector of the end-effector of the haptic device (marked as an orange dot). Simultaneously, the user receives a guiding force (marked as a yellow arrow). The example illustrates the next user action (i.e., the position vector at timestep ) when the user follows the guiding force. If the guiding force does not match the user’s intention, the user is allowed to control the end-effector in their desired direction by applying a force exceeding the guiding force.

A total of 1.52 M timesteps of data (i.e., 1.52 M pairs of ) were collected from 20 participants aged (mean standard deviation across participants) years. Each participant performed trials, and the length of data collected per participant (i.e., ) was K timesteps. The total duration of the data collection per participant was approximately 1–1.5 h, and the details of the procedure are described in [8]. All collected data were normalized. The mean values of each participant’s action (i.e., ) were (horizontal direction) and (vertical direction).

According to Article 15 (2) of the Bioethics and Safety Act and Article 13 of the Enforcement Rule of Bioethics and Safety Act in Korea, a research project “which utilizes a measurement equipment with simple physical contact that does not cause any physical change in the subject” (Korean to English translation by the authors) is exempted from the approval. The entire experimental procedure was designed to use only a haptic device and a monitor that did not cause any physical changes in the subject.

IV-B Experimental Setting

Evaluation: To evaluate the fast user adaptation performance, we measured the prediction performance for , which consisted of user datasets that were not used for training, that is, users in were not included in . We assumed a realistic sampling situation during the evaluation procedure. If (i.e., batch for adaptation) and (i.e., batch for prediction) were randomly sampled from the entire user dataset as in the training procedure (Algorithm 1), there may be unrealistic cases of predicting the current human motion while utilizing the later motion data within the same episode for adaptation. To prevent this, for the evaluation, we utilized data from different episodes to adapt the model and to validate the prediction, that is, and consisted of data from separate episodes.

As a metric for prediction performance, we adopted the mean squared error (MSE) between the predicted value and the ground-truth value of the next user action. Five-fold cross-validation was conducted to reduce the effect of the discrepancy between the training and test datasets. Therefore, the 20-user dataset was divided into five sub-datasets consisting of four users each, and a total of five training-validation processes were performed using five pairs. The averaged values of the resulting five prediction errors (MSE) were used to compare the learning methods.

Baselines: We set baseline methods, including non-meta-learning and meta-learning approaches. Ahead of both approaches, the zero-velocity baseline [33] was adopted, which assumed that the user maintained the previous action. This helps to understand the prediction performance of the other learning methods at an appropriate scale.

As a non-meta-learning approach, we trained the same structured prediction model using a conventional supervised learning method. For a fair comparison with the adaptation process of the meta-learning approach, we aimed to determine the extent to which performance is improved when the model trained with a non-meta-learning method goes through the parameter update process with (i.e., fine-tuned). Therefore, we implemented the non-meta-learning baselines in two ways: when the model parameters were fixed and when they were fine-tuned with a few gradient steps, similar to the adaptation steps of the meta-learning approach.

For the meta-learning approaches, we evaluated the most representative methods, MAML and Reptile. In addition, we tested the performance of integrating the model regression network (MRN) [24] into the adaptation process of MAML, which is equivalent to PAML [23]. Our approach to solve the fast user adaptation problem was to separate the adaptive parameters and enforce them to represent only user-specific movements. Therefore, we also considered two other meta-learning baselines, ANIL and CAVIA, that distinguish between adaptive and shared parameters. There is a difference in how each method divides the parameters. Whereas ANIL has the body layers be fixed and the last layer adaptive, CAVIA adopts the adaptive parameters that join as an auxiliary input for the body layers.

Implementation details: All learning-based baseline methods were implemented using our prediction network structure (Fig. 2), except for the user embedding . Only the CAVIA method adopts additional adaptive parameters to the body layers; therefore, it can be implemented using the same structure as our prediction network. We set the hyper-parameters of our method and baseline methods to be as similar as possible. For the meta-learning approaches, including our method, the adaptation process was conducted through five gradient steps based on a stochastic gradient descent optimizer. CAVIA and the proposed method that updates (we set the size of to 32) adopted an inner learning rate of 0.05, and the other methods, which directly update the parameters of the network layers, employed an inner learning rate of 0.01, for both training and evaluation phases. Exceptionally, in the training phase of the MAML and ANIL algorithms, a small inner learning rate of 0.003 was adopted, because it exhibited more stable learning. Regarding the meta-update, an Adam optimizer with an outer learning rate of 0.001 was adopted for all methods, and each model was trained for 500 K steps. The batch sizes of and were set to 1 K samples. For the methods adopting multi-person data for one meta-update, such as Reptile or our method, the total number of data samples used for one meta-update was maintained at 1 K samples by adopting 200 samples each from five different users. Regarding the non-meta-learning approaches, an Adam optimizer with a learning rate of 0.001 was employed to train the model for 500 K steps, using batches consisting of 1 K samples. In the fine-tuned baseline case, five gradient steps with a learning rate of 0.01 were taken in the same manner as the adaptation process in the meta-learning approaches.

IV-C Results

| Methods | MSE | |

|---|---|---|

| Zero-velocity [33] | ||

| Non-meta-learning | Fixed | |

| Fine-tuned | ||

| Meta-learning | MAML [13] | |

| Reptile [27] | ||

| MAML + MRN [24] | ||

| ANIL [30] | ||

| CAVIA [31] | ||

| Ablation | w/o UE initialization | |

| w/o UE bias reduction | ||

| Our method | ||

The quantitative results are presented in Table IV-C. Compared to the zero-velocity baseline, all learning-based baselines exhibited a significantly lower prediction error. Meta-learning approaches exhibited more accurate performance than non-meta-learning approaches. The fine-tuned baseline did not exhibit a significant difference in prediction performance when compared to the fixed baseline. In contrast, the superior performance of the meta-learning approaches indicates that they succeeded in adapting rapidly to predict the movements of previously unseen users with only a few gradient steps. In other words, a meta-learning approach can be an effective solution for fast user adaptation problems.

A performance difference existed within the meta-learning baselines, and CAVIA exhibited the best performance among the baselines. As stated in [31], CAVIA outperformed MAML in solving various problems, such as image classification or reinforcement learning, and we observed the same results for the fast user adaptation problem. Notably, CAVIA and ANIL both applied separate parameters for adaptation; however, the performance of ANIL did not differ from that of MAML, whereas CAVIA exhibited better performance. This implies that designing the stage at which the model divides shared and adaptive parameters plays an important role in improving user adaptation performance. For example, in ANIL, because all body layers are fixed across the users and only the last layer is adapted, shared features are obtained from input data and user-specific computation is performed in the process of assembling the shared features. However, in CAVIA, because the adaptive parameters join in the middle stage, the model can consider both the shared and user-specific features, which is consistent with our approach that considers user motion as a combination of general motion across users and motion with individual differences.

Our method outperformed all other baseline methods. In particular, the superior performance over CAVIA indicates the benefits of the two components we proposed: (i) the user embedding initialization and (ii) the meta-update reducing the non-zero bias of multiple user embeddings. We verified the contribution of each component by conducting an ablation study. We implemented the prediction models by excluding each component as follows:

-

•

Without UE initialization: Each user embedding was initialized with a zero vector. The meta-loss in (2) without the second term was utilized.

-

•

Without UE bias reduction: Each meta-update was performed with a batch sampled from a single user. The meta-loss in (2) without the last term was utilized.

As shown in Table IV-C, both models without either UE initialization or UE bias reduction outperformed all the baselines, indicating that each component contributed to the performance improvement of the proposed method with UE initialization and UE bias reduction. Among the two components, the bias reduction of user embeddings contributed more than the UE initialization.

IV-D Analysis of User Embeddings

Implementing the adaptation with independent embedding parameters, rather than updating the entire model parameters such as MAML, has the advantage of being easily interpretable [31]. Moreover, the embedding can efficiently reflect the behavioral characteristics of each user in a low-dimensional space because the embeddings are induced to exclusively learn the individual differences.

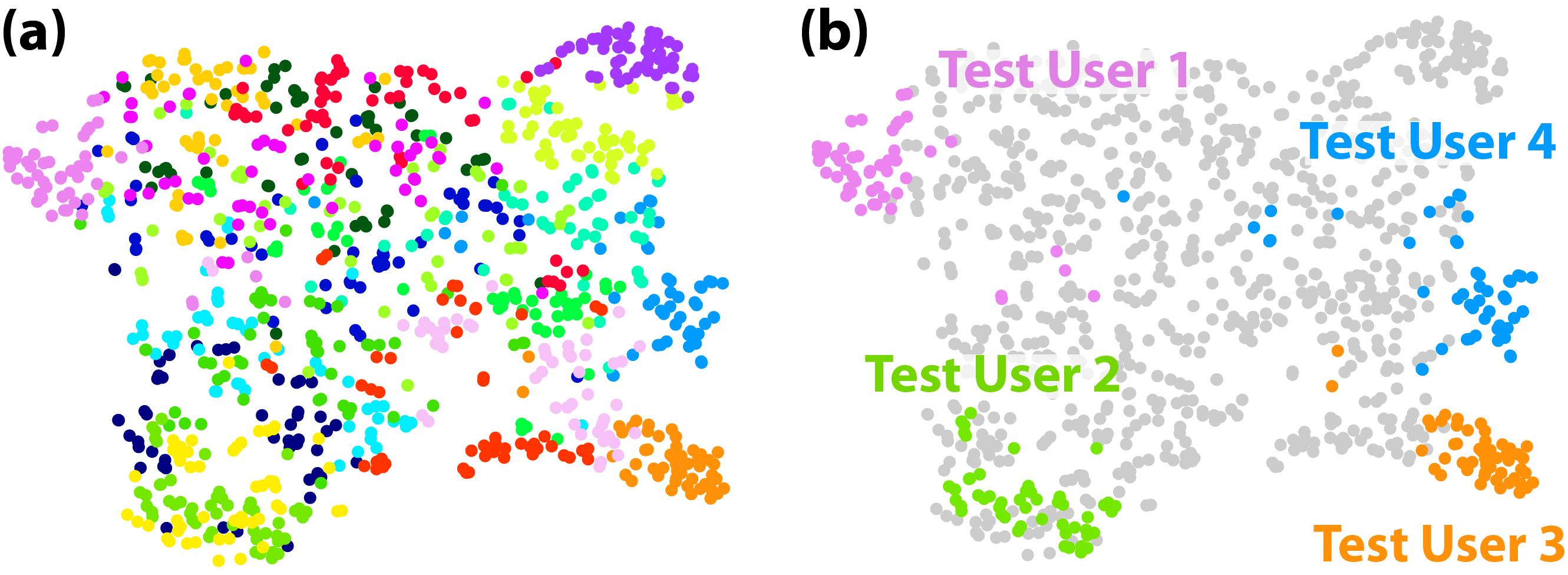

We qualitatively investigated the user adaptation performance of our method by visualizing user embeddings adapted to different users. Within the dataset of 20 participants, we sampled 50 batches, each comprising 1 K timesteps of data, for each user. Using our trained model, one user embedding per batch was produced via UE initialization and adaptation processes (i.e., five gradient steps). Fig. 5(a) presents the t-SNE [34] projection results of the 32-dimensional user embeddings onto 2-D space. It can be observed that user embeddings generated from the same user dataset (i.e., same-colored dots) are clustered together, and user embeddings from different user datasets are disentangled. This indicates that our user adaptation approach succeeded in rapidly extracting user characteristics using only small data samples. Furthermore, Fig. 5(b) highlights the embedding generation results of four users whose data were not employed for model training. Our method effectively responded to previously unseen users, as evidenced by the well-disentangled embeddings of test users.

V Discussion and Conclusion

We focused on the one-step prediction of human behavior because we considered a situation in which a human was guided by a robot at every timestep, and the robotic guidance was dynamically changing and unpredictable. However, our proposed meta-learning algorithm can be applied to train a model that predicts human motion over a longer time horizon (assuming that the robotic guidance over the horizon is known in advance). In this case, the prediction network (Fig. 2) needs to be modified based on recurrent neural networks instead of the current fully connected layers [17, 23]. The modified model can be trained using the same meta-learning procedure as in Algorithm 1.

In this study, we introduced a meta-learning approach to train a human prediction model that facilitates fast user adaptation, that is, allowing the model to swiftly respond to previously unseen users with small data samples. We proposed a meta-learning algorithm and a model structure that predicts the movement of individual users in pHRI situations. The superior prediction performance of the proposed method was quantitatively verified using a 20-user dataset. We also qualitatively validated the fast user adaptation performance of the proposed method by investigating the disentanglement of user embeddings adapted to various users. The proposed meta-learning framework for fast user adaptation can be useful when robots cannot obtain sufficient data from new users, such as service robots that encounter many people in a short period of time.

References

- [1] J. R. Medina, M. Lawitzky, A. Mörtl, D. Lee, and S. Hirche, “An experience-driven robotic assistant acquiring human knowledge to improve haptic cooperation,” in Proc. IROS, 2011, pp. 2416–2422.

- [2] A. Mörtl, M. Lawitzky, A. Kucukyilmaz, M. Sezgin, C. Basdogan, and S. Hirche, “The role of roles: Physical cooperation between humans and robots,” Int. J. Robot. Res., vol. 31, no. 13, pp. 1656–1674, 2012.

- [3] V. V. Unhelkar, P. A. Lasota, Q. Tyroller, R.-D. Buhai, L. Marceau, B. Deml, and J. A. Shah, “Human-aware robotic assistant for collaborative assembly: Integrating human motion prediction with planning in time,” IEEE Robot. Automat. Lett., vol. 3, no. 3, pp. 2394–2401, 2018.

- [4] H.-S. Moon and J. Seo, “Sample-efficient training of robotic guide using human path prediction network,” arXiv preprint arXiv:2008.05054, 2020.

- [5] D. A. Abbink, M. Mulder, and E. R. Boer, “Haptic shared control: smoothly shifting control authority?” Cogn. Technol. Work, vol. 14, no. 1, pp. 19–28, 2012.

- [6] P. Salvine, M. Nicolescu, and H. Ishiguro, “Benefits of human-robot interaction,” IEEE Robot. Automat. Mag., vol. 18, no. 4, pp. 98–99, 2011.

- [7] J. R. Medina, T. Lorenz, and S. Hirche, “Synthesizing anticipatory haptic assistance considering human behavior uncertainty,” IEEE Trans. Robot., vol. 31, no. 1, pp. 180–190, 2015.

- [8] H.-S. Moon and J. Seo, “Optimal action-based or user prediction-based haptic guidance: Can you do even better?” in Proc. CHI, 2021, pp. 1–12.

- [9] C. Passenberg, A. Glaser, and A. Peer, “Exploring the design space of haptic assistants: The assistance policy module,” IEEE Trans. Haptics, vol. 6, no. 4, pp. 440–452, 2013.

- [10] A. Kanazawa, J. Kinugawa, and K. Kosuge, “Adaptive motion planning for a collaborative robot based on prediction uncertainty to enhance human safety and work efficiency,” IEEE Trans. Robot., vol. 35, no. 4, pp. 817–832, 2019.

- [11] Y. Cheng, L. Sun, C. Liu, and M. Tomizuka, “Towards efficient human-robot collaboration with robust plan recognition and trajectory prediction,” IEEE Robot. Automat. Lett., vol. 5, no. 2, pp. 2602–2609, 2020.

- [12] N. Mitsunaga, C. Smith, T. Kanda, H. Ishiguro, and N. Hagita, “Adapting robot behavior for human–robot interaction,” IEEE Trans. Robot., vol. 24, no. 4, pp. 911–916, 2008.

- [13] C. Finn, P. Abbeel, and S. Levine, “Model-agnostic meta-learning for fast adaptation of deep networks,” in Proc. ICML, 2017, pp. 1126–1135.

- [14] D. Kulić, C. Ott, D. Lee, J. Ishikawa, and Y. Nakamura, “Incremental learning of full body motion primitives and their sequencing through human motion observation,” Int. J. Robot. Res., vol. 31, no. 3, pp. 330–345, 2012.

- [15] M. Power, H. Rafii-Tari, C. Bergeles, V. Vitiello, and G.-Z. Yang, “A cooperative control framework for haptic guidance of bimanual surgical tasks based on learning from demonstration,” in Proc. ICRA, 2015, pp. 5330–5337.

- [16] A. Paraschos, C. Daniel, J. Peters, and G. Neumann, “Probabilistic movement primitives,” in Proc. NeurIPS, 2013.

- [17] K. Fragkiadaki, S. Levine, P. Felsen, and J. Malik, “Recurrent network models for human dynamics,” in Proc. ICCV, 2015, pp. 4346–4354.

- [18] V. Adeli, E. Adeli, I. Reid, J. C. Niebles, and H. Rezatofighi, “Socially and contextually aware human motion and pose forecasting,” IEEE Robot. Automat. Lett., vol. 5, no. 4, pp. 6033–6040, 2020.

- [19] H.-S. Moon and J. Seo, “Prediction of human trajectory following a haptic robotic guide using recurrent neural networks,” in Proc. WHC, 2019, pp. 157–162.

- [20] J. Bütepage, H. Kjellström, and D. Kragic, “Anticipating many futures: Online human motion prediction and generation for human-robot interaction,” in Proc. ICRA, 2018, pp. 4563–4570.

- [21] L.-Y. Gui, K. Zhang, Y.-X. Wang, X. Liang, J. M. Moura, and M. Veloso, “Teaching robots to predict human motion,” in Proc. IROS, 2018, pp. 562–567.

- [22] M. S. Yasar and T. Iqbal, “A scalable approach to predict multi-agent motion for human-robot collaboration,” IEEE Robot. Automat. Lett., vol. 6, no. 2, pp. 1686–1693, 2021.

- [23] L.-Y. Gui, Y.-X. Wang, D. Ramanan, and J. M. Moura, “Few-shot human motion prediction via meta-learning,” in Proc. ECCV, 2018, pp. 432–450.

- [24] Y.-X. Wang and M. Hebert, “Learning to learn: Model regression networks for easy small sample learning,” in Proc. ECCV, 2016, pp. 616–634.

- [25] C. Zang, M. Pei, and Y. Kong, “Few-shot human motion prediction via learning novel motion dynamics.” in Proc. IJCAI, 2020, pp. 846–852.

- [26] S. Ravi and H. Larochelle, “Optimization as a model for few-shot learning,” in Proc. ICLR, 2016.

- [27] A. Nichol, J. Achiam, and J. Schulman, “On first-order meta-learning algorithms,” arXiv preprint arXiv:1803.02999, 2018.

- [28] A. A. Rusu, D. Rao, J. Sygnowski, O. Vinyals, R. Pascanu, S. Osindero, and R. Hadsell, “Meta-learning with latent embedding optimization,” in Proc. ICLR, 2019.

- [29] R. Vuorio, S.-H. Sun, H. Hu, and J. J. Lim, “Multimodal model-agnostic meta-learning via task-aware modulation,” in Proc. NeurIPS, 2019.

- [30] A. Raghu, M. Raghu, S. Bengio, and O. Vinyals, “Rapid learning or feature reuse? towards understanding the effectiveness of maml,” in Proc. ICLR, 2020.

- [31] L. Zintgraf, K. Shiarli, V. Kurin, K. Hofmann, and S. Whiteson, “Fast context adaptation via meta-learning,” in Proc. ICML, 2019, pp. 7693–7702.

- [32] D. A. Abbink and M. Mulder, “Neuromuscular analysis as a guideline in designing shared control,” Advances in Haptics, pp. 499–516, 2010.

- [33] J. Martinez, M. J. Black, and J. Romero, “On human motion prediction using recurrent neural networks,” in Proc. CVPR, 2017, pp. 4674–4683.

- [34] L. Van der Maaten and G. Hinton, “Visualizing data using t-sne,” J. Mach. Learn. Res., vol. 9, no. 11, 2008.