Faster Approximation Algorithms for Parameterized Graph Clustering and Edge Labeling

Abstract.

Graph clustering is a fundamental task in network analysis where the goal is to detect sets of nodes that are well-connected to each other but sparsely connected to the rest of the graph. We present faster approximation algorithms for an NP-hard parameterized clustering framework called LambdaCC, which is governed by a tunable resolution parameter and generalizes many other clustering objectives such as modularity, sparsest cut, and cluster deletion. Previous LambdaCC algorithms are either heuristics with no approximation guarantees, or computationally expensive approximation algorithms. We provide fast new approximation algorithms that can be made purely combinatorial. These rely on a new parameterized edge labeling problem we introduce that generalizes previous edge labeling problems that are based on the principle of strong triadic closure and are of independent interest in social network analysis. Our methods are orders of magnitude more scalable than previous approximation algorithms and our lower bounds allow us to obtain a posteriori approximation guarantees for previous heuristics that have no approximation guarantees of their own.

1. Introduction

In network analysis, graph clustering is the task of partitioning a graph into well-connected sets of nodes (called communities, clusters, or modules), that are more densely connected to each other than they are to the rest of the graph (Fortunato and Hric, 2016; Schaeffer, 2007; Porter et al., 2009). This fundamental task has widespread applications across numerous domains, including detecting related genes in biological networks (Shamir et al., 2004; Ben-Dor et al., 1999), finding communities in social networks (Veldt et al., 2018; Newman and Girvan, 2004), and image segmentation (Shi and Malik, 2000), to name only a few. A standard approach for finding clusters in a graph is to optimize some type of combinatorial objective function that encodes the quality of a clustering of nodes. Just as there are many different applications and reasons why one may wish to partition the nodes of a graph into clusters, there are many different types of objective functions for graph clustering (Newman and Girvan, 2004; Shi and Malik, 2000; Shamir et al., 2004; Bohlin et al., 2014; Delvenne et al., 2010), all of which strike a different balance between the goal of making clusters dense internally and the goal of ensuring that few edges cross cluster boundaries. In order to capture many different notions of community structure within the same framework, many graph clustering optimization objectives come with tunable resolution parameters (Schaub et al., 2012; Veldt et al., 2019a; Reichardt and Bornholdt, 2006; Delvenne et al., 2010; Newman, 2016), which control the tradeoff between the internal edge density and the inter-cluster edge density resulting from optimizing the objective.

One of the biggest challenges in graph clustering is that the vast majority of clustering objectives are NP-hard. Thus, while it is often easy to define a new way to measure clustering structure, it is very hard to find optimal (or even certifiably near-optimal) clusters in practice for any given objective. There has been extensive theoretical research on approximation algorithms for different clustering objectives (Arora et al., 2009; Leighton and Rao, 1999; Veldt et al., 2018; Charikar et al., 2003), but most of these come with high computational costs and memory constraints, often because they rely on expensive convex relaxations of the NP-hard clustering objective. On the other hand, scalable graph clustering algorithms have been designed based on local node moves and greedy heuristics (Newman and Girvan, 2004; Newman, 2006; Blondel et al., 2008; Traag et al., 2019; Veldt et al., 2018; Shi et al., 2021), but these come with no theoretical approximation guarantees. As a result, it can be challenging to tell whether the structure of an output clustering depends more on the underlying objective function or on the mechanisms of the algorithm being used.

This paper focuses on an existing optimization graph clustering framework called LambdaCC (Veldt et al., 2018; Gleich et al., 2018; Shi et al., 2021; Gan et al., 2020), which comes with two key benefits. The first is that it can detect different types of community structures by tuning a resolution parameter . Many existing clustering objectives can be recovered as special cases for specific choices of (Veldt et al., 2018). The second benefit is that LambdaCC can be viewed as a special case of correlation clustering (Bansal et al., 2004), a framework for clustering based on similarity and dissimilarity scores, that has been studied extensively from the perspective of approximation algorithms (Charikar et al., 2005; Demaine et al., 2006; Gleich et al., 2018). As a result, LambdaCC directly inherits an approximation algorithm that holds for any correlation clustering problem (Charikar et al., 2005; Demaine et al., 2006) and is amenable to even better approximation guarantees in some parameter regimes. Gleich et al. (Gleich et al., 2018) showed that for very small values of , the approximation is the best that can be achieved by rounding a linear programming relaxation (the most successful approach known for approximating the objective). However, a 3-approximation algorithm has been developed for the regime where (Veldt et al., 2018). Despite these results, LambdaCC suffers from a similar theory-practice gap as many other clustering frameworks. These previous approximation algorithms rely on expensive linear programming relaxations and are therefore not scalable. While faster heuristic algorithms do exist (Veldt et al., 2018; Shi et al., 2021), these come with no approximation guarantees.

The present work: fast approximation algorithms for parameterized graph clustering.

We develop algorithms for LambdaCC that come with rigorous approximation guarantees and are also far more scalable than existing approximation algorithms for this problem. We present new algorithms for all values of the parameter , focusing especially on the regime , since constant factor approximations are possible in this regime and have been a focus in previous research. This is also the regime where LambdaCC interpolates between two existing objectives known as cluster editing and cluster deletion (Shamir et al., 2004). We first of all design a fast combinatorial approximation algorithm that returns a 6-approximation for any value of , that runs in only time, where is the degree of node . While this is a factor of 2 worse than the best existing 3-approximation, it is orders of magnitude faster than this previous approach, which requires solving an LP relaxation with variables for an -node graph and takes time. Our second algorithm is an improved approximation for (which ranges from 3 to 5 as ) based on rounding an LP relaxation with far fewer constraints. In numerical experiments, we confirm for a large collection of real-world networks that the number of constraints in this cheaper LP tends to be orders of magnitude smaller than the constraint set of the canonical LP relaxation. It can also be run on graphs that are so large that even forming the constraint matrix for the canonical LP relaxation leads to memory issues. Even more significantly, this cheaper LP that we consider is a covering LP, a special type of LP that can be solved using combinatorial algorithms based on the multiplicative weights update method (Fleischer, 2004; Quanrud, 2020; Garg and Khandekar, 2004).

We also adapt our techniques to obtain a approximation by rounding the cheaper LP when . As is the case when rounding the righter and more expensive canonical LP relaxation, this gets increasingly worse as decreases. This is not surprising, given that even the canonical LP relaxation has an integrality gap (Gleich et al., 2018). Our approximation is in fact quite close to the previous approximation for small that was previously developed by Gleich et al. (Gleich et al., 2018) based on the canonical LP.

All of our approximation algorithms rely on a new connection between LambdaCC and an edge labeling problem that is based on the social network analysis principle of strong triadic closure (Easley and Kleinberg, 2010; Sintos and Tsaparas, 2014; Granovetter, 1973). This principle posits that if two people share strong links to a mutual friend, then they are likely to share at least a weak connection with each other. This principle has inspired a line of research on strong triadic closure (STC) labeling problems (Sintos and Tsaparas, 2014; Oettershagen et al., 2023; Veldt, 2022; Grüttemeier and Komusiewicz, 2020; Grüttemeier and Morawietz, 2020), which label edges in a graph as weak or strong (or in some cases add “missing” edges) in order to satisfy the strong triadic closure property. Previous research has shown that unweighted variants of this labeling problem are related to cluster editing and cluster deletion (Grüttemeier and Komusiewicz, 2020; Grüttemeier and Morawietz, 2020) (special cases of LambdaCC when and respectively). Recently it was shown that lower bounds and algorithms for these unweighted STC problems can be useful tools in designing faster approximation algorithms for cluster editing and cluster deletion (Veldt, 2022). We generalize this strategy by defining a new parameterized edge-labeling problem we call LambdaSTC, which provides new types of lower bounds for LambdaCC. We also provide a 3-approximation algorithm for LambdaSTC that applies for every value of . All of these constitute new results for an edge labeling problem of independent interest in social network analysis, but our primary motivation is to use them to develop faster clustering approximation algorithms.

We demonstrate in numerical experiments that our algorithms are fast and effective, far surpassing their theoretical guarantees. In our experiments, we even find that solving our cheaper LP relaxation actually tends to return a solution that can quickly be certified to be the optimal solution for the more expensive canonical LP relaxation for LambdaCC. When this happens, we can use previous rounding techniques that guarantee a 3-approximation for .

2. Preliminaries and Related Work

We begin with technical preliminaries on graph clustering, correlation clustering, and strong triadic closure edge labeling problems.

2.1. The LambdaCC Framework

Given an undirected graph the high-level goal of a graph clustering algorithm is to partition the node set into disjoint clusters in such a way that many edges are contained inside clusters, and few edges cross between clusters. These two goals are often in competition with each other, and there have been many different approaches for defining and forming clusters, all of which implicitly strike a different type of tradeoff between these goals. The LambdaCC clustering objective (Veldt et al., 2018) provides one approach for implicitly controlling this tradeoff using a resolution parameter . Formally, given and parameter , LambdaCC seeks a clustering that minimizes the following objective

| (1) |

where is a binary cluster indicator for every node-pair, i.e., if and are clustered together and otherwise. The number of clusters to form is not specified. Rather, the optimal number of clusters is controlled implicitly by tuning . Observe that the two pieces of the LambdaCC objective directly correspond to the two goals of graph clustering: the term for is a penalty incurred if and are separated, and the term for places a penalty on putting and together if they do not share an edge. The relative importance of the two competing goals of graph clustering (form clusters that are internally dense and externally sparse) is then controlled by tuning . Smaller values of tend to produce a smaller number of (larger) clusters, and choosing large leads to a larger number of (smaller) clusters.

One of the benefits of the LambdaCC framework is that it generalizes and unifies a number of previously studied objectives for graph clustering, including the sparsest cut objective (Arora et al., 2009), cluster editing (Shamir et al., 2004; Bansal et al., 2004), and cluster deletion (Shamir et al., 2004). It also is equivalent to the popular modularity clustering objective (Newman and Girvan, 2004) under certain conditions. The definition of modularity depends on underlying null distributions for graphs. When the Erdős-Rényi null model is used, modularity is equivalent to Objective (1) for an appropriate choice of . When the Chung-Lu null model is used, modularity can be viewed as a special case of a degree-weighted version of LambdaCC (Veldt et al., 2018), though we focus on Objective (1) in this paper. Finally, for an appropriate choice of , the LambdaCC objective is equivalent to graph clustering based on maximum likelihood inference for the popular stochastic block model (Abbe, 2018), which can be seen from LambdaCC’s relationship to modularity (Newman, 2016).

2.2. Correlation Clustering

The CC in LambdaCC stands for correlation clustering (Bansal et al., 2004), a framework for clustering based on pairwise similarity and dissimilarity scores. In the most general setting, an instance of weighted correlation clustering is given by a set of vertices , along with two non-negative weights for each pair of distinct vertices . If nodes and are placed in the same cluster, this incurs a disagreement penalty of whereas if they are separated, a disagreement penalty of is imposed. In correlation clustering, disagreements are also called mistakes. This terminology is especially natural when only one of the weights is positive and the other is zero (which is true for the most widely-studied special cases). In this case, each node pair is either “similar” () and wants to be clustered together, or “dissimilar” () and wants to be clustered apart. A mistake or disagreement happens precisely when nodes are clustered in a way that does not match this “preference.” Formally, the disagreements minimization objective for correlation clustering can be represented as the following integer linear program (ILP):

| (2) | min | |||

| subject to | ||||

where is a binary distance variable between nodes , i.e., means nodes and are clustered together, and means they are separated. The most well-studied special case is when . This is known as complete unweighted correlation clustering, as it is often viewed as a clustering objective on a complete signed graph where each pair of nodes either defines a positive edge or a negative edge. This is equivalent to the cluster editing problem (Shamir et al., 2004), which seeks to add or delete the minimum number of edges in an unsigned graph to partition it into a disjoint set of cliques. This is in turn related to cluster deletion, where one can only delete edges in in order to partition it into cliques. Cluster deletion is the same as solving Objective (2) when for every pair of nodes .

Approximation algorithms. Correlation clustering is NP-hard even for special cases of cluster editing and cluster deletion, but many approximation algorithms have been designed (Ailon et al., 2008; Charikar et al., 2005; Chawla et al., 2015). Most of these algorithms rely on solving and rounding a linear programming (LP) relaxation of ILP (2), obtained by replacing with the constraint . For the general weighted case, the best approximation guarantee is , which matches the integrality gap of the linear program (Charikar et al., 2005; Demaine et al., 2006). However, constant factor approximations are possible for certain weighted cases (Ailon et al., 2008; Veldt et al., 2020; Ailon et al., 2012). Ailon et al. (Ailon et al., 2008) designed a fast randomized combinatorial algorithm called Pivot for the complete unweighted case. This algorithm repeatedly selects a uniform random pivot node in each iteration and clusters it together with its unclustered neighboring nodes that share a positive edge. This algorithm comes with an expected 3-approximation guarantee. However, for general weighted correlation clustering, it can produce poor results.

Deterministic pivot. A derandomized version of the standard Pivot algorithm was developed by van Zuylen and Williamson (van Zuylen and Williamson, 2009), which can be applied to a broader class of weighted correlation clustering problems. Instead of randomly choosing pivot nodes, this technique relies on solving the LP relaxation of correlation clustering, constructing a derived graph based on the solution to this LP, and then running a pivoting procedure in that deterministically selects pivot nodes based on the LP output. They showed that this can produce a deterministic 3-approximation algorithm for the complete unweighted case, and can also be applied to other weighted cases including the case of probability constraints (where for every pair ). In proving these results, van Zuylen and Williamson presented a useful theorem (Theorem 3.1 in (van Zuylen and Williamson, 2009)) that can be used as a general strategy for developing approximation algorithms for other special weighted variants. We state a version of this theorem below, as it will be a useful step for some of our results. The original theorem includes details for choosing pivot nodes deterministically. The approximation holds in expectation when choosing pivot nodes uniformly at random.

Theorem 2.1.

Consider an instance of weighted correlation clustering given by a node-set and weights . Let represent a budget for node pair where , and be a graph which for satisfies the following conditions:

-

(1)

for all edges , and

for all edges , -

(2)

for every triplet in where .

Applying Pivot to will return a clustering solution with an expected weight of disagreements bounded above by .

Approximations for LambdaCC. The LambdaCC objective on a graph corresponds to a special case of Objective (2) where if and if . Veldt et al. (Veldt et al., 2018) previously showed a 3-approximation algorithm for the case where , based on rounding the standard correlation clustering LP relaxation. However, because this LP has constraints for an -node graph, in practice it is challenging to solve it for graphs with even a thousand nodes. Later, Gleich et al. (Gleich et al., 2018) proved that the LP integrality gap can be for small values of . They also developed approximation guarantees for smaller values , but these get increasingly worse as . Faster heuristics algorithms for LambdaCC have also been developed (Veldt et al., 2018; Shi et al., 2021), but these come with no approximation guarantees. Thus, a limitation of this previous work is that existing LambdaCC algorithms either depend on an extremely expensive linear programming relaxation or come with no guarantees. The focus of our paper is to bridge this gap.

2.3. Strong Triadic Closure and Edge Labeling

In social network analysis, the principle of strong triadic closure (Easley and Kleinberg, 2010; Granovetter, 1973) posits that two individuals in a social network will share at least a weak connection if they both share strong connections to a common friend. This has been used to define certain types of edge labeling problems where the goal is to label edges in a graph in such a way that this principle holds (Sintos and Tsaparas, 2014; Oettershagen et al., 2023; Grüttemeier and Komusiewicz, 2020; Grüttemeier and Morawietz, 2020; Adriaens et al., 2020).

Given a graph (which could represent an observed set of social interactions), a triplet of vertices is an open wedge centered at if the vertex pairs and are edges (i.e., in ) while is not. The strong triadic closure principle suggests that if such an open wedge exists, then either or is a weak edge, or else is a missing connection that should appear as an edge in but was not observed when was constructed. With this principle in mind, Sintos and Tsaparas (Sintos and Tsaparas, 2014) defined the strong triadic closure labeling problem (minSTC), where the goal is to label edges as weak and strong so that every open wedge contains as least one weak edge, and in such a way that the number of edges labeled weak is as small as possible. They showed that the problem is NP-hard but has a 2-approximation algorithm based on reduction to the Vertex Cover problem. They also considered a variation of the problem that allows for edge additions (minSTC+).

In our paper, we use to denote the set of open wedges centered at in , and let . We use the term STC-labeling to indicate labeling of node pairs that satisfies the strong triadic closure in the following sense: for every open wedge centered at , at least one of the edges is labeled weak, or the non-edge is labeled as a missing edge. Such labeling is encoded by a collection of weak edges denoted as , as well as a set of missing edges denoted as . The minSTC+ problem seeks an STC-labeling that minimizes . This can be formally cast as the following ILP:

| (3) |

If , this represents the presence of either a weak edge (if ) or a missing edge (if ). This problem is also NP-hard but can be reduced to Vertex Cover in a 3-uniform hypergraph in order to obtain a 3-approximation algorithm.

While the strong triadic closure principle and the resulting edge labeling problems are of their own independent interest, we are particularly interested in these problems given their relationships with certain clustering objectives. The solution for minSTC is known to lower bound the cluster deletion objective, and minSTC+ similarly lower bounds cluster editing (Grüttemeier and Komusiewicz, 2020; Grüttemeier and Morawietz, 2020; Konstantinidis et al., 2018; Veldt, 2022). The LP relaxations for these problems, therefore, provide lower bounds for cluster deletion and clustering editing that are cheaper and easier to compute than the standard linear programming relaxations. Veldt (Veldt, 2022) recently showed how to round these LP relaxations—and how to round approximation solutions for minSTC+ and minSTC—to develop faster approximation algorithms for cluster editing and cluster deletion. We generalize these techniques in order to develop faster approximation algorithms for the full parameter regime of LambdaCC.

3. Lambda STC Labeling

We now introduce a parameterized edge labeling problem called LambdaSTC, which generalizes previous edge labeling problems and can also be used to develop new approximations for LambdaCC.

Problem definition. Given graph and a parameter , LambdaSTC is the problem of finding an STC-labeling that minimizes . This can be formulated as:

| (4) | min | |||

| s.t | ||||

We first note that this problem is equivalent to the minSTC+ problem when . When is close enough to 1, LambdaSTC is equivalent to minSTC. To see why, note that if , then labeling a single non-edge as “missing” is more expensive than labeling all edges in as “weak”. Hence, with a couple of steps of algebra, we can see that when , the optimal LambdaSTC solution will not place any non-edges in , but will only add edges to in order to construct a valid STC-labeling, so this differs from minSTC only by a multiplicative constant factor.

Varying between and offers us the flexibility to interpolate between minSTC+ and minSTC. Meanwhile, the regime corresponds to a new family of edge labeling problems where it is cheaper to label non-edges as missing. If we think of the graph as a (potentially noisy) representation of some social network observed from the real world, then the parameter can be chosen based on a user’s belief about the accuracy of the process that was used to observe edges. If the user has a strong belief that there are many friendships in the social network that were just not directly observed (and hence are not included as edges in the graph) then a smaller value of may be appropriate. If missing edges are unlikely, then a large value of is appropriate.

Approximating LambdaSTC. Approximation algorithms for minSTC and minSTC+ can be obtained by reducing these problems to unweighted Vertex Cover problems (in graphs and hypergraphs, respectively) (Sintos and Tsaparas, 2014; Grüttemeier and Morawietz, 2020). We generalize this approach and design an approximation algorithm that applies to LambdaSTC for any choice of the parameter , based on the Local-Ratio algorithm for weighted Vertex Cover (Bar-Yehuda and Even, 1985).

Algorithm 1 is pseudocode for our method, CoverLabel. This method “covers” all the open wedges in graph by either adding a missing edge between a pair of non-adjacent nodes or labeling at least one of the two edges as weak. This can be seen as finding a weighted vertex cover on a 3-uniform hypergraph constructed as follows:

-

•

Every node pair is assigned to a vertex in with a node-weight of if and otherwise.

-

•

A hyperedge is created for each open wedge .

Nodes in correspond to edges in , and hyperedges in correspond to open wedges in . Therefore, a vertex cover in corresponds to a labeling of edges in that “covers” all open wedges in a way that produces an STC-labeling. If the covered vertex is associated with an edge , we consider a weak edge. However, if it corresponds to a non-edge pair , this is labeled as a missing edge. This provides an approximation-preserving reduction from LambdaSTC to 3-uniform hypergraph weighted Vertex Cover, so employing a 3-approximation algorithm for hypergraph vertex cover results in a 3-approximation for LambdaSTC. CoverLabel is equivalent to implicitly applying the Local-Ratio algorithm (Bar-Yehuda and Even, 1985) to the hypergraph described above. By implicitly, we mean that we do not form explicitly, but we apply the mechanics of this algorithm directly to find an STC-labeling in . The following theorem follows from the guarantee of the Local-Ratio algorithm for node-weighted 3-uniform hypergraphs.

Theorem 3.1.

Algorithm 1 is a 3-approximation algorithm for the LambdaSTC labeling problem.

New Lower bounds for LambdaCC. The LambdaSTC objective lower bounds LambdaCC, and a solution to the LambdaSTC LP yields a new type of lower bound for LambdaCC. To see why, consider the following change of variables: if , and otherwise. This gives us the following equivalent formulation for the LP relaxation of LambdaSTC:

| (5) | min | |||

| s.t. | ||||

This linear program shares the same objective function as the canonical LambdaCC LP relaxation, but has a subset of the triangle inequality constraints. In particular, it only constraints when is an open wedge, rather than for all triplets of edges. This makes this LP relaxation easier to solve on a large scale. Furthermore, this is an example of a covering LP, which can be solved much more quickly than a generic LP using the multiplicative weights update method (Fleischer, 2004; Quanrud, 2020; Garg and Khandekar, 2004).

4. Faster LambdaCC Algorithms

We now present faster algorithms for LambdaCC, using lower bounds derived from the LambdaSTC objective. For a fixed value, we use the notation and to represent the optimal solution values for LambdaSTC and LambdaCC, respectively.

4.1. CoverFlipPivot algorithm

We present the first combinatorial algorithm for LambdaCC, called CoverFlipPivot (CFP), which provides a 6 approximation for every . As outlined in Algorithm 2, CFP comprises three steps:

-

(1)

Cover: Generate a feasible LambdaSTC labeling of by running the 3-approximate CoverLabel algorithm.

-

(2)

Flip: Flip the edges in the original graph to create a derived graph , by deleting ‘weak’ edges and adding ‘missing’ edges .

-

(3)

Pivot: Run Pivot on the derived graph .

Before proving any approximation guarantees for CFP, we begin with a more general result that sheds light on the relationship between LambdaSTC and LambdaCC. This generalizes previous results showing that the optimal cluster deletion and minSTC objectives (and similarly, the cluster editing and minSTC+ objectives) differ by at most a factor of 2 (Veldt, 2022).

Theorem 4.1.

Given an input graph , a clustering parameter , and an STC-labeling , running Pivot on the derived graph where , returns a LambdaCC clustering solution with a bound of on the expected cost of disagreements.

Proof.

To prove this result, we show that all conditions of Theorem 2.1 are satisfied for . Recall that for the LambdaCC framework, weights are defined as:

| (6) |

To bound the LambdaCC objective in terms of the STC-labeling, we define budgets based on flipped edges:

| (7) |

The sum of budgets can now be written as . Now, we show that Condition (1) of Theorem 2.1 is satisfied for , by considering four cases:

Next, we check Condition (2) of Theorem 2.1, i.e., we prove that for every open wedge centered at in . The budgets and weights depend on which pairs , , are edges in . Table 1 covers all 8 cases for how a triplet of nodes in could be mapped to an open wedge centered at in . The first column indicates which of the pairs are edges in , e.g., Y-Y-N (“yes-yes-no”) means that and are in , but is not. In each case, we show why is a lower bound for when . By Theorem 2.1 the total cost of mistakes is then bounded by .

| Edges in | ||

|---|---|---|

| Y-Y-Y | ||

| Y-Y-N | Not Applicable | N.A |

| Y-N-N | ||

| Y-N-Y | ||

| N-N-Y | ||

| N-Y-Y | ||

| N-Y-N | ||

| N-N-N |

The second row of Table 1 corresponds to the case where an open wedge in maps to an open wedge in . However, this is in fact impossible, as it implies that none of the node pairs in were flipped, even though is an open wedge in , which violates the assumption that is an STC-labeling. ∎

Corollary 4.2.

Proof.

The optimal LambdaCC solution provides an upper bound for the optimal LambdaSTC solution, i.e., . Algorithm produces a -approximate labeling solution with the LambdaSTC objective value , so we have that

Therefore, lower bounds . Applying Theorem 2 with algorithm , we obtain a clustering with LambdaCC objective score of . Thus,

so is a -approximation for LambdaCC. If optimally solves LambdaSTC, then and so . If represents our 3-approximate CoverLabel algorithm for LambdaSTC, then combining it with Theorem 4.1 shows that Algorithm 2 is a -approximation for LambdaCC. ∎

4.2. Faster LP algorithm

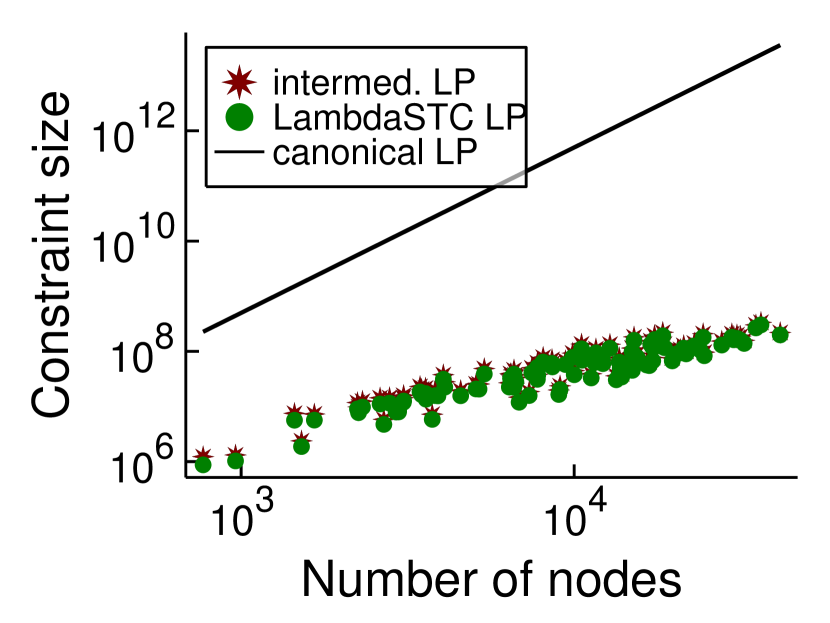

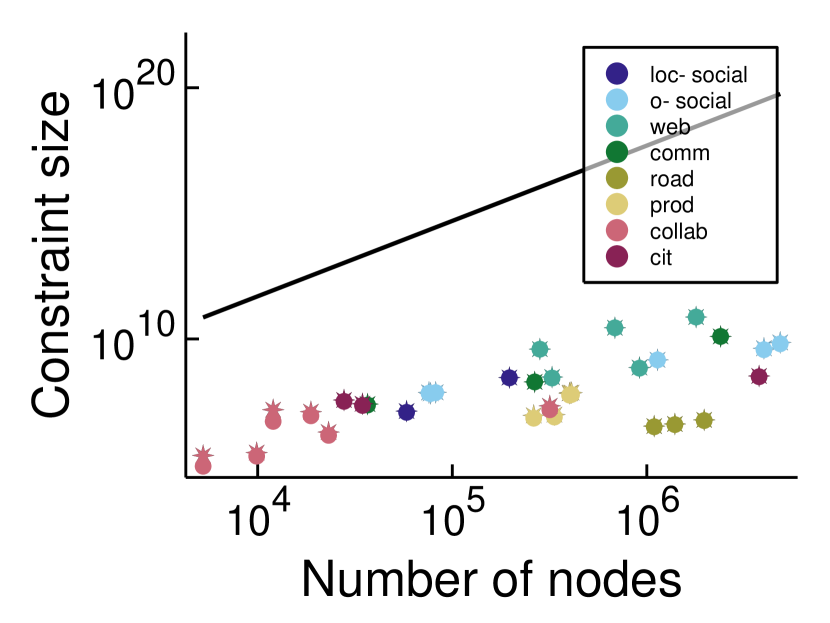

Algorithm 3 is an approximation algorithm for LambdaCC based on rounding the LambdaSTC LP relaxation. This LP has constraints, whereas the canonical LP has . In the worst case, it is possible for , but our experimental results demonstrate that is far smaller for all of the real-world graphs we consider. Even more significantly, the LambdaSTC LP is a covering LP, which makes it possible to use fast existing techniques for approximating covering LPs. This leads to much faster algorithms, at the expense of only a slightly worse approximation factor since the LP is only solved approximately. The next section provides a more detailed runtime analysis.

Our approach for rounding the LambdaSTC LP follows a similar strategy as CFP, which involves building a new graph and then running Pivot. The construction of depends on the LP variables , the edge structure in , and the value of . When , we always ensure that a non-edge in maps to a non-edge in . For , we always ensure that an edge in maps to an edge in . We first prove that the algorithm has an approximation factor that ranges from to as goes from to .

Theorem 4.3.

When , Algorithm 3 is a randomized -approximation algorithm for LambdaCC.

Proof.

To prove Theorem 4.4, we show the conditions in Theorem 2.1 are satisfied for . In our analysis, we define budgets for each distinct pair of nodes based on the LP objective (5). Specifically, we set if , and if . We begin by checking Condition (1) in Theorem 2.1 for each distinct pair of nodes , i.e.,

| (8) | |||

| (9) |

If , then so Condition (8) holds. Similarly, when and , then , trivially satisfying Condition (9). By the construction of , it is impossible for if . So the last case to consider is when and , in which case , so

Next, for every triplet such that , and , we need to check that

| (10) |

By our construction of , if and , then and are both edges in as well. Since , may or may not be an edge in , we prove (10) by considering two cases.

Case 1: . Here we have and . Using the open wedge inequality and the fact that both , we know . Therefore,

Case 2: . In this case, and . Then,

∎

Gleich et al. (Gleich et al., 2018) showed that for small enough , the canonical LambdaCC LP relaxation has an integrality gap, but showed how to round that LP to obtain a -approximation, which is better than for all . These previous results rule out the possibility of obtaining an approximation better than for arbitrarily small by rounding the (looser) LambdaSTC LP relaxation. However, we can show that Algorithm 3 will still provide a approximation, which is very close to the approximation factor obtained by Gleich et al. (Gleich et al., 2018) for rounding a much more expensive LP.

Theorem 4.4.

When , Algorithm 3 is a randomized -approximation algorithm for LambdaCC.

Proof.

We prove the result by showing that the conditions of Theorem 2.1 are satisfied with . Condition (1) of this theorem is easy to satisfy for a node pair if , since . It is similarly easy to satisfy if and since then . The construction of ensures it is impossible for if . If but , then we know and and . Thus,

which proves Condition (1) of Theorem 2.1.

Next, we prove Condition (2) for every triplet that defines an open wedge centered at in , i.e., , , and . We know that and , or else by the construction of we would have . Node pairs and may or may not be edges in , so we separately consider 4 cases in proving .

Case 1: When and , we have . The triplet is also an open wedge in , so we have

Case 2: When and , we have and we know . Then,

Case 3: When and , this is symmetric to Case 2.

Case 4: When and , we have , and both and are strictly less than , so

∎

4.3. A 3-approximation via an intermediate LP

Veldt et al. (Veldt et al., 2018, 2017) originally presented a 3-approximation algorithm for based on the canonical LP relaxation. This algorithm however comes with an size constraint matrix since all triangle inequality constraints are considered for all triplet of nodes . In contrast, in the previous section, we proposed a faster 6-approximation algorithm based on the LambdaSTC LP relaxation that includes a triangle inequality constraint only when an open wedge (centered at ) in . In this section, we show how to obtain a 3-approximation by rounding an LP relaxation whose constraint set lies somewhere between the LambdaSTC and canonical LambdaCC LP relaxation. In more detail, we include a triangle inequality constraint for every wedge in as well as for every triangle in . This is a superset of the constraint set for the LambdaSTC LP relaxation but does not include a triangle inequality constraint for every . Formally, this LP relaxation is given by

| (11) | min | |||

| s.t. | ||||

where represents a triangle that includes node as one of its vertices. Algorithm 4 uses the same rounding strategy that was used for the canonical LP relaxation (Veldt et al., 2018), except that it is applied to the LP in equation (11) rather than the canonical LP.

The LP relaxation presented here has a constraint size determined by the number of wedges and triangles, denoted as , in the graph. While both and can potentially be in the worst case, this is not typically the scenario in practical situations. In real-world networks, the number of wedges and triangles is significantly smaller. Figure 1 illustrates this observation by comparing the number of constraints in the intermediate LP (11) against the canonical LP. Thus, solving and rounding this LP is more efficient compared to existing techniques, and we now prove that this can be done without a loss in the approximation factor.

Theorem 4.5.

Algorithm 4 is a randomized 3-approximation algorithm for LambdaCC when .

Proof.

We can prove that Algorithm 4 satisfies Theorem 2.1 by making slight modifications to the proof presented in Theorem 6 in the work of Veldt et al. (Veldt et al., 2017). Condition (1) of Theorem 2.1 can be satisfied following the proof as is in (Veldt et al., 2017). To prove Condition (2), we demonstrate that

| (12) |

for every triplet of nodes that is mapped to an open wedge centered at in . This means that , . Note that we are only able to apply the triangle inequality if is also an open wedge or a triangle in the original graph . Given an arbitrary triplet that maps to an open wedge in , there are 8 possibilities for the edge structure in , depending on which pairs of nodes in share an edge in . Following Veldt et al. (Veldt et al., 2017), we can prove inequality (12) for each of the 8 cases separately. Note that we do not need to update the analysis for cases where is an open wedge or a triangle in , since our new LP in (11) includes triangle inequality constraints for these cases. This means that the following cases from the analysis of Veldt et al. (Veldt et al., 2017) remain unchanged:

-

•

Case 1: forms a wedge centered at in .

-

•

Case 5 and Case 6: forms a wedge centered at either or .

-

•

Case 8: forms a triangle.

For Case 7, where , , and , the proof is trivial since . We update the proof for the remaining cases as follows:

Case 2: When , we have and . Thus,

Case 3: When is symmetric to Case 2 and the same result holds.

Case 4: When , we have and . Then,

∎

Therefore, considering all the cases, we can conclude that Theorem 2.1 holds for , satisfying the 3-approximation guarantee.

4.4. Runtime Analysis

For a graph , let and . When written in the form , the canonical LP relaxation for LambdaCC has constraints and variables. Even using recent theoretical algorithms for solving linear programs in matrix multiplication time (Cohen et al., 2021; Jiang et al., 2021), the runtime is where is the matrix multiplication exponent, so the runtime for solving and rounding the canonical relaxation is . Not only does this have a prohibitively expensive runtime, but in practice even forming such a large constraint matrix can lead to memory issues that make it infeasible to solve this on a very large scale. Thus, although this approach provides the best theoretical approximation factor, it is not scalable.

Our new approximation algorithms come with good approximation guarantees and are much faster than solving the canonical relaxation, both in theory and practice. Finding the open wedges of can be done in time by visiting each node, and then visiting each pair of neighbors of that node in turn. This runtime is upper bounded by . When applying the randomized Pivot algorithm, this is in fact the most expensive part of CFP, so the overall runtime for CFP is . If we use the deterministic pivoting strategy of van Zuylen and Williamson (van Zuylen and Williamson, 2009), this can be implemented in time so that is the runtime for a derandomized version of CFP.

The LambdaSTC LP is a covering LP, so for we can find a -approximate solution in time using the multiplicative weights update method (Quanrud, 2020; Garg and Khandekar, 2004; Fleischer, 2004), where suppresses logarithmic factors. This assumes we already know ; if we factor in the time it takes to find all open wedges the runtime comes to . Minor alteration to our analysis quickly shows that a -approximate solution to the LP translates to approximation factors that are a factor larger. Once again, applying the deterministic pivot selection adds to the runtime, which is still far better than .

5. Experiments

This section presents a performance of our algorithms. We conduct experiments on publicly available datasets from various domains, including the SNAP (Leskovec and Sosič, 2016) and Facebook100 (Traud et al., 2012) datasets, which are available at the Suitsparse matrix collection (Davis and Hu, 2011). To implement the algorithms, we use the Julia programming language, and we run all experiments on a Dell XPS machine with 16 GB RAM and an Intel Core i7 processor. Both the canonical and the LambdaSTC LP relaxations are solved using Gurobi optimization software (Gurobi Optimization, 2021). We focus here on finding exact solutions for the LambdaSTC LP relaxation using existing optimization software. This is already far more scalable than trying to form the constraint matrix for the canonical LP relaxation and solve it using Gurobi. Finding faster approximate solutions for the LP using the multiplicative weights update method is a promising direction for future research, but is beyond the scope of the current paper. Code for our implementations and experiments is available at https://github.com/Vedangi/FastLamCC.

5.1. Approximation algorithms for LambdaCC

A natural question to ask is how well our approximation algorithms compare against previous algorithms for LambdaCC based on the canonical LP relaxation. It is worth noting first of all that even forming the full constraint matrix for the canonical LP (which has triangle inequality constraints) becomes infeasible for even modest-sized graphs due to memory constraints. Meanwhile, the LambdaSTC LP relaxation has one triangle inequality constraint for each open wedge. Although there exist graphs such that , this is not the case in practical situations. Figure 1 plots the size of for all of the Facebook100 networks, as well as for a range of graphs of different classes from SNAP (e.g., social networks, citation networks, web networks, etc.). In all cases is orders of magnitude smaller than , illustrating that solving and rounding this LP is far more practical than using existing LP-based techniques. We also plot the number of constraints in the intermediate LP relaxation from Section 4.3, showing that it has only a slight increase in constraint size over the LambdaSTC LP.

An additional reason to use the LambdaSTC relaxation is that in practice, solving the LambdaSTC relaxation often also solves the canonical LP relaxation. This can be checked by seeing whether the optimal LP variables for the LambdaSTC relaxation are also feasible for the canonical LP.111This can also be viewed as the first step in a more memory efficient approach for solving correlation clustering LP relaxations that has been applied elsewhere (Veldt et al., 2019b; Veldt, 2022): solve the LP over a subset of the constraints and iteratively add more constraints until the variables satisfy all triangle inequalities. Our results indicate that for these graphs and values, enforcing triangle inequality constraints just at open wedge is sufficient. Table 2 shows results for solving and rounding the LambdaSTC LP (Algorithm 3) on three graphs for a range of different values. The graphs are Simmons81 (a social network), ca-GrQc (a collaboration network), and Polblogs (a Political blogs network). We attempted to form the full canonical LP relaxation for these graphs and solve it but quickly ran out of memory. We were able to form and solve the LambdaSTC LP relaxation, and in almost all cases the optimal solution variables for this LP were certified as being feasible (and hence optimal) for the canonical LP. Thus, our LP-based algorithm far exceeded its theoretical guarantees. In practice, it produced solutions that are within a factor of 2 or less from the LP lower bound. When rounding, we applied both our new approach (Algorithm 3) as well as the existing rounding strategy for the canonical LP relaxation, since the rounding step is very fast. We used the previous rounding strategy for the canonical LP whenever we could certify we had solved the canonical LP (since this has an improved a priori guarantee). In practice though, the results for the two different rounding strategies were nearly indistinguishable.

Table 2 also displays results for CFP, showing that it is even orders of magnitude faster than solving and rounding the LambdaSTC LP relaxation while producing comparable approximation ratios (ratio between clustering solution and the computed lower bound). While solving our LP relaxation takes up to hundreds of seconds on the three graphs, CFP takes mere fractions of a second.

| Lower Bound | Clustering score | Ratio | Runtime (seconds) | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Graph | CFP | LambdaSTC | CFP | LambdaSTC | CFP | LambdaSTC | CFP | LamdaSTC | |

| 0.4 | 2668 | 2889.7 | 6043 | 4611 | 2.3 | 1.6 | 0.34 | 38.3 | |

| ca-GrQc | 0.55 | 2064 | 2236.5∗ | 4092 | 3708 | 2.0 | 1.7 | 0.061 | 29.7 |

| n = 5242 | 0.75 | 1179 | 1278.2 | 2373 | 2118 | 2.0 | 1.7 | 0.058 | 27.7 |

| m = 14484 | 0.95 | 239 | 259.3 | 469 | 430 | 2.0 | 1.7 | 0.055 | 25.4 |

| 0.4 | 9823 | 9893.8∗ | 21569 | 20674 | 2.2 | 2.1 | 0.48 | 3064.3 | |

| Simmons81 | 0.55 | 7392 | 7420.5∗ | 15797 | 14839 | 2.1 | 2.0 | 0.25 | 2935.4 |

| n = 1518 | 0.75 | 4113 | 4122.5∗ | 8657 | 8244 | 2.1 | 2.0 | 0.12 | 619.7 |

| m = 32988 | 0.95 | 822 | 824.5∗ | 1646 | 1649 | 2.0 | 2.0 | 0.098 | 464.8 |

| 0.4 | 4960 | 5013.1∗ | 10591 | 9997 | 2.1 | 2.0 | 0.49 | 244.4 | |

| Polblogs | 0.55 | 3745 | 3760.2∗ | 7883 | 7517 | 2.1 | 2.0 | 0.21 | 217.4 |

| n = 1222 | 0.75 | 2084 | 2089.0∗ | 4377 | 4177 | 2.1 | 2.0 | 0.071 | 187.9 |

| m = 16714 | 0.95 | 417 | 417.8∗ | 837 | 835 | 2.0 | 2.0 | 0.052 | 114.2 |

5.2. Combining CFP with Fast Heuristics

The Louvain method is a widely used heuristic clustering technique that greedily moves nodes in order to optimize a clustering objective (Blondel et al., 2008). The original Louvain method was designed for maximum modularity clustering, but many variations of the method have been designed. This includes a fast heuristic called LambdaLouvain (Veldt et al., 2018), that greedily optimizes the LambdaCC objective for a given parameter , as well as a parallel version of this method (Shi et al., 2021). Although these methods are fast and perform well in practice, they do not compute lower bounds for the LambdaCC objective nor provide any approximation guarantees. One benefit of our algorithms is that they come with lower bounds that can be used not only to design faster approximation algorithms for LambdaCC, but also to obtain a posteriori guarantees for other heuristic methods.

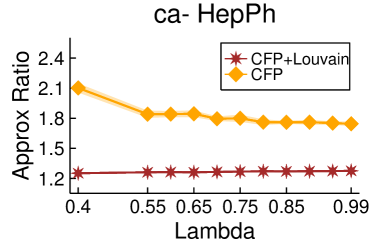

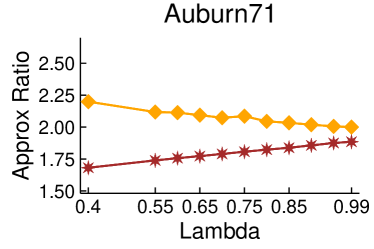

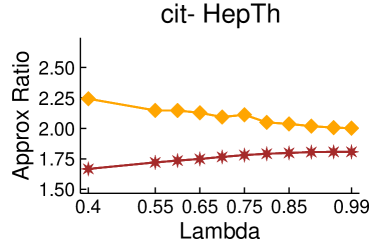

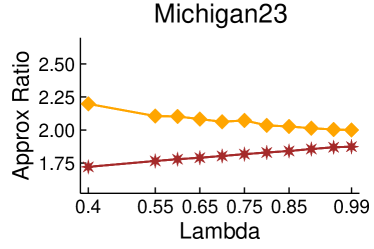

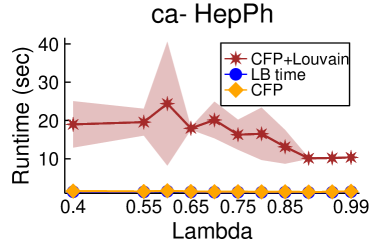

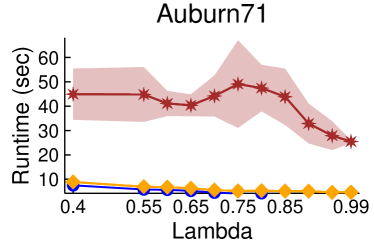

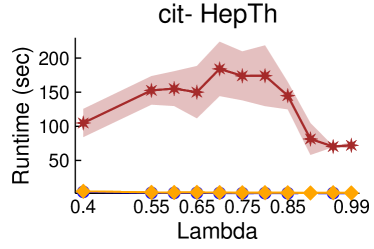

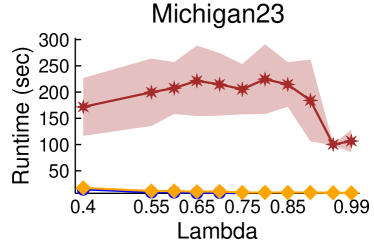

Figures 2 and 3 showcase the combined results of LambdaLouvain with CFP lower bounds on graphs even larger than those considered in Table 2. These results demonstrate superior a posteriori approximation ratios (clustering objective divided by CFP lower bound) compared to running CFP by itself. Notably, as , the difference in approximation factors between CFP and LambdaLouvain decreases, converging toward a similar outcome. We execute both the CFP rounding procedures and LambdaLouvain method for 15 iterations, reporting the mean and standard deviation for approximation ratios and runtimes. While the CFP+LambdaLouvain yields better approximations, it comes with longer runtimes compared to the CFP alone. Even for small values of , we can certify that LambdaLouvain can produce a respectable factor of around 2 by using the lower bounds generated by CFP.

5.3. Scalability of CoverFlipPivot

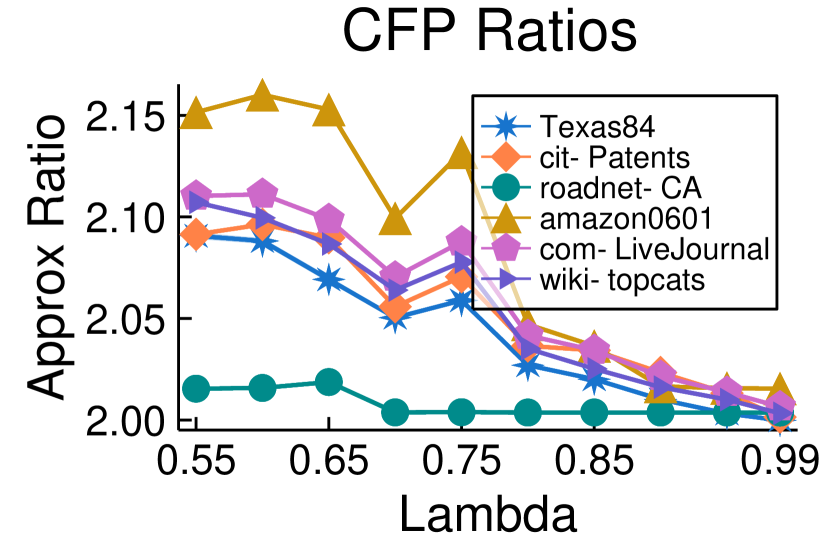

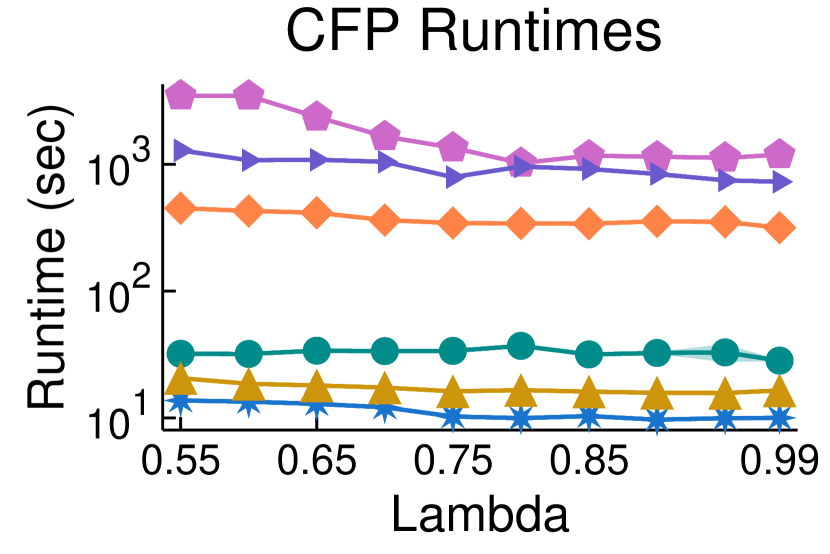

We further test the limits of CFP by running it on much larger graphs. Figure 4 shows approximation results on a social network with 1.59 million edges (Texas84), a road network with 2.76 million edges (roadNet-CA), a citation network with 1.65 billion edges (cit-Patents), an Amazon product co-purchasing network with 2.4 million edges (amazon0601), Wikipedia web network with 2.54 billion edges (wiki-topcats) and a blogging community network with 3.46 billion edges (com-Journal). CFP consistently outperforms its theoretical 6-approximation guarantee. For , it produces approximations of 2.1 or better. When , approximation factors increase to between 2.4-2.8, which still outperforms the 6-approximation guarantee. (We omit these results from the plot in Figure 4 in order to zoom in and better display factors near 2 for .) For each value of , the method takes around 58 minutes for the larger com-LiveJournal graph (with 3.46B edges). In contrast, the cit-Patents graph, consisting of 1.65 billion edges, is processed in less than 11 minutes, showcasing a notably faster runtime. Furthermore, the method exhibits even quicker processing times for the other graphs. An intriguing observation in this context is that as the objective transitions from cluster editing to cluster deletion (), both the approximation factor and the runtime exhibit improvements.

6. Conclusion

We present new approximation algorithms for LambdaCC graph clustering framework that are far more scalable than existing approximation algorithms relying on LP relaxations with constraints. We introduce the first combinatorial algorithm for LambdaCC in the parameter regime —where the problem interpolates between cluster editing and cluster deletion—which comes with a 6-approximation guarantee. We then provide algorithms for all parameter regimes based on rounding a less expensive LP relaxation. A major theoretical benefit of these alternative LPs is that they are covering LPs. This means that the multiplicative weights update method provides fast combinatorial methods for finding approximation solutions. Although in our work we focused on using existing optimization software to exactly solve these relaxations, a clear direction for future research is to implement these faster approximate solvers in order to achieve additional runtime improvements. Another direction for future work is to see whether it is possible to develop a 3-approximation for all by rounding the LambdaSTC LP. Though our theoretical approximation factors get increasingly worse for , in practice we see no deterioration in approximations. Finally, a compelling open question is whether we can develop an approximation algorithm for LambdaCC that applies for all values of and can be made purely combinatorial, and does not rely on the canonical LP.

References

- (1)

- Abbe (2018) Emmanuel Abbe. 2018. Community Detection and Stochastic Block Models: Recent Developments. Journal of Machine Learning Research 18, 177 (2018), 1–86.

- Adriaens et al. (2020) Florian Adriaens, Tijl De Bie, Aristides Gionis, Jefrey Lijffijt, Antonis Matakos, and Polina Rozenshtein. 2020. Relaxing the strong triadic closure problem for edge strength inference. Data Mining and Knowledge Discovery 34 (2020), 611–651.

- Ailon et al. (2012) Nir. Ailon, Noa. Avigdor-Elgrabli, Edo. Liberty, and Anke. van Zuylen. 2012. Improved Approximation Algorithms for Bipartite Correlation Clustering. SIAM J. Comput. 41, 5 (2012), 1110–1121.

- Ailon et al. (2008) Nir Ailon, Moses Charikar, and Alantha Newman. 2008. Aggregating inconsistent information: ranking and clustering. Journal of the ACM (JACM) 55, 5 (2008), 23.

- Arora et al. (2009) Sanjeev Arora, Satish Rao, and Umesh Vazirani. 2009. Expander flows, geometric embeddings and graph partitioning. Journal of the ACM (JACM) 56, 2 (2009), 1–37.

- Bansal et al. (2004) Nikhil Bansal, Avrim Blum, and Shuchi Chawla. 2004. Correlation Clustering. Machine Learning 56 (2004), 89–113.

- Bar-Yehuda and Even (1985) Reuven Bar-Yehuda and Shimon Even. 1985. A local-ratio theorem for approximating the weighted vertex cover problem. Annals of Discrete Mathematics 25, 27-46 (1985), 50.

- Ben-Dor et al. (1999) Amir Ben-Dor, Ron Shamir, and Zohar Yakhini. 1999. Clustering gene expression patterns. Journal of computational biology 6, 3-4 (1999), 281–297.

- Blondel et al. (2008) Vincent D Blondel, Jean-Loup Guillaume, Renaud Lambiotte, and Etienne Lefebvre. 2008. Fast unfolding of communities in large networks. Journal of statistical mechanics: theory and experiment 2008, 10 (2008), P10008.

- Bohlin et al. (2014) Ludvig Bohlin, Daniel Edler, Andrea Lancichinetti, and Martin Rosvall. 2014. Community detection and visualization of networks with the map equation framework. In Measuring Scholarly Impact. Springer, 3–34.

- Charikar et al. (2003) Moses Charikar, Venkatesan Guruswami, and Anthony Wirth. 2003. Clustering with qualitative information. In Foundations of Computer Science, 2003. Proceedings. 44th Annual IEEE Symposium on. IEEE, 524–533.

- Charikar et al. (2005) Moses Charikar, Venkatesan Guruswami, and Anthony Wirth. 2005. Clustering with qualitative information. J. Comput. System Sci. 71, 3 (2005), 360 – 383. https://doi.org/10.1016/j.jcss.2004.10.012 Learning Theory 2003.

- Chawla et al. (2015) Shuchi Chawla, Konstantin Makarychev, Tselil Schramm, and Grigory Yaroslavtsev. 2015. Near optimal LP rounding algorithm for correlation clustering on complete and complete k-partite graphs. In Proceedings of the Forty-Seventh Annual ACM on Symposium on Theory of Computing. ACM, 219–228.

- Cohen et al. (2021) Michael B Cohen, Yin Tat Lee, and Zhao Song. 2021. Solving linear programs in the current matrix multiplication time. Journal of the ACM (JACM) 68, 1 (2021), 1–39.

- Davis and Hu (2011) Timothy A. Davis and Yifan Hu. 2011. The University of Florida Sparse Matrix Collection. ACM Trans. Math. Softw. 38, 1, Article 1 (dec 2011), 25 pages. https://doi.org/10.1145/2049662.2049663

- Delvenne et al. (2010) J.-C. Delvenne, Sophia N Yaliraki, and Mauricio Barahona. 2010. Stability of graph communities across time scales. Proceedings of the National Academy of Sciences 107, 29 (2010), 12755–12760.

- Demaine et al. (2006) Erik D. Demaine, Dotan Emanuel, Amos Fiat, and Nicole Immorlica. 2006. Correlation clustering in general weighted graphs. Theoretical Computer Science 361, 2 (2006), 172 – 187. https://doi.org/10.1016/j.tcs.2006.05.008 Approximation and Online Algorithms.

- Easley and Kleinberg (2010) David Easley and Jon Kleinberg. 2010. Networks, crowds, and markets. Vol. 8. Cambridge university press Cambridge.

- Fleischer (2004) Lisa Fleischer. 2004. A fast approximation scheme for fractional covering problems with variable upper bounds. In Proceedings of the fifteenth annual ACM-SIAM symposium on Discrete algorithms. 1001–1010.

- Fortunato and Hric (2016) Santo Fortunato and Darko Hric. 2016. Community detection in networks: A user guide. Physics Reports 659 (2016), 1 – 44. https://doi.org/10.1016/j.physrep.2016.09.002 Community detection in networks: A user guide.

- Gan et al. (2020) Junhao Gan, David F. Gleich, Nate Veldt, Anthony Wirth, and Xin Zhang. 2020. Graph Clustering in All Parameter Regimes. In 45th International Symposium on Mathematical Foundations of Computer Science (MFCS ’20, Vol. 170). 39:1–39:15. https://doi.org/10.4230/LIPIcs.MFCS.2020.39

- Garg and Khandekar (2004) Naveen Garg and Rohit Khandekar. 2004. Fractional covering with upper bounds on the variables: Solving LPs with negative entries. In Algorithms–ESA 2004: 12th Annual European Symposium, Bergen, Norway, September 14-17, 2004. Proceedings 12. Springer, 371–382.

- Gleich et al. (2018) David F. Gleich, Nate Veldt, and Anthony Wirth. 2018. Correlation Clustering Generalized. In 29th International Symposium on Algorithms and Computation (ISAAC 2018, Vol. 123). Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik, Dagstuhl, Germany, 44:1–44:13. https://doi.org/10.4230/LIPIcs.ISAAC.2018.44

- Granovetter (1973) Mark S Granovetter. 1973. The strength of weak ties. American journal of sociology 78, 6 (1973), 1360–1380.

- Grüttemeier and Komusiewicz (2020) Niels Grüttemeier and Christian Komusiewicz. 2020. On the relation of strong triadic closure and cluster deletion. Algorithmica 82, 4 (2020), 853–880. https://doi.org/10.1007/s00453-019-00617-1

- Grüttemeier and Morawietz (2020) Niels Grüttemeier and Nils Morawietz. 2020. On Strong Triadic Closure with Edge Insertion. Technical report (2020).

- Gurobi Optimization (2021) LLC Gurobi Optimization. 2021. Gurobi optimizer reference manual.

- Jiang et al. (2021) Shunhua Jiang, Zhao Song, Omri Weinstein, and Hengjie Zhang. 2021. A Faster Algorithm for Solving General LPs. In Proceedings of the 53rd Annual ACM SIGACT Symposium on Theory of Computing (STOC ’21). Association for Computing Machinery, New York, NY, USA, 823–832. https://doi.org/10.1145/3406325.3451058

- Konstantinidis et al. (2018) Athanasios L Konstantinidis, Stavros D Nikolopoulos, and Charis Papadopoulos. 2018. Strong triadic closure in cographs and graphs of low maximum degree. Theoretical Computer Science 740 (2018), 76–84.

- Leighton and Rao (1999) Tom Leighton and Satish Rao. 1999. Multicommodity max-flow min-cut theorems and their use in designing approximation algorithms. Journal of the ACM (JACM) 46, 6 (November 1999), 787–832.

- Leskovec and Sosič (2016) Jure Leskovec and Rok Sosič. 2016. Snap: A general-purpose network analysis and graph-mining library. ACM Transactions on Intelligent Systems and Technology (TIST) 8, 1 (2016), 1–20.

- Newman (2006) Mark EJ Newman. 2006. Finding community structure in networks using the eigenvectors of matrices. Physical review E 74, 3 (2006), 036104.

- Newman (2016) Mark EJ Newman. 2016. Equivalence between modularity optimization and maximum likelihood methods for community detection. Physical Review E 94, 5 (2016), 052315.

- Newman and Girvan (2004) Mark EJ Newman and Michelle Girvan. 2004. Finding and evaluating community structure in networks. Physical review E 69, 2 (2004), 026113.

- Oettershagen et al. (2023) Lutz Oettershagen, Athanasios L Konstantinidis, and Giuseppe F Italiano. 2023. Inferring Tie Strength in Temporal Networks. In Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2022, Grenoble, France, September 19–23, 2022, Proceedings, Part II. Springer, 69–85.

- Porter et al. (2009) Mason A Porter, Jukka-Pekka Onnela, and Peter J Mucha. 2009. Communities in networks. Notices of the AMS 56, 9 (2009), 1082–1097.

- Quanrud (2020) Kent Quanrud. 2020. Nearly linear time approximations for mixed packing and covering problems without data structures or randomization. In Symposium on Simplicity in Algorithms. SIAM, 69–80.

- Reichardt and Bornholdt (2006) Jörg Reichardt and Stefan Bornholdt. 2006. Statistical mechanics of community detection. Physical Review E 74, 016110 (2006).

- Schaeffer (2007) Satu Elisa Schaeffer. 2007. Graph clustering. Computer Science Review 1, 1 (2007), 27 – 64. https://doi.org/10.1016/j.cosrev.2007.05.001

- Schaub et al. (2012) Michael T Schaub, Renaud Lambiotte, and Mauricio Barahona. 2012. Encoding dynamics for multiscale community detection: Markov time sweeping for the map equation. Physical Review E 86, 2 (2012), 026112.

- Shamir et al. (2004) Ron Shamir, Roded Sharan, and Dekel Tsur. 2004. Cluster graph modification problems. Discrete Applied Mathematics 144, 1-2 (2004), 173–182.

- Shi et al. (2021) Jessica Shi, Laxman Dhulipala, David Eisenstat, Jakub Łăcki, and Vahab Mirrokni. 2021. Scalable Community Detection via Parallel Correlation Clustering. Proc. VLDB Endow. 14, 11 (jul 2021), 2305–2313. https://doi.org/10.14778/3476249.3476282

- Shi and Malik (2000) Jianbo Shi and J. Malik. 2000. Normalized cuts and image segmentation. Pattern Analysis and Machine Intelligence, IEEE Transactions on 22, 8 (August 2000), 888–905. https://doi.org/10.1109/34.868688

- Sintos and Tsaparas (2014) Stavros Sintos and Panayiotis Tsaparas. 2014. Using strong triadic closure to characterize ties in social networks. In Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining (KDD ’14). 1466–1475. https://doi.org/10.1145/2623330.2623664

- Traag et al. (2019) Vincent A Traag, Ludo Waltman, and Nees Jan Van Eck. 2019. From Louvain to Leiden: guaranteeing well-connected communities. Scientific reports 9, 1 (2019), 5233.

- Traud et al. (2012) Amanda L Traud, Peter J Mucha, and Mason A Porter. 2012. Social structure of facebook networks. Physica A: Statistical Mechanics and its Applications 391, 16 (2012), 4165–4180.

- van Zuylen and Williamson (2009) Anke van Zuylen and David P. Williamson. 2009. Deterministic Pivoting Algorithms for Constrained Ranking and Clustering Problems. Mathematics of Operations Research 34, 3 (2009), 594–620. http://www.jstor.org/stable/40538434

- Veldt (2022) Nate Veldt. 2022. Correlation Clustering via Strong Triadic Closure Labeling: Fast Approximation Algorithms and Practical Lower Bounds. In International Conference on Machine Learning. PMLR, 22060–22083.

- Veldt et al. (2017) Nate Veldt, David Gleich, and Anthony Wirth. 2017. Unifying sparsest cut, cluster deletion, and modularity clustering objectives with correlation clustering. arXiv preprint arXiv:1712.05825 (2017).

- Veldt et al. (2018) Nate Veldt, David F Gleich, and Anthony Wirth. 2018. A correlation clustering framework for community detection. In Proceedings of the 2018 World Wide Web Conference. 439–448.

- Veldt et al. (2019a) Nate Veldt, David F. Gleich, and Anthony Wirth. 2019a. Learning Resolution Parameters for Graph Clustering. In Proceedings of the 28th International Conference on World Wide Web (San Francisco, CA, USA) (WWW ’19). International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, Switzerland, 11 pages. https://doi.org/10.1145/3308558.3313471

- Veldt et al. (2019b) Nate Veldt, David F. Gleich, Anthony Wirth, and James Saunderson. 2019b. Metric-Constrained Optimization for Graph Clustering Algorithms. SIAM Journal on Mathematics of Data Science 1, 2 (2019), 333–355. https://doi.org/10.1137/18M1217152 arXiv:https://doi.org/10.1137/18M1217152

- Veldt et al. (2020) Nate Veldt, Anthony Wirth, and David F Gleich. 2020. Parameterized correlation clustering in hypergraphs and bipartite graphs. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 1868–1876.