Email: {jlhou18,jilanxu18,ruifeng,yjzhang}@fudan.edu.cn

FDVTS’s Solution for 2nd COV19D Competition on COVID-19 Detection and Severity Analysis

Abstract

This paper presents our solution for the 2nd COVID-19 Competition, held as part of the AIMIA Workshop at the European Conference on Computer Vision (ECCV 2022). In our approach, we employ an effective 3D Contrastive Mixup Classification network for COVID-19 diagnosis on chest CT images, which is composed of contrastive representation learning and mixup classification. For the COVID-19 detection challenge, our approach reaches a macro F1 score of 0.933 on 484 validation CT scans, which outperforms the baseline method by 16.3 percentage points. In the COVID-19 severity detection challenge, our approach achieves a macro F1 score of 0.770 on 61 validation samples, which also surpasses the baseline by 14 percentage points.

Keywords:

COVID-19 detection, COVID-19 severity detection, chest CT images, contrastive learning, mixup

1 Introduction

Coronavirus Disease 2019 (COVID-19), caused by SARS-CoV-2, is a highly infectious disease that emerged in December 2019 [16]. Early detection based on chest CT scans is important for the timely treatment of patients and for slowing viral transmission. However, a CT volume contains hundreds of slices, which imposes a heavy workload on radiologists. Recently, deep learning approaches have achieved excellent performance in fighting against COVID-19. They have been widely applied to many aspects, including lung and infection region segmentation [14, 11, 1, 4] as well as clinical diagnosis and assessment [15, 13, 12, 3].

In this paper, we present a deep learning based solution for the 2nd COV19D Competition of the Workshop “AI-enabled Medical Image Analysis – Digital Pathology & Radiology/COVID19 (AIMIA)”, held in conjunction with the European Conference on Computer Vision (ECCV) 2022. The competition includes two challenges, namely the COVID-19 Detection Challenge and the COVID-19 Severity Detection Challenge. COVID-19 detection aims to distinguish COVID from non-COVID cases. Each CT scan is manually annotated with respect to COVID-19 and non-COVID-19 categories. The severity of COVID-19 is further divided into four stages: Mild, Moderate, Severe, and Critical. Each severity category is determined by the presence of ground glass opacities and the pulmonary parenchymal involvement.

A natural way to diagnose COVID-19 based on 3D CT images is to use 3D networks. To address both challenges, we employ the 3D contrastive mixup classification network (CMC-COV19D) from our previous work [2], which won first prize in the ICCV 2021 COVID-19 Diagnosis Competition of the AI-enabled Medical Image Analysis Workshop [7]. Our CMC-COV19D framework introduces contrastive representation learning to discover more discriminative representations of COVID-19 cases. In addition, we use a joint training loss that combines the classification loss, mixup loss, and contrastive loss. We further employ an inflated 3D ImageNet pre-trained ResNest50 [17] as a strong feature extractor to improve COVID-19 diagnostic performance. Experimental results on both challenges show that our approach significantly surpasses the baseline model provided by the organizers.

2 Dataset

COV19-CT-DB [6] includes 3D chest CT scans annotated for the existence of COVID-19. It consists of 1,650 COVID and 6,100 non-COVID chest CT scan series, which correspond to a high number of patients (more than 1150) and subjects (more than 2600). In total, 724,273 slices correspond to the CT scans of the COVID-19 category and 1,775,727 slices correspond to the non-COVID-19 category. Each of the 3D scans includes a different number of slices, ranging from 50 to 700.

The database has been split into training, validation and testing sets. The training set contains 1992 3D CT scans. The validation set consists of 494 3D CT scans. A further split of the COVID-19 cases has been implemented, based on the severity of COVID-19, in the range from 1 to 4. In particular, parts of the COV19-CT-DB COVID-19 training and validation datasets have been accordingly split for severity classification into training, validation and testing sets. The training set contains, in total, 258 3D CT scans. The validation set consists of 61 3D CT scans.

3 Methodology

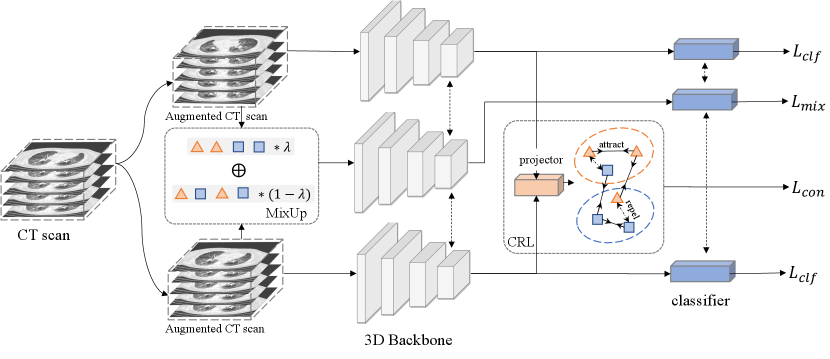

As shown in Fig. 1, our CMC-COV19D network is composed of contrastive representation learning (CRL) and mixup classification.

3.0.1 Contrastive Representation Learning.

Our CMC-COV19D network employs contrastive representation learning (CRL) as an auxiliary task to learn more discriminative representations of COVID-19. The CRL comprises the following components. 1) A stochastic data augmentation module $A(\cdot)$, which transforms an input CT $x$ into a randomly augmented sample $\tilde{x}$. We generate two augmented volumes from each input CT. 2) A base encoder $E(\cdot)$, which maps the augmented CT sample $\tilde{x}$ to a representation vector $r = E(\tilde{x})$. 3) A projection network $P(\cdot)$, which is used to map the representation vector $r$ to a relatively low-dimensional vector $z = P(r)$. 4) A classifier $C(\cdot)$, which classifies the vector $r$ to the final prediction.

Given a minibatch of $N$ CT images and their labels $\{(x_k, y_k)\}_{k=1}^{N}$, we can generate a minibatch of $2N$ samples $\{(\tilde{x}_i, \tilde{y}_i)\}_{i=1}^{2N}$ after data augmentations. Inspired by the supervised contrastive loss [5], we define the positives as any augmented CT samples from the same category, whereas the CT samples from different classes are considered as negative pairs. Let $i \in \{1, \dots, 2N\}$ be the index of an arbitrary augmented sample; the contrastive loss function is defined as:

$$\mathcal{L}_{con}^{i} = \frac{-1}{2N_{\tilde{y}_i} - 1} \sum_{j=1}^{2N} \mathbb{1}_{i \neq j} \cdot \mathbb{1}_{\tilde{y}_i = \tilde{y}_j} \cdot \log \frac{\exp(z_i^{\top} z_j / \tau)}{\sum_{k=1}^{2N} \mathbb{1}_{i \neq k} \cdot \exp(z_i^{\top} z_k / \tau)} \tag{1}$$

where $\mathbb{1} \in \{0, 1\}$ is an indicator function, and $\tau$ denotes a scalar temperature hyper-parameter. $N_{\tilde{y}_i}$ is the total number of samples in a minibatch that have the same label $\tilde{y}_i$.
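The supervised contrastive loss described above can be sketched in a few lines. This is a minimal, dependency-free illustration and not the authors' PyTorch implementation: the function name `supervised_contrastive_losses`, the helper `_dot`, and the default temperature are assumptions, and the embeddings are assumed to be L2-normalized already.

```python
import math


def _dot(a, b):
    """Inner product of two equal-length vectors (hypothetical helper)."""
    return sum(x * y for x, y in zip(a, b))


def supervised_contrastive_losses(z, labels, tau=0.1):
    """Per-sample supervised contrastive loss.

    z:      list of projected vectors, one per augmented sample.
    labels: list of integer class labels, same length as z.
    tau:    temperature; the default value is an illustrative assumption.
    """
    n = len(z)
    losses = []
    for i in range(n):
        # Scaled similarities between sample i and every other sample.
        logits = {k: _dot(z[i], z[k]) / tau for k in range(n) if k != i}
        # Positives: other samples that share the label of sample i.
        positives = [j for j in logits if labels[j] == labels[i]]
        if not positives:
            losses.append(0.0)  # no positive pair available for this sample
            continue
        # Log of the denominator, computed with a stable log-sum-exp.
        peak = max(logits.values())
        log_denom = peak + math.log(
            sum(math.exp(v - peak) for v in logits.values())
        )
        log_probs = [logits[j] - log_denom for j in positives]
        losses.append(-sum(log_probs) / len(positives))
    return losses
```

With two augmentations per scan, the number of positives for a sample equals twice the count of same-label scans in the minibatch minus one, which is the normalizer used when averaging over positives here.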

3.0.2 Mixup classification

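We adopt the mixup [18] strategy during training to further boost the generalization ability of the model. For each augmented CT sample $\tilde{x}_i$ with label $\tilde{y}_i$, we generate the mixup sample $\tilde{x}_i^{mix}$ and its label $\tilde{y}_i^{mix}$ as:

$$\tilde{x}_i^{mix} = \lambda \tilde{x}_i + (1 - \lambda) \tilde{x}_p, \qquad \tilde{y}_i^{mix} = \lambda \tilde{y}_i + (1 - \lambda) \tilde{y}_p \tag{2}$$

where $\lambda \in [0, 1]$ is the mixing coefficient and $p \in \{1, \dots, 2N\}$ is a randomly selected index. The mixup loss is defined as the cross entropy loss of mixup samples:

$$\mathcal{L}_{mix}^{i} = \mathrm{CrossEntropy}(\tilde{x}_i^{mix}, \tilde{y}_i^{mix}) \tag{3}$$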
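As a rough illustration of the mixup step and its loss, the sketch below mixes each sample with a randomly chosen partner and scores a prediction against the resulting soft label. It is a simplified stand-in, not the authors' code: the names `mixup_batch` and `soft_cross_entropy` are hypothetical, flat lists of floats stand in for CT volumes, and drawing one mixing coefficient per batch from a Beta(alpha, alpha) distribution follows the original mixup paper [18] rather than anything stated here.

```python
import math
import random


def mixup_batch(samples, labels, num_classes, alpha=1.0, rng=random):
    """Mix every sample with a random partner from the same batch.

    samples: list of flat feature lists (stand-ins for CT volumes).
    labels:  list of integer class labels.
    alpha:   Beta(alpha, alpha) parameter; the default is an assumption.
    Returns the mixed samples, their soft labels, and the coefficient used.
    """
    lam = rng.betavariate(alpha, alpha)  # mixing coefficient in [0, 1]
    mixed_x, mixed_y = [], []
    for x_i, y_i in zip(samples, labels):
        p = rng.randrange(len(samples))  # randomly selected partner index
        x_p, y_p = samples[p], labels[p]
        mixed_x.append([lam * a + (1 - lam) * b for a, b in zip(x_i, x_p)])
        soft = [0.0] * num_classes  # interpolated one-hot label
        soft[y_i] += lam
        soft[y_p] += 1 - lam
        mixed_y.append(soft)
    return mixed_x, mixed_y, lam


def soft_cross_entropy(probs, soft_label, eps=1e-12):
    """Cross entropy between predicted probabilities and a soft label."""
    return -sum(t * math.log(p + eps) for t, p in zip(soft_label, probs))
```

In a training loop, the mixed samples would be passed through the network and `soft_cross_entropy` applied to the predicted probabilities and the mixed labels.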

Different from the original design [18], which replaces the classification loss with the mixup loss, we merge the mixup loss with the classification loss to enhance the classification ability on both raw samples and mixup samples. The classification loss on raw samples is defined as:

$$\mathcal{L}_{clf}^{i} = -\tilde{y}_i^{\top} \log \hat{y}_i \tag{4}$$

where $\tilde{y}_i$ denotes the one-hot vector of the ground truth label, and $\hat{y}_i$ is the predicted probability of the sample $\tilde{x}_i$.

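Finally, we merge the CRL loss, mixup loss, and classification loss into a combined objective function:

$$\mathcal{L} = \frac{1}{2N} \sum_{i=1}^{2N} \left( \mathcal{L}_{con}^{i} + \lambda_{m} \mathcal{L}_{mix}^{i} + \lambda_{clf} \mathcal{L}_{clf}^{i} \right) \tag{5}$$

where $\lambda_{m}$ and $\lambda_{clf}$ denote the balance weights.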
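To make the combination concrete, the following sketch computes the classification loss for one sample and then averages a weighted sum of the three per-sample losses over the minibatch. The function names and the three weight parameters (`w_con`, `w_mix`, `w_clf`) are illustrative assumptions; the actual weight values used in the experiments are not given in the text.

```python
import math


def cross_entropy(probs, one_hot, eps=1e-12):
    """Classification loss for one sample: minus the log-probability
    assigned to the ground-truth class."""
    return -sum(t * math.log(p + eps) for t, p in zip(one_hot, probs))


def total_loss(con_losses, mix_losses, clf_losses,
               w_con=1.0, w_mix=1.0, w_clf=1.0):
    """Average the weighted per-sample sum of the three losses.

    Each argument is a list with one loss value per augmented sample.
    The weights are hypothetical balance terms (defaults are placeholders).
    """
    n = len(con_losses)
    weighted = [
        w_con * c + w_mix * m + w_clf * f
        for c, m, f in zip(con_losses, mix_losses, clf_losses)
    ]
    return sum(weighted) / n
```

Setting a weight to zero removes that term, which is one simple way to run the kind of ablation over CRL and Mixup reported in the result tables.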

4 Experimental Results

4.1 Implementation Details

For the COVID-19 detection, each CT volume is resized from to , where denotes the number of slices. Data augmentation includes RandomResizedCrop, random crop on the z-axis to 64, random contrast changes. Other augmentations such as flip and rotation are also tried, but no significant improvement is yielded.

For the COVID-19 severity detection, we resample each CT volume into . The same augmentations are applied, except for random crop on the z-axis.
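The random crop along the z-axis used for detection can be sketched as follows. This is only an illustration: the function name `random_crop_z` is hypothetical, a plain Python sequence of slices stands in for a 3D volume, and returning short volumes unchanged is an assumption, since the handling of scans with fewer than 64 slices is not described.

```python
import random


def random_crop_z(volume, depth=64, rng=random):
    """Randomly keep `depth` consecutive slices along the z-axis.

    volume: sequence of slices, indexed along z.
    depth:  number of slices to keep (64 in the detection setting).
    """
    n = len(volume)
    if n <= depth:
        # Assumption: short volumes are returned as they are.
        return list(volume)
    start = rng.randint(0, n - depth)  # inclusive at both ends
    return list(volume[start:start + depth])
```

Because the start position is resampled on every call, the network sees a different contiguous sub-volume of the same scan in each epoch.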

We employ inflated 3D ResNest50 and Uniformer-S as the backbones in our experiments. The networks are trained for 100 epochs. We optimize the networks using the Adam algorithm with a weight decay of . The initial learning rate is set to and then divided by 10 at and of the total number of training epochs. Our methods are implemented in PyTorch and run on four NVIDIA Tesla V100 GPUs.

We adopt the Macro F1 score as the evaluation metric. The score is defined as the unweighted average of the class-wise/label-wise F1 Scores.
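For reference, the macro F1 computation can be written out directly. This is a small self-contained sketch with a hypothetical function name `macro_f1`; it treats every label that appears in either list as a class and assigns an F1 of 0 to a class with no true positives, false positives, or false negatives.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class is empty.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

For example, with `y_true = [0, 0, 1, 1]` and `y_pred = [0, 1, 1, 1]` the per-class F1 scores are 2/3 and 4/5, so the macro F1 is 11/15. Because every class contributes equally, a minority class such as a rare severity level affects the score as much as a majority class.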

4.2 Results on the COVID-19 detection challenge

| ID | Backbone | Pretrain | CRL | Mixup | Macro F1 score |
|---|---|---|---|---|---|
| 1 | CNN-RNN [6] | - | - | - | 0.770 |
| 2 | ResNest50 | ImageNet | | | 0.897 |
| 3 | ResNest50 | ImageNet | | | 0.924 |
| 4 | Uniformer-S | ImageNet | | | 0.915 |
| 5 | Uniformer-S | ImageNet | | | 0.920 |
| 6 | Uniformer-S | k400 | | | 0.925 |
| 7 | Uniformer-S | k400_16x8 | | | 0.927 |
| 8 | Uniformer-S* | k400_16x8 | | | 0.933 |

Table 1 shows the results of the baseline model and our methods on the validation set of the COVID-19 detection challenge. The baseline CNN-RNN approach follows the work in [8, 10, 9] on developing deep neural architectures for predicting COVID-19. It achieves a macro F1 score of 0.77. Our 3D CMC-COV19D models with different backbones obtain significant improvements over the baseline. The effectiveness of the CRL and Mixup modules is also verified.

4.3 Results on the COVID-19 severity detection challenge

| ID | Methods | CRL | Mixup | Macro F1 score |
|---|---|---|---|---|
| 1 | CNN-RNN [6] | - | - | 0.630 |
| 2 | ResNest50 | | | 0.655 |
| 3 | ResNest50 | | | 0.673 |
| 4 | ResNest50 | | | 0.719 |
| 5 | ResNest50+Lesion | | | 0.770 |

5 Conclusions

In this paper, we present our solution for the 2nd COVID-19 Competition on two challenges: COVID-19 detection and COVID-19 severity detection. Our network is composed of contrastive representation learning and mixup classification for more accurate COVID-19 diagnosis. We achieve macro F1 scores of 0.933 and 0.770 on the validation sets of COVID-19 detection and severity detection, respectively.

References

- [1] Chen, J., Wu, L., Zhang, J., Zhang, L., Gong, D., Zhao, Y., et al.: Deep learning-based model for detecting 2019 novel coronavirus pneumonia on high-resolution computed tomography. Scientific Reports 10(1), 1–11 (2020)

- [2] Hou, J., Xu, J., Feng, R., Zhang, Y., Shan, F., Shi, W.: CMC-COV19D: Contrastive mixup classification for COVID-19 diagnosis. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 454–461 (2021)

- [3] Hou, J., Xu, J., Jiang, L., Du, S., Feng, R., Zhang, Y., Shan, F., Xue, X.: Periphery-aware COVID-19 diagnosis with contrastive representation enhancement. Pattern Recognition 118, 108005 (2021)

- [4] Jin, S., Wang, B., Xu, H., Luo, C., Wei, L., Zhao, W., et al.: AI-assisted CT imaging analysis for COVID-19 screening: building and deploying a medical AI system in four weeks. medRxiv (2020)

- [5] Khosla, P., Teterwak, P., Wang, C., Sarna, A., Tian, Y., Isola, P., Maschinot, A., Liu, C., Krishnan, D.: Supervised contrastive learning. In: Annual Conference on Neural Information Processing Systems 2020 (2020)

- [6] Kollias, D., Arsenos, A., Kollias, S.: AI-MIA: COVID-19 detection & severity analysis through medical imaging. arXiv preprint arXiv:2206.04732 (2022)

- [7] Kollias, D., Arsenos, A., Soukissian, L., Kollias, S.: MIA-COV19D: COVID-19 detection through 3-D chest CT image analysis. arXiv preprint arXiv:2106.07524 (2021)

- [8] Kollias, D., Bouas, N., Vlaxos, Y., Brillakis, V., Seferis, M., Kollia, I., Sukissian, L., Wingate, J., Kollias, S.: Deep transparent prediction through latent representation analysis. arXiv preprint arXiv:2009.07044 (2020)

- [9] Kollias, D., Tagaris, A., Stafylopatis, A., Kollias, S., Tagaris, G.: Deep neural architectures for prediction in healthcare. Complex & Intelligent Systems 4(2), 119–131 (2018)

- [10] Kollias, D., Vlaxos, Y., Seferis, M., Kollia, I., Sukissian, L., Wingate, J., Kollias, S.D.: Transparent adaptation in deep medical image diagnosis. In: TAILOR. pp. 251–267 (2020)

- [11] Li, L., Qin, L., Xu, Z., Yin, Y., Wang, X., Kong, B., et al.: Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology 296, 200905 (2020)

- [12] Song, Y., Zheng, S., Li, L., Zhang, X., Zhang, X., Huang, Z., et al.: Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images. medRxiv (2020)

- [13] Wang, S., Kang, B., Ma, J., Zeng, X., Xiao, M., Guo, J., et al.: A deep learning algorithm using CT images to screen for corona virus disease (COVID-19). European Radiology pp. 1–9 (2021)

- [14] Wang, X., Deng, X., Fu, Q., Zhou, Q., Feng, J., Ma, H., et al.: A weakly-supervised framework for COVID-19 classification and lesion localization from chest CT. IEEE Transactions on Medical Imaging 39(8), 2615–2625 (2020)

- [15] Wang, Z., Xiao, Y., Li, Y., Zhang, J., Lu, F., Hou, M., et al.: Automatically discriminating and localizing COVID-19 from community-acquired pneumonia on chest X-rays. Pattern Recognition 110, 107613 (2020)

- [16] WHO: Coronavirus disease (COVID-19) pandemic. https://www.who.int/emergencies/diseases/novel-coronavirus-2019 (2022)

- [17] Zhang, H., Wu, C., Zhang, Z., Zhu, Y., Lin, H., Zhang, Z., Sun, Y., He, T., Mueller, J., Manmatha, R., et al.: ResNeSt: Split-attention networks. arXiv preprint arXiv:2004.08955 (2020)

- [18] Zhang, H., Cisse, M., Dauphin, Y.N., Lopez-Paz, D.: mixup: Beyond empirical risk minimization. arXiv preprint arXiv:1710.09412 (2017)