Feasibility-aware Imitation Learning from Observations

through a Hand-mounted Demonstration Interface

Abstract

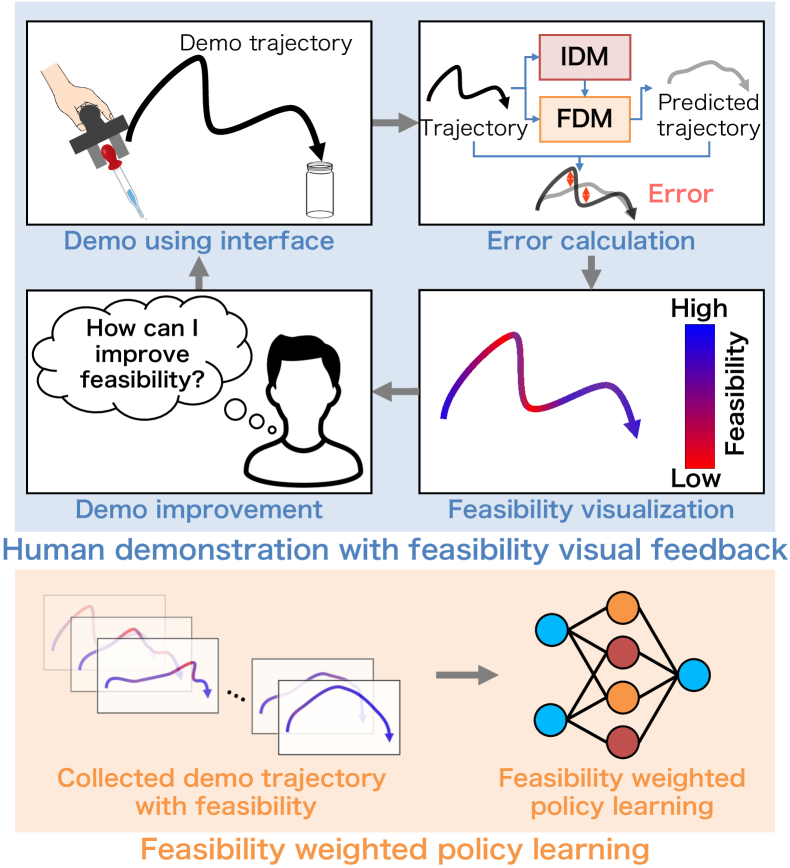

Imitation learning through a demonstration interface is expected to learn policies for robot automation from intuitive human demonstrations. However, due to the differences in human and robot movement characteristics, a human expert might unintentionally demonstrate an action that the robot cannot execute. We propose feasibility-aware behavior cloning from observation (FABCO). In the FABCO framework, the feasibility of each demonstration is assessed using the robot’s pre-trained forward and inverse dynamics models. This feasibility information is provided as visual feedback to the demonstrators, encouraging them to refine their demonstrations. During policy learning, estimated feasibility serves as a weight for the demonstration data, improving both the data efficiency and the robustness of the learned policy. We experimentally validated FABCO’s effectiveness by applying it to a pipette insertion task involving a pipette and a vial. Four participants assessed the impact of the feasibility feedback and the weighted policy learning in FABCO. Additionally, we used the NASA Task Load Index (NASA-TLX) to evaluate the workload induced by demonstrations with visual feedback.

I Introduction

Imitation learning [1] is a method for learning policies that assign automation using human demonstrations rather than manually designing them through environmental models. Recently, approaches have been explored that learn policies for robotic automation only from environmental observations collected during direct demonstrations by a human expert. To simplify policy learning in such imitation learning, demonstration interfaces that mimic the structure of a robot’s hand have been proposed [2, 3, 4, 5].

Imitation learning with a demonstration interface cannot directly learn a policy since the training data do not include action information. To address this issue, we focus on imitation learning from observation (ILfO), an imitation learning method that assumes that the only available option is the observation of demonstrations and incorporates a mechanism to complement the action information. ILfO assumes that a policy is learned using the robot’s motion data. An ILfO method, behavior cloning from observation (BCO), learns an inverse dynamics model (IDM) using the robot’s motion data and a policy by predicting the action based on the demonstration data by IDM[6]. Reinforcement learning-based methods that use demonstration data as rewards have also been proposed[7, 8, 9].

Imitation learning with a demonstration interface allows human experts to intuitively demonstrate actions without prior robotics knowledge. However, due to differences in human and robot movement characteristics, a human expert may demonstrate an infeasible action for a robot. A policy learned using such demonstrations cannot achieve the task, and the error between the behaviors of the policy and the demonstration is compounded by a covariate shift [10].

We believe that the above problems can be alleviated by introducing a robot’s feasibility into imitation learning with a demonstration interface and using it to provide feedback to the human expert and the policy learning. Since feasibility can be calculated using the IDM and the forward dynamics model (FDM), it can be introduced to BCO by simultaneously training the FDM and the IDM.

In this study, we propose a novel ILfO method called feasibility-aware behavior cloning from observation (FABCO) that has a demonstration interface. In the FABCO framework, the feasibility of the demonstration is evaluated using the robot’s pre-trained FDM and IDM. This feasibility is presented as visual feedback to the demonstrator, encouraging clarification of the demonstration data. During policy learning, the estimated feasibility is used as a weight to the demonstration data, enhancing both the data efficiency and the robustness of the learned policy. An overview of FABCO is shown in Fig. 1. We verify the effectiveness of the proposed method by applying it to a pipette insertion task using a pipette and a vial. In this experiment, four participants verify the effectiveness of feasibility visual feedback and feasibility-weighted policy learning on FABCO. We also used NASA-TLX [11] to investigate the workload induced by demonstrations using visual feedback.

II Related Work

II-A Imitation Learning using Robot Demonstrations

Imitation learning methods acquire a control policy using demonstration data, which are collected through a robot operation. Wong et al. proposed a system that enables users to control a mobile robot by a smartphone while viewing images from a camera mounted on the robot. This situation allows for the teaching of both mobility operations and robotic arm manipulation for imitating kitchen tasks [12]. Cuan et al. demonstrated that adding real-time haptic feedback to a teleoperation device improves the efficiency and quality of the data collection and imitated a door-opening task[13]. However, in these imitation learning methods, the learned policy is known to be vulnerable to modeling errors and noise, which can cause the robot’s behavior based on the learned policy to become unstable. This problem is called a covariate shift. Interactive approaches have been proposed to address this issue.

Interactive imitation learning alternates between demonstration and policy learning and acquires a stable policy by utilizing a learned policy for demonstration. Two representative methods are DAgger and DART. In the former approach, the robot moves according to a learned policy, and the operator takes action and collects data only when learning is insufficient [14, 15, 16, 17, 18]. The DART approach injects noise into human operations and prompts human operators to take action to deal with it [19, 20, 21, 22, 23].

These imitation learning methods assume that demonstration data are collected by directly operating a robot. However, operating it requires specialized skills. To enable robots to automate a broader range of tasks, methods are desirable that allow them to learn from demonstrations without requiring such specialized skills.

II-B Imitation Learning using Human Hand Demonstrations

Imitation learning using human hand demonstrations allows human experts to perform intuitive motion demonstrations. However, the corresponding robot states and actions must be estimated from human demonstration data since the states and actions of humans and robots are different. Some methods use trial and error to estimate actions that imitate the estimated robot state sequences [7, 24, 25]. Smith et al. used a CycleGAN model to replace humans with robots in demonstration videos and learned corresponding robot actions through model-based reinforcement learning [24]. While those studies provide intuitive demonstration, this approach struggles with the physical differences between humans and robots.

Recent research has focused on unifying human and robot end-effectors to simplify imitation [2, 3, 4, 5]. A previous work developed a demonstration interface that unified human and robot end-effectors, enabling through behavior cloning the imitation of tasks like grasping and pushing [5]. Chi et al. also developed a similar demonstration interface that facilitated the learning of generalizable policies [2].

In contrast to those studies, we unify end-effectors using a hand-mounted demonstration interface and provide feasibility visual feedback for demonstrators and robot-executable demonstrations.

II-C Feasibility in Imitation Learning

In the imitation learning framework, the differences between the demonstrator and robot dynamics are addressed by estimating feasibility, which is utilized for policy learning [26, 27, 28]. Since these methods evaluate feasibility after demonstrations, they may collect many demonstrations with low feasibility. Our method introduces feasibility into ILfO and proposes a method that incorporates it into feedback to the demonstrator and policy learning.

III Behavior Cloning from Observation

BCO is an imitation learning method inspired by learning through human imitation. It acquires a policy that imitates the demonstrator’s behavior using only the state sequences observed by the demonstrator during task execution [6]. This method compensates for the lack of action information in the demonstration data by learning the agent’s IDM. It predicts the agent’s actions based on the state transitions in the demonstration data and learns the policy accordingly.

III-A Problem Statement

BCO assumes that the environment follows a Markov decision process (MDP). At time , initial state follows initial state distribution , and at time , given state and action , subsequent state follows state transition probability . The policies of the demonstrator and the agent are denoted as and . While the demonstrator provides demonstrations based on , during policy learning for the agent, only state sequence from the demonstrations is available.

III-B IDM Learning and Prediction of Demonstrator’s Actions

BCO utilizes IDM to predict actions from demonstration state sequence . The IDM is trained using state sequence and action sequence collected when the agent follows arbitrary policy in the environment. IDM predicts action using states and , and assuming that the agent’s state sequence and action sequence follow , IDM parameters are optimized:

| (1) |

Using optimized parameters , action is predicted for demonstration states and .

III-C Policy Learning

Parameters of agent’s policy are learned using demonstration state sequence and IDM. When demonstration state sequence follows , the parameters are learned:

| (2) |

IV Feasibility-aware Behavior Cloning from Observation

In this section, we propose FABCO, a novel ILfO method with a hand-mounted demonstration interface that combines visual feasibility feedback for the demonstrators and feasibility-weighted policy learning for the imitation learning (Fig. 1). FABCO is based on the assumption that motion data can be collected from a robot’s random actions. It evaluates the feasibility of demonstrations by learning FDM and IDM with the robot’s motion data.

IV-A Hand-mounted Demonstration Interface

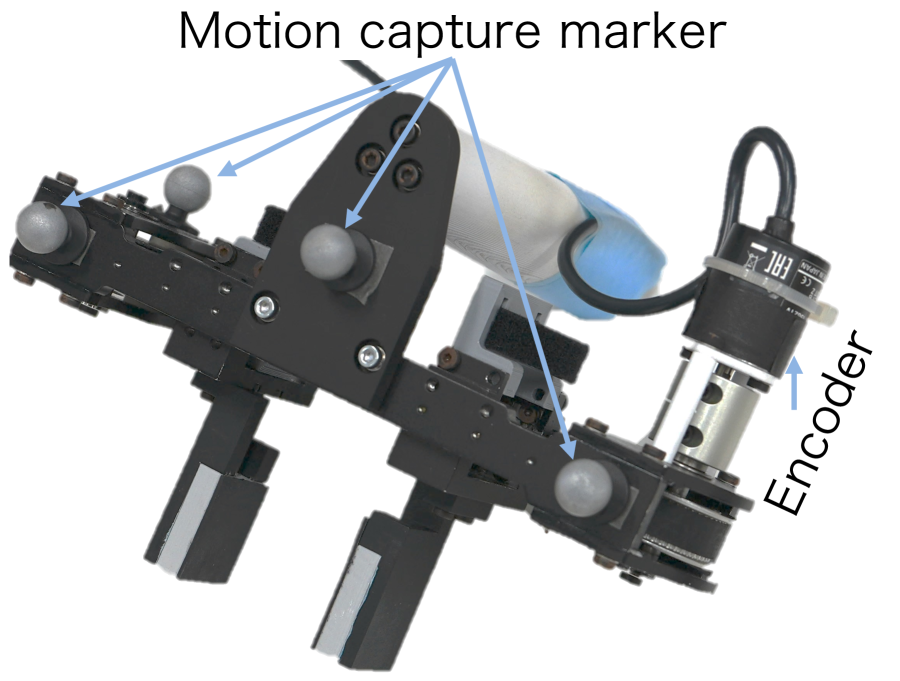

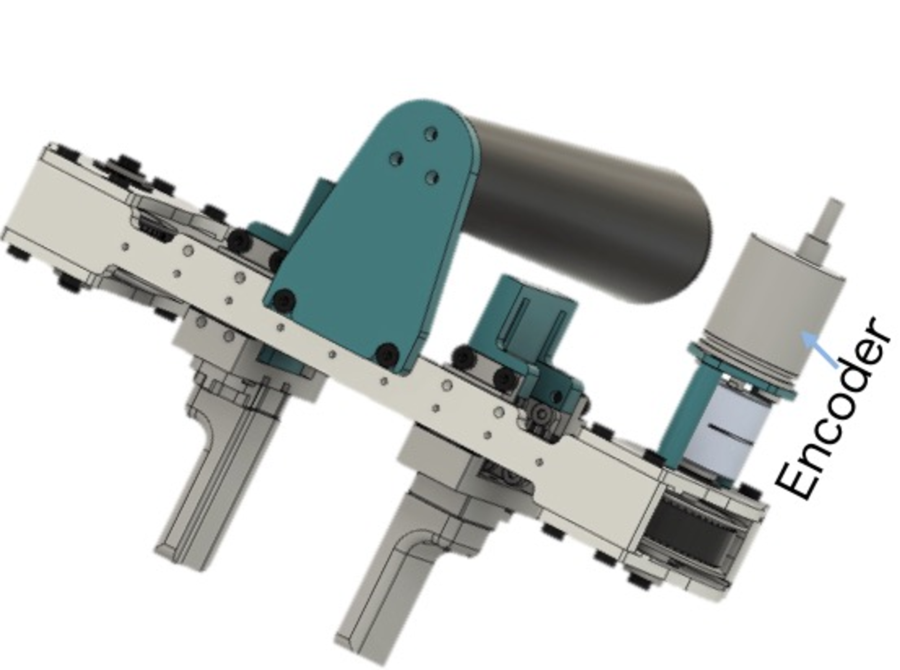

To collect demonstration data with a shared hand structure between a robot and a human, we developed a hand-mounted demonstration interface that shares the two-finger opposing structure of the robot hand with the human hand (Fig. 2). This hand-mounted demonstration interface, which was developed with reference to Hamaya et al. [4], allows the demonstrator to grasp the handle and open/close the fingers. The distance between the fingers can be calculated from an encoder attached to the hand-mounted demonstration interface, and the interface’s pose is observed using a motion capture system.

IV-B Problem Statement

Demonstration data from the task execution consists of state , which is composed of hand-mounted demonstration interface pose information and environmental observation . This method focuses on capturing the robot’s dynamics and assumes that the robot’s actions can be estimated from the sequence of its poses to acquire a generalizable model for the task. For IDM and FDM learning, the hand pose information from the demonstrations is used to predict the actions and the next hand pose. The training data for IDM and FDM consist of the robot’s pose series data and action series data , collected as the robot follows randomly generated motion trajectories within the workspace using tracking policy . For policy learning, the actions are predicted from the pose transitions included in demonstration data , and the policy is learned based on these predictions.

IV-C Learning of Inverse and Forward Dynamics Models

IDM and FDM are trained using data collected when the robot moves randomly within the workspace. The training data for IDM and FDM are collected by generating random motion trajectories within the robot’s operational environment. The robot follows these trajectories using a tracking policy , collecting a series of robot pose data and action data during the trajectory tracking. Here robot’s pose series data and action series data are assumed to follow .

IDM is a model, , which predicts action required to reproduce a change in the pose between and . FDM is a model, , which estimates pose that results from pose and action . Parameters and of IDM and FDM are optimized based on the following equations:

| (3) | ||||

| (4) |

Using the optimized parameters, IDM and FDM predict the action that changes the robot’s pose, , and the robot’s pose at the next time step, .

IV-D Feasibility Calculation

The feasibility of demonstrated hand poses is calculated and fed back to the demonstrator to encourage demonstrations that are easier for the robot to imitate. The feasibility is calculated using action prediction from the IDM and the predicted robot pose at the next time step using FDM. Feasibility at time step is calculated as follows:

| (5) |

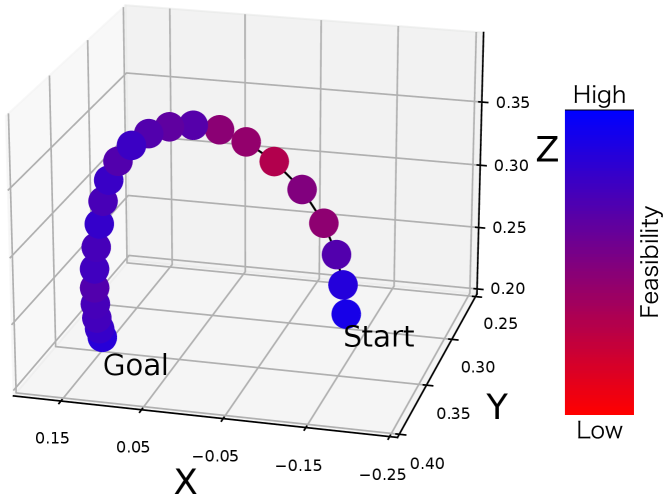

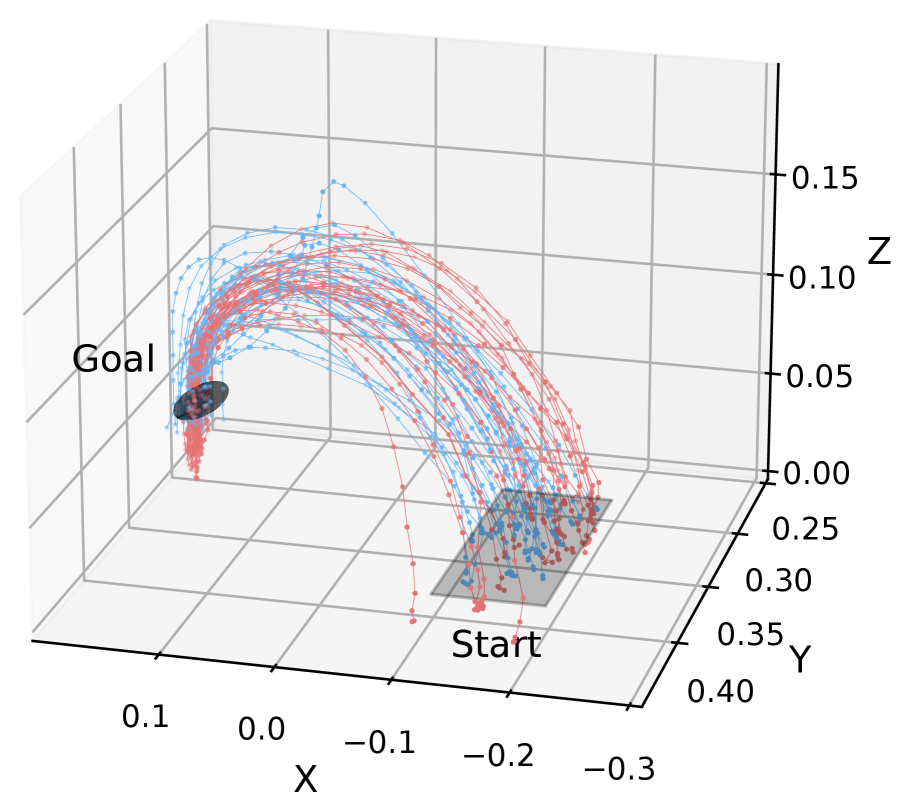

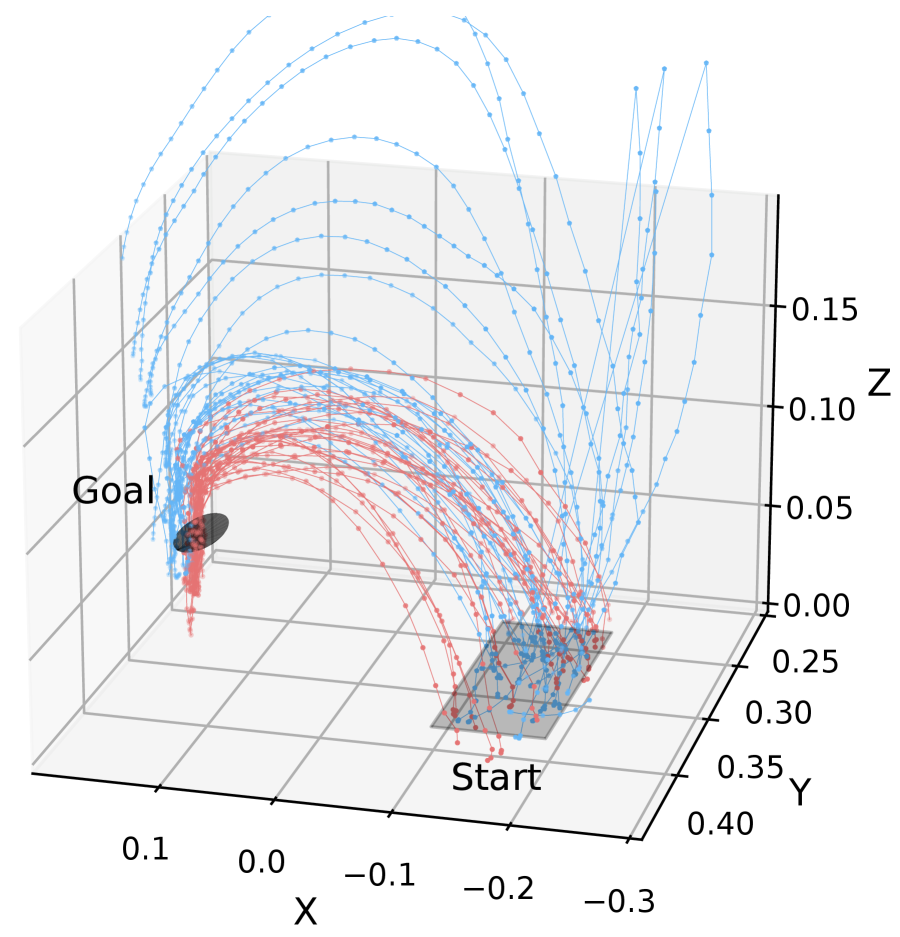

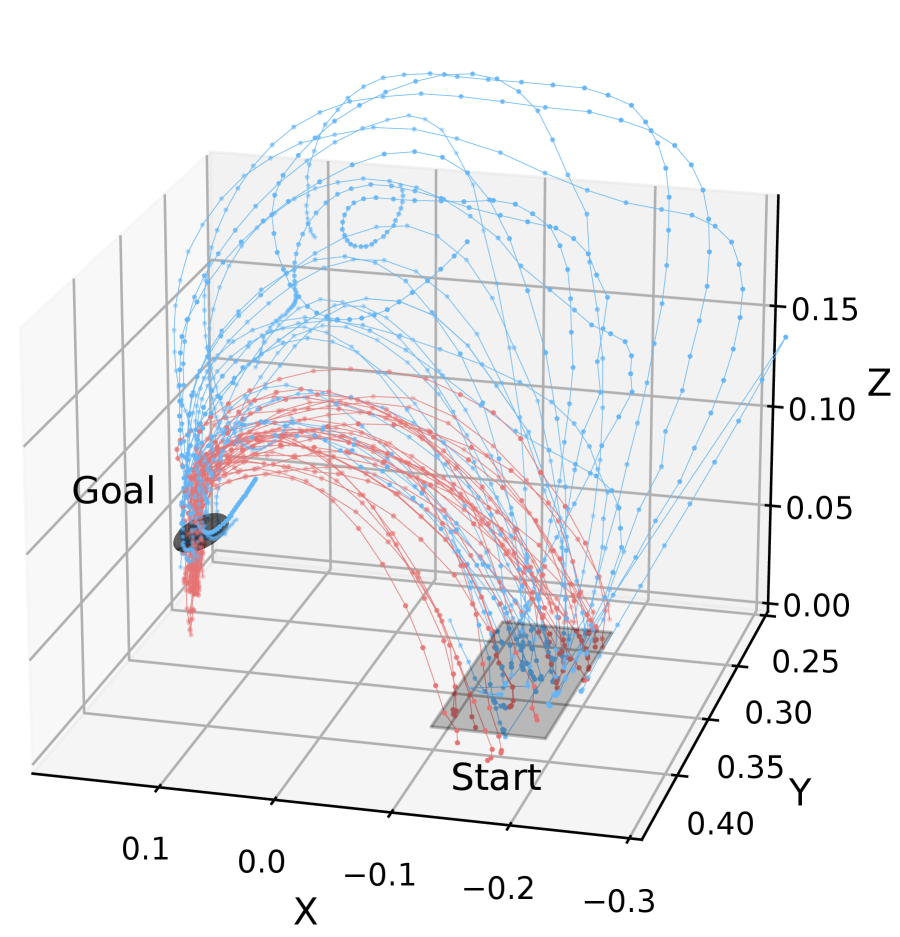

Here is a parameter that adjusts the scale of feasibility. Hand pose information from the demonstrated motion is plotted on a graph, and feasibility is visualized by coloring the plot according to the values of (Fig. 3) to provide visual feedback to the demonstrator.

IV-E Feasibility-weighted Policy Learning

A policy is learned using demonstration data , a trained IDM, and feasibility of the demonstration data, where higher feasibility actions are weighted. Parameters of policy are learned using the following equation:

| (6) | ||||

| (7) |

When using learned policy , state , which includes robot’s pose and environmental information , is provided as input to the policy. FABCO’s learning process is shown in Algorithm 1.

V Experiment

In this study, we apply our proposed method, FABCO, to a pipette insertion task. We employ BCO as a comparison and conduct ablation studies with FABCO without visual feedback and without weighting. Four participants demonstrated the task, and the proposed method’s effectiveness was evaluated based on their demonstrations. Additionally, we compared the workload on the demonstrators with/without visual feedback based on the NASA-TLX results collected after the demonstration tasks were completed.

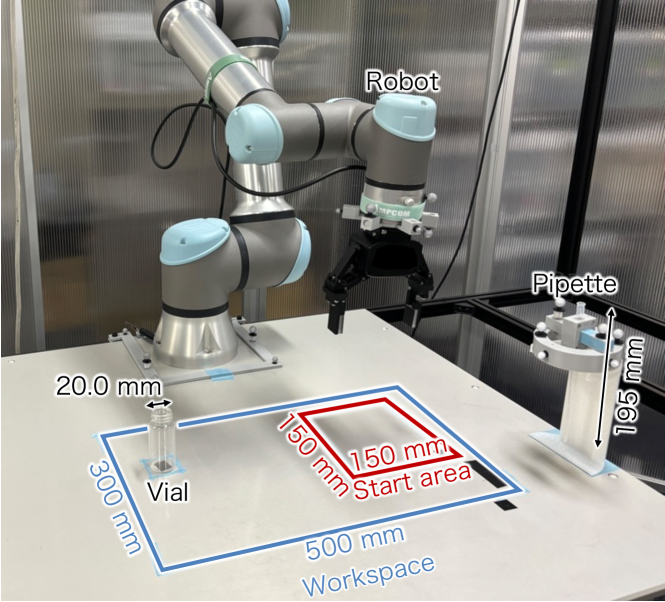

V-A Pipette Insertion Task

The experimental setup for the pipette insertion task is shown in Fig. 4. Its goal is for the robot to insert a pipette, which it is gripping, into a vial. The pipette is 195 mm long, and the diameter of the vial’s opening is 20.0 mm. The robot begins the task while holding the pipette in the start area, and its initial pose is randomized within a range of 15.0 degrees for each of the xyz axes in the Euler angles. The state of this task () is represented by 12-dimensional data consisting of the 6-dimensional position and the orientation data of the robot (or the interface) and the 6-dimensional position and the orientation data of the pipette. The robot’s action () is a 6-dimensional velocity command applied to the position and the orientation of the robot’s end-effector.

In this experiment, we used UR5e from UNIVERSAL ROBOTS as the manipulator and a ROBOTIS HAND RH-P12-RN-UR from ROBOTIS as the robot hand. To measure the pose of the hand-mounted demonstration interface and the robot’s hand, we employed six Flex13 cameras from OptiTrack. The workspace in this experiment, limited by the robot’s range of motion, was 300 mm vertically, 500 mm horizontally, and 450 mm high. The angular range was set to ±30.0 degrees for rotation around the x-axis, 30.0 degrees for rotation around the y-axis, and 45.0 degrees for rotation around the z-axis.

V-B Learning Settings of IDM and FDM

To train IDM and FDM, we generated random motion trajectories within the workspace shown in Fig. 4 and collected training data. Five points were randomly sampled from the workspace, and the robot followed a trajectory that connected them. Each trajectory consists of 50 position and orientation points; the robot followed one trajectory to collect a series of 50 state-action data points. The training data were collected by running the robot for approximately 2.5 hours, obtaining data from 2500 trajectories.

The IDM takes hand poses as input and outputs action . The FDM takes hand pose and action as input and outputs the hand pose at the next time step, . Both IDM and FDM are modeled using neural networks with five hidden layers, consisting of 256, 1024, 2048, 1024, and 256 nodes. ReLU is used as an activation function for the hidden layers; Sigmoid is used for the output layer. The optimizer is Adam, the loss function is L1 loss, and the batch size is set to 256. Out of the 2500 trajectories, 2000 were used as training data and 500 as validation data. The model was trained for 2000 epochs, and we selected the model with the highest prediction performance on the validation data at the end of each epoch.

V-C Human Demonstration

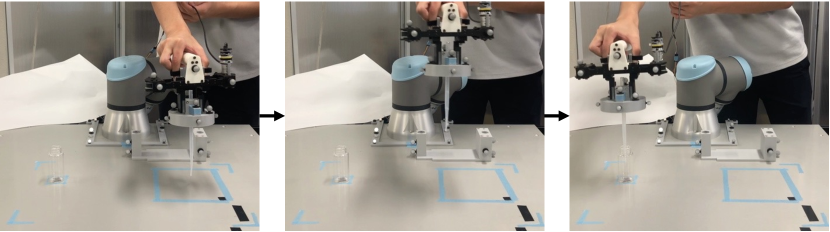

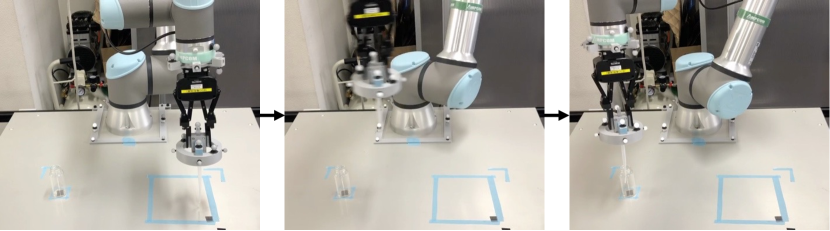

We collected pipette insertion demonstrations using the hand-mounted demonstration interface . The process of inserting the pipette into the vial is shown in Fig. 6. The demonstrators were informed in advance about the robot’s operational range and speed and were instructed to demonstrate the task in such a way to complete it as quickly as possible within the robot’s allowable range of motion and speed. The initial states of the demonstrations were selected to ensure diversity within the experimental setup. In this experiment, we collected 50 trajectories each for demonstrations with FABCO visual feasibility feedback and demonstrations without visual feedback. After the two types of demonstrations, the participants completed the NASA-TLX[11] to evaluate their workload during the experiment.

V-D Policy Learning

The policy model used for all the methods is neural networks with two hidden layers, with 256 and 128 nodes in the first and second hidden layers. ReLU was used as the activation function for both the hidden layers and the output layer. In this experiment, parameter , which adjusts the scale of feasibility, was set to . The optimizer is Adam; the loss function is L1 loss, with a batch size of 256. The model was trained for 2000 epochs, and the model was selected with the highest prediction performance on the validation data at the end of each epoch.

V-E Results

Figure. 6 shows the robot performing a pipette insertion using the policy learned with FABCO, based on demonstrations provided by Subject 1. It successfully imitated the pipette insertion task (Fig. 6).

Table I presents the success rates of the pipette insertion task, tested 30 times for the four methods (FABCO, FABCO w/o weighting, FABCO w/o FB, and BCO) that were trained using demonstrations from four participants. When comparing the success rates, the policy performance clearly improved with the visual feasibility feedback and weighting based on feasibility during the demonstrations. Notably, the visual feasibility feedback significantly improved the performance. Subject 2 tends to achieve a high task success rate regardless of the presence/absence of feedback.

|

FABCO |

|

|

BCO | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| No. 1 | 93.3% | 93.3% | 56.7% | 53.3% | ||||||

| No. 2 | 96.7 % | 96.7 % | 100% | 86.7% | ||||||

| No. 3 | 93.3% | 90.0 % | 66.7% | 26.7% | ||||||

| No. 4 | 90.0% | 60.0 % | 26.7% | 20.0% |

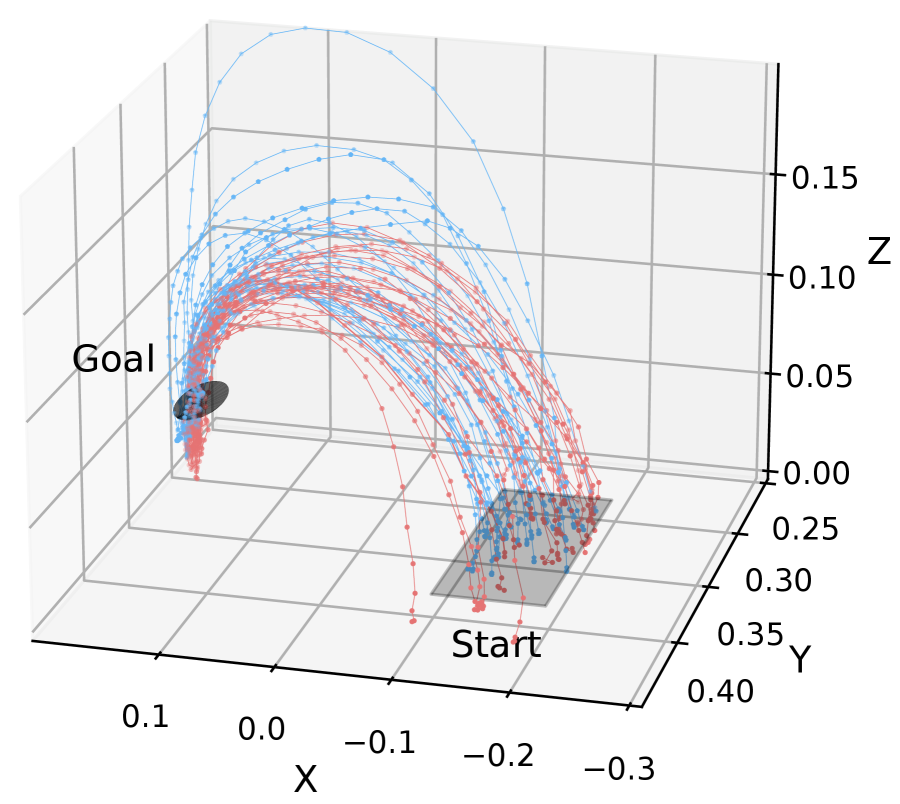

Fig. 7 shows the demonstration trajectory of Subject 4 and the trajectory generated by the learned policy. The demonstrated motion clearly generated a trajectory from the initial position to the pipette insertion position regardless of the presence of feedback. However, the policy learned from the demonstration data collected without visual feedback failed to imitate the demonstrated motion due to error compounding.

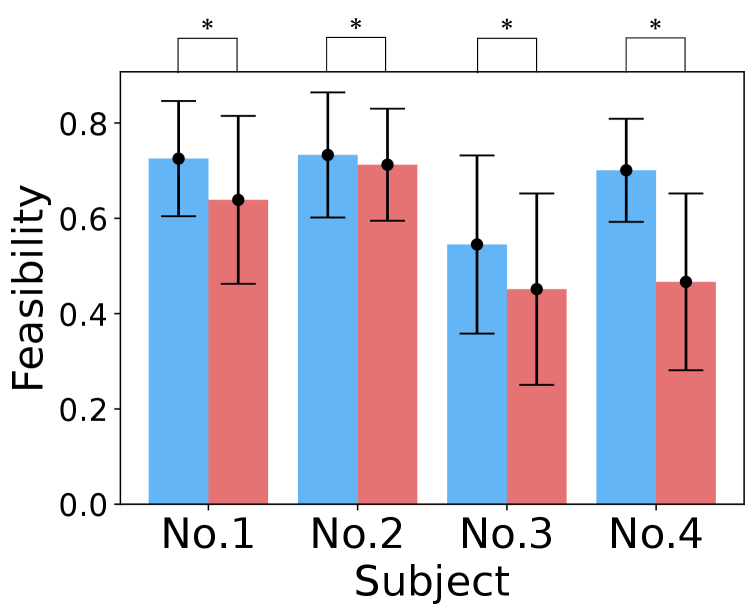

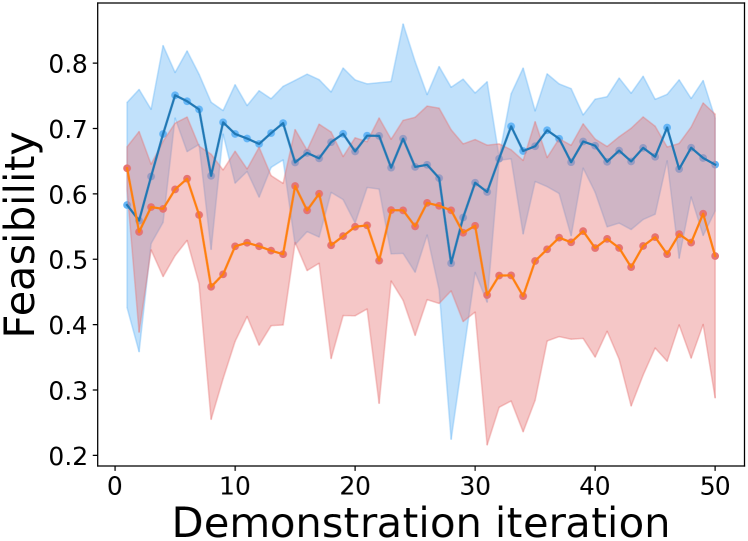

Fig. 8 shows the feasibility of the demonstration trajectories with/without visual feasibility feedback, indicating that using visual feasibility feedback helps demonstrate executable motion by the robot. Additionally, a t-test confirmed a significant difference in feasibility between the demonstrations with/without visual feedback.

Fig. 9 illustrates the transition of feasibility over 50 demonstrations by the four participants. Demonstrations without visual feedback show low feasibility and high variance throughout; demonstrations with visual feedback start with low feasibility but improve as the participants adjust their motions based on the feedback, leading to demonstrations with higher feasibility.

The results of the NASA-TLX survey completed by the participants after the experiment are shown in Table II. The NASA-TLX results indicated that while visual feedback slightly increased the demonstrators’ mental demand and effort, it allowed them to perform the demonstrations with a greater sense of task accomplishment. This suggests that although the feedback slightly increased the number of factors the demonstrators needed to consider to collect data that the robot could easily imitate, the sense of contributing valuable data to the robot’s policy learning increased their sense of accomplishment.

| NASA-TLX | w FB | w/o FB |

|---|---|---|

| Mental demand | 5.50 2.42 | 4.38 1.85 |

| Physical demand | 6.75 0.90 | 6.88 0.22 |

| Temporal demand | 2.62 1.56 | 2.88 2.16 |

| Performance | 2.00 0.87 | 5.50 1.27 |

| Effort | 4.50 2.55 | 3.88 1.75 |

| Frustration | 2.75 1.35 | 2.75 1.25 |

VI Discussion

We identified three limitations involving the utilization of FABCO in practical applications. The first concerns the learning of the forward and inverse dynamic models. The dynamics model in this study is a simple neural network, which is expected to improve model performance. By using a model that considers the robot’s kinematic characteristics[29, 30], perhaps more complex movements can be achieved. By learning a model that considers object contact, our method may be applied to contact-rich tasks. The second limitation is related to the feasibility calculation. FABCO only provides feedback on feasibility, meaning that the demonstrator determines the causes of any decrease in feasibility. Therefore, we need to identify the factors that reduce feasibility and visualize them to the demonstrators. The third involves the adjustment of the feasibility scale parameters. The current method determines them heuristically. To make FABCO more practical, they should be adjusted using demonstration and robot motion data.

VII Conclusions

We proposed FABCO, an imitation learning framework that encourages demonstrators to provide feasible demonstrations for robots by giving visual feedback on the feasibility of the demonstrated actions. To validate the effectiveness of FABCO, we collected pipette insertion data from four participants, compared the feasibility with/without visual feedback as well as the performance between the policies learned using FABCO and BCO, and analyzed the mental workload using NASA-TLX. The results showed an improvement in feasibility with visual feedback. Additionally, the policies learned with FABCO achieved higher task success rates than those learned with BCO.

References

- [1] T. Osa, J. Pajarinen, G. Neumann, J. A. Bagnell, P. Abbeel, and J. Peters, “An algorithmic perspective on imitation learning,” Foundations and Trends® in Robotics, vol. 7, no. 1-2, pp. 1–179, 2018.

- [2] C. Chi, Z. Xu, C. Pan, E. Cousineau, B. Burchfiel, S. Feng, R. Tedrake, and S. Song, “Universal manipulation interface: In-the-wild robot teaching without in-the-wild robots,” in Proceedings of Robotics: Science and Systems (RSS), 2024.

- [3] N. M. M. Shafiullah, A. Rai, H. Etukuru, Y. Liu, I. Misra, S. Chintala, and L. Pinto, “On bringing robots home,” arXiv preprint arXiv:2311.16098, 2023.

- [4] M. Hamaya, F. von Drigalski, T. Matsubara, K. Tanaka, R. Lee, C. Nakashima, Y. Shibata, and Y. Ijiri, “Learning soft robotic assembly strategies from successful and failed demonstrations,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 8309–8315.

- [5] S. Young, D. Gandhi, S. Tulsiani, A. Gupta, P. Abbeel, and L. Pinto, “Visual Imitation Made Easy,” in Proceedings of the 2020 Conference on Robot Learning (CoRL), 2021, pp. 1992–2005.

- [6] F. Torabi, G. Warnell, and P. Stone, “Behavioral cloning from observation,” in Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI, 7 2018, pp. 4950–4957.

- [7] Y. Liu, A. Gupta, P. Abbeel, and S. Levine, “Imitation from observation: Learning to imitate behaviors from raw video via context translation,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 1118–1125.

- [8] R. Rafailov, T. Yu, A. Rajeswaran, and C. Finn, “Visual adversarial imitation learning using variational models,” in Advances in Neural Information Processing Systems (NeurIPS), vol. 34, 2021, pp. 3016–3028.

- [9] A. Escontrela, A. Adeniji, W. Yan, A. Jain, X. B. Peng, K. Goldberg, Y. Lee, D. Hafner, and P. Abbeel, “Video prediction models as rewards for reinforcement learning,” in Advances in Neural Information Processing Systems (NeurIPS), vol. 36, 2023, pp. 68 760–68 783.

- [10] S. Ross and D. Bagnell, “Efficient reductions for imitation learning,” in Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics ((AISTATS)), vol. 9, 2010, pp. 661–668.

- [11] S. G. Hart and L. E. Staveland, “Development of nasa-tlx (task load index): Results of empirical and theoretical research,” in Human Mental Workload, 1988, vol. 52, pp. 139–183.

- [12] J. Wong, A. Tung, A. Kurenkov, A. Mandlekar, L. Fei-Fei, S. Savarese, and R. Martín-Martín, “Error-aware imitation learning from teleoperation data for mobile manipulation,” in Conference on Robot Learning (CoRL). PMLR, 2022, pp. 1367–1378.

- [13] C. Cuan, A. Okamura, and M. Khansari, “Leveraging haptic feedback to improve data quality and quantity for deep imitation learning models,” IEEE Trans. Haptics, vol. 17, no. 4, pp. 984–991, 2024.

- [14] S. Ross, G. J. Gordon, and J. A. Bagnell, “A reduction of imitation learning and structured prediction to no-regret online learning,” in Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics (AISTATS), 2011, pp. 627–635.

- [15] M. Laskey, S. Staszak, W. Y. Hsieh, J. Mahler, F. T. Pokorny, A. D. Dragan, and K. Goldberg, “Shiv: Reducing supervisor burden in dagger using support vectors for efficient learning from demonstrations in high dimensional state spaces,” in 2016 IEEE International Conference on Robotics and Automation (ICRA), 2016, pp. 462–469.

- [16] M. Kelly, C. Sidrane, K. Driggs-Campbell, and M. J. Kochenderfer, “Hg-dagger: Interactive imitation learning with human experts,” in 2019 International Conference on Robotics and Automation (ICRA), 2019, pp. 8077–8083.

- [17] K. Menda, K. Driggs-Campbell, and M. J. Kochenderfer, “Ensembledagger: A bayesian approach to safe imitation learning,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, pp. 5041–5048.

- [18] A. Mandlekar, D. Xu, R. Martín-Martín, Y. Zhu, L. Fei-Fei, and S. Savarese, “Human-in-the-loop imitation learning using remote teleoperation,” CoRR, 2020.

- [19] M. Laskey, J. Lee, R. Fox, A. Dragan, and K. Goldberg, “Dart: Noise injection for robust imitation learning,” in Proceedings of the 1st Annual Conference on Robot Learning (CoRL), vol. 78, 2017, pp. 143–156.

- [20] H. Tahara, H. Sasaki, H. Oh, B. Michael, and T. Matsubara, “Disturbance-injected robust imitation learning with task achievement,” in 2022 International Conference on Robotics and Automation (ICRA), 2022, pp. 2466–2472.

- [21] H. Tahara, H. Sasaki, H. Oh, E. Anarossi, and T. Matsubara, “Disturbance injection under partial automation: Robust imitation learning for long-horizon tasks,” IEEE Robotics and Automation Letters, vol. 8, no. 5, pp. 2724–2731, 2023.

- [22] H. Oh, H. Sasaki, B. Michael, and T. Matsubara, “Bayesian disturbance injection: Robust imitation learning of flexible policies for robot manipulation,” Neural Networks, vol. 158, pp. 42–58, 2023.

- [23] H. Oh and T. Matsubara, “Leveraging demonstrator-perceived precision for safe interactive imitation learning of clearance-limited tasks,” IEEE Robotics and Automation Letters, vol. 9, no. 4, p. 3387â3394, 2024.

- [24] L. Smith, N. Dhawan, M. Zhang, P. Abbeel, and S. Levine, “Avid: Learning multi-stage tasks via pixel-level translation of human videos,” in Robotics: Science and Systems (RSS), 2020.

- [25] Y. Qin, H. Su, and X. Wang, “From one hand to multiple hands: Imitation learning for dexterous manipulation from single-camera teleoperation,” IEEE Robotics and Automation Letters, vol. 7, no. 4, pp. 10 873–10 881, 2022.

- [26] Z. Cao, Y. Hao, M. Li, and D. Sadigh, “Learning feasibility to imitate demonstrators with different dynamics,” in Proceedings of the 5th Conference on Robot Learning (CoRL), 2022, pp. 363–372.

- [27] Z. Cao and D. Sadigh, “Learning from imperfect demonstrations from agents with varying dynamics,” IEEE Robotics and Automation Letters, vol. 6, no. 3, pp. 5231–5238, 2021.

- [28] T. Betz, H. Fujiishi, and T. Kobayashi, “Behavioral cloning from observation with bi-directional dynamics model,” in 2021 IEEE/SICE International Symposium on System Integration (SII), 2021, pp. 184–189.

- [29] M. Lutter, K. Listmann, and J. Peters, “Deep lagrangian networks for end-to-end learning of energy-based control for under-actuated systems,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2019, pp. 7718–7725.

- [30] A. Carron, E. Arcari, M. Wermelinger, L. Hewing, M. Hutter, and M. N. Zeilinger, “Data-driven model predictive control for trajectory tracking with a robotic arm,” IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 3758–3765, 2019.