
Feature Selection: A Data Perspective

Abstract

Feature selection, as a data preprocessing strategy, has been proven to be effective and efficient in preparing data (especially high-dimensional data) for various data mining and machine learning problems. The objectives of feature selection include: building simpler and more comprehensible models, improving data mining performance, and preparing clean, understandable data. The recent proliferation of big data has presented some substantial challenges and opportunities to feature selection. In this survey, we provide a comprehensive and structured overview of recent advances in feature selection research. Motivated by current challenges and opportunities in the era of big data, we revisit feature selection research from a data perspective and review representative feature selection algorithms for conventional data, structured data, heterogeneous data and streaming data. Methodologically, to emphasize the differences and similarities of most existing feature selection algorithms for conventional data, we categorize them into four main groups: similarity based, information theoretical based, sparse learning based and statistical based methods. To facilitate and promote the research in this community, we also present an open-source feature selection repository that consists of most of the popular feature selection algorithms (http://featureselection.asu.edu/). Also, we use it as an example to show how to evaluate feature selection algorithms. At the end of the survey, we present a discussion about some open problems and challenges that require more attention in future research.

keywords:

Feature Selection

CCS Concepts: Computing methodologies → Feature selection

Jundong Li, Kewei Cheng, Suhang Wang, Fred Morstatter, Robert P. Trevino, Jiliang Tang, and Huan Liu, 2017. Feature Selection: A Data Perspective

This material is based upon work supported by, or in part by, the NSF grant 1217466 and 1614576.

Author’s addresses: J. Li, K. Cheng, S. Wang, F. Morstatter, R.P. Trevino, H. Liu, Computer Science and Engineering, Arizona State University, Tempe, AZ, 85281; email: {jundongl, kcheng18, swang187, fmorstat, rptrevin, huan.liu}@asu.edu; J. Tang, Michigan State University, East Lansing, MI 48824; email: tangjili@msu.edu.

1 Introduction

We are now in the era of big data, where huge amounts of high-dimensional data become ubiquitous in a variety of domains, such as social media, healthcare, bioinformatics and online education. The rapid growth of data presents challenges for effective and efficient data management. It is desirable to apply data mining and machine learning techniques to automatically discover knowledge from data of various sorts.

When data mining and machine learning algorithms are applied on high-dimensional data, a critical issue is known as the curse of dimensionality. It refers to the phenomenon that data becomes sparser in high-dimensional space, adversely affecting algorithms designed for low-dimensional space [Hastie et al. (2005)]. Also, with a large number of features, learning models tend to overfit which may cause performance degradation on unseen data. Data of high dimensionality can significantly increase the memory storage requirements and computational costs for data analytics.

Dimensionality reduction is one of the most powerful tools to address the previously described issues. It can be categorized into two main components: feature extraction and feature selection. Feature extraction projects the original high-dimensional features to a new feature space with low dimensionality. The newly constructed feature space is usually a linear or nonlinear combination of the original features. Feature selection, on the other hand, directly selects a subset of relevant features for model construction [Guyon and Elisseeff (2003), Liu and Motoda (2007)].

Both feature extraction and feature selection have the advantages of improving learning performance, increasing computational efficiency, decreasing memory storage, and building better generalization models. Hence, they are both regarded as effective dimensionality reduction techniques. On one hand, for many applications where the raw input data does not contain any features understandable to a given learning algorithm, feature extraction is preferred. On the other hand, as feature extraction creates a set of new features, further analysis is problematic as we cannot retain the physical meanings of these features. In contrast, by keeping some of the original features, feature selection maintains physical meanings of the original features and gives models better readability and interpretability. Therefore, feature selection is often preferred in many applications such as text mining and genetic analysis. It should be noted that in some cases even though feature dimensionality is often not that high, feature extraction/selection still plays an essential role such as improving learning performance, preventing overfitting, and reducing computational costs.

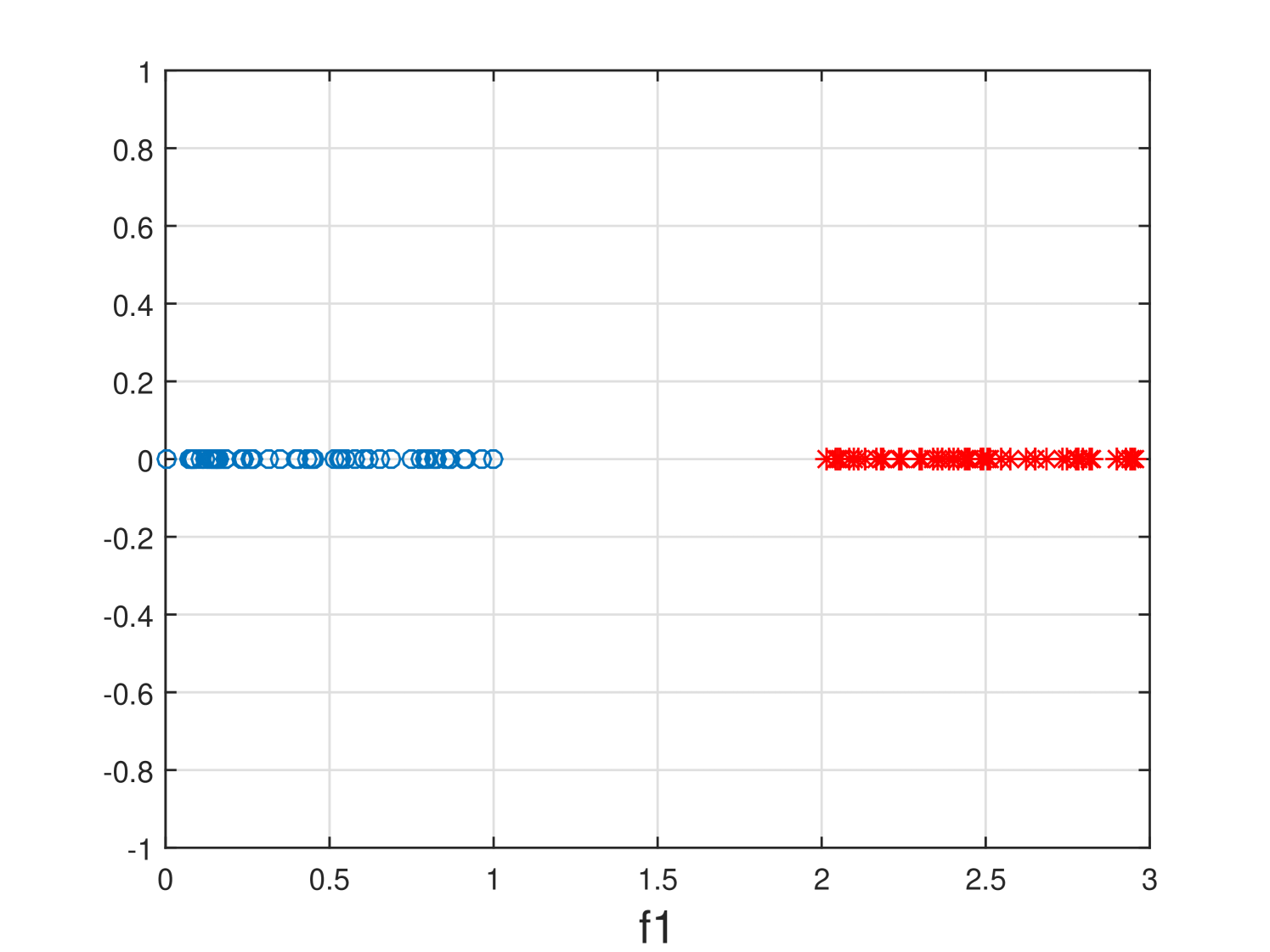

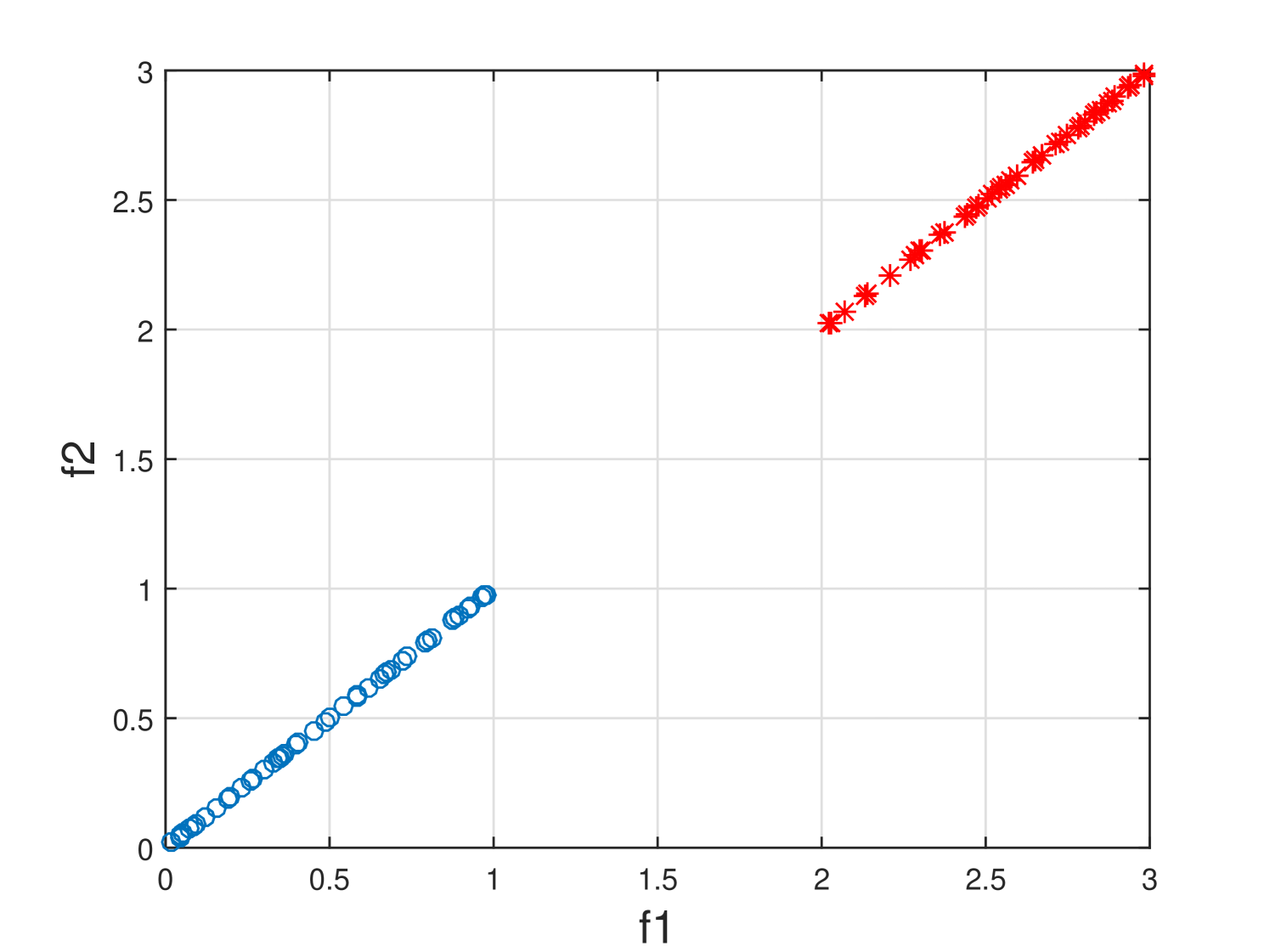

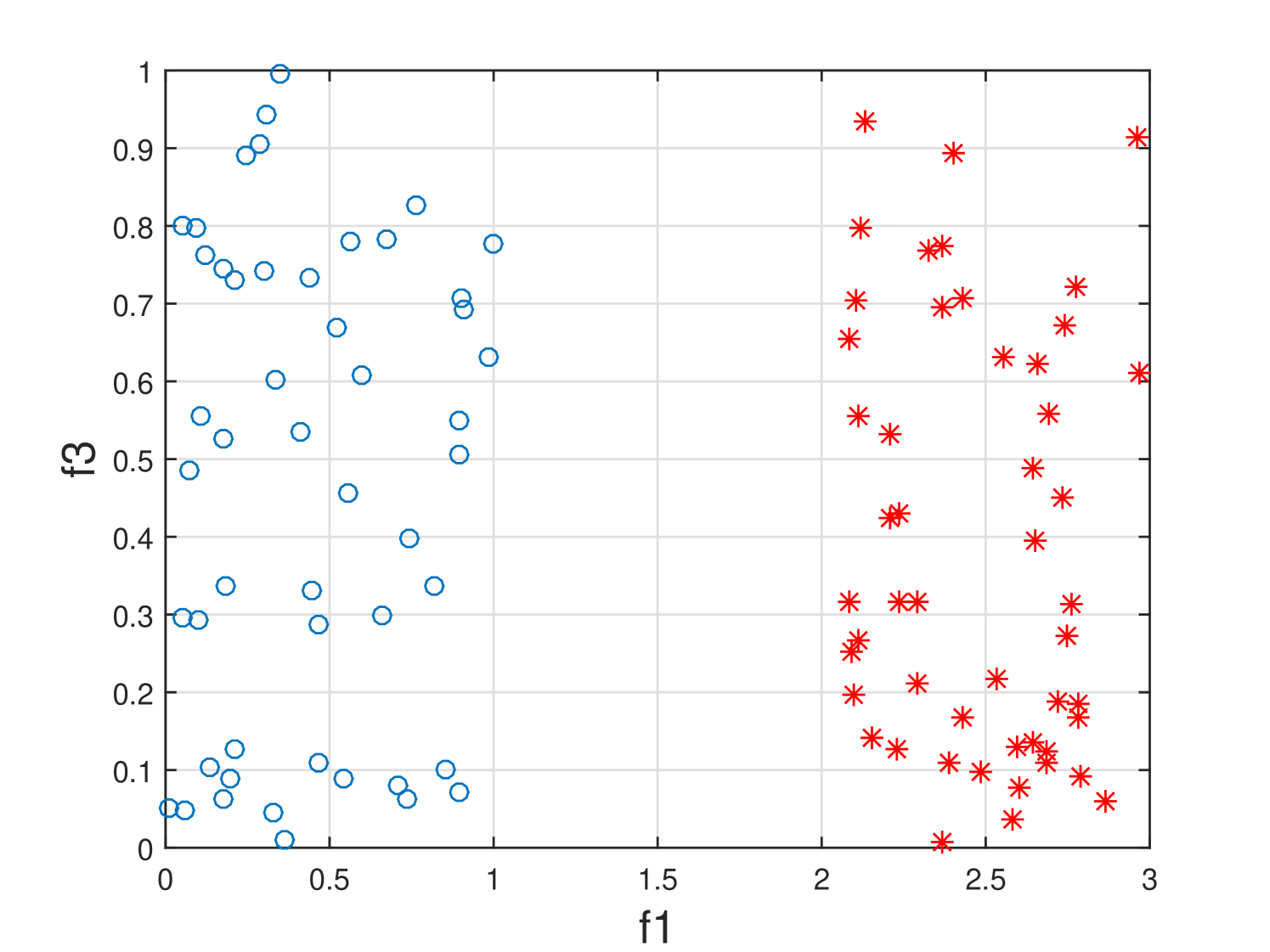

Real-world data contains a lot of irrelevant, redundant and noisy features. Removing these features by feature selection reduces storage and computational cost while avoiding significant loss of information or degradation of learning performance. For example, in Fig. 1(a), feature $f_1$ is a relevant feature that is able to discriminate two classes (clusters). However, given feature $f_1$, feature $f_2$ in Fig. 1(b) is redundant as $f_2$ is strongly correlated with $f_1$. In Fig. 1(c), feature $f_3$ is an irrelevant feature as it cannot separate two classes (clusters) at all. Therefore, the removal of $f_2$ and $f_3$ will not negatively impact the learning performance.

1.1 Traditional Categorization of Feature Selection Algorithms

1.1.1 Supervision Perspective

According to the availability of supervision (such as class labels in classification problems), feature selection can be broadly classified as supervised, unsupervised and semi-supervised methods.

Supervised feature selection is generally designed for classification or regression problems. It aims to select a subset of features that are able to discriminate samples from different classes (classification) or to approximate the regression targets (regression). With supervision information, feature relevance is usually assessed via its correlation with the class labels or the regression target. The training phase highly depends on the selected features: after splitting the data into training and testing sets, classifiers or regression models are trained based on a subset of features selected by supervised feature selection. Note that the feature selection phase can be independent of the learning algorithms (filter methods); or it may iteratively take advantage of the learning performance of a classifier or a regression model to assess the quality of the features selected so far (wrapper methods); or it may make use of the intrinsic structure of a learning algorithm to embed feature selection into the underlying model (embedded methods). Finally, the trained classifier or regression model predicts class labels or regression targets of unseen samples in the test set with the selected features. In the following context, for supervised methods, we mainly focus on classification problems, and use the terms label information and supervision information interchangeably.

Unsupervised feature selection is generally designed for clustering problems. As acquiring labeled data is particularly expensive in both time and effort, unsupervised feature selection has gained considerable attention recently. Without label information to evaluate the importance of features, unsupervised feature selection methods seek alternative criteria to define feature relevance. Different from supervised feature selection, unsupervised feature selection usually uses all instances that are available in the feature selection phase. The feature selection phase can be independent of the unsupervised learning algorithms (filter methods); or it can rely on the learning algorithms to iteratively improve the quality of selected features (wrapper methods); or it can be embedded into unsupervised learning algorithms (embedded methods). After the feature selection phase, a standard clustering algorithm is applied on the selected features to output the cluster structure of all data samples [Guyon and Elisseeff (2003), Liu and Motoda (2007), Tang et al. (2014a)].

Supervised feature selection works when sufficient label information is available, while unsupervised feature selection algorithms do not require any class labels. However, in many real-world applications, we usually have only a limited amount of labeled data. Therefore, it is desirable to develop semi-supervised methods that exploit both labeled and unlabeled data samples.

1.1.2 Selection Strategy Perspective

Concerning different selection strategies, feature selection methods can be broadly categorized as wrapper, filter and embedded methods.

Wrapper methods rely on the predictive performance of a predefined learning algorithm to evaluate the quality of selected features. Given a specific learning algorithm, a typical wrapper method performs two steps: (1) search for a subset of features; and (2) evaluate the selected features. It repeats (1) and (2) until some stopping criteria are satisfied. The feature set search component first generates a subset of features; then the learning algorithm acts as a black box to evaluate the quality of these features based on the learning performance. The whole process iterates until, for example, the highest learning performance is achieved or the desired number of selected features is obtained. Then the feature subset that gives the highest learning performance is returned as the selected features. Unfortunately, a known issue of wrapper methods is that the search space for $d$ features is $2^d$, which is impractical when $d$ is very large. Therefore, different search strategies such as sequential search [Guyon and Elisseeff (2003)], hill-climbing search, best-first search [Kohavi and John (1997), Arai et al. (2016)], branch-and-bound search [Narendra and Fukunaga (1977)] and genetic algorithms [Goldberg (1989)] have been proposed to yield a locally optimal learning performance. However, the search space is still extremely large for high-dimensional datasets. As a result, wrapper methods are seldom used in practice.
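The search-and-evaluate loop described above can be sketched as a greedy sequential forward search. This is only an illustration under stated assumptions: the function names (`holdout_accuracy`, `forward_wrapper`), the nearest-centroid classifier used as the black-box learner, the hold-out validation split and the synthetic data are all hypothetical choices, not taken from the survey.

```python
import numpy as np

def holdout_accuracy(X_train, y_train, X_val, y_val):
    """Black-box learner: nearest-centroid classifier scored on held-out data."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    # Squared Euclidean distance from every validation point to every centroid.
    dists = ((X_val[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float((classes[dists.argmin(axis=1)] == y_val).mean())

def forward_wrapper(X_train, y_train, X_val, y_val, k):
    """Greedy sequential forward search: repeatedly add the most helpful feature."""
    selected, remaining = [], list(range(X_train.shape[1]))
    while len(selected) < k:                      # stopping criterion: k features
        scores = [
            holdout_accuracy(X_train[:, selected + [j]], y_train,
                             X_val[:, selected + [j]], y_val)
            for j in remaining                    # step (1): candidate subsets
        ]                                         # step (2): evaluate each one
        best = remaining[int(np.argmax(scores))]
        selected.append(best)
        remaining.remove(best)
    return selected

# Synthetic toy data: feature 0 separates the classes, features 1-3 are noise.
rng = np.random.default_rng(0)
y = np.repeat([0, 1], 100)
X = rng.normal(size=(200, 4))
X[:, 0] += 4.0 * y
idx = rng.permutation(200)
train, val = idx[:140], idx[140:]
chosen = forward_wrapper(X[train], y[train], X[val], y[val], k=2)
print(chosen)
```

The greedy search trains the learner on the order of $k \cdot d$ candidate subsets instead of all $2^d$, which is why it reaches only a local optimum.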

Filter methods are independent of any learning algorithms. They rely on characteristics of data to assess feature importance. Filter methods are typically more computationally efficient than wrapper methods. However, due to the lack of a specific learning algorithm guiding the feature selection phase, the selected features may not be optimal for the target learning algorithms. A typical filter method consists of two steps. In the first step, feature importance is ranked according to some feature evaluation criteria. The feature importance evaluation process can be either univariate or multivariate. In the univariate scheme, each feature is ranked individually regardless of other features, while the multivariate scheme ranks multiple features in a batch. In the second step of a typical filter method, low-ranked features are filtered out. In the past decades, different evaluation criteria for filter methods have been proposed. Some representative criteria include feature discriminative ability to separate samples [Kira and Rendell (1992), Robnik-Šikonja and Kononenko (2003), Yang et al. (2011), Du et al. (2013), Tang et al. (2014b)], feature correlation [Koller and Sahami (1995), Guyon and Elisseeff (2003)], mutual information [Yu and Liu (2003), Peng et al. (2005), Nguyen et al. (2014), Shishkin et al. (2016), Gao et al. (2016)], feature ability to preserve data manifold structure [He et al. (2005), Zhao and Liu (2007), Gu et al. (2011b), Jiang and Ren (2011)], and feature ability to reconstruct the original data [Masaeli et al. (2010), Farahat et al. (2011), Li et al. (2017b)].
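As a minimal sketch of the two-step univariate filter scheme, the snippet below ranks features by the absolute Pearson correlation with the target and then drops the low-ranked ones. The criterion, the function name `correlation_filter` and the synthetic data are assumptions chosen for illustration; any of the criteria listed above could take the place of the correlation score.

```python
import numpy as np

def correlation_filter(X, y, k):
    """Rank features by |Pearson correlation| with y and keep the top k."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    denom = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    scores = np.abs(Xc.T @ yc) / denom
    ranking = np.argsort(-scores)      # step 1: rank every feature individually
    return ranking[:k], scores         # step 2: filter out low-ranked features

# Synthetic regression target that depends on features 1 and 3 only.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 5))
y = 2.0 * X[:, 1] - 1.5 * X[:, 3] + 0.1 * rng.normal(size=300)
top, scores = correlation_filter(X, y, k=2)
print(top, np.round(scores, 3))
```

No learning algorithm is trained at any point, which is what makes this kind of method cheap compared with a wrapper.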

Embedded methods are a trade-off between filter and wrapper methods that embed feature selection into model learning. Thus they inherit the merits of wrapper and filter methods: (1) they include the interactions with the learning algorithm; and (2) they are far more efficient than the wrapper methods since they do not need to evaluate feature sets iteratively. The most widely used embedded methods are regularization models, which aim to fit a learning model by minimizing the fitting errors while simultaneously forcing feature coefficients to be small (or exactly zero). Afterwards, both the regularization model and the selected feature set are returned as the final results.
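One common instance of such a regularization model is $\ell_1$-regularized least squares (the lasso), $\min_{\mathbf{w}} \frac{1}{2n}\|\mathbf{X}\mathbf{w} - \mathbf{y}\|_2^2 + \alpha\|\mathbf{w}\|_1$. The sketch below solves it with plain proximal gradient descent (ISTA); the solver choice, the function name `lasso_ista`, the value of `alpha` and the synthetic data are assumptions made for illustration only.

```python
import numpy as np

def lasso_ista(X, y, alpha, n_iter=500):
    """Minimise (1/2n)||Xw - y||^2 + alpha * ||w||_1 by proximal gradient descent."""
    n, d = X.shape
    w = np.zeros(d)
    # Step size 1/L, where L = sigma_max(X)^2 / n bounds the gradient's Lipschitz constant.
    step = n / (np.linalg.norm(X, 2) ** 2)
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y) / n
        z = w - step * grad
        # Soft-thresholding sets small coefficients to exactly zero.
        w = np.sign(z) * np.maximum(np.abs(z) - step * alpha, 0.0)
    return w

# Synthetic data: only features 0 and 4 influence the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
y = 3.0 * X[:, 0] - 2.0 * X[:, 4] + 0.1 * rng.normal(size=200)
w = lasso_ista(X, y, alpha=0.5)
selected = np.flatnonzero(w)           # features with nonzero coefficients
print(np.round(w, 3), selected)
```

Fitting the model and selecting the features happen in the same optimization, so both the coefficient vector and the index set of nonzero coefficients come out of a single run.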

It should be noted that some literature classifies feature selection methods into four categories (from the selection strategy perspective) by including the hybrid feature selection methods [Saeys et al. (2007), Shen et al. (2012), Ang et al. (2016)]. Hybrid methods can be regarded as a combination of multiple feature selection algorithms (e.g., wrapper, filter, and embedded). The main target is to tackle the instability and perturbation issues of many existing feature selection algorithms. For example, for small-sized high-dimensional data, a small perturbation on the training data may result in totally different feature selection results. By aggregating multiple selected feature subsets from different methods together, the results are more robust and hence the credibility of the selected features is enhanced.

1.2 Feature Selection Algorithms from a Data Perspective

The recent popularity of big data presents unique challenges for traditional feature selection [Li and Liu (2017)], and some characteristics of big data such as velocity and variety necessitate the development of novel feature selection algorithms. Here we briefly discuss some major concerns when applying feature selection algorithms.

Streaming data and features have become more and more prevalent in real-world applications. They pose challenges to traditional feature selection algorithms, which are designed for static datasets with fixed data samples and features. For example, in Twitter, new data like posts and new features like slang words are continuously generated by users. It is impractical to apply traditional batch-mode feature selection algorithms to find relevant features from scratch whenever new data or new features arrive. Moreover, the volume of data may be too large to be loaded into memory. In many cases, a single scan of data is desired as further scans are either expensive or impractical. Given the reasons mentioned above, it is appealing to apply feature selection in a streaming fashion to dynamically maintain a set of relevant features.

Most existing feature selection algorithms are designed to handle tasks with a single data source and assume that data is independent and identically distributed (i.i.d.). However, data could come from multiple sources in many applications. For example, in social media, data comes from heterogeneous sources such as text, images, tags, and videos. In addition, linked data is ubiquitous and is present in various forms such as user-post relations and user-user relations. The availability of multiple data sources brings unprecedented opportunities as we can leverage shared intrinsic characteristics and correlations to find more relevant features. However, it also presents challenges. For instance, with link information, the widely adopted i.i.d. assumption in most learning algorithms does not hold. How to appropriately utilize link information for feature selection is still a challenging problem.

Features can also exhibit certain types of structures. Some well-known structures among features are group, tree, and graph structures. When performing feature selection, if the feature structure is not taken into consideration, the intrinsic dependencies may not be captured, thus the selected features may not be suitable for the target application. Incorporating prior knowledge of feature structures can help select relevant features to improve the learning performance greatly.

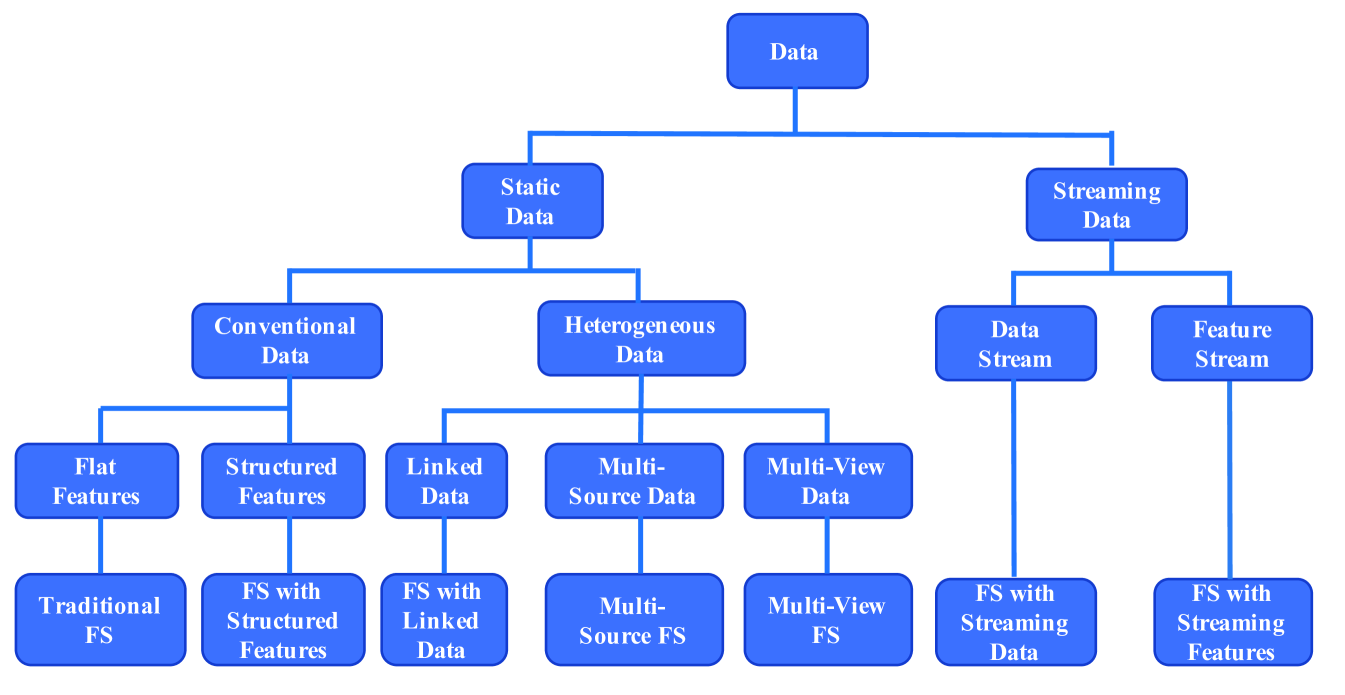

The aforementioned reasons motivate the investigation of feature selection algorithms from a different view. In this survey, we revisit feature selection algorithms from a data perspective; the categorization is illustrated in Fig. 2. Data consists of static data and streaming data. First, static data can be grouped into conventional data and heterogeneous data. In conventional data, features can either be flat or possess some inherent structures. Traditional feature selection algorithms are proposed to deal with flat features, which are considered to be independent. The past few decades have witnessed hundreds of feature selection algorithms. Based on their technical characteristics, we propose to classify them into four main groups, i.e., similarity based, information theoretical based, sparse learning based and statistical based methods. It should be noted that this categorization only involves filter methods and embedded methods while the wrapper methods are excluded. The reason for excluding wrapper methods is that they are computationally expensive and are usually used in specific applications. More details about these four categories will be presented later. We also present other methods that cannot be fitted into these four categories, such as hybrid methods, deep learning based methods and reconstruction based methods. When features exhibit some structures, specific feature selection algorithms are more desirable. Data can also be heterogeneous, in that it could come from multiple sources and could be linked. Hence, we also show how new feature selection algorithms cope with these situations. Second, in the streaming setting, data arrives sequentially and the number of data instances is unknown, so feature selection algorithms that make only one pass over the data have been proposed accordingly. Similarly, in an orthogonal setting, features can also be generated dynamically. Streaming feature selection algorithms are designed to determine whether one should accept the newly added features and remove existing but outdated features.

1.3 Differences with Existing Surveys

Currently, there exist some other surveys which summarize feature selection algorithms, such as those in [Guyon and Elisseeff (2003), Alelyani et al. (2013), Chandrashekar and Sahin (2014), Tang et al. (2014a)]. These studies either focus on traditional feature selection algorithms or specific learning tasks like classification and clustering. However, none of them provide a comprehensive and structured overview of traditional feature selection algorithms in conjunction with recent advances in feature selection from a data perspective. In this survey, we will introduce representative feature selection algorithms to cover all components mentioned in Fig. 2. We also release a feature selection repository in Python named scikit-feature which is built upon the widely used machine learning package scikit-learn (http://scikit-learn.org/stable/) and two scientific computing packages Numpy (http://www.numpy.org/) and Scipy (http://www.scipy.org/). It includes nearly 40 representative feature selection algorithms. The web page of the repository is available at http://featureselection.asu.edu/.

1.4 Organization of the Survey

We present this survey in seven parts, and the covered topics are listed as follows:

-

1.

Traditional Feature Selection for Conventional Data (Section 2)

-

(a)

Similarity based Feature Selection Methods

-

(b)

Information Theoretical based Feature Selection Methods

-

(c)

Sparse Learning based Feature Selection Methods

-

(d)

Statistical based Feature Selection Methods

-

(e)

Other Methods

-

(a)

-

2.

Feature Selection with Structured Features (Section 3)

-

(a)

Feature Selection Algorithms with Group Structure Features

-

(b)

Feature Selection Algorithms with Tree Structure Features

-

(c)

Feature Selection Algorithms with Graph Structure Features

-

(a)

-

3.

Feature Selection with Heterogeneous Data (Section 4)

-

(a)

Feature Selection Algorithms with Linked Data

-

(b)

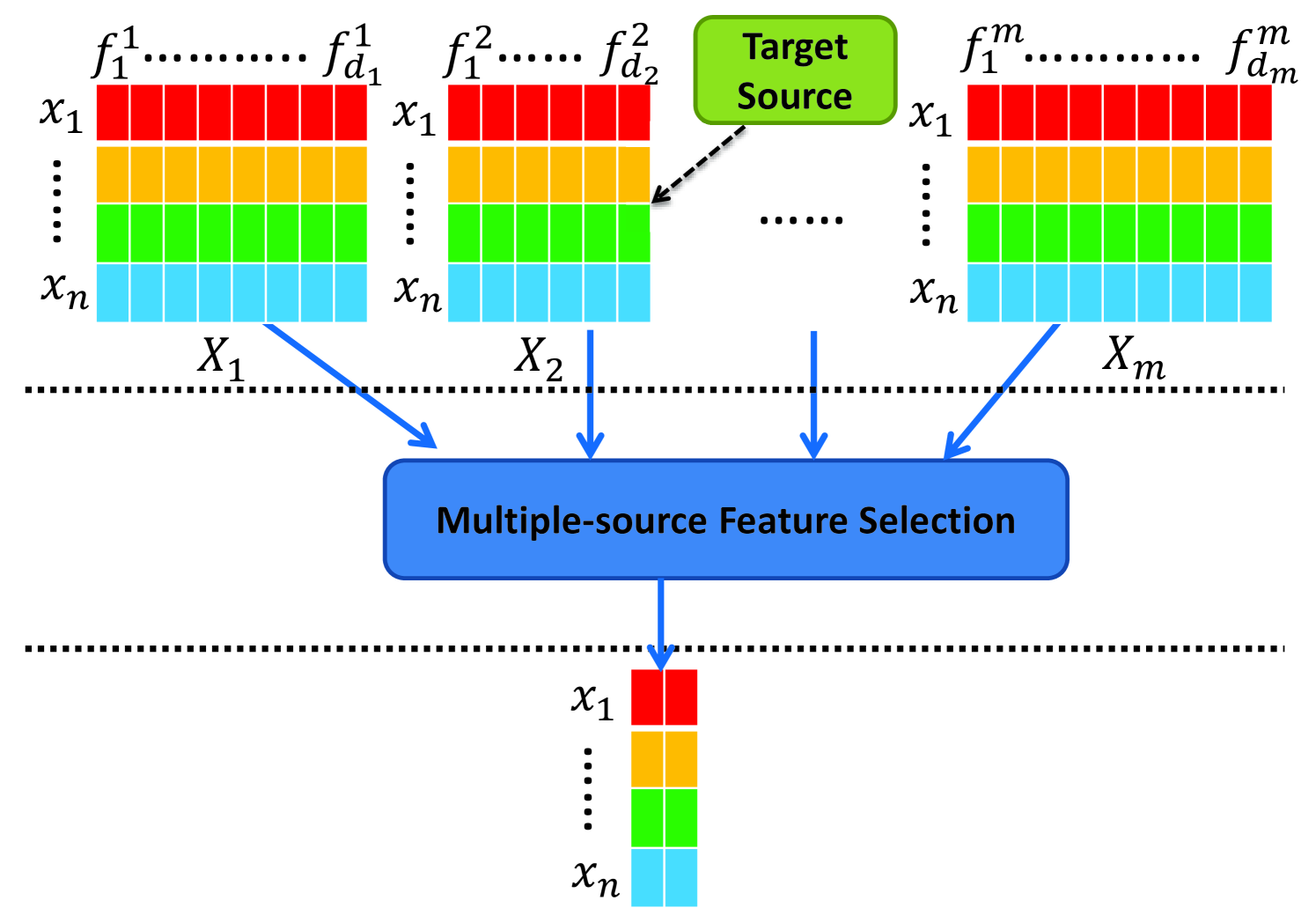

Multi-Source Feature Selection

-

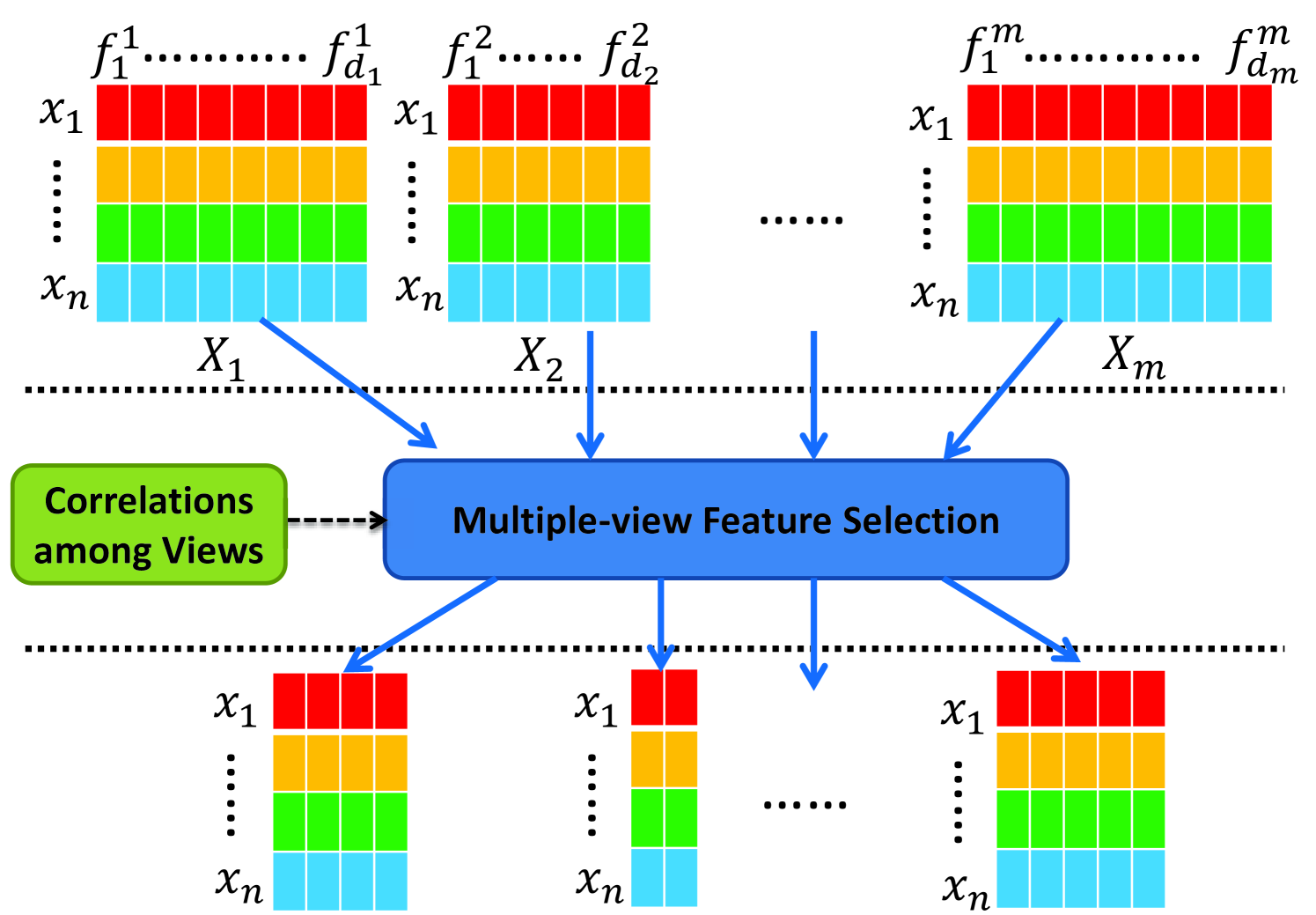

(c)

Multi-View Feature Selection

-

(a)

-

4.

Feature Selection with Streaming Data (Section 5)

-

(a)

Feature Selection Algorithms with Data Streams

-

(b)

Feature Selection Algorithms with Feature Streams

-

(a)

-

5.

Performance Evaluation (Section 6)

-

6.

Open Problems and Challenges (Section 7)

-

7.

Summary of the Survey (Section 8)

1.5 Notations

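We summarize some symbols used throughout this survey in Table 1. We use bold uppercase characters for matrices (e.g., $\mathbf{A}$), bold lowercase characters for vectors (e.g., $\mathbf{a}$), and calligraphic fonts for sets (e.g., $\mathcal{F}$). We follow the matrix settings in Matlab to represent the $i$-th row of matrix $\mathbf{A}$ as $\mathbf{A}(i,:)$, the $j$-th column of $\mathbf{A}$ as $\mathbf{A}(:,j)$, the $(i,j)$-th entry of $\mathbf{A}$ as $\mathbf{A}(i,j)$, the transpose of $\mathbf{A}$ as $\mathbf{A}'$, and the trace of $\mathbf{A}$ as $tr(\mathbf{A})$. For any matrix $\mathbf{A} \in \mathbb{R}^{n \times d}$, its Frobenius norm is defined as $\|\mathbf{A}\|_F = \sqrt{\sum_{i=1}^{n}\sum_{j=1}^{d}\mathbf{A}(i,j)^2}$, and its $\ell_{2,1}$-norm is $\|\mathbf{A}\|_{2,1} = \sum_{i=1}^{n}\sqrt{\sum_{j=1}^{d}\mathbf{A}(i,j)^2}$. For any vector $\mathbf{a} = [a_1, a_2, ..., a_n]'$, its $\ell_2$-norm is defined as $\|\mathbf{a}\|_2 = \sqrt{\sum_{i=1}^{n} a_i^2}$, and its $\ell_1$-norm is $\|\mathbf{a}\|_1 = \sum_{i=1}^{n}|a_i|$. $\mathbf{I}$ is an identity matrix and $\mathbf{1}$ is a vector whose elements are all 1's.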

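| Notations | Definitions or Descriptions |
|---|---|
| $n$ | number of instances in the data |
| $d$ | number of features in the data |
| $k$ | number of selected features |
| $c$ | number of classes (if exist) |
| $\mathcal{F}$ | original feature set which contains $d$ features |
| $\mathcal{S}$ | selected feature set which contains $k$ selected features |
| $\{i_1, i_2, ..., i_k\}$ | index of $k$ selected features in $\mathcal{S}$ |
| $f_1, f_2, ..., f_d$ | $d$ original features |
| $f_{i_1}, f_{i_2}, ..., f_{i_k}$ | $k$ selected features |
| $x_1, x_2, ..., x_n$ | $n$ data instances |
| $\mathbf{f}_1, \mathbf{f}_2, ..., \mathbf{f}_d$ | $d$ feature vectors corresponding to $f_1, f_2, ..., f_d$ |
| $\mathbf{f}_{i_1}, \mathbf{f}_{i_2}, ..., \mathbf{f}_{i_k}$ | $k$ feature vectors corresponding to $f_{i_1}, f_{i_2}, ..., f_{i_k}$ |
| $\mathbf{x}_1, \mathbf{x}_2, ..., \mathbf{x}_n$ | $n$ data vectors corresponding to $x_1, x_2, ..., x_n$ |
| $y_1, y_2, ..., y_n$ | class labels of all $n$ instances (if exist) |
| $\mathbf{X} \in \mathbb{R}^{n \times d}$ | data matrix with $n$ instances and $d$ features |
| $\mathbf{X}_{\mathcal{F}} \in \mathbb{R}^{n \times k}$ | data matrix on the selected $k$ features |
| $\mathbf{y} \in \mathbb{R}^{n}$ | class label vector for all $n$ instances (if exist) |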
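As a small numerical illustration of the two matrix norms, assuming the usual definitions (Frobenius norm: square root of the sum of squared entries; $\ell_{2,1}$-norm: sum of the $\ell_2$-norms of the rows), the following NumPy sketch evaluates both on a hand-picked matrix. The matrix and variable names are purely illustrative.

```python
import numpy as np

# Rows have l2-norms 5, 0 and 13, so the l2,1-norm is their sum.
A = np.array([[3.0, 4.0],
              [0.0, 0.0],
              [5.0, 12.0]])

frobenius = np.sqrt((A ** 2).sum())          # all entries pooled together
l21 = np.sqrt((A ** 2).sum(axis=1)).sum()    # row-wise l2-norms, then summed

print(frobenius, l21)
```

A row that is entirely zero contributes nothing to the $\ell_{2,1}$-norm, which is why penalizing this norm on a coefficient matrix tends to zero out whole rows.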

2 Feature Selection on Conventional Data

Over the past two decades, hundreds of feature selection algorithms have been proposed. In this section, we broadly group traditional feature selection algorithms for conventional data as similarity based, information theoretical based, sparse learning based and statistical based methods, and other methods according to the used techniques.

2.1 Similarity based Methods

Different feature selection algorithms exploit various types of criteria to define the relevance of features. Among them, there is a family of methods assessing feature importance by their ability to preserve data similarity. We refer to them as similarity based methods. For supervised feature selection, data similarity can be derived from label information; while for unsupervised feature selection, most methods take advantage of different distance metrics to obtain data similarity.

Given a dataset $\mathbf{X} \in \mathbb{R}^{n \times d}$ with $n$ instances and $d$ features, pairwise similarity among instances can be encoded in an affinity matrix $\mathbf{S} \in \mathbb{R}^{n \times n}$. Suppose that we want to select the $k$ most relevant features $\mathcal{S}$; one way is to maximize their utility: $\max_{\mathcal{S}} U(\mathcal{S})$, where $U(\mathcal{S})$ denotes the utility of the feature subset $\mathcal{S}$. As algorithms in this family often evaluate features individually, the utility maximization over feature subset $\mathcal{S}$ can be further decomposed into the following form:

$$\max_{\mathcal{S}} \sum_{f \in \mathcal{S}} U(f) = \max_{\mathcal{S}} \sum_{f \in \mathcal{S}} \hat{\mathbf{f}}' \hat{\mathbf{S}} \hat{\mathbf{f}}, \tag{1}$$

where $U(f)$ is a utility function for feature $f$. $\hat{\mathbf{f}}$ denotes the transformation (e.g., scaling, normalization, etc.) result of the original feature vector $\mathbf{f}$. $\hat{\mathbf{S}}$ is a new affinity matrix obtained from affinity matrix $\mathbf{S}$. The maximization problem in Eq. (1) shows that we would select a subset of features from $\mathcal{F}$ such that they can well preserve the data manifold structure encoded in $\hat{\mathbf{S}}$. This problem is usually solved by greedily selecting the top $k$ features that maximize their individual utility. Methods in this category vary in the way the affinity matrix $\hat{\mathbf{S}}$ is designed. We subsequently discuss some representative algorithms in this group that can be reformulated under the unified utility maximization framework.
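The greedy solution of Eq. (1) can be sketched in a few lines: compute the quadratic form $\hat{\mathbf{f}}'\hat{\mathbf{S}}\hat{\mathbf{f}}$ for every transformed feature and keep the $k$ largest. The function name `top_k_by_utility`, the label-derived block affinity and the six-instance toy data are assumptions for illustration, with unit-norm scaling standing in for the transformation.

```python
import numpy as np

def top_k_by_utility(F_hat, S_hat, k):
    """F_hat: n x d matrix whose columns are the transformed feature vectors."""
    # utilities[j] = F_hat[:, j]' @ S_hat @ F_hat[:, j], computed for all j at once.
    utilities = np.einsum("ij,ik,kj->j", F_hat, S_hat, F_hat)
    return np.argsort(-utilities)[:k], utilities

# Toy affinity: instances are similar (1) if they share a group, else 0.
labels = np.array([0, 0, 0, 1, 1, 1])
S_hat = (labels[:, None] == labels[None, :]).astype(float)

# Feature 0 follows the group structure, feature 1 alternates within groups.
F = np.array([[ 1.0,  1.0],
              [ 1.0, -1.0],
              [ 1.0,  1.0],
              [-1.0, -1.0],
              [-1.0,  1.0],
              [-1.0, -1.0]])
F_hat = F / np.linalg.norm(F, axis=0)       # scale every feature to unit length

top, utilities = top_k_by_utility(F_hat, S_hat, k=1)
print(top, utilities)
```

The feature that is constant within each group gets utility 3, the alternating one gets 1/3, so the feature aligned with the similarity structure is selected.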

2.1.1 Laplacian Score

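Laplacian Score [He et al. (2005)] is an unsupervised feature selection algorithm which selects features that can best preserve the data manifold structure. It consists of three phases. First, it constructs the affinity matrix such that $\mathbf{S}(i,j) = e^{-\frac{\|\mathbf{x}_i - \mathbf{x}_j\|_2^2}{t}}$ if $\mathbf{x}_i$ is among the $p$-nearest neighbors of $\mathbf{x}_j$; otherwise $\mathbf{S}(i,j) = 0$. Then, the diagonal matrix $\mathbf{D}$ is defined as $\mathbf{D}(i,i) = \sum_{j=1}^{n} \mathbf{S}(i,j)$ and the Laplacian matrix $\mathbf{L}$ is $\mathbf{L} = \mathbf{D} - \mathbf{S}$. Lastly, the Laplacian Score of each feature $f_i$ is computed as:

$$laplacian\_score(f_i) = \frac{\tilde{\mathbf{f}}_i' \mathbf{L} \tilde{\mathbf{f}}_i}{\tilde{\mathbf{f}}_i' \mathbf{D} \tilde{\mathbf{f}}_i}, \quad \text{where } \tilde{\mathbf{f}}_i = \mathbf{f}_i - \frac{\mathbf{f}_i' \mathbf{D} \mathbf{1}}{\mathbf{1}' \mathbf{D} \mathbf{1}} \mathbf{1}. \tag{2}$$

As Laplacian Score evaluates each feature individually, the task of selecting the $k$ features can be solved by greedily picking the top $k$ features with the smallest Laplacian Scores. The Laplacian Score of each feature can be reformulated as:

$$laplacian\_score(f_i) = 1 - \frac{\tilde{\mathbf{f}}_i' \mathbf{S} \tilde{\mathbf{f}}_i}{\tilde{\mathbf{f}}_i' \mathbf{D} \tilde{\mathbf{f}}_i} = 1 - \left(\frac{\tilde{\mathbf{f}}_i}{\|\mathbf{D}^{\frac{1}{2}} \tilde{\mathbf{f}}_i\|_2}\right)' \mathbf{S} \left(\frac{\tilde{\mathbf{f}}_i}{\|\mathbf{D}^{\frac{1}{2}} \tilde{\mathbf{f}}_i\|_2}\right), \tag{3}$$

where $\tilde{\mathbf{f}}_i' \mathbf{D} \tilde{\mathbf{f}}_i$ is the standard data variance of feature $f_i$, and the term $\tilde{\mathbf{f}}_i / \|\mathbf{D}^{\frac{1}{2}} \tilde{\mathbf{f}}_i\|_2$ is interpreted as a normalized feature vector of $f_i$. Since minimizing the score in Eq. (3) amounts to maximizing its quadratic term in $\mathbf{S}$, Laplacian Score is a special case of utility maximization in Eq. (1).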
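The three phases can be sketched with NumPy as follows. This is a rough illustration rather than the reference implementation: the function name `laplacian_score`, the neighborhood size `p`, the bandwidth `t`, the symmetrization of the neighbor graph and the synthetic two-cluster data are all assumptions, and the dense pairwise-distance computation only suits small datasets without duplicate points.

```python
import numpy as np

def laplacian_score(X, p=5, t=1.0):
    """Laplacian Score of every column of X (smaller means better)."""
    n = X.shape[0]
    sq = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)  # pairwise squared distances
    # Phase 1: heat-kernel affinity restricted to the p nearest neighbours.
    nn = np.argsort(sq, axis=1)[:, 1:p + 1]   # column 0 is the point itself
    mask = np.zeros((n, n), dtype=bool)
    mask[np.repeat(np.arange(n), p), nn.ravel()] = True
    mask |= mask.T                            # assumption: symmetrise the graph
    S = np.where(mask, np.exp(-sq / t), 0.0)
    # Phase 2: degree vector (the diagonal of D) and Laplacian L = D - S.
    D = S.sum(axis=1)
    L = np.diag(D) - S
    # Phase 3: remove the D-weighted mean, then form f'Lf / f'Df per feature.
    F = X - (D @ X) / D.sum()
    num = np.einsum("ij,ik,kj->j", F, L, F)
    den = (F ** 2 * D[:, None]).sum(axis=0)
    return num / den

# Feature 0 carries the two-cluster structure, feature 1 is pure noise.
rng = np.random.default_rng(0)
cluster = np.repeat([0, 1], 30)
X = np.column_stack([5.0 * cluster + 0.5 * rng.normal(size=60),
                     rng.normal(size=60)])
scores = laplacian_score(X, p=5, t=1.0)
print(scores)
```

Because the best features have the smallest scores, selecting $k$ features amounts to `np.argsort(scores)[:k]`.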

2.1.2 SPEC

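SPEC [Zhao and Liu (2007)] is an extension of Laplacian Score that works for both supervised and unsupervised scenarios. For example, in the unsupervised scenario, the data similarity is measured by the RBF kernel; while in the supervised scenario, data similarity can be defined by: $\mathbf{S}(i,j) = \begin{cases} \frac{1}{n_l} & \text{if } y_i = y_j = l \\ 0 & \text{otherwise} \end{cases}$, where $n_l$ is the number of data samples in the $l$-th class. After obtaining the affinity matrix $\mathbf{S}$ and the diagonal matrix $\mathbf{D}$, the normalized Laplacian matrix is $\mathbf{L}_{norm} = \mathbf{D}^{-\frac{1}{2}} (\mathbf{D} - \mathbf{S}) \mathbf{D}^{-\frac{1}{2}}$. The basic idea of SPEC is similar to Laplacian Score: a feature that is consistent with the data manifold structure should assign similar values to instances that are near each other. In SPEC, the feature relevance is measured by three different criteria:

$$\begin{aligned}
SPEC\_score1(f_i) &= \hat{\mathbf{f}}_i' \gamma(\mathbf{L}_{norm}) \hat{\mathbf{f}}_i = \sum_{j=1}^{n} \alpha_j^2 \gamma(\lambda_j) \\
SPEC\_score2(f_i) &= \frac{\hat{\mathbf{f}}_i' \gamma(\mathbf{L}_{norm}) \hat{\mathbf{f}}_i}{1 - (\hat{\mathbf{f}}_i' \xi_1)^2} = \frac{\sum_{j=2}^{n} \alpha_j^2 \gamma(\lambda_j)}{\sum_{j=2}^{n} \alpha_j^2} \\
SPEC\_score3(f_i) &= \sum_{j=1}^{m} (\gamma(2) - \gamma(\lambda_j)) \alpha_j^2.
\end{aligned} \tag{4}$$

In the above equations, $\hat{\mathbf{f}}_i = \mathbf{D}^{\frac{1}{2}} \mathbf{f}_i / \|\mathbf{D}^{\frac{1}{2}} \mathbf{f}_i\|_2$; $(\lambda_j, \xi_j)$ is the $j$-th eigenpair of the normalized Laplacian matrix $\mathbf{L}_{norm}$; $\alpha_j = \cos \theta_j$, where $\theta_j$ is the angle between $\xi_j$ and $\hat{\mathbf{f}}_i$; $\gamma(\cdot)$ is an increasing function to penalize high frequency components of the eigensystem to reduce noise. If the data is noise free, the function $\gamma(\cdot)$ can be removed and $\gamma(x) = x$. When the second evaluation criterion $SPEC\_score2(f_i)$ is used, SPEC is equivalent to the Laplacian Score. For $SPEC\_score3(f_i)$, it uses the top $m$ eigenpairs to evaluate the importance of feature $f_i$.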

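All three criteria can be reduced to the unified similarity based feature selection framework in Eq. (1) by setting $\hat{\mathbf{f}}_i$ as $\mathbf{f}_i / \|\mathbf{D}^{\frac{1}{2}} \mathbf{f}_i\|_2$, $(\mathbf{f}_i - \mu \mathbf{1}) / \|\mathbf{D}^{\frac{1}{2}} \mathbf{f}_i\|_2$, $\mathbf{f}_i / \|\mathbf{D}^{\frac{1}{2}} \mathbf{f}_i\|_2$; and $\hat{\mathbf{S}}$ as $\mathbf{D}^{\frac{1}{2}} \mathbf{U} (\mathbf{I} - \gamma(\mathbf{\Sigma})) \mathbf{U}' \mathbf{D}^{\frac{1}{2}}$, $\mathbf{D}^{\frac{1}{2}} \mathbf{U} (\mathbf{I} - \gamma(\mathbf{\Sigma})) \mathbf{U}' \mathbf{D}^{\frac{1}{2}}$, $\mathbf{D}^{\frac{1}{2}} \mathbf{U}_m (\gamma(2\mathbf{I}) - \gamma(\mathbf{\Sigma}_m)) \mathbf{U}_m' \mathbf{D}^{\frac{1}{2}}$ in SPEC_score1, SPEC_score2, SPEC_score3, respectively. $\mathbf{U}$ and $\mathbf{\Sigma}$ are the singular vectors and singular values of the normalized Laplacian matrix $\mathbf{L}_{norm}$.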

2.1.3 Fisher Score

Fisher Score [Duda et al. (2012)] is a supervised feature selection algorithm. It selects features such that the feature values of samples within the same class are similar while the feature values of samples from different classes are dissimilar. The Fisher Score of each feature $f_i$ is evaluated as follows:

$$fisher\_score(f_i) = \frac{\sum_{j=1}^{c} n_j (\mu_{ij} - \mu_i)^2}{\sum_{j=1}^{c} n_j \sigma_{ij}^2}, \tag{5}$$

where $n_j$, $\mu_i$, $\mu_{ij}$ and $\sigma_{ij}^2$ indicate the number of samples in class $j$, the mean value of feature $f_i$, the mean value of feature $f_i$ for samples in class $j$, and the variance of feature $f_i$ for samples in class $j$, respectively. Similar to Laplacian Score, the top $k$ features can be obtained by greedily selecting the features with the largest Fisher Scores.

According to [He et al. (2005)], Fisher Score can be considered as a special case of Laplacian Score as long as the affinity matrix is $\mathbf{S}(i,j) = \begin{cases} \frac{1}{n_l} & \text{if } y_i = y_j = l \\ 0 & \text{otherwise} \end{cases}$. In this way, the relationship between Fisher Score and Laplacian Score is $fisher\_score(f_i) = \frac{1}{laplacian\_score(f_i)} - 1$. Hence, the computation of Fisher Score can also be reduced to the unified utility maximization framework.
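The following sketch computes the Fisher Score directly from class statistics and, as a sanity check, compares it with a Laplacian Score built on the class-based affinity $\mathbf{S}(i,j) = 1/n_l$ for same-class pairs. With that affinity, $\tilde{\mathbf{f}}'\mathbf{L}\tilde{\mathbf{f}}$ is the within-class scatter and $\tilde{\mathbf{f}}'\mathbf{D}\tilde{\mathbf{f}}$ the total scatter, so the two scores satisfy $fisher\_score = 1/laplacian\_score - 1$. Function names and the synthetic data are hypothetical, and class variances are taken as population variances (`ddof=0`).

```python
import numpy as np

def fisher_score(X, y):
    """Between-class scatter over within-class scatter, per feature."""
    mu = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        between += len(Xc) * (Xc.mean(axis=0) - mu) ** 2
        within += len(Xc) * Xc.var(axis=0)      # population variance (ddof=0)
    return between / within

def supervised_laplacian_score(X, y):
    """Laplacian Score with affinity S(i,j) = 1/n_l for same-class pairs."""
    same = y[:, None] == y[None, :]
    counts = np.array([(y == label).sum() for label in y])
    S = same / counts[:, None]
    D = S.sum(axis=1)                           # every row sums to 1
    L = np.diag(D) - S
    F = X - (D @ X) / D.sum()                   # remove the D-weighted mean
    num = np.einsum("ij,ik,kj->j", F, L, F)
    return num / (F ** 2 * D[:, None]).sum(axis=0)

# Three classes; only feature 0 shifts with the class label.
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 30)
X = rng.normal(size=(90, 3))
X[:, 0] += 2.0 * y

fs = fisher_score(X, y)
ls = supervised_laplacian_score(X, y)
print(fs, 1.0 / ls - 1.0)
```

Features are ranked by the largest Fisher Score, which matches ranking by the smallest class-based Laplacian Score.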

2.1.4 Trace Ratio Criterion

The trace ratio criterion [Nie et al. (2008)] directly selects the global optimal feature subset based on the corresponding score, which is computed by a trace ratio norm. It builds two affinity matrices $\mathbf{S}_w$ and $\mathbf{S}_b$ to characterize within-class and between-class data similarity. Let $\mathbf{W} = [\mathbf{w}_{i_1}, \mathbf{w}_{i_2}, ..., \mathbf{w}_{i_k}] \in \mathbb{R}^{d \times k}$ be the selection indicator matrix such that only the $i_j$-th entry in $\mathbf{w}_{i_j}$ is 1 and all the other entries are 0. With these, the trace ratio score of the selected $k$ features in $\mathcal{S}$ is:

$$trace\_ratio(\mathcal{S}) = \frac{tr(\mathbf{W}' \mathbf{X}' \mathbf{L}_b \mathbf{X} \mathbf{W})}{tr(\mathbf{W}' \mathbf{X}' \mathbf{L}_w \mathbf{X} \mathbf{W})}, \tag{6}$$

where $\mathbf{L}_b$ and $\mathbf{L}_w$ are Laplacian matrices of $\mathbf{S}_b$ and $\mathbf{S}_w$ respectively. The basic idea is to maximize the data similarity for instances from the same class while minimizing the data similarity for instances from different classes. However, the trace ratio problem is difficult to solve as it does not have a closed-form solution. Hence, the trace ratio problem is often converted into a more tractable format called the ratio trace problem by maximizing $tr[(\mathbf{W}' \mathbf{X}' \mathbf{L}_w \mathbf{X} \mathbf{W})^{-1} (\mathbf{W}' \mathbf{X}' \mathbf{L}_b \mathbf{X} \mathbf{W})]$. As an alternative, [Wang et al. (2007)] propose an iterative algorithm called ITR to solve the trace ratio problem directly, which was later applied in trace ratio feature selection [Nie et al. (2008)].

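Different $\mathbf{S}_w$ and $\mathbf{S}_b$ lead to different feature selection algorithms such as batch-mode Laplacian Score and batch-mode Fisher Score. For example, in batch-mode Fisher Score, the within-class data similarity and the between-class data similarity are $\mathbf{S}_w(i,j) = \begin{cases} 1/n_l & \text{if } y_i = y_j = l \\ 0 & \text{otherwise} \end{cases}$ and $\mathbf{S}_b(i,j) = \begin{cases} 1/n - 1/n_l & \text{if } y_i = y_j = l \\ 1/n & \text{otherwise} \end{cases}$ respectively. Therefore, maximizing the trace ratio criterion is equivalent to maximizing $\frac{\sum_{s=1}^{k} \mathbf{f}_{i_s}' \mathbf{S}_w \mathbf{f}_{i_s}}{\sum_{s=1}^{k} \mathbf{f}_{i_s}' \mathbf{f}_{i_s}}$. Since the denominator $\sum_{s=1}^{k} \mathbf{f}_{i_s}' \mathbf{f}_{i_s}$ is constant, it can be further reduced to the unified similarity based feature selection framework by setting $\hat{\mathbf{f}} = \mathbf{f} / \|\mathbf{f}\|_2$ and $\hat{\mathbf{S}} = \mathbf{S}_w$. On the other hand, in batch-mode Laplacian Score, the within-class data similarity and the between-class data similarity are $\mathbf{S}_w(i,j) = \begin{cases} e^{-\frac{\|\mathbf{x}_i - \mathbf{x}_j\|_2^2}{t}} & \text{if } \mathbf{x}_i \in \mathcal{N}_p(\mathbf{x}_j) \text{ or } \mathbf{x}_j \in \mathcal{N}_p(\mathbf{x}_i) \\ 0 & \text{otherwise} \end{cases}$ and $\mathbf{S}_b = (\mathbf{1}' \mathbf{D}_w \mathbf{1})^{-1} \mathbf{D}_w \mathbf{1} \mathbf{1}' \mathbf{D}_w$ respectively, where $\mathcal{N}_p(\mathbf{x})$ denotes the $p$ nearest neighbors of $\mathbf{x}$ and $\mathbf{D}_w$ is the diagonal degree matrix of $\mathbf{S}_w$. In this case, the trace ratio criterion score is $\frac{tr(\mathbf{W}' \mathbf{X}' \mathbf{L}_b \mathbf{X} \mathbf{W})}{tr(\mathbf{W}' \mathbf{X}' \mathbf{L}_w \mathbf{X} \mathbf{W})} = \frac{\sum_{s=1}^{k} \mathbf{f}_{i_s}' \mathbf{D}_w \mathbf{f}_{i_s}}{\sum_{s=1}^{k} \mathbf{f}_{i_s}' (\mathbf{D}_w - \mathbf{S}_w) \mathbf{f}_{i_s}}$. Therefore, maximizing the trace ratio criterion is also equivalent to solving the unified maximization problem in Eq. (1) where $\hat{\mathbf{f}} = \mathbf{f} / \|\mathbf{D}_w^{\frac{1}{2}} \mathbf{f}\|_2$ and $\hat{\mathbf{S}} = \mathbf{S}_w$.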

2.1.5 ReliefF

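ReliefF [Robnik-Šikonja and Kononenko (2003)] selects features to separate instances from different classes. Assume that $l$ data instances are randomly selected among all $n$ instances, then the feature score of $f_i$ in ReliefF is defined as follows:

$$ReliefF\_score(f_i) = \frac{1}{c} \sum_{j=1}^{l} \Big( -\frac{1}{m_j} \sum_{\mathbf{x}_r \in NH(j)} d(\mathbf{X}(j,i) - \mathbf{X}(r,i)) + \sum_{y \neq y_j} \frac{1}{h_{jy}} \frac{p(y)}{1 - p(y)} \sum_{\mathbf{x}_r \in NM(j,y)} d(\mathbf{X}(j,i) - \mathbf{X}(r,i)) \Big), \tag{7}$$

where $NH(j)$ and $NM(j,y)$ are the nearest instances of $\mathbf{x}_j$ in the same class and in class $y$, respectively. Their sizes are $m_j$ and $h_{jy}$, respectively. $p(y)$ is the ratio of instances in class $y$, and $d(\cdot)$ is a distance measure on feature values.

ReliefF is equivalent to selecting features that preserve a special form of data similarity matrix. Assume that the dataset has the same number of instances in each of the $c$ classes and there are $q$ instances in both $NH(j)$ and $NM(j,y)$. Then according to [Zhao and Liu (2007)], the ReliefF feature selection can be reduced to the utility maximization framework in Eq. (1).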

Discussion: Similarity based feature selection algorithms have demonstrated excellent performance in both supervised and unsupervised learning problems. This category of methods is straightforward and simple, as the computation focuses on building an affinity matrix, after which the scores of features can be obtained. Also, these methods are independent of any learning algorithms and the selected features are suitable for many subsequent learning tasks. However, one drawback of these methods is that most of them cannot handle feature redundancy. In other words, they may repeatedly find highly correlated features during the selection phase.

2.2 Information Theoretical based Methods

A large family of existing feature selection algorithms is information theoretical based methods. Algorithms in this family exploit different heuristic filter criteria to measure the importance of features. As indicated in [Duda et al. (2012)], many hand-designed information theoretic criteria are proposed to maximize feature relevance and minimize feature redundancy. Since the relevance of a feature is usually measured by its correlation with class labels, most algorithms in this family are performed in a supervised way. In addition, most information theoretic concepts can only be applied to discrete variables. Therefore, feature selection algorithms in this family can only work with discrete data. For continuous feature values, some data discretization techniques are required beforehand. Two decades of research on information theoretic criteria can be unified in a conditional likelihood maximization framework [Brown et al. (2012)]. In this subsection, we introduce some representative algorithms in this family. We first give a brief introduction about basic information theoretic concepts.

The concept of entropy measures the uncertainty of a discrete random variable. The entropy of a discrete random variable $X$ is defined as follows:

| $H(X) = -\sum_{x_i \in X} P(x_i) \log P(x_i)$ | (8) |

where $x_i$ denotes a specific value of the random variable $X$, and $P(x_i)$ denotes the probability of $x_i$ over all possible values of $X$.

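Second, the conditional entropy of $X$ given another discrete random variable $Y$ is:

| $H(X \mid Y) = -\sum_{y_j \in Y} P(y_j) \sum_{x_i \in X} P(x_i \mid y_j) \log P(x_i \mid y_j)$ | (9) |

where $P(y_j)$ is the prior probability of $y_j$, while $P(x_i \mid y_j)$ is the conditional probability of $x_i$ given $y_j$. It shows the uncertainty of $X$ given $Y$.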

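Then, information gain or mutual information between $X$ and $Y$ is used to measure the amount of information shared by $X$ and $Y$:

| $I(X; Y) = H(X) - H(X \mid Y) = \sum_{x_i \in X} \sum_{y_j \in Y} P(x_i, y_j) \log \frac{P(x_i, y_j)}{P(x_i) P(y_j)}$ | (10) |

where $P(x_i, y_j)$ is the joint probability of $x_i$ and $y_j$. Information gain is symmetric such that $I(X; Y) = I(Y; X)$, and is zero if the discrete variables $X$ and $Y$ are independent.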

Finally, the conditional information gain (or conditional mutual information) of discrete variables $X$ and $Y$ given a third discrete variable $Z$ is given as follows:

| $I(X; Y \mid Z) = H(X \mid Z) - H(X \mid Y, Z)$ | (11) |

It shows the amount of mutual information shared by $X$ and $Y$ given $Z$.
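As a minimal sketch of how the four quantities above can be estimated from data, the following Python code uses empirical frequencies of discrete samples. The function names and the toy variables `x`, `y`, `z` are illustrative assumptions, and logarithms are taken base 2 (bits), which is a convention chosen here.

```python
from collections import Counter
from math import log2

def entropy(xs):
    """Empirical Shannon entropy H(X) in bits."""
    n = len(xs)
    return -sum((c / n) * log2(c / n) for c in Counter(xs).values())

def conditional_entropy(xs, ys):
    """Empirical H(X|Y): entropy of X inside each group of equal Y, weighted by P(y)."""
    n = len(xs)
    groups = {}
    for x, y in zip(xs, ys):
        groups.setdefault(y, []).append(x)
    return sum(len(g) / n * entropy(g) for g in groups.values())

def mutual_information(xs, ys):
    """I(X;Y) = H(X) - H(X|Y)."""
    return entropy(xs) - conditional_entropy(xs, ys)

def conditional_mutual_information(xs, ys, zs):
    """I(X;Y|Z) = H(X|Z) - H(X|Y,Z); the pair (y, z) is treated as one variable."""
    yz = list(zip(ys, zs))
    return conditional_entropy(xs, zs) - conditional_entropy(xs, yz)

# Toy data: x and y are independent fair bits, z is their XOR.
x = [0, 0, 1, 1]
y = [0, 1, 0, 1]
z = [a ^ b for a, b in zip(x, y)]
```

On this toy data `mutual_information(x, y)` is zero while `conditional_mutual_information(x, y, z)` is one bit, which illustrates that two variables can share no information marginally and still be fully informative about each other once a third variable is known.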

Searching for the globally best set of features is NP-hard, thus most algorithms exploit heuristic sequential search approaches to add/remove features one by one. In this survey, we explain the feature selection problem by forward sequential search such that features are added into the selected feature set one by one. We denote $S$ as the current selected feature set that is initially empty. $Y$ represents the class labels. $X_j \in S$ is a specific feature in the current $S$. $J(\cdot)$ is a feature selection criterion (score) where, generally, the higher the value of $J(X_k)$, the more important the feature $X_k$ is. In the unified conditional likelihood maximization feature selection framework, the selection criterion (score) for a new unselected feature $X_k$ is given as follows:

| $J_{CMI}(X_k) = I(X_k; Y) + \sum_{X_j \in S} g[I(X_j; X_k), I(X_j; X_k \mid Y)]$ | (12) |

where $g(\cdot)$ is a function w.r.t. two variables $I(X_j; X_k)$ and $I(X_j; X_k \mid Y)$. If $g(\cdot)$ is a linear function w.r.t. these two variables, it is referred to as a criterion by linear combinations of Shannon information terms such that:

| $J_{CMI}(X_k) = I(X_k; Y) - \beta \sum_{X_j \in S} I(X_j; X_k) + \lambda \sum_{X_j \in S} I(X_j; X_k \mid Y)$ | (13) |

where $\beta$ and $\lambda$ are two nonnegative parameters between zero and one. On the other hand, if $g(\cdot)$ is a non-linear function w.r.t. these two variables, it is referred to as a criterion by non-linear combination of Shannon information terms.
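The forward sequential search with the linear criterion can be sketched as below. This is an illustrative implementation under stated assumptions, not the code of any cited work: the names `forward_select`, `beta` and `lam` (for the two nonnegative weights on the redundancy and conditional redundancy sums), the dictionary layout of `features`, and the toy data are all hypothetical. Information terms are estimated from empirical frequencies.

```python
from collections import Counter
from math import log2

def H(*cols):
    """Joint empirical entropy (bits) of one or more discrete columns."""
    rows = list(zip(*cols))
    n = len(rows)
    return -sum(c / n * log2(c / n) for c in Counter(rows).values())

def I(a, b):
    """Mutual information I(A;B) = H(A) + H(B) - H(A,B)."""
    return H(a) + H(b) - H(a, b)

def I_cond(a, b, c):
    """Conditional mutual information I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C)."""
    return H(a, c) + H(b, c) - H(a, b, c) - H(c)

def forward_select(features, y, k, beta=0.0, lam=0.0):
    """Greedily add the feature maximizing
    I(Xk;Y) - beta * sum_j I(Xj;Xk) + lam * sum_j I(Xj;Xk|Y), with j over selected features."""
    selected = []
    while len(selected) < k:
        def score(name):
            xk = features[name]
            redundancy = sum(I(features[j], xk) for j in selected)
            cond_redundancy = sum(I_cond(features[j], xk, y) for j in selected)
            return I(xk, y) - beta * redundancy + lam * cond_redundancy
        candidates = [f for f in features if f not in selected]
        selected.append(max(candidates, key=score))
    return selected

# Toy data: "a_copy" duplicates "a"; "b" is weakly relevant but less redundant.
y = [0, 0, 0, 0, 1, 1, 1, 1]
features = {
    "a":      [0, 0, 0, 1, 1, 1, 1, 1],
    "a_copy": [0, 0, 0, 1, 1, 1, 1, 1],
    "b":      [0, 1, 0, 1, 0, 1, 1, 1],
}
```

With `beta=0` and `lam=0` the redundancy terms vanish and the duplicate feature is picked second; with `beta=1.0` the duplicate is penalized by its full entropy and `"b"` is picked instead. The weights are held fixed here; criteria that rescale them by the size of the selected set would recompute them inside the loop.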

2.2.1 Mutual Information Maximization (Information Gain)

Mutual Information Maximization (MIM) (a.k.a. Information Gain) [Lewis (1992)] measures the importance of a feature by its correlation with class labels. It assumes that when a feature has a strong correlation with the class label, it can help achieve good classification performance. The Mutual Information score for feature $X_k$ is:

| $J_{MIM}(X_k) = I(X_k; Y)$ | (14) |

It can be observed that in MIM, the scores of features are assessed individually. Therefore, only the feature correlation is considered while the feature redundancy is completely ignored. After obtaining the MIM scores for all features, we choose the feature with the highest score and add it to the selected feature set. The process repeats until the desired number of selected features is obtained.

It can also be observed that MIM is a special case of linear combination of Shannon information terms in Eq. (13) where both $\beta$ and $\lambda$ are equal to zero.

2.2.2 Mutual Information Feature Selection

A limitation of the MIM criterion is that it assumes that features are independent of each other. In reality, good features should not only be strongly correlated with class labels but also should not be highly correlated with each other. In other words, the correlation between features should be minimized. Mutual Information Feature Selection (MIFS) [Battiti (1994)] considers both the feature relevance and feature redundancy in the feature selection phase. The feature score for a new unselected feature $X_k$ can be formulated as follows:

| $J_{MIFS}(X_k) = I(X_k; Y) - \beta \sum_{X_j \in S} I(X_k; X_j)$ | (15) |

In MIFS, the feature relevance is evaluated by $I(X_k; Y)$, while the second term penalizes features that have a high mutual information with the currently selected features such that feature redundancy is minimized.

MIFS can also be reduced to be a special case of the linear combination of Shannon information terms in Eq. (13) where $\beta$ is between zero and one, and $\lambda$ is zero.

2.2.3 Minimum Redundancy Maximum Relevance

[Peng et al. (2005)] proposes a Minimum Redundancy Maximum Relevance (MRMR) criterion to set the value of $\beta$ to be the reciprocal of the number of selected features:

| $J_{MRMR}(X_k) = I(X_k; Y) - \frac{1}{\lvert S \rvert} \sum_{X_j \in S} I(X_k; X_j)$ | (16) |

Hence, with more selected features, the effect of feature redundancy is gradually reduced. The intuition is that with more non-redundant features selected, it becomes more difficult for new features to be redundant to the features that are already in $S$. [Brown et al. (2012)] gives another interpretation: the pairwise independence between features becomes stronger as more features are added to $S$, possibly because of noise information in the data.

MRMR is also strongly linked to the conditional likelihood maximization framework if we iteratively revise the value of $\beta$ to be $\frac{1}{\lvert S \rvert}$, and set the other parameter $\lambda$ to be zero.

2.2.4 Conditional Infomax Feature Extraction

Some studies [Lin and Tang (2006), El Akadi et al. (2008), Guo and Nixon (2009)] show that, in addition to minimizing the feature redundancy, the conditional redundancy between unselected features and already selected features given class labels should also be maximized. In other words, as long as the feature redundancy given class labels is stronger than the intra-feature redundancy, the feature selection will be affected negatively. A typical feature selection method under this argument is Conditional Infomax Feature Extraction (CIFE) [Lin and Tang (2006)], in which the feature score for a new unselected feature $X_k$ is:

| $J_{CIFE}(X_k) = I(X_k; Y) - \sum_{X_j \in S} I(X_j; X_k) + \sum_{X_j \in S} I(X_j; X_k \mid Y)$ | (17) |

Compared with MIFS, it adds a third term $\sum_{X_j \in S} I(X_j; X_k \mid Y)$ to maximize the conditional redundancy. Also, CIFE is a special case of the linear combination of Shannon information terms by setting both $\beta$ and $\lambda$ to be 1.

2.2.5 Joint Mutual Information

MIFS and MRMR reduce feature redundancy in the feature selection process. An alternative criterion, Joint Mutual Information (JMI) [Yang and Moody (1999), Meyer et al. (2008)], is proposed to increase the complementary information that is shared between unselected features and selected features given the class labels. The feature selection criterion is listed as follows:

| $J_{JMI}(X_k) = \sum_{X_j \in S} I(X_k, X_j; Y)$ | (18) |

The basic idea of JMI is that we should include new features that are complementary to the existing features given the class labels.

JMI cannot be directly reduced to the conditional likelihood maximization framework. In [Brown et al. (2012)], the authors demonstrate that with simple manipulations, the JMI criterion can be re-written as:

| $J_{JMI}(X_k) = I(X_k; Y) - \frac{1}{\lvert S \rvert} \sum_{X_j \in S} I(X_j; X_k) + \frac{1}{\lvert S \rvert} \sum_{X_j \in S} I(X_j; X_k \mid Y)$ | (19) |

Therefore, it is also a special case of the linear combination of Shannon information terms by iteratively setting $\beta$ and $\lambda$ to be $\frac{1}{\lvert S \rvert}$.
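The manipulation behind this rewriting can be sketched as follows, assuming $S$ is non-empty. It uses only the chain rule of mutual information and the identity $I(X_k; Y \mid X_j) = I(X_k; Y) - I(X_j; X_k) + I(X_j; X_k \mid Y)$; the rewritten score equals the original JMI score up to a term that does not depend on the candidate $X_k$ and a positive rescaling, so the two forms rank candidates identically.

```latex
\begin{align*}
\sum_{X_j \in S} I(X_k, X_j; Y)
  &= \sum_{X_j \in S} \bigl[ I(X_j; Y) + I(X_k; Y \mid X_j) \bigr] \\
  &= \underbrace{\sum_{X_j \in S} I(X_j; Y)}_{\text{constant in } X_k}
     + \sum_{X_j \in S} \bigl[ I(X_k; Y) - I(X_j; X_k) + I(X_j; X_k \mid Y) \bigr] \\
  &= \text{const} + \lvert S \rvert \, I(X_k; Y)
     - \sum_{X_j \in S} I(X_j; X_k)
     + \sum_{X_j \in S} I(X_j; X_k \mid Y).
\end{align*}
```

Dropping the constant and dividing by $\lvert S \rvert$ gives relevance minus the averaged redundancy plus the averaged conditional redundancy.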

2.2.6 Conditional Mutual Information Maximization

Previously mentioned criteria could be reduced to a linear combination of Shannon information terms. Next, we show some other algorithms that can only be reduced to a non-linear combination of Shannon information terms. Among them, Conditional Mutual Information Maximization (CMIM) [Vidal-Naquet and Ullman (2003), Fleuret (2004)] iteratively selects features which maximize the mutual information with the class labels given the selected features so far. Mathematically, during the selection phase, the feature score for each new unselected feature $X_k$ can be formulated as follows:

| $J_{CMIM}(X_k) = \min_{X_j \in S} [I(X_k; Y \mid X_j)]$ | (20) |

Note that the value of $I(X_k; Y \mid X_j)$ is small if $X_k$ is not strongly correlated with the class label $Y$ or if $X_k$ is redundant when $S$ is known. By selecting the feature that maximizes this minimum value, it can guarantee that the selected feature has a strong predictive ability, and it can reduce the redundancy w.r.t. the selected features.

The CMIM criterion is equivalent to the following form after some derivations:

| $J_{CMIM}(X_k) = I(X_k; Y) - \max_{X_j \in S} [I(X_j; X_k) - I(X_j; X_k \mid Y)]$ | (21) |

Therefore, CMIM is also a special case of the conditional likelihood maximization framework in Eq. (12).
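One way to see the equivalence of the two CMIM forms is the short derivation below. It is a sketch that relies on the symmetry of interaction information, i.e. $I(X_k; Y \mid X_j) - I(X_k; Y) = I(X_j; X_k \mid Y) - I(X_j; X_k)$, and on the fact that $I(X_k; Y)$ does not depend on $X_j$.

```latex
\begin{align*}
\min_{X_j \in S} I(X_k; Y \mid X_j)
  &= \min_{X_j \in S} \bigl[ I(X_k; Y) - I(X_j; X_k) + I(X_j; X_k \mid Y) \bigr] \\
  &= I(X_k; Y) + \min_{X_j \in S} \bigl[ -\bigl( I(X_j; X_k) - I(X_j; X_k \mid Y) \bigr) \bigr] \\
  &= I(X_k; Y) - \max_{X_j \in S} \bigl[ I(X_j; X_k) - I(X_j; X_k \mid Y) \bigr].
\end{align*}
```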

2.2.7 Informative Fragments

In [Vidal-Naquet and Ullman (2003)], the authors propose a feature selection criterion called Informative Fragments (IF). The feature score of each new unselected feature is given as:

| $J_{IF}(X_k) = \min_{X_j \in S} [I(X_j, X_k; Y) - I(X_j; Y)]$ | (22) |

The intuition behind Informative Fragments is that the addition of the new feature $X_k$ should maximize the value of conditional mutual information between $X_k$ and existing features in $S$ over the mutual information between $X_j$ and $Y$. An interesting phenomenon of IF is that with the chain rule that $I(X_k, X_j; Y) = I(X_j; Y) + I(X_k; Y \mid X_j)$, IF has the equivalent form as CMIM. Hence, it can also be reduced to the general framework in Eq. (12).

2.2.8 Interaction Capping

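Interaction Capping (ICAP) [Jakulin (2005)] is a feature selection criterion similar to CMIM in Eq. (21); it restricts the term $I(X_j; X_k) - I(X_j; X_k \mid Y)$ to be nonnegative:

| $J_{ICAP}(X_k) = I(X_k; Y) - \sum_{X_j \in S} \max[0, I(X_j; X_k) - I(X_j; X_k \mid Y)]$ | (23) |

Apparently, it is a special case of non-linear combination of Shannon information terms by setting the function $g(\cdot)$ to be $-\max[0, I(X_j; X_k) - I(X_j; X_k \mid Y)]$.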

2.2.9 Double Input Symmetrical Relevance

Another class of information theoretical based methods such as Double Input Symmetrical Relevance (DISR) [Meyer and Bontempi (2006)] exploits normalization techniques to normalize mutual information [Guyon et al. (2008)]:

| $J_{DISR}(X_k) = \sum_{X_j \in S} \frac{I(X_j, X_k; Y)}{H(X_j, X_k, Y)}$ | (24) |

It is easy to validate that DISR is a non-linear combination of Shannon information terms and can be reduced to the conditional likelihood maximization framework.

2.2.10 Fast Correlation Based Filter

There are other information theoretical based feature selection methods that cannot be simply reduced to the unified conditional likelihood maximization framework. Fast Correlation Based Filter (FCBF) [Yu and Liu (2003)] is an example that exploits feature-class correlation and feature-feature correlation simultaneously. The algorithm works as follows: (1) given a predefined threshold $\delta$, it selects a subset of features $S$ that are highly correlated with the class labels with $SU \geq \delta$, where $SU$ is the symmetric uncertainty. The $SU$ between a set of features $X_S$ and the class label $Y$ is given as follows:

| $SU(X_S, Y) = 2 \frac{I(X_S; Y)}{H(X_S) + H(Y)}$ | (25) |

A specific feature $X_k$ is called predominant iff $SU(X_k, Y) \geq \delta$ and there does not exist a feature $X_j \in S$ ($j \neq k$) such that $SU(X_j, X_k) \geq SU(X_k, Y)$. Feature $X_j$ is considered to be redundant to feature $X_k$ if $SU(X_j, X_k) \geq SU(X_k, Y)$; (2) the set of redundant features is denoted as $S_{P_k}$, which will be further split into $S_{P_k}^{+}$ and $S_{P_k}^{-}$ where they contain redundant features to feature $X_k$ with $SU(X_j, Y) > SU(X_k, Y)$ and $SU(X_j, Y) < SU(X_k, Y)$, respectively; and (3) different heuristics are applied on $S_{P_k}$, $S_{P_k}^{+}$ and $S_{P_k}^{-}$ to remove redundant features and keep the features that are most relevant to the class labels.
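The symmetric uncertainty and the first (relevance) step of FCBF can be sketched as follows. This is a partial illustration only: the redundancy heuristics of steps (2) and (3) are not implemented, and the names `symmetric_uncertainty`, `relevant_features`, `delta`, as well as the toy data, are assumptions made for this example.

```python
from collections import Counter
from math import log2

def entropy(*cols):
    """Joint empirical entropy (bits) of one or more discrete columns."""
    rows = list(zip(*cols))
    n = len(rows)
    return -sum(c / n * log2(c / n) for c in Counter(rows).values())

def symmetric_uncertainty(x, y):
    """SU(X, Y) = 2 * I(X;Y) / (H(X) + H(Y)), a value in [0, 1]."""
    hx, hy = entropy(x), entropy(y)
    if hx + hy == 0:
        return 0.0  # both variables constant; treated as uninformative (a convention assumed here)
    mutual_info = hx + hy - entropy(x, y)
    return 2 * mutual_info / (hx + hy)

def relevant_features(features, y, delta):
    """Step (1) only: keep features with SU(X_k, Y) >= delta, most relevant first."""
    su = {name: symmetric_uncertainty(col, y) for name, col in features.items()}
    return sorted((name for name in su if su[name] >= delta), key=lambda name: -su[name])

# Toy data: "copy" reproduces the label, "noise" is independent of it.
y = [0, 1, 0, 1]
features = {"copy": [0, 1, 0, 1], "noise": [0, 0, 1, 1]}
```

Because of the normalization by $H(X) + H(Y)$, a feature identical to the label scores 1 and an independent feature scores 0, regardless of how many distinct values each takes.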

Discussion: Unlike similarity based feature selection algorithms that fail to tackle feature redundancy, most aforementioned information theoretical based feature selection algorithms can be unified in a probabilistic framework that considers both “feature relevance” and “feature redundancy”. Meanwhile, similar to similarity based methods, this category of methods is independent of any learning algorithm and hence is generalizable. However, most of the existing information theoretical based feature selection methods can only work in a supervised scenario. Without the guidance of class labels, it is still not clear how to assess the importance of features. In addition, these methods can only handle discrete data, so continuous numerical variables require discretization beforehand.

2.3 Sparse Learning based Methods

The third type of methods is sparse learning based methods which aim to minimize the fitting errors along with some sparse regularization terms. The sparse regularizer forces many feature coefficients to be small, or exactly zero, and then the corresponding features can be simply eliminated. Sparse learning based methods have received considerable attention in recent years due to their good performance and interpretability. In the following parts, we review some representative sparse learning based feature selection methods from both supervised and unsupervised perspectives.

2.3.1 Feature Selection with $\ell_p$-norm Regularizer

First, we consider the binary classification or univariate regression problem. To achieve feature selection, the $\ell_p$-norm sparsity-induced penalty term is added on the classification or regression model, where $0 \leq p \leq 1$. Let $\mathbf{w}$ denote the feature coefficient vector; then the objective function for feature selection is:

| $\min_{\mathbf{w}} \; loss(\mathbf{w}; X, \mathbf{y}) + \alpha \, penalty(\mathbf{w})$ | (26) |

where $loss(\cdot)$ is a loss function, and some widely used loss functions include least squares loss, hinge loss and logistic loss. $penalty(\mathbf{w}) = \lVert \mathbf{w} \rVert_p$ is a sparse regularization term, and $\alpha$ is a regularization parameter to balance the contribution of the loss function and the sparse regularization term for feature selection.

Typically when $p = 0$, the $\ell_0$-norm regularization term directly seeks the optimal set of nonzero entries (features) for the model learning. However, the optimization problem is naturally an integer programming problem and is difficult to solve. Therefore, it is often relaxed to an $\ell_1$-norm regularization problem, which is regarded as the tightest convex relaxation of the $\ell_0$-norm. One main advantage of $\ell_1$-norm regularization (LASSO) [Tibshirani (1996)] is that it forces many feature coefficients to become smaller and, in some cases, exactly zero. This property makes it suitable for feature selection, as we can select features whose corresponding feature weights are large, which motivates a surge of $\ell_1$-norm regularized feature selection methods [Zhu et al. (2004), Xu et al. (2014), Wei et al. (2016a), Wei and Yu (2016), Hara and Maehara (2017)]. Also, the sparse vector $\mathbf{w}$ enables the ranking of features. Normally, the larger the magnitude of a coefficient, the more important the corresponding feature is.
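To illustrate why an $\ell_1$ penalty yields coefficients that are exactly zero, the sketch below solves the least squares case by cyclic coordinate descent with soft-thresholding. It assumes the objective $\frac{1}{2} \lVert X\mathbf{w} - \mathbf{y} \rVert_2^2 + \alpha \lVert \mathbf{w} \rVert_1$ (the factor $\frac{1}{2}$ and the choice of solver are conventions adopted here, not taken from the cited works), and the function names and toy data are hypothetical.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t; returns exactly 0.0 whenever |z| <= t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0

def lasso_coordinate_descent(X, y, alpha, n_iter=100):
    """Minimize 0.5 * ||Xw - y||_2^2 + alpha * ||w||_1 by cyclic coordinate descent.

    X is a list of rows (instances), y a list of targets.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(n_iter):
        for j in range(d):
            # Residual with feature j's own contribution left out.
            r = [y[i] - sum(X[i][k] * w[k] for k in range(d) if k != j) for i in range(n)]
            rho = sum(X[i][j] * r[i] for i in range(n))
            norm_sq = sum(X[i][j] ** 2 for i in range(n))
            w[j] = soft_threshold(rho, alpha) / norm_sq if norm_sq > 0 else 0.0
    return w

# Toy problem with an orthonormal design: the solution is the
# soft-thresholded least squares fit.
X = [[1.0, 0.0], [0.0, 1.0]]
y = [3.0, 0.5]
w = lasso_coordinate_descent(X, y, alpha=1.0)
selected = [j for j, wj in enumerate(w) if wj != 0.0]
```

In this toy problem the weakly supported second coefficient is set to exactly zero while the first is shrunk from 3 to 2, so only feature 0 is selected.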

2.3.2 Feature Selection with $\ell_{p,q}$-norm Regularizer

Here, we discuss how to perform feature selection for the general multi-class classification or multivariate regression problems. The problem is more difficult because of the multiple classes and multivariate regression targets, and we would like the feature selection phase to be consistent over multiple targets. In other words, we want multiple predictive models for different targets to share the same parameter sparsity patterns: each feature either has small scores or large scores for all targets. This problem can be generally solved by the $\ell_{p,q}$-norm sparsity-induced regularization term, where $p > 1$ (most existing work focuses on $p = 2$ or $\infty$) and $0 \leq q \leq 1$ (most existing work focuses on $q = 1$ or $0$). Assume that $X$ denotes the data matrix, and $Y$ denotes the one-hot label indicator matrix. Then the model is formulated as follows:

| $\min_{W} \; loss(W; X, Y) + \alpha \lVert W \rVert_{p,q}$ | (27) |

where $\lVert W \rVert_{p,q} = \left( \sum_{i=1}^{d} \left( \sum_{j=1}^{c} \lvert W(i,j) \rvert^{p} \right)^{q/p} \right)^{1/q}$; and the parameter $\alpha$ is used to control the contribution of the loss function and the sparsity-induced regularization term. Then the features can be ranked according to the value of $\lVert W(i,:) \rVert_2^2$ ($i = 1, \ldots, d$); the higher the value, the more important the feature is.
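A small sketch of the row-wise norm and of the resulting feature ranking is given below. It assumes a coefficient matrix stored as a list of rows (one row per feature, one column per target) and finite $p$ with $q > 0$; the function names and the toy matrix are illustrative only.

```python
def lpq_norm(W, p=2.0, q=1.0):
    """||W||_{p,q}: the l_q norm of the vector of row-wise l_p norms (finite p, q > 0)."""
    row_norms = [sum(abs(v) ** p for v in row) ** (1.0 / p) for row in W]
    return sum(r ** q for r in row_norms) ** (1.0 / q)

def rank_features(W):
    """Feature indices sorted by squared row norm ||W(i,:)||_2^2, largest first."""
    scores = [sum(v * v for v in row) for row in W]
    return sorted(range(len(W)), key=lambda i: -scores[i])

# Toy coefficient matrix: 3 features, 2 targets; feature 1 has an all-zero row.
W = [[3.0, 4.0],
     [0.0, 0.0],
     [1.0, 0.0]]
```

For this matrix the $\ell_{2,1}$ norm is $5 + 0 + 1 = 6$ and the ranking is feature 0, then 2, then 1; a zero row means the feature is unused for every target, which is the shared sparsity pattern the regularizer encourages.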

Case 1: $p = 2$, $q = 0$

To find relevant features across multiple targets, an intuitive way is to use discrete optimization through the $\ell_{2,0}$-norm regularization. The optimization problem with the $\ell_{2,0}$-norm regularization term can be reformulated as follows:

| $\min_{W} \; loss(W; X, Y) + \alpha \lVert W \rVert_{2,0}$ | (28) |

However, solving the above optimization problem has been proven to be NP-hard, and, due to its discrete nature, the objective function is not convex. To solve it, a variation of the Alternating Direction Method can be leveraged to seek a locally optimal solution [Cai et al. (2013), Gu et al. (2012)]. In [Zhang et al. (2014)], the authors provide two algorithms, a proximal gradient algorithm and a rank-one update algorithm, to solve this discrete selection problem.

Case 2: $p = 2$, $0 < q < 1$

The above sparsity-induced regularization term is inherently discrete and hard to solve. In [Peng and Fan (2016), Peng and Fan (2017)], the authors propose a more general framework to directly optimize the sparsity-induced regularization when $0 < q < 1$ and provide an efficient iterative algorithm with a guaranteed convergence rate.

Case 3: $p = 2$, $q = 1$

Although the $\ell_{2,0}$-norm is more desirable for feature sparsity, it is inherently non-convex and non-smooth. Hence, the $\ell_{2,1}$-norm regularization is preferred and widely used in different scenarios such as multi-task learning [Obozinski et al. (2007), Zhang et al. (2008)], anomaly detection [Li et al. (2017a), Wu et al. (2017)] and crowdsourcing [Zhou and He (2017)]. Many $\ell_{2,1}$-norm regularization based feature selection methods have been proposed over the past decade [Zhao et al. (2010), Gu et al. (2011c), Yang et al. (2011), Hou et al. (2011), Li et al. (2012), Qian and Zhai (2013), Shi et al. (2014), Liu et al. (2014), Du and Shen (2015), Jian et al. (2016), Liu et al. (2016b), Nie et al. (2016), Zhu et al. (2016), Li et al. (2017c)]. Similar to $\ell_1$-norm regularization, $\ell_{2,1}$-norm regularization is also convex and a global optimal solution can be achieved [Liu et al. (2009a)]; thus the following discussions about sparse learning based feature selection will center around the $\ell_{2,1}$-norm regularization term. The $\ell_{2,1}$-norm regularization also has strong connections with group lasso [Yuan and Lin (2006)], which will be explained later. By solving the related optimization problem, we can obtain a sparse matrix $W$ where many rows are exactly zero or of small values, and then the features corresponding to these rows can be eliminated.

Case 4: $p = \infty$, $q = 1$

In addition to the $\ell_{2,1}$-norm regularization term, the $\ell_{\infty,1}$-norm regularization is also widely used to achieve joint feature sparsity across multiple targets [Quattoni et al. (2009)]. In particular, it penalizes the sum of the maximum absolute values of each row, such that many rows of the matrix will be entirely zero.

2.3.3 Efficient and Robust Feature Selection

The authors of [Nie et al. (2010)] propose an efficient and robust feature selection (REFS) method by employing a joint $\ell_{2,1}$-norm minimization on both the loss function and the regularization. Their argument is that the $\ell_2$-norm based loss function is sensitive to noisy data while the $\ell_{2,1}$-norm based loss function is more robust to noise. The reason is that the $\ell_{2,1}$-norm loss function has a rotational invariant property [Ding et al. (2006)]. Consistent with the $\ell_{2,1}$-norm regularized feature selection model, an $\ell_{2,1}$-norm regularizer is added to the $\ell_{2,1}$-norm loss function to achieve group feature sparsity. The objective function of REFS is:

| $\min_{W} \; \lVert XW - Y \rVert_{2,1} + \alpha \lVert W \rVert_{2,1}$ | (29) |

To solve the convex but non-smooth optimization problem, an efficient algorithm is proposed with strict convergence analysis.

It should be noted that the aforementioned REFS is designed for multi-class classification problems where each instance only has one class label. However, data could be associated with multiple labels in many domains such as information retrieval and multimedia annotation. Recently, a surge of research work has studied multi-label feature selection problems by considering label correlations. Most of these methods, however, are also based on the $\ell_{2,1}$-norm sparse regularization framework [Gu et al. (2011a), Chang et al. (2014), Jian et al. (2016)].

2.3.4 Multi-Cluster Feature Selection

Most existing sparse learning based approaches build a learning model with the supervision of class labels. The feature selection phase is derived afterwards on the sparse feature coefficients. However, since labeled data is costly and time-consuming to obtain, unsupervised sparse learning based feature selection has received increasing attention in recent years. Multi-Cluster Feature Selection (MCFS) [Cai et al. (2010)] is one of the first attempts. Without class labels to guide the feature selection process, MCFS proposes to select features that can cover the multi-cluster structure of the data, where spectral analysis is used to measure the correlation between different features.

MCFS consists of three steps. In the first step, it constructs a $p$-nearest neighbor graph to capture the local geometric structure of data and gets the graph affinity matrix $S$ and the Laplacian matrix $L$. Then a flat embedding that unfolds the data manifold can be obtained by spectral clustering techniques. In the second step, since the embedding of data is known, MCFS takes advantage of it to measure the importance of features by a regression model with an $\ell_1$-norm regularization. Specifically, given the $i$-th embedding $\mathbf{e}_i$, MCFS regards it as a regression target to minimize:

| $\min_{\mathbf{w}_i} \; \lVert X \mathbf{w}_i - \mathbf{e}_i \rVert_2^2 + \alpha \lVert \mathbf{w}_i \rVert_1$ | (30) |

where $\mathbf{w}_i$ denotes the feature coefficient vector for the $i$-th embedding. By solving all $K$ sparse regression problems, MCFS obtains $K$ sparse feature coefficient vectors $W = [\mathbf{w}_1, \ldots, \mathbf{w}_K]$ and each vector corresponds to one embedding of $X$. In the third step, for each feature $f_j$, the MCFS score for that feature can be computed as $MCFS(j) = \max_i \lvert W(j, i) \rvert$. The higher the MCFS score, the more important the feature is.
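The third step can be sketched in a few lines, assuming the sparse regressions of the second step have already been solved and their coefficients stored so that row $j$ holds the coefficients of feature $j$ across all embeddings. The function name and the toy coefficients are hypothetical.

```python
def mcfs_scores(W):
    """Score each feature by its largest absolute coefficient over all embeddings.

    W[j][i] is the coefficient of feature j in the i-th sparse regression.
    """
    return [max(abs(v) for v in row) for row in W]

# Toy coefficients for 3 features and 2 embeddings.
W = [[0.0, -0.7],
     [0.2,  0.1],
     [0.0,  0.0]]
scores = mcfs_scores(W)
ranking = sorted(range(len(W)), key=lambda j: -scores[j])
```

Taking the maximum rather than the sum means a feature is kept if it matters for at least one embedding, even when its coefficients for the other embeddings are zero.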

2.3.5 $\ell_{2,1}$-norm Regularized Discriminative Feature Selection

In [Yang et al. (2011)], the authors propose a new unsupervised feature selection algorithm (UDFS) to select the most discriminative features by exploiting both the discriminative information and feature correlations. First, assume $\tilde{X}$ is the centered data matrix such that $\tilde{X} = H_n X$ and $G = [G_1, G_2, \ldots, G_n]^T = Y (Y^T Y)^{-1/2}$ is the weighted label indicator matrix, where $H_n = I_n - \frac{1}{n} \mathbf{1}_n \mathbf{1}_n^T$. Instead of using global discriminative information, they propose to utilize the local discriminative information to select discriminative features. The advantage of using local discriminative information is twofold. First, it has been demonstrated to be more important than global discriminative information in many classification and clustering tasks. Second, when it considers the local discriminative information, the data manifold structure is also well preserved. For each data instance $x_i$, it constructs a $k$-nearest neighbor set for that instance $\mathcal{N}_k(x_i) = \{x_{i_1}, x_{i_2}, \ldots, x_{i_k}\}$. Let $X_{\mathcal{N}_k(i)} = [x_i, x_{i_1}, \ldots, x_{i_k}]$ denote the local data matrix around $x_i$; then the local total scatter matrix $S_t^{(i)}$ and local between class scatter matrix $S_b^{(i)}$ are $\tilde{X}_i^T \tilde{X}_i$ and $\tilde{X}_i^T G_{(i)} G_{(i)}^T \tilde{X}_i$ respectively, where $\tilde{X}_i$ is the centered local data matrix and $G_{(i)} = [G_i, G_{i_1}, \ldots, G_{i_k}]^T$. Note that $G_{(i)}$ is a subset from $G$ and $G_{(i)}$ can be obtained by a selection matrix $P_{(i)} \in \{0, 1\}^{n \times (k+1)}$ such that $G_{(i)} = P_{(i)}^T G$. Without label information in unsupervised feature selection, UDFS assumes that there is a linear classifier $W \in \mathbb{R}^{d \times s}$ to map each data instance $x_i \in \mathbb{R}^{d}$ to a low dimensional space $G_i \in \mathbb{R}^{s}$. Following the definition of global discriminative information [Yang et al. (2010), Fukunaga (2013)], the local discriminative score for each instance $x_i$ is:

| (31) |

A high local discriminative score indicates that the instance can be well discriminated by the linear classifier $W$. Therefore, UDFS tends to train the $W$ that obtains the highest local discriminative score for all instances in $X$; it also incorporates an $\ell_{2,1}$-norm regularizer to achieve feature selection. The objective function is formulated as follows:

| (32) |

where $\alpha$ is a regularization parameter to control the sparsity of the learned model.

2.3.6 Feature Selection Using Nonnegative Spectral Analysis

Nonnegative Discriminative Feature Selection (NDFS) [Li et al. (2012)] performs spectral clustering and feature selection simultaneously in a joint framework to select a subset of discriminative features. It assumes that pseudo class label indicators can be obtained by spectral clustering techniques. Different from most existing spectral clustering techniques, NDFS imposes nonnegative and orthogonal constraints during the spectral clustering phase. The argument is that with these constraints, the learned pseudo class labels are closer to real cluster results. These nonnegative pseudo class labels then act as regression constraints to guide the feature selection phase. Instead of performing these two tasks separately, NDFS incorporates these two phases into a joint framework.

Similar to UDFS, we use $G$ to denote the weighted cluster indicator matrix. It is easy to show that we have $G^T G = I_c$. NDFS adopts a strategy to learn the weighted cluster indicator matrix such that the local geometric structure of the data can be well preserved [Shi and Malik (2000), Yu and Shi (2003)]. The local geometric structure can be preserved by minimizing the normalized graph Laplacian $Tr(G^T L G)$, where $L$ is the Laplacian matrix that can be derived from the RBF kernel. In addition to that, given the pseudo labels $G$, NDFS assumes that there exists a linear transformation matrix $W$ between the data instances $X$ and the pseudo labels $G$. These pseudo class labels are utilized as constraints to guide the feature selection process. The combination of these two components results in the following problem:

| $\min_{G, W} \; Tr(G^T L G) + \beta \left( \lVert XW - G \rVert_F^2 + \alpha \lVert W \rVert_{2,1} \right) \quad \text{s.t.} \;\; G^T G = I_c, \; G \geq 0$ | (33) |

where $\alpha$ is a parameter to control the sparsity of the model, and $\beta$ is introduced to balance the contribution of spectral clustering and discriminative feature selection.

Discussion: Sparse learning based feature selection methods have gained increasing popularity in recent years. A merit of this type of method is that it embeds feature selection into a typical learning algorithm (such as linear regression, SVM, etc.). Thus it can often lead to very good performance for the underlying learning algorithm. Also, with sparsity of feature weights, the model possesses good interpretability, as it enables us to explain why a given prediction is made. Nonetheless, there are still some drawbacks of these methods. First, as they directly optimize a particular learning algorithm by feature selection, the selected features do not necessarily achieve good performance in other learning tasks. Second, this kind of method often involves solving a non-smooth optimization problem with complex matrix operations (e.g., multiplication, inverse, etc.) in most cases. Hence, the expensive computational cost is another bottleneck.

2.4 Statistical based Methods

Another category of feature selection algorithms is based on different statistical measures. As they rely on various statistical measures instead of learning algorithms to assess feature relevance, most of them are filter based methods. In addition, most statistical based algorithms analyze features individually. Hence, feature redundancy is inevitably ignored during the selection phase. We introduce some representative feature selection algorithms in this category.

2.4.1 Low Variance

Low Variance eliminates features whose variance is below a predefined threshold. For example, a feature that has the same value for all instances has a variance of 0 and should be removed, since it cannot help discriminate instances from different classes. Suppose that the dataset consists of only boolean features, i.e., the feature values are either 0 or 1. As a boolean feature is a Bernoulli random variable, its variance can be computed as:

| $variance\_score(f_i) = p(1 - p)$ | (34) |

where $p$ denotes the percentage of instances that take the feature value of 1. After the variance of features is obtained, any feature with a variance score below a predefined threshold can be directly pruned.
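A minimal sketch of this filter for Boolean features follows. The function names, the row-major layout of `X`, and the threshold value are assumptions made for illustration.

```python
def bernoulli_variance(column):
    """Variance p * (1 - p) of a 0/1 feature, where p is the fraction of ones."""
    p = sum(column) / len(column)
    return p * (1 - p)

def low_variance_filter(X, threshold):
    """Return the indices of the feature columns whose variance is at least `threshold`."""
    d = len(X[0])
    columns = [[row[j] for row in X] for j in range(d)]
    return [j for j, col in enumerate(columns) if bernoulli_variance(col) >= threshold]

# Toy data: 4 instances, 3 Boolean features.
# Feature 0 is constant, feature 1 is balanced, feature 2 is mostly 1.
X = [[0, 1, 1],
     [0, 0, 1],
     [0, 1, 1],
     [0, 0, 0]]
kept = low_variance_filter(X, threshold=0.2)
```

The three variances are 0, 0.25 and 0.1875, so with a threshold of 0.2 only feature 1 survives. Since $p(1-p)$ peaks at 0.25, thresholds for Boolean data are only meaningful below that value.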

2.4.2 T-score

$t$-score [Davis and Sampson (1986)] is used for binary classification problems. For each feature $f_i$, suppose that $\mu_1$ and $\mu_2$ are the mean feature values for the instances from two different classes, $\sigma_1$ and $\sigma_2$ are the corresponding standard deviations, and $n_1$ and $n_2$ denote the number of instances from these two classes. Then the $t$-score for the feature $f_i$ is:

| $t\_score(f_i) = \frac{\lvert \mu_1 - \mu_2 \rvert}{\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}}$ | (35) |

The basic idea of $t$-score is to assess whether the feature makes the means of two classes statistically different, which can be computed as the ratio between the absolute mean difference and a term derived from the variances of the two classes. The higher the $t$-score, the more important the feature is.
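The score can be sketched for a single feature as follows. The sketch assumes labels coded as 0 and 1 and uses the population variance (division by the class size), which is a choice made here; a sample-variance variant would divide by the class size minus one. It is undefined when both classes have zero variance.

```python
from math import sqrt

def t_score(values, labels):
    """|mean_1 - mean_2| / sqrt(var_1 / n_1 + var_2 / n_2) for one feature, labels in {0, 1}."""
    stats = []
    for c in (0, 1):
        group = [v for v, l in zip(values, labels) if l == c]
        n = len(group)
        mean = sum(group) / n
        var = sum((v - mean) ** 2 for v in group) / n  # population variance (assumption)
        stats.append((mean, var, n))
    (m1, v1, n1), (m2, v2, n2) = stats
    return abs(m1 - m2) / sqrt(v1 / n1 + v2 / n2)

# Toy feature: class 0 holds {1, 3}, class 1 holds {5, 7}.
values = [1.0, 3.0, 5.0, 7.0]
labels = [0, 0, 1, 1]
score = t_score(values, labels)
```

Here both classes have variance 1 and two instances, so the denominator is 1 and the score equals the mean gap of 4.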

2.4.3 Chi-Square Score

Chi-square score [Liu and Setiono (1995)] utilizes the test of independence to assess whether the feature is independent of the class label. Given a particular feature $f_i$ with $r$ different feature values, the Chi-square score of that feature can be computed as:

| $Chi\_square\_score(f_i) = \sum_{j=1}^{r} \sum_{s=1}^{c} \frac{(n_{js} - \mu_{js})^2}{\mu_{js}}$ | (36) |

where $n_{js}$ is the number of instances with the $j$-th feature value given feature $f_i$ that belong to class $s$. In addition, $\mu_{js} = \frac{n_{*s} n_{j*}}{n}$, where $n_{j*}$ indicates the number of data instances with the $j$-th feature value given feature $f_i$, and $n_{*s}$ denotes the number of data instances in class $s$. A higher Chi-square score indicates that the feature is relatively more important.
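The score can be computed directly from co-occurrence counts, as in the sketch below. The function name and toy data are illustrative; the expected count for each (feature value, class) cell is the product of the two marginal counts divided by the number of instances.

```python
from collections import Counter

def chi_square_score(values, labels):
    """Sum over (feature value, class) cells of (observed - expected)^2 / expected."""
    n = len(values)
    observed = Counter(zip(values, labels))  # joint counts; missing cells count as 0
    value_counts = Counter(values)           # marginal counts per feature value
    class_counts = Counter(labels)           # marginal counts per class
    score = 0.0
    for j in value_counts:
        for s in class_counts:
            expected = value_counts[j] * class_counts[s] / n
            score += (observed[(j, s)] - expected) ** 2 / expected
    return score

labels = [0, 0, 1, 1]
dependent = [0, 0, 1, 1]    # determined by the class label
independent = [0, 1, 0, 1]  # unrelated to the class label
```

For the dependent feature every cell deviates from its expected count of 1 by exactly 1, giving a score of 4; for the independent feature observed and expected counts coincide and the score is 0.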

2.4.4 Gini Index

Gini index [Gini (1912)] is also a widely used statistical measure to quantify whether the feature is able to separate instances from different classes. Given a feature $f_i$ with $r$ different feature values, suppose $\mathcal{W}$ and $\overline{\mathcal{W}}$ denote the set of instances with the feature value smaller than or equal to the $j$-th feature value, and larger than the $j$-th feature value, respectively. In other words, the $j$-th feature value can separate the dataset into $\mathcal{W}$ and $\overline{\mathcal{W}}$; then the Gini index score for the feature $f_i$ is given as follows:

| $gini\_index\_score(f_i) = \min_{\mathcal{W}} \left( p(\mathcal{W}) \left( 1 - \sum_{s=1}^{c} p(C_s \mid \mathcal{W})^2 \right) + p(\overline{\mathcal{W}}) \left( 1 - \sum_{s=1}^{c} p(C_s \mid \overline{\mathcal{W}})^2 \right) \right)$ | (37) |

where $p(\cdot)$ denotes the probability. For instance, $p(C_s \mid \mathcal{W})$ is the conditional probability of class $s$ given $\mathcal{W}$. For binary classification, the Gini index can take a maximum value of 0.5; it can also be used in multi-class classification problems. Unlike previous statistical measures, the lower the Gini index value, the more relevant the feature is.
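The following sketch evaluates every observed feature value as a candidate split point and keeps the lowest weighted impurity. The function names and toy data are assumptions for illustration; when the split point is the largest feature value, the right-hand side is empty and contributes nothing.

```python
from collections import Counter

def impurity(labels):
    """Gini impurity 1 - sum_s p(C_s)^2 of a non-empty list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def gini_index_score(values, labels):
    """Minimum, over split points, of the size-weighted impurity of the two sides."""
    n = len(values)
    best = None
    for t in sorted(set(values)):
        left = [l for v, l in zip(values, labels) if v <= t]
        right = [l for v, l in zip(values, labels) if v > t]
        score = len(left) / n * impurity(left)
        if right:
            score += len(right) / n * impurity(right)
        best = score if best is None else min(best, score)
    return best

labels = [0, 0, 1, 1]
separating = [1, 2, 3, 4]  # splitting at 2 separates the classes perfectly
constant = [1, 1, 1, 1]    # no split is possible
```

The separating feature reaches a score of 0 at the split point 2, while the constant feature cannot do better than the impurity of the whole dataset, which is 0.5 for two balanced classes.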

2.4.5 CFS

The basic idea of CFS [Hall and Smith (1999)] is to use a correlation based heuristic to evaluate the worth of a feature subset $S$:

| $CFS\_score(S) = \frac{k \, \overline{r_{cf}}}{\sqrt{k + k(k-1) \overline{r_{ff}}}}$ | (38) |

where the CFS score shows the heuristic “merit” of the feature subset $S$ with $k$ features. $\overline{r_{cf}}$ is the mean feature-class correlation and $\overline{r_{ff}}$ is the average feature-feature correlation. In Eq. (38), the numerator indicates the predictive power of the feature set while the denominator shows how much redundancy the feature set has. The basic idea is that a good feature subset should have a strong correlation with class labels while its features are weakly intercorrelated. To get the feature-class correlation and feature-feature correlation, CFS uses symmetrical uncertainty [Vetterling et al. (1992)]. As finding the globally optimal subset is computationally prohibitive, it adopts a best-first search strategy to find a locally optimal feature subset. At the very beginning, it computes the utility of each feature by considering both feature-class and feature-feature correlation. It then starts with an empty set and expands the set by the feature with the highest utility until it satisfies some stopping criteria.
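The merit of a given subset can be sketched as below, assuming the feature-class and pairwise feature-feature correlations have already been computed (for example with symmetrical uncertainty). The function name, argument layout, and example correlation values are hypothetical, and the search over subsets is not shown.

```python
from math import sqrt

def cfs_merit(r_cf, r_ff):
    """Merit k * mean(r_cf) / sqrt(k + k * (k - 1) * mean(r_ff)) of a feature subset.

    r_cf: feature-class correlations, one per feature in the subset.
    r_ff: pairwise feature-feature correlations within the subset (empty if k == 1).
    """
    k = len(r_cf)
    mean_cf = sum(r_cf) / k
    mean_ff = sum(r_ff) / len(r_ff) if r_ff else 0.0
    return k * mean_cf / sqrt(k + k * (k - 1) * mean_ff)

# Two equally relevant features that are either uncorrelated or fully redundant.
merit_uncorrelated = cfs_merit([0.8, 0.8], [0.0])
merit_redundant = cfs_merit([0.8, 0.8], [1.0])
```

With identical relevance, the uncorrelated pair scores about 1.13 while the fully redundant pair scores 0.8, the same as a single feature of relevance 0.8, so adding a perfect duplicate brings no gain in merit.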

Discussion: Most of the statistical based feature selection methods rely on predefined statistical measures to filter out unwanted features, and are simple and straightforward in nature. The computational costs of these methods are often very low. For this reason, they are often used as a preprocessing step before applying other sophisticated feature selection algorithms. Also, like similarity based feature selection methods, these methods often evaluate the importance of features individually and hence cannot handle feature redundancy. Meanwhile, most algorithms in this family can only work on discrete data, and conventional data discretization techniques are required to preprocess numerical and continuous variables.

2.5 Other Methods

In this subsection, we present other feature selection methods that do not belong to the above four types of feature selection algorithms. In particular, we review hybrid feature selection methods, deep learning based and reconstruction based methods.

Hybrid feature selection methods are ensemble-based methods that construct a group of feature subsets from different feature selection algorithms and then produce an aggregated result from the group. In this way, the instability and perturbation issues of most single feature selection algorithms can be alleviated, and the subsequent learning tasks can be enhanced. Similar to conventional ensemble learning methods [Zhou (2012)], hybrid feature selection methods consist of two steps: (1) construct a set of different feature selection results; and (2) aggregate the different outputs into a consensus result. Methods differ in how these two steps are performed. For the first step, existing methods either ensemble the feature subsets selected by a single method on different sample subsets or ensemble the feature subsets selected by multiple feature selection algorithms. The first case requires a sampling method to obtain different sample subsets; typical choices include random sampling and bootstrap sampling. For example, [Saeys et al. (2008)] studied ensemble feature selection that aggregates the results of a conventional feature selection algorithm such as RELIEF over multiple bootstrapped samples of the training data. In [Abeel et al. (2010)], the authors improved the stability of the SVM-RFE feature selection algorithm by applying multiple random samplings to the original data. The second step aggregates the rankings of the multiple selected feature subsets. Most existing methods employ a simple yet effective linear aggregation function [Saeys et al. (2008), Abeel et al. (2010), Yang and Mao (2011)]. Nonetheless, other ranking aggregation functions such as the Markov chain-based method [Dutkowski and Gambin (2007)], the distance synthesis method [Yang et al. (2005)], and the stacking method [Netzer et al. (2009)] are also widely used. In addition to using an aggregation function, another way is to identify the consensus features directly from multiple sample subsets [Loscalzo et al. (2009)].
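The two-step procedure can be illustrated with a minimal sketch. It assumes bootstrap sampling for step (1) and mean-rank (linear) aggregation for step (2). The base scorer `correlation_scores` (absolute Pearson correlation) and the function name `ensemble_feature_ranking` are hypothetical choices made for illustration; they are not the RELIEF or SVM-RFE procedures used in the cited works.

```python
import numpy as np

def correlation_scores(X, y):
    """Base scorer (an assumption): absolute Pearson correlation of each feature with y."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    denom = np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc) + 1e-12
    return np.abs(Xc.T @ yc) / denom

def ensemble_feature_ranking(X, y, n_rounds=30, seed=0):
    """Step 1: score features on bootstrap samples. Step 2: average the ranks."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    rank_sum = np.zeros(n_features)
    for _ in range(n_rounds):
        idx = rng.integers(0, n_samples, size=n_samples)  # bootstrap sample (with replacement)
        scores = correlation_scores(X[idx], y[idx])
        order = np.argsort(-scores)                       # best feature first
        ranks = np.empty(n_features)
        ranks[order] = np.arange(n_features)              # rank 0 = best
        rank_sum += ranks
    mean_rank = rank_sum / n_rounds                       # linear (mean) aggregation
    return np.argsort(mean_rank)                          # consensus ranking, best first

# Synthetic data: only features 2 and 7 influence the target.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 10))
y = 3.0 * X[:, 2] - 2.0 * X[:, 7] + 0.1 * rng.normal(size=200)
consensus = ensemble_feature_ranking(X, y)
top2 = set(consensus[:2].tolist())
print(sorted(top2))
```

Averaging ranks rather than raw scores keeps the aggregation insensitive to the scale of the base scorer, which is one reason simple linear aggregation is a common default.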

Nowadays, deep learning techniques are popular and successful in various real-world applications, especially in computer vision and natural language processing. Deep learning is distinct from feature selection: deep learning leverages deep neural network structures to learn new feature representations, while feature selection directly finds relevant features among the original features. From this perspective, the results of feature selection are more human readable and interpretable. Even though deep learning is mainly used for feature learning, there are some attempts to use deep learning techniques for feature selection, and we briefly review them here. For example, in [Li et al. (2015a)], a deep feature selection model (DFS) is proposed. DFS selects features at the input level of a deep neural network. Specifically, it adds a sparse one-to-one linear layer between the input layer and the first hidden layer of a multilayer perceptron (MLP). To achieve feature selection, DFS imposes a sparse regularization term, so that only the features corresponding to nonzero weights are selected. Similarly, in [Roy et al. (2015)], the authors also propose to select features at the input level of a deep neural network. The difference is that they introduce a new concept, net positive contribution, to assess whether features are more likely to make the neurons contribute in the classification phase. Since heterogeneous (multi-view) features are prevalent in machine learning and pattern recognition applications, [Zhao et al. (2015)] proposes to combine deep neural networks with sparse representation for grouped heterogeneous feature selection. It first extracts a new unified representation from each feature group using a multi-modal neural network. Then the importance of features is learned by a sparse group lasso method. In [Wang et al. (2014a)], the authors propose an attentional neural network, which guides feature selection with cognitive bias. It consists of two modules: a segmentation module and a classification module. First, given a cognitive bias vector, the segmentation module segments out an object belonging to one of the classes in the input image. Then, in the classification module, a reconstruction function is applied to the segment to gate the raw image with a threshold for classification. When features are sensitive to a cognitive bias, the cognitive bias will activate the corresponding relevant features.

Recently, data reconstruction error has emerged as a new criterion for feature selection, especially for unsupervised feature selection. It defines feature relevance as the capability of features to approximate the original data via a reconstruction function. Among these methods, Convex Principal Feature Selection (CPFS) [Masaeli et al. (2010)] reformulates the feature selection problem as a convex continuous optimization problem that minimizes a mean-squared reconstruction error with linear and sparsity constraints. GreedyFS [Farahat et al. (2011)] uses a projection matrix to project the original data onto the span of some representative feature vectors and derives an efficient greedy algorithm to obtain these representative features. [Zhao et al. (2016)] formulates unsupervised feature selection as graph regularized data reconstruction. The basic idea is to make the selected features preserve the manifold structure of the original data well and to reconstruct each data sample via linear reconstruction. A pass-efficient unsupervised feature selection method is proposed in [Maung and Schweitzer (2013)]. It can be regarded as a modification of the classical pivoted QR algorithm; the basic idea is still to select representative features that minimize the reconstruction error via a linear function. The aforementioned methods mostly use linear reconstruction functions. [Li et al. (2017b)] argues that the reconstruction function is not necessarily linear and proposes to learn the reconstruction function automatically from data. In particular, they define a scheme to embed the reconstruction function learning into feature selection.
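To make the reconstruction criterion concrete, the sketch below scores a candidate feature subset by how well its columns reconstruct the full data matrix through a least-squares linear map, and adds features greedily. This is a naive illustration under those assumptions, not the GreedyFS or CPFS algorithms themselves; the function names are hypothetical.

```python
import numpy as np

def reconstruction_error(X, selected):
    """Squared Frobenius error of reconstructing X from the selected columns by least squares."""
    S = X[:, selected]
    coef, *_ = np.linalg.lstsq(S, X, rcond=None)
    return float(np.linalg.norm(X - S @ coef) ** 2)

def greedy_reconstruction_selection(X, k):
    """Naive greedy search: at each step add the feature that lowers the error the most."""
    selected = []
    for _ in range(k):
        candidates = [j for j in range(X.shape[1]) if j not in selected]
        errors = [reconstruction_error(X, selected + [j]) for j in candidates]
        selected.append(candidates[int(np.argmin(errors))])
    return selected

rng = np.random.default_rng(0)
base = rng.normal(size=(100, 2))
# Features 0 and 1 are independent; features 2-4 are linear mixes of them plus small noise.
mix = base @ rng.normal(size=(2, 3)) + 0.01 * rng.normal(size=(100, 3))
X = np.hstack([base, mix])

chosen = greedy_reconstruction_selection(X, k=2)
err_one = reconstruction_error(X, chosen[:1])
err_chosen = reconstruction_error(X, chosen)
print(chosen, err_one, err_chosen)
```

Each greedy step re-solves a least-squares problem per candidate, which is only practical for small feature counts; the cited methods exist largely to avoid this cost.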

3 Feature Selection with Structured Features

Existing feature selection methods for conventional data are based on the strong assumption that features are independent of each other (flat), ignoring the inherent feature structures. However, in many real applications features can exhibit various kinds of structures, e.g., spatial or temporal smoothness, disjoint groups, overlapping groups, trees and graphs [Tibshirani et al. (2005), Jenatton et al. (2011), Yuan et al. (2011), Huang et al. (2011), Zhou et al. (2012), Wang and Ye (2015)]. In such cases, feature selection algorithms that incorporate knowledge about the structure may help find more relevant features and therefore improve subsequent learning tasks. One motivating example comes from bioinformatics: in the study of array CGH, features have a natural spatial order, and incorporating this spatial structure can help select more important features and achieve higher classification accuracy. Therefore, in this section, we discuss some representative feature selection algorithms which explicitly consider feature structures. Specifically, we will focus on group structure, tree structure and graph structure.

A popular and successful approach to achieve feature selection with structured features is to minimize an empirical error penalized by a structural regularization term:

$$\mathbf{w} = \arg\min_{\mathbf{w}} \; \mathit{loss}(\mathbf{w}; \mathbf{X}, \mathbf{y}) + \alpha \, \mathit{penalty}(\mathbf{w}, \mathcal{G}) \tag{39}$$

where $\mathcal{G}$ denotes the structures among features and $\alpha$ is a trade-off parameter between the loss function and the structural regularization term. To achieve feature selection, $\mathit{penalty}(\mathbf{w}, \mathcal{G})$ is usually set to be a sparse regularization term. Note that the above formulation is similar to that in Eq. (26); the only difference is that for feature selection with structured features, we explicitly consider the structural information $\mathcal{G}$ among features in the sparse regularization term.

3.1 Feature Selection with Group Feature Structures

First, features could exhibit group structures. One of the most common examples is that in multifactor analysis-of-variance (ANOVA), each factor is associated with several groups and can be expressed by a set of dummy features [Yuan and Lin (2006)]. Some other examples include different frequency bands represented as groups in signal processing [McAuley et al. (2005)] and genes with similar functionalities acting as groups in bioinformatics [Ma et al. (2007)]. Therefore, when performing feature selection, it is more appealing to model the group structure explicitly.

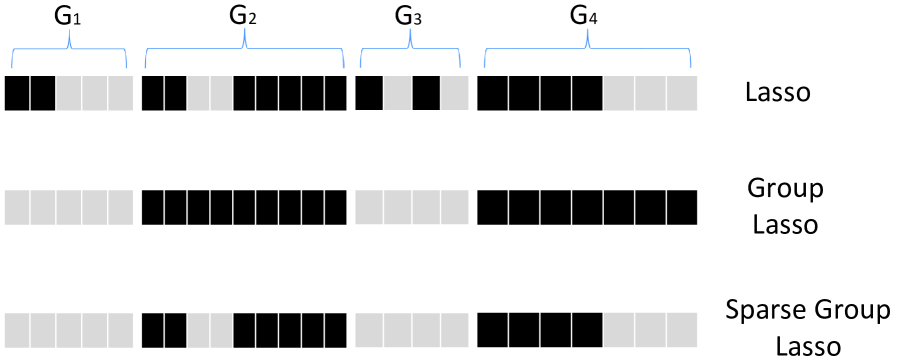

3.1.1 Group Lasso

Group Lasso [Yuan and Lin (2006), Bach (2008), Jacob et al. (2009), Meier et al. (2008)], which drives the feature coefficients of certain groups to be small or exactly zero, is a solution to this problem. In other words, it selects or ignores a group of features as a whole. The difference between Lasso and Group Lasso is shown by the illustrative example in Fig. 3. Suppose that these features come from 4 different groups and there is no overlap between these groups. Lasso completely ignores the group structures among features, and the selected features are from four different groups. In contrast, Group Lasso tends to select or discard the features of each group as a whole. As shown in the figure, Group Lasso only selects the second and the fourth groups $G_2$ and $G_4$; features in the other two groups $G_1$ and $G_3$ are not selected. Mathematically, Group Lasso first uses an $\ell_2$-norm regularization term for the feature coefficients $\mathbf{w}_{G_i}$ in each group $G_i$, then it performs an $\ell_1$-norm regularization over all of these $\ell_2$-norm terms. The objective function of Group Lasso is formulated as follows:

$$\min_{\mathbf{w}} \; \mathit{loss}(\mathbf{w}; \mathbf{X}, \mathbf{y}) + \alpha \sum_{i=1}^{g} h_i \|\mathbf{w}_{G_i}\|_2 \tag{40}$$

where $g$ is the number of groups and $h_i$ is a weight for the $i$-th group $\mathbf{w}_{G_i}$, which can be considered as a prior that measures the contribution of the $i$-th group in the feature selection process.
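A small numerical sketch may help show why this penalty removes whole groups. It assumes the penalty is the weighted sum of per-group $\ell_2$ norms over disjoint groups and applies its proximal operator (block soft-thresholding), a standard building block for solving such problems. The coefficient vector, the groups, and the function names are hypothetical.

```python
import numpy as np

def group_lasso_penalty(w, groups, weights):
    """Weighted sum of l2 norms, one term per (disjoint) group of coefficients."""
    return sum(h * np.linalg.norm(w[g]) for g, h in zip(groups, weights))

def group_soft_threshold(w, groups, weights, step):
    """Proximal operator of step * sum_i h_i * ||w_{G_i}||_2 for disjoint groups."""
    out = np.zeros_like(w)
    for g, h in zip(groups, weights):
        norm = np.linalg.norm(w[g])
        if norm > step * h:
            # Shrink the whole group toward zero by a common factor.
            out[g] = (1.0 - step * h / norm) * w[g]
        # Otherwise the whole group stays exactly zero.
    return out

# Hypothetical coefficients for 8 features in 4 disjoint groups of 2.
w = np.array([0.1, -0.2, 3.0, 4.0, 0.05, 0.0, -1.0, 2.0])
groups = [[0, 1], [2, 3], [4, 5], [6, 7]]
weights = [1.0, 1.0, 1.0, 1.0]

w_shrunk = group_soft_threshold(w, groups, weights, step=0.5)
selected_groups = [i for i, g in enumerate(groups) if np.any(w_shrunk[g] != 0)]
print(selected_groups, w_shrunk)
```

Groups whose coefficient norm falls below the threshold are zeroed together, while surviving groups keep all of their features, which is the group-level behavior described above.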

3.1.2 Sparse Group Lasso

Once Group Lasso selects a group, all the features in the selected group will be kept. However, in many cases, not all features in the selected group could be useful, and it is desirable to consider the intrinsic feature structures and select features from different selected groups simultaneously (as illustrated in Fig. 3). Sparse Group Lasso [Friedman et al. (2010), Peng et al. (2010)] takes advantage of both Lasso and Group Lasso, and it produces a solution with simultaneous intra-group and inter-group sparsity. The sparse regularization term of Sparse Group Lasso is a combination of the penalty term of Lasso and Group Lasso:

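$$\min_{\mathbf{w}} \; \mathit{loss}(\mathbf{w}; \mathbf{X}, \mathbf{y}) + \alpha \|\mathbf{w}\|_1 + (1 - \alpha) \sum_{i=1}^{g} h_i \|\mathbf{w}_{G_i}\|_2 \tag{41}$$

where $\alpha$ is a parameter between 0 and 1 that balances the contribution of inter-group sparsity and intra-group sparsity for feature selection. The difference between Lasso, Group Lasso and Sparse Group Lasso is shown in Fig. 3.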
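The combined effect of the two penalty terms can be sketched numerically. The example assumes the penalty $\alpha\|\mathbf{w}\|_1 + (1-\alpha)\sum_i h_i\|\mathbf{w}_{G_i}\|_2$ with disjoint groups, for which the proximal operator is known to decompose into elementwise soft-thresholding followed by group soft-thresholding. The data and function names are hypothetical.

```python
import numpy as np

def sparse_group_lasso_penalty(w, groups, weights, alpha):
    """alpha * l1 term (intra-group sparsity) + (1 - alpha) * group l2 term (inter-group sparsity)."""
    l1 = np.sum(np.abs(w))
    l2 = sum(h * np.linalg.norm(w[g]) for g, h in zip(groups, weights))
    return alpha * l1 + (1.0 - alpha) * l2

def sparse_group_prox(w, groups, weights, alpha, step):
    """Proximal operator of step * penalty for disjoint groups."""
    # Elementwise soft-thresholding handles the l1 part.
    v = np.sign(w) * np.maximum(np.abs(w) - step * alpha, 0.0)
    out = np.zeros_like(v)
    # Group soft-thresholding handles the group l2 part.
    for g, h in zip(groups, weights):
        norm = np.linalg.norm(v[g])
        t = step * (1.0 - alpha) * h
        if norm > t:
            out[g] = (1.0 - t / norm) * v[g]
    return out

w = np.array([0.1, -0.2, 3.0, 0.2, 0.05, 0.0, -1.0, 2.0])
groups = [[0, 1], [2, 3], [4, 5], [6, 7]]
weights = [1.0, 1.0, 1.0, 1.0]

w_new = sparse_group_prox(w, groups, weights, alpha=0.5, step=0.6)
kept = np.flatnonzero(w_new).tolist()
print(kept, w_new)
```

In this toy run two groups are removed entirely, and within one surviving group only a single feature remains, so both kinds of sparsity appear at once.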

3.1.3 Overlapping Sparse Group Lasso

The above methods consider disjoint group structures among features. However, groups may also overlap with each other [Jacob et al. (2009), Jenatton et al. (2011), Zhao et al. (2009)]. One motivating example is the usage of biologically meaningful gene/protein groups mentioned in [Ye and Liu (2012)]. Different groups of genes may overlap, i.e., one protein/gene may belong to multiple groups. A general Overlapping Sparse Group Lasso regularization is similar to the regularization term of Sparse Group Lasso. The difference is that different feature groups can overlap, i.e., there exist at least two groups $G_i$ and $G_j$ such that $G_i \cap G_j \neq \emptyset$.

3.2 Feature Selection with Tree Feature Structures

In addition to group structures, features can also exhibit tree structures. For example, in face recognition, different pixels can be represented as a tree, where the root node indicates the whole face, its child nodes can be different organs, and each specific pixel is considered a leaf node. Another motivating example is that genes/proteins may form certain hierarchical tree structures [Liu and Ye (2010)]. Recently, Tree-guided Group Lasso was proposed to handle feature selection for features that can be represented in an index tree [Kim and Xing (2010), Liu and Ye (2010), Jenatton et al. (2010)].

3.2.1 Tree-guided Group Lasso

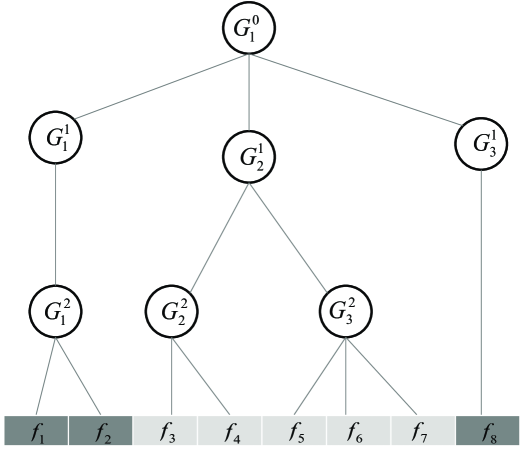

In Tree-guided Group Lasso [Liu and Ye (2010)], the structure over the features can be represented as a tree with leaf nodes as features. Each internal node denotes a group of features such that the internal node is considered as a root of a subtree and the group of features is considered as leaf nodes. Each internal node in the tree is associated with a weight that represents the height of its subtree, or how tightly the features in this subtree are correlated.

In Tree-guided Group Lasso, for an index tree $G$ with a depth of $d$, $G^i = \{G_1^i, G_2^i, \ldots, G_{n_i}^i\}$ denotes the whole set of nodes (features) in the $i$-th level (the root node is in level 0), and $n_i$ denotes the number of nodes in level $i$. Nodes in Tree-guided Group Lasso have to satisfy the following two conditions: (1) internal nodes from the same depth level have non-overlapping indices, i.e., $G_j^i \cap G_k^i = \emptyset$, $\forall i = 1, 2, \ldots, d$, $j \neq k$, $1 \leq j, k \leq n_i$; and (2) if $G_m^{i-1}$ is the parent node of $G_j^i$, then $G_j^i \subseteq G_m^{i-1}$.

We explain these conditions via an illustrative example in Fig. 4. In the figure, we can observe that 8 features are organized in an indexed tree of depth 3; the feature sets of the internal nodes in each level are those shown in Fig. 4. $G_1^0$ is the root node of the index tree. In addition, internal nodes from the same level do not overlap, while a parent node and its child node overlap in that the features of the child node are a subset of those of the parent node. In this way, the objective function of Tree-guided Group Lasso is:

$$\min_{\mathbf{w}} \; \mathit{loss}(\mathbf{w}; \mathbf{X}, \mathbf{y}) + \alpha \sum_{i=0}^{d} \sum_{j=1}^{n_i} h_j^i \|\mathbf{w}_{G_j^i}\|_2 \tag{42}$$

where $\alpha$ is a regularization parameter and $h_j^i$ is a predefined parameter to measure the contribution of the internal node $G_j^i$. Since a parent node is a superset of its child nodes, if a parent node is not selected, none of its child nodes will be selected. For example, as illustrated in Fig. 4, if an internal node with two child nodes is not selected, neither of its child nodes will be selected.
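The two structural conditions and the tree-structured penalty can be checked on a toy example. The sketch below uses a hypothetical index tree over 8 features (it is not the tree drawn in Fig. 4), represents each node as a list of feature indices, and evaluates the weighted sum of per-node $\ell_2$ norms. All names are illustrative assumptions.

```python
import numpy as np

# Hypothetical index tree over features 0..7; levels[i] lists the nodes of level i.
levels = [
    [[0, 1, 2, 3, 4, 5, 6, 7]],         # level 0: root node
    [[0, 1], [2, 3, 4, 5, 6], [7]],     # level 1
    [[2, 3], [4, 5, 6]],                # level 2
]
weights = [[1.0], [1.0, 1.0, 1.0], [1.0, 1.0]]  # one weight per node (assumed values)

def is_valid_index_tree(levels):
    """Check condition (1) sibling disjointness and condition (2) child-in-parent containment."""
    for i, nodes in enumerate(levels):
        seen = set()
        for node in nodes:
            if seen & set(node):        # condition (1) violated: same-level overlap
                return False
            seen |= set(node)
        if i > 0:
            for node in nodes:
                # Condition (2): the node must be contained in some node one level up.
                if not any(set(node) <= set(parent) for parent in levels[i - 1]):
                    return False
    return True

def tree_penalty(w, levels, weights):
    """Sum over all levels and nodes of h * ||w restricted to the node||_2."""
    return sum(
        h * np.linalg.norm(w[node])
        for nodes, hs in zip(levels, weights)
        for node, h in zip(nodes, hs)
    )

w = np.array([3.0, 4.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
valid = is_valid_index_tree(levels)
penalty_value = tree_penalty(w, levels, weights)
print(valid, penalty_value)
```

Because every child index set is contained in its parent, a parent whose coefficients are all zero forces its children to be zero as well, which mirrors the selection behavior described above.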

3.3 Feature Selection with Graph Feature Structures

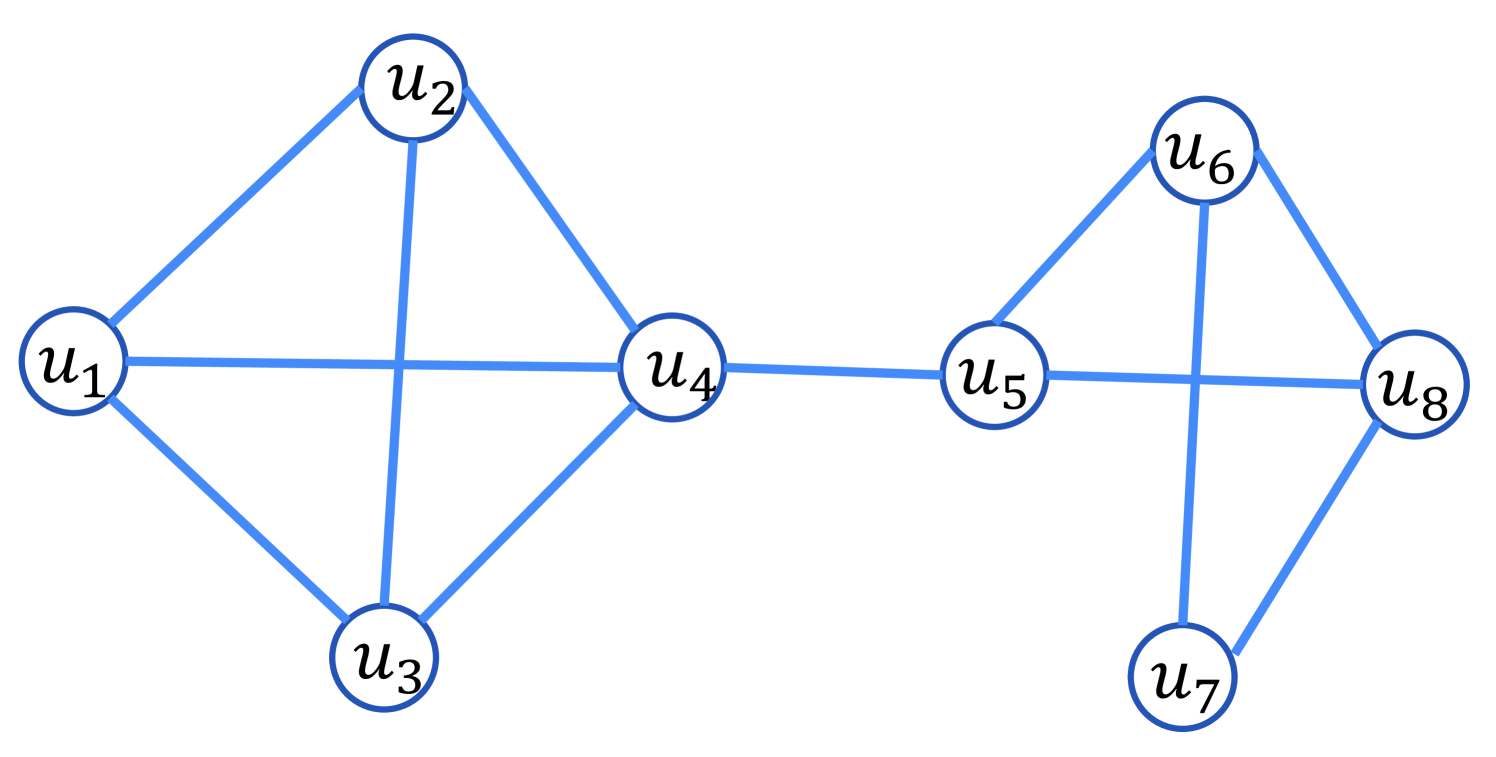

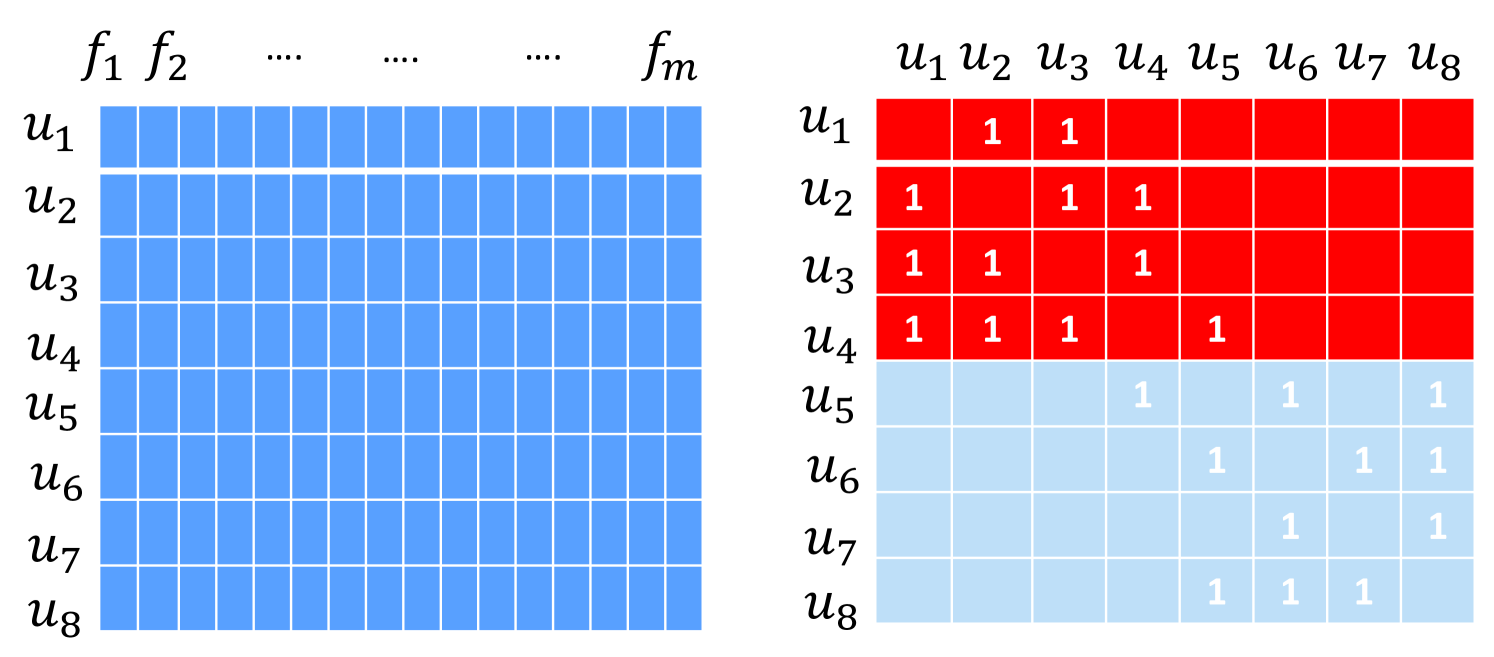

In many cases, features may have strong pairwise interactions. For example, in natural language processing, if we take each word as a feature, we have synonym and antonym relationships between different words [Fellbaum (1998)]. Moreover, many biological studies show that there exist strong pairwise dependencies between genes. Since features show certain kinds of dependencies in these cases, we can model them by an undirected graph, where nodes represent features and edges among nodes show the pairwise dependencies between features [Sandler et al. (2009), Kim and Xing (2009), Yang et al. (2012)]. We use an undirected graph $\mathcal{G}(N, E)$ with a node set $N$ and an edge set $E$ to encode these dependencies. Node $N_i$ corresponds to the $i$-th feature, and the pairwise feature dependencies can be represented by an adjacency matrix $\mathbf{A}$ whose entry $\mathbf{A}(i, j)$ encodes the dependency between the $i$-th and the $j$-th features.

3.3.1 Graph Lasso

Since features exhibit graph structures, when two nodes (features) $N_i$ and $N_j$ are connected by an edge in $\mathcal{G}(N, E)$, the features $f_i$ and $f_j$ are more likely to be selected together, and they should have similar feature coefficients. One way to achieve this is Graph Lasso, which adds a graph regularizer for the feature graph on top of Lasso [Ye and Liu (2012)]. The formulation is:

$$\min_{\mathbf{w}} \; \mathit{loss}(\mathbf{w}; \mathbf{X}, \mathbf{y}) + \alpha \|\mathbf{w}\|_1 + (1 - \alpha) \sum_{i,j} \mathbf{A}(i, j) (\mathbf{w}_i - \mathbf{w}_j)^2 \tag{43}$$

where the first regularization term $\alpha \|\mathbf{w}\|_1$ is from Lasso, while the second term ensures that if a pair of features shows strong dependency, i.e., large $\mathbf{A}(i, j)$, their feature coefficients should also be similar to each other.
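The graph regularizer can be evaluated directly, as in the following sketch. It assumes a symmetric adjacency matrix and a sum over all ordered pairs $(i, j)$, in which case the term equals $2\,\mathbf{w}^\top \mathbf{L} \mathbf{w}$ with the graph Laplacian $\mathbf{L} = \mathbf{D} - \mathbf{A}$. The small graph and the coefficient vectors are hypothetical.

```python
import numpy as np

def graph_penalty(w, A):
    """Sum over all ordered pairs (i, j) of A[i, j] * (w_i - w_j)^2."""
    diff = w[:, None] - w[None, :]
    return float(np.sum(A * diff ** 2))

# Hypothetical feature graph: features 0-1 are connected, and features 2-3 are connected.
A = np.array([
    [0, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
], dtype=float)
L = np.diag(A.sum(axis=1)) - A          # graph Laplacian D - A

w_smooth = np.array([1.0, 1.0, -2.0, -2.0])   # connected features share coefficients
w_rough = np.array([1.0, -1.0, 2.0, -2.0])    # connected features disagree

p_smooth = graph_penalty(w_smooth, A)
p_rough = graph_penalty(w_rough, A)
p_rough_laplacian = 2.0 * float(w_rough @ L @ w_rough)
print(p_smooth, p_rough, p_rough_laplacian)
```

The penalty is zero when connected features have identical coefficients and grows with their squared difference, so minimizing it pulls the coefficients of dependent features together.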

3.3.2 GFLasso

In Eq. (43), Graph Lasso encourages connected features to have similar feature coefficients. However, features can also be negatively correlated. In this case, the feature graph is represented by a signed graph, with both positive and negative edges. GFLasso [Kim and Xing (2009)] is proposed to model both positive and negative feature correlations. Its objective function is:

$$\min_{\mathbf{w}} \; \mathit{loss}(\mathbf{w}; \mathbf{X}, \mathbf{y}) + \alpha \|\mathbf{w}\|_1 + (1 - \alpha) \sum_{i,j} \mathbf{A}(i, j) \, |\mathbf{w}_i - \mathrm{sign}(r_{i,j}) \mathbf{w}_j| \tag{44}$$