Federated Adversarial Learning: A Framework with Convergence Analysis

Federated learning (FL) is a trending training paradigm to utilize decentralized training data. FL allows clients to update model parameters locally for several epochs, then share them to a global model for aggregation. This training paradigm with multi-local step updating before aggregation exposes unique vulnerabilities to adversarial attacks. Adversarial training is a popular and effective method to improve the robustness of networks against adversaries. In this work, we formulate a general form of federated adversarial learning (FAL) that is adapted from adversarial learning in the centralized setting. On the client side of FL training, FAL has an inner loop to generate adversarial samples for adversarial training and an outer loop to update local model parameters. On the server side, FAL aggregates local model updates and broadcast the aggregated model. We design a global robust training loss and formulate FAL training as a min-max optimization problem. Unlike the convergence analysis in classical centralized training that relies on the gradient direction, it is significantly harder to analyze the convergence in FAL for three reasons: 1) the complexity of min-max optimization, 2) model not updating in the gradient direction due to the multi-local updates on the client-side before aggregation and 3) inter-client heterogeneity. We address these challenges by using appropriate gradient approximation and coupling techniques and present the convergence analysis in the over-parameterized regime. Our main result theoretically shows that the minimum loss under our algorithm can converge to small with chosen learning rate and communication rounds. It is noteworthy that our analysis is feasible for non-IID clients.

1 Introduction

Federated learning (FL) is playing an important role nowadays, as it allows different clients to train models collaboratively without sharing private information. One popular FL paradigm called FedAvg [MMR+17] introduces an easy-to-implement distributed learning method without data sharing. Specifically, it requires a central server to aggregate model updates computed by the local clients (also known as nodes or participants) using local imparticipable private data. Then with these updates aggregated, the central server use them to train a global model.

Nowadays deep learning model are exposed to severe threats of adversarial samples. Namely, small adversarial perturbations on the inputs will dramatically change the outputs or output wrong answers [SZS+13]. In this regard, much effort has been made to improve neural networks’ resistance to such perturbations using adversarial learning [TKP+17, SKC18, MMS+18]. Among these studies, the adversarial training scheme in [MMS+18] has achieved the good robustness in practice. [MMS+18] proposes an adversarial training scheme that uses projected gradient descent (PGD) to generate alternative adversarial samples as the augmented training set. Generating adversarial examples during neural network training is considered as one of the most effective approaches for adversarial training up to now according to the literature [CW17, ACW18, CH20].

Although adversarial learning has attracted much attention in the centralized domain, its practice in FL is under-explored [ZRSB20]. Like training classical deep neural networks that use gradient-based methods, FL paradigms are vulnerable to adversarial samples. Adversarial learning in FL brings multiple open challenges due to FL properties on low convergence rate, application in non-IID environments, and secure aggregation solutions. Hence applying adversarial learning in an FL paradigm may lead to unstable training loss and a lack of robustness. However, a recent practical work [ZRSB20] observed that although there exist difficulties of convergence, the federation of adversarial training with suitable hyperparameter settings can achieve adversarial robustness and acceptable performance. Motivated by the empirical results, we want to address the provable property of combining adversarial learning into FL from the theoretical perspective.

This work aims to theoretically study the unexplored convergence challenges that lie in the interaction between adversarial training and FL. To achieve a general understanding, we consider a general form of federated adversarial learning (FAL), which deploys adversarial training scheme on local clients in the most common FL paradigm, FedAvg [MMR+17] system. Specifically, FAL has an inner loop of local updating that generates adversarial samples (i.e., using [MMS+18]) for adversarial training and an outer loop to update local model weights on the client side. Then global model is aggregated using FedAvg [MMR+17]. Our algorithm is detailed in Algorithm 1.

We are interested in theoretically understanding the proposed FAL scheme from the aspects of model robustness and convergence:

Can federated adversarial training fit training data robustly and converge with an over-parameterized neural network?

The theoretical convergence analysis of adversarial training itself is challenging in the centralized training setting. [TZT18] recently proposed a general theoretical method to analyze the risk bound with adversaries but did not address the convergence problem. The investigation of convergence on over-parameterized neural network has achieved tremendous progress [DLL+19, AZLS19b, AZLS19a, DZPS19, ADH+19b]. The basic statement is that training can converge to sufficiently small training loss in polynomial iterations using gradient descent or stochastic gradient descent when the width of the network is polynomial in the number of training examples when initialized randomly. Recent theoretical analysis [GCL+19, ZPD+20] extends these standard training convergence results to adversarial training settings. To answer the above interesting but challenging question, we formulate FAL as an min-max optimization problem. We extend the convergence analysis on the general formulation of over-parameterized neural networks in the FL setting that allows each client to perform min-max training and generate adversarial examples (see Algorithm 1). Involved challenges are arising in FL convergence analysis due to its unique optimization method: 1) unlike classical centralized setting, the global model of FL does not update in the gradient direction; 2) inter-client heterogeneity issue needs to be considered.

Despite the challenges, we give an affirmative answer to the above question. To the best of our knowledge, this work is the first theoretical study that studies those unexplored problems about the convergence of adversarial training with FL. The contributions of this paper are:

-

•

We propose a framework to analyze a general form of FAL in over-parameterized neural networks. We follow a natural and valid assumption of data separability that the training dataset are well separated apropos of the adversarial perturbations’ magnitude. After sufficient rounds of global communication and certain steps of local gradient descent for each , we obtain the minimal loss close to zero. Notably, our assumptions do not rely on data distribution. Thus the proposed analysis framework is feasible for non-IID clients.

-

•

We are the first to theoretically formulate the convergence of the FAL problem into a min-max optimization framework with the proposed loss function. In FL, the update in the global model is no longer directly determined by the gradient directions due to multiple local steps. To tackle the challenges, we define a new ‘gradient’, FL gradient. With valid ReLU Lipschitz and over-parameterized assumptions, we use gradient coupling for gradient updates in FL to show the model updates of each global updating is bounded in federated adversarial learning.

2 Related Work

Federated Learning

A efficient and privacy-preserving way to learn from the distributed data collected on the edge devices (a.k.a clients) would be FL. FedAvg is a easy-to-implement distributed learning strategy by aggregating local model updates of the server’s side, and then transmitting the averaged model back to the local clients. Later, many FL methods are developed baed on FedAvg. Theses FL schemes can be divided into aggregation schemes [MMR+17, WLL+20, LJZ+21] and optimization schemes [RCZ+20, ZHD+20]. Nearly all the them have the common characteristics that client model are updating using gradient descent-based methods, which is venerable to adversarial attacks. In addition, data heterogeneity brings in huge challeng in FL. For IID data, FL has been proven effective. However, in practice, data mostly distribute as non-IID. Non-IID data could substantially degrade the performance of FL models [ZLL+18, LHY+19, LJZ+21, LSZ+20]. Despite the potential risk in security and unstable performance in non-IID setting, as FL mitigates the concern of data sharing, it is still a popular and practical solution for distributed data learning in many real applications, such as healthcare [LGD+20, RHL+20], autonomous driving [LLC+19], IoTs [WTS+19, LLH+20].

Learning with Adversaries

Ever since adversarial examples are discovered [SZS+13], to make neural networks robust to perturbations, efforts have been made to propose more effective defense methods. As adversarial examples are an issue of robustness, the popular scheme is to include learning with adversarial examples, which can be traced back to [GSS14]. It produces adversarial examples and injecting them into training data. Later, Madry et al. [MMS+18] proposed training on multi-step PGD adversaries and empirically observed that adversarial training consistently achieves small and robust training loss in wide neural networks.

Federated Adversarial Learning

Adversarial examples, which may not be visually distinguishable from benign samples, are often classified. This poses potential security threats for practical machine learning applications. Adversarial training [GSS14, KGB16] is a popular protocol to train more adversarial robust models by inserting adversarial examples in training. The use of adversarial training in FL presents a number of open challenges, including poor convergence due to multiple local update steps, instability and heterogeneity of clients, cost and security request of communication, and so on. To defend the adversarial attacks in federated learning, limited recent studies have proposed to include adversarial training on clients in the local training steps [BCMC19, ZRSB20]. These two works empirically showed the performance of adversarial training, while the theoretical analysis of convergence is under explored. [DKM20] focused the problem of distributionally robust FL with an emphasis on reducing the communication rounds, they traded convergence rate for communication rounds. In addition, different from our focus on a generic theoretical analysis framework, [ZLL+21] is a methodology paper that proposed an adversarial training strategy in classical distributed setting, with focus on specific training strategy (PGD, FGSM), which could be generalized to a method in FAL.

Convergence via Over-parameterization

Convergence analysis on over-parameterized neural networks falls in two lines. In the first line of work [LL18, AZLS19b, AZLS19a, AZLL19] data separability plays a crucial role, and is widely used in theoretically showing the convergence result in the over-parameterized neural network setting. To be specific, data separability theory shows that to guarantee convergence, the width () of a neural network shall be at least polynomial factor of all parameters (i.e. ), where is the minimum distance between all pairs of data points, is the number of data points and is the data dimension. Another line of work [DZPS19, ADH+19a, ADH+19b, SY19, LSS+20, BPSW21, SYZ21, SZZ21, HLSY21, Zha22, MOSW22] builds on neural tangent kernel (NTK) [JGH18]. In this line of work, the minimum eigenvalue () of the NTK is required to be lower bounded to guarantee convergence. Our analysis focuses on the former approach based on data separability.

Robustness of Federated Learning

Previously there were several works that theoretically analyzed the robustness of federated learning under noise. [YCKB18] developed distributed optimization algorithms that were provably robust against arbitrary and potentially adversarial behavior in distributed computing systems, and mainly focused on achieving optimal statistical performance. [RFPJ20] developed a robust federated learning algorithm by considering a structured affine distribution shift in users’ data. Their analysis was built on several assumptions on the loss functions without a direct connection to neural network.

3 Problem Formulation

To explore the properties of FAL in deep learning, we formulate the problem in over-parameterized neural network regime. We start by presenting the notations and setup required for federated adversarial learning, then we will describe the loss function we use and our FAL algorithm.

3.1 Notations

For a vector , we use to denote its norm, in this paper we mainly consider the situation when or . For a matrix , we use to denote its transpose and use to denote its trace. We use to denote its entry-wise norm. We use to denote its spectral norm. We use to denote its Frobenius norm. For , we let be the -th column of . We let denotes . We let denotes .

We denote Gaussian distribution with mean and covariance as . We use to denote the ReLU function , and use to denote the indicator function of event .

3.2 Problem Setup

Two-layer ReLU network in FAL

Following recent theoretical work in understanding neural networks training in deep learning [DZPS19, ADH+19a, ADH+19b, SY19, LSS+20, SYZ21, Zha22], in this paper, we focus on a two-layer neural network that has neurons in the hidden layer, where each neuron is a ReLU activation function.

We define the global network as

| (1) |

and for , we define the local network of client as

| (2) |

Here is the global hidden weight matrix, is the local hidden weight matrix of client , denotes the output weight, denotes the bias.

During the process of federated adversarial learning, we only update the value of and , while keeping and equal to their initialization, so we can write the global network as and the local network as . For the situation we don’t care about the weight matrix, we write or for short.

Next, we make some standard assumptions regarding our training set.

Definition 3.1 (Dataset).

There are clients and data in total.111For simplicity, we assume that all clients have same number of training data. Our result can be generalized to the setting where each client has a different number of data as the future work. Let where denotes the training data of client . Without loss of generality, we assume holds for all , and the last coordinate of each point equals to , so we consider . For simplicity, we assume that holds for all and .222Our assumptions on data points are reasonable since we can do scale-up. In addition, norm normalization is a typical technique in experiments. Same assumptions also appears in many previous theoretical works like [ADH+19b, AZLL19, AZLS19b].

We now define the initialization for the neural networks.

Definition 3.2 (Initialization).

The initialization of is . The initialization of client c’s local weight matrix is . Here the second term in denotes iteration of local steps.

-

•

For each , are i.i.d. sampled from uniformly.

-

•

For each , and are i.i.d. random Gaussians sampled from . Here means the -entry of .

For each global iteration ,

-

•

For each , the initial value of client c’s local weight matrix is .

Next we formulate the adversary model that will be used.

Definition 3.3 (-Bounded adversary).

Let denote the function class. An adversary is a mapping which denotes the adversarial perturbation. For , we define the ball , we say an adversary is -bounded if it satisfies . Furthermore, given , we denote the worst-case adversary as , where is defined in Definition 3.5.

Well-separated training set

In the over-parameterized regime, it is a standard assumption that the training set is well-separated. Since we deal with adversarial perturbations, we require the following -separability, which is a bit stronger.

Definition 3.4 (-separability).

Let denote three parameters such that . We say our training set is globally -separable w.r.t a -bounded adversary, if holds for any and .

Note that in the above definition, the introducing of is for expression simplicity of Theorem 4.1, and the assumption is reasonable and easy to achieve in adversarial training. It is also noteworthy that, our problem setup does not need the assumption on independent and identically distribution (IID) on data, thus such a formation can be applied to unique challenge of the non-IID setting in FL.

3.3 Federated Adversarial Learning

Adversary and robust loss

We set the following loss for the sake of technical presentation simplicity, as is customary in prior studies [GCL+19, AZLL19]:

Definition 3.5 (Lipschitz convex loss).

A loss function is said to be a Lipschitz convex loss, if it satisfies the following properties: (i) convex w.r.t. the first input of ; (ii) Lipshcitz, which means and (iii) for all .

In this paper we assume is a Lipschitz convex loss. Next we define our robust loss function of a network, which is based on the adversarial samples generated by a -bounded adversary .

Definition 3.6 (Training loss).

Given a client’s training set of samples. Let be a net. The classical training loss of is . Given , we define the global loss as

Given an adversary that is -bounded, we define

the global loss with respect to as

and also define the global robust loss (in terms of worst-case) as

Moreover, since we deal with pseudo-net (Definition 5.1), we also define the loss of a pseudo-net as

and

Algorithm

We focus on a general FAL framework that is adapted from the most common adversarial training in the classical setting on the client. Specifically, we describe the adversarial learning of a local neural network against an adversary that generate adversarial examples during training as shown in Algorithm 1. As for the analysis of a general theoretical analysis framework, we do not specify the explicit format of .

The FAL algorithm contains two procedures: one is ClientUpdate running on client side and the other is ServerExecution running on server side. These two procedures are iteratively processed through communication iterations. Adversarial training is addressed in procedure ClientUpdate. Hence, there are two loops in ClientUpdate procedure: the outer loop is iteration for local model updating; and the inner loop is iteratively generating adversarial samples by the adversary . In the outer loop in ServerExecution procedure, the neural network’s parameters are updated to reduce its prediction loss on the new adversarial samples.

Notations: Training sets of clients with each client is indexed by , ; adversary ; local learning rate ; global learning rate ; local updating iterations ; global communication round .

4 Our Result

The main result of this work is showing the convergence of FAL algorithm (Algorithm 1) in overparameterized neural networks. Specifically, our defined global training loss (Definition 3.6) converges to a small with the chosen communication round , local and global learning rate , .

We now formally present our main result.

Theorem 4.1 (Federated Adversarial Learning).

Let be a fixed constant. Let denotes the total number of clients and denotes the number of data points per client. Suppose that our training set is globally -separable for some . Then, for all , there exists that satisfies: for every and , for all , with probability , running federated adversarial learning (Algorithm 1) with step size choices

will output a list of weights that satisfy:

The randomness comes from , , for .

Discussion

As we can see in Theorem 4.1, one key element that affects parameters is the data separability . As the data separability bound becomes larger, the parameter becomes smaller, resulting in the need of a larger global learning rate to achieve convergence. We also conduct numerical experiments in Appendix 6 to verify Theorem 4.1 empirically.

5 Proof Sketch

To handle the min-max objective in FAL, we formulate the optimization of FAL in the framework of online gradient descent333We refer our readers to [Haz16] for more details regarding online gradient descent. : at each local step on the client side, firstly the adversary generates adversarial samples and computes the loss function , then the local client learner takes the fresh loss function and update .

Compared with the centralized setting, the key difficulties in the convergence analysis of FL are induced by multiple local step updates of the client side and the step updates on both local and global sides. Specifically, local updates are not the standard gradient as the centralized adversarial training when . We used in substitution of the real gradient of to update the value of . This brings in challenges to bound the gradient of the neural networks. Nevertheless, gradient bounding is challenging in adversarial training solely.

To this end, we use gradient coupling method twice to solve this core problem: firstly we bound the difference between real gradient and FL gradient (defined below), then we bound the difference between pseudo gradient and real gradient.

5.1 Existence of small robust loss

In this section, we denote as the initialization of global weights and denote as the global weights of communication round . is the value of after small perturbations from which satisfies , here is a constant (e.g. ), is the width of the neural network and is a parameter. We will specify the concrete value of these parameters later in appendix.

We study the over-parameterized neural nets’ well-approximated pseudo-network to learn gradient descent for over-parameterized neural nets whose weights are close to initialization. Pseudo-network can be seen as a linear approximation of our two layer ReLU neural network near initialization, and the introducing of pseudo-network makes the proof more intuitive.

Definition 5.1 (Pseudo-network).

Given weights , and , for a neural network , we define the corresponding pseudo-network as

Existence of small robust loss

To obtain our main theorem, first we show that we can find a which is close to and also makes sufficiently small. Later in Theorem 5.6 we show that the average of is dominated by , thus we can prove the minimum of is small.

Theorem 5.2 (Existence, informal version of Theorem F.3).

For all , there are and satisfying: for all , with high probability there exists that satisfies and .

5.2 Convergence result for federated learning

Definition 5.3 (Gradient).

For a local real network , we denote its gradient by

If the corresponding pseudo-network is , then denote the pseudo-network gradient by

Now we consider the global network. We define pseudo gradient as and define FL gradient as , which is used in the proof of Theorem 5.6. We present our gradient coupling methods in the following two lemmas.

Lemma 5.4 (Bound the difference between real gradient and FL gradient, informal version of Lemma E.4).

With probability , for iterations satisfying , the gradients satisfy

The randomness is from , , for .

Lemma 5.5 (Bound the difference between pseudo gradient and real gradient, informal version of Lemma E.5).

With probability , for iterations satisfying , the gradients satisfy

The randomness is from , , for .

The above two lemmas are essential in proving Theorem 5.6, which is our convergence result.

Theorem 5.6 (Convergence result, informal version of Theorem E.3).

Let . Suppose . Let , let . There is , such that for every , with probability , for every satisfying , running Algorithm 1 with setting and will output weights that satisfy

The randomness comes from , , for .

In the proof of Theorem 5.6 we first bound the local gradient . We consider the pseudo-network and bound

where

In bounding , we unfold and have

We bound . By doing summation over , we have

In bounding , we apply Lemma 5.4 and have

where . As for the first term, we bound

and have , then we do summation and obtain

In bounding , we apply Lemma 5.5 and have

Then we do summation over and have

Putting it together with our choice of our all parameters (i.e. ), we obtain

From Theorem D.2 in appendix, we have and thus,

| (3) |

From the definition of we have . From the definition of loss we have . Moreover, since Eq. (3) holds for all , we can replace with . Thus we prove that for all ,

Combining the results

6 Numerical Results

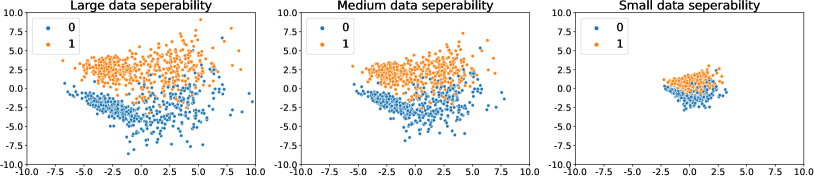

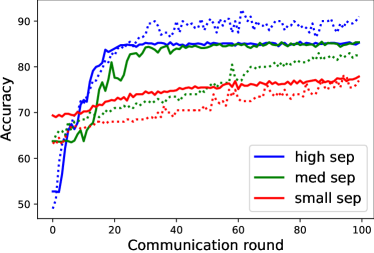

In this section, we examine our theoretical results (Theorem 4.1) on data separability, (Definition 3.4), a standard assumption is an over-parameterized neural network convergence analysis. We simulate synthetic data with different levels of data separability as shown in Fig. 1. Specifically, each data point contains two dimensions. Each class of data is generated from two Gaussian distributions (std=1) with different means. Each class consists of two Gaussian clusters where the intra-class cluster centroids are closer than the inter-class distances. We perform binary classification tasks on the simulated datasets using multi-layer perceptrons MLP with one hidden layer with 128 neurons. To increase learning difficulty, 5% of labels are randomly flipped. For each class, we simulated 400 data points as training sets and 100 data points as a testing set. The training data is even divided into four parts to simulate four clients. To simulate different levels of separability, we expand/shrink data features by (2.5, 1.5, 0.85) to construct (large, medium, small) data separability. Note that the whole dataset is not normalized before feeding into the classifier.

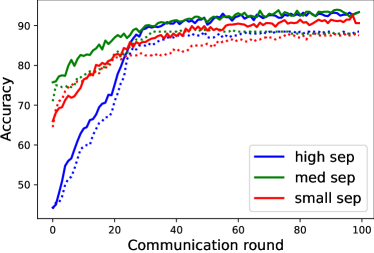

We deploy PGD [MMS+18] to generate adversarial examples during FAL training with the box of radius , each perturbation step of 7, and step length of 0.00784. Model aggregation follows FedAvg [MMR+17] after each local update. The batch size is 50, and SGD optimizer is used. We depict the training and testing accuracy curves in Fig. 2(a), where solid lines strand for training and dash line stand for testing. The total communication round for is 100, and we observe training convergence for high (blue) and medium (green) separability datasets with learning rate 1e-5. However, a low separability dataset requires a smaller learning rate (i.e., 5e-6) to avoid divergence. From Theorem 4.1, it is easy to see a larger data separability bound results in a smaller , and we can choose a larger learning rate to achieve convergence. Hence, the selection of learning rate for small separability is consistent with the constraint of learning rate implied in Theorem 4.1. We empirically observe results that a dataset with larger data separability converges faster with the flexibility of choosing a large learning rate, which is affirmative of our theoretical results that convergence round has a larger lower bound with a smaller , where . In addition, we compare with the accuracy curves obtained by using FedAvg [MMR+17]. As shown Fig. 2(b), all the datasets converge at around round 40. Therefore, we notice that the same data separability scales have larger affect in FAL training.

7 Conclusion

We have studied the convergence of a general format of adopting adversarial training in FL setting to improve FL training robustness. We propose the general framework, FAL, which deploys adversarial samples generation-based adversarial training method on the client-side and then aggregate local model using FedAvg [MMR+17]. In FAL, each client is trained via min-max optimization with inner loop adversarial generation and outer loop loss minimization. As far as we know, we are the first to detail the proof of theoretical convergence guarantee for over-parameterized ReLU network on the presented FAL strategy, using gradient descent. Unlike the convergence of adversarial training in classical settings, we consider the updates on both local client and global server sides. Our result indicates that we can control learning rates and according to the local update steps and global communication round to make the minimal loss close to zero. The technical challenges lie in the multiple local update steps and heterogeneous data, leading to the difficulties of convergence. Under ReLU Lipschitz and over-prameterization assumptions, we use gradient coupling methods twice. Together, we show the model updates of each global updating bounded in our federated adversarial learning. Note that we do not require IID assumptions for data distribution. In sum, the proposed FAL formulation and analysis framework can well handle the multi-local updates and non-IID data in FL. Moreover, our framework can be generalized to other FL aggregation methods, such as sketching and selective aggregation.

Roadmap

The appendix is organized as follows. We introduce the probability tools to be used in our proof in Section A. In addition, we introduce the preliminaries in Section B. We present the proof overview in Section C and additional remarks used in the proof sketch in Section D. We show the detailed proof for the convergence in Section E and the detailed proof of existence in Section F correspondingly.

Appendix A Probability Tools

We introduce the probability tools that will be used in our proof. First we present two lemmas about random variable’s tail bound in Lemma A.1 and A.2:

Lemma A.1 (Chernoff bound [Che52]).

Let , where with probability and with probability , and all are independent. Let . Then

1. , ;

2. , .

Lemma A.2 (Bernstein inequality [Ber24]).

Let be independent zero-mean random variables. Suppose that for almost surely. Then for all , we have

Next, we introduce Lemma A.3 about CDF of Gaussian distributions:

Lemma A.3.

Let denotes a Gaussian random variable, then we have

Finally, we introduce Claim A.4 about elementary anti-concentration property of Gaussian distribution.

Claim A.4.

Let and are independent Gaussian random variables. Then for all and that satisfies , we have

Appendix B Preliminaries

B.1 Notations

For a vector , we use to denote its norm, in this paper we mainly consider the situation when or .

For a matrix , we use to denote its transpose and use to denote its trace. We use to denote its entry-wise norm. We use to denote its spectral norm. We use to denote its Frobenius norm. For , we let be the -th column of . We let denotes . We let denotes . For two matrices and , we denote their Euclidean inner product as .

We denote Gaussian distribution with mean and covariance as . We use to denote the ReLU function, and use to denote the indicator function of .

B.2 Two layer neural network and initialization

In this paper, we focus on a two-layer neural network that has neurons in the hidden layer, where each neuron is a ReLU activation function. We define the global network as

| (4) |

and for , we define the local network of client as

| (5) |

Here is the global hidden weight matrix, is the local hidden weight matrix of client , and denotes the output weight, denotes the bias. During the process of federated adversarial learning, for convenience we keep and equal to their initialized values and only update and , so we can write the global network as and the local network as . For the situation we don’t care about the weight matrix, we write or for short. Next, we make some standard assumptions regarding our training set.

Definition B.1 (Dataset).

There are clients and data in total.444For simplicity, we assume that all clients have same number of training data. Our result can be generalized to the setting where each client has a different number of data as the future work. Let where denotes the training data of client . Without loss of generality, we assume that holds for all , and the last coordinate of each point equals to , so we consider . For simplicity, we assume that holds for all and .555Our assumptions on data points are reasonable since we can do scale-up. In addition, norm normalization is a typical technique in experiments. Same assumptions also appears in many previous theoretical works like [ADH+19b, AZLL19, AZLS19b].

We now define the initialization for the neural networks.

Definition B.2 (Initialization).

The initialization of is . The initialization of client c’s local weight matrix is . Here the second term in denotes iteration of local steps.

-

•

For each , are i.i.d. sampled from uniformly.

-

•

For each , and are i.i.d. random Gaussians sampled from . Here means the -entry of .

For each global iteration ,

-

•

For each , the initial value of client c’s local weight matrix is .

B.3 Adversary and Well-separated training sets

We first formulate the adversary as a mapping.

Definition B.3 (-Bounded adversary).

Let denote the function class. An adversary is a mapping which denotes the adversarial perturbation. For , we define the ball as , we say an adversary is -bounded if it satisfies

Moreover, given , we denote the worst-case adversary as , where is defined in Definition B.5.

In the over-parameterized regime, it is a standard assumption that the training set is well-separated. Since we deal with adversarial perturbations, we require the following -separability, which is a bit stronger.

Definition B.4 (-separability).

Let denote three parameters such that . We say our training set is globally -separable w.r.t a -bounded adversary, if

It is noteworthy that our problem setup does not need the assumption on independent and identically distribution (IID) on data, thus such a formation can be applied to unique challenge of the non-IID setting in FL.

B.4 Robust loss function

We define the following Lipschitz convex loss function that will be used.

Definition B.5 (Lipschitz convex loss).

A loss function is said to be a Lipschitz convex loss, if it satisfies the following four properties:

-

•

non-negative;

-

•

convex in the first input of ;

-

•

Lipshcitz, which means ;

-

•

for all .

In this paper we assume is a Lipschitz convex loss. Next, we define our robust loss function of a neural network, which is based on the adversarial examples generated by a -bounded adversary .

Definition B.6 (Training loss).

Given a client’s training set of samples. Let be a net. We define loss to be . Given , the global loss is defined as

Given an adversary that is -bounded, we define the global loss with respect to as

and also define the global robust loss (in terms of worst-case) as

Moreover, since we deal with pseudo-net which is defined in Definition D.1, we also define the loss of a pseudo-net as and .

B.5 Federated Adversarial Learning algorithm

Classical adversarial training algorithm can be found in [ZPD+20]. Different from the classical setting, our federated adversarial learning of a local neural network against an adversary is shown in Algorithm 2, where there are two procedures: one is ClientUpdate running on client side and the other is ServerExecution running on server side. These two procedures are iteratively processed through communication iterations. Adversarial training is addressed in procedure ClientUpdate. Hence, there are two loops in ClientUpdate procedure: the outer loop is iteration for local model updating; and the inner loop is iteratively generating adversarial samples by the adversary . In the outer loop in ServerExecution procedure, the neural network’s parameters are updated to reduce its prediction loss on the new adversarial samples. These loops constitute an intertwining dynamics.

Appendix C Proof Overview

In this section we give an overview of our main result’s proof. Two theorems to be used are Theorem E.3 and Theorem F.3.

C.1 Pseudo-network

We study the over-parameterized neural nets’ well-approximated pseudo-network to learn gradient descent for over-parameterized neural nets whose weights are close to initialization. The introducing of pseudo-network makes the proof more intuitive.

C.2 Online gradient descent in federated adversarial learning

Our federated adversarial learning algorithm is formulated in online gradient descent framework: at each local step on the client side, firstly the adversary generates adversarial samples and computes the loss function , then the local client learner takes the fresh loss function and update . We refer our readers to [GCL+19, Haz16] for more details regarding online learning and online gradient descent.

Compared with the centralized setting, the key difficulties in the convergence analysis of FL are induced by multiple local step updates of the client side and the step updates on both local and global sides. Specifically, local updates are not the standard gradient as the centralized adversarial training when . We used in substitution of the real gradient of to update the value of . This brings in challenges to bound the gradient of the neural networks. Nevertheless, gradient bounding is challenging in adversarial training solely. We use gradient coupling method twice to solve this core problem: firstly we bound the difference between real gradient and FL gradient in Lemma E.4, then we bound the difference between pseudo gradient and real gradient in Lemma E.5. We show the connection of online gradient descent and federated adversarial learning in the proof of Theorem E.3.

C.3 Existence of robust network near initialization

In Section F we show that there exists a global network whose weight is close to the initial value and makes the worst-case global loss sufficiently small. We show that the required width is .

Appendix D Real approximates pseudo

To make additional remark to proof sketch in Section 5, in this section, we state a tool that will be used in our proof that is related to our definition of pseudo-network. First, we recall the definition of pseudo-network.

Definition D.1 (Pseudo-network).

Given weights , and , the global neural network function is defined as

Given this , we define the corresponding pseudo-network function as

From the definition we can know that pseudo-network can be seen as a linear approximation of the two layer ReLU network we study near initialization. Next, we cite a Theorem from [ZPD+20], which gives a uniform bound of the difference between a network and its pseudo-network.

Theorem D.2 (Uniform approximation, Theorem 5.1 in [ZPD+20]).

Suppose is a constant. Let . As long as , with prob. , for every satisfying , we have is at most .

The randomness is due to initialization.

Appendix E Convergence

| Section | Statement | Comment | Statements Used |

|---|---|---|---|

| E.1 | Definition E.1 and E.2 | Definition | - |

| E.2 | Theorem E.3 | Convergence result | Lem. E.4, E.5, Thm. D.2 |

| E.3 | Lemma E.4 | Approximates real gradient | - |

| E.4 | Lemma E.5 | Approximates pseudo gradient | Claim E.6 |

| E.5 | Claim E.6 | Auxiliary bounding | Claim A.4 |

E.1 Definitions and notations

In Section E, we follow the notations used in Definition D.1. Since we are dealing with pseudo-network, we first introduce some additional definitions and notations regarding gradient.

Definition E.1 (Gradient).

For a local real network , we denote its gradient by

If the corresponding pseudo-network is , then we define the pseudo-network gradient as

Now we consider the global matrix. For convenience we write and . We define the FL gradient as .

Definition E.2 (Distance).

For such that , we define the following distance for simplicity:

We have and by using triangle inequality.

| Notation | Meaning | Satisfy |

|---|---|---|

| or | Initialization of | |

| The value of after iterations | ||

| The value of after small perturbations from |

E.2 Convergence result

We are going to prove Theorem E.3 in this section.

Theorem E.3 (Convergence, formal version of Theorem 5.6).

Let . Suppose . Let . Let . There is , such that for every , with probability , if we run Algorithm 2 by setting

then for every such that , the output weights satisfy

The randomness is from , , .

Proof.

We set our parameters as follows:

Since the loss function is -Lipschitz, we first bound the norm of real net gradient:

| (6) |

Now we consider the pseudo-net gradient. The loss is convex in due to the fact that is linear with . Then we have

where the last step follows from

Note that the FL gradient is the direction moved by center, in contrast, is the true gradient of function . We deal with these three terms separately. As for , we have

and by rearranging the equation we get

Next, we need to upper bound ,

| (7) |

where the last step follows from . Then we do summation over and have

where the last step follows from Eq. (E.2) and .

As for , we apply Lemma E.4 and also triangle inequality and have

By using Eq. (6) we bound the size of :

and have

Then we do summation over and have

As for , we apply Lemma E.5 and have

Since , we have

Then we do summation over and have

Next we put it altogether. Note that , thus we obtain

We then have

| (8) | ||||

From Theorem D.2 we know

In addition,

| (9) |

From the definition of we have . From the definition of loss we have . Moreover, since Eq. (9) holds for all , we can replace with . Thus we prove that for ,

∎

E.3 Approximates real global gradient

We are going to prove Lemma E.4 in this section.

Lemma E.4 (Bounding the difference between real gradient and FL gradient).

Let With probability , for iterations satisfying

the following holds:

The randomness is from , , for at .

Proof.

Notice that and

So we have

where the last step follows from the assumption that .

As for , we have

Then we do summation and have

Thus we finish the proof. ∎

E.4 Approximates pseudo global gradient

We are going to prove Lemma E.5 in this section.

Lemma E.5.

Let . With probability , for iterations satisfying

the following holds:

The randomness is because , , , for .

Proof.

Notice that and . By Claim E.6, with the given probability we have

For indices satisfying , the following holds:

where the first step is definition, the second step follows that the loss function is -Lipschitz, the third step follows from and , the last step follows from the bound of the indicator function. Thus, we do the conclusion:

and finish the proof. ∎

E.5 Bounding auxiliary

Claim E.6 (Bounding auxiliary).

Let . With probability , we have

The randomness is from , , for .

Proof.

For , let . By Claim A.4 we know that for each we have

By putting a union bound on and , we get

Since

we have

By applying concentration inequality on (independent Bernoulli) for , we obtain that with prob.

the following holds:

Thus we finish the proof.

∎

E.6 Further Discussion

Note that in the proof of Theorem E.3 we set the hidden layer’s width to be greater than , which seems impractical in reality: if we choose our convergence accuracy to be , the width will become which is impossible to achieve.

However, we want to claim that the "" term is not intrinsic in our theorem and proof, and we can actually further improve the lower bound of to where is some constant between and . To be specific, we observe from Eq. (E.2) that the "" term comes from , where appears in Lemma E.4 and appears in the assumption that in Definition E.2. As for our observations, the term is hard to improve. On the other hand, we can actually adjust the value of as long as we ensure

for some constant . When we let , the final result will achieve

which is much more feasible in reality.

As the first work and the first step towards understanding the convergence of federated adversarial learning, the priority of our work is not achieving the tightest bounds. Instead, our main goal is to show the convergence of a general federated adversarial learning framework. Nevertheless, we will improve the bound in the final version.

Appendix F Existence

In this section we prove the existence of that is close to and makes close to zero.

F.1 Tools from previous work

In order to prove our existence result, we first state two lemmas that will be used.

Lemma F.1 (Lemma 6.2 from [ZPD+20]).

Suppose that holds for each pair of two different data points . Let , then there a polynomial with size of coefficients no bigger than and degree no bigger than , that satisfies for all and ,

We let and have .

Lemma F.2 (Lemma 6.5 from [ZPD+20]).

Suppose . Suppose

As long as , with prob. , there that satisfies

The randomness is due to , , for .

F.2 Existence result

We are going to present Theorem F.3 in this section and present its proofs.

Theorem F.3 (Existence, formal version of Theorem 5.2).

Suppose that . Suppose

As long as , then with prob. , there exists satisfying

The randomness comes from , , for .

Proof.

For convenient, we define

By combining these two results with Theorem D.2, we have that for all , with prob.

there that satisfies .

In addition, the following properties:

-

•

is at most

-

•

is at most

Consider the loss function. For all , and , we have

Thus, we have that

Furthermore, since the we consider satisfies , the holding probability is

Thus, it finishes the proof of this theorem.

∎

References

- [ACW18] Anish Athalye, Nicholas Carlini, and David Wagner. Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples. arXiv preprint arXiv:1802.00420, 2018.

- [ADH+19a] Sanjeev Arora, Simon S Du, Wei Hu, Zhiyuan Li, Ruslan Salakhutdinov, and Ruosong Wang. On exact computation with an infinitely wide neural net. In NeurIPS. https://arxiv.org/pdf/1904.11955.pdf, 2019.

- [ADH+19b] Sanjeev Arora, Simon S Du, Wei Hu, Zhiyuan Li, and Ruosong Wang. Fine-grained analysis of optimization and generalization for overparameterized two-layer neural networks. In ICML. https://arxiv.org/pdf/1901.08584.pdf, 2019.

- [AZLL19] Zeyuan Allen-Zhu, Yuanzhi Li, and Yingyu Liang. Learning and generalization in overparameterized neural networks, going beyond two layers. In NeurIPS. https://arxiv.org/pdf/1811.04918.pdf, 2019.

- [AZLS19a] Zeyuan Allen-Zhu, Yuanzhi Li, and Zhao Song. A convergence theory for deep learning via over-parameterization. In ICML. https://arxiv.org/pdf/1811.03962.pdf, 2019.

- [AZLS19b] Zeyuan Allen-Zhu, Yuanzhi Li, and Zhao Song. On the convergence rate of training recurrent neural networks. In NeurIPS. https://arxiv.org/pdf/1810.12065.pdf, 2019.

- [BCMC19] Arjun Nitin Bhagoji, Supriyo Chakraborty, Prateek Mittal, and Seraphin Calo. Analyzing federated learning through an adversarial lens. In International Conference on Machine Learning, pages 634–643. PMLR, 2019.

- [Ber24] Sergei Bernstein. On a modification of chebyshev’s inequality and of the error formula of laplace. Ann. Sci. Inst. Sav. Ukraine, Sect. Math, 1(4):38–49, 1924.

- [BPSW21] Jan van den Brand, Binghui Peng, Zhao Song, and Omri Weinstein. Training (overparametrized) neural networks in near-linear time. In ITCS. https://arxiv.org/pdf/2006.11648.pdf, 2021.

- [CH20] Francesco Croce and Matthias Hein. Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks. In International Conference on Machine Learning, pages 2206–2216. PMLR, 2020.

- [Che52] Herman Chernoff. A measure of asymptotic efficiency for tests of a hypothesis based on the sum of observations. The Annals of Mathematical Statistics, pages 493–507, 1952.

- [CW17] Nicholas Carlini and David Wagner. Towards evaluating the robustness of neural networks. In 2017 IEEE Symposium on Security and Privacy (SP), pages 39–57. IEEE, 2017.

- [DKM20] Yuyang Deng, Mohammad Mahdi Kamani, and Mehrdad Mahdavi. Distributionally robust federated averaging. Advances in Neural Information Processing Systems, 33:15111–15122, 2020.

- [DLL+19] Simon S Du, Jason D Lee, Haochuan Li, Liwei Wang, and Xiyu Zhai. Gradient descent finds global minima of deep neural networks. In ICML. https://arxiv.org/pdf/1811.03804, 2019.

- [DZPS19] Simon S Du, Xiyu Zhai, Barnabas Poczos, and Aarti Singh. Gradient descent provably optimizes over-parameterized neural networks. In ICLR. https://arxiv.org/pdf/1810.02054.pdf, 2019.

- [GCL+19] Ruiqi Gao, Tianle Cai, Haochuan Li, Cho-Jui Hsieh, Liwei Wang, and Jason D Lee. Convergence of adversarial training in overparametrized neural networks. Advances in Neural Information Processing Systems, 32:13029–13040, 2019.

- [GSS14] Ian J Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572, 2014.

- [Haz16] Elad Hazan. Introduction to online convex optimization. Foundations and Trends in Optimization, 2(3-4):157–325, 2016.

- [HLSY21] Baihe Huang, Xiaoxiao Li, Zhao Song, and Xin Yang. Fl-ntk: A neural tangent kernel-based framework for federated learning analysis. In International Conference on Machine Learning, pages 4423–4434. PMLR, 2021.

- [JGH18] Arthur Jacot, Franck Gabriel, and Clément Hongler. Neural tangent kernel: Convergence and generalization in neural networks. In Advances in neural information processing systems (NeurIPS), pages 8571–8580, 2018.

- [KGB16] Alexey Kurakin, Ian Goodfellow, and Samy Bengio. Adversarial machine learning at scale. arXiv preprint arXiv:1611.01236, 2016.

- [LGD+20] Xiaoxiao Li, Yufeng Gu, Nicha Dvornek, Lawrence Staib, Pamela Ventola, and James S Duncan. Multi-site fmri analysis using privacy-preserving federated learning and domain adaptation: Abide results. Medical Image Analysis, 2020.

- [LHY+19] Xiang Li, Kaixuan Huang, Wenhao Yang, Shusen Wang, and Zhihua Zhang. On the convergence of fedavg on non-iid data. arXiv preprint arXiv:1907.02189, 2019.

- [LJZ+21] Xiaoxiao Li, Meirui JIANG, Xiaofei Zhang, Michael Kamp, and Qi Dou. Fedbn: Federated learning on non-iid features via local batch normalization. In International Conference on Learning Representations, 2021.

- [LL18] Yuanzhi Li and Yingyu Liang. Learning overparameterized neural networks via stochastic gradient descent on structured data. In NeurIPS. https://arxiv.org/pdf/1808.01204.pdf, 2018.

- [LLC+19] Xinle Liang, Yang Liu, Tianjian Chen, Ming Liu, and Qiang Yang. Federated transfer reinforcement learning for autonomous driving. arXiv preprint arXiv:1910.06001, 2019.

- [LLH+20] Wei Yang Bryan Lim, Nguyen Cong Luong, Dinh Thai Hoang, Yutao Jiao, Ying-Chang Liang, Qiang Yang, Dusit Niyato, and Chunyan Miao. Federated learning in mobile edge networks: A comprehensive survey. IEEE Communications Surveys & Tutorials, 22(3):2031–2063, 2020.

- [LSS+20] Jason D Lee, Ruoqi Shen, Zhao Song, Mengdi Wang, and Zheng Yu. Generalized leverage score sampling for neural networks. In NeurIPS, 2020.

- [LSZ+20] Tian Li, Anit Kumar Sahu, Manzil Zaheer, Maziar Sanjabi, Ameet Talwalkar, and Virginia Smith. Federated optimization in heterogeneous networks. In Conference on Machine Learning and Systems, 2020a, 2020.

- [MMR+17] Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. Communication-efficient learning of deep networks from decentralized data. In Artificial Intelligence and Statistics, pages 1273–1282. PMLR, 2017.

- [MMS+18] Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. Towards deep learning models resistant to adversarial attacks. In ICLR. https://arxiv.org/pdf/1706.06083.pdf, 2018.

- [MOSW22] Alexander Munteanu, Simon Omlor, Zhao Song, and David Woodruff. Bounding the width of neural networks via coupled initialization a worst case analysis. In International Conference on Machine Learning, pages 16083–16122. PMLR, 2022.

- [RCZ+20] Sashank Reddi, Zachary Charles, Manzil Zaheer, Zachary Garrett, Keith Rush, Jakub Konečnỳ, Sanjiv Kumar, and H Brendan McMahan. Adaptive federated optimization. arXiv preprint arXiv:2003.00295, 2020.

- [RFPJ20] Amirhossein Reisizadeh, Farzan Farnia, Ramtin Pedarsani, and Ali Jadbabaie. Robust federated learning: The case of affine distribution shifts. arXiv preprint arXiv:2006.08907, 2020.

- [RHL+20] Nicola Rieke, Jonny Hancox, Wenqi Li, Fausto Milletari, Holger R Roth, Shadi Albarqouni, Spyridon Bakas, Mathieu N Galtier, Bennett A Landman, Klaus Maier-Hein, et al. The future of digital health with federated learning. NPJ digital medicine, 3(1):1–7, 2020.

- [SKC18] Pouya Samangouei, Maya Kabkab, and Rama Chellappa. Defense-gan: Protecting classifiers against adversarial attacks using generative models. arXiv preprint arXiv:1805.06605, 2018.

- [SY19] Zhao Song and Xin Yang. Quadratic suffices for over-parametrization via matrix chernoff bound. In arXiv preprint. https://arxiv.org/pdf/1906.03593.pdf, 2019.

- [SYZ21] Zhao Song, Shuo Yang, and Ruizhe Zhang. Does preprocessing help training over-parameterized neural networks? Advances in Neural Information Processing Systems, 34, 2021.

- [SZS+13] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. In arXiv preprint. https://arxiv.org/pdf/1312.6199.pdf, 2013.

- [SZZ21] Zhao Song, Lichen Zhang, and Ruizhe Zhang. Training multi-layer over-parametrized neural network in subquadratic time. arXiv preprint arXiv:2112.07628, 2021.

- [TKP+17] Florian Tramèr, Alexey Kurakin, Nicolas Papernot, Ian Goodfellow, Dan Boneh, and Patrick McDaniel. Ensemble adversarial training: Attacks and defenses. arXiv preprint arXiv:1705.07204, 2017.

- [TZT18] Zhuozhuo Tu, Jingwei Zhang, and Dacheng Tao. Theoretical analysis of adversarial learning: A minimax approach. arXiv preprint arXiv:1811.05232, 2018.

- [WLL+20] Jianyu Wang, Qinghua Liu, Hao Liang, Gauri Joshi, and H Vincent Poor. Tackling the objective inconsistency problem in heterogeneous federated optimization. arXiv preprint arXiv:2007.07481, 2020.

- [WTS+19] Shiqiang Wang, Tiffany Tuor, Theodoros Salonidis, Kin K. Leung, Christian Makaya, Ting He, and Kevin Chan. Adaptive federated learning in resource constrained edge computing systems. IEEE Journal on Selected Areas in Communications, 37(6):1205–1221, 2019.

- [YCKB18] Dong Yin, Yudong Chen, Ramchandran Kannan, and Peter Bartlett. Byzantine-robust distributed learning: Towards optimal statistical rates. In International Conference on Machine Learning, pages 5650–5659. PMLR, 2018.

- [Zha22] Lichen Zhang. Speeding up optimizations via data structures: Faster search, sample and maintenance. Master’s thesis, Carnegie Mellon University, 2022.

- [ZHD+20] Xinwei Zhang, Mingyi Hong, Sairaj Dhople, Wotao Yin, and Yang Liu. Fedpd: A federated learning framework with optimal rates and adaptivity to non-iid data. arXiv preprint arXiv:2005.11418, 2020.

- [ZLL+18] Yue Zhao, Meng Li, Liangzhen Lai, Naveen Suda, Damon Civin, and Vikas Chandra. Federated learning with non-iid data. arXiv preprint arXiv:1806.00582, 2018.

- [ZLL+21] Gaoyuan Zhang, Songtao Lu, Sijia Liu, Xiangyi Chen, Pin-Yu Chen, Lee Martie, and Mingyi Horesh, Lior abd Hong. Distributed adversarial training to robustify deep neural networks at scale. 2021.

- [ZPD+20] Yi Zhang, Orestis Plevrakis, Simon S Du, Xingguo Li, Zhao Song, and Sanjeev Arora. Over-parameterized adversarial training: An analysis overcoming the curse of dimensionality. In NeurIPS. arXiv preprint arXiv:2002.06668, 2020.

- [ZRSB20] Giulio Zizzo, Ambrish Rawat, Mathieu Sinn, and Beat Buesser. Fat: Federated adversarial training. arXiv preprint arXiv:2012.01791, 2020.