Federated Geometric Monte Carlo Clustering to Counter Non-IID Datasets

Abstract

Federated learning allows clients to collaboratively train models on datasets that are acquired in different locations and that cannot be exchanged because of their size or regulations. Such collected data is increasingly non-independent and non-identically distributed (non-IID), negatively affecting training accuracy. Previous works tried to mitigate the effects of non-IID datasets on training accuracy, focusing mainly on non-IID labels, however practical datasets often also contain non-IID features. To address both non-IID labels and features, we propose FedGMCC111 Code and genome dataset available at: https://figshare.com/s/dc2f4280ce012e12f414 , a novel framework where a central server aggregates client models that it can cluster together. FedGMCC clustering relies on a Monte Carlo procedure that samples the output space of client models, infers their position in the weight space on a loss manifold and computes their geometric connection via an affine curve parametrization. FedGMCC aggregates connected models along their path connectivity to produce a richer global model, incorporating knowledge of all connected client models. FedGMCC outperforms FedAvg and FedProx in terms of convergence rates on the EMNIST62 and a genomic sequence classification datasets (by up to +63%). FedGMCC yields an improved accuracy (+4) on the genomic dataset with respect to CFL, in high non-IID feature space settings and label incongruency.

I Introduction

Federated learning (FL) frameworks [1, 2] are commonly used when regulations (such as the GDPR222https://gdpr.eu/) or mere data volume prevent data exchanges. Datasets are distributed across the members (clients) of the federation and the global machine learning optimization problem can approximately be split up into smaller sub-problems that are distributed across independently acting clients. Client solutions are aggregated either by a central server or in a distributed manner. However, this approximation only holds in an idealised settings where client datasets are sampled from the same distribution and data samples form independent events. Deviating from independent and identically distributed datasets (IID) poses a challenge to most FL approaches and results in global models that fail to accurately represent the entire aggregated dataset [3, 4, 5, 6]. Sources of non-IID-ness can be found in both the label space and the feature space. In the former, for a given feature, different labels might be attributed due to regional differences (e.g., different sentiments in fashion). This is also termed concept shift and leads ultimately to clients with incongruent labelling participating to the training of a common FL model. In addition, classes may be imbalanced due to one class of labels being over-represented with respect to others. This paper focuses on the latter, i.e., feature space IID violations. They appear for example when a particular feature is over-represented in a member’s dataset compared to other datasets. A biobank might sample genes that bias towards a particular local population [7] with the consequence of possibly overlooking regional differences, such as the well known preponderance of a specific gene mutation coding for sickle cell disease in the Sub-Saharan Africa population [8]. For a given label, features might also vary across datasets, such as different handwriting styles for the same alphanumeric character (e.g., 7 with and without bar). Non-IID-ness may lead to feature and label skew [3] and accuracy degradation [4, 6, 9] by leaving the choice between starting from different initialization weights, causing models to diverge, or accepting diversity suppression when starting from the same weights [5].

Besides statistical heterogeneity, FL algorithms need to cope with system heterogeneity, leading to asynchronous model updates or incomplete local training. The first proposed FL attempt, FedAvg [1], aggregates all client models by averaging their weights, leading to the above inaccuracies in the presence of non-IID-ness. Variations [6, 10] of FedAvg have been proposed, with satisfactory results when the non-IID-ness is less pronounced and exclusively in the label space (see Sec. II). Federated Clustering [11, 12, 13, 14] tackles this problem by assigning clients to separate clusters and hence limiting the deleterious transfers of negative knowledge between member models that have been trained on distinct datasets. These approaches result in the construction of trained models with reasonable accuracy despite IID violations, however, at the cost of significant communication and/or computational overheads.

In this paper, focusing on non-IID-ness in the feature space, we propose a novel Monte Carlo Clustering approach, called FedGMCC, to counter non-IID-ness without compromising final model accuracy and while maintaining low communication costs and data privacy. We show that in order to train a global FL model in a non-IID setting, the objective function to be minimized has to be conceptually split up into two components: the first encompasses the IID component for which the usual FedAvg solution holds; the second captures the non-IID contribution, which we show can be solved by introducing interaction between client models. We derive this interaction by leveraging an observation about the geometry of training loss manifolds [15, 16, 17, 18], namely that seemingly different, stationary solutions to the training loss minimization problem, obtained at the individual member sites, are often connected via simple parametric curves where the training loss is approximately flat. The existence of these curves reveals the pair-wise interaction between models needed to solve the non-IID problem, which leads us to establish a criterion for clustering seemingly different solutions and suppressing negative knowledge transfer between non-connected ones. More importantly, we show that drawing from [19], averaging models along the curve parametrization, can produce global models with enhanced accuracy and generalization. As a summary, we make the following contributions.

-

1.

We separate the FL objective in an IID and a non-IID components and, based on the geometry of curved training loss manifolds, put forward an Ansatz solution that simultaneously minimizes both components.

-

2.

We demonstrate how to construct this solution using a Monte Carlo sampling of the received client model output spaces, and present novel model weight clustering and aggregation rules.

- 3.

II Related Work

Among the myriad of published FL algorithms, most of them rely on clients to upload model weight updates onto a central server that proceeds to aggregate them and to redistribute the result back to clients. The main difference often relies in the way the aggregation rule is applied. FedAvg [1] was first to allow clients to train a global model locally, instead of transferring data. FedAvg returns to a central server only for aggregating all local models by averaging their weights. FedProx [6] inhibits local updates, by adding a proximal term to the loss function, which restrains divergence between local and global model weights. FedBn [10] achieves accurate results on non-IID datasets by batch normalizing the local neural networks’ input layer before aggregation. FedFV [20] detects inconsistent gradient updates and corrects them before the aggregation step. Our approach (FedGMCC) aggregates models by averaging weights along their geometric connection.

Federated clustering groups certain client models into separate clusters to prevent negative knowledge transfer such as the iterative federated clustering algorithm (IFCA) [11] which solves an incongruency that occurs when non-IID datasets exhibit concept shifts by training multiple models on local data and returning the one with the lowest aggregation loss. However, these works primarily focus on label-space non-IID-ness, leading to inaccurate results in the presence of feature-space non-IID-ness. Moreover, training multiple models induce high communication and computation costs.

Merging local models with approximately the same weights (according to cosine similarity, L1 or L2 norm) reduces the number of models in the ensemble [12, 13]. For example, clustered FL (CFL) [13] merges models if their weights (or gradients) compare well enough based on a specified metric. Again CFL focuses on label non-IID-ness. In contrast, FedGMCC explicitly considers feature-space non-IID-ness. Among the aforementioned FL approaches, CFL is the most reminiscent to our approach, with two differences. First in CFL, the central server applies clustering after multiple FedAvg iterations whereas FedGMCC clusters after each client-server communicating round. Second, CFL’s clustering rule relies on measuring whether the gradients of individual client-weight models are coherent (parallel) via the cosine similarity measure. Our approach verifies that a parallel transport of one client model weights to the other is feasible. Hence, FedGMCC extends CFL’s gradient coherency measure to include intermediary models along the affine connection.

III Problem Description

We consider clients that aim to locally minimize a local objective . Clients send their respective solutions (client model weights) to a central server to approximate with aggregate a global solution for the loss minimization problem , which maps the classes of the compact input space into the label space , where . We consider the neural network as a function , parametrized by weights , that maps to the probability simplex . We write for the probability of the -th class and define the population loss at federation member as the cross-entropy loss .

In an IID setting, the optimization problem minimizing can be expressed as sub-problems that train local models via stochastic gradient descent (SGD), which are then communicated to a server for aggregation after each communication round (indexed by ):

| (1) |

FedAvg [1] makes use of the weighted averaging aggregation function , where is the fraction of the -th member’s local dataset with repect to the overall dataset size. Unfortunately, in a non-IID setting, this aggregation is not a valid approximation.

We follow [5] in quantifying non-IID-ness with the help of the Earth Mover Distance (EMD) [21] and write for the EMD of distributions obtained by averaging the set of pairwise EMDs (cf. Apx. A).

Weighted averaging fails as an aggregation function under non-IID datasets [5] because: (1) if weights in client models are all initialized identically, the label-space non-IID-ness between client dataset distributions will be the dominant factor and weights will diverge; and (2) if weights are initialized differently, client models risk converging to different solutions 333Without a formal proof,a third contribution term to weight divergence could be added in terms of feature-space non-iid. The steepness of local loss function pockets in relation to the local flatness of the loss manifold exacerbates the divergence after aggregation and hence leads to sub-optimal global model performance [22], which we address with Geometric Monte Carlo Clustering.

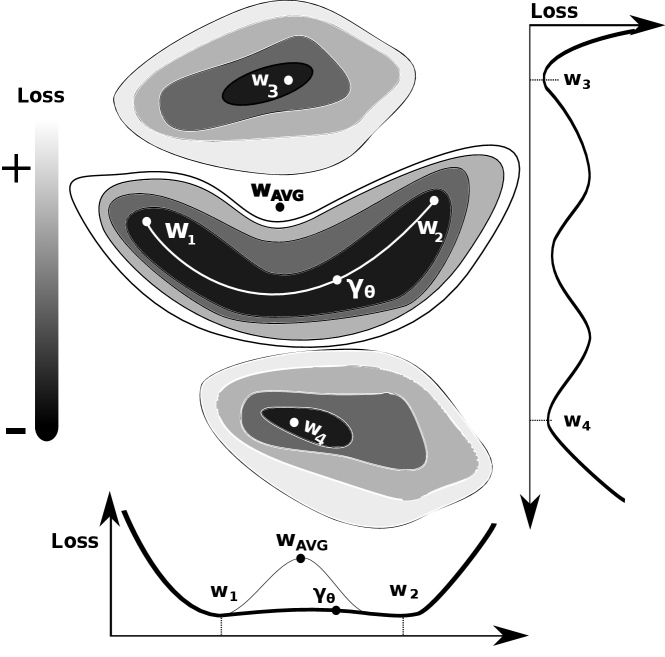

IV Geometric Monte Carlo Clustering

In a non-IID setting, individual client models are set to converge during training towards distinct local minima, i.e., different coordinates in weight space on the training loss manifold (see Fig. 1). In particular, when the non-IID-ness lies exclusively in the feature space between two client datasets and , we hypothesize the existence of a continuous transformation (e.g. pixel rotation, color inversion, shape distortion, etc.) that maps, on average, one subset of features detained by one client to a subset of features of a different client . As a consequence, we suppose that the model weights of one client are trained to encode a set of transformed features with respect to another client. Hence we hypothesize the existence of a continuous transformation that maps one subset of weights to another one and that can alternatively be modeled by a continuous affine connection via a curve parametrization . This curve connects and (with and ) ideally on a loss surface where the loss value does not vary. That is, two neural nets parametrized by and agree on the output space given the same input. Along this curve of invariant loss reside a family of intermediary model weights that can be aggregated to produce a richer global model, incorporating the knowledge of both client models. In contrast, under label-space IID violations, particularly concept shifts, we expect no continuous curve to be found (e.g. between and on Fig. 1) since they disagree on the classification of a same input feature. In this case, a well constructed clustering rule should separate both models to avoid negative knowledge transfer. This curve finding is at the heart of our novel Federated Geometric Monte Carlo Clustering algorithm (FedGMCC).

IV-A Prerequisites

Key to FedGMCC is the treatment of non-IID datasets as perturbations of probability distributions from the ideal IID baseline , where and . Datasets that originally had overlapping feature and label representations have, after perturbation, a component that contributes to their non-IID-ness. Let us denote as the labeled dataset drawn from the distribution such that and as the datasets drawn from such that , where . The training objective is:

| (2) |

is the usual IID loss function (see Eq. 1). The pairwise interaction loss captures the training loss on a pair of non-IID distributions. We use the solutions to the pure IID objective to approximate the solution to .

IV-B Curve Finding

We intuit the solution to the minimization of Eq. 2 to be of the form where introduces a pairwise interaction between two client model weights and balances its contribution to the total solution with a coupling constant . As we have already hinted in the previous section on the possibility of connecting distinct model weights via a transformation, we propose to model this interaction as an affine connection between weights and by a smooth curve on the loss function manifold of dimension equal to the number of parameters of the neural net and parametrized by . We need to find the transport parameter that leaves the gradient of the second term in Eq. 2 parallel for every . This amounts to solving the geodesic equation with boundary conditions and . Ideally, the central server, which detains the individual client model weights, should execute the curve finding procedure after every local update. However, since the central server does not have access to the datasets in order to explore the loss manifold, we sample a surrogate version of the latter by generating a Monte Carlo type input dataset drawn from a uniform distribution to reconstruct the loss from the label-space output , using mean-squared error (MSE) as loss function, since we merely compare raw outputs. The curve is then derived by perturbing the parameter in the direction where the loss does not vary. We rely on a polygonal chain as Ansatz, as it has been proven to lead to optimal curve finding results [15].

| (3) |

Following [15], we simplify the loss to be minimized to the expectation of with respect to uniform distribution on the curve on , making the loss computationally tractable.

| (4) |

IV-C Model Weights Clustering and Aggregation

Garipov et. al [15] showed that two models initialized differently and trained on the same dataset can be connected via a simple curve. Two models trained independently on distributed datasets with only partially overlapping features are also expected to converge to different stationary solutions. Nevertheless, we expect these seemingly different solutions to be interlinked via an affine connection in weight space i.e a smooth curve can be constructed that along a approximately flat loss manifold. Hence, seeminly different solution form a family of equivalent solutions to the loss minimization problem which leads us to put forward the following clustering rule.

Proposition 1.1 , two models with weights and belong to the same cluster if there exists a that parametrizes the curve and such that

| (5) |

Because generates a family of intermediary model weights, all approximate solutions to the optimization problem (see Eq. 2), their sum must also be a solution.

[19] showed that averaging intermediary model weights on the constructed curve along can lead to an aggregated model with improved generalization.

Proposition 1.2 Model weights belonging to the same cluster are aggregated with

| (6) |

IV-D Algorithm

FedGMCC is described in Alg. 1 and schematized in Fig. 1. The server samples the output space of each received client model by generating a Monte Carlo input dataset and tests via Prop. 1.1 whether two models can be grouped together. This procedure can be seen as a disjoint-set query in which model weights that could not be connected to any other model weights are kept as singleton sets (e.g., on Fig. 1). With disjoint sets, the weights that belong to a same cluster are aggregated by averaging all pairwise interactions leading to clustered global solutions to the non-IID problem (see Proposition 1.2). is then sent to the clients that contributed to it.

V Evaluation

To evaluate our approach, we show that real-life data is in fact non-IID in the feature space and compare the accuracy of our approach — Federated Geometric Monte-Carlo Clustering (FedGMCC) — against state-of-the art federated learning approaches (FedAVG [1], FedProx [6], CFL [13] and standard SGD). We leverage the well known image dataset EMNIST62 [23] and two sequential genomic datasets (SENSG-A and SENSG-R) we created (see Appx. B). EMNIST62 contains 814255 handwritten characters, labeled as 62 unbalanced classes in 28x28 pixel format. The genomic dataset contains reads (i.e., sequences of the bases A, T, C, G) of size 150 and every position is labelled according to whether or not it is part of a genomic variation. Such labelling is important, for example, to filter out and protect private information in the genomic information processing pipeline [24]. We introduce a concept shift in SENSG-R by randomly flipping the label of the most recurrent genomic features. EMNSIT62 and SENSG-A were distributed among clients following the procedure detailed in Appx. B. This partitioning introduces non-IID-ness in the feature space but also in the label space because certain classes might be over-represented in some client datasets compared to others. The genomic dataset SENSG-R is naturally partitioned using the populations of individuals (Asian, European, African). In addition, we partition both sets artificially into 10 client datasets to consider a wider range of non-IID-ness. Models were trained on an AMD Ryzen7 3700x system with 8 3.6 GHz cores, a NVIDIA Geforce RTX 3090 GPU with 10496 CUDA cores and 24 GB of GDDR6X memory. We used the binary-cross entropy loss function for the training of all classifiers and Tensorflow 2.6 [25].

V-A Network Architecture and Baselines

We construct two neural network architectures for the benchmarks: for EMNIST62, we use 2 stacked CNN layers, activated by ReLu, and followed by a fully connected neural network. The genomic datasets are classified with a network comprised of 2 stacked bidirectional LSTMs that feed into a densely connected neural network. We compare our approach FedGMCC against three federated learning methods: FedAvg [1], FedProx [6] and CFL [13]. We assume a federation of members for all experiments, each solving its local optimization problem using SGD. FedAvg performs weighted average aggregation in the central server after each local training round, which is performed on the member datasets. FedProx [6] corrects FedAvg’s loss function by the proximal term , where is a positive constant, the weights of the most recent global model and the weights of the local model at training step . We implement CFL by following the procedure laid out in [13]. With FedAvg the central server receives, aggregates and distributes client updates until the average of received client gradients decreases below . FedGMCC applies the clustering, aggregation and distribution scheme laid out in Alg. 1. In the first round, the value of was set to the median value of the training losses associated with the curve finding procedure. This value is subject to a 5 percentile increase if the number of clusters was higher than 1 and a 5 percentile decreases otherwise. The learning rate in the curve finding procedure was set to 0.1. In addition, we train two central models ( and ) on the combined genomic dataset using standard SGD as baselines. Training of two models is necessary due to the concept shift we introduce in SENSG-A. As usual, we separate the whole dataset into disjoint training (80%) and validation sets (20%). Hyperparameters have been fine-tuned for every training algorithm to give best possible results in terms of convergence rate. The mini-batch size was set to 64, and learning rates were set to 0.001. Local epoch numbers were respectively set to 10 and 5 for the EMNIST62 and SENSG-A datasets.

V-B Results

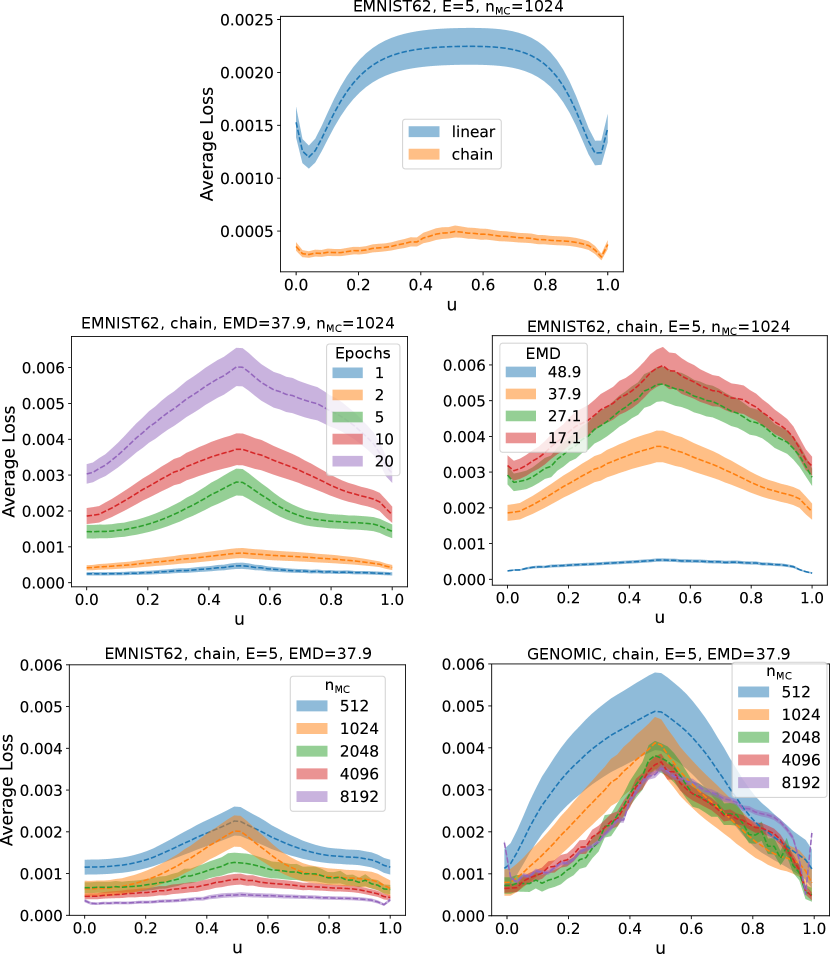

Curve Fitting between Local Models: Fig. 2 shows the average training loss for our curve-fitting approach for different parameters and setups. The top graph compares a naive linear curve with a polygonal one-bend chain curve (see 3). Using the latter we were able to find a region of low loss and hence prove the existence of multiple simple connections between pairs of client models, i.e., pairs of client models belonging to the same cluster. Note that by setting , the linear parametrization reduces to the classical FedAvg aggregated model situated in a region of higher loss with respect to the chain curve loss . When clients train local models with varying local epoch numbers (middle left) or EMD values (middle right) the average loss is directly affected. When the number of local epochs is decreased or the data distribution setting tends towards the ideal IID setting, the GMCC losses flatten out and the inter-client variability decreases. This is an important finding that could previously only be obtained when computing loss surface manifold with real datasets. Our approach achieves this on a generated dataset using Monte Carlo sampling. More interestingly, increasing the sample size of the GMCC input dataset makes it easier to find connections between client model weights with low training losses. For EMNIST62, where no concept shift was present (bottom left), finding an optimal curve parametrization is only a matter of increasing computational costs (due to increased GMCC sample sizes). On the other hand, for the genomic datasets, because the concept-shift introduced an incongruency in the output space between two models, no amount of GMCC sample size will be able to connect models hence leading to the splitting of client models into separate clusters. We validate our approach, where the server substitutes the client datasets by a surrogate Monte Carlo type generated dataset.

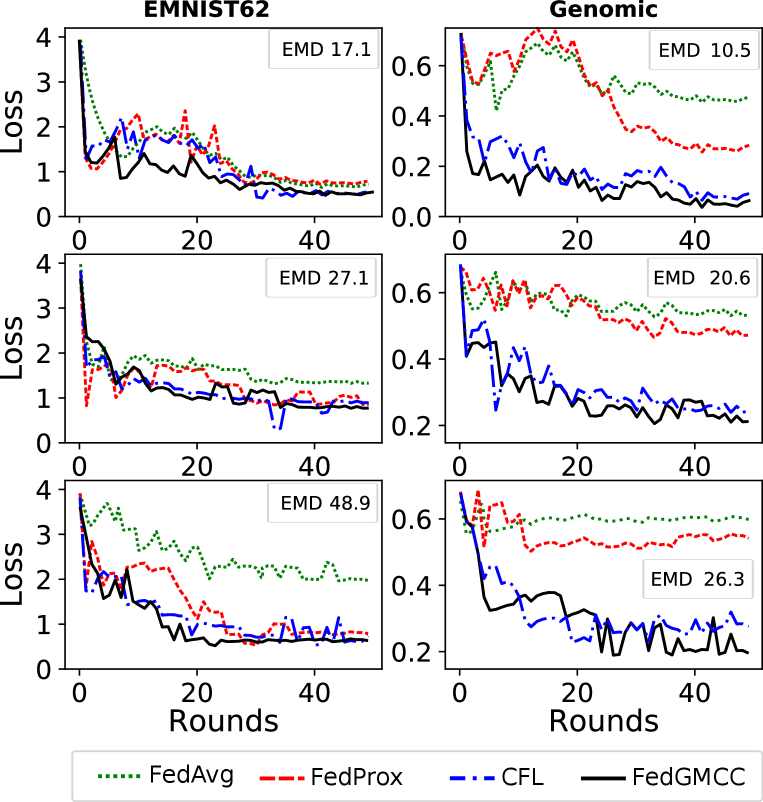

Loss: Fig. 3 reports the loss of the FL models for varying EMD values and for the EMNIST62 and genomic datasets. Loss is computed on the validation set after every communication round. For CFL and FedGMCC, where the aggregation can lead to multiple models, the average loss is shown. We highlight the increasing difficulty for FL models to converge when the EMD increases. As expected, FedAvg shows the worst results for both datasets. FedProx is able to deal with high EMD values in the EMNIST62 dataset but does not converge to a lower loss value at high EMD compared to the FL clustering algorithms (CLF and FedGMCC). The latter are able to cluster dissimilar models and avoid negative transfer of knowledge between them, which allows them to obtain the two aggregated final models with optimal loss.

Accuracy: For the EMNIST62 dataset the FL algorithms achieved a similar performance with low EMD values than the one obtained in the centralized setting (85) (see Table I). However, their accuracy degrades quickly when the EMD increases, with values for FedAVG that dropped from 0.81 at EMD 17.1 to 0.43 at EMD 48.9. This drop was less pronounced for FedProx, CFL and FedGMCC. The two latter maintained a 0.79 accuracy at high EMD. The situation was different for the genomic datasets. The centralized SGD models cSGD0 and cSGD1 achieved a 0.88 accuracy. CFL and FedGMCC attained comparable 0.85 accuracy, while FedProx and FedAvg respectively obtained 0.71 and 0.75 at low EMD. As expected, FedAvg’s performance dramatically degraded at high EMD to 0.18. The drop in accuracy was less severe for FedProx and stayed around 0.79. FedGMCC led to two global models, each of which maintained an accuracy of 0.85 at EMD 17.1 and 0.84 at higher EMD values. This high performance was also achieved by CFL but FedGMCC yielded a better accuracy at high EMD. This is not surprising because of CFL’s and FedGMCC’s aggregation rules, which enable them to create multiple personalized models to accommodate a certain degree of IID-ness in particular the incongruency due to the concept shift injected in the genomic dataset.

| EMNIST62 | ||||

| EMD | FedAvg | FedProx | CFL | FedGMCC |

| 17.1 | 0.81 | 0.83 | 0.83 | 0.83 |

| 27.1 | 0.76 | 0.83 | 0.83 | 0.83 |

| 48.9 | 0.43 | 0.71 | 0.79 | 0.79 |

| GENOMIC | ||||

| EMD | FedAvg | FedProx | CFL | FedGMCC |

| 6.2 (real) | 0.88 | 0.89 | 0.91 | 0.93 |

| 10.5 | 0.88 | 0.91 | 0.93 0.92 | 0.930.93 |

| 20.6 | 0.56 | 0.70 | 0.800.79 | 0.840.83 |

| 26.3 | 0.21 | 0.65 | 0.800.79 | 0.840.83 |

VI Conclusion

We presented FedGMCC, a federated learning framework that consists of novel clustering rules and a new aggregation procedure. Substituting real datasets by a surrogate Monte Carlo dataset, we show how the curve finding procure can reveal the geometric connection between congruent models serving as a clustering rule of client model weights. Furthermore, FedGMCC aggregation rule averages all the intermediary model weights on the curve parametrization, leading to better generalization and extending the classical FedAvg aggregation rule to weight spaces of curves training loss manifold.

FedGMCC outperformed other FL training algorithms on the EMNSIT62 and genomic sequence sensitivity classification tasks where we controlled the non-IID-ness using an artificial partitioning technique and the EMD measure. In high non-IID setting FedGMCC yielded convergence rates respectively 36% and 8% faster than those of FedAvg and FedProx on EMNSIT62. FedGMCC outperformed FedAvg, FedProx and CFL on the genomic datasets by 63, 19 and 4 .

References

- [1] H. McMahan, Eider Moore, Daniel Ramage and Blaise Agüera Arcas “Federated Learning of Deep Networks using Model Averaging” In CoRR abs/1602.05629, 2016 arXiv: http://arxiv.org/abs/1602.05629

- [2] He Yang “H-FL: A Hierarchical Communication-Efficient and Privacy-Protected Architecture for Federated Learning” In Proc. of 13th Int. Conf. on Artificial Intelligence, IJCAI, Montreal, Canada, 19-27 August, 2021, pp. 479–485 DOI: 10.24963/ijcai.2021/67

- [3] Peter Kairouz, H Brendan McMahan and Brendan Avent “Advances and open problems in federated learning” In arXiv:1912.04977, 2019

- [4] Kevin Hsieh, Amar Phanishayee, Onur Mutlu and Phillip Gibbons “The non-iid data quagmire of decentralized machine learning” In Int. Conf. on Machine Learning, 2020, pp. 4387–4398 PMLR

- [5] Yue Zhao et al. “Federated learning with non-iid data” In arXiv preprint arXiv:1806.00582, 2018

- [6] Tian Li et al. “Federated Optimization in Heterogeneous Networks” In Proc. of Machine Learning and Systems (MLSys), Austin, TX, USA, March 2-4, 2020 URL: https://proceedings.mlsys.org/book/316.pdf

- [7] Jeroen GJ Rooij, Mila Jhamai and Pascal P Arp “Population-specific genetic variation in large sequencing data sets: why more data is still better” In Eu. J. of Human Genetics 25.10 Nature Publishing Group, 2017, pp. 1173–1175

- [8] Thomas N Williams “Sickle cell disease in sub-Saharan Africa” In Hematology/Oncology Clinics 30.2 Elsevier, 2016, pp. 343–358

- [9] Qinbin Li, Yiqun Diao, Quan Chen and Bingsheng He “Federated learning on non-iid data silos: An experimental study” In arXiv preprint arXiv:2102.02079, 2021

- [10] Xiaoxiao Li et al. “Fedbn: Federated learning on non-iid features via local batch normalization” In arXiv preprint arXiv:2102.07623, 2021

- [11] Avishek Ghosh, Jichan Chung, Dong Yin and Kannan Ramchandran “An efficient framework for clustered federated learning” In 34th Conf. on Neural Information Processing Systems (NeurIPS), 2020

- [12] Christopher Briggs, Zhong Fan and Peter Andras “Federated learning with hierarchical clustering of local updates to improve training on non-IID data” In IEEE Int. Conf. on Neural Networks (IJCNN 2020), pp. 1–9

- [13] Felix Sattler, Klaus-Robert Müller and Wojciech Samek “Clustered federated learning: Model-agnostic distributed multitask optimization under privacy constraints” In IEEE T. on neural net. and learning sys. IEEE, 2020

- [14] Kavya Kopparapu and Eric Lin “Fedfmc: Sequential efficient federated learning on non-iid data” In arXiv preprint arXiv:2006.10937, 2020

- [15] Timur Garipov et al. “Loss surfaces, mode connectivity, and fast ensembling of dnns” In Proc. of the 32nd Int. Conf. on Neural Information Processing Systems, 2018, pp. 8803–8812

- [16] C. Freeman and Joan Bruna “Topology and Geometry of Half-Rectified Network Optimization” In 5th Int. Conf. on Learning Representations, (ICLR), Toulon, France, April 24-26, 2017 URL: https://openreview.net/forum?id=Bk0FWVcgx

- [17] Seyed Iman Mirzadeh et al. “Linear mode connectivity in multitask and continual learning” In arXiv preprint arXiv:2010.04495, 2020

- [18] Anna Choromanska et al. “The loss surfaces of multilayer networks” In Artificial intelligence and statistics, 2015, pp. 192–204 PMLR

- [19] Pavel Izmailov et al. “Averaging weights leads to wider optima and better generalization” In Conf. on Uncertainty in Artificial Intelligence, Monterey, CA, USA, 2018

- [20] Zheng Wang et al. “Federated Learning with Fair Averaging” In Proc. of 13th Int. Conf. on Artificial Intelligence, IJCAI, Montreal, Canada, 19-27 August, 2021, pp. 1615–1623

- [21] Yossi Rubner, Carlo Tomasi and Leonidas J Guibas “A metric for distributions with applications to image databases” In 6th Int. Conf. on Comp. Vision (IEEE Cat. No. 98CH36271), 1998, pp. 59–66

- [22] Nitish Shirish Keskar et al. “On large-batch training for deep learning: Generalization gap and sharp minima” In arXiv preprint arXiv:1609.04836, 2016

- [23] Gregory Cohen, Saeed Afshar, Jonathan Tapson and Andre Van Schaik “EMNIST: Extending MNIST to handwritten letters” In Int. Conf. on Neural Networks (IJCNN), 2017 DOI: 10.1109/ijcnn.2017.7966217

- [24] Jérémie Decouchant et al. “Accurate filtering of privacy-sensitive information in raw genomic data” In J. of Biomed. Informatics 82, 2018, pp. 1–12

- [25] Martín Abadi et al. “Tensorflow: A system for large-scale machine learning” In 12th USENIX Symp. on Operating Systems Design and Implementation (OSDI 16), 2016, pp. 265–283

Appendix A Earth Mover Distance

| Encoder Layers | dim(features) | Decoder layers | Learning rate | Reconstruction loss | |

|---|---|---|---|---|---|

| EMNIST62 | 4 x CNN | 7x7x2 | 4 x TransCNN | 0.01 | |

| SENSG-* | 2 x CNN + 2 x LSTM | 30x1 | 2 x LSTM + 2xTransCNN | 0.001 |

Earth Mover Distance (EMD) is defined as a distance metric between two distributions and . It computes the minimal distance for mapping all clusters of distribution (set of suppliers) to any cluster of distribution (set of consumers). The distributions are thereby characterized by signatures and likewise , where clusters are represented as bin centroids with weight (and with weight , respectively). The overall cost for transferring all clusters from to is:

| (7) |

where is the ground distance between the supports and and is the flow between and that needs to be minimized under the constraints:

-

1.

(unidirectional flow),

-

2.

(limited consumer storage),

-

3.

(limited supply), and

-

4.

(transfer all).

With optimal flow , EMD for a pair of distributions follows as:

| (8) |

We extend to a set of distributions by averaging the set of pairwise EMDs between the -th distribution and the distribution over the whole population , using

| (9) |

where is the sample size of the dataset generated by distribution , is the total population size and . In the context where models a labeled dataset with classes, the joint probability distribution is factorized in terms of its conditional and marginal probability distribution for , i.e, the EMD is computed over all the classes for a given feature .

To accelerate EMD computation we reduce datasets to their essentials by training autoencoders using a SGD optimizer and mean square error as reconstruction loss function. Table II shows the parameters for the autoencoders for the two benchmarks we used to evaluate our approach.

Appendix B Datasets

B-A Image Datasets

EMNIST62 444https://colab.research.google.com/drive/1r-c6UTkJEQx3Pi-Hl9q_MoveIF_0h03M is an image dataset for simulating non-IID image classification [23]. It comprises a set of 814255 handwritten alpha-numeric characters, labeled as 62 unbalanced classes, formatted in 28x28 pixel images.

B-B Genomic Datasets

We used two different genomic datasets: SENSG-R and SENSG-S.

SENSG-R is composed of 7 500 000 reads from four randomly selected genomes from each of the three major populations represented in the 1000 Genomes Project (1000GP) 555https://www.internationalgenome.org/: African, European and Asian(see Table III). With this we intend to resemble the natural representation one would obtain when sampling in regions where these populations are dominant.

SENSG-S is composed of one million reads generated from twenty individual genomes ( reads for each genome) and the randomly selected individuals are the following: HG00096, HG00097, HG00099, HG00100, HG00101, HG00102, HG00103, HG00105, HG00106, HG00107, NA21128, NA21129, NA21130, NA21133, NA21135, NA21137, NA21141, NA21142, NA21143, and NA21144.

| ASIA | AFRI | EURO |

|---|---|---|

| HG00543 | HG02703 | HG00315 |

| HG00559 | HG02769 | HG00327 |

| HG00566 | HG02715 | HG00334 |

| HG00578 | HG02771 | HG00339 |

| HG00581 | HG02722 | HG00341 |

| HG00580 | HG02676 | HG00346 |

| HG00593 | HG02808 | HG00353 |

| HG00598 | HG02810 | HG00358 |

| HG00592 | HG02614 | HG00360 |

| HG00613 | HG02839 | HG00365 |

For generating these datasets, we follow three steps:

Step 1 - Genomic sequence generation: We compiled the chromosome 1 sequence of each individual selected by combining the human reference genome GRCh37 and the individual’s variants in the 1000 GP. Second, we randomly select positions in the sequence to use as seed for obtaining 150 character sequences (reads), comprised of the nucleotides A, T, G, and C.

Reads constitute the first digitized information obtained from next

generation sequencing machines, which are subsequently processed to

extract variations (i.e., what distinguishes us one from another and

what might carry sensitive information, like, disease prepositions).

Therefore, genomic data is a practical example where data is generated in multiple geo-distributed locations, e.g., hospitals in different continents, and it must not be shared among different locations for privacy reasons.

Step 2 – Labeling: We labeled each nucleotide by deeming it as sensitive if a genomic variation reported in the 1000 GP or insensitive otherwise, respectively, 1 or 0.

For SENSG-S only, we simulate a concept shift by flipping some labels manually in order to obtain non-IID-ness.

Step 3 – Reads encoding: Next, we used word2vect encoding to convert the genomic sequence in a vector. After, this step the reads are ready to be used for the training.

In this step we consider a vocabulary (word size) of 5 letters and we generated all the consecutive 5 nucleotides sequences of each read.

B-C Artificial Partitioning

SENSG-A and EMNIST62 are partitioned artificially into subsets using our iterative clustering and distribution algorithm, which we describe in the following. We first apply -mean clustering to divide the dataset into subsets with maximal EMD. Then, computing the center of gravity (COG) of all subsets, we randomly select elements from the farthest subset and assign them to other subsets . This reduces the inter-subset distance and hence the overall EMD. We retain the current partition if pairwise EMD values () are normally distributed (according to the Shapiro normality test). We repeat this process until a we found a partition with a total EMD value that is low enough.